Two-Dimensional Geometry Representation Learning-Based Construction Workers Activities Detection with Flexible IMU Solution

Abstract

1. Introduction

2. Related Work

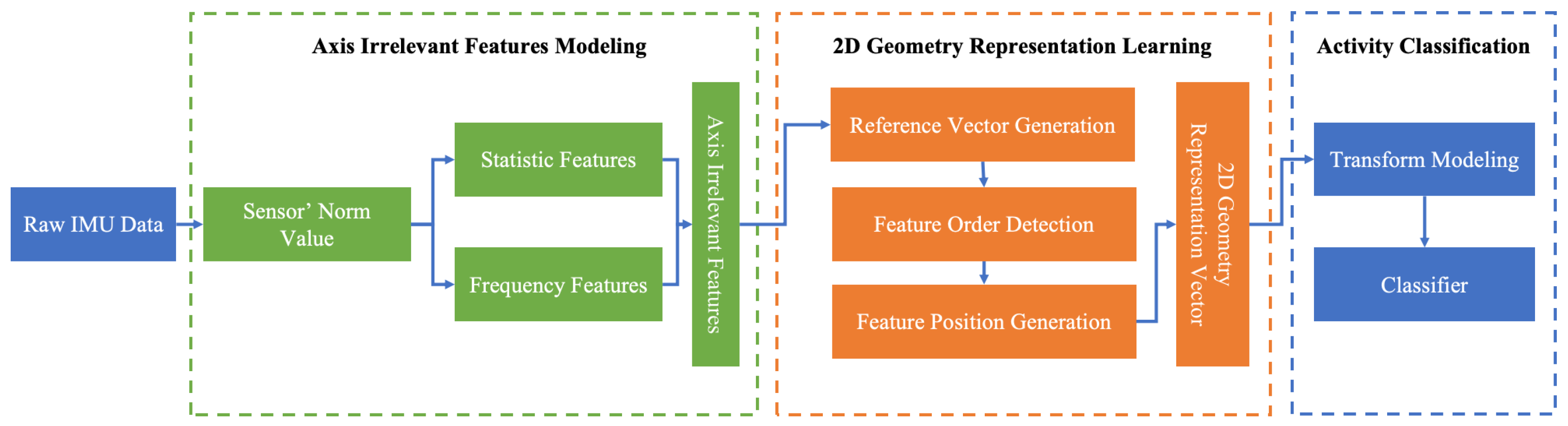

3. Flexible IMU Solution for Construction Worker Activity Detection

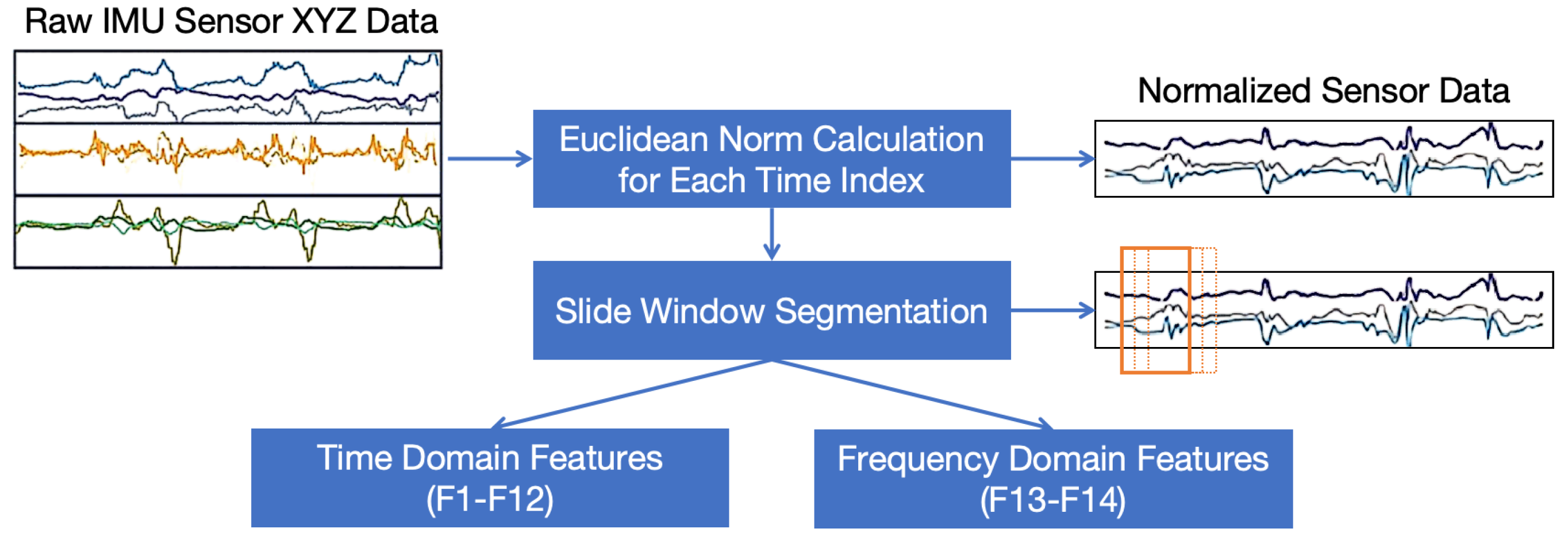

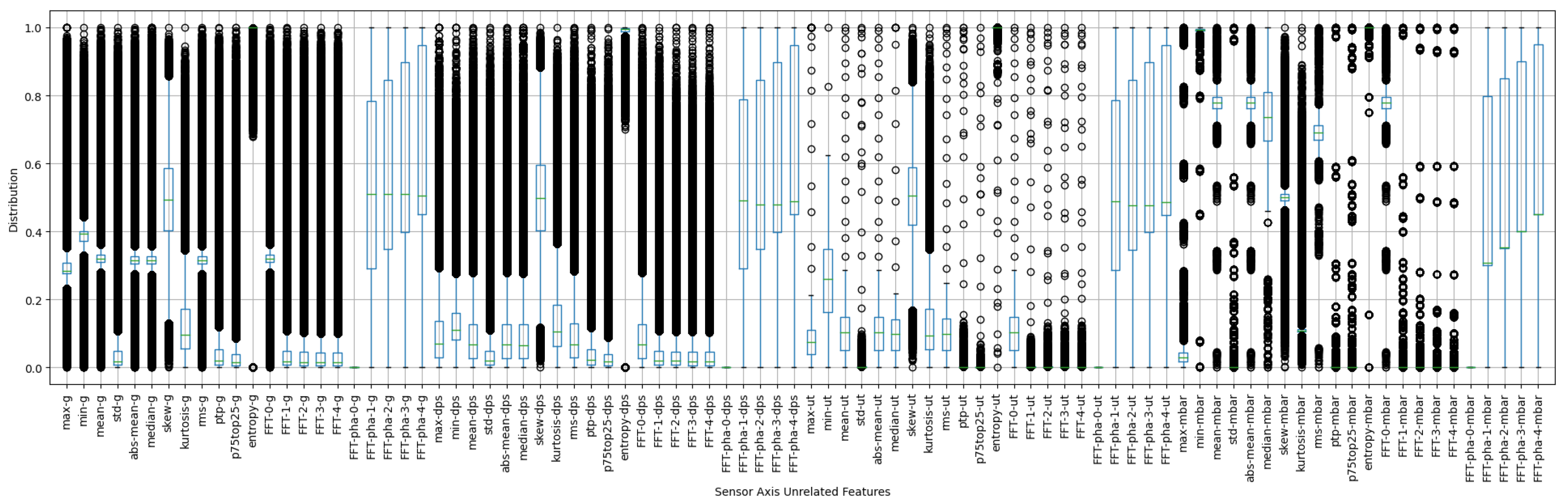

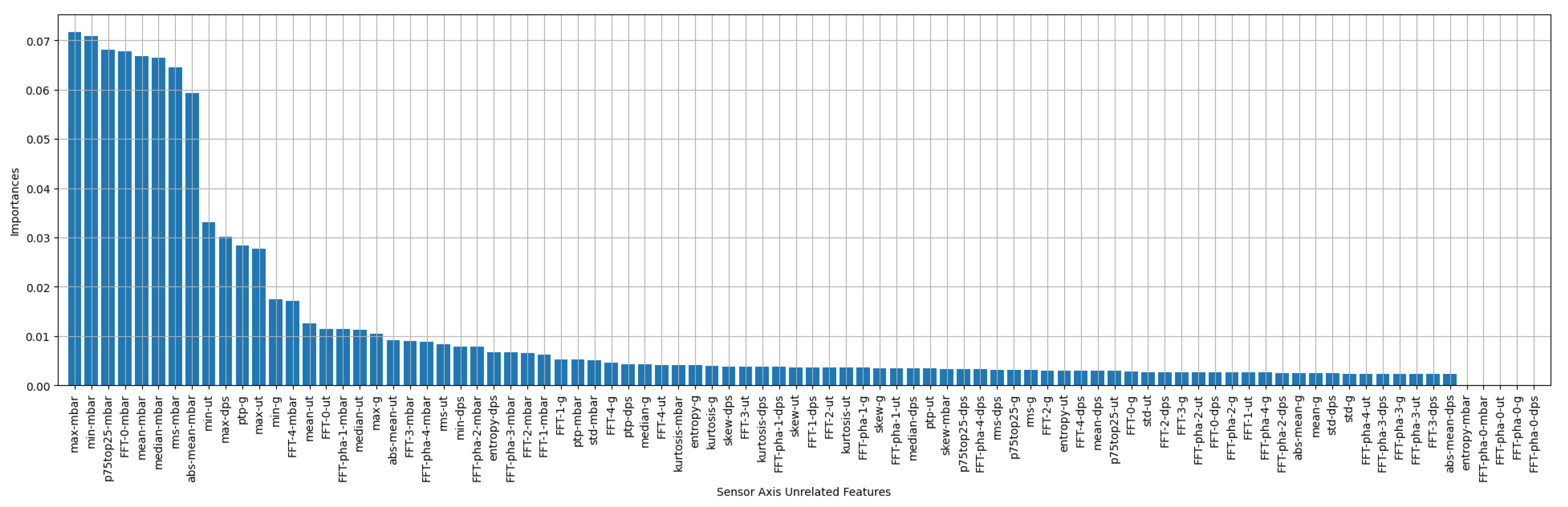

3.1. IMU Data Axis-Irrelevant Feature Modeling

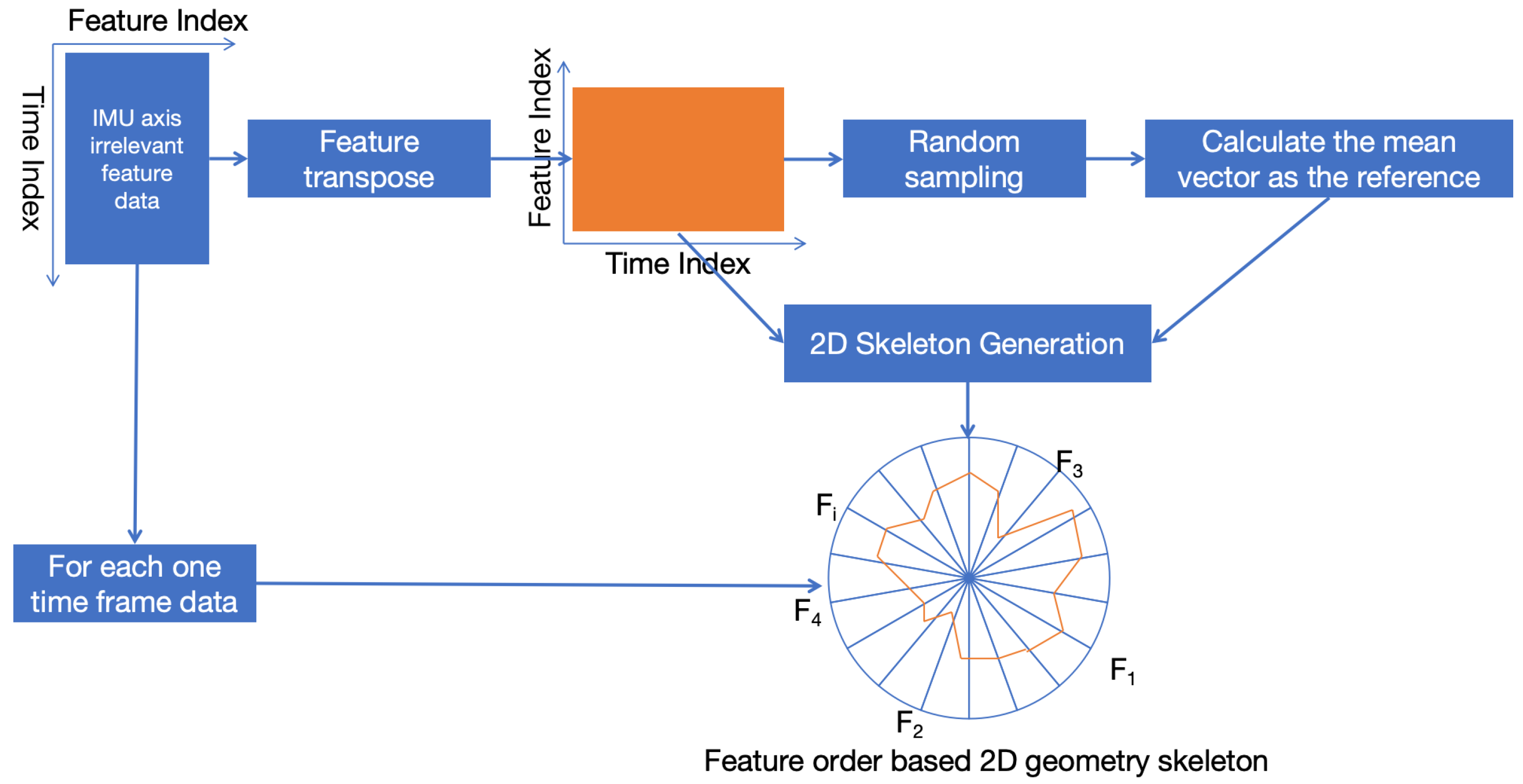

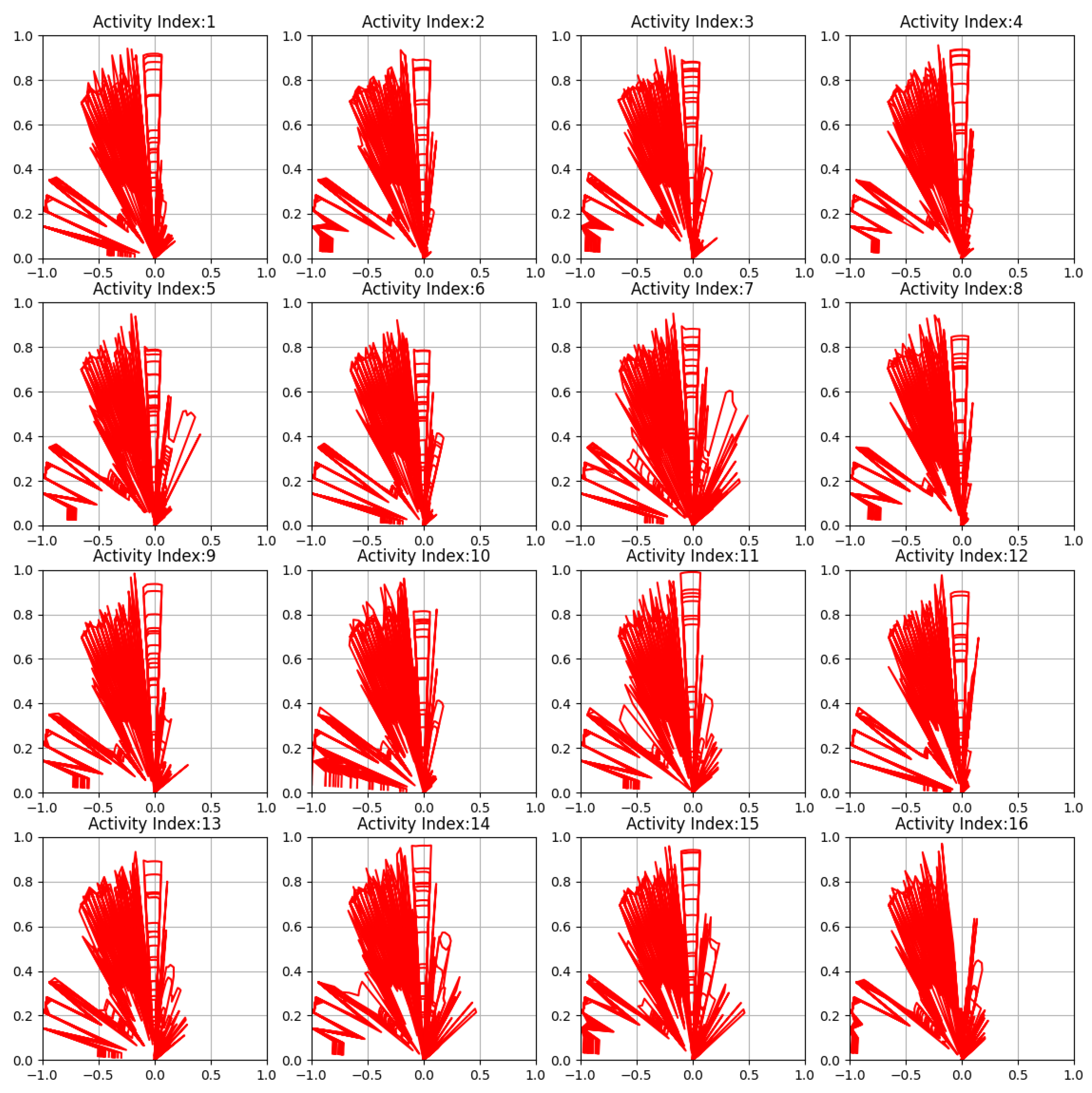

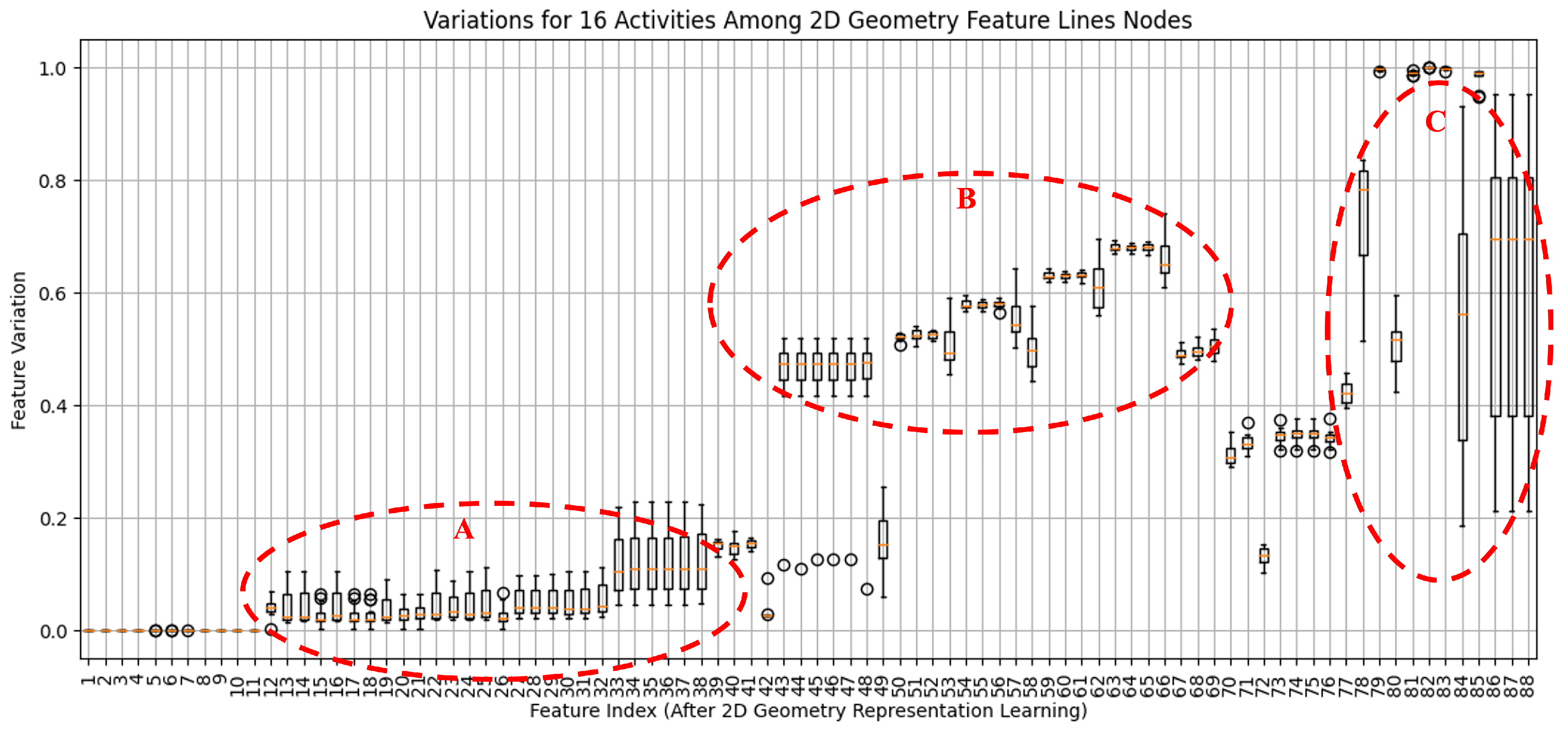

3.2. Two-Dimensional Geometry Representation Learning for IMU Data

Algorithm 1. Two-dimensional geometry representation learning

3.3. Activity Classification

4. Case Validation

4.1. IMU Axis-Irrelevant Feature Modeling

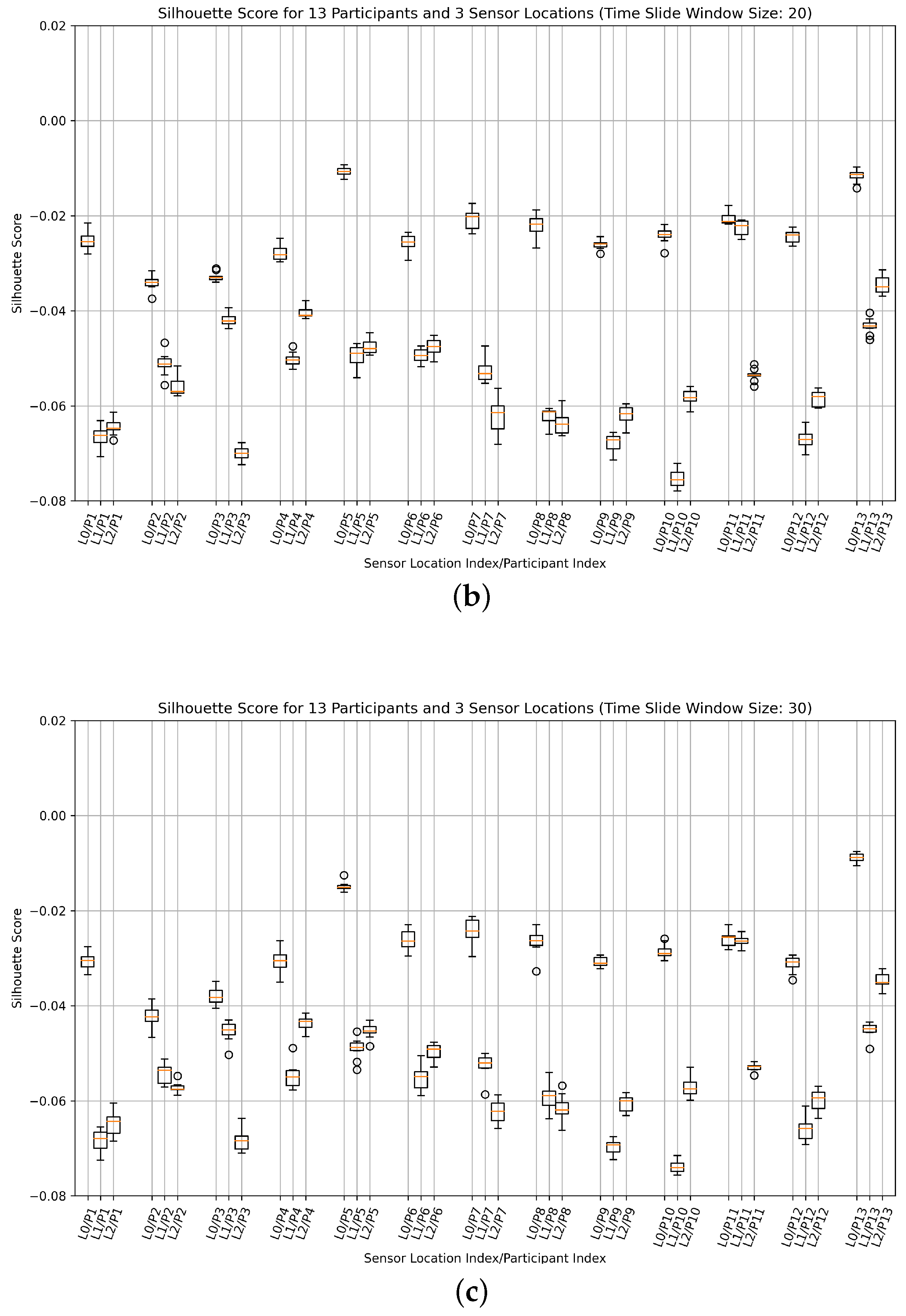

4.2. Construction Worker Activities Detection with Flexible IMU Deployment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Index | Extended Feature | Calculation Formula |
|---|---|---|
| F1 | Maximum (Ma) | |
| F2 | Minimum (Mi) | |
| F3 | Mean (Me) | |
| F4 | Standard Deviation (SD) | |
| F5 | Mean Absolute Deviation (MAD) | |
| F6 | Median (Md) | |
| F7 | Skewness (Sk) | |
| F8 | Kurtosis (Ku) | |
| F9 | Root Mean Square (RMS) | |
| F10 | Peak-to-Peak (PP) | |
| F11 | Inter-quartile Range (IQR) | |
| F12 | Entropy (En) | |
| F13 | Amplitude of Main Frequency (AMF) | |
| F14 | Phase of Frequency (PF) | |
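The formula column of the feature table above did not survive extraction. As an illustration only, the following minimal sketch computes the fourteen extended features F1 to F14 for a single window of samples using textbook definitions. The function name `window_features`, the population (divide-by-n) standard deviation, the non-excess kurtosis, the 10-bin histogram entropy, the "inclusive" quartile method, and the naive DFT used to find the main frequency are all assumptions and may differ from the formulas used by the authors.

```python
import cmath
import math
import statistics
from collections import Counter


def window_features(x, bins=10):
    """Compute F1-F14 for one window of samples (needs at least 2 values)."""
    n = len(x)
    mean = statistics.fmean(x)
    dev = [v - mean for v in x]
    sd = math.sqrt(sum(d * d for d in dev) / n)  # population SD (assumption)
    q1, _, q3 = statistics.quantiles(x, n=4, method="inclusive")

    # Standardised 3rd and 4th moments; set to 0 for a constant window.
    skew = sum(d ** 3 for d in dev) / (n * sd ** 3) if sd else 0.0
    kurt = sum(d ** 4 for d in dev) / (n * sd ** 4) if sd else 0.0

    # Shannon entropy in bits of an equal-width histogram (assumption).
    lo, hi = min(x), max(x)
    width = (hi - lo) / bins or 1.0
    counts = Counter(min(int((v - lo) / width), bins - 1) for v in x)
    entropy = -sum(c / n * math.log2(c / n) for c in counts.values())

    # Naive DFT over the positive frequencies, skipping the DC term.
    spectrum = {
        k: sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(x))
        for k in range(1, n // 2 + 1)
    }
    k_main = max(spectrum, key=lambda k: abs(spectrum[k]))

    return {
        "Ma": max(x),
        "Mi": min(x),
        "Me": mean,
        "SD": sd,
        "MAD": sum(abs(d) for d in dev) / n,
        "Md": statistics.median(x),
        "Sk": skew,
        "Ku": kurt,
        "RMS": math.sqrt(sum(v * v for v in x) / n),
        "PP": max(x) - min(x),
        "IQR": q3 - q1,
        "En": entropy,
        "AMF": 2 * abs(spectrum[k_main]) / n,  # amplitude of the dominant bin
        "PF": cmath.phase(spectrum[k_main]),   # phase of the dominant bin (rad)
    }


# Hypothetical window of sensor readings, for illustration only.
features = window_features([0.1, 0.4, 0.35, 0.8, 0.6, 0.2, 0.5, 0.9])
```

The dictionary keys follow the abbreviations in the table. For long windows the naive DFT could be replaced by an FFT without changing the two frequency-domain features.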

| Activity Index | Activity Name | Activity Description |
|---|---|---|
| A1 | Roll Painting | The subject uses a paint roller on a wall. |
| A2 | Spraying Paint | The subject uses a tube (that mimics a machine) to perform movements depicting the spraying of paint on a wall. |
| A3 | Leveling paint | The subject uses a tool to mimic the spreading of screed or paint on a wall. |
| A4 | Vacuum Cleaning | The subject uses a vacuum cleaner on the floor. |
| A5 | Picking objects | The subject picks up objects from the floor with their hands and throws them into a bin. |
| A6 | Climbing stairs | The subject goes up three steps on a stair, turns around, and goes down three steps. |
| A7 | Jumping down | The subject goes up three steps on a stair, turns around, and jumps down the three steps. |
| A8 | Laying back | The subject mimics working with his hands up while laying back on a mid-level surface. |
| A9 | Handsup high | The subject mimics working on tubes with their hands high above the head. |
| A10 | Handsup low | The subject mimics working on tubes with their hands at the head or shoulder level. |
| A11 | Crouch floor | The subject works on the floor, placing tiles while crouching. |
| A12 | Kneel floor | The subject works on the floor, placing tiles while kneeling. |
| A13 | Walk straight | The subject walks straight along a corridor for 20 m, turns around, and walks back. |
| A14 | Walk winding | The subject walks winding around seven cones for 20 m, turns around, and walks back. |
| A15 | Pushing cart | The subject walks along a corridor for 20 m pushing a cart, turns around, and pushes it back. |
| A16 | Stairs Up-down | The subject climbs stairs for 30 s, turns around, and comes back. |

| Activity | Train Dataset Support | Test Dataset Support |
|---|---|---|
| A1 | 23,357 | 210,208 |
| A2 | 23,357 | 210,211 |
| A3 | 23,357 | 210,217 |
| A4 | 23,359 | 210,230 |
| A5 | 23,357 | 210,211 |
| A6 | 23,358 | 210,216 |
| A7 | 23,357 | 210,217 |
| A8 | 23,358 | 210,219 |
| A9 | 23,357 | 210,214 |
| A10 | 23,357 | 210,217 |
| A11 | 21,561 | 194,052 |
| A12 | 23,358 | 210,222 |
| A13 | 23,357 | 210,217 |
| A14 | 23,358 | 210,216 |
| A15 | 23,357 | 210,217 |
| A16 | 23,358 | 210,219 |
| Total | 371,923 | 3,347,303 |

| | Sensor Axis Unrelated Datasets | | | | | | | | | 2D Geometry Representation Learning Datasets | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | KNN | | | SVM | | | Decision Tree | | | KNN | | | SVM | | | Decision Tree | | |
| | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 |
| Train | 0.58 | 0.50 | 0.54 | 0.21 | 0.21 | 0.21 | 0.68 | 0.69 | 0.69 | 0.58 | 0.50 | 0.54 | 0.20 | 0.20 | 0.20 | 0.83 | 0.74 | 0.78 |
| Test | 0.19 | 0.12 | 0.15 | 0.16 | 0.17 | 0.16 | 0.60 | 0.59 | 0.60 | 0.22 | 0.18 | 0.19 | 0.20 | 0.20 | 0.20 | 0.75 | 0.67 | 0.71 |
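As a reading aid for the P, R and F1 columns above, the sketch below recomputes F1 as the harmonic mean of precision and recall for the Test row and compares it with the reported value. The helper name `f1_score` and the 0.01 tolerance are assumptions. How the reported scores were averaged over the sixteen activities is not stated in the surviving text, so small gaps caused by rounding or by per-class averaging are to be expected.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (label, P, R, reported F1) copied from the Test row of the table above.
rows = [
    ("axis-unrelated KNN", 0.19, 0.12, 0.15),
    ("axis-unrelated SVM", 0.16, 0.17, 0.16),
    ("axis-unrelated Decision Tree", 0.60, 0.59, 0.60),
    ("2D geometry KNN", 0.22, 0.18, 0.19),
    ("2D geometry SVM", 0.20, 0.20, 0.20),
    ("2D geometry Decision Tree", 0.75, 0.67, 0.71),
]

# Largest absolute gap between the recomputed and the reported F1.
max_gap = max(abs(f1_score(p, r) - f1) for _, p, r, f1 in rows)

for label, p, r, f1 in rows:
    print(f"{label}: recomputed {f1_score(p, r):.3f}, reported {f1:.2f}")
```

Under these assumptions every recomputed value stays within 0.01 of the reported one, which is consistent with two-decimal rounding of P and R.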

| | Sensor Axis Unrelated Datasets | | | | | | | | | 2D Geometry Representation Learning Datasets | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | KNN | | | SVM | | | Decision Tree | | | KNN | | | SVM | | | Decision Tree | | |
| Activity | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 |
| A1 | 0.39 | 0.90 | 0.54 | 0.18 | 0.08 | 0.11 | 0.66 | 0.69 | 0.67 | 0.39 | 0.90 | 0.54 | 0.17 | 0.07 | 0.10 | 0.84 | 0.69 | 0.76 |
| A2 | 0.38 | 0.78 | 0.51 | 0.28 | 0.16 | 0.20 | 0.66 | 0.67 | 0.66 | 0.38 | 0.78 | 0.51 | 0.27 | 0.14 | 0.18 | 0.88 | 0.77 | 0.82 |
| A3 | 0.39 | 0.66 | 0.49 | 0.15 | 0.10 | 0.12 | 0.62 | 0.59 | 0.60 | 0.39 | 0.66 | 0.49 | 0.14 | 0.09 | 0.11 | 0.79 | 0.84 | 0.81 |
| A4 | 0.41 | 0.56 | 0.47 | 0.20 | 0.21 | 0.20 | 0.62 | 0.63 | 0.62 | 0.41 | 0.56 | 0.47 | 0.19 | 0.18 | 0.18 | 0.79 | 0.71 | 0.75 |
| A5 | 0.48 | 0.61 | 0.54 | 0.21 | 0.26 | 0.23 | 0.70 | 0.68 | 0.69 | 0.48 | 0.61 | 0.54 | 0.20 | 0.25 | 0.22 | 0.87 | 0.80 | 0.83 |
| A6 | 0.45 | 0.50 | 0.47 | 0.16 | 0.00 | 0.00 | 0.68 | 0.66 | 0.67 | 0.45 | 0.50 | 0.47 | 0.16 | 0.00 | 0.00 | 0.86 | 0.72 | 0.78 |
| A7 | 0.54 | 0.50 | 0.52 | 0.19 | 0.20 | 0.19 | 0.69 | 0.68 | 0.68 | 0.54 | 0.50 | 0.52 | 0.19 | 0.20 | 0.19 | 0.81 | 0.65 | 0.72 |
| A8 | 0.56 | 0.46 | 0.51 | 0.22 | 0.37 | 0.28 | 0.63 | 0.72 | 0.67 | 0.56 | 0.46 | 0.51 | 0.21 | 0.34 | 0.26 | 0.83 | 0.64 | 0.72 |
| A9 | 0.59 | 0.36 | 0.45 | 0.13 | 0.25 | 0.17 | 0.70 | 0.70 | 0.70 | 0.59 | 0.36 | 0.45 | 0.13 | 0.27 | 0.18 | 0.84 | 0.77 | 0.80 |
| A10 | 0.61 | 0.36 | 0.45 | 0.17 | 0.30 | 0.22 | 0.69 | 0.70 | 0.69 | 0.61 | 0.36 | 0.45 | 0.16 | 0.34 | 0.22 | 0.86 | 0.69 | 0.77 |
| A11 | 0.73 | 0.39 | 0.51 | 0.26 | 0.22 | 0.24 | 0.70 | 0.69 | 0.69 | 0.73 | 0.39 | 0.51 | 0.28 | 0.21 | 0.24 | 0.78 | 0.63 | 0.70 |
| A12 | 0.68 | 0.29 | 0.41 | 0.16 | 0.10 | 0.12 | 0.64 | 0.66 | 0.65 | 0.68 | 0.29 | 0.41 | 0.13 | 0.07 | 0.09 | 0.80 | 0.87 | 0.83 |
| A13 | 0.67 | 0.42 | 0.52 | 0.26 | 0.30 | 0.28 | 0.70 | 0.72 | 0.71 | 0.67 | 0.42 | 0.52 | 0.24 | 0.29 | 0.26 | 0.88 | 0.84 | 0.86 |
| A14 | 0.76 | 0.51 | 0.61 | 0.28 | 0.31 | 0.29 | 0.76 | 0.76 | 0.76 | 0.76 | 0.51 | 0.61 | 0.27 | 0.29 | 0.28 | 0.78 | 0.78 | 0.78 |
| A15 | 0.77 | 0.34 | 0.47 | 0.21 | 0.12 | 0.15 | 0.68 | 0.69 | 0.68 | 0.77 | 0.34 | 0.47 | 0.21 | 0.09 | 0.13 | 0.85 | 0.63 | 0.72 |
| A16 | 0.82 | 0.42 | 0.56 | 0.28 | 0.39 | 0.33 | 0.78 | 0.78 | 0.78 | 0.82 | 0.42 | 0.56 | 0.27 | 0.38 | 0.32 | 0.78 | 0.73 | 0.75 |

| | Sensor Axis Unrelated Datasets | | | | | | | | | 2D Geometry Representation Learning Datasets | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | KNN | | | SVM | | | Decision Tree | | | KNN | | | SVM | | | Decision Tree | | |
| Activity | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 | P | R | F1 |
| A1 | 0.13 | 0.12 | 0.12 | 0.17 | 0.07 | 0.10 | 0.60 | 0.64 | 0.62 | 0.12 | 0.31 | 0.17 | 0.15 | 0.06 | 0.09 | 0.79 | 0.60 | 0.68 |
| A2 | 0.13 | 0.12 | 0.12 | 0.18 | 0.16 | 0.17 | 0.62 | 0.67 | 0.64 | 0.13 | 0.31 | 0.18 | 0.26 | 0.12 | 0.16 | 0.80 | 0.69 | 0.74 |
| A3 | 0.11 | 0.10 | 0.10 | 0.14 | 0.09 | 0.11 | 0.52 | 0.50 | 0.51 | 0.11 | 0.19 | 0.14 | 0.14 | 0.09 | 0.11 | 0.75 | 0.75 | 0.75 |
| A4 | 0.12 | 0.12 | 0.12 | 0.19 | 0.10 | 0.13 | 0.55 | 0.55 | 0.55 | 0.12 | 0.17 | 0.14 | 0.20 | 0.20 | 0.20 | 0.79 | 0.67 | 0.73 |
| A5 | 0.18 | 0.13 | 0.15 | 0.10 | 0.15 | 0.12 | 0.49 | 0.52 | 0.50 | 0.19 | 0.24 | 0.21 | 0.20 | 0.25 | 0.22 | 0.69 | 0.68 | 0.68 |
| A6 | 0.13 | 0.15 | 0.14 | 0.16 | 0.00 | 0.00 | 0.53 | 0.57 | 0.55 | 0.13 | 0.14 | 0.13 | 0.12 | 0.00 | 0.00 | 0.72 | 0.63 | 0.67 |
| A7 | 0.19 | 0.12 | 0.15 | 0.19 | 0.20 | 0.19 | 0.47 | 0.49 | 0.48 | 0.19 | 0.16 | 0.17 | 0.18 | 0.19 | 0.18 | 0.68 | 0.57 | 0.62 |
| A8 | 0.21 | 0.12 | 0.15 | 0.12 | 0.17 | 0.14 | 0.67 | 0.74 | 0.70 | 0.21 | 0.16 | 0.18 | 0.21 | 0.34 | 0.26 | 0.74 | 0.62 | 0.67 |
| A9 | 0.17 | 0.10 | 0.13 | 0.13 | 0.25 | 0.17 | 0.70 | 0.63 | 0.66 | 0.16 | 0.09 | 0.12 | 0.13 | 0.27 | 0.18 | 0.83 | 0.74 | 0.78 |
| A10 | 0.19 | 0.11 | 0.14 | 0.17 | 0.30 | 0.22 | 0.69 | 0.66 | 0.67 | 0.19 | 0.11 | 0.14 | 0.16 | 0.35 | 0.22 | 0.84 | 0.65 | 0.73 |
| A11 | 0.33 | 0.12 | 0.18 | 0.16 | 0.12 | 0.14 | 0.71 | 0.68 | 0.69 | 0.32 | 0.14 | 0.19 | 0.27 | 0.20 | 0.23 | 0.75 | 0.60 | 0.67 |
| A12 | 0.18 | 0.07 | 0.10 | 0.16 | 0.10 | 0.12 | 0.61 | 0.61 | 0.61 | 0.19 | 0.08 | 0.11 | 0.13 | 0.07 | 0.09 | 0.73 | 0.82 | 0.77 |
| A13 | 0.31 | 0.17 | 0.22 | 0.16 | 0.19 | 0.17 | 0.58 | 0.59 | 0.58 | 0.34 | 0.18 | 0.24 | 0.24 | 0.29 | 0.26 | 0.74 | 0.73 | 0.73 |
| A14 | 0.23 | 0.15 | 0.18 | 0.18 | 0.31 | 0.23 | 0.66 | 0.60 | 0.63 | 0.43 | 0.26 | 0.32 | 0.26 | 0.29 | 0.27 | 0.74 | 0.76 | 0.75 |
| A15 | 0.28 | 0.10 | 0.15 | 0.11 | 0.13 | 0.12 | 0.52 | 0.46 | 0.49 | 0.25 | 0.10 | 0.14 | 0.21 | 0.09 | 0.13 | 0.71 | 0.61 | 0.66 |
| A16 | 0.22 | 0.17 | 0.19 | 0.18 | 0.39 | 0.25 | 0.64 | 0.60 | 0.62 | 0.43 | 0.17 | 0.24 | 0.27 | 0.37 | 0.31 | 0.73 | 0.62 | 0.67 |

| | | Sensor Axis Unrelated Datasets | | | 2D Geometry Representation Learning Datasets | | |
|---|---|---|---|---|---|---|---|
| Time Window Size | Model | P | R | F1 | P | R | F1 |
| 1 s | KNN | 0.19 | 0.12 | 0.15 | 0.22 | 0.18 | 0.19 |
| 1 s | SVM | 0.16 | 0.17 | 0.16 | 0.20 | 0.20 | 0.20 |
| 1 s | Decision Tree | 0.60 | 0.59 | 0.60 | 0.75 | 0.67 | 0.71 |
| 1 s | WorkerNeXt | 0.60 | 0.60 | 0.60 | 0.80 | 0.72 | 0.76 |
| 2 s | KNN | 0.20 | 0.12 | 0.15 | 0.25 | 0.20 | 0.22 |
| 2 s | SVM | 0.17 | 0.17 | 0.17 | 0.23 | 0.25 | 0.24 |
| 2 s | Decision Tree | 0.58 | 0.59 | 0.58 | 0.75 | 0.73 | 0.74 |
| 2 s | WorkerNeXt | 0.65 | 0.63 | 0.64 | 0.82 | 0.82 | 0.82 |
| 3 s | KNN | 0.12 | 0.11 | 0.11 | 0.23 | 0.20 | 0.21 |
| 3 s | SVM | 0.12 | 0.15 | 0.13 | 0.21 | 0.21 | 0.21 |
| 3 s | Decision Tree | 0.54 | 0.52 | 0.53 | 0.75 | 0.70 | 0.72 |
| 3 s | WorkerNeXt | 0.65 | 0.66 | 0.65 | 0.82 | 0.84 | 0.83 |
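The table above compares time window sizes of 1 s, 2 s and 3 s. As an illustration of what a window size means in samples, the following minimal sketch cuts a sample sequence into fixed-length windows. The function name `segment_windows`, the `overlap` parameter and the sampling rate used in the example are hypothetical; the segmentation settings actually used in the study are not given in the surviving text.

```python
def segment_windows(samples, sampling_rate_hz, window_s, overlap=0.0):
    """Split a sample sequence into windows lasting `window_s` seconds.

    `overlap` is the fraction of each window shared with the next one
    (0.0 means back-to-back windows). A trailing partial window is dropped.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    size = int(round(sampling_rate_hz * window_s))
    if size < 1:
        raise ValueError("window is shorter than one sample")
    step = max(1, int(round(size * (1.0 - overlap))))
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


# Hypothetical example: 10 samples recorded at 2 Hz (5 s of data).
signal = list(range(10))
windows_by_size = {
    seconds: segment_windows(signal, sampling_rate_hz=2, window_s=seconds)
    for seconds in (1, 2, 3)
}
```

A longer window gives each classifier more samples per decision but yields fewer windows from the same recording, which is one reason the three sizes are worth comparing.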

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, H.; Liu, G.; Li, J. Two-Dimensional Geometry Representation Learning-Based Construction Workers Activities Detection with Flexible IMU Solution. Buildings 2025, 15, 2372. https://doi.org/10.3390/buildings15132372
