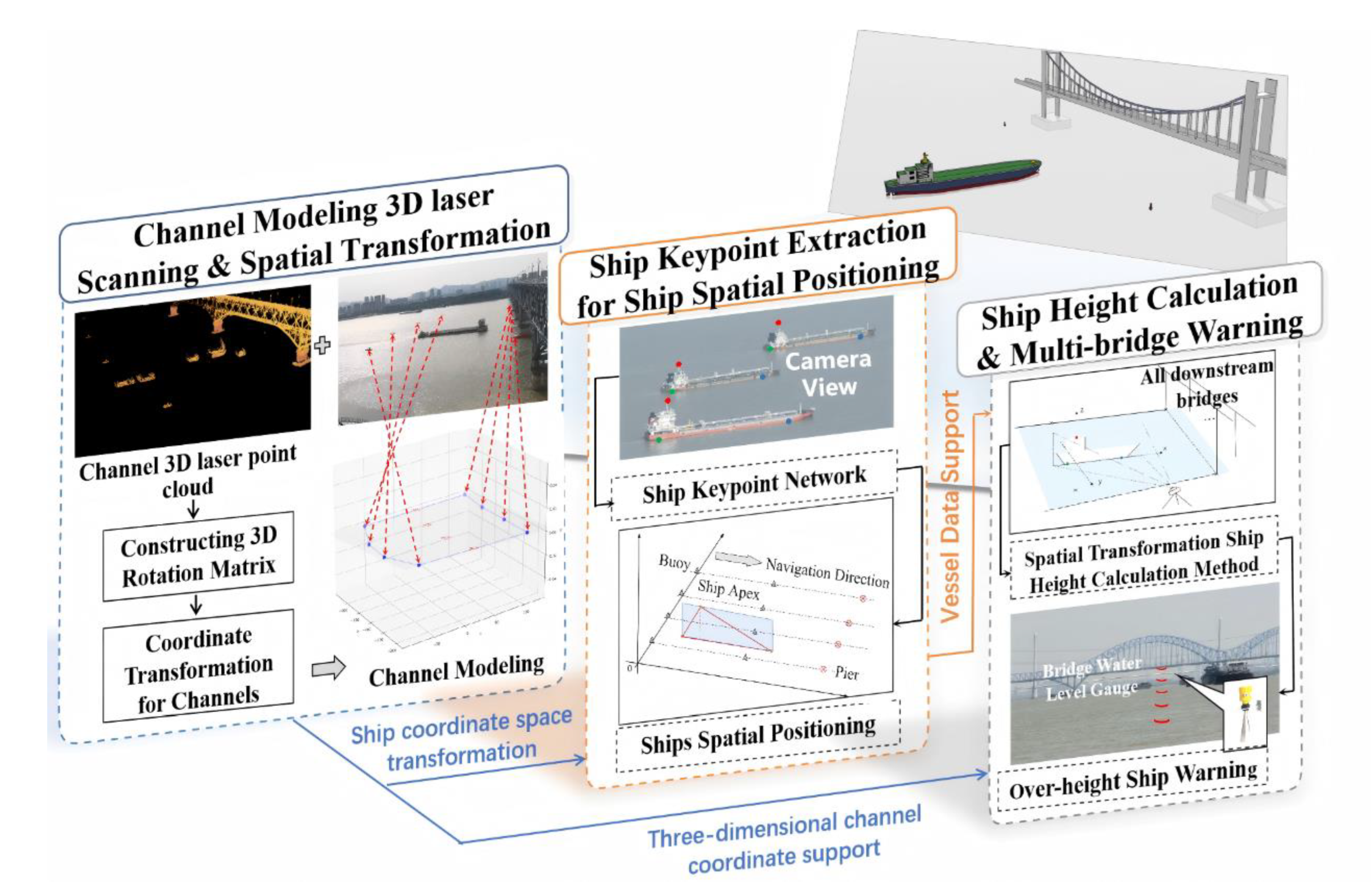

Real-Time Collision Warning System for Over-Height Ships at Bridges Based on Spatial Transformation

Abstract

1. Introduction

Background and Motivation

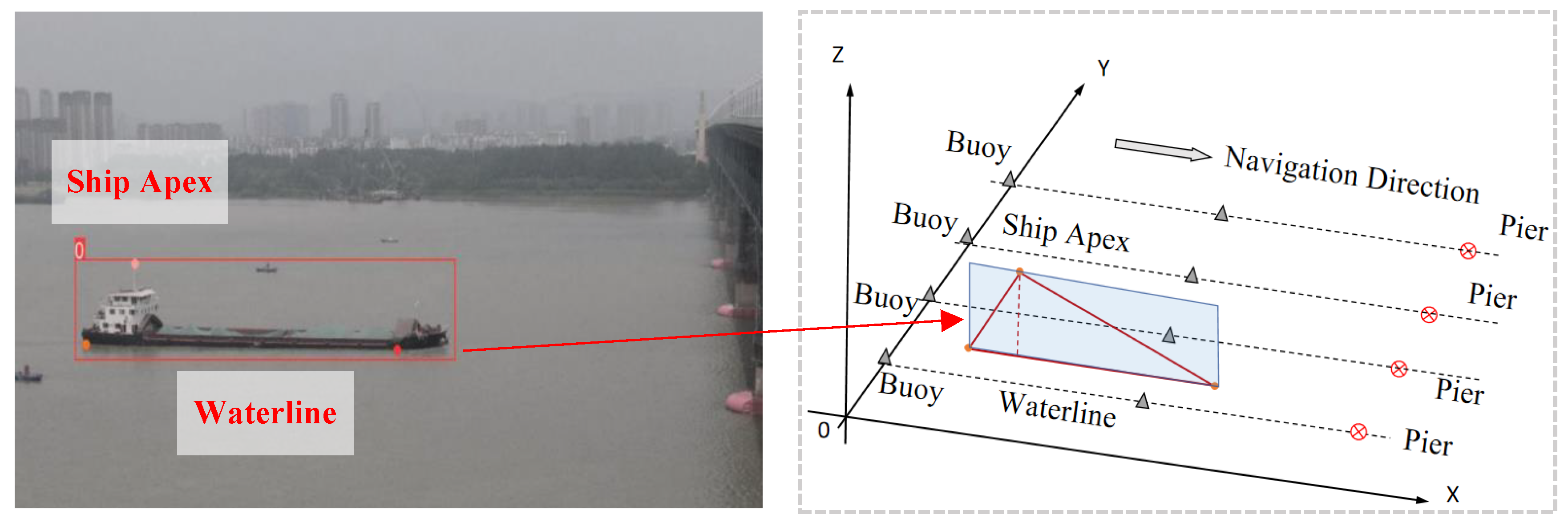

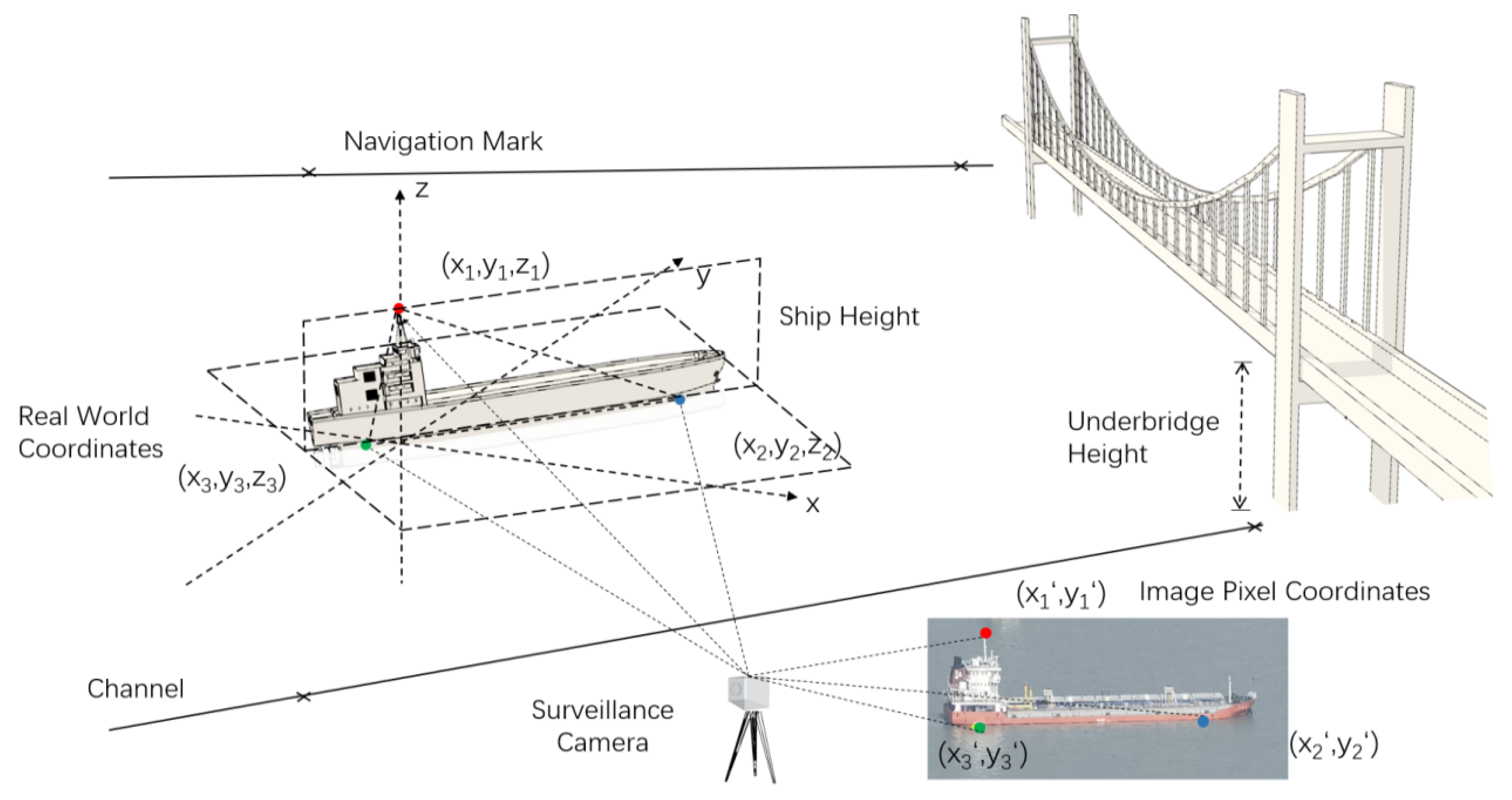

2. Theoretical Framework

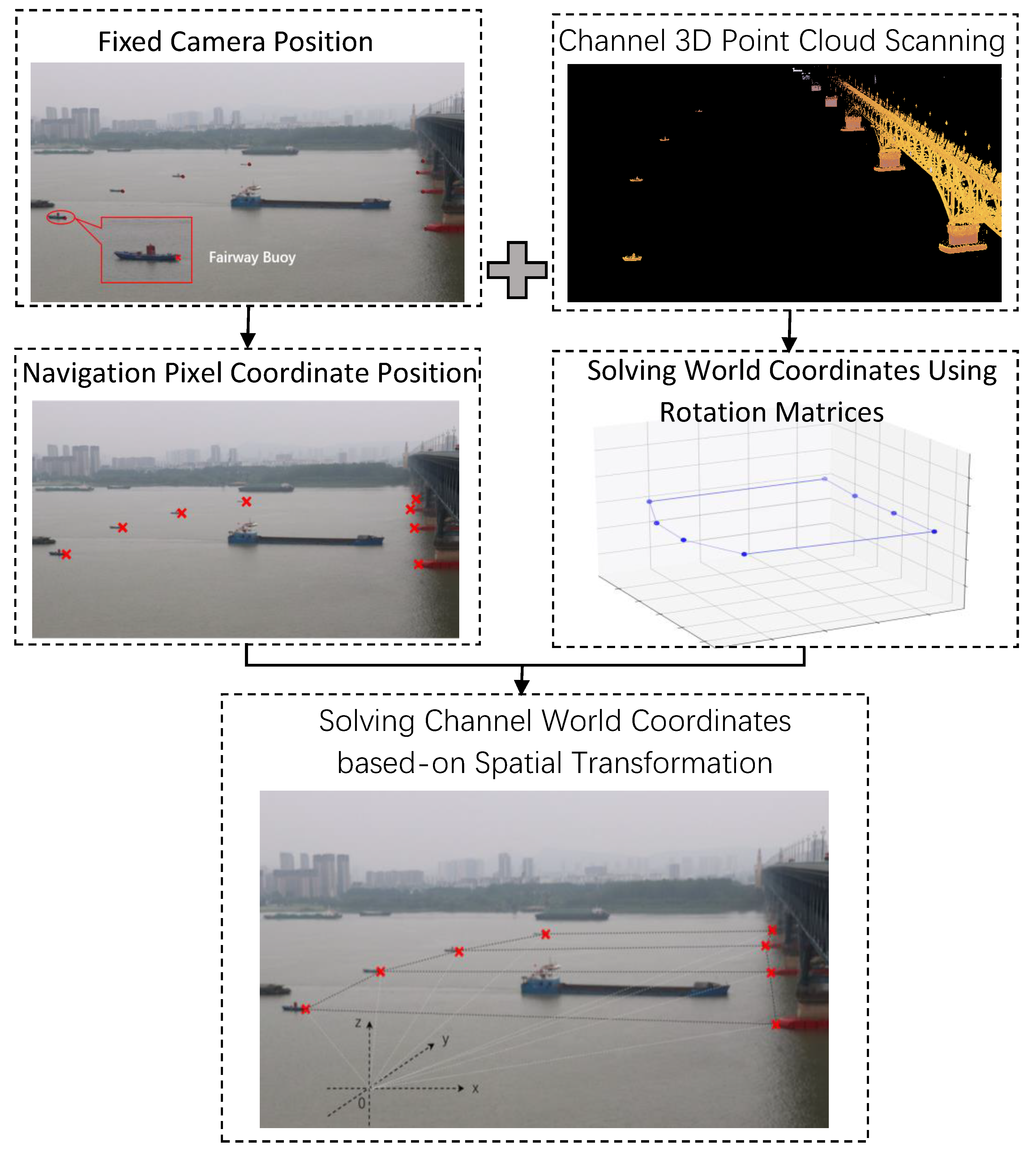

3. Precise Channel Coordinate Positioning Based on Spatial Transformation and 3D Laser Point Cloud

3.1. Principle and Structure of Spatial Transformation

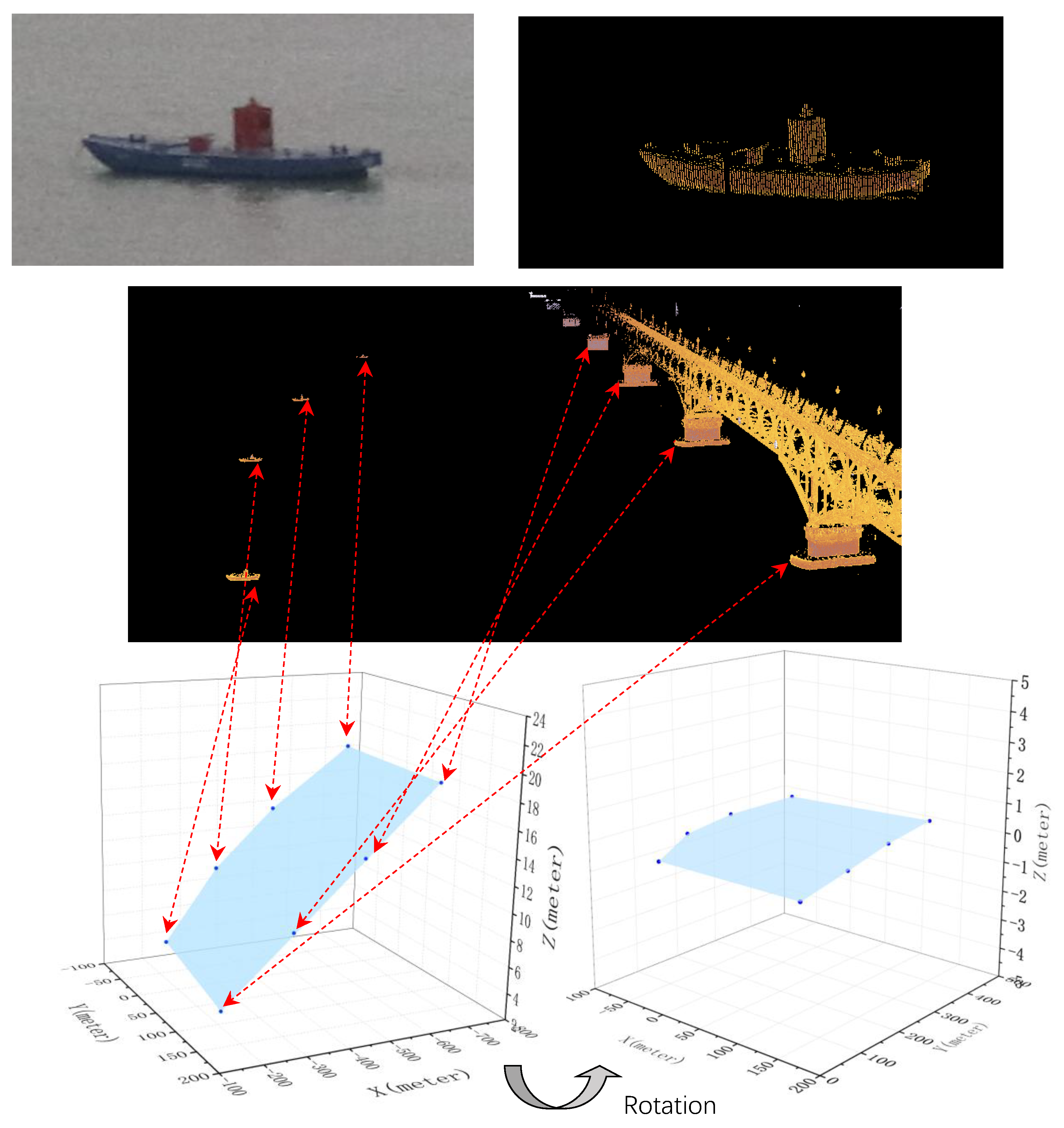

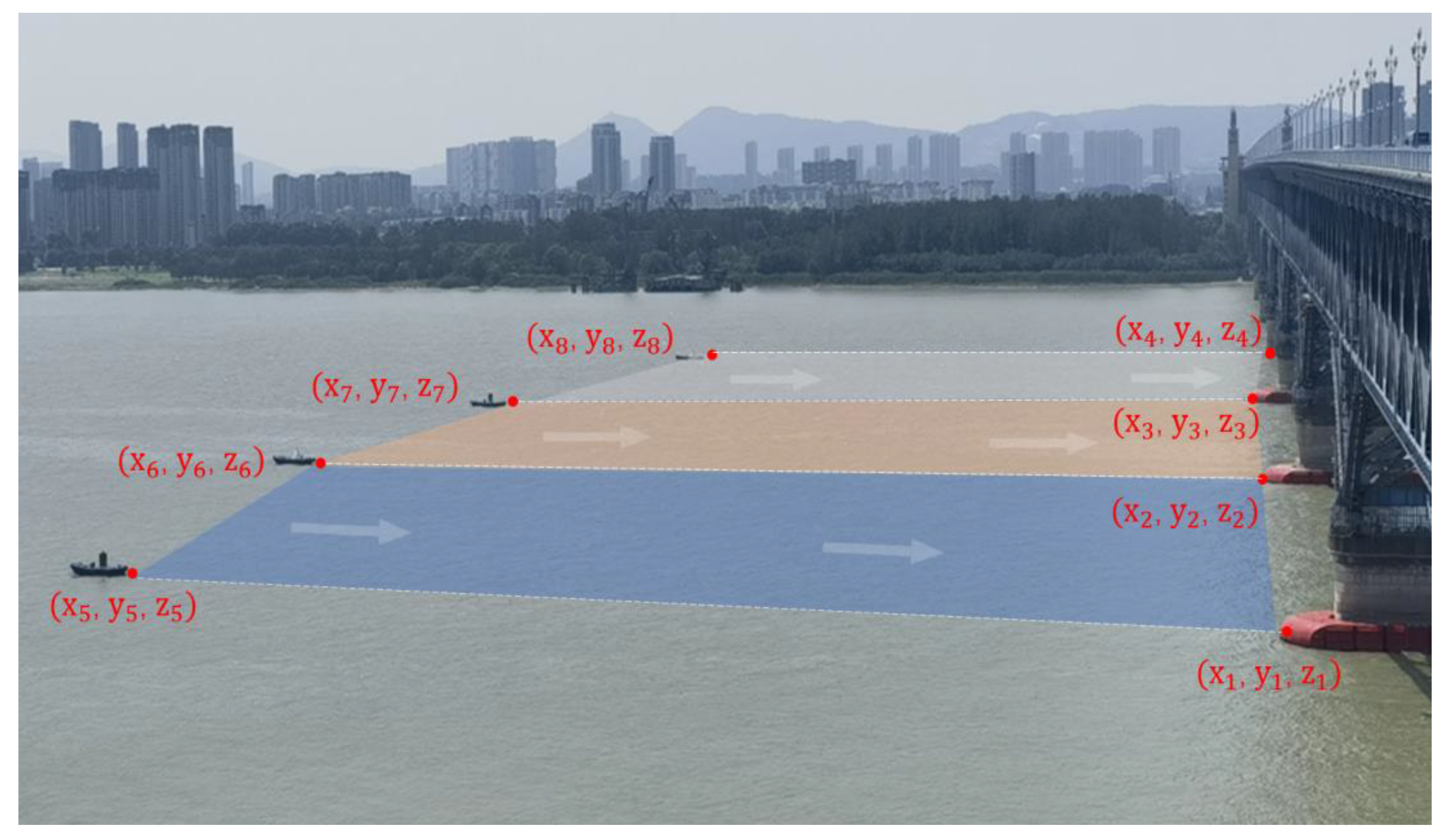

3.2. Calculation of Channel Spatial Coordinates Based on Laser Scanning

3.3. Principle of Transformation from Two-Dimensional Image Coordinates to Three-Dimensional Space

4. Ship Key Feature Extraction and Body Positioning Based on Edge Detection

4.1. Ship Recognition and Key Point Acquisition

4.2. Three-Dimensional Ship Body Positioning

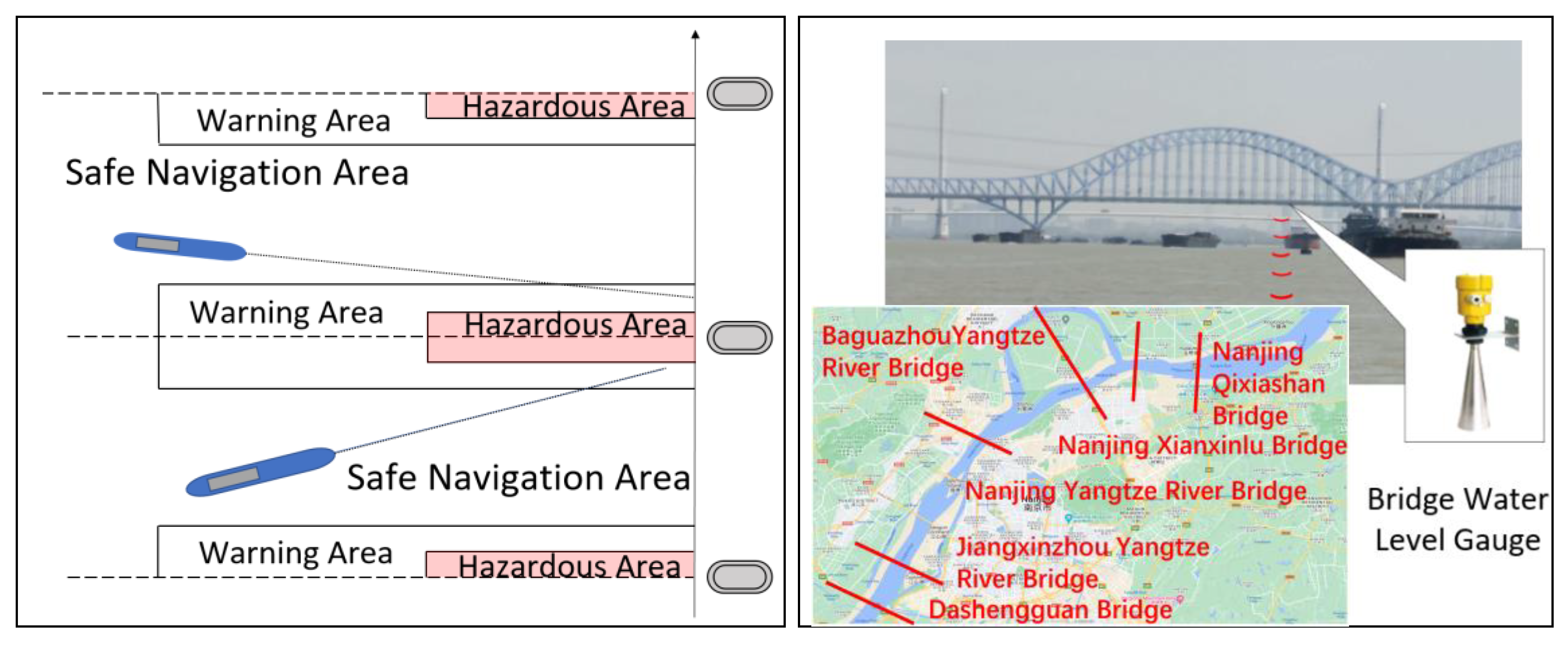

5. Multi-Pose Ship Height Calculation and Joint Over-Height and Yaw Warning

5.1. Multi-Pose Ship Height Calculation Method

5.2. Yaw Warning and Multi-Bridge Over-Height Warning

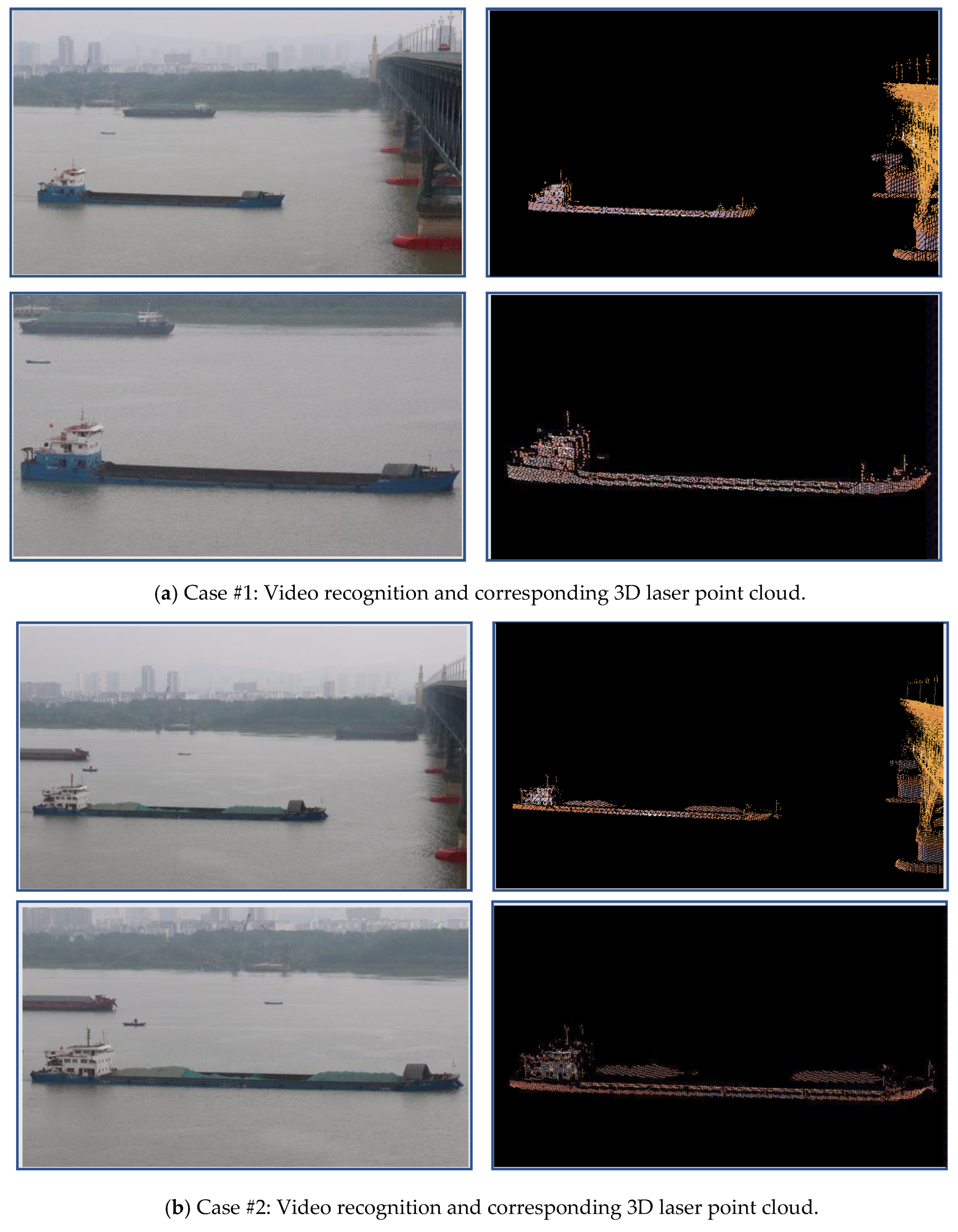

6. Experiment

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full Term |
|---|---|
| SAR | Synthetic Aperture Radar |
| AIS | Automatic Identification System |
| GF-3 | Gaofen-3 |
| FLCBS | Foam-filled Lattice Composite Bumper System |
| FRP | Fiber-reinforced Polymer |
| CAM | Constant Added Mass |
| FGLPS | Functionally Graded Lattice Filled Protection Structure |
| BMS | Bridge Management Systems |
| SHM | Structural Health Monitoring Systems |
| 3D | Three-Dimensional |
| BIM | Building Information Modeling |
| UAV | Unmanned Aerial Vehicle |
| CNN | Convolutional Neural Networks |
| DAPN | Dense Attention Pyramid Network |
| GAN | Generative Adversarial Network |
| YOLOv5 | You Only Look Once Version 5 |
| MC-YOLOv5s | MobileNet Compact YOLOv5 |
| YOLOv3 | You Only Look Once Version 3 |
| PRR | Peak Pulse Repetition Rate |
| HRNet | High-Resolution Network |


| Pier Coordinates (from Shore to Channel)/m | | | Buoy Coordinates/m | | |
|---|---|---|---|---|---|
| −182.599 | 122.924 | 3.72 | −217.01 | −50.07 | 4.485 |
| −343.005 | 132.309 | 8.932 | −364.294 | −67.271 | 9.551 |
| −503.107 | 140.814 | 13.88 | −503.783 | −56.778 | 14.165 |
| −662.499 | 156.126 | 19.097 | −666.48 | −24.677 | 19.186 |

| Pier Coordinates (from Shore to Channel)/m | | | Buoy Coordinates/m | | |
|---|---|---|---|---|---|
| 176.383 | 0 | 0 | 0 | 0 | 0 |
| 154.293 | 159.155 | 0 | −45.605 | 141.097 | 0 |
| 131.399 | 317.84 | 0 | −62.528 | 279.954 | 0 |
| 115.319 | 477.157 | 0 | −60.726 | 446.196 | 0 |
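The second coordinate table is numerically consistent with the first after a planar rigid transformation: drop the third coordinate, move the origin to the first buoy, and rotate so that the first pier lies on the positive x axis. The following Python sketch illustrates that reading of the data; it is an inference from the tabulated values, not a procedure stated in the article, and the names `to_local_frame`, `local_piers`, and `local_buoys` are hypothetical. Under this assumption all four pier rows and the first three buoy rows are reproduced to within about 0.01 m, while the fourth buoy row comes out roughly 2 m away from the tabulated x value, so that row may rest on a different measurement.

```python
import math

# (x, y) values copied from the first table; the third column is ignored here.
piers = [(-182.599, 122.924), (-343.005, 132.309),
         (-503.107, 140.814), (-662.499, 156.126)]
buoys = [(-217.01, -50.07), (-364.294, -67.271),
         (-503.783, -56.778), (-666.48, -24.677)]


def to_local_frame(point, origin, x_ref):
    """Express `point` in a planar frame with `origin` at (0, 0) and `x_ref` on the +x axis."""
    ux, uy = x_ref[0] - origin[0], x_ref[1] - origin[1]
    norm = math.hypot(ux, uy)
    ux, uy = ux / norm, uy / norm  # unit vector of the new x axis
    dx, dy = point[0] - origin[0], point[1] - origin[1]
    # Project onto the new x axis and onto its 90-degree counterclockwise normal.
    return (dx * ux + dy * uy, -dx * uy + dy * ux)


# Assumed frame: origin at the first buoy, x axis pointing at the first pier.
origin, x_ref = buoys[0], piers[0]
local_piers = [to_local_frame(p, origin, x_ref) for p in piers]
local_buoys = [to_local_frame(b, origin, x_ref) for b in buoys]

for name, pts in (("pier", local_piers), ("buoy", local_buoys)):
    for i, (x, y) in enumerate(pts, start=1):
        print(f"{name} {i}: x = {x:9.3f} m, y = {y:9.3f} m")
```

Because the transformation is a pure translation plus rotation in the plane, horizontal distances between points are preserved, which is a quick way to check whether a given row fits this reading.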

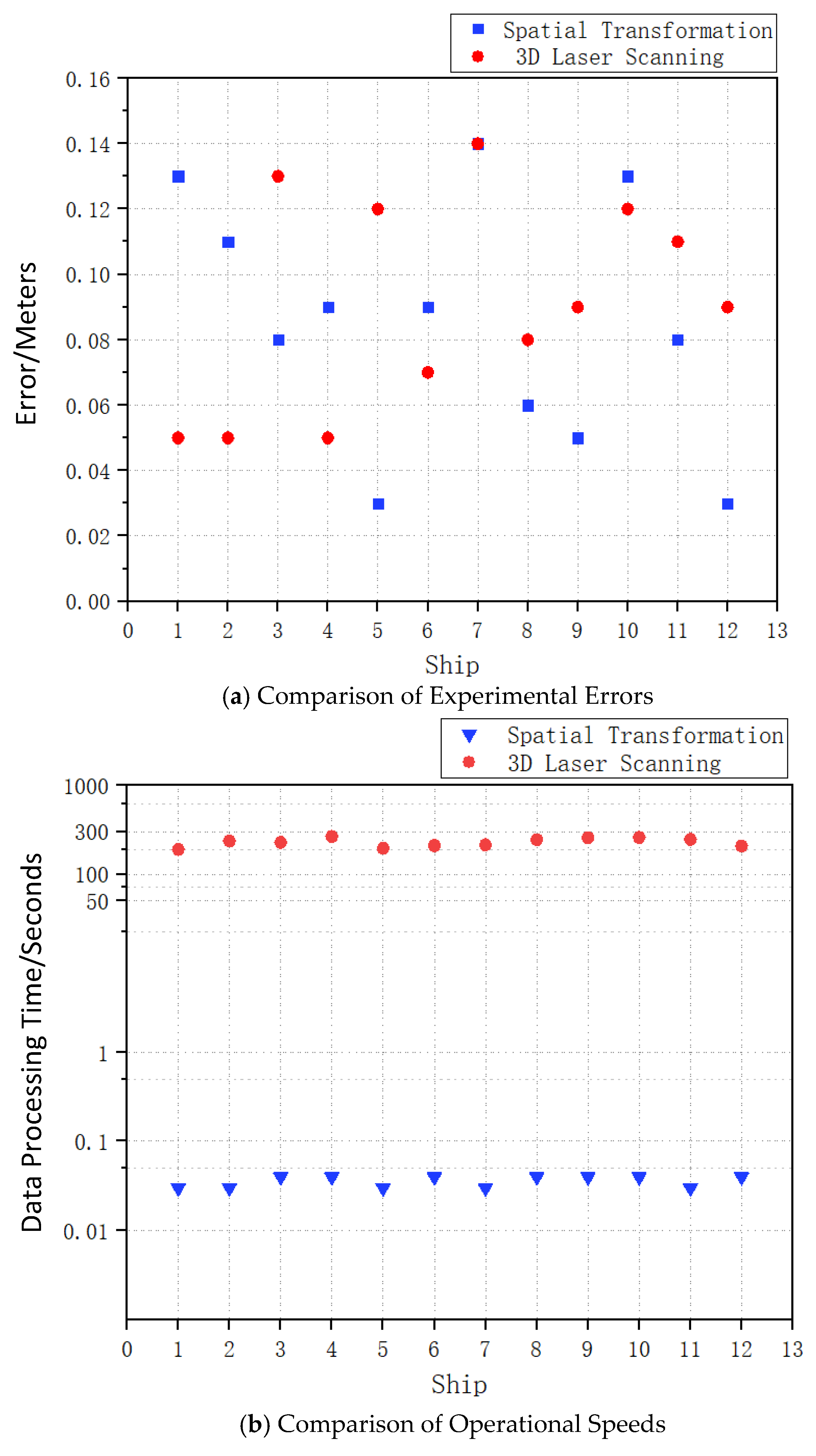

| Test #1 | Actual Ship Height/m | Spatial Transformation Height/m | Spatial Transformation Processing Time/s | Spatial Transformation Difference/m | 3D Laser Scanning Height/m | 3D Laser Scanning Processing Time/s | 3D Laser Scanning Difference/m |
|---|---|---|---|---|---|---|---|
| Vessel I | 14.5 | 14.63 | 0.03 | 0.13 | 14.55 | 189 | 0.05 |
| Vessel II | 15.6 | 15.49 | 0.03 | −0.11 | 15.55 | 235 | −0.05 |
| Vessel III | 14.7 | 14.78 | 0.04 | 0.08 | 14.57 | 227 | −0.13 |
| Vessel IV | 13.5 | 13.41 | 0.04 | −0.09 | 13.45 | 265 | −0.05 |
| Vessel V | 14.0 | 14.03 | 0.03 | 0.03 | 14.12 | 195 | 0.12 |
| Vessel VI | 14.6 | 14.69 | 0.04 | 0.09 | 14.53 | 209 | −0.07 |
| Mean (absolute value for differences) | | | 0.035 | 0.088 | | 220 | 0.078 |

| Test #2 | Actual Ship Height/m | Spatial Transformation Height/m | Spatial Transformation Processing Time/s | Spatial Transformation Difference/m | 3D Laser Scanning Height/m | 3D Laser Scanning Processing Time/s | 3D Laser Scanning Difference/m |
|---|---|---|---|---|---|---|---|
| Vessel I | 14.5 | 14.36 | 0.03 | −0.14 | 14.64 | 213 | 0.14 |
| Vessel II | 15.6 | 15.54 | 0.04 | −0.06 | 15.52 | 244 | −0.08 |
| Vessel III | 14.7 | 14.75 | 0.04 | 0.05 | 14.61 | 256 | −0.09 |
| Vessel IV | 13.5 | 13.37 | 0.04 | −0.13 | 13.38 | 258 | −0.12 |
| Vessel V | 14.0 | 14.08 | 0.03 | 0.08 | 14.11 | 246 | 0.11 |
| Vessel VI | 14.6 | 14.63 | 0.04 | 0.03 | 14.51 | 207 | −0.09 |
| Mean (absolute value for differences) | | | 0.037 | 0.082 | | 237 | 0.11 |
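The final row of each test table is consistent with column means in which the measurement differences are averaged by absolute value. The short Python sketch below recomputes those figures from the per-vessel rows; the names `tests`, `summarize`, and `summary` are illustrative choices, and treating the last row as a mean is an inference from the arithmetic rather than something the tables state.

```python
from statistics import mean

# Per-vessel rows copied from the two test tables:
# (actual height/m, spatial-transformation height/m, its time/s,
#  3D-laser-scanning height/m, its time/s)
tests = {
    "Test #1": [
        (14.5, 14.63, 0.03, 14.55, 189),
        (15.6, 15.49, 0.03, 15.55, 235),
        (14.7, 14.78, 0.04, 14.57, 227),
        (13.5, 13.41, 0.04, 13.45, 265),
        (14.0, 14.03, 0.03, 14.12, 195),
        (14.6, 14.69, 0.04, 14.53, 209),
    ],
    "Test #2": [
        (14.5, 14.36, 0.03, 14.64, 213),
        (15.6, 15.54, 0.04, 15.52, 244),
        (14.7, 14.75, 0.04, 14.61, 256),
        (13.5, 13.37, 0.04, 13.38, 258),
        (14.0, 14.08, 0.03, 14.11, 246),
        (14.6, 14.63, 0.04, 14.51, 207),
    ],
}


def summarize(rows):
    """Return mean processing times and mean absolute height differences."""
    return {
        "st_time": mean(r[2] for r in rows),
        "st_mae": mean(abs(r[1] - r[0]) for r in rows),
        "laser_time": mean(r[4] for r in rows),
        "laser_mae": mean(abs(r[3] - r[0]) for r in rows),
    }


summary = {name: summarize(rows) for name, rows in tests.items()}
for name, s in summary.items():
    print(f"{name}: spatial transformation {s['st_time']:.3f} s, "
          f"|diff| {s['st_mae']:.3f} m; laser scanning {s['laser_time']:.0f} s, "
          f"|diff| {s['laser_mae']:.3f} m")
```

Averaging absolute values keeps positive and negative errors from cancelling, so the result reflects the typical size of the height error rather than its bias.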

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gu, S.; Zhang, J. Real-Time Collision Warning System for Over-Height Ships at Bridges Based on Spatial Transformation. Buildings 2025, 15, 2367. https://doi.org/10.3390/buildings15132367
