The Lightweight Method of Ground Penetrating Radar (GPR) Hidden Defect Detection Based on SESM-YOLO

Abstract

1. Introduction

2. Objectives

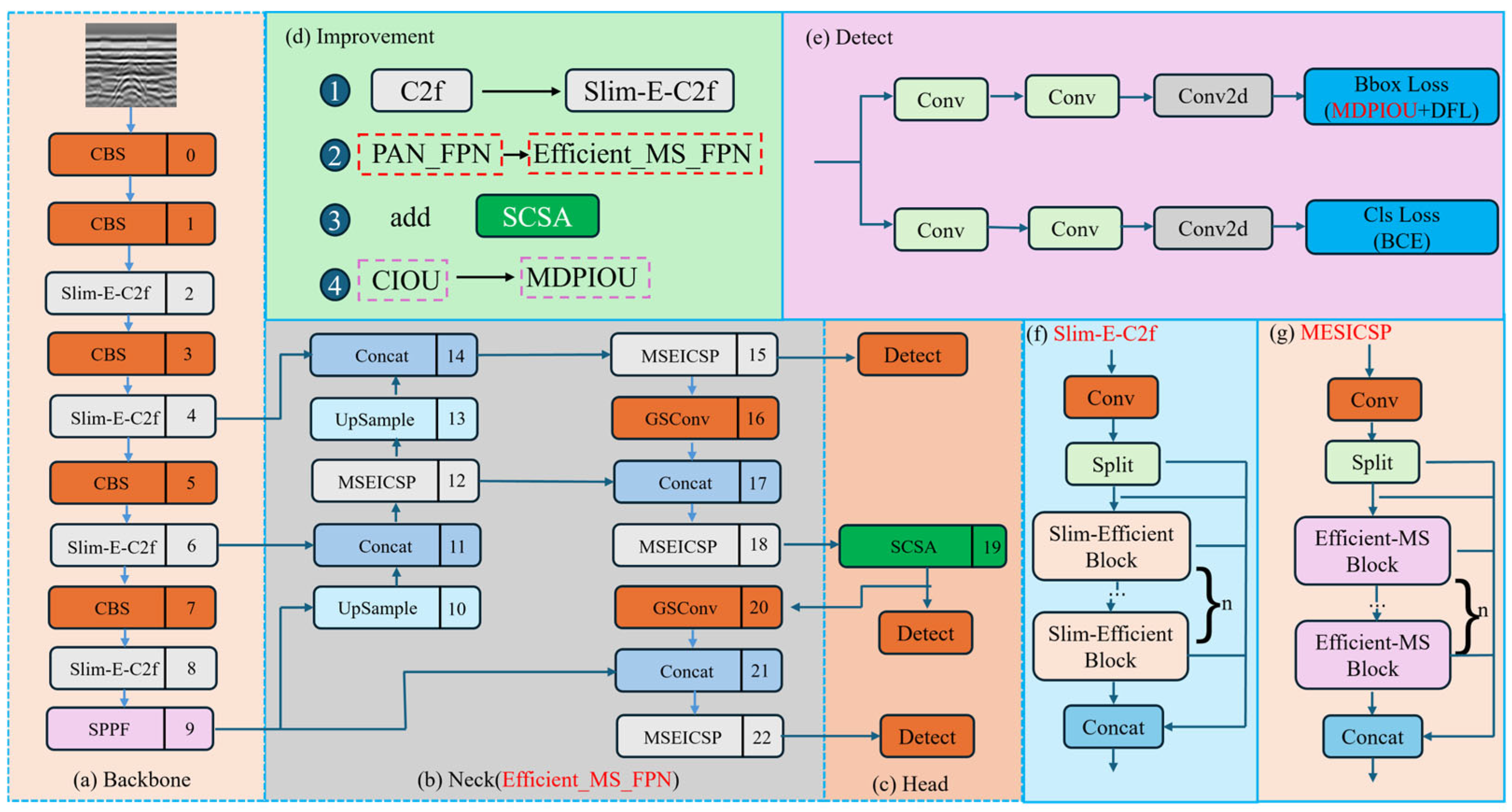

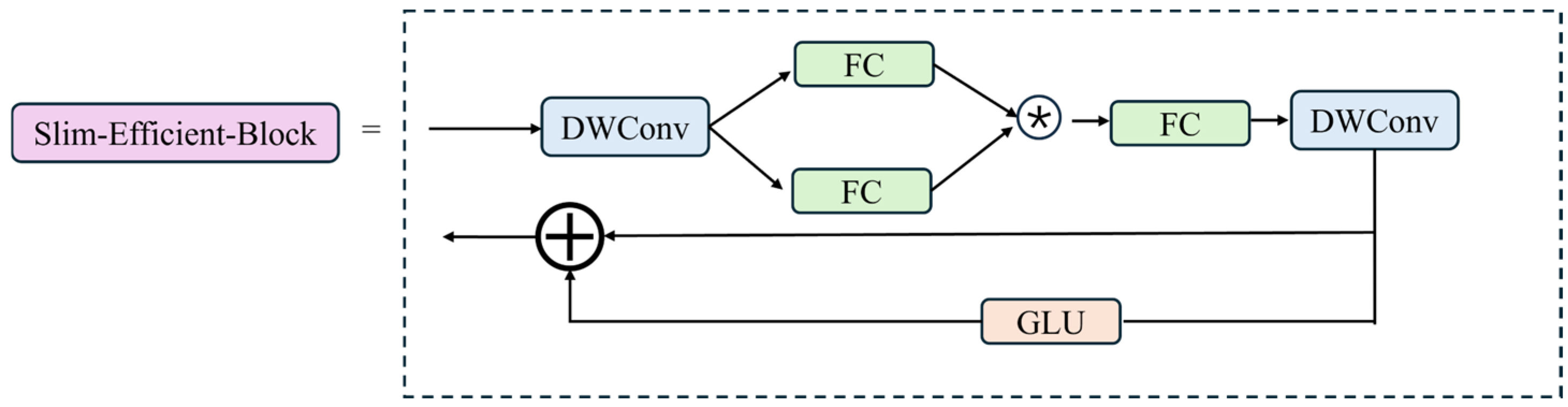

- We replace the bottleneck in the backbone’s C2f module with the Slim_Efficient_Block, maintaining the lightweight design while enhancing the network’s feature extraction capabilities.

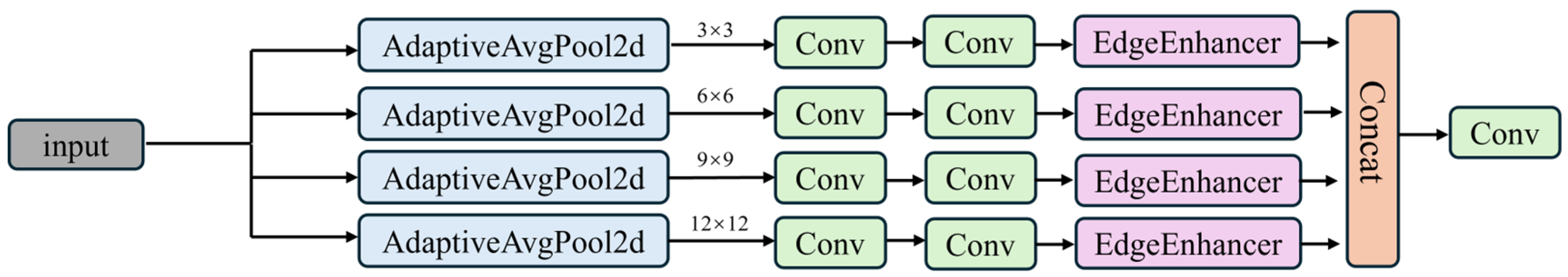

- We replace the PAFPN feature fusion network in the neck with the Efficient_MS_FPN feature fusion network, which effectively combines multi-scale and edge information, thereby improving the feature representation and model performance.

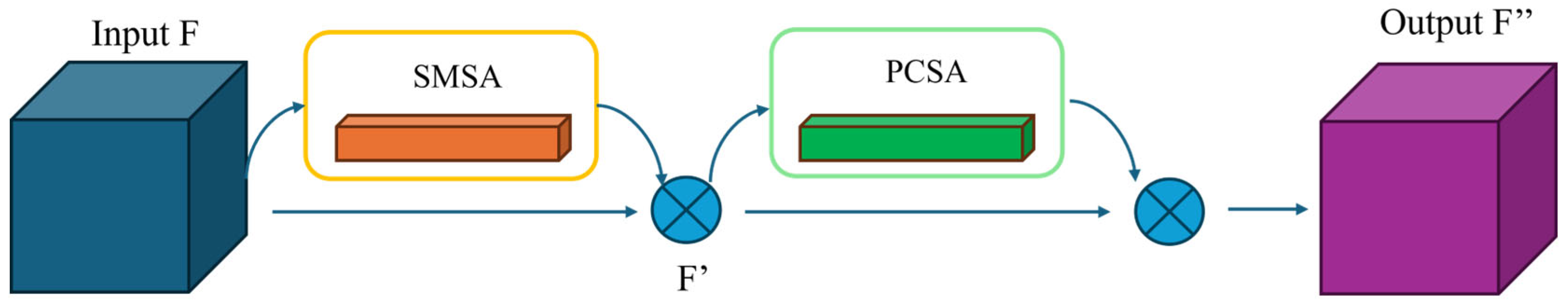

- We introduce the SCSA attention mechanism before the P3 detection head to enhance feature extraction and model robustness.

- We replace the loss function with MPDIoU, improving the localization accuracy of the target boxes.

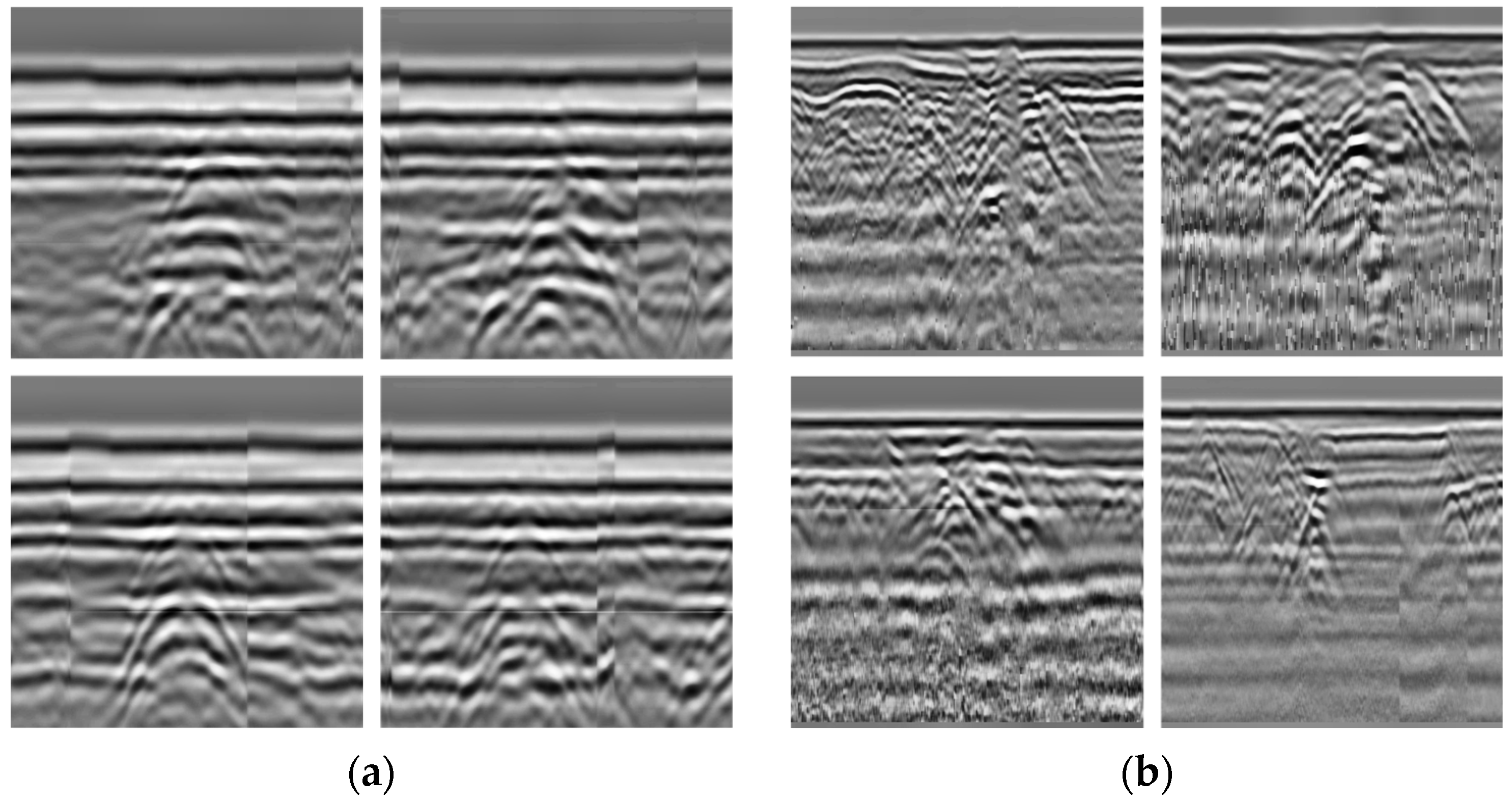

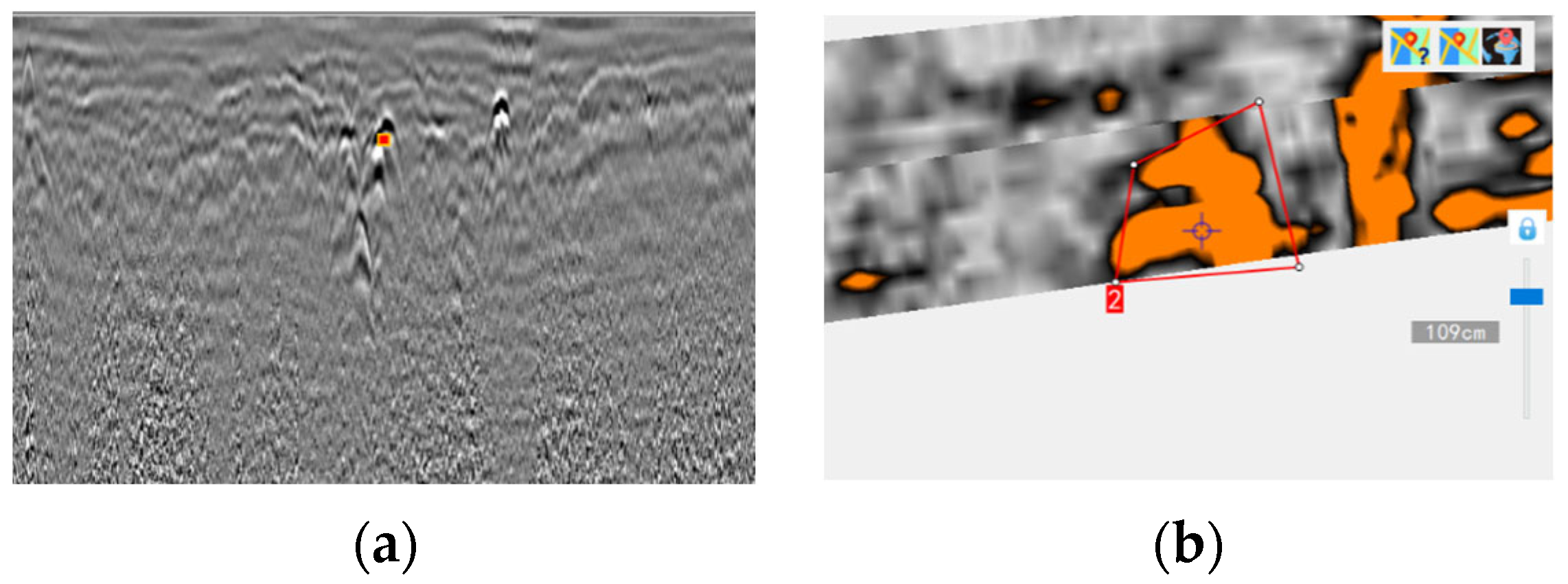

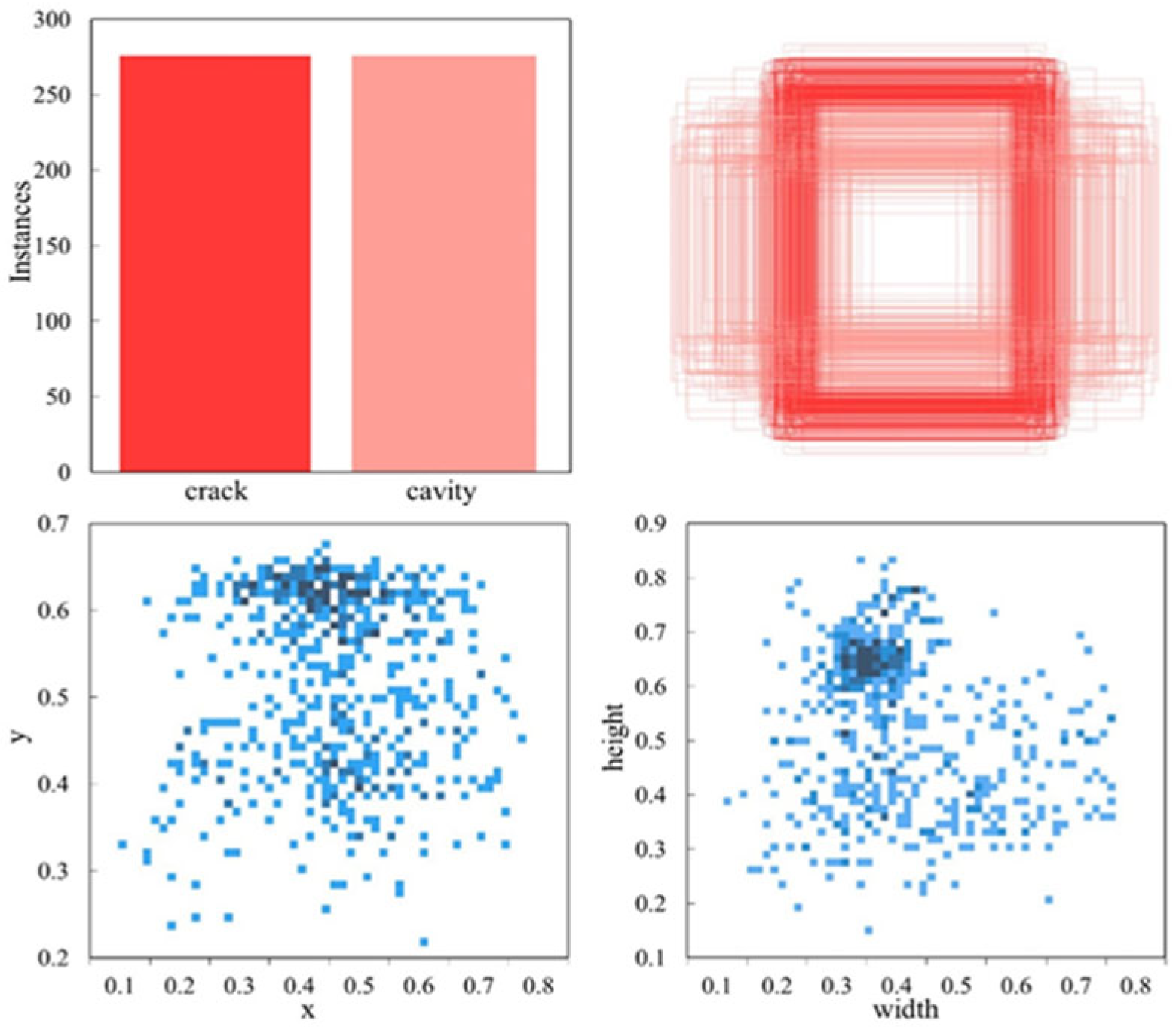

3. Preparation of GPR Dataset

4. Methodology

4.1. SESM-YOLO Network Architecture

- (1) To reduce model parameters and computational load, this study designed a Slim Efficient Block, as detailed in Section 4.2.

- (2) To extract deeper feature information from images, this study retains the PAN [25] and FPN [24] architectures of YOLOv8n while introducing the novel MEICSP module, as detailed in Section 4.3.

- (3) To enhance the model’s focus on defect-related features, we introduce the SCSA attention module, as detailed in Section 4.4.

- (4) To address the limitations of the CIoU loss function, we introduce the MPDIoU loss function, with implementation and analysis presented in Section 4.5.

4.2. Improvement of the Backbone Module

4.3. Improvement of the Neck Module

4.4. Spatial and Channel-Wise Selective Attention

4.5. Improvement of the Loss Function
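
As a rough illustration of the loss named in this section, the sketch below follows the definition given in the cited MPDIoU reference (Ma and Xu): the IoU of the two boxes minus the squared distances between their top-left and bottom-right corners, each normalized by the squared diagonal of the input image. The function names `mpdiou` and `mpdiou_loss` and the plain-tuple box format are assumptions made for readability; an actual training pipeline would work on batched tensors.

```python
def mpdiou(box_a, box_b, img_w, img_h):
    """MPDIoU of two (x1, y1, x2, y2) boxes inside an img_w x img_h image."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Plain IoU: intersection area over union area.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union if union > 0 else 0.0

    # Squared distances between matching corners of the two boxes.
    d1_sq = (ax1 - bx1) ** 2 + (ay1 - by1) ** 2  # top-left corners
    d2_sq = (ax2 - bx2) ** 2 + (ay2 - by2) ** 2  # bottom-right corners

    # Normalize by the squared image diagonal so the penalty is scale-free.
    norm = img_w ** 2 + img_h ** 2
    return iou - d1_sq / norm - d2_sq / norm


def mpdiou_loss(box_pred, box_gt, img_w, img_h):
    """Regression loss: zero when the predicted box matches the target exactly."""
    return 1.0 - mpdiou(box_pred, box_gt, img_w, img_h)


if __name__ == "__main__":
    # Hypothetical boxes in a 640 x 640 input, for illustration only.
    print(mpdiou_loss((100, 100, 200, 200), (110, 105, 210, 195), 640, 640))
```

Because both corner distances vanish only when the boxes coincide, the loss still provides a gradient signal when two candidate boxes share the same IoU with the target but differ in position or size.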

5. Experiment Results and Discussion

5.1. Experiment Introduction

5.1.1. Experimental Environment

5.1.2. Evaluation Indicators
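
The result tables report precision, recall, and mAP. The sketch below shows how these quantities are conventionally computed from detection counts and a precision-recall curve. It assumes the Pascal VOC style all-point interpolation for average precision; the exact interpolation scheme used in the experiments is not stated here, and the helper names are illustrative.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def average_precision(recalls, precisions):
    """Area under the precision-recall curve (all-point interpolation).

    `recalls` must be sorted in increasing order, with `precisions` aligned to it.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap):
    """mAP: the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


if __name__ == "__main__":
    print(precision_recall(tp=8, fp=2, fn=2))
    print(average_precision([0.5, 1.0], [1.0, 0.5]))
```

mAP@0.5 applies this procedure with a detection counted as correct when its IoU with the ground truth is at least 0.5, while mAP@0.5~0.95 averages the result over IoU thresholds from 0.5 to 0.95.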

5.2. Experiment Results

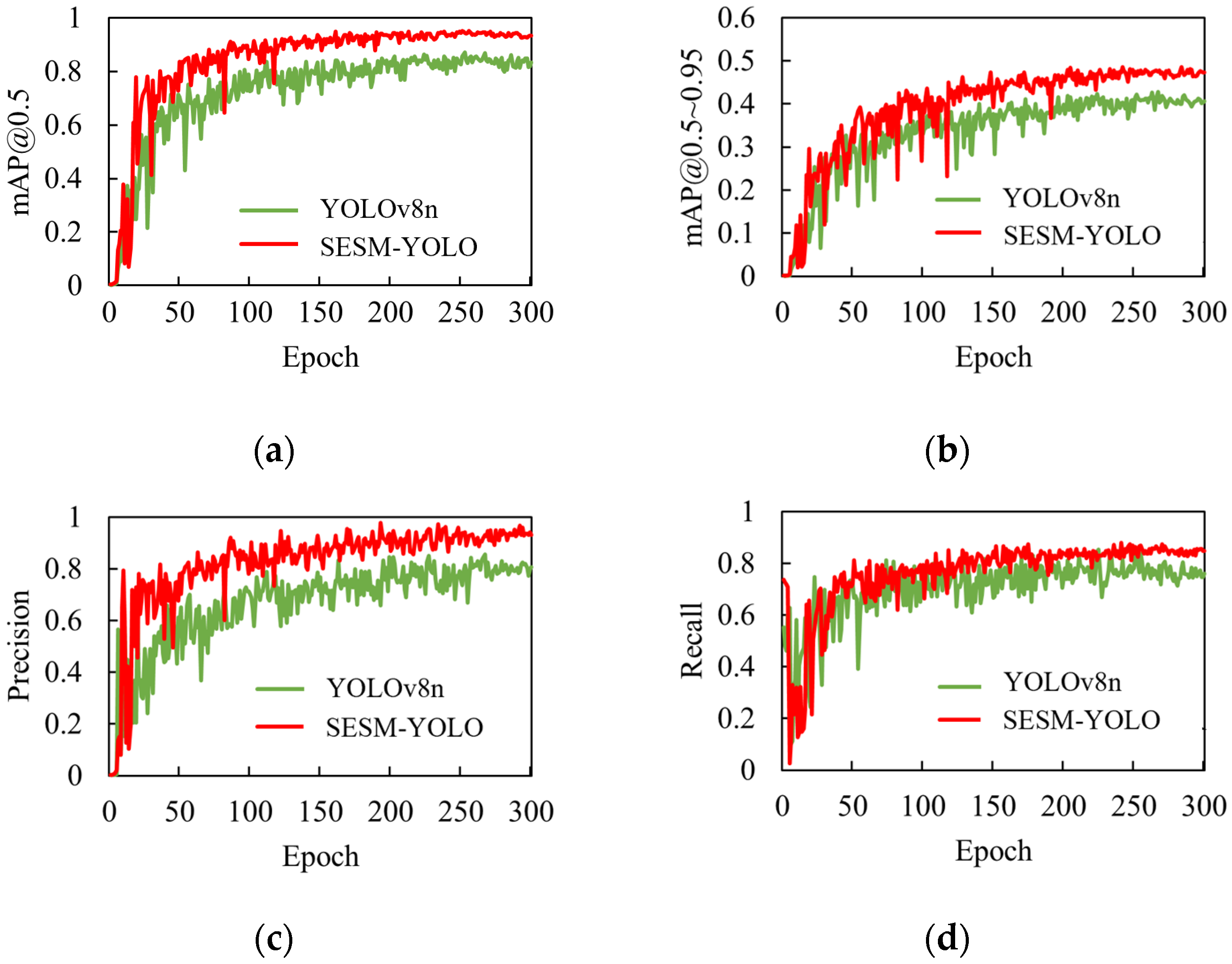

5.2.1. Training Process Comparison

5.2.2. Comparison Experiment of Attention Mechanisms

5.2.3. Comparison Experiment of Modified C2f Module

5.2.4. Comparison Experiment of Feature Fusion Network

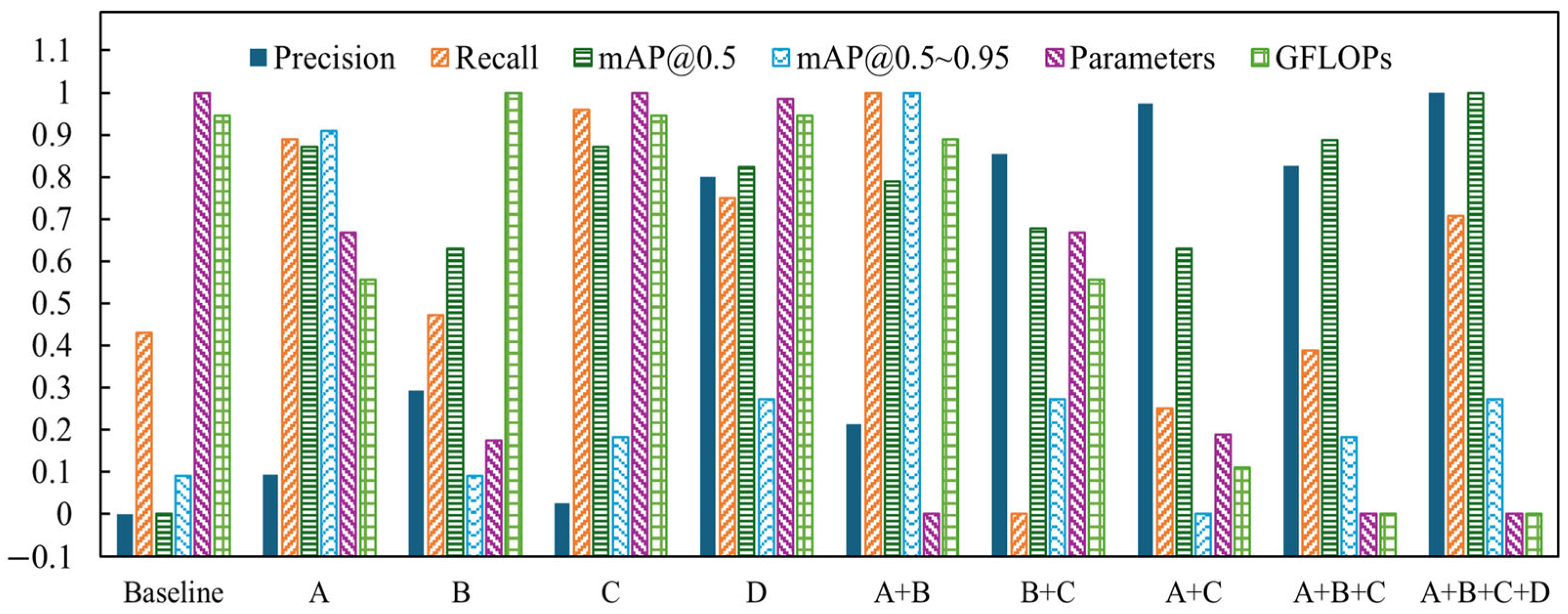

5.2.5. Ablation Experiments

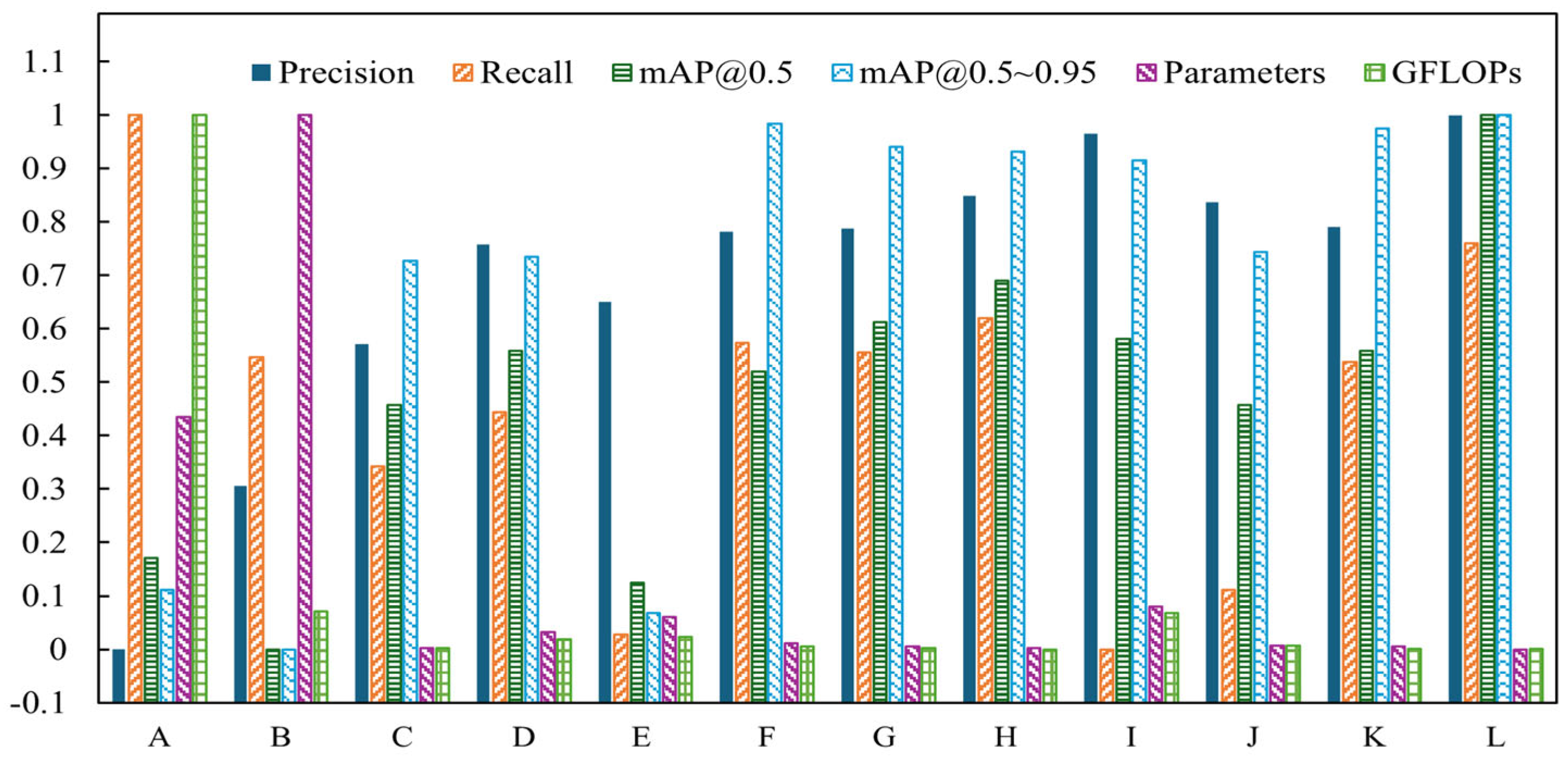

5.2.6. Comparison Experiments

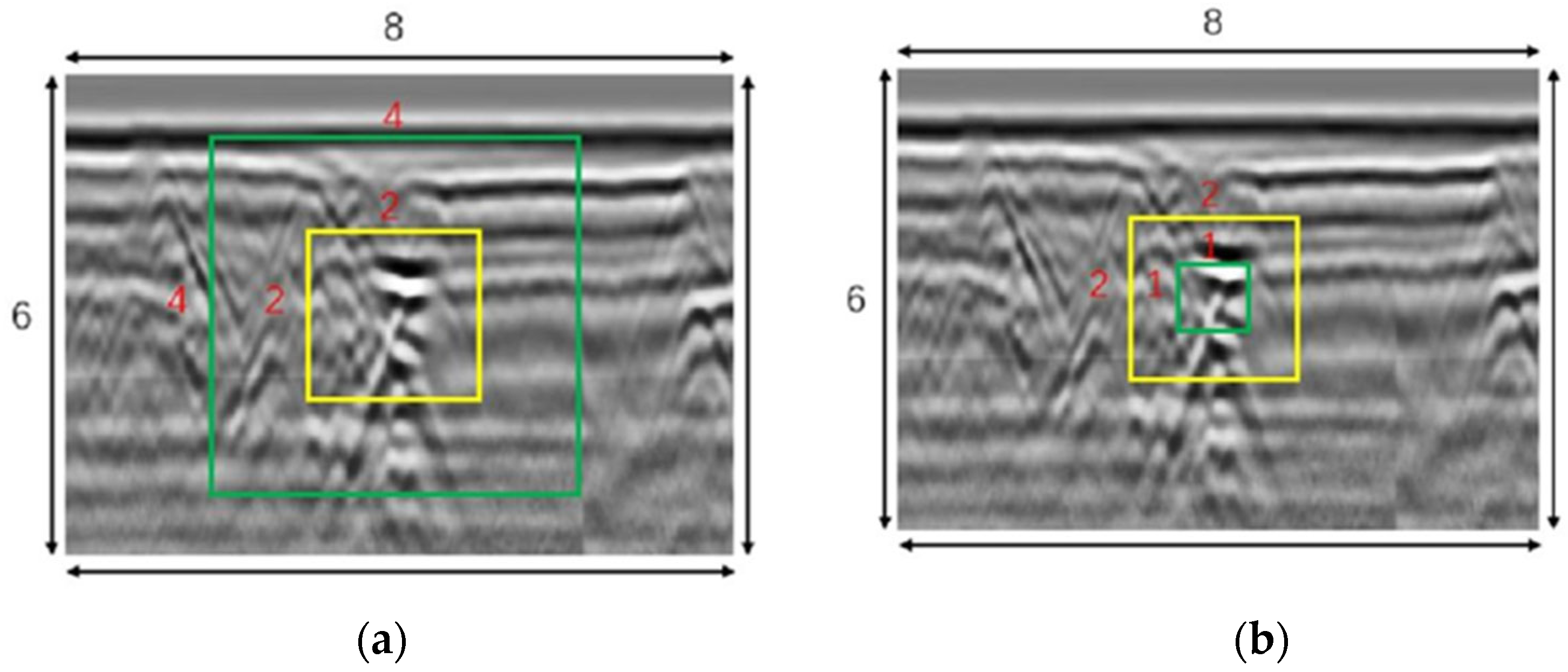

5.2.7. Visualization Analysis

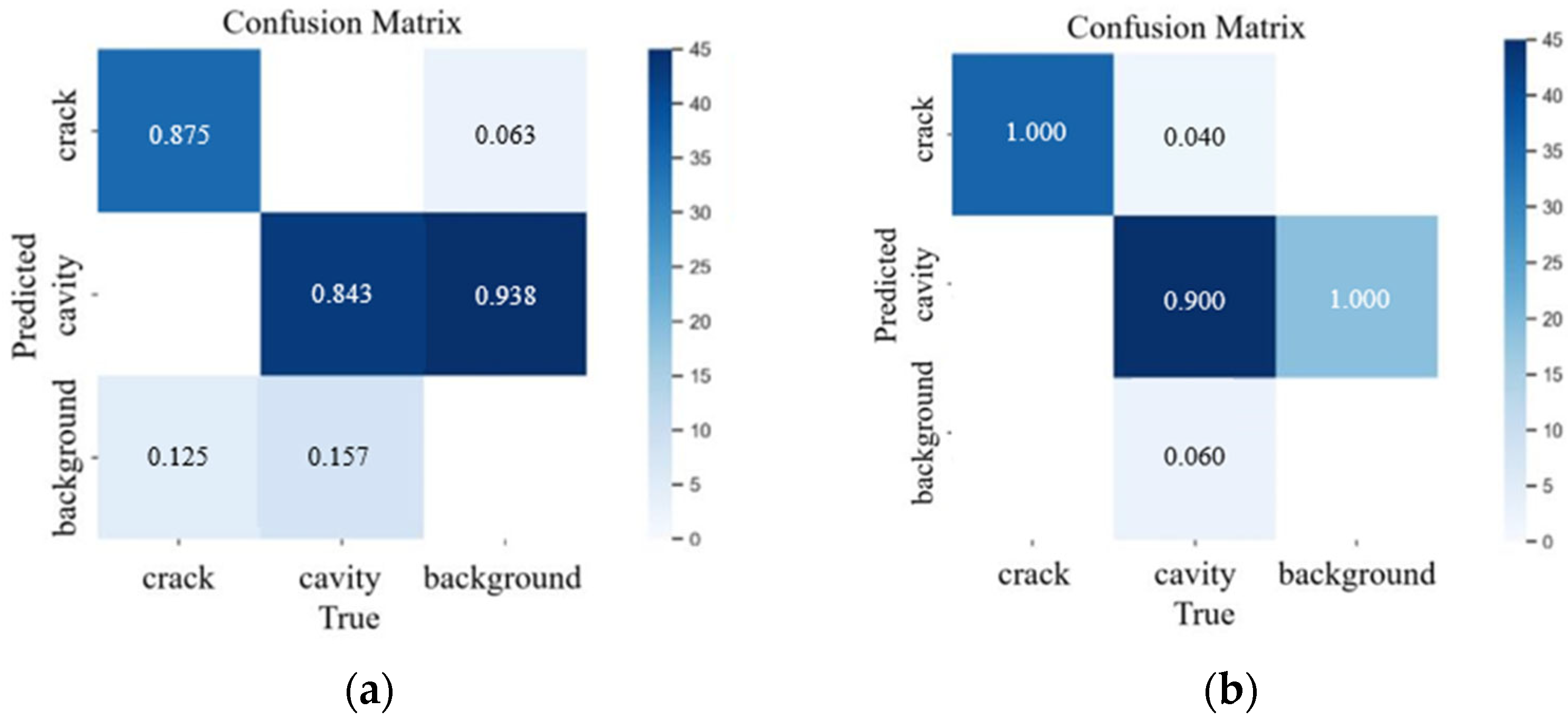

- 1.

- Confusion matrix

- 2.

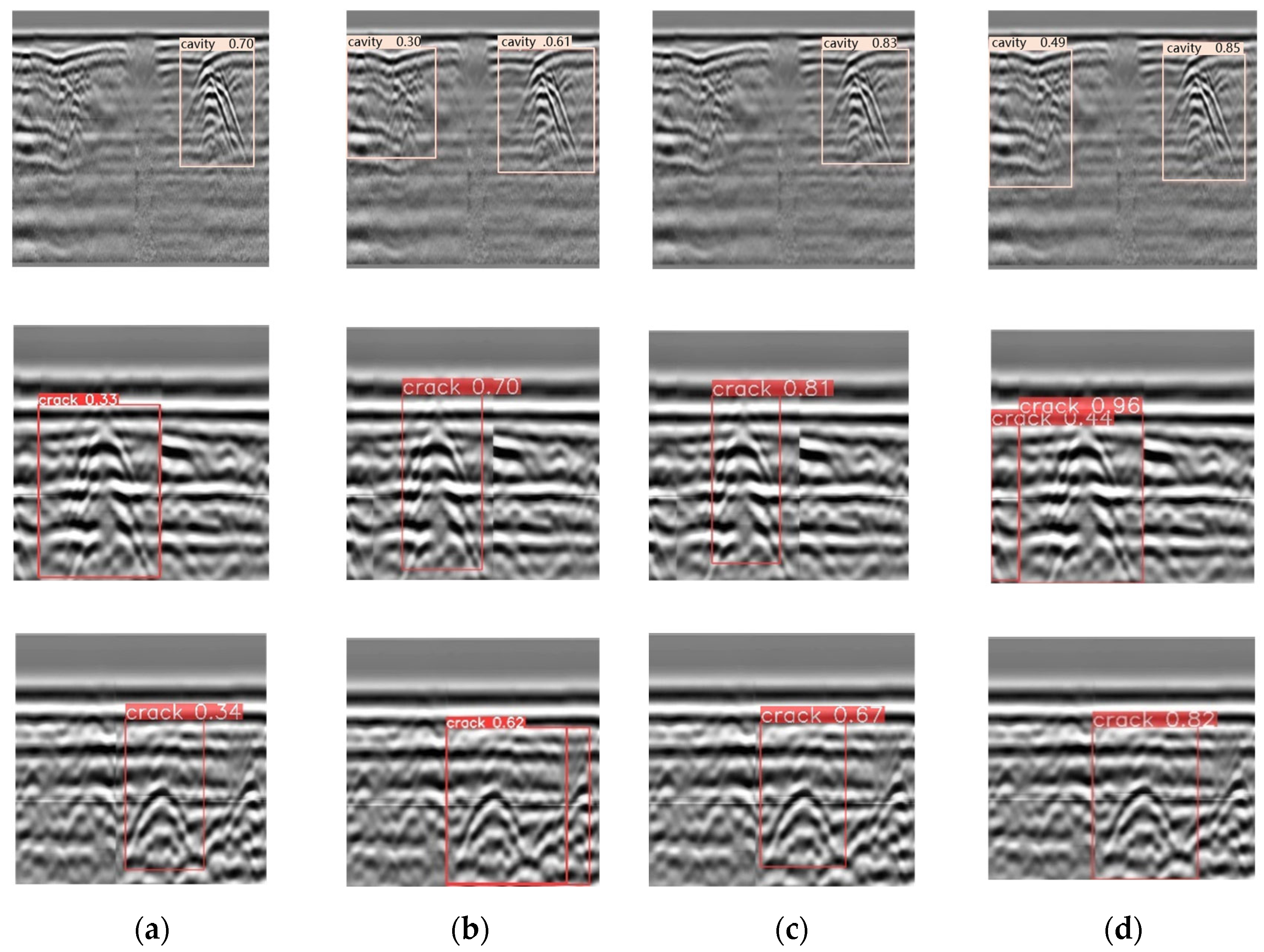

- Inference results

- 3.

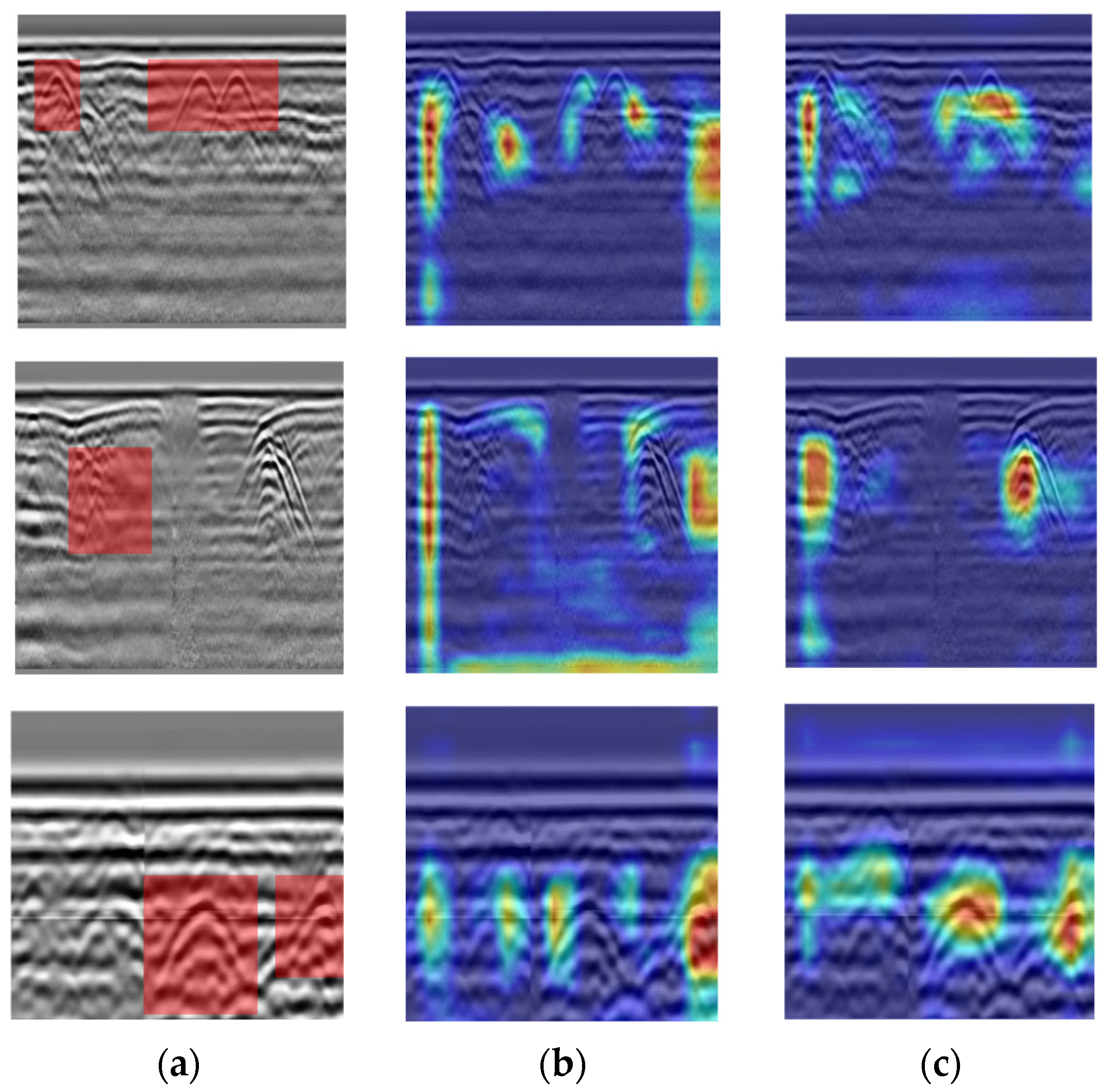

- Heat maps

6. Conclusions

- In the module-wise comparison experiments, the proposed improvements were not the best on every individual metric, but they offered the best overall balance between detection accuracy and lightweight deployment requirements. This balance reflects a design that prioritizes the performance dimensions most critical for embedded GPR applications;

- Compared with YOLOv8n, SESM-YOLO raises mAP@0.5 from 86.6% to 92.8% while reducing the number of model parameters from 3.01 M to 2.32 M, meeting the requirements for lightweight detection;

- Comparative experiments with mainstream object detection models demonstrate that the algorithm proposed in this study outperforms existing state-of-the-art methods in both accuracy and inference speed for GPR defect image recognition tasks;

- The comparison results of real-time inference and heat maps indicate that SESM-YOLO places greater emphasis on the features of damage, reducing interference from background factors and effectively enhancing identification accuracy.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Rasol, M.A.; Pérez-Gracia, V.; Fernandes, F.M.; Pais, J.C.; Santos-Assunçao, S.; Santos, C.; Sossa, V. GPR Laboratory Tests and Numerical Models to Characterize Cracks in Cement Concrete Specimens, Exemplifying Damage in Rigid Pavement. Measurement 2020, 158, 107662.

2. Solla, M.; Pérez-Gracia, V.; Fontul, S. A Review of GPR Application on Transport Infrastructures: Troubleshooting and Best Practices. Remote Sens. 2021, 13, 672.

3. Liu, C.; Du, Y.; Yue, G.; Li, Y.; Wu, D.; Li, F. Advances in Automatic Identification of Road Subsurface Distress Using Ground Penetrating Radar: State of the Art and Future Trends. Autom. Constr. 2024, 158, 105185.

4. Zhang, M.; Feng, X.; Bano, M.; Xing, H.; Wang, T.; Liang, W.; Zhou, H.; Dong, Z.; An, Y.; Zhang, Y. Review of Ground Penetrating Radar Applications for Water Dynamics Studies in Unsaturated Zone. Remote Sens. 2022, 14, 5993.

5. Liu, Z.; Wu, W.; Gu, X.; Li, S.; Wang, L.; Zhang, T. Application of Combining YOLO Models and 3D GPR Images in Road Detection and Maintenance. Remote Sens. 2021, 13, 1081.

6. Leng, Z.; Al-Qadi, I.L. An Innovative Method for Measuring Pavement Dielectric Constant Using the Extended CMP Method with Two Air-Coupled GPR Systems. NDT E Int. 2014, 66, 90–98.

7. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

8. Bhatt, D.; Patel, C.; Talsania, H.; Patel, J.; Vaghela, R.; Pandya, S.; Modi, K.; Ghayvat, H. CNN Variants for Computer Vision: History, Architecture, Application, Challenges and Future Scope. Electronics 2021, 10, 2470.

9. Besaw, L.E.; Stimac, P.J. Deep Convolutional Neural Networks for Classifying GPR B-Scans; Bishop, S.S., Isaacs, J.C., Eds.; SPIE: Baltimore, MD, USA, 2015; p. 945413.

10. Reichman, D.; Collins, L.M.; Malof, J.M. Some Good Practices for Applying Convolutional Neural Networks to Buried Threat Detection in Ground Penetrating Radar. In Proceedings of the 2017 9th International Workshop on Advanced Ground Penetrating Radar (IWAGPR), Edinburgh, UK, 28–30 June 2017; pp. 1–5.

11. Hou, Y.; Li, Q.; Zhang, C.; Lu, G.; Ye, Z.; Chen, Y.; Wang, L.; Cao, D. The State-of-the-Art Review on Applications of Intrusive Sensing, Image Processing Techniques, and Machine Learning Methods in Pavement Monitoring and Analysis. Engineering 2021, 7, 845–856.

12. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

13. Wang, X.; Shrivastava, A.; Gupta, A. A-Fast-RCNN: Hard Positive Generation via Adversary for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3039–3048.

14. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

15. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

16. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; Volume 9905, pp. 21–37. ISBN 978-3-319-46447-3.

17. Wang, D.; Liu, Z.; Gu, X.; Wu, W.; Chen, Y.; Wang, L. Automatic Detection of Pothole Distress in Asphalt Pavement Using Improved Convolutional Neural Networks. Remote Sens. 2022, 14, 3892.

18. Du, Y.; Yue, G.; Liu, C.; Li, F.; Cai, W. Automatic Identification Method for Urban Cavities Using Multi-Feature Fusion of Ground Penetrating Radar. China J. Highw. Transp. 2023, 36, 108–119.

19. Liu, Z.; Gu, X.; Li, J.; Dong, Q.; Jiang, J. Deep Learning-Enhanced Approach for Numerical Simulation of Ground-Penetrating Radar and Road Crack Image Detection. Chin. J. Geophys. 2024, 67, 2455–2471.

20. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

21. Ozkaya, U.; Melgani, F.; Belete Bejiga, M.; Seyfi, L.; Donelli, M. GPR B Scan Image Analysis with Deep Learning Methods. Measurement 2020, 165, 107770.

22. Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO (Version 8.0.0) [Computer Software]. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 27 May 2025).

23. Zhou, F.; Zhao, H.; Nie, Z. Safety Helmet Detection Based on YOLOv5. In Proceedings of the 2021 IEEE International Conference on Power Electronics, Computer Applications (ICPECA), Shenyang, China, 22–24 January 2021; pp. 6–11.

24. Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

25. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

26. Zhao, L.; Wu, Y.; Yan, W.; Zhan, W.; Huang, X.; Booth, J.; Mehta, A.; Bekris, K.; Kramer-Bottiglio, R.; Balkcom, D. StarBlocks: Soft Actuated Self-Connecting Blocks for Building Deformable Lattice Structures. IEEE Robot. Autom. Lett. 2023, 8, 4521–4528.

27. Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language Modeling with Gated Convolutional Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017.

28. Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-Neck by GSConv: A Better Design Paradigm of Detector Architectures for Autonomous Vehicles. arXiv 2022, arXiv:2206.02424.

29. Si, Y.; Xu, H.; Zhu, X.; Zhang, W.; Dong, Y.; Chen, Y.; Li, H. SCSA: Exploring the Synergistic Effects Between Spatial and Channel Attention. Neurocomputing 2025, 634, 129866.

30. Ma, S.; Xu, Y. MPDIoU: A Loss for Efficient and Accurate Bounding Box Regression. arXiv 2023, arXiv:2307.07662.

31. Bin, F.; He, J.; Qiu, K.; Hu, L.; Zheng, Z.; Sun, Q. CI-YOLO: A Lightweight Foreign Object Detection Model for Inspecting Transmission Line. Measurement 2025, 242, 116193.

32. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11211, pp. 3–19. ISBN 978-3-030-01233-5.

33. Chen, G.; Gu, T.; Lu, J.; Bao, J.-A.; Zhou, J. Person Re-Identification via Attention Pyramid. IEEE Trans. Image Process. 2021, 30, 7663–7676.

34. Yu, Z. RT-DETR-iRMB: A Lightweight Real-Time Small Object Detection Method. In Proceedings of the 2024 IEEE 6th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 24–26 May 2024; pp. 1454–1458.

35. Huang, B. ASD-YOLO: An Aircraft Surface Defects Detection Method Using Deformable Convolution and Attention Mechanism. Measurement 2024, 238, 115300.

36. Jo, Y.; Oh, S.W.; Kang, J.; Kim, S.J. Deep Video Super-Resolution Network Using Dynamic Upsampling Filters Without Explicit Motion Compensation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3224–3232.

37. Jia, G.; Xu, L.; Zhu, L. WTConv-Adapter: Wavelet Transform Convolution Adapter for Parameter Efficient Transfer Learning of Object Detection ConvNets. In Proceedings of the Eighth International Conference on Video and Image Processing (ICVIP 2024), Kuala Lumpur, Malaysia, 13–15 December 2024; p. 3.

38. Han, D.; Wang, Z.; Xia, Z.; Han, Y.; Pu, Y.; Ge, C.; Song, J.; Song, S.; Zheng, B.; Huang, G. Demystify Mamba in Vision: A Linear Attention Perspective. arXiv 2024, arXiv:2405.16605.

39. Chen, J.; Mai, H.; Luo, L.; Chen, X.; Wu, K. Effective Feature Fusion Network in BIFPN for Small Object Detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 699–703.

40. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

41. Kang, M.; Ting, C.-M.; Ting, F.F.; Phan, R.C.-W. ASF-YOLO: A Novel YOLO Model with Attentional Scale Sequence Fusion for Cell Instance Segmentation. Image Vis. Comput. 2024, 147, 105057.

42. Wang, C.; Nie, W.H.Y.; Guo, J.; Liu, C.; Han, K.; Wang, Y. Gold-YOLO: Efficient Object Detector via Gather-and-Distribute Mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112.

43. Liu, R.-M.; Su, W.-H. APHS-YOLO: A Lightweight Model for Real-Time Detection and Classification of Stropharia Rugoso-Annulata. Foods 2024, 13, 1710.

44. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

| Specification | Parameter | Configuration |
|---|---|---|
| Hardware environment | CPU | 13th Gen Intel Core(TM) i9-13900K 3.00 GHz |
| | GPU | NVIDIA GeForce RTX 4090 |
| | GPU memory size | 64 GB RAM |
| Software environment | Operating system | Windows 10 |
| | PyTorch | 1.17 |
| | CUDA | 11.2 |
| | Python | 3.9 |

| Parameters | Setup | Parameters | Setup |
|---|---|---|---|
| epochs | 300 | optimizer | SGD |
| patience | 50 | weight-decay | 0.0005 |
| batch | 16 | momentum | 0.937 |
| imgsz | 640 | warmup momentum | 0.8 |
| workers | 8 | close mosaic | 10 |
| lrf | 0.01 | lr0 | 0.01 |
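
For readers who want to reproduce a comparable run, the settings above map onto keyword arguments of the Ultralytics `train` call roughly as sketched below. The table's final-learning-rate entry is taken to be Ultralytics' `lrf`, and the hyphenated or spaced names are mapped to their underscore forms. The model and dataset file names are placeholders, not values from this study, and the `ultralytics` package is imported lazily so that the settings can be inspected without it being installed.

```python
# Training settings transcribed from the hyperparameter table.
TRAIN_ARGS = {
    "epochs": 300,
    "patience": 50,
    "batch": 16,
    "imgsz": 640,
    "workers": 8,
    "optimizer": "SGD",
    "weight_decay": 0.0005,
    "momentum": 0.937,
    "warmup_momentum": 0.8,
    "close_mosaic": 10,
    "lr0": 0.01,   # initial learning rate
    "lrf": 0.01,   # final learning rate as a fraction of lr0
}


def train(model_cfg="yolov8n.yaml", data_cfg="gpr_defects.yaml"):
    """Launch training; both file names are hypothetical placeholders."""
    from ultralytics import YOLO  # requires the ultralytics package

    model = YOLO(model_cfg)
    return model.train(data=data_cfg, **TRAIN_ARGS)


if __name__ == "__main__":
    for name, value in TRAIN_ARGS.items():
        print(f"{name}: {value}")
```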

| Method | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5~0.95/% | Parameters/M | FLOPs/G |
|---|---|---|---|---|---|---|
| IEMA | 87.1 | 84 | 89.2 | 44.2 | 3.08 | 8.2 |
| CBAM | 87.7 | 85.3 | 90.9 | 44.3 | 3.25 | 8.3 |
| PSA | 80.7 | 81.2 | 87.8 | 43.9 | 3.1 | 8.1 |
| iRMB | 86.7 | 82.5 | 88.1 | 44 | 3.31 | 9 |
| SCSA | 85.1 | 88.5 | 92 | 44.1 | 3.01 | 8.1 |

| Method | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5~0.95/% | Parameters/M | FLOPs/G |
|---|---|---|---|---|---|---|
| SAConv [35] | 89 | 85.6 | 91.5 | 44.9 | 3.3 | 7.4 |
| DynamicConv [36] | 83.1 | 87.1 | 91.4 | 45.4 | 3.49 | 7.1 |
| WTConv [37] | 84.9 | 81.5 | 87.5 | 43.5 | 2.74 | 7.2 |
| MLLABlock [38] | 76.9 | 86.1 | 90.7 | 44.6 | 2.8 | 7.4 |
| Slim_Efficient_Block | 85.6 | 88 | 92 | 44.9 | 2.78 | 7.4 |

| Method | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5~0.95/% | Parameters/M | FLOPs/G |
|---|---|---|---|---|---|---|
| BiFPN [39] | 81.4 | 81.8 | 88.6 | 43.7 | 2.78 | 8.1 |
| CCFM [40] | 86.9 | 84.4 | 89.1 | 43.4 | 1.96 | 6.4 |
| ASFYOLO [41] | 87.3 | 85.5 | 90.1 | 44.9 | 3.04 | 8.5 |
| GoldYOLO [42] | 89.7 | 79.3 | 87.8 | 43.8 | 8.06 | 17.6 |
| HSFPN [43] | 86.4 | 86.6 | 90.2 | 44.9 | 5.15 | 30.9 |
| Efficient_MS_FPN | 87.1 | 85 | 90.5 | 44 | 2.44 | 8.2 |

| Method | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5~0.95/% | Parameters/M | FLOPs/G |
|---|---|---|---|---|---|---|
| Baseline | 84.9 | 84.7 | 86.6 | 44 | 3.01 | 8.1 |
| A | 85.6 | 88 | 92 | 44.9 | 2.78 | 7.4 |
| B | 87.1 | 85 | 90.5 | 44 | 2.44 | 8.2 |
| C | 85.1 | 88.5 | 92 | 44.1 | 3.01 | 8.1 |
| D | 90.9 | 87 | 91.7 | 44.2 | 3 | 8.1 |
| A + B | 86.5 | 88.8 | 91.5 | 45 | 2.32 | 8 |
| A + C | 91.3 | 81.6 | 90.8 | 44.2 | 2.78 | 7.4 |
| B + C | 92.2 | 83.4 | 90.5 | 43.9 | 2.45 | 6.6 |
| A + B + C | 91.1 | 84.4 | 92.1 | 44.1 | 2.32 | 6.4 |
| A + B + C + D | 92.4 | 86.7 | 92.8 | 44.2 | 2.32 | 6.4 |
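
To make the ablation easier to read, the short sketch below compares the Baseline row with the full A + B + C + D row: accuracy metrics as gains in percentage points, and cost metrics as relative reductions. The numbers are copied from the table; the dictionary keys and helper names are illustrative.

```python
# Rows copied from the ablation table (percentages, millions of parameters, GFLOPs).
BASELINE = {"precision": 84.9, "recall": 84.7, "map50": 86.6,
            "map50_95": 44.0, "params_m": 3.01, "flops_g": 8.1}
FULL_MODEL = {"precision": 92.4, "recall": 86.7, "map50": 92.8,
              "map50_95": 44.2, "params_m": 2.32, "flops_g": 6.4}


def point_gain(metric):
    """Gain of the full model over the baseline, in percentage points."""
    return round(FULL_MODEL[metric] - BASELINE[metric], 1)


def relative_reduction(metric):
    """Reduction of a cost metric relative to the baseline, in percent."""
    return round(100 * (BASELINE[metric] - FULL_MODEL[metric]) / BASELINE[metric], 1)


if __name__ == "__main__":
    for metric in ("precision", "recall", "map50", "map50_95"):
        print(f"{metric}: +{point_gain(metric)} points")
    for metric in ("params_m", "flops_g"):
        print(f"{metric}: -{relative_reduction(metric)} %")
```

On these figures the full model gains 6.2 points of mAP@0.5 and 7.5 points of precision over the baseline while using about 22.9% fewer parameters and 21.0% fewer FLOPs.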

| Method | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5~0.95/% | Parameters/M | FLOPs/G |
|---|---|---|---|---|---|---|
| Faster R-CNN (A) | 58.1 | 89.3 | 82.1 | 33.8 | 28.48 | 302.61 |
| SSD (B) | 68.6 | 84.4 | 79.9 | 32.5 | 62.5 | 27.5 |
| YOLOv5n (C) | 77.7 | 82.2 | 85.8 | 41 | 2.5 | 7.1 |
| YOLOv6n (D) | 84.1 | 83.3 | 87.1 | 41.1 | 4.23 | 11.8 |
| YOLOv7-tiny (E) | 80.4 | 78.8 | 81.5 | 33.3 | 6 | 13 |
| YOLOv8n (F) | 84.9 | 84.7 | 86.6 | 44 | 3.01 | 8.1 |
| YOLOv8n-WTConv (G) | 85.1 | 84.5 | 87.8 | 43.5 | 2.61 | 7.2 |
| YOLOv8n-CCFM (H) | 87.2 | 85.2 | 88.8 | 43.4 | 2.42 | 6.3 |
| YOLOv9s (I) | 91.2 | 78.5 | 87.4 | 43.2 | 7.1 | 26.4 |
| YOLOv10n (J) | 86.8 | 79.7 | 85.8 | 41.2 | 2.7 | 8.2 |
| YOLOv11n (K) | 85.2 | 84.3 | 87.1 | 43.9 | 2.61 | 6.5 |
| SESM-YOLO (L) | 92.4 | 86.7 | 92.8 | 44.2 | 2.32 | 6.4 |
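
As a quick way to see where each model leads in the comparison above, the sketch below picks the best entry per column, treating parameters and FLOPs as lower-is-better. The values are copied from the table. The table lists FLOPs rather than a measured inference speed, so FLOPs serve only as a cost proxy here. The names `RESULTS`, `COLUMNS`, and `best_per_metric` are illustrative.

```python
COLUMNS = ("precision", "recall", "map50", "map50_95", "params_m", "flops_g")
LOWER_IS_BETTER = {"params_m", "flops_g"}

# One tuple per model, in the column order given by COLUMNS.
RESULTS = {
    "Faster R-CNN": (58.1, 89.3, 82.1, 33.8, 28.48, 302.61),
    "SSD": (68.6, 84.4, 79.9, 32.5, 62.5, 27.5),
    "YOLOv5n": (77.7, 82.2, 85.8, 41.0, 2.5, 7.1),
    "YOLOv6n": (84.1, 83.3, 87.1, 41.1, 4.23, 11.8),
    "YOLOv7-tiny": (80.4, 78.8, 81.5, 33.3, 6.0, 13.0),
    "YOLOv8n": (84.9, 84.7, 86.6, 44.0, 3.01, 8.1),
    "YOLOv8n-WTConv": (85.1, 84.5, 87.8, 43.5, 2.61, 7.2),
    "YOLOv8n-CCFM": (87.2, 85.2, 88.8, 43.4, 2.42, 6.3),
    "YOLOv9s": (91.2, 78.5, 87.4, 43.2, 7.1, 26.4),
    "YOLOv10n": (86.8, 79.7, 85.8, 41.2, 2.7, 8.2),
    "YOLOv11n": (85.2, 84.3, 87.1, 43.9, 2.61, 6.5),
    "SESM-YOLO": (92.4, 86.7, 92.8, 44.2, 2.32, 6.4),
}


def best_per_metric(results):
    """Return the name of the best model for each column."""
    best = {}
    for idx, column in enumerate(COLUMNS):
        pick = min if column in LOWER_IS_BETTER else max
        best[column] = pick(results, key=lambda name: results[name][idx])
    return best


if __name__ == "__main__":
    for column, model in best_per_metric(RESULTS).items():
        print(f"{column}: {model}")
```

On these numbers SESM-YOLO leads in precision, both mAP measures, and parameter count, while Faster R-CNN has the highest recall and YOLOv8n-CCFM the lowest FLOPs by a margin of 0.1 G.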

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yan, Y.; Jiao, G.; Cui, M.; Ni, L. The Lightweight Method of Ground Penetrating Radar (GPR) Hidden Defect Detection Based on SESM-YOLO. Buildings 2025, 15, 2345. https://doi.org/10.3390/buildings15132345
