Typological Transcoding Through LoRA and Diffusion Models: A Methodological Framework for Stylistic Emulation of Eclectic Facades in Krakow

Abstract

1. Introduction

2. Literature Review

2.1. Stylistic Emulation in Architectural Heritage

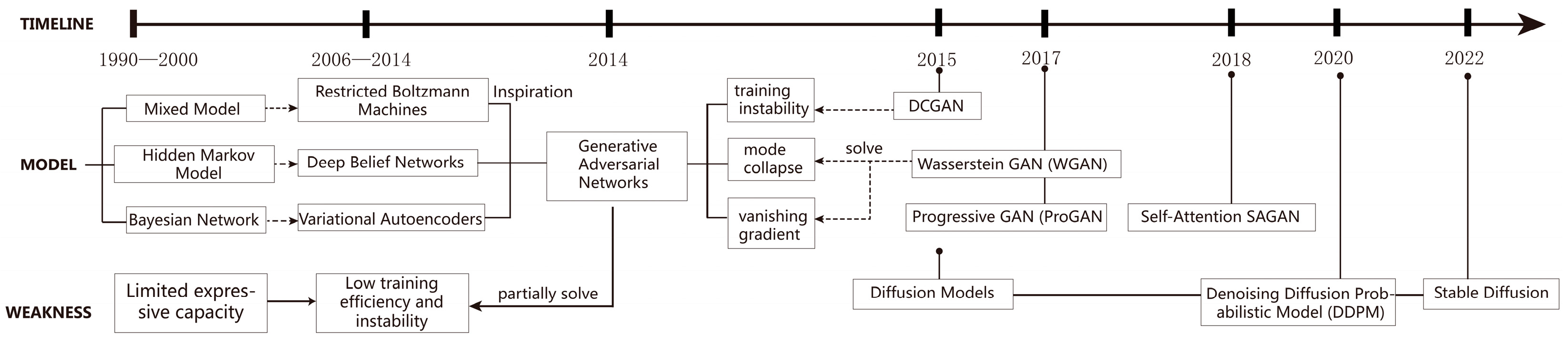

2.2. Development of Generative AI in Architecture

2.3. AI Applications in Architectural Generation

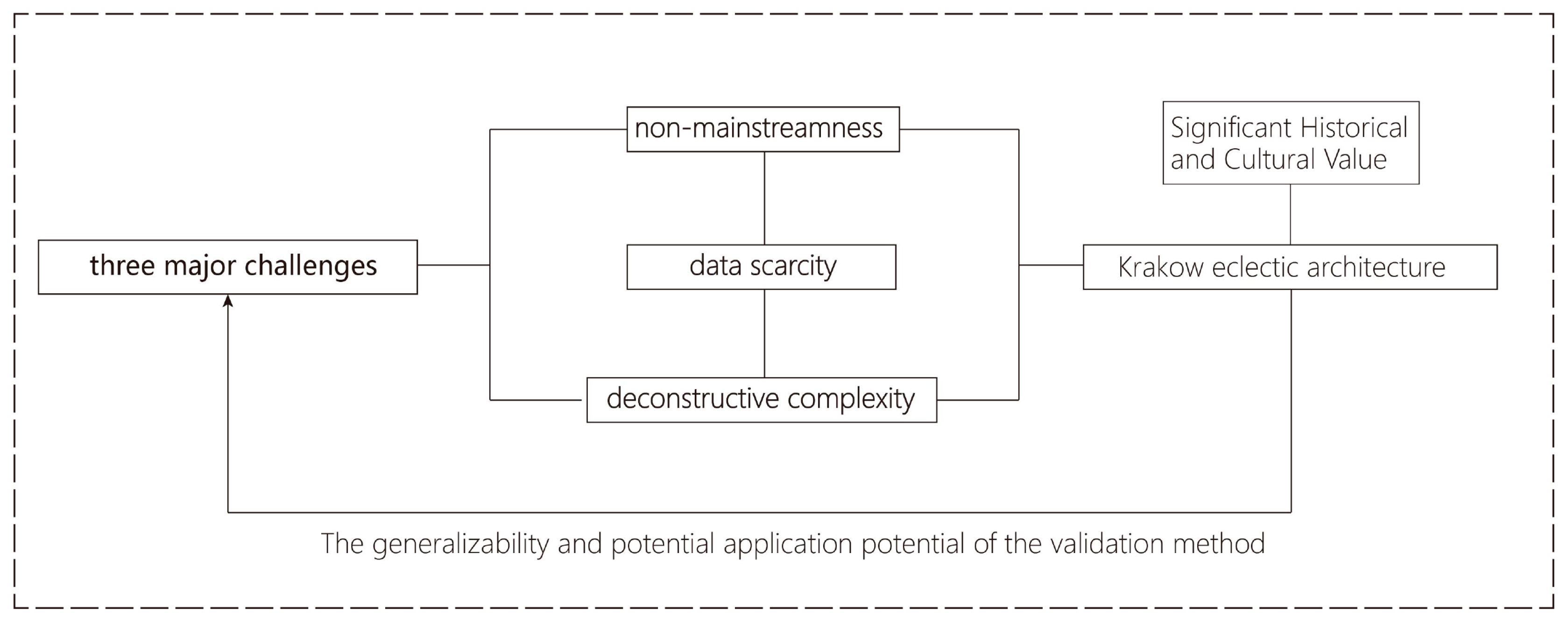

2.4. Research Gap and Proposed Approach

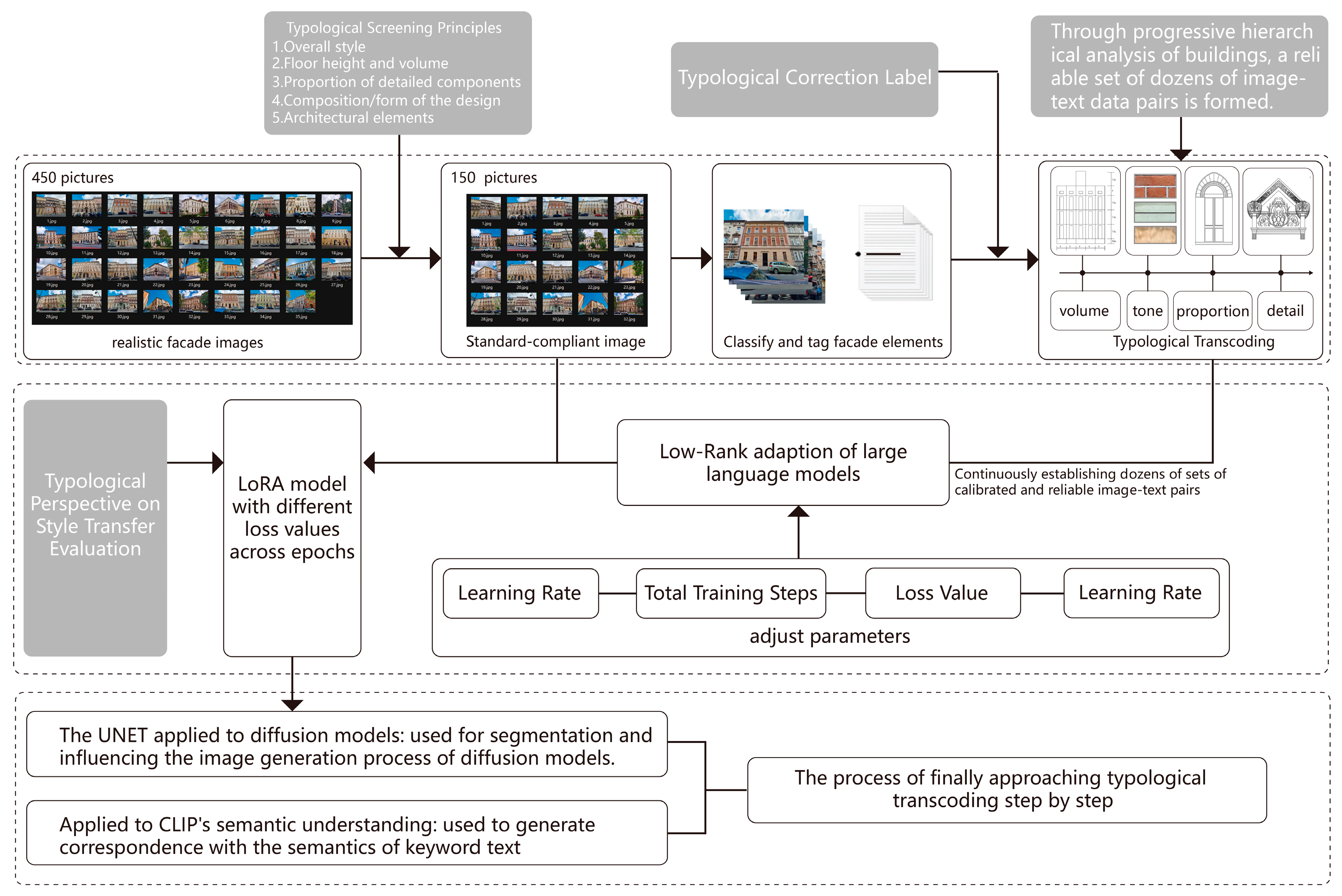

3. Materials and Methods

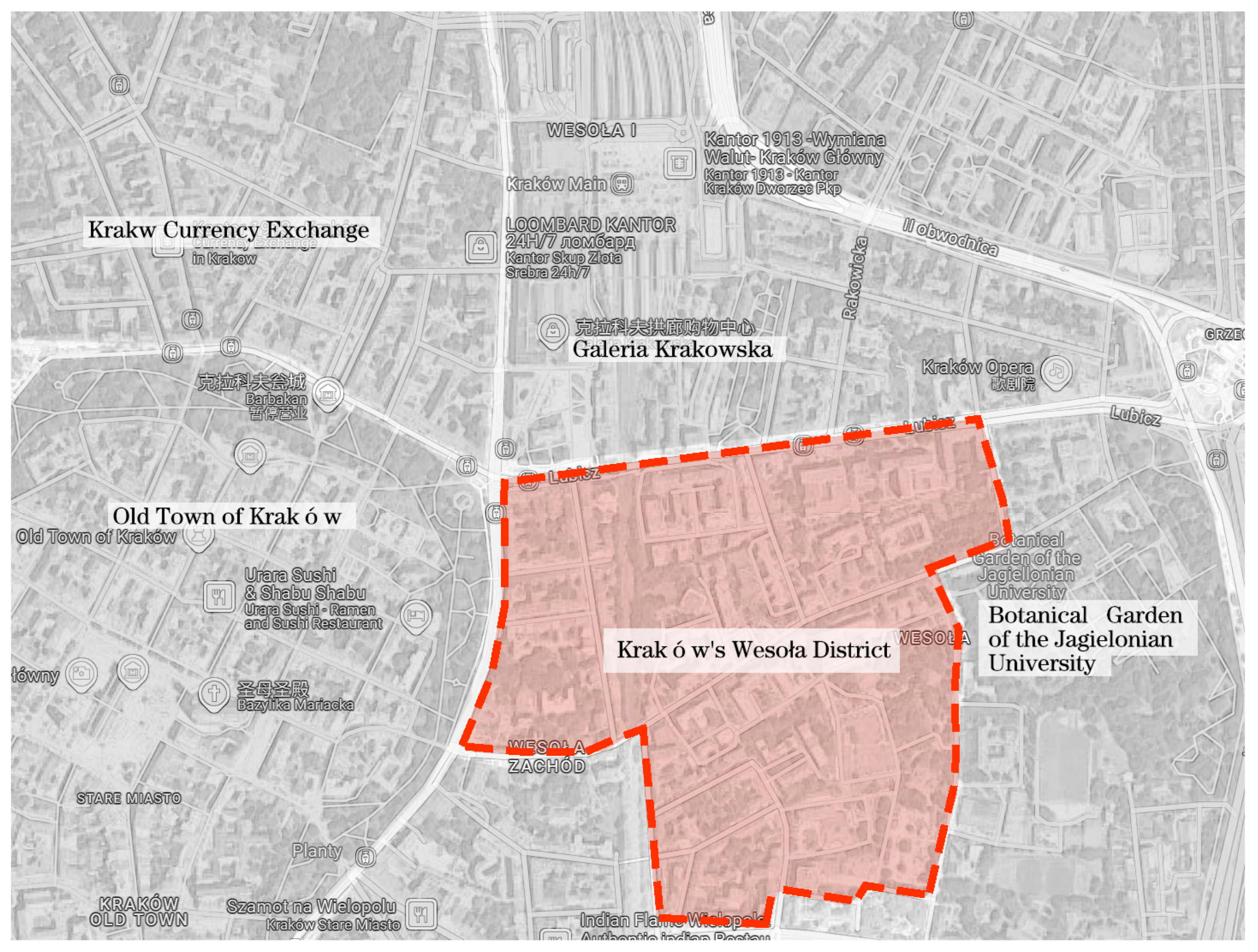

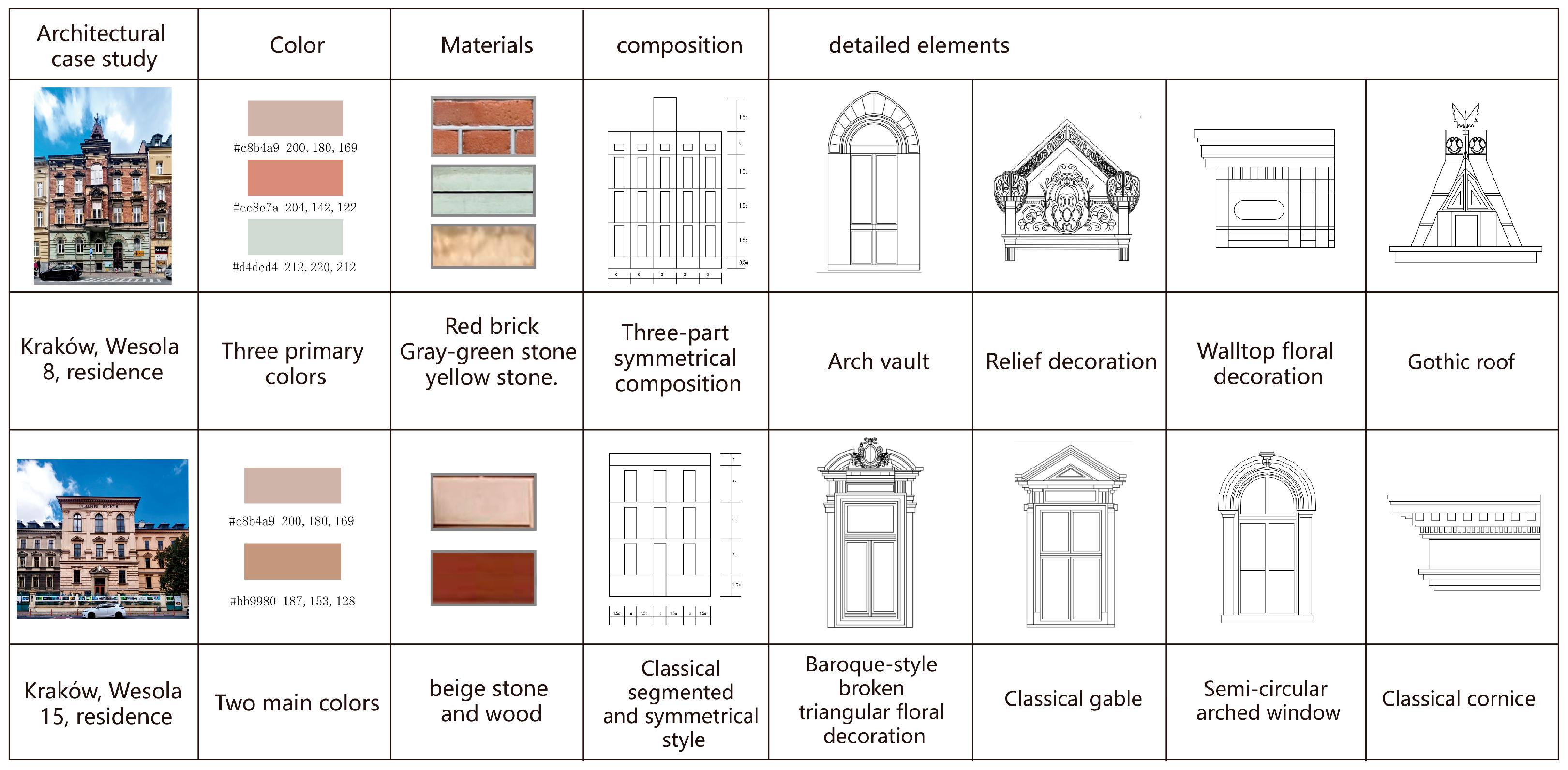

3.1. Case-Study Selection: Krakow’s Eclectic Facades

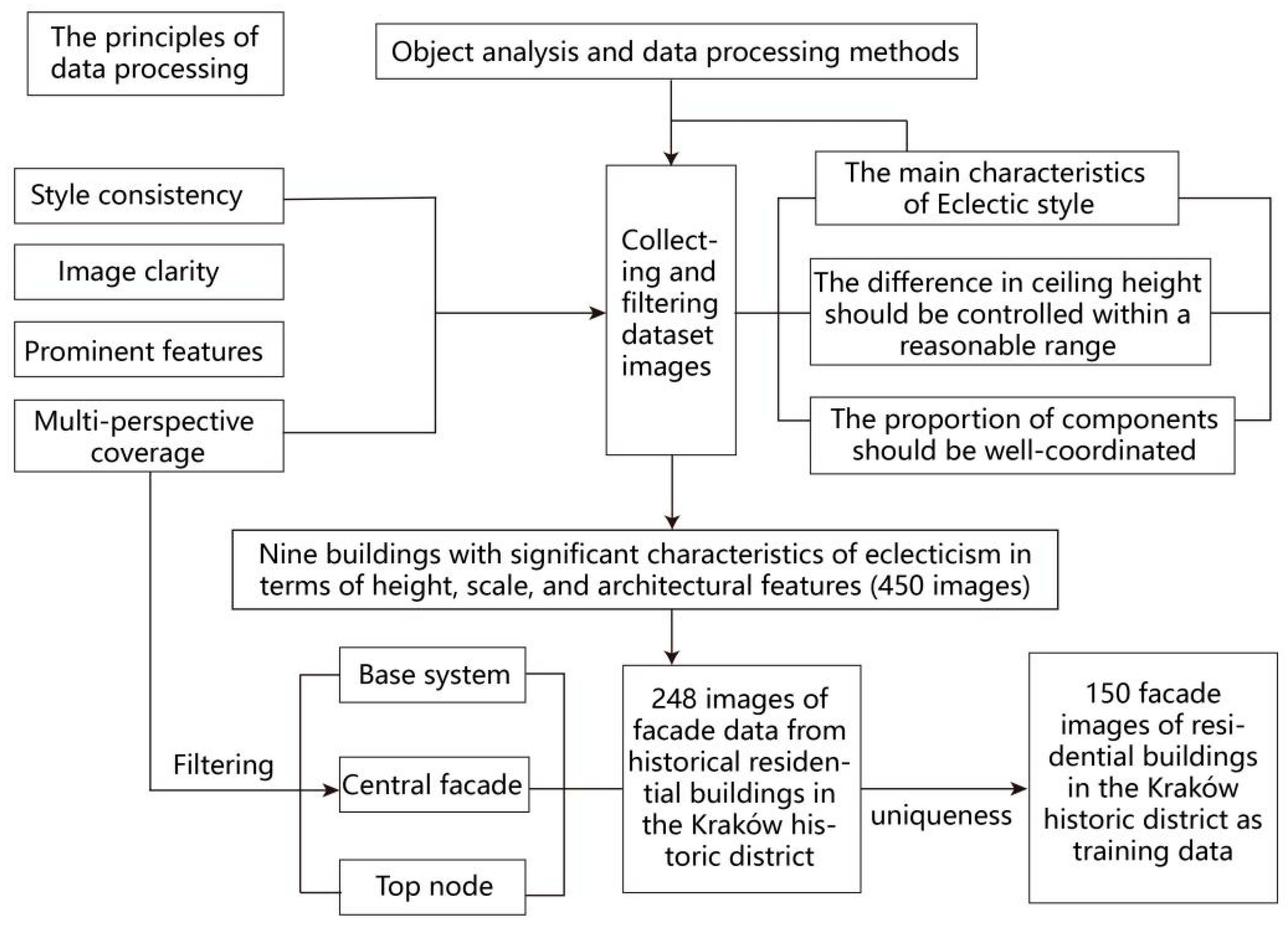

3.2. Image Data Acquisition and Preprocessing

3.2.1. Initial Collection and Screening Criteria for Image Samples

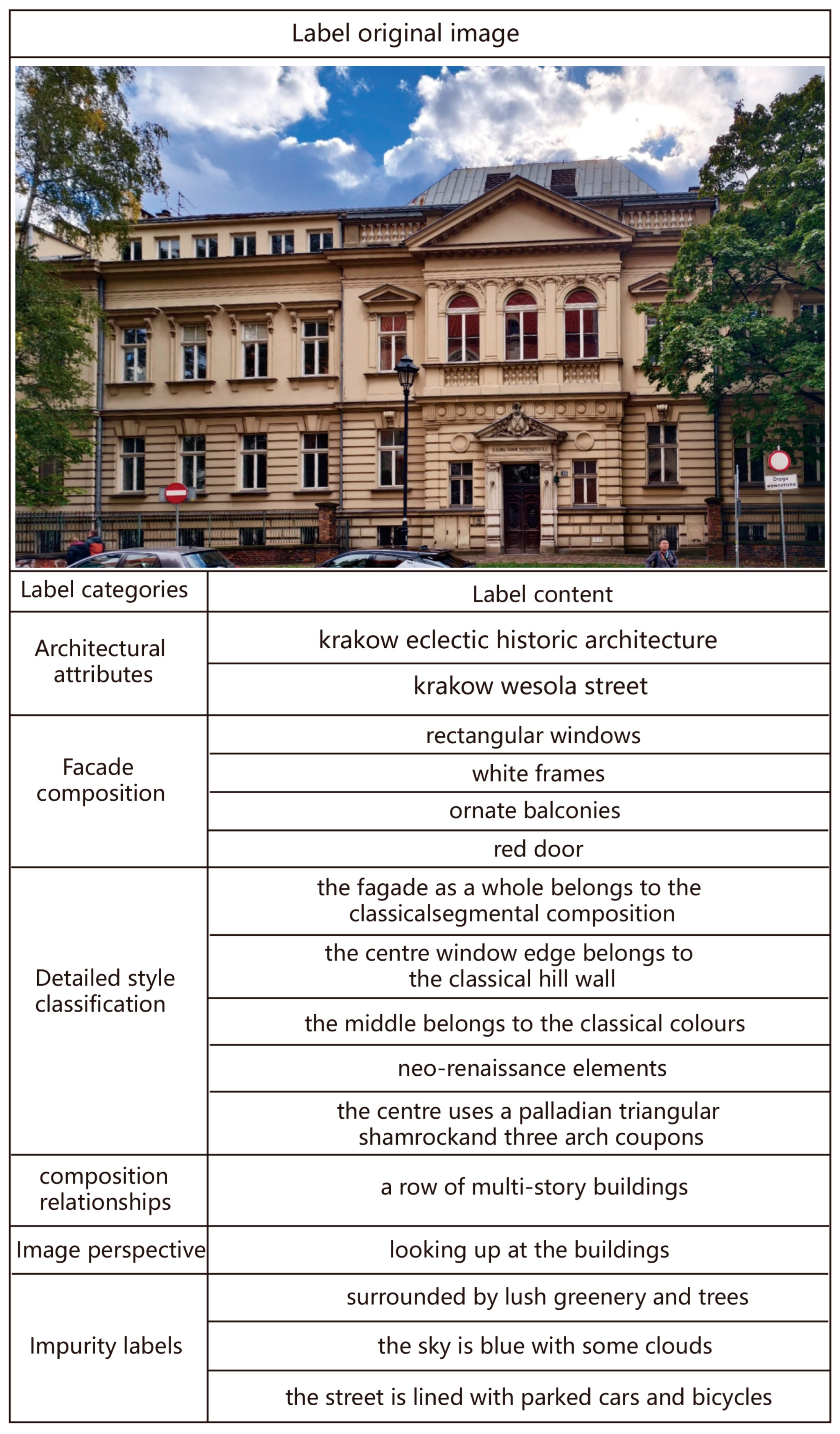

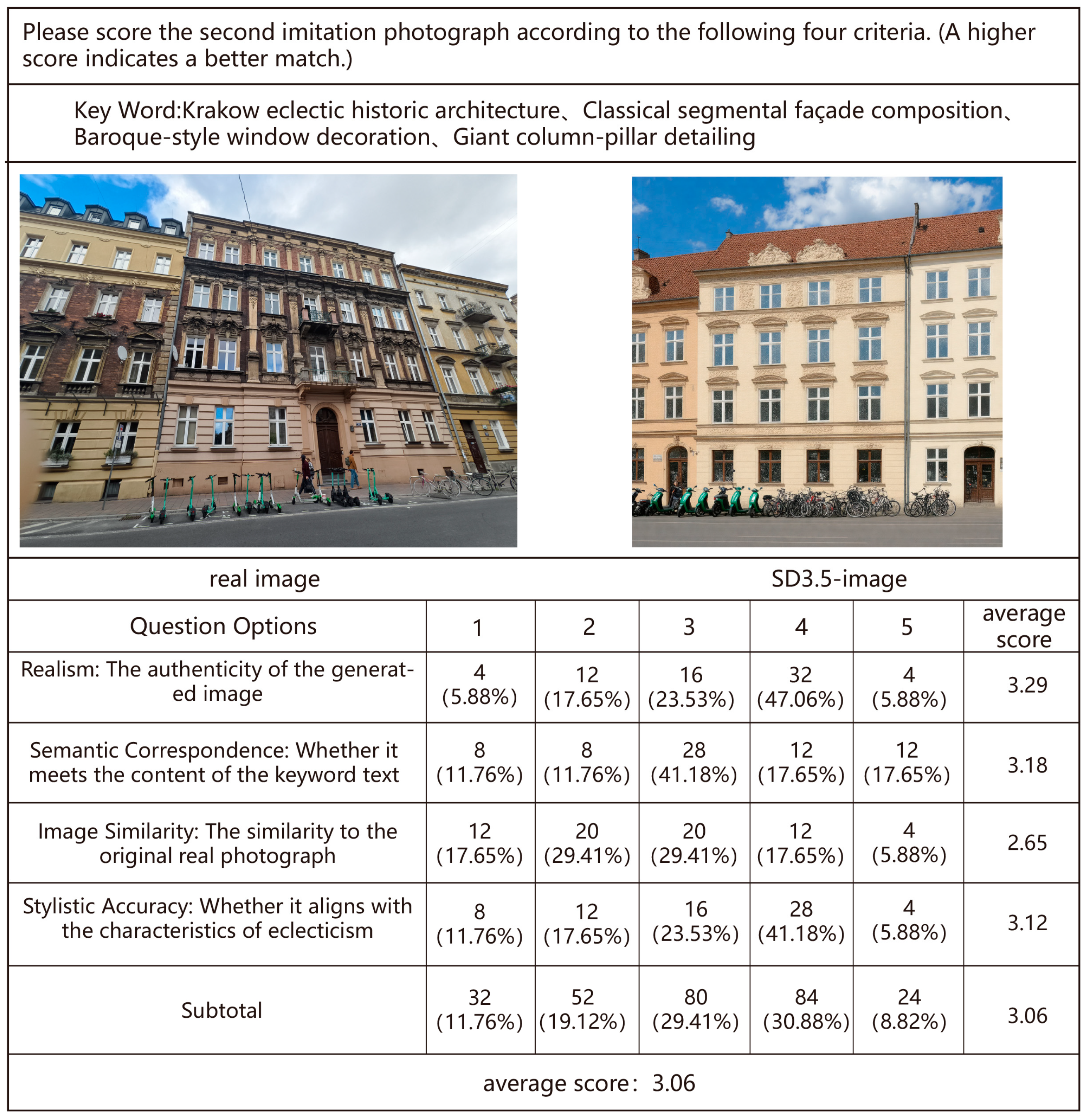

3.2.2. Typology-Based Label Generation and Keyword Optimization

- Architectural Attributes—Label Categories: For example, historical residences, public buildings;

- Stylistic Attributes: For example, Krakow eclectic architecture (predominantly neo-renaissance style), ornate balconies, Baroque-style window decoration;

- Material Attributes: For example, red brick, beige stone, white window frames;

- Facade Composition: For example, symmetrical composition, classical segmental composition, a row of multi-story buildings;

- Detailed Style Classification: For example, orders (column types), cornices, pediments, moldings, spandrels (pier/window infill), corbels;

- Image Perspective: For example, front elevation, low-angle view (looking up at the buildings);

- Impurity Labels: For example, the sky is blue with some clouds, the street is lined with parked cars and bicycles.
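As a minimal sketch of how the label groups above could be assembled into one training caption per image, the snippet below joins tags in a fixed category order and drops duplicates. The category keys, the `build_caption` helper, and the `krakow_eclectic` trigger word are all hypothetical names introduced for illustration; the source does not specify the captioning tool or the exact caption format.

```python
# Hypothetical category order, loosely following the label groups listed above.
CATEGORY_ORDER = [
    "architectural_attributes",
    "style",
    "materials",
    "composition",
    "details",
    "perspective",
    "impurities",
]


def build_caption(labels, trigger_word="krakow_eclectic"):
    """Join per-category tags into a single comma-separated caption.

    `labels` maps a category name to a list of tags. The trigger word is an
    assumed convention, not something stated in the source.
    """
    parts = [trigger_word]
    seen = {trigger_word}
    for category in CATEGORY_ORDER:
        for tag in labels.get(category, []):
            tag = tag.strip().lower()
            # Skip empty tags and tags that already appeared in the caption.
            if tag and tag not in seen:
                seen.add(tag)
                parts.append(tag)
    return ", ".join(parts)


example_labels = {
    "architectural_attributes": ["historical residences"],
    "materials": ["red brick", "white window frames"],
    "perspective": ["front elevation"],
    "impurities": ["parked cars", "Red Brick"],  # duplicate tag is dropped
}
caption = build_caption(example_labels)
print(caption)
```

Keeping impurity labels as explicit tags, rather than deleting them, is one common way to let the model attribute sky, cars, and similar content to those tokens instead of to the style itself; whether the authors relied on this effect is an assumption here.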

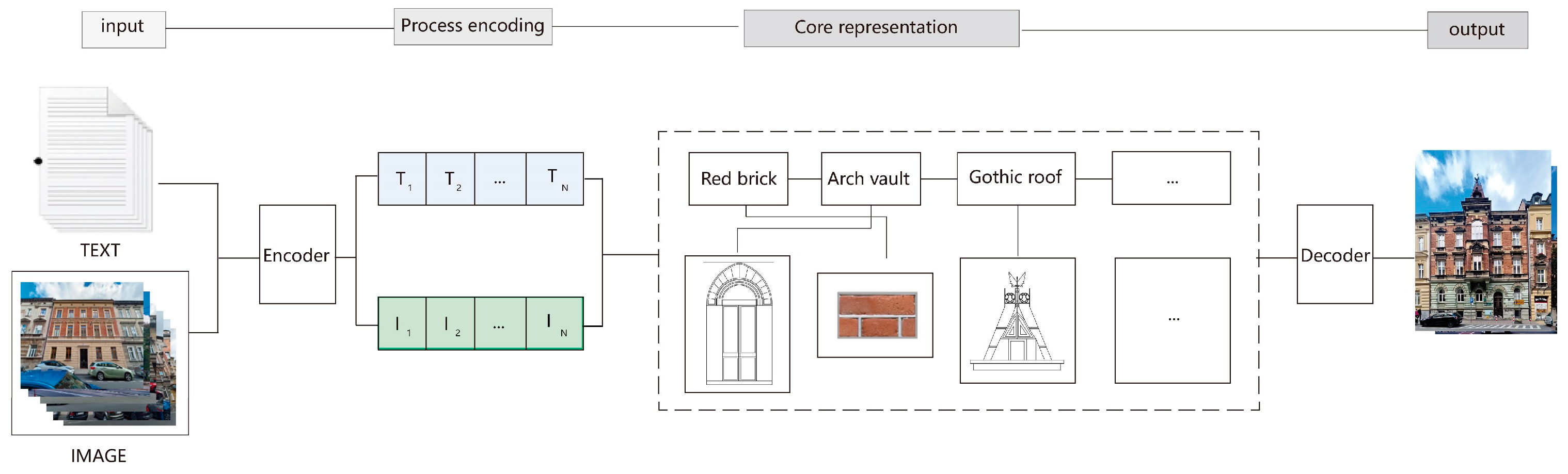

3.3. Typological Transcoding Framework

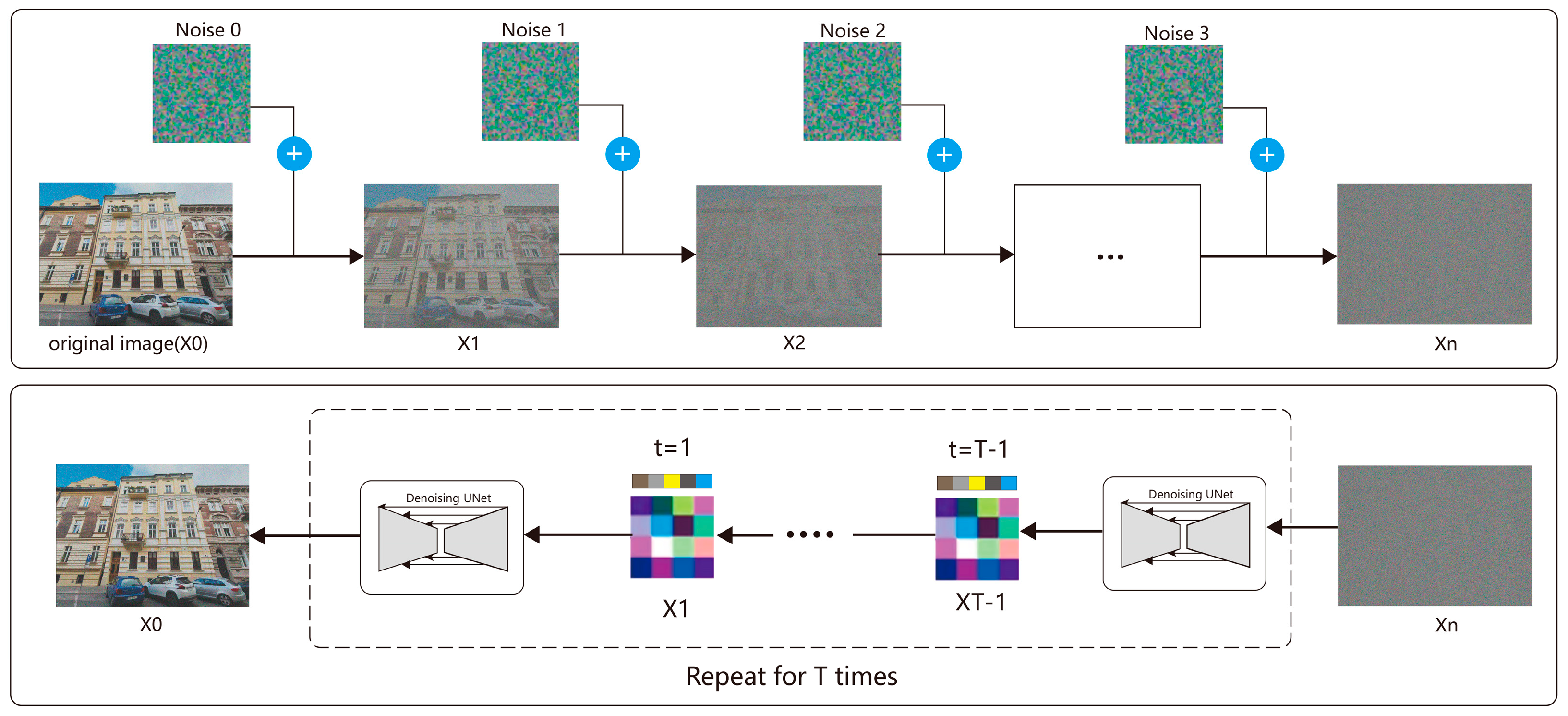

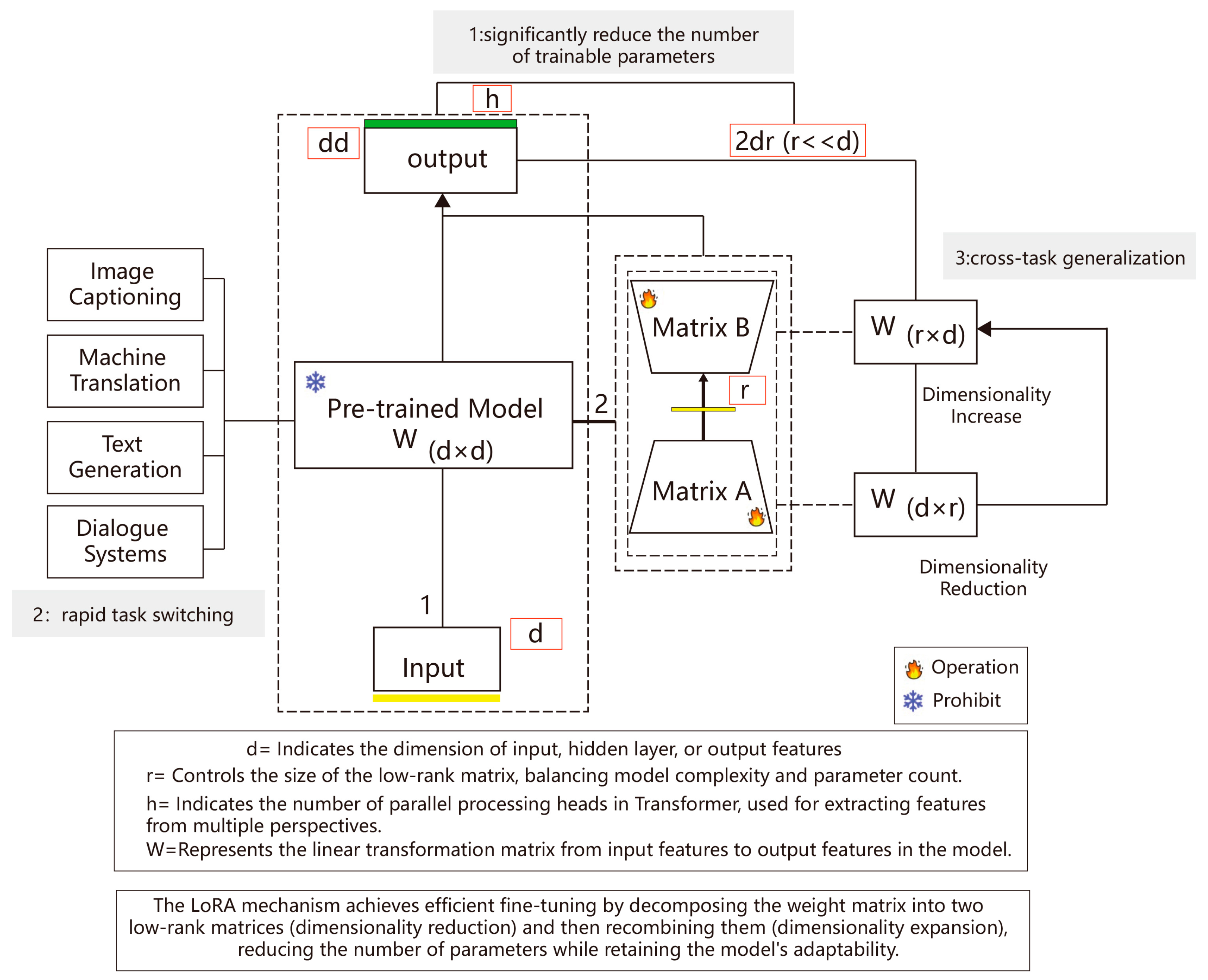

3.3.1. Brief Introduction to Diffusion Models and LoRA Technology

- Diffusion Models

- Low-Rank Adaptation

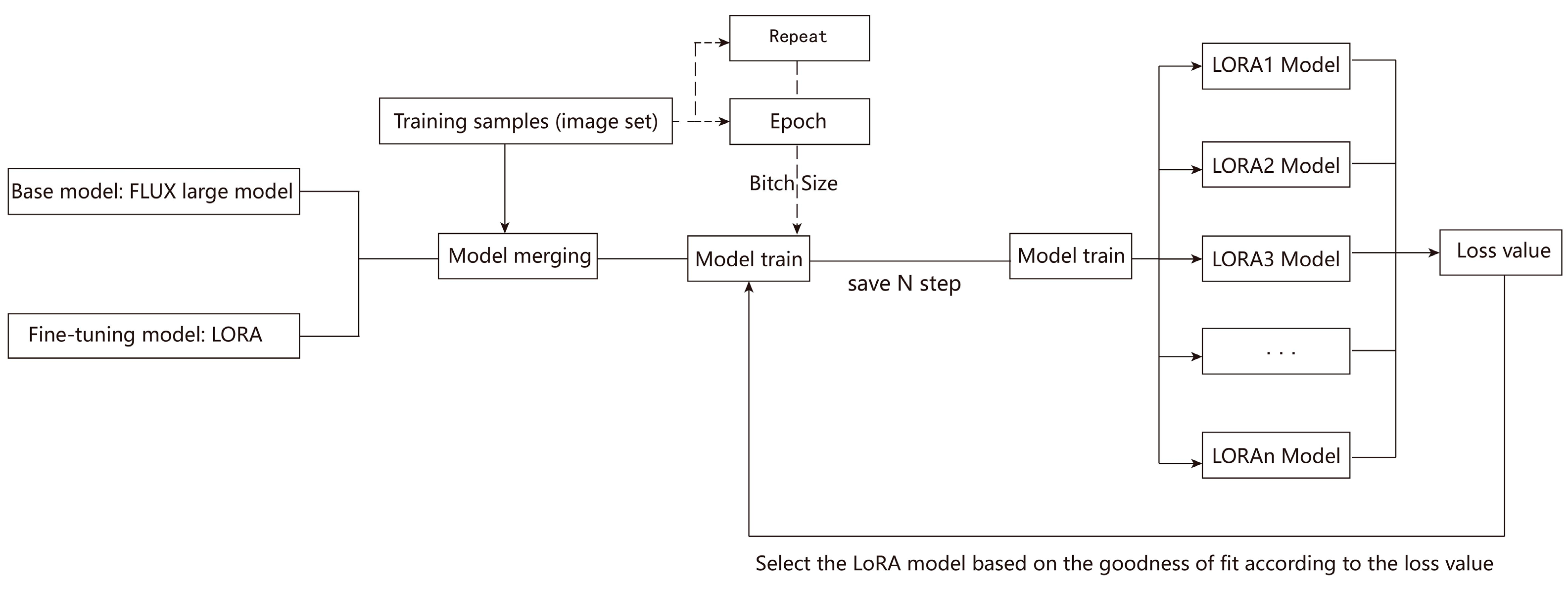

3.3.2. LoRA Model Training Workflow and Key Parameter Regulation

- Learning Rate: This hyperparameter sets the step size of model weight updates during training. An excessively high learning rate can destabilize training or cause divergence, whereas an overly low rate significantly prolongs training and risks trapping the model in local optima. Consistent with common LoRA fine-tuning practice and with a priority on model stability, this study explored learning rates within a relatively narrow range (e.g., 1 × 10⁻⁴ to 1 × 10⁻⁵). This strategy aimed to capture the nuanced characteristics of the target style while ensuring stable convergence [42].

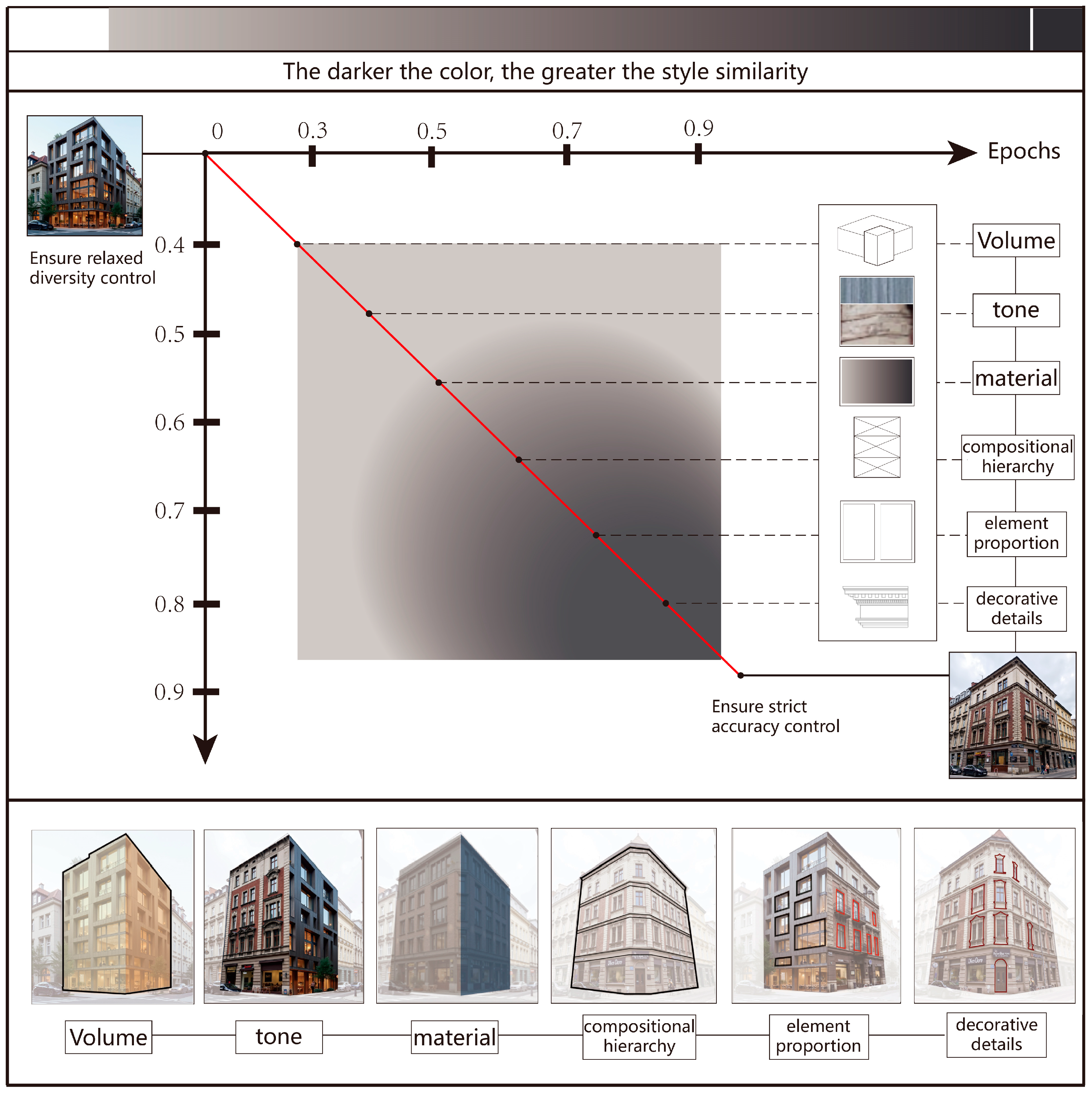

- Total Training Steps: This parameter is determined by the image count, the number of training epochs, the repetitions per image, and the batch size, and it governs how thoroughly the model learns from the training data. When training on a complex style such as Krakow’s Eclecticism, balancing underfitting against overfitting is paramount [43,44,45]. Underfitting occurs when the model fails to learn the stylistic elements adequately; overfitting occurs when it memorizes details of individual training samples, which impairs generalization. Too few training steps can yield facades that lack typical stylistic details. Too many may lead the model to merely reproduce specific buildings from the training set, limiting its flexible application in novel design contexts. A critical aspect of parameter tuning in this study was therefore to plan the total training steps carefully and then to select the optimal model checkpoint on the basis of rigorous evaluation.

- Loss Value Monitoring: The loss value quantifies the discrepancy between the model’s predictions and the ground-truth data, and so reflects how well training is progressing. A diminishing loss typically indicates that the model’s predictions are aligning more closely with the training targets. Monitoring the loss is therefore linked to both the model’s learning efficiency and the quality of the generated images. For architectural style generation, and particularly for rendering the detailed nuances of Krakow’s Eclecticism, tracking and reducing the loss helps ensure that the model captures the fine-grained characteristics of the style, which supports more realistic and precise design imagery.
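The relationship between the quantities named above can be sketched as follows. This assumes the convention, common in LoRA training scripts, that each image is repeated a fixed number of times per epoch and that a partial final batch still counts as one step. The image count (100) and repeat count (10) are hypothetical placeholders; only the epoch count (20) and batch size (4) come from the training-parameter table in this article. The moving average is likewise just one simple way to read a noisy loss curve, not necessarily the authors' procedure.

```python
import math


def total_training_steps(num_images, repeats, epochs, batch_size):
    """Optimizer steps for a run, assuming each image is seen `repeats`
    times per epoch and a partial final batch counts as a full step."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


def moving_average(values, window):
    """Trailing moving average; early entries average over fewer points."""
    smoothed = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            # Drop the value that just left the trailing window.
            running -= values[i - window]
        smoothed.append(running / min(i + 1, window))
    return smoothed


# Hypothetical dataset size and repeats; epochs and batch size as reported.
steps = total_training_steps(num_images=100, repeats=10, epochs=20, batch_size=4)
print(steps)

# Toy per-step loss values, smoothed over a window of two steps.
print(moving_average([4.0, 2.0, 6.0], window=2))
```

Because per-step diffusion losses fluctuate strongly with the sampled timestep, a smoothed curve is usually read alongside sample images from saved checkpoints rather than on its own.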

3.3.3. The Guiding Role of Typological Theory in Training and Inference Processes

3.4. Ethical Considerations

4. Analysis and Results

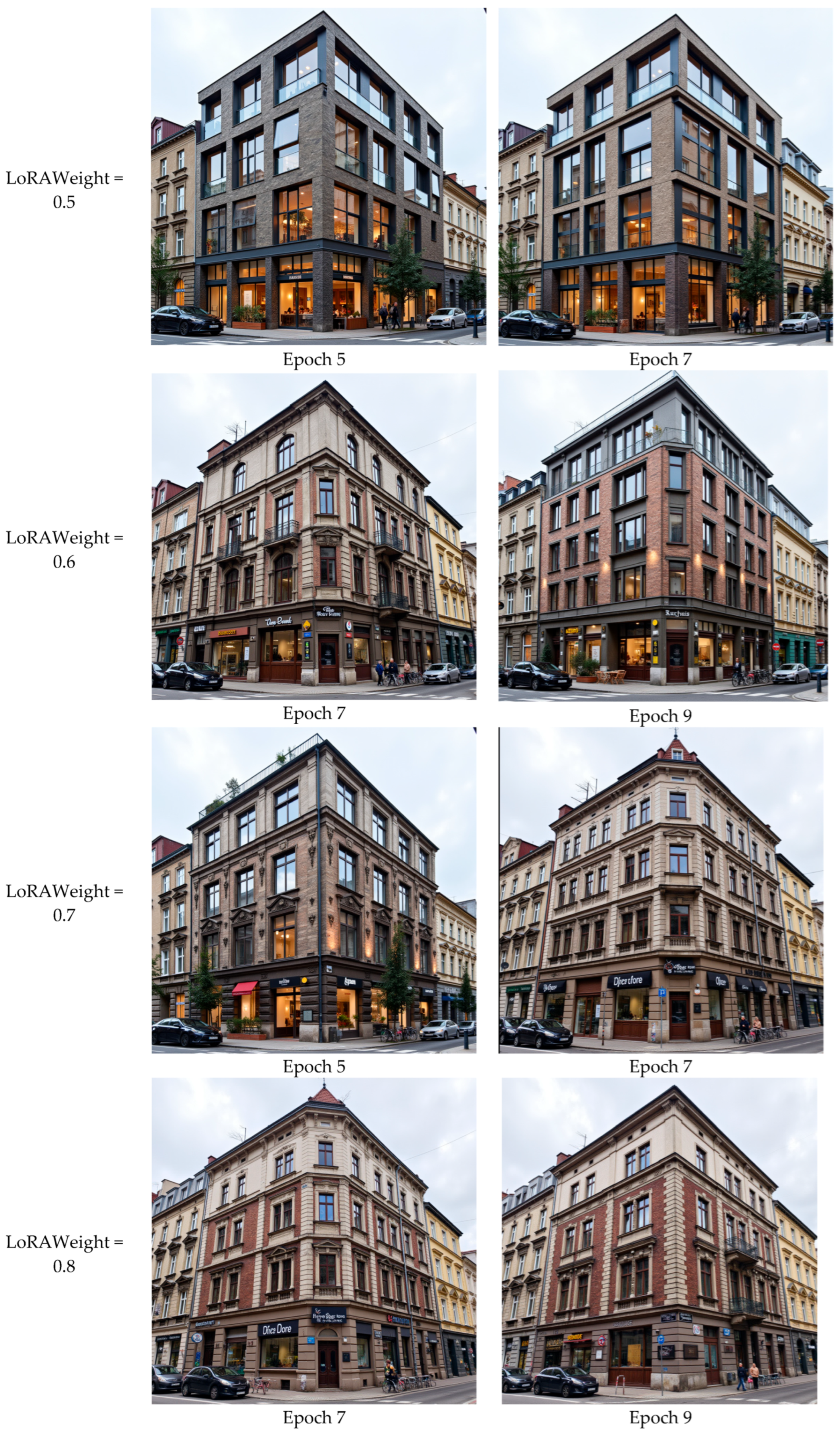

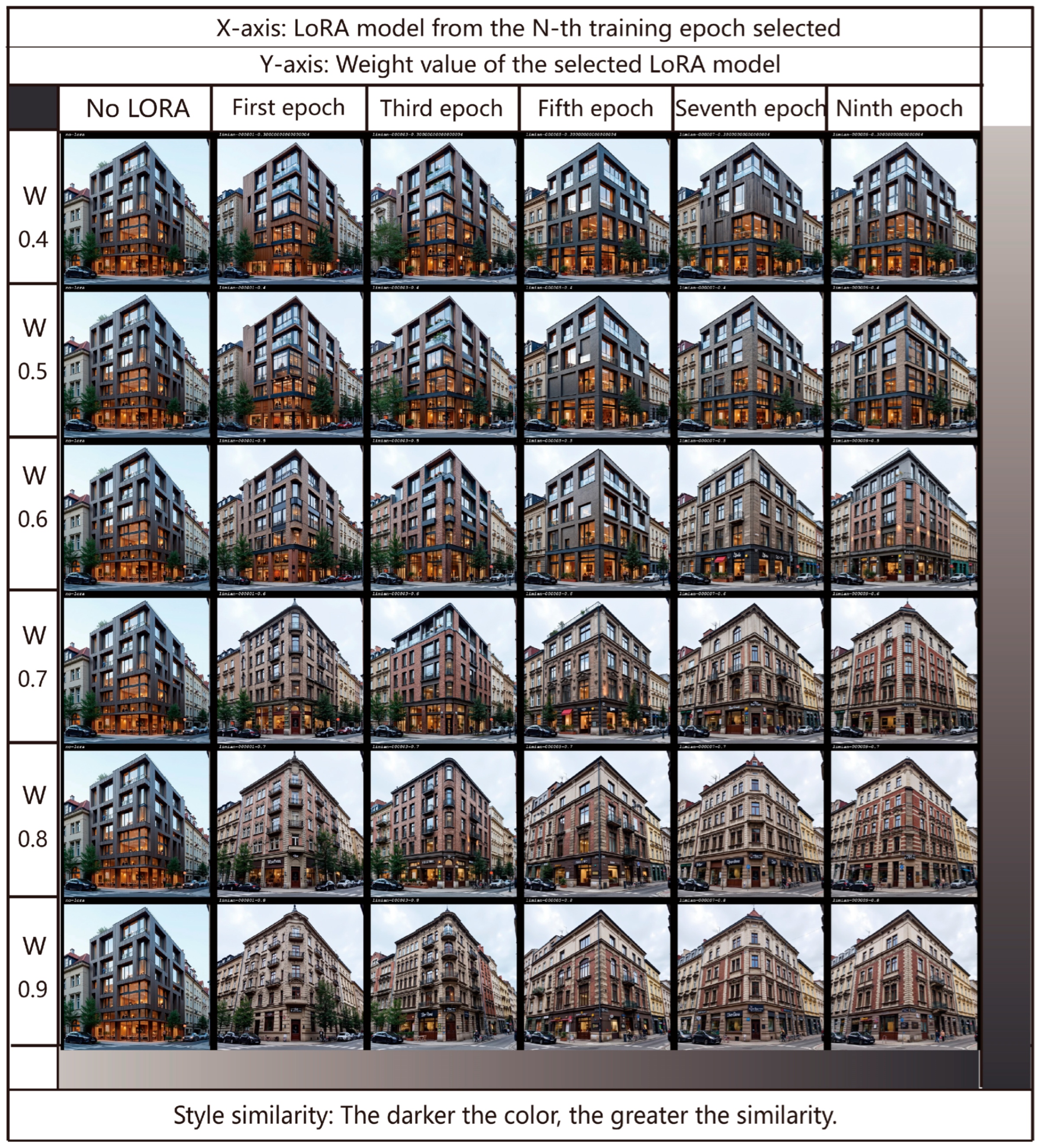

4.1. Influence of LoRA Parameters on Stylistic Generation

LoRA Loss and Weight Tuning for Style Transfer

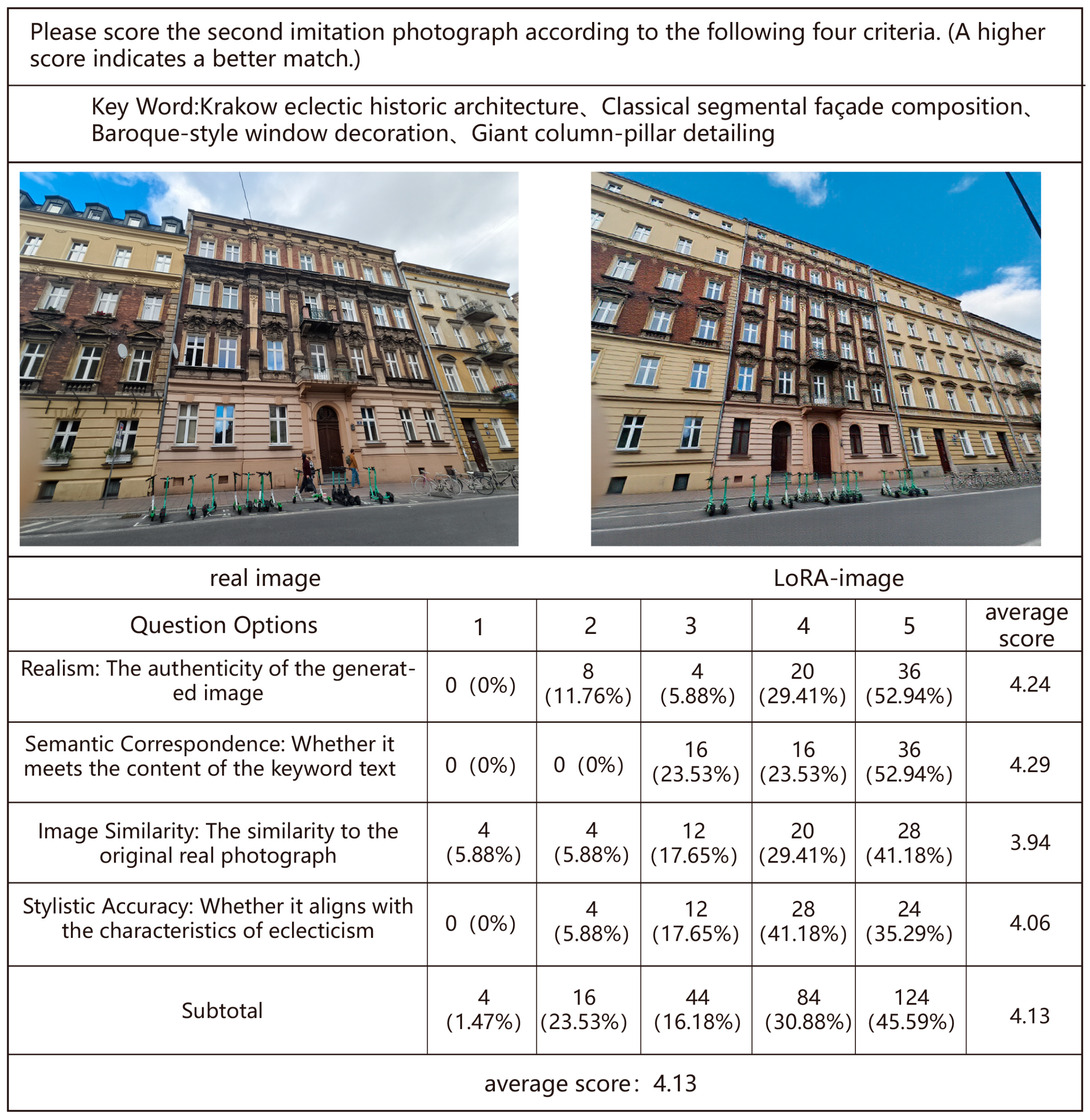

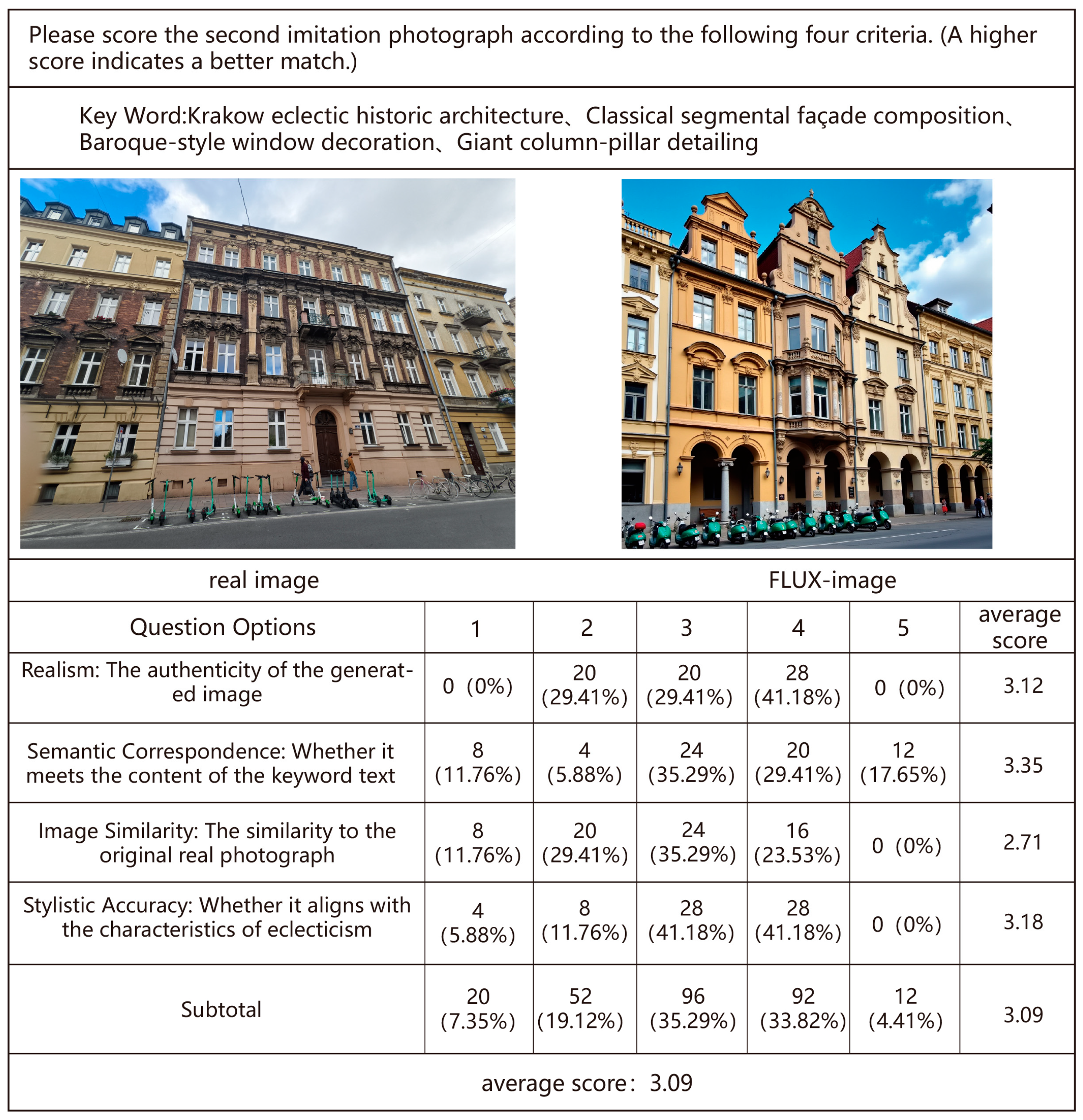

4.2. Comparative Evaluation of Model Performance

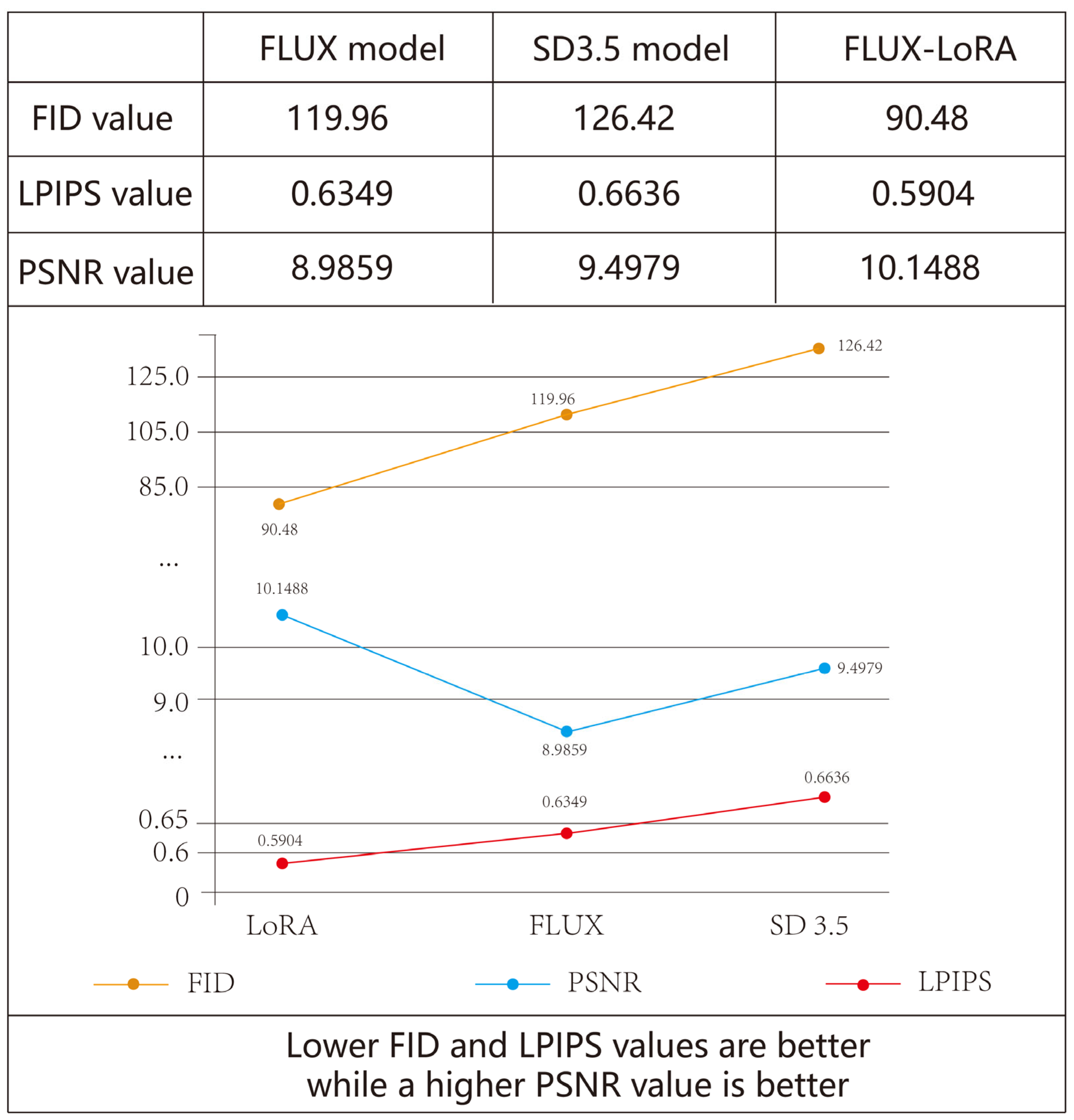

4.2.1. Quantitative Metrics Analysis

- FID Improvement (Lower is Better): The FLUX-LORA model achieved an FID value of 90.48. This represents an approximate improvement of 28.4% over the SD3.5 model’s score of 126.42, and a 24.6% improvement over the base FLUX’s score of 119.96. These results indicate that the image set generated by FLUX-LORA exhibits an overall feature distribution more closely aligned with that of authentic Krakow Eclectic building facades.

- LPIPS Improvement (Lower is Better): The FLUX-LORA model attained an LPIPS value of 0.5904. This signifies an approximate improvement of 11.0% over the SD3.5 model’s score of 0.6636, and a 7.0% improvement compared to the base FLUX’s score of 0.6349. This suggests a higher fidelity in reproducing both fine details and overall stylistic characteristics.

- PSNR Improvement (Higher is Better): The FLUX-LORA model registered a PSNR value of 10.1488 dB, an increase of approximately 6.9% over the SD3.5 model’s score of 9.4979 dB, indicating higher pixel-level image fidelity.
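The percentages above follow from a simple relative-change calculation, sketched below using the scores quoted in the text. The helper name `relative_improvement` is introduced here for illustration only; the direction flag reflects that FID and LPIPS improve downward while PSNR improves upward.

```python
def relative_improvement(baseline, candidate, lower_is_better=True):
    """Percentage improvement of `candidate` over `baseline`."""
    if lower_is_better:
        delta = baseline - candidate
    else:
        delta = candidate - baseline
    return 100.0 * delta / baseline


# (metric, baseline model, baseline score, FLUX-LORA score, lower_is_better)
comparisons = [
    ("FID", "SD3.5", 126.42, 90.48, True),
    ("FID", "FLUX", 119.96, 90.48, True),
    ("LPIPS", "SD3.5", 0.6636, 0.5904, True),
    ("LPIPS", "FLUX", 0.6349, 0.5904, True),
    ("PSNR", "SD3.5", 9.4979, 10.1488, False),
]

for metric, model, base, lora, lower in comparisons:
    gain = relative_improvement(base, lora, lower_is_better=lower)
    print(f"{metric} vs {model}: {gain:.2f}%")
```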

4.2.2. Qualitative Evaluation by Expert Panel

4.3. Comparison with Previous Studies

5. Discussion

5.1. Interpreting the Stylistic Learning Process: A Typological Perspective

5.2. Methodological Contributions and Practical Implications

5.3. Limitations and Future Research Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AIGC | AI-Generated Content |
| CNN | Convolutional Neural Network |
| CLIP | Contrastive Language–Image Pretraining |
| DDPM | Denoising Diffusion Probabilistic Model |
| FID | Fréchet Inception Distance |
| GANs | Generative Adversarial Networks |
| LDM | Latent Diffusion Model |
| LoRA | Low-Rank Adaptation |
| LPIPS | Learned Perceptual Image Patch Similarity |
| PSNR | Peak Signal-to-Noise Ratio |
| SD | Stable Diffusion |

Appendix A

References

1. Petzet, M.; Ziesemer, J. (Eds.) International Charters for Conservation and Restoration/Chartes Internationales sur la Conservation et la Restauration/Cartas Internacionales Sobre la Conservacion y la Restauracion; Monuments & Sites 1; ICOMOS: Paris, France; Lipp GmbH: Munich, Germany, 2004; ISBN 3874906760.

2. Jokilehto, J. A History of Architectural Conservation, 2nd ed.; Routledge: London, UK, 2017; ISBN 9781138639997.

3. Boccardi, G. Authenticity in the heritage context: A reflection beyond the Nara Document. Hist. Environ. Policy Pract. 2019, 10, 4–18.

4. He, M.; Qi, J. Study on the theory of Rafael Moneo architectural typology. IOP Conf. Ser. Mater. Sci. Eng. 2019, 592, 012105.

5. Plevoets, B.; Van Cleempoel, K. Adaptive Reuse of the Built Heritage: Concepts and Cases of an Emerging Discipline; Routledge: London, UK, 2019; p. 256. ISBN 9781138062764.

6. Plevoets, B.; Van Cleempoel, K. Adaptive reuse as a strategy towards conservation of cultural heritage: A literature review. WIT Trans. Built Environ. 2011, 118, 155–164.

7. Tang, Q.; Zheng, L.; Chen, Y.; Chen, J.; Yang, S. Innovative design method for Lingnan region veranda architectural heritage (Qi-Lou) façades based on computer vision. Buildings 2025, 15, 368.

8. Yuan, F.; Xu, X.; Wang, Y. Toward the era of generative-AI-augmented design. Archit. J. China 2023, 659, 14–20. (In Chinese)

9. Yang, J.; Tan, M.; Chen, X.; Lin, Z.; Jiang, X. Exploration of theories and technical mechanisms for smart city planning. J. Southeast Univ. Nat. Sci. Ed. 2024, 54, 1066–1079. (In Chinese)

10. Csiszár, I. The method of types. IEEE Trans. Inf. Theory 1998, 44, 2505–2523.

11. Rossi, A. The Architecture of the City; MIT Press: Cambridge, MA, USA, 1984.

12. Viollet-le-Duc, E.-E. Dictionnaire Raisonné De L’architecture Française Du XIe Au XVIe Siècle; Bance: Paris, France, 1854; Volume 1.

13. Bressani, M. Architecture and The Historical Imagination: Eugène-Emmanuel Viollet-le-Duc, 1814–1879; Routledge: London, UK, 2016.

14. Bressani, M. Notes on Viollet-le-Duc’s philosophy of history: Dialectics and technology. J. Soc. Archit. Hist. 1989, 48, 327–350.

15. Zhong, H.; Wang, L.; Zhang, H. The application of virtual reality technology in the digital preservation of cultural heritage. Comput. Sci. Inf. Syst. 2021, 18, 535–551.

16. Selmanović, E.; Rizvic, S.; Harvey, C.; Boskovic, D.; Hulusic, V.; Chahin, M.; Sljivo, S. Improving accessibility to intangible cultural heritage preservation using virtual reality. J. Comput. Cult. Herit. JOCCH 2020, 13, 13.

17. Poyck, G. Procedural City Generation with Combined Architectures for Real-Time Visualization. Master’s Thesis, Clemson University, Clemson, SC, USA, 2023.

18. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

19. Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2256–2265.

20. Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

21. Hinton, G.E. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

22. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

23. Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of Wasserstein GANs. Adv. Neural Inf. Process. Syst. 2017, 30, 5767–5777.

24. Brock, A.; Donahue, J.; Simonyan, K. Large scale GAN training for high fidelity natural image synthesis. arXiv 2018, arXiv:1809.11096.

25. Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

26. Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive growing of GANs for improved quality, stability, and variation. arXiv 2017, arXiv:1710.10196.

27. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

28. Bachl, M.; Ferreira, D.C. City-GAN: Learning architectural styles using a custom conditional GAN architecture. arXiv 2021, arXiv:1907.05280.

29. Dhariwal, P.; Nichol, A. Diffusion models beat GANs on image synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

30. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

31. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 10684–10695.

32. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Lille, France, 18–24 July 2021; pp. 8748–8763.

33. Zhang, L.; Rao, A.; Agrawala, M. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 3836–3847.

34. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. In Proceedings of the 10th ICLR, Kigali, Rwanda, 25–29 April 2022.

35. Huang, W.; Zheng, H. Architectural drawings recognition and generation through machine learning. In Proceedings of the 38th Annual Conference of the Association for Computer Aided Design in Architecture, Mexico City, Mexico, 17–20 October 2018; pp. 616–625.

36. Nauata, N.; Chang, K.H.; Cheng, C.Y.; Mori, G.; Furukawa, Y. House-GAN: Relational generative adversarial networks for graph constrained house layout generation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 162–177.

37. Sun, C.; Zhou, Y.; Han, Y. Automatic generation of architecture façade for historical urban renovation using generative adversarial network. Build. Environ. 2022, 212, 108781.

38. Zhang, L.; Zheng, L.; Chen, Y.; Huang, L.; Zhou, S. CGAN-assisted renovation of the styles and features of street façades: A case study of the Wuyi area in Fujian, China. Sustainability 2022, 14, 16575.

39. Zhang, J.; Huang, Y.; Li, Z.; Li, Y.; Yu, Z.; Li, M. Development of a method for commercial style transfer of historical architectural façades based on stable diffusion models. J. Imaging 2024, 10, 165.

40. Xu, S.; Zhang, J.; Li, Y. Knowledge-driven and diffusion model-based methods for generating historical building façades: A case study of traditional Minnan residences in China. Information 2024, 15, 344.

41. Xu, Z.; Wang, L. Reshaping classicism: An Abnormal Landscape of Paestum and the rise of Neoclassicism. World Arch. 2023, 3, 110–115. (In Chinese)

42. Houlsby, N.; Giurgiu, A.; Jastrzebski, S.; Morrone, B.; De Laroussilhe, Q.; Gesmundo, A.; Attariyan, M.; Gelly, S. Parameter-efficient transfer learning for NLP. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 2790–2799.

43. Dietterich, T.G. Overfitting and underfitting in machine learning. In Proceedings of the ACM Computing Surveys (CSUR)–1995 Workshop on Overfitting, Seattle, WA, USA, 5–9 August 1995; pp. 114–122.

44. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

45. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; ISBN 9780262035613.

46. Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Adv. Neural Inf. Process. Syst. 2017, 30, 6626–6637.

47. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

48. Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition IEEE, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

49. Liu, J.; Huang, X.; Huang, T.; Chen, L.; Hou, Y.; Tang, S.; Liu, Z.; Ouyang, W.; Zuo, W.; Jiang, J.; et al. A comprehensive survey on 3D content generation. arXiv 2024, arXiv:2402.01166.

50. Zhang, R.; Guo, Z.; Zhang, W.; Li, K.; Miao, X.; Cui, B.; Qiao, Y.; Gao, P.; Li, H. PointCLIP: Point cloud understanding by CLIP. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 5790–5800.

51. Wu, S.; Lin, Y.; Zhang, F.; Zeng, Y.; Xu, J.; Torr, P.; Cao, X.; Yao, Y. Direct3D: Scalable image-to-3D generation via 3D latent diffusion transformer. arXiv 2024, arXiv:2405.14832.

52. Tochilkin, D.; Pankratz, D.; Liu, Z.; Huang, Z.; Letts, A.; Li, Y.; Liang, D.; Laforte, C.; Jampani, V.; Cao, Y.P. TripoSR: Fast 3D object reconstruction from a single image. arXiv 2024, arXiv:2403.02151.

53. Xiang, J.; Lv, Z.; Xu, S.; Deng, Y.; Wang, R.; Zhang, B.; Chen, D.; Tong, X.; Yang, J. Structured 3D latents for scalable and versatile 3D generation. arXiv 2025, arXiv:2412.01506.

| Parameter | Value |
|---|---|
| Model Train Type | flux-lora |
| Pretrained Model | flux1-dev.safetensors |
| AE Model | ae.sft |
| t5xxl Model | t5xxl fp16.safetensors |
| Clip-l | Clip-l.safetensors |
| Timestep Sampling | sigmoid |
| Model Prediction Type | raw |
| Loss-Type | l2 |
| Resolution | 1024, 1024 |
| Save Precision | bf16 |
| Epochs | 20 |
| Batch Size | 4 |
| GPU Equipped | NVIDIA RTX 4090 |
| Learning Rate | 1 × 10⁻⁴ |
| Unet Learning Rate | 5 × 10⁻⁴ |
| Text-Encoder Learning Rate | 1 × 10⁻⁵ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Z.; Zhang, N.; Xu, C.; Xu, Z.; Han, S.; Jiang, L. Typological Transcoding Through LoRA and Diffusion Models: A Methodological Framework for Stylistic Emulation of Eclectic Facades in Krakow. Buildings 2025, 15, 2292. https://doi.org/10.3390/buildings15132292
