Crushed Stone Grain Shapes Classification Using Convolutional Neural Networks

Abstract

1. Introduction

- collection and pre-processing of the empirical base;

- selection of the neural network architectures most suitable for the task;

- training of the selected algorithms;

- assessment of the trained algorithms’ performance on a test sample;

- examination of the findings against the principal quality metrics for classification models;

- comparative analysis of crushed stone grain shape determination across manual classification, the classification from work [5], and the results of the current study.

2. Literature Review

3. Materials and Methods

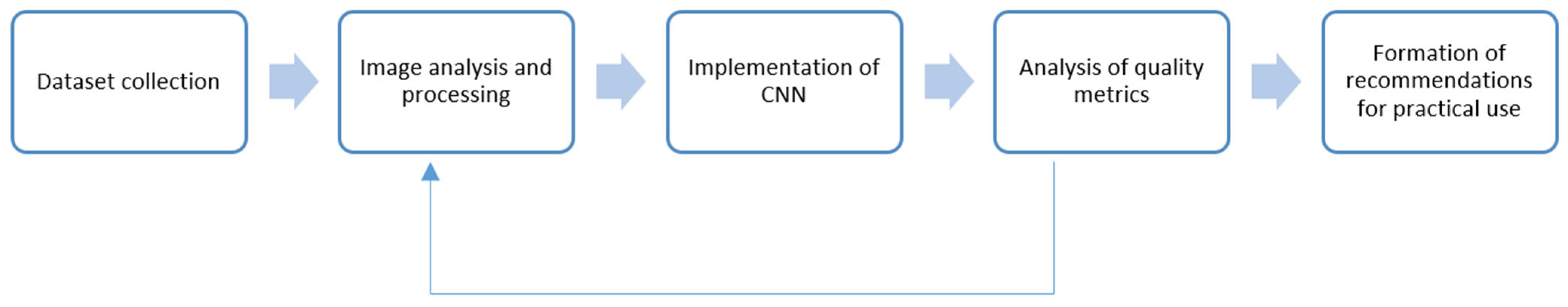

- (1) dataset collection: at this stage, the selected building material is described, and samples are collected;

- (2) image analysis and processing;

- (3) CNN implementation (training, optimization, and testing);

- (4) quality metrics analysis: if the metrics are too low for the subject area, it is necessary to return to stage 2 and refine the original dataset, as well as analyze the settings of the models;

- (5) formation of recommendations for practical use: at this stage, it is necessary to consider the requirements for the practical application of the developed models, as well as their limitations, in order to identify prospects for improving the results.

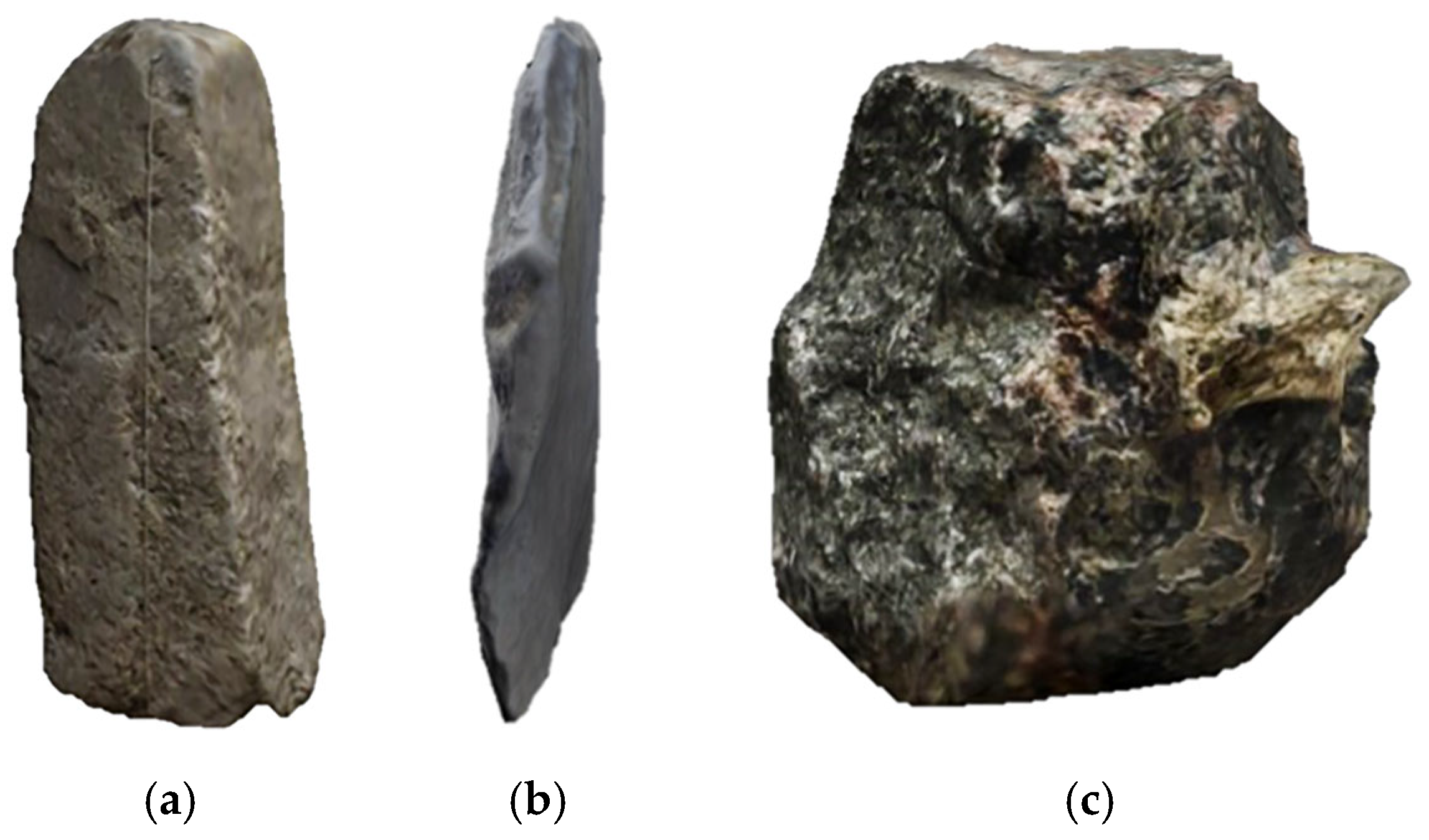

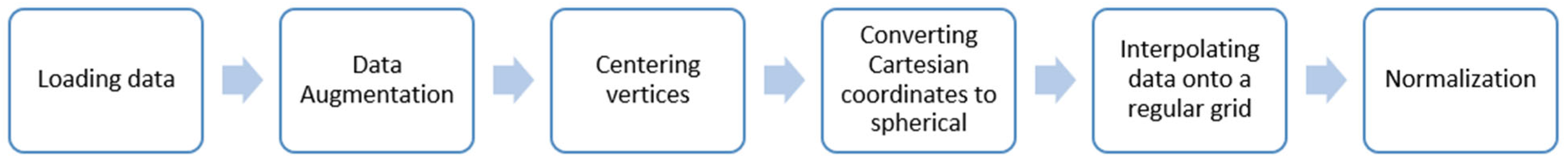

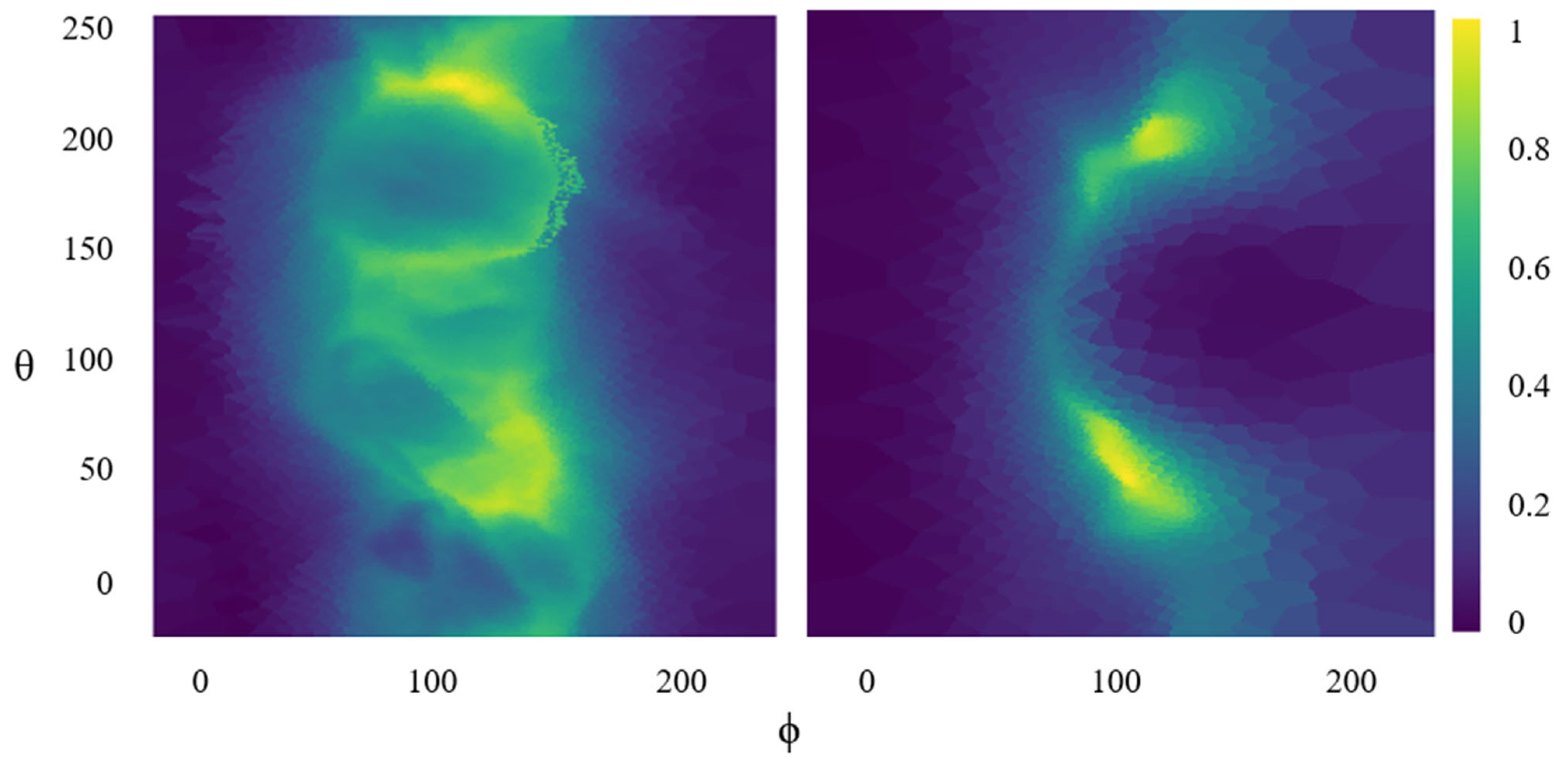

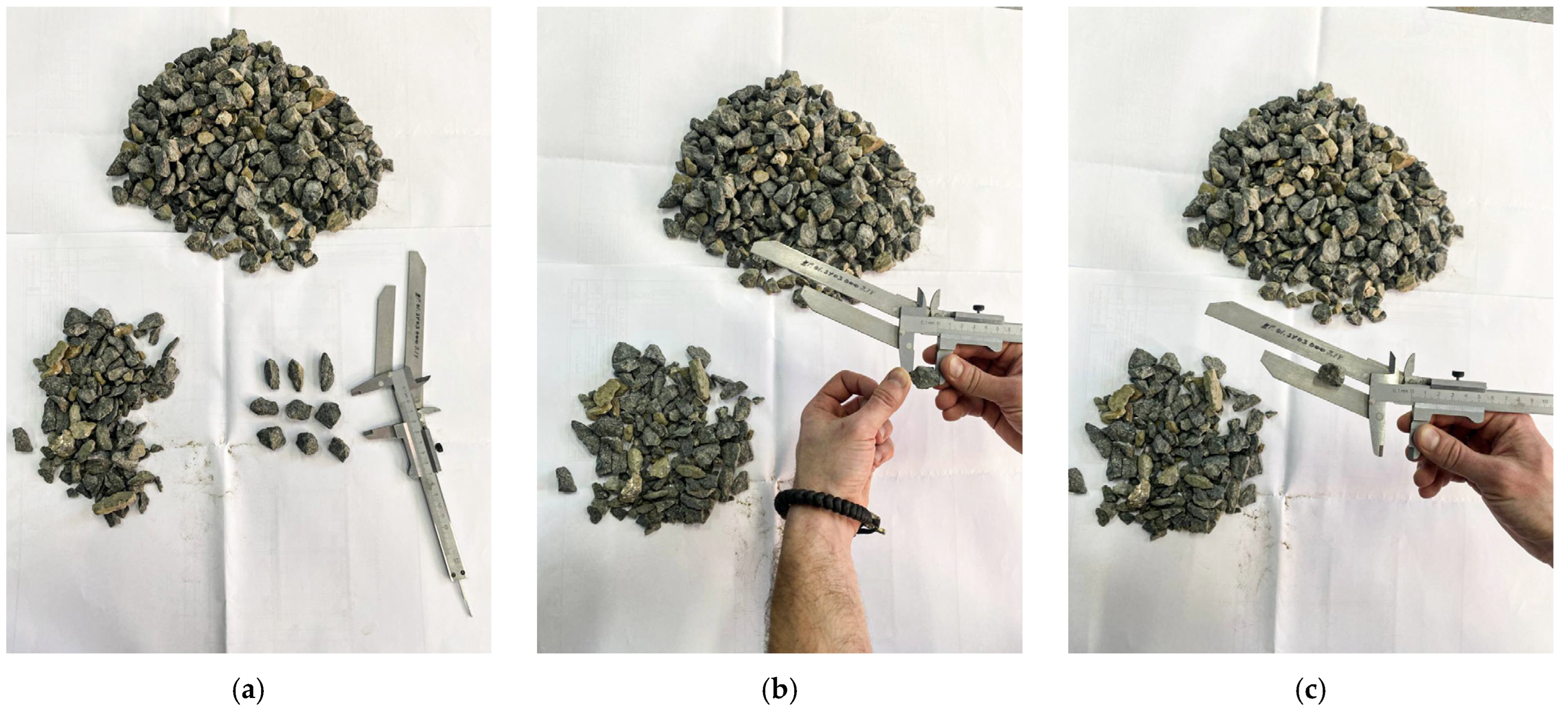

3.1. Dataset Collection, Image Analysis, and Processing

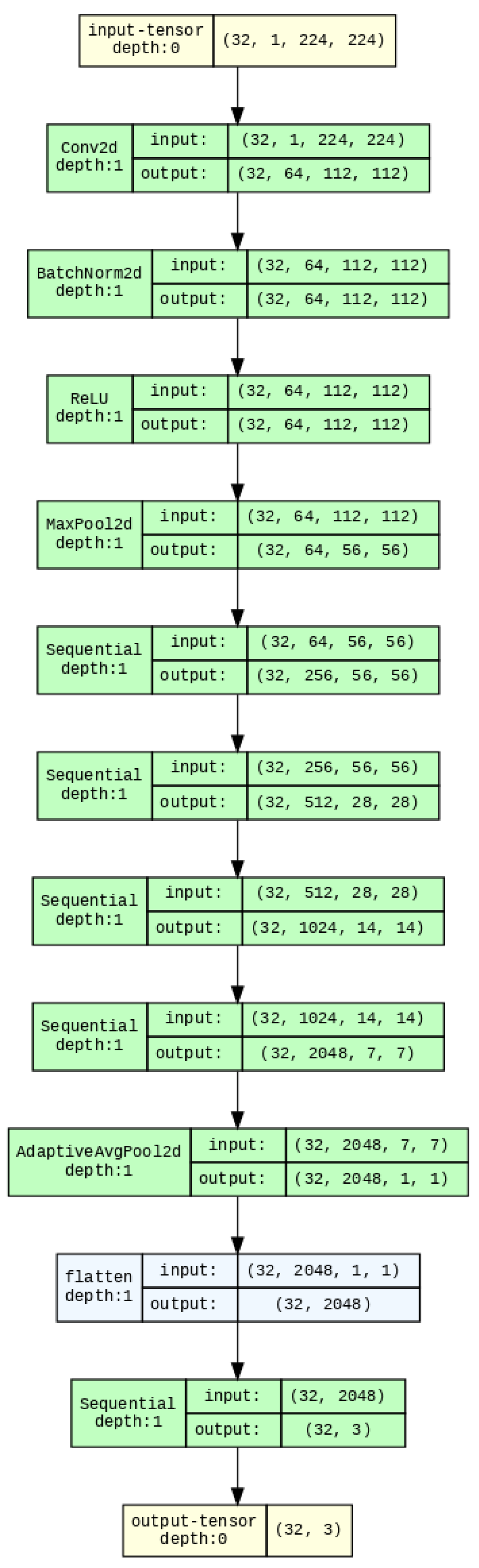

3.2. Selection of Neural Network Architectures

- The first convolutional layer is adapted to work with single-channel images.

- The fully connected layer is replaced by a sequence:

  - Dropout (0.5), which disables 50% of neurons during training to prevent overfitting;

  - Linear (in_features, 3), where in_features is the dimension of the features before the fully connected layer, and 3 is the number of classes.

The input images are preprocessed as follows:

- changing the size to 224 × 224 × 1, which corresponds to the input size of the modified ResNet50 model;

- transformation into a tensor and bringing the order of the axes to the format (C, H, W), where C (Channels) is the number of channels in the image, H (Height) is the height of the image in pixels, and W (Width) is the width of the image in pixels;

- normalization of pixel values by the mean and standard deviation.

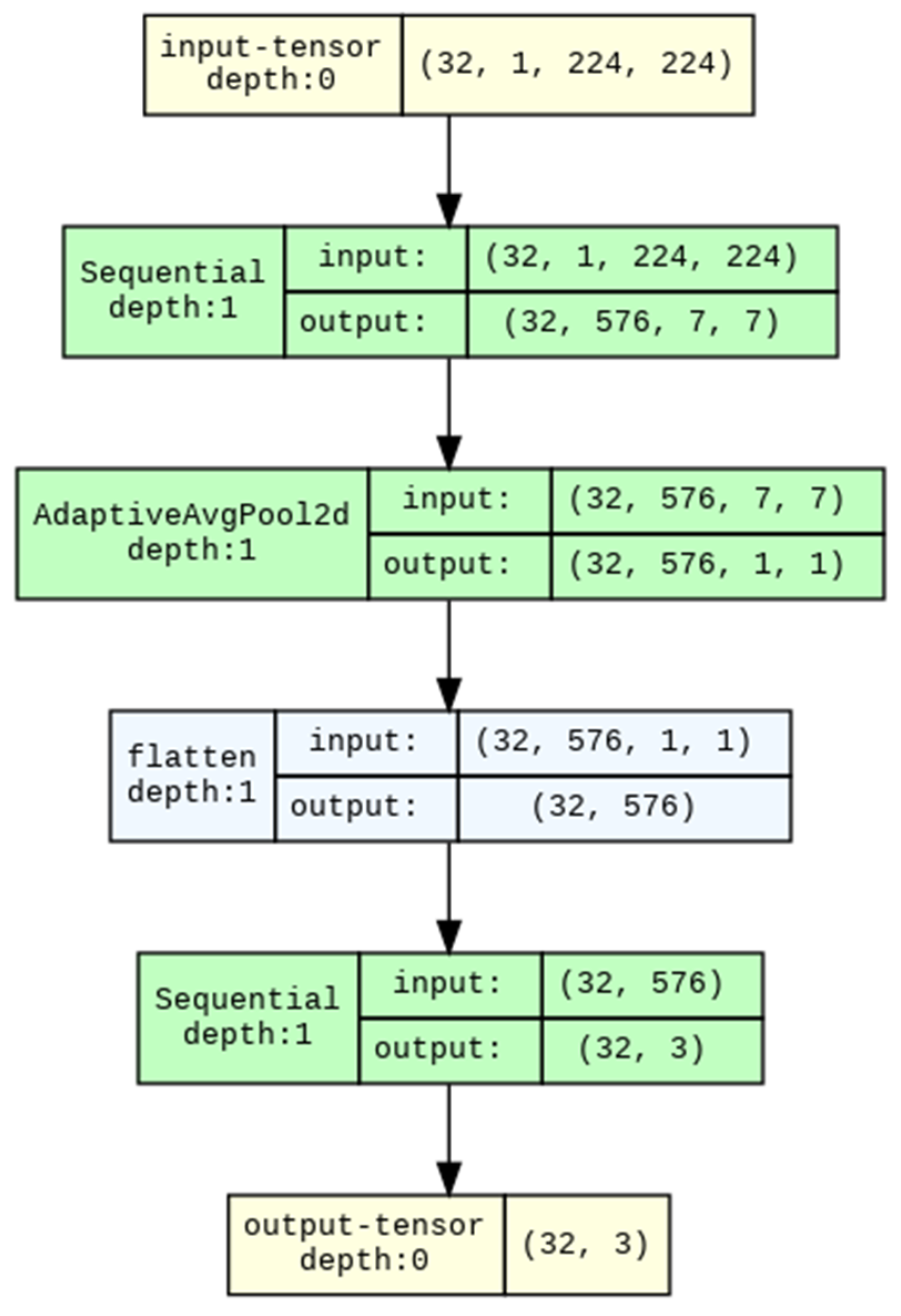

- The first convolutional layer is adapted to work with single-channel images.

- The fully connected layer is replaced with a sequence:

  - Dropout (0.3), which disables 30% of neurons during training to prevent overfitting;

  - Linear (in_features, 3), where in_features is the dimension of the features before the fully connected layer, and 3 is the number of classes.

The input images are preprocessed as follows:

- changing the size to 224 × 224 × 1, which corresponds to the input size of the modified MobileNetV3 Small model;

- transforming into a tensor and bringing the order of the axes to the format (C, H, W);

- normalizing pixel values by the mean and standard deviation.

The third model is based on DenseNet121. DenseNet121 is a deep convolutional neural network based on dense blocks, where each layer is directly connected to all subsequent layers [64]. This architecture promotes efficient gradient propagation and improves feature reuse, which reduces the risk of vanishing gradients and improves the accuracy of the model (Figure 7).

- The first convolutional layer is adapted to work with single-channel images.

- The fully connected layer is replaced by a sequence:

  - Dropout (0.5), which disables 50% of neurons during training to prevent overfitting;

  - Linear (in_features, 3), where in_features is the dimension of the features before the fully connected layer, and 3 is the number of classes.

The input images are preprocessed as follows:

- changing the size to 224 × 224 × 1, which corresponds to the input size of the modified DenseNet121 model;

- converting to a tensor and bringing the order of the axes to the format (C, H, W);

- normalization of pixel values by the mean and standard deviation.

- Dropout (disabling some neurons during training): 0.5 for ResNet50 and DenseNet121, 0.3 for MobileNetV3 Small;

- L2 regularization (weight decay): a higher coefficient for MobileNetV3 Small (1 × 10⁻², versus 1 × 10⁻³ for the other two models) compensated for its smaller number of parameters;

- Data augmentation: random rotations (±45°), shifts, and noise were applied in the preprocessing stage, which increased the diversity of the data and improved the generalization ability.
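
To make the first two regularization mechanisms concrete, here is a small pure-Python sketch. It illustrates inverted dropout (the rescaling variant that deep learning frameworks such as PyTorch apply during training) and the extra gradient term that an L2 penalty contributes. The function names are hypothetical, and the sketch is not the training code of the study.

```python
import random


def inverted_dropout(activations, p, rng):
    """Zero each activation with probability p and rescale the survivors.

    Scaling by 1 / (1 - p) keeps the expected activation unchanged, so the
    layer can simply be switched off at inference time.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep_scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else a * keep_scale for a in activations]


def l2_regularized_gradient(weight, grad, weight_decay):
    """Gradient of loss + (weight_decay / 2) * weight**2 with respect to weight."""
    return grad + weight_decay * weight


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    activations = [1.0] * 10_000
    for p in (0.5, 0.3):  # the two dropout rates listed above
        dropped = inverted_dropout(activations, p, rng)
        zero_share = dropped.count(0.0) / len(dropped)
        print(f"p={p}: share of disabled units = {zero_share:.2f}")
    # With a zero loss gradient, only the penalty term remains; it is ten
    # times larger for weight_decay=1e-2 than for weight_decay=1e-3.
    print(l2_regularized_gradient(weight=2.0, grad=0.0, weight_decay=1e-2))
    print(l2_regularized_gradient(weight=2.0, grad=0.0, weight_decay=1e-3))
```

The penalty term grows with the weight itself, which is why a larger coefficient pulls the weights of a small model towards zero more strongly.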

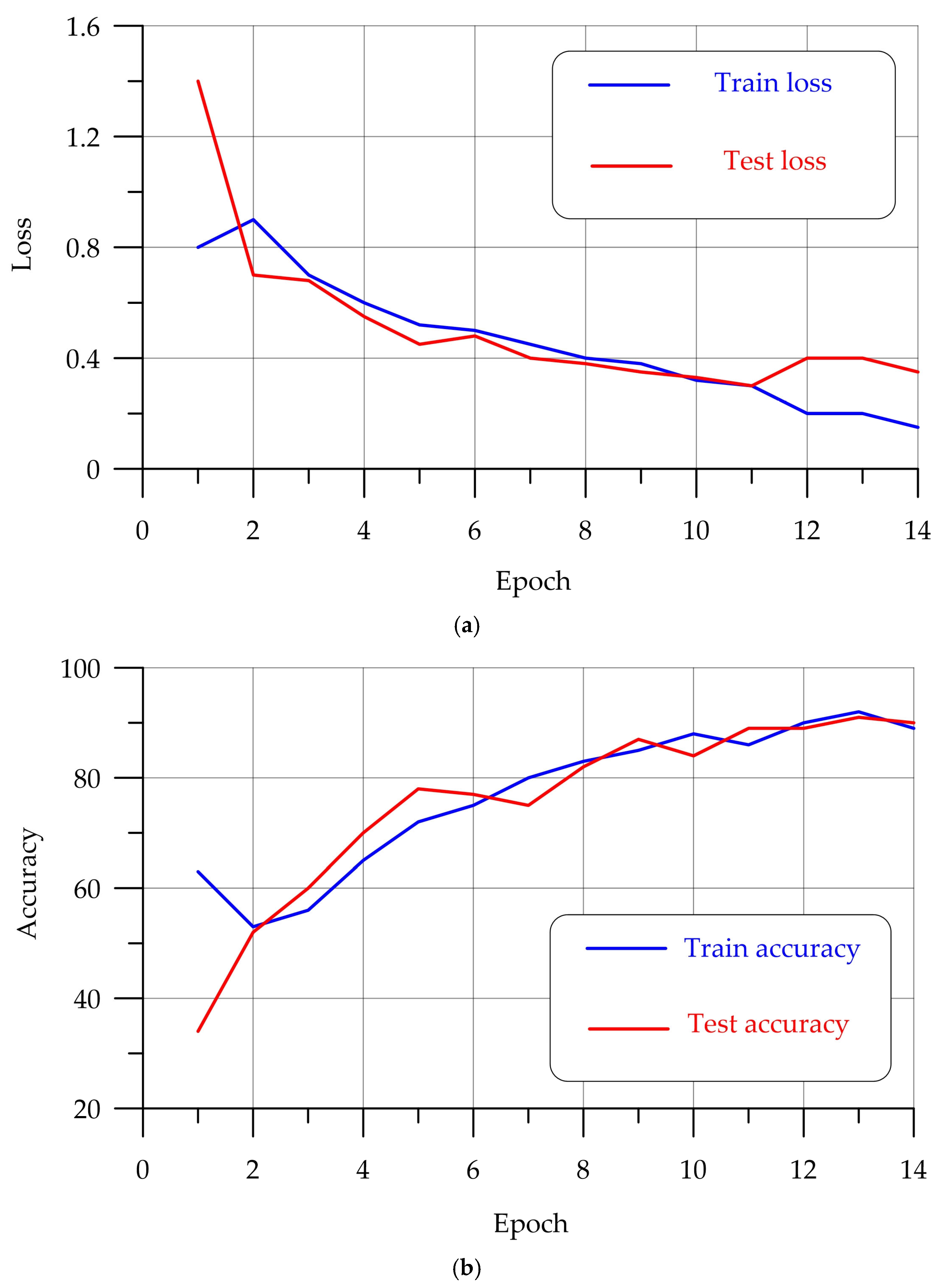

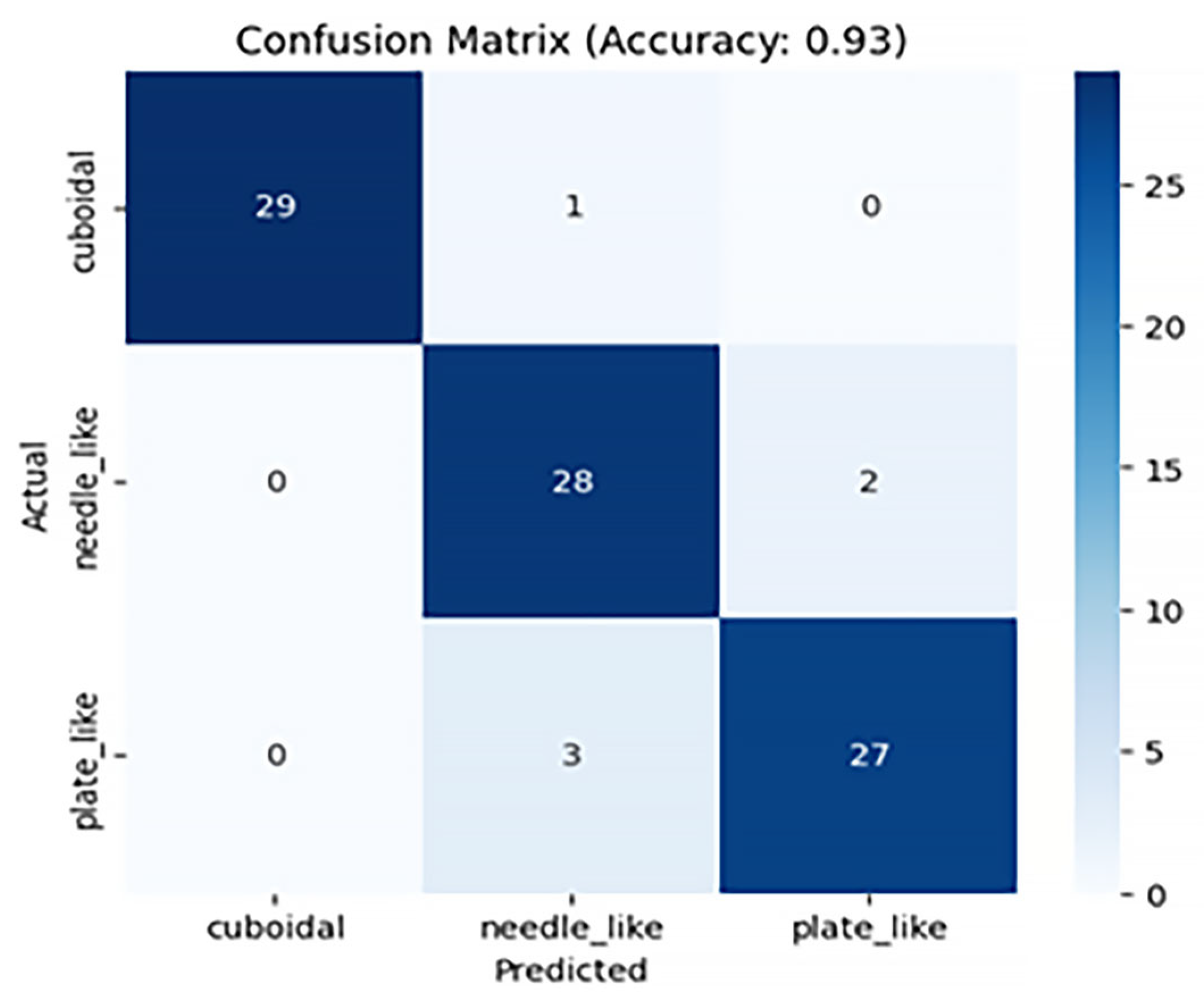

4. Results and Discussion

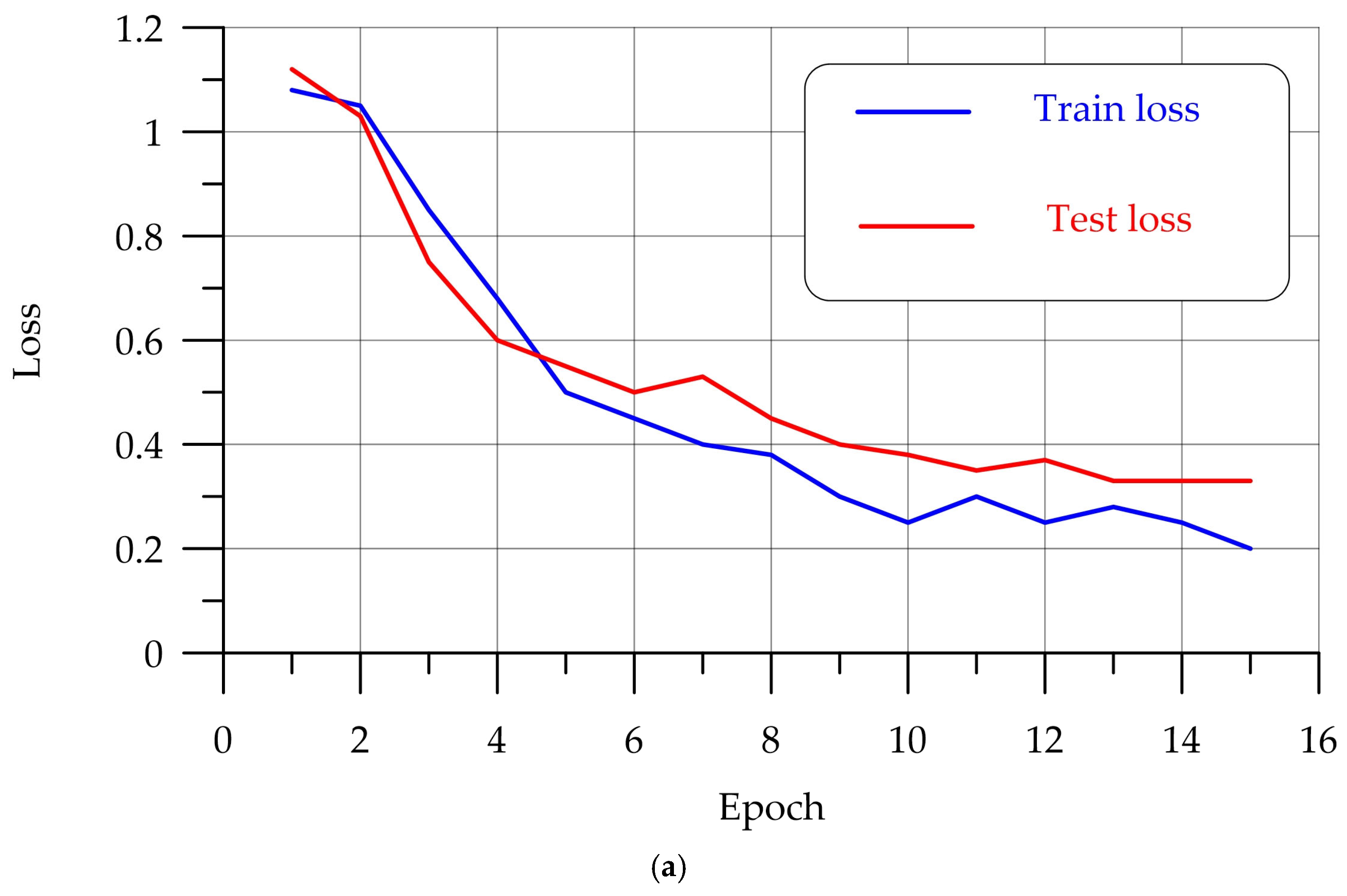

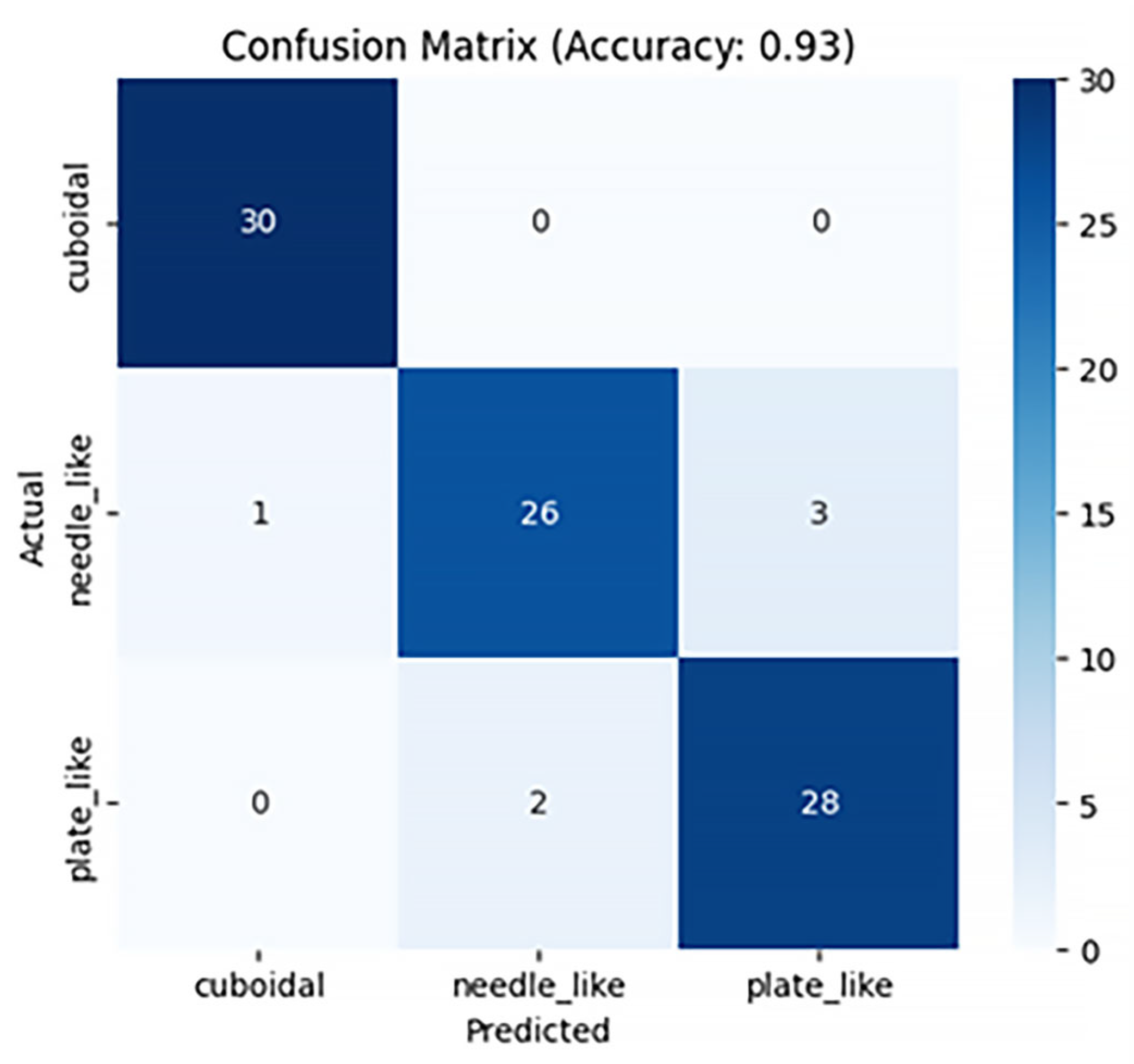

4.1. Training the Model Based on ResNet50

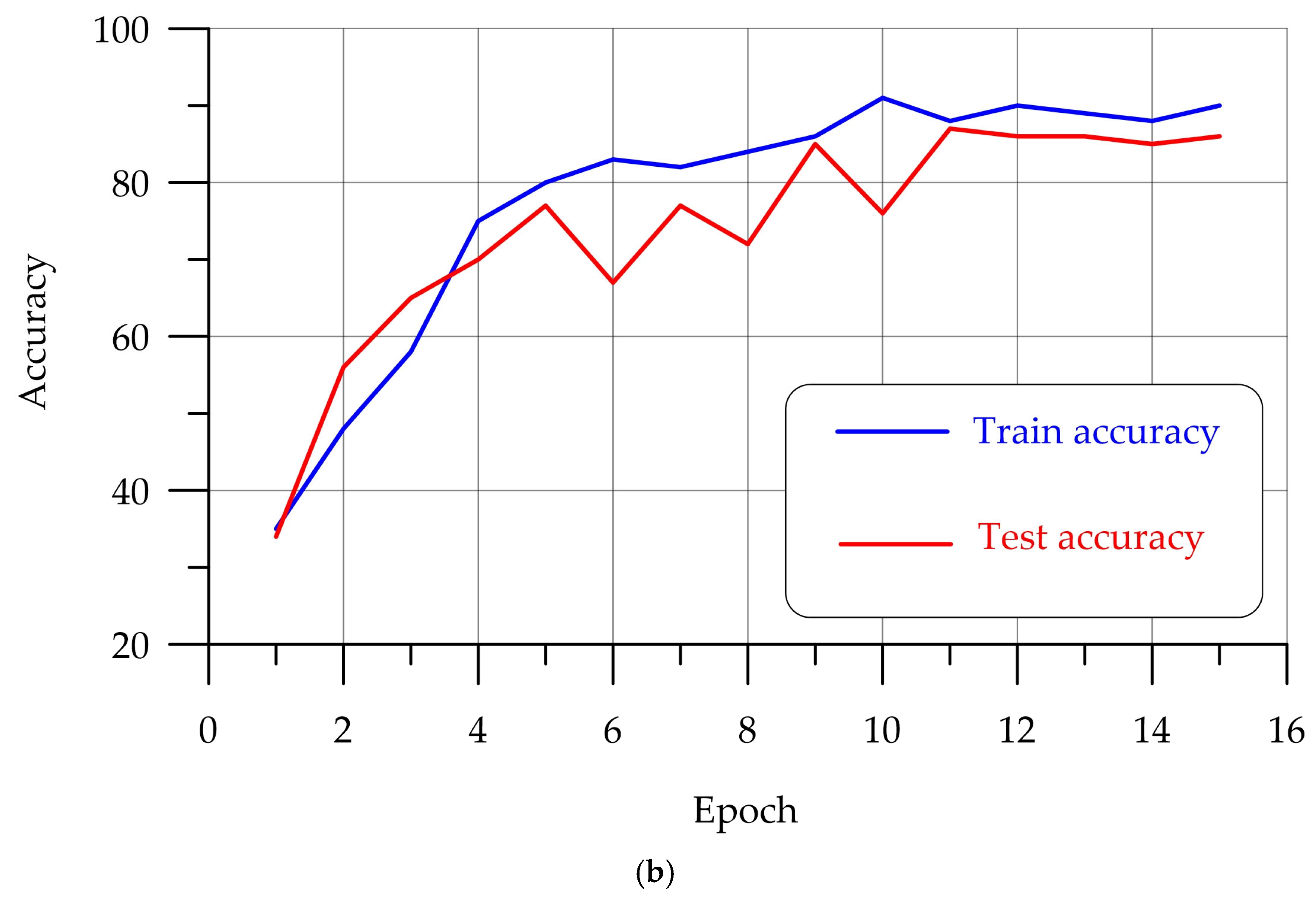

4.2. Training the Model Based on MobileNetV3 Small

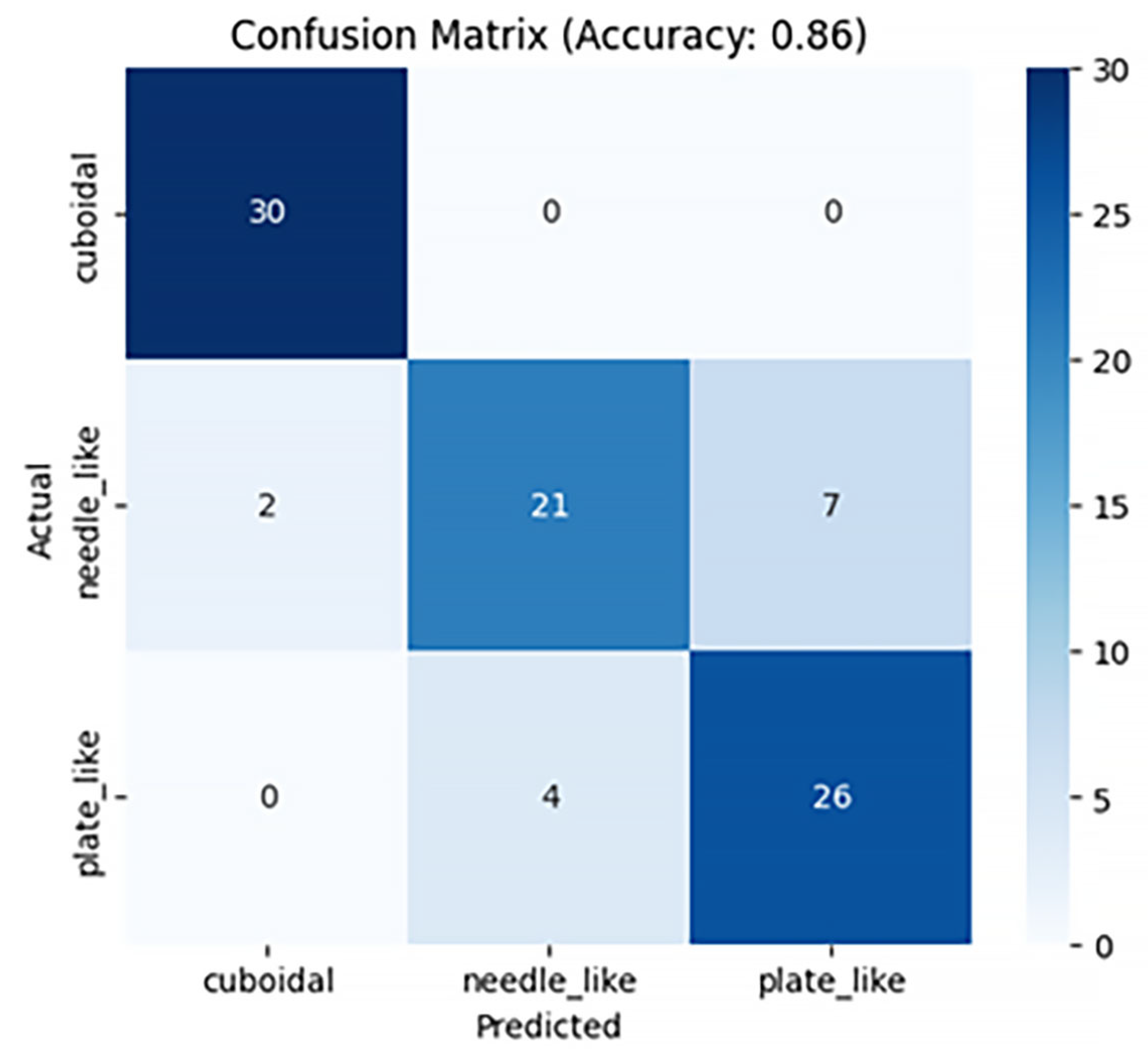

4.3. Training the DenseNet121-Based Model

5. Conclusions

- (1) Three-dimensional images of three crushed stone grain classes were processed to generate tensors, which were subsequently compiled into an empirical database.

- (2) Parameters for stable training were selected, and the convolutional neural networks ResNet50, MobileNetV3 Small, and DenseNet121 were trained.

- (3) On the test sample, the developed algorithms achieved Precision values from 0.86 to 0.94.

- (4) The simplified architecture of MobileNetV3 Small, with its reduced computational costs, is suitable for use on mobile devices.

- (5) The developed method was compared with manual classification and with computer vision neural network models designed for three-dimensional data in the form of point clouds. A classification accuracy of 92% was achieved, which confirms the effectiveness of the proposed approach. The result is comparable with traditional analysis methods and surpasses them for large samples (200 or more grains).

- (6) The developed models allow the determination of the crushed stone grain type to be fully automated, which reduces labor costs and the influence of the human factor.

- (7) The prospects for improving the model lie in the following actions:

  - expanding the training sample to improve the accuracy and generalizability of the algorithms. As shown in the study, stones from the plate_like and needle_like classes are visually very similar, which complicates their classification and calls for a larger dataset;

  - the methods considered can be adapted for classifying other types of building materials, which expands their applicability in various industries;

  - the versatility and cross-platform nature of computer vision models open up opportunities for their integration into existing industrial quality control and automation systems;

  - the models may classify data that differ greatly from the training set, for example, stones from new geological conditions, with low accuracy. If the models are to be applied to special types of building materials, they should be retrained;

  - retraining and using such models requires computing resources, which may be a limitation for some users.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Expanded |
|---|---|
| AI | Artificial Intelligence |
| BIM | Building Information Model |
| CNN | Convolutional Neural Network |
| CV | Computer Vision |
| GNB | Gaussian Naive Bayes |
| GPU | Graphics Processing Unit |
| kNN | k-Nearest Neighbors |
| ML | Machine Learning |
| MLR | Multinomial Logistic Regression |
| RF | Random Forest |
| SVM | Support Vector Machine |
| VR | Virtual Reality |

References

- Kondratieva, T.N.; Chepurnenko, A.S. Prediction of the Strength of the Concrete-Filled Tubular Steel Columns Using the Artificial Intelligence. Mod. Trends Constr. Urban Territ. Plan. 2024, 3, 40–48.

- Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Mailyan, L.R.; Meskhi, B.; Razveeva, I.; Kozhakin, A.; Pembek, A.; Elshaeva, D.; Chernil’nik, A.; et al. Prediction of the Compressive Strength of Vibrocentrifuged Concrete Using Machine Learning Methods. Buildings 2024, 14, 377.

- Seo, J.; Han, S.; Lee, S.; Kim, H. Computer vision techniques for construction safety and health monitoring. Adv. Eng. Inform. 2015, 29, 239–251.

- Ferraris, C.; Amprimo, G.; Pettiti, G. Computer Vision and Image Processing in Structural Health Monitoring: Overview of Recent Applications. Signals 2023, 4, 539–574.

- Beskopylny, A.N.; Shcherban’, E.M.; Stel’makh, S.A.; Shilov, A.A.; Razveeva, I.; Elshaeva, D.; Chernil’nik, A.; Onore, G. Developing Computer Vision Models for Classifying Grain Shapes of Crushed Stone. Sensors 2025, 25, 1914.

- Kondratieva, T.N.; Chepurnenko, A.S. Prediction of Rheological Parameters of Polymers by Machine Learning Methods. Adv. Eng. Res. (Rostov-on-Don) 2024, 24, 36–47.

- Wang, Y.; Li, J.; Zhang, X.; Yao, Y.; Peng, Y. Recent Development in Intelligent Compaction for Asphalt Pavement Construction: Leveraging Smart Sensors and Machine Learning. Sensors 2024, 24, 2777.

- Fan, R.; Tian, A.; Li, Y.; Gu, Y.; Wei, Z. Research Progress on Machine Learning Prediction of Compressive Strength of Nano-Modified Concrete. Appl. Sci. 2025, 15, 4733.

- Qin, X.; Xu, Z.; Liu, M.; Zhang, Y.; Wang, Y.; Yang, Z.; Ling, X. Mechanical Properties and Elastic Modulus Prediction of Mixed Coal Gangue Concrete. Materials 2025, 18, 1240.

- Li, P.; Xu, Y.; Liu, Z.; Jiang, H.; Liu, A. Evaluation and Optimization of Urban Street Spatial Quality Based on Street View Images and Machine Learning: A Case Study of the Jinan Old City. Buildings 2025, 15, 1408.

- Park, J.; Kang, D. Artificial Intelligence and Smart Technologies in Safety Management: A Comprehensive Analysis Across Multiple Industries. Appl. Sci. 2024, 14, 11934.

- Chepurnenko, A.S.; Kondratieva, T.N. Determining the Rheological Parameters of Polymers Using Machine Learning Techniques. Mod. Trends Constr. Urban Territ. Plan. 2024, 3, 71–83.

- Sobol, B.V.; Soloviev, A.N.; Vasiliev, P.V.; Lyapin, A.A. Modeling of Ultrasonic Flaw Detection Processes in the Task of Searching and Visualizing Internal Defects in Assemblies and Structures. Adv. Eng. Res. (Rostov-on-Don) 2023, 23, 433–450.

- Ivanova, S.; Kuznetsov, A.; Zverev, R.; Rada, A. Artificial Intelligence Methods for the Construction and Management of Buildings. Sensors 2023, 23, 8740.

- Lee, Y.-C.; Shariatfar, M.; Rashidi, A.; Lee, H.W. Evidence-driven sound detection for prenotification and identification of construction safety hazards and accidents. Autom. Constr. 2020, 113, 103127.

- George, M.R.; Nalluri, M.R.; Anand, K.B. Severity Prediction of Construction Site Accidents Using Simple and Ensemble Decision Trees. In Proceedings of SECON’21; Lecture Notes in Civil Engineering; Springer: Cham, Switzerland, 2021; Volume 171, pp. 599–608.

- Jayaram, M.A. Computer vision applications in construction material and structural health monitoring: A scoping review. Mater. Today Proc. 2023, in press.

- Nizina, T.A.; Nizin, D.R.; Selyaev, V.P.; Spirin, I.P.; Stankevich, A.S. Big data in predicting the climatic resistance of building materials. I. Air temperature and humidity. Constr. Mater. Prod. 2023, 6, 18–30.

- Wu, T.; Chen, Z.; Li, S.; Xing, P.; Wei, R.; Meng, X.; Zhao, J.; Wu, Z.; Qiao, R. Decoupling Urban Street Attractiveness: An Ensemble Learning Analysis of Color and Visual Element Contributions. Land 2025, 14, 979.

- Rabbi, A.B.K.; Jeelani, I. AI integration in construction safety: Current state, challenges, and future opportunities in text, vision, and audio based applications. Autom. Constr. 2024, 164, 105443.

- Laqsum, S.A.; Zhu, H.; Haruna, S.I.; Ibrahim, Y.E.; Al-shawafi, A. Mechanical and Impact Strength Properties of Polymer-Modified Concrete Supported with Machine Learning Method: Microstructure Analysis (SEM) Coupled with EDS. J. Compos. Sci. 2025, 9, 101.

- Baudrit, C.; Dufau, S.; Villain, G.; Sbartaï, Z.M. Artificial Intelligence and Non-Destructive Testing Data to Assess Concrete Sustainability of Civil Engineering Infrastructures. Materials 2025, 18, 826.

- Ma, K.; Yao, C.; Liu, B.; Hu, Q.; Li, S.; He, P.; Han, J. Segment Anything Model-Based Hyperspectral Image Classification for Small Samples. Remote Sens. 2025, 17, 1349.

- Almusaed, A.; Yitmen, I. Architectural Reply for Smart Building Design Concepts Based on Artificial Intelligence Simulation Models and Digital Twins. Sustainability 2023, 15, 4955.

- Liao, W.; Lu, X.; Fei, Y.; Gu, Y.; Huang, Y. Generative AI design for building structures. Autom. Constr. 2024, 157, 105187.

- Eller, B.; Movahedi Rad, M.; Fekete, I.; Szalai, S.; Harrach, D.; Baranyai, G.; Kurhan, D.; Sysyn, M.; Fischer, S. Examination of Concrete Canvas under Quasi-Realistic Loading by Computed Tomography. Infrastructures 2023, 8, 23.

- Chepurnenko, A.S.; Turina, V.S.; Akopyan, V.F. Artificial intelligence model for predicting the load-bearing capacity of eccentrically compressed short concrete filled steel tubular columns. Constr. Mater. Prod. 2024, 7, 2.

- Hematibahar, M.; Kharun, M.; Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Razveeva, I. Analysis of Models to Predict Mechanical Properties of High-Performance and Ultra-High-Performance Concrete Using Machine Learning. J. Compos. Sci. 2024, 8, 287.

- Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Razveeva, I.; Kozhakin, A.; Pembek, A.; Kondratieva, T.N.; Elshaeva, D.; Chernil’nik, A.; Beskopylny, N. Prediction of the Properties of Vibro-Centrifuged Variatropic Concrete in Aggressive Environments Using Machine Learning Methods. Buildings 2024, 14, 1198.

- Ullah, A.; Asami, K.; Holtz, L.; Röver, T.; Azher, K.; Bartsch, K.; Emmelmann, C. A Machine Learning Approach for Mechanical Component Design Based on Topology Optimization Considering the Restrictions of Additive Manufacturing. J. Manuf. Mater. Process. 2024, 8, 220.

- Ji, Y.; Wang, D.; Wang, J. Study of recycled concrete properties and prediction using machine learning methods. J. Build. Eng. 2024, 94, 110067.

- Jeong, D.; Jeong, T.; Lee, C.; Choi, Y.; Lee, D. A Study on Guidelines for Constructing Building Digital Twin Data. Buildings 2025, 15, 434.

- Mundt, M.; Majumder, S.; Murali, S.; Panetsos, P.; Ramesh, V. Meta-Learning Convolutional Neural Architectures for Multi-Target Concrete Defect Classification with the COncrete DEfect BRidge IMage Dataset. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11188–11197.

- Vasiliev, P.V.; Senichev, A.V.; Giorgio, I. Visualization of internal defects using a deep generative neural network model and ultrasonic nondestructive testing. Adv. Eng. Res. (Rostov-on-Don) 2021, 21, 143–153.

- Pooraskarparast, B.; Dang, S.N.; Pakrashi, V.; Matos, J.C. Performance of Fine-Tuning Techniques for Multilabel Classification of Surface Defects in Reinforced Concrete Bridges. Appl. Sci. 2025, 15, 4725.

- Tropea, M.; Fedele, G.; De Luca, R.; Miriello, D.; De Rango, F. Automatic Stones Classification through a CNN-Based Approach. Sensors 2022, 22, 6292.

- Tereso, M.; Rato, L.; Gonçalves, T. Automatic classification of ornamental stones using Machine Learning techniques A study applied to limestone. In Proceedings of the 2020 15th Iberian Conference on Information Systems and Technologies (CISTI), Seville, Spain, 24–27 June 2020; pp. 1–6.

- Dvorkin, L.; Bordiuzhenko, O.; Tracz, T.; Mróz, K. Optimizing Porous Concrete Using Granite Stone-Crushing Waste: Composition, Strength, and Density Analysis. Appl. Sci. 2024, 14, 6934.

- Tarekegn, Y.G.; Lahmer, T.; Tarekegn, A.G.; Ftwi, E.G. Effects of Coating Thickness and Aggregate Size on the Damping Properties of Concrete: A Numerical Simulation Approach. Coatings 2025, 15, 610.

- Suhendro, T.; Nugroho, R.A. Using Cube Coarse Aggregate to Determine the Compressive Strength of Concrete by Measuring Packing Density and Using Indian Standard and ACI Methods with Variations of Testing Age and Cement Products. Eng. Proc. 2025, 84, 91.

- Hu, Z.; Liu, H.; Zhang, W.; Hei, T.; Ding, X.; Dong, Z. Evaluation of CBR of Graded Crushed Stone of Flexible Base Structural Layer Based on Discrete Element Model. Materials 2023, 16, 363.

- Miao, Y.; Liu, X.; Hou, Y.; Li, J.; Wu, J.; Wang, L. Packing Characteristics of Aggregate with Consideration of Particle size and Morphology. Appl. Sci. 2019, 9, 869.

- Lux, J.; Lau Hiu Hoong, J.D.; Mahieux, P.Y.; Turcry, P. Classification and estimation of the mass composition of recycled aggregates by deep neural networks. Comput. Ind. 2023, 148, 103889.

- Fang, Z.; Song, S.; Wang, H.; Yan, H.; Lu, M.; Chen, S.; Li, S.; Liang, W. Mineral classification with X-ray absorption spectroscopy: A deep learning-based approach. Miner. Eng. 2024, 217, 108964.

- Lau Hiu Hoong, J.D.; Lux, J.; Mahieux, P.Y.; Turcry, P.; Aït-Mokhtar, A. Determination of the composition of recycled aggregates using a deep learning-based image analysis. Autom. Constr. 2020, 116, 103204.

- Nie, J.; Wang, Y.; Yu, Z.; Zhou, S.; Lei, J. High-precision grain size analysis of laser-sintered Al2O3 ceramics using a deep-learning-based ceramic grains detection neural network. Comput. Mater. Sci. 2025, 250, 113724.

- Ji, S.-Y.; Jun, H.-J. Deep Learning Model for Form Recognition and Structural Member Classification of East Asian Traditional Buildings. Sustainability 2020, 12, 5292.

- Benchabana, A.; Kholladi, M.-K.; Bensaci, R.; Khaldi, B. Building Detection in High-Resolution Remote Sensing Images by Enhancing Superpixel Segmentation and Classification Using Deep Learning Approaches. Buildings 2023, 13, 1649.

- Gil, A.; Arayici, Y. Point Cloud Segmentation Based on the Uniclass Classification System with Random Forest Algorithm for Cultural Heritage Buildings in the UK. Heritage 2025, 8, 147.

- Wang, S.; Han, J. Automated detection of exterior cladding material in urban area from street view images using deep learning. J. Build. Eng. 2024, 96, 110466.

- Wang, S.; Park, S.; Park, S.; Kim, J. Building façade datasets for analyzing building characteristics using deep learning. Data Brief 2024, 57, 110885.

- Taye, M.M. Theoretical Understanding of Convolutional Neural Network: Concepts, Architectures, Applications, Future Directions. Computation 2023, 11, 52.

- Obukhov, A.D.; Dedov, D.L.; Surkova, E.O.; Korobova, I.L. 3D Human Motion Capture Method Based on Computer Vision. Adv. Eng. Res. (Rostov-on-Don) 2023, 23, 317–328.

- Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Razveeva, I.; Kozhakin, A.; Meskhi, B.; Chernil’nik, A.; Elshaeva, D.; Ananova, O.; Girya, M.; et al. Computer Vision Method for Automatic Detection of Microstructure Defects of Concrete. Sensors 2024, 24, 4373.

- Liu, N.; Ge, Y.; Bai, X.; Zhang, Z.; Shangguan, Y.; Li, Y. Research on Damage Detection Methods for Concrete Beams Based on Ground Penetrating Radar and Convolutional Neural Networks. Appl. Sci. 2025, 15, 1882.

- Borovkov, A.I.; Vafaeva, K.M.; Vatin, N.I.; Ponyaeva, I. Synergistic Integration of Digital Twins and Neural Networks for Advancing Optimization in the Construction Industry: A Comprehensive Review. Constr. Mater. Prod. 2024, 7, 7.

- Zhang, Y.; Jiang, Y.; Li, C.; Bai, C.; Zhang, F.; Li, J.; Guo, M. Prediction of cement-stabilized recycled concrete aggregate properties by CNN-LSTM incorporating attention mechanism. Mater. Today Commun. 2025, 42, 111137.

- Padhan, M.K.; Rai, A.; Mitra, M. Prediction of grain size distribution in microstructure of polycrystalline materials using one dimensional convolutional neural network (1D-CNN). Comput. Mater. Sci. 2023, 229, 112416.

- Kroell, N.; Thor, E.; Göbbels, L.; Schönfelder, P.; Chen, X. Deep learning-based prediction of particle size distributions in construction and demolition waste recycling using convolutional neural networks on 3D laser triangulation data. Constr. Build. Mater. 2025, 466, 140214.

- Li, D.; Lu, C.; Chen, Z.; Guan, J.; Zhao, J.; Du, J. Graph Neural Networks in Point Clouds: A Survey. Remote Sens. 2024, 16, 2518.

- GOST 8267-93; Crushed Stone and Gravel of Solid Rocks for Construction Works. Specifications. Standartinform: Moscow, Russia, 2018. Available online: https://www.russiangost.com/p-16864-gost-8267-93.aspx (accessed on 5 June 2025).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. arXiv 2019, arXiv:1905.02244.

- Albelwi, S.A. Deep Architecture based on DenseNet-121 Model for Weather Image Recognition. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 559–565.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–13.

- Su, C.; Xu, S.; Zhu, K.; Zhang, X. Rock classification in petrographic thin section images based on concatenated convolutional neural networks. Earth Sci. Inf. 2020, 13, 1477–1484.

- Sanchez-Catasus, C.A.; Batista-García-Ramó, K.; Melie-Garcia, L. Brain connectivity by single-photon emission computed tomography and graph theory: A mini-review. Acad. Med. 2023, 1, 1.

| Num | Parameter | Value | | |
|---|---|---|---|---|
| 1 | Model architecture | ResNet50 (modified) | MobileNetV3 Small | DenseNet121 |
| 2 | Input image size | 224 × 224 × 1 | 224 × 224 × 1 | 224 × 224 × 1 |
| 3 | Number of classes | 3 | 3 | 3 |
| 4 | Activation function | ReLU | ReLU | ReLU |
| 5 | Loss function | CrossEntropyLoss | CrossEntropyLoss | CrossEntropyLoss |
| 6 | Optimizer | Adam | Adam | Adam |
| 7 | Learning rate (lr) | 0.0001 | 0.0001 | 0.0001 |
| 8 | Regularization coefficient (weight decay) | 1 × 10⁻³ | 1 × 10⁻² | 1 × 10⁻³ |
| 9 | Batch size | 32 | 32 | 32 |
| 10 | Number of epochs | 15 | 15 | 10 |
| 11 | Device used | GPU | GPU | GPU |
| 12 | Num params (total) | 25,636,712 | 2,542,856 | 8,062,504 |
| 13 | GFLOPS | 4.09 | 0.06 | 2.83 |
| 14 | File Size, MB | 97.8 | 9.8 | 30.8 |
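
The relationship between rows 12 and 14 of the table can be checked with a short calculation. The sketch below assumes that every parameter is stored as a 32-bit float and that the reported sizes are binary megabytes (1 MB = 1024² bytes); neither assumption is stated in the source, and the helper name `float32_size_mib` is hypothetical.

```python
BYTES_PER_FLOAT32 = 4
MIB = 1024 ** 2

# Parameter counts and reported file sizes copied from the table above.
MODELS = {
    "ResNet50": (25_636_712, 97.8),
    "MobileNetV3 Small": (2_542_856, 9.8),
    "DenseNet121": (8_062_504, 30.8),
}


def float32_size_mib(num_params: int) -> float:
    """Size of the raw weights if every parameter is a 4-byte float."""
    return num_params * BYTES_PER_FLOAT32 / MIB


if __name__ == "__main__":
    for name, (num_params, reported_mb) in MODELS.items():
        estimate = float32_size_mib(num_params)
        print(f"{name}: estimated {estimate:.1f} MiB, reported {reported_mb} MB")
```

Under these assumptions the estimates for ResNet50 and DenseNet121 round to the reported values, and the MobileNetV3 Small estimate lands within about 0.1 MB of the reported one; small differences of this kind would be expected from non-parameter data saved alongside the weights.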

| Model | Precision | Recall | F1 |
|---|---|---|---|
| ResNet50 | 0.94 | 0.93 | 0.93 |
| MobileNetV3 Small | 0.86 | 0.85 | 0.85 |
| DenseNet121 | 0.93 | 0.93 | 0.93 |
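
For reference, Precision, Recall, and F1 for a three-class problem can be derived from a confusion matrix as sketched below. The averaging scheme behind the table is not stated here, so the unweighted (macro) average is an assumption, and the counts in the example are hypothetical rather than data from the study.

```python
def per_class_metrics(confusion):
    """Return [(precision, recall, f1), ...] for a square confusion matrix.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])  # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((precision, recall, f1))
    return results


def macro_average(metrics):
    """Unweighted mean of each metric over the classes."""
    n = len(metrics)
    return tuple(sum(m[i] for m in metrics) / n for i in range(3))


if __name__ == "__main__":
    # Hypothetical counts for three grain classes; not data from the study.
    confusion = [
        [8, 1, 1],
        [2, 7, 1],
        [0, 0, 10],
    ]
    precision, recall, f1 = macro_average(per_class_metrics(confusion))
    print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Looking at the per-class tuples rather than only the averages shows which pairs of classes are confused with each other.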

| № | Method | Photo Fixation Time, Minutes:Seconds | Operating Time, Minutes:Seconds | Total, Minutes:Seconds | Accuracy, % |
|---|---|---|---|---|---|
| 1 | Grain Size Ratio Template and Visual Method | | | | |
| 1.1 | Person 1 | - | 17:36 | 17:36 | 98 |
| 1.2 | Person 2 | - | 18:48 | 18:48 | 96 |
| 1.3 | Person 3 | - | 20:17 | 20:17 | 90 |
| 1.4 | Person 4 | - | 23:41 | 23:41 | 84 |
| 1.5 | Person 5 | - | 26:14 | 26:14 | 80 |
| | Average results of the method using the grain size ratio template | | | 21:19 | 90 |
| 2 | Computer Vision Algorithms | | | | |
| 2.1 | PointNet [5] | 16:40 | 00:20 | 17:00 | 83 |
| 2.2 | PointCloudTransformer [5] | 16:40 | 00:28 | 17:08 | 88 |
| | Average performance of the algorithms | | | 17:04 | 86 |
| 2.3 | ResNet50 | 16:40 | 00:01 | 16:41 | 92 |
| 2.4 | MobileNetV3 Small | 16:40 | 00:02 | 16:42 | 82 |
| 2.5 | DenseNet121 | 16:40 | 00:01 | 16:41 | 90 |
| | Average performance of the proposed algorithms | | | 16:41 | 88 |
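
The average rows of the comparison table can be reproduced from the individual rows with a few lines of Python. The sketch assumes that times are averaged in seconds and that both averages are rounded to the nearest whole unit with halves rounded up; the function names are hypothetical.

```python
def to_seconds(mmss: str) -> int:
    """Convert 'MM:SS' to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def to_mmss(total_seconds: float) -> str:
    """Format seconds as 'MM:SS', rounding to the nearest second."""
    whole = int(total_seconds + 0.5)
    return f"{whole // 60:02d}:{whole % 60:02d}"


def summarize(rows):
    """Average total time and accuracy over (total 'MM:SS', accuracy %) rows."""
    mean_seconds = sum(to_seconds(t) for t, _ in rows) / len(rows)
    mean_accuracy = sum(a for _, a in rows) / len(rows)
    return to_mmss(mean_seconds), int(mean_accuracy + 0.5)


# (total time, accuracy) pairs copied from the table above.
MANUAL = [("17:36", 98), ("18:48", 96), ("20:17", 90), ("23:41", 84), ("26:14", 80)]
POINT_CLOUD = [("17:00", 83), ("17:08", 88)]
PROPOSED = [("16:41", 92), ("16:42", 82), ("16:41", 90)]

if __name__ == "__main__":
    groups = (("manual", MANUAL), ("point cloud [5]", POINT_CLOUD), ("proposed", PROPOSED))
    for label, rows in groups:
        print(label, summarize(rows))
```

The totals for all computer vision methods are dominated by the 16:40 photo fixation time, so the differences between the models in total time come down to one or two seconds of operating time.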

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Beskopylny, A.N.; Shcherban’, E.M.; Stel’makh, S.A.; Razveeva, I.; Mailyan, A.L.; Elshaeva, D.; Chernil’nik, A.; Nikora, N.I.; Onore, G. Crushed Stone Grain Shapes Classification Using Convolutional Neural Networks. Buildings 2025, 15, 1982. https://doi.org/10.3390/buildings15121982
