1. Introduction

A building, in the broad sense, covers both buildings and structures: a human-made environment designed and constructed to meet the needs of social life by applying known materials and technologies, specific scientific laws, Feng Shui concepts, and aesthetic principles [1]. Over decades of development, building automation systems (BAS) have matured, with well-established fieldbus technologies, device ecosystems, and standardized engineering specifications that govern all phases from system design to commissioning. However, contemporary BAS implementations predominantly adopt a centralized-decentralized control architecture for functionally partitioned subsystems, which inherently restricts cooperation among subsystems. This structural limitation compromises connection reliability, real-time responsiveness, and system-level integration, all of which remain suboptimal in practice [1,2,3]. In recent years, intelligent control paradigms that organize distributed architectures around spatial units have advanced notably and offer promising solutions to these long-standing interoperability challenges [1,2,3,4,5,6,7,8,9,10,11].

In general, if the main body of a building is divided into several non-overlapping building spatial units, the building can be considered a composite of all building spatial units [1,2,3,4,5,6,7]. Similarly, if each component of a building facility is considered a building facility unit, the building facility can be regarded as a combination of several facility units [3,4,5,6,7,8,9]. If each building spatial unit and building facility unit in a building are treated as one building unit in the building, the function of the building is a combination of the functions of each building unit, and the maintenance of the building’s function relies on the maintenance of the functions of each building unit [1,4,5,6,7].

Considering whether spatial units are adjacent, the entire set of spatial units within a building forms a single spatial unit network. Similarly, considering whether facility units are connected via pipelines, power lines, or other types of lines or pipes, the building facility can be regarded as a network of facility units. Since each facility unit is deployed within an appropriate spatial unit, the criterion of whether a facility unit is deployed within a spatial unit couples the facility unit network with the spatial unit network in space. If each building spatial unit and each building facility unit is treated as one building unit, a building can be viewed as a network of interconnected building units [1,3,4,5,6]. Given that building units are naturally spatially distributed and local in character, if the maintenance of a building unit’s function is viewed as an internal information processing task, the building’s information processing is inherently distributed and localized [4,5,6,8,9,10,11,12,13,14]. The maintenance of a building unit’s function is conducted either independently within the unit or within the unit with assistance from adjacent units, and all data processed within a building unit are collected and handled locally and autonomously.

Because the information processing required to maintain building functions is distributed across the building units [4,5,6], maintaining building functions amounts to combining the information processing within each building unit. In an insect-intelligent building platform [4,5,6,7,8], each building unit is equipped with a Building Information Processing Unit (BPU) to meet the information processing and control needs of that unit. All information processing and control tasks within a building unit are ultimately completed by the BPU deployed in it. When a BPU needs reference data from other building units to complete information processing and equipment control tasks within its local building unit, it can obtain this data only by querying the BPUs in adjacent building units. If each BPU deployed within a building unit is viewed as a microenvironment, and it is stipulated that only spatially adjacent microenvironments can exchange information with each other, then the information processing and control network that supports an insect-intelligent building platform is a network of microenvironments defined by this information exchange criterion. All control and information processing tasks within the building are completed within the microenvironment network. Emerging machine learning and artificial intelligence algorithms can help computer networks become smarter [15].

In building units, issues such as intelligent detection of sensor faults, area occupant counting, and tactic selection for environment control are frequently modeled as optimization problems [12,16,17,18,19,20,21,22]. Artificial neural networks, evolutionary computation, and other intelligent computational methods are frequently employed to solve these optimization problems [17,23,24,25,26]. The Particle Swarm Optimization (PSO) algorithm [27] has been widely applied to solve relevant optimization problems within buildings owing to its computational efficiency and simplicity [28,29,30,31,32,33,34,35,36].

Furthermore, for multi-objective optimization problems in building information processing [37,38,39,40,41], the Niche-based Particle Swarm Optimization (NPSO) algorithm [42,43] has been widely applied to solve multimodal multi-objective optimization problems [37,38,39,40,41]. In the NPSO algorithm, the search space is divided into several regions, and all particles are uniformly distributed across these regions to form subpopulations. The radius of a subpopulation is calculated based on the position of the global best particle and all other particles in the subpopulation. A diversity preservation mechanism is also employed to prevent particles from prematurely converging to local optima within the same region. Thus, the NPSO algorithm effectively balances exploration and exploitation, which are crucial for solving complex optimization problems with multiple objectives and potential solutions.

In an insect-intelligent building platform system, the network for information processing is structured as a microenvironment network based on information exchange criteria across all microenvironments within the building. The microenvironment network is a decentralized computing environment, and the existing implementations of the PSO algorithm and its various improvements have not been adapted to the loosely coupled distributed computing architecture that this environment provides. When the PSO algorithm is employed to solve optimization problems in this microenvironment network, two challenges arise. First, when the computational power of the building’s information processing units is limited, a large number of particles may make the PSO algorithm converge slowly, potentially preventing the timely completion of the computational tasks. Conversely, if the number of particles is too low, the PSO algorithm may converge quickly but cannot guarantee solution precision. Second, the PSO algorithm is inherently stochastic and requires multiple runs to obtain a satisfactory approximation of the optimal solution. Executing the PSO algorithm multiple times requires more computational power from the processing nodes and additional time to attain a solution [44].

Because the computational power of each BPU in an insect-intelligent building platform system is generally weak, the performance of PSO-based optimization in a microenvironment network can be improved by distributing the particles evenly across the microenvironments: each microenvironment runs a PSO algorithm instance with a small number of particles, and the microenvironments exchange essential computational results through the network for collaborative computation. This method spreads the PSO-based optimization workload of one microenvironment over all microenvironment nodes, significantly reducing the computational demand on each microenvironment while still allowing each microenvironment to approximate the optimal solution of the optimization problem. Consequently, multiple approximate optimal solutions can be acquired rapidly, which makes repeated solving of optimization problems efficient. This study investigates the updating mechanisms for the particle velocity and position of the PSO algorithm in a microenvironment network and presents a Microenvironment-based Particle Swarm Optimization (MPSO) algorithm. Section 2 presents the MPSO algorithm, Section 3 evaluates its performance through experimental testing, and Section 4 summarizes the research on the MPSO algorithm.

2. Microenvironment-Based Particle Swarm Optimization Algorithm

In an insect-intelligent building platform, a building is considered a combination of several spatially adjacent building units, each equipped with a BPU. Each BPU represents a microenvironment within the building, and all the microenvironments within the building form a microenvironment network. The microenvironment network is responsible for all building control and information processing tasks. A certain number of sensing and control devices are typically deployed within each building unit, and the BPU located in it is responsible for sensing information and controlling devices in its designated building unit. Because the information processing or control results within a building unit may directly impact adjacent units, BPUs in neighboring units may communicate. Communication is essential for data sharing and collaborative computing. Consequently, in the microenvironment network, microenvironments that are spatially adjacent and require communication are considered neighboring microenvironments.

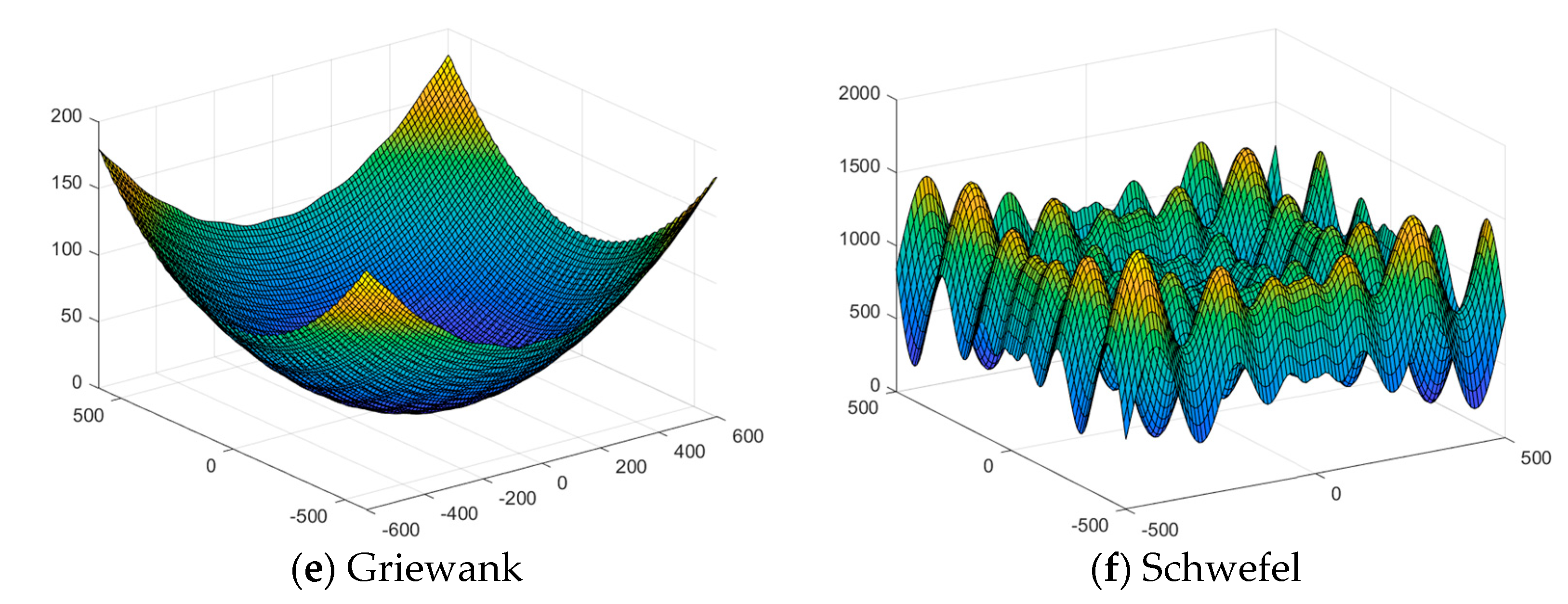

Figure 1 illustrates the topology of a microenvironment network. In Figure 1, each gray dot represents one microenvironment node, and each edge indicates the adjacency between two microenvironments. Logically, the nodes are arranged in 4 rows and 5 columns, for a total of 20 microenvironment nodes. In this network, each node can communicate and exchange data only with its directly adjacent nodes; any two non-adjacent microenvironment nodes cannot communicate directly or exchange data.

Generally, in the PSO algorithm, a particle represents a feasible solution to the corresponding optimization problem. In practical applications, the velocity and position update tactics for the particles of the PSO algorithm are generally specified by Equations (1) and (2). In Equations (1) and (2), v(t) represents the velocity of particles, and x(t) represents the position of particles at time t. ω is the inertia factor, c1 is the cognitive (individual) acceleration coefficient, and c2 is the social (global) acceleration coefficient. r1 and r2 are random numbers uniformly distributed in the range [0, 1]. pBest denotes the personal best position of the particles, and gBest denotes the global best position of all particles.
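
In the standard inertia-weight form of PSO, which uses exactly the symbols defined above, the two updates read as follows. This is assumed to be the form of Equations (1) and (2); any velocity or position bounds used in practice are not shown.

```latex
\begin{align}
v(t+1) &= \omega\, v(t) + c_1 r_1 \bigl(pBest - x(t)\bigr) + c_2 r_2 \bigl(gBest - x(t)\bigr), \tag{1}\\
x(t+1) &= x(t) + v(t+1). \tag{2}
\end{align}
```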

When the PSO algorithm is implemented in a microenvironment network, each particle must be assigned to a microenvironment, and each microenvironment may contain several particles (for simplicity, the number of particles in each microenvironment can be the same). The following assumptions are required to implement the PSO algorithm in a microenvironment network:

- (1) There are several particles in each microenvironment.

- (2) For any given microenvironment, gBestL denotes the historical best position of all particles in the microenvironment.

- (3) For a particular microenvironment with m neighboring microenvironments, gBestLi denotes the best position of all particles in the ith neighboring microenvironment, and gBestA is defined according to Equation (3).

In the microenvironment-based PSO algorithm, for any particle in a given microenvironment with position x(t) and velocity v(t) at time t, the velocity v(t + 1) and position x(t + 1) at time t + 1 are determined using Equations (4) and (2), respectively. Equation (3) is known as the data exchange tactic in the microenvironment-based PSO algorithm, whereas Equations (4) and (2) describe the update tactic of the particle position within the algorithm.
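
One reading of the data exchange and update tactics that is consistent with the definitions above is sketched below. The exact form of Equations (3) and (4) is an assumption here: gBestA is taken to be the best position among the local best gBestL and the m neighbor bests under the fitness function (smaller is better), and gBestA then takes the place of gBest in the standard velocity update.

```latex
\begin{align}
gBestA &= \operatorname*{arg\,min}_{p \,\in\, \{gBestL,\; gBestL_1,\, \dots,\, gBestL_m\}} fun(p), \tag{3}\\
v(t+1) &= \omega\, v(t) + c_1 r_1 \bigl(pBest - x(t)\bigr) + c_2 r_2 \bigl(gBestA - x(t)\bigr). \tag{4}
\end{align}
```

Under this reading, fun(gBestA) can never exceed fun(gBestL), because gBestL is itself one of the candidates in the minimization.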

A full description of the MPSO (Microenvironment-based Particle Swarm Optimization) algorithm is presented in Algorithm 1. In Algorithm 1, the fitness value of each particle is used to evaluate the quality of the particle’s position; the smaller the fitness value, the better the particle’s position. Fitness function fun() is used to calculate the fitness value of a particle, where the position of the particle serves as the independent variable for the function. It is important to note that in microenvironment networks, the fitness function fun() used to calculate the fitness of each particle is consistent across every microenvironment. As shown in Algorithm 1, the following three constraints are imposed:

- (1) Within each microenvironment, a small number of particles serve as a population that independently executes a PSO algorithm instance, adapted to the information processing characteristics of the microenvironment.

- (2) A microenvironment can exchange information with its neighboring microenvironments: neighboring microenvironments can query the positions of each other’s historically best particles.

- (3) Non-adjacent microenvironment nodes cannot exchange information in the microenvironment network.

| Algorithm 1: Microenvironment-based PSO algorithm (MPSO) |
| --- |
| Input: n, number of particles in a microenvironment; d, the dimension of position for each particle; c1 and c2, learning factors; ω, inertia factor; maxN, maximum number of iterations; fun, fitness function; pNeighbor, the list of neighboring microenvironments |
| Output: gBestL, the historical optimal position of all local particles in the microenvironment |
| (1) Initialize the velocity and position of each particle in the microenvironment. |
| (2) Set the initial position of each particle as the initial value of its historical optimal position. |
| (3) Calculate gBestL, the local best position for all local particles in the microenvironment. |
| (4) k = 1; |
| (5) while k <= maxN |
| (6) For each neighbor microenvironment in pNeighbor, query its historical best position gBestLi, where i = 1, 2, …, m, and m is the number of neighbor microenvironment nodes. |
| (7) Calculate gBestA according to Equation (3) for each local particle in the microenvironment. |
| (8) Update the velocity and position of the local particle according to Equations (4) and (2). |
| (9) Calculate the fitness value of particles using the function fun() if their position is updated. |
| (10) If the fitness value at the new position is smaller than the particle’s historical best fitness value, set the particle’s historical best position to the current position of the particle. |
| (11) Update gBestL, the global best position for all local particles. |
| (12) k = k + 1 |
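
The steps of Algorithm 1 can be sketched as a single-machine simulation of the synchronous case. This is a minimal sketch under stated assumptions, not the authors' implementation: gBestA is assumed to be the best of the local and neighbor bests, the velocity update is assumed to be the standard one with gBestA in place of gBest, the velocity and position clamps (`v_max`, `lo`, `hi`) are added here only to keep the swarm bounded, and `sphere` is a hypothetical stand-in for fun().

```python
import random

def sphere(x):
    """Hypothetical objective with minimum 0 at the origin; stands in for fun()."""
    return sum(t * t for t in x)

class Microenvironment:
    """One BPU running a small local swarm."""

    def __init__(self, n, d, fun, rng, lo, hi):
        self.fun, self.rng, self.lo, self.hi = fun, rng, lo, hi
        self.v_max = 0.2 * (hi - lo)  # velocity clamp: an assumption, not in the text
        self.x = [[rng.uniform(lo, hi) for _ in range(d)] for _ in range(n)]  # step 1
        self.v = [[0.0] * d for _ in range(n)]
        self.p_best = [p[:] for p in self.x]                                  # step 2
        self.p_fit = [fun(p) for p in self.x]
        i = min(range(n), key=self.p_fit.__getitem__)                         # step 3
        self.g_best_l, self.g_fit_l = self.p_best[i][:], self.p_fit[i]

    def step(self, neighbor_bests, w, c1, c2):
        # Assumed data exchange rule: best among the local best and neighbor bests.
        g_best_a = min([self.g_best_l] + neighbor_bests, key=self.fun)
        for i, (x, v) in enumerate(zip(self.x, self.v)):
            for j in range(len(x)):
                r1, r2 = self.rng.random(), self.rng.random()
                # Assumed velocity rule: gBestA takes the place of gBest.
                v[j] = (w * v[j] + c1 * r1 * (self.p_best[i][j] - x[j])
                        + c2 * r2 * (g_best_a[j] - x[j]))
                v[j] = max(-self.v_max, min(self.v_max, v[j]))
                x[j] = max(self.lo, min(self.hi, x[j] + v[j]))
            fit = self.fun(x)                                                 # step 9
            if fit < self.p_fit[i]:                                           # step 10
                self.p_best[i], self.p_fit[i] = x[:], fit
                if fit < self.g_fit_l:                                        # step 11
                    self.g_best_l, self.g_fit_l = x[:], fit

def mpso_sync(adjacency, fun, n=20, d=2, w=0.8, c1=2.0, c2=2.0,
              max_n=200, lo=-5.0, hi=5.0, seed=0):
    """Simulate synchronous MPSO; returns (gBestL, fitness) for every node."""
    rng = random.Random(seed)
    envs = [Microenvironment(n, d, fun, rng, lo, hi) for _ in adjacency]
    for _ in range(max_n):
        # Snapshot first so every node reads neighbor bests from the same iteration.
        snapshot = [e.g_best_l[:] for e in envs]
        for e, nbrs in zip(envs, adjacency):
            e.step([snapshot[j] for j in nbrs], w, c1, c2)
    return [(e.g_best_l, e.g_fit_l) for e in envs]

# Four nodes in a line: 0 - 1 - 2 - 3 (adjacency lists play the role of pNeighbor).
line = [[1], [0, 2], [1, 3], [2]]
for node, (pos, fit) in enumerate(mpso_sync(line, sphere, max_n=50)):
    print(node, fit)
```

Each node only ever reads the bests of the nodes listed in its own adjacency entry, which mirrors the constraint that non-adjacent microenvironments cannot exchange information.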

Let gBest be the best position of all particles within the microenvironment network at iteration k. For any microenvironment within the network, fun(gBestL) ≥ fun(gBestA) ≥ fun(gBest). In the MPSO algorithm, the fitness values of the particle positions within a microenvironment can converge to fun(gBest) after a finite number of iterations because the velocity and position of each particle within any microenvironment are updated using Equations (4) and (2). Although the global best position gBest may not appear in every microenvironment, the connectivity of the microenvironment network and Equation (3) ensure that gBest can be transmitted to any microenvironment within a finite number of iterations, because any two microenvironments can exchange information within a finite number of steps. In the extreme case, let all m microenvironments in the network be arranged in a line, with the first labeled A and the last labeled B. If gBest is the local best position within microenvironment A, then after at most m − 1 iterations the local best position in microenvironment B is at least as good as the local best position that A held m − 1 iterations earlier, that is, at least as good as the global best position at that earlier time. The data exchange strategy specified in Equation (3) can therefore propagate the global best position gBest to each microenvironment in the network. That gBest can reach each microenvironment implies that the local best position gBestL of each microenvironment is within a finite number of steps of the global best position. This manifests as only slight differences among the fitness values of gBestL across microenvironments when Algorithm 1 has finished.
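
The m − 1 bound for the line arrangement can be checked with a breadth-first search, under the simplifying assumption that one iteration moves a best position by exactly one hop between adjacent microenvironments. The helper names `hops_from` and `line_topology` are hypothetical.

```python
from collections import deque

def hops_from(adjacency, source):
    """Breadth-first search: number of edges from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adjacency[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist

def line_topology(m):
    """Adjacency lists for m nodes in a row: node i neighbors i - 1 and i + 1."""
    return [[j for j in (i - 1, i + 1) if 0 <= j < m] for i in range(m)]

m = 12
dist = hops_from(line_topology(m), source=0)  # node 0 plays A, node m - 1 plays B
print(dist[m - 1])  # hops needed for a best position found in A to reach B
```

For any connected topology, the largest value in `dist` taken over all sources (the graph diameter) bounds the number of iterations needed for a best position to reach every node.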

In Algorithm 1, Step 5 determines whether Steps 6–12 continue to iterate by checking whether the iteration count k has not exceeded the maximum iteration count maxN. If the condition “k ≤ maxN” in Step 5 is revised to “k ≤ maxN and fun(gBestL) > goalExpect”, Algorithm 1 continues to iterate only while k has not exceeded maxN and the performance of gBestL, the global best position of all local particles, has not yet reached the expected performance goalExpect. With this modified condition, if maxN is set to a sufficiently large value, the loop that updates gBestL is in effect controlled by whether gBestL has reached the expected performance goalExpect.

For Algorithm 1, when gBestL, the global best position of all local particles, is calculated at the kth iteration, if Step 6 requires that the historical best position gBestL of each neighboring microenvironment also correspond to the kth iteration, Algorithm 1 is the synchronous form of the MPSO algorithm. If, on the other hand, Step 6 only requires the most recently available historical best position gBestL of each neighboring microenvironment, Algorithm 1 is the asynchronous form of the MPSO algorithm.
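
The difference between the two forms comes down to what a neighbor query is allowed to return. A minimal sketch, assuming each microenvironment publishes its gBestL tagged with the iteration number; the `BestBoard` class and its method names are hypothetical and do not come from the platform.

```python
from dataclasses import dataclass, field

@dataclass
class BestBoard:
    """Hypothetical per-node record of published local bests, keyed by iteration."""
    history: dict = field(default_factory=dict)  # iteration k -> gBestL

    def publish(self, k, g_best_l):
        self.history[k] = g_best_l

    def query_sync(self, k):
        """Synchronous form: only the value from iteration k is acceptable.

        Returns None when the neighbor has not reached iteration k yet,
        in which case the caller has to wait and ask again.
        """
        return self.history.get(k)

    def query_async(self):
        """Asynchronous form: whatever the neighbor published most recently."""
        if not self.history:
            return None
        return self.history[max(self.history)]

# A neighbor that has finished iterations 1 and 2 while the caller is at k = 3.
board = BestBoard()
board.publish(1, [0.5, 0.5])
board.publish(2, [0.1, 0.2])
print(board.query_sync(3))   # not available yet: the synchronous caller waits
print(board.query_async())   # the asynchronous caller proceeds with iteration 2
```

The synchronous query makes every node advance in lockstep with its slowest neighbor, while the asynchronous query never blocks but may use stale positions.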

3. Experiment Results and Analysis

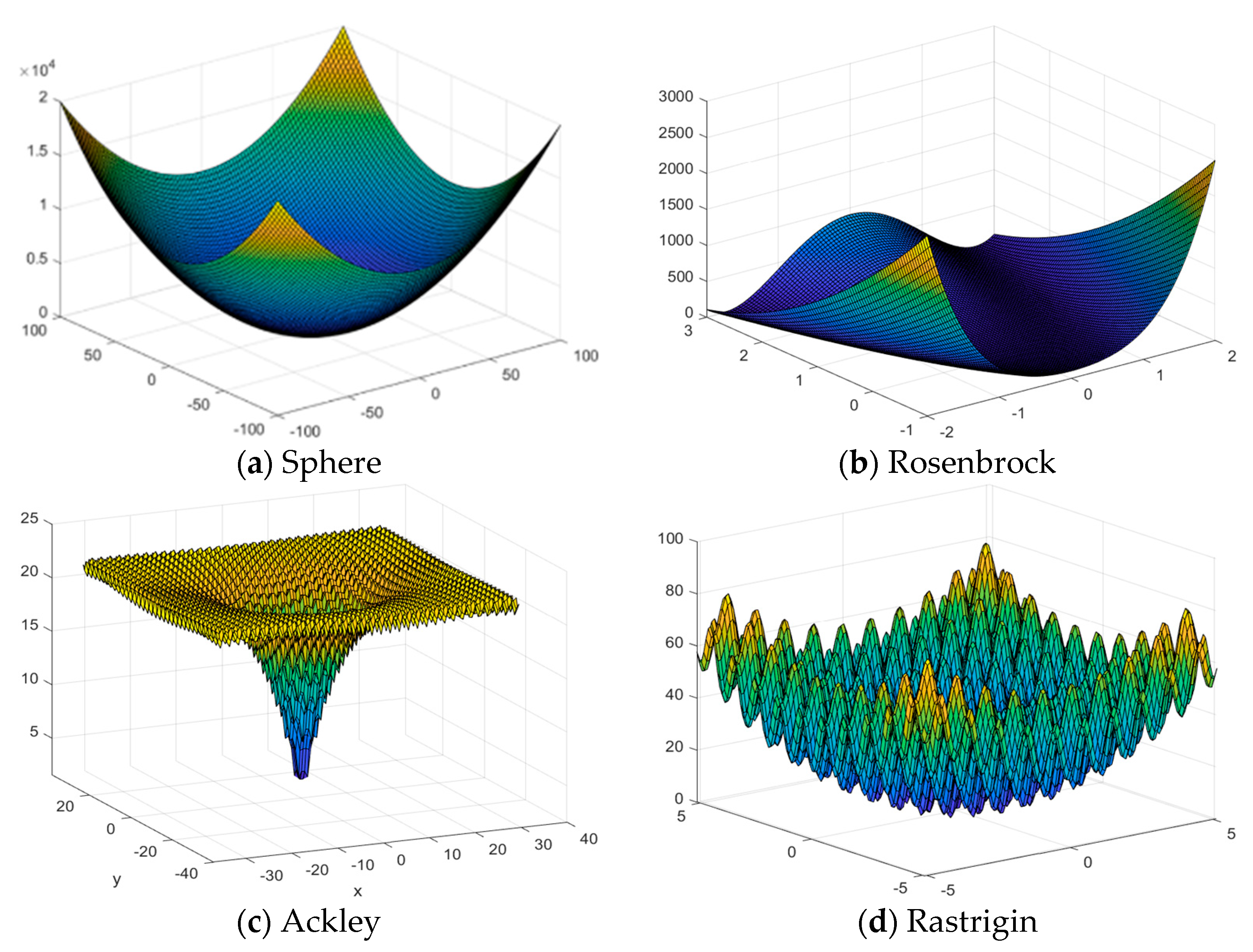

To verify the effectiveness of the MPSO algorithm, the six benchmark functions listed in Table 1 were used to evaluate the performance of Algorithm 1, in particular its time consumption and precision. In addition, the influence of different communication methods and microenvironment network topologies on the performance of Algorithm 1 was tested. Figure 2 shows the shapes of the six benchmark functions when the number of independent variables d is 2. For Algorithm 1 executed in each microenvironment, n = 20, d = 2, c1 = 2, c2 = 2, and ω = 0.8, where n is the local particle number, d is the dimension of the particle position, c1 and c2 are learning factors, and ω is the inertia factor. The fitness function fun(), the maximum number of iterations maxN, and the neighbor microenvironment node list pNeighbor were determined by the optimization problem, the iteration termination condition, and the topology of the microenvironment network, respectively.

The topology of the microenvironment network used in the experiment is shown in Figure 3, which corresponds to the distribution of spatial units of the Anhui Province Key Laboratory of Intelligent Building and Building Energy Saving [44]. Because PSO is a stochastic search algorithm, the solution obtained by a single run is only a candidate solution of the optimization problem. To find the optimal solution, the PSO algorithm must be run multiple times, and the candidate solution with the best precision is chosen as the optimal solution. Because there are 12 microenvironments in the network illustrated in Figure 3, the experiment first treated them as 12 independent computing environments, in each of which the PSO algorithm independently solved the extremum of the benchmark functions with n = 240, d = 2, c1 = 2, c2 = 2, and ω = 0.8.
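
The baseline procedure of running PSO several times and keeping the best candidate can be sketched as follows. This is an illustrative sketch rather than the experimental code: the `sphere` objective, the search bounds, the velocity clamp, and the reduced particle and iteration counts in the usage lines are all assumptions chosen to keep the example small.

```python
import random

def sphere(x):
    """Hypothetical objective with minimum 0 at the origin."""
    return sum(t * t for t in x)

def pso(fun, n=240, d=2, w=0.8, c1=2.0, c2=2.0, max_n=200,
        lo=-5.0, hi=5.0, seed=None):
    """One run of inertia-weight PSO; returns (gBest, fun(gBest))."""
    rng = random.Random(seed)
    v_max = 0.2 * (hi - lo)  # velocity clamp: an assumption, not in the text
    xs = [[rng.uniform(lo, hi) for _ in range(d)] for _ in range(n)]
    vs = [[0.0] * d for _ in range(n)]
    p_best = [x[:] for x in xs]
    p_fit = [fun(x) for x in xs]
    g = min(range(n), key=p_fit.__getitem__)
    g_best, g_fit = p_best[g][:], p_fit[g]
    for _ in range(max_n):
        for i in range(n):
            x, v = xs[i], vs[i]
            for j in range(d):
                r1, r2 = rng.random(), rng.random()
                v[j] = (w * v[j] + c1 * r1 * (p_best[i][j] - x[j])
                        + c2 * r2 * (g_best[j] - x[j]))
                v[j] = max(-v_max, min(v_max, v[j]))
                x[j] = max(lo, min(hi, x[j] + v[j]))
            fit = fun(x)
            if fit < p_fit[i]:
                p_best[i], p_fit[i] = x[:], fit
                if fit < g_fit:
                    g_best, g_fit = x[:], fit
    return g_best, g_fit

def best_of_runs(fun, runs=12, **kwargs):
    """Run PSO `runs` times with different seeds and keep the best candidate."""
    candidates = [pso(fun, seed=s, **kwargs) for s in range(runs)]
    return min(candidates, key=lambda c: c[1]), candidates

best, candidates = best_of_runs(sphere, runs=12, n=40, max_n=50)
print("best fitness:", best[1])
print("worst fitness:", max(c[1] for c in candidates))
```

The spread between the best and worst candidate fitness is what motivates repeated runs; the total cost is the sum of all run times when the runs share one processor.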

Table 2 lists the time consumed and the convergence precision of the PSO algorithm in the 12-node microenvironment network shown in Figure 3 when solving the extremum of each benchmark function with maxN = 200. According to Table 2, when the PSO algorithm is repeatedly used to solve the extremum of the benchmark functions, the performance of the extremum candidate values for all benchmark functions, except the Schwefel function, exceeds the desired performance of 1 × 10⁻⁵, and the extremum performance of the Schwefel function can reach the 1 × 10⁻⁵ level. Additionally, the significant differences between the gray-shaded values in the “maximum” and “average” precision columns and the corresponding “minimum” precision values in Table 2 indicate the necessity of running the PSO algorithm multiple times when solving an optimization problem. Similarly, Table 3 lists the time, convergence precision, and maximum number of iterations for independently solving the extremum of each benchmark function using the PSO algorithm in 12 microenvironments with maxN = 200 and goal precision = 1 × 10⁻⁵. Table 4 lists the time and convergence precision for independently solving the extremum of each benchmark function using the PSO algorithm in 12 microenvironments with goal precision = 1 × 10⁻⁵ and maxN = 20,000, a cap that prevents excessively long computation times.

Given that the experimental data in Table 2 show that the performance of the PSO algorithm in solving the extremum of the Schwefel function is greater than 1 × 10⁻⁵, the goal performance for the minimum value of the Schwefel function in Table 3 is 1 × 10⁻⁴. For comparison, the goal performance for the minimum value of the Schwefel function in Table 4 is 1 × 10⁻⁵.

Table 3 and Table 4 show that when the PSO algorithm is repeatedly used to solve the extremum of the benchmark functions, the performance of the minimum value of all benchmark functions, excluding the Schwefel function, surpasses the goal performance of 1 × 10⁻⁵. In contrast, the performance of the Schwefel function’s minimum value surpasses 1 × 10⁻⁴. According to the minimum precision in Table 2, Table 3 and Table 4, it can be concluded that the PSO algorithm can effectively obtain the extremum of all benchmark functions. Furthermore, the significant differences between the shaded “maximum” and “average” precision values and the corresponding “minimum” precision values in Table 2, Table 3 and Table 4 also highlight the necessity of running the PSO algorithm multiple times.

Comparing the “Minimum” precision column and the “Minimum”, “Maximum”, “Average”, and “Total” time columns of the Schwefel row across Table 2, Table 3 and Table 4 shows that, when the minimum of the Schwefel function is solved using the PSO algorithm, the performance of the feasible solutions can quickly surpass 1 × 10⁻⁴ (Table 2 and Table 3). However, the data in Table 4 show that, even with continued iterations, the feasible solutions obtained by the PSO algorithm still had difficulty reaching a precision of 1 × 10⁻⁵ within 20,000 iterations.

With the 12-node microenvironment network illustrated in Figure 3, the experiment further tested the time and precision performance of the synchronous MPSO algorithm for the extremum search of each benchmark function. Table 5 presents the experimental results for maxN = 200, Table 6 presents the results for maxN = 200 with a goal precision of 1 × 10⁻⁵, and Table 7 lists the results for a goal precision of 1 × 10⁻⁵, where maxN is the maximum number of iterations. In Table 6 and Table 7, the goal precision for the minimum of the Schwefel function is 1 × 10⁻⁴. In the experiment, the number of particles in the PSO algorithm was set to 240, with 20 particles in each microenvironment. Based on the minimum precision values listed in Table 5, Table 6 and Table 7, it can be concluded that the synchronous MPSO algorithm can effectively determine the extremum of all benchmark functions. Furthermore, comparing the total time in Table 2, Table 3 and Table 4 with the maximum time in Table 5, Table 6 and Table 7 shows that, when an optimization problem must be solved multiple times under the same convergence conditions to obtain several candidate solutions from which the optimal solution is selected, the synchronous MPSO algorithm consistently requires much less time than the PSO algorithm.

Using the 12-node microenvironment network illustrated in Figure 3, the experiment further tested the time and precision performance of the asynchronous MPSO algorithm for the extremum of each benchmark function. Table 8 presents the experimental results for maxN = 200, Table 9 presents the results for maxN = 200 with a goal precision of 1 × 10⁻⁵, and Table 10 lists the results for a goal precision of 1 × 10⁻⁵, where maxN is the maximum number of iterations. In Table 9 and Table 10, the goal precision for the minimum of the Schwefel function is 1 × 10⁻⁴. In the experiment, the number of particles in the PSO algorithm was set to 240, with 20 particles in each microenvironment. Based on the minimum precision values listed in Table 8, Table 9 and Table 10, it can be concluded that the asynchronous MPSO algorithm can effectively determine the extremum of all benchmark functions. Furthermore, comparing the total time in Table 2, Table 3 and Table 4 with the maximum time in Table 8, Table 9 and Table 10 shows that, when an optimization problem must be solved multiple times under the same convergence conditions to obtain several candidate solutions from which the optimal solution is selected, the asynchronous MPSO algorithm consistently requires much less time than the PSO algorithm. Comparing the precision and time data in Table 5, Table 6 and Table 7 with those in Table 8, Table 9 and Table 10 shows that the asynchronous MPSO algorithm generally achieves slightly lower precision than the synchronous MPSO algorithm in approximating the optimal solutions of the benchmark functions and also requires more time. The synchronous MPSO algorithm therefore performed better than the asynchronous MPSO algorithm in solving the optimal solutions of the benchmark functions.

The topology of the microenvironment network for the performance data of the MPSO listed in

Table 5,

Table 6,

Table 7,

Table 8,

Table 9 and

Table 10 is illustrated in

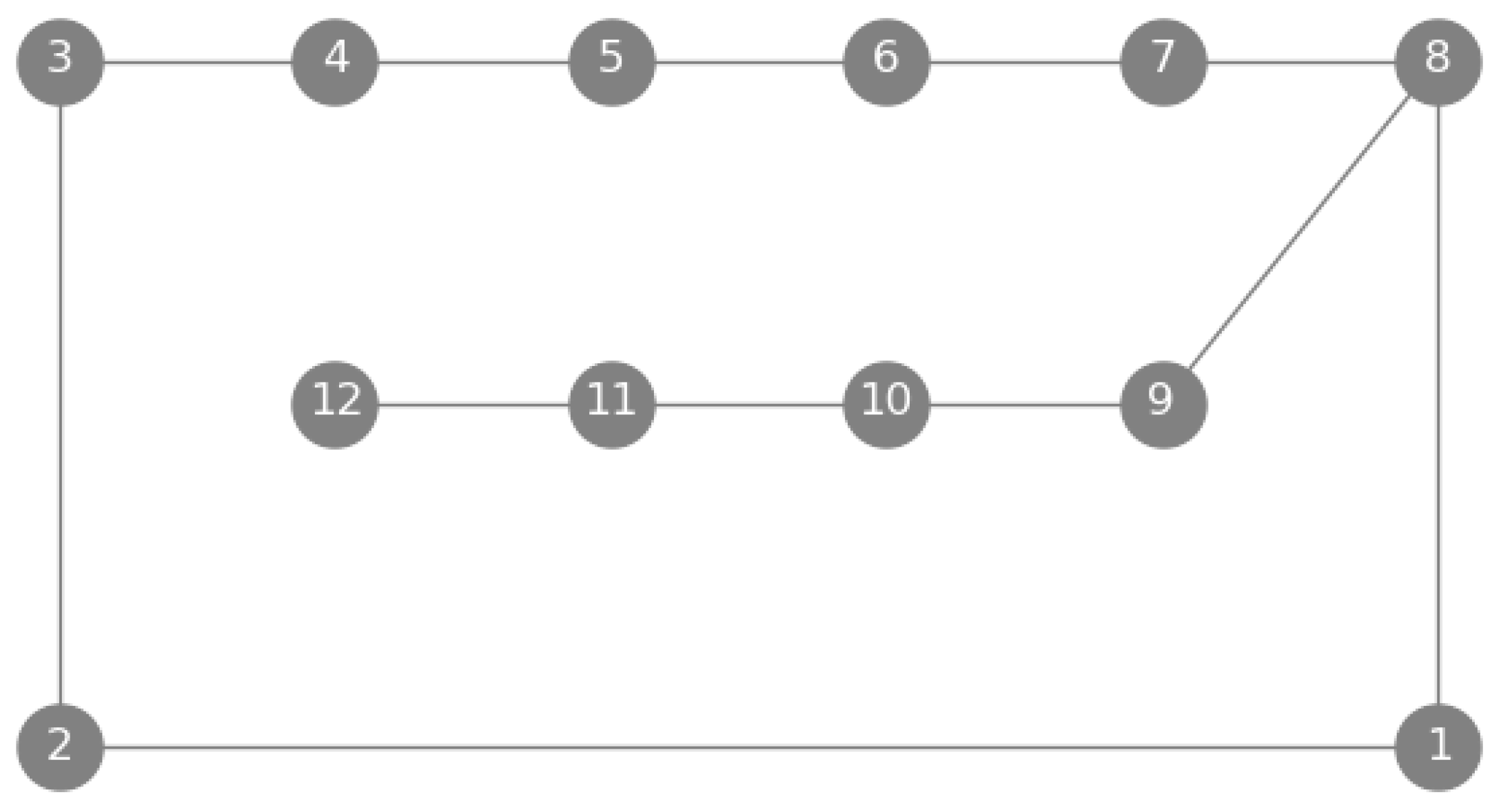

Figure 3, which is an actual microenvironment network deployed in the Anhui Provincial Key Laboratory of Intelligent Buildings and Building Energy Saving. It is consistent with the spatial unit distribution of the laboratory. Furthermore, to test the robustness of the MPSO algorithm, four different microenvironmental network topologies were used, and the microenvironment network had 12 microenvironment nodes. The topologies of the four microenvironmental networks are shown in

Figure 4. These topologies were used to evaluate the performance of the synchronous MPSO algorithm in solving the extremum of all benchmark functions. The goal performance for the Schwefel function was set to 1 × 10

−4, whereas that for the other benchmark functions was set to 1 × 10

−5.

Each of the four microenvironment networks shown in Figure 4 has 12 microenvironment nodes and a distinct topology: line, ring, full, or random. In the line network, every node except the first and last has two neighbors, and each node can communicate only with its adjacent neighbors. In the ring network, each node has two neighbors, all nodes form a closed loop, and each node can communicate only with its two neighbors. In the full network, every microenvironment node can communicate with every other node. The random topology is a randomly generated connected graph with 12 nodes in which each node has at least one and at most six neighbors. This bound corresponds to the spatial structure of a building, where each spatial unit can have up to six neighbors, located above, below, left, right, in front, and behind.
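
The four topology families can be generated as neighbor sets, for example as below. This is a sketch: the function names are hypothetical, and the random generator shown (a random spanning tree plus a few extra edges, both respecting the degree cap) is only one of many ways to obtain a connected graph with one to six neighbors per node; the generator actually used in the experiment is not specified.

```python
import random

def line(m):
    return {i: {j for j in (i - 1, i + 1) if 0 <= j < m} for i in range(m)}

def ring(m):
    return {i: {(i - 1) % m, (i + 1) % m} for i in range(m)}

def full(m):
    return {i: set(range(m)) - {i} for i in range(m)}

def random_connected(m, max_degree=6, extra_edges=6, seed=0):
    """Random connected graph: spanning tree first, then optional extra edges."""
    rng = random.Random(seed)
    adj = {i: set() for i in range(m)}
    order = list(range(m))
    rng.shuffle(order)
    # Attach each new node to an already placed node that still has spare degree;
    # this guarantees connectivity and at least one neighbor per node.
    for idx in range(1, m):
        u = order[idx]
        w = rng.choice([p for p in order[:idx] if len(adj[p]) < max_degree])
        adj[u].add(w)
        adj[w].add(u)
    added = attempts = 0
    while added < extra_edges and attempts < 1000:
        attempts += 1
        u, w = rng.sample(range(m), 2)
        if w not in adj[u] and len(adj[u]) < max_degree and len(adj[w]) < max_degree:
            adj[u].add(w)
            adj[w].add(u)
            added += 1
    return adj

def is_connected(adj):
    """Depth-first reachability check from node 0."""
    seen, stack = {0}, [0]
    while stack:
        for w in adj[stack.pop()]:
            if w not in seen:
                seen.add(w)
                stack.append(w)
    return len(seen) == len(adj)

m = 12
for name, graph in [("line", line(m)), ("ring", ring(m)),
                    ("full", full(m)), ("random", random_connected(m))]:
    degrees = [len(nb) for nb in graph.values()]
    print(name, "min degree", min(degrees), "max degree", max(degrees))
```

Each dictionary entry plays the role of pNeighbor for the corresponding microenvironment.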

To test the performance of the synchronous MPSO algorithm in solving the extremum of all benchmark functions across the four microenvironment network topologies, the synchronous MPSO algorithm was executed in each microenvironment with n = 20, d = 2, c1 = 2, c2 = 2, and ω = 0.8, where n is the local particle number, d is the dimension of the particle position, c1 and c2 are the learning factors, and ω is the inertia factor. The goal performance for each benchmark function, except for the Schwefel function, was set to 1 × 10⁻⁵, and the goal precision for the Schwefel function was 1 × 10⁻⁴. The maximum number of iterations, maxN, was set to 20,000. The fitness function fun() was set to each of the benchmark functions in turn, and the list of neighboring microenvironment nodes pNeighbor was determined by the microenvironment network topologies shown in Figure 4.

With the four microenvironment networks illustrated in Figure 4, the experiment further tested the time and precision performance of the synchronous MPSO algorithm for solving the extremum of each benchmark function. The time and precision data are listed in Table 11, Table 12, Table 13 and Table 14. In every microenvironment network topology, the precision of the synchronous MPSO algorithm reaches the goal precision for all benchmark functions. Furthermore, comparing the “Total Time” column of Table 4 with the “Max Time” column of Table 11, Table 12, Table 13 and Table 14 for each benchmark function shows that, when the best solution of an optimization problem needs to be selected from several candidate solutions, the synchronous MPSO algorithm consumes less time than the PSO algorithm.

In current research and applications of building intelligence and building energy efficiency, common tasks such as fault diagnosis and optimization, fine-grained environmental control, energy efficiency optimization, occupant localization, and occupant behavior analysis often require reconstructing the temperature field of a building spatial unit from temperature monitoring data at a few locations of interest, which is itself a common optimization task. The PSO algorithm is frequently employed to optimize the temperature field reconstruction model.
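
As a minimal sketch of how such a reconstruction model can be posed as a PSO fitness function, assume a small feedforward network with two inputs, two tanh hidden neurons, and one linear output, so that a particle position is the flat vector of its nine parameters. The input meaning (normalized planar coordinates), the identity output activation, the mean squared error objective, and the five sample readings are all hypothetical choices made for illustration.

```python
import math

N_PARAMS = 9  # 4 hidden weights + 2 hidden biases + 2 output weights + 1 output bias

def predict(params, point):
    """Forward pass of a 2-2-1 network; params is a flat list of N_PARAMS floats."""
    w11, w12, w21, w22, b1, b2, u1, u2, c = params
    px, py = point
    h1 = math.tanh(w11 * px + w12 * py + b1)
    h2 = math.tanh(w21 * px + w22 * py + b2)
    return u1 * h1 + u2 * h2 + c  # linear output, assumed to be the identity

def make_fitness(samples):
    """Build fun(): mean squared error over the monitoring points (smaller is better)."""
    def fun(params):
        return sum((predict(params, p) - t) ** 2 for p, t in samples) / len(samples)
    return fun

# Hypothetical readings at four corners and the center: ((x, y), temperature in °C).
samples = [((0.0, 0.0), 22.1), ((1.0, 0.0), 22.6), ((1.0, 1.0), 23.4),
           ((0.0, 1.0), 22.9), ((0.5, 0.5), 22.8)]
fun = make_fitness(samples)

# A particle whose only nonzero parameter is the output bias predicts a flat field.
flat_field = [0.0] * 8 + [22.76]
print(fun(flat_field))
```

Any PSO variant that minimizes a function of a d-dimensional position can then be run with d = `N_PARAMS` and this `fun` as the fitness function.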

In the following experiment, a feedforward neural network with one hidden layer was used as the temperature field reconstruction model for building spatial units. The model had an input layer with two neurons, a hidden layer with two neurons, and an output layer with one neuron. The input layer was fully connected to the hidden layer, and the hidden layer was fully connected to the output layer. Each neuron in the hidden and output layers had a bias. The activation function of each hidden-layer neuron was the hyperbolic tangent function, f(x) = tanh(x), and the activation function of each output-layer neuron was a linear function. During data collection, only five temperature monitoring points were arranged in the northeast, southeast, southwest, northwest, and center of the building spatial unit. The volume of collected data was therefore small, whereas the spatial span was large, so training the feedforward neural network directly with the backpropagation (BP) algorithm is not advisable. Hence, the model parameters (the weights of all neuron connections) were trained using the PSO algorithm. The performance of the MPSO algorithm in training the temperature field reconstruction model on the microenvironment topology illustrated in

Figure 3 was tested in the following experiment. For comparison, the performance of the PSO algorithm for training the temperature field reconstruction model was also tested in the following experiment. Information such as computation time, precision, and number of iterations of the PSO, Synchronous MPSO, and Asynchronous MPSO algorithms for the temperature field reconstruction model training are given in

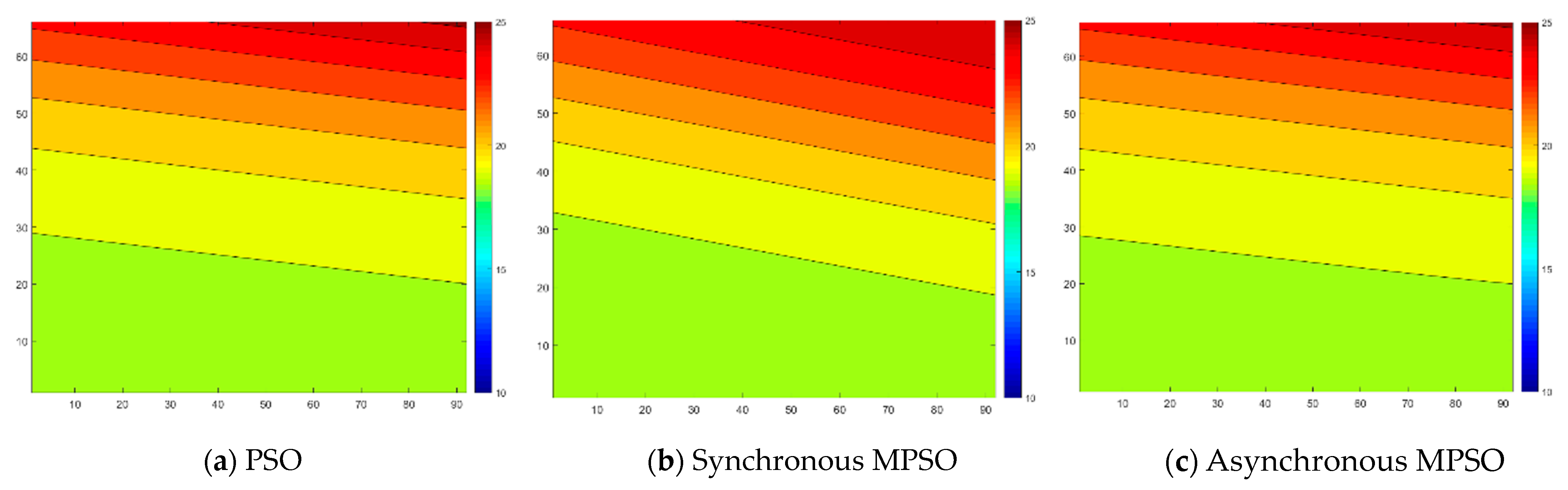

Table 15, and the temperature fields reconstructed by the temperature field reconstruction model trained by the PSO, Synchronous MPSO, and Asynchronous MPSO algorithms are shown in

Figure 5. In the experiment, the goal precision for the PSO, Synchronous MPSO, and Asynchronous MPSO algorithms was set to 1 × 10

−8, and the maximum number of iterations (maxN) was set to 2000. The number of particles in the PSO algorithm was 600, whereas the number of particles in each microenvironment for both the synchronous and asynchronous MPSO algorithms was 50. To collect data for the information in

Table 15, the PSO algorithm independently optimized the temperature field reconstruction model in 12 microenvironments. By contrast, the synchronous and asynchronous MPSO algorithms optimized the model in the same 12 microenvironments.
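The reconstruction model described above is small enough to write out in full. The sketch below shows one way the 2-2-1 network and a PSO fitness function could be expressed, assuming that the two inputs are the coordinates (x, y) of a point in the spatial unit, that the linear output activation is the identity, and that the fitness is the mean squared error over the monitoring samples. The parameter ordering and the names `predict` and `fitness` are hypothetical.

```python
import math

# 4 input-to-hidden weights + 2 hidden biases
# + 2 hidden-to-output weights + 1 output bias
N_PARAMS = 9

def predict(params, x, y):
    """Forward pass of the 2-2-1 network for one point (x, y)."""
    w11, w12, w21, w22, b1, b2, v1, v2, b_out = params
    h1 = math.tanh(w11 * x + w12 * y + b1)   # hidden neuron 1 (tanh)
    h2 = math.tanh(w21 * x + w22 * y + b2)   # hidden neuron 2 (tanh)
    return v1 * h1 + v2 * h2 + b_out         # identity output (assumed)

def fitness(params, samples):
    """Mean squared error over (x, y, temperature) samples."""
    errors = [(predict(params, x, y) - t) ** 2 for x, y, t in samples]
    return sum(errors) / len(errors)
```

With this formulation, each particle is a vector of `N_PARAMS` real numbers, and the optimizer needs only fitness evaluations rather than gradients.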

The “Min” column of the precision part of Table 15 shows that the PSO, synchronous MPSO, and asynchronous MPSO algorithms can all optimize the temperature field reconstruction model effectively. Moreover, comparing the “Min”, “Max”, and “mean” columns of the precision part of Table 15 shows that the precision reached at convergence fluctuates significantly when the PSO algorithm is used, whereas it remains stable when the synchronous or asynchronous MPSO algorithm is used. The similarity of the contour plots of the temperature fields reconstructed by the three methods, shown in Figure 5, also indicates that all three algorithms can optimize the model. Furthermore, the optimization times in Table 15 show that the maximum optimization time of both the synchronous and asynchronous MPSO algorithms was significantly less than the total time taken by the PSO algorithm over its 12 repetitions (the total time being 12 times the average value). The maximum time required by the synchronous MPSO algorithm was also less than that of the asynchronous MPSO algorithm. The synchronous MPSO algorithm is therefore the more suitable of the two for optimizing the temperature field reconstruction model.
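The time comparison rests on simple arithmetic: the PSO runs are counted one after another, so their cost is the number of runs times the mean run time, whereas the MPSO nodes work concurrently, so the cost is the time of the slowest node. A short sketch of that calculation follows; the function names are hypothetical and no measured values from Table 15 are reproduced.

```python
def sequential_total(mean_time, runs=12):
    """Total time of `runs` independent PSO runs executed one after another."""
    return runs * mean_time

def parallel_wall_clock(node_times):
    """Elapsed time of a distributed run: the slowest node determines it."""
    return max(node_times)

def time_ratio(mean_time, node_times, runs=12):
    """How many times longer the sequential runs take than the distributed run."""
    return sequential_total(mean_time, runs) / parallel_wall_clock(node_times)
```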

All experiments were run on a microenvironment network platform with 12 PCs as microenvironment nodes. Each PC had one Intel Core i7 processor and 16 GB of DDR4 memory. The platform was built on a local area network. All PCs were purchased from the same vendor in the same batch to ensure consistent performance. The operating system of each microenvironment was Fedora Linux 38 (x86_64). Each algorithm was implemented in Python 3.12.4. MariaDB 10.5.21 for Linux (x86_64) was deployed to store the local data in each microenvironment, and UDP was used for communication.
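As an illustration of how nodes on such a platform might exchange results over UDP, the sketch below sends a node's current best solution to a list of neighbor addresses using Python's standard `socket` module. The JSON message layout, the field names, and the helper functions are assumptions made for this example; the source does not describe the actual message format.

```python
import json
import socket

def encode_best(node_id, position, value):
    """Serialize a node's best solution as a UTF-8 JSON datagram (assumed format)."""
    message = {"node": node_id, "pos": position, "val": value}
    return json.dumps(message).encode("utf-8")

def decode_best(payload):
    """Parse a datagram produced by encode_best."""
    message = json.loads(payload.decode("utf-8"))
    return message["node"], message["pos"], message["val"]

def send_best(sock, neighbors, node_id, position, value):
    """Send the best solution to every (host, port) address in neighbors."""
    data = encode_best(node_id, position, value)
    for address in neighbors:
        sock.sendto(data, address)

def demo_loopback():
    """Send one message to a receiver on the local machine and return it decoded."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))      # let the OS choose a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        send_best(sender, [receiver.getsockname()], 3, [0.5, -1.25], 1e-6)
        payload, _ = receiver.recvfrom(4096)
        return decode_best(payload)
    finally:
        sender.close()
        receiver.close()
```

Because UDP does not guarantee delivery or ordering, a receiver built this way should tolerate lost or stale messages, for example by keeping only the best value seen so far from each neighbor.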