Data-Driven Quantitative Performance Evaluation of Construction Supervisors

Abstract

1. Introduction

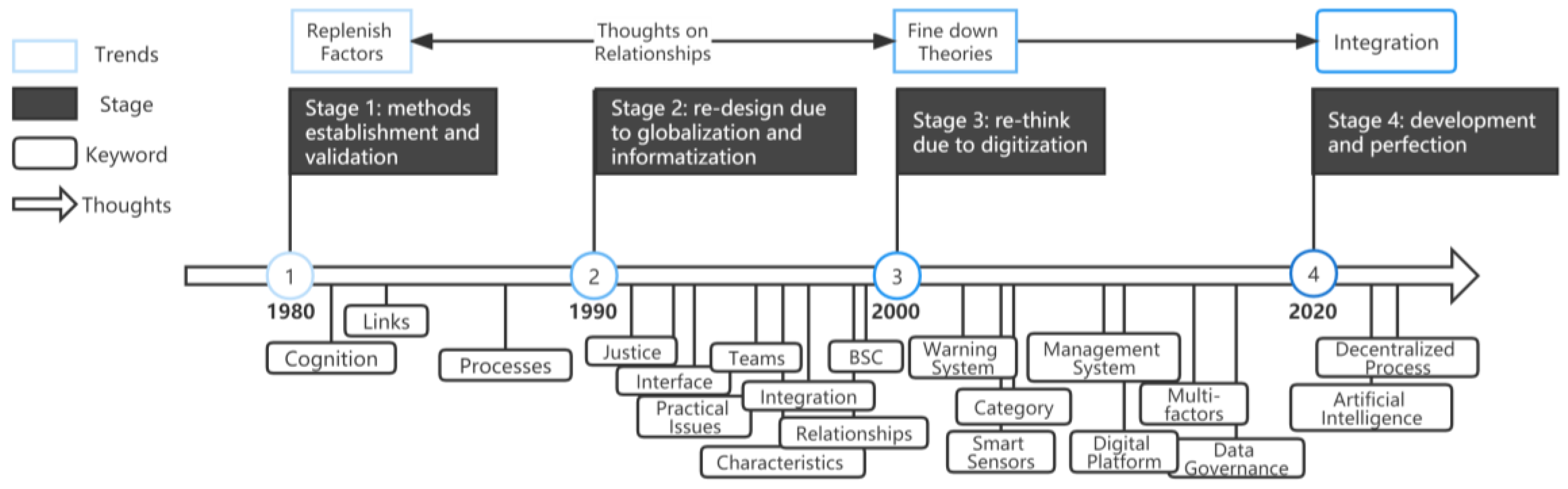

2. Literature Review

2.1. Typical Weighting Determination Methods in Construction

2.1.1. Conventional Weighting Determination Methods

2.1.2. Modern Weighting Determination Methods

1. The Analytic Hierarchy Process

2. Fuzzy Comprehensive Evaluation

3. Data Envelopment Analysis

2.2. Typical Methods for Performance Evaluation of Construction Workers

1. Critical Incident Method

2. Graphic Rating Scale

3. Behavior Checklist

4. Management by Objectives

5. 360-degree Evaluation Method

6. Key Performance Indicator

7. Balanced Score Card

2.3. Summary

2.3.1. Advantages of Emerging Information Technologies

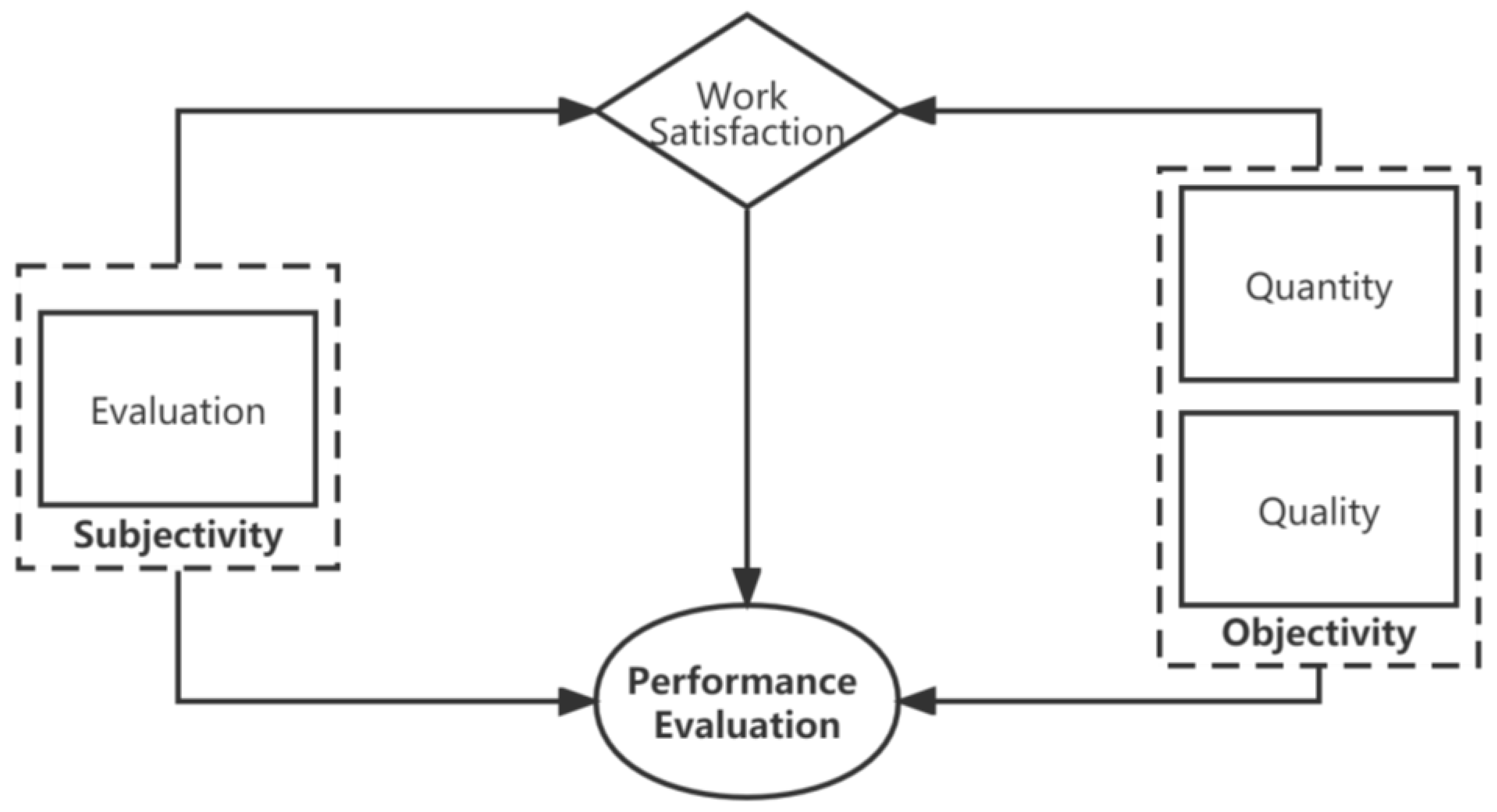

2.3.2. Performance Quantification

2.3.3. Necessity and Significance

3. Methodology

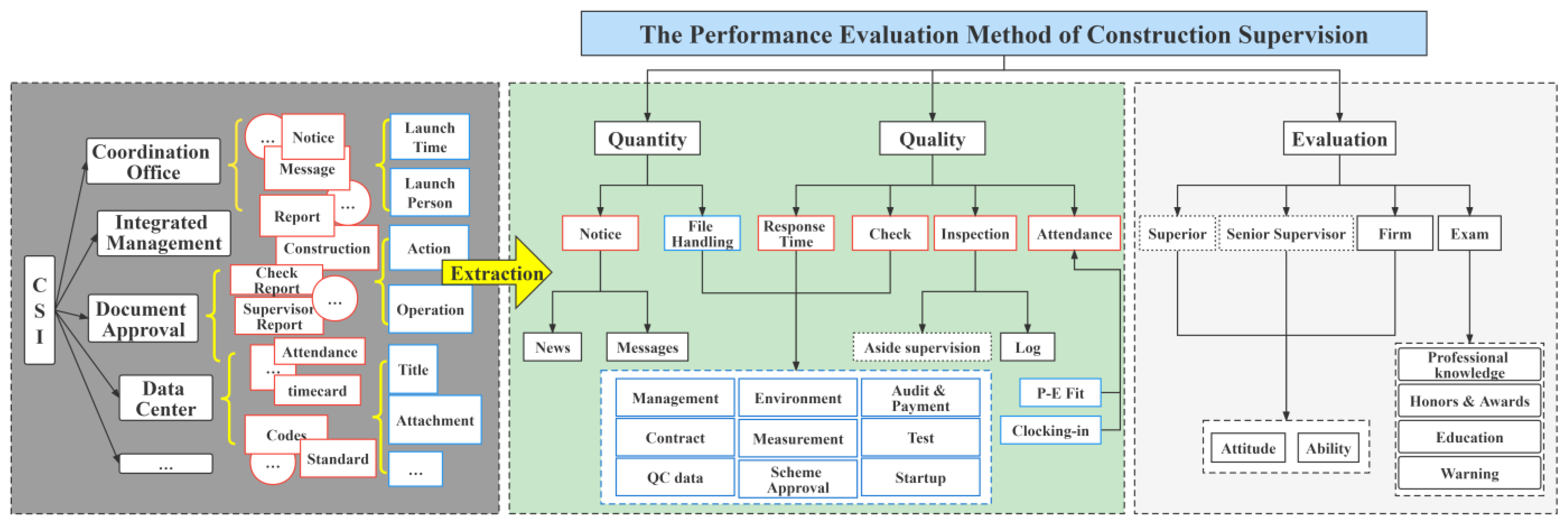

3.1. Index Extraction

3.2. Weighting

3.2.1. Weighting Based on AHP Approach

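$$CI = \frac{\lambda_{\max} - n}{n - 1}$$

where:

- CI is the consistency index;

- $\lambda_{\max}$ is the principal eigenvalue;

- n is the matrix size.

$$CR = \frac{CI}{RI}$$

where:

- CI is the consistency index, which measures the deviation from (or degree of) consistency;

- RI is the random index;

- CR is the consistency ratio.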
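The AHP weighting and consistency check can be illustrated with a short, dependency-free Python sketch. It approximates the principal eigenvector of a pairwise comparison matrix by power iteration (one common way to obtain AHP weights, assumed here), then applies the standard formulas CI = (λmax − n)/(n − 1) and CR = CI/RI. The RI values are copied from the paper's random-index table (sizes 3 to 10 only), the function names `ahp_weights` and `consistency` are hypothetical, and the acceptance rule CR < 0.1 is the conventional AHP threshold rather than one stated in this section. The example matrix is the Quality-level matrix from the case study.

```python
# Minimal AHP sketch (assumption: power iteration for the principal eigenvector).
from typing import List, Tuple

Matrix = List[List[float]]

# Random index (RI) by matrix size n, copied from the paper's RI table (n = 3..10 only).
RANDOM_INDEX = {3: 0.52, 4: 0.89, 5: 1.12, 6: 1.26, 7: 1.36, 8: 1.41, 9: 1.46, 10: 1.49}


def ahp_weights(a: Matrix, max_iter: int = 1000, tol: float = 1e-12) -> List[float]:
    """Approximate the principal eigenvector of a positive matrix, normalized to sum to 1."""
    n = len(a)
    w = [1.0 / n] * n
    for _ in range(max_iter):
        v = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        v = [x / total for x in v]  # renormalize each iteration
        converged = max(abs(x - y) for x, y in zip(v, w)) < tol
        w = v
        if converged:
            break
    return w


def consistency(a: Matrix, w: List[float]) -> Tuple[float, float, float]:
    """Return (lambda_max, CI, CR) for matrix a and its weight vector w."""
    n = len(a)
    aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    if n < 3:  # 1x1 and 2x2 reciprocal matrices are always consistent
        return lambda_max, 0.0, 0.0
    ci = (lambda_max - n) / (n - 1)
    cr = ci / RANDOM_INDEX[n]  # KeyError for n > 10: extend the table if needed
    return lambda_max, ci, cr


# Quality-level matrix from the case study (0.833 in the table is 5/6 rounded).
A = [
    [1.0, 5 / 6, 1.0, 5 / 6],  # Attendance
    [1.2, 1.0, 1.2, 1.0],      # Response Time
    [1.0, 5 / 6, 1.0, 5 / 6],  # Inspection
    [1.2, 1.0, 1.2, 1.0],      # Check
]
w = ahp_weights(A)
lambda_max, ci, cr = consistency(A, w)
print([round(x, 5) for x in w])  # [0.22727, 0.27273, 0.22727, 0.27273]
print(f"{lambda_max:.3f}", "pass" if cr < 0.1 else "fail")  # 4.000 pass
```

Because this matrix is perfectly consistent (every row is a multiple of the first), power iteration converges after two steps and reproduces the 22.727% / 27.273% weights, λmax = 4.000 and CI = CR = 0 reported for the case study.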

3.2.2. Comparison of Other Approaches

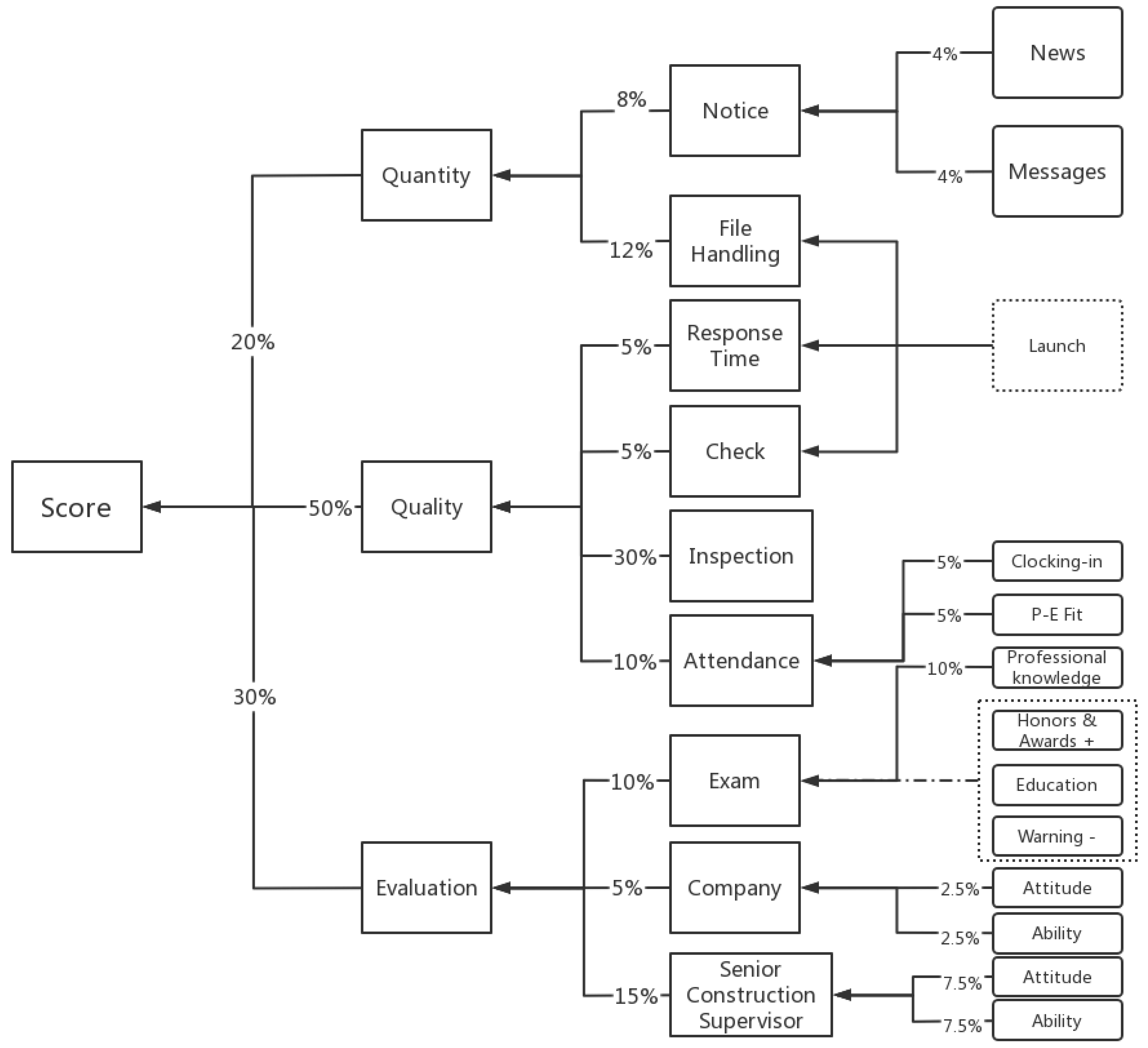

3.3. Index Calculation

The mark of the work quantity of the senior construction supervisor is determined by the following terms:

- the default mark of “Notice”;

- the default mark of “File Handling”;

- the mark of deduction item i of “Notice”;

- the mark of deduction item j of “File Handling”.
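The exact quantity equation is not shown in this section, but its terms suggest a "default mark minus deductions" structure. The Python sketch below assumes that form: each of the two Lv2 items scores its default mark minus the sum of its deduction marks, floored at zero, and the work-quantity mark is their sum. The function names and the zero floor are assumptions for illustration, not the authors' formula; the default marks 8 and 12 merely echo the Lv2 weightings in Appendix A.

```python
# Hypothetical sketch: work-quantity mark = sum over "Notice" and "File Handling"
# of (default mark - sum of deduction marks), each part floored at zero.
from typing import Sequence


def item_mark(default_mark: float, deductions: Sequence[float]) -> float:
    """Default mark minus all deductions, never below zero (assumed rule)."""
    return max(0.0, float(default_mark - sum(deductions)))


def quantity_mark(notice_default: float, notice_deductions: Sequence[float],
                  file_default: float, file_deductions: Sequence[float]) -> float:
    """Work-quantity mark built from the "Notice" and "File Handling" items."""
    return (item_mark(notice_default, notice_deductions)
            + item_mark(file_default, file_deductions))


# Illustrative values only.
print(quantity_mark(8, [1, 1], 12, [2]))  # 16.0
```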

The mark of the work quality of the senior construction supervisor is determined by the following terms:

- the default mark of Response Time;

- the default mark of Check;

- the default mark of Inspection;

- the default mark of Attendance;

- the mark of the deduction item of Response Time;

- the mark of the deduction item of Check;

- the mark of the deduction item of Inspection;

- the mark of the deduction item of Attendance.
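The quality mark follows the same pattern over four items. The Python sketch below assumes each item scores its default mark minus its deductions (floored at zero) and that the four item marks are summed. The helper `response_time_deductions` encodes one reading of the Appendix A rule "one point is deducted if over 12 h" for day-to-day tasks; the names, the example default marks (5, 5, 30, 10, echoing the Appendix A weightings) and the processing times are hypothetical.

```python
# Hypothetical sketch of the work-quality mark: four Lv2 items, each scored as
# default mark minus deductions (floored at zero), then summed.
from typing import Dict, Iterable, List, Sequence

QUALITY_ITEMS = ("Response Time", "Check", "Inspection", "Attendance")


def response_time_deductions(hours: Iterable[float], limit_h: float = 12.0) -> List[float]:
    """One point per day-to-day task processed in more than limit_h hours (assumed reading)."""
    return [1.0 for h in hours if h > limit_h]


def quality_mark(defaults: Dict[str, float], deductions: Dict[str, Sequence[float]]) -> float:
    """Sum of the four item marks; items without deductions keep their default mark."""
    total = 0.0
    for item in QUALITY_ITEMS:
        total += max(0.0, defaults[item] - sum(deductions.get(item, ())))
    return total


defaults = {"Response Time": 5.0, "Check": 5.0, "Inspection": 30.0, "Attendance": 10.0}
deductions = {
    "Response Time": response_time_deductions([3.5, 14.0, 20.0]),  # two tasks over 12 h
    "Attendance": [5.0],
}
print(quality_mark(defaults, deductions))  # 43.0
```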

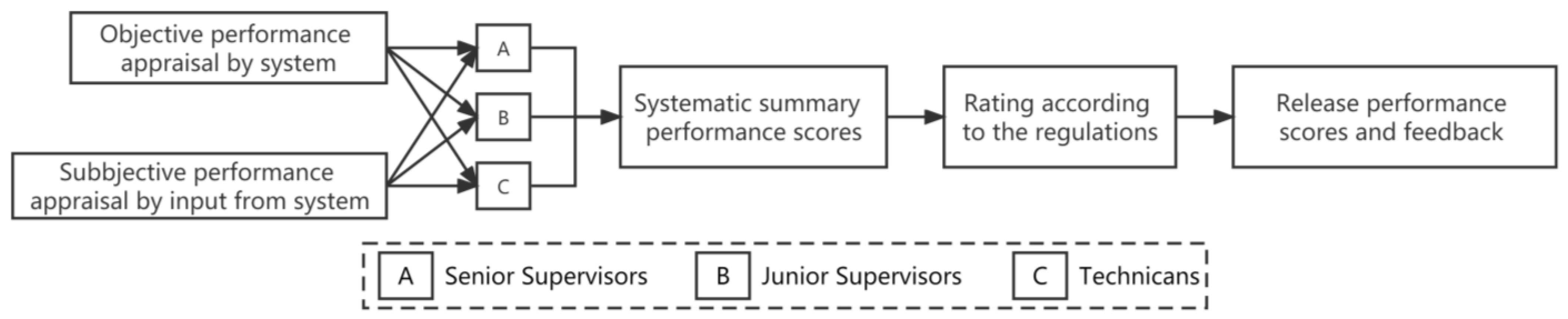

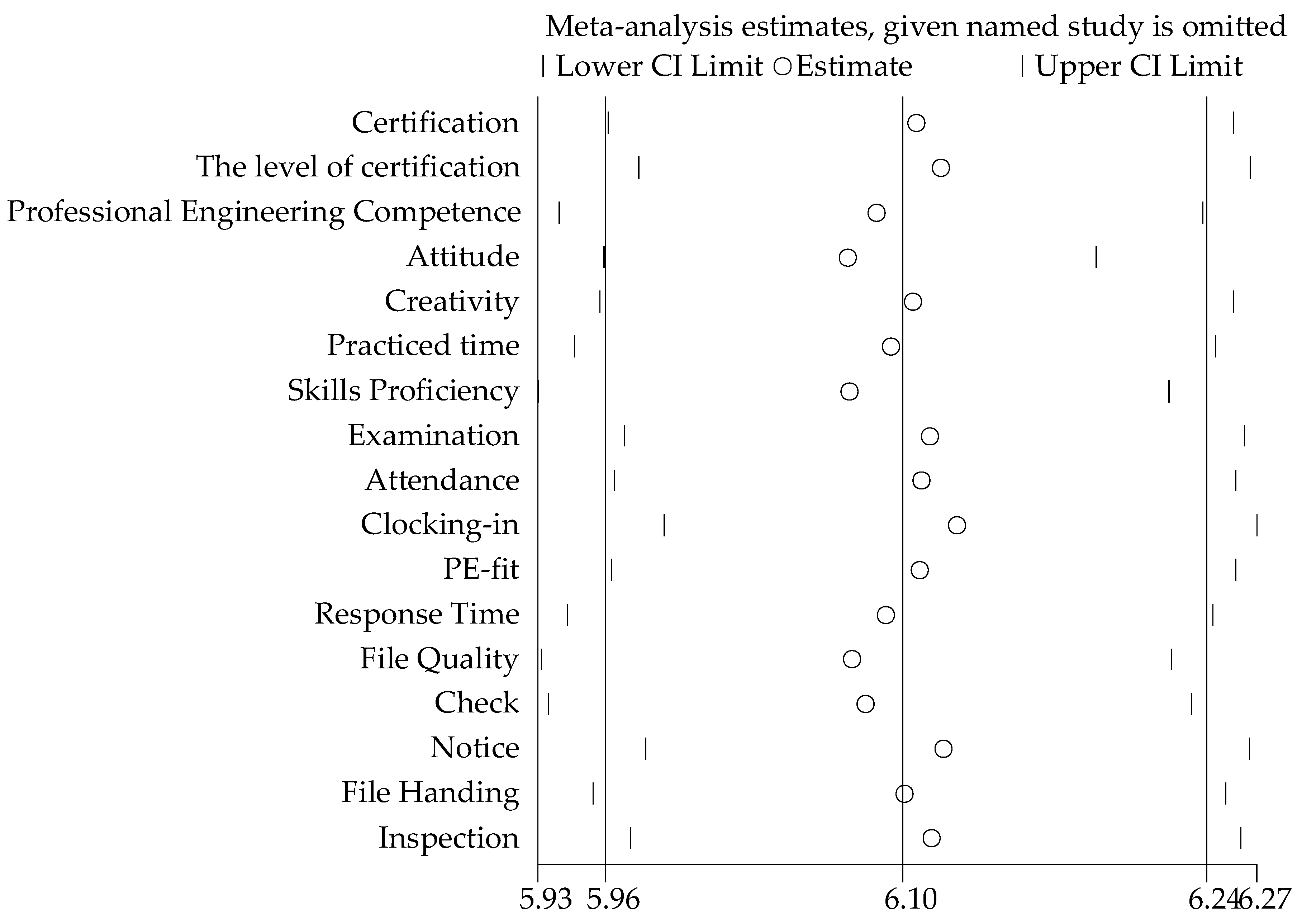

3.4. Case Analysis and Verification

4. Case Study

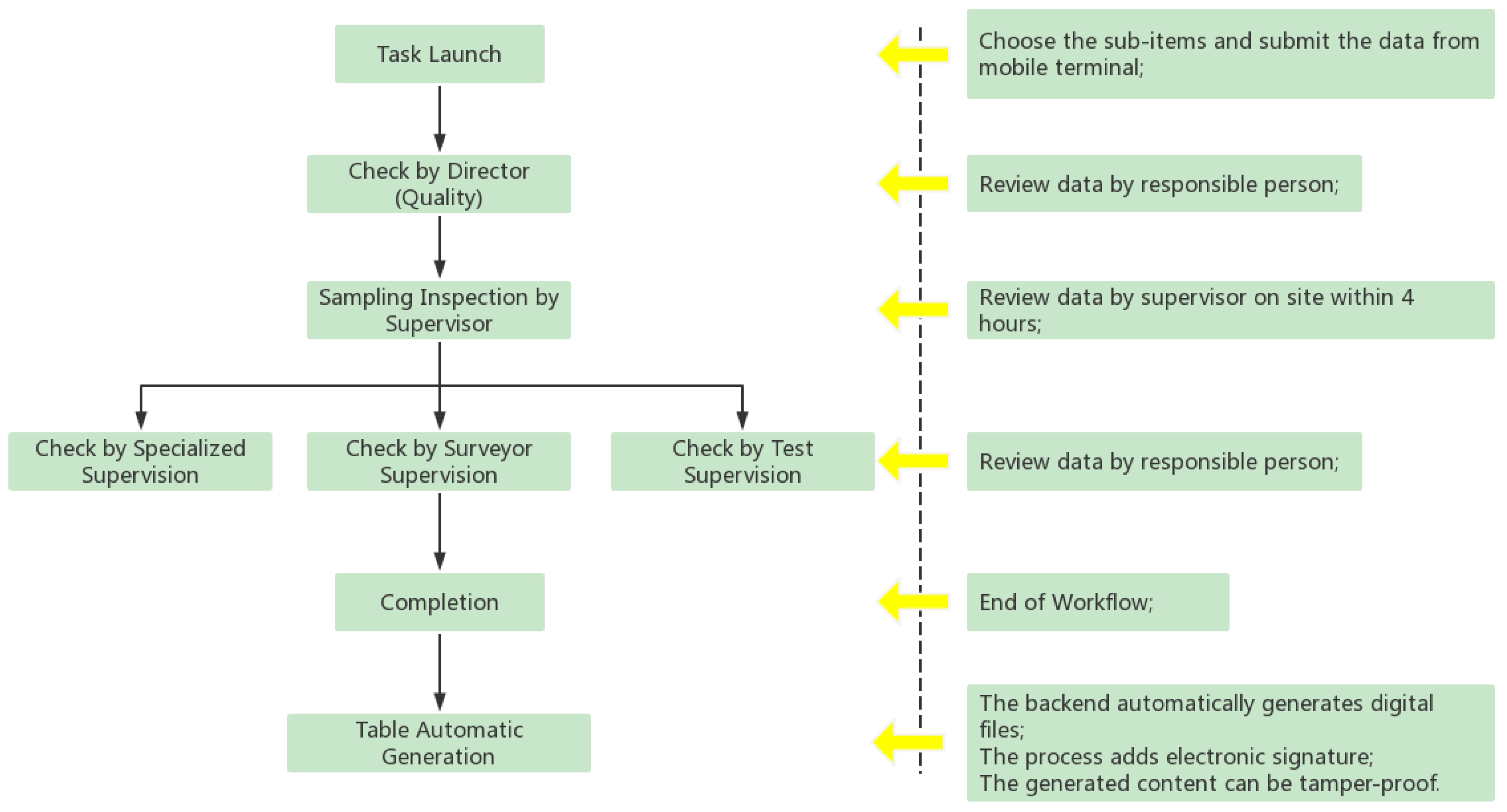

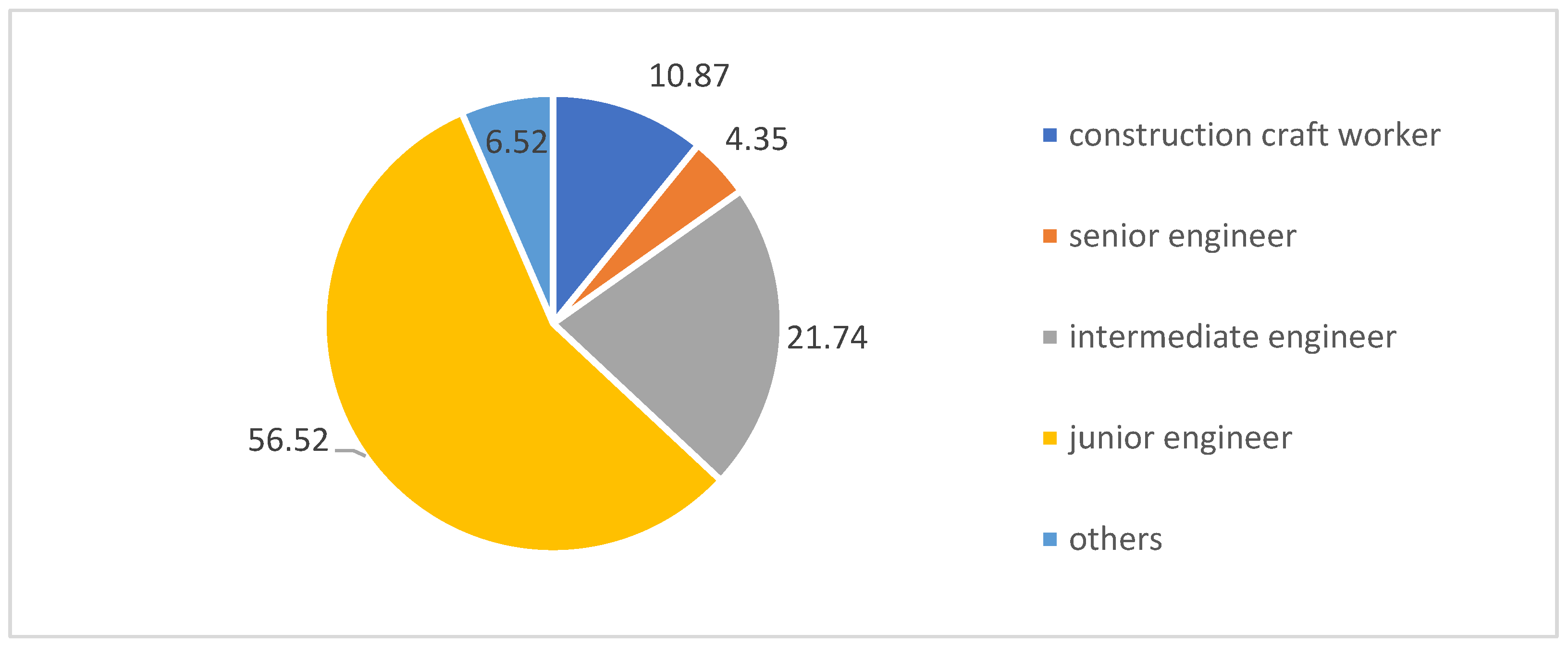

4.1. Background

4.2. Index Extraction on Case Study

4.3. Weighting on Case Study

4.4. Index Calculation on Case Study

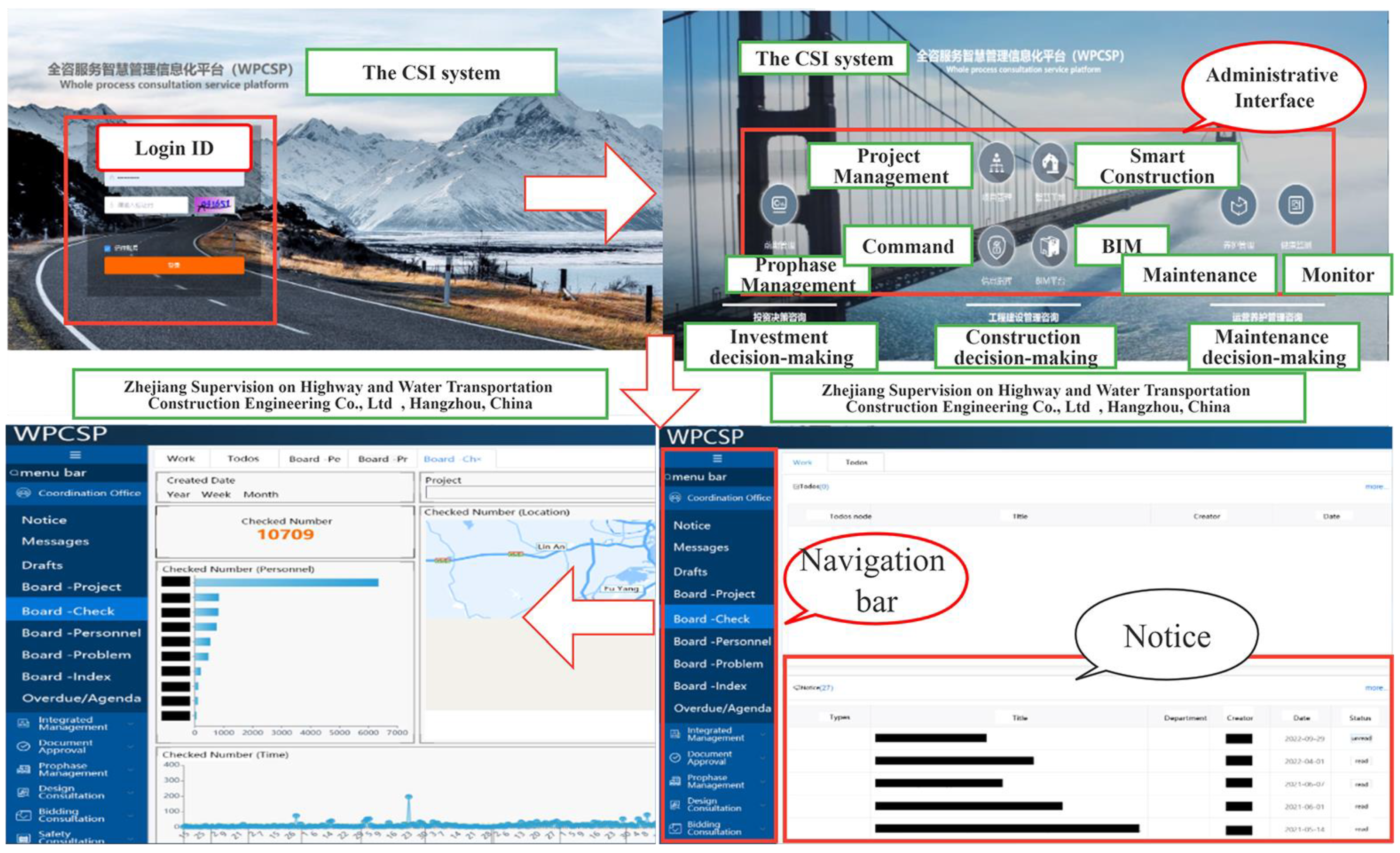

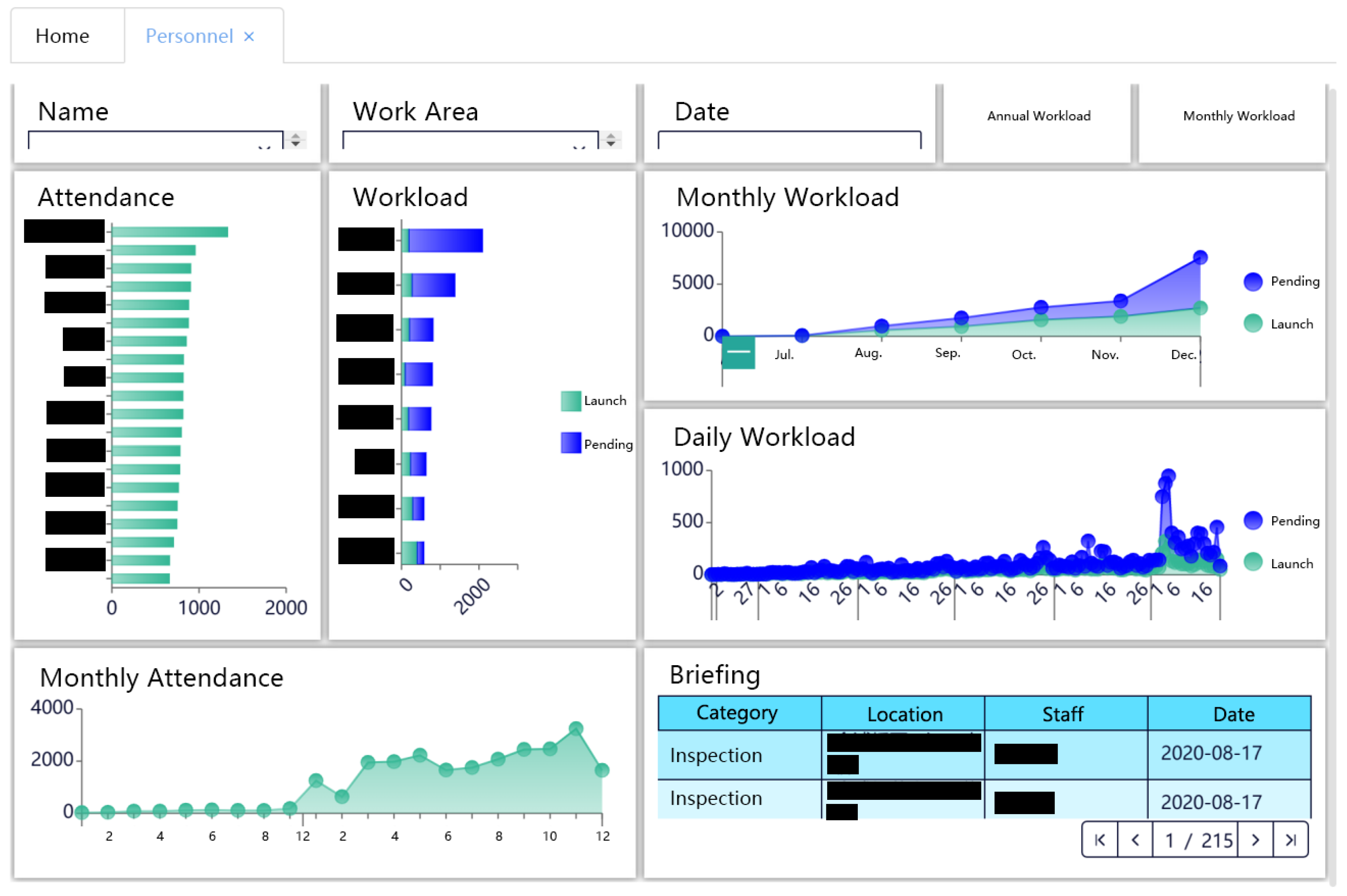

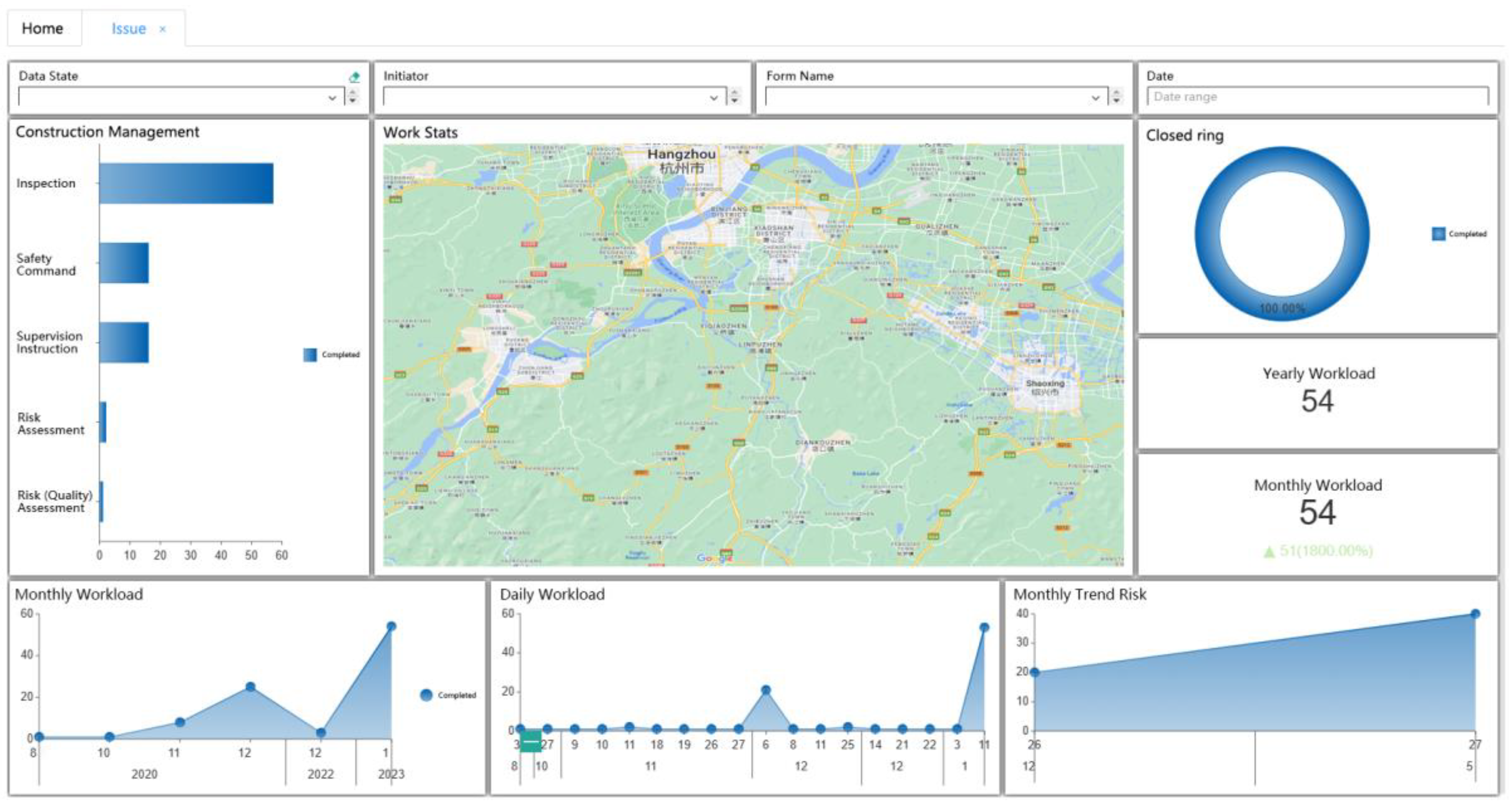

- Considering the fields and key values in the database and the characteristics of the project, the indexes suitable for the performance evaluation of supervisors were supplemented and organized through literature research, site surveys, and expert discussions, and the evaluation system was finally formed. The performance evaluation baseline was based on national engineering guidelines and standards as well as local government policy. For the evaluation indexes, we considered suggestions from construction craft workers, construction supervisors, superintendents, and experts.

- The objective evaluation index modules (work quantity and work quality) and the subjective evaluation index module (work evaluation) were used to further divide the logical relationships in the data. After the evaluation procedure was carried out in discussions and meetings with experts, development of the automatic calculation module began, based on the indexes, the weighting, the daily workflow, and the data extracted from digital files.

- Finally, the module was applied to the CSI system for scoring and grading. Verification on the project showed that the module operated properly: workload statistics were calculated automatically, and the statistical time range was selectable.
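The automatic workload statistics with a selectable time range can be sketched as a filter-and-count over task records. The record layout (`TaskRecord` with supervisor, category and handling date) and the function `workload` are hypothetical; the actual CSI system schema is not described here. The categories mirror the Lv3 items listed for File Handling in Appendix A.

```python
# Hypothetical sketch: workload statistics over a user-selected date range.
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Iterable


@dataclass(frozen=True)
class TaskRecord:
    """Hypothetical task record exported from the CSI system."""
    supervisor: str
    category: str      # e.g. "Management", "Measurement" (Lv3 items in Appendix A)
    handled_on: date


def workload(records: Iterable[TaskRecord], supervisor: str,
             start: date, end: date) -> Counter:
    """Count tasks handled by one supervisor per category, with start <= date <= end."""
    return Counter(
        r.category for r in records
        if r.supervisor == supervisor and start <= r.handled_on <= end
    )


records = [
    TaskRecord("S01", "Management", date(2022, 3, 1)),
    TaskRecord("S01", "Measurement", date(2022, 3, 15)),
    TaskRecord("S01", "Management", date(2022, 9, 2)),
    TaskRecord("S02", "Test", date(2022, 3, 3)),
]
print(workload(records, "S01", date(2022, 1, 1), date(2022, 6, 30)))
```

Passing a different `start`/`end` pair reproduces the selectable statistical window; for example, a six-month window matches the File Handling period in Appendix A.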

4.5. Application and Feedback

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| No. | Weighting | Lv.1 | Weighting | Lv.2 | Content | Period (Month) | Weighting | Lv.3 | Evaluation Item | Label | Technician (Object) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 20% | Quantity | 8% | Notice | The number of notifications read: Monthly (Read/Total) | 1 | 4.00% | News | Read pushed news from the CSI system by APP or PC | non-daily | >90% |
| | | | | | | | 4.00% | Messages | Read pushed messages from the CSI system by APP or PC | non-daily | |
| | | | 12% | File Handling | Number of tasks processed and launched | 6 | 12.00% | Management | Acceptance records, etc. | day-to-day | >1 |
| | | | | | | | | Environment | Inspection records, etc. | day-to-day | |
| | | | | | | | | Audit and Payment | | day-to-day | |
| | | | | | | | | Contract | | non-daily | |
| | | | | | | | | Measurement | Approval form, etc. | day-to-day | |
| | | | | | | | | Test | | day-to-day | |
| | | | | | | | | QC data | Review signature, etc. | day-to-day | |
| | | | | | | | | Scheme Approval | | non-daily | |
| | | | | | | | | Startup | | non-daily | |
| 2 | 50% | Quality | 5% | Response Time | The time of processing and handling tasks shall be in accordance with the contract | 6 | 5.00% | Management | Acceptance records, etc. | day-to-day | 1. Tasks labeled day-to-day: processing time < 24 working hours (one point is deducted if more than 12 h); 2. Tasks labeled non-daily: in accordance with the contract |
| | | | | | | | | Environment | Inspection records, etc. | day-to-day | |
| | | | | | | | | Audit and Payment | | day-to-day | |
| | | | | | | | | Contract | | non-daily | |
| | | | | | | | | Measurement | Approval form, etc. | day-to-day | |
| | | | | | | | | Test | | day-to-day | |
| | | | | | | | | QC data | Review signature, etc. | day-to-day | |
| | | | | | | | | Scheme Approval | | non-daily | |
| | | | | | | | | Startup | | non-daily | |
| | | | 5% | Check | Number of unfinished tasks: (0, full mark; <10 cases, one point is deducted for every 10 cases; >30 cases, 0) | 6 | 5.00% | Management | Acceptance records, etc. | day-to-day | Special supervision inspections qualified to date with no accidents: full marks. Tasks completed and qualified without accidents: full marks. Number of unfinished cases: (=0, full mark; <10 cases, one point is deducted for every 10 cases; >30 cases, 0) |
| | | | | | | | | Environment | Inspection records, etc. | day-to-day | |
| | | | | | | | | Audit and Payment | | day-to-day | |
| | | | | | | | | Contract | | non-daily | |
| | | | | | | | | Measurement | Approval form, etc. | day-to-day | |
| | | | | | | | | Test | | day-to-day | |
| | | | | | | | | QC data | Review signature, etc. | day-to-day | |
| | | | | | | | | Scheme Approval | | non-daily | |
| | | | | | | | | Startup | | non-daily | |
| | | | 30% | Inspection | Patrol times and logging * Side station | 6 | 10.00% | Log | Number of task flows that participate in patrol initiation | day-to-day | Inspect the construction site regularly or irregularly; not less than once a day for major projects under construction |
| | | | | | | | 20.00% | Aside supervision | The number of side stations is subject to actual occurrence | | |
| | | | 10% | Attendance | Online clocking of attendance statistics | 6 | 5.00% | Clocking-in | Perform duties according to the regulations, and the monthly on-site time shall meet the contract requirements | day-to-day | In accordance with the contract |
| | | | | | | | 5.00% | PE-fit | The clocking time should match the clocking place, and the reason should be explained if outside the e-fence | | Upload the attendance location; if outside the e-fence, or in case of absence, mark 0 on the day. The reasons should be explained when outside the fence. If the reasons do not meet the company’s management regulations, this item will be marked as 0 on the day |
| 3 | 30% | Evaluation | 5% | Firm | Work attitude and professional ability are scored by the company; default full mark if no feedback | 6 | 2.50% | Attitude | Energetic and responsible | non-daily | According to the score |
| | | | | | | | 2.50% | Ability | Refer to the assessment system issued by the company | | |
| | | | 15% | Superior | The resident principal of the project scores the work attitude and business cooperation; default full mark if no feedback | 6 | 7.50% | Attitude | Energetic and responsible | | According to the score |
| | | | | | | | 7.50% | Ability | Refer to the assessment system issued by the company | | |
| | | | 10% | Examination | Professional investigation and reward and punishment | 12 | 10.00% | Professional Knowledge | Professional knowledge assessment | | Percentage system, 10 points per grade; less than 60 points does not qualify |
| | | | | | | | According to the scoring standard | Honors and Awards | Awarded by project owner or superior | | Bonus (5 points per honor) |
| | | | | | | | | Education | Organize special meetings, education and training | | If one does not attend, they will be penalized (score = day attendance score). |
| | | | | | | | | Warning | Be criticized by the owner/superior, including behaviors, performance of the contract, etc. | | Deduction (5 points per reported criticism; 0 if illegal act) |


| Methods | Advantages | Disadvantages | Scenarios |
|---|---|---|---|
| Subjective experience method | High efficiency and low cost | Low credibility due to excessive subjectivity | Assessors set the index weights from their own experience; suitable when the assessors are familiar with and understand the object being evaluated. |
| Questionnaire | Uniform and generalizable; easy to quantify | Quality cannot be guaranteed | A questionnaire designed for the assessment is used to determine the index system and analyze the weighting. |
| In-depth interview | Highly flexible; yields in-depth information | Demanding for the interviewer | Face-to-face conversations with relevant individuals to understand their working modes, the nature of their work, and other aspects, such as the corresponding evaluation index system and weighting, for reference. |
| Expert investigation method | Highly representative, scientific, reliable, and authoritative | High cost; difficult to organize | Experts are invited to study the index system. Each expert sets the index weights independently, and the average of the experts' weights for each index is taken as its final weight. |
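The averaging step of the expert investigation method can be sketched in a few lines of Python. Each expert supplies a weight per index; the final weight of an index is the mean across experts. The final renormalization (so the weights sum exactly to 1 even if an expert's weights do not) is an added safeguard, not part of the method as described; the expert judgments below are hypothetical.

```python
# Sketch of the expert investigation method: each expert weights the indexes
# independently; the final weight of an index is the mean across experts.
from statistics import mean
from typing import Dict, List


def expert_average_weights(expert_weights: List[Dict[str, float]]) -> Dict[str, float]:
    indexes = expert_weights[0].keys()
    avg = {k: mean(e[k] for e in expert_weights) for k in indexes}
    total = sum(avg.values())
    # Safeguard (assumption): renormalize in case an expert's weights do not sum to 1.
    return {k: v / total for k, v in avg.items()}


experts = [  # hypothetical judgments for the three Lv1 indexes
    {"Quantity": 0.30, "Quality": 0.40, "Evaluation": 0.30},
    {"Quantity": 0.20, "Quality": 0.50, "Evaluation": 0.30},
    {"Quantity": 0.40, "Quality": 0.30, "Evaluation": 0.30},
]
print({k: round(v, 3) for k, v in expert_average_weights(experts).items()})
# {'Quantity': 0.3, 'Quality': 0.4, 'Evaluation': 0.3}
```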

| Methods | Points of Focus | Degree of Automation | Data Size |
|---|---|---|---|
| Critical Incident Method | Critical events | Manual | Low |
| Graphic Rating Scale | Factors | Manual | Low |
| Behavior Checklist | Objects | Manual | Low |
| Management by Objectives | Goals | Manual or semi-automatic | Low |
| Key Performance Indicator | Key points | Manual or semi-automatic | Low |
| 360-degree Evaluation Method | Multiple dimensions | Manual or semi-automatic | Medium |
| Balanced Score Card | Internal process | Manual or semi-automatic | Medium |

| Scale Value | Meaning of the Relative Importance of Factor i Compared with Factor j |
|---|---|
| 1 | Factor i is strongly less important than factor j |
| 3 | Factor i is less important than factor j |
| 4 | Factor i is as important as factor j |
| 5 | Factor i is more important than factor j |
| 7 | Factor i is far more important than factor j |
| 2, 6 | The importance of factor i relative to factor j lies between the two adjacent scale values above |

| Matrix size | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RI | 0.52 | 0.89 | 1.12 | 1.26 | 1.36 | 1.41 | 1.46 | 1.49 | 1.52 | 1.54 | 1.56 | 1.58 | 1.59 | 1.5943 |

| Matrix size | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | 28 | 29 | 30 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RI | 1.6064 | 1.6133 | 1.6207 | 1.6292 | 1.6358 | 1.6403 | 1.6462 | 1.6497 | 1.6556 | 1.6587 | 1.6631 | 1.6670 | 1.6693 | 1.6724 |

| Item | Corrected Item-Total Correlation (CITC) | Alpha If Item Deleted | Cronbach’s Alpha |
|---|---|---|---|
| Quantity | 0.677 | 0.837 | 0.845 |
| Quality | 0.767 | 0.747 | |
| Evaluation | 0.719 | 0.777 | |
| Standardized Cronbach’s α: 0.854 | | | |
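The reliability statistics in this table (CITC, alpha if item deleted, raw and standardized Cronbach's α) follow standard formulas, sketched below in plain Python: raw α = k/(k − 1) · (1 − Σσᵢ²/σ²ₓ) with sample variances, CITC as the Pearson correlation between an item and the sum of the other items, and standardized α = k·r̄/(1 + (k − 1)·r̄) with r̄ the mean inter-item correlation. The response matrix is hypothetical and is not the authors' survey data.

```python
# Sketch: reliability statistics of the kind reported in the table above.
from statistics import mean, variance
from typing import List

Rows = List[List[float]]  # one row per respondent, one column per item


def cronbach_alpha(rows: Rows) -> float:
    """Raw alpha: k/(k-1) * (1 - sum of item variances / variance of totals)."""
    k = len(rows[0])
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_if_deleted(rows: Rows, j: int) -> float:
    """Alpha recomputed without item j."""
    return cronbach_alpha([r[:j] + r[j + 1:] for r in rows])


def pearson(x: List[float], y: List[float]) -> float:
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def citc(rows: Rows, j: int) -> float:
    """Corrected item-total correlation: item j vs. the sum of the other items."""
    return pearson([r[j] for r in rows], [sum(r) - r[j] for r in rows])


def standardized_alpha(rows: Rows) -> float:
    """k * r_bar / (1 + (k - 1) * r_bar), where r_bar is the mean inter-item correlation."""
    k = len(rows[0])
    cols = [[r[j] for r in rows] for j in range(k)]
    r_bar = mean(pearson(cols[i], cols[j]) for i in range(k) for j in range(i + 1, k))
    return k * r_bar / (1 + (k - 1) * r_bar)


rows = [[2, 3, 3], [4, 4, 5], [3, 5, 4], [5, 4, 5]]  # hypothetical Likert responses
print(round(cronbach_alpha(rows), 3))  # 0.795
```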

| Item | Corrected Item-Total Correlation (CITC) | Alpha If Item Deleted | Cronbach’s Alpha |
|---|---|---|---|
| Certification/Quantity | 0.791 | 0.969 | 0.971 |
| Certification/Quality | 0.827 | 0.969 | |
| Certification/Evaluation | 0.575 | 0.971 | |
| The level of certification/Quantity | 0.863 | 0.968 | |
| The level of certification/Quality | 0.885 | 0.968 | |
| The level of certification/Evaluation | 0.517 | 0.971 | |
| Professional Engineering Competence/Quantity | 0.901 | 0.968 | |
| Professional Engineering Competence/Quality | 0.790 | 0.969 | |
| Professional Engineering Competence/Evaluation | 0.546 | 0.971 | |
| Attitude/Quantity | 0.749 | 0.969 | |
| Attitude/Quality | 0.637 | 0.970 | |
| Attitude/Evaluation | 0.876 | 0.969 | |
| Creativity/Quantity | 0.797 | 0.969 | |
| Creativity/Quality | 0.833 | 0.969 | |
| Creativity/Evaluation | 0.831 | 0.969 | |
| Practiced time/Quantity | 0.737 | 0.970 | |
| Practiced time/Quality | 0.690 | 0.970 | |
| Practiced time/Evaluation | 0.706 | 0.970 | |
| Skills Proficiency/Quantity | 0.810 | 0.969 | |
| Skills Proficiency/Quality | 0.624 | 0.970 | |
| Skills Proficiency/Evaluation | 0.621 | 0.970 | |
| Examination/Quantity | 0.847 | 0.969 | |
| Examination/Quality | 0.878 | 0.968 | |
| Examination/Evaluation | 0.815 | 0.969 | |
| Standardized Cronbach’s α: 0.972 | | | |

| | AHP | Fuzzy Comprehensive Evaluation | DEA |
|---|---|---|---|
| Advantages | Applicable to complex decision-making problems | | |
| Disadvantages | | | |

| No. | Index | No. | Index |
|---|---|---|---|
| ① | Certification | ⑥ | The level of the holding certificate |
| ② | Professional engineering competence | ⑦ | Attitude |
| ③ | Creativity | ⑧ | Motivation |
| ④ | Practiced time | ⑨ | Qualification |
| ⑤ | Skills proficiency | ⑩ | Examination |

| No. | Preliminary Evaluation Index | No. | Adjustment | Supplement Item |
|---|---|---|---|---|
| ① | Certification | ① | Deleted | Notice, File Handling, Messages, News, Log, Aside supervision |
| ② | Professional engineering competence | ⑥ | | |
| ③ | Creativity | ③ | Merged into index Evaluation by significant others, then divided into Attitude and Ability | |
| ④ | Practiced time | ④ | | |
| ⑤ | Skills proficiency | ⑤ | | |
| ⑥ | The level of the holding certificate | ⑦ | | |
| ⑦ | Attitude | ⑧ | | |
| ⑧ | Motivation | ② | Merged into index Quality, then divided into Inspection, Attendance, and Check | |
| ⑨ | Qualification | ⑨ | | |
| ⑩ | Examination | ⑩ | Categorized into index Evaluation | |

| Lv 1 Index | Lv 2 Index | Lv 3 Index | |
|---|---|---|---|
| Quantity | Notice | Messages | News |
| | File Handling | The content is based on the different responsibilities of construction supervisors. | |
| Quality | Inspection | Log | Aside supervision |
| | Attendance | Clocking-in | PE-fit |
| | Response Time | The content is based on the different responsibilities of construction supervisors. | |
| | Check | | |
| Evaluation | Superior | Attitude | Ability |
| | Company | Senior Construction Supervisor | |
| | Examination | Based on the firm regulations | |

| Lv 1 Index | Weight | Lv 2 Index | Weight |
|---|---|---|---|
| Quantity | 0.298 | Notice | 0.134 |
| | | File Handling | 0.164 |
| Quality | 0.375 | Attendance | 0.086 |
| | | Response Time | 0.1015 |
| | | Inspection | 0.086 |
| | | Check | 0.1015 |
| Evaluation | 0.327 | Superior | 0.109 |
| | | Company/Senior Construction Supervisor | 0.109 |
| | | Examination | 0.109 |
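In the weight table above, each Lv2 weight is a global weight: the Lv2 weights under each Lv1 index add up to that index's weight (0.134 + 0.164 = 0.298, and so on), and the Lv1 weights sum to 1. The Python sketch below checks that property and computes a composite score as the weighted sum of Lv2 scores. It assumes each Lv2 score has already been normalized to [0, 1]; that scale, the function names and the example scores are hypothetical.

```python
# Sketch: composite score from the global Lv2 weights in the table above.
from typing import Dict

HIERARCHY: Dict[str, Dict[str, float]] = {
    "Quantity": {"Notice": 0.134, "File Handling": 0.164},
    "Quality": {"Attendance": 0.086, "Response Time": 0.1015,
                "Inspection": 0.086, "Check": 0.1015},
    "Evaluation": {"Superior": 0.109,
                   "Company/Senior Construction Supervisor": 0.109,
                   "Examination": 0.109},
}
LV1_WEIGHTS = {"Quantity": 0.298, "Quality": 0.375, "Evaluation": 0.327}


def check_hierarchy(tol: float = 1e-9) -> None:
    """Raise ValueError if an Lv1 weight differs from the sum of its Lv2 weights."""
    for lv1, children in HIERARCHY.items():
        if abs(sum(children.values()) - LV1_WEIGHTS[lv1]) > tol:
            raise ValueError(f"Lv2 weights of {lv1} do not sum to its Lv1 weight")
    if abs(sum(LV1_WEIGHTS.values()) - 1.0) > tol:
        raise ValueError("Lv1 weights do not sum to 1")


def composite_score(scores: Dict[str, float]) -> float:
    """Weighted sum of Lv2 scores, each assumed to be normalized to [0, 1]."""
    return sum(w * scores[name]
               for children in HIERARCHY.values()
               for name, w in children.items())


check_hierarchy()
scores = {  # hypothetical normalized scores for one supervisor
    "Notice": 1.0, "File Handling": 0.8,
    "Attendance": 0.9, "Response Time": 1.0, "Inspection": 0.7, "Check": 1.0,
    "Superior": 0.9, "Company/Senior Construction Supervisor": 0.8, "Examination": 0.75,
}
print(round(composite_score(scores), 3))  # 0.873
```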

| AHP Data | Attendance | Response Time | Inspection | Check |
|---|---|---|---|---|
| Attendance | 1.000 | 0.833 | 1.000 | 0.833 |
| Response Time | 1.200 | 1.000 | 1.200 | 1.000 |
| Inspection | 1.000 | 0.833 | 1.000 | 0.833 |
| Check | 1.200 | 1.000 | 1.200 | 1.000 |

| Items | Eigenvector | Weights | Max Eigenvalue (λmax) | CI |
|---|---|---|---|---|
| Attendance | 0.909 | 22.727% | 4.000 | 0.000 |
| Response Time | 1.091 | 27.273% | | |
| Inspection | 0.909 | 22.727% | | |
| Check | 1.091 | 27.273% | | |

| Max Eigenvalue (λmax) | CI | RI | CR | Test |
|---|---|---|---|---|
| 4.000 | 0.000 | 0.890 | 0.000 | Pass |

| | Response Time | Inspection | Check | Attendance |
|---|---|---|---|---|
| Membership | 0.258 | 0.236 | 0.263 | 0.242 |
| Weights | 0.258 | 0.236 | 0.263 | 0.242 |
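The table above gives membership-based weights for the four Quality indexes. As an illustration of how such weights enter a fuzzy comprehensive evaluation, the Python sketch below applies one standard composition, the weighted-average operator B = W · R, normalizes B, and assigns the grade with the largest membership. The grade labels and the membership matrix R are hypothetical, and this is a generic textbook form of the method, not necessarily the authors' exact procedure.

```python
# Sketch of fuzzy comprehensive evaluation with the weighted-average operator:
# B = W . R, normalized, then graded by the maximum-membership principle.
from typing import List, Sequence, Tuple

GRADES = ("Excellent", "Good", "Fair", "Poor")  # hypothetical rating grades
FACTORS = ("Response Time", "Inspection", "Check", "Attendance")
W = (0.258, 0.236, 0.263, 0.242)  # weights from the table above (sum 0.999 after rounding)


def fuzzy_evaluate(w: Sequence[float], r: Sequence[Sequence[float]]) -> Tuple[List[float], str]:
    """Return the normalized evaluation vector B and the grade with the largest membership."""
    m = len(r[0])
    b = [sum(w[i] * r[i][k] for i in range(len(w))) for k in range(m)]
    total = sum(b)
    b = [x / total for x in b]  # normalize so B sums to 1
    best = max(range(m), key=lambda k: b[k])
    return b, GRADES[best]


# Hypothetical membership matrix R: row i = membership of factor i in each grade.
R = [
    [0.6, 0.3, 0.1, 0.0],  # Response Time
    [0.2, 0.5, 0.2, 0.1],  # Inspection
    [0.5, 0.4, 0.1, 0.0],  # Check
    [0.7, 0.2, 0.1, 0.0],  # Attendance
]
B, grade = fuzzy_evaluate(W, R)
print([round(x, 3) for x in B], grade)
```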

| No. | Lv 1 Index | No. | Lv 2 Index | Scope (Month) | Lv 3 Index |
|---|---|---|---|---|---|
| A | Quantity | A1 | Notice | 1 | Receive notices |
| | | A2 | File Handling | 6 | Number of files processed |
| B | Quality | B1 | Response Time | 6 | Processing speed of the pending portion of the workflow |
| | | B2 | Check | 6 | Identify problems |
| | | B3 | Inspection | 6 | Inspection log and aside supervision |
| | | B4 | Attendance | 6 | Clocking-in and PE-fit |
| C | Evaluation | C1 | Superior | 6 | Attitude and ability evaluated by the superior |
| | | C2 | Company | 6 | Attitude and ability evaluated by the company |
| | | C3 | Senior Construction Supervisor | 12 | Attitude and ability evaluated by the senior supervisor |
| | | C4 | Examination | 12 | Examination of professional knowledge, with awards for outstanding behavior (bonus) or warnings (deduction) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, C.; Lin, J.-R.; Yan, K.-X.; Deng, Y.-C.; Hu, Z.-Z.; Liu, C. Data-Driven Quantitative Performance Evaluation of Construction Supervisors. Buildings 2023, 13, 1264. https://doi.org/10.3390/buildings13051264

Yang C, Lin J-R, Yan K-X, Deng Y-C, Hu Z-Z, Liu C. Data-Driven Quantitative Performance Evaluation of Construction Supervisors. Buildings. 2023; 13(5):1264. https://doi.org/10.3390/buildings13051264

Chicago/Turabian Style: Yang, Cheng, Jia-Rui Lin, Ke-Xiao Yan, Yi-Chuan Deng, Zhen-Zhong Hu, and Cheng Liu. 2023. "Data-Driven Quantitative Performance Evaluation of Construction Supervisors" Buildings 13, no. 5: 1264. https://doi.org/10.3390/buildings13051264

APA Style: Yang, C., Lin, J.-R., Yan, K.-X., Deng, Y.-C., Hu, Z.-Z., & Liu, C. (2023). Data-Driven Quantitative Performance Evaluation of Construction Supervisors. Buildings, 13(5), 1264. https://doi.org/10.3390/buildings13051264