Energy Cost Driven Heating Control with Reinforcement Learning

Abstract

1. Introduction

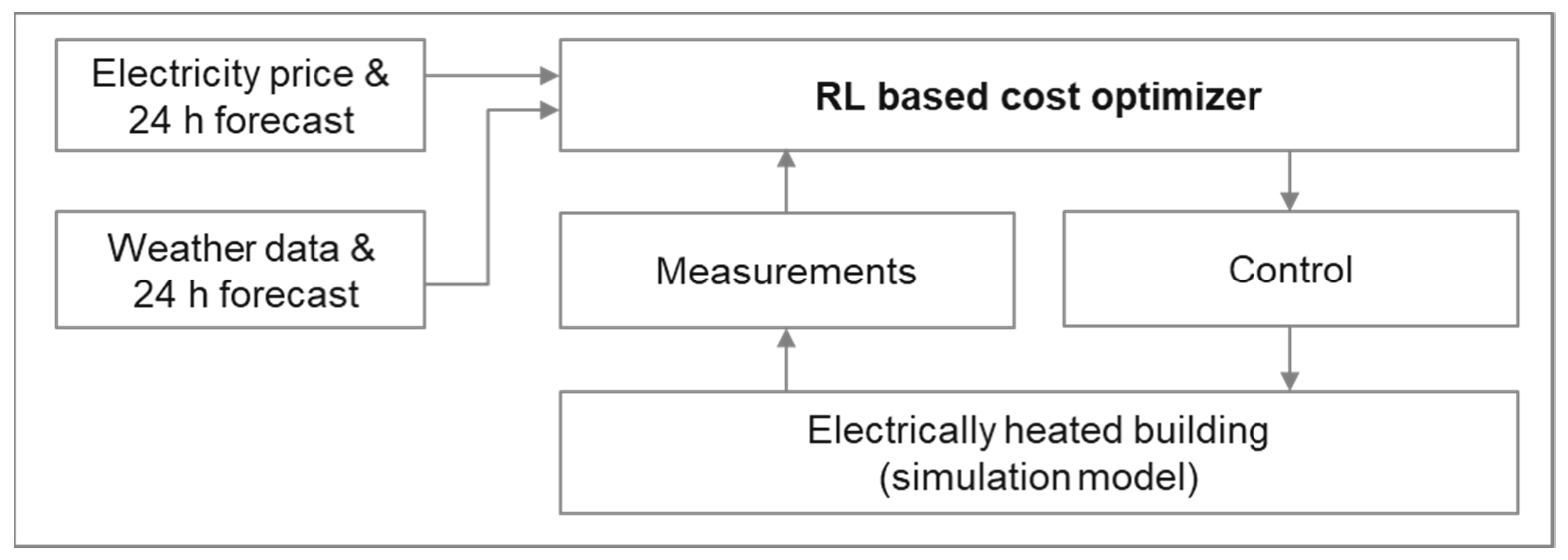

2. Methods

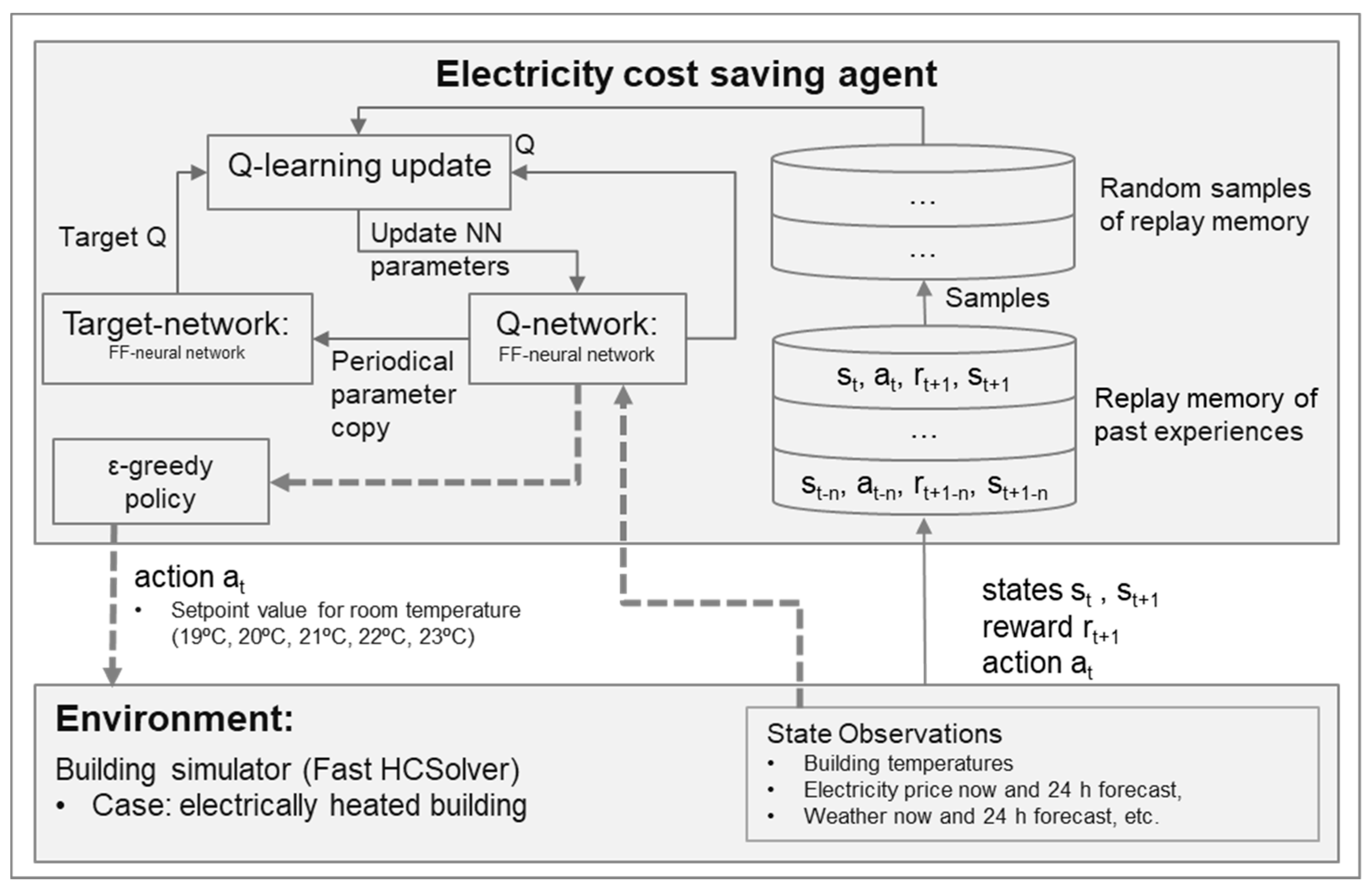

2.1. Reinforcement Learning

2.2. Implementation of the Algorithm

- timestamp (hour of day and day of week);

- weather (outdoor temperature, global radiation, and diffuse radiation): current values and forecasts for the next 24 h;

- electricity price and its forecast for the next 24 h;

- and temperature measurements from the building.
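Assuming the items listed above are the inputs observed by the control algorithm, they could be flattened into one numeric state vector. The following is a minimal Python sketch under that assumption. The `Observation` container, the `cyclic` sine/cosine time encoding, and the 25-value layout (current value plus 24 hourly forecast values) are hypothetical illustration choices, not the authors' implementation.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    """Hypothetical container for the inputs listed above."""
    hour_of_day: int                 # 0..23
    day_of_week: int                 # 0..6
    outdoor_temp_c: List[float]      # current value + 24 h forecast (assumed 25 values)
    global_radiation: List[float]    # current value + 24 h forecast
    diffuse_radiation: List[float]   # current value + 24 h forecast
    price: List[float]               # current electricity price + 24 h forecast
    indoor_temps_c: List[float]      # temperature measurements from the building


def cyclic(value: float, period: float) -> List[float]:
    """Encode a periodic quantity as (sin, cos) so that 23:00 and 00:00 are close.

    This encoding is an assumption made for the sketch.
    """
    angle = 2.0 * math.pi * value / period
    return [math.sin(angle), math.cos(angle)]


def build_state(obs: Observation) -> List[float]:
    """Concatenate all inputs into one flat state vector."""
    state: List[float] = []
    state += cyclic(obs.hour_of_day, 24)
    state += cyclic(obs.day_of_week, 7)
    state += obs.outdoor_temp_c
    state += obs.global_radiation
    state += obs.diffuse_radiation
    state += obs.price
    state += obs.indoor_temps_c
    return state


# Illustrative values only; they are not taken from the study.
example = Observation(
    hour_of_day=6,
    day_of_week=0,
    outdoor_temp_c=[-5.0] * 25,
    global_radiation=[0.0] * 25,
    diffuse_radiation=[0.0] * 25,
    price=[50.0] * 25,
    indoor_temps_c=[21.0],
)
state = build_state(example)
```

With one indoor measurement, this layout gives 4 time features, 100 weather and price values, and 1 temperature value. In practice the inputs would probably also need scaling to comparable ranges before training.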

2.3. Environment

2.4. Buildings and Performance Tests

- typical building of years 2011–2017;

- typical building of years 2001–2010;

- typical building of years 1991–2000;

- typical building of years 1981–1990;

- typical building of years 1971–1980;

- and typical building of years 1961–1970.

3. Results

3.1. Comparison of Different Reward Functions

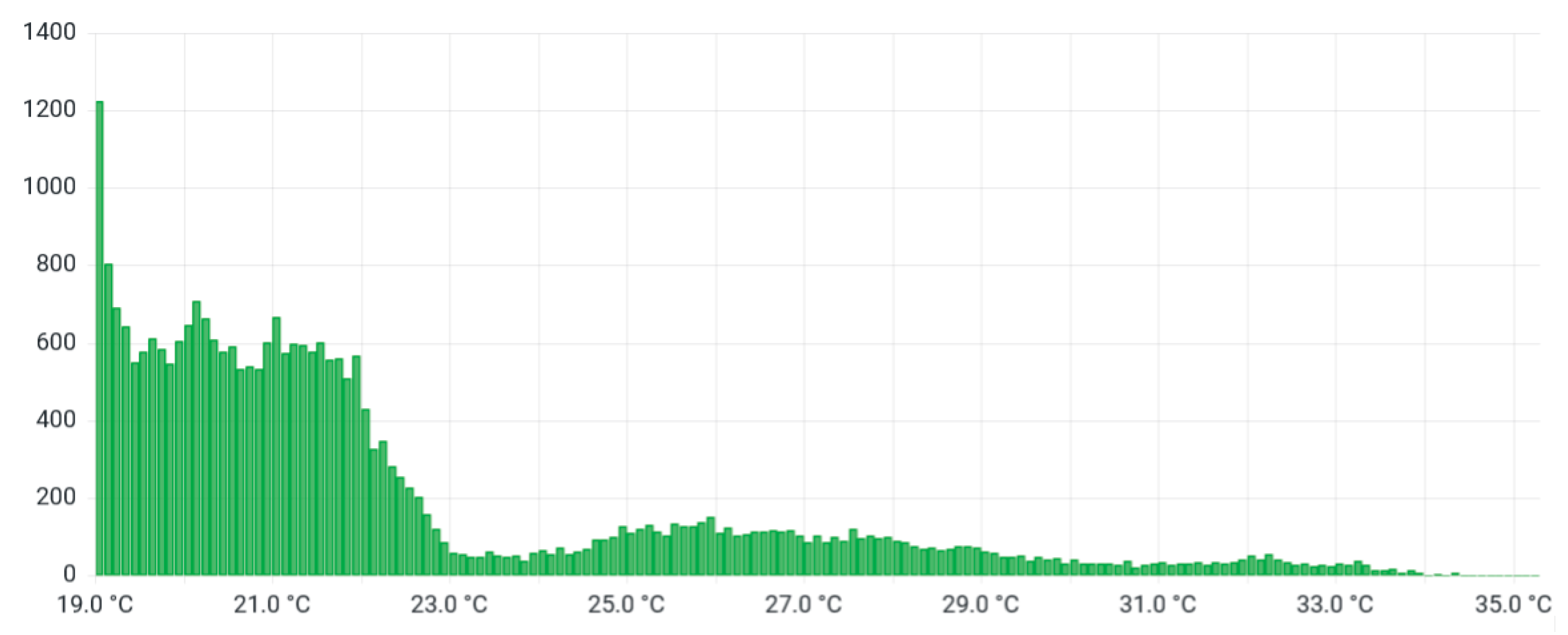

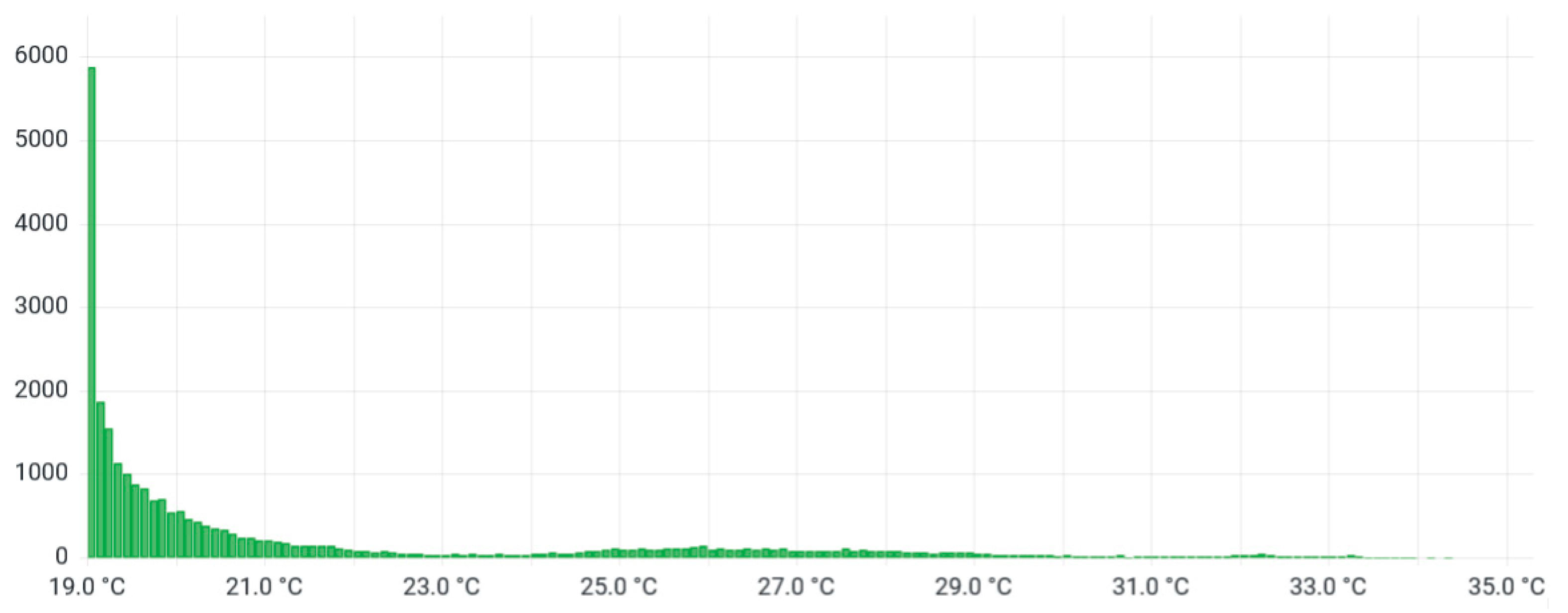

3.2. Indoor Temperature for Different Buildings

3.3. Cost Savings by Simulation Year

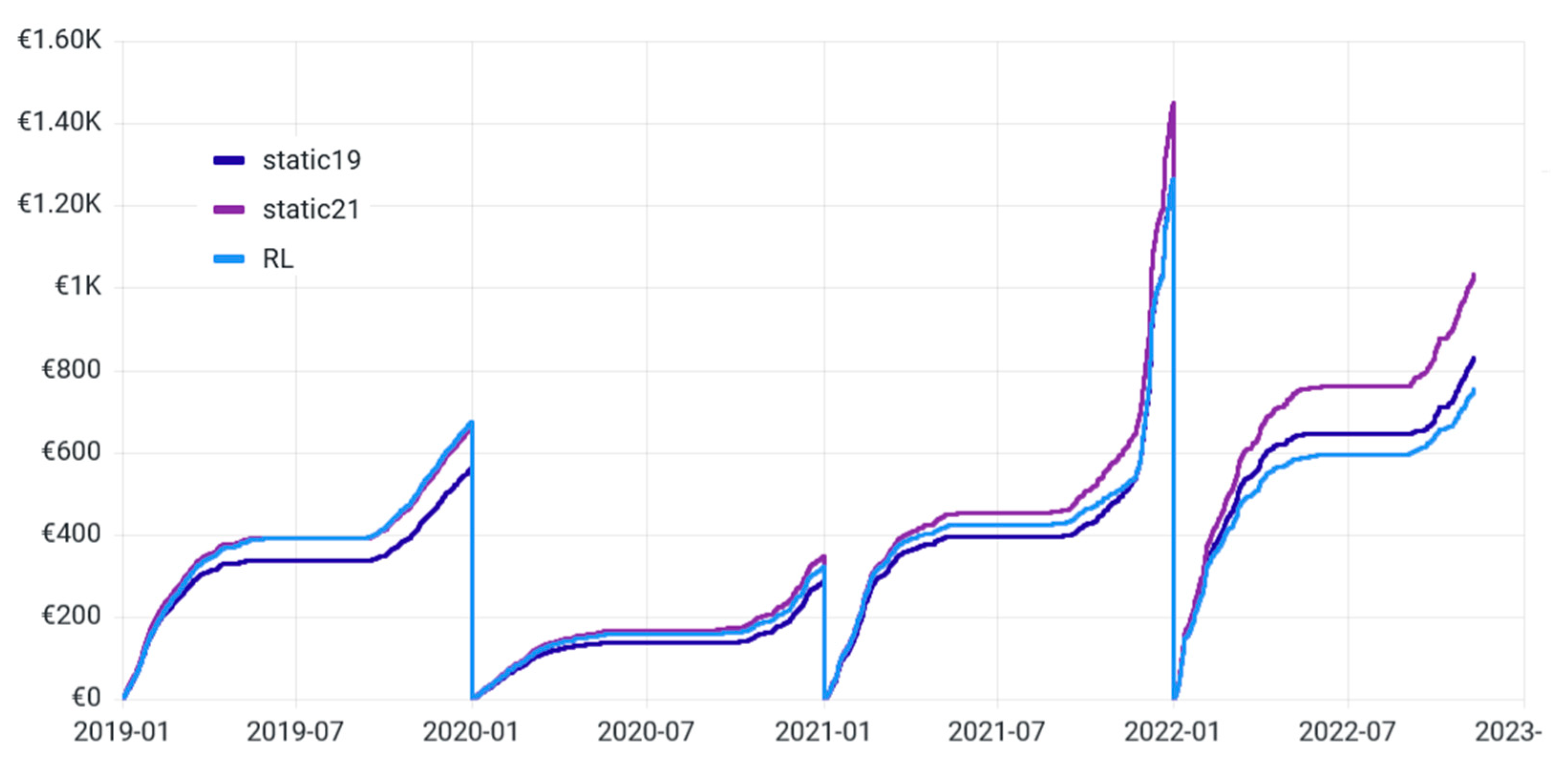

3.4. Cost Savings of Buildings Based on Construction Year

3.5. Energy Savings by Simulation Year

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | 2019 | 2020 | 2021 | 2022 (Until 11 September) |
|---|---|---|---|---|
| mean | 44.0 | 28.0 | 72.3 | 139.7 |
| min | 0.1 | −1.7 | −1.4 | −1.0 |
| max | 200.0 | 254.4 | 1000.1 | 861.1 |
| std | 15.3 | 21.1 | 66.0 | 126.1 |

| | 2019 | 2020 | 2021 | 2022 (Until 11 September) |
|---|---|---|---|---|
| no penalty | 12% | 17% | 13% | 21% |
| linear penalty | 11% | 23% | 17% | 27% |
| second-order penalty | −2% | 8% | 13% | 27% |
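The three reward variants named in the table above (no penalty, linear penalty, second-order penalty) can be illustrated with a short sketch. This is not the reward function used in the study. It assumes the reward is the negative energy cost, optionally reduced by a comfort penalty that grows with the distance of the indoor temperature from a comfort band. The band limits (`lower_c`, `upper_c`), the `weight` factor, and all names are hypothetical.

```python
def reward(
    energy_kwh: float,
    price_eur_per_kwh: float,
    indoor_temp_c: float,
    penalty: str = "none",
    lower_c: float = 20.0,   # hypothetical lower comfort limit
    upper_c: float = 22.0,   # hypothetical upper comfort limit
    weight: float = 1.0,     # hypothetical penalty weight
) -> float:
    """Return a cost-driven reward with an optional comfort penalty."""
    cost = energy_kwh * price_eur_per_kwh

    # Distance outside the comfort band; zero while inside the band.
    deviation = max(lower_c - indoor_temp_c, indoor_temp_c - upper_c, 0.0)

    if penalty == "none":
        discomfort = 0.0
    elif penalty == "linear":
        discomfort = weight * deviation
    elif penalty == "second_order":
        discomfort = weight * deviation ** 2
    else:
        raise ValueError(f"unknown penalty type: {penalty}")

    # Lower cost and lower discomfort both increase the reward.
    return -(cost + discomfort)
```

Under these assumptions, a second-order penalty is milder than the linear one for deviations below 1 °C and harsher above it, which is one way such variants could lead to different trade-offs between cost and indoor temperature.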

| Building Construction Year | avg (Tindoor) | std (Tindoor) |
|---|---|---|
| 1961–1970 | 21.1 °C | 0.79 |
| 1971–1980 | 21.0 °C | 0.78 |
| 1981–1990 | 20.8 °C | 0.91 |
| 1991–2000 | 20.8 °C | 0.84 |
| 2001–2010 | 20.8 °C | 0.89 |
| 2011–2017 | 20.6 °C | 0.83 |

| | 2019 | 2020 | 2021 | 2022 (Until 11 September) |
|---|---|---|---|---|
| 19 set point | 15% | 18% | 12% | 19% |
| RL | −1% | 8% | 10% | 23% |

| Building Construction Year | sp 21 (€) | sp 19 (%) | RL (%) |
|---|---|---|---|
| 1961–1970 | 1619 | 15% | 6% |
| 1971–1980 | 1523 | 15% | 7% |
| 1981–1990 | 905 | 16% | 15% |
| 1991–2000 | 920 | 15% | 12% |
| 2001–2010 | 874 | 16% | 14% |
| 2011–2017 | 451 | 16% | 17% |
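To make the percentages in the table above easier to compare, they can be converted to absolute amounts. The sketch below assumes that the "sp 21 (€)" column is the baseline heating cost with a 21 °C set point and that the "sp 19 (%)" and "RL (%)" columns are savings relative to that baseline. This reading is an assumption, and the function name is hypothetical.

```python
# (construction period, sp 21 cost in EUR, sp 19 saving in %, RL saving in %)
# Values copied from the table above.
rows = [
    ("1961–1970", 1619, 15, 6),
    ("1971–1980", 1523, 15, 7),
    ("1981–1990", 905, 16, 15),
    ("1991–2000", 920, 15, 12),
    ("2001–2010", 874, 16, 14),
    ("2011–2017", 451, 16, 17),
]


def absolute_saving(baseline_eur: float, saving_pct: float) -> float:
    """Convert a relative saving (in percent) into euros."""
    return baseline_eur * saving_pct / 100


for period, baseline, sp19_pct, rl_pct in rows:
    sp19_eur = absolute_saving(baseline, sp19_pct)
    rl_eur = absolute_saving(baseline, rl_pct)
    print(f"{period}: sp 19 saves {sp19_eur:.2f} EUR, RL saves {rl_eur:.2f} EUR")
```

Because the table reports rounded percentages, the resulting euro amounts are approximate.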

| | 2019 | 2020 | 2021 | 2022 (Until 11 September) |
|---|---|---|---|---|
| 19 set point | 16% | 19% | 15% | 19% |
| RL | −1% | 1% | 2% | 4% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kannari, L.; Kantorovitch, J.; Piira, K.; Piippo, J. Energy Cost Driven Heating Control with Reinforcement Learning. Buildings 2023, 13, 427. https://doi.org/10.3390/buildings13020427
