Analysis of Geometric Characteristics of Cracks and Delamination in Aerated Concrete Products Using Convolutional Neural Networks

Abstract

1. Introduction

- Increasing the number of images with the authors’ own augmentation algorithm [45] to improve the generalization ability of the model;

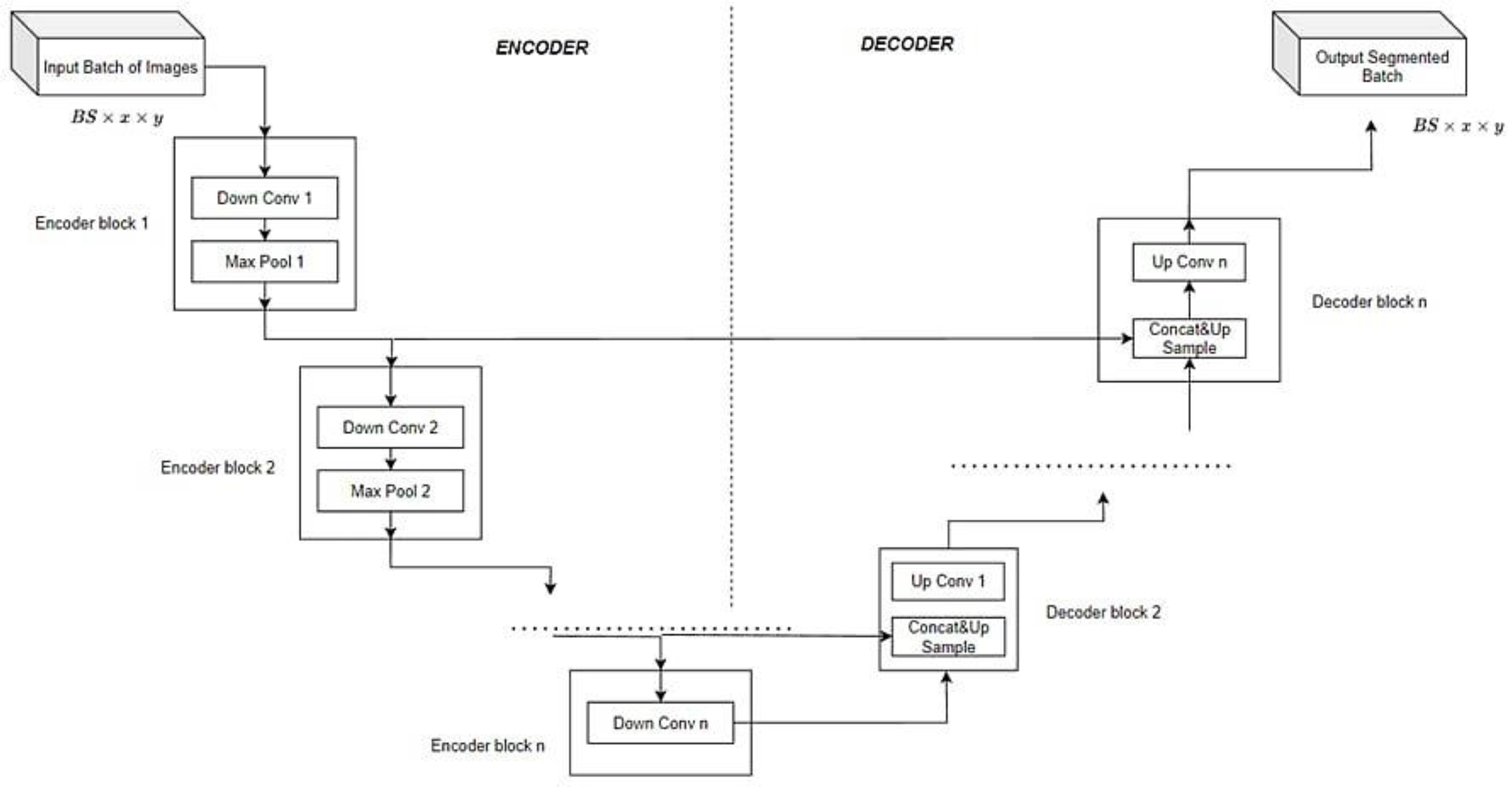

- Creating models based on the U-Net convolutional neural network architecture;

- Creating models based on the LinkNet convolutional neural network architecture;

- Implementing the authors’ SCALE algorithm for calculating the length of a detected defect [44].

- Preparation of a database of images of aerated concrete products in which visual inspection revealed defects in the form of cracks and delamination of various lengths and widths;

- Justification and description of the selected convolutional neural network architectures, U-Net and LinkNet;

- Augmentation of the training data set using the authors’ algorithm;

- Implementation, debugging, parameter optimization and testing of the resulting algorithms on a test sample;

- Determination of quality metrics for the developed CNN-based intelligent models;

- Implementation of the authors’ algorithm for calculating the length of the detected defect in segmented images;

- Evaluation of the results and formulation of recommendations for the use of intelligent models for semantic segmentation of cracks and delamination with their subsequent analysis.

The theoretical significance of this study is as follows:

- Expansion of the theory on the possibilities of using computer vision in the construction industry, in particular for detecting defects in building materials, products and structures;

- Description of the features of the technical and software implementation of computer vision algorithms that should be taken into account when putting them into practice.

The practical significance of the work lies in the following:

- Implementation of technology for creating artificial systems capable of processing (including in real time) information from images obtained during construction monitoring of the structural state of materials, products and structures;

- Practical implementation of the algorithm for determining the length of cracks and delamination detected using an intelligent algorithm.

2. Materials and Methods

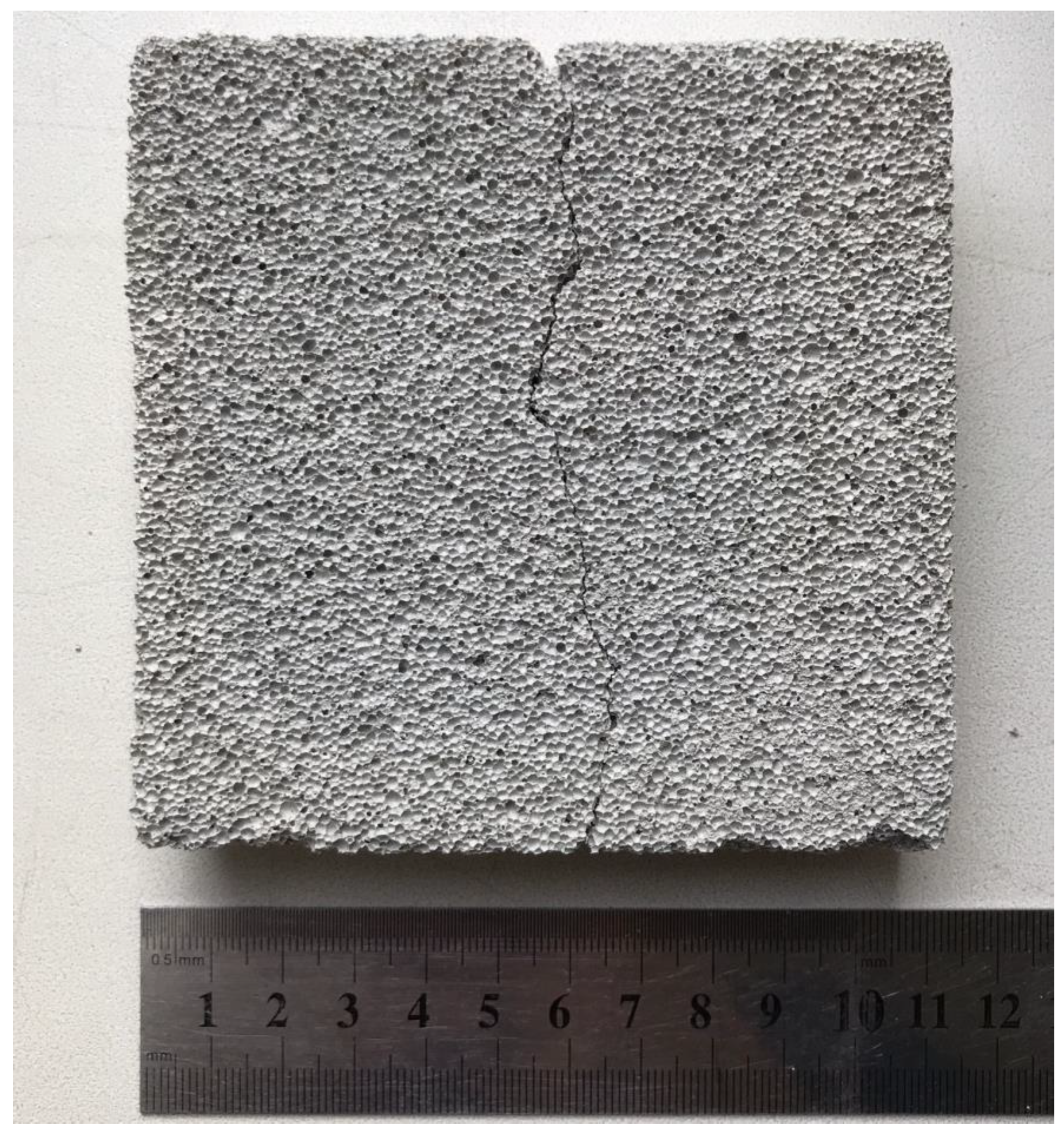

2.1. Characteristics of the Material

2.2. Development of an Intelligent Algorithm Based on Various Convolutional Neural Network Architectures

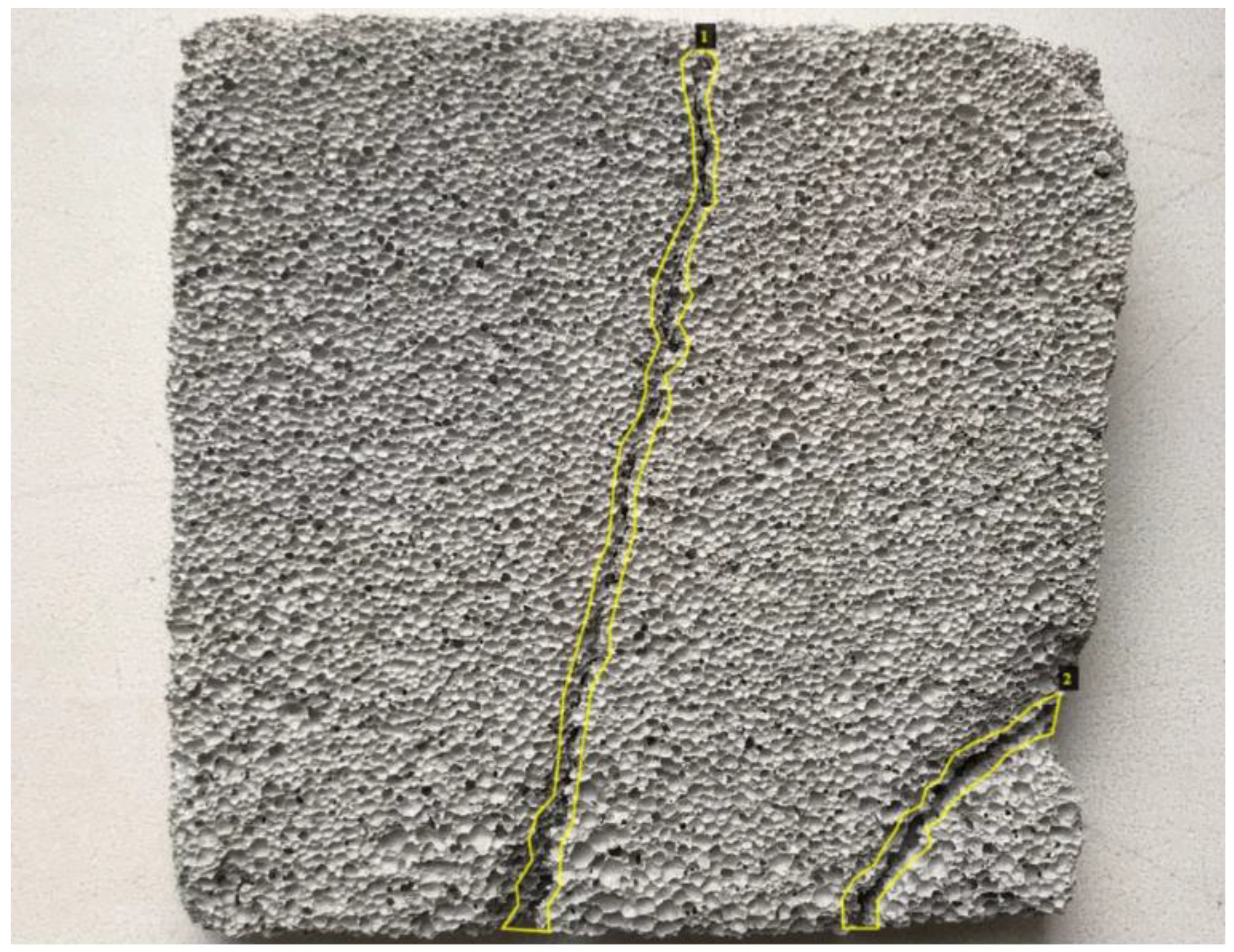

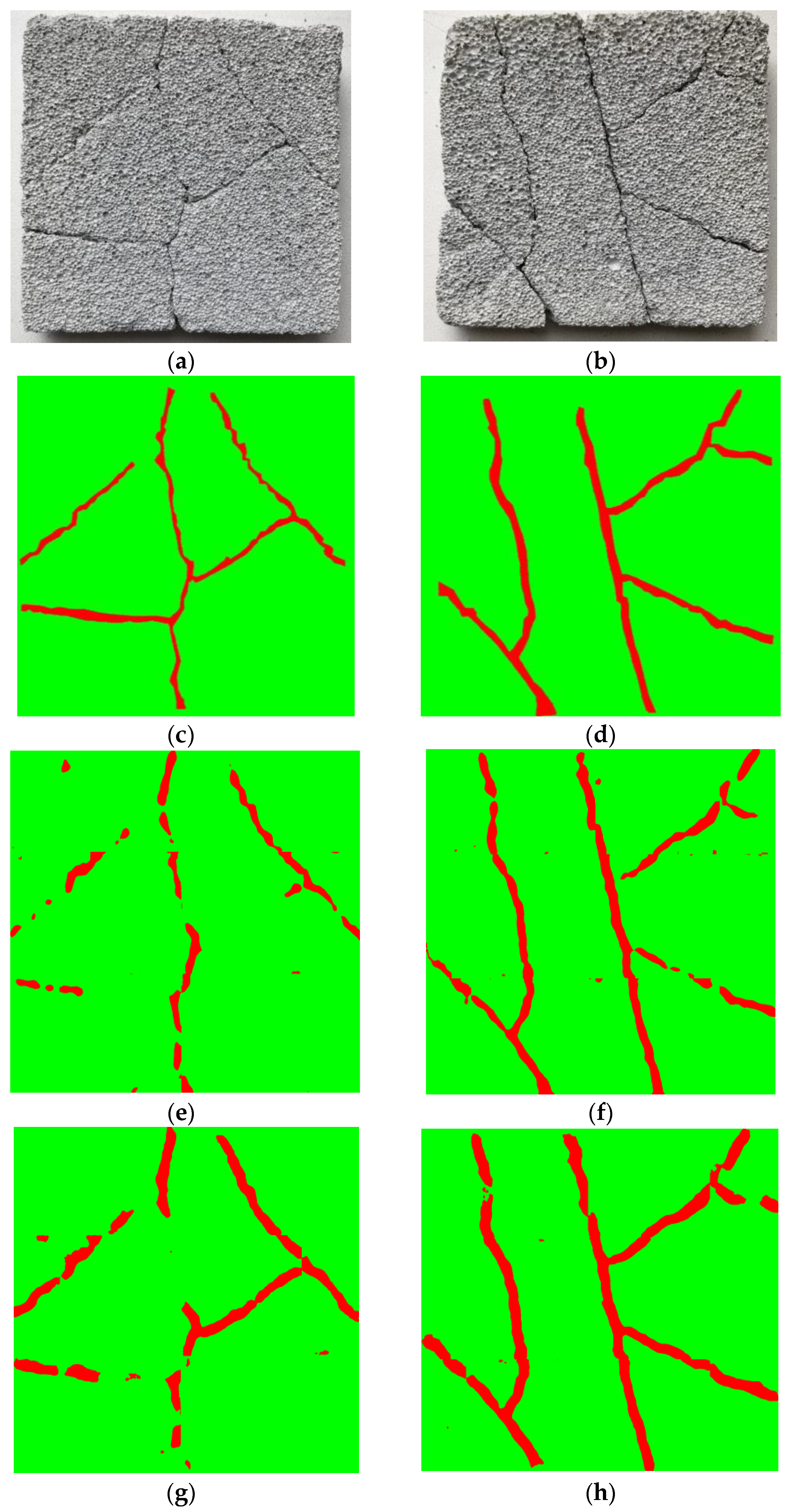

2.2.1. Image Annotation and Preprocessing

- A total of 16 images were divided in a ratio of 9/4/3 into training, validation and test samples;

- Images from each sample were divided into 16 fragments with a dimension of 400 × 400 pixels, so the total number of images at this stage was 256;

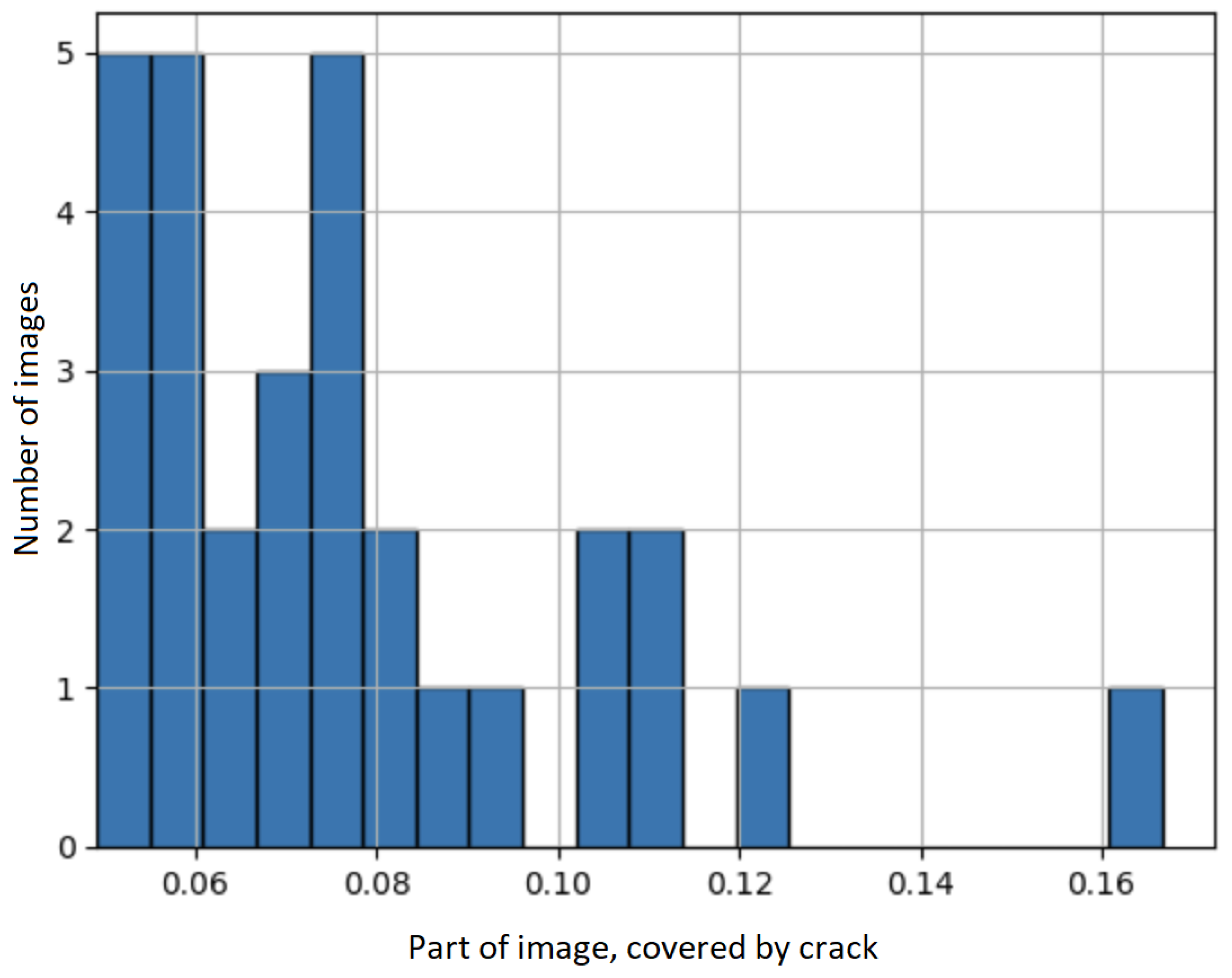

- The most balanced images were selected for each sample. For example, for the training set, 30 images were selected in which the crack class occupied 5% of the total image area. Figure 3 shows the number of images in the new training set after selecting the most suitable ones based on the percentage of the desired class.

- A total of 16 images were divided in a ratio of 9/4/3 into training, validation and test samples;

- Augmentation with the authors’ algorithm [45] brought the samples up to 200/100/50 images, respectively;

- Images from each sample were divided into 16 fragments with a dimension of 400 × 400 pixels, so the total number of images at this stage was 5600;

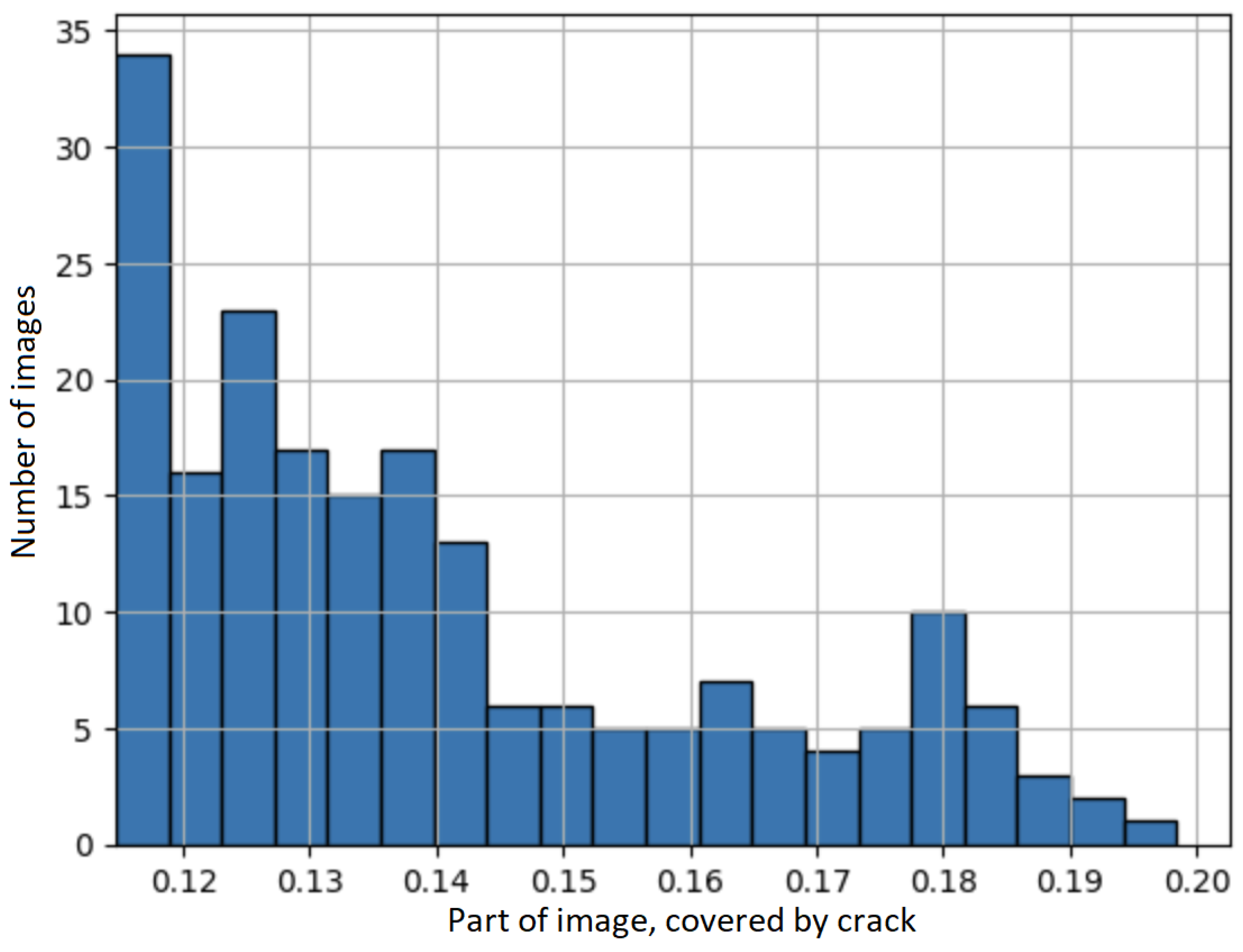

- The most balanced images were selected for each sample. For example, for the training set, 200 images were selected in which the crack class occupied 11% of the total image area (Figure 4). To improve the quality of segmentation, 150 image fragments without cracks were added to the training set. Thus, the training set contained 350 images, the validation set 100 and the test set 50.
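
The fragmentation and selection steps listed above can be illustrated with a short sketch. It is not the authors’ code: the 1600 × 1600 source size is inferred from 16 fragments of 400 × 400 (assuming a 4 × 4 grid), and the function names, the synthetic mask and the `min_fraction` threshold are hypothetical.

```python
import numpy as np

def split_into_tiles(array, tile=400):
    """Split an (H, W, ...) array into non-overlapping tile x tile fragments."""
    h, w = array.shape[:2]
    if h % tile or w % tile:
        raise ValueError("image sides must be multiples of the tile size")
    return [array[r:r + tile, c:c + tile]
            for r in range(0, h, tile)
            for c in range(0, w, tile)]

def crack_fraction(mask_tile):
    """Share of pixels labelled as crack (non-zero) in a mask fragment."""
    return np.count_nonzero(mask_tile) / mask_tile.size

# Hypothetical annotation mask: a 40-pixel-high crack band across the image
mask = np.zeros((1600, 1600), dtype=np.uint8)
mask[180:220, :] = 1

tiles = split_into_tiles(mask)                  # 16 fragments of 400 x 400
fractions = [crack_fraction(t) for t in tiles]

# Keep fragments whose crack share reaches an assumed threshold
min_fraction = 0.05
selected = [i for i, f in enumerate(fractions) if f >= min_fraction]

# Fragment counts quoted in the text: 16 * 16 = 256 and (200 + 100 + 50) * 16 = 5600
print(len(tiles), selected)
```

With this synthetic mask only the four fragments in the top row of the grid contain crack pixels, so only they pass the threshold.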

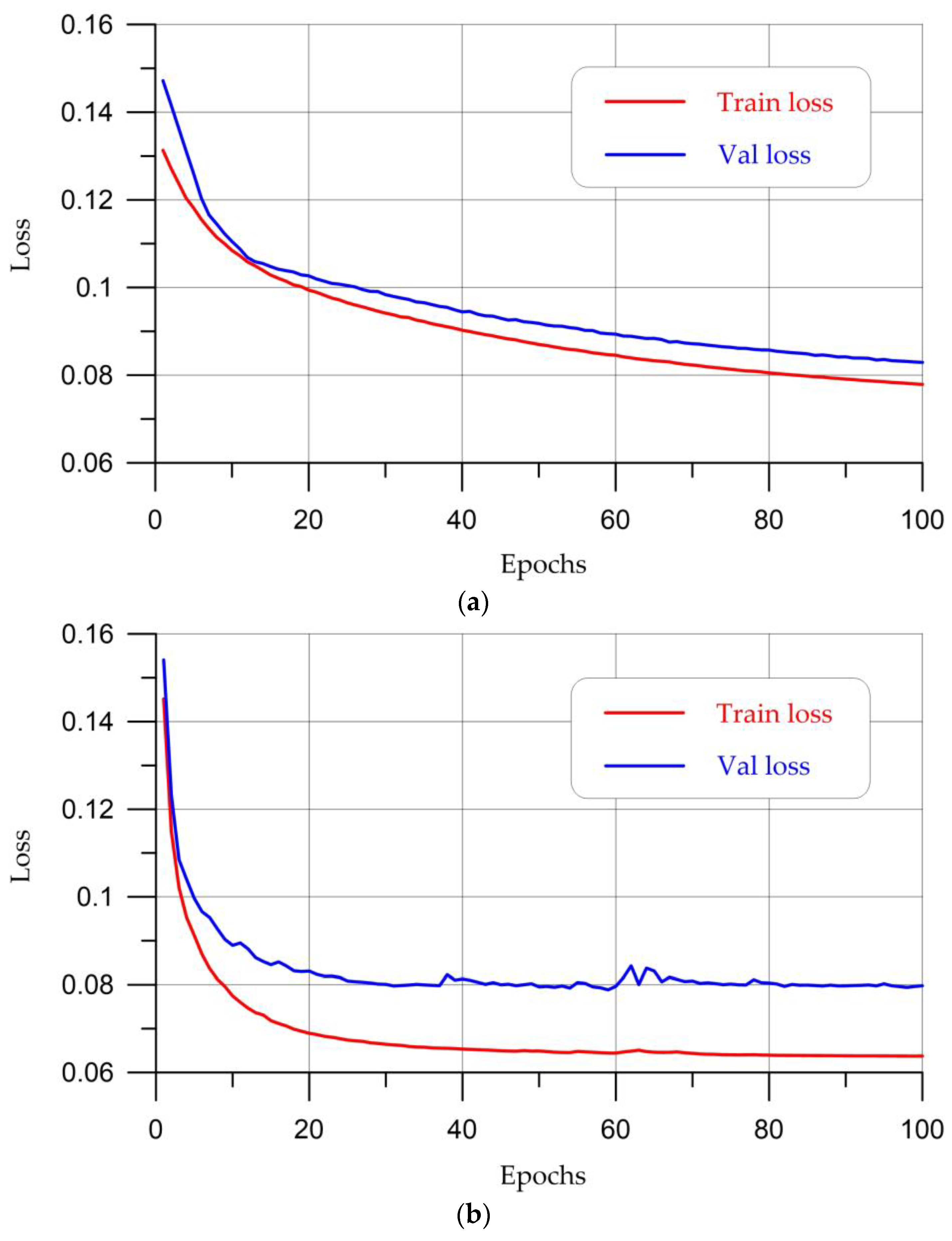

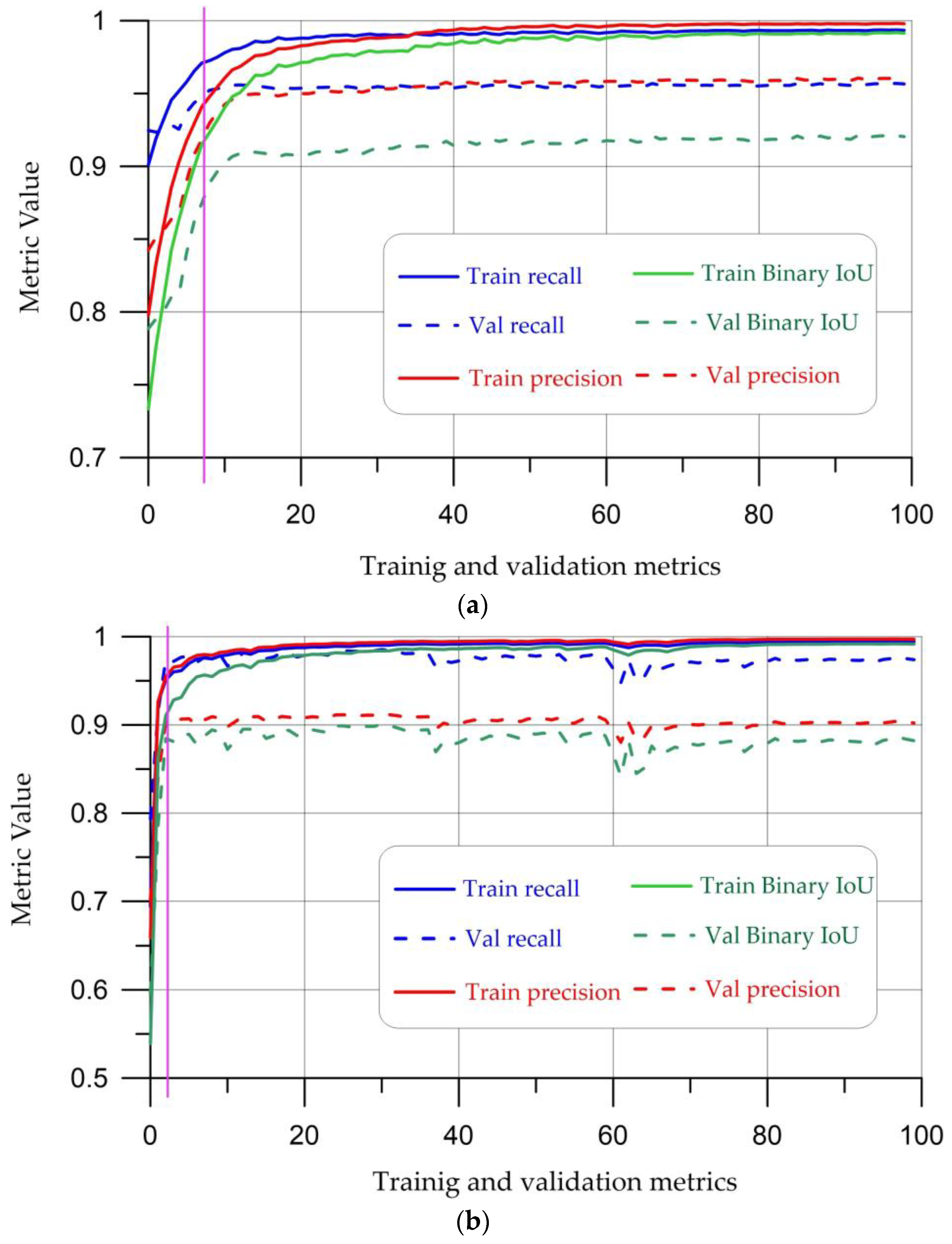

2.2.2. U-Net Architecture and Creation of Model 1.1 and Model 1.2

- (1) Model 1.1 is a U-Net CNN implemented in the PyTorch machine learning framework with dynamic augmentation; a set of 60 images, divided in a ratio of 30/15/15 into training, validation and test samples, is fed to the input of the CNN. Dynamic augmentation comprises two steps:

  - Random crop of an image fragment. All possible contiguous fragments are equally probable.

  - Equally probable application of one of four transformations:

    - (a) Reflection along the horizontal axis;

    - (b) Reflection along the vertical axis;

    - (c) Rotation by 90 degrees in a random direction;

    - (d) Identity transformation (the image is left unchanged).

- (2) Model 1.2 is a U-Net CNN implemented in the PyTorch machine learning framework, the input of which is a set of 500 images in a ratio of 350/100/50 for the training, validation and test sets (static augmentation).
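
The dynamic augmentation described for Model 1.1 can be sketched in NumPy as follows. This is an illustrative reading of the listed steps, not the authors’ implementation: the function name, the crop size of 256 and the synthetic data are assumptions, and "reflection along the horizontal axis" is interpreted here as an up-down flip.

```python
import numpy as np

def dynamic_augment(image, mask, crop, rng):
    """Random crop, then one of four equally probable transformations,
    applied identically to the image and its mask."""
    h, w = mask.shape
    # Every crop position is equally likely
    top = int(rng.integers(0, h - crop + 1))
    left = int(rng.integers(0, w - crop + 1))
    img = image[top:top + crop, left:left + crop]
    msk = mask[top:top + crop, left:left + crop]

    choice = int(rng.integers(0, 4))
    if choice == 0:        # (a) reflection along the horizontal axis (assumed up-down)
        img, msk = np.flipud(img), np.flipud(msk)
    elif choice == 1:      # (b) reflection along the vertical axis (assumed left-right)
        img, msk = np.fliplr(img), np.fliplr(msk)
    elif choice == 2:      # (c) rotation by 90 degrees in a random direction
        k = int(rng.choice([1, 3]))
        img, msk = np.rot90(img, k), np.rot90(msk, k)
    # choice == 3: (d) image left unchanged
    return np.ascontiguousarray(img), np.ascontiguousarray(msk)

rng = np.random.default_rng(0)
image = rng.random((400, 400, 3))                 # synthetic RGB fragment
mask = (image[..., 0] > 0.9).astype(np.uint8)     # synthetic mask tied to the image
aug_img, aug_msk = dynamic_augment(image, mask, crop=256, rng=rng)
print(aug_img.shape, aug_msk.shape)
```

Because a new crop and transformation are drawn on every call, the network sees a different variant of each training image at each iteration, whereas static augmentation fixes the expanded data set before training.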

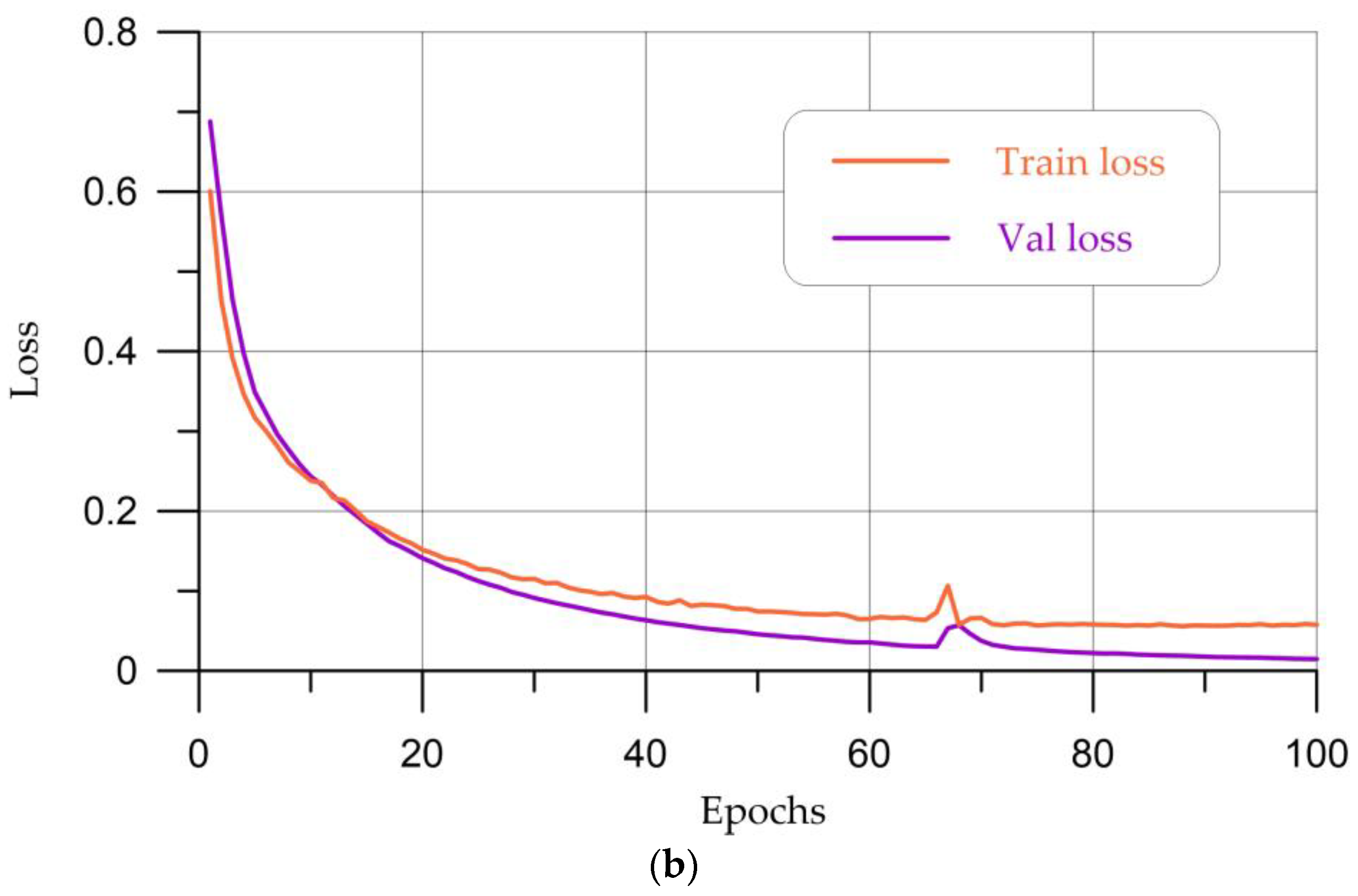

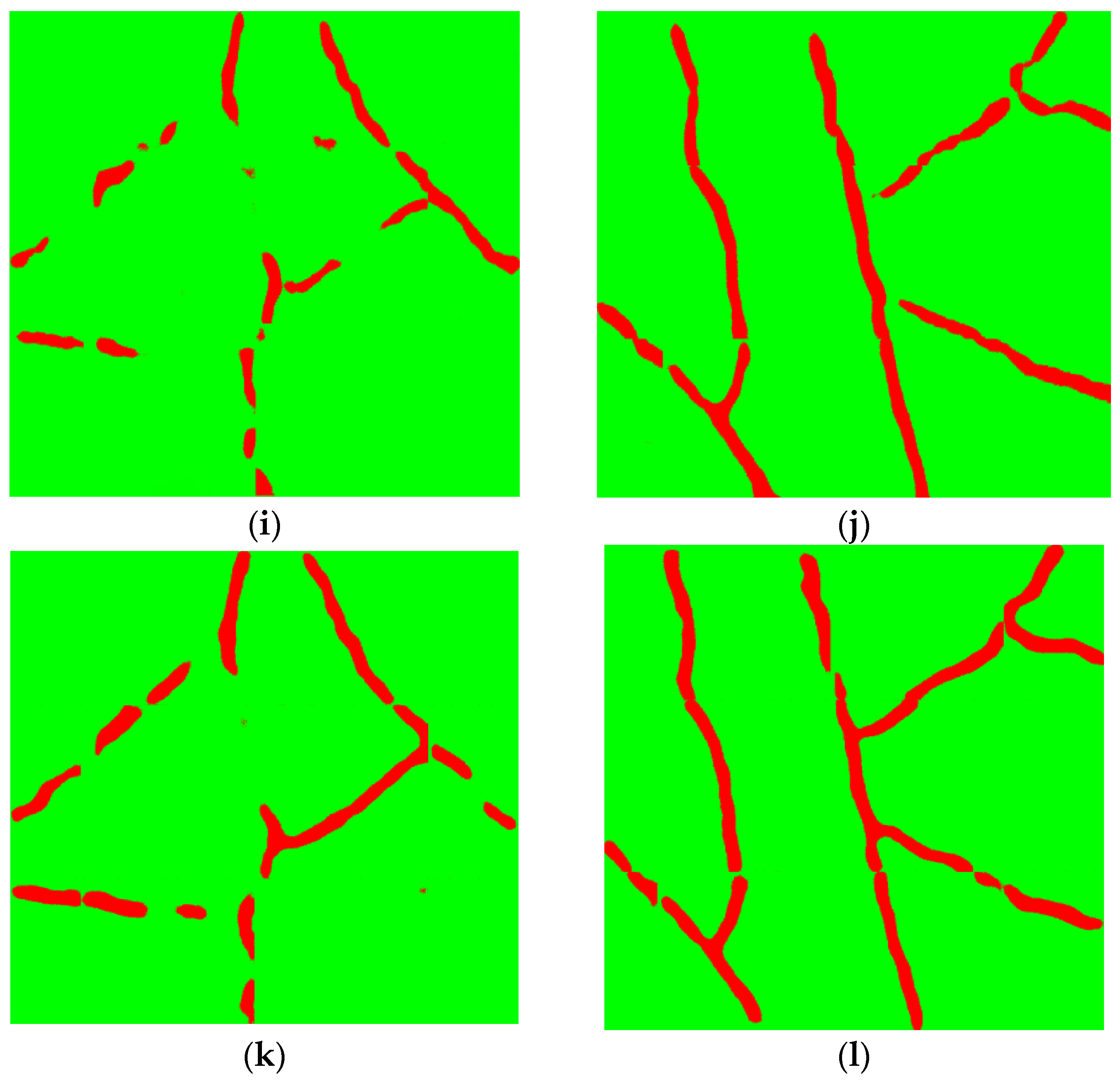

2.2.3. LinkNet Architecture and Creation of Model 1.3 and Model 1.4

- (1) Model 1.3 is based on the LinkNet architecture, implemented in the PyTorch machine learning framework and modified at the training stage by applying dynamic augmentation at each iteration. The input of the CNN is a set of 60 images, divided in a ratio of 30/15/15 into training, validation and test samples. The probabilistic process of dynamic augmentation is similar to that used for Model 1.1.

- (2) Model 1.4 is based on the LinkNet architecture, implemented in PyTorch, and is trained on a data set of 500 images created using the authors’ proprietary augmentation algorithm (static augmentation).

3. Results and Discussion

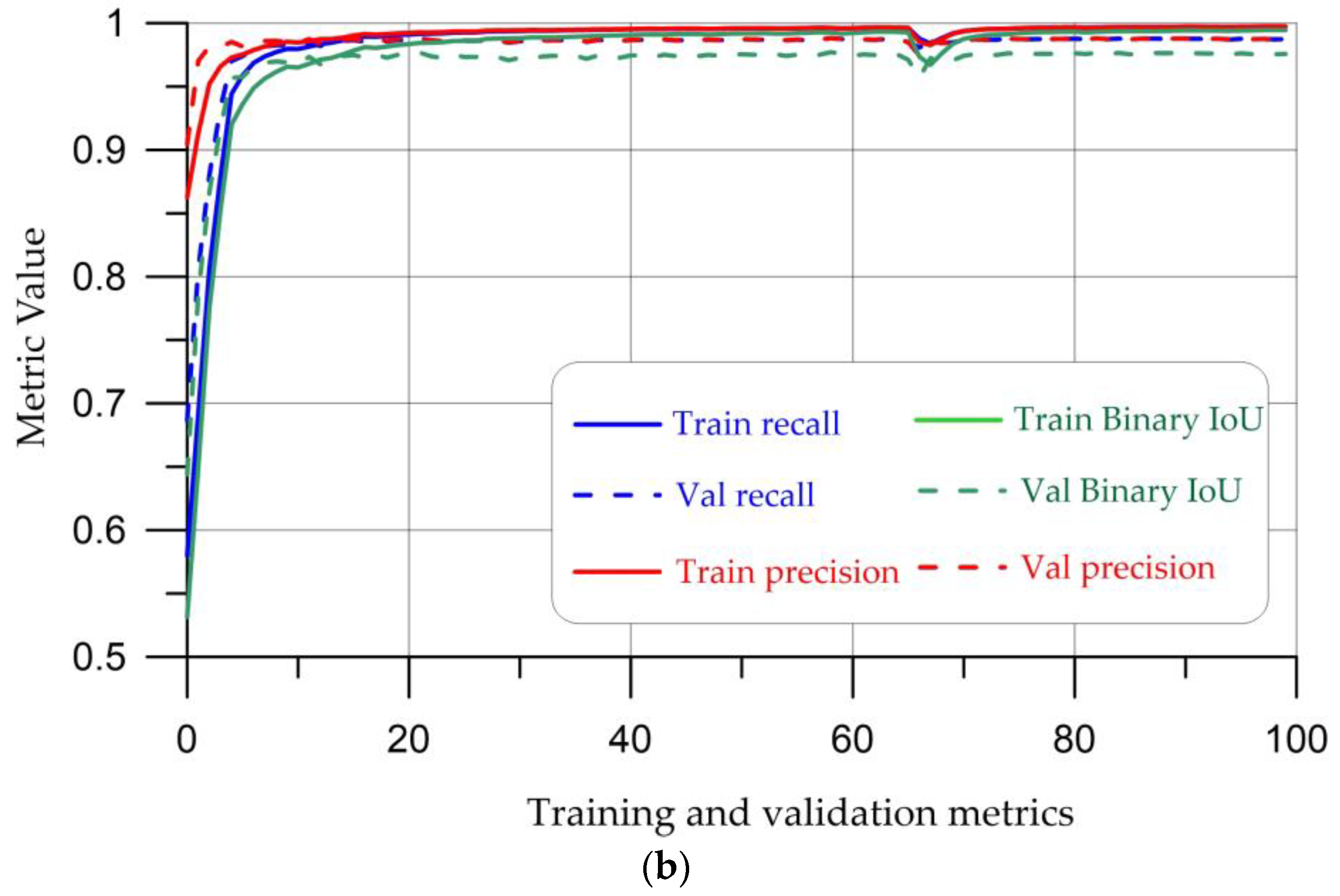

3.1. Quality Metrics for Segmenting Cracks in Aerated Concrete

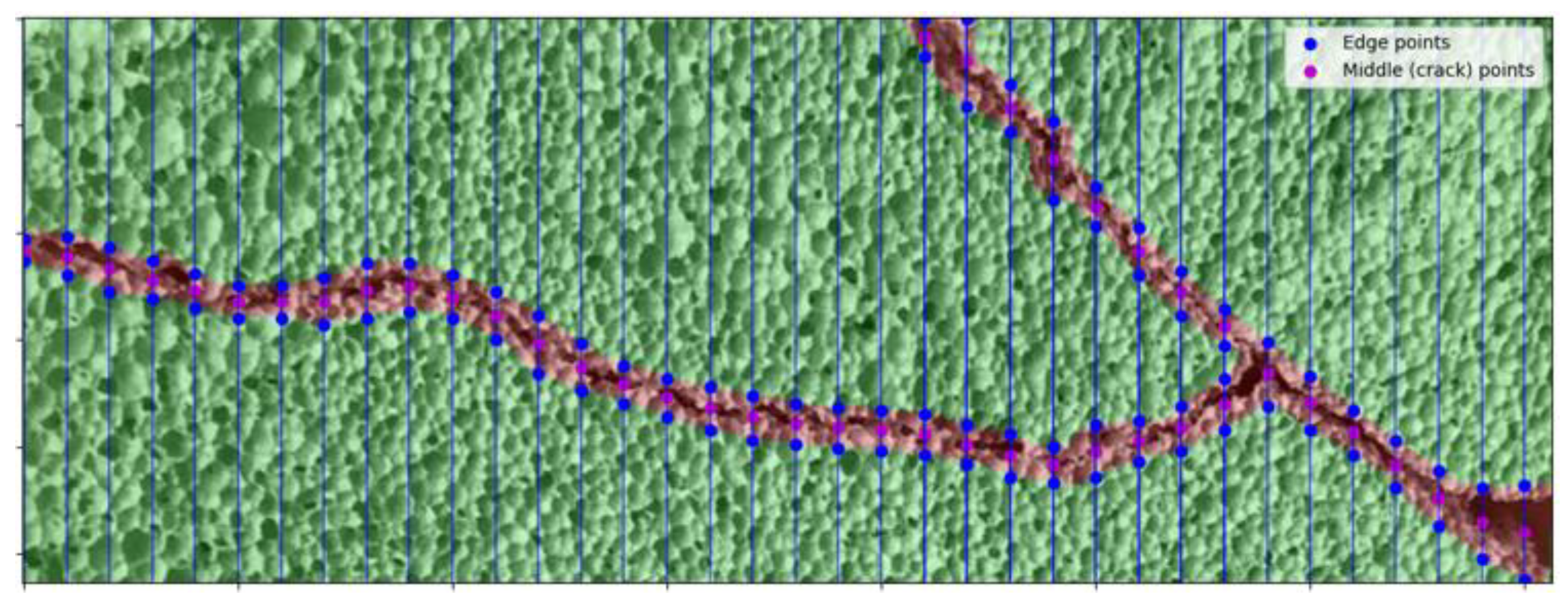

3.2. Calculating the Length of a Segmented Crack

- Dividing the mask into fragments, within each there is only one connected crack. In Figure 12, the DBSCAN clustering algorithm identified two clusters of pixels segmented by the neural network as cracks; respectively, each of these clusters (blue and red) corresponds to one of the two connected cracks detected in this image. In the future, each of these cracks will be processed independently.

- For each fragment, the orientation of the crack (vertical or horizontal) is determined by comparing the length of the corresponding fragment along the X axis and Y axis and comparing them.

- In the case of horizontal orientation: for each vertical pixel-by-pixel slice of the image, the pixels corresponding to the beginning and end of the crack in this section are determined. The pixel lying in the middle between each pair of the beginning–end of the crack in this section is defined as the pixel lying in the middle of the crack and is added to some array.

- 4.

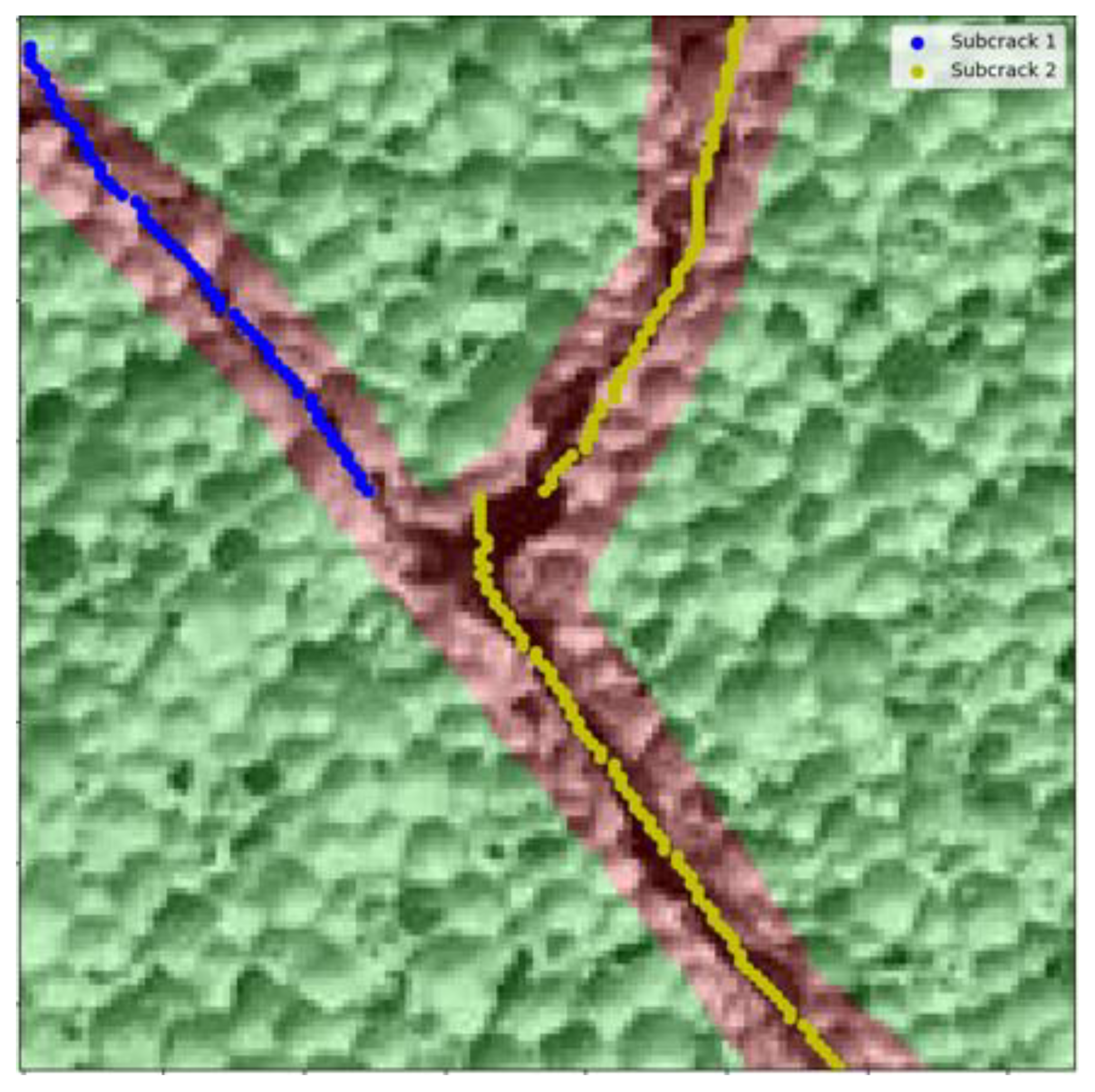

- The DBSCAN algorithm is applied to the resulting set of pixels to detect different crack branches. Figure 14 shows that the crack is divided into two branches. By repeatedly applying the DBSCAN algorithm, it is possible to fragment the crack, breaking it into two subcracks (yellow and blue), the lengths of which are calculated independently and then summed up.

- 5.

- For each branch, the length of the crack in pixels is calculated as the length of the broken line built on the corresponding pixels.
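
The orientation, midline and polyline steps can be sketched for a single crack as shown below. This is a simplified illustration, not the SCALE program itself: it assumes the mask already contains one connected, unbranched crack (so both DBSCAN steps are omitted), it treats the vertical case as the mirror image of the horizontal one, and the function name and test mask are hypothetical.

```python
import numpy as np

def crack_length_px(mask):
    """Midline length, in pixels, of one connected, unbranched crack."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return 0.0
    # Orientation: compare the extent along X (columns) and Y (rows)
    horizontal = (cols.max() - cols.min()) >= (rows.max() - rows.min())
    if not horizontal:
        # Assumption: a vertical crack is handled by swapping the axes
        rows, cols = cols, rows
    # 'cols' now runs along the crack and 'rows' across it
    midline = []
    for c in np.unique(cols):                      # one-pixel-wide slices, sorted
        across = rows[cols == c]
        midline.append((c, (across.min() + across.max()) / 2.0))
    pts = np.asarray(midline, dtype=float)
    steps = np.diff(pts, axis=0)                   # consecutive polyline segments
    return float(np.hypot(steps[:, 0], steps[:, 1]).sum())

# Hypothetical mask: a straight band 5 pixels thick spanning columns 10..89
mask = np.zeros((50, 100), dtype=np.uint8)
mask[20:25, 10:90] = 1
print(crack_length_px(mask))      # 79.0: distance between the first and last midpoints
print(crack_length_px(mask.T))    # same crack, vertical orientation
```

The result is in pixels; converting it to physical units would require a known image scale, which this sketch does not model.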

4. Conclusions

- (1) Four models were compared: U-Net with dynamic and static augmentation and LinkNet with dynamic and static augmentation. The LinkNet model with static augmentation performed best, achieving the highest F1 and IoU on the test set (precision = 0.73, recall = 0.80, F1 = 0.73 and IoU = 0.84).

- (2) The proprietary SCALE algorithm reduces manual labor time when determining the length of cracks and delamination.

- (3) The obtained crack segmentation results are comparable to those reported by other researchers and make it possible to solve the problem of detecting defects in building materials.

- Introduction of intelligent technologies for extracting visual features from images (including in real time) to reduce the time of specialists’ participation. The influence of specialists cannot be completely excluded, since the final decision making remains with the individual.

- Expanding the range of building materials, products and structures in which defects can be detected.

- Expanding areas of interest by analyzing new types of defects, such as chips, foreign inclusions, broken corners, etc. The development of computer vision in this direction will make it possible to scale the algorithm and make it universal at all stages of the life cycle of building materials, products and structures.

- Increasing the robustness of CV models to various shooting conditions. This will make it possible to use computer vision models to assess the quality of building materials in the field and ensure reliable control.

- Combining computer vision models based on CNN with other technologies, such as UAVs and robotics, will make it possible to use the developed algorithms when analyzing building structures in hard-to-reach places, increasing the level of safety of construction control.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Jayaram, M.A. Computer vision applications in construction material and structural health monitoring: A scoping review. Mater. Today Proc. In Press. 2023.

- Alsakka, F.; Assaf, S.; El-Chami, I.; Al-Hussein, M. Computer vision applications in offsite construction. Autom. Constr. 2023, 154, 104980.

- Reja, V.K.; Varghese, K.; Ha, Q.P. Computer vision-based construction progress monitoring. Autom. Constr. 2022, 138, 104245.

- Ekanayake, B.; Wong, J.K.W.; Fini, A.A.F.; Smith, P. Computer vision-based interior construction progress monitoring: A literature review and future research directions. Autom. Constr. 2021, 127, 103705.

- Beskopylny, A.N.; Shcherban’, E.M.; Stel’makh, S.A.; Mailyan, L.R.; Meskhi, B.; Razveeva, I.; Kozhakin, A.; Beskopylny, N.; El’shaeva, D.; Artamonov, S. Method for Concrete Structure Analysis by Microscopy of Hardened Cement Paste and Crack Segmentation Using a Convolutional Neural Network. J. Compos. Sci. 2023, 7, 327.

- Hadinata, P.N.; Simanta, D.; Eddy, L.; Nagai, K. Multiclass Segmentation of Concrete Surface Damages Using U-Net and DeepLabV3+. Appl. Sci. 2023, 13, 2398.

- Shah, S.A.R.; Azab, M.; Seif ElDin, H.M.; Barakat, O.; Anwar, M.K.; Bashir, Y. Predicting Compressive Strength of Blast Furnace Slag and Fly Ash Based Sustainable Concrete Using Machine Learning Techniques: An Application of Advanced Decision-Making Approaches. Buildings 2022, 12, 914.

- Barkhordari, M.S.; Armaghani, D.J.; Mohammed, A.S.; Ulrikh, D.V. Data-Driven Compressive Strength Prediction of Fly Ash Concrete Using Ensemble Learner Algorithms. Buildings 2022, 12, 132.

- Ahmad, A.; Chaiyasarn, K.; Farooq, F.; Ahmad, W.; Suparp, S.; Aslam, F. Compressive Strength Prediction via Gene Expression Programming (GEP) and Artificial Neural Network (ANN) for Concrete Containing RCA. Buildings 2021, 11, 324.

- Ashrafian, A.; Taheri Amiri, M.J.; Masoumi, P.; Asadi-Shiadeh, M.; Yaghoubi-Chenari, M.; Mosavi, A.; Nabipour, N. Classification-Based Regression Models for Prediction of the Mechanical Properties of Roller-Compacted Concrete Pavement. Appl. Sci. 2020, 10, 3707.

- Naderpour, H.; Rafiean, A.H.; Fakharian, P. Compressive strength prediction of environmentally friendly concrete using artificial neural networks. J. Build. Eng. 2018, 16, 213–219.

- Anton, C.; Curteanu, S.; Lisa, C.; Leon, F. Machine Learning Methods Applied for Modeling the Process of Obtaining Bricks Using Silicon-Based Materials. Materials 2021, 14, 7232.

- Abbas, Y.M.; Khan, M.I. Robust Machine Learning Framework for Modeling the Compressive Strength of SFRC: Database Compilation, Predictive Analysis, and Empirical Verification. Materials 2023, 16, 7178.

- Sun, C.; Wang, K.; Liu, Q.; Wang, P.; Pan, F. Machine-Learning-Based Comprehensive Properties Prediction and Mixture Design Optimization of Ultra-High-Performance Concrete. Sustainability 2023, 15, 15338.

- Cakiroglu, C. Explainable Data-Driven Ensemble Learning Models for the Mechanical Properties Prediction of Concrete Confined by Aramid Fiber-Reinforced Polymer Wraps Using Generative Adversarial Networks. Appl. Sci. 2023, 13, 11991.

- Liu, M.-Y.; Li, Z.; Zhang, H. Probabilistic Shear Strength Prediction for Deep Beams Based on Bayesian-Optimized Data-Driven Approach. Buildings 2023, 13, 2471.

- Perez, H.; Tah, J.H.M. Towards Automated Measurement of As-Built Components Using Computer Vision. Sensors 2023, 23, 7110.

- Choi, J.; Ha, M.; Lee, J.G. Transfer Learning-Based Object Detection Model for Steel Structure Bolt Fastening Inspection. Appl. Sci. 2023, 13, 9499.

- Lee, S.Y.; Huynh, T.C.; Park, J.H.; Kim, J.T. Bolt-Loosening Detection using Vision-Based Deep Learning Algorithm and Image Processing Method. J. Comput. Struct. Eng. Inst. Korea 2019, 1, 265–272. Available online: https://www.jcoseik.or.kr/articles/xml/NR0x/ (accessed on 28 November 2023).

- Park, J.H.; Kim, T.H.; Choo, S.Y. A Development on Deep Learning-based Detecting Technology of Rebar Placement for Improving Building Supervision Efficiency. J. Archit. Inst. Korea Plan. Des. 2020, 36, 93–103. Available online: https://koreascience.kr/article/JAKO202016151583816.page (accessed on 28 November 2023).

- Kim, H.; Bang, S.; Jeong, H.; Ham, Y.; Kim, H. Analyzing context and productivity of tunnel earthmoving processes using imaging and simulation. Autom. Constr. 2018, 92, 188–198.

- Rajan, A.J.; Jayakrishna, K.; Vignesh, T.; Chandradass, J.; Kannan, T.T.M. Development of computer vision for inspection of bolt using convolutional neural network. Mater. Today Proc. 2021, 45, 6931–6935.

- Sun, Y.; Gu, Z. Using computer vision to recognize construction material: A Trustworthy Dataset Perspective. Resour. Conserv. Recycl. 2022, 183, 106362.

- Fang, W.; Ding, L.; Love, P.E.D.; Luo, H.; Li, H.; Peña-Mora, F.; Zhong, B.; Zhou, C. Computer vision applications in construction safety assurance. Autom. Constr. 2020, 110, 103013.

- Li, P.; Wu, F.; Xue, S.; Guo, L. Study on the Interaction Behaviors Identification of Construction Workers Based on ST-GCN and YOLO. Sensors 2023, 23, 6318.

- Akinsemoyin, A.; Awolusi, I.; Chakraborty, D.; Al-Bayati, A.J.; Akanmu, A. Unmanned Aerial Systems and Deep Learning for Safety and Health Activity Monitoring on Construction Sites. Sensors 2023, 23, 6690.

- Jin, X.; Ahn, C.R.; Kim, J.; Park, M. Welding Spark Detection on Construction Sites Using Contour Detection with Automatic Parameter Tuning and Deep-Learning-Based Filters. Sensors 2023, 23, 6826.

- Khan, N.; Zaidi, S.F.A.; Yang, J.; Park, C.; Lee, D. Construction Work-Stage-Based Rule Compliance Monitoring Framework Using Computer Vision (CV) Technology. Buildings 2023, 13, 2093.

- Wu, H.; Zhong, B.; Li, H.; Love, P.; Pan, X.; Zhao, N. Combining computer vision with semantic reasoning for on-site safety management in construction. J. Build. Eng. 2021, 42, 103036.

- Lee, H.; Jeon, J.; Lee, D.; Park, C.; Kim, J.; Lee, D. Game engine-driven synthetic data generation for computer vision-based safety monitoring of construction workers. Autom. Constr. 2023, 155, 105060.

- Arshad, S.; Akinade, O.; Bello, S.; Bilal, M. Computer vision and IoT research landscape for health and safety management on construction sites. J. Build. Eng. 2023, 76, 107049.

- Kong, T.; Fang, W.; Love, P.E.D.; Luo, H.; Xu, S.; Li, H. Computer vision and long short-term memory: Learning to predict unsafe behaviour in construction. Adv. Eng. Inform. 2021, 50, 101400.

- Beckman, G.H.; Polyzois, D.; Cha, Y.J. Deep learning-based automatic volumetric damage quantification using depth camera. Autom. Constr. 2019, 99, 114–124.

- Dung, C.V.; Anh, L.D. Autonomous concrete crack detection using deep fully convolutional neural network. Autom. Constr. 2019, 99, 52–58.

- Rubio, J.J.; Kashiwa, T.; Laiteerapong, T.; Deng, W.; Nagai, K.; Escalera, S.; Nakayama, K.; Matsuo, Y.; Prendinger, H. Multi-class structural damage segmentation using fully convolutional networks. Comput. Ind. 2019, 112, 103121.

- Fu, R.; Cao, M.; Novák, D.; Qian, X.; Alkayem, N.F. Extended efficient convolutional neural network for concrete crack detection with illustrated merits. Autom. Constr. 2023, 156, 105098.

- Dorafshan, S.; Thomas, R.J.; Maguire, M. Comparison of deep convolutional neural networks and edge detectors for image-based crack detection in concrete. Constr. Build. Mater. 2018, 186, 1031–1045.

- Beskopylny, A.N.; Shcherban’, E.M.; Stel’makh, S.A.; Mailyan, L.R.; Meskhi, B.; Razveeva, I.; Kozhakin, A.; El’shaeva, D.; Beskopylny, N.; Onore, G. Discovery and Classification of Defects on Facing Brick Specimens Using a Convolutional Neural Network. Appl. Sci. 2023, 13, 5413.

- Beskopylny, A.N.; Shcherban’, E.M.; Stel’makh, S.A.; Mailyan, L.R.; Meskhi, B.; Razveeva, I.; Kozhakin, A.; El’shaeva, D.; Beskopylny, N.; Onore, G. Detecting Cracks in Aerated Concrete Samples Using a Convolutional Neural Network. Appl. Sci. 2023, 13, 1904.

- Iraniparast, M.; Ranjbar, S.; Rahai, M.; Nejad, F.M. Surface concrete cracks detection and segmentation using transfer learning and multi-resolution image processing. Structures 2023, 54, 386–398.

- Ding, W.; Yang, H.; Yu, K.; Shu, J. Crack detection and quantification for concrete structures using UAV and transformer. Autom. Constr. 2023, 152, 104929.

- Beskopylny, A.N.; Chepurnenko, A.; Meskhi, B.; Stel’makh, S.A.; Shcherban’, E.M.; Razveeva, I.; Kozhakin, A.; Zavolokin, K.; Krasnov, A.A. Detection and Dispersion Analysis of Water Globules in Oil Samples Using Artificial Intelligence Algorithms. Biomimetics 2023, 8, 309.

- Beskopylny, A.N.; Mailyan, L.R.; Stel’makh, S.A.; Shcherban’, E.M.; Razveeva, I.F.; Kozhakin, A.N.; Beskopylny, N.A.; El’shaeva, D.M.; Tyutina, A.D.; Onore, G.S. Photographic Images of the Structure of Aerated Concrete. Russian Federation Database 2022623622, 22 December 2022. Available online: https://www.fips.ru/registers-doc-view/fips_servlet?DB=DB&DocNumber=2022623622&TypeFile=html (accessed on 13 October 2023).

- Shcherban’, E.M.; Razveeva, I.F.; Kozhakin, A.N.; Artamonov, S.A. Segmented Images of Aerated Concrete Products. Russian Federation Database 2023623354, 5 October 2023. Available online: https://www.fips.ru/iiss/document.xhtml?faces-redirect=true&id=6e7ca08848aea4e51b2fa56243af377e (accessed on 13 October 2023).

- Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Razveeva, I.F.; Kozhakin, A.N.; Beskopylny, N.A.; Onore, G.S. Image Augmentation Program. Russian Federation Computer Program 2022685192, 21 December 2022. Available online: https://www.fips.ru/registers-doc-view/fips_servlet?DB=EVM&DocNumber=2022685192&TypeFile=html (accessed on 13 October 2023).

- Ma, X.; Li, H.; Wang, D.; Li, C.; Wei, Y. Simulation and Experimental Substantiation of the Thermal Properties of Non-Autoclaved Aerated Concrete with Recycled Concrete Powder. Materials 2022, 15, 8341.

- Legostaeva, N.V. Analysis of the causes of defects in aerated concrete during formation. Proc. Univ. Investig. Construct. 2018, 8, 91–97.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2018, arXiv:1708.02002.

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation. arXiv 2017, arXiv:1707.03718. Available online: https://arxiv.org/abs/1707.03718 (accessed on 25 October 2023).

- Shcherban’, E.M.; Razveeva, I.F.; Kozhakin, A.N.; Artamonov, S.A. Program for Calculating Crack Lengths in Building Materials and Structures (SCALE: Segmented Crack Analysis & Length Estimation). Russian Federation Computer Program 2023680498, 2 October 2023. Available online: https://www.fips.ru/iiss/document.xhtml?faces-redirect=true&id=0b5c601a617868cbdcea8b4e88cebf47 (accessed on 13 October 2023).

- Liu, T.; Zhang, L.; Zhou, G.; Cai, W.; Cai, C.; Li, L. BC-DUnet-based segmentation of fine cracks in bridges under a complex background. PLoS ONE 2022, 17, e0265258.

- Ko, P.; Prieto, S.A.; García de Soto, B. Developing a Free and Open-source Automated Building Exterior Crack Inspection Software for Construction and Facility Managers. arXiv 2022, arXiv:2206.09742.

- Wang, W.; Su, C. Convolutional Neural Network-Based Pavement Crack Segmentation Using Pyramid Attention Network. IEEE Access 2020, 8, 206548–206558.

- Anysz, H. Machine Learning and data mining tools applied for databases of low number of records. Adv. Eng. Res. 2021, 21, 346–363.

| Characteristic | Actual value |
|---|---|
| Density, kg/m³ | 614 |
| Compressive strength, MPa | 6.4 |
| Thermal conductivity coefficient, W/(m × K) | 0.172 |
| Specific heat capacity, kJ/(kg × K) | 0.841 |
| Modulus of elasticity, MPa | 2.43 × 10³ |

| Num | Parameter | Model 1.1 | Model 1.2 |
|---|---|---|---|
| 1 | Number of images in the training set | 30 | 350 |
| 2 | Number of images in the validation set | 15 | 100 |
| 3 | Number of images in the test set | 15 | 50 |
| 4 | Augmentation | Dynamic | Static |
| 5 | Batch size | 10 | 10 |
| 6 | Number of epochs | 100 | 100 |
| 7 | Number of iterations | 300 | 3500 |
| 8 | Learning rate | 1 × 10⁻⁴ | 1 × 10⁻⁴ |
| 9 | Solver | Adam | Adam |
| 10 | Loss function | Focal loss | Focal loss |

| Num | Parameter | Model 1.3 | Model 1.4 |
|---|---|---|---|
| 1 | Number of images in the training set | 30 | 350 |
| 2 | Number of images in the validation set | 15 | 100 |
| 3 | Number of images in the test set | 15 | 50 |
| 4 | Augmentation | Dynamic | Static |
| 5 | Batch size | 10 | 10 |
| 6 | Number of epochs | 100 | 100 |
| 7 | Number of iterations | 300 | 3500 |
| 8 | Learning rate | 1 × 10⁻⁴ | 1 × 10⁻⁴ |
| 9 | Solver | Adam | Adam |
| 10 | Loss function | Binary cross-entropy | Binary cross-entropy |
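
The iteration counts in the training-parameter tables are consistent with one optimizer step per batch, repeated every epoch. The short check below makes that arithmetic explicit; the function name is hypothetical and the one-step-per-batch reading is an assumption rather than something the tables state.

```python
import math

def total_iterations(train_images, batch_size, epochs):
    """Optimizer steps, assuming one step per batch and a full pass per epoch."""
    return math.ceil(train_images / batch_size) * epochs

# Values taken from the tables: batch size 10, 100 epochs
dynamic_runs = total_iterations(train_images=30, batch_size=10, epochs=100)
static_runs = total_iterations(train_images=350, batch_size=10, epochs=100)
print(dynamic_runs, static_runs)   # 300 3500
```

Under this reading, the models trained with static augmentation perform more than ten times as many weight updates as those trained with dynamic augmentation over the same number of epochs.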

| Model (average value for the test set) | Precision | Recall | F1 | IoU |
|---|---|---|---|---|
| Model 1.1 (U-Net + dynamic augmentation) | 0.86 | 0.56 | 0.65 | 0.77 |
| Model 1.2 (U-Net + static augmentation) | 0.60 | 0.61 | 0.64 | 0.83 |
| Model 1.3 (LinkNet + dynamic augmentation) | 0.60 | 0.86 | 0.66 | 0.63 |
| Model 1.4 (LinkNet + static augmentation) | 0.73 | 0.80 | 0.73 | 0.84 |
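
For reference, the four metrics in the table above can be computed per image from a predicted and a ground-truth binary mask, as in the following sketch. It uses the standard pixel-wise definitions for the crack class only; the source does not state how the reported values were averaged or whether IoU also covers the background class, so the function name, the toy masks and the crack-class-only choice are assumptions.

```python
import numpy as np

def segmentation_metrics(pred, target):
    """Pixel-wise precision, recall, F1 and IoU for the positive (crack) class."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.count_nonzero(pred & target)      # crack pixels found
    fp = np.count_nonzero(pred & ~target)     # background marked as crack
    fn = np.count_nonzero(~pred & target)     # crack pixels missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}

# Toy 4 x 4 example: the prediction is shifted one row below the ground truth
target = np.zeros((4, 4), dtype=np.uint8)
target[0:2, :] = 1
pred = np.zeros((4, 4), dtype=np.uint8)
pred[1:3, :] = 1

metrics = segmentation_metrics(pred, target)
print(metrics)   # precision 0.5, recall 0.5, F1 0.5, IoU 1/3
```

Averaging these per-image values over the test set is one way to obtain a single figure per model.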

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Razveeva, I.; Kozhakin, A.; Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Artamonov, S.; Pembek, A.; Dingrodiya, H. Analysis of Geometric Characteristics of Cracks and Delamination in Aerated Concrete Products Using Convolutional Neural Networks. Buildings 2023, 13, 3014. https://doi.org/10.3390/buildings13123014
