Fabricated Components Hoisting Activity Recognition and Collision Analysis Based on Inertial Measurement Unit IMU

Abstract

1. Introduction

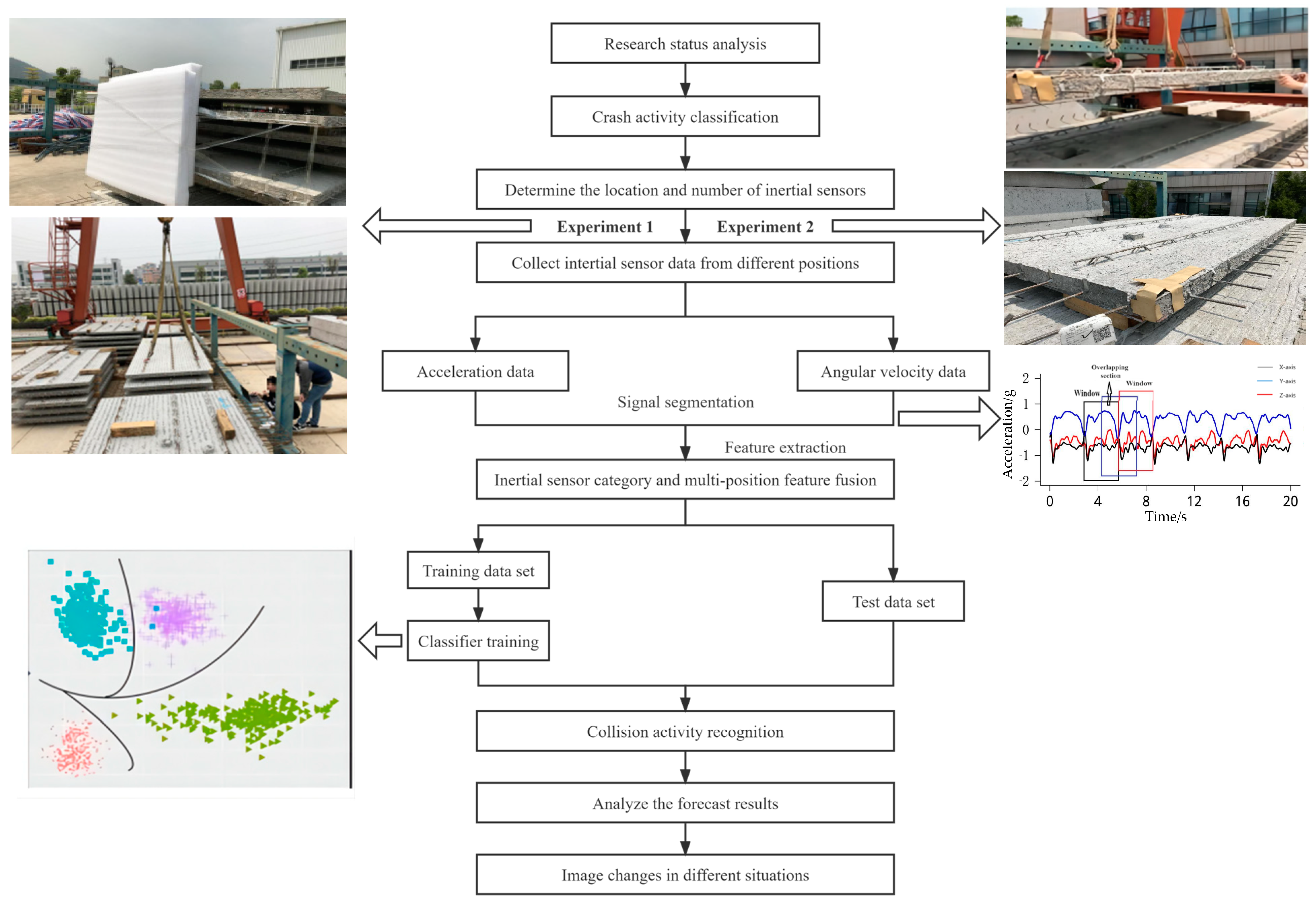

2. Recognition of Hoisting Activities of Fabricated Component Based on the IMU

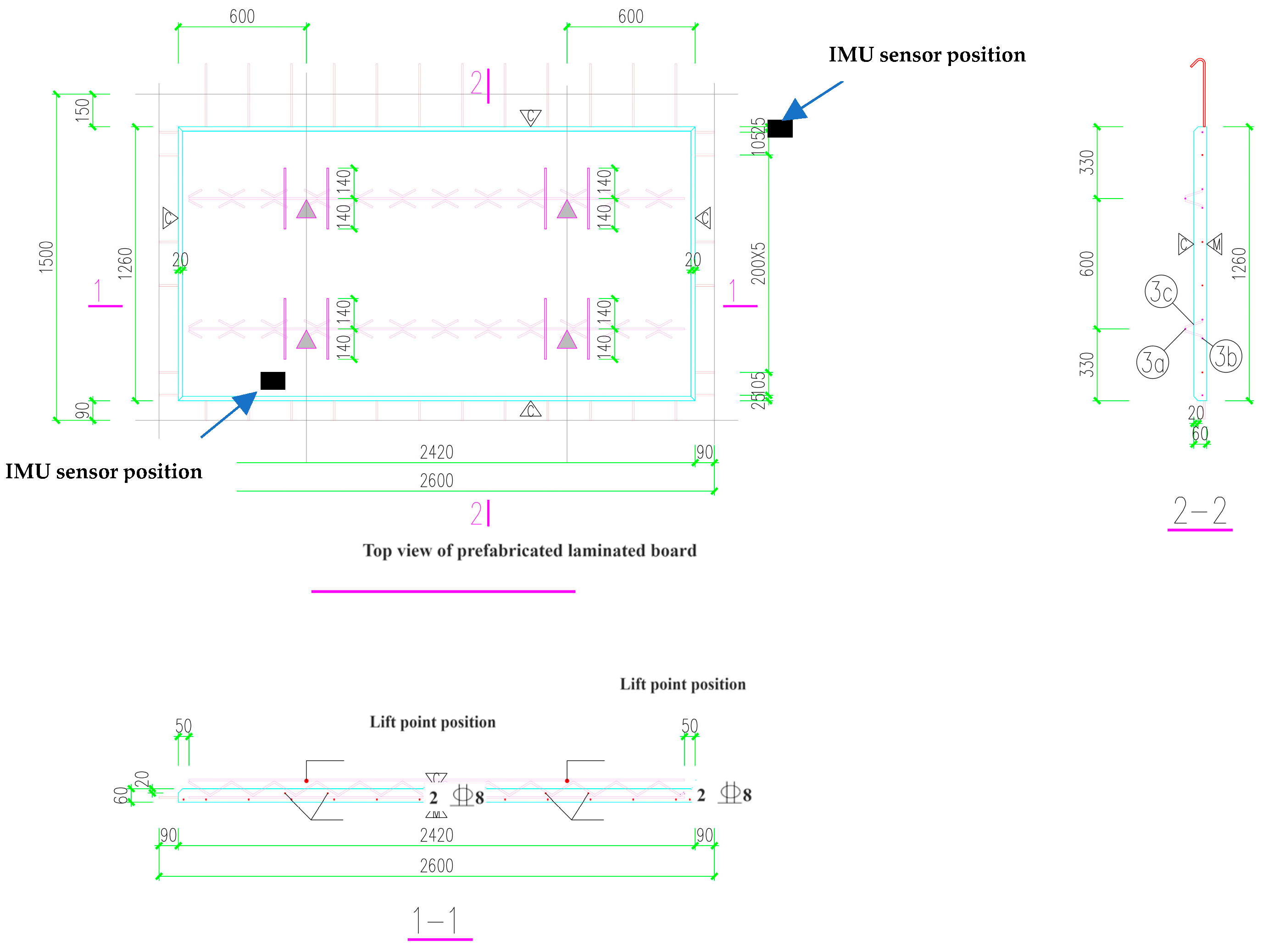

2.1. Data Collection

2.2. Data Processing

2.3. Hoisting Activity Recognition Model

2.3.1. Analysis of IMU Sensor Results at a Single Location

2.3.2. Analysis of Results of IMU Sensor Fusion of Two Positions

2.3.3. Analysis of IMU Sensor Results Fused in Three Positions

3. Collision Analysis of Assembly Component Hoisting

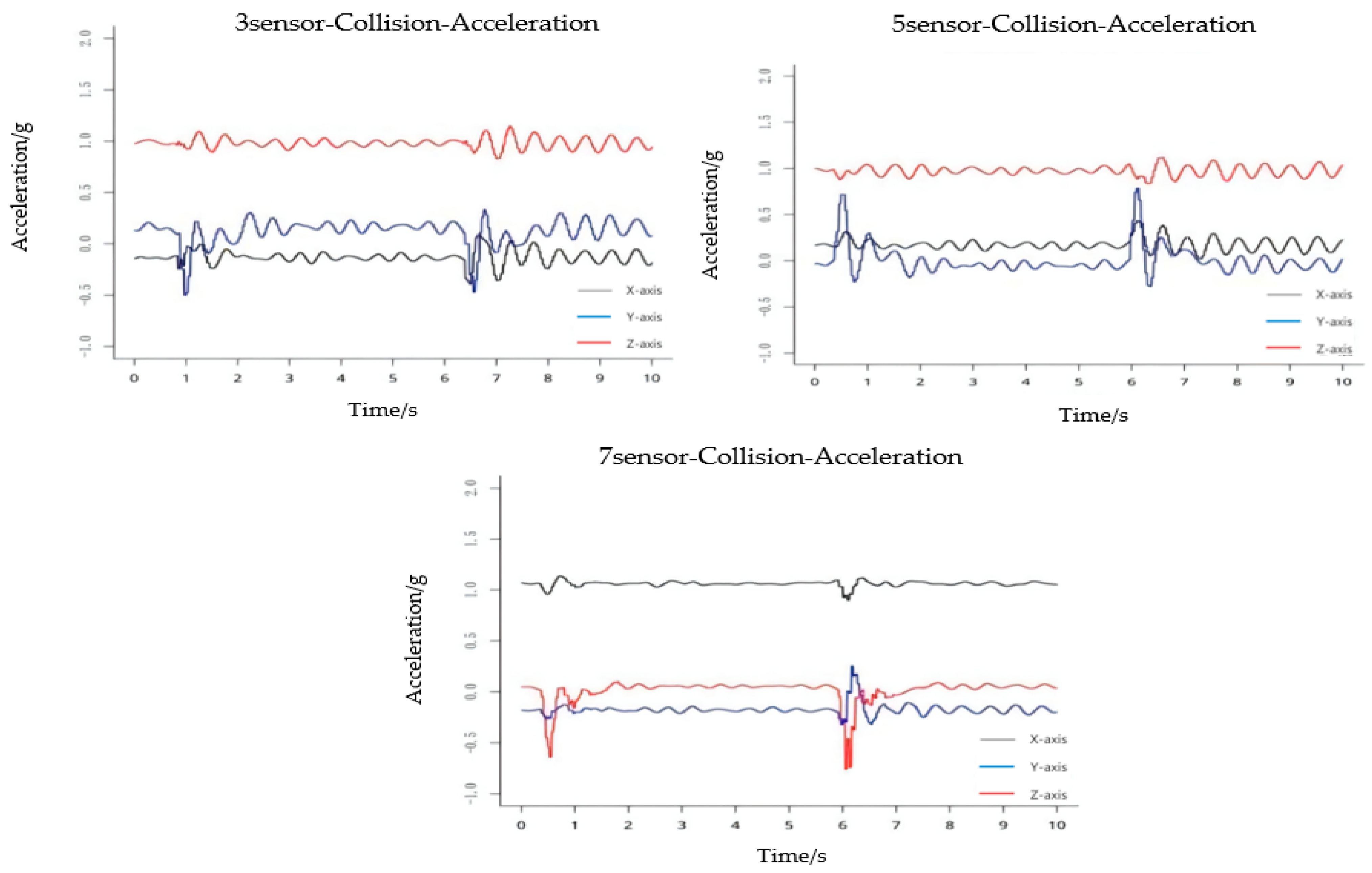

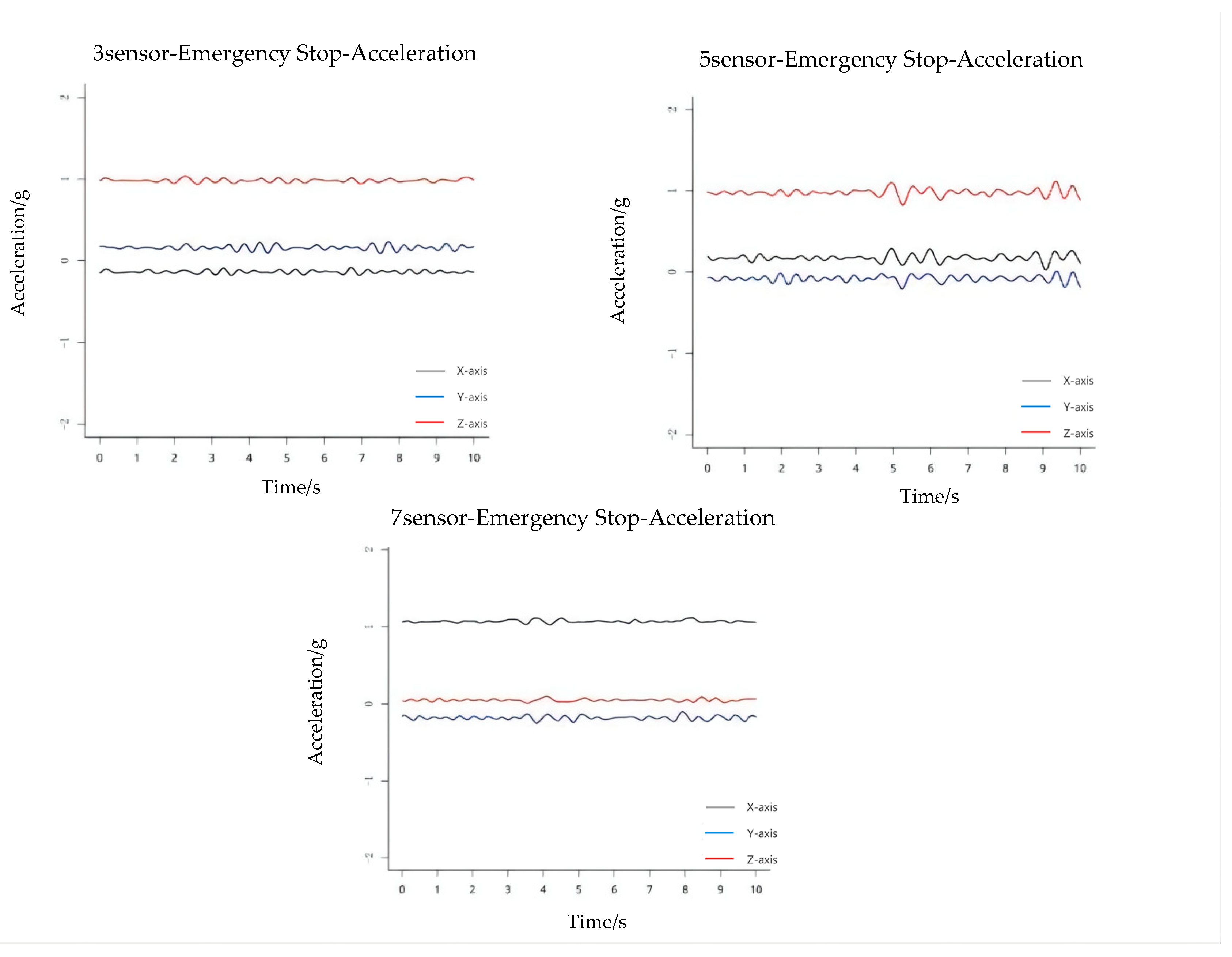

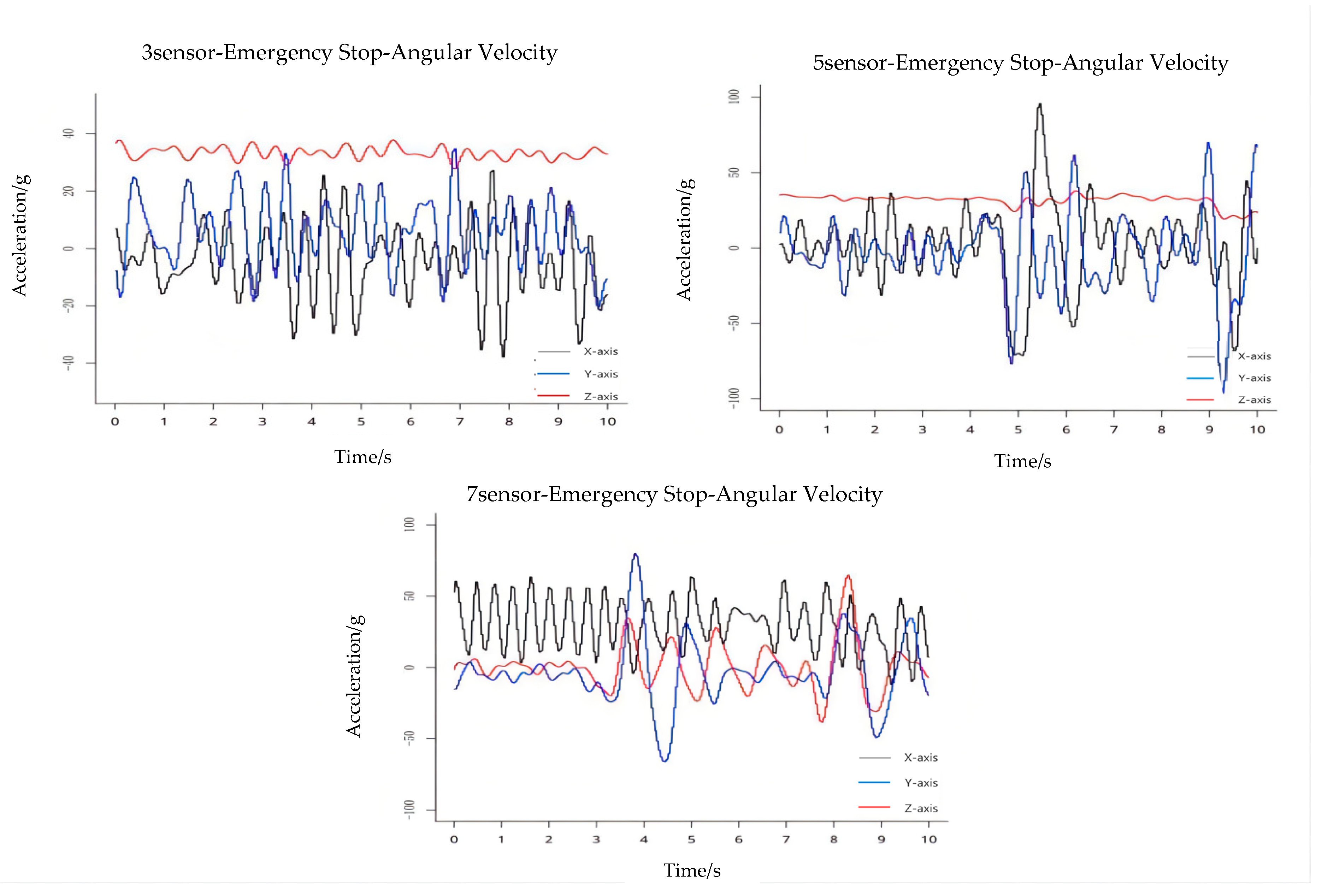

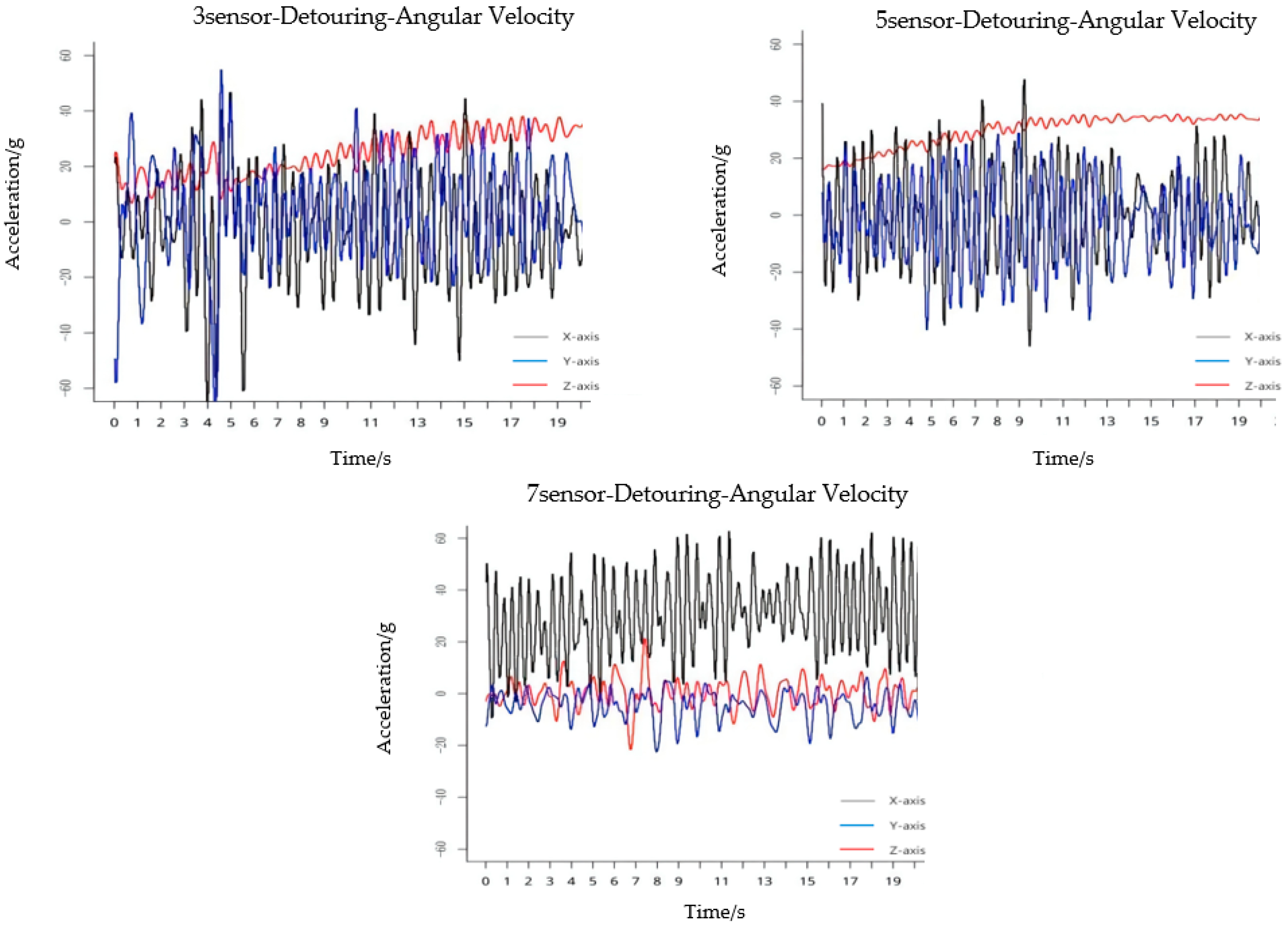

3.1. Collision Activity Classification

3.2. Image Analysis for Collision Classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| 3-A-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 3-A-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 60 | 0 | 40 | 1 | down | 60 | 0 | 42 | 1 |
| still | 0 | 23 | 1 | 0 | still | 0 | 23 | 1 | 0 |
| straight | 0 | 0 | 68 | 2 | straight | 0 | 0 | 68 | 2 |
| up | 0 | 0 | 3 | 61 | up | 0 | 0 | 2 | 61 |

| 3-G-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 3-G-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 60 | 0 | 37 | 1 | down | 48 | 0 | 13 | 0 |
| still | 0 | 23 | 0 | 0 | still | 0 | 23 | 0 | 0 |
| straight | 0 | 0 | 72 | 2 | straight | 12 | 0 | 99 | 21 |
| up | 0 | 0 | 3 | 60 | up | 0 | 0 | 2 | 43 |

| 3-A-G-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 3-A-G-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 59 | 0 | 21 | 4 | down | 60 | 10 | 16 | 1 |
| still | 1 | 23 | 1 | 0 | still | 0 | 12 | 2 | 0 |
| straight | 0 | 0 | 89 | 8 | straight | 12 | 1 | 94 | 0 |
| up | 0 | 0 | 1 | 52 | up | 0 | 0 | 0 | 63 |

| 6-A-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 6-A-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 60 | 0 | 41 | 1 | down | 59 | 0 | 39 | 1 |
| still | 0 | 23 | 0 | 0 | still | 1 | 23 | 1 | 0 |
| straight | 0 | 0 | 70 | 2 | straight | 0 | 0 | 69 | 0 |
| up | 0 | 0 | 1 | 61 | up | 0 | 0 | 3 | 63 |

| 6-G-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 6-G-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 60 | 0 | 39 | 1 | down | 60 | 0 | 41 | 1 |
| still | 0 | 23 | 1 | 0 | still | 0 | 23 | 0 | 0 |
| straight | 0 | 0 | 69 | 0 | straight | 0 | 0 | 70 | 2 |
| up | 0 | 0 | 3 | 63 | up | 0 | 0 | 1 | 61 |

| 6-A-G-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 6-A-G-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 60 | 3 | 9 | 9 | down | 60 | 0 | 6 | 16 |
| still | 0 | 20 | 4 | 0 | still | 0 | 19 | 0 | 0 |
| straight | 0 | 1 | 99 | 0 | straight | 0 | 4 | 106 | 0 |
| up | 0 | 0 | 1 | 55 | up | 0 | 0 | 0 | 48 |

| 8-A-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 8-A-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 60 | 7 | 12 | 0 | down | 60 | 8 | 13 | 0 |
| still | 0 | 14 | 1 | 0 | still | 0 | 14 | 2 | 0 |
| straight | 0 | 2 | 99 | 0 | straight | 0 | 1 | 97 | 0 |
| up | 0 | 0 | 0 | 64 | up | 0 | 0 | 0 | 64 |

| 8-G-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 8-G-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 59 | 0 | 39 | 1 | down | 59 | 0 | 37 | 1 |
| still | 1 | 23 | 1 | 0 | still | 1 | 23 | 1 | 0 |
| straight | 0 | 0 | 69 | 0 | straight | 0 | 0 | 72 | 0 |
| up | 0 | 0 | 3 | 63 | up | 0 | 0 | 0 | 63 |

| 8-A-G-KNN: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up | 8-A-G-RF: Prediction | Reference: down | Reference: still | Reference: straight | Reference: up |
|---|---|---|---|---|---|---|---|---|---|
| down | 60 | 3 | 9 | 9 | down | 60 | 10 | 16 | 1 |
| still | 0 | 20 | 4 | 0 | still | 0 | 12 | 1 | 0 |
| straight | 0 | 0 | 99 | 0 | straight | 0 | 0 | 95 | 0 |
| up | 0 | 0 | 1 | 55 | up | 0 | 0 | 0 | 63 |
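The confusion matrices above can be reduced to summary figures in a few lines. The following is a minimal sketch, assuming that rows hold predictions and columns hold reference labels, and that overall accuracy is the diagonal count divided by the total count. The names `overall_accuracy` and `recall_per_class` are illustrative helpers, not taken from the paper.

```python
LABELS = ["down", "still", "straight", "up"]

# 3-A-KNN counts: rows are predictions, columns are reference labels.
CM_3_A_KNN = [
    [60, 0, 40, 1],   # predicted down
    [0, 23, 1, 0],    # predicted still
    [0, 0, 68, 2],    # predicted straight
    [0, 0, 3, 61],    # predicted up
]


def overall_accuracy(cm):
    """Share of samples on the diagonal (prediction equals reference)."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def recall_per_class(cm, labels):
    """Diagonal count divided by the column (reference) total of each class."""
    recalls = {}
    for j, label in enumerate(labels):
        reference_total = sum(row[j] for row in cm)
        recalls[label] = cm[j][j] / reference_total
    return recalls


accuracy = overall_accuracy(CM_3_A_KNN)
recalls = recall_per_class(CM_3_A_KNN, LABELS)
print(f"accuracy = {accuracy:.2%}")
for label, value in recalls.items():
    print(f"recall[{label}] = {value:.2%}")
```

For 3-A-KNN this counts 212 correct predictions out of 259 samples, or 81.85%, which agrees with the A/KNN accuracy listed for sensor 3. The per-class view shows where the errors sit: 40 of the 112 reference "straight" samples are predicted as "down".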

| Sensor No. | Classification Algorithm | A | G | A + G | Improvement from Combining A + G |
|---|---|---|---|---|---|
| 3 | KNN | 81.85% | 83.01% | 86.1% | 3.72% |
| 3 | RF | 81.85% | 82.24% | 88.42% | 7.51% |
| 6 | KNN | 82.63% | 83.01% | 90.35% | 8.84% |
| 6 | RF | 82.63% | 82.63% | 89.96% | 8.87% |
| 8 | KNN | 91.51% | 82.63% | 90.35% | −1.27% |
| 8 | RF | 90.73% | 83.78% | 88.8% | −2.13% |
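The last column of the table above is consistent with the relative gain of the A + G accuracy over the better of the two single-source accuracies. That formula is inferred from the numbers and is not stated in the source. A minimal sketch, with `combination_gain` as a hypothetical helper name:

```python
# (sensor, algorithm) -> accuracies in percent for A, G and A + G,
# copied from the table above.
ACCURACY = {
    (3, "KNN"): (81.85, 83.01, 86.10),
    (3, "RF"): (81.85, 82.24, 88.42),
    (6, "KNN"): (82.63, 83.01, 90.35),
    (6, "RF"): (82.63, 82.63, 89.96),
    (8, "KNN"): (91.51, 82.63, 90.35),
    (8, "RF"): (90.73, 83.78, 88.80),
}


def combination_gain(acc_a, acc_g, acc_ag):
    """Relative change (in percent) of A + G versus the best single source.

    Assumed formula: (A+G - max(A, G)) / max(A, G) * 100.
    """
    best_single = max(acc_a, acc_g)
    return (acc_ag - best_single) / best_single * 100


gains = {key: round(combination_gain(*values), 2) for key, values in ACCURACY.items()}
for (sensor, algorithm), gain in gains.items():
    print(f"sensor {sensor}, {algorithm}: {gain:+.2f}%")
```

Under this assumption all six tabulated rates are reproduced, including the two negative values for sensor 8, where acceleration data alone already outperforms the combination.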

| Location Combination | Classification Algorithm | A/A | G/G | A/G | G/A | A + G/A | A + G/G | A/A + G | G/A + G |
|---|---|---|---|---|---|---|---|---|---|
| 3 + 6 | KNN | 80.38 | 83.74 | 81.77 | 85.01 | 85.21 | 88.42 | 78.01 | 88.35 |
| 3 + 6 | RF | 81.06 | 85.96 | 79.12 | 84.74 | 87.33 | 88.01 | 77.68 | 91.51 |
| 3 + 8 | KNN | 85.32 | 79.17 | 80.03 | 85.97 | 87.07 | 84.02 | 83.92 | 78.96 |
| 3 + 8 | RF | 83.07 | 76.53 | 78.95 | 88.8 | 88.03 | 85.07 | 80.00 | 76.01 |
| 6 + 8 | KNN | 86.07 | 80.38 | 83.14 | 87.90 | 89.88 | 84.07 | 90.17 | 88.8 |
| 6 + 8 | RF | 87.66 | 82.96 | 86.38 | 84.77 | 93.05 | 83.88 | 91.51 | 87.13 |

| Location Combination | Data Combination | Down | Still | Straight | Up | Average Value |
|---|---|---|---|---|---|---|
| 3 + 6 | A + G/G | 81.08 | 66.67 | 90.82 | 99.21 | 84.45 |
| 3 + 6 | G/A + G | 86.33 | 73.68 | 92.96 | 100 | 88.24 |
| 3 + 8 | G/A | 81.63 | 64.86 | 92.31 | 98.41 | 84.30 |
| 3 + 8 | A + G/A | 80 | 66.67 | 90.73 | 99.21 | 84.15 |
| 6 + 8 | A + G/A | 86.33 | 73.68 | 92.95 | 100 | 88.24 |
| 6 + 8 | A/A + G | 86.33 | 85.68 | 94.27 | 96.77 | 90.76 |
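The Average Value column appears to be the unweighted mean of the four per-class values. This is an inference from the numbers, since the source does not define it. A minimal sketch that recomputes it with `decimal` so that half-way cases round as they would on paper; `class_average` is a hypothetical helper:

```python
from decimal import Decimal, ROUND_HALF_UP

# Per-class values (Down, Still, Straight, Up) copied from the table above.
ROWS = {
    ("3 + 6", "A + G/G"): ("81.08", "66.67", "90.82", "99.21"),
    ("3 + 6", "G/A + G"): ("86.33", "73.68", "92.96", "100"),
    ("6 + 8", "A + G/A"): ("86.33", "73.68", "92.95", "100"),
    ("6 + 8", "A/A + G"): ("86.33", "85.68", "94.27", "96.77"),
}


def class_average(values):
    """Unweighted mean of the per-class values, rounded half-up to 2 places."""
    numbers = [Decimal(v) for v in values]
    mean = sum(numbers) / len(numbers)
    return mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


averages = {key: class_average(values) for key, values in ROWS.items()}
for (location, data), average in averages.items():
    print(f"{location}, {data}: {average}")
```

Because every class carries the same weight, a weak class such as Still pulls the average down as much as a strong class such as Up lifts it, regardless of how many samples each class has.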

| No. 3 Sensors | No. 6 Sensors | No. 8 Sensors | KNN | RF | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| A | G | A + G | A | G | A + G | A | G | A + G | Accuracy | Accuracy |

| √ | √ | √ | 85.12 | 83.56 | ||||||

| √ | √ | √ | 87.54 | 88.55 | ||||||

| √ | √ | √ | 89.8 | 90.21 | ||||||

| √ | √ | √ | 84.13 | 83.52 | ||||||

| √ | √ | √ | 80.65 | 79.76 | ||||||

| √ | √ | √ | 87.88 | 85.96 | ||||||

| √ | √ | √ | 88.43 | 89.76 | ||||||

| √ | √ | √ | 90.12 | 90.17 | ||||||

| √ | √ | √ | 91.34 | 90.87 | ||||||

| √ | √ | √ | 84.8 | 85.76 | ||||||

| √ | √ | √ | 87.43 | 87.36 | ||||||

| √ | √ | √ | 88.8 | 89.03 | ||||||

| √ | √ | √ | 80.82 | 79.78 | ||||||

| √ | √ | √ | 81.24 | 80.33 | ||||||

| √ | √ | √ | 87.12 | 87.35 | ||||||

| √ | √ | √ | 90.12 | 89.78 | ||||||

| √ | √ | √ | 91.24 | 90.33 | ||||||

| √ | √ | √ | 89.75 | 88.43 | ||||||

| √ | √ | √ | 92.01 | 93.16 | ||||||

| √ | √ | √ | 86.32 | 84.23 | ||||||

| √ | √ | √ | 87.22 | 85.53 | ||||||

| √ | √ | √ | 84.44 | 83.52 | ||||||

| √ | √ | √ | 84.12 | 85.67 | ||||||

| √ | √ | √ | 86.23 | 85.27 | ||||||

| √ | √ | √ | 93.03 | 92.31 | ||||||

| √ | √ | √ | 88.23 | 89.25 | ||||||

| √ | √ | √ | 89.65 | 88.47 | ||||||
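The table above has 27 data rows, which matches the 3 × 3 × 3 ways of choosing one data source (A, G, or A + G) for each of the three sensors. The sketch below enumerates those combinations. The enumeration order is an assumption and need not match the row order of the table, and `fusion_combinations` is a hypothetical helper.

```python
from itertools import product

SOURCES = ("A", "G", "A + G")          # data source chosen per sensor
SENSORS = ("No. 3", "No. 6", "No. 8")  # sensor positions named in the table


def fusion_combinations():
    """One dict per way of assigning a data source to each sensor."""
    return [
        dict(zip(SENSORS, choice))
        for choice in product(SOURCES, repeat=len(SENSORS))
    ]


combos = fusion_combinations()
print(len(combos))
for combo in combos[:3]:
    print("/".join(combo[sensor] for sensor in SENSORS))
```

Each dictionary could serve as the key for one KNN and one RF accuracy once the tick positions of the corresponding table row are known.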

| Location Combination | Data Combination | Down | Still | Straight | Up | Average Value |
|---|---|---|---|---|---|---|
| 3 + 6 + 8 | A + G/A + G/A | 84.34 | 79.68 | 90.82 | 99.21 | 88.51 |
| 3 + 6 + 8 | A + G/A/A | 88.33 | 70.23 | 92.96 | 100 | 87.88 |

| Activity Classification | Activity Description |
|---|---|
| Direct collision | The component is hoisted to the obstacle’s height and driven in a straight line at a constant speed; it strikes the obstacle at that speed, and the collision data are collected. |
| Stop suddenly | While travelling, the movement stops abruptly once the distance between the obstacle and the component reaches the safety boundary; the component then sways until it comes to rest. |
| Detour | Within the safe part of the work area, enough space is left to go around the obstacle and avoid a collision. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, C.; Yu, L.; Kassem, M.A.; Yap, J.B.H.; Wang, M.; Ali, K.N. Fabricated Components Hoisting Activity Recognition and Collision Analysis Based on Inertial Measurement Unit IMU. Buildings 2022, 12, 923. https://doi.org/10.3390/buildings12070923
