1. Introduction

The impacts of wall cracks on a building’s reliability and aesthetics are two of the most discussed topics concerning building structures. A crack of any severity level signals a potentially alarming situation that can worsen if left untreated. For example, a fracture in a brick wall may allow water to permeate its surface and potentially the entire structure above it. Similarly, cracked brickwork and cement plaster can lead to various problems, such as failures in building function [1]. When the masonry becomes saturated, it is more exposed to cold weather: the absorbed water freezes and expands, which can eventually destroy the entire structure. These examples show that it is imperative to diagnose cracks as early as possible to avoid further damage.

A scanned wall surface may show cracks and other discontinuities indicative of a poor technical condition. Vertical or diagonal fissures in walls usually indicate foundation cracking. Diagonal cracks are typically found near the corners of door and window frames, whereas vertical and horizontal cracks appear at wall–column joints and in the middle of masonry walls. Fine cracks up to 1 mm can easily be concealed by surface repair and finishing works, but larger cracks require additional measures to protect against environmental corrosion [2]. Examining a crack’s orientation helps to classify its severity and to determine the best method or procedure for repairing the damage [3]. Scanning for wall cracks can be one of the initial steps in a building assessment.
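The two rules of thumb in the paragraph above (the 1 mm width limit and the link between vertical or diagonal orientation and foundation cracking) can be written as a small screening function. This is a minimal sketch, assuming crack width and orientation have already been measured; `CrackObservation` and `preliminary_screening` are hypothetical names, and the function encodes only the heuristics stated in the text, not a civil engineering standard.

```python
from dataclasses import dataclass
from typing import Tuple

# Width limit taken from the text: fine cracks up to 1 mm can be concealed
# by surface repair and finishing works.
FINE_CRACK_LIMIT_MM = 1.0
ORIENTATIONS = {"vertical", "horizontal", "diagonal"}


@dataclass
class CrackObservation:
    """Hypothetical record of one measured wall crack."""
    width_mm: float
    orientation: str  # one of ORIENTATIONS


def preliminary_screening(obs: CrackObservation) -> Tuple[str, bool]:
    """Return (suggested repair type, whether foundation cracking is suspected)."""
    if obs.width_mm < 0:
        raise ValueError("crack width must be non-negative")
    if obs.orientation not in ORIENTATIONS:
        raise ValueError(f"unknown orientation: {obs.orientation!r}")
    if obs.width_mm <= FINE_CRACK_LIMIT_MM:
        repair = "surface repair and finishing"
    else:
        repair = "additional protective measures"
    # Heuristic from the text: vertical or diagonal fissures usually
    # indicate foundation cracking.
    foundation_suspected = obs.orientation in {"vertical", "diagonal"}
    return repair, foundation_suspected


print(preliminary_screening(CrackObservation(0.5, "horizontal")))
# -> ('surface repair and finishing', False)
print(preliminary_screening(CrackObservation(2.0, "diagonal")))
# -> ('additional protective measures', True)
```

In practice, the screening output would only flag where a structural engineer’s attention is needed first.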

Analysing the physical stress acting on materials and its effects is one of the main tasks of a structural engineering assessment. By also studying the cracking pattern and the rate of crack growth (or closure), a structural engineer can pinpoint the source of a problem and suggest possible solutions. This assessment process can be lengthy and laborious because it involves multiple personnel, especially for larger buildings. Poorly maintained buildings suffer more damage and require more expensive repairs if left unattended [4]. Non-destructive evaluation (NDE) techniques are commonly employed to assess the safety and performance of concrete structures in their current state [5]. Moreover, recent studies have shown that intelligent systems can aid the building assessment process [6] and can be considered an NDE method.

Artificial intelligence (AI) has attracted considerable attention from researchers in various fields. AI technology attempts to simulate human cognitive capabilities to learn and absorb information and to use it to solve problems. The development of AI has given rise to diverse fields, such as computer vision (CV), natural language processing (NLP), expert systems (ES), and speech recognition (SR).

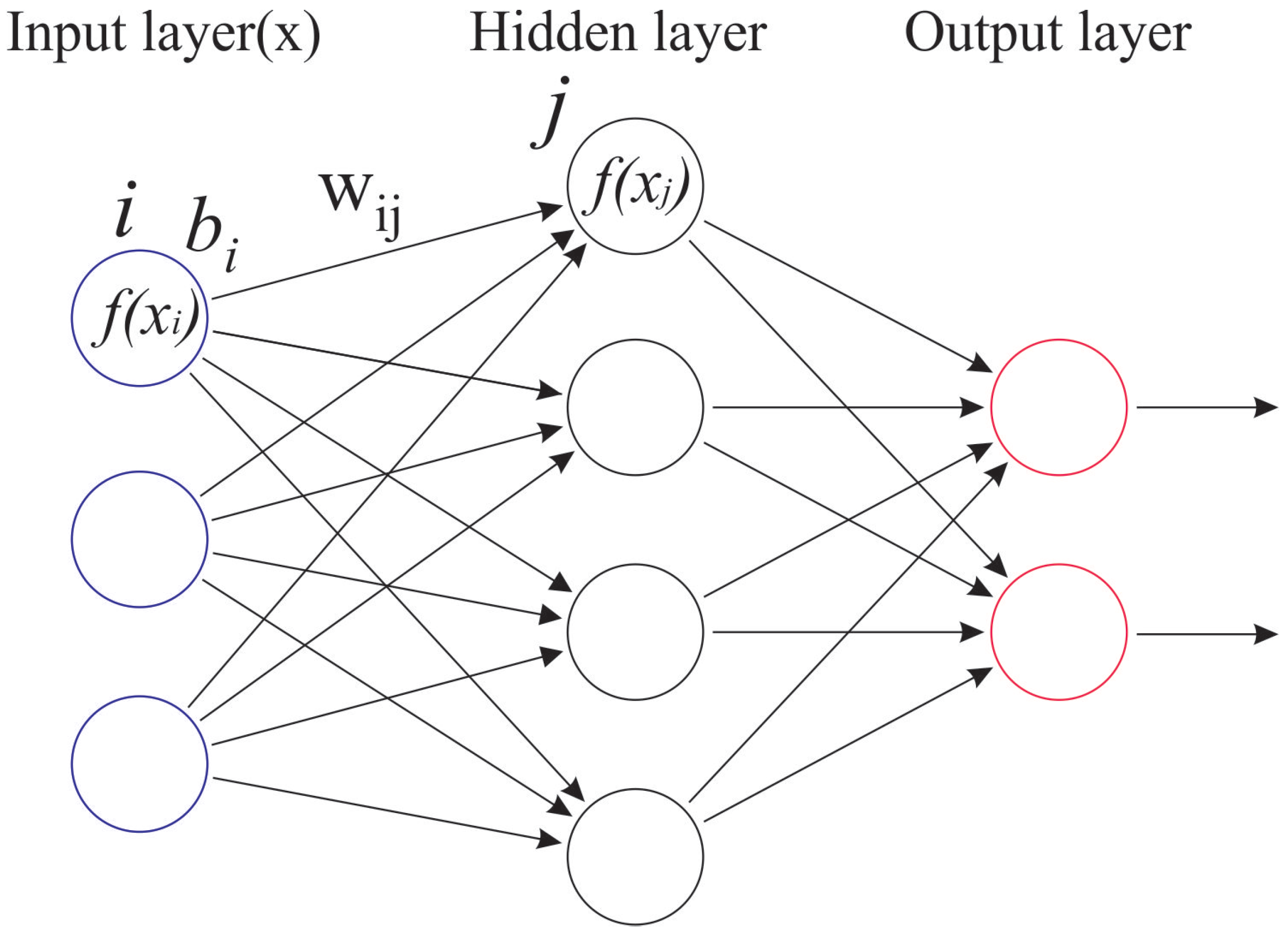

Various methods have been developed to achieve AI’s goal of replicating human abilities in a machine. The rapid development of AI has created several subsets of AI, which can generally be illustrated as in Figure 1. Overall, the main direction of AI development is to create methods that allow machines to learn from available data; this gave rise to the term machine learning (ML). ML identifies patterns in a dataset and uses this pattern information as the basic knowledge for solving problems.

A system combining deep learning (DL) and computer vision can replicate various expert actions in complex building quality evaluation, as envisioned in [7]. A combination of a lightweight DL model and mobile edge computing resources can serve as a remote building inspection service. A similar approach can also be applied to inspecting passageway quality, as envisaged in [8]. In another study, a proposed model was used to model a building’s enclosure based on its thermal properties; the enclosure could be modelled more accurately than with the Fourier method, and, as a result, the actual energy consumption of the building could be estimated more accurately [9].

Because conventional ML relies on domain expertise to identify the key features in a dataset, it cannot easily be applied to different cases, as its performance still depends on human intervention. Deep learning was later developed to address this problem and to improve how machine learning learns from data. Unlike conventional ML, DL can automatically extract the key features in a dataset and improve the pattern recognition process [10]. The key aspect of the learning process in DL methods is that they learn from the raw representation of the data up to increasingly abstract representations by repeating a similar procedure at each level. A DL architecture consists of multiple stacked simple modules, each mapping the feature representation of the data at its level; hence, the extraction of data representations can be simplified and automated [11]. A stacking-based machine learning method capable of detecting cracks is presented in [12]. That work assessed several of the most popular object detection algorithms and then proposed a new stacking method to detect cracks near levees. In comparison, the best-performing detection method reached 90.90%, whereas the stacking method performed comparably well at around 85%. However, the DL processes consume much more computational power than the stacking method; therefore, without sacrificing too much accuracy, the stacking method is better suited to smaller devices [12]. On the other hand, semantic segmentation-based crack detection methods exhibit high accuracy [13]. However, fully supervised segmentation requires the manual annotation of enormous volumes of data, which is time-consuming. To address this issue, Wang (2021) presented a semi-supervised semantic segmentation network [8].
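The stacking idea discussed for [12] combines the outputs of several base models through a second-level (meta) model. The sketch below illustrates generic stacking only, not the method of [12]: it uses a hold-out (blending) variant in which the base models are fitted on one part of the training data and the meta-model is fitted on their outputs for the other part. The two input features, the synthetic data, and all function names are hypothetical, and plain logistic regression stands in for both the base and meta learners so that only the Python standard library is needed.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=1.0, epochs=500):
    """Fit logistic regression by batch gradient descent; return a probability function."""
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(d):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return lambda x: sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def make_synthetic_data(n, seed):
    """Hypothetical two-feature image patches: label 1 = crack, 0 = no crack."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        y = rng.randint(0, 1)
        xs.append([y + rng.gauss(0.0, 0.3), y + rng.gauss(0.0, 0.3)])
        ys.append(y)
    return xs, ys


def fit_stacking(xs, ys, holdout_fraction=0.5):
    """Blending-style stacking: base models on one split, meta-model on the other."""
    split = int(len(xs) * (1.0 - holdout_fraction))
    base_xs, base_ys = xs[:split], ys[:split]
    meta_xs, meta_ys = xs[split:], ys[split:]
    # Each base learner sees a single feature.
    base_models = [
        fit_logistic([[x[j]] for x in base_xs], base_ys) for j in range(len(xs[0]))
    ]

    def base_outputs(x):
        return [model([x[j]]) for j, model in enumerate(base_models)]

    # The meta-learner is trained on base-model outputs for held-out samples.
    meta_model = fit_logistic([base_outputs(x) for x in meta_xs], meta_ys)
    return lambda x: meta_model(base_outputs(x))


train_x, train_y = make_synthetic_data(400, seed=0)
test_x, test_y = make_synthetic_data(200, seed=1)
stacked = fit_stacking(train_x, train_y)
accuracy = sum((stacked(x) >= 0.5) == bool(y) for x, y in zip(test_x, test_y)) / len(test_y)
print(f"test accuracy on synthetic data: {accuracy:.3f}")
```

The hold-out split keeps the meta-model from learning on base-model outputs for samples the base models were fitted on; k-fold out-of-fold predictions are a common alternative.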

DL applications for building crack detection have been proposed and evaluated in numerous works owing to DL’s ability to generalise patterns from a dataset. The key enabling component is the convolutional neural network (CNN), which can learn the intrinsic features of cracks from surface images. These capabilities make it possible to distinguish cracks from the normal structure, as reported in [14]. That study benchmarked the performance of existing DL architectures, e.g., MobileNetV2, ResNet101, VGG16, and InceptionV2.

Similarly, the work in [15] uses an artificial neural network to evaluate, from images, whether a concrete segment contains a crack. To perform this task, a 10-layer CNN-based classifier was developed and trained on a dataset of 40,000 concrete crack images. The work also considered various characteristics of the concrete surface, such as illumination and surface finish (i.e., paint, plastering, and exposed), in developing the classifier. According to the study’s observations, the classifier fails to recognise cracks in images that have (1) a crack located in a corner of the input; (2) a low resolution; or (3) a crack that is too small.

Wall crack detection using a less complex CNN with informative reporting was proposed in [6]. The work used an eight-layer CNN classifier that returns the crack segments in an image. To achieve this, the trained classifier is applied as a sliding window over higher-resolution images, and any parts of the image that contain cracks are assembled into a new image. A slightly different crack detection approach was taken in [8], where image segments of a pavement crack were returned to the user. Unlike the previously mentioned studies, that work applied an existing DL framework to build a classifier for object detection, and the authors also investigated the effect of different network model sizes on the crack detection application. Image recognition methods based on deep learning models have shown promising results in a variety of applications, including pavement crack detection [16]. Several studies have also highlighted the application of machine learning to this task; for instance, Hafiz et al. showed that machine learning has surpassed image processing in popularity for crack detection applications [17].
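The sliding-window procedure described for [6] can be sketched as follows. This is a minimal illustration, assuming a greyscale image stored as a list of rows with values in [0, 1] and a patch-level classifier passed in as a function; `sliding_window_crack_image` and the threshold-based `toy_patch_classifier` are hypothetical stand-ins, not the eight-layer CNN of [6].

```python
from typing import Callable, List, Tuple

Image = List[List[float]]


def sliding_window_crack_image(
    image: Image,
    window: int,
    stride: int,
    is_crack: Callable[[Image], bool],
) -> Tuple[List[Tuple[int, int]], Image]:
    """Classify square patches and copy crack patches into a new blank image.

    Returns the (row, column) of the top-left corner of every patch flagged as
    a crack, plus the assembled image (zeros wherever no crack was found).
    """
    height, width = len(image), len(image[0])
    assembled = [[0.0] * width for _ in range(height)]
    crack_patches = []
    # Windows that would extend past the border are skipped in this sketch.
    for top in range(0, height - window + 1, stride):
        for left in range(0, width - window + 1, stride):
            patch = [row[left:left + window] for row in image[top:top + window]]
            if is_crack(patch):
                crack_patches.append((top, left))
                for r in range(top, top + window):
                    for c in range(left, left + window):
                        assembled[r][c] = image[r][c]
    return crack_patches, assembled


def toy_patch_classifier(patch: Image, dark_threshold: float = 0.2) -> bool:
    """Hypothetical stand-in for a trained CNN: flag patches with a dark pixel."""
    return any(value < dark_threshold for row in patch for value in row)


# 6x6 bright image with one dark "crack" pixel at row 1, column 1.
img = [[1.0] * 6 for _ in range(6)]
img[1][1] = 0.1
hits, crack_image = sliding_window_crack_image(img, window=3, stride=3, is_crack=toy_patch_classifier)
print(hits)  # [(0, 0)]
```

A stride smaller than the window size makes patches overlap, which reduces the chance of missing a crack that straddles a patch border at the cost of more classifier calls.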

One of the most notable deep learning techniques, successfully implemented in many fields, is the CNN [18], which has been remarkably successful in computer vision. From its introduction, the CNN was designed to process data in the form of multiple arrays, such as an image [19].
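To make the multiple-array point concrete, the sketch below computes one CNN feature map from a multi-channel input (for example, the three colour arrays of an RGB image). As in common deep learning libraries, the convolution is implemented as a valid (no padding, stride 1) cross-correlation and is followed by a ReLU activation; the function names are illustrative, and real CNNs rely on optimised library kernels rather than Python loops.

```python
from typing import List

Array2D = List[List[float]]


def conv2d_feature_map(image: List[Array2D], kernel: List[Array2D], bias: float = 0.0) -> Array2D:
    """Valid cross-correlation of a C-channel image with one C-channel kernel."""
    if len(image) != len(kernel):
        raise ValueError("image and kernel must have the same number of channels")
    height, width = len(image[0]), len(image[0][0])
    k_h, k_w = len(kernel[0]), len(kernel[0][0])
    feature_map = []
    for i in range(height - k_h + 1):
        row = []
        for j in range(width - k_w + 1):
            acc = bias
            # Sum the products over every channel and every kernel position.
            for c in range(len(image)):
                for di in range(k_h):
                    for dj in range(k_w):
                        acc += image[c][i + di][j + dj] * kernel[c][di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


def relu(feature_map: Array2D) -> Array2D:
    """Element-wise max(0, x), the usual CNN activation."""
    return [[max(0.0, v) for v in row] for row in feature_map]


grey = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]  # one channel, 3x3
kernel = [[[0, 1], [1, 0]]]                  # one channel, 2x2
print(relu(conv2d_feature_map(grey, kernel)))  # [[6.0, 8.0], [12.0, 14.0]]
```

A CNN layer stacks many such kernels, each producing its own feature map, and learns the kernel weights from data instead of fixing them by hand.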

Adha et al. used transfer learning based on a CNN architecture to identify and classify buildings according to the FEMA-154 classification [20]. The authors used transfer learning from the VGG16 network as the basis for developing a new building classification model. Their results show that transfer learning effectively reduces the computational effort of model training.

Pamuncak et al. similarly employed transfer learning to reduce the computational effort of training a CNN model [21]. The authors retrained an existing pre-trained CNN model to identify a bridge’s load rating. Although the bridge size represents the bridge capacity only implicitly, the trained model still successfully extracted a visual representation of the bridge capacity from the image.

In the transfer learning process, the availability of datasets is essential. For crack detection on walls, researchers such as Özgenel have shared datasets of pre-classified wall crack images publicly online, and Özgenel’s dataset has been used to train deep learning algorithms [22]. Other crack-related datasets include, among others, the CCIC dataset [23], the SDNET dataset [24], and the BCD dataset [25]. CCIC was collected from various buildings on the METU campus and contains 40,000 RGB images with a resolution of 227 × 227 pixels. The SDNET dataset contains more than 56,000 annotated images of cracked and non-cracked concrete bridge decks, walls, and pavements [26].

Despite the contributions of the aforementioned works, they mainly focus on a binary evaluation that outputs whether cracks exist in an input image. None of these works assesses the severity level of wall cracks, which can be critical in disaster-prone locations. In these areas, a finer-grained inspection is preferable because it helps local authorities to (1) survey the quality of local buildings and (2) estimate post-disaster damage. In addition, in regions where disasters are less frequent, a multi-class classification system enables building tenants to self-assess and prepare the required maintenance, avoiding potentially severe damage. Our contributions are summarised as follows:

We constructed a dataset of wall crack images that were collected online and then labelled according to civil engineering professional standards.

We proposed a systematic and verifiable procedure for constructing the ground-truth data based on a combination of civil engineering standards.

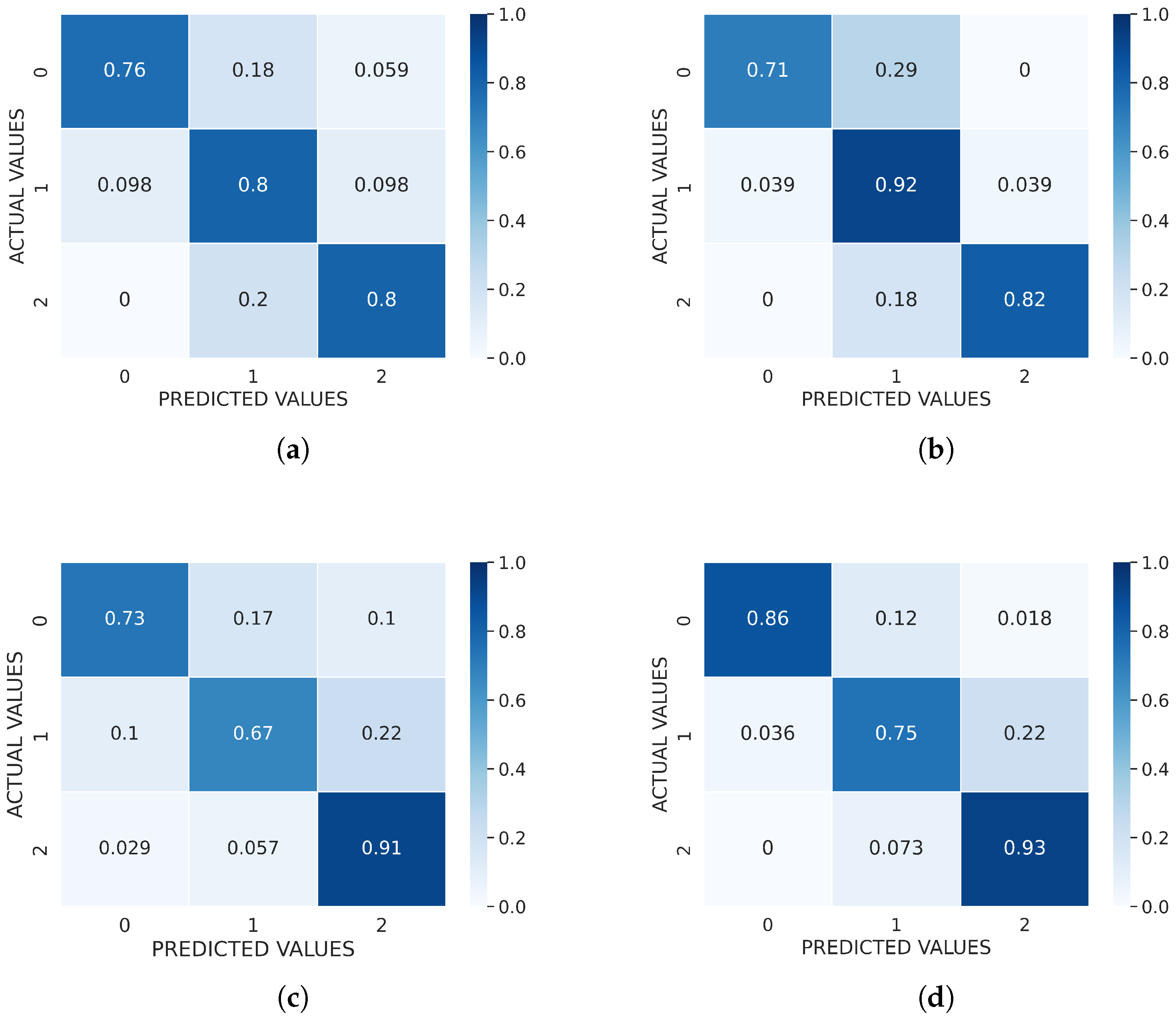

We developed eight DL models, one of which combines an existing feature extraction kernel with an existing classification kernel. We then evaluated these models not only by comparing standard metrics but also by their training convergence rate.
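The evaluation described in the last contribution combines standard classification metrics with a measure of training convergence. The sketch below shows one plausible way to compute both, assuming that predicted and true severity labels are available as lists and that a validation loss is recorded once per epoch. The class names `low`, `medium`, and `high` and the definition of convergence used here (the first epoch whose loss is within a tolerance of the best loss) are hypothetical choices, not necessarily those used in this work.

```python
from typing import Dict, List, Sequence, Tuple


def evaluate_predictions(
    y_true: Sequence[str], y_pred: Sequence[str], labels: List[str]
) -> Tuple[float, Dict[str, Tuple[float, float, float]], float]:
    """Return accuracy, per-class (precision, recall, F1), and macro-averaged F1."""
    pairs = list(zip(y_true, y_pred))
    per_class = {}
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[label] = (precision, recall, f1)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    macro_f1 = sum(f1 for _, _, f1 in per_class.values()) / len(labels)
    return accuracy, per_class, macro_f1


def epochs_to_converge(val_losses: Sequence[float], tolerance: float = 0.01) -> int:
    """First (1-based) epoch whose validation loss is within `tolerance` of the best."""
    if not val_losses or tolerance < 0:
        raise ValueError("need at least one loss and a non-negative tolerance")
    best = min(val_losses)
    return next(e for e, loss in enumerate(val_losses, start=1) if loss - best <= tolerance)


acc, per_class, macro_f1 = evaluate_predictions(
    ["low", "low", "medium", "high"],
    ["low", "medium", "medium", "high"],
    labels=["low", "medium", "high"],
)
print(f"accuracy={acc:.2f}, macro F1={macro_f1:.3f}")  # accuracy=0.75, macro F1=0.778
print(epochs_to_converge([1.0, 0.5, 0.305, 0.3, 0.31]))  # 3
```

Macro averaging weights every severity class equally, which matters when the severe classes are rarer than the mild ones.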

The remainder of this paper is organised as follows. Section 2 presents the materials and methods implemented in this work, including the data collection method, the data pre-processing method, the machine learning methods for feature extraction and classification, and the evaluation method for the trained models. Section 3 then provides the results and a discussion of the DL technologies used. Finally, Section 4 concludes our work.