Trap of Social Media Algorithms: A Systematic Review of Research on Filter Bubbles, Echo Chambers, and Their Impact on Youth

Abstract

1. Introduction

2. Objectives of the Study

- Map the methodological approaches, theoretical frameworks, and platforms studied in youth-related research on filter bubbles, echo chambers, and algorithmic bias.

- Identify the mechanisms through which algorithms shape youth information exposure, belief formation, and social interaction.

- Examine documented social, political, and psychological impacts of these phenomena on young people.

3. Methodology

3.1. Review Design and Framework

3.2. Planning and Search Strategy

3.3. Databases Searched

3.4. PRISMA Flow and Screening Outcome

3.5. Inclusion and Exclusion Criteria

3.6. Quality Appraisal

3.7. Synthesis Approach

3.8. Data Extraction and Coding

3.9. Ethical Considerations

3.10. Registration Statement

4. Results

4.1. Overview of Included Studies

Collectively, the included studies examine:

- the mechanisms by which algorithmic systems shape online information exposure,

- the extent of ideological segregation in social networks,

- the socio-psychological and contextual factors influencing youth susceptibility to polarization, and

- potential pathways for intervention, including media literacy and platform design changes.

4.2. Descriptive Characteristics of Included Studies

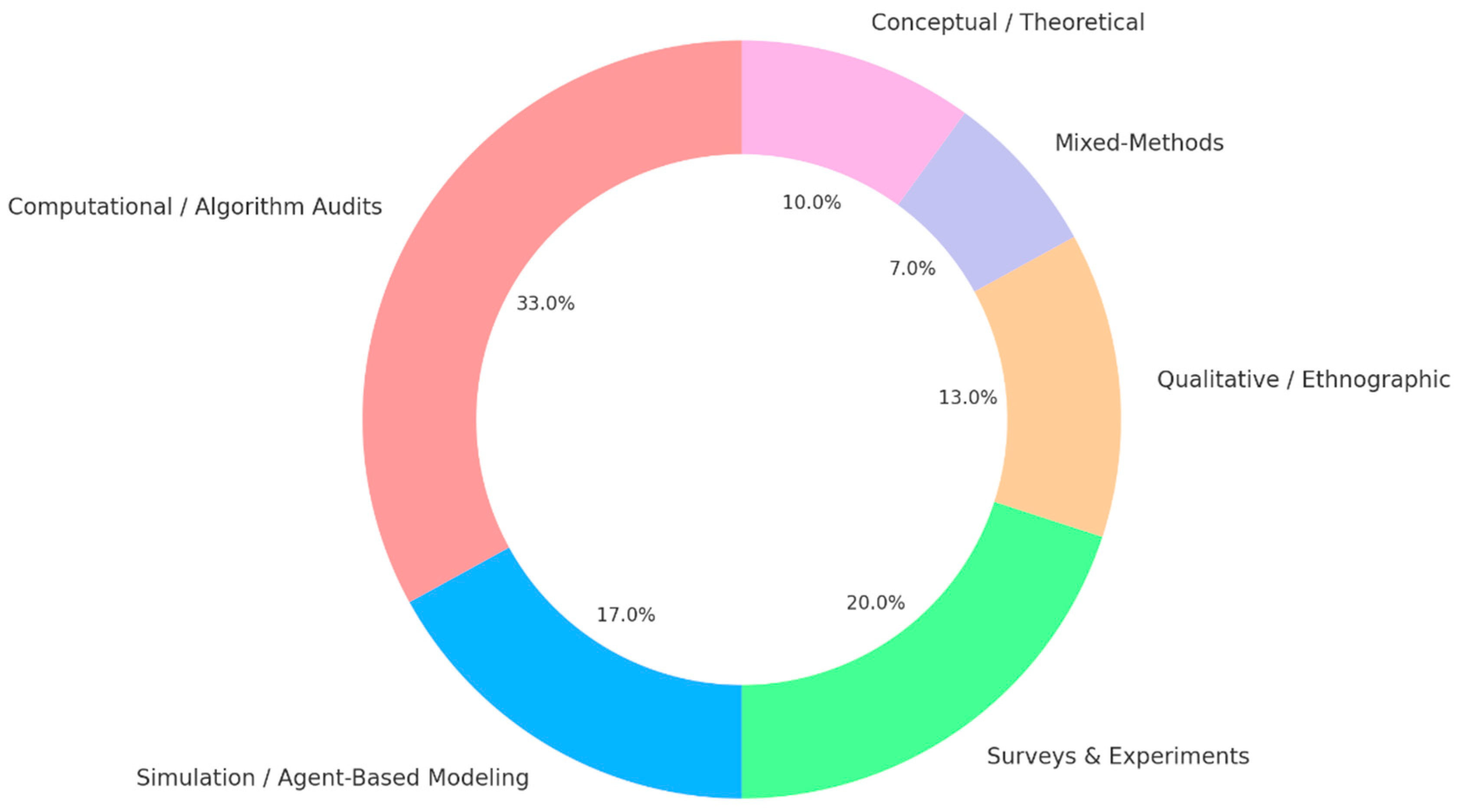

4.3. Methodological Typology

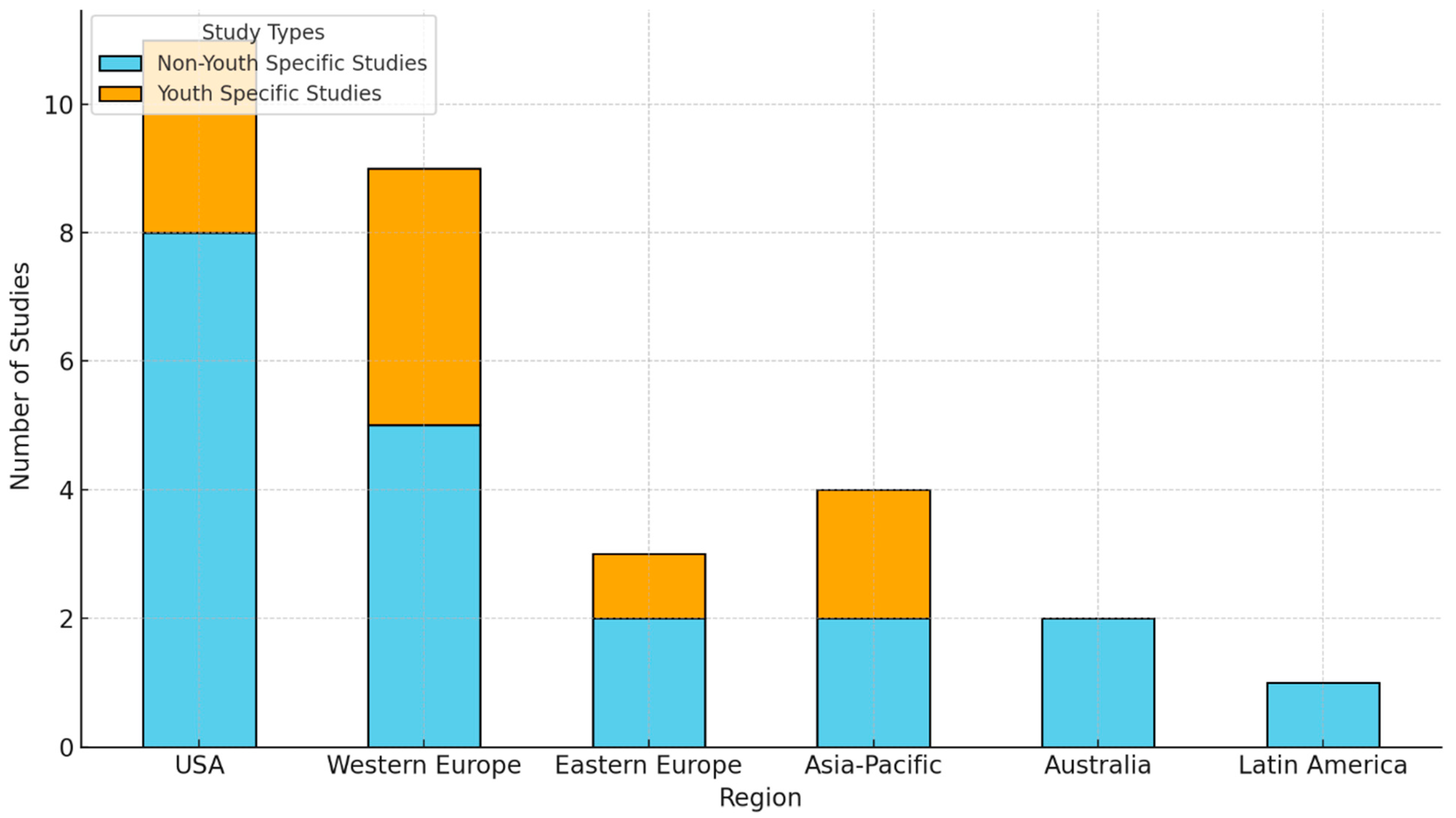

4.4. Geographic Distribution

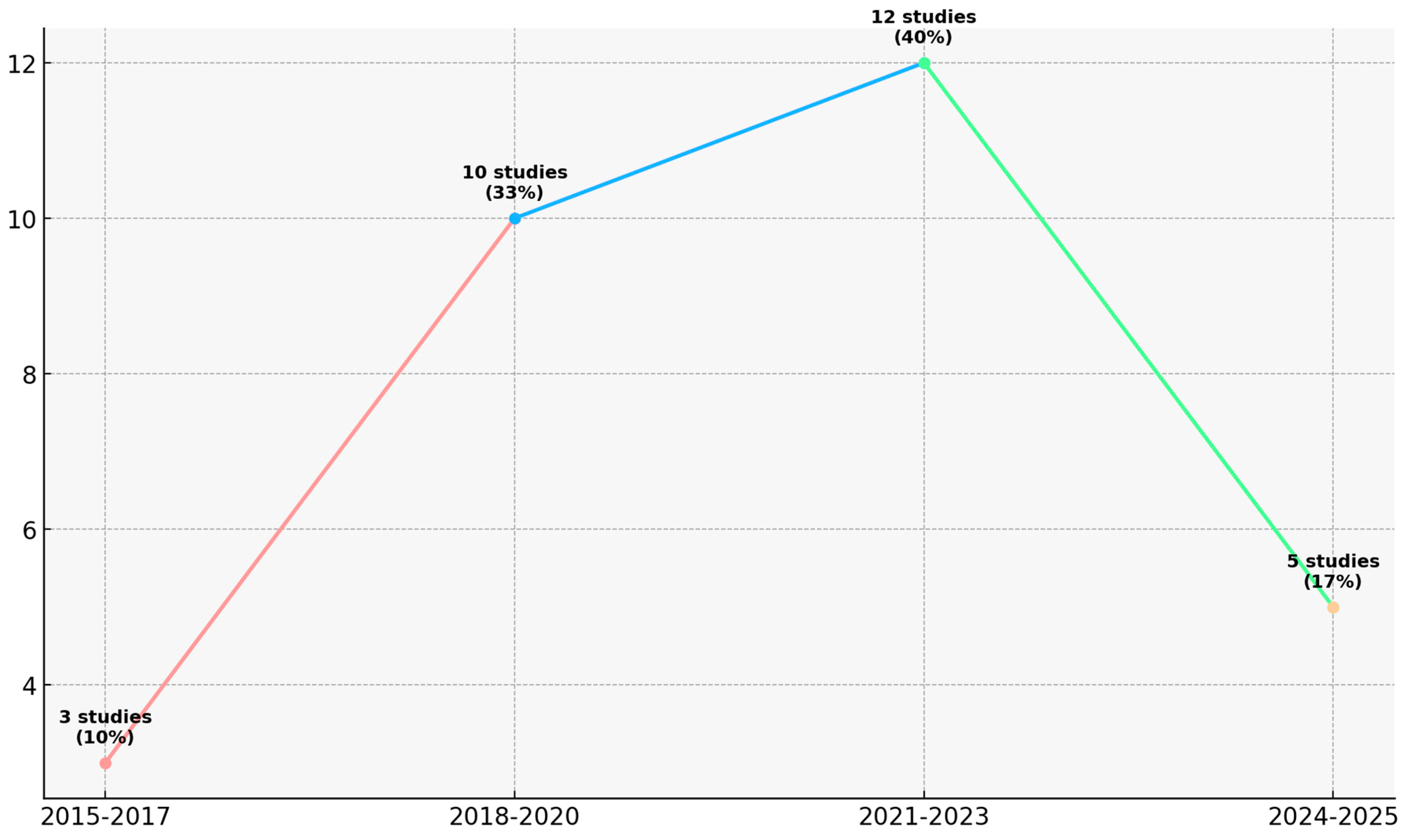

4.5. Temporal Trends (2015–2025)

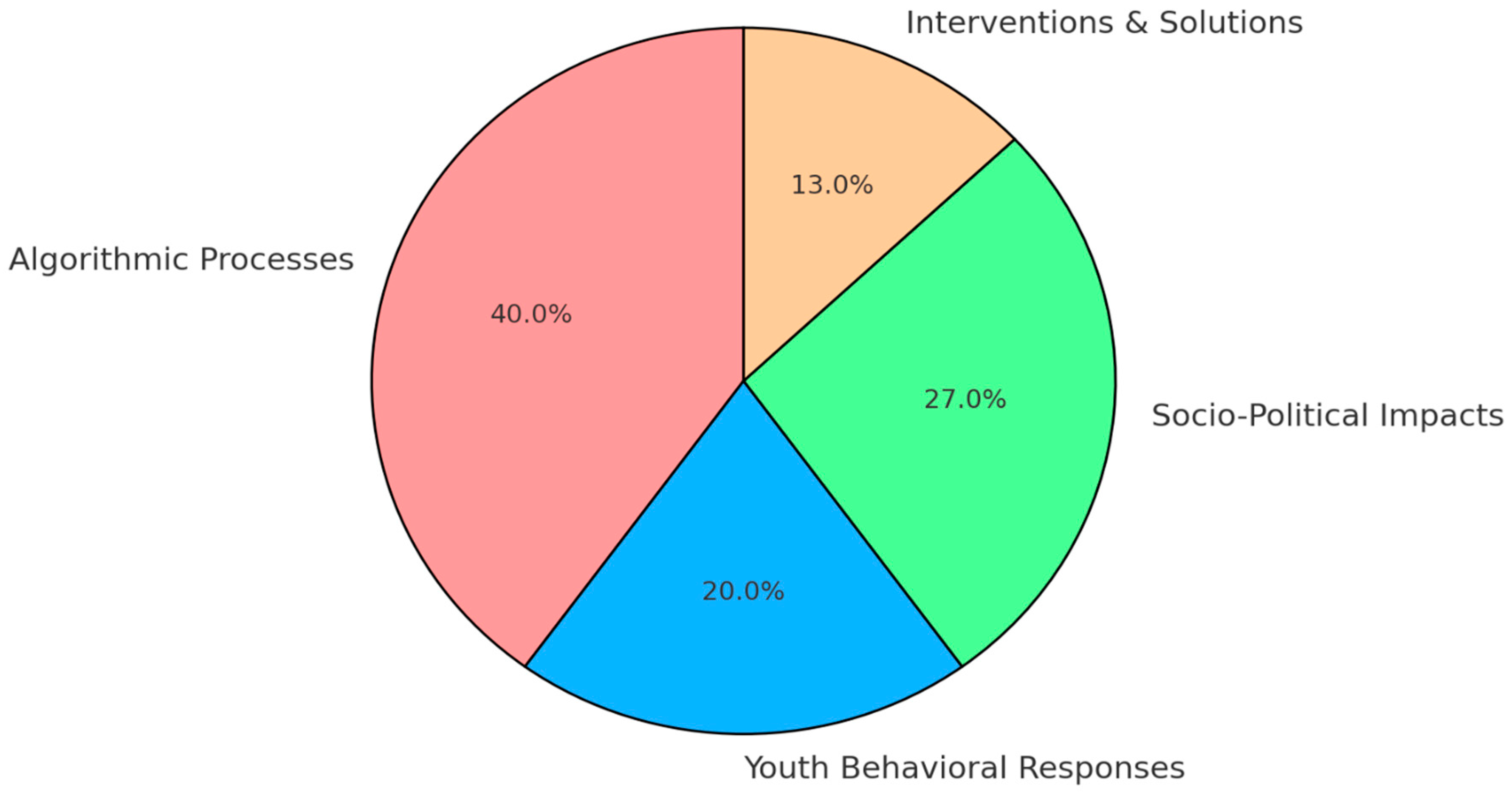

4.6. Thematic Synthesis of Findings

4.7. Theoretical Framework Usage

4.8. Methodological Limitations Identified

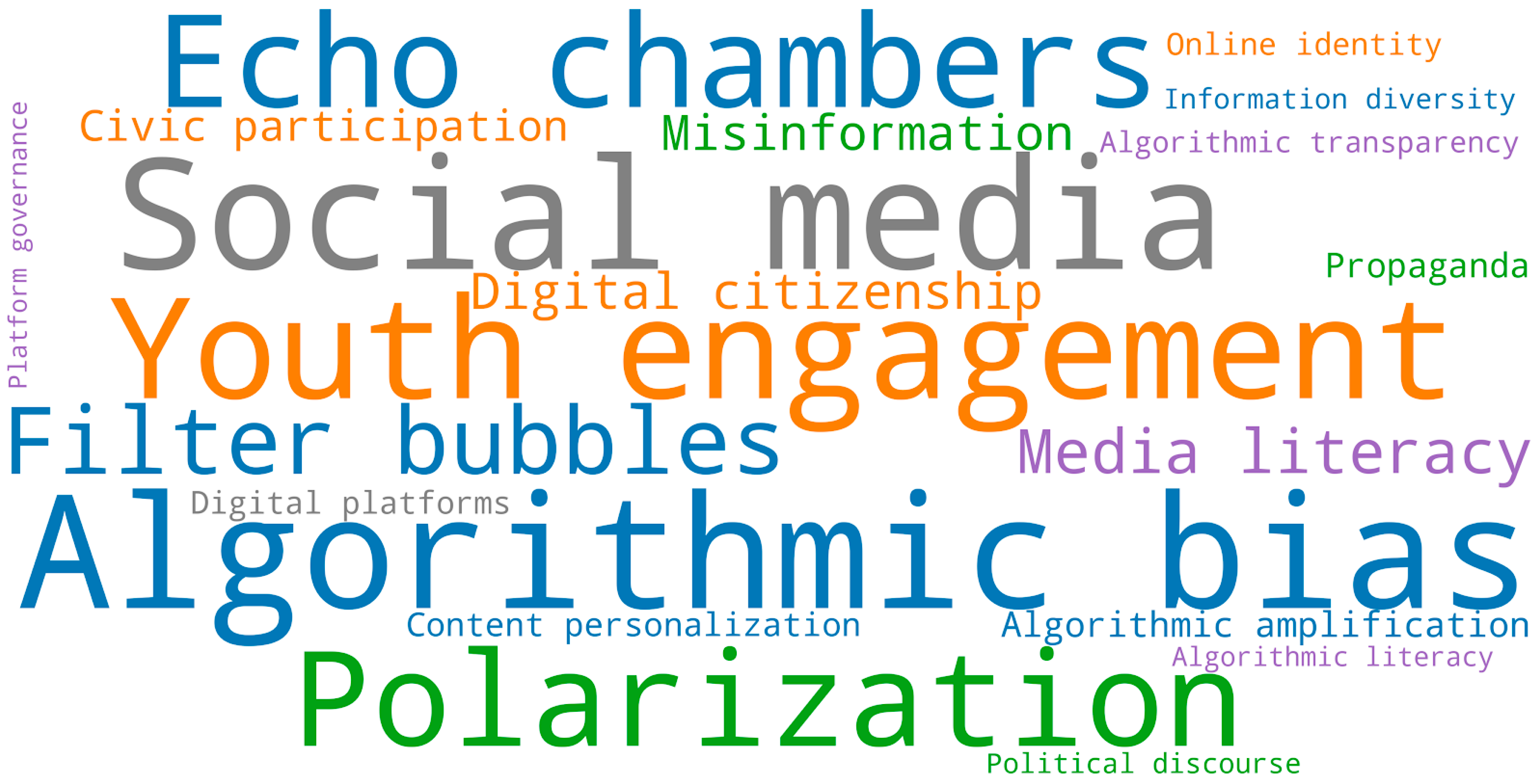

4.9. Most Frequently Used Terms and Concepts

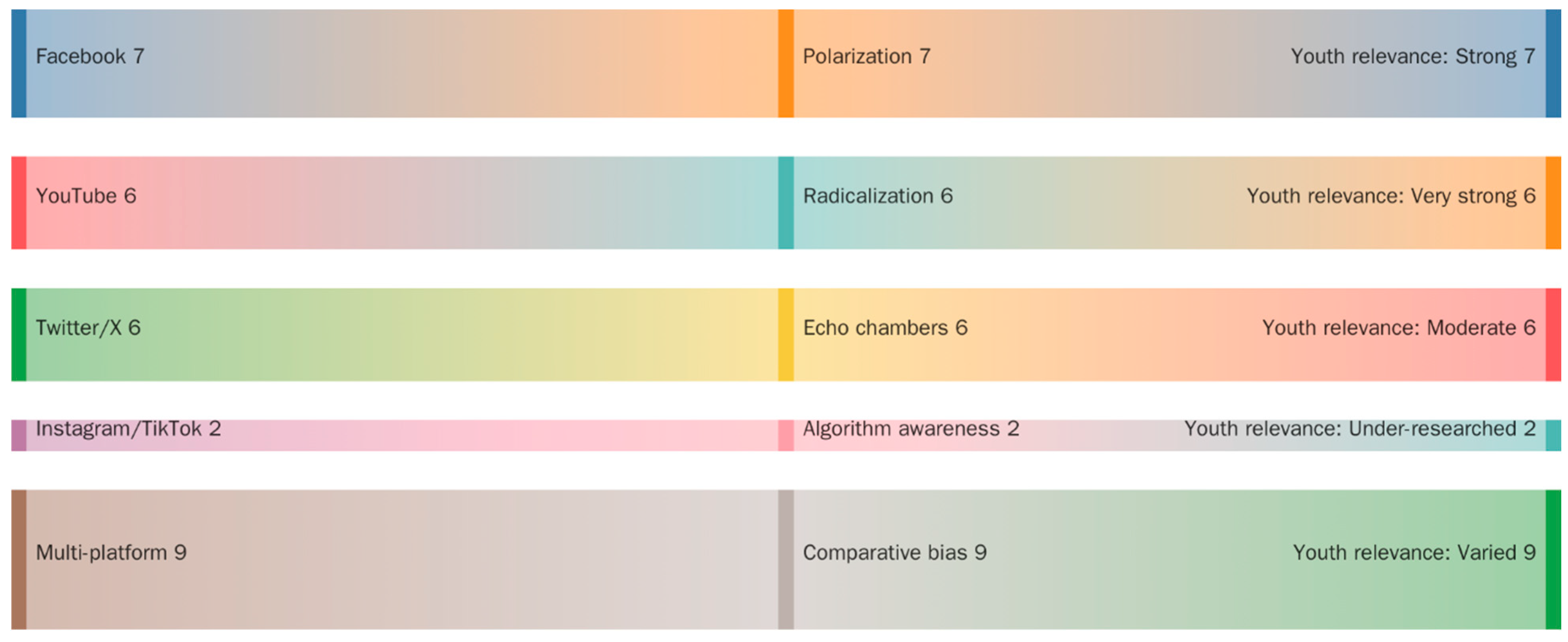

4.10. Platform-Specific Distribution

5. Discussion

Methodological Gaps and Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bakshy, E.; Messing, S.; Adamic, L.A. Exposure to ideologically diverse news and opinion on Facebook. Science 2015, 348, 1130–1132.

- Berman, R.; Katona, Z. Curation algorithms and filter bubbles in social networks. Mark. Sci. 2020, 39, 296–316.

- Baeza-Yates, R. Bias on the web. Commun. ACM 2018, 61, 54–61.

- Kulshrestha, J.; Eslami, M.; Messias, J.; Zafar, M.B.; Ghosh, S.; Gummadi, K.P.; Karahalios, K. Search bias quantification: Investigating political bias in social media and web search. Inf. Retr. J. 2019, 22, 188–227.

- Bucher, T. If…Then: Algorithmic Power and Politics; Oxford University Press: Oxford, UK, 2018.

- Ahmad, P.N.; Guo, J.; AboElenein, N.M.; Haq, Q.M.U.; Ahmad, S.; Algarni, A.D.; Ateya, A.A. Hierarchical graph-based integration network for propaganda detection in textual news articles on social media. Sci. Rep. 2025, 15, 1827.

- Pariser, E. The Filter Bubble: What the Internet Is Hiding from You; Penguin: London, UK, 2011.

- Sunstein, C.R. #Republic: Divided Democracy in the Age of Social Media; Princeton University Press: Princeton, NJ, USA, 2017.

- Flaxman, S.; Goel, S.; Rao, J.M. Filter bubbles, echo chambers, and online news consumption. Public Opin. Q. 2016, 80, 298–320.

- Geschke, D.; Lorenz, J.; Holtz, P. The triple-filter bubble: Using agent-based modelling to test a meta-theoretical framework for the emergence of filter bubbles and echo chambers. Br. J. Soc. Psychol. 2019, 58, 129–149.

- Terren, L.; Borge, R. Echo Chambers on Social Media: A Systematic Review of the Literature. Rev. Commun. Res. 2021, 9, 99–118.

- Khanday, A.M.U.D.; Wani, M.A.; Rabani, S.T.; Khan, Q.R.; Abd El-Latif, A.A. HAPI: An efficient Hybrid Feature Engineering-based Approach for Propaganda Identification in social media. PLoS ONE 2024, 19, e0302583.

- Metaxa, D.; Park, J.S.; Robertson, R.E.; Karahalios, K.; Wilson, C.; Hancock, J.; Sandvig, C. Auditing algorithms. Found. Trends Hum. Comput. Interact. 2021, 14, 272–344.

- Srba, I.; Moro, R.; Tomlein, M.; Pecher, B.; Simko, J.; Stefancova, E.; Kompan, M.; Hrckova, A.; Podrouzek, J.; Gavornik, A.; et al. Auditing YouTube’s Recommendation Algorithm for Misinformation Filter Bubbles. ACM Trans. Recomm. Syst. 2023, 1, 1–33.

- Guess, A.M.; Malhotra, N.; Pan, J.; Barberá, P.; Allcott, H.; Brown, T.; Crespo-Tenorio, A.; Dimmery, D.; Freelon, D.; Gentzkow, M.; et al. How do social media feed algorithms affect attitudes and behavior in an election campaign? Science 2023, 381, 398–404.

- Qian, Y.; Wang, D. Echo Chamber Effect in Rumor Rebuttal Discussions About COVID-19 in China: Social Media Content and Network Analysis Study. J. Med. Internet Res. 2021, 23, e27009.

- de Groot, T.; de Haan, M.; van Dijken, M. Learning in and about a filtered universe: Young people’s awareness and control of algorithms in social media. Learn. Media Technol. 2023, 48, 701–713.

- Baumgaertner, B.; Justwan, F. The preference for belief, issue polarization, and echo chambers. Synthese 2022, 200, 412.

- Sindermann, C.; Elhai, J.D.; Moshagen, M.; Montag, C. Age, gender, personality, ideological attitudes and individual differences in a person’s news spectrum: How many and who might be prone to “filter bubbles” and “echo chambers” online? Heliyon 2020, 6, e03214.

- Alshattnawi, S.; Shatnawi, A.; AlSobeh, A.M.R.; Magableh, A.A. Beyond Word-Based Model Embeddings: Contextualized Representations for Enhanced Social Media Spam Detection. Appl. Sci. 2024, 14, 2254.

- Broto Cervera, R.; Pérez-Solà, C.; Batlle, A. Overview of the Twitter conversation around #14F 2021 Catalonia regional election: An analysis of echo chambers and presence of social bots. Soc. Netw. Anal. Min. 2024, 14, 96.

- Cakmak, M.C.; Agarwal, N.; Oni, R. The bias beneath: Analyzing drift in YouTube’s algorithmic recommendations. Soc. Netw. Anal. Min. 2024, 14, 171.

- Guo, S.; Song, X.; Gao, Y. Personality traits and their influence on Echo chamber formation in social media: A comparative study of Twitter and Weibo. Front. Psychol. 2024, 15, 1323117.

- Bishop, S. Managing visibility on YouTube through algorithmic gossip. New Media Soc. 2019, 21, 2589–2606.

- Livingstone, S. Developing social media literacy: How children learn to interpret risky opportunities on social network sites. Communications 2014, 39, 283–303.

- Livingstone, S. Children and the Internet; Polity Press: Cambridge, UK, 2009.

- Couldry, N. Media, Society, World: Social Theory and Digital Media Practice; Polity Press: Cambridge, UK, 2012.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, M.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6, e1000097.

- Tranfield, D.; Denyer, D.; Smart, P. Towards a Methodology for Developing Evidence-Informed Management Knowledge by Means of Systematic Review. Br. J. Manag. 2003, 14, 207–222.

- McHugh, M.L. Interrater reliability: The kappa statistic. Biochem. Medica 2012, 22, 276.

- CASP. Critical Appraisal Skills Programme Qualitative Checklist. 2018. Available online: https://casp-uk.net/casp-tools-checklists/qualitative-studies-checklist/ (accessed on 9 August 2025).

- Hong, Q.N.; Fàbregues, S.; Bartlett, G.; Boardman, F.; Cargo, M.; Dagenais, P.; Gagnon, M.P.; Griffiths, F.; Nicolau, B.; O’Cathain, A.; et al. The Mixed Methods Appraisal Tool (MMAT) version 2018 for information professionals and researchers. Educ. Inf. 2018, 34, 285–291.

- Downes, M.J.; Brennan, M.L.; Williams, H.C.; Dean, R.S. Development of a critical appraisal tool to assess the quality of cross-sectional studies (AXIS). BMJ Open 2016, 6, e011458.

- Braun, V.; Clarke, V. Using Thematic Analysis in Psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Guo, Q. Intentional echo chamber management: Chinese celebrity fans’ information-seeking and sense-making practices on social media. J. Doc. 2025, 81, 236–252.

- Chen, W.; Pacheco, D.; Yang, K.C.; Menczer, F. Neutral bots probe political bias on social media. Nat. Commun. 2021, 12, 5580.

- Sîrbu, A.; Pedreschi, D.; Giannotti, F.; Kertész, J. Algorithmic bias amplifies opinion fragmentation and polarization: A bounded confidence model. PLoS ONE 2019, 14, e0213246.

- Papakyriakopoulos, O.; Medina Serrano, J.C.; Hegelich, S. Political communication on social media: A tale of hyperactive users and bias in recommender systems. J. Online Soc. Netw. Media 2019, 15, 100058.

- Bucher, T. The algorithmic imaginary: Exploring the ordinary affects of Facebook algorithms. Inf. Commun. Soc. 2017, 20, 30–44.

- Ledwich, M.; Zaitsev, A. Algorithmic Extremism: Examining YouTube’s Rabbit Hole of Radicalization. arXiv 2019.

- Shmargad, Y.; Klar, S. Sorting the News: How Ranking by Popularity Polarizes Our Politics. Political Commun. 2020, 37, 423–446.

- Chueca Del Cerro, C. The power of social networks and social media’s filter bubble in shaping polarisation: An agent-based model. Appl. Netw. Sci. 2024, 9, 69.

- Peralta, A.F.; Kertész, J.; Iñiguez, G. Opinion formation on social networks with algorithmic bias: Dynamics and bias imbalance. J. Phys. Complex. 2021, 2, 045009.

- Aydoğan, B.B.; Köse, H. The Problem of Freedom of Expression in the Public Sphere of Social Media: Descriptive Analysis of the Echo Chamber Effect. Siyasal J. Political Sci. 2024, 33, 277–317.

- Ackermann, K.; Stadelmann-Steffen, I. Voting in the Echo Chamber? Patterns of Political Online Activities and Voting Behavior in Switzerland. Swiss Political Sci. Rev. 2022, 28, 377–400.

- Avnur, Y. What’s Wrong with the Online Echo Chamber: A Motivated Reasoning Account. J. Appl. Philos. 2020, 37, 578–593.

- Cakmak, M.C.; Agarwal, N. Influence of symbolic content on recommendation bias: Analyzing YouTube’s algorithm during Taiwan’s 2024 election. Appl. Netw. Sci. 2025, 10, 23.

- Coady, D. Stop Talking about Echo Chambers and Filter Bubbles. Educ. Theory 2024, 74, 92–107.

- Moreno-Almeida, C. Memes as snapshots of participation: The role of digital amateur activists in authoritarian regimes. New Media Soc. 2021, 23, 1545–1566.

- European Commission. The Digital Services Act. 2022. Available online: https://commission.europa.eu/strategy-and-policy/priorities-2019-2024/europe-fit-digital-age/digital-services-act_en (accessed on 18 July 2025).

| Core Construct | Example Keywords |
|---|---|
| Algorithmic Curation | “algorithmic bias,” “recommender systems,” “personalization” |
| Filter Bubbles and Echo Chambers | “echo chamber,” “filter bubble,” “information cocoon,” “content polarization” |
| Youth and Adolescents | “youth,” “teenagers,” “young adults,” “adolescents” |
| Social Media Platforms | “Twitter,” “Facebook,” “YouTube,” “Instagram,” “TikTok,” “Weibo” |
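Constructs like those in this table are usually combined into a database query in two steps. The synonyms within each construct are joined with OR, and the constructs are then joined with AND, so a record must match at least one term from every group. The sketch below assumes exactly that logic and a generic double-quoted phrase syntax. The review's actual search strings are not reproduced here, and database-specific field codes (for example, Scopus's `TITLE-ABS-KEY`) would still need to be added. The names `KEYWORD_GROUPS`, `or_block`, and `build_query` are hypothetical.

```python
from typing import Dict, List

# Hypothetical transcription of the core-construct table above.
KEYWORD_GROUPS: Dict[str, List[str]] = {
    "Algorithmic Curation": ["algorithmic bias", "recommender systems", "personalization"],
    "Filter Bubbles and Echo Chambers": [
        "echo chamber", "filter bubble", "information cocoon", "content polarization",
    ],
    "Youth and Adolescents": ["youth", "teenagers", "young adults", "adolescents"],
    "Social Media Platforms": ["Twitter", "Facebook", "YouTube", "Instagram", "TikTok", "Weibo"],
}


def or_block(terms: List[str]) -> str:
    """Quote each synonym as a phrase and join them with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(groups: Dict[str, List[str]]) -> str:
    """Join one OR-block per construct with AND, so every construct must match."""
    return " AND ".join(or_block(terms) for terms in groups.values())


if __name__ == "__main__":
    print(build_query(KEYWORD_GROUPS))
```

Running the script prints a single string of four parenthesized OR-blocks joined by three ANDs. Keeping the synonyms in one data structure means that adding a term changes one list rather than a hand-edited query string.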

| Database | Disciplines Covered |
|---|---|
| Web of Science (Core Collection) | Multidisciplinary |
| Scopus | Multidisciplinary, social sciences |
| Communication & Mass Media Complete (CMMC) | Communication studies |
| ACM Digital Library | Computer science, technology |
| IEEE Xplore | Engineering, technology |
| JSTOR | Humanities, social sciences |
| SAGE Journals Online | Social sciences, education |
| Taylor & Francis Online | Social sciences, humanities |
| SpringerLink | Multidisciplinary |
| ProQuest Social Science | Social sciences, political science |

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Peer-reviewed journal articles | Grey literature (blogs, theses, op-eds) |
| Published 2015–2025 | Published before 2015 |
| Written in English | Non-English publications |
| Focus on filter bubbles, echo chambers, or algorithmic bias | Studies on general internet use without focus on these phenomena |
| Explicit examination of youth (adolescents, young adults ≤ 30) | Studies without youth-specific data or analysis |
| Any empirical method (qualitative, quantitative, mixed) | Purely theoretical essays, commentaries |
| Focus on social media platforms (e.g., Facebook, Twitter, YouTube, Instagram, TikTok) | Offline or non-social media contexts |
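Each row of this table pairs an inclusion criterion with its exclusion counterpart, so the table can be read as an ordered set of screening checks. The following is an illustrative sketch only. It assumes a hypothetical `Record` structure whose fields stand in for judgments reviewers make when reading titles, abstracts, and full texts, and it is not presented as the procedure the authors used.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical screening record; field names are illustrative, not the review's extraction form.
@dataclass
class Record:
    peer_reviewed: bool                             # journal article rather than grey literature
    year: int                                       # publication year
    language: str                                   # publication language
    topics: Set[str] = field(default_factory=set)   # phenomena the study focuses on
    youth_specific: bool = False                    # youth-specific data or analysis (ages <= 30)
    empirical: bool = False                         # qualitative, quantitative, or mixed method
    social_media: bool = False                      # studies a social media platform

FOCUS_TOPICS = {"filter bubbles", "echo chambers", "algorithmic bias"}


def exclusion_reason(r: Record) -> Optional[str]:
    """Return the first criterion the record fails, or None if it is eligible."""
    if not r.peer_reviewed:
        return "grey literature"
    if not 2015 <= r.year <= 2025:
        return "published outside 2015-2025"
    if r.language.lower() != "english":
        return "non-English publication"
    if not r.topics & FOCUS_TOPICS:  # set intersection: at least one focal phenomenon
        return "no focus on filter bubbles, echo chambers, or algorithmic bias"
    if not r.youth_specific:
        return "no youth-specific data or analysis"
    if not r.empirical:
        return "purely theoretical essay or commentary"
    if not r.social_media:
        return "offline or non-social media context"
    return None


if __name__ == "__main__":
    candidate = Record(True, 2023, "English", {"echo chambers"}, True, True, True)
    print(exclusion_reason(candidate))  # None means the record passes every check
```

The function returns the first failed criterion rather than a bare yes/no. This mirrors how PRISMA flow diagrams report reasons for exclusion at the full-text stage.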

| Study Design | Quality Assessment Tool | Reference |
|---|---|---|
| Qualitative studies | CASP (Critical Appraisal Skills Programme) | CASP [32] |
| Mixed-methods studies | MMAT (Mixed Methods Appraisal Tool) | Hong et al. [33] |
| Cross-sectional surveys | AXIS (Appraisal of Cross-Sectional Studies) | Downes et al. [34] |

| No. | Author(s) [Ref.] | Study Design | Country/Region | Platform(s) | Thematic Focus |
|---|---|---|---|---|---|
| 1 | Bakshy et al. [1] | Large-scale computational analysis | USA | Facebook | Exposure to ideologically diverse news |
| 2 | Flaxman et al. [9] | Quantitative web traffic analysis | USA | Google News, social media | Filter bubbles and news consumption |
| 3 | Guess et al. [15] | Field experiment | USA | Facebook, Instagram | Algorithmic feeds and political attitudes |
| 4 | Chen et al. [37] | Experimental audit with bots | USA | | Detecting political bias in curation |
| 5 | Metaxa et al. [13] | Systematic audit study | USA | Multiple platforms | Bias in algorithmic audits |
| 6 | Srba et al. [14] | Algorithm audit & content analysis | Slovakia | YouTube | Misinformation filter bubbles |
| 7 | Kulshrestha et al. [4] | Quantitative bias measurement | Germany/USA | Facebook, Google | Political bias in search & feeds |
| 8 | Sîrbu et al. [38] | Simulation modeling | Italy | Generic networks | Algorithmic bias amplifying polarization |
| 9 | Baeza-Yates [3] | Conceptual & empirical synthesis | Chile/USA | Web | Web bias and fairness |
| 10 | Berman & Katona [2] | Simulation & network analysis | USA | Generic networks | Filter bubble formation |
| 11 | Papakyriakopoulos et al. [39] | Computational communication analysis | Germany | | Hyperactive users & recommender bias |
| 12 | Bishop [24] | Qualitative digital ethnography | UK | YouTube | Algorithmic gossip & visibility |
| 13 | Bucher [40] | Qualitative interviews | Norway | Facebook | Algorithmic imaginary |
| 14 | Ledwich & Zaitsev [41] | Data-driven analysis | Australia | YouTube | Radicalization & algorithmic extremism |
| 15 | Shmargad & Klar [42] | Online experiment | USA | News ranking platforms | Popularity ranking & polarization |
| 16 | de Groot et al. [17] | Mixed-methods (survey + interviews) | Netherlands | Instagram, TikTok, YouTube | Youth awareness of algorithms |
| 17 | Cakmak, Agarwal, & Oni [22] | Computational audit | USA | YouTube | Drift in recommendation bias |
| 18 | Broto Cervera et al. [21] | Social network analysis | Spain | Twitter | Echo chambers & bots |
| 19 | Chueca Del Cerro [43] | Agent-based modeling | Spain | Generic networks | Polarisation dynamics |
| 20 | Sindermann et al. [19] | Survey study | Germany | Multiple | Personality & bubble susceptibility |
| 21 | Peralta et al. [44] | Simulation | Hungary | Generic networks | Opinion formation with bias |
| 22 | Baumgaertner & Justwan [18] | Experimental survey | USA | Multiple | Belief preference & polarization |
| 23 | Guo, S. et al. [23] | Comparative network analysis | China/USA | Twitter, Weibo | Personality traits & echo chambers |
| 24 | Aydoğan & Köse [45] | Qualitative descriptive | Turkey | Multiple | Freedom of expression & echo chambers |
| 25 | Qian & Wang [16] | Network/content analysis | China | | Echo chambers in COVID-19 rebuttals |
| 26 | Ackermann & Stadelmann-Steffen [46] | Survey | Switzerland | Facebook, Twitter | Online activity & voting |
| 27 | Avnur [47] | Philosophical/theoretical | USA | Multiple | Motivated reasoning in echo chambers |
| 28 | Coady [48] | Critical conceptual analysis | Australia | Multiple | Rethinking filter bubbles |
| 29 | Geschke et al. [10] | Agent-based modeling | Germany | Generic networks | Triple-filter bubble dynamics |
| 30 | Terren & Borge [11] | Literature review | Spain | Multiple | Echo chambers research synthesis |

| Limitation | Studies Reporting | Consequences |
|---|---|---|
| Western bias | 15/30 | Limited generalizability |
| Lack of longitudinal data | 12/30 | No evidence of sustained effects |
| Algorithm opacity | 10/30 | Reliance on black-box inference |
| Low youth-specific sampling | 8/30 | Gaps in adolescent-focused findings |
| Small qualitative samples | 4/30 | Weak external validity |
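The limitations table reports raw counts out of the 30 included studies. Converting those counts to shares makes the pattern easier to read. It also shows that the categories overlap: the counts sum to more than 30, so a single study can report several limitations. The sketch below is minimal and assumes only the 30-study denominator stated in the table. The names `LIMITATION_COUNTS` and `shares` are hypothetical.

```python
from typing import Dict

# Counts transcribed from the limitations table; the denominator is the 30 included studies.
LIMITATION_COUNTS: Dict[str, int] = {
    "Western bias": 15,
    "Lack of longitudinal data": 12,
    "Algorithm opacity": 10,
    "Low youth-specific sampling": 8,
    "Small qualitative samples": 4,
}
TOTAL_STUDIES = 30


def shares(counts: Dict[str, int], total: int) -> Dict[str, float]:
    """Percentage of included studies reporting each limitation, rounded to one decimal."""
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


if __name__ == "__main__":
    for name, pct in shares(LIMITATION_COUNTS, TOTAL_STUDIES).items():
        print(f"{name}: {pct}%")
    # Categories are not mutually exclusive, so total mentions can exceed the study count.
    print("Total mentions:", sum(LIMITATION_COUNTS.values()))
```

Western bias comes out at 50.0% and algorithm opacity at 33.3%. The five counts sum to 49 mentions across 30 studies, so the percentages should not be read as a partition that adds to 100%.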

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ahmmad, M.; Shahzad, K.; Iqbal, A.; Latif, M. Trap of Social Media Algorithms: A Systematic Review of Research on Filter Bubbles, Echo Chambers, and Their Impact on Youth. Societies 2025, 15, 301. https://doi.org/10.3390/soc15110301

Ahmmad M, Shahzad K, Iqbal A, Latif M. Trap of Social Media Algorithms: A Systematic Review of Research on Filter Bubbles, Echo Chambers, and Their Impact on Youth. Societies. 2025; 15(11):301. https://doi.org/10.3390/soc15110301

Chicago/Turabian Style: Ahmmad, Mukhtar, Khurram Shahzad, Abid Iqbal, and Mujahid Latif. 2025. "Trap of Social Media Algorithms: A Systematic Review of Research on Filter Bubbles, Echo Chambers, and Their Impact on Youth" Societies 15, no. 11: 301. https://doi.org/10.3390/soc15110301

APA Style: Ahmmad, M., Shahzad, K., Iqbal, A., & Latif, M. (2025). Trap of Social Media Algorithms: A Systematic Review of Research on Filter Bubbles, Echo Chambers, and Their Impact on Youth. Societies, 15(11), 301. https://doi.org/10.3390/soc15110301