Lightweight Pest Object Detection Model for Complex Economic Forest Tree Scenarios

Simple Summary

Abstract

1. Introduction

- A lightweight model, LightFAD-DETR, is proposed for detecting pests in economic forests. Compared with the RT-DETR baseline, it raises mAP from 87.1% to 88.5% while reducing the parameter count from 19.9 M to 11.6 M, striking a balance between detection accuracy and computational efficiency.

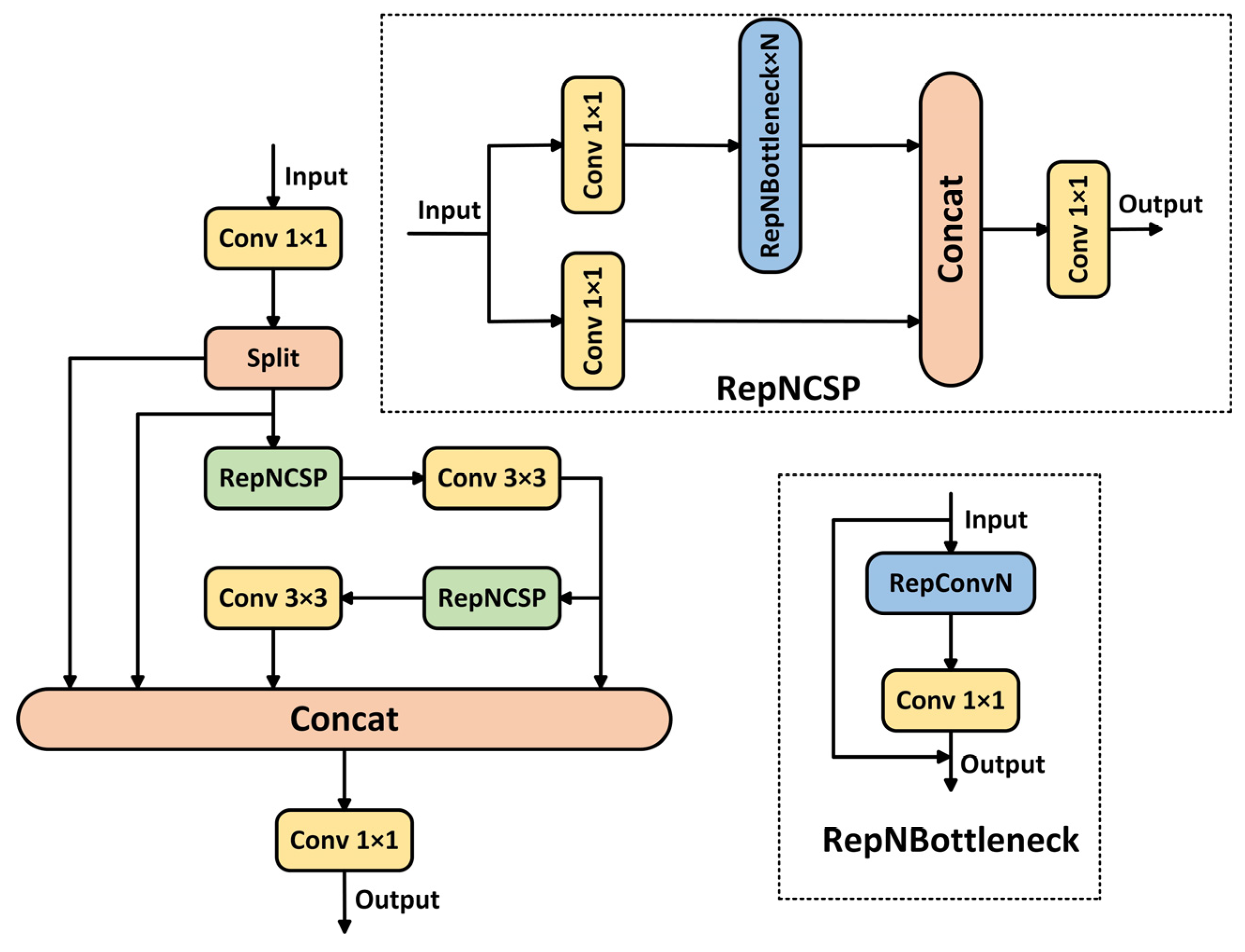

- The RepNCSPELAN4-CAA module is built on a re-parameterization strategy: it keeps a multi-branch convolution structure during training and fuses it into a single-path structure for inference, reducing inference latency. The module also incorporates one-dimensional strip convolutions that dynamically establish long-range spatial dependencies, strengthening the model's ability to capture features that span distant regions of elongated pest targets.

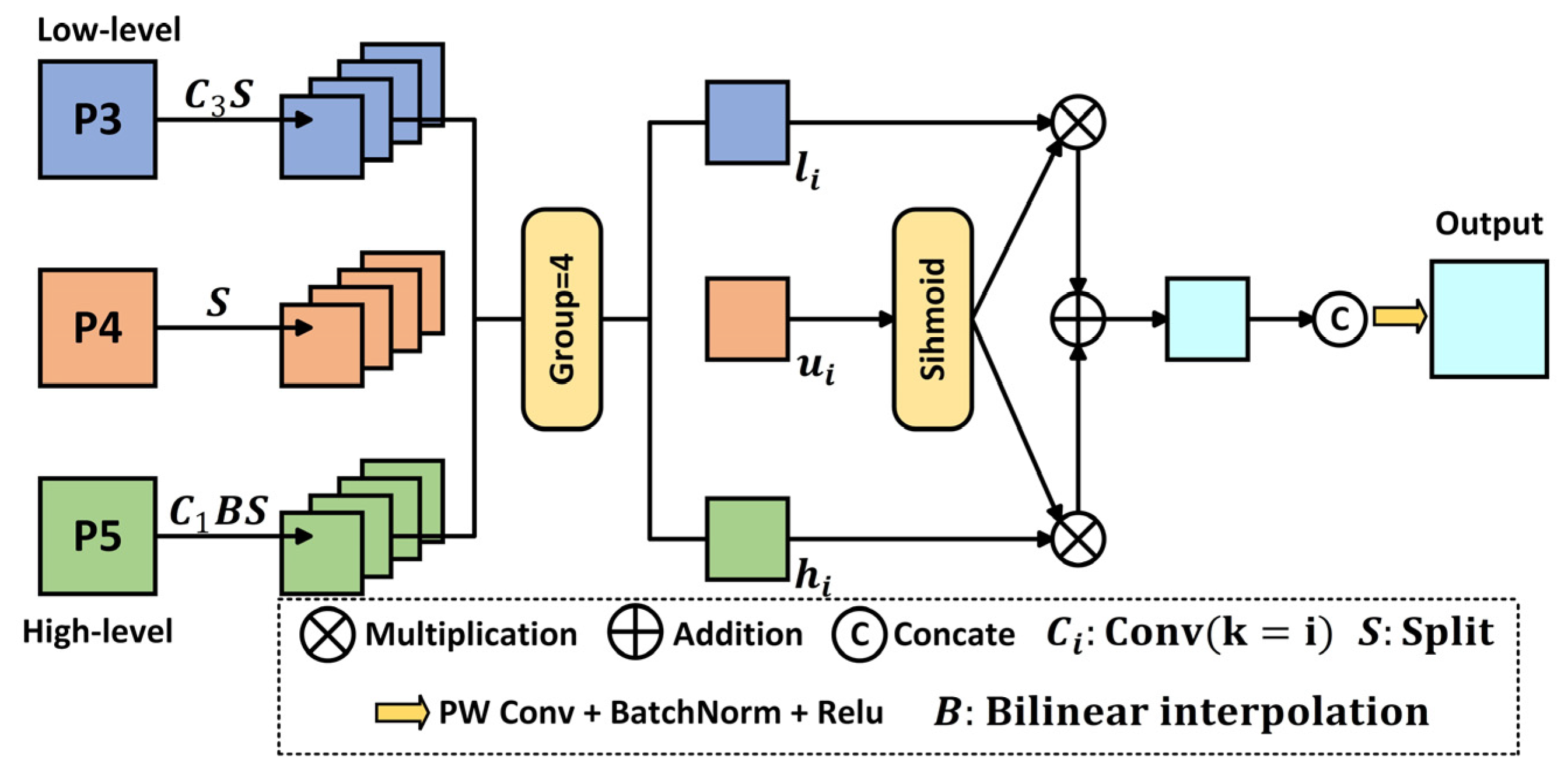

- A feature aggregation diffusion network (FADN) is developed that incorporates a dimension-aware selective integration (DASI) module. This design adaptively fuses deep semantic features with shallow texture details, reducing information loss for small pest targets and improving the recognition of subtle pest signs against complex leaf-texture backgrounds.

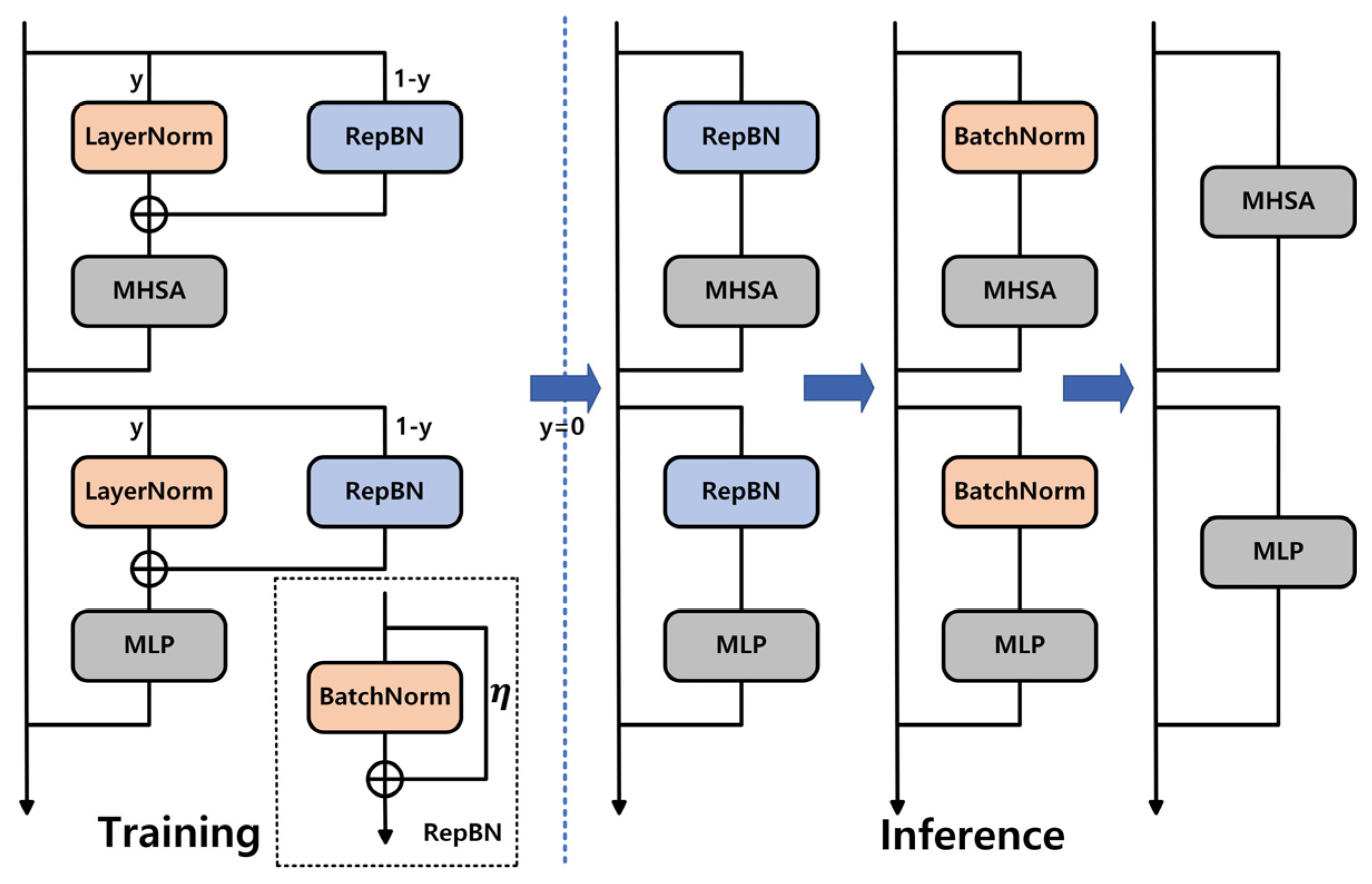

- An improved AIFI (attention-based intra-scale feature interaction) module with re-parameterized batch normalization (AIFI-RepBN) is proposed. During training, a progressive strategy gradually introduces the re-parameterizable linear components into the normalization; at inference, these components are fused with the adjacent linear layers, further reducing computational redundancy and improving the model's execution efficiency on edge computing devices.
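The one-dimensional strip convolutions mentioned for RepNCSPELAN4-CAA can be illustrated with a minimal single-channel NumPy sketch. It assumes a CAA-style ordering (context anchor attention, as we understand it from the cited PKINet): a horizontal 1×k strip, then a vertical k×1 strip, then a sigmoid gate. The function names and kernel size are hypothetical, and the pooling, 1×1 convolutions, and multi-channel handling of a real module are omitted. What it shows is why strips suit elongated targets: two strips reach a k×k window with 2k weights instead of k².

```python
import numpy as np


def conv2d_same(x, kernel):
    """Single-channel 2D cross-correlation, stride 1, zero 'same' padding (odd kernel)."""
    kh, kw = kernel.shape
    xp = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros(x.shape, dtype=float)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(xp[i:i + kh, j:j + kw] * kernel)
    return out


def strip_attention(x, k_h, k_v):
    """Horizontal 1xk strip conv, then vertical kx1 strip conv, then a sigmoid
    gate that reweights the input (a simplified, hypothetical CAA-style attention)."""
    ctx = conv2d_same(x, k_h.reshape(1, -1))   # mixes information along each row
    ctx = conv2d_same(ctx, k_v.reshape(-1, 1))  # then along each column
    gate = 1.0 / (1.0 + np.exp(-ctx))          # attention weights in (0, 1)
    return x * gate, ctx


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((16, 16))
    k = 11
    k_h, k_v = rng.standard_normal(k), rng.standard_normal(k)
    y, ctx = strip_attention(x, k_h, k_v)
    # The two strips cover the same k x k window as one separable kernel ...
    full = conv2d_same(x, np.outer(k_v, k_h))
    print(np.allclose(ctx, full))          # True
    # ... but need only 2k weights instead of k*k.
    print(2 * k, "vs", k * k, "weights")   # 22 vs 121 weights
```

The equivalence holds because a vertical strip applied after a horizontal one is the same as a single k×k kernel equal to their outer product, so long-range context along rows and columns is gathered at a fraction of the parameter cost.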

2. Related Work

2.1. Pest Object Detection

2.2. Small Object Detection

3. Proposed Method

3.1. RepNCSPELAN4-CAA Module
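A minimal NumPy sketch of the training-to-inference re-parameterization described for this module, assuming RepVGG-style branches (3×3 conv + BN, 1×1 conv + BN, and identity + BN). The branch set inside RepNCSPELAN4 may differ (for example, it may omit the identity branch), and all function names here are hypothetical. Each BN is folded into its convolution, smaller kernels are zero-padded to 3×3, and kernels and biases are summed, so a single convolution reproduces the multi-branch output.

```python
import numpy as np

EPS = 1e-5


def conv2d(x, w, b):
    """Multi-channel cross-correlation, stride 1, zero 'same' padding.
    x: (C_in, H, W); w: (C_out, C_in, k, k); b: (C_out,)."""
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    _, H, W = x.shape
    out = np.empty((w.shape[0], H, W))
    for i in range(H):
        for j in range(W):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out + b[:, None, None]


def batch_norm(z, gamma, beta, mean, var):
    """Inference-mode BN with frozen running statistics, per output channel."""
    s = (gamma / np.sqrt(var + EPS))[:, None, None]
    return s * (z - mean[:, None, None]) + beta[:, None, None]


def identity_kernel(c, k=3):
    """k x k kernel that reproduces its input (stands in for the identity branch)."""
    w = np.zeros((c, c, k, k))
    w[np.arange(c), np.arange(c), k // 2, k // 2] = 1.0
    return w


def multi_branch_forward(x, branches):
    """Training-time topology: sum over branches of BN(conv(x))."""
    return sum(batch_norm(conv2d(x, w, np.zeros(w.shape[0])), *bn) for w, bn in branches)


def reparameterize(branches, k=3):
    """Fold each BN into its conv, pad kernels to k x k, and sum them into one conv."""
    w_sum, b_sum = 0.0, 0.0
    for w, (gamma, beta, mean, var) in branches:
        scale = gamma / np.sqrt(var + EPS)
        w_fused = w * scale[:, None, None, None]
        b_fused = beta - mean * scale
        pad = (k - w.shape[-1]) // 2  # centre a 1x1 kernel inside a k x k one
        w_fused = np.pad(w_fused, ((0, 0), (0, 0), (pad, pad), (pad, pad)))
        w_sum, b_sum = w_sum + w_fused, b_sum + b_fused
    return w_sum, b_sum


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C = 3

    def random_bn():
        return (rng.uniform(0.5, 1.5, C), rng.standard_normal(C),
                rng.standard_normal(C), rng.uniform(0.5, 2.0, C))

    branches = [
        (rng.standard_normal((C, C, 3, 3)), random_bn()),  # 3x3 conv + BN
        (rng.standard_normal((C, C, 1, 1)), random_bn()),  # 1x1 conv + BN
        (identity_kernel(C), random_bn()),                  # identity + BN
    ]
    x = rng.standard_normal((C, 8, 8))
    w, b = reparameterize(branches)
    print(np.allclose(multi_branch_forward(x, branches), conv2d(x, w, b)))  # True
```

Because inference-mode batch normalization and convolution are both linear, the fusion is exact up to floating-point error; it is valid only after training, once the BN running statistics are frozen.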

3.2. Feature Aggregation Diffusion Network Integrated with Dimension-Aware Selective Integration Module
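The adaptive fusion attributed to the dimension-aware selective integration module can be sketched as a sigmoid gate that chooses, per channel and position, between shallow texture detail and deep semantics. This is a simplified NumPy illustration in the spirit of DASI as we understand it from the cited HCF-Net, not the authors' module: it assumes nearest-neighbour upsampling, 2×2 average pooling, and a gate computed directly from the current-level features, and it omits the channel grouping, convolutions, and normalization of the real design. `selective_fuse` and the helper names are hypothetical.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def avgpool2x(x):
    """2x2 average pooling of a (C, H, W) map (H and W must be even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def selective_fuse(high, low, cur):
    """Gate between shallow detail (low) and deep semantics (high), guided by cur.

    high: deeper, coarser map (C, H/2, W/2); low: shallower, finer map (C, 2H, 2W);
    cur: current-level map (C, H, W). Returns a (C, H, W) map.
    """
    high_r = upsample2x(high)           # bring semantics up to the current scale
    low_r = avgpool2x(low)              # bring texture details down to it
    alpha = 1.0 / (1.0 + np.exp(-cur))  # per-channel, per-position weight in (0, 1)
    # alpha near 1 favours fine texture detail; alpha near 0 favours semantic context.
    return alpha * low_r + (1.0 - alpha) * high_r


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cur = rng.standard_normal((8, 16, 16))
    high = rng.standard_normal((8, 8, 8))
    low = rng.standard_normal((8, 32, 32))
    print(selective_fuse(high, low, cur).shape)  # (8, 16, 16)
```

The gate makes the fusion input-dependent: where the current features signal fine structure (such as a small pest on a textured leaf), the shallow branch dominates instead of being averaged away.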

3.3. Re-Parameterized Batch Normalization
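Following our reading of SLAB (Guo et al., cited in the references), re-parameterized batch normalization defines RepBN(x) = BN(x) + ηx with a learnable per-channel η, and during training blends γ·LN(x) + (1 - γ)·RepBN(x) while γ decays from 1 to 0. LayerNorm cannot be folded away because its statistics depend on each input token, whereas RepBN with frozen running statistics is a per-channel affine map that merges into the next linear layer. The NumPy sketch below assumes a (tokens, channels) layout, a linear decay schedule, and hypothetical function names; it is an illustration, not the authors' AIFI-RepBN code.

```python
import numpy as np

EPS = 1e-5


def layer_norm(x, w, b):
    """LayerNorm over the channel axis of x: (N, C)."""
    mu = x.mean(axis=1, keepdims=True)
    var = x.var(axis=1, keepdims=True)
    return w * (x - mu) / np.sqrt(var + EPS) + b


def repbn(x, eta, w, b, mean, var):
    """RepBN: BatchNorm(x) plus a learnable per-channel scaled identity eta * x."""
    return w * (x - mean) / np.sqrt(var + EPS) + b + eta * x


def progressive_weight(step, total_steps):
    """Assumed linear decay of the LayerNorm weight from 1 to 0 over training."""
    return max(0.0, 1.0 - step / total_steps)


def prepbn_train(x, step, total_steps, ln_params, rbn_params):
    """Training-time mix g * LN(x) + (1 - g) * RepBN(x), BN using batch statistics."""
    g = progressive_weight(step, total_steps)
    bn_out = repbn(x, *rbn_params, x.mean(axis=0), x.var(axis=0))
    return g * layer_norm(x, *ln_params) + (1.0 - g) * bn_out


def fold_repbn_into_linear(W, c, eta, w, b, mean, var):
    """Inference: RepBN with running stats is y = a * x + d per channel, so the
    next Linear z = y @ W.T + c equals x @ W_fused.T + c_fused."""
    std = np.sqrt(var + EPS)
    a = w / std + eta            # per-channel scale
    d = b - w * mean / std       # per-channel shift
    return W * a[None, :], W @ d + c


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N, C, D = 5, 6, 4
    x = rng.standard_normal((N, C))
    eta, w, b = rng.standard_normal(C), rng.uniform(0.5, 1.5, C), rng.standard_normal(C)
    mean, var = rng.standard_normal(C), rng.uniform(0.5, 2.0, C)  # running statistics
    W, c = rng.standard_normal((D, C)), rng.standard_normal(D)
    reference = repbn(x, eta, w, b, mean, var) @ W.T + c
    W_f, c_f = fold_repbn_into_linear(W, c, eta, w, b, mean, var)
    print(np.allclose(reference, x @ W_f.T + c_f))  # True
```

After folding, the normalization costs nothing at inference time, which is the source of the reduced redundancy claimed for AIFI-RepBN.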

4. Experiment

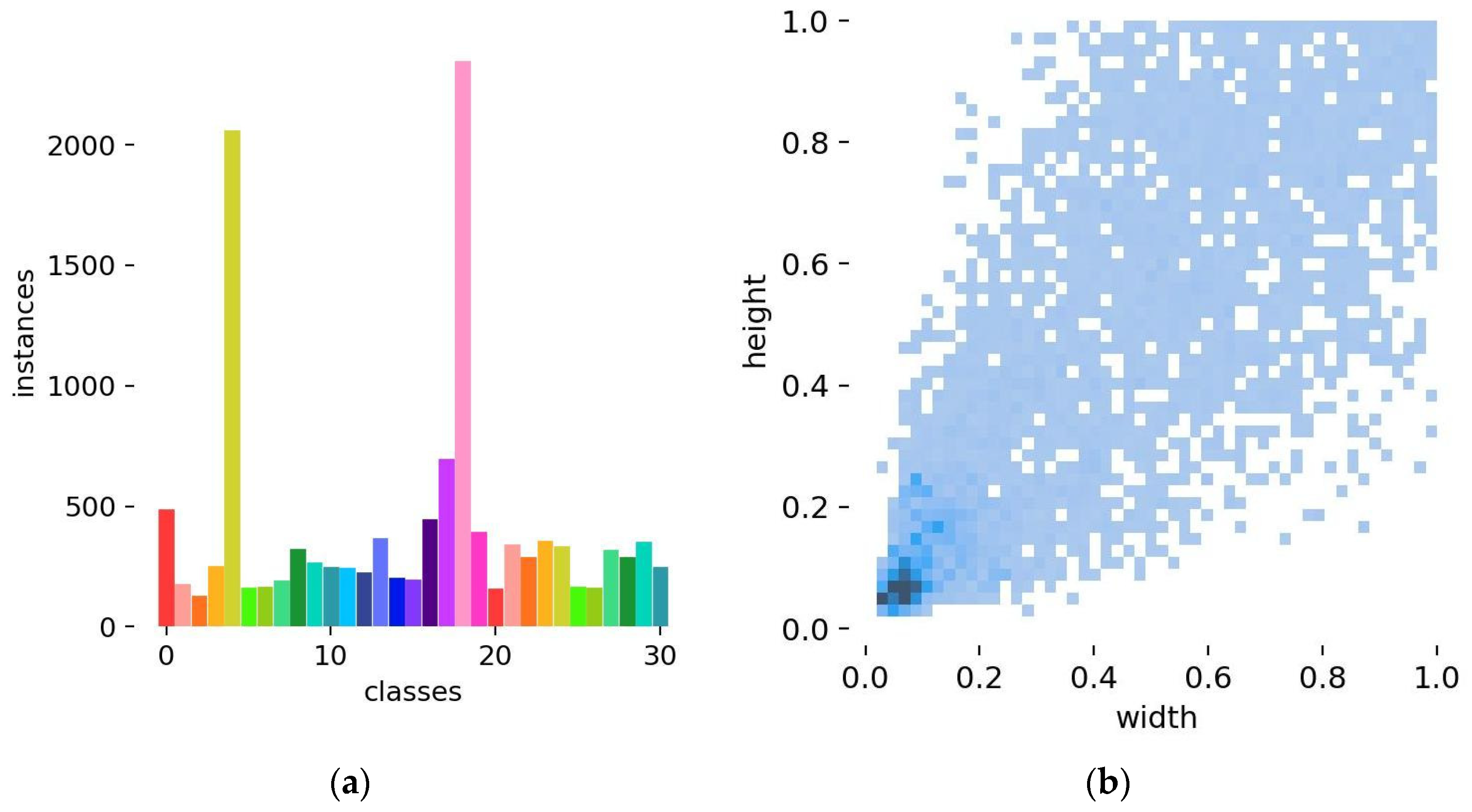

4.1. Experimental Dataset and Environment

4.2. Evaluation Metrics
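The detection tables report precision, recall, and mAP. As a hedged reference, a minimal NumPy sketch of the standard definitions follows; it assumes all-point interpolated AP (Pascal VOC style) at a single IoU threshold, with detections already matched to ground truth, and the function names are hypothetical. The tables do not state which IoU thresholds their mAP column uses (mAP50 uses 0.5 only; COCO-style mAP50:95 averages AP over thresholds from 0.50 to 0.95 in steps of 0.05), and other interpolation schemes, such as COCO's 101-point variant, give slightly different values.

```python
import numpy as np


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN)."""
    return tp / (tp + fp), tp / (tp + fn)


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    scores: detection confidences; is_tp: 1 if that detection matched a
    not-yet-matched ground-truth box, else 0; num_gt: number of ground-truth boxes.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]  # monotone precision envelope
    steps = np.where(r[1:] != r[:-1])[0]       # indices where recall changes
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


def mean_average_precision(per_class_ap):
    """mAP: the unweighted mean of per-class AP values."""
    return float(np.mean(per_class_ap))


if __name__ == "__main__":
    # Four detections of one class, two ground-truth boxes.
    print(average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0], num_gt=2))  # ≈ 0.833 (= 5/6)
```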

4.3. Ablation Experiments

4.4. Classification Performance Analysis

4.5. Performance Comparison of Different Models

4.6. Performance Analysis on Different Datasets

4.7. Visual Analysis of Detection Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kanan, A.H.; Masiero, M.; Pirotti, F. Estimating Economic and Livelihood Values of the World’s Largest Mangrove Forest (Sundarbans): A Meta-Analysis. Forests 2024, 15, 837.

2. Wang, L.; Wang, E.; Mao, X.; Benjamin, W.; Liu, Y. Sustainable Poverty Alleviation through Forests: Pathways and Strategies. Sci. Total Environ. 2023, 904, 167336.

3. Venkatesh, Y.N.; Ashajyothi, M.; Uma, G.S.; Rajarajan, K.; Handa, A.K.; Arunachalam, A. Diseases and Insect Pests Challenge to Meet Wood Production Demand of Tectona grandis (L.), a High-Value Tropical Tree Species. J. Plant Dis. Prot. 2023, 130, 929–945.

4. Raum, S.; Collins, C.M.; Urquhart, J.; Potter, C.; Pauleit, S.; Egerer, M. Tree Insect Pests and Pathogens: A Global Systematic Review of Their Impacts in Urban Areas. Urban Ecosyst. 2023, 26, 587–604.

5. Barathi, S.; Sabapathi, N.; Kandasamy, S.; Lee, J. Present Status of Insecticide Impacts and Eco-Friendly Approaches for Remediation—A Review. Environ. Res. 2024, 240, 117432.

6. Saberi Riseh, R.; Gholizadeh Vazvani, M.; Hassanisaadi, M.; Thakur, V.K.; Kennedy, J.F. Use of Whey Protein as a Natural Polymer for the Encapsulation of Plant Biocontrol Bacteria: A Review. Int. J. Biol. Macromol. 2023, 234, 123708.

7. Wang, S.; Xu, D.; Liang, H.; Bai, Y.; Li, X.; Zhou, J.; Su, C.; Wei, W. Advances in Deep Learning Applications for Plant Disease and Pest Detection: A Review. Remote Sens. 2025, 17, 698.

8. Marvasti-Zadeh, S.M.; Goodsman, D.; Ray, N.; Erbilgin, N. Early Detection of Bark Beetle Attack Using Remote Sensing and Machine Learning: A Review. ACM Comput. Surv. 2023, 56, 97.

9. Mittal, M.; Gupta, V.; Aamash, M.; Upadhyay, T. Machine Learning for Pest Detection and Infestation Prediction: A Comprehensive Review. WIREs Data Min. Knowl. Discov. 2024, 14, e1551.

10. Godinez-Garrido, G.; Gonzalez-Islas, J.-C.; Gonzalez-Rosas, A.; Flores, M.U.; Miranda-Gomez, J.-M.; Gutierrez-Sanchez, M.d.J. Estimation of Damaged Regions by the Bark Beetle in a Mexican Forest Using UAV Images and Deep Learning. Sustainability 2024, 16, 10731.

11. Yin, J.; Zhang, H.; Chen, Z.; Li, J. Detecting Emerald Ash Borer Boring Vibrations Using an Encoder–Decoder and Improved DenseNet Model. Pest Manag. Sci. 2025, 81, 384–401.

12. Tian, Y.; Wang, S.; Li, E.; Yang, G.; Liang, Z.; Tan, M. MD-YOLO: Multi-Scale Dense YOLO for Small Target Pest Detection. Comput. Electron. Agric. 2023, 213, 108233.

13. Dong, Q.; Sun, L.; Han, T.; Cai, M.; Gao, C. PestLite: A Novel YOLO-Based Deep Learning Technique for Crop Pest Detection. Agriculture 2024, 14, 228.

14. Ye, R.; Gao, Q.; Qian, Y.; Sun, J.; Li, T. Improved YOLOv8 and SAHI Model for the Collaborative Detection of Small Targets at the Micro Scale: A Case Study of Pest Detection in Tea. Agronomy 2024, 14, 1034.

15. Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; IEEE: Chennai, India, 2024; pp. 1–6.

16. Cagatay Akyon, F.; Onur Altinuc, S.; Temizel, A. Slicing Aided Hyper Inference and Fine-tuning for Small Object Detection. arXiv 2022, arXiv:2202.06934.

17. Yang, Z.; Feng, H.; Ruan, Y.; Weng, X. Tea Tree Pest Detection Algorithm Based on Improved Yolov7-Tiny. Agriculture 2023, 13, 1031.

18. Li, Z.; Shen, Y.; Tang, J.; Zhao, J.; Chen, Q.; Zou, H.; Kuang, Y. IMLL-DETR: An Intelligent Model for Detecting Multi-Scale Litchi Leaf Diseases and Pests in Complex Agricultural Environments. Expert Syst. Appl. 2025, 273, 126816.

19. Chen, H.; Wen, C.; Zhang, L.; Ma, Z.; Liu, T.; Wang, G.; Yu, H.; Yang, C.; Yuan, X.; Ren, J. Pest-PVT: A Model for Multi-Class and Dense Pest Detection and Counting in Field-Scale Environments. Comput. Electron. Agric. 2025, 230, 109864.

20. Zhao, C.; Bai, C.; Yan, L.; Xiong, H.; Suthisut, D.; Pobsuk, P.; Wang, D. AC-YOLO: Multi-Category and High-Precision Detection Model for Stored Grain Pests Based on Integrated Multiple Attention Mechanisms. Expert Syst. Appl. 2024, 255, 124659.

21. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

22. Deng, C.; Wang, M.; Liu, L.; Liu, Y.; Jiang, Y. Extended Feature Pyramid Network for Small Object Detection. IEEE Trans. Multimed. 2022, 24, 1968–1979.

23. Jiang, L.; Yuan, B.; Du, J.; Chen, B.; Xie, H.; Tian, J.; Yuan, Z. MFFSODNet: Multiscale Feature Fusion Small Object Detection Network for UAV Aerial Images. IEEE Trans. Instrum. Meas. 2024, 73, 5015214.

24. Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards Large-Scale Small Object Detection: Survey and Benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

25. Wang, H.; Liu, C.; Cai, Y.; Chen, L.; Li, Y. YOLOv8-QSD: An Improved Small Object Detection Algorithm for Autonomous Vehicles Based on YOLOv8. IEEE Trans. Instrum. Meas. 2024, 73, 2513916.

26. Zhang, Y.; Zhang, H.; Huang, Q.; Han, Y.; Zhao, M. DsP-YOLO: An Anchor-Free Network with DsPAN for Small Object Detection of Multiscale Defects. Expert Syst. Appl. 2024, 241, 122669.

27. Shen, C.; Qian, J.; Wang, C.; Yan, D.; Zhong, C. Dynamic Sensing and Correlation Loss Detector for Small Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5627212.

28. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 16965–16974.

29. Wang, C.-Y.; Yeh, I.-H.; Mark Liao, H.-Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Computer Vision—ECCV 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2025; Volume 15089, pp. 1–21.

30. Cai, X.; Lai, Q.; Wang, Y.; Wang, W.; Sun, Z.; Yao, Y. Poly Kernel Inception Network for Remote Sensing Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 27706–27716.

31. Xu, S.; Zheng, S.; Xu, W.; Xu, R.; Wang, C.; Zhang, J.; Teng, X.; Li, A.; Guo, L. HCF-Net: Hierarchical Context Fusion Network for Infrared Small Object Detection. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niigata, Japan, 26 June–5 July 2024; pp. 1–6.

32. Guo, J.; Chen, X.; Tang, Y.; Wang, Y. SLAB: Efficient Transformers with Simplified Linear Attention and Progressive Re-parameterized Batch Normalization. In Proceedings of the 41st International Conference on Machine Learning (ICML 2024), Vienna, Austria, 21–27 July 2024; PMLR: London, UK, 2024; Volume 235, pp. 16802–16812.

33. Liu, B.; Liu, L.; Zhuo, R.; Chen, W.; Duan, R.; Wang, G. A Dataset for Forestry Pest Identification. Front. Plant Sci. 2022, 13, 857104.

34. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

35. Shaker, A.; Maaz, M.; Rasheed, H.; Khan, S.; Yang, M.-H.; Khan, F.S. SwiftFormer: Efficient Additive Attention for Transformer-Based Real-Time Mobile Vision Applications. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 17379–17390.

36. Xia, Z.; Pan, X.; Song, S.; Li, E.; Huang, G. Vision Transformer with Deformable Attention. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4784–4793.

37. Pan, Z.; Cai, J.; Zhuang, B. Fast Vision Transformers with HiLo Attention. In Proceedings of the 36th International Conference on Neural Information Processing Systems (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates, Inc.: Red Hook, NY, USA, 2022; pp. 14541–14554.

38. Wu, H.; Huang, P.; Zhang, M.; Tang, W.; Yu, X. CMTFNet: CNN and Multiscale Transformer Fusion Network for Remote-Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

39. Ding, X.; Zhang, X.; Han, J.; Ding, G. Diverse Branch Block: Building a Convolution as an Inception-like Unit. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual Event/Nashville, TN, USA, 19–25 June 2021; pp. 10881–10890.

40. Gong, W. Lightweight Object Detection: A Study Based on YOLOv7 Integrated with ShuffleNetv2 and Vision Transformer. arXiv 2024, arXiv:2403.01736.

41. Song, Y.; Zhou, Y.; Qian, H.; Du, X. Rethinking Performance Gains in Image Dehazing Networks. arXiv 2022, arXiv:2209.11448.

42. Ding, X.; Zhang, Y.; Ge, Y.; Zhao, S.; Song, L.; Yue, X.; Shan, Y. UniRepLKNet: A Universal Perception Large-Kernel ConvNet for Audio, Video, Point Cloud, Time-Series and Image Recognition. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 5513–5524.

43. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

44. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

45. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

46. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part I; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12346, pp. 213–229.

47. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. In Proceedings of the 9th International Conference on Learning Representations (ICLR 2021), Virtual Event, Austria, 3–7 May 2021; OpenReview: Amherst, MA, USA, 2021; pp. 1–12.

48. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Virtual Event/Montreal, QC, Canada, 11–17 October 2021; pp. 9992–10002.

49. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

50. Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

51. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

52. Wu, X.; Zhan, C.; Lai, Y.-K.; Cheng, M.-M.; Yang, J. IP102: A Large-Scale Benchmark Dataset for Insect Pest Recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8779–8788.

| Label Number | Category | Label Number | Category |
|---|---|---|---|
| 0 | Drosicha contrahens (female) | 16 | Latoia consocia (Walker) (larvae) |
| 1 | Drosicha contrahens (male) | 17 | Plagiodera versicolora (Laicharting) (larvae) |
| 2 | Chalcophora japonica | 18 | Plagiodera versicolora (Laicharting) (ovum) |
| 3 | Erthesina fullo | 19 | Plagiodera versicolora (Laicharting) |
| 4 | Erthesina fullo (nymph2) | 20 | Spilarctia subcarnea (Walker) (larvae) |
| 5 | Erthesina fullo (nymph) | 21 | Spilarctia subcarnea (Walker) (larvae2) |
| 6 | Spilarctia subcarnea (Walker) | 22 | Apriona germari (Hope) |
| 7 | Psilogramma menephron | 23 | Hyphantria cunea |
| 8 | Sericinus montela | 24 | Cerambycidae (larvae) |
| 9 | Sericinus montela (larvae) | 25 | Psilogramma menephron (larvae) |
| 10 | Clostera anachoreta | 26 | Monochamus alternatus |
| 11 | Cnidocampa flavescens (Walker) | 27 | Micromelalopha troglodyte (Graeser) (larvae) |
| 12 | Cnidocampa flavescens (Walker) (pupa) | 28 | Hyphantria cunea (larvae) |
| 13 | Anoplophora chinensis | 29 | Hyphantria cunea (pupa) |
| 14 | Micromelalopha troglodyte (Graeser) | 30 | Latoia consocia (Walker) |
| 15 | Psacothea hilari (Pascoe) | | |

| Experimental Configuration | Configuration Information |
|---|---|
| Deep Learning Framework | PyTorch 2.0.0 |
| Software Version | PyCharm Professional 2023.1 |
| Python Version | Python 3.8.19 |
| Server | AMAX |
| GPU | NVIDIA GeForce RTX 2080 Ti |
| Number of Training Epochs | 300 |
| Training Batch Size | 16 |
| Number of Threads | 4 |
| Learning Rate | 0.01 |

| Baseline Model | Replace the Backbone | RepNCSPELAN4-CAA | FADN (DASI) | AIFI-RepBN | mAP (%) | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|---|
| RT-DETR | | | | | 87.1 | 19.9 | 57.1 |
| RT-DETR | √ | | | | 86.9 | 10.5 | 33.8 |
| RT-DETR | | √ | | | 87.6 | 19.1 | 53.1 |
| RT-DETR | | | √ | | 88.6 | 21.2 | 63.5 |
| RT-DETR | | | | √ | 87.9 | 19.9 | 57.1 |
| RT-DETR | √ | √ | | | 87.2 | 10.4 | 32.5 |
| RT-DETR | √ | √ | √ | | 87.9 | 11.6 | 37.1 |
| RT-DETR | √ | √ | √ | √ | 88.5 | 11.6 | 37.1 |
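The accuracy-efficiency trade-off in the ablation table can be quantified with simple arithmetic on its first and last rows (the RT-DETR baseline and the model with all four modifications). The snippet only re-derives relative changes from the reported values; the dictionary keys and the helper name are illustrative.

```python
# Values copied from the ablation table: RT-DETR baseline vs. all four modifications.
baseline = {"map": 87.1, "params_m": 19.9, "gflops": 57.1}
full = {"map": 88.5, "params_m": 11.6, "gflops": 37.1}


def relative_reduction(before, after):
    """Percentage reduction from `before` to `after`, rounded to one decimal."""
    return round(100.0 * (before - after) / before, 1)


param_cut = relative_reduction(baseline["params_m"], full["params_m"])
flop_cut = relative_reduction(baseline["gflops"], full["gflops"])
map_gain = round(full["map"] - baseline["map"], 1)

print(f"params -{param_cut}%, GFLOPs -{flop_cut}%, mAP +{map_gain} points")
# params -41.7%, GFLOPs -35.0%, mAP +1.4 points
```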

| Improved AIFI Module | mAP (%) | Params (M) | GFLOPs |
|---|---|---|---|
| AIFI-LPE | 86.4 | 20.0 | 57.1 |
| AIFI-EfficientAdditive | 86.6 | 19.9 | 57.3 |
| AIFI-DAttention | 87.2 | 19.9 | 57.3 |
| AIFI-HiLo | 87.1 | 19.9 | 57.2 |
| AIFI-MSMHSA | 87.6 | 19.9 | 57.2 |
| AIFI-RepBN | 87.9 | 19.9 | 57.1 |

| Improved AIFI Module | mAP (%) | Params (M) | GFLOPs |
|---|---|---|---|
| AIFI-LPE | 87.9 | 11.8 | 37.3 |
| AIFI-EfficientAdditive | 86.9 | 11.7 | 37.6 |
| AIFI-DAttention | 88.0 | 11.6 | 37.6 |
| AIFI-HiLo | 88.1 | 11.6 | 37.5 |
| AIFI-MSMHSA | 87.6 | 11.7 | 37.5 |
| AIFI-RepBN | 88.5 | 11.6 | 37.1 |

| Replace the RepC3 Module | mAP (%) | Params (M) | GFLOPs |
|---|---|---|---|
| DBBC3 | 87.5 | 19.9 | 57.1 |
| DGST | 87.1 | 18.6 | 50.4 |
| gConvC3 | 84.9 | 18.6 | 51.2 |
| DRBC3 | 86.5 | 18.2 | 48.4 |
| RepNCSPELAN4 | 86.7 | 18.6 | 50.3 |
| RepNCSPELAN4-CAA | 87.6 | 19.1 | 53.1 |

| Replace the RepC3 Module | mAP (%) | Params (M) | GFLOPs |
|---|---|---|---|
| DBBC3 | 88.1 | 12.6 | 43.1 |
| DGST | 87.4 | 11.2 | 34.0 |
| gConvC3 | 86.1 | 11.4 | 35.4 |
| DRBC3 | 87.3 | 10.7 | 31.6 |
| RepNCSPELAN4 | 87.2 | 11.1 | 33.6 |
| RepNCSPELAN4-CAA | 88.5 | 11.6 | 37.1 |

| Model | Precision (%) | Recall (%) | mAP (%) | Params (M) | GFLOPs | FPS (Frames/s) |
|---|---|---|---|---|---|---|
| RT-DETR-R18 | 96.3 | 93.9 | 87.1 | 19.9 | 57.1 | 141.5 |
| RT-DETR-R34 | 96.6 | 95.3 | 87.9 | 31.2 | 88.9 | 103.9 |
| RT-DETR-R50 | 97.0 | 95.6 | 87.1 | 42.0 | 129.7 | 62.5 |
| LightFAD-DETR | 97.3 | 94.8 | 88.5 | 11.6 | 31.7 | 106.3 |

| Model | Precision (%) | Recall (%) | mAP (%) | Params (M) | GFLOPs | FPS (Frames/s) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 90.2 | 86.7 | 77.4 | 137.1 | 361.6 | 13.6 |
| SSD512 | 92.1 | 93.6 | 81.6 | 24.8 | 97.5 | 42.1 |
| RetinaNet | 96.1 | 93.8 | 86.4 | 36.5 | 198.2 | 24.7 |
| YOLOv5m | 95.4 | 94.2 | 87.3 | 41.2 | 63.7 | 102.4 |
| YOLOv8m | 97.8 | 95.0 | 88.3 | 23.2 | 67.5 | 137.8 |
| YOLOv10m | 96.7 | 93.5 | 86.9 | 15.7 | 59.1 | 142.5 |
| YOLO11m [50] | 97.1 | 94.8 | 87.7 | 20.3 | 67.9 | 113.6 |
| YOLOv12m [51] | 96.8 | 95.4 | 87.9 | 20.1 | 67.7 | 103.2 |
| DETR | 95.6 | 93.9 | 86.6 | 41.1 | 86.0 | 14.2 |
| Deformable DETR | 95.8 | 94.3 | 87.1 | 39.4 | 173.2 | 22.9 |
| Swin-B | 96.7 | 94.5 | 87.7 | 88.3 | 47.0 | 11.4 |
| LightFAD-DETR | 97.3 | 94.8 | 88.5 | 11.6 | 31.7 | 106.3 |

| Model | Precision (%) | Recall (%) | mAP (%) | Params (M) | GFLOPs | FPS (Frames/s) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 44.3 | 42.9 | 28.6 | 137.1 | 361.6 | 13.6 |
| SSD512 | 52.1 | 48.3 | 32.1 | 24.8 | 97.5 | 42.1 |
| RetinaNet | 62.7 | 58.6 | 39.9 | 36.5 | 198.2 | 24.7 |
| YOLOv5m | 65.7 | 60.8 | 40.9 | 41.2 | 63.7 | 102.4 |
| YOLOv8m | 66.9 | 62.2 | 41.2 | 23.2 | 67.5 | 137.8 |
| DETR | 59.0 | 54.3 | 38.4 | 41.1 | 86.0 | 14.2 |
| Deformable DETR | 64.5 | 57.9 | 39.7 | 39.4 | 173.2 | 22.9 |
| Swin-B | 63.2 | 61.4 | 40.5 | 88.3 | 47.0 | 11.4 |
| RT-DETR-R18 | 65.2 | 59.7 | 40.1 | 19.9 | 57.1 | 141.5 |
| RT-DETR-R34 | 67.1 | 61.6 | 40.7 | 31.2 | 88.9 | 103.9 |
| RT-DETR-R50 | 66.7 | 62.3 | 41.2 | 42.0 | 129.7 | 62.5 |
| LightFAD-DETR | 66.4 | 61.2 | 41.2 | 11.6 | 31.7 | 106.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, X.; Wang, X.; Kang, Y.; Deng, Y.; Lu, Q.; Tang, J.; Shi, Y.; Zhao, J. Lightweight Pest Object Detection Model for Complex Economic Forest Tree Scenarios. Insects 2025, 16, 959. https://doi.org/10.3390/insects16090959

Cheng X, Wang X, Kang Y, Deng Y, Lu Q, Tang J, Shi Y, Zhao J. Lightweight Pest Object Detection Model for Complex Economic Forest Tree Scenarios. Insects. 2025; 16(9):959. https://doi.org/10.3390/insects16090959

Chicago/Turabian Style: Cheng, Xiaohui, Xukun Wang, Yanping Kang, Yun Deng, Qiu Lu, Jian Tang, Yuanyuan Shi, and Junyu Zhao. 2025. "Lightweight Pest Object Detection Model for Complex Economic Forest Tree Scenarios" Insects 16, no. 9: 959. https://doi.org/10.3390/insects16090959

APA Style: Cheng, X., Wang, X., Kang, Y., Deng, Y., Lu, Q., Tang, J., Shi, Y., & Zhao, J. (2025). Lightweight Pest Object Detection Model for Complex Economic Forest Tree Scenarios. Insects, 16(9), 959. https://doi.org/10.3390/insects16090959