Hybrid ML-Based Cutting Temperature Prediction in Hard Milling Under Sustainable Lubrication

Abstract

1. Introduction

2. Materials and Methods

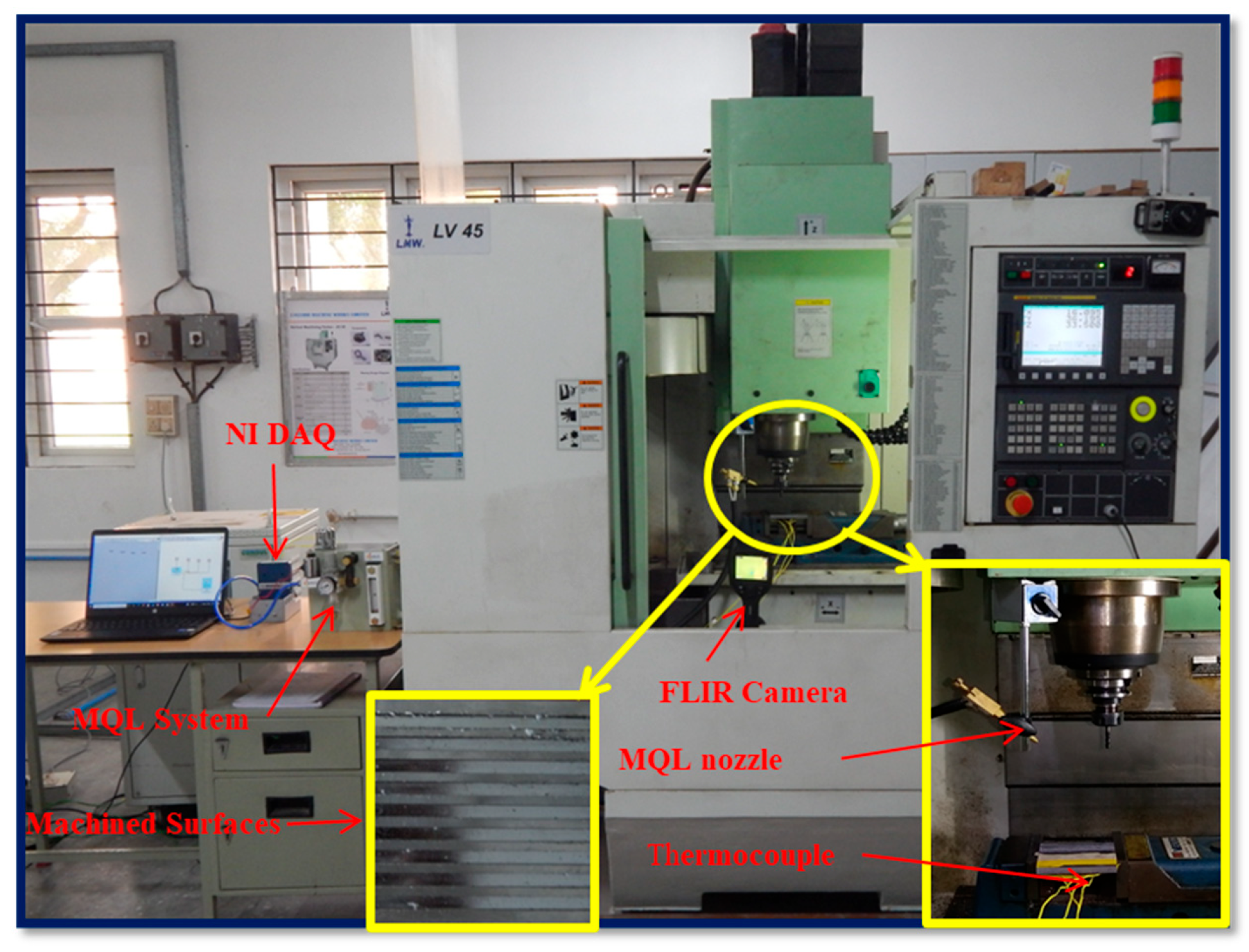

2.1. Data Acquisition

2.2. Data Preprocessing and ML Model Generation

3. Results and Discussions

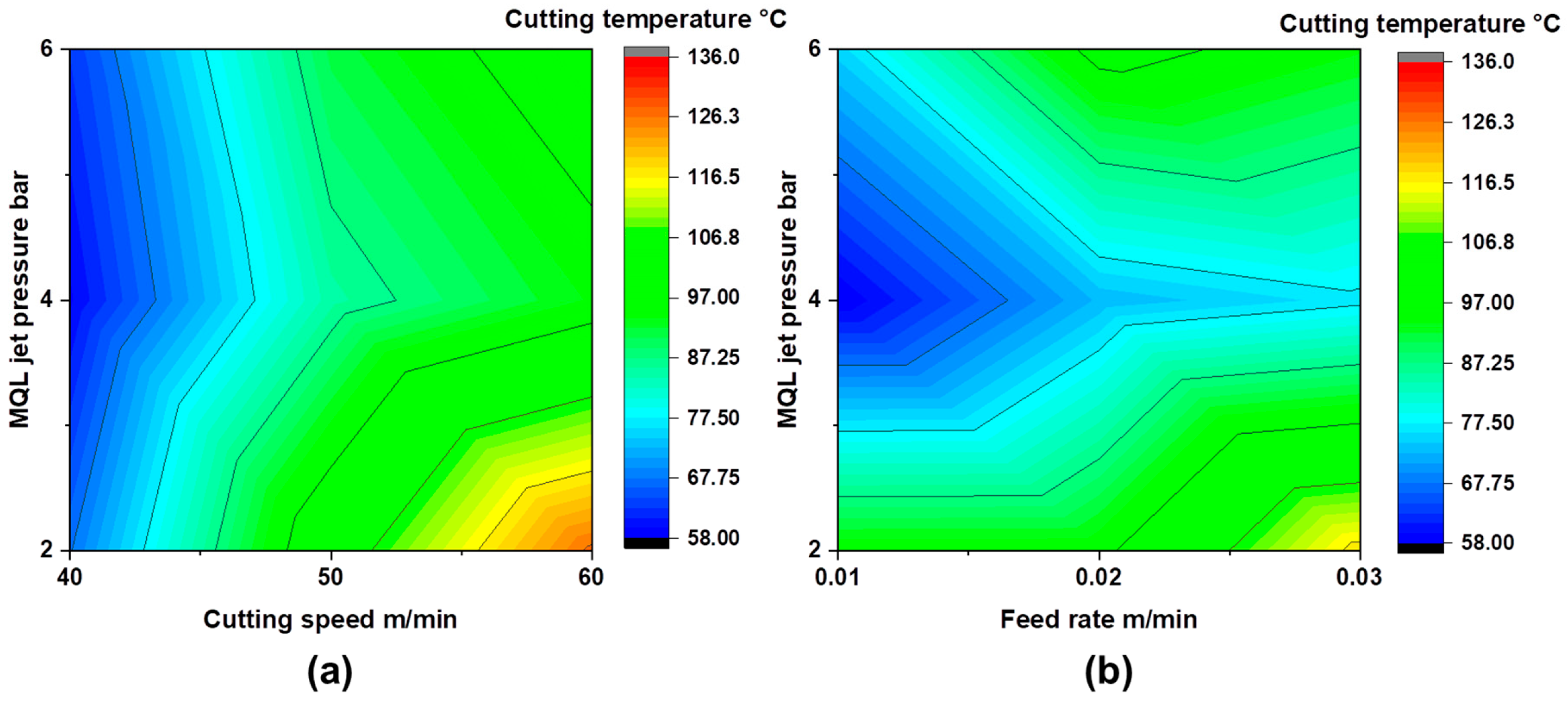

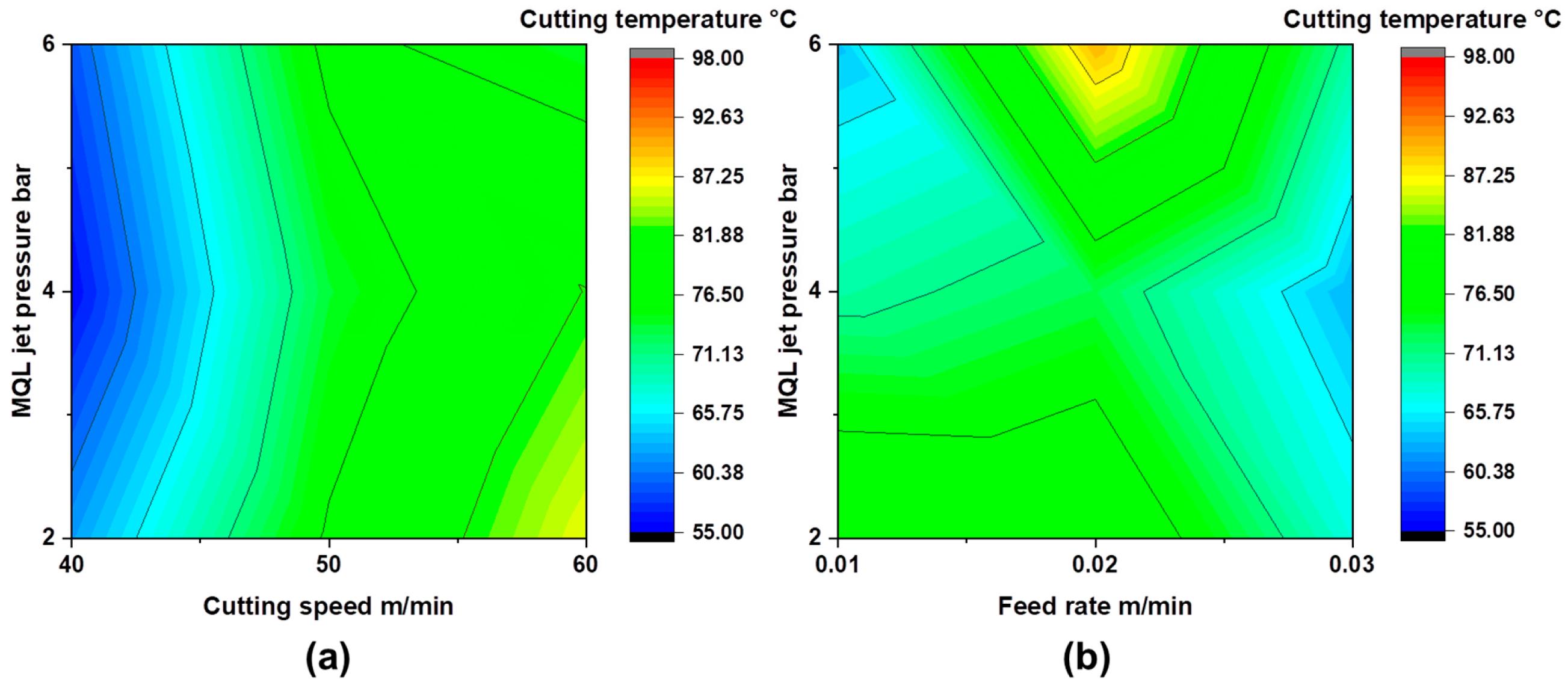

3.1. Influence of Lubrication Environment on Cutting Temperature

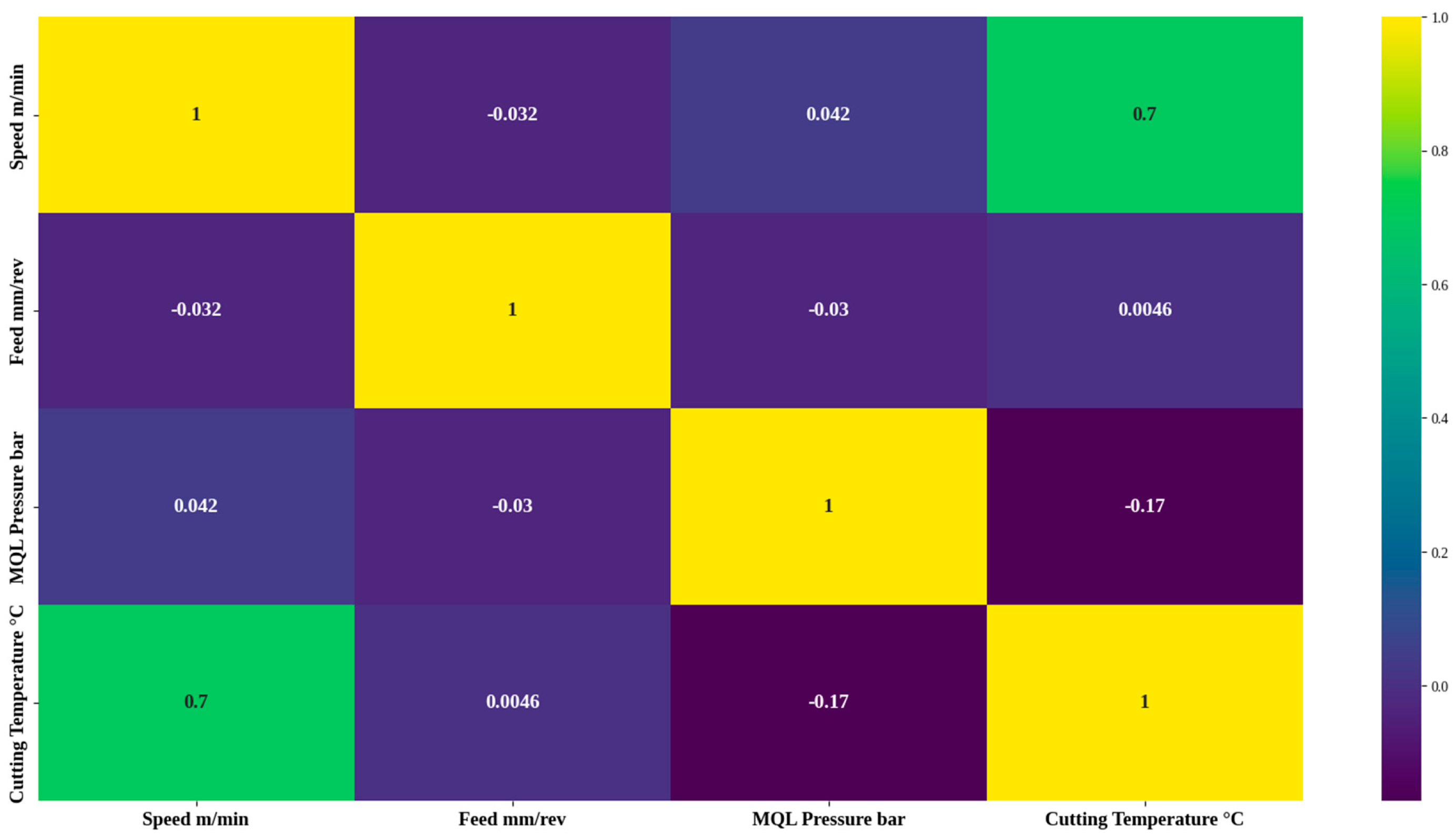

3.2. Insight into Data Processing

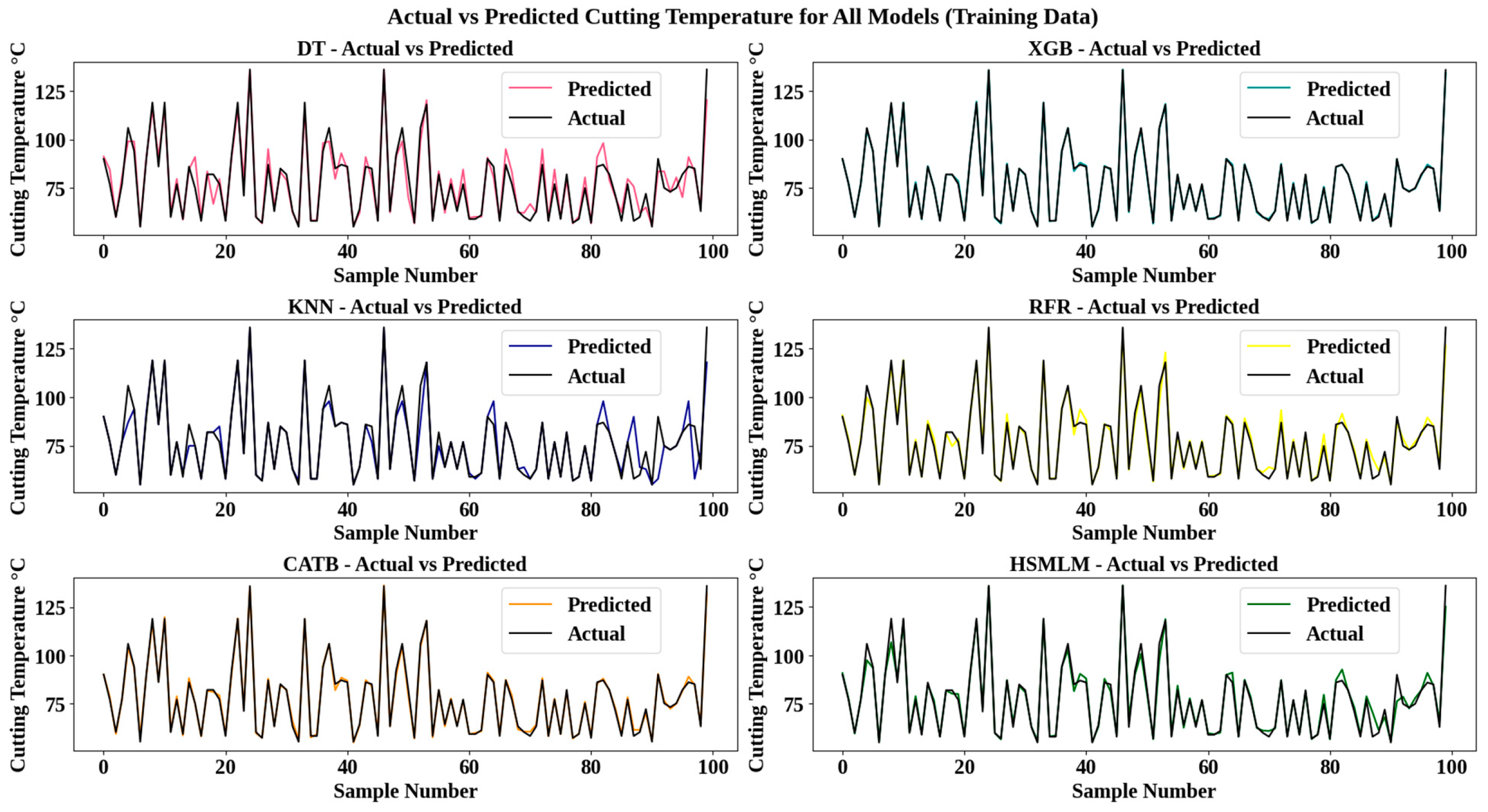

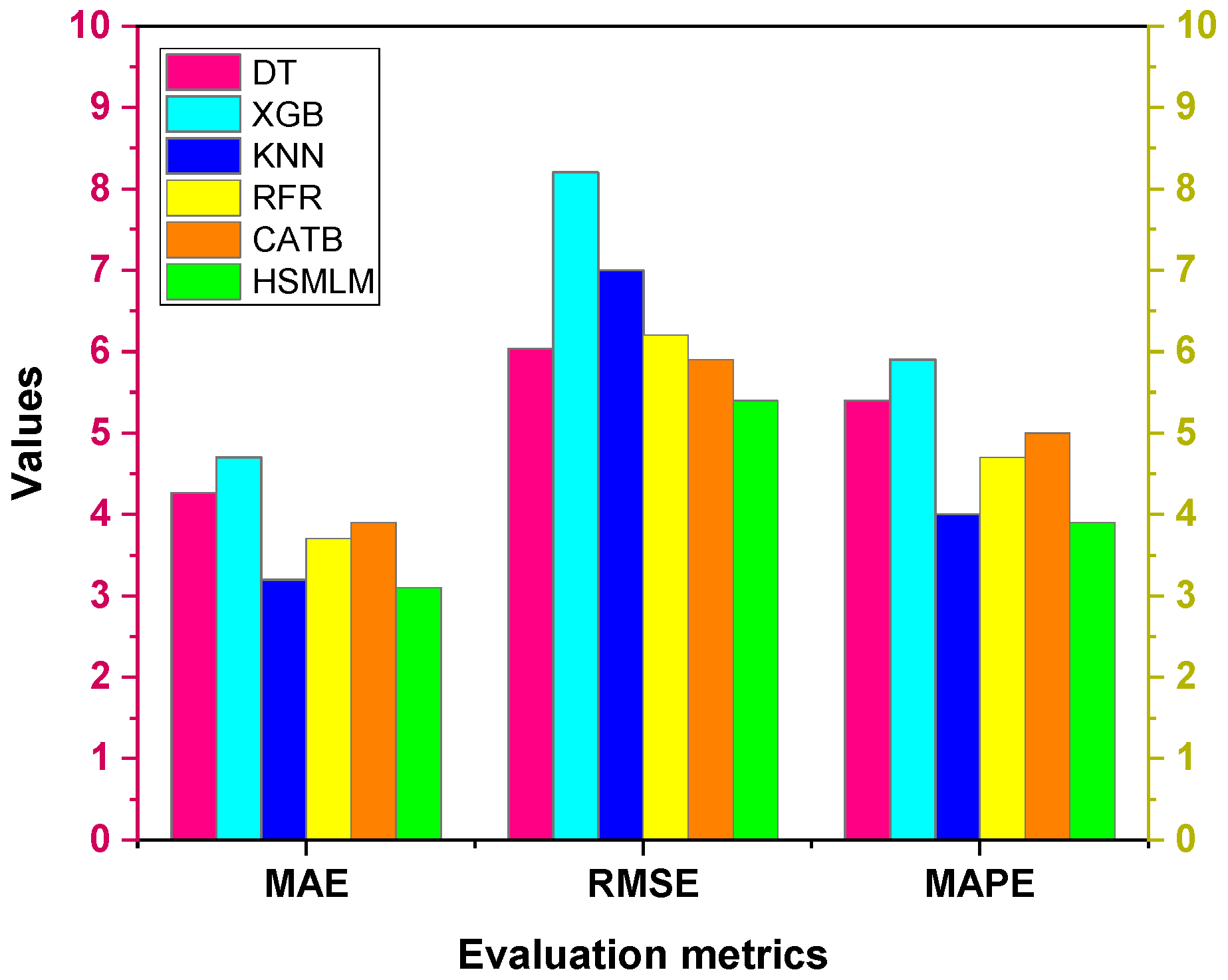

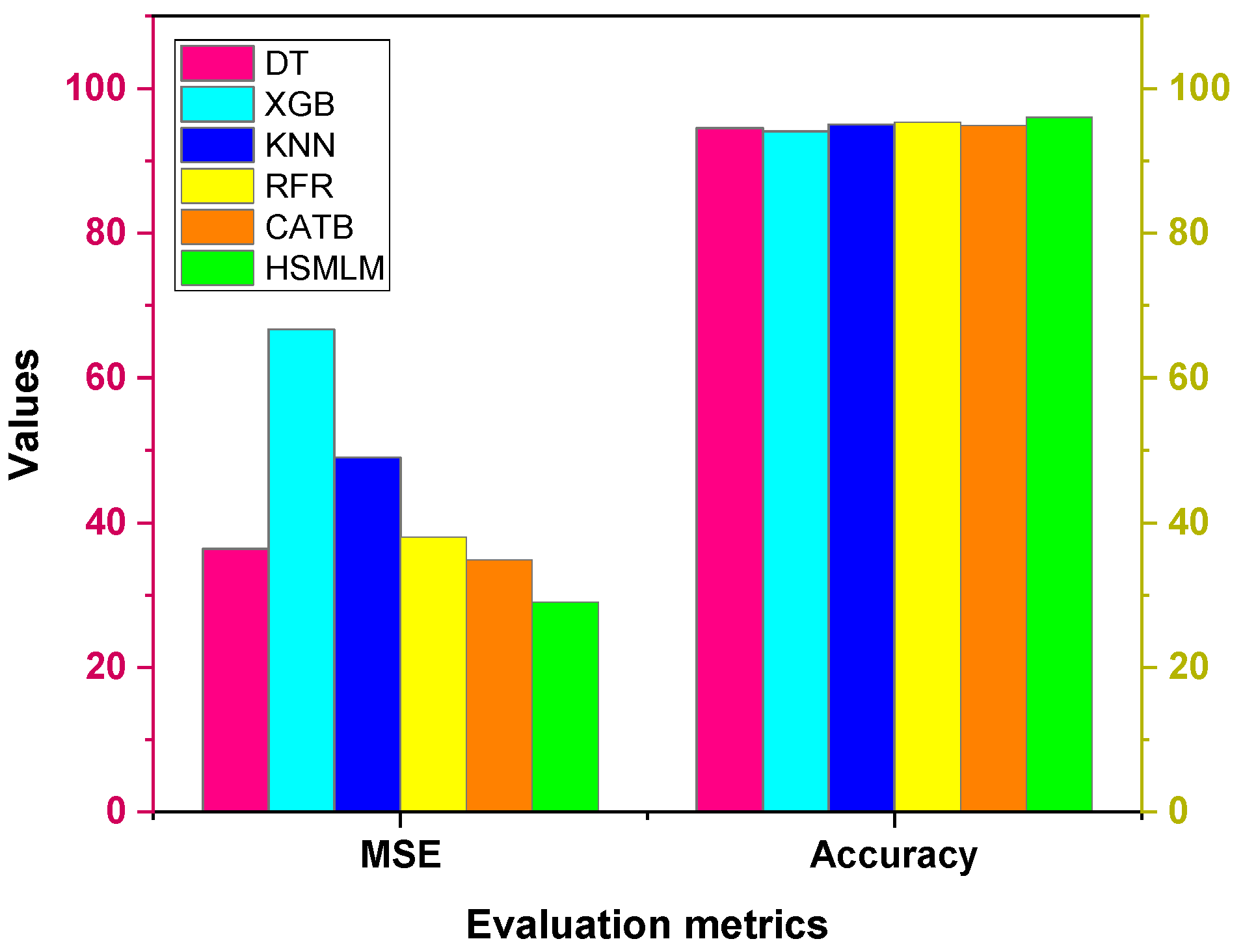

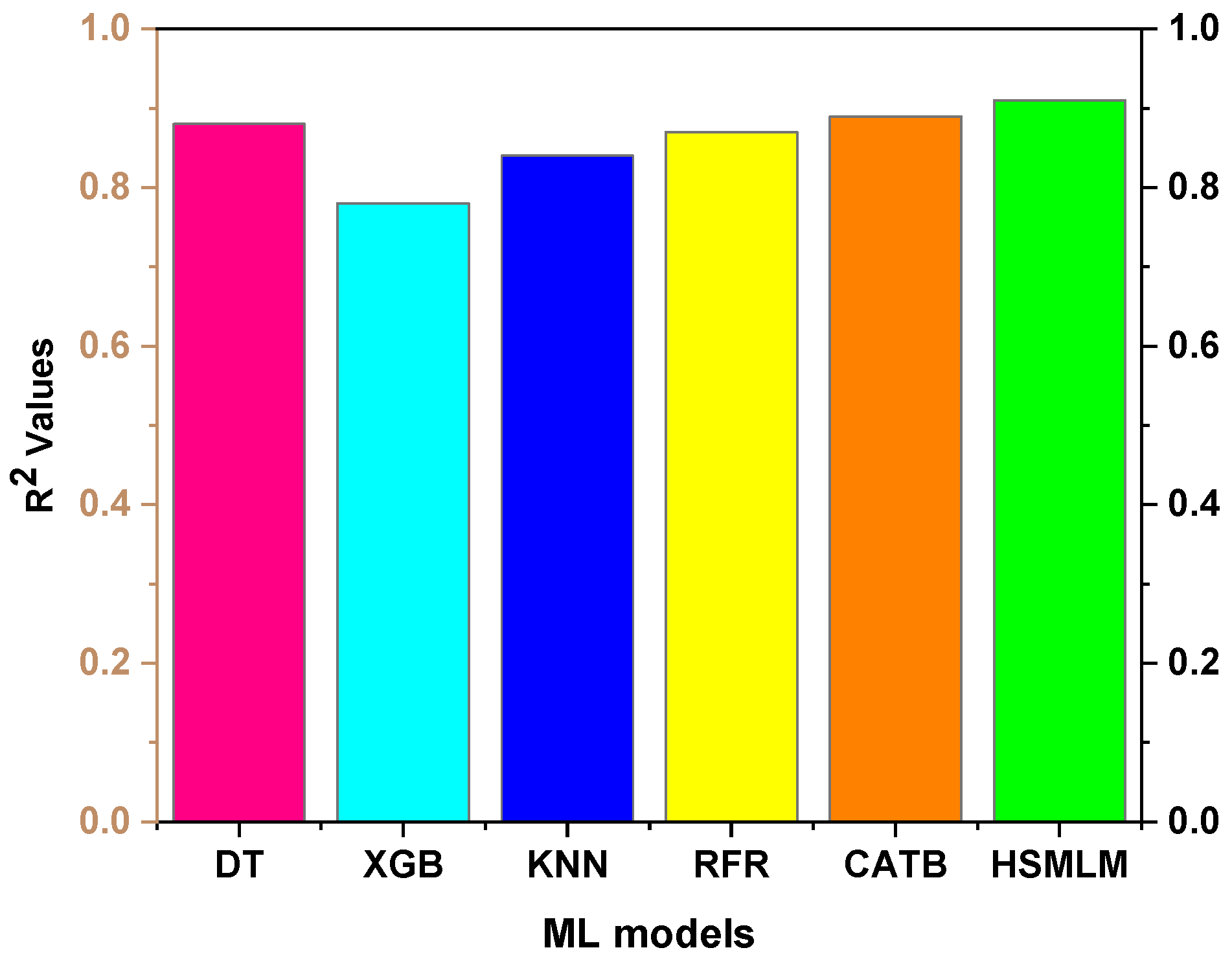

3.3. Development and Comparison of ML Models

4. Conclusions


- The GEMQL environment notably lowers Tc compared to CMQL, due to Gnps-enhanced lubrication that improves thermal stability, fluid retention, and protective tribo-film formation at the tool–workpiece interface.


- Under CMQL, the lowest Tc (58 °C) was observed at a Vc of 40 m/min, an f of 0.01 mm/rev, and a Pj of 4 bar.


- The GEMQL environment achieved a maximum Tc reduction of 36.8% relative to CMQL at a Vc of 60 m/min, an f of 0.01 mm/rev, and a Pj of 2 bar. This reduction exceeds the one reported in a previous study that used Gnps-enhanced sunflower oil in MQL milling.


- Under the optimum parameters (a Vc of 40 m/min, an f of 0.03 mm/rev, and a Pj of 4 bar), GEMQL achieves the lowest Tc of 55 °C, significantly lower than that obtained under CMQL.


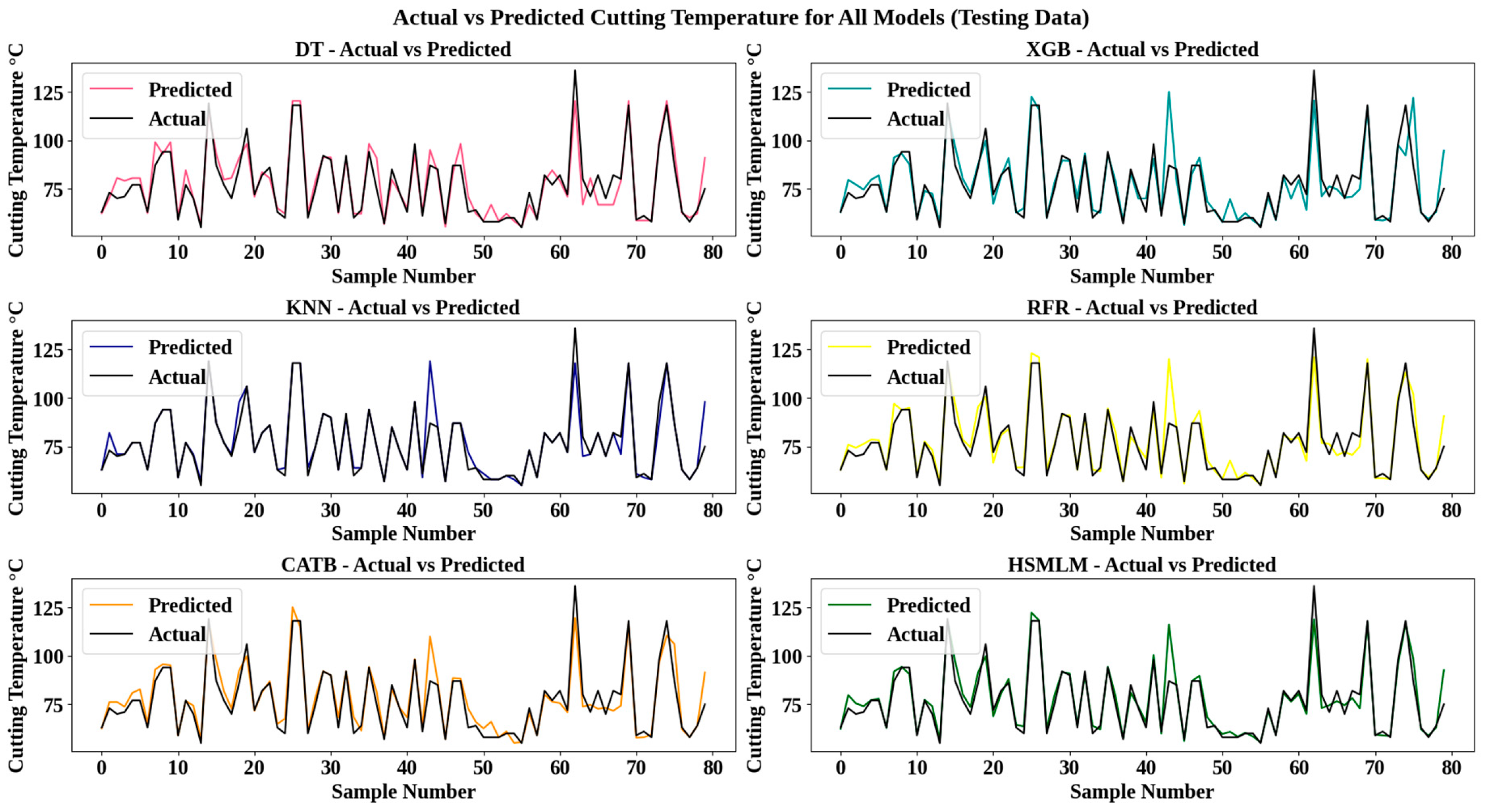

- Among the six ML models, XGBoost performed worst (MAE: 4.7, RMSE: 8.2, MAPE: 5.9%, MSE: 66.7, accuracy: 94.1%, R²: 0.78). This is attributed to its sensitivity to hyperparameters and its higher variance, which limit its generalization on the sparse test data.


- The proposed HSMLM achieved the best performance (MAE: 3.1, RMSE: 5.4, MAPE: 3.9%, MSE: 29, accuracy: 96%, R²: 0.91), because its ensemble stacking combines the base models to improve accuracy, robustness, and generalization. It also outperforms an existing physics-informed meta-learning approach reported for surface roughness prediction.
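The percentage reductions in Tc quoted above are presumably measured against the CMQL baseline at the same cutting condition. The section does not state the formula, so the standard definition below is an assumption:

$$
\Delta T_c\,(\%) = \frac{T_{c,\mathrm{CMQL}} - T_{c,\mathrm{GEMQL}}}{T_{c,\mathrm{CMQL}}} \times 100
$$

Under this definition, the 36.8% reduction at a Vc of 60 m/min, an f of 0.01 mm/rev, and a Pj of 2 bar means that $T_{c,\mathrm{GEMQL}} \approx (1 - 0.368)\,T_{c,\mathrm{CMQL}} = 0.632\,T_{c,\mathrm{CMQL}}$ at that condition.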
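The models above are ranked by six error measures. The following is a minimal, dependency-free sketch of how those measures can be computed from measured and predicted Tc values. The function name `regression_metrics` is hypothetical. Treating "accuracy" as 100 − MAPE is an assumption, though it matches both reported pairs (5.9 → 94.1% and 3.9 → about 96%). The sample values are illustrative only and are not the paper's data.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Error metrics used to rank the regressors (MAE, MSE, RMSE, MAPE, accuracy, R²)."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    # MAPE in percent; assumes no measured temperature is zero
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    # Assumption: "accuracy" = 100 - MAPE, consistent with the reported pairs
    # (XGBoost: MAPE 5.9 -> 94.1 %; HSMLM: MAPE 3.9 -> ~96 %)
    accuracy = 100.0 - mape
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R² is undefined when all measured values are equal")
    r2 = 1.0 - (mse * n) / ss_tot  # mse * n is the residual sum of squares
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "MAPE": mape,
            "accuracy": accuracy, "R2": r2}


# Hypothetical measured vs predicted Tc values in °C (illustrative only)
measured = [100.0, 80.0, 60.0, 120.0]
predicted = [90.0, 84.0, 63.0, 117.0]
print(regression_metrics(measured, predicted))
```

Because RMSE = √MSE, the reported figures can be cross-checked. √66.7 ≈ 8.17 and √29 ≈ 5.39 round to the stated RMSE values of 8.2 and 5.4.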
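HSMLM's performance is attributed to ensemble stacking, in which the predictions of several base regressors become the inputs of a second-level (meta) model. The sketch below illustrates that mechanism in dependency-free Python under stated assumptions. The two base learners (k-nearest neighbours and ordinary least squares), the linear meta-learner, the index-based fold split, and the function names (`fit_knn`, `fit_linear`, `fit_stacking`) are all hypothetical stand-ins. They are not the paper's six models or its actual HSMLM configuration. The meta-learner is trained on out-of-fold predictions, so it never sees a base model's output on rows that model was trained on.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Matrix = List[List[float]]
Predictor = Callable[[Sequence[float]], float]
Fitter = Callable[[Matrix, Vector], Predictor]


def solve(a: Matrix, b: Vector) -> Vector:
    """Solve a small square linear system by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - acc) / aug[r][r]
    return x


def fit_linear(X: Matrix, y: Vector, ridge: float = 1e-8) -> Predictor:
    """Least-squares linear model with intercept; a tiny ridge term keeps X^T X invertible."""
    rows = [[1.0] + list(x) for x in X]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
            for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(p)]
    w = solve(xtx, xty)
    return lambda x: w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


def fit_knn(X: Matrix, y: Vector, k: int = 3) -> Predictor:
    """k-nearest-neighbour regressor: unweighted mean of the k closest targets."""
    data = list(zip(X, y))

    def predict(x: Sequence[float]) -> float:
        nearest = sorted(data, key=lambda d: math.dist(d[0], x))[:k]
        return sum(t for _, t in nearest) / len(nearest)

    return predict


def fit_stacking(X: Matrix, y: Vector, base_fitters: List[Fitter], n_folds: int = 4) -> Predictor:
    """Stacked regressor: a linear meta-learner trained on out-of-fold base predictions."""
    n = len(X)
    oof = [[0.0] * len(base_fitters) for _ in range(n)]
    for fold in range(n_folds):
        train = [i for i in range(n) if i % n_folds != fold]
        test = [i for i in range(n) if i % n_folds == fold]
        for j, fit in enumerate(base_fitters):
            model = fit([X[i] for i in train], [y[i] for i in train])
            for i in test:
                oof[i][j] = model(X[i])  # prediction for a row this base model never saw
    meta = fit_linear(oof, y)
    # Refit each base model on all rows for use at prediction time
    bases = [fit(X, y) for fit in base_fitters]
    return lambda x: meta([m(x) for m in bases])


# Hypothetical toy data (not the paper's measurements): an exactly linear target,
# so the meta-learner should lean almost entirely on the linear base model.
X_demo = [[float(i), float((3 * i) % 7)] for i in range(12)]
y_demo = [1.0 + 2.0 * a + 3.0 * b for a, b in X_demo]
stacked = fit_stacking(X_demo, y_demo, [fit_knn, fit_linear])
print(f"stacked prediction at [5.5, 2.0]: {stacked([5.5, 2.0]):.3f}")
```

With real machining data, features such as Vc (tens of m/min), f (hundredths of mm/rev), and Pj (bar) differ by orders of magnitude. They would therefore need standardizing before a distance-based learner such as k-NN is used. The toy data here skip that step for brevity.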

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Budzynski, P.; Kara, L.; Küçükömeroğlu, T.; Kaminski, M. The influence of nitrogen implantation on tribological properties of AISI H11 steel. Vacuum 2015, 122, 230–235.

- Hassanpour, H.; Sadeghi, M.H.; Rasti, A.; Shajari, S. Investigation of surface roughness, microhardness and white layer thickness in hard milling of AISI 4340 using minimum quantity lubrication. J. Clean. Prod. 2016, 120, 124–134.

- Duc, T.M.; Tuan, N.M.; Long, T.T. Optimization of Al2O3 nanoparticle concentration and cutting parameters in hard milling under nanofluid MQL environment. Adv. Mech. Eng. 2024, 16, 16878132241257114.

- Krolczyk, G.M.; Maruda, R.W.; Krolczyk, J.B.; Wojciechowski, S.; Mia, M.; Nieslony, P.; Budzik, G. Ecological trends in machining as a key factor in sustainable production—A review. J. Clean. Prod. 2019, 218, 601–615.

- Kazeem, R.A.; Fadare, D.A.; Ikumapayi, O.M.; Adediran, A.A.; Aliyu, S.J.; Akinlabi, S.A.; Jen, T.C.; Akinlabi, E.T. Advances in the Application of Vegetable-Oil-Based Cutting Fluids to Sustainable Machining Operations—A Review. Lubricants 2022, 10, 69.

- Azman, N.F.; Samion, S. Dispersion Stability and Lubrication Mechanism of Nanolubricants: A Review. Int. J. Precis. Eng. Manuf. Green Technol. 2019, 6, 393–414.

- Haq, M.A.u.; Hussain, S.; Ali, M.A.; Farooq, M.U.; Mufti, N.A.; Pruncu, C.I.; Wasim, A. Evaluating the effects of nano-fluids based MQL milling of IN718 associated to sustainable productions. J. Clean. Prod. 2021, 310, 127463.

- Pal, A.; Chatha, S.S.; Sidhu, H.S. Performance evaluation of the minimum quantity lubrication with Al2O3-mixed vegetable-oil-based cutting fluid in drilling of AISI 321 stainless steel. J. Manuf. Process. 2021, 66, 238–249.

- Dong, P.Q.; Duc, T.M.; Long, T.T. Performance evaluation of MQCL hard milling of SKD 11 tool steel using MoS2 nanofluid. Metals 2019, 9, 658.

- Hamdi, A.; Yapan, Y.F.; Uysal, A.; Merghache, S.M. Machinability assessment and optimization of turning AISI H11 steel under various minimum quantity lubrication (MQL) conditions using nanofluids. Int. J. Adv. Manuf. Technol. 2025, 137, 4089–4107.

- Taghvaei, M.; Jafari, S.M. Application and stability of natural antioxidants in edible oils in order to substitute synthetic additives. J. Food Sci. Technol. 2015, 52, 1272–1282.

- Padmini, R.; Vamsi Krishna, P.; Krishna Mohana Rao, G. Performance assessment of micro and nano solid lubricant suspensions in vegetable oils during machining. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2015, 229, 2196–2204.

- Ni, J.; Cui, Z.; Wu, C.; Sun, J.; Zhou, J. Evaluation of MQL broaching AISI 1045 steel with sesame oil containing nano-particles under best concentration. J. Clean. Prod. 2021, 320, 128888.

- Phiri, J.; Gane, P.; Maloney, T.C. General overview of graphene: Production, properties and application in polymer composites. Mater. Sci. Eng. B 2017, 215, 9–28.

- Hou, X.; Xie, Z.; Li, C.; Li, G.; Chen, Z. Study of electronic structure, thermal conductivity, elastic and optical properties of α, β, γ-graphyne. Materials 2018, 11, 188.

- Touggui, Y.; Uysal, A.; Emiroglu, U.; Belhadi, S.; Temmar, M. Evaluation of MQL performances using various nanofluids in turning of AISI 304 stainless steel. Int. J. Adv. Manuf. Technol. 2021, 115, 3983–3997.

- de Paiva, R.L.; de Oliveira, D.; Ruzzi, R.d.S.; Jackson, M.J.; Gelamo, R.V.; Abrão, A.M.; da Silva, R.B. Surface roughness and morphology evaluation of bearing steel after grinding with multilayer graphene platelets dispersed in different base fluids. Wear 2023, 523, 204852.

- Pal, A.; Singh, S.; Singh, H. Experimental investigation on the performance of MQL drilling of AISI 321 stainless steel using nano-graphene enhanced vegetable-oil-based cutting fluid. Tribol. Int. 2020, 151, 106508.

- Jiang, J.; Wu, Z.; Pan, S.; Meng, X.; Liu, D.; Mu, K.; Zhu, Q.; Zhu, J.; Cai, C. High-performance liquid metal-based SiC/Graphene-Mo hybrid nanofluid for hydraulic transmission. Tribol. Int. 2024, 198, 109871.

- Li, M.; Li, Q.; Pan, X.; Wang, J.; Wang, Z.; Xu, S.; Zhou, Y.; Ma, L.; Yu, T. On understanding milling characteristics of TC4 alloy during graphene nanofluid minimum quantity lubrication with bionic micro-texture tool. J. Mater. Res. Technol. 2025, 36, 4200–4214.

- Fisher, O.J.; Watson, N.J.; Porcu, L.; Bacon, D.; Rigley, M.; Gomes, R.L. Multiple target data-driven models to enable sustainable process manufacturing: An industrial bioprocess case study. J. Clean. Prod. 2021, 296, 126242.

- Mia, M.; Dhar, N.R. Response surface and neural network based predictive models of cutting temperature in hard turning. J. Adv. Res. 2016, 7, 1035–1044.

- Gupta, M.K.; Mia, M.; Pruncu, C.I.; Khan, A.M.; Rahman, M.A.; Jamil, M.; Sharma, V.S. Modeling and performance evaluation of Al2O3, MoS2 and graphite nanoparticle-assisted MQL in turning titanium alloy: An intelligent approach. J. Braz. Soc. Mech. Sci. Eng. 2020, 42, 207.

- Zhang, Y.; Xu, X. Machine learning cutting force, surface roughness, and tool life in high speed turning processes. Manuf. Lett. 2021, 29, 84–89.

- Jurkovic, Z.; Cukor, G.; Brezocnik, M.; Brajkovic, T. A comparison of machine learning methods for cutting parameters prediction in high speed turning process. J. Intell. Manuf. 2018, 29, 1683–1693.

- Abu-Mahfouz, I.; El Ariss, O.; Esfakur Rahman, A.H.M.; Banerjee, A. Surface roughness prediction as a classification problem using support vector machine. Int. J. Adv. Manuf. Technol. 2017, 92, 803–815.

- Sizemore, N.E.; Nogueira, M.L.; Greis, N.P.; Davies, M.A. Application of machine learning to the prediction of surface roughness in diamond machining. Procedia Manuf. 2020, 48, 1029–1040.

- Azevedo, B.F.; Rocha, A.M.A.C.; Pereira, A.I. Hybrid Approaches to Optimization and Machine Learning Methods: A Systematic Literature Review. Mach. Learn. 2024, 113, 4055–4097.

- Ostad Ali Akbari, V.; Kuffa, M.; Wegener, K. Physics-informed Bayesian machine learning for probabilistic inference and refinement of milling stability predictions. CIRP J. Manuf. Sci. Technol. 2023, 45, 225–239.

- Rahman, M.W.; Zhao, B.; Pan, S.; Qu, Y. Microstructure-informed machine learning for understanding corrosion resistance in structural alloys through fusion with experimental studies. Comput. Mater. Sci. 2025, 248, 113624.

- Kim, G.; Yang, S.M.; Kim, D.M.; Kim, S.; Choi, J.G.; Ku, M.; Lim, S.; Park, H.W. Bayesian-based uncertainty-aware tool-wear prediction model in end-milling process of titanium alloy. Appl. Soft Comput. 2023, 148, 110922.

- Huang, Z.; Shao, J.; Guo, W.; Li, W.; Zhu, J.; Fang, D. Hybrid machine learning-enabled multi-information fusion for indirect measurement of tool flank wear in milling. Meas. J. Int. Meas. Confed. 2023, 206, 112255.

- Karmi, Y.; Boumediri, H.; Reffas, O.; Chetbani, Y.; Ataya, S.; Khan, R.; Yallese, M.A.; Laouissi, A. Integration of Hybrid Machine Learning and Multi-Objective Optimization for Enhanced Turning Parameters of EN-GJL-250 Cast Iron. Crystals 2025, 15, 264.

- Zhang, S.; Ding, T.C.; Li, J.F. Microstructural alteration and microhardness at near-surface of AISI H13 steel by hard milling. Mach. Sci. Technol. 2012, 16, 473–486.

- Balasuadhakar, A.; Thirumalai Kumaran, S.; Uthayakumar, M. Machine learning prediction of surface roughness in sustainable machining of AISI H11 tool steel. Smart Mater. Manuf. 2025, 3, 100075.

- Al-Janabi, A.S.; Hussin, M.; Abdullah, M.Z. Stability, thermal conductivity and rheological properties of graphene and MWCNT in nanolubricant using additive surfactants. Case Stud. Therm. Eng. 2021, 28, 101607.

- Peng, R.; Liu, J.; Chen, M.; Tong, J.; Zhao, L. Development of a pressurized internal cooling milling cutter and its machining performance assessment. Precis. Eng. 2021, 72, 315–329.

- Rohit, J.N.; Surendra Kumar, K.; Sura Reddy, N.; Kuppan, P.; Balan, A.S.S. Computational Fluid Dynamics Analysis of MQL Spray Parameters and Its Influence on MQL Milling of SS304. In Simulations for Design and Manufacturing; Lecture Notes on Multidisciplinary Industrial Engineering; Springer: Singapore, 2018; pp. 45–78. ISBN 9789811085185.

- Samy, G.S.; Thirumalai Kumaran, S.; Uthayakumar, M. An analysis of end milling performance on B4C particle reinforced aluminum composite. J. Aust. Ceram. Soc. 2017, 53, 373–383.

- Dewes, R.C.; Ng, E.; Chua, K.S.; Newton, P.G.; Aspinwall, D.K. Temperature measurement when high speed machining hardened mould/die steel. J. Mater. Process. Technol. 1999, 92–93, 293–301.

- Qian, S.; Peng, T.; Tao, Z.; Li, X.; Nazir, M.S.; Zhang, C. An evolutionary deep learning model based on XGBoost feature selection and Gaussian data augmentation for AQI prediction. Process Saf. Environ. Prot. 2024, 191, 836–851.

- Patgiri, R.; Katari, H.; Kumar, R.; Sharma, D. Empirical Study on Malicious URL Detection Using Machine Learning. In Distributed Computing and Internet Technology, Proceedings of the 15th International Conference, ICDCIT 2019, Bhubaneswar, India, 10–13 January 2019; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer International Publishing: Berlin/Heidelberg, Germany, 2019; Volume 11319, pp. 380–388.

- Rodríguez, J.J.; Quintana, G.; Bustillo, A.; Ciurana, J. A decision-making tool based on decision trees for roughness prediction in face milling. Int. J. Comput. Integr. Manuf. 2017, 30, 943–957.

- Abedi, R.; Costache, R.; Shafizadeh-Moghadam, H.; Pham, Q.B. Flash-flood susceptibility mapping based on XGBoost, random forest and boosted regression trees. Geocarto Int. 2022, 37, 5479–5496.

- Xiong, L.; Yao, Y. Study on an adaptive thermal comfort model with K-nearest-neighbors (KNN) algorithm. Build. Environ. 2021, 202, 108026.

- Everingham, Y.; Sexton, J.; Skocaj, D.; Inman-Bamber, G. Accurate prediction of sugarcane yield using a random forest algorithm. Agron. Sustain. Dev. 2016, 36, 27.

- Jabeur, S.B.; Gharib, C.; Mefteh-Wali, S.; Ben Arfi, W. CatBoost model and artificial intelligence techniques for corporate failure prediction. Technol. Forecast. Soc. Chang. 2021, 166, 120658.

- Rtayli, N.; Enneya, N. Enhanced credit card fraud detection based on SVM-recursive feature elimination and hyper-parameters optimization. J. Inf. Secur. Appl. 2020, 55, 102596.

- Nawa, K.; Hagiwara, K.; Nakamura, K. Prediction-accuracy improvement of neural network to ferromagnetic multilayers by Gaussian data augmentation and ensemble learning. Comput. Mater. Sci. 2023, 219, 112032.

- Nahar, A.; Das, D. MetaLearn: Optimizing routing heuristics with a hybrid meta-learning approach in vehicular ad-hoc networks. Ad Hoc Netw. 2023, 138, 102996.

- Nguyen-Sy, T. Optimized hybrid XGBoost-CatBoost model for enhanced prediction of concrete strength and reliability analysis using Monte Carlo simulations. Appl. Soft Comput. 2024, 167, 112490.

- Özbek, O.; Saruhan, H. The effect of vibration and cutting zone temperature on surface roughness and tool wear in eco-friendly MQL turning of AISI D2. J. Mater. Res. Technol. 2020, 9, 2762–2772.

- Hadad, M.J.; Tawakoli, T.; Sadeghi, M.H.; Sadeghi, B. Temperature and energy partition in minimum quantity lubrication-MQL grinding process. Int. J. Mach. Tools Manuf. 2012, 54–55, 10–17.

- Liu, Z.Q.; Cai, X.J.; Chen, M.; An, Q.L. Investigation of cutting force and temperature of end-milling Ti-6Al-4V with different minimum quantity lubrication (MQL) parameters. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2011, 225, 1273–1279.

- Zaman, P.B.; Dhar, N.R. Multi-objective Optimization of Double-Jet MQL System Parameters Meant for Enhancing the Turning Performance of Ti–6Al–4V Alloy. Arab. J. Sci. Eng. 2020, 45, 9505–9526.

- Pal, A.; Chatha, S.S.; Singh, K. Performance evaluation of minimum quantity lubrication technique in grinding of AISI 202 stainless steel using nano-MoS2 with vegetable-based cutting fluid. Int. J. Adv. Manuf. Technol. 2020, 110, 125–137.

- Gaitonde, V.N.; Karnik, S.R.; Davim, J.P. Selection of optimal MQL and cutting conditions for enhancing machinability in turning of brass. J. Mater. Process. Technol. 2008, 204, 459–464.

- Peng, R.; Shen, J.; Tang, X.; Zhao, L.; Gao, J. Performances of a tailored vegetable oil-based graphene nanofluid in the MQL internal cooling milling. J. Manuf. Process. 2025, 134, 814–831.

- dos Santos, R.G.; de Paiva, J.M.F.; Torres, R.D.; Amorim, F.L. Performance of cutting fluids with nanoparticles in the Ti5553 alloy turning process using high-speed cutting. Int. J. Adv. Manuf. Technol. 2025, 136, 4623–4645.

- Danish, M.; Gupta, M.K.; Rubaiee, S.; Ahmed, A.; Sarikaya, M. Influence of graphene reinforced sunflower oil on thermo-physical, tribological and machining characteristics of Inconel 718. J. Mater. Res. Technol. 2021, 15, 135–150.

- Karbassi, A.; Mohebi, B.; Rezaee, S.; Lestuzzi, P. Damage prediction for regular reinforced concrete buildings using the decision tree algorithm. Comput. Struct. 2014, 130, 46–56.

- Deng, Z.; Zhu, X.; Cheng, D.; Zong, M.; Zhang, S. Efficient kNN classification algorithm for big data. Neurocomputing 2016, 195, 143–148.

- Johansson, U.; Boström, H.; Löfström, T.; Linusson, H. Regression conformal prediction with random forests. Mach. Learn. 2014, 97, 155–176.

- Zhang, Y.; Ren, W.; Lei, J.; Sun, L.; Mi, Y. Predicting the compressive strength of high-performance concrete via the DR-CatBoost model. Case Stud. Constr. Mater. 2024, 21, e03990.

- Wang, Y.; Sun, S.; Chen, X.; Zeng, X.; Kong, Y.; Chen, J.; Guo, Y.; Wang, T. Short-term load forecasting of industrial customers based on SVMD and XGBoost. Int. J. Electr. Power Energy Syst. 2021, 129, 106830.

- Zeng, S.; Pi, D. Milling Surface Roughness Prediction Based on Physics-Informed Machine Learning. Sensors 2023, 23, 4969.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Arumugam, B.; Sundaresan, T.K.; Ali, S. Hybrid ML-Based Cutting Temperature Prediction in Hard Milling Under Sustainable Lubrication. Lubricants 2025, 13, 498. https://doi.org/10.3390/lubricants13110498
