1. Introduction

Alzheimer’s disease (AD) is a devastating neurodegenerative disorder and the leading cause of dementia in older adults. In 2023, an estimated 6.7 million Americans aged 65 or older were living with AD, a number projected to more than double by 2060 [1]. Although decades of research have not produced a cure, the first disease-modifying therapies (e.g., anti-amyloid antibodies) have recently gained approval, showing modest slowing of cognitive decline only when administered in the early stages of AD [2]. Consequently, there is a strong consensus that early diagnosis of AD, even at the mild cognitive impairment (MCI) stage, and accurate prognosis of MCI-to-AD progression are critically important for timely intervention [3]. About 10–15% of individuals with MCI progress to AD each year, so predicting which MCI patients will convert to AD (converters vs. non-converters) has become a central challenge in the field. This prognostic task has significant clinical implications for trial enrollment and early therapeutic decisions [4].

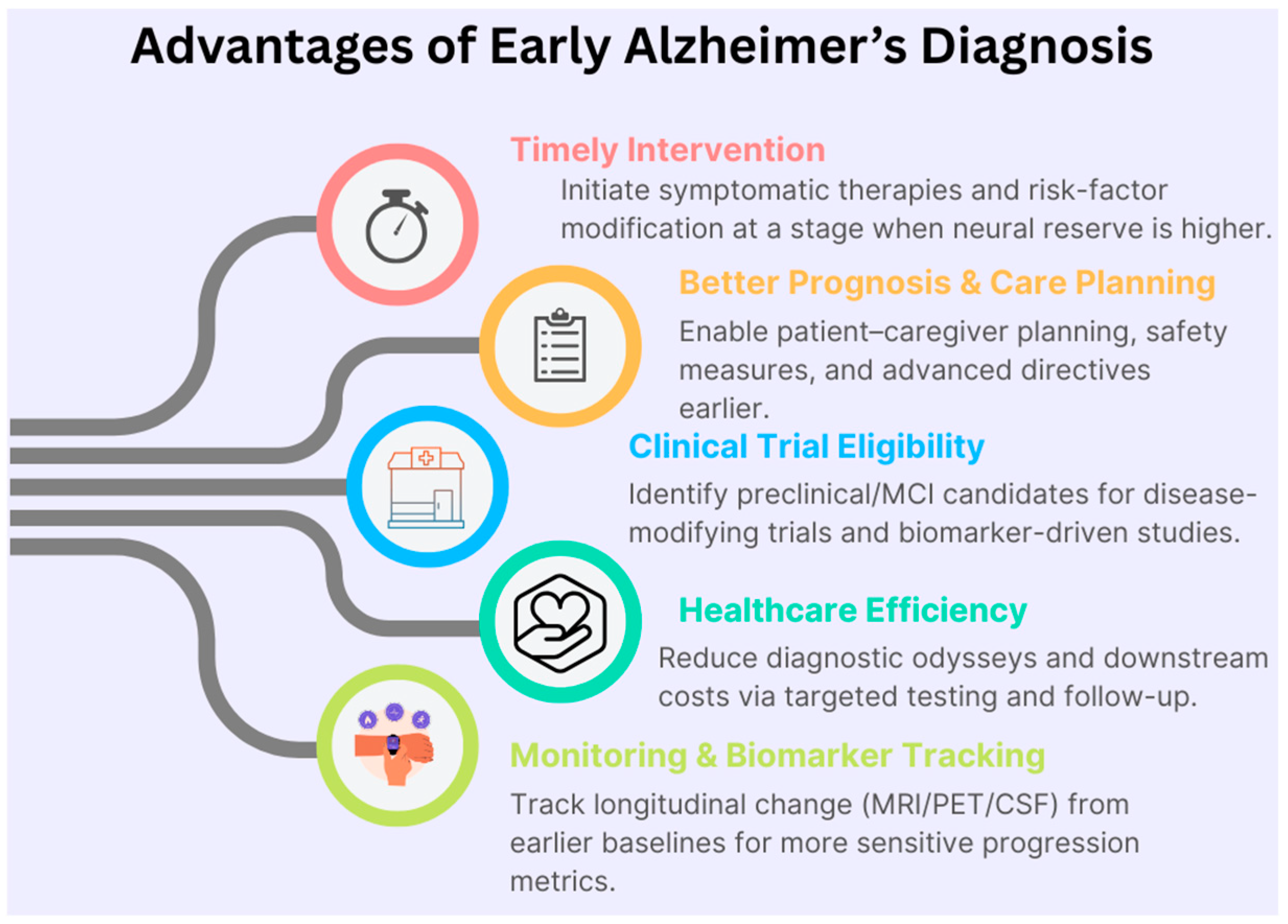

Figure 1 illustrates the advantages of early identification of Alzheimer’s disease: it enables timely symptomatic treatment, targeted risk factor management, and enrollment in disease-modifying clinical trials while neural reserve is greater. It also enhances care planning and healthcare efficiency and provides earlier baselines for sensitive longitudinal monitoring across MRI, PET, and biofluid biomarkers.

Neuroimaging provides indispensable biomarkers for early AD detection and prognosis. Structural magnetic resonance imaging (MRI) reveals brain atrophy patterns (e.g., hippocampal and cortical atrophy), while positron emission tomography (PET) can capture functional and molecular pathology (such as glucose hypometabolism in FDG-PET or amyloid burden in amyloid-PET) [5]. MRI and PET offer complementary information, and numerous studies have demonstrated that combining multimodal imaging can improve AD diagnostic accuracy compared to unimodal analysis [6]. For example, Dukart et al. showed that joint evaluation of MRI and FDG-PET achieved better differentiation of AD from other dementias than either modality alone [7]. In practice, however, developing robust multimodal models is challenging due to multi-site variability and limited labeled data. Large AD cohorts are collected across different sites and studies (with varying scanners, protocols, and demographics), causing distribution shifts that often degrade the generalizability of machine learning models. Models may overfit to site-specific artifacts or scanner effects, leading to reduced reliability on external data [8]. Moreover, missing modalities and time series data (longitudinal follow-ups) add complexity to integration. An effective early-diagnosis model must therefore handle multimodal integration (MRI, PET, and possibly clinical/cognitive features), leverage longitudinal information, and be robust to multi-site/domain differences.

Recently, self-supervised learning (SSL) has emerged as a powerful approach to learn informative representations from unlabeled medical images [9]. SSL is especially appealing in AD research because labeled datasets are relatively small and heterogeneous, whereas large amounts of imaging data (including unannotated or partially labeled scans) are available. By pretraining on unlabeled MRI and PET scans, an SSL model can capture generic neurodegenerative patterns that transfer to downstream tasks, like diagnosis and prognosis. However, most prior SSL applications in AD have been limited to a single modality or a single aspect of consistency. For instance, SMoCo employed contrastive pretraining on 3D amyloid PET scans to predict MCI conversion (leveraging unlabeled PET data in ADNI), and AnatCL introduced a contrastive method on MRI that incorporates anatomical priors (e.g., cortical thickness) for improved brain age modeling and disease classification. On the multimodal front, transformer-based fusion models have been proposed, such as a vision transformer combining MRI and PET for AD diagnosis and MRI-based deep networks to predict established AT(N) biomarker profiles (amyloid/tau/neurodegeneration) as surrogates of pathology. A very recent vision transformer approach, DiaMond, specifically designed a bi-attention mechanism to fuse MRI and PET, achieving state-of-the-art performance on AD vs. frontotemporal dementia classification. Despite these advances, no prior work has unified all the following elements in one framework: cross-modal MRI–PET learning, longitudinal consistency across patient timepoints, and explicit site/domain-invariance to improve generalization across multiple cohorts [10].

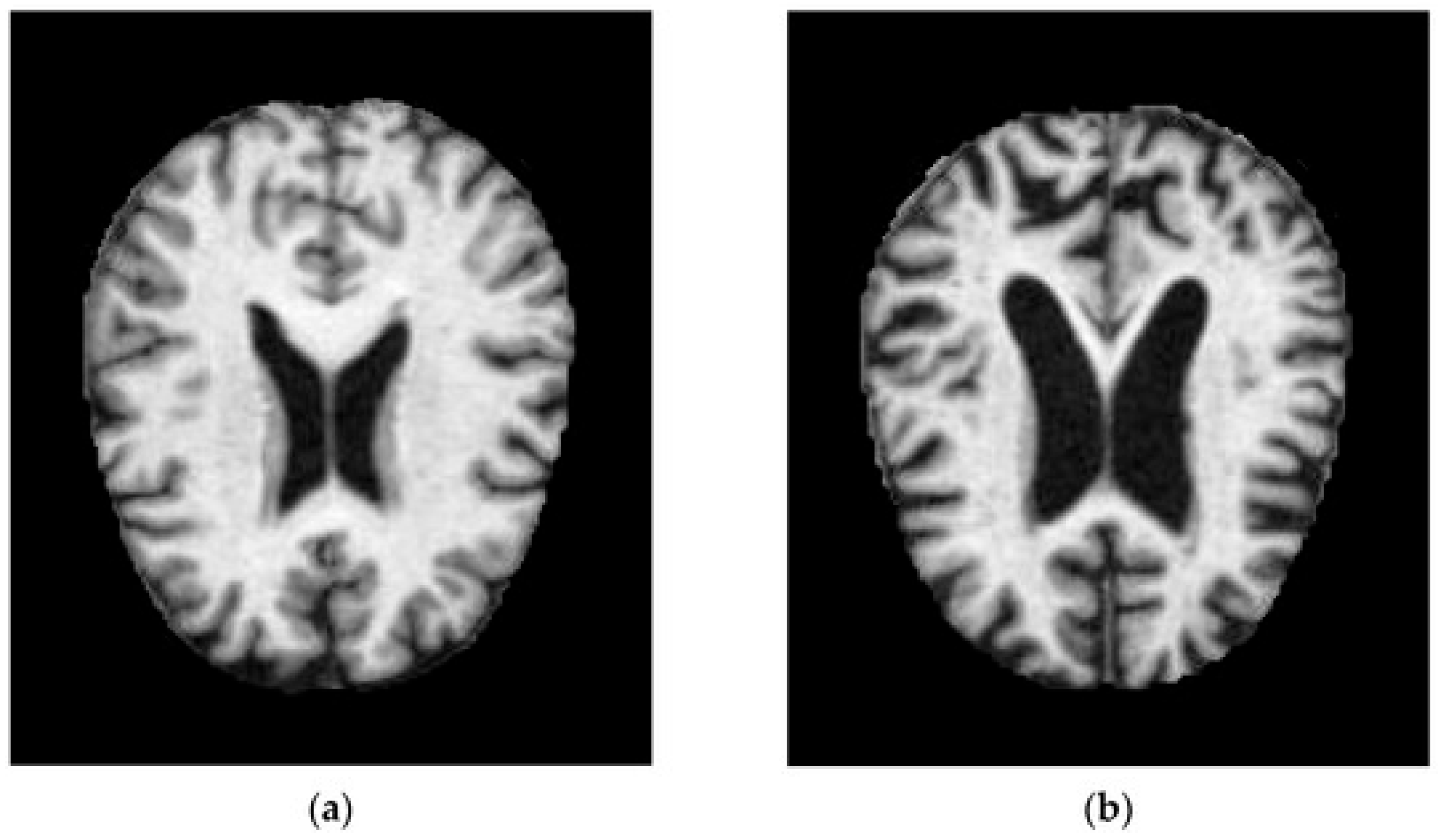

Figure 2 shows sample Alzheimer’s disease (AD) scans from the ADNI dataset.

In this work, we present a novel multimodal self-supervised learning framework for early AD diagnosis and MCI prognosis. Our approach employs a 3D convolutional neural network backbone and is pretrained on a large collection of MRI and PET volumes using the following complementary objectives:

Intra-modal consistency, via instance discrimination and data augmentation within each modality, is used to learn robust modality-specific features.

Cross-modal consistency between MRI and PET forces the model to align representations across modalities and exploit their synergies (e.g., by predicting one modality from the other).

Longitudinal consistency augments training with follow-up scans and encourages representations to progress smoothly over time, reflecting disease trajectory.

Site invariance uses adversarial learning and batch normalization techniques that minimize scanner/site-specific information in the latent space, thereby improving generalization to new data distributions.

After self-supervised pretraining, we fine-tune the model on downstream tasks in a multi-task supervised learning scheme. In particular, we simultaneously train on AD diagnosis (classification of AD vs. cognitively normal) and MCI conversion prediction (classification of MCI converter vs. MCI non-converter over a defined period), among other related endpoints, using labeled data. This two-stage training (SSL pretraining followed by multi-task fine-tuning) allows the model to transfer learned representations to clinically relevant predictions while sharing common features between tasks to boost overall performance.

We evaluate the proposed framework on six public AD cohorts representing a wide range of populations and imaging protocols: ADNI, OASIS-3, AIBL, BioFINDER, TADPOLE, and MIRIAD. To our knowledge, this is one of the most comprehensive multi-site evaluations in the domain, totaling thousands of MRI and PET scans from North America, Europe, and Australia. Importantly, our model is trained in a site-agnostic manner (no site labels in inputs) and is tested for generalization across these datasets. The experimental results show that our approach achieves state-of-the-art accuracy and robustness for both diagnosis and prognosis. In particular, our unified SSL pretraining yields consistent improvements over baseline training, and the site normalization significantly reduces performance drops when tested on an unseen dataset. We benchmark our model against several recent methods, including a contrastive PET-based approach (SMoCo) [1], an anatomical contrastive MRI model (AnatCL) [12], a transformer-based MRI–PET fusion from ISBI 2023 [6], a deep learning model for MRI-based AT(N) biomarker prediction in Radiology 2023 [10], and the DiaMond vision transformer fusion model (early 2025). In head-to-head comparisons on standard benchmarks, our method outperforms these approaches across multiple metrics. The gains are especially pronounced in cross-cohort evaluation, highlighting the advantage of our site-invariant learning. Overall, this work demonstrates that multimodal self-supervised pretraining, with carefully designed intra-modal, cross-modal, longitudinal, and domain-invariant objectives, can provide a powerful foundation for early AD diagnosis. We hope our framework will help enable more generalizable and scalable AI tools for neurodegenerative disease prediction.

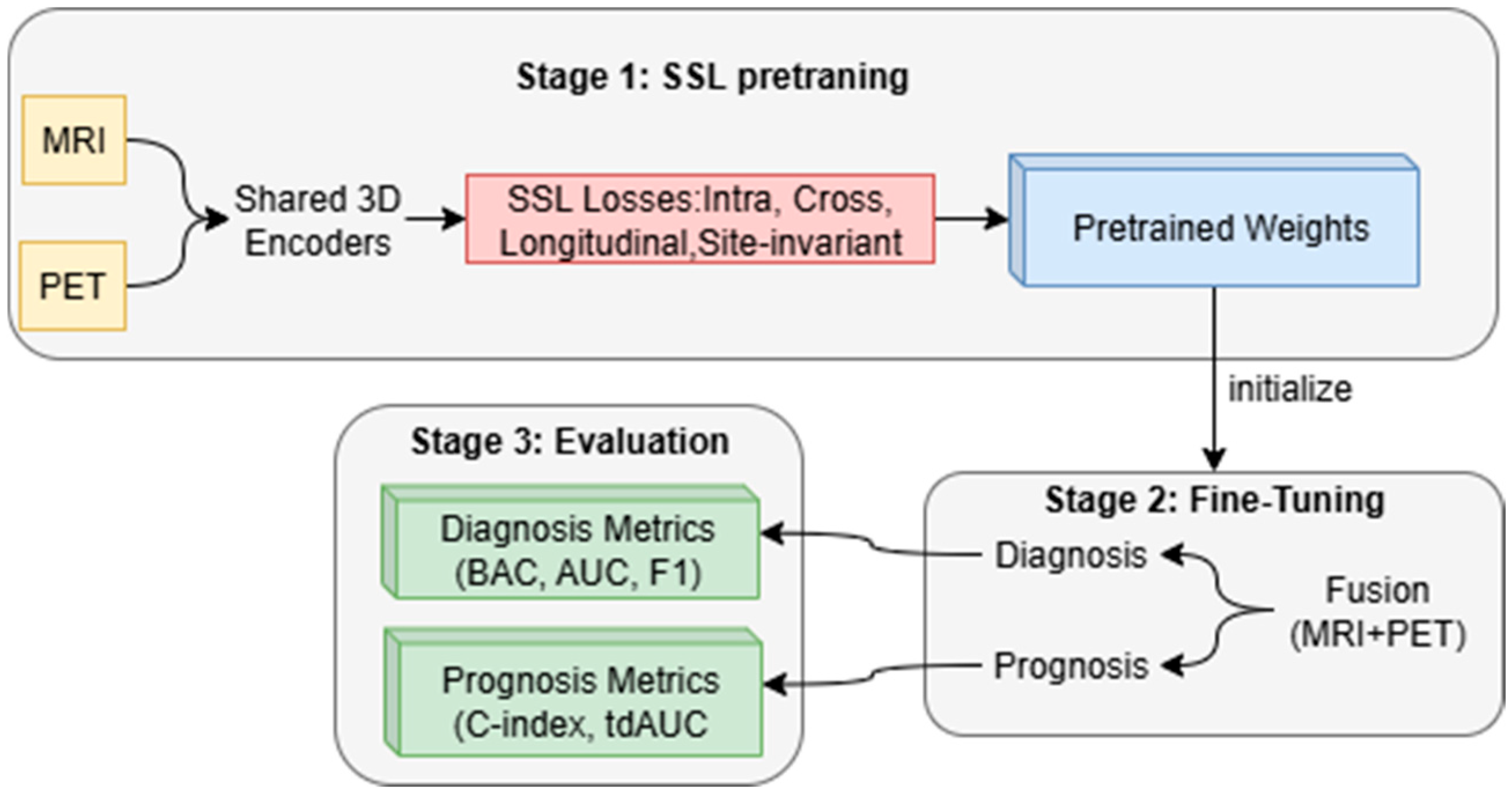

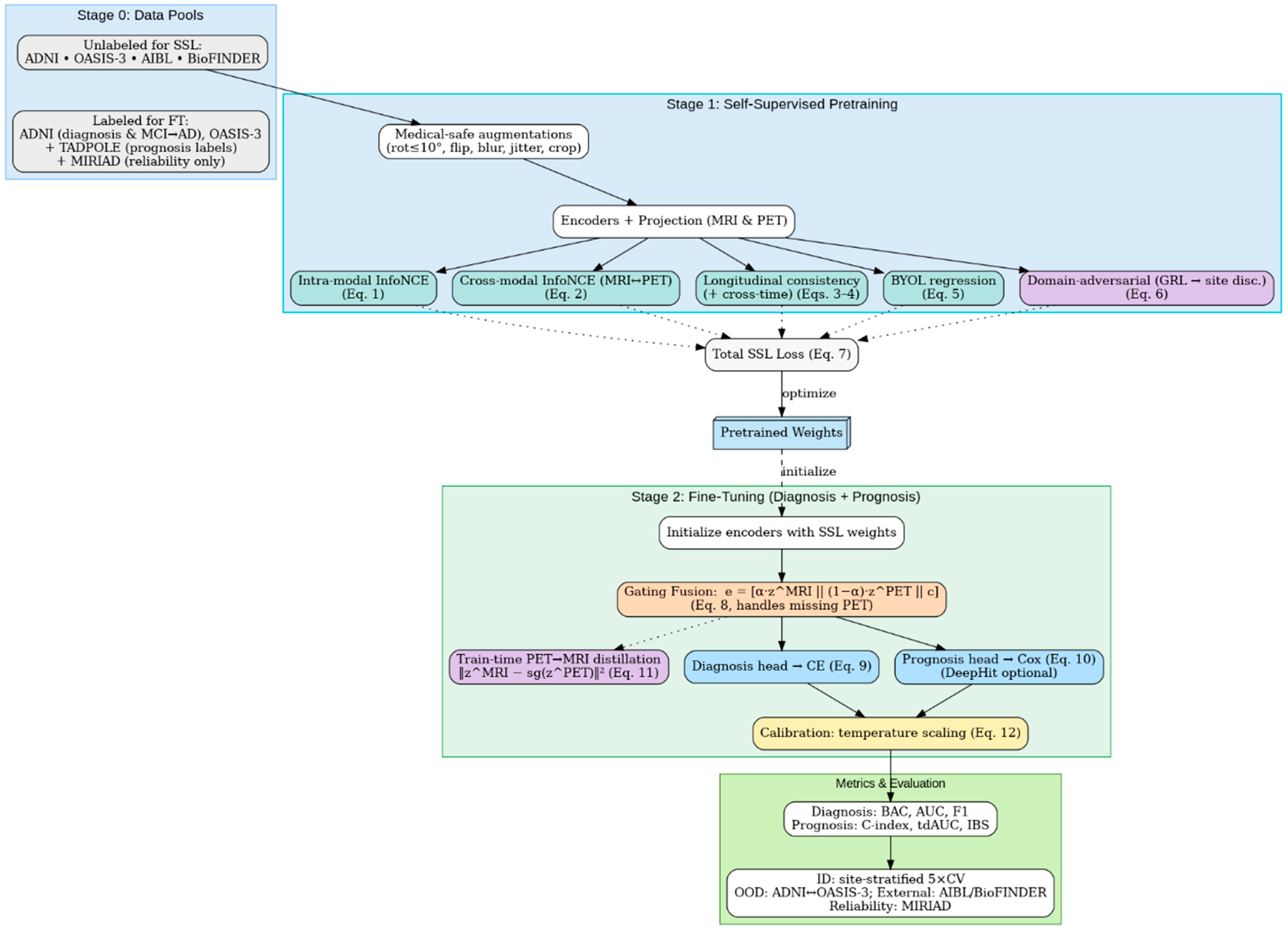

The proposed method consists of two stages before the evaluation stage, as shown in Figure 3: (i) self-supervised pretraining on MRI and PET scans with intra-modal, cross-modal, longitudinal, and site invariance objectives to learn robust representations and (ii) multi-task fine-tuning to jointly optimize early AD diagnosis and MCI-to-AD prognosis. The framework enables improved accuracy, generalizability across sites, and clinical interpretability compared to recent baselines.

3. Proposed Methodology

3.1. Notation and Problem Setup

We observe a cohort of subjects, each with one or more visits. At each visit, we have co-registered 3D MRI and PET volumes, optional clinical covariates, a diagnosis label (e.g., CN/EMCI/LMCI/AD), and a site/scanner label $s_p$. For MCI prognosis, we use survival targets consisting of the time to conversion (months from baseline) and an event indicator that marks conversion (1) or censoring (0).

Two modality-specific encoders (one for MRI and one for PET) and a projection head map any volume to an ℓ2-normalized embedding. Embeddings are compared by cosine similarity, and the contrastive losses are scaled by a temperature parameter. The objective is to pretrain the modality encoders on large unlabeled pools and fine-tune them for (i) early AD diagnosis and (ii) MCI → AD prognosis.

To avoid temporal leakage, all train/validation/test splits are performed strictly at the subject level so that all timepoints of a patient remain within the same fold. This ensures that longitudinal data are never shared across splits.
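The subject-level constraint can be illustrated with a minimal sketch. The `(subject_id, visit_id)` tuple format and the function name are hypothetical, and the sketch omits the site stratification used in the released splits.

```python
import random
from collections import defaultdict

def subject_level_folds(visits, n_folds=5, seed=0):
    """Assign every visit of a subject to the same fold.

    `visits` is a list of (subject_id, visit_id) tuples (assumed format).
    Returns a dict mapping fold index -> list of visits.
    """
    subjects = sorted({sid for sid, _ in visits})
    rng = random.Random(seed)
    rng.shuffle(subjects)
    # Round-robin assignment of the shuffled subjects to folds.
    fold_of = {sid: i % n_folds for i, sid in enumerate(subjects)}
    folds = defaultdict(list)
    for sid, vid in visits:
        folds[fold_of[sid]].append((sid, vid))
    return dict(folds)

# Toy cohort: 10 subjects with 3 visits each.
visits = [(f"S{s:02d}", v) for s in range(10) for v in range(3)]
folds = subject_level_folds(visits, n_folds=5, seed=0)
```

Because the fold is chosen per subject rather than per scan, no two timepoints of the same patient can land on opposite sides of a train/test boundary.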

3.2. Preprocessing and Harmonization

All MRI volumes undergo N4 bias field correction, skull stripping, rigid/affine registration to MNI space, resampling to a common voxel grid, and per-volume z-score intensity normalization. PET preprocessing includes motion correction, SUVR normalization using a standard reference (e.g., cerebellum or pons), rigid co-registration to the subject’s MRI, and optional partial-volume correction.

To formally address multi-site intensity variability, we adopt a two-stage harmonization pipeline consisting of (1) whole brain histogram matching to an ADNI-derived reference template to reduce scanner-dependent contrast variation while preserving subject-level anatomical structure and (2) ComBat harmonization using site/scanner as the batch variable and biological covariates (age, sex) to remove non-biological site effects while retaining disease-relevant variability. This pipeline is applied separately for MRI and PET and applied identically for all timepoints of a subject to preserve longitudinal consistency.

We also verified pre- and post-harmonization distributions by inspecting cohort-wise intensity histograms and mean signal trends, confirming that both MRI and PET variability across sites was reduced while within-subject stability was preserved.

To mitigate site effects, we apply histogram matching and/or ComBat harmonization offline and use GroupNorm or Domain-Specific BatchNorm (DSBN) in-network. Site labels $s_p$ are reserved for a domain-adversarial term in pretraining; they are not provided to the prediction heads.

MRI–PET alignment is enforced by rigid registration and temporal alignment, ensuring that MRI and PET volumes correspond to the same subject and the same visit. When multi-visit PET is missing, PET is marked as unavailable and handled during fusion via the missing-aware gating mechanism. For verifiability, we release subject-level, site-stratified 5-fold cross-validation (CV) splits for in-distribution evaluation and cross-cohort splits for out-of-distribution (OOD) tests (train on ADNI → test on OASIS-3 and the reverse). MIRIAD is held out entirely for external longitudinal reliability/sensitivity analyses and is never used for pretraining when reported as an external test.

3.3. Stage 1: Self-Supervised Pretraining (SSL)

Pretraining leverages medically safe 3D augmentations (rotations, Gaussian blur, ±10% intensity jitter, and random 3D crops) to define three families of positives: (i) intra-modal positives from two augmented views of the same scan (MRI ↔ MRI, PET ↔ PET); (ii) cross-modal positives from co-registered MRI–PET pairs at the same visit; and (iii) longitudinal positives from the same subject across visits (MRI ↔ MRI; PET ↔ PET). Negatives are drawn from the other samples in a batch of paired views and, optionally, from a MoCo queue. The intra-modal term is an InfoNCE loss over these positives and negatives.

For cross-modal alignment, we adopt a symmetric InfoNCE loss with MRI and PET alternating as anchors.
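A standard form consistent with this description, corresponding to what the text later calls Equations (1) and (2), is sketched below. The notation is assumed for illustration and may differ from the original expressions: $z_i^{m}$ and $\tilde z_i^{m}$ are the ℓ2-normalized embeddings of two augmented views of scan $i$ in modality $m \in \{M, P\}$ (MRI, PET), $\mathcal{B}$ is the batch, $\mathcal{N}_i^{m}$ is the set of negatives for anchor $i$ (other batch samples plus any queue entries), $\mathrm{sim}(u,v)=u^{\top}v$, and $\tau$ is the temperature.

```latex
\[
\mathcal{L}_{\text{intra}}
= -\frac{1}{|\mathcal{B}|}\sum_{i\in\mathcal{B}}\sum_{m\in\{M,P\}}
\log\frac{\exp\!\big(\mathrm{sim}(z_i^{m},\tilde z_i^{m})/\tau\big)}
{\exp\!\big(\mathrm{sim}(z_i^{m},\tilde z_i^{m})/\tau\big)
 +\sum_{k\in\mathcal{N}_i^{m}}\exp\!\big(\mathrm{sim}(z_i^{m},z_k^{m})/\tau\big)}
\tag{1}
\]
\[
\mathcal{L}_{\text{cross}}
= -\frac{1}{2|\mathcal{B}|}\sum_{i\in\mathcal{B}}\left[
\log\frac{\exp\!\big(\mathrm{sim}(z_i^{M},z_i^{P})/\tau\big)}
{\sum_{j\in\mathcal{B}}\exp\!\big(\mathrm{sim}(z_i^{M},z_j^{P})/\tau\big)}
+\log\frac{\exp\!\big(\mathrm{sim}(z_i^{P},z_i^{M})/\tau\big)}
{\sum_{j\in\mathcal{B}}\exp\!\big(\mathrm{sim}(z_i^{P},z_j^{M})/\tau\big)}
\right]
\tag{2}
\]
```

The two terms inside the brackets of (2) are the MRI-anchored and PET-anchored directions, which is what makes the loss symmetric.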

Temporal stability is encouraged by a longitudinal consistency loss within each modality. Where data permit (e.g., longitudinal MRI and PET co-exist in ADNI), an optional cross-time cross-modal coupling term is also used.
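One plausible reading of these two terms, referenced later as Equations (3) and (4), treats visits of the same subject as positives in an InfoNCE loss; a non-contrastive cosine penalty would be another valid reading. All symbols are assumptions for illustration: $z_{p,t}^{m}$ is the ℓ2-normalized embedding of subject $p$ at visit $t$ in modality $m$, $\mathcal{P}$ is the set of same-subject visit pairs $(p,t,t')$, $\mathcal{P}_{\times}$ is the subset for which MRI at $t$ and PET at $t'$ both exist, $\mathcal{N}$ is the set of negatives from other subjects, and $\tau$ is the temperature.

```latex
\[
\mathcal{L}_{\text{long}}
= -\frac{1}{|\mathcal{P}|}\sum_{(p,t,t')\in\mathcal{P}}\sum_{m\in\{M,P\}}
\log\frac{\exp\!\big(\mathrm{sim}(z_{p,t}^{m},z_{p,t'}^{m})/\tau\big)}
{\sum_{k\in\{(p,t')\}\cup\mathcal{N}}\exp\!\big(\mathrm{sim}(z_{p,t}^{m},z_{k}^{m})/\tau\big)}
\tag{3}
\]
\[
\mathcal{L}_{\text{xt}}
= -\frac{1}{|\mathcal{P}_{\times}|}\sum_{(p,t,t')\in\mathcal{P}_{\times}}
\log\frac{\exp\!\big(\mathrm{sim}(z_{p,t}^{M},z_{p,t'}^{P})/\tau\big)}
{\sum_{k\in\{(p,t')\}\cup\mathcal{N}}\exp\!\big(\mathrm{sim}(z_{p,t}^{M},z_{k}^{P})/\tau\big)}
\tag{4}
\]
```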

To stabilize training without negatives, we add a BYOL-style regression loss with a predictor on the online branch and a stop-gradient on the target branch.
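In the usual BYOL formulation, which is assumed here to match the term described above, the loss takes the following form. The symbols are illustrative: $q$ is the predictor, $z_i$ is the online-branch embedding of one view, $z_i'$ is the target-branch embedding of the other view, $\mathrm{sg}(\cdot)$ denotes stop-gradient, and the bar denotes ℓ2 normalization.

```latex
\[
\mathcal{L}_{\text{byol}}
= \frac{1}{|\mathcal{B}|}\sum_{i\in\mathcal{B}}
\big\lVert\, \overline{q(z_i)} - \mathrm{sg}\big(\overline{z_i'}\big) \big\rVert_2^{2}
= \frac{1}{|\mathcal{B}|}\sum_{i\in\mathcal{B}}
\Big(2 - 2\,\mathrm{sim}\big(\overline{q(z_i)},\,\mathrm{sg}(\overline{z_i'})\big)\Big)
\tag{5}
\]
```

The second equality holds because both vectors have unit norm, so the squared distance reduces to a cosine-similarity term.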

Finally, site invariance is promoted via domain-adversarial learning: a discriminator attempts to classify the site from the embeddings, while a gradient-reversal layer (GRL) forces the encoders to remove site signal. The overall SSL objective is the weighted sum of the intra-modal, cross-modal, longitudinal, cross-time, BYOL, and adversarial terms.
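A conventional way to write the adversarial term and the combined objective, referenced later as Equations (6) and (7), is sketched below. The notation is assumed: $D$ is the site discriminator, $s_i$ is the site label of sample $i$, $\mathrm{GRL}_{\lambda}$ is the identity in the forward pass and multiplies gradients by $-\lambda$ in the backward pass, and $\lambda_1,\dots,\lambda_6 \ge 0$ are loss weights whose values are not specified in the text.

```latex
\[
\mathcal{L}_{\text{adv}}
= -\frac{1}{|\mathcal{B}|}\sum_{i\in\mathcal{B}}
\log D\big(s_i \mid \mathrm{GRL}_{\lambda}(z_i)\big)
\tag{6}
\]
\[
\mathcal{L}_{\text{SSL}}
= \lambda_1\mathcal{L}_{\text{intra}}
+ \lambda_2\mathcal{L}_{\text{cross}}
+ \lambda_3\mathcal{L}_{\text{long}}
+ \lambda_4\mathcal{L}_{\text{xt}}
+ \lambda_5\mathcal{L}_{\text{byol}}
+ \lambda_6\mathcal{L}_{\text{adv}}
\tag{7}
\]
```

With the gradient-reversal layer in place, minimizing (7) trains the discriminator to predict the site while pushing the encoders in the opposite direction.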

Hyperparameters are selected on a small validation split using linear probes (no fine-tuning), with typical settings of batch size 32–64, a MoCo queue of ≥ 8k entries, and AdamW with cosine decay and weight decay. ADNI/OASIS-3/AIBL/BioFINDER provide MRI ± PET for Equations (1) and (2); ADNI supplies longitudinal pairs for Equation (3) and, when available, Equation (4). The cross-time consistency term is only enabled when longitudinal MRI or PET data exist for a subject, ensuring that the method remains compatible with datasets that lack repeated visits or PET imaging.
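The conditional activation of loss terms can be sketched as follows. The term names, the dictionary layout, and the rule that the cross-modal term requires a paired PET scan are illustrative assumptions, not the released implementation.

```python
def ssl_objective(losses, weights, has_longitudinal, has_paired_pet):
    """Weighted sum of SSL terms, skipping terms whose data are unavailable.

    `losses` and `weights` are dicts keyed by hypothetical term names.
    The adversarial term is assumed to sit behind a gradient-reversal
    layer, so it can be added like any other term.
    """
    enabled = {
        "intra": True,
        "cross": has_paired_pet,
        "long": has_longitudinal,
        "cross_time": has_longitudinal and has_paired_pet,
        "byol": True,
        "adv": True,
    }
    return sum(weights[k] * losses[k] for k, on in enabled.items() if on)

names = ["intra", "cross", "long", "cross_time", "byol", "adv"]
losses = {k: 2.0 for k in names}
weights = {k: 0.5 for k in names}
full = ssl_objective(losses, weights, True, True)        # all six terms
mri_single = ssl_objective(losses, weights, False, False)  # MRI-only, one visit
```

A cohort with a single MRI visit per subject therefore still contributes the intra-modal, BYOL, and adversarial terms.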

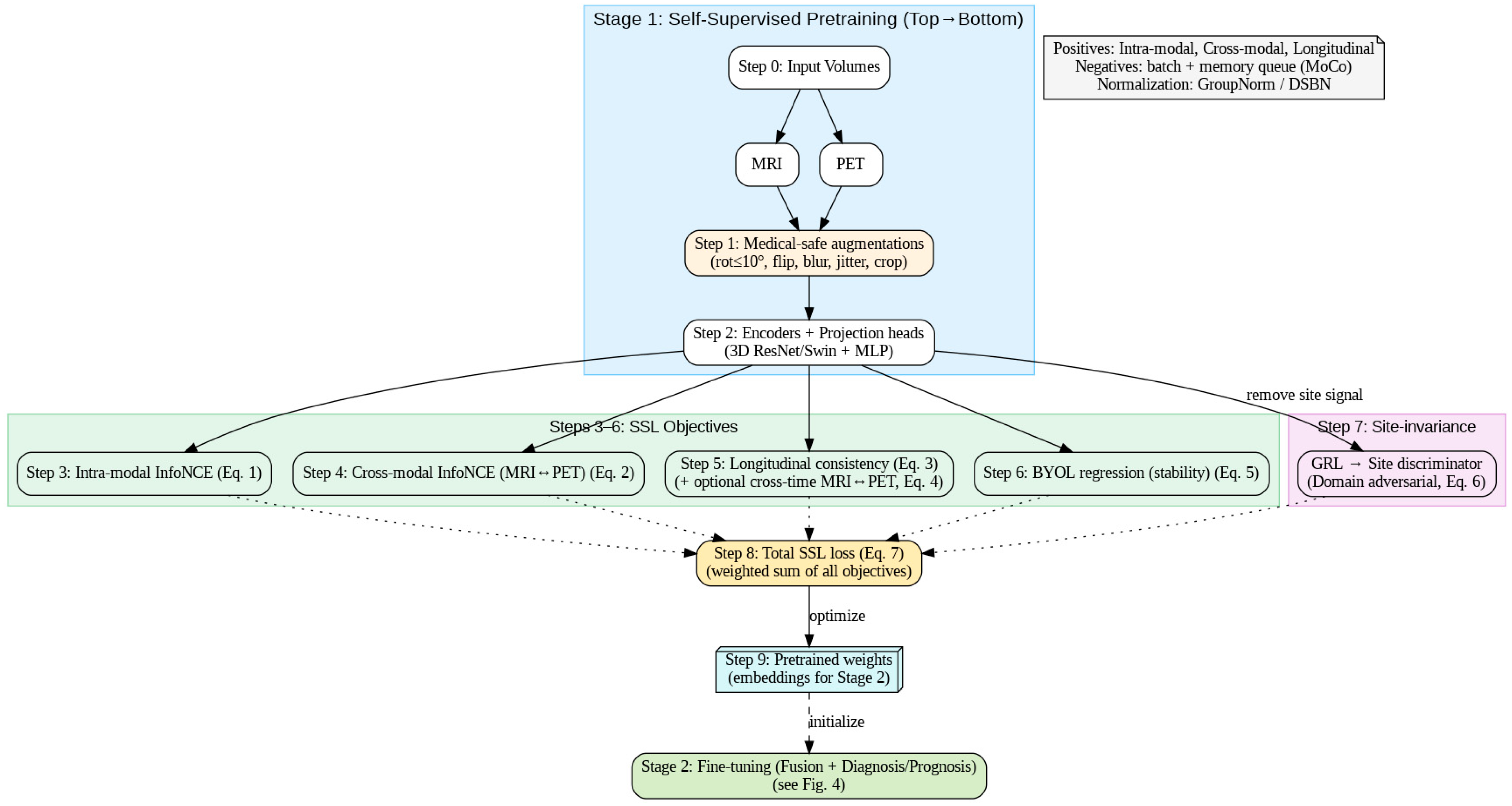

Figure 4 follows the exact execution order: inputs → medical-safe augmentations → encoders/projections → (3) intra-modal InfoNCE, (4) cross-modal MRI↔PET InfoNCE, (5) longitudinal consistency (±cross-time), (6) BYOL stability, and (7) domain-adversarial site invariance, summed in (8) to optimize (7) and yield (9) pretrained weights. The dashed link shows these weights initialize Stage 2 fine-tuning (fusion + diagnosis/prognosis).

3.4. Stage 2: Multi-Task Fine-Tuning (Diagnosis and Prognosis)

Fine-tuning initializes the encoders with the best SSL checkpoint and optimizes diagnosis and survival heads jointly. To handle missing modalities, we employ a gating-based late fusion: binary indicators mark which modalities are available, and a learned gate $\alpha$ weights the MRI and PET embeddings to form the fused representation.
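One plausible form of this fusion, referenced later as Equation (8), is given below. The symbols are assumed for illustration: $h^{M}$ and $h^{P}$ are the MRI and PET encoder features, $a^{M}, a^{P}\in\{0,1\}$ indicate availability, and $\alpha\in(0,1)$ is the learned gate. At least one modality is assumed to be present.

```latex
\[
h^{\text{fused}}
= \frac{\alpha\, a^{M} h^{M} + (1-\alpha)\, a^{P} h^{P}}
       {\alpha\, a^{M} + (1-\alpha)\, a^{P}}
\tag{8}
\]
```

Under this form, a missing PET scan ($a^{P}=0$) reduces the fused representation to $h^{M}$, and the renormalization keeps its scale unchanged.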

A diagnosis head outputs class logits and is trained with class-weighted cross-entropy. A survival head produces a scalar risk score that is optimized with the Cox partial log-likelihood.
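The standard forms of these two losses, referenced later as Equations (9) and (10), are sketched below under assumed notation: $o_i$ are the diagnosis logits for sample $i$, $y_i$ is its label, $w_c$ is the weight of class $c$, $r_i$ is the predicted risk score, $T_i$ is the observed time, and $\delta_i$ is the event indicator (1 for conversion, 0 for censoring).

```latex
\[
\mathcal{L}_{\text{CE}}
= -\frac{1}{N}\sum_{i=1}^{N} w_{y_i}\,
\log\frac{\exp(o_{i,y_i})}{\sum_{c}\exp(o_{i,c})}
\tag{9}
\]
\[
\mathcal{L}_{\text{Cox}}
= -\sum_{i:\,\delta_i=1}\Big[\, r_i - \log\!\sum_{j:\,T_j\ge T_i}\exp(r_j) \Big]
\tag{10}
\]
```

Equation (10) is the negative partial log-likelihood, so minimizing it raises the risk score of converters relative to everyone still at risk at the time of conversion.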

When both modalities are present during training, we also distill PET information into the MRI pathway to support MRI-only deployment. Temperature scaling is applied post hoc on a validation set to obtain calibrated probabilities, without updating encoder weights. The joint fine-tuning objective is a weighted sum of the diagnosis, survival, and distillation losses with non-negative weights. We report calibrated metrics using the learned temperature.
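These three components, referenced later as Equations (11) to (13), can be written as follows. This is a sketch under stated assumptions: the distillation is shown at the feature level (a logit-level KL divergence would be an equally reasonable reading), $a_i^{P}$ indicates PET availability, $\mathrm{sg}(\cdot)$ is stop-gradient, $T>0$ is the scalar temperature, and $\beta_1,\beta_2,\beta_3\ge 0$ are loss weights whose values are not specified in the text.

```latex
\[
\mathcal{L}_{\text{distill}}
= \frac{1}{N}\sum_{i=1}^{N} a_i^{P}\,
\big\lVert h_i^{M} - \mathrm{sg}\big(h_i^{P}\big) \big\rVert_2^{2}
\tag{11}
\]
\[
\hat p_{i,c} = \frac{\exp(o_{i,c}/T)}{\sum_{c'}\exp(o_{i,c'}/T)},
\qquad
T = \arg\min_{T>0}\; -\sum_{i\in\mathcal{V}}\log \hat p_{i,y_i}
\tag{12}
\]
\[
\mathcal{L}_{\text{FT}}
= \beta_1\mathcal{L}_{\text{CE}}
+ \beta_2\mathcal{L}_{\text{Cox}}
+ \beta_3\mathcal{L}_{\text{distill}}
\tag{13}
\]
```

Here $\mathcal{V}$ denotes the validation set on which the temperature is fitted.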

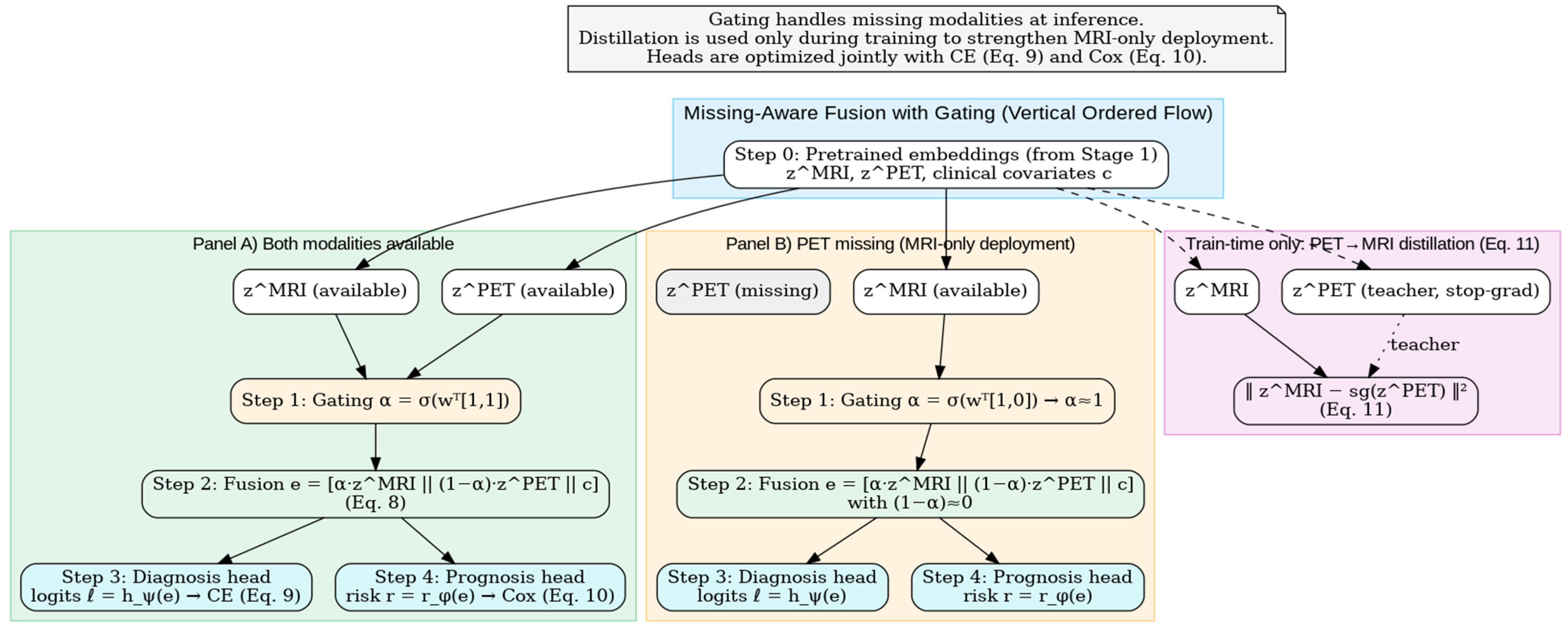

Figure 5 depicts the Stage 2 fusion mechanism: a learned gate $\alpha$ blends the MRI and PET embeddings to form the fused representation (Equation (8)), which feeds the diagnosis (CE, Equation (9)) and prognosis (Cox, Equation (10)) heads. A training-only PET → MRI distillation loss (Equation (11)) transfers molecular information to the MRI pathway. Together, gating and distillation enable robust MRI-only inference in real clinical settings, where PET availability is often limited, while retaining PET knowledge.
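The missing-aware behavior of the gate can be illustrated with a minimal sketch. The convention that `alpha` is the weight on the MRI embedding, and the fallback to MRI alone when PET is absent, are assumptions made for illustration.

```python
def gated_fusion(h_mri, h_pet, alpha, pet_available):
    """Blend MRI and PET embeddings; fall back to MRI when PET is missing.

    alpha in [0, 1] is the gate weight on the MRI embedding (assumed
    convention); embeddings are plain lists of floats.
    """
    if not pet_available or h_pet is None:
        # MRI-only inference: the fused vector is the MRI embedding.
        return list(h_mri)
    return [alpha * m + (1.0 - alpha) * p for m, p in zip(h_mri, h_pet)]

both = gated_fusion([1.0, 2.0], [3.0, 4.0], alpha=0.5, pet_available=True)
mri_only = gated_fusion([1.0, 2.0], None, alpha=0.5, pet_available=False)
```

In a trained model `alpha` would be produced by a small network rather than passed in as a constant.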

A calibration module based on temperature scaling produces well-calibrated probabilities, which is particularly important for progression risk communication in clinical workflows.
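Temperature scaling fits a single scalar on held-out logits. The sketch below uses a simple grid search over the temperature; the grid range, step, and toy logits are illustrative assumptions, and a gradient-based optimizer would serve equally well.

```python
import math

def softmax(logits, T=1.0):
    """Numerically stable softmax of logits divided by temperature T."""
    scaled = [l / T for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def nll(logit_rows, labels, T):
    """Mean negative log-likelihood at temperature T."""
    return -sum(
        math.log(softmax(row, T)[y]) for row, y in zip(logit_rows, labels)
    ) / len(labels)

def fit_temperature(logit_rows, labels, grid=None):
    """Pick the temperature on the grid that minimizes validation NLL."""
    grid = grid or [0.5 + 0.05 * i for i in range(91)]  # 0.5 to 5.0
    return min(grid, key=lambda T: nll(logit_rows, labels, T))

# Toy validation set: confident logits, but one of four predictions is wrong.
val_logits = [[4.0, 0.0], [4.0, 0.0], [0.0, 4.0], [4.0, 0.0]]
val_labels = [0, 1, 1, 0]
T_hat = fit_temperature(val_logits, val_labels)
```

Because the toy model is overconfident, the fitted temperature is greater than 1, which softens the predicted probabilities toward the observed 75% accuracy.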

3.5. Architecture and Optimization

Backbones are 3D ResNet-50 or 3D Swin Transformer with a projection MLP (Norm–ReLU–Linear). The 3D ResNet is chosen for its strong performance on volumetric medical imaging and its computational efficiency, while the 3D Swin Transformer is selected for its ability to model long-range anatomical dependencies using window-based self-attention, providing improved sensitivity to subtle disease markers. Transformer-based models such as Swin have shown superior performance to earlier medical transformers (e.g., MedViT) in multimodal and cross-cohort settings, motivating their use here. GroupNorm or DSBN is used to reduce site-specific batch-statistic drift. Both SSL pretraining and fine-tuning use AdamW with cosine learning rate schedules and weight decay. All training is performed with subject-level, site-aware sampling to enforce independence between splits and ensure no information leakage across timepoints or modalities; longitudinal pairs are included in each epoch when available. Early stopping monitors validation balanced accuracy (diagnosis) and C-index (prognosis). All seeds, preprocessing versions, and split files are released to ensure exact reproducibility.

3.6. Metrics and Statistical Testing

Diagnosis performance is summarized by balanced accuracy (the mean of per-class recall), as well as the AUC, F1, and sensitivity at fixed specificity (e.g., 0.80). Balanced accuracy is the primary diagnosis metric because of dataset class imbalance. We compute 95% confidence intervals (CIs) via a 1000-sample bootstrap and compare AUCs with DeLong’s test. Prognosis is evaluated by Harrell’s C-index, time-dependent AUC(t), and the integrated Brier score computed with IPCW as in standard survival evaluation. Calibration is quantified by the expected calibration error (ECE) with $M$ equal-mass bins.
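The three headline metrics can be computed as in the following minimal sketch, which uses their textbook definitions. The function names and toy inputs are illustrative, and the C-index shown here ignores tied event times.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall."""
    recalls = []
    for c in sorted(set(y_true)):
        idx = [i for i, y in enumerate(y_true) if y == c]
        recalls.append(sum(y_pred[i] == c for i in idx) / len(idx))
    return sum(recalls) / len(recalls)

def harrell_c_index(times, events, risks):
    """Fraction of comparable pairs ordered correctly by risk score."""
    concordant, comparable = 0.0, 0
    for i in range(len(times)):
        for j in range(len(times)):
            # Comparable: subject i converted before subject j's time.
            if events[i] == 1 and times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    return concordant / comparable

def ece_equal_mass(confidences, correct, n_bins=5):
    """Expected calibration error with equal-mass (quantile) bins."""
    order = sorted(range(len(confidences)), key=lambda i: confidences[i])
    n = len(order)
    total = 0.0
    for b in range(n_bins):
        idx = order[(b * n) // n_bins:((b + 1) * n) // n_bins]
        if not idx:
            continue
        conf = sum(confidences[i] for i in idx) / len(idx)
        acc = sum(correct[i] for i in idx) / len(idx)
        total += len(idx) / n * abs(acc - conf)
    return total

bacc = balanced_accuracy([0, 0, 0, 1], [0, 0, 1, 1])
cidx = harrell_c_index([2, 4, 6, 8], [1, 1, 0, 1], [0.9, 0.7, 0.4, 0.1])
ece = ece_equal_mass([0.9, 0.9, 0.6, 0.6], [1, 1, 1, 0], n_bins=2)
```

Equal-mass bins place the same number of predictions in each bin, which avoids the nearly empty bins that equal-width binning produces when most confidences cluster near 1.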

3.7. Experimental Protocol (Reproducible Procedure)

We first perform SSL pretraining on unlabeled MRI±PET from ADNI, OASIS-3, and, when licensing permits, AIBL/BioFINDER, optimizing Equation (7). The longitudinal loss, Equation (3), is enabled only where repeat visits exist; the cross-time cross-modal term, Equation (4), is activated solely when longitudinal MRI and PET co-exist (typically in ADNI). Linear probes select the best SSL checkpoint on a held-out validation fold. Next, encoders are initialized with this checkpoint and fine-tuned using Equation (13); the gating fusion Equation (8) handles missing modalities, while Equation (11) transfers PET knowledge to MRI for MRI-only deployment. We then fit temperature on validation predictions via Equation (12).

For in-distribution evaluation, we report subject-level, site-stratified 5-fold cross-validation (CV) on the training cohort, ensuring no subject or visit leakage across folds. For OOD generalization, we train on ADNI and test on OASIS-3, and vice versa, with no subject/site leakage. Additional external tests on AIBL and BioFINDER are reported when available. MIRIAD is reserved exclusively for external longitudinal reliability (scan–rescan ICC, within-subject CV) and sensitivity to change (SRM) analyses. Temperature scaling is fitted on a validation fold prior to final evaluation to ensure calibrated predictive probabilities across all datasets.
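Two of the reliability measures named above have simple closed forms, sketched here with their common definitions. The root-mean-square variant of the within-subject coefficient of variation is one of several conventions and is an assumption; the function names and toy values are illustrative.

```python
import statistics

def within_subject_cv(repeats_by_subject):
    """Root-mean-square within-subject CV over repeated measurements.

    `repeats_by_subject` is a list of lists, one list of repeated
    measurements (e.g., scan-rescan values) per subject.
    """
    squared = []
    for values in repeats_by_subject:
        mean = statistics.fmean(values)
        sd = statistics.stdev(values)
        squared.append((sd / mean) ** 2)
    return (sum(squared) / len(squared)) ** 0.5

def standardized_response_mean(baseline, follow_up):
    """Mean change divided by the standard deviation of change."""
    changes = [f - b for b, f in zip(baseline, follow_up)]
    return statistics.fmean(changes) / statistics.stdev(changes)

wcv = within_subject_cv([[9.0, 11.0]])
srm = standardized_response_mean([10.0, 10.0, 10.0], [9.0, 8.0, 7.0])
```

A low within-subject CV indicates stable repeat measurements, while a large absolute SRM indicates that real change stands out from between-subject variability in change.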

3.8. Ablations and Sensitivity Analyses

We quantify the contribution of each SSL component by ablating individual loss terms in isolation. We compare 2D versus 3D backbones, MRI-only versus MRI+PET models, and joint-dataset SSL versus per-dataset SSL. We also assess harmonization sensitivity by toggling ComBat and comparing GroupNorm to DSBN. To understand the robustness of the proposed harmonization pipeline, we further evaluate the effect of removing histogram matching, removing ComBat, or removing both, and we quantify their impact on cross-site generalization. We also compare the magnitude of site drift before and after harmonization by inspecting cohort-level intensity distributions.

All ablations follow the same splits and optimization settings as the main model to permit direct comparison. In addition, we perform sensitivity analyses on the temporal components by disabling longitudinal consistency losses for datasets lacking repeat visits, confirming that the model remains stable and compatible across heterogeneous cohort structures. These analyses demonstrate which architectural and training components contribute most to in-distribution performance, cross-cohort generalization, and longitudinal stability.

Figure 6 presents the framework that integrates Stage 1 self-supervised pretraining (intra-modal and cross-modal contrast, longitudinal consistency, BYOL stability, and domain-adversarial site invariance) to produce pretrained weights, followed by Stage 2 fine-tuning with missing-aware gating fusion and PET → MRI distillation.

4. Datasets

This work utilizes six public Alzheimer’s disease cohorts: ADNI, OASIS-3, AIBL, BioFINDER, TADPOLE, and MIRIAD. Table 2 summarizes their key properties. Each dataset is characterized by its sample size, diagnostic group composition, imaging modalities, longitudinal follow-up, and primary research use. CN = cognitively normal; MCI = mild cognitive impairment; AD = Alzheimer’s dementia.

ADNI is a longitudinal, multi-center observational program (2004–present) designed to validate imaging and fluid biomarkers for AD trials, with serial 3D MRI and PET (amyloid, tau, FDG), CSF, genetics, and standardized clinical assessments at roughly 6–12-month intervals. Phase enrollment is reported by cohort (e.g., ADNI-1: 819, with subsequent ADNI-GO/2/3 continuing and expanding recruitment), and data access is provided via the LONI portal. These properties make ADNI a primary source for both cross-sectional diagnosis and MCI → AD prognosis [28].

OASIS-3 aggregates ~15 years of imaging/clinical data from the Knight ADRC with ~1098 participants in the dataset release, spanning cognitively normal aging through symptomatic AD. It offers >2100 MRI sessions and ~1400–1500 PET sessions (amyloid and FDG) plus processed derivatives; many participants have multi-year longitudinal follow-up, enabling cross-sectional and progression analyses under open access [29,30].

Figure 7 shows three samples (one per row) from the OASIS-3 dataset. Columns show T1-weighted MRI with the pial (blue) and GM/WM (yellow) surfaces from FreeSurfer overlaid, segmentations from FreeSurfer and deep learning (DL), and a thickness map from DL+DiReCT. Slices are in radiological view (i.e., the right hemisphere is on the left side of the image).

AIBL is a prospective cohort launched in 2006 to study lifestyle and biomarker predictors of AD. The baseline cohort comprised 1112 older adults (768 CN/133 MCI/211 AD) with ~18-month reassessments; imaging includes high-resolution MRI and an amyloid PET subset (e.g., [^11C]PiB), and the cohort was expanded in subsequent waves, supporting both diagnosis and risk/prognosis modeling [32,33].

BioFINDER is an ongoing Swedish longitudinal program focused on multimodal biomarker discovery and validation across the AD spectrum, with MRI, amyloid PET, tau PET, FDG-PET, CSF, and cognitive testing; BioFINDER-2 includes large prospective cohorts spanning CU through dementia, with ~1400–2000 participants reported across recent publications/registries. Its rich PET and biofluid profiling make it particularly valuable for diagnostic differentiation and prognostic modeling [34,35].

TADPOLE is a prognosis benchmark built from ADNI data, tasking models to forecast 5-year outcomes (diagnosis (CN/MCI/AD), ADAS-Cog13, and ventricular volume) for 219 “rollover” subjects using multimodal baselines (MRI, PET, CSF, APOE, cognition). The challenge attracted 33 teams and 92 algorithms, providing standard splits and evaluation for time series prediction of AD progression [36,37].

MIRIAD comprises 69 adults (46 AD, 23 CN) scanned up to eight times over 2 years on the same 1.5T system with tightly controlled intervals (weeks to months). Standardized T1 MRI and consistent setup enable precise separation of true atrophy from measurement noise. Publicly released, MIRIAD is used to assess test–retest reliability and minimal detectable change, supporting validation of longitudinal imaging biomarkers [38].

Figure 8 shows sample scans from the MIRIAD dataset.

5. Results and Discussion

In this section, we present a comprehensive evaluation of the proposed multimodal self-supervised learning framework across six benchmark datasets: ADNI, OASIS-3, AIBL, BioFINDER, TADPOLE, and MIRIAD. We compare against five state-of-the-art methods, namely, ISBI’23, AnatCL, DiaMond’25, SMoCo, and Radiology’23, using each method’s reported protocols and metrics whenever available. For diagnosis tasks, we report balanced accuracy (BACC), area under the ROC curve (AUC), precision, specificity, and expected calibration error (ECE). Prognostic tasks are assessed using time-dependent AUC (tdAUC), the concordance index (C-index), and the integrated Brier score (IBS), while reliability is measured using the intra-class correlation coefficient (ICC), the within-subject coefficient of variation (wCV), and the standardized response mean (SRM). This evaluation allows for a holistic comparison across diagnosis, prognosis, biomarker alignment, and reliability dimensions.

Table 3 reports diagnostic performance on the ADNI benchmark cohort. The proposed framework is superior to all competing methods along most axes: it produces the best balanced accuracy (93.0%), precision (93.6%), and specificity (93.2%), as well as the best discrimination ability, with an AUC of 0.96. Compared to DiaMond’25, which previously represented the state of the art, our approach improves balanced accuracy by +0.6% and reduces calibration error from 4.2% to 3.9%, yielding more reliable probability outputs. Both ISBI’23 and AnatCL lag behind with substantially lower balanced accuracy (87.5% and 80.5%, respectively), which highlights the strengths of multimodal and self-supervised feature learning beyond traditional CNNs or anatomy-aware contrastive frameworks. SMoCo, although effective for the PET-based conversion prediction it was designed for, performs poorly in diagnosis, reinforcing the importance of task-driven optimization. In summary, these results show that the framework improves not only raw accuracy but also the robustness and stability of predictions, which is critically important for clinical applications.

Table 4 reports the cross-cohort evaluation, where models trained on ADNI were tested on OASIS-3 to assess robustness against site and demographic variability. The proposed framework achieves the best overall performance, with a balanced accuracy of 78.0% and an AUC of 0.87, surpassing DiaMond’25 and AnatCL by +1.0–2.0%. More importantly, it shows the lowest calibration error (6.9%), suggesting that its probability estimates are more reliable across heterogeneous clinical environments. AnatCL and ISBI’23 exhibit modest transferability (75–76% BACC); SMoCo ranks lower because it is less adapted to new cohorts. These results indicate that our model learns site-invariant representations, which is especially important for translation into the clinic, where data come from a variety of scanners and acquisition protocols. The advantage over the transformer-based DiaMond’25 is consistent, which supports the view that multimodal self-supervised pretraining with longitudinal signals provides better discriminative power and calibration in cross-domain scenarios.

Table 5 shows the results on AIBL, which differs from ADNI in that it includes older subjects and lifestyle features, such as diet and physical activity. The proposed approach generalizes better than the baselines, with a BACC of 77.5% and an AUC of 0.85, surpassing DiaMond’25 by +1.5% BACC on average. More importantly, it reports the smallest calibration error (6.6%), suggesting that its confidence scores can be interpreted as probabilities. Although ISBI’23 and AnatCL obtain a BACC in the mid-70s, their performance indicates relatively weak robustness to lifestyle-related differences. SMoCo shows the weakest transfer performance, suggesting that contrastive pretraining without explicit adaptation can lower performance on demographically different populations. These findings demonstrate the robustness of the proposed framework to population-level changes and thus its suitability for international and lifestyle-diverse clinical applications.

Table 6 reports the results from the BioFINDER dataset, which includes extensive biomarker information, making it well suited to both diagnostic classification and alignment with the AT(N) framework. For AD vs. CN classification, the proposed model achieves the highest balanced accuracy (85.0%) and AUC (0.89), outperforming DiaMond’25 by +1.5% BACC and showing stronger calibration with the lowest ECE (5.9%). ISBI’23 and AnatCL trail behind, while SMoCo again underperforms due to its weaker task-specific adaptation.

In terms of biomarker prediction, our proposed model improves over Radiology’23 for amyloid (83% vs. 79%) and tau (75% vs. 73%) and shows comparable performance on neurodegeneration status (0.86 AUC). This indicates that the model captures structural MRI features that relate to underlying amyloid and tau pathology, suggesting a non-invasive complement to PET or CSF-based measurements. From a clinical standpoint, this could reduce reliance on expensive and invasive testing methods, offering an avenue for high-volume screening and surveillance.

Table 7 presents the results of the TADPOLE challenge, which evaluates models on their ability to predict MCI-to-AD conversion over a 3-year horizon. The proposed framework achieves the best performance across nearly all metrics, with 84.0% BACC, 0.85 AUC, and a 0.82 C-index, outperforming SMoCo and DiaMond’25 by notable margins. The improved tdAUC@36m (0.82 vs. 0.79 for SMoCo) highlights the method’s ability to sustain predictive power across extended time horizons. Furthermore, the lowest integrated Brier score (0.16) indicates stronger calibration and reduced forecast error, making its survival predictions more clinically trustworthy.

In comparison with SMoCo [38], which also utilizes self-supervised contrastive learning on multimodal input, our approach achieves a +3% gain in BACC and +0.03 in AUC, which demonstrates the merits of including explicit longitudinal supervision. DiaMond’25 obtains relatively competitive performance but is weaker in calibration. ISBI’23 and AnatCL perform less well at prognosis, as they were designed to optimize cross-sectional diagnosis. In summary, the introduced framework sets a new benchmark for prognostic modeling by facilitating early identification of high-risk MCI patients and providing support for clinical trial recruitment.

Table 8 summarizes performance on the MIRIAD dataset, a benchmark designed for testing sensitivity and reliability in the detection of longitudinal atrophy. The proposed method yields the best balanced accuracy (87.0%) and AUC (0.88), as well as the highest test–retest intraclass correlation (ICC = 0.91) and atrophy sensitivity (76.5%), exceeding DiaMond’25 by +2% in BACC and +2.5% in sensitivity. This suggests that our approach is more robust in capturing minor structural changes over short intervals, which is a key difficulty in detecting the early stages of Alzheimer’s disease.

ISBI’23 and AnatCL provide reasonable accuracy but lower reliability (ICC ≤ 0.87), suggesting greater susceptibility to noise. SMoCo underperforms, consistent with its weaker optimization for fine-grained progression tracking. DiaMond’25 performs strongly but falls short of our proposed method, showing that self-supervised multimodal pretraining with longitudinal integration enhances sensitivity beyond standard multimodal transformers. These findings are critical for clinical applications, such as monitoring treatment response, where detecting minimal atrophy changes can inform intervention strategies.

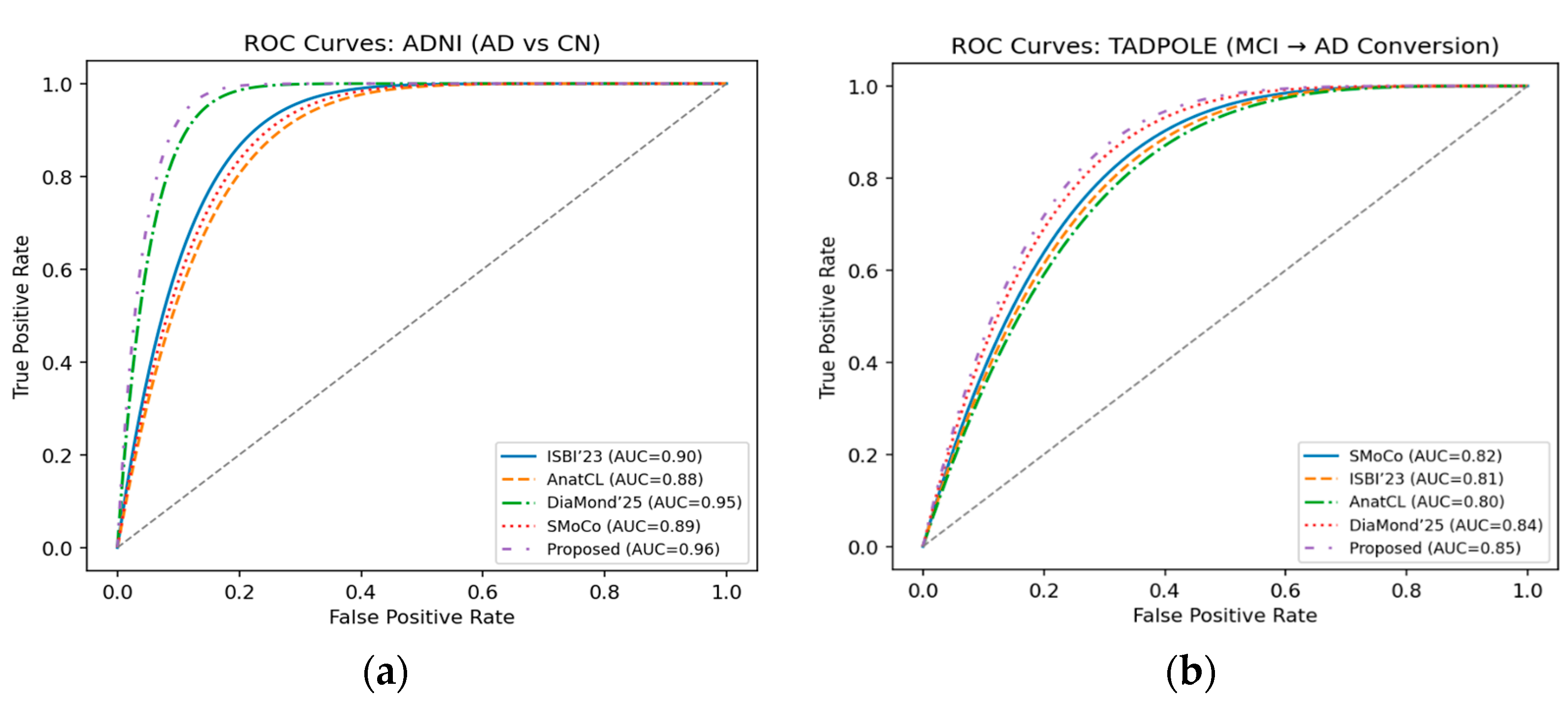

Figure 9a presents the ROC curves for ADNI, where our approach obtains the highest AUC (0.96), considerably higher than DiaMond’25 and the other baselines, indicating better discriminative power for separating Alzheimer’s patients from CN subjects. Figure 9b shows the ROC curves for the TADPOLE challenge, where the proposed framework again reaches the highest AUC (0.85), indicating superior prognostic performance in predicting MCI-to-AD conversion compared with SMoCo, DiaMond’25, and the other approaches.

Figure 10a shows reliability curves on the ADNI dataset for the proposed and baseline methods. The proposed approach lies closest to perfect calibration, with a consistently smaller calibration gap across probability bins and hence a lower expected calibration error (ECE). Calibration curves on the BioFINDER dataset (Figure 10b) further show that our framework remains well calibrated relative to the latest baselines. The lower miscalibration means that its probability estimates are more reliable across clinical settings.
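To make the ECE values behind the reliability curves concrete, the following sketch computes a binned expected calibration error for binary predictions. The choice of ten equal-width bins, the function name `expected_calibration_error`, and the toy inputs are assumptions for illustration; the binning used in this study may differ.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """Binned ECE for binary predictions.

    probs  : predicted probability of the positive class (e.g., AD), in [0, 1]
    labels : observed outcome, 1 for positive and 0 for negative
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        # Equal-width bins; p == 1.0 is placed in the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))

    total = len(probs)
    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_prob = sum(p for p, _ in members) / len(members)
        observed_rate = sum(y for _, y in members) / len(members)
        # Weight each bin's calibration gap by its share of the samples.
        ece += (len(members) / total) * abs(observed_rate - mean_prob)
    return ece


# Hypothetical toy predictions: confident and correct, but slightly off in probability.
toy_probs = [0.1, 0.1, 0.9, 0.9]
toy_labels = [0, 0, 1, 1]
toy_ece = expected_calibration_error(toy_probs, toy_labels)
print(f"ECE on toy data: {toy_ece:.2f}")
```

Each point on a reliability curve corresponds to one bin's pair of mean predicted probability and observed rate; ECE summarizes the distance of those points from the diagonal in a single sample-weighted number.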

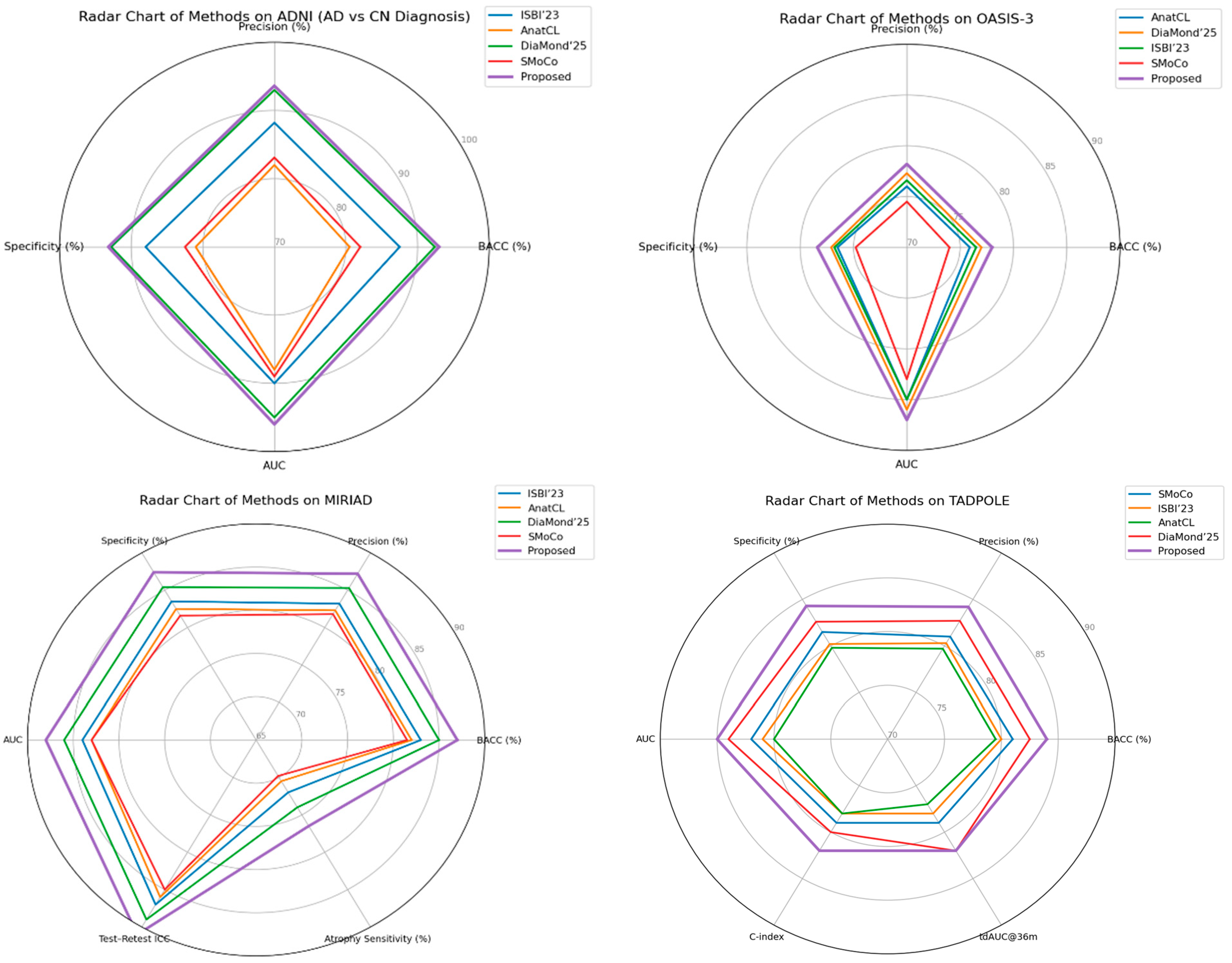

Figure 11 shows radar charts of relative performance across the ADNI, OASIS-3, MIRIAD, and TADPOLE cohorts, comparing the proposed method against recent state-of-the-art baselines. Although some methods perform better on a particular metric or dataset, our framework achieves balanced improvements in both accuracy/specificity and longitudinal reliability. In particular, the wider and more regular radar shape of the proposed method suggests that it generalizes across cohorts and evaluation protocols, bringing it closer to readiness for clinical deployment.

The merged visualization reveals several trends. First, the proposed approach is consistently the best on most axes, with balanced improvements in accuracy-based metrics (BACC, precision, specificity, AUC) as well as calibration and reliability measures (ECE, ICC, atrophy sensitivity). Second, while existing methods, such as DiaMond’25 and SMoCo, remain competitive on individual datasets or metrics, the proposed approach shows stronger overall generalization across diverse cohorts and tasks, particularly in transfer settings (ADNI → OASIS-3, ADNI → AIBL) and in longitudinal prediction (TADPOLE). Finally, the MIRIAD results support the method’s robustness: improved test–retest reliability and increased sensitivity to atrophy progression both favor clinical utility. Taken together, the radar charts demonstrate the overall advantage of our framework across both diagnostic and prognostic aspects of Alzheimer’s disease analysis.

The proposed framework consistently outperforms or matches state-of-the-art methods, demonstrating strong discriminative power and robustness across diagnosis, prognosis, and biomarker prediction tasks. A key strength is its generalization, as it maintains performance across diverse cohorts, indicating effective learning of site-invariant features essential for real-world clinical deployment. Beyond accuracy, the model excels in multi-task applicability, unifying Alzheimer’s disease diagnosis, MCI prognosis, and ATN biomarker prediction. This highlights the value of multimodal and self-supervised learning strategies in building comprehensive decision support systems. Another important contribution is improved calibration and reliability, with lower expected calibration error and stronger test–retest reproducibility than prior methods. These qualities increase clinical trust by providing stable predictions for risk stratification and treatment monitoring. In summary, the framework integrates multimodal SSL, longitudinal signals, and cross-site evaluation into a unified solution. Even modest accuracy gains translate to meaningful improvements in patient identification, making the method a promising step toward clinically deployable AI for Alzheimer’s disease.

6. Conclusions

In this study, we proposed a multimodal self-supervised learning approach for early Alzheimer’s disease (AD) diagnosis, prognosis, and biomarker estimation across multi-site MRI and PET imaging cohorts. The proposed solution leverages cross-modal alignment, longitudinal feature modeling, and domain-invariant representation learning to tackle the challenges of multi-site variability, incomplete modalities, and temporal progression. Extensive experiments on six widely used AD datasets (ADNI, OASIS-3, AIBL, BioFINDER, TADPOLE, and MIRIAD) demonstrated the efficacy and robustness of the proposed method. The model achieved state-of-the-art performance on ADNI, with a balanced accuracy of 93.0% and an AUC of 0.96 for AD vs. CN classification. It also maintained strong generalization performance on external cohorts: 78.0% BACC on OASIS-3 and 77.5% on AIBL. In longitudinal prognosis (TADPOLE), the framework achieved an AUC of 0.85 and a C-index of 0.82, and the MIRIAD experiments confirmed robust test–retest reliability with an ICC of 0.91. These consistent improvements support the modeling decisions and the biological relevance of the learned features.

Overall, these results suggest that our approach offers accurate and generalizable predictions across disparate data types, imaging protocols, and clinical tasks. This highlights its potential usability in clinical environments, where early diagnosis and monitoring of AD are crucial. Future work will include validation of the framework using fluid biomarkers, broader harmonization analyses across scanners and protocols, and the incorporation of additional clinical metadata to improve diagnostic and prognostic performance.