1. Introduction

Heart disease (HD) is a leading cause of morbidity and mortality worldwide and places a significant burden on healthcare systems and individuals [1]. The complexity of HD and the range of its risk factors, such as age, lifestyle choices, genetic predisposition, and pre-existing conditions, make early diagnosis and effective treatment particularly difficult [2]. Physical examination, laboratory assays, and imaging studies provide useful information; however, these conventional diagnostic modalities are often time-consuming, costly, and dependent on specialist expertise, which can delay appropriate intervention. Accordingly, there is a growing need for efficient, automated machine learning-based tools that can aid in the early detection and screening of HD.

Artificial intelligence (AI), especially machine learning (ML) [3] and deep learning (DL) [4], has brought promising advances to IoT-enabled medical diagnostics. These methods can handle large, complex data, recognize trends, and support screening and case identification, helping healthcare workers make informed decisions. Because AI systems can process and learn from immense volumes of historical medical data, they can potentially screen and identify cases more accurately and faster than traditional approaches, providing relevant input to clinical decisions [5]. However, employing AI to predict cardiac disorders has several limitations.

One of the most substantial problems in current medical informatics is the prevalence of imbalanced datasets [6,7]. Specifically, healthy subjects are often heavily overrepresented relative to diseased individuals; this skew can bias screening models and lead to suboptimal performance on the minority class. The literature offers a range of data-balancing techniques to address this issue, such as generating synthetic observations and oversampling the minority class. A further persistent difficulty is identifying the most informative variables among the many medical descriptors that might affect cardiovascular risk. Redundant [8], unnecessary, or noisy features are common in clinical datasets and can degrade the performance of supervised learning algorithms. By focusing on the most salient predictors and reducing dimensionality, Recursive Feature Elimination (RFE) [9] has yielded appreciable gains in predictive accuracy. Interpretability is perhaps the single most important quality of AI in clinical environments: physicians need to trust the outputs of these systems before incorporating them into routine practice. Opaque “black-box” models that offer no insight into the reasoning behind their screening or case identification decisions are often met with skepticism in healthcare settings. Beyond producing precise screening and case identification, AI models should therefore provide explanations that are understandable and actionable for healthcare professionals. To address this issue, we use Shapley Additive Explanations (SHAP), which increases model transparency by quantifying how much each feature contributes to the model’s screening and case identification outputs [10].

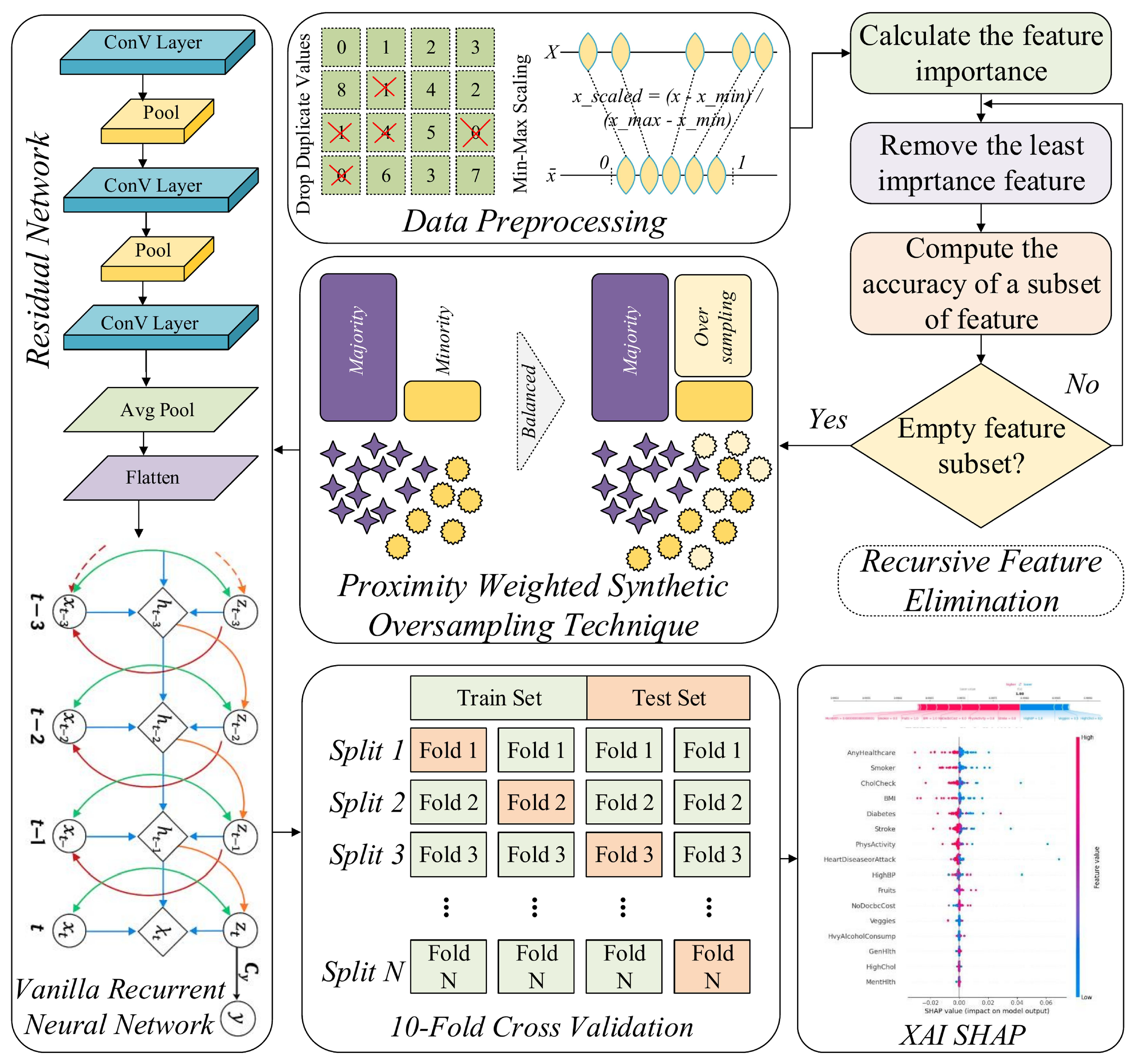

In this paper, we propose a reliable, IoT-enabled HD screening and case identification approach that combines several advanced methods. We begin with rigorous data preprocessing, including class balancing with the Proximity-Weighted Synthetic (ProWSyn) oversampling method. We then apply Recursive Feature Elimination (RFE) to select the most relevant features. At the heart of the proposed approach, RAVE-HD (ResNet And Vanilla RNN Ensemble for HD screening and case identification), lies a hybrid DL architecture that sequentially fuses a Residual Network (ResNet) and a Vanilla Recurrent Neural Network (Vanilla RNN). ResNet extracts high-level features from the HD data, while the Vanilla RNN learns the non-linear behavior of the features (variables) in the Heart Disease Health Indicators (HDHI) dataset. We evaluate the proposed approach with multiple metrics and show that it outperforms conventional ML and DL models in screening and case identification quality, producing accurate, robust, and interpretable results.

The key contributions of this article are outlined herein:

We propose RAVE-HD (ResNet And Vanilla RNN Ensemble for HD), a novel sequential deep learning approach to improve the accuracy of HD screening and case identification. In this approach, Min-Max feature scaling rescales the features of the HD dataset, which stabilizes the model and improves its capability to generalize across different input distributions [11]. The ProWSyn (Proximity Weighted Synthetic Sampling) technique is employed to rectify class imbalance by creating synthetic minority-class samples near majority-class samples, close to the classification boundary [12]. Recursive Feature Elimination (RFE) is then used to extract the most influential features and reduce dimensionality by retaining only the most relevant ones [13]. Next, the novel RAVE model, which sequentially integrates a Residual Network (ResNet) for high-level feature extraction with a Vanilla Recurrent Neural Network (Vanilla RNN), is applied to extract temporal dynamics from clinical time-series records. The generalizability of the RAVE model is assessed with stratified 10-fold cross-validation. Finally, SHAP is used to make the RAVE model’s outputs more transparent and trustworthy. Together, these components improve generalization, reduce bias, and support more reliable HD screening and case identification.

To prepare the data for model training, several preprocessing techniques are used, including Min-Max scaling and duplicate removal. Because these procedures reduce noise and standardize data formats, they can help AI algorithms perform better and more precisely. RFE removes irrelevant features, allowing the model to focus on the most informative ones when predicting HD. This improves generalization, reduces the risk of overfitting, lowers computational cost, and increases accuracy. Proximity Weighted Synthetic Sampling (ProWSyn) is introduced to address the major problem of class imbalance. ProWSyn assigns weights to minority samples according to their proximity to the decision boundary, concentrating on samples that are harder to classify. This produces more balanced data that can improve the classifier’s screening and case identification of HD without biasing it toward the majority class.
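RFE repeatedly fits an estimator, ranks features by importance, and discards the weakest until the target number remains. The estimator RAVE-HD uses for ranking is not stated in this section, so the sketch below assumes an ordinary least-squares model whose absolute coefficients serve as importances (meaningful once features share a scale, e.g. after Min-Max scaling). The data and the function name `rfe_linear` are hypothetical; scikit-learn's `sklearn.feature_selection.RFE` implements the general procedure.

```python
import numpy as np

def rfe_linear(X, y, n_features_to_select):
    """Recursive Feature Elimination with a least-squares ranker (illustrative).

    Assumption: |coefficient| of a linear fit is the importance score, which
    only makes sense when features are on comparable scales.
    """
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_features_to_select:
        # Refit a linear model (with intercept) on the currently retained features.
        design = np.column_stack([X[:, remaining], np.ones(len(X))])
        coef, *_ = np.linalg.lstsq(design, y, rcond=None)
        importance = np.abs(coef[:-1])  # ignore the intercept
        # Eliminate the single least important feature, then repeat.
        del remaining[int(np.argmin(importance))]
    return sorted(remaining)

rng = np.random.default_rng(0)
X = rng.random((500, 6))
# Hypothetical binary target driven only by features 1 and 4.
y = (3.0 * X[:, 1] - 2.0 * X[:, 4] + 0.05 * rng.standard_normal(500) > 0.5).astype(float)
print(rfe_linear(X, y, 2))
```

Eliminating one feature per iteration and refitting is what distinguishes RFE from ranking all features once: importances are re-estimated after each removal.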

This work proposes a sequential hybrid of a ResNet and a Vanilla Recurrent Neural Network (Vanilla RNN). ResNet identifies intricate patterns that correlate with the incidence of HD. The Vanilla RNN then models sequential dependencies, which makes it useful for time-series and other time-sensitive records where the order of events is crucial. The sequential hybrid allows the model to exploit both ResNet’s feature extraction and the Vanilla RNN’s modeling of sequential dependence to achieve high screening and case identification accuracy. To assess the robustness and generalization of the proposed hybrid model, stratified 10-fold cross-validation (stratified 10-FCV) is used. It gives a more precise estimate of the model’s generalization capability, reduces the risk of an overfitted evaluation, and verifies that performance is consistent across data folds.
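Stratification keeps each fold’s class ratio close to that of the full dataset, which matters at a 9% positive rate. A minimal NumPy sketch, assuming labels are in a 1-D array `y`; the function name is hypothetical, and in practice scikit-learn’s `StratifiedKFold` provides this.

```python
import numpy as np

def stratified_kfold_indices(y, n_splits=10, seed=0):
    """Yield (train_idx, test_idx) pairs whose test folds preserve class proportions."""
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(y), dtype=int)
    for label in np.unique(y):
        idx = np.flatnonzero(y == label)
        rng.shuffle(idx)
        # Deal this class's samples round-robin across the folds.
        fold_of[idx] = np.arange(len(idx)) % n_splits
    for k in range(n_splits):
        yield np.flatnonzero(fold_of != k), np.flatnonzero(fold_of == k)

# Toy labels with 9% positives.
y = np.array([1] * 90 + [0] * 910)
for train, test in stratified_kfold_indices(y):
    print(len(test), int(y[test].sum()))  # each fold: 100 samples, 9 positives
```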

Shapley Additive Explanations (SHAP) are also employed to increase the transparency of the proposed RAVE model by quantifying the influence of each feature on its screening and case identification outputs. SHAP provides a more comprehensive and detailed explanation of the model’s choices, enabling medical experts to trust its results.
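SHAP attributes a prediction to features using Shapley values. For a handful of features these can be computed exactly by enumerating feature coalitions, which shows what SHAP explainers approximate at scale. The sketch assumes that an “absent” feature is replaced by a single baseline value (one background sample); the function name `exact_shapley` and the toy models are hypothetical. The attributions sum to f(x) - f(baseline), the additivity property that gives SHAP its name.

```python
import itertools
import math
import numpy as np

def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x; absent features take their baseline value.

    Enumerates every coalition, so it is only feasible for a few features.
    """
    n = len(x)
    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for S in itertools.combinations(others, size):
                z = baseline.copy()
                z[list(S)] = x[list(S)]
                without_i = f(z)
                z[i] = x[i]
                with_i = f(z)
                phi[i] += weight * (with_i - without_i)
    return phi

# For a linear model, phi_i = w_i * (x_i - baseline_i).
w = np.array([2.0, -1.0, 0.5])
x = np.array([1.0, 2.0, 3.0])
print(exact_shapley(lambda z: float(w @ z) + 0.3, x, np.zeros(3)))
```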

Sensitivity-to-Prevalence Analysis (SPA) is used to assess how the RAVE model responds to different disease prevalence scenarios. This analysis checks that the recall and precision of the RAVE model remain relatively stable when it is applied to clinically diverse screening cohorts, further supporting the generalizability and real-world reliability of the model.
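For a classifier with fixed sensitivity and specificity, recall does not depend on prevalence, whereas precision (positive predictive value, PPV) does, through Bayes’ rule: PPV = sens·p / (sens·p + (1 - spec)(1 - p)). The short sketch below tabulates this relationship; the sensitivity and specificity of 0.90 are hypothetical values, not results reported for RAVE.

```python
def ppv(sensitivity, specificity, prevalence):
    """Precision (positive predictive value) implied by Bayes' rule at a given prevalence."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)

# Hypothetical operating point: sensitivity = specificity = 0.90.
for p in (0.05, 0.09, 0.20, 0.50):
    print(f"prevalence={p:.2f}  recall=0.90  precision={ppv(0.90, 0.90, p):.3f}")
```

At the 9% prevalence of the HDHI data, this hypothetical operating point gives a precision of about 0.47, versus 0.90 at 50% prevalence, which is why prevalence is worth varying explicitly.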

The remaining sections of this manuscript are arranged as follows.

Section 2 reviews and critically analyzes existing research related to heart-disease screening and case identification with a focus on the application of artificial intelligence (AI)-based methods.

Section 3 presents the proposed RAVE-HD approach, including data preprocessing, feature selection, and the design of the sequential hybrid deep learning model (RAVE).

Section 4 reports the experimental results.

Section 4.1 compares the performance of existing machine learning architectures against the proposed RAVE model.

Section 4.2 compares feature selection strategies.

Section 4.3 analyzes RAVE under different imbalance handling techniques.

Section 4.4 evaluates stratified k-fold cross-validation for fair and reliable evaluation.

Section 4.5 presents statistical significance analyses.

Section 4.6 analyzes sensitivity to prevalence for robustness evaluation on the original data.

Section 4.7 examines the generalizability gap through cross-dataset evaluation for heart disease screening.

Section 4.8 presents SHAP interpretability for HD prediction and case identification.

Section 4.9 reports an ablation study of the contribution of each component.

Section 5 discusses limitations and future work.

Finally, Section 6 concludes the RAVE-HD study.

2. Related Work

Recent research on HD prediction, screening, and case identification has explored data balancing, feature selection, ML, and DL techniques to improve accuracy and to address persistent challenges such as data imbalance, feature selection, and the identification of intricate patterns.

Various ML and DL architectures have been investigated to improve the prediction of heart disease, especially in the presence of class imbalance in medical records. Bilal et al. (2025) [14] developed a hybrid DL model that combines a Bidirectional Long Short-Term Memory (BiLSTM) network with Bidirectional Gated Recurrent Units (BiGRUs) and demonstrated improved performance over conventional models. To tackle dataset imbalance, they applied the Synthetic Minority Oversampling Technique (SMOTE), which creates artificial minority-class samples, improving diagnostic accuracy and decreasing the likelihood of overfitting. However, this dependence on SMOTE can be problematic for extremely imbalanced classes or very small datasets, where it risks diminishing generalization ability.

ML-based HD prediction has also attracted growing attention in the academic literature, particularly with respect to data imbalance and feature selection. Noor et al. (2023) proposed PaRSEL, a stacking approach for this screening and case identification task that concatenates a Passive Aggressive Classifier (PAC), Ridge Classifier (RC), Stochastic Gradient Descent Classifier (SGDC), and eXtreme Gradient Boosting (XGB), with a Logit Boosting (LB) meta-layer [15]. To mitigate class imbalance, the authors combined three data-balancing algorithms, Proximity Weighted Random Affine Shadowsampling (ProWRAS), Localized Random Affine Shadowsampling (LoRAS), and SMOTE, with dimensionality-reduction strategies such as Recursive Feature Elimination (RFE), Linear Discriminant Analysis (LDA), and Factor Analysis (FA). Their experimental results show that PaRSEL is more accurate than its individual base classifiers, reaching up to 97% accuracy and 98% Receiver Operating Characteristic Area Under the Curve (ROC-AUC), demonstrating the efficacy of combining multiple balancing and dimensionality-reduction methods. However, the approach has several limitations, including the potential for overfitting on higher-dimensional data and considerable computational overhead.

Several data-balancing techniques have been developed to address class imbalance in HD prediction. Yang et al. (2024) proposed a divide-and-conquer approach to data balancing based on the K-Means algorithm [16]. The algorithm splits the data into several clusters, and each cluster is sampled separately to balance the classes. The authors compared this approach with traditional methods, namely SMOTE, NearMiss, and SMOTE with Tomek links (SMOTE-Tomek). The proposed technique improved classifier performance, raising accuracy to between 81% and 90%. However, its reliance on clustering may cause problems when instances of different classes overlap substantially. In complex medical datasets, such conditions can lead to overfitting of the predictive algorithm and introduce bias into the generated synthetic data.

Research on coronary heart disease (CHD) prediction with ML algorithms has gained momentum in recent years. Omotehinwa et al. (2024) [17] optimized the Light Gradient Boosting Machine (LightGBM) algorithm for the early detection of CHD. Their approach mitigated class imbalance with Borderline-SMOTE and imputed missing data with Multiple Imputation by Chained Equations (MICE). The resulting tuned model achieved an accuracy of 98.82%, a ROC-AUC of 0.9963, and a precision of 98.35%, a significant improvement over baseline models. The downside, however, is that the model requires computationally costly hyperparameter tuning and may overfit to synthetic samples, which can impair generalization to other datasets.

Manikandan et al. (2024) proposed machine learning-based heart disease prediction using the Boruta feature selection algorithm and classification methods such as Logistic Regression (LR), Support Vector Machines (SVMs), and Decision Trees (DTs) [18]. They used Boruta to select the best features of the Cleveland Clinic HD dataset and showed that this improved model performance, with LR reaching an accuracy of 88.52%. However, LR assumes a linear relationship between the features and the log-odds of the outcome, so it may fail to capture complex non-linear patterns in the data, which limits its performance compared to more flexible models.

Malakouti et al. (2023) evaluated various ML models, such as Random Forest (RF), LR, Gaussian Naive Bayes (GNB), and LDA, for classifying HD samples based on Electrocardiogram (ECG) data [19]. The GNB classifier performed best, with a classification accuracy of 96% for both the healthy and the HD populations. However, GNB assumes that features are conditionally independent given the class and that continuous features follow a normal distribution, which is not always the case for complex ECG data and may reduce accuracy.

Ali et al. (2019) proposed a hybrid diagnostic system that fuses a χ² statistical model for feature selection with an optimally configured Deep Neural Network (DNN) to predict HD [20]. The χ²-DNN model achieved a prediction accuracy of 93.33%, outperforming conventional DNN models because it removed irrelevant features and reduced overfitting. The drawback is that a DNN may overfit if it is configured improperly with too many layers or noisy features, although rigorous Grid Search Optimization (GS) can partly mitigate this.

Yongcharoenchaiyasit et al. (2023) proposed a Gradient Boosting (GB)-based model for classifying elderly patients with dementia aortic stenosis (DOS) and heart failure (HF) [21]. With feature engineering, the model achieved an accuracy of 83.81%, a major improvement over the other classifiers. Although GB produces outstanding results, its main limitation is its handling of class imbalance: unless specific procedures such as oversampling or class reweighting are added, it is prone to biased predictions.

Noroozi et al. (2023) employed feature selection strategies to optimize ML models for HD prediction [22]. Their experiments confirmed that feature selection enhanced the accuracy, precision, and sensitivity of ML models including SVM, GNB, and RF. Nevertheless, GB proved prone to overfitting and performed poorly under some feature-selection settings, particularly when the feature set was too large or contained irrelevant features. This impaired the model’s ability to generalize adequately across dissimilar datasets.

Ben-Assuli et al. (2023) proposed a human–machine collaboration framework for building prediction models that support accurate Congestive Heart Failure (CHF) risk stratification [23]. Their experiment compared feature sets chosen by experts and by ML models, and the combined list of expert- and ML-chosen features outperformed either alone, with a ROC-AUC of 0.8289. Despite these gains, one limitation of the method is the complexity of its feature set: the final model has 42 features, which might overload clinicians with information in practice. Moreover, the model’s reliance on such a large volume of data raises concerns about computational efficiency and scalability in smaller institutions.

Pavithra et al. proposed HRFLC (RF + Adaptive Boosting (AdaBoost) + Pearson Coefficient), a hybrid feature selection method, to forecast cardiovascular diseases [24]. It combines the merits of several algorithms to improve prediction accuracy by selecting the most informative features from a large amount of data. The HRFLC model achieved higher accuracy and less overfitting than conventional models. However, one drawback of this strategy is its computational complexity, particularly with ensemble techniques such as RF and AdaBoost, which may strain resources and increase runtime on large-scale data.

Gonzalez et al. (2024) [25] proposed a multi-modal strategy for HF risk estimation that merges short 30 s ECG recordings with sampled long-duration Heart Rate Variability (HRV). For survival modeling, they used XGB and Accelerated Failure Time (AFT) models together with a Residual Network (ResNet) that processes raw ECG signals directly. The study improved HF risk assessment, reaching a concordance index of 0.8537. However, the ResNet model’s complexity is one of its limitations: it requires substantial processing power and may be less interpretable than other models, making it harder to deploy on resource-constrained devices.

Al Reshan et al. (2023) presented an effective HD prediction model based on Hybrid Deep Neural Networks (HDNNs), which combine dense-layered LSTM networks with a CNN to achieve higher accuracy [26]. Their model attained 98.86% accuracy on complete datasets and outperformed traditional ML models. However, the computational cost of the HDNN technique limits its suitability for real-time or resource-constrained environments, especially when large databases must be managed, as is to be expected as the volume of medical data grows.

Shrivastava et al. (2023) approached HD prediction with a hybrid combination of a CNN and a BiLSTM network to boost classification performance [27]. The model achieved an outstanding accuracy of 96.66% on the Cleveland Heart Disease dataset. One drawback of this strategy is its computational cost, stemming particularly from the deep design of the hybrid model, which makes it unsuitable for real-time implementation on devices with constrained processing power.

Although HD prediction, screening, and case identification models have advanced, there remains a clear need for better data balancing, feature selection, and hybrid ensemble approaches. Balanced data ensure that minority classes are adequately represented and that the model is not unduly biased toward the majority class. Effective feature selection strategies are needed to reduce the dimensionality of the data while retaining the information most relevant to screening and case identification. Furthermore, a hybrid ensemble of multiple models can improve the robustness and generalizability of the screening and case identification system. It is also important to introduce Explainable AI (XAI) methods to make complex models more transparent and understandable. XAI allows healthcare practitioners to understand and trust the model’s decisions, an essential aspect of clinical adoption. Based on this review, we argue for further enhancing the capability of heart disease screening and case identification models. Such models should incorporate advanced computational techniques to improve diagnostic accuracy, enhance generalization, and make heart disease screening and case identification more reliable and effective.

3. Proposed System Approach: RAVE-HD (ResNet and Vanilla RNN Ensemble for HD) Screening and Case Identification

In this work, we present RAVE-HD (ResNet And Vanilla RNN Ensemble for HD screening and case identification), a novel sequential DL approach for HD screening and case identification that integrates multiple data preprocessing and modeling stages. First, duplicate records are identified and removed to avoid data redundancy. Next, Min-Max scaling normalizes all numerical features to a common range, facilitating stable and efficient model convergence. To mitigate the class imbalance inherent in the dataset, the Proximity Weighted Synthetic Oversampling Technique (ProWSyn) generates synthetic minority-class samples near the decision boundary, improving the approach’s ability to identify rare heart disease cases. After balancing, Recursive Feature Elimination (RFE) reduces dimensionality by selecting the most influential features, which helps minimize noise and overfitting. Finally, we propose a hybrid DL model that sequentially combines the spatial abstraction capabilities of the Residual Network (ResNet) with the temporal sequence modeling strength of the Vanilla Recurrent Neural Network (Vanilla RNN) to capture complex feature interactions and temporal dependencies, as shown in Figure 1 and illustrated by Algorithm 1.
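The sequential ResNet-then-Vanilla-RNN design can be illustrated with a forward-pass sketch in NumPy. This section does not specify layer widths, the number of residual blocks, or how RAVE turns a tabular feature vector into a sequence for the RNN, so every size below (16 input features, width 32, two residual blocks, 8 steps of 4 values, 16 hidden units) and the reshape step are hypothetical choices. The weights are random and untrained, and the names `rave_forward`, `residual_block`, and `vanilla_rnn` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(42)

def dense(n_in, n_out):
    # Small random weights; a trained model would learn these.
    return rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out)

def relu(a):
    return np.maximum(a, 0.0)

def residual_block(h, W1, b1, W2, b2):
    # ResNet-style block: output = ReLU(h + F(h)), with F a two-layer MLP.
    return relu(h + relu(h @ W1 + b1) @ W2 + b2)

def vanilla_rnn(seq, Wx, Wh, b):
    # Elman RNN: h_t = tanh(x_t Wx + h_{t-1} Wh + b); returns the final state.
    h = np.zeros((seq.shape[0], Wh.shape[0]))
    for t in range(seq.shape[1]):
        h = np.tanh(seq[:, t, :] @ Wx + h @ Wh + b)
    return h

# Hypothetical sizes (not taken from the paper).
n_features, width, steps, hidden = 16, 32, 8, 16
W_in, b_in = dense(n_features, width)
blocks = [dense(width, width) + dense(width, width) for _ in range(2)]  # (W1, b1, W2, b2)
Wx, _ = dense(width // steps, hidden)
Wh, b_h = dense(hidden, hidden)
W_out, b_out = dense(hidden, 1)

def rave_forward(X):
    h = relu(X @ W_in + b_in)
    for W1, b1, W2, b2 in blocks:
        h = residual_block(h, W1, b1, W2, b2)
    # Hypothetical reshape: split the ResNet embedding into `steps` chunks
    # and feed them to the RNN as a short sequence.
    seq = h.reshape(len(X), steps, width // steps)
    h_last = vanilla_rnn(seq, Wx, Wh, b_h)
    logits = h_last @ W_out + b_out
    return 1.0 / (1.0 + np.exp(-logits))  # probability of heart disease

probs = rave_forward(rng.random((5, n_features)))
print(probs.ravel())
```

The identity shortcut `h + F(h)` is what makes the first stage residual; the second stage is "vanilla" because it uses a single tanh recurrence with no gates, unlike an LSTM or GRU.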

3.1. Data Preprocessing

To make the HDHI dataset suitable for effective training of deep learning models, a structured preprocessing pipeline is implemented to improve the quality, consistency, and interpretability of the data. These steps ensure that all features are in the correct formats and on comparable scales, which supports stable and efficient model training.

3.2. Data Description

This study uses the Heart Disease Health Indicators (HDHI) dataset, a rich dataset developed for cardiovascular health analytics and predictive modeling of cardiac disease. HDHI is derived from the Behavioral Risk Factor Surveillance System (BRFSS) [28], an ongoing health surveillance program established in 1984 and managed by the U.S. Centers for Disease Control and Prevention (CDC). The dataset comprises 253,680 records, each containing the health profile of an adult respondent. It includes 22 variables covering demographic characteristics, lifestyle behaviors, and clinical health measures; notable variables include Age, Body Mass Index (BMI), Smoking behavior, Alcohol use, Physical and Mental health status, and history of Stroke and Diabetes. The data were collected through standardized, self-reported questionnaires and health surveys conducted under the CDC’s BRFSS initiative, ensuring consistency and comparability of features across participants.

Under the inclusion criteria, all respondents aged 18 years or older with complete and valid responses to the 22 health indicators were included. To maintain dataset integrity and avoid introducing bias into model training, records with missing data, duplicates, or out-of-range values were removed in line with documented exclusion criteria.

The operational definition of “heart disease” followed CDC guidelines: participants who reported a diagnosis of coronary heart disease, myocardial infarction, or angina were coded 1 (positive case of heart disease), and all others were coded 0 (negative case). Because the data are tabular with a binary target, they are well suited to binary classification. The dataset’s large size, demographic heterogeneity, and open availability on the Kaggle platform make it a reliable reference for public health analytics and machine learning modeling.

3.2.1. Duplicate Entries Identification and Removal

Because the HDHI dataset consists of real-world records, it is important to identify redundant entries, which can distort the data and mislead the learning process. A full comparison across all twenty-two columns revealed 23,899 duplicate records. All of these redundant entries were removed, yielding a curated dataset of 229,781 unique observations. Retaining a single instance of each record preserved the consistency and representativeness of the patient population. This cleaning step is essential for models to identify genuine patterns in the data: without repetitions, the models avoid memorizing duplicate information and can therefore generalize better and perform more reliably on unseen cases.
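Exact-duplicate removal that keeps the first occurrence, as described above, can be sketched in plain Python. The toy rows and the function name are hypothetical; the final line only checks the record counts reported in this section.

```python
def drop_duplicate_records(rows):
    """Keep the first occurrence of each fully identical record, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row)  # a duplicate must match on every column
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique

# Hypothetical toy records (three columns each).
rows = [[1, 0, 27.0], [0, 1, 31.5], [1, 0, 27.0], [0, 0, 22.1], [0, 1, 31.5]]
print(drop_duplicate_records(rows))  # [[1, 0, 27.0], [0, 1, 31.5], [0, 0, 22.1]]

# Consistency check on the counts reported for HDHI.
print(253_680 - 23_899)  # 229781
```

With pandas, `DataFrame.drop_duplicates(keep="first")` performs the same operation on a full table.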

3.2.2. Min-Max Features Scaling

Range normalization (also called Min-Max feature scaling, rescaling, or normalization) is the most straightforward way to rescale a numerical feature into a specific range, usually [0, 1]. This preprocessing step is especially necessary when a dataset contains features measured in different units or on different scales. Because such inconsistencies can have a disproportionate impact on model training, the step is vital for algorithms trained by gradient descent or based on Euclidean distance. By standardizing the range of each feature, Min-Max scaling prevents any single feature from dominating the learning process merely because of its scale, which stabilizes the optimization path and accelerates convergence during training. The transformation subtracts the feature’s minimum observed value and divides by the difference between its maximum and minimum values, as given in Equation (1):

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{1}$$

In the equation, $x$ is the original feature value, $x_{\max}$ and $x_{\min}$ are the maximum and minimum observed values of the feature, respectively, and $x'$ is the normalized output. This linear scaling guarantees that all feature values lie in the same numerical range, so they can contribute equally and proportionally to the model’s training process. Min-Max normalization therefore makes the model more numerically stable and improves its ability to generalize across different input distributions [11].
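The Min-Max formula, x' = (x - x_min) / (x_max - x_min), applied column-wise with a guard for constant columns (which would otherwise divide by zero), can be sketched in NumPy. The helper names and the toy age/BMI-like values are hypothetical.

```python
import numpy as np

def fit_min_max(X):
    """Return the per-column minimum and range used by Min-Max scaling."""
    X = np.asarray(X, dtype=float)
    x_min = X.min(axis=0)
    x_max = X.max(axis=0)
    # Constant columns get a range of 1 so they map to 0 instead of NaN.
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return x_min, span

def apply_min_max(X, x_min, span):
    """x' = (x - x_min) / (x_max - x_min), column by column."""
    return (np.asarray(X, dtype=float) - x_min) / span

X_train = [[20, 18.5], [50, 30.0], [80, 41.5]]  # hypothetical age, BMI
x_min, span = fit_min_max(X_train)
print(apply_min_max(X_train, x_min, span))  # rows: [0, 0], [0.5, 0.5], [1, 1]
```

Fitting the minimum and range on training data and reusing them for validation and test data, as `apply_min_max` allows, keeps held-out data from influencing the scaling; held-out values outside the training range will then fall slightly outside [0, 1].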

3.3. Clinical and Machine Learning-Adapted Baselines

Traditional cardiovascular risk scores, such as the Framingham Risk Score (FRS), the Atherosclerotic Cardiovascular Disease (ASCVD) risk score, and SCORE2, estimate long-term cardiovascular disease (CVD) risk using laboratory and physiological parameters, including systolic blood pressure, HbA1c, creatinine, and blood lipid levels (LDL-C, HDL-C). These clinical variables are not available in the HDHI dataset; therefore, a direct numerical comparison with these established calculators is not feasible. This applies to SCORE2 (Systematic Coronary Risk Evaluation 2), a European 10-year cardiovascular risk model based on age, sex, blood pressure, cholesterol, and smoking status, because key inputs such as systolic blood pressure and cholesterol are missing from the HDHI dataset.

To provide a clinically meaningful reference, the RAVE model at the core of the proposed RAVE-HD approach is benchmarked against a set of machine learning-adapted models that emulate conventional risk logic using survey-based features. These include Logistic Regression (LR), Naïve Bayes (NB), Deep Belief Network (DBN), Gradient Boosting (GB), Residual Network (ResNet), Vanilla RNN, and the ensemble EnsCVDD. These models are commonly applied in cardiovascular screening and risk stratification using health survey data.

Traditional risk equations use fixed mathematical terms to represent interactions between variables (e.g., age × cholesterol). RAVE-HD does not require such predefined terms; instead, it learns these complex dependencies directly from the data through its hybrid ResNet-VRNN architecture and explainable feature selection layer. This design enables data-driven discovery of behavioral–clinical relationships without relying on manually specified clinical coefficients. The comparative evaluation of RAVE-HD against these clinical and machine learning baselines is presented in the “Effect Size and Clinical Relevance: MCID (Minimum Clinically Important Difference) Analysis” section, highlighting its predictive performance, interpretability, and clinical relevance.

3.4. Data Balancing

In medical classification tasks, class imbalance remains one of the most significant and not yet fully solved issues. It has been identified as a root cause of reliability and fairness problems in machine learning models. The challenge is even more significant in DL settings, where the optimization process may inherently favor the majority class because of the disproportionate distribution of class labels. The heart disease dataset used in this study is notably imbalanced: only about 9% of records are labeled as heart disease cases, while the remaining 91% are labeled as healthy. Such skewed distributions frequently yield models that are poor at recognizing minority-class examples and are therefore likely to miss serious medical conditions, even though they may appear to perform well in terms of overall accuracy.
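The accuracy trap described above can be made concrete with a toy example at the stated 9%/91% split: a hypothetical classifier that labels every record as healthy reaches 91% accuracy while detecting no heart disease cases.

```python
import numpy as np

y_true = np.array([1] * 9 + [0] * 91)  # about 9% positives, as in the text
y_pred = np.zeros_like(y_true)         # trivial "always healthy" classifier

accuracy = np.mean(y_pred == y_true)
recall = np.sum((y_pred == 1) & (y_true == 1)) / np.sum(y_true == 1)
print(f"accuracy={accuracy:.2f}, recall={recall:.2f}")  # accuracy=0.91, recall=0.00
```

This is why minority-class metrics such as recall, rather than accuracy alone, are needed to judge a screening model on imbalanced data.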

To reduce this drawback, we use ProWSyn as a targeted method for balancing the training data. ProWSyn is a more sophisticated alternative to classical methods such as SMOTE; its goal is to generate synthetic minority class samples in a more informed, context-aware manner [12]. Whereas SMOTE generates new instances by linear interpolation between randomly chosen minority neighbors, ProWSyn applies a proximity-aware weighting scheme that assigns larger weights to minority examples lying close to the decision boundary, where classification is usually most uncertain, so that more synthetic samples are generated around them. This targeted sampling is intended to make the synthetic examples both varied and informative: they reinforce the decision boundary and increase the model's sensitivity to minority class examples that are hard to classify. Consequently, besides improving the robustness of screening and case identification, ProWSyn supports sensitivity and fairness, which are essential in high-stakes domains such as medical diagnostics.

Rationale for Selecting ProWSyn: ProWSyn is preferred over conventional oversampling techniques such as SMOTE and ADASYN because it combines proximity-based weighting with dynamic boundary awareness. SMOTE interpolates between minority instances uniformly, and ADASYN allocates interpolations according to the local density of majority neighbors; ProWSyn, in contrast, deliberately focuses on minority observations near the decision boundary by giving them larger proximity weights. The resulting synthetic instances are more informative and improve the model's discriminative ability in boundary-rich regions. ProWSyn also offers an exponential decay of weights that down-weights more remote samples, and controllable partitioning parameters (L, K) that allow domain-specific fine-tuning. Together, these properties prevent the over-concentration of synthetic points, limit redundancy, and yield a balanced, boundary-sensitive sampling process, which makes ProWSyn particularly suitable for medical datasets where decision boundaries are often subtle and clinically important.
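The exponential decay mentioned above can be illustrated with a short sketch. It assumes the level-based weighting of the original ProWSyn formulation, in which minority samples are grouped into $L$ proximity levels (level 1 closest to the majority class) and level $l$ receives weight $\exp(-\theta(l-1))$. The smoothing factor `theta` and the helper names `level_weights` and `allocate_synthetic` are illustrative assumptions, and the partitioning step that uses $K$ is omitted.

```python
import numpy as np


def level_weights(num_levels: int, theta: float) -> np.ndarray:
    """Normalized weights exp(-theta * (l - 1)) for proximity levels l = 1..L.

    Assumption: level 1 holds the minority samples closest to the majority
    class, so it receives the largest weight (ProWSyn-style weighting).
    """
    levels = np.arange(1, num_levels + 1)
    weights = np.exp(-theta * (levels - 1))
    return weights / weights.sum()


def allocate_synthetic(n_synthetic: int, num_levels: int, theta: float) -> np.ndarray:
    """Split a budget of synthetic samples across levels in proportion to their weights."""
    weights = level_weights(num_levels, theta)
    counts = np.floor(weights * n_synthetic).astype(int)
    # Give the rounding remainder to the levels nearest the boundary first.
    remainder = int(n_synthetic - counts.sum())
    counts[:remainder] += 1
    return counts


counts = allocate_synthetic(100, num_levels=5, theta=1.0)
print(counts)
```

With `theta = 1.0` and five levels, most of a 100-sample budget goes to the first level and only a handful of samples to the most remote one; a larger `theta` concentrates the budget even more strongly near the boundary.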

The central idea of ProWSyn is to assign a higher probability of selection to those minority class instances that are closer to majority class samples. This proximity is quantified using a distance-based weighting function. For each minority class instance $x_i$, the proximity weight $w_i$ is calculated with Equation (2) as the inverse of its Euclidean distance to the nearest majority class instance $x_j^{\text{maj}}$:

$$w_i = \frac{1}{\min_{j} \lVert x_i - x_j^{\text{maj}} \rVert_2} \qquad (2)$$

These weights are normalized across all $N$ minority instances to obtain sampling probabilities, using Equation (3):

$$p_i = \frac{w_i}{\sum_{k=1}^{N} w_k} \qquad (3)$$

After the probabilities are determined, a minority instance $x_i$ is selected according to $p_i$, a random factor $\lambda \sim \mathcal{U}(0, 1)$ is drawn, and a new synthetic instance is generated by interpolating between $x_i$ and one of its neighbors $x_{nn}$ from the same minority class, as given by Equation (4):

$$x_{\text{new}} = x_i + \lambda \left( x_{nn} - x_i \right) \qquad (4)$$

This interpolation strategy places synthetic instances in regions of higher classification uncertainty and thus strengthens the decision boundary between classes. By amplifying samples that are hard to learn, ProWSyn increases minority class sensitivity while maintaining overall classification robustness. After ProWSyn is applied, the class distribution becomes more balanced, which reduces the influence of the majority class and allows the DL model to learn representative features for both healthy and heart disease cases.
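Equations (2) to (4) can be sketched in NumPy as follows. This is a minimal illustration of the inverse-distance weighting described in this section, not a reference ProWSyn implementation; the neighbor count `k`, the small constant that guards against division by zero, and the function name `proximity_oversample` are assumptions.

```python
import numpy as np


def proximity_oversample(X_min, X_maj, n_new, k=5, seed=0):
    """Generate synthetic minority samples following Eqs. (2)-(4).

    X_min: (N, d) minority samples; X_maj: (M, d) majority samples.
    k and the 1e-12 guard are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    # Eq. (2): inverse Euclidean distance to the nearest majority sample.
    d_maj = np.linalg.norm(X_min[:, None, :] - X_maj[None, :, :], axis=2).min(axis=1)
    weights = 1.0 / (d_maj + 1e-12)
    # Eq. (3): normalize the weights into sampling probabilities.
    probs = weights / weights.sum()
    # k nearest minority neighbors of every minority sample (excluding itself).
    d_min = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d_min, np.inf)
    k = min(k, len(X_min) - 1)
    neighbors = np.argsort(d_min, axis=1)[:, :k]
    # Draw base samples with probability p_i and pick one neighbor for each.
    base = rng.choice(len(X_min), size=n_new, p=probs)
    partner = neighbors[base, rng.integers(0, k, size=n_new)]
    # Eq. (4): interpolate with a random factor lambda in [0, 1).
    lam = rng.random((n_new, 1))
    return X_min[base] + lam * (X_min[partner] - X_min[base])


rng = np.random.default_rng(1)
X_maj = rng.normal(0.0, 1.0, size=(200, 3))
X_min = rng.normal(2.0, 0.5, size=(20, 3))
X_syn = proximity_oversample(X_min, X_maj, n_new=160)
print(X_syn.shape)  # (160, 3)
```

The brute-force distance matrices keep the sketch short but need O(N·M) memory; for large datasets a nearest-neighbor index such as scikit-learn's `NearestNeighbors` would be the practical choice. Only the training split should be oversampled, so that the test set keeps its original class distribution.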

3.5. Recursive Feature Elimination

Feature selection is a key factor in improving the performance and interpretability of a DL model, particularly for high-dimensional healthcare datasets. Removing unnecessary or redundant features reduces model complexity, helps prevent overfitting, speeds up training, and, most importantly, improves the model's ability to generalize to new data. In this study, we use RFE [13] as a systematic method to optimize the input feature space before feeding it into the proposed hybrid DL model.

RFE is a backward selection algorithm: it starts with all input features and iteratively removes those judged least important for the model's screening and case identification performance. At each iteration, a model is trained on the current feature subset and the importance of each feature is estimated (e.g., from the classification loss or the model weights). The least important feature is eliminated, and the model is retrained on the remaining features. This process repeats until the desired number of most important features remains. By consistently identifying and retaining the most informative attributes, RFE keeps a final subset that supports the learning objective while reducing noise and dimensionality. Mathematically, let the initial feature set be $F = \{f_1, f_2, \ldots, f_d\}$. For each feature $f_j \in F$, an importance score $s_j$ is calculated with Equation (5) as the change in a performance criterion (e.g., the classification loss $\mathcal{L}$) when the feature is removed:

$$s_j = \mathcal{L}\left(F \setminus \{f_j\}\right) - \mathcal{L}(F) \qquad (5)$$

Each iteration removes the feature with the lowest importance score, using Equation (6):

$$F \leftarrow F \setminus \left\{ f_{j^{*}} \right\}, \qquad j^{*} = \arg\min_{j} s_j \qquad (6)$$

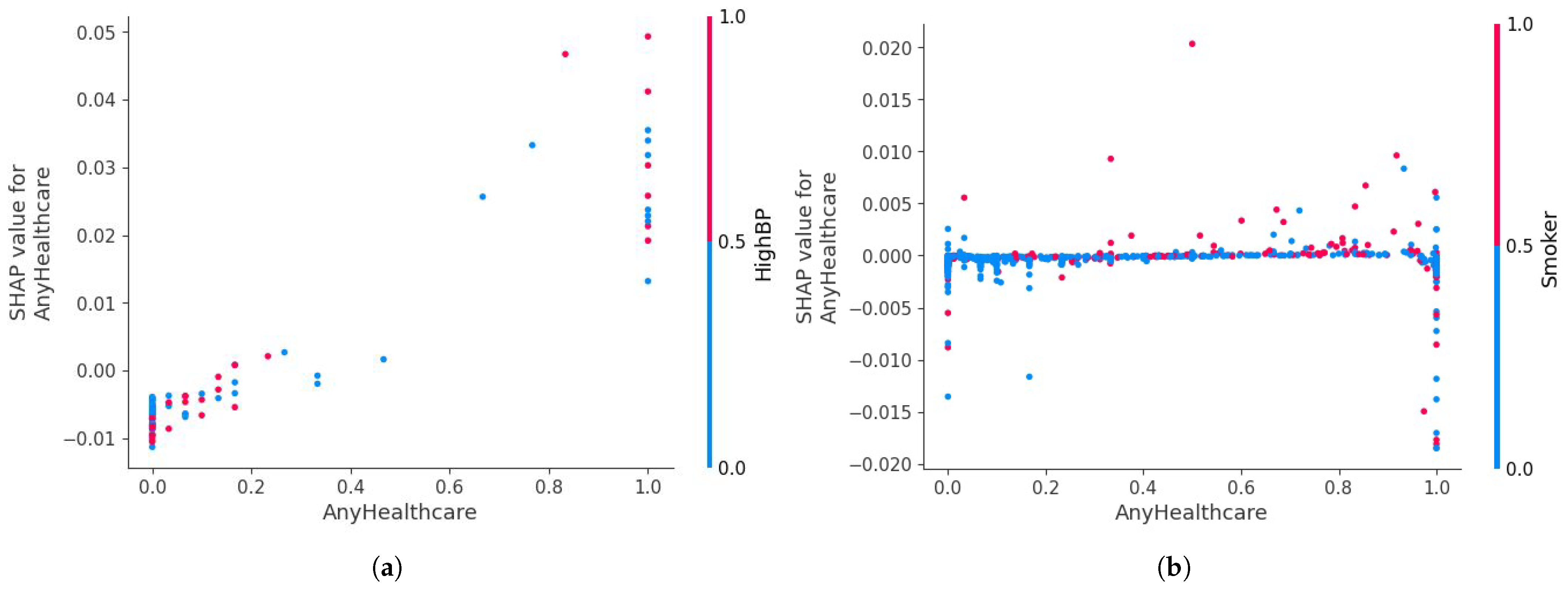

This recursive procedure continues until an optimal number of features, denoted by $k^{*}$, is retained. The resulting subset contains the features that most strongly support the model's ability to distinguish heart disease cases from healthy (normal) cases. Through RFE, the initial 21 features are reduced to $k^{*} = 15$ key predictors: HighBP, CholCheck, BMI, Smoker, Stroke, Diabetes, PhysActivity, Fruits, NoDocbcCost, GenHlth, MentHlth, PhysHlth, Sex, Age, and Education. These features show consistent relevance across multiple iterations and directly enhance the capacity of the proposed hybrid DL model to capture the intricate non-linear associations present in the data.
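The loss-based elimination of Equations (5) and (6) can be sketched with scikit-learn. The sketch assumes logistic regression as the wrapped model and validation log loss as the criterion $\mathcal{L}$; the estimator, the 75/25 split, and the function name `loss_based_rfe` are illustrative assumptions, and synthetic data stands in for the HDHI features.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import log_loss
from sklearn.model_selection import train_test_split


def loss_based_rfe(X, y, n_keep, seed=0):
    """Backward elimination per Eqs. (5)-(6): drop the feature whose removal hurts validation loss least."""
    X_tr, X_val, y_tr, y_val = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=seed)

    def val_loss(cols):
        model = LogisticRegression(max_iter=1000).fit(X_tr[:, cols], y_tr)
        return log_loss(y_val, model.predict_proba(X_val[:, cols]))

    selected = list(range(X.shape[1]))
    while len(selected) > n_keep:
        base = val_loss(selected)
        # Eq. (5): importance = increase in loss when the feature is removed.
        scores = {f: val_loss([c for c in selected if c != f]) - base for f in selected}
        # Eq. (6): eliminate the feature with the lowest importance.
        selected.remove(min(scores, key=scores.get))
    return selected


# With shuffle=False the informative features are the first four columns.
X, y = make_classification(n_samples=600, n_features=10, n_informative=4,
                           n_redundant=0, shuffle=False, random_state=0)
selected = loss_based_rfe(X, y, n_keep=4)
print(sorted(selected))
```

scikit-learn's built-in `sklearn.feature_selection.RFE` implements the more common variant that ranks features by the fitted model's `coef_` or `feature_importances_` rather than by the change in loss; it is cheaper because it fits one model per elimination step instead of one per candidate feature.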

3.6. Residual Networks

ResNets are a class of Deep Neural Networks (DNNs) that address the degradation problem often encountered in very deep architectures. As the number of layers in a traditional neural network grows, training accuracy saturates and then degrades rapidly, owing to optimization difficulties in the deeper layers such as vanishing gradients [29]. ResNet addresses this problem with shortcut connections that let the network learn residual mappings instead of attempting to learn the underlying desired mapping directly.

The core component of ResNet is the residual block, which feeds its input directly to its output, bypassing one or more layers. Mathematically, instead of learning a direct mapping $H(x)$, the network is trained to capture the residual function $F(x) = H(x) - x$. This leads to Equation (7):

$$y = F\left(x, \{W_i\}\right) + x \qquad (7)$$

Here, $x$ is the residual block's input, $F(x, \{W_i\})$ is the residual mapping learned by a sequence of convolutional, batch normalization, and activation layers with weights $\{W_i\}$, and $y$ is the block output. Because gradients can pass directly through the skip connections during backpropagation, this additive identity mapping makes deep network training more reliable and effective. A common residual block has two or three convolutional layers, each followed by batch normalization and a non-linear activation function such as ReLU. When the input and output sizes of a residual block do not match, a linear projection in the shortcut pathway aligns the dimensions. This is normally implemented as a 1 × 1 convolution, which introduces a learnable transformation for dimensional matching. The modified residual mapping can be written as Equation (8):

$$y = F\left(x, \{W_i\}\right) + W_s x \qquad (8)$$

where $F(x, \{W_i\})$ is the output of the residual function and $W_s$ is a trainable projection matrix applied to the input $x$ along the shortcut connection. This adjustment keeps the residual connection additive while allowing the block to change its dimensionality.
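The identity and projection shortcuts of Equations (7) and (8) can be checked numerically with a small sketch. It replaces the convolutional layers with dense matrices and omits batch normalization, so it illustrates only the shortcut arithmetic; the function name `residual_block` and the two-layer form of $F$ are assumptions.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def residual_block(x, W1, W2, Ws=None):
    """Dense stand-in for a residual block.

    F(x, {W_i}) = W2 @ relu(W1 @ x); returns F + x (Eq. 7) or F + Ws @ x (Eq. 8).
    """
    residual = W2 @ relu(W1 @ x)
    shortcut = x if Ws is None else Ws @ x
    if residual.shape != shortcut.shape:
        raise ValueError("input and output sizes differ: pass a projection matrix Ws")
    return residual + shortcut


rng = np.random.default_rng(0)
x = rng.normal(size=4)
# With an all-zero residual branch the block reduces to the identity mapping.
y_id = residual_block(x, np.zeros((8, 4)), np.zeros((4, 8)))
# Changing the width from 4 to 3 requires the projection shortcut of Eq. (8).
y_proj = residual_block(x, rng.normal(size=(8, 4)), rng.normal(size=(3, 8)),
                        Ws=rng.normal(size=(3, 4)))
print(np.allclose(y_id, x), y_proj.shape)
```

When the residual branch outputs zeros, the block reduces to the identity, which is why adding depth need not make optimization harder. Many implementations also apply a ReLU after the addition.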

This design allows the ResNet architecture to scale to very deep networks, such as ResNet-50, ResNet-101, and ResNet-152, without the performance degradation otherwise caused by vanishing gradients. Identity or projection skip connections stabilize training even in very deep networks. In addition, residual learning lets the network build both low-level and high-level abstractions across its layers, which makes ResNet useful in applications such as image classification and time-series prediction. With these advantages, ResNet has become a standard building block of DL models, offering high accuracy, robust convergence, and strong generalization.

3.7. Vanilla Recurrent Neural Networks

Vanilla RNNs are an important family of neural networks designed to represent sequential information by learning the temporal dependencies between the elements of a sequence. Unlike feedforward neural networks, which process each input independently of the preceding context, Vanilla RNNs have recurrent connections that let the network retain information from past time steps. This recurrence enables the model to capture temporal dynamics and context-dependent relationships within a sequence, so it can be applied effectively to tasks involving time-dependent data. Consequently, Vanilla RNNs are widely used in domains such as physiological signal analysis and sensor-based time-series modeling, where the order and evolution of the inputs are central to making accurate predictions.

The Vanilla RNN processes an input sequence $X = (x_1, x_2, \ldots, x_T)$ over $T$ time steps, where each $x_t$ is the input vector at time $t$ [30]. The core computation recursively updates the hidden state $h_t$ using both the input at the current time step and the hidden state from the previous time step, and then computes the output $o_t$:

$$h_t = \phi\left(W_{xh} x_t + W_{hh} h_{t-1} + b_h\right) \qquad (9)$$

$$o_t = W_{hy} h_t + b_y \qquad (10)$$

Here, $W_{xh}$ is the input-to-hidden weight matrix, $W_{hh}$ is the hidden-to-hidden weight matrix, $W_{hy}$ is the hidden-to-output weight matrix, $b_h$ in Equation (9) and $b_y$ in Equation (10) are bias terms, and $\phi$ is a non-linear activation function, e.g., tanh or ReLU. The initial hidden state $h_0$ is typically initialized as a zero vector (or as a learned parameter), as in Equation (11):

$$h_0 = \mathbf{0} \qquad (11)$$

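The model learns by minimizing a loss function $\mathcal{L}$ between the predicted outputs $\hat{y}_t = \operatorname{softmax}(o_t)$ and the ground truth labels $y_t$. The cross-entropy loss, commonly used for classification problems, is given by Equation (12):

$$\mathcal{L} = \sum_{t=1}^{T} \mathcal{L}_t = -\sum_{t=1}^{T} \sum_{j} y_{t,j} \log \hat{y}_{t,j} \qquad (12)$$

where $\hat{y}_{t,j}$ is the softmax-normalized probability of class $j$ at time $t$. During training, gradients are computed using backpropagation through time (BPTT). Because of the recurrent connections, the gradient of the loss with respect to the hidden state accumulates recursively across time steps, as shown in Equation (13):

$$\frac{\partial \mathcal{L}}{\partial h_t} = \frac{\partial \mathcal{L}_t}{\partial h_t} + \left(\frac{\partial h_{t+1}}{\partial h_t}\right)^{\top} \frac{\partial \mathcal{L}}{\partial h_{t+1}} \qquad (13)$$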

This recursive dependency shows how early hidden states influence the output across many time steps. However, it also makes the network prone to vanishing gradients, where the gradient norm decreases exponentially with the number of time steps, making it difficult for the network to learn long-term relationships. Despite this limitation, the Vanilla RNN provides a foundational and interpretable architecture for modeling sequential patterns. Its ability to map sequences to sequences, sequences to vectors, or vectors to sequences makes it a versatile tool across a broad range of temporal modeling applications.
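The forward recursion of Equations (9) to (11), the loss in Equation (12), and the vanishing-gradient effect behind Equation (13) can be illustrated with the sketch below. It assumes tanh as $\phi$, a softmax output layer, and a recurrent matrix rescaled to spectral norm 0.5; all function names and sizes are illustrative.

```python
import numpy as np


def rnn_forward(X, W_xh, W_hh, W_hy, b_h, b_y):
    """Vanilla RNN forward pass (Eqs. 9-11) with tanh activation and softmax outputs."""
    h = np.zeros(W_hh.shape[0])                       # Eq. (11): h_0 = 0
    hidden, probs = [], []
    for x_t in X:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)      # Eq. (9)
        o = W_hy @ h + b_y                            # Eq. (10)
        e = np.exp(o - o.max())                       # numerically stable softmax
        hidden.append(h)
        probs.append(e / e.sum())
    return np.array(hidden), np.array(probs)


def cross_entropy(probs, Y_onehot):
    """Eq. (12): cross-entropy summed over time steps and classes."""
    return -np.sum(Y_onehot * np.log(probs + 1e-12))


def jacobian_norms(hidden, W_hh):
    """Spectral norms of d h_T / d h_t for t = T-1, ..., 1 (tanh derivative is 1 - h^2)."""
    J = np.eye(W_hh.shape[0])
    norms = []
    for t in range(len(hidden) - 1, 0, -1):
        J = J @ (np.diag(1.0 - hidden[t] ** 2) @ W_hh)   # chain rule through one step
        norms.append(np.linalg.norm(J, 2))
    return norms


rng = np.random.default_rng(0)
d_in, d_h, n_cls, T = 3, 8, 2, 20
W_xh = rng.normal(scale=0.5, size=(d_h, d_in))
W_hh = rng.normal(size=(d_h, d_h))
W_hh *= 0.5 / np.linalg.norm(W_hh, 2)                # spectral norm 0.5 < 1
W_hy = rng.normal(scale=0.5, size=(n_cls, d_h))
X = rng.normal(size=(T, d_in))
H, P = rnn_forward(X, W_xh, W_hh, W_hy, np.zeros(d_h), np.zeros(n_cls))
Y = np.eye(n_cls)[rng.integers(0, n_cls, size=T)]
loss = cross_entropy(P, Y)
norms = jacobian_norms(H, W_hh)
print(f"loss = {loss:.3f}; |dh_T/dh_(T-1)| = {norms[0]:.2e}, |dh_T/dh_1| = {norms[-1]:.2e}")
```

Because each step multiplies the backpropagated signal by a factor whose norm is at most 0.5 here, the sensitivity of $h_T$ to $h_1$ is many orders of magnitude smaller than its sensitivity to $h_{T-1}$, which is the vanishing-gradient behavior described above.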

3.8. Proposed RAVE Model for Heart Disease Screening

To perform the binary classification, we propose a hybrid deep learning system that sequentially combines a Residual Network (ResNet) and a Vanilla Recurrent Neural Network (RNN). Within this design, ResNet acts as a spatial feature extractor that distills high-level hierarchical and abstract representations from the HDHI dataset, while the Vanilla RNN models the contextual relationships among the distilled features. The architecture is motivated by the complementary strengths of its components: ResNet captures spatial and hierarchical patterns in high-dimensional data, and the Vanilla RNN exploits dependencies among these representations before producing the final classification output for HD screening or case identification. In this sequential setup, the deep feature maps of ResNet are fed as ordered input sequences to the Vanilla RNN, allowing the model to learn both spatial and sequential dependencies, which is valuable for healthcare data, where feature interactions are complex and interrelated. The feature flow of the hybrid pipeline is as follows: ResNet first extracts multi-scale spatial features; these features are then reshaped into sequential embeddings; finally, the embeddings are passed to the Vanilla RNN layer, which captures correlations and progression patterns among them and helps explain the inter-feature dependencies typical of HDHI data. This hybrid design is intended to increase both feature diversity and interpretability.

Assume that $x \in \mathbb{R}^{d}$ is the input vector, where $d$ is the number of features. The ResNet component transforms this input through $L$ residual blocks, with $r^{(0)} = x$. Each residual block learns a residual mapping $F(\cdot\,; \theta_l)$ and adds it to its input through an identity or projection shortcut. Formally, the output of the $l$-th residual block is given by Equation (14):

$$r^{(l)} = \rho\left(F\left(r^{(l-1)}; \theta_l\right) + r^{(l-1)}\right), \qquad l = 1, \ldots, L \qquad (14)$$

where $\rho(\cdot)$ denotes the ReLU activation function. Each convolutional layer in the block $F$ is followed by batch normalization, and $\theta_l$ denotes the trainable parameters of the $l$-th block. When the input and output dimensions do not match, a projection matrix $W_s^{(l)}$ is applied to the shortcut:

$$r^{(l)} = \rho\left(F\left(r^{(l-1)}; \theta_l\right) + W_s^{(l)} r^{(l-1)}\right)$$

Using Equation (15), the final output of the ResNet module, $z = r^{(L)}$, is reshaped into a pseudo-sequential form $S$ so that it can be processed by the Vanilla RNN:

$$S = \operatorname{reshape}(z) = (s_1, s_2, \ldots, s_T) \qquad (15)$$

This transformation allows dependencies among the abstracted features to be modeled sequentially, even though the features have no explicit temporal ordering. The Vanilla RNN processes this sequence with its recurrent architecture: at each time step $t$, the current input $s_t$ and the previous hidden state $h_{t-1}$ are used to update the hidden state $h_t$, as given by Equation (16):

$$h_t = \phi\left(W_{sh} s_t + W_{hh} h_{t-1} + b_h\right) \qquad (16)$$

where $\phi$ is a non-linear activation function such as tanh, and $W_{sh}$, $W_{hh}$, and $W_{ho}$ are weight matrices with corresponding biases $b_h$ and $b_o$. The output at each time step is computed from the current hidden state as in Equation (17), and the probability of the positive class is obtained by applying the sigmoid function $\sigma$ to the output at the last time step, $o_T$, as in Equation (18):

$$o_t = W_{ho} h_t + b_o \qquad (17)$$

$$\hat{y} = \sigma(o_T) = \frac{1}{1 + e^{-o_T}} \qquad (18)$$

The model is trained for this binary classification task with the binary cross-entropy loss in Equation (19):

$$\mathcal{L}_{\text{BCE}} = -\left[\, y \log \hat{y} + (1 - y) \log\left(1 - \hat{y}\right) \right] \qquad (19)$$

where $y \in \{0, 1\}$ is the true label and $\hat{y}$ is the predicted probability. Algorithm 1 summarizes the complete flow of the proposed RAVE model. The model is trained end to end: gradients propagate from the classification output through the recurrent layers back to the residual blocks. This co-adaptation during training enables stronger feature learning across the spatial and temporal domains.
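A NumPy sketch of a single forward pass through Equations (14) to (19) is given below. It is not the Table 1 architecture: dense matrices stand in for the SeparableConv1D layers, batch normalization and dropout are omitted, the weights are random rather than trained, and the sizes (15 input features, a 20-dimensional ResNet output reshaped into $T = 5$ steps of 4 values, 16 hidden units) are arbitrary assumptions. The names `rave_forward` and `bce` are hypothetical.

```python
import numpy as np


def rave_forward(x, res_params, rnn_params, T):
    """One forward pass of ResNet -> Vanilla RNN, following Eqs. (14)-(18).

    res_params: list of (W1, W2, Ws) per residual block; Ws is None for identity shortcuts.
    rnn_params: (W_sh, W_hh, b_h, w_ho, b_o).
    """
    r = x                                               # r^(0) = x
    for W1, W2, Ws in res_params:
        residual = W2 @ np.maximum(W1 @ r, 0.0)         # F(r^(l-1); theta_l)
        shortcut = r if Ws is None else Ws @ r          # identity or projection shortcut
        r = np.maximum(residual + shortcut, 0.0)        # Eq. (14), rho = ReLU
    S = r.reshape(T, -1)                                # Eq. (15): z -> (s_1, ..., s_T)
    W_sh, W_hh, b_h, w_ho, b_o = rnn_params
    h = np.zeros(W_hh.shape[0])                         # h_0 = 0
    for s_t in S:
        h = np.tanh(W_sh @ s_t + W_hh @ h + b_h)        # Eq. (16)
    o_T = w_ho @ h + b_o                                # Eq. (17) at t = T
    return 1.0 / (1.0 + np.exp(-o_T))                   # Eq. (18)


def bce(y, y_hat, eps=1e-12):
    """Eq. (19): binary cross-entropy for a single sample."""
    y_hat = np.clip(y_hat, eps, 1.0 - eps)
    return -(y * np.log(y_hat) + (1 - y) * np.log(1.0 - y_hat))


rng = np.random.default_rng(0)


def g(*shape):
    """Small random weights for the illustration."""
    return rng.normal(scale=0.3, size=shape)


res_params = [(g(32, 15), g(20, 32), g(20, 15)),       # 15 -> 20: projection shortcut
              (g(32, 20), g(20, 32), None)]            # 20 -> 20: identity shortcut
rnn_params = (g(16, 4), g(16, 16), np.zeros(16), g(16), 0.0)
x = rng.normal(size=15)
y_hat = rave_forward(x, res_params, rnn_params, T=5)
print(f"P(heart disease) = {y_hat:.3f}, BCE if y = 1: {bce(1, y_hat):.3f}")
```

The reshape in Equation (15) only works when the ResNet output size is divisible by $T$, so the sequence length and the final block width have to be chosen together.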

The architecture details of the ResNet, Vanilla RNN, and the proposed RAVE deep learning model (ResNet → Vanilla RNN) are summarized in Table 1.

The proposed RAVE model combines deep residual learning and sequential reasoning to improve predictive performance and interpretability. The ResNet module extracts high-level spatial and hierarchical features through two residual blocks built from SeparableConv1D layers with 64, 32, and 16 filters. Each layer is followed by batch normalization and a tanh activation, which together stabilize gradient flow while providing the non-linearity, and a dropout rate of 0.2 is used to reduce overfitting. The resulting feature maps are reshaped and passed sequentially to the Vanilla RNN module, which captures contextual relationships using two recurrent layers with 32 and 16 hidden units, respectively. These layers also use tanh activation and a dropout rate of 0.2 for additional regularization. The final dense layer uses a sigmoid activation to output a probability for binary classification. All hyperparameters were tuned empirically, which yielded balanced validation accuracy, faster convergence, and improved interpretability. The hybrid architecture combines two classes of learning: deep residual learning and sequential modeling. The ResNet module preserves the flow of information across layers, while the Vanilla RNN uses the structured context of the extracted features to refine the final decision boundary. This synergy increases the model's ability to capture complex feature dependencies, which is reflected in the improved classification performance across a variety of evaluation metrics in the empirical results.

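| Algorithm 1 | Proposed RAVE model for the binary classification of heart disease. |
|---|---|
| 1. | Input: Feature vector $x \in \mathbb{R}^{d}$, true label $y \in \{0, 1\}$, number of ResNet blocks $L$, sequence length $T$ |
| 2. | Output: Predicted probability $\hat{y}$ and final class label |
| 3. | |
| 4. | Procedure HybridResNetVanillaRNN($x$) |
| 5. | Step 1: ResNet-Based Feature Transformation |
| 6. | Initialize: $r^{(0)} \leftarrow x$ |
| 7. | for $l = 1$ to $L$ do |
| 8. | Extract features: $F_l \leftarrow F\left(r^{(l-1)}; \theta_l\right)$ |
| 9. | if $\dim\left(F_l\right) = \dim\left(r^{(l-1)}\right)$ then |
| 10. | $r^{(l)} \leftarrow \rho\left(F_l + r^{(l-1)}\right)$ |
| 11. | else |
| 12. | $r^{(l)} \leftarrow \rho\left(F_l + W_s^{(l)} r^{(l-1)}\right)$ |
| 13. | end if |
| 14. | end for |
| 15. | Final ResNet feature: |
| 16. | $z \leftarrow r^{(L)}$ |
| 17. | Step 2: Reshape Features for Sequential Modeling |
| 18. | Convert $z$ into a sequence: |
| 19. | $S = (s_1, \ldots, s_T) \leftarrow \operatorname{reshape}(z)$ |
| 20. | Step 3: Vanilla RNN-Based Temporal Processing |
| 21. | Initialize hidden state: $h_0 \leftarrow \mathbf{0}$ |
| 22. | for $t = 1$ to $T$ do |
| 23. | $h_t \leftarrow \phi\left(W_{sh} s_t + W_{hh} h_{t-1} + b_h\right)$ |
| 24. | end for |
| 25. | |
| 26. | Step 4: Final Screening or Case Identification Computation |
| 27. | Compute score: $o_T \leftarrow W_{ho} h_T + b_o$ |
| 28. | Compute probability: $\hat{y} \leftarrow \sigma(o_T)$ |
| 29. | |
| 30. | Step 5: Loss Calculation (for training phase) |
| 31. | Binary cross-entropy loss: |
| 32. | $\mathcal{L}_{\text{BCE}} \leftarrow -\left[\, y \log \hat{y} + (1 - y) \log\left(1 - \hat{y}\right) \right]$ |
| 33. | Step 6: Final Decision (for inference phase) |
| 34. | if $\hat{y} \geq \tau$ (decision threshold) then |
| 35. | Return: Class 1 (Positive) |
| 36. | else |
| 37. | Return: Class 0 (Negative) |
| 38. | end if |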

3.9. Statistical Validation Procedure

A rigorous statistical validation process is conducted on the results of the proposed RAVE-HD approach. This procedure helps to ensure robust, highly reproducible and clinically meaningful evaluation results. It contains the following components.

3.9.1. Evaluation Metrics

The performance of the RAVE model is evaluated with a set of complementary metrics: accuracy, precision, recall, F1-score, ROC-AUC, PR-AUC, log loss, Cohen's Kappa, Matthews correlation coefficient (MCC), Hamming loss, and Brier score. Accuracy gives an overall measure and the F1-score balances precision and recall for the positive class, while ROC-AUC and PR-AUC assess the discriminative ability of the model. The Brier score, on the other hand, assesses the probabilistic calibration of the risk predictions.
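The metrics listed above map directly onto scikit-learn functions, as in the following sketch. Using `average_precision_score` as the PR-AUC estimate and a 0.5 threshold for the label-based metrics are assumptions; the function name `evaluate` and the toy labels and probabilities are illustrative only.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, average_precision_score, brier_score_loss,
                             cohen_kappa_score, f1_score, hamming_loss, log_loss,
                             matthews_corrcoef, precision_score, recall_score,
                             roc_auc_score)


def evaluate(y_true, y_prob, threshold=0.5):
    """Compute the Section 3.9.1 metrics from positive-class probabilities."""
    y_pred = (y_prob >= threshold).astype(int)
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "precision": precision_score(y_true, y_pred),
        "recall": recall_score(y_true, y_pred),
        "f1": f1_score(y_true, y_pred),
        "roc_auc": roc_auc_score(y_true, y_prob),           # threshold-free
        "pr_auc": average_precision_score(y_true, y_prob),  # threshold-free
        "log_loss": log_loss(y_true, y_prob),
        "kappa": cohen_kappa_score(y_true, y_pred),
        "mcc": matthews_corrcoef(y_true, y_pred),
        "hamming": hamming_loss(y_true, y_pred),
        "brier": brier_score_loss(y_true, y_prob),
    }


y_true = np.array([0, 0, 0, 0, 1, 1, 0, 1])
y_prob = np.array([0.10, 0.30, 0.20, 0.60, 0.80, 0.40, 0.05, 0.90])
metrics = evaluate(y_true, y_prob)
for name, value in metrics.items():
    print(f"{name:>9}: {value:.3f}")
```

Because the label-based metrics depend on the threshold, it is worth reporting the threshold alongside them; for binary labels `hamming_loss` is simply 1 − accuracy.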

3.9.2. Confidence Interval Estimation

A confidence interval (CI) defines the range within which the true value of a performance metric is expected to lie with a specified probability. In this study, 95% CIs are used to quantify the uncertainty of each evaluation metric and to enable robust comparison between models. For every metric, CIs are computed using stratified bootstrap resampling with $B$ iterations. Stratification preserves the original class proportions in each resample, so that both classes are represented consistently across resamples.

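The non-parametric percentile method is applied because it does not assume any specific data distribution. The 95% CI is calculated using Equation (20):

$$\mathrm{CI}_{95\%} = \left[\, \hat{\theta}^{*}_{(0.025)},\; \hat{\theta}^{*}_{(0.975)} \right] \qquad (20)$$

where $\hat{\theta}^{*}_{b}$ denotes the performance metric estimated on the $b$-th bootstrap sample ($b = 1, \ldots, B$), and $\hat{\theta}^{*}_{(0.025)}$ and $\hat{\theta}^{*}_{(0.975)}$ are the 2.5th and 97.5th percentiles of the bootstrap distribution $\{\hat{\theta}^{*}_{b}\}_{b=1}^{B}$. This approach provides a simple, distribution-free, and reliable estimate of model uncertainty.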
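Equation (20) with stratified resampling can be sketched as below. The number of iterations `B` is left as a parameter because its value is a study-specific choice; the default of 1000, the helper name `stratified_bootstrap_ci`, and the synthetic scores are assumptions.

```python
import numpy as np
from sklearn.metrics import roc_auc_score


def stratified_bootstrap_ci(y_true, y_score, metric, B=1000, alpha=0.05, seed=0):
    """Percentile CI (Eq. 20) from B stratified bootstrap resamples."""
    rng = np.random.default_rng(seed)
    pos = np.flatnonzero(y_true == 1)
    neg = np.flatnonzero(y_true == 0)
    stats = np.empty(B)
    for b in range(B):
        # Resample positives and negatives separately to keep class proportions fixed.
        idx = np.concatenate([rng.choice(pos, size=pos.size, replace=True),
                              rng.choice(neg, size=neg.size, replace=True)])
        stats[b] = metric(y_true[idx], y_score[idx])
    lower, upper = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return lower, upper


rng = np.random.default_rng(42)
y = (rng.random(500) < 0.1).astype(int)          # roughly 10% positives
score = 0.6 * y + 0.7 * rng.random(500)          # informative but overlapping scores
point = roc_auc_score(y, score)
lower, upper = stratified_bootstrap_ci(y, score, roc_auc_score, B=500)
print(f"ROC-AUC = {point:.3f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

Any scikit-learn style `metric(y_true, y_score)` callable can be passed in, so the same helper covers PR-AUC or the Brier score; label-based metrics would need thresholded predictions instead of scores.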

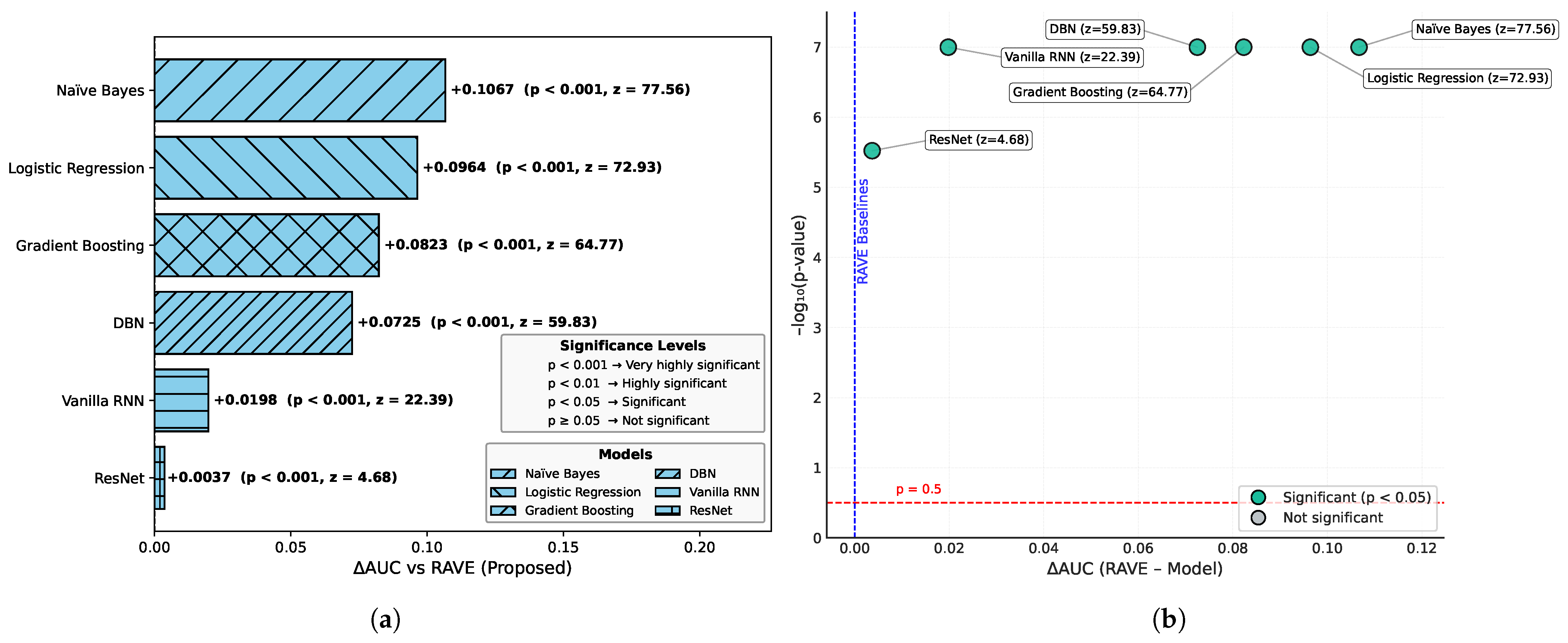

3.9.3. Significance Testing

To verify that the observed performance improvements are not attributable to random variation, paired significance tests are performed between the proposed RAVE-HD model and each baseline model. ROC-AUC comparisons use the DeLong test, a non-parametric method designed to compare correlated ROC curves obtained on the same samples. For all other evaluation metrics, a paired bootstrap hypothesis test compares model performance across multiple resampled datasets.
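A compact version of the DeLong test is sketched below, built from the structural components (placement values) of the two score vectors. It is written for clarity rather than speed, since it forms an $m \times n$ comparison matrix per model; the function names and the synthetic scores are assumptions, and a vetted implementation is preferable for reported results.

```python
import numpy as np
from scipy.stats import norm
from sklearn.metrics import roc_auc_score


def placements(pos, neg):
    """Structural components: psi = 1 if a positive outscores a negative, 0.5 on ties."""
    diff = pos[:, None] - neg[None, :]
    psi = (diff > 0) + 0.5 * (diff == 0)
    return psi.mean(axis=1), psi.mean(axis=0)        # V10 (per positive), V01 (per negative)


def delong_test(y_true, score_a, score_b):
    """Two-sided DeLong test for the difference of two correlated ROC-AUCs."""
    y_true = np.asarray(y_true)
    v10, v01 = [], []
    for s in (np.asarray(score_a), np.asarray(score_b)):
        a, b = placements(s[y_true == 1], s[y_true == 0])
        v10.append(a)
        v01.append(b)
    v10, v01 = np.array(v10), np.array(v01)          # shapes (2, m) and (2, n)
    aucs = v10.mean(axis=1)                          # mean placement value = AUC
    cov = np.cov(v10) / v10.shape[1] + np.cov(v01) / v01.shape[1]
    var_diff = cov[0, 0] + cov[1, 1] - 2.0 * cov[0, 1]
    if var_diff <= 0:                                # e.g. identical score vectors
        return aucs, 0.0, 1.0
    z = (aucs[0] - aucs[1]) / np.sqrt(var_diff)
    return aucs, z, 2.0 * norm.sf(abs(z))


rng = np.random.default_rng(0)
y = np.r_[np.ones(60, dtype=int), np.zeros(240, dtype=int)]
strong = y + rng.normal(scale=0.5, size=y.size)
weak = y + rng.normal(scale=1.5, size=y.size)
aucs, z, p = delong_test(y, strong, weak)
print(aucs, roc_auc_score(y, strong), roc_auc_score(y, weak), f"z = {z:.2f}, p = {p:.2g}")
```

The covariance term is what distinguishes DeLong from comparing two independent AUCs: both models are scored on the same patients, so their AUC estimates are correlated.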

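Let $\bar{\theta}_{\text{RAVE}}$ and $\bar{\theta}_{\text{base}}$ denote the mean metric estimates of the proposed and baseline models, respectively, and let $\hat{\theta}^{*}_{\text{RAVE},b}$ and $\hat{\theta}^{*}_{\text{base},b}$ be their values on the $b$-th paired bootstrap sample. The bootstrap-based test statistic is defined in Equation (21):

$$\hat{P} = \frac{1}{B} \sum_{b=1}^{B} \mathbb{I}\left( \hat{\theta}^{*}_{\text{RAVE},b} > \hat{\theta}^{*}_{\text{base},b} \right) \qquad (21)$$

where $B$ is the total number of bootstrap iterations and $\mathbb{I}(\cdot)$ is the indicator function. Statistical significance is inferred when the proportion of bootstrap samples satisfying $\hat{\theta}^{*}_{\text{RAVE},b} > \hat{\theta}^{*}_{\text{base},b}$ substantially exceeds the value of 0.5 expected by chance, or equivalently when the one-sided bootstrap p-value $1 - \hat{P}$ is small. This procedure is intended to confirm that the reported performance gains of the RAVE-HD model are statistically robust and not driven by sampling variability.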
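Equation (21) can be sketched as a paired bootstrap in which both models are scored on the same resampled indices. The sketch uses simple (non-stratified) resampling, F1-score as the example metric, and $1 - \hat{P}$ as a one-sided p-value; these choices, the default `B`, the function name `paired_bootstrap_test`, and the synthetic predictions are assumptions.

```python
import numpy as np
from sklearn.metrics import f1_score


def paired_bootstrap_test(y_true, pred_a, pred_b, metric, B=1000, seed=0):
    """Share of paired resamples where model A beats model B (Eq. 21) and a one-sided p-value."""
    rng = np.random.default_rng(seed)
    n = y_true.size
    wins = 0
    for _ in range(B):
        idx = rng.integers(0, n, size=n)     # same indices for both models -> paired
        wins += int(metric(y_true[idx], pred_a[idx]) > metric(y_true[idx], pred_b[idx]))
    share = wins / B
    return share, 1.0 - share


rng = np.random.default_rng(1)
y = (rng.random(400) < 0.3).astype(int)
good = np.where(rng.random(400) < 0.9, y, 1 - y)   # about 90% correct labels
poor = np.where(rng.random(400) < 0.7, y, 1 - y)   # about 70% correct labels
share, p = paired_bootstrap_test(y, good, poor, f1_score, B=500)
print(f"A better in {share:.1%} of resamples, one-sided p = {p:.3f}")
```

Pairing matters here: drawing independent resamples for each model would add the variability of two separate samples and make the test much less sensitive.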

3.9.4. Effect Size Evaluation

Agreement-based measures, including Cohen's Kappa and MCC, are used as effect size indicators to quantify the practical and clinical relevance of performance improvements relative to the baseline models. This step complements statistical significance testing by highlighting improvements that are meaningful in real-world settings.

3.9.5. Robustness Assessment

Model performance is evaluated on both the oversampled training–validation sets and the original (non-oversampled) test data. This ensures that training-time imbalance correction does not bias the reported results and checks whether the model generalizes to unseen data.

3.9.6. Reproducibility

All experiments are run with fixed random seeds to ensure reproducibility. The statistical analyses rely on well-established Python libraries such as scikit-learn, NumPy, and SciPy, which supports the full reproducibility of our results.

This framework not only enables an exhaustive evaluation of the RAVE-HD methodology but also makes explicit which step of the methodology produces each performance metric, how uncertainty is quantified, and how statistical and clinical relevance are determined.

Beyond statistical validation, the robustness of the RAVE model must also be examined under different data characteristics. While validation establishes the reliability of the results, analyzing the model's sensitivity to class prevalence provides meaningful information on its robustness and generalizability. The following subsection therefore describes the Sensitivity-to-Prevalence analysis conducted on the original data distribution, with particular attention to how class proportions affect predictive performance and overall system behavior under different prevalence scenarios.

3.10. Sensitivity-to-Prevalence Analysis (SPA) Under the Original Data Distribution

Sensitivity to prevalence describes how a model's predictive performance changes when the fraction of positive cases in a population varies. In medical applications, disease prevalence often differs across hospitals, regions, and screening programs. As a result, a model that performs well under one distribution may not be equally reliable when deployed in populations with different prevalence levels.

To show that the proposed RAVE-HD approach is robust under realistic class imbalance, we include a dedicated SPA in the methodology. The evaluation is performed on the original test set (where positive HD cases still account for about 10%) rather than on the oversampled distribution. This allows us to check the robustness of the model under several hypothetical population scenarios in which the proportion of positive cases is varied.


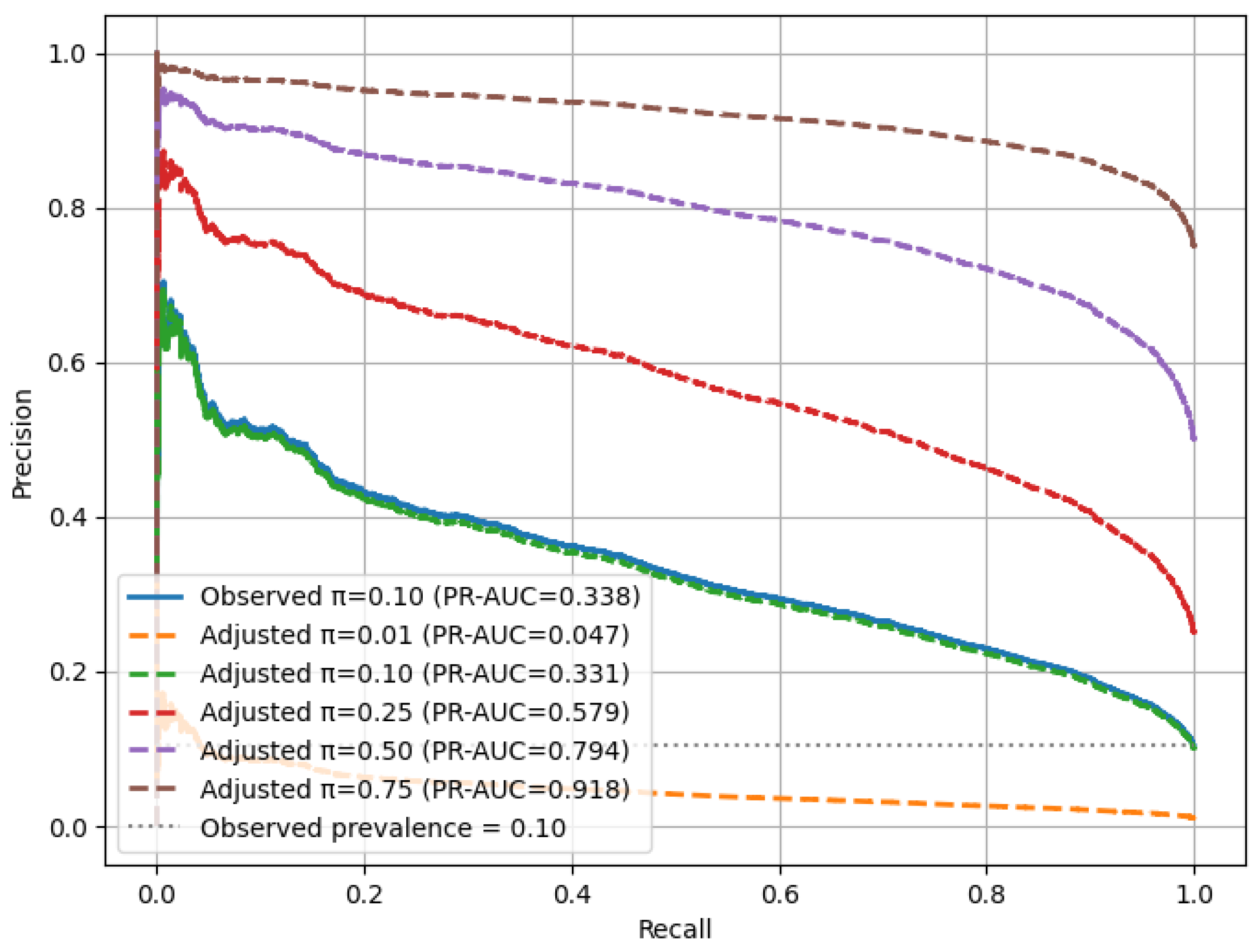

For each simulated prevalence level $\pi$, the precision–recall (PR) relationship was reweighted according to Equation (22):

$$\mathrm{Precision}_{\pi}(\tau) = \frac{\pi \cdot \mathrm{TPR}(\tau)}{\pi \cdot \mathrm{TPR}(\tau) + (1 - \pi) \cdot \mathrm{FPR}(\tau)} \qquad (22)$$

Here, $\tau$ denotes the classification threshold, while TPR and FPR represent the true-positive and false-positive rates, respectively. For each prevalence level $\pi$, the area under the precision–recall curve (PR-AUC) was calculated to quantify discriminative capability, and 95% confidence intervals were estimated using bootstrap resampling (with $B$ iterations) to ensure statistical reliability.

This methodology establishes a prevalence-aware evaluation framework in which recall (equal to TPR) remains unaffected by the prevalence level $\pi$, while precision adapts to changes in disease prevalence. By integrating this analysis into the experimental pipeline, the RAVE-HD approach provides a fair and reproducible assessment of model robustness across real-world class distributions.
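The reweighting in Equation (22) needs only the ROC curve, because TPR and FPR do not depend on prevalence. The sketch below recomputes precision at each threshold for a chosen $\pi$ and summarizes the curve with a step-wise (average-precision style) sum; the summation rule, the helper name `pr_auc_at_prevalence`, and the synthetic data are assumptions.

```python
import numpy as np
from sklearn.metrics import average_precision_score, roc_curve


def pr_auc_at_prevalence(y_true, y_score, prevalence):
    """Step-wise PR-AUC after reweighting precision to a target prevalence pi (Eq. 22)."""
    fpr, tpr, _ = roc_curve(y_true, y_score, drop_intermediate=False)
    num = prevalence * tpr
    den = num + (1.0 - prevalence) * fpr
    # Precision is undefined where no sample is predicted positive; set it to 1 there.
    precision = np.divide(num, den, out=np.ones_like(num), where=den > 0)
    # Recall = TPR is prevalence-invariant; weight each precision by its recall increment.
    return float(np.sum(np.diff(tpr) * precision[1:]))


rng = np.random.default_rng(7)
y = (rng.random(2000) < 0.1).astype(int)
score = y + rng.normal(size=y.size)
for pi in (0.05, 0.10, 0.30, 0.50):
    print(f"prevalence {pi:.2f}: PR-AUC = {pr_auc_at_prevalence(y, score, pi):.3f}")
print("empirical prevalence matches average_precision_score:",
      np.isclose(pr_auc_at_prevalence(y, score, y.mean()), average_precision_score(y, score)))
```

At the test set's own prevalence the reweighted precision reduces to TP / (TP + FP), so the result coincides with scikit-learn's `average_precision_score`; lowering $\pi$ lowers precision at every threshold and therefore the PR-AUC, while recall is unchanged.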

4. Simulation Results and Experiments of Heart Disease Screening

To ensure reproducibility and a strictly equitable comparison between models, all experiments were performed in a standardized computational environment: Google Colab Pro with an NVIDIA Tesla T4 GPU and CUDA 12.2. A batch size of 32 was used for all models to keep the training dynamics consistent. Random seeds were fixed so that the data splits and model initializations are identical across runs. The experimental codebase used Python 3.10, TensorFlow 2.15, scikit-learn 1.2, NumPy 1.26, Pandas 2.2, and Matplotlib 3.8. The execution time of each model was measured as the total training time (in seconds) under the same runtime conditions, which allows all reported performance metrics and timing results to be compared directly and reproducibly. Within this experimental setup, a statistical validation protocol is followed to carefully evaluate the robustness of the proposed RAVE-HD methodology.

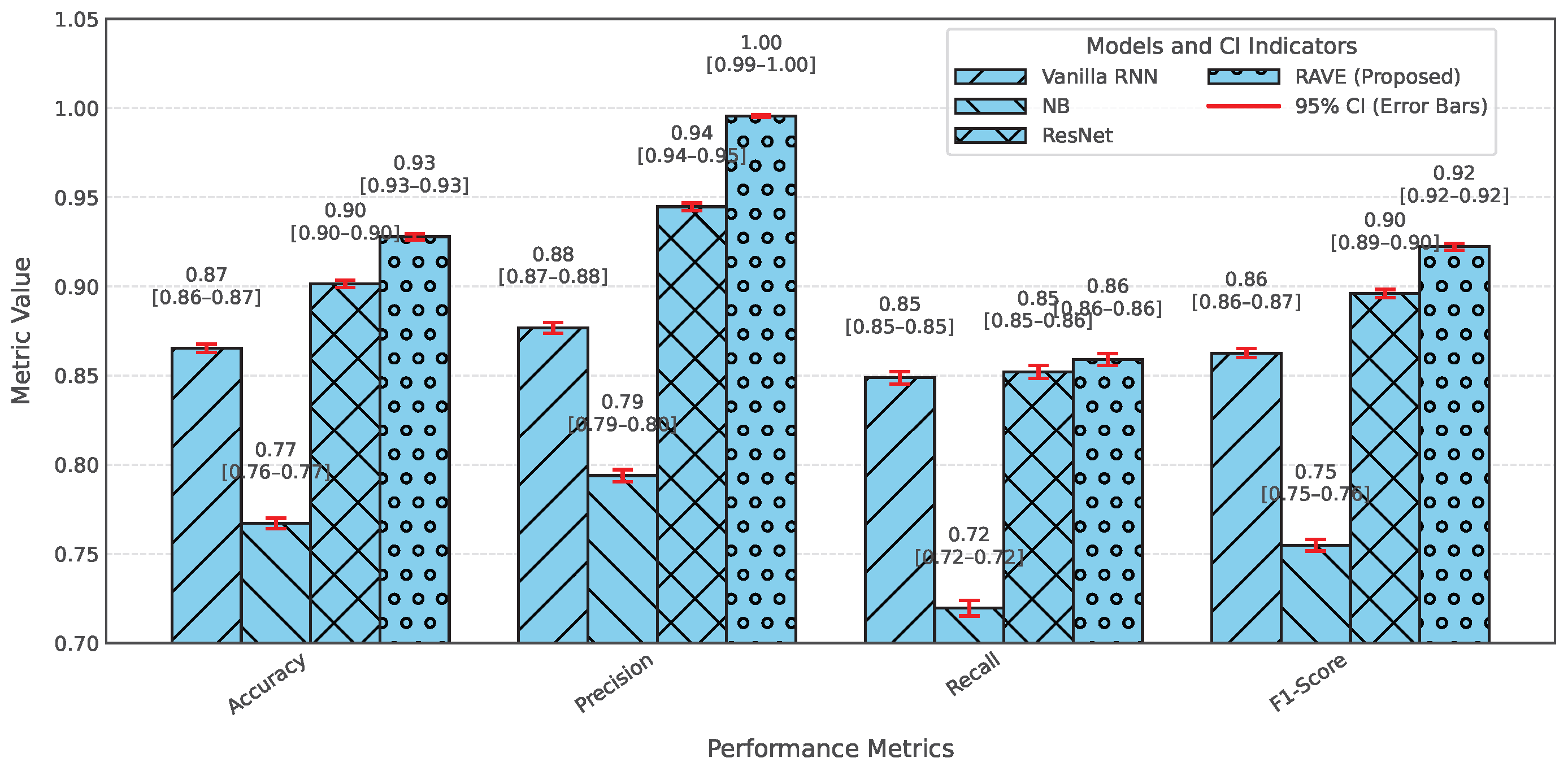

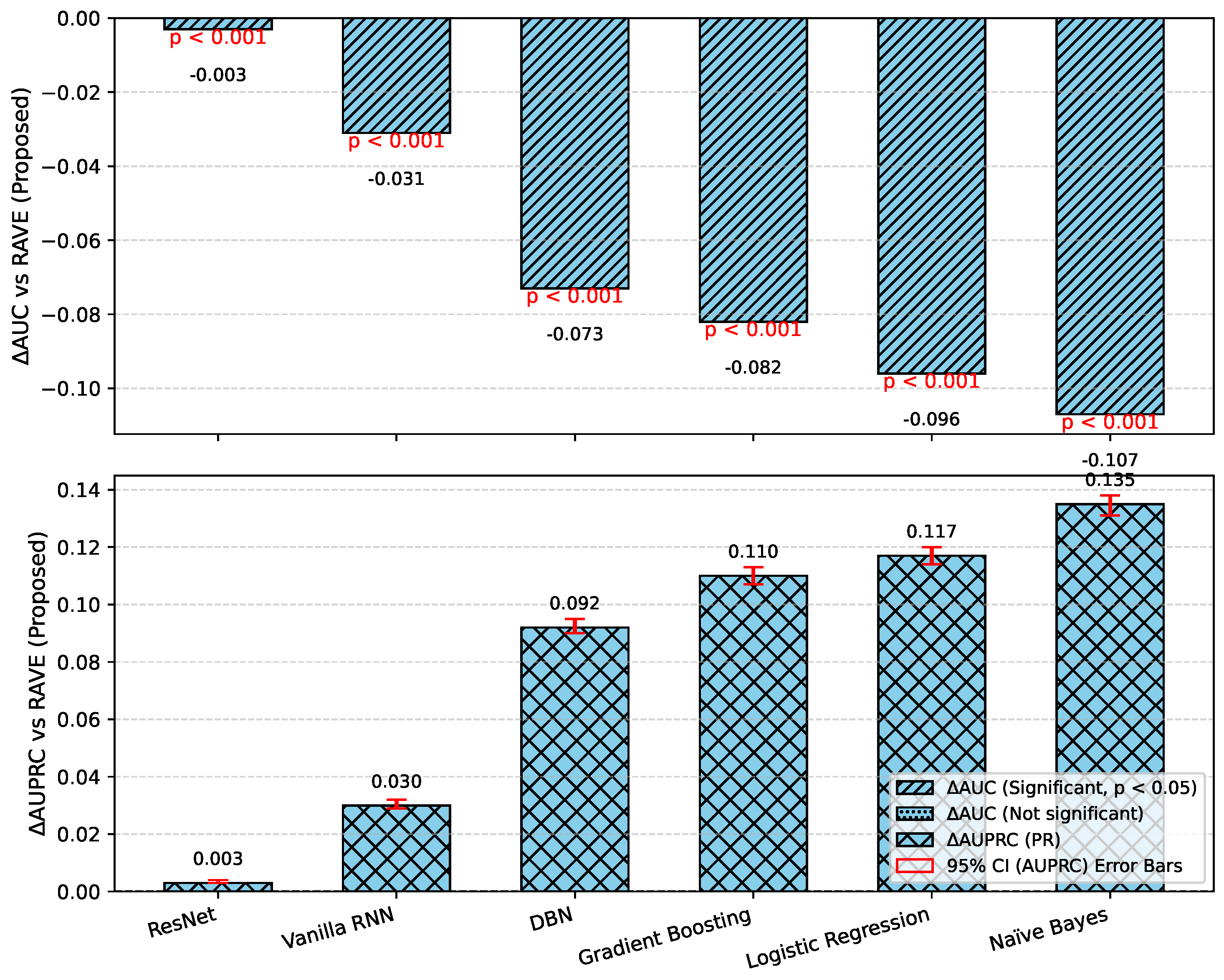

4.1. Performance Comparison of the Proposed RAVE Model and the Existing ML Architectures

To assess the effectiveness of the RAVE model within the RAVE-HD approach, an extensive comparison was conducted with established machine learning (ML) and deep learning (DL) architectures. To evaluate generalization performance, the dataset was divided into training (80%) and testing (20%) sets. Traditional classifiers such as Logistic Regression (LR), Naive Bayes (NB), and Gradient Boosting (GB) were compared with deep models such as the Deep Belief Network (DBN), ResNet, and a Vanilla Recurrent Neural Network (RNN). The RAVE model applies ResNet and Vanilla RNN sequentially, thus combining spatial and temporal feature representations. All experiments were run under the same conditions to ensure a fair comparison, and performance was assessed with standard criteria including accuracy, precision, recall, F1-score, ROC-AUC, PR-AUC, and Cohen's Kappa. This comparative analysis demonstrates the effectiveness and robustness of the RAVE-HD approach relative to existing ML and DL baselines.

Table 2 shows a multi-dimensional study of all the models considered across the various evaluation metrics.

4.1.1. Overall Performance Comparison

Table 2 reports the accuracy, precision, recall, and F1-score of each model for an overall comparative evaluation. Among the traditional models, Logistic Regression (LR) performs stably across all four metrics, with accuracy, recall, and F1-score of roughly (0.80). The Naive Bayes (NB) classifier has the lowest F1-score of all classifiers (0.76) because of its relatively poor recall (0.73), despite a high precision of (0.80). The Deep Belief Network (DBN) performs almost as well as LR, with a slight improvement. Gradient Boosting (GB) reaches a recall of (0.81) but has a lower precision of (0.75), which reduces its F1-score. Among the deep learning models, ResNet stands out, achieving the highest precision among the baselines (0.90) while keeping accuracy, recall, and F1-score at around (0.89). The Vanilla Recurrent Neural Network (RNN) also improves on the classical models, with precision and recall of (0.85) each and an F1-score of (0.85). Finally, the proposed RAVE model, which sequentially fuses ResNet and Vanilla RNN, achieves the best results: (0.92) accuracy, (0.93) precision, (0.92) recall, and (0.92) F1-score, highlighting its superior classification ability.

4.1.2. Classification Error and Reliability Measures Analysis

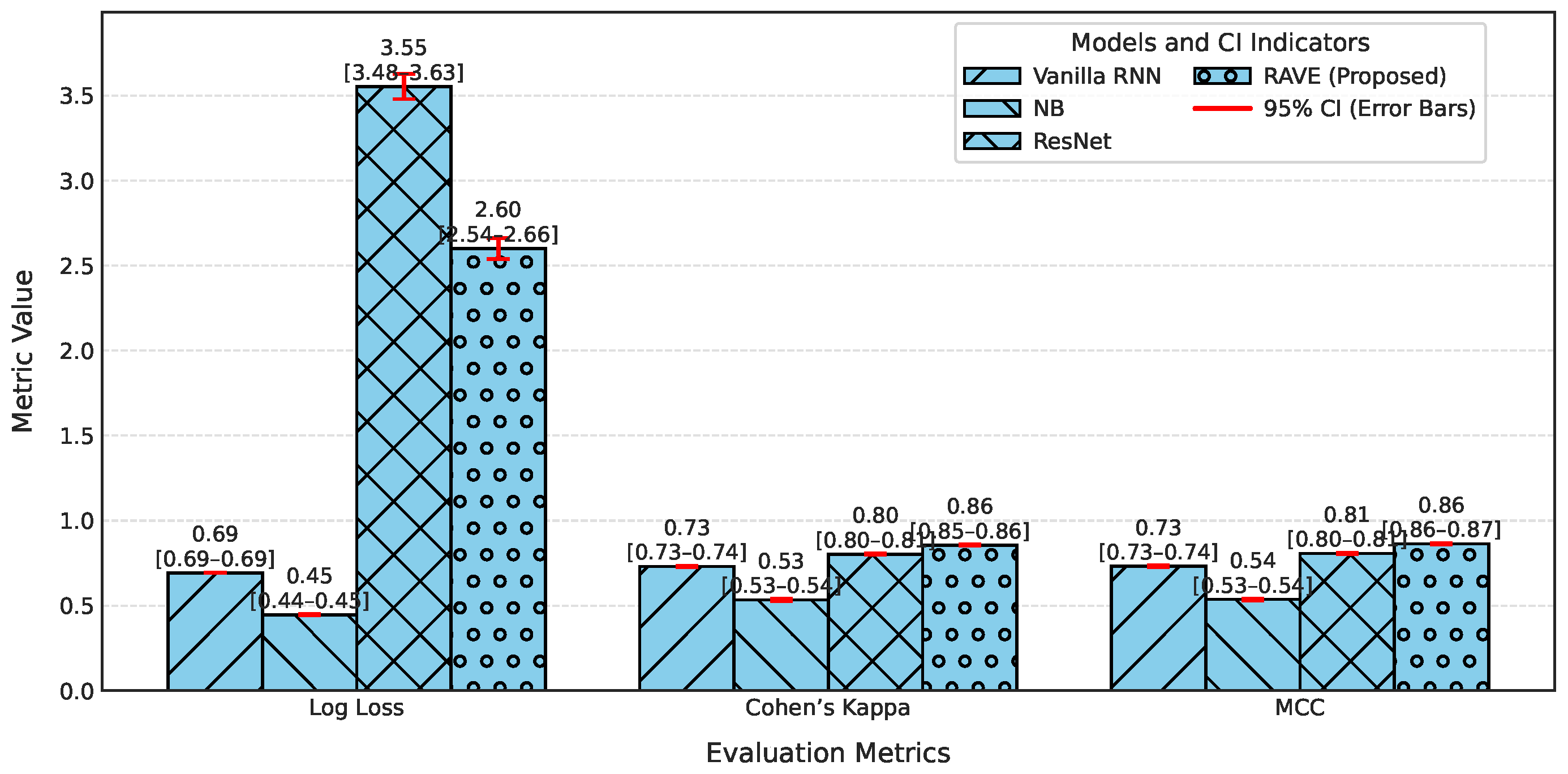

To analyze calibration and label stability, log loss, Matthews correlation coefficient (MCC), Cohen's Kappa, and Hamming loss were measured for every model, as shown in Table 2. Log loss, a commonly used measure of the quality of predicted probability distributions, was computed for each model. The log losses of the Logistic Regression (LR) and Deep Belief Network (DBN) models were (0.43) and (0.42), respectively, indicating moderate confidence in heart disease screening or case identification. In contrast, the Naive Bayes (NB) classifier has the highest log loss (0.90), which indicates poorly calibrated probabilities despite its high precision.

The log loss of GB (0.69) is also comparatively high. Vanilla RNN improved on this with a log loss of (0.31), and ResNet reduced it further to (0.25). The proposed RAVE model achieved the lowest log loss (0.19), consistent with well-calibrated and confident screening or case identification. In terms of MCC, which evaluates overall classification quality using all elements of the confusion matrix, LR, DBN, NB, and GB ranged from (0.59) to (0.65). Vanilla RNN achieved (0.70), ResNet achieved (0.79), and the proposed RAVE model again led with (0.85). A similar pattern holds for Cohen's Kappa, a measure of agreement between predicted and actual classes: RAVE achieved the highest value (0.84), indicating strong model reliability, followed by ResNet (0.78) and Vanilla RNN (0.70).

Table 2 also reports the Hamming loss, which measures the fraction of incorrectly predicted labels; lower values indicate fewer screening or case identification errors. For binary single-label classification, Hamming loss equals 1 − accuracy. LR, NB, and GB showed moderate Hamming losses ranging from (0.20) to (0.22). DBN and Vanilla RNN reduced the loss to (0.17) and (0.15), respectively, and ResNet reduced it further to (0.11). Most importantly, the proposed hybrid architecture achieved the lowest Hamming loss of (0.08), demonstrating its robustness in providing accurate screening or case identification with minimal label misclassification.

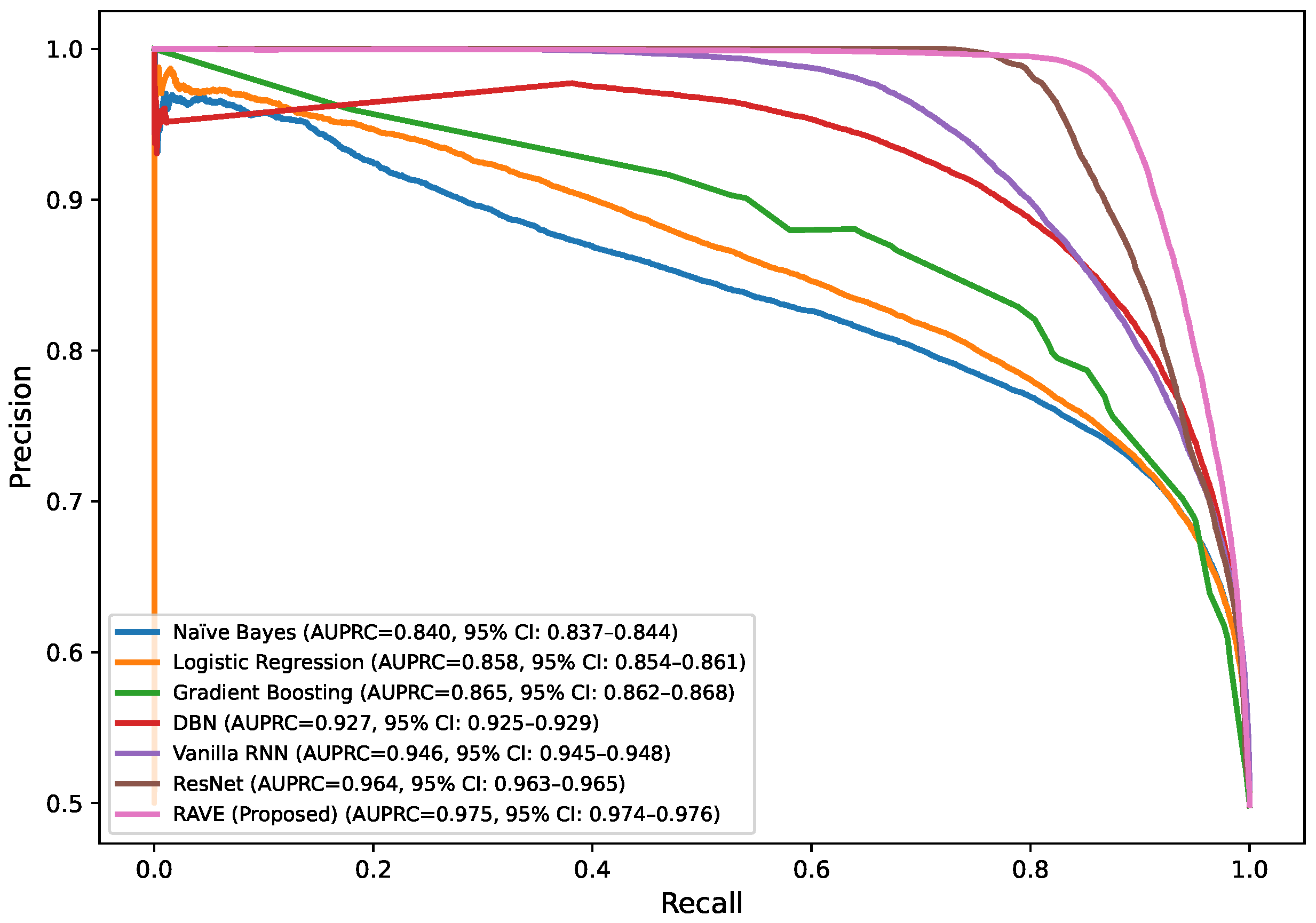

4.1.3. Discrimination Analysis (ROC-AUC and PR-AUC)

The receiver operating characteristic area under the curve (ROC-AUC) values for each model are listed in Table 2. This metric measures the ability of a model to discriminate between classes. All models achieved ROC-AUC values above 0.85. Logistic Regression, Naive Bayes, and Gradient Boosting scored (0.88), (0.86), and (0.86), respectively, and the Deep Belief Network slightly exceeded these at (0.89). ResNet and Vanilla RNN reached substantially higher values of (0.96) and (0.94), respectively, and the proposed RAVE model performed best at (0.97).

Furthermore, Table 2 shows the precision–recall area under the curve (PR-AUC), which is particularly informative for imbalanced datasets. The NB and GB models both achieved a PR-AUC of (0.84), while LR was slightly better at (0.86) and DBN improved further to (0.88). ResNet and Vanilla RNN achieved high PR-AUCs of (0.97) and (0.95), respectively, again showing their robustness. The proposed RAVE model achieved the highest PR-AUC (0.98), indicating that it keeps false positives low while capturing most true positives. This makes it well suited to heart disease screening and case identification, where early and accurate detection of at-risk individuals is vital despite the inherent class imbalance of medical data.
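Both curve-based metrics can be understood through ranking. ROC-AUC equals the probability that a randomly chosen positive case is scored above a randomly chosen negative case, and PR-AUC is commonly estimated as average precision (the mean precision at each rank where a positive case appears). The study does not state which PR-AUC estimator was used, so average precision here is an assumption. This is a minimal sketch with illustrative names, and it assumes untied scores for the average-precision ranking.

```python
def roc_auc(y_true, scores):
    """Probability a positive outranks a negative (ties count as half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def average_precision(y_true, scores):
    """PR-AUC estimate: mean precision at each rank holding a positive."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])  # assumes no ties
    n_pos = sum(y_true)
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if y_true[i] == 1:
            hits += 1
            total += hits / rank  # precision at this recall step
    return total / n_pos

y = [1, 0, 1, 0]
s = [0.9, 0.8, 0.7, 0.1]
print(roc_auc(y, s), round(average_precision(y, s), 4))  # 0.75 0.8333
```

Because average precision is driven only by how positives are ranked, it drops sharply when many negatives outrank positives, which is why it is more sensitive than ROC-AUC under class imbalance.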

4.1.4. Computational Efficiency

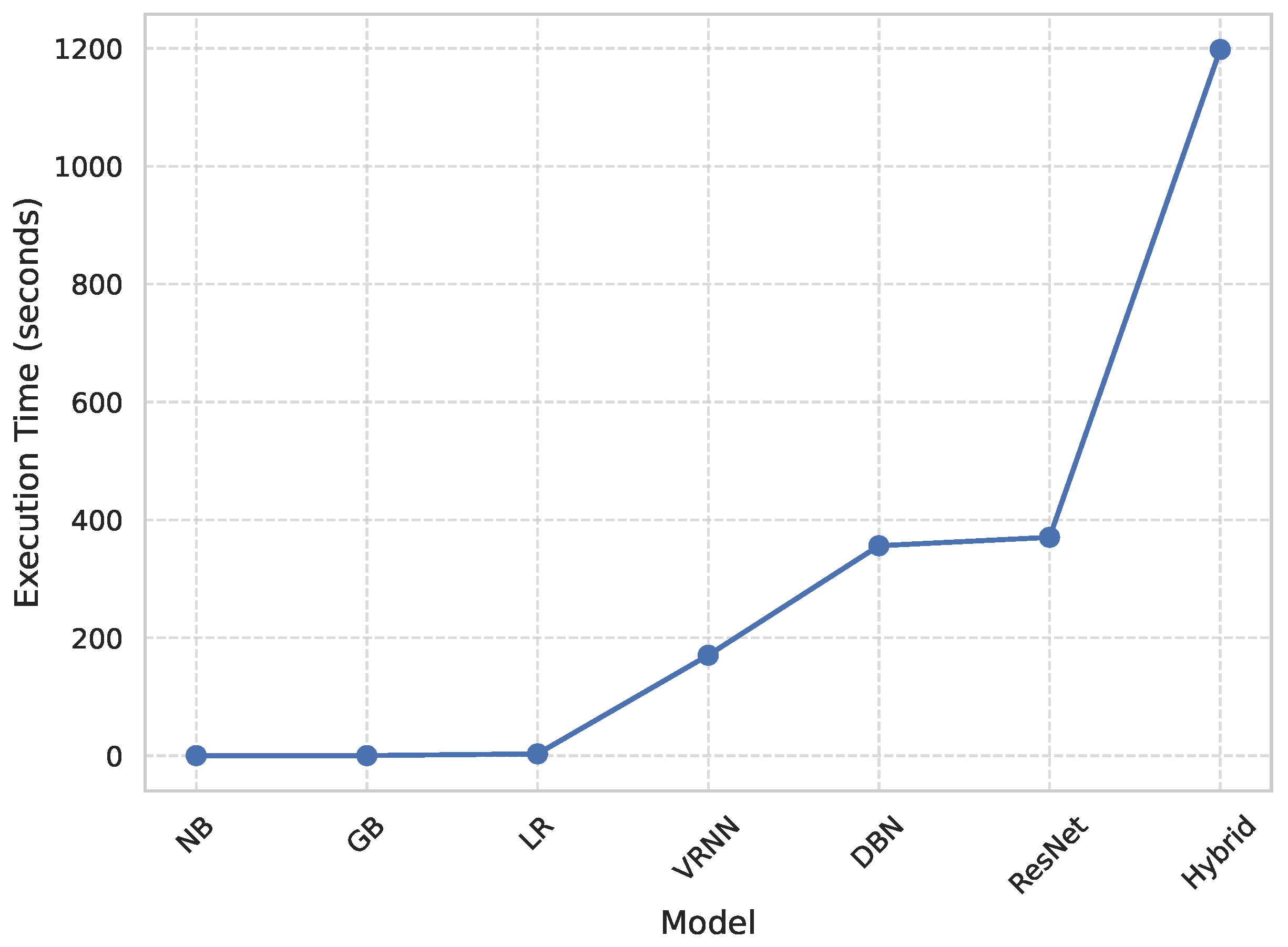

Figure 2 compares the execution times of all models, providing a quantitative measure of computational efficiency. All models were run on the same GPU setup (NVIDIA Tesla T4 with CUDA 12.2 and a batch size of 32) to ensure reproducibility and a fair comparison. Among the classical algorithms, Naive Bayes (NB) was the fastest at (0.22) s, followed by Gradient Boosting (GB) and Logistic Regression (LR), both at (3.16) s. The deep architectures, Deep Belief Network (DBN) and ResNet, took considerably longer, at (356.18) s and (368.10) s, respectively. The Vanilla Recurrent Neural Network (Vanilla RNN) offered a better trade-off between complexity and efficiency, with a runtime of (195.38) s. In contrast, the proposed RAVE model was the most computationally demanding, with a runtime of (498.71) s, but it achieved the highest accuracy and reliability. This trade-off between execution time and predictive performance is acceptable in applications where accuracy is paramount, such as the early diagnosis of cardiovascular disease, where correct detection is essential for timely medical intervention.

Table 2 summarizes the overall comparison of LR, NB, DBN, GB, ResNet, Vanilla RNN, and the proposed RAVE model (ResNet to Vanilla RNN) across all evaluation metrics. The proposed RAVE model consistently outperformed all baseline architectures in both predictive accuracy and reliability. Compared with the strongest baseline (ResNet), it improved on every metric. Accuracy, precision, recall, and F1-score increased from (0.89), (0.90), (0.89), and (0.89) to (0.92), (0.93), (0.92), and (0.92), respectively, a gain of 3 percentage points (roughly 3% to 4% in relative terms). ROC-AUC and PR-AUC improved from (0.96) and (0.97) to (0.97) and (0.98), respectively, a relative gain of about 1% in discriminative ability. Cohen’s kappa and MCC increased from (0.78) and (0.79) to (0.84) and (0.85), respectively, indicating stronger agreement between predicted and true labels and more consistent classification. Log loss decreased from (0.25) to (0.19) and Hamming loss from (0.11) to (0.08), indicating better calibration and fewer misclassifications. Together, these improvements support the robustness and generalizability of the proposed hybrid model for heart disease screening and case identification.
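Because gains on metrics near 1.0 can be read either in percentage points or in relative terms, the short computation below separates the two, using only the ResNet and RAVE values quoted in the paragraph above. It is an illustrative check of the arithmetic, not part of the original evaluation pipeline.

```python
# Table 2 values quoted in the text (higher is better for all of these)
resnet = {"accuracy": 0.89, "precision": 0.90, "recall": 0.89, "f1": 0.89,
          "roc_auc": 0.96, "pr_auc": 0.97, "kappa": 0.78, "mcc": 0.79}
rave = {"accuracy": 0.92, "precision": 0.93, "recall": 0.92, "f1": 0.92,
        "roc_auc": 0.97, "pr_auc": 0.98, "kappa": 0.84, "mcc": 0.85}

def improvement(baseline, model):
    """Return {metric: (absolute gain, relative gain in %)}."""
    out = {}
    for name, base in baseline.items():
        delta = model[name] - base
        out[name] = (round(delta, 4), round(100.0 * delta / base, 2))
    return out

for name, (abs_gain, rel_gain) in improvement(resnet, rave).items():
    print(f"{name:10s} +{abs_gain:.2f} ({rel_gain:.2f}% relative)")
```

Running it gives about 3.3% to 3.4% relative gains for accuracy, precision, recall, and F1, about 1.0% for ROC-AUC and PR-AUC, and about 7.6% to 7.7% for Kappa and MCC.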

4.2. Comparative Evaluation of Feature Selection Methodologies

Feature selection is an important step in the RAVE-HD approach. By identifying the most informative and predictive features relevant to myocardial pathology, it supports both interpretability and computational tractability. A carefully selected feature subset can improve predictive fidelity, reduce overfitting, and enhance clinical interpretability. To ensure both methodological rigor and practical relevance, a set of feature selection techniques was analyzed, including traditional model-independent techniques and recent differentiable, deep learning-based methods.

4.2.1. Evaluation of Feature Selection Methodologies in the Proposed RAVE Model

To critically evaluate the effectiveness of different feature selection mechanisms, a comparative analysis was performed using six representative feature selection methods, as presented in Table 3: Random Forest-based Recursive Feature Elimination (RFE), L1-Batch Normalization, Concrete Autoencoder, NSGA-II (Non-dominated Sorting Genetic Algorithm II), TabNet, and Attention Gates. Each technique was tested under the same experimental conditions to ensure a fair and unbiased comparison.

The RFE method with a Random Forest surrogate achieved the highest empirical performance and stability, with an accuracy of (0.928), ROC-AUC of (0.973), PR-AUC of (0.978), and MCC of (0.860). These results indicate that effective feature selection supports both the generalization ability of the RAVE model and its clinical interpretability. Although RFE is not fully differentiable and may be theoretically less optimal than gradient-based methods, its empirical reliability and model-agnostic nature make it especially suitable for healthcare data, which contain heterogeneous feature types and complex interdependencies.
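Random Forest-surrogate RFE can be expressed with scikit-learn's `RFE` wrapper: the forest's `feature_importances_` rank the features, and the least important one is dropped at each step until the target count remains. The sketch below assumes a synthetic 20-feature tabular dataset as a stand-in for the heart-disease data, and the forest size and class weights are illustrative choices; only the target of 16 features comes from this study.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE

# Synthetic, mildly imbalanced stand-in for the clinical table (assumption)
X, y = make_classification(n_samples=500, n_features=20, n_informative=8,
                           weights=[0.7, 0.3], random_state=0)

# Random Forest surrogate: its feature_importances_ drive the ranking
surrogate = RandomForestClassifier(n_estimators=100, random_state=0)

# Recursively drop the least important feature until 16 remain
selector = RFE(estimator=surrogate, n_features_to_select=16, step=1)
selector.fit(X, y)

selected = [i for i, keep in enumerate(selector.support_) if keep]
X_reduced = selector.transform(X)  # this reduced matrix feeds the downstream model
print(len(selected), X_reduced.shape)  # 16 (500, 16)
```

The surrogate is used only for ranking; the downstream classifier never needs to expose importances, which is what makes the procedure model-agnostic.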

Differentiable approaches such as L1-BatchNorm achieved comparable accuracy (0.921 to 0.924) and high discrimination (ROC-AUC of about 0.97), showing that they can be integrated into deep learning pipelines. However, they are more sensitive to hyperparameter tuning and input scaling, which may reduce reproducibility on large clinical datasets. In contrast, the multi-objective NSGA-II algorithm balanced parsimony and performance, reaching an accuracy of (0.918) with only six features. Although computationally expensive, NSGA-II demonstrates the potential of evolutionary optimization to produce small, high-performing feature sets for real-time e-Health applications.
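The parsimony/performance balance that NSGA-II strikes rests on Pareto dominance: a candidate feature subset is kept on the first front if no other subset is at least as good in every objective and strictly better in one. The sketch below implements NSGA-II's fast non-dominated sorting for two minimized objectives, (number of features, validation error). It omits the genetic operators and crowding distance of the full algorithm, and the candidate values are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and better in one (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def non_dominated_fronts(points):
    """Fast non-dominated sorting as in NSGA-II; returns fronts as index lists."""
    n = len(points)
    dominated_by = [[] for _ in range(n)]  # indices that point i dominates
    dom_count = [0] * n                    # number of points dominating point i
    fronts = [[]]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(points[i], points[j]):
                dominated_by[i].append(j)
            elif dominates(points[j], points[i]):
                dom_count[i] += 1
        if dom_count[i] == 0:
            fronts[0].append(i)
    k = 0
    while fronts[k]:
        nxt = []
        for i in fronts[k]:
            for j in dominated_by[i]:
                dom_count[j] -= 1
                if dom_count[j] == 0:
                    nxt.append(j)
        k += 1
        fronts.append(nxt)
    return fronts[:-1]  # drop the trailing empty front

# Hypothetical candidates: (number of features, validation error)
candidates = [(6, 0.082), (16, 0.072), (12, 0.090), (18, 0.075), (6, 0.095)]
print(non_dominated_fronts(candidates))  # [[0, 1], [2, 4, 3]]
```

The first front holds the trade-off set (a small subset and a more accurate, larger one); a practitioner then picks from it according to latency or interpretability needs.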

TabNet and attention gate mechanisms offered built-in explainability through their embedded attention masks and feature weighting. However, both methods showed lower accuracy (≤ 0.90) and only moderate discrimination (ROC-AUC ≈ 0.95), which may reflect a trade-off between transparency and stability.

Overall, even though differentiable and attention-based methods align more closely with modern deep learning design principles, RFE proved the most balanced and reliable option in our experiments. Given its strong empirical performance, stability, and transparency, RFE is well suited to clinical artificial intelligence pipelines that require reproducible and interpretable results.

4.2.2. Comparative Evaluation of Feature Subset Sizes Using the RF-Surrogate RFE Method for the Proposed RAVE Model

To understand how the size of the feature subset influences the performance of the proposed RAVE model, a Recursive Feature Elimination (RFE) procedure was used to identify the most important predictors. This two-stage approach is model-agnostic and interpretable: RFE first removes redundant or irrelevant variables for stability, and the retained features are then passed to the RAVE architecture. Our systematic comparison showed that a set of sixteen features provides the best predictive accuracy while remaining computationally efficient; this subset improved the ROC-AUC, F1-score, and overall classification accuracy in our experiments. Although end-to-end differentiable feature selection methods may align more closely with the inductive biases of deep models, the RFE-derived subset still retained the most discriminative and clinically relevant features in our study.

We evaluated the proposed RAVE model using three feature subsets containing 12, 16, and 18 features. As shown in Table 4 and Figure 3, increasing the number of features from 12 to 16 substantially improves all evaluation metrics. Accuracy rises from (0.84) to (0.93), precision from (0.84) to (0.94), recall from (0.84) to (0.93), and F1-score from (0.84) to (0.93). ROC-AUC and PR-AUC also improve markedly, from (0.91) and (0.92) to (0.97) and (0.98), respectively, indicating much better overall discrimination with the larger feature set. The model's reliability also improves, as reflected by the reduction in log loss from (0.41) to (0.16) and the increases in Cohen’s Kappa and the Matthews correlation coefficient, both from (0.68) to (0.87). The Hamming loss decreases from (0.15) to (0.06), indicating fewer classification errors.

However, increasing the feature set to 18 does not yield further improvement and slightly worsens some metrics. Accuracy drops marginally to (0.92), with similar trends in precision (0.93), recall (0.92), and F1-score (0.92). ROC-AUC and PR-AUC also decline slightly, to (0.9758) and (0.9807), respectively. Log loss increases slightly to (0.17), Cohen’s Kappa and MCC both decrease to (0.85), and Hamming loss rises slightly to (0.07). Notably, execution time increases substantially, from (807) s with 16 features to (1197) s with 18 features. These results suggest that the 16-feature subset identified through the RFE surrogate provides the best trade-off between performance and computational efficiency: the 12-feature subset appears to underfit, while expanding to 18 features brings diminishing returns and greater complexity.
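A subset-size comparison like the 12/16/18 study can be sketched by placing RFE inside a cross-validated pipeline, so that features are reselected on each training fold and no information leaks from the validation folds. In this sketch the data are synthetic, a logistic regression stands in for the RAVE model, and the forest size, `step=2`, and 5-fold ROC-AUC scoring are all illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data (assumption); 20 candidate features
X, y = make_classification(n_samples=400, n_features=20, n_informative=8,
                           random_state=0)

results = {}
for k in (12, 16, 18):
    pipe = make_pipeline(
        # RF-surrogate RFE, refit inside every CV training fold
        RFE(RandomForestClassifier(n_estimators=50, random_state=0),
            n_features_to_select=k, step=2),
        StandardScaler(),
        LogisticRegression(max_iter=1000),  # stand-in for the downstream model
    )
    scores = cross_val_score(pipe, X, y, cv=5, scoring="roc_auc")
    results[k] = scores.mean()

for k, auc in results.items():
    print(f"{k} features: mean ROC-AUC = {auc:.3f}")
```

Timing each loop iteration (for example with `time.perf_counter`) would add the execution-time dimension used in the comparison above.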

4.3. Comparative Evaluation of the Proposed RAVE Model Under Various Imbalance-Handling Techniques

The objective of this comparative evaluation is to determine how different balancing strategies affect the predictive performance and calibration of the proposed RAVE model. To promote our study aim,

Table 5 and

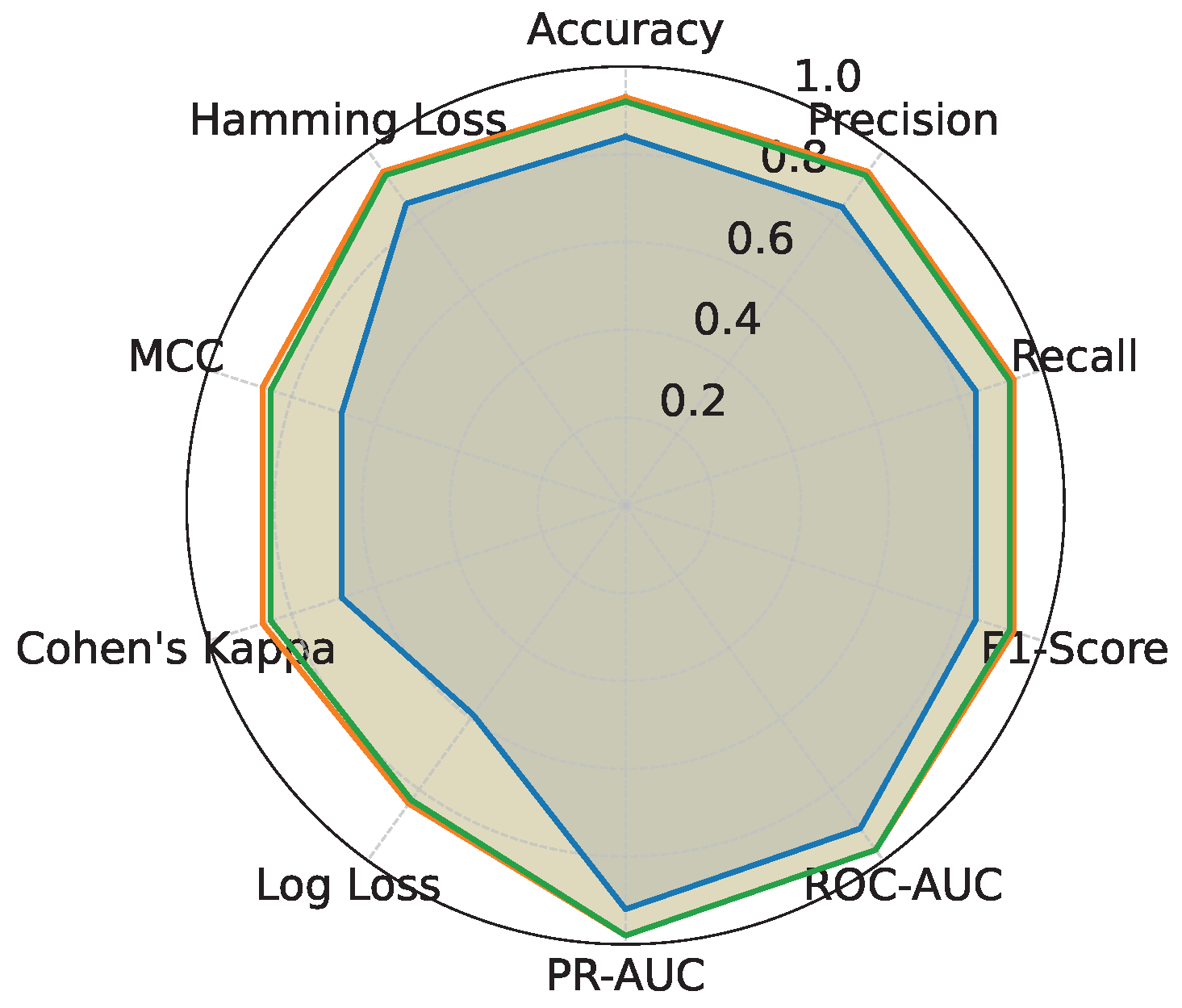

Figure 4 present a comprehensive metric-wise comparison of the proposed RAVE model using five imbalance-handling techniques: Synthetic Minority Over-sampling Technique (SMOTE), Localized Random Affine Shadowsampling (LoRAS), ProWSyn, Cost-Sensitive Learning and Threshold-Moving, which is optimized for the F1-score (F1-opt). The results showed that the model’s performance is largely dependent on the balancing method applied.

ProWSyn consistently achieved the best overall performance, with the highest accuracy (0.92), precision (0.93), recall (0.92), and F1-score (0.92). We attribute this mainly to its ability to generate diverse and realistic synthetic minority samples while preserving the intrinsic structure of the data. By maintaining clear class boundaries and a balanced class representation, ProWSyn allowed the RAVE model to learn more discriminative and stable decision boundaries, leading to higher accuracy and better generalization.
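ProWSyn's structure-preserving behaviour comes from how it distributes synthetic samples. As we understand the original algorithm, minority samples are partitioned into proximity levels (level 1 being the minority samples nearest to majority samples, found by repeatedly taking each majority sample's k nearest still-unassigned minority samples), and each level l receives a share of synthetic samples proportional to exp(−θ(l − 1)). The simplified sketch below covers only this level assignment and weighting; the per-level clustering and interpolation steps are omitted, and `k`, `n_levels`, and `theta` are illustrative parameter values.

```python
import math

def proximity_levels(X_min, X_maj, k=2, n_levels=3):
    """Assign each minority sample a level (1 = closest to the majority class)."""
    remaining = list(range(len(X_min)))
    level = {}
    for lv in range(1, n_levels):
        if not remaining:
            break
        picked = set()
        for m in X_maj:
            # k nearest still-unassigned minority samples to this majority sample
            nearest = sorted(remaining, key=lambda i: math.dist(m, X_min[i]))[:k]
            picked.update(nearest)
        for i in picked:
            level[i] = lv
        remaining = [i for i in remaining if i not in picked]
    for i in remaining:  # whatever is left goes to the last level
        level[i] = n_levels
    return [level[i] for i in range(len(X_min))]

def level_weights(levels, theta=1.0):
    """Share of synthetic samples per level: exp(-theta*(l-1)), normalized to 1."""
    raw = {lv: math.exp(-theta * (lv - 1)) for lv in sorted(set(levels))}
    total = sum(raw.values())
    return {lv: w / total for lv, w in raw.items()}

X_maj = [(0.0,)]
X_min = [(1.0,), (2.0,), (3.0,), (4.0,), (5.0,)]
levels = proximity_levels(X_min, X_maj)
print(levels)  # [1, 1, 2, 2, 3]
print(level_weights(levels))
```

Boundary-adjacent minority samples (level 1) thus receive the largest share of synthetic points, while distant ones still contribute, which is one plausible reason the oversampled data retain the minority class's overall shape.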

In contrast, SMOTE and LoRAS performed moderately well but showed clear limitations. SMOTE creates synthetic data through linear interpolation, which often produced overlapping or noisy samples near class boundaries, reducing precision (0.76) and calibration reliability (log loss of 0.50). LoRAS improved on SMOTE through localized affine transformations and achieved slightly better results (accuracy and F1-score of 0.84), but it still generated redundant samples and failed to capture the complex non-linear distributions of the minority class. These weaknesses limited their ability to represent true minority patterns, resulting in lower robustness and higher misclassification rates than ProWSyn. Algorithm-level techniques, namely Cost-Sensitive Learning and Threshold-Moving (F1-opt), also showed lower and less consistent results. Cost-Sensitive Learning adjusts class weights to penalize minority misclassification (achieving a precision of 0.88) but often overcompensated, biasing the model toward the minority class and reducing agreement (Kappa of 0.24). Threshold-Moving optimized the decision threshold to maximize the F1-score but showed weak precision (0.29), recall (0.59), and probability reliability. Both methods required classifier-specific hyperparameter optimization and post hoc calibration, which increased computational complexity and reduced interpretability.
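The three baseline mechanisms above differ in where they intervene: SMOTE adds data, cost-sensitive learning reweights the loss, and threshold-moving changes only the decision rule. The dependency-free sketch below shows the core of each under simplifying assumptions: the SMOTE step generates one point between a minority sample and a given minority neighbour (neighbour search omitted), the class weights follow the common n_samples / (n_classes × class_count) "balanced" heuristic, and the threshold search scans observed probabilities for the F1-maximizing cut-off. These are illustrative helpers, not the study's implementation.

```python
import random

def smote_sample(x, neighbor, rng):
    """SMOTE: a random point on the segment from x to a minority neighbour."""
    gap = rng.random()  # uniform in [0, 1)
    return [xi + gap * (ni - xi) for xi, ni in zip(x, neighbor)]

def balanced_class_weights(y):
    """Cost-sensitive weights: n_samples / (n_classes * class_count)."""
    counts = {c: y.count(c) for c in set(y)}
    n, k = len(y), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def f1_at(y_true, probs, t):
    """F1 score when predicting positive for probability >= t."""
    tp = sum(1 for y, p in zip(y_true, probs) if y == 1 and p >= t)
    fp = sum(1 for y, p in zip(y_true, probs) if y == 0 and p >= t)
    fn = sum(1 for y, p in zip(y_true, probs) if y == 1 and p < t)
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)

def best_f1_threshold(y_true, probs):
    """Threshold-moving: the observed cut-off maximizing F1 (ties -> lowest)."""
    return max(sorted(set(probs)), key=lambda t: f1_at(y_true, probs, t))

rng = random.Random(0)
print(smote_sample([0.0, 0.0], [2.0, 4.0], rng))  # lies on the segment y = 2x
print(balanced_class_weights([0, 0, 0, 1]))
y = [0, 0, 1, 1, 0, 1]
p = [0.1, 0.4, 0.35, 0.8, 0.2, 0.7]
print(best_f1_threshold(y, p))  # 0.35
```

Because the interpolated SMOTE point always lies on a straight segment between two minority samples, it can fall inside a region occupied by the majority class, which illustrates the boundary noise described above.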