A Multi-Model Image Enhancement and Tailored U-Net Architecture for Robust Diabetic Retinopathy Grading

Abstract

1. Introduction

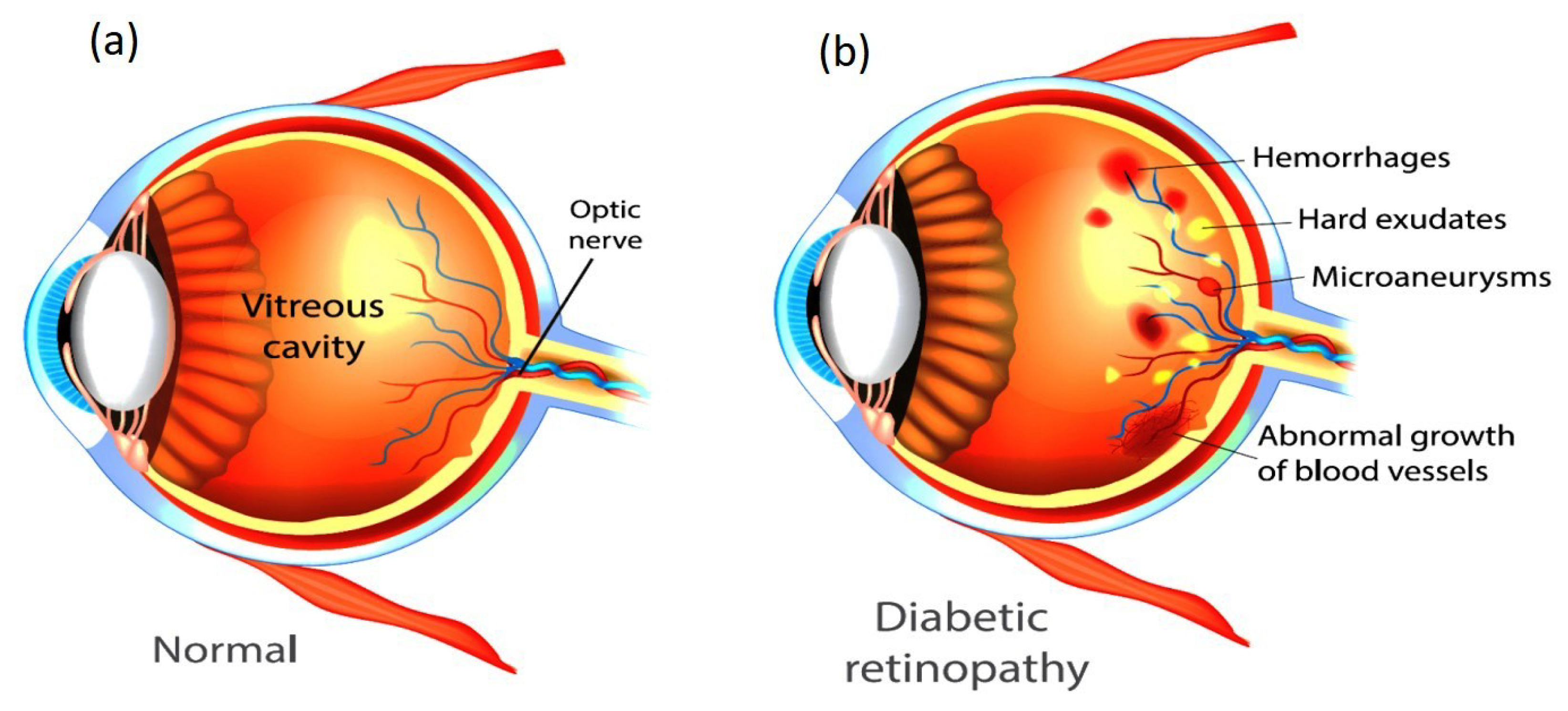

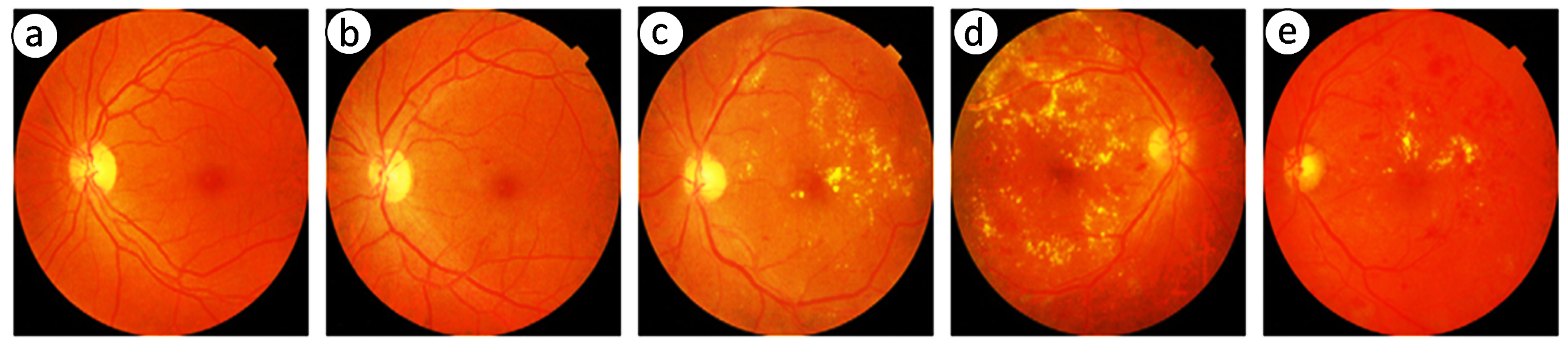

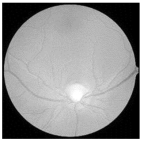

1.1. Retinal Imaging as a Diagnostic Tool

1.2. Role of Artificial Intelligence in DR Diagnosis

1.3. Enhancement and Classification of Retinal Images

- Histogram Equalization (HE): a widely used technique that redistributes the intensity values of an image to enhance contrast, making subtle details more prominent [15].

- Adaptive Histogram Equalization (AHE): an extension of HE that applies local contrast enhancement by computing histograms for small regions of the image, thereby improving visibility in darker areas [16].

- Contrast-Limited Adaptive Histogram Equalization (CLAHE): a refined version of AHE that limits contrast amplification by clipping each local histogram, which prevents over-enhancement and noise amplification, particularly in near-uniform regions [17].

- Enhanced Sub-Image Histogram Equalization: a technique that divides the image into sub-images and applies histogram equalization separately to each region, preserving finer details [18].
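The intensity redistribution behind plain histogram equalization can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the pipeline used in this work: the function name `equalize_histogram`, the 256-level default, and the list-of-lists image format are all hypothetical choices made for the example.

```python
def equalize_histogram(image, levels=256):
    """Global histogram equalization for a 2-D list of integer intensities.

    Assumption: intensities are integers in [0, levels - 1].
    """
    flat = [p for row in image for p in row]

    # Histogram of intensity values.
    hist = [0] * levels
    for p in flat:
        hist[p] += 1

    # Cumulative distribution function (CDF) of the histogram.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = len(flat)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Constant image: nothing to redistribute.
        return [row[:] for row in image]

    # Map each intensity so that the CDF becomes approximately linear.
    lut = [
        round(max(c - cdf_min, 0) * (levels - 1) / (total - cdf_min))
        for c in cdf
    ]
    return [[lut[p] for p in row] for row in image]


if __name__ == "__main__":
    low_contrast = [[52, 55], [61, 59]]
    print(equalize_histogram(low_contrast))
```

The adaptive variants listed above apply the same mapping per image region instead of once for the whole image; CLAHE additionally clips each regional histogram before the CDF is computed.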

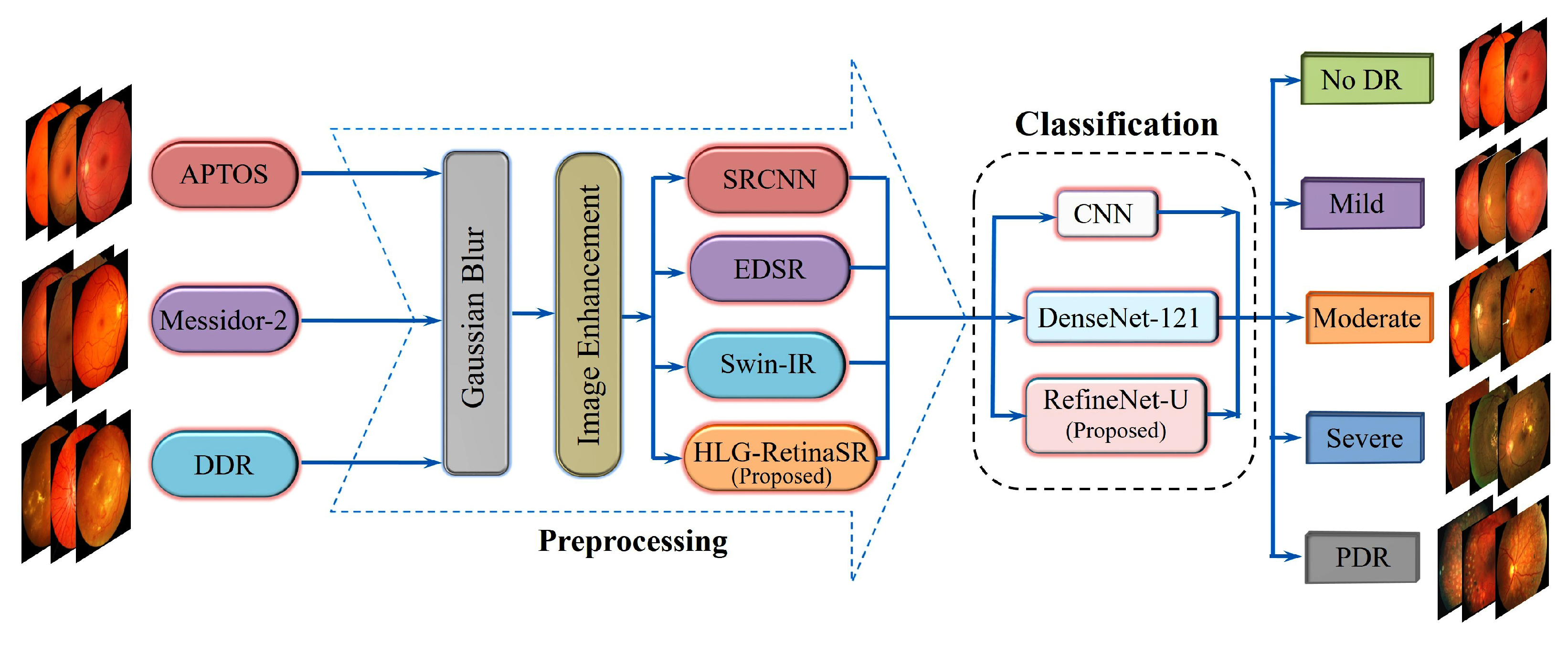

1.4. Key Contributions

- Development of the HLG-RetinaSR Module: A Hybrid Local–Global Super-Resolution (HLG-RetinaSR) module is proposed to enhance retinal image quality. By integrating deformable convolutional layers and a vision transformer, the module effectively isolates and crops local lesion areas while suppressing irrelevant background regions. This targeted enhancement reduces noise interference and significantly boosts classification accuracy.

- Feature Fusion Strategy: A comprehensive feature fusion mechanism is implemented through a U-shaped structure with skip connections for seamless integration of fine-grained details and high-level semantic features. This design preserves critical information during downsampling and enhances classification accuracy upon upsampling.

- Proposed RefineNet-U Model: A novel multi-branch fine-grained classification architecture, RefineNet-U, is introduced based on the U-Net backbone. This model is specifically designed to improve the accuracy of DR grade classification by capturing hierarchical lesion representations. U-Net offers several distinct advantages:

  - Precise Lesion Localization: The U-Net structure facilitates the accurate identification of DR lesions, such as microaneurysms and exudates. By effectively pinpointing these abnormalities, clinicians can better determine disease severity and progression.

  - Context-Aware Feature Extraction: The network’s contracting path employs downsampling to capture high-level contextual information. This ability to learn multiple scales of features ensures that U-Net can discern nuanced details in retinal images, leading to more robust DR classification.

  - U-shaped Architecture with Skip Connections: U-Net comprises a contracting path for feature extraction and an expanding path for refined localization. Skip connections link matching layers in these paths, seamlessly integrating detailed lower-level features with broader high-level representations. This architecture maintains essential spatial information crucial for detecting small lesions in retinal images.

- Enhanced Healthcare Management: The proposed method aids in advancing healthcare management by enabling the early detection and precise classification of DR. This facilitates healthcare practitioners in implementing timely interventions, improving patient care and alleviating the strain on healthcare infrastructure.

2. Literature Review

2.1. Image Enhancement Approaches

2.2. Machine Learning Approaches

2.3. Deep Learning Approaches

2.4. Research Gap

- Inadequate Fine-Grained Lesion Discrimination: Most existing models fail to distinguish subtle pathological features such as microaneurysms, hard exudates, or early neovascularization with sufficient granularity, especially in early-stage DR. This limits their effectiveness in clinically actionable early diagnosis.

- Limited Integration of Local–Global Context: Many DR classification frameworks prioritize either global structural features or local lesion-level information, but rarely fuse both effectively. This results in incomplete representations of disease progression.

- Suboptimal Image Enhancement Pipelines: Conventional super-resolution or enhancement methods often overlook task-specific lesion enhancement. They improve general image quality but do not necessarily boost classification performance in clinical contexts.

- Lack of Adaptivity to Retinal Variability: Existing models show poor generalization across fundus images with varied acquisition conditions (illumination, resolution, patient demographics). There is a need for adaptive enhancement–classification systems that are robust across datasets.

- Underutilization of Transformer-Based Architectures: While transformers have shown promise in medical imaging, their integration with CNNs for joint lesion localization and classification remains underexplored in DR pipelines.

3. Proposed Methodology

3.1. Image Preprocessing

- is the intensity at pixel coordinates .

- is the Gaussian kernel, defined in Equation (2):

- are coordinates relative to the kernel center.

- denotes the standard deviation that controls the degree of blurring.

- The sum of all kernel weights equals 1, preserving overall brightness.

- and represent the smallest and largest intensities in the image. This step standardizes images across all datasets, vital for the subsequent enhancement process.

- is the pixel intensity at coordinates in the grayscale image.

- is the normalized pixel value in the range .
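The Gaussian smoothing kernel and the min-max normalization described in the list above can be sketched as follows. This is an illustration only: the function names, the 5 × 5 kernel size in the usage example, and the [0, 1] output range are assumptions, since the original equations and range are not reproduced here.

```python
import math


def gaussian_kernel(size, sigma):
    """Build a size x size Gaussian kernel whose weights sum to 1.

    Assumption: size is odd so the kernel has a well-defined center.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    half = size // 2
    # Unnormalized weights from coordinates relative to the kernel center.
    raw = [
        [math.exp(-(i * i + j * j) / (2.0 * sigma * sigma))
         for j in range(-half, half + 1)]
        for i in range(-half, half + 1)
    ]
    total = sum(sum(row) for row in raw)
    # Dividing by the total preserves overall image brightness.
    return [[w / total for w in row] for row in raw]


def min_max_normalize(image):
    """Rescale intensities to [0, 1] using the image minimum and maximum."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:
        # Constant image: map everything to 0 to avoid division by zero.
        return [[0.0 for _ in row] for row in image]
    return [[(p - lo) / (hi - lo) for p in row] for row in image]


if __name__ == "__main__":
    kernel = gaussian_kernel(5, sigma=1.0)
    print(sum(sum(row) for row in kernel))
    print(min_max_normalize([[10, 20], [30, 50]]))
```

A larger standard deviation spreads the weights away from the center and blurs more strongly; the normalization step is what makes images from different datasets comparable in intensity range.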

3.2. Image Enhancement

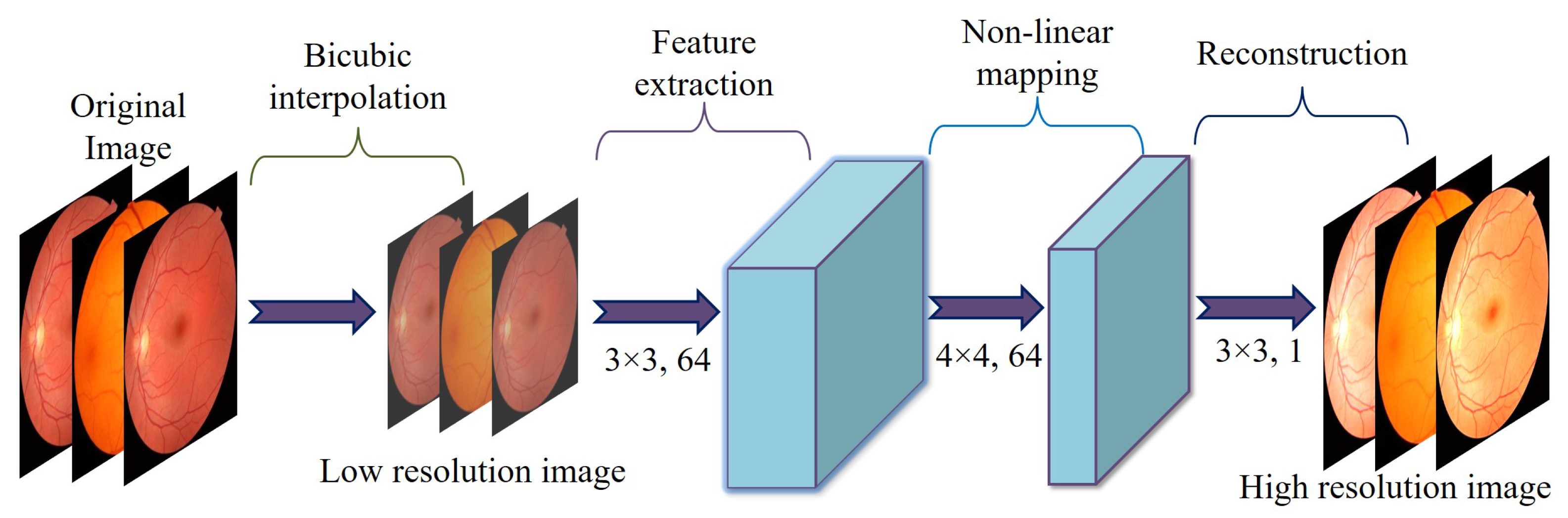

3.2.1. Super-Resolution Convolutional Neural Network

- -

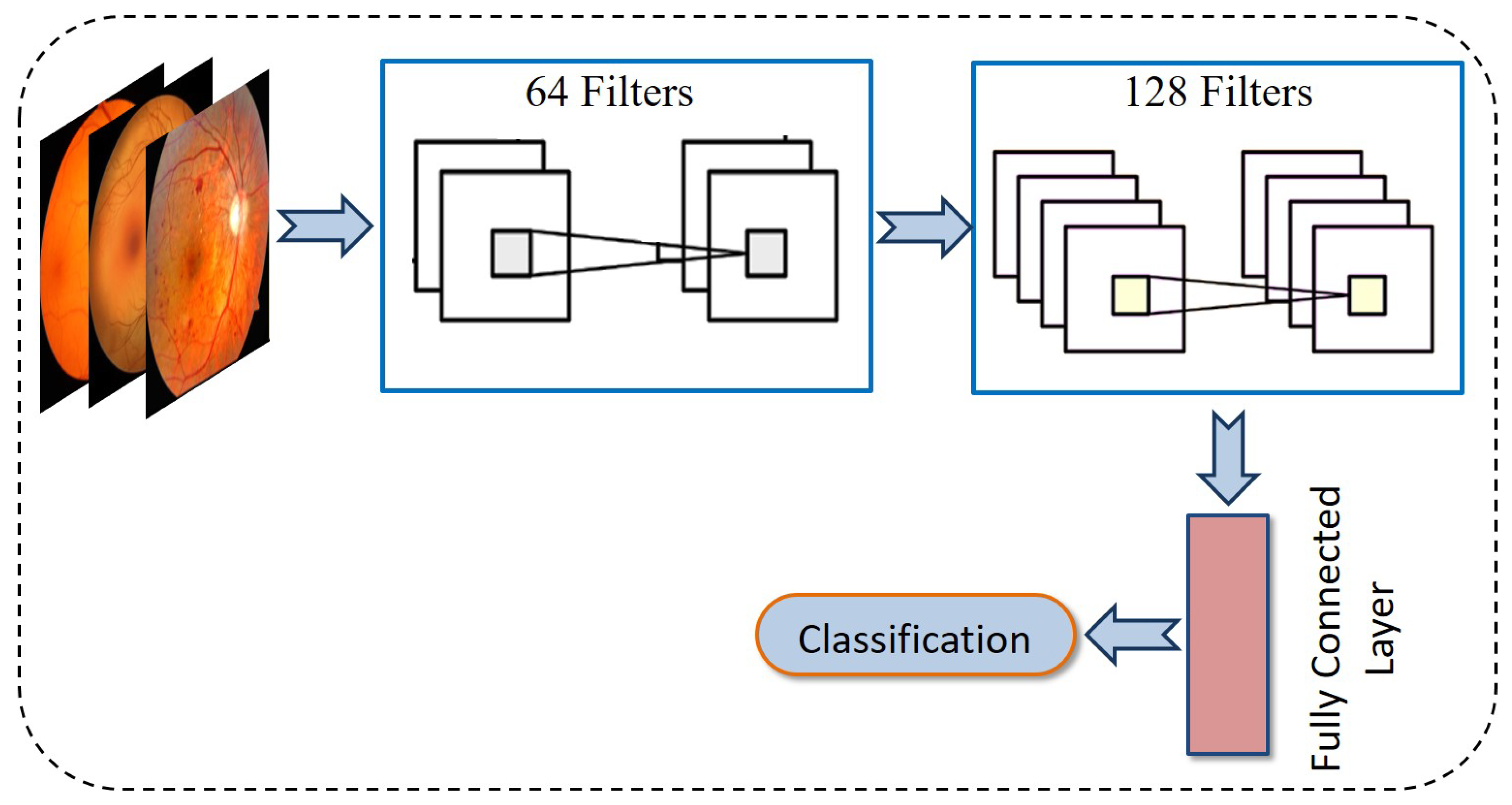

- Feature ExtractionThis step applies two convolutional layers to an input image of size . Each layer includes 64 filters, a kernel, a stride of 1, and padding of 1. This setup preserves the spatial dimensions and produces feature maps of size .

- -

- UpsamplingThe resolution is expanded from to using a transposed convolution (deconvolution) layer. This layer is defined with 64 filters, a kernel, a stride of 2, and padding of 1, resulting in output feature maps measuring .

- -

- ReconstructionThe final convolutional layer employs a kernel, a stride of 1, and padding of 1. This layer reduces the channel count from 64 down to 1, producing a grayscale output. The resulting super-resolved image has dimensions .

- N is the total number of images in the dataset.

- represents the ground truth high-resolution image for the k-th sample.

- denotes the super-resolved image predicted by the model.

- represents the learnable parameters of the model.
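The training objective described by the terms above, a mean squared error between ground-truth high-resolution images and the model's super-resolved predictions over N samples, can be sketched as below. The function name `mse_loss` and the choice to average over pixels within each image before averaging over images are assumptions; the exact normalization of the original equation is not reproduced here.

```python
def mse_loss(predicted, target):
    """Mean squared error over a batch of grayscale images.

    Each image is a 2-D list of floats. Assumption: the squared error is
    averaged over pixels per image, then averaged over the N images.
    """
    if len(predicted) != len(target):
        raise ValueError("batches must contain the same number of images")

    per_image = []
    for pred_img, true_img in zip(predicted, target):
        squared = [
            (p - t) ** 2
            for pred_row, true_row in zip(pred_img, true_img)
            for p, t in zip(pred_row, true_row)
        ]
        per_image.append(sum(squared) / len(squared))
    return sum(per_image) / len(per_image)


if __name__ == "__main__":
    predictions = [[[0.0, 0.0]], [[2.0, 2.0]]]
    ground_truth = [[[0.0, 0.0]], [[0.0, 0.0]]]
    print(mse_loss(predictions, ground_truth))
```

Minimizing this quantity with respect to the learnable parameters pushes every predicted pixel toward its ground-truth value, which is also what drives the PSNR metric upward.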

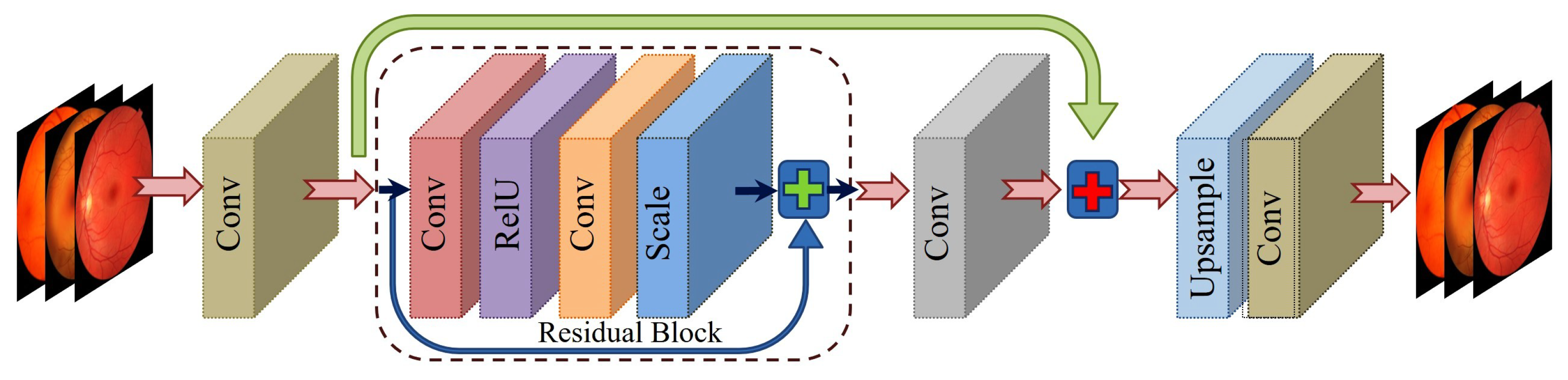

3.2.2. Enhanced Deep Super-Resolution Network

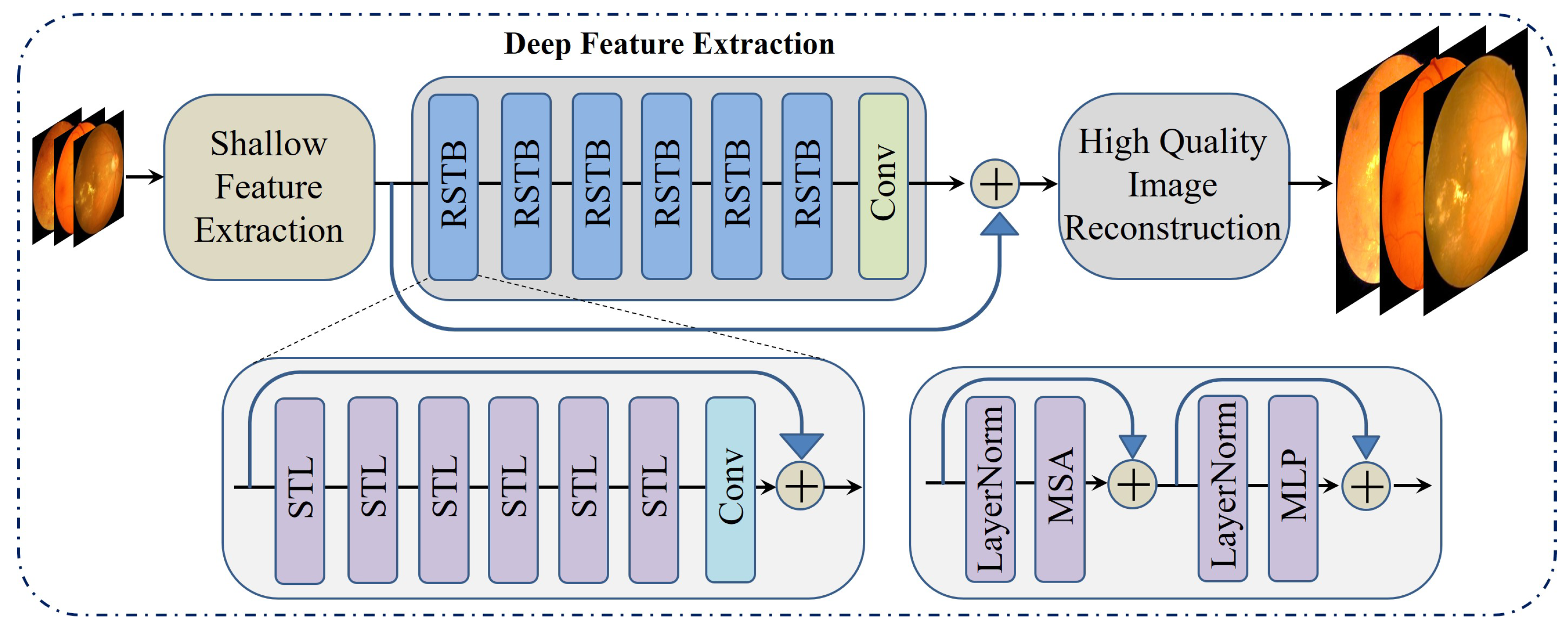

3.2.3. Swin Transformer for Image Restoration

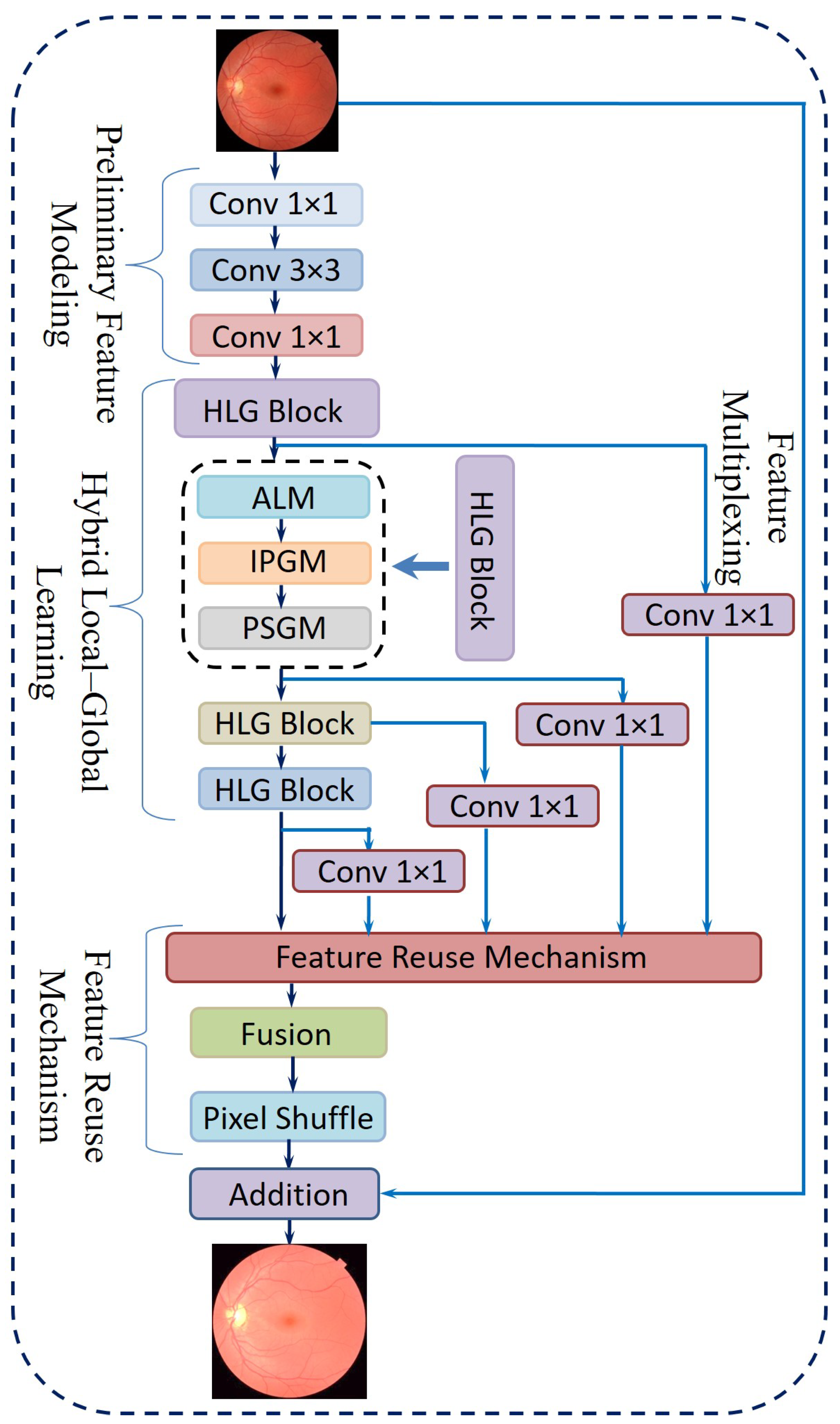

3.2.4. Proposed Novel Hybrid Local–Global Retina Super-Resolution Model

- i. Preliminary Feature Modeling: Conventional convolution-based super-resolution architectures often rely on a single 3 × 3 convolutional layer, which may overlook channel-wise interactions. Drawing inspiration from efficient model design [51], the proposed approach includes the following steps:

- A point-wise convolution to expand the feature channels.

- A 3 × 3 convolution to capture spatially distributed features in this augmented space.

- Another point-wise convolution to merge and reorganize the extracted channels, thereby retaining cross-channel information.

- ii.

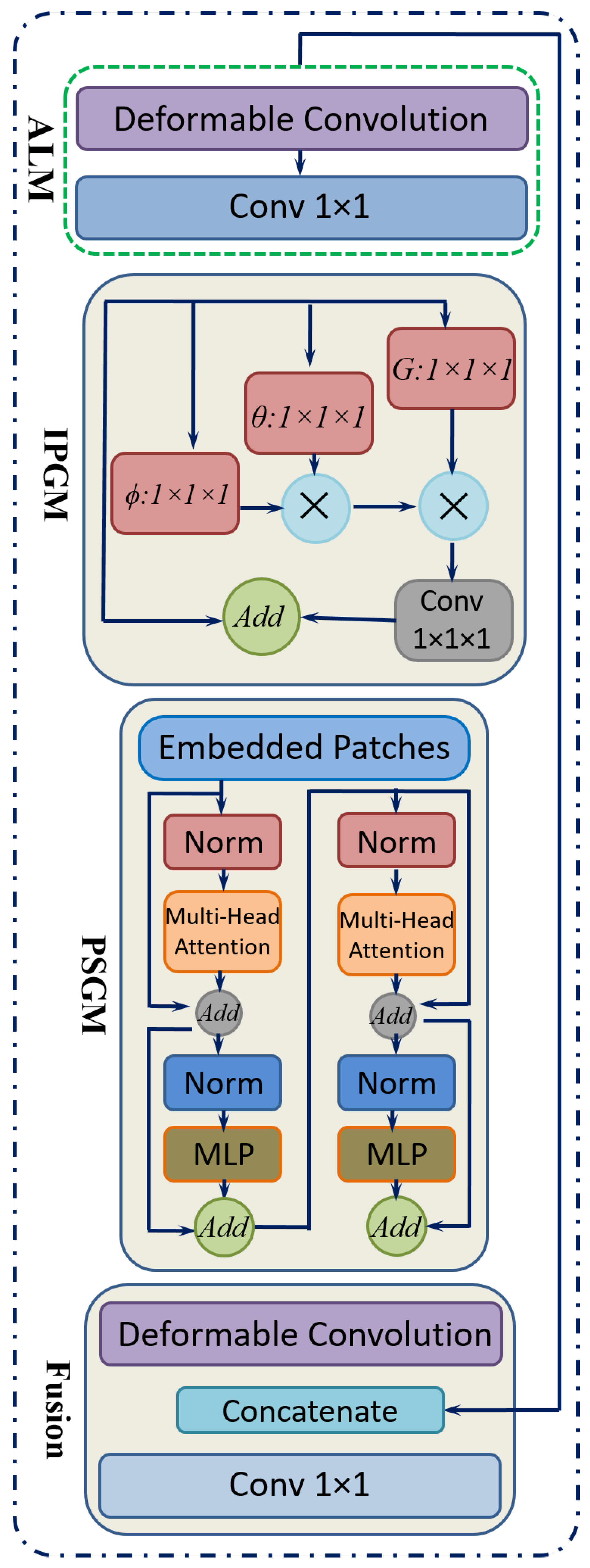

- Hybrid Local–Global LearningTo facilitate localized detail restoration for irregular structures and long-range coherence among possibly distant image regions, a Hybrid Local–Global (HLG) block is introduced. Serving as the core of the proposed neural network, as shown in Figure 9, the HLG block comprises three specialized modules, namely, an Adaptive Local Module (ALM), Inter-Pixel Global Module (IPGM), and Patch-Scope Global Module (PSGM), each described below:

- ALM: After initial feature modeling, the feature map is passed into the ALM, which is designed to handle the spatially irregular or deformed regions common in retinal images. This module uses a deformable convolution [52] with learnable offsets and modulations for each kernel location. Unlike fixed-grid convolutions, each sampling position can shift in multiple directions, reshaping the sampling field according to local structures. For a location r in the input, the deformable convolution is expressed in Equation (7) in terms of learnable weights, offset vectors, modulation scalars, and the input features z. This adaptivity enables the filter’s receptive field to follow structural irregularities more effectively. After the deformable layer, a point-wise convolution further integrates these local features into a refined representation, as given in Equation (8).

- IPGM: In medical diagnosis, the relationships between distant regions of an organ matter, because changes in one area can affect others. Traditional convolutional layers, limited by their fixed local receptive fields, often fail to capture these global dependencies. To overcome this constraint, a non-local mechanism [53] is integrated, which establishes correlations between each pixel and all others within the image. This pixel-to-pixel global learning is formulated in Equation (9), which defines the output feature at pixel i. In that formulation, F estimates the relationship between position i and position j, G provides a signal based on those relationships, and the normalization term N ensures balanced contributions from all positions. This global pixel–pixel approach contrasts with the ALM’s localized view and restores large-scale consistencies throughout the image.

- PSGM: While the IPGM captures pixel-level correlations, it may struggle to identify broader patterns, such as organ boundaries or elongated vessels. To overcome this limitation, the PSGM employs patch-level attention. The feature map is first divided into small patches of 2 × 2 pixels, creating a structured patch sequence. Unlike certain vision transformer-based methods that use larger patch sizes of 8 × 8 or 16 × 16 pixels, this approach preserves the fine-grained details essential for low-level tasks like super-resolution. To further refine the feature representation, the PSGM applies a multi-head self-attention mechanism over these patch embeddings, as represented in Equation (10), which operates on the restructured patch sequence. The design incorporates two transformer layers with four attention heads, balancing capacity and computational cost. An optional deformable convolution is applied to further refine the patch-oriented features. The resulting feature map is then concatenated with the original input, and a point-wise convolution fuses them into the combined output.
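The pixel-to-pixel mechanism of the IPGM can be illustrated with a small non-local operation over a flattened feature map. This sketch makes several simplifying assumptions that are not taken from the original formulation: the pairwise function F is an exponentiated dot product, G is the identity, no learned projections are used, and the function name `non_local` is hypothetical.

```python
import math


def non_local(features):
    """Non-local aggregation over a list of per-pixel feature vectors.

    For each pixel i, the output is a weighted average of all pixels j,
    with weight F(x_i, x_j) = exp(x_i . x_j) divided by the normalization
    term N_i = sum_j F(x_i, x_j). Assumption: G is the identity.
    """
    outputs = []
    for xi in features:
        scores = [sum(a * b for a, b in zip(xi, xj)) for xj in features]
        # Subtract the maximum score for numerical stability.
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        norm = sum(weights)
        outputs.append([
            sum(w * xj[c] for w, xj in zip(weights, features)) / norm
            for c in range(len(xi))
        ])
    return outputs


if __name__ == "__main__":
    # Two "pixels" with two channels each.
    print(non_local([[0.0, 0.0], [2.0, 4.0]]))
```

Because every output position attends to every input position, the cost grows quadratically with the number of pixels, which is one motivation for complementing it with patch-level attention on a shorter sequence.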

- iii. Feature Reuse Mechanism: Following the HLG block, a feature reuse step is introduced to mitigate redundancy and make better use of the extracted representations. This approach is inspired by prior research [54], which highlights the advantages of revisiting and reusing intermediate features in deep networks. Specifically, the output of each HLG block incorporates a residual skip connection, while a point-wise convolution compresses its dimensionality, thereby reducing computational overhead. The final multiplexed features from all HLG blocks are then concatenated and refined through an additional 1 × 1 convolution, as expressed in Equation (11), where M denotes the aggregated output prepared for the reconstruction stage.

- iv. Final Reconstruction: The network concludes with a reconstruction phase, which uses:

- A 1 × 1 convolution to further reduce feature dimensions.

- A Pixel Shuffle layer [55] to upsample the features to the desired resolution.

- Additive blending of the upsampled output with a simple interpolation of the original input, yielding the final super-resolved image.
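The Pixel Shuffle upsampling step in the reconstruction phase rearranges channels into spatial positions. The sketch below shows that rearrangement on nested lists; the function name `pixel_shuffle` and the channel ordering (the one commonly used by deep learning frameworks, where output channel c draws on input channels c·r² to c·r² + r² − 1) are assumptions rather than details confirmed by the text.

```python
def pixel_shuffle(x, r):
    """Rearrange a (C * r * r, H, W) tensor into (C, H * r, W * r).

    x is a nested list indexed as x[channel][row][col]; r is the upscale
    factor. Assumption: input channel c*r*r + i*r + j supplies the pixel at
    sub-position (i, j) inside each r x r output cell of channel c.
    """
    channels_in = len(x)
    height, width = len(x[0]), len(x[0][0])
    if channels_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r * r")
    channels_out = channels_in // (r * r)

    out = [
        [[0] * (width * r) for _ in range(height * r)]
        for _ in range(channels_out)
    ]
    for c in range(channels_out):
        for i in range(r):
            for j in range(r):
                source = x[c * r * r + i * r + j]
                for row in range(height):
                    for col in range(width):
                        out[c][row * r + i][col * r + j] = source[row][col]
    return out


if __name__ == "__main__":
    # Four 1 x 1 channels become one 2 x 2 channel.
    print(pixel_shuffle([[[1]], [[2]], [[3]], [[4]]], r=2))
```

Since the operation only moves values, all learned computation stays at low resolution, and the preceding 1 × 1 convolution decides how many channels are available to be folded into space.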

3.3. Classification of DR

3.3.1. Convolutional Neural Network

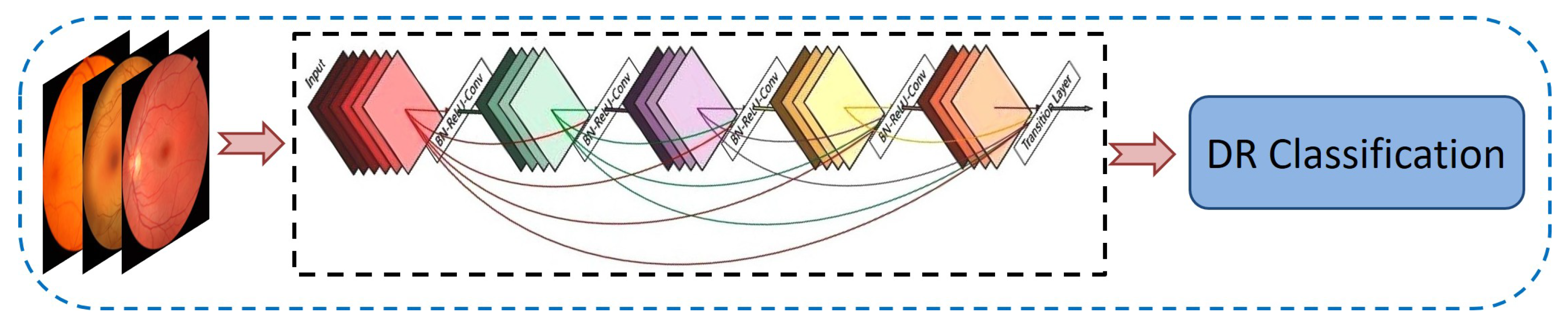

3.3.2. DenseNet-121

3.3.3. Classification Utilizing RefineNet-U

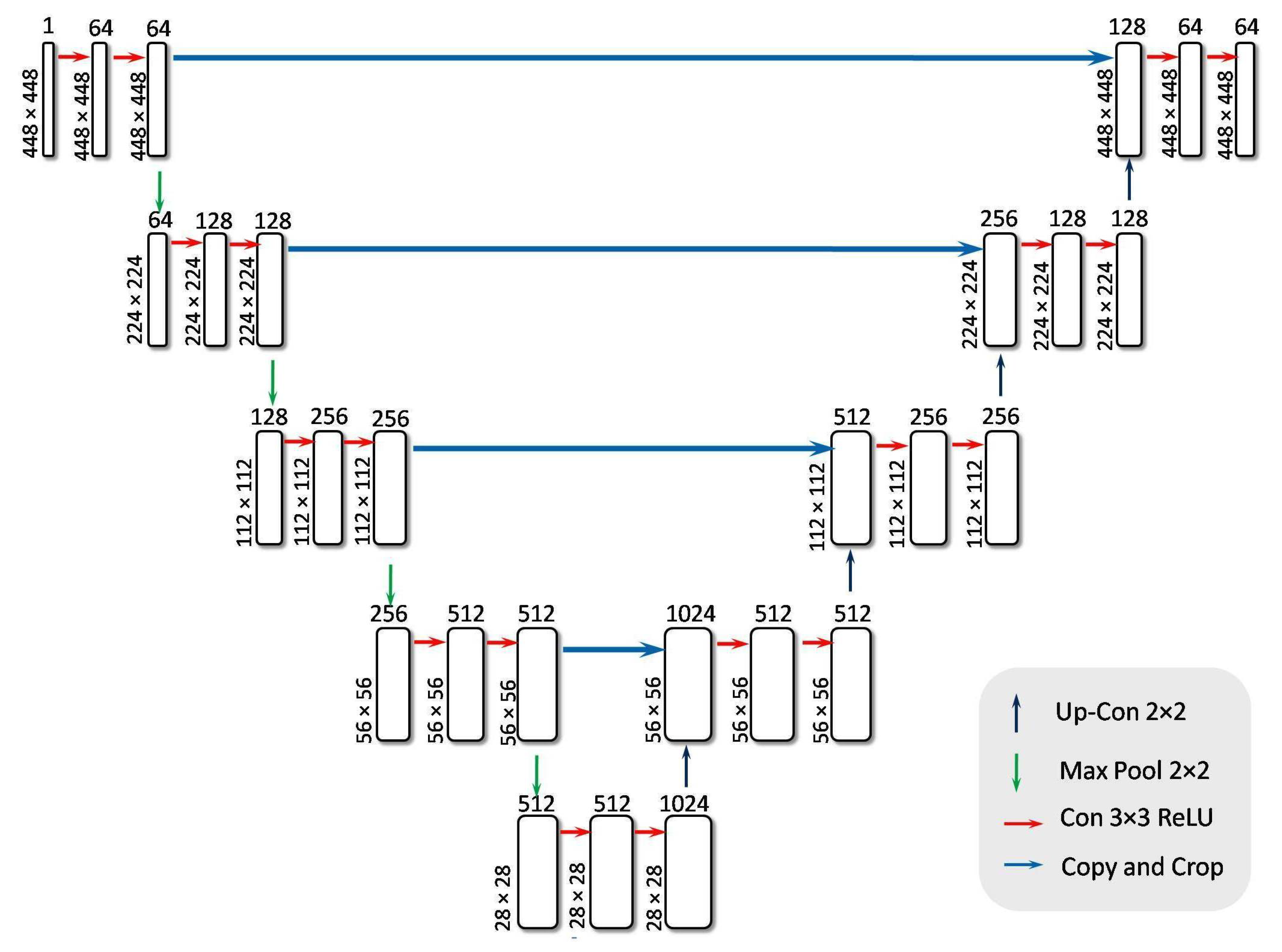

- Encoder Block: The encoder is a feature extractor that encodes input images through multiple sequential blocks. Each encoder block contains two convolutional layers, both activated by the ReLU function, followed by a max pooling layer. ReLU sets any negative input to zero. While the convolutional layers increase the depth (number of feature channels), the max pooling layer halves the spatial dimensions of the feature maps without reducing their depth.

  In this study, retinal images of size 448 × 448 × 1 are used as input. The first convolutional layer, with 64 filters, transforms the input image into a feature map of 448 × 448 × 64. Max pooling, depicted by a downward arrow in Figure 12, then reduces the feature map to 224 × 224 × 64. The network has four encoder blocks, each of which (except the first) receives the output feature map of its preceding block. The output of the final encoder block is passed to a bridge block. The convolution and downsampling procedures are formally described in Equations (12) and (13). This process extracts and compresses important features in preparation for classification into five classes.

- Bridge Block: The bridge block passes encoded features from the encoder to the decoder. Table 2 outlines the specifics of this component, which contains two convolutional layers with ReLU activation. After the four encoder blocks have downsampled the 448 × 448 × 1 input to 28 × 28 × 512, the bridge block transforms these feature maps to 28 × 28 × 1024, as described in Equation (14). This bottleneck output is an abstract, high-level representation of the retinal image that captures the features required for classification, and it is forwarded to the decoder network.

- Decoder Block: The decoder network reconstructs the final segmentation mask after the bridge block delivers its high-level representation. It comprises decoder blocks, each containing a 2D transposed convolution for upsampling, a concatenation step that merges features from the corresponding encoder level, and two standard 2D convolutions activated by ReLU. In Figure 12, the upward-pointing orange arrow denotes the transposed convolution that enlarges the spatial dimensions.

  Specifically, the feature map is scaled up from 28 × 28 × 1024 to 56 × 56 × 512 and then concatenated with the matching encoder output (also 56 × 56 × 512) to form a combined feature map of 56 × 56 × 1024. By integrating earlier-layer features in this way, the decoder can refine its predictions for more accurate segmentation. After the upsampling process is repeated through all decoder blocks, the network restores the spatial resolution to the original input size of 448 × 448 × 1, as shown in Equation (15). This final multi-channel feature map is treated as the U-Net output and is then passed to the flattening and dense layers for classification. Carrying encoder features forward in this way preserves information through the decoder, which makes it a critical component of the classification pipeline.

- Flattening and Dense Layer: RefineNet-U generates a multi-dimensional feature map through its convolutional layers, which is then flattened into a one-dimensional vector. To reduce the risk of overfitting, dropout with a probability of 0.3 is applied. The resulting vector then passes through two fully connected (dense) layers, in which every neuron is connected to all neurons of the preceding layer. The Leaky Rectified Linear Unit (LeakyReLU) activation function is used within the dense layers to introduce non-linearity and improve learning. In contrast to conventional ReLU, LeakyReLU retains negative values by assigning them a small slope, as specified in Equation (16):

  $$\mathrm{LeakyReLU}(x) = \begin{cases} x, & x > 0 \\ a\,x, & x \le 0 \end{cases} \tag{16}$$

  where x is the input value and a is a small constant for the negative slope.

  The network culminates in a dense output layer that employs the softmax activation function. Softmax computes a probability for each class, yielding a distribution whose probabilities sum to one. The class with the highest probability is taken as the predicted class. The softmax function is shown in Equation (17):

  $$\mathrm{softmax}(y)_i = \frac{e^{y_i}}{\sum_{j=1}^{n} e^{y_j}} \tag{17}$$

  where y is the input vector, $y_i$ is the i-th element of the input vector, and n is the total number of classes.

  After the network is constructed, the model is trained on the dataset and then evaluated for classification accuracy on the test data. Table 3 shows the mathematical model of RefineNet-U. Table 4 presents a summary of the parameter values used in the encoder, bridge, decoder, flattening, and dense layers.
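The two activation functions used in the dense layers can be sketched directly from their descriptions. The default negative slope of 0.01 is an assumption (the text only states that a is a small constant), and the function names are chosen for illustration.

```python
import math


def leaky_relu(x, a=0.01):
    """Return x for positive inputs and a * x otherwise.

    Assumption: a = 0.01 by default; the text only calls it a small constant.
    """
    return x if x > 0 else a * x


def softmax(y):
    """Convert a vector of n scores into probabilities that sum to one."""
    # Subtracting the maximum avoids overflow without changing the result.
    top = max(y)
    exps = [math.exp(v - top) for v in y]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [1.0, 2.0, 0.5, -1.0, 3.0]  # one score per DR grade
    probabilities = softmax([leaky_relu(v) for v in logits])
    predicted_class = probabilities.index(max(probabilities))
    print(probabilities, predicted_class)
```

The predicted grade is simply the index of the largest probability, so softmax matters mainly for training and for reporting confidence, not for the final argmax decision.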

| Block Name | Layer Type | Count of Layers | Parameter | Value |
|---|---|---|---|---|
| Encoder Block | 2D Convolution Layer | 2 per block (4 blocks) | Filters | 64, 128, 256, 512 |
| | | | Kernel size | 3 × 3 |
| | | | Activation | ReLU |
| | Max Pooling Layer | 1 per block (4 blocks) | Pool size | 2 × 2 |
| | | | Stride | 2 |
| | Dropout Layer | 1 (after the last block) | Dropout rate | 0.5 |
| Bridge Block | 2D Convolution Layer | 2 | Filters | 1024 |
| | | | Kernel size | 3 × 3 |
| | | | Activation | ReLU |
| | | | Padding | same |
| Decoder Block | Transposed 2D Convolution Layer | 1 per block (4 blocks) | Filters | 512, 256, 128, 64 |
| | | | Kernel size | 2 × 2 |
| | | | Activation | ReLU |
| | 2D Convolution Layer | 2 per block (4 blocks) | Filters | 512, 256, 128, 64 |
| | | | Kernel size | 3 × 3 |
| | | | Activation | ReLU |
| | Concatenation Layer | 1 per block (4 blocks) | - | N/A |
| Flattening Layer | Flattening Layer | 1 | - | N/A |
| Dense Block | Dense Layer | 2 | Neurons | 128, 5 |
| | | | Activation (Dense 1) | ReLU |
| | | | Activation (Dense 2) | Softmax |
| General | Batch Normalization Layer | Applied after convolution layers | - | N/A |
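The feature-map sizes quoted in the encoder, bridge, and decoder descriptions follow from the filter counts in the table above. The bookkeeping can be sketched as below; this traces shapes only and assumes 'same' padding in every convolution, 2 × 2 pooling, and stride-2 transposed convolutions. The function name `trace_shapes` is hypothetical, and the mapping from the last decoder output to the final output layer is not modeled.

```python
def trace_shapes(size=448, encoder_filters=(64, 128, 256, 512),
                 bridge_filters=1024):
    """List (stage, height, width, channels) through a U-Net-style network.

    Assumptions: convolutions keep the spatial size, each pooling step
    halves it, and each transposed convolution doubles it.
    """
    shapes = []
    for filters in encoder_filters:
        shapes.append(("encoder conv", size, size, filters))
        size //= 2  # 2 x 2 max pooling with stride 2
        shapes.append(("encoder pool", size, size, filters))

    shapes.append(("bridge", size, size, bridge_filters))

    for filters in reversed(encoder_filters):
        size *= 2  # transposed convolution with stride 2
        shapes.append(("decoder upsample", size, size, filters))
        # The skip connection concatenates the matching encoder output.
        shapes.append(("decoder concat", size, size, 2 * filters))
        shapes.append(("decoder conv", size, size, filters))
    return shapes


if __name__ == "__main__":
    for stage, height, width, channels in trace_shapes():
        print(f"{stage:<17} {height} x {width} x {channels}")
```

With the default arguments, the trace reproduces the 28 × 28 × 512 encoder output, the 28 × 28 × 1024 bridge output, and the 56 × 56 × 1024 map formed by the first skip concatenation.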

| Stage | Equation | Notations |
|---|---|---|
| Input | | Input fundus image with height H, width W, and C channels, with real-valued entries |
| Encoder | | Two convolutions, each followed by ReLU activation, at every level l: intermediate activations, then the encoder feature map; ∗ denotes the convolution operation, applied with learnable weights and biases |
| Pooling | | Reduces the spatial resolution of the encoder feature map |
| Bottleneck | | b: bottleneck feature representation; L: last encoder layer index |
| Decoder (upsampling) | | Up enlarges the spatial resolution of the decoder feature map |
| Decoder (skip connection) | | Concatenates the upsampled decoder features with the matching encoder feature map |
| Decoder (convolutions) | | Two convolutions + ReLU: intermediate activations, then the refined decoder feature map |
| Output | | Final output: per-pixel logits computed with learnable weights and biases; softmax gives the probability of class c at each pixel; the classes are the DR severity grades |
| Prediction | | Each pixel is assigned to the most probable class from the predicted probabilities |
| Loss Function | | Categorical cross-entropy: compares the predicted probabilities with the ground-truth one-hot label at each pixel; the result is the total training loss |
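The categorical cross-entropy named in the loss row can be sketched as follows. This is an illustration under assumptions: labels are given as class indices rather than one-hot vectors, the loss is averaged (not summed) over items, and the function name and the clipping constant `eps` are hypothetical.

```python
import math


def categorical_cross_entropy(probabilities, labels, eps=1e-12):
    """Mean negative log-probability assigned to the true class.

    probabilities: list of per-item probability vectors (e.g. softmax output).
    labels: list of integer class indices, one per item.
    Assumption: the loss is averaged over items; eps guards against log(0).
    """
    if len(probabilities) != len(labels):
        raise ValueError("need exactly one label per probability vector")
    total = 0.0
    for probs, label in zip(probabilities, labels):
        total += -math.log(max(probs[label], eps))
    return total / len(labels)


if __name__ == "__main__":
    predicted = [
        [0.7, 0.1, 0.1, 0.05, 0.05],  # confident and correct
        [0.2, 0.2, 0.2, 0.2, 0.2],    # uniform over five DR grades
    ]
    print(categorical_cross_entropy(predicted, [0, 3]))
```

A one-hot label selects a single term of the sum, which is why indexing by the true class is equivalent to the one-hot formulation.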

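| Parameter Name | Definition | Formula |
|---|---|---|
| Accuracy (Acc) | Measures the proportion of correctly predicted cases among all cases. | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Precision (Prc) | Proportion of correctly predicted DR cases among all predicted DR cases. | $\frac{TP}{TP + FP}$ |
| F1-Score (F1) | Harmonic mean of precision and recall, balancing both metrics. | $\frac{2 \cdot Prc \cdot Rcl}{Prc + Rcl}$ |
| Recall (Rcl) | Proportion of accurately predicted DR cases among actual DR cases. | $\frac{TP}{TP + FN}$ |
| Specificity (Spc) | Measures the model’s ability to correctly classify non-DR cases. | $\frac{TN}{TN + FP}$ |
| Mean Squared Error (MSE) | Measures the average squared difference between original and processed image intensities. | $\frac{1}{PQ}\sum_{i=1}^{P}\sum_{j=1}^{Q}\left(I(i,j) - \hat{I}(i,j)\right)^2$ |
| Peak Signal-to-Noise Ratio (PSNR) | Evaluates the ratio of peak signal power to noise power in an image. | $10 \log_{10}\left(\frac{MAX^2}{MSE}\right)$ |
| Structural Similarity Index Measure (SSIM) | Assesses image similarity based on structure, brightness, and contrast. | $\frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$ |

Here TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives; $I$ and $\hat{I}$ are the original and processed images of size P × Q; MAX is the maximum possible pixel value; $\mu$, $\sigma^2$, and $\sigma_{xy}$ are the means, variances, and covariance of the two images x and y; and $C_1$, $C_2$ are small stabilizing constants.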
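The classification metrics and PSNR in the table above can be computed from a handful of counts. The sketch below uses the standard textbook definitions; the function names are hypothetical, the counts are treated as binary (for the five DR grades this would be applied per class in a one-vs-rest fashion, which is an assumption), and a peak value of 255 is assumed for 8-bit images.

```python
import math


def _ratio(numerator, denominator):
    """Divide safely, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, specificity, and F1 from confusion counts."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": _ratio(tn, tn + fp),
        "f1": _ratio(2 * precision * recall, precision + recall),
    }


def psnr(mse, max_value=255.0):
    """Peak signal-to-noise ratio in decibels for a given mean squared error."""
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    print(classification_metrics(tp=40, tn=50, fp=5, fn=5))
    print(psnr(mse=1.0))
```

Reporting specificity alongside recall matters in screening settings, because a model can reach high recall simply by over-predicting disease.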

4. Results and Discussion

4.1. Experimental Setup

4.2. Data Acquisition

4.2.1. APTOS-2019 Dataset (Dataset I)

4.2.2. Messidor-2 Dataset (Dataset II)

4.2.3. DDR Dataset (Dataset III)

4.3. Experimental Results

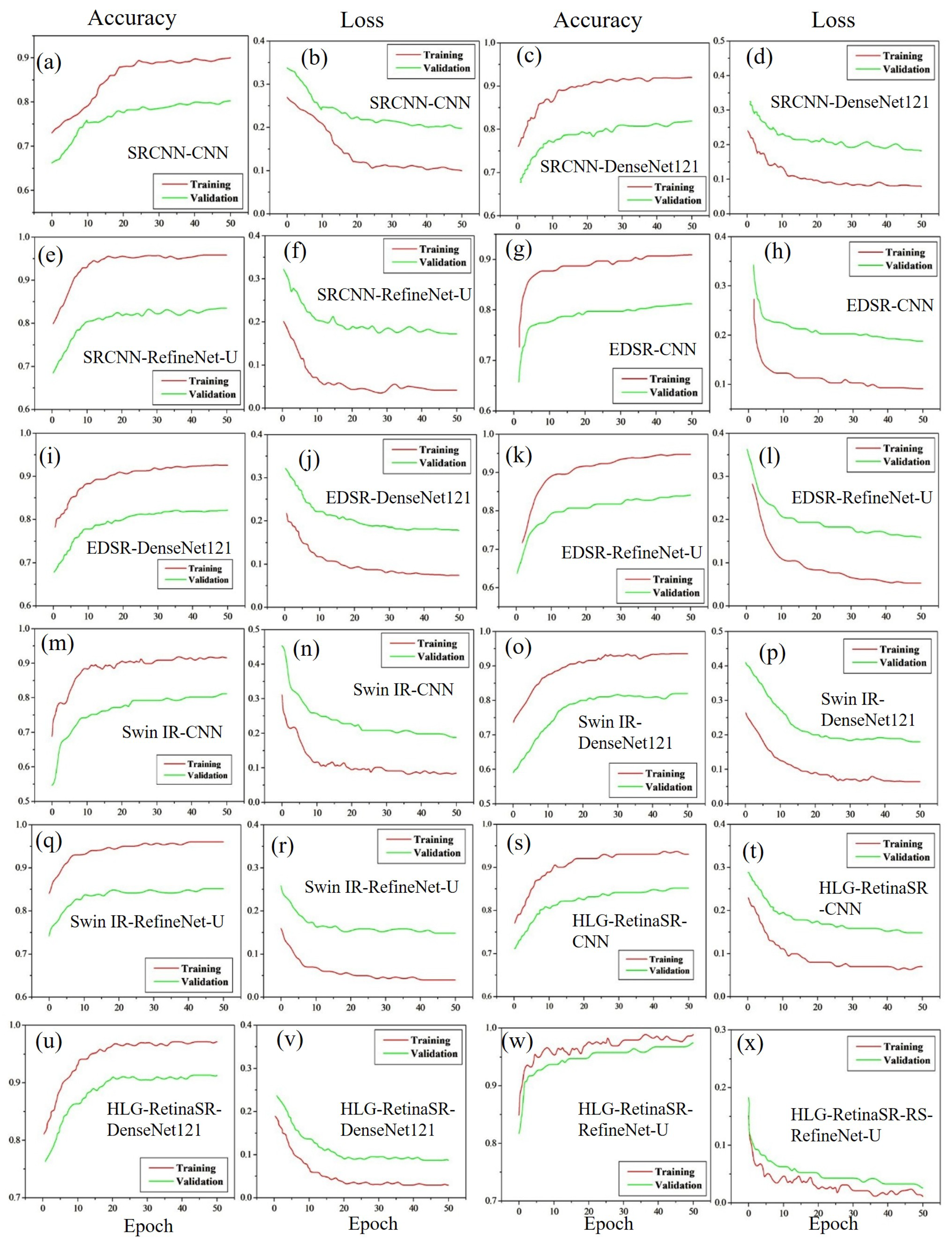

4.3.1. Accuracy and Loss Using Dataset I

4.3.2. Accuracy and Loss Using Dataset II

4.3.3. Accuracy and Loss Using Dataset III

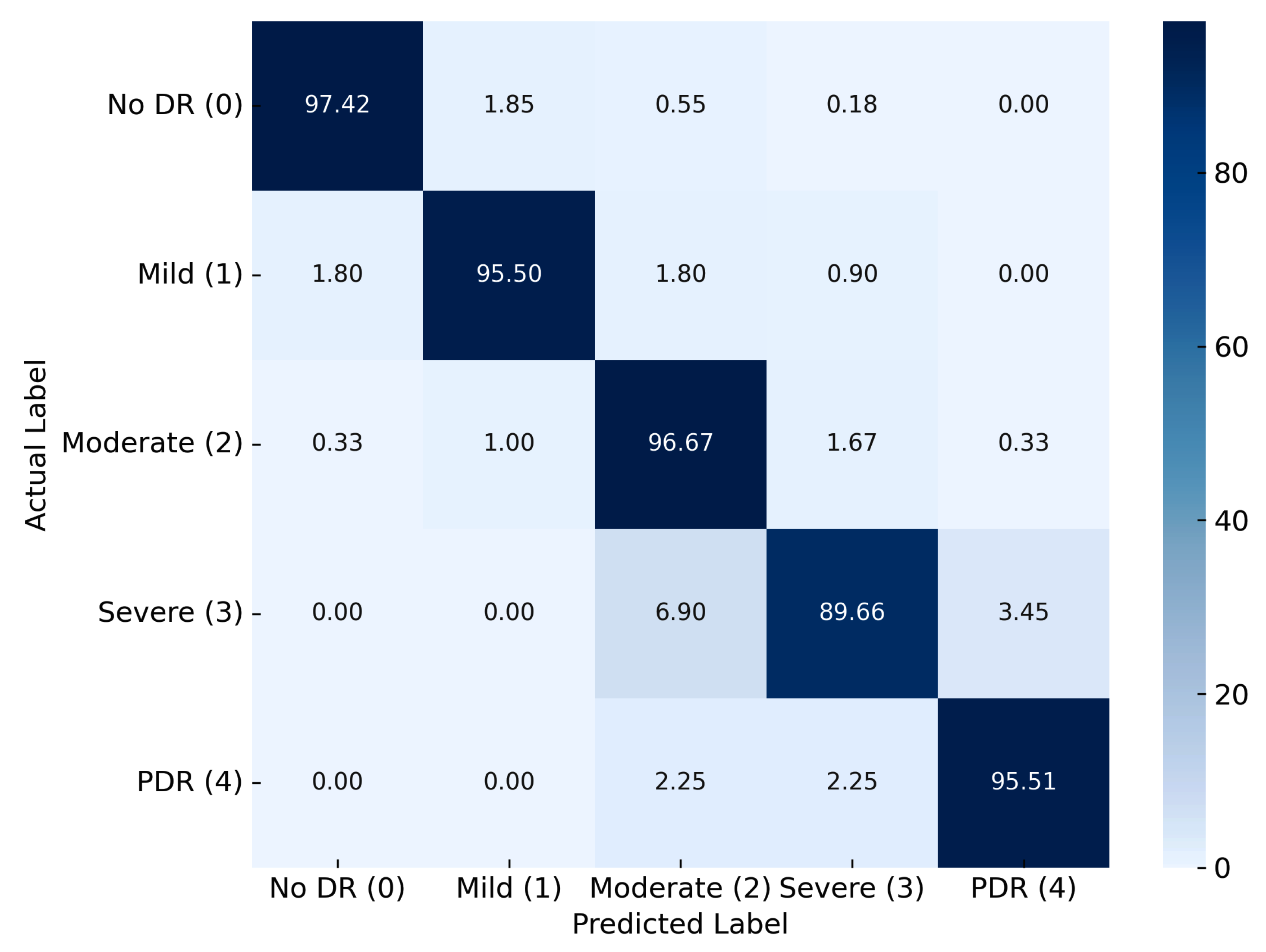

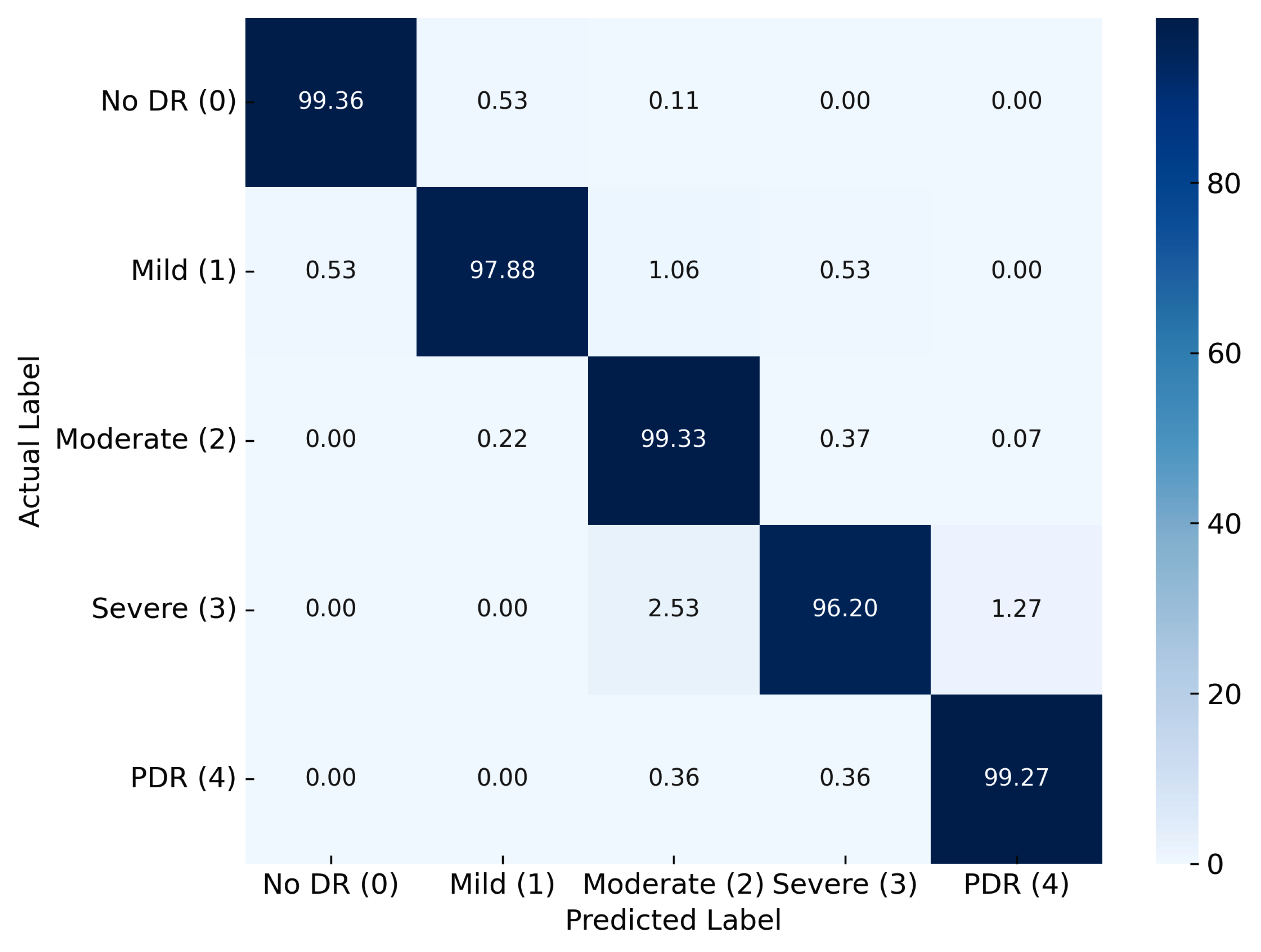

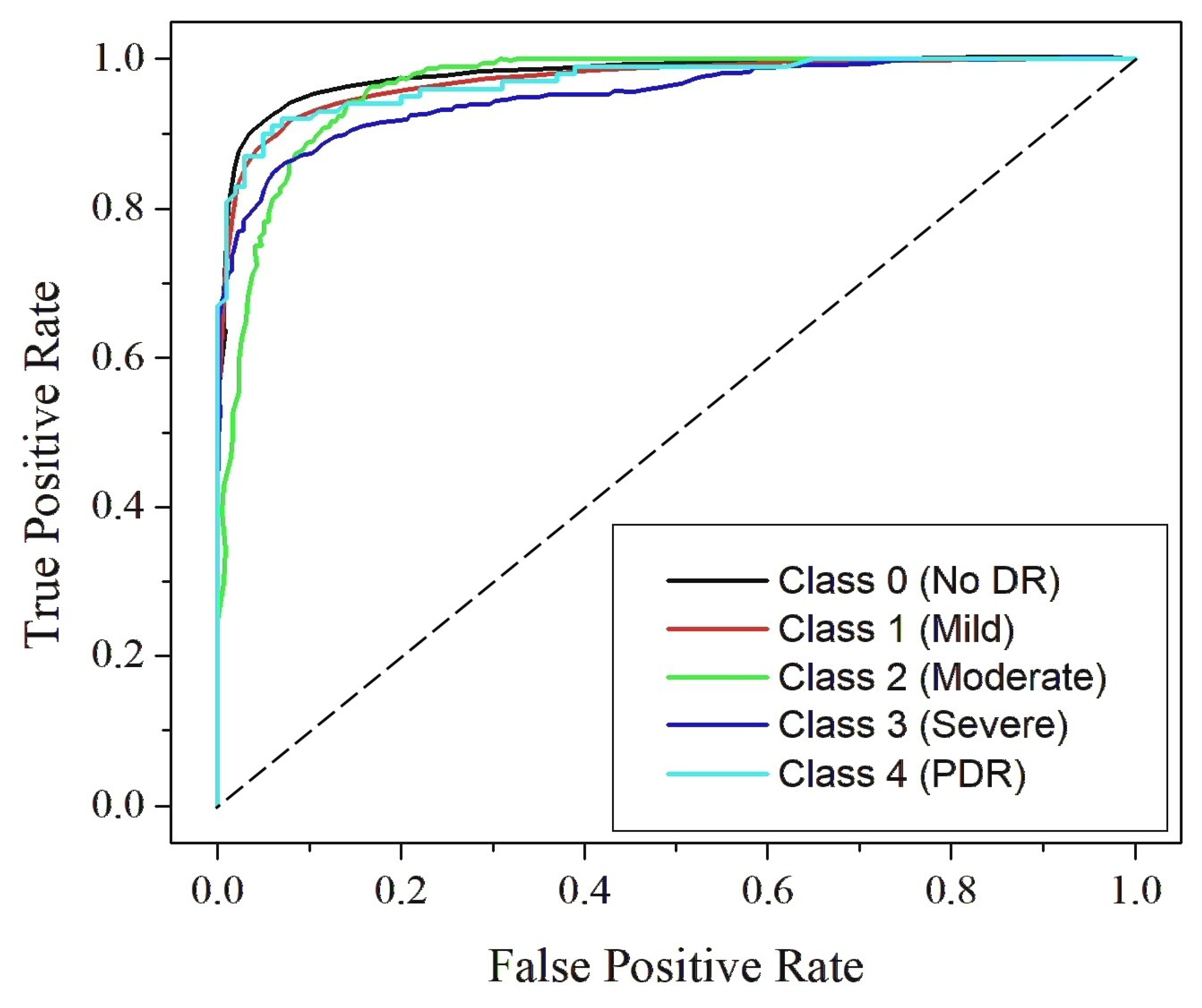

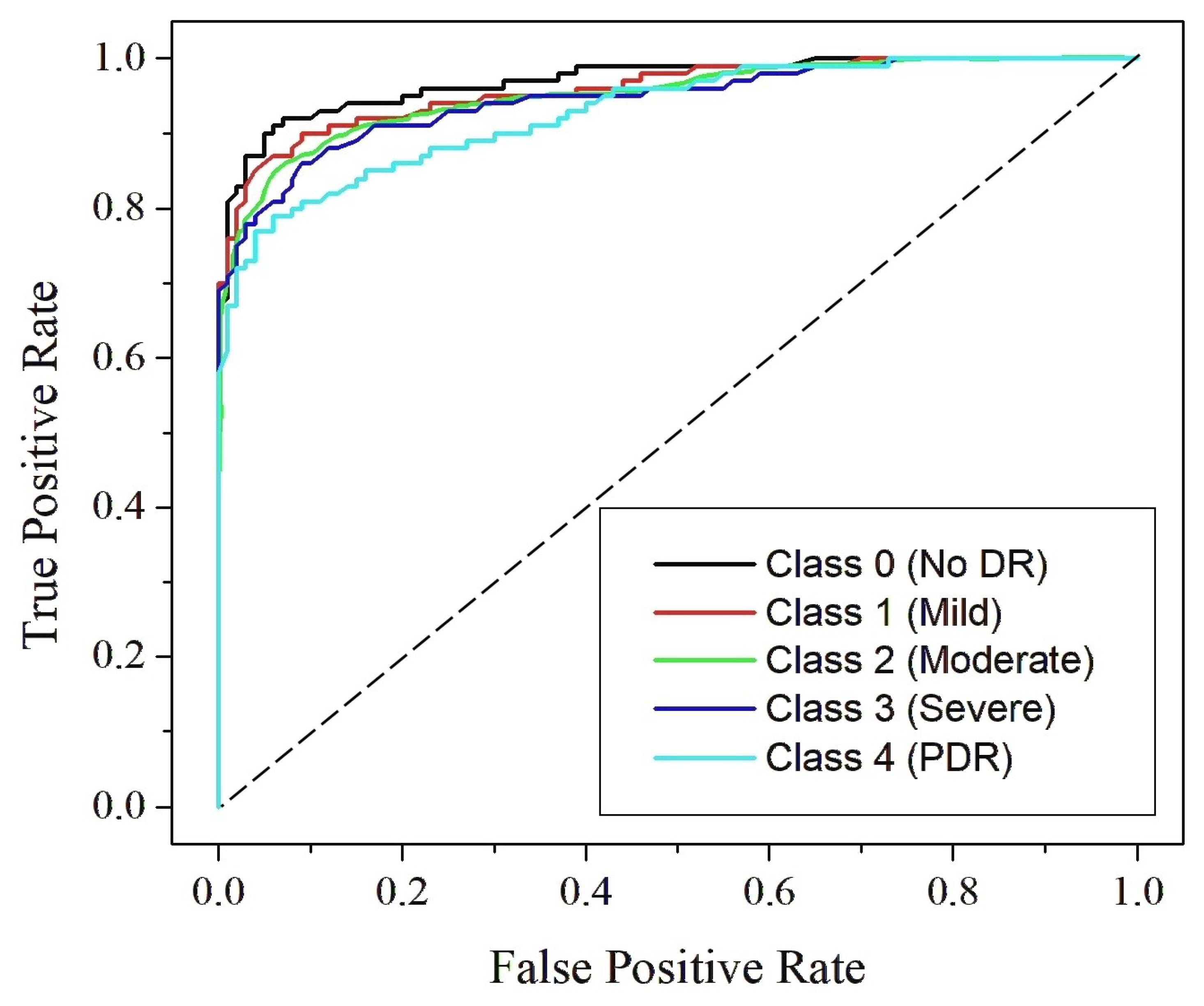

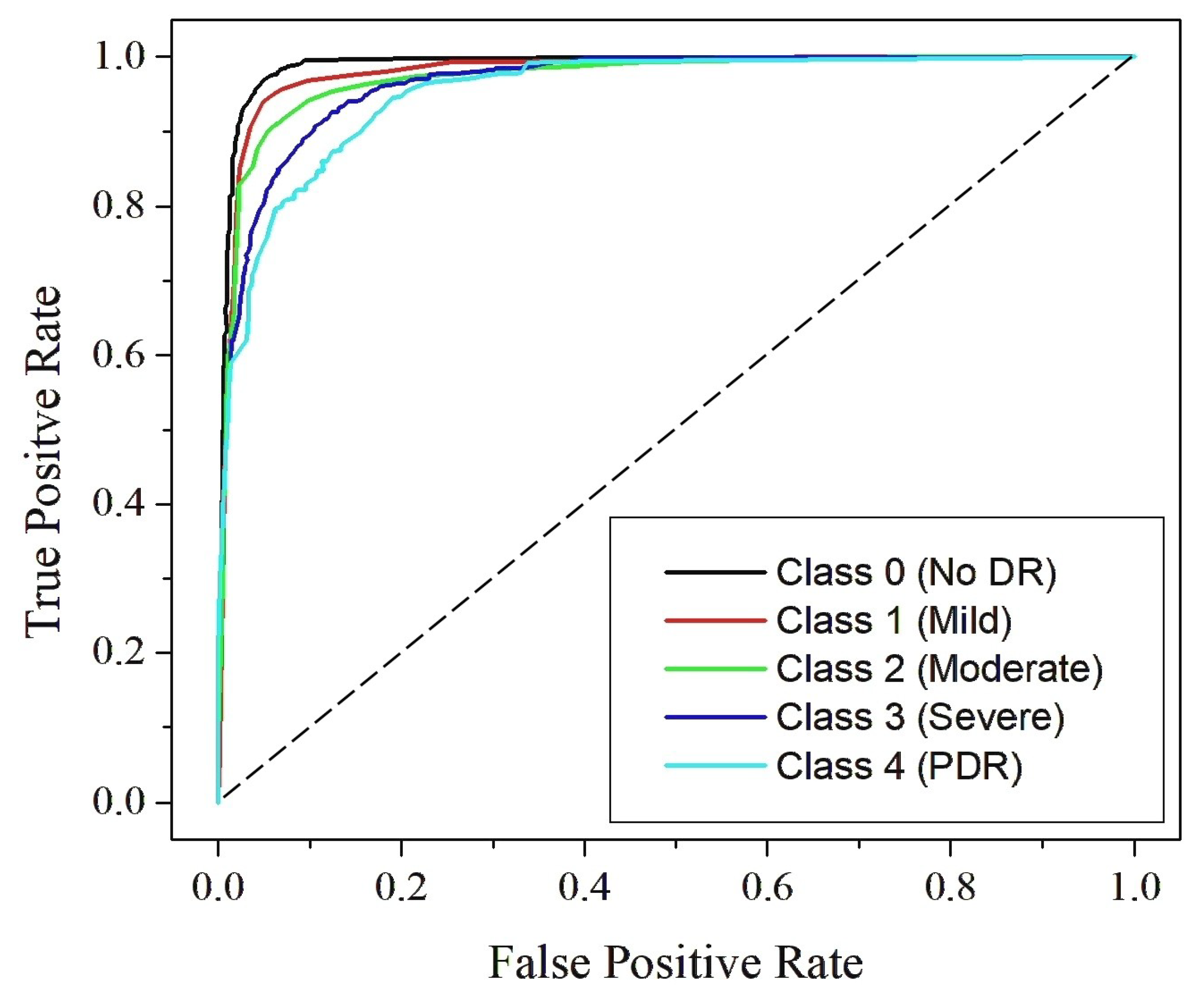

4.4. Classification Analysis

4.5. Comparison with State-of-the-Art Techniques

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Alwakid, G.; Gouda, W.; Humayun, M.; Jhanjhi, N.Z. Deep learning-enhanced diabetic retinopathy image classification. Digit. Health 2023, 9, 20552076231194942. [Google Scholar] [CrossRef]

- Fong, D.S.; Aiello, L.P.; Ferris, F.L., III; Klein, R. Diabetic retinopathy. Diabetes Care 2004, 27, 2540–2553. [Google Scholar] [CrossRef]

- Bhimavarapu, U.; Battineni, G. Deep learning for the detection and classification of diabetic retinopathy with an improved activation function. Healthcare 2022, 11, 97. [Google Scholar] [CrossRef] [PubMed]

- Ganesan, K.; Martis, R.J.; Acharya, U.R.; Chua, C.K.; Min, L.C.; Ng, E.; Laude, A. Computer-aided diabetic retinopathy detection using trace transforms on digital fundus images. Med. Biol. Eng. Comput. 2014, 52, 663–672. [Google Scholar] [CrossRef] [PubMed]

- Wilkinson, C.P.; Ferris, F.L., III; Klein, R.E.; Lee, P.P.; Agardh, C.D.; Davis, M.; Dills, D.; Kampik, A.; Pararajasegaram, R.; Verdaguer, J.T.; et al. Proposed international clinical diabetic retinopathy and diabetic macular edema disease severity scales. Ophthalmology 2003, 110, 1677–1682. [Google Scholar] [CrossRef]

- Stitt, A.W.; Curtis, T.M.; Chen, M.; Medina, R.J.; McKay, G.J.; Jenkins, A.; Gardiner, T.A.; Lyons, T.J.; Hammes, H.P.; Simo, R.; et al. The progress in understanding and treatment of diabetic retinopathy. Prog. Retin. Eye Res. 2016, 51, 156–186. [Google Scholar] [CrossRef] [PubMed]

- Jacoba, C.M.P.; Doan, D.; Salongcay, R.P.; Aquino, L.A.C.; Silva, J.P.Y.; Salva, C.M.G.; Zhang, D.; Alog, G.P.; Zhang, K.; Locaylocay, K.L.R.B.; et al. Performance of automated machine learning for diabetic retinopathy image classification from multi-field handheld retinal images. Ophthalmol. Retin. 2023, 7, 703–712. [Google Scholar] [CrossRef]

- Zhang, Z.; Deng, C.; Paulus, Y.M. Advances in structural and functional retinal imaging and biomarkers for early detection of diabetic retinopathy. Biomedicines 2024, 12, 1405. [Google Scholar] [CrossRef]

- Bellemo, V.; Lim, Z.W.; Lim, G.; Nguyen, Q.D.; Xie, Y.; Yip, M.Y.; Hamzah, H.; Ho, J.; Lee, X.Q.; Hsu, W.; et al. Artificial intelligence using deep learning to screen for referable and vision-threatening diabetic retinopathy in Africa: A clinical validation study. Lancet Digit. Health 2019, 1, e35–e44. [Google Scholar] [CrossRef]

- Alyoubi, W.L.; Shalash, W.M.; Abulkhair, M.F. Diabetic retinopathy detection through deep learning techniques: A review. Inform. Med. Unlocked 2020, 20, 100377. [Google Scholar] [CrossRef]

- Dai, L.; Sheng, B.; Chen, T.; Wu, Q.; Liu, R.; Cai, C.; Wu, L.; Yang, D.; Hamzah, H.; Liu, Y.; et al. A deep learning system for predicting time to progression of diabetic retinopathy. Nat. Med. 2024, 30, 584–594. [Google Scholar] [CrossRef]

- Gadekallu, T.R.; Khare, N.; Bhattacharya, S.; Singh, S.; Maddikunta, P.K.R.; Srivastava, G. Deep neural networks to predict diabetic retinopathy. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 5407–5420. [Google Scholar] [CrossRef]

- Martínez-Río, J.; Carmona, E.J.; Cancelas, D.; Novo, J.; Ortega, M. Deformable registration of multimodal retinal images using a weakly supervised deep learning approach. Neural Comput. Appl. 2023, 35, 14779–14797. [Google Scholar] [CrossRef]

- Ohri, K.; Kumar, M.; Sukheja, D. Self-supervised approach for diabetic retinopathy severity detection using vision transformer. Prog. Artif. Intell. 2024, 13, 165–183. [Google Scholar] [CrossRef]

- Singh, N.; Kaur, L.; Singh, K. Histogram equalization techniques for enhancement of low radiance retinal images for early detection of diabetic retinopathy. Eng. Sci. Technol. Int. J. 2019, 22, 736–745. [Google Scholar] [CrossRef]

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368. [Google Scholar] [CrossRef]

- Reza, A.M. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44. [Google Scholar] [CrossRef]

- Wang, Y.; Chen, Q.; Zhang, B. Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Trans. Consum. Electron. 1999, 45, 68–75. [Google Scholar] [CrossRef]

- Wang, Q.; Ward, R.K. Fast image/video contrast enhancement based on weighted thresholded histogram equalization. IEEE Trans. Consum. Electron. 2007, 53, 757–764. [Google Scholar] [CrossRef]

- Sowmya, V.; Govind, D.; Soman, K. Significance of incorporating chrominance information for effective color-to-grayscale image conversion. Signal Image Video Process. 2017, 11, 129–136. [Google Scholar] [CrossRef]

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Keller, J.M.; Gray, M.R.; Givens, J.A. A fuzzy k-nearest neighbor algorithm. IEEE Trans. Syst. Man. Cybern. 1985, SMC-15, 580–585. [Google Scholar] [CrossRef]

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106. [Google Scholar] [CrossRef]

- Zhang, H.; Su, J. Naive bayesian classifiers for ranking. In Proceedings of the 15th European Conference on Machine Learning, Pisa, Italy, 20–24 September 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 501–512. [Google Scholar]

- Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459. [Google Scholar] [CrossRef]

- Shome, S.K.; Vadali, S.R.K. Enhancement of diabetic retinopathy imagery using contrast limited adaptive histogram equalization. Int. J. Comput. Sci. Inf. Technol. 2011, 2, 2694–2699. [Google Scholar]

- Liu, J.; Zhou, X.; Wan, Z.; Yang, X.; He, W.; He, R.; Lin, Y. Multi-scale FPGA-based infrared image enhancement by using RGF and CLAHE. Sensors 2023, 23, 8101. [Google Scholar] [CrossRef]

- Raj, A.; Shah, N.A.; Tiwari, A.K. A novel approach for fundus image enhancement. Biomed. Signal Process. Control 2022, 71, 103208. [Google Scholar] [CrossRef]

- You, C.; Li, G.; Zhang, Y.; Zhang, X.; Shan, H.; Li, M.; Ju, S.; Zhao, Z.; Zhang, Z.; Cong, W.; et al. CT super-resolution GAN constrained by the identical, residual, and cycle learning ensemble (GAN-CIRCLE). IEEE Trans. Med. Imaging 2019, 39, 188–203. [Google Scholar] [CrossRef]

- Raj, A.; Tiwari, A.K.; Martini, M.G. Fundus image quality assessment: Survey, challenges, and future scope. IET Image Process. 2019, 13, 1211–1224. [Google Scholar] [CrossRef]

- Gayathri, S.; Gopi, V.P.; Palanisamy, P. Automated classification of diabetic retinopathy through reliable feature selection. Phys. Eng. Sci. Med. 2020, 43, 927–945. [Google Scholar] [CrossRef]

- Hasan, M.A.; Bhargav, T.; Sandeep, V.; Reddy, V.S.; Ajay, R. Image classification using convolutional neural networks. Int. J. Mech. Eng. Res. Technol. 2024, 16, 173–181. [Google Scholar]

- Ikram, A.; Imran, A. ResViT FusionNet Model: An explainable AI-driven approach for automated grading of diabetic retinopathy in retinal images. Comput. Biol. Med. 2025, 186, 109656. [Google Scholar] [CrossRef]

- Shamrat, F.J.M.; Shakil, R.; Sharmin; Hoque ovy, N.; Akter, B.; Ahmed, M.Z.; Ahmed, K.; Bui, F.M.; Moni, M.A. An advanced deep neural network for fundus image analysis and enhancing diabetic retinopathy detection. Healthc. Anal. 2024, 5, 100303. [Google Scholar] [CrossRef]

- Venkaiahppalaswamy, B.; Reddy, P.P.; Batha, S. Hybrid deep learning approaches for the detection of diabetic retinopathy using optimized wavelet based model. Biomed. Signal Process. Control 2023, 79, 104146. [Google Scholar] [CrossRef]

- Rahman, A.; Youldash, M.; Alshammari, G.; Sebiany, A.; Alzayat, J.; Alsayed, M.; Alqahtani, M.; Aljishi, N. Diabetic Retinopathy Detection: A Hybrid Intelligent Approach. Comput. Mater. Contin. 2024, 80, 4561–4576. [Google Scholar] [CrossRef]

- Navaneethan, R.; Devarajan, H. Enhancing diabetic retinopathy detection through preprocessing and feature extraction with MGA-CSG algorithm. Expert Syst. Appl. 2024, 249, 123418. [Google Scholar] [CrossRef]

- Shahzad, T.; Saleem, M.; Farooq, M.S.; Abbas, S.; Khan, M.A.; Ouahada, K. Developing a transparent diagnosis model for diabetic retinopathy using explainable AI. IEEE Access 2024, 12, 149700–149709. [Google Scholar] [CrossRef]

- Al-Antary, M.T.; Arafa, Y. Multi-scale attention network for diabetic retinopathy classification. IEEE Access 2021, 9, 54190–54200. [Google Scholar] [CrossRef]

- Minarno, A.E.; Mandiri, M.H.C.; Azhar, Y.; Bimantoro, F.; Nugroho, H.A.; Ibrahim, Z. Classification of diabetic retinopathy disease using convolutional neural network. JOIV Int. J. Inform. Vis. 2022, 6, 12–18. [Google Scholar] [CrossRef]

- Minarno, A.E.; Fadhlan, M.; Munarko, Y.; Chandranegara, D.R. Classification of Dermoscopic Images Using CNN-SVM. JOIV Int. J. Inform. Vis. 2024, 8, 606–612. [Google Scholar] [CrossRef]

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76. [Google Scholar] [CrossRef]

- Yuan, D.; Liu, Y.; Xu, Z.; Zhan, Y.; Chen, J.; Lukasiewicz, T. Painless and accurate medical image analysis using deep reinforcement learning with task-oriented homogenized automatic pre-processing. Comput. Biol. Med. 2023, 153, 106487. [Google Scholar] [CrossRef] [PubMed]

- Salvi, M.; Acharya, U.R.; Molinari, F.; Meiburger, K.M. The impact of pre-and post-image processing techniques on deep learning frameworks: A comprehensive review for digital pathology image analysis. Comput. Biol. Med. 2021, 128, 104129. [Google Scholar] [CrossRef] [PubMed]

- Ningsih, D.R. Improving retinal image quality using the contrast stretching, histogram equalization, and CLAHE methods with median filters. Int. J. Image, Graph. Signal Process. 2020, 14, 30. [Google Scholar] [CrossRef]

- Goceri, E. Evaluation of denoising techniques to remove speckle and Gaussian noise from dermoscopy images. Comput. Biol. Med. 2023, 152, 106474. [Google Scholar] [CrossRef]

- Nahiduzzaman, M.; Islam, M.R.; Islam, S.R.; Goni, M.O.F.; Anower, M.S.; Kwak, K.S. Hybrid CNN-SVD based prominent feature extraction and selection for grading diabetic retinopathy using extreme learning machine algorithm. IEEE Access 2021, 9, 152261–152274. [Google Scholar] [CrossRef]

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 391–407.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Howard, L.J.; Subramanian, A.; Hoteit, I. A machine learning augmented data assimilation method for high-resolution observations. J. Adv. Model. Earth Syst. 2024, 16, e2023MS003774.

- Zhu, J.; Fang, L.; Ghamisi, P. Deformable convolutional neural networks for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1254–1258.

- Wang, Y.; Yang, J.; Wang, L.; Ying, X.; Wu, T.; An, W.; Guo, Y. Light field image super-resolution using deformable convolution. IEEE Trans. Image Process. 2020, 30, 1057–1071.

- Kabbai, L.; Abdellaoui, M.; Douik, A. Image classification by combining local and global features. Vis. Comput. 2019, 35, 679–693.

- Aitken, A.; Ledig, C.; Theis, L.; Caballero, J.; Wang, Z.; Shi, W. Checkerboard artifact free sub-pixel convolution: A note on sub-pixel convolution, resize convolution and convolution resize. arXiv 2017, arXiv:1707.02937.

- Gulati, S.; Guleria, K.; Goyal, N. Classification of diabetic retinopathy using pre-trained deep learning model-DenseNet 121. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; pp. 1–6.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Kaggle. APTOS 2019 Blindness Detection. 2019. Available online: https://www.kaggle.com/competitions/aptos2019-blindness-detection (accessed on 7 April 2025).

- MESSIDOR-2: Diabetic Retinopathy Images. 2014. Available online: http://www.adcis.net/en/third-party/messidor2/ (accessed on 7 April 2025).

- DDR: Diabetic Retinopathy Dataset. 2019. Available online: https://www.kaggle.com/datasets/mariaherrerot/ddrdataset (accessed on 7 April 2025).

- Islam, M.R.; Abdulrazak, L.F.; Nahiduzzaman, M.; Goni, M.O.F.; Anower, M.S.; Ahsan, M.; Haider, J.; Kowalski, M. Applying supervised contrastive learning for the detection of diabetic retinopathy and its severity levels from fundus images. Comput. Biol. Med. 2022, 146, 105602.

- Guo, X.; Li, X.; Lin, Q.; Li, G.; Hu, X.; Che, S. Joint grading of diabetic retinopathy and diabetic macular edema using an adaptive attention block and semisupervised learning. Appl. Intell. 2023, 53, 16797–16812.

- Bodapati, J.D.; Balaji, B.B. Self-adaptive stacking ensemble approach with attention based deep neural network models for diabetic retinopathy severity prediction. Multimed. Tools Appl. 2024, 83, 1083–1102.

- Oulhadj, M.; Riffi, J.; Khodriss, C.; Mahraz, A.M.; Bennis, A.; Yahyaouy, A.; Chraibi, F.; Abdellaoui, M.; Andaloussi, I.B.; Tairi, H. Diabetic retinopathy prediction based on wavelet decomposition and modified capsule network. J. Digit. Imaging 2023, 36, 1739–1751.

- Han, Z.; Yang, B.; Deng, S.; Li, Z.; Tong, Z. Category weighted network and relation weighted label for diabetic retinopathy screening. Comput. Biol. Med. 2023, 152, 106408.

- He, A.; Li, T.; Li, N.; Wang, K.; Fu, H. CABNet: Category attention block for imbalanced diabetic retinopathy grading. IEEE Trans. Med. Imaging 2020, 40, 143–153.

- Wang, X.; Xu, M.; Zhang, J.; Jiang, L.; Li, L. Deep multi-task learning for diabetic retinopathy grading in fundus images. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 2826–2834.

[Image comparison grid; panel columns: Original, SRCNN, EDSR, Swin IR, HLG-RetinaSR]

| Dataset No. | Dataset | Number of Images | Image Size | DR Severity Levels | Hospital/Institution |
|---|---|---|---|---|---|
| I | APTOS | 5590 | 224 × 224, 512 × 512 pixels | 5 | Asia Pacific Tele-Ophthalmology Society (APTOS), India |
| II | MESSIDOR | 1800 | 1440 × 960 pixels | 5 | INRIA (French National Institute for Research in Computer Science and Automation) |
| III | DDR | 13,673 | Multiple sizes | 5 | Institute of Automation, Chinese Academy of Sciences (CASIA), China |

| Enhancement Technique | Classifier | Train Acc. (%) | Test Acc. (%) | Recall | Precision (%) | F1-Score | Sensitivity | Specificity | AUC |
|---|---|---|---|---|---|---|---|---|---|
| SRCNN | CNN | 90.01 | 80.21 | 0.86 | 80.24 | 0.83 | 0.86 | 0.73 | 0.79 |
| SRCNN | DenseNet121 | 92.01 | 81.7 | 0.87 | 81.7 | 0.84 | 0.87 | 0.75 | 0.81 |
| SRCNN | RefineNet-U | 95.87 | 83.47 | 0.88 | 83.48 | 0.86 | 0.88 | 0.77 | 0.83 |
| EDSR | CNN | 90.89 | 81.21 | 0.87 | 81.24 | 0.84 | 0.87 | 0.74 | 0.8 |
| EDSR | DenseNet121 | 92.67 | 82.15 | 0.87 | 82.15 | 0.85 | 0.87 | 0.75 | 0.81 |
| EDSR | RefineNet-U | 94.74 | 84.1 | 0.89 | 84.11 | 0.86 | 0.89 | 0.78 | 0.83 |
| Swin IR | CNN | 91.88 | 81.16 | 0.87 | 81.19 | 0.84 | 0.87 | 0.74 | 0.8 |
| Swin IR | DenseNet121 | 93.87 | 81.97 | 0.87 | 81.98 | 0.85 | 0.87 | 0.75 | 0.81 |
| Swin IR | RefineNet-U | 96.03 | 85.16 | 0.9 | 85.16 | 0.87 | 0.9 | 0.79 | 0.84 |
| HLG-RetinaSR | CNN | 94.04 | 82.9 | 0.88 | 82.92 | 0.85 | 0.88 | 0.76 | 0.82 |
| HLG-RetinaSR | DenseNet121 | 97.1 | 91.32 | 0.94 | 91.35 | 0.93 | 0.94 | 0.88 | 0.91 |
| HLG-RetinaSR | RefineNet-U | 98.9 | 97.42 | 0.98 | 97.45 | 0.98 | 0.98 | 0.96 | 0.97 |
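
As a consistency check on the table above, the F1-Score column can be recomputed from the Precision and Recall columns using the standard harmonic-mean definition, F1 = 2PR / (P + R). The following is a minimal sketch, not the authors' evaluation code: the function name `f1_score` and the `rows` list are illustrative, and the inputs are the rounded HLG-RetinaSR values as printed in the table, so agreement is only expected to about two decimal places.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0  # convention: F1 is 0 when both inputs are 0
    return 2 * precision * recall / (precision + recall)


# (classifier, precision in %, recall, reported F1) copied from the
# HLG-RetinaSR rows of the table; values are already rounded in the source.
rows = [
    ("CNN", 82.92, 0.88, 0.85),
    ("DenseNet121", 91.35, 0.94, 0.93),
    ("RefineNet-U", 97.45, 0.98, 0.98),
]

# Recompute F1 for each row; precision is converted from percent to a fraction.
recomputed = [
    (name, f1_score(precision_pct / 100.0, recall), reported)
    for name, precision_pct, recall, reported in rows
]

for name, value, reported in recomputed:
    print(f"{name}: recomputed F1 = {value:.4f}, reported F1 = {reported}")
```

In this table the Recall and Sensitivity columns carry identical values in every row, which is expected because the two terms name the same quantity (true positives divided by all actual positives).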

| Enhancement Technique | Classifier | Train Acc. (%) | Test Acc. (%) | Recall | Precision (%) | F1-Score | Sensitivity | Specificity | AUC |
|---|---|---|---|---|---|---|---|---|---|
| SRCNN | CNN | 82.01 | 70.27 | 0.78 | 70.24 | 0.74 | 0.78 | 0.61 | 0.7 |
| SRCNN | DenseNet121 | 87.03 | 73.33 | 0.8 | 73.3 | 0.77 | 0.8 | 0.65 | 0.73 |
| SRCNN | RefineNet-U | 91.9 | 76.41 | 0.83 | 76.5 | 0.8 | 0.83 | 0.68 | 0.76 |
| EDSR | CNN | 87.04 | 71.87 | 0.79 | 71.91 | 0.75 | 0.79 | 0.63 | 0.71 |
| EDSR | DenseNet121 | 91.07 | 75.77 | 0.82 | 75.8 | 0.79 | 0.82 | 0.68 | 0.75 |
| EDSR | RefineNet-U | 93.1 | 76.99 | 0.83 | 77.05 | 0.8 | 0.83 | 0.69 | 0.76 |
| Swin IR | CNN | 88.06 | 74.53 | 0.81 | 74.55 | 0.78 | 0.81 | 0.66 | 0.74 |
| Swin IR | DenseNet121 | 92.09 | 77.98 | 0.84 | 78.03 | 0.81 | 0.84 | 0.7 | 0.77 |
| Swin IR | RefineNet-U | 94.05 | 82.22 | 0.87 | 82.2 | 0.85 | 0.87 | 0.75 | 0.81 |
| HLG-RetinaSR | CNN | 89.07 | 79.09 | 0.85 | 79.14 | 0.82 | 0.85 | 0.72 | 0.78 |
| HLG-RetinaSR | DenseNet121 | 94.06 | 82.03 | 0.87 | 82.06 | 0.85 | 0.87 | 0.75 | 0.81 |
| HLG-RetinaSR | RefineNet-U | 97.89 | 87.66 | 0.91 | 87.76 | 0.9 | 0.91 | 0.83 | 0.87 |

| Enhancement Technique | Classifier | Train Acc. (%) | Test Acc. (%) | Recall | Precision (%) | F1-Score | Sensitivity | Specificity | AUC |
|---|---|---|---|---|---|---|---|---|---|
| SRCNN | CNN | 91.77 | 83.17 | 0.88 | 83.18 | 0.86 | 0.88 | 0.77 | 0.82 |
| SRCNN | DenseNet121 | 93.66 | 83.66 | 0.88 | 83.66 | 0.86 | 0.88 | 0.77 | 0.83 |
| SRCNN | RefineNet-U | 96.53 | 84.14 | 0.89 | 84.14 | 0.86 | 0.89 | 0.78 | 0.83 |
| EDSR | CNN | 92.06 | 82.18 | 0.87 | 82.19 | 0.85 | 0.87 | 0.75 | 0.81 |
| EDSR | DenseNet121 | 96.05 | 87.4 | 0.91 | 87.41 | 0.89 | 0.91 | 0.82 | 0.87 |
| EDSR | RefineNet-U | 97.13 | 92.23 | 0.95 | 92.23 | 0.93 | 0.95 | 0.89 | 0.92 |
| Swin IR | CNN | 94.44 | 90.29 | 0.93 | 90.3 | 0.92 | 0.93 | 0.86 | 0.9 |
| Swin IR | DenseNet121 | 98.01 | 92.54 | 0.95 | 92.54 | 0.94 | 0.95 | 0.89 | 0.92 |
| Swin IR | RefineNet-U | 99.04 | 97.2 | 0.98 | 97.21 | 0.98 | 0.98 | 0.96 | 0.97 |
| HLG-RetinaSR | CNN | 96.08 | 85.11 | 0.9 | 85.12 | 0.87 | 0.9 | 0.79 | 0.84 |
| HLG-RetinaSR | DenseNet121 | 98.13 | 89.9 | 0.93 | 89.91 | 0.91 | 0.93 | 0.86 | 0.89 |
| HLG-RetinaSR | RefineNet-U | 99.87 | 99.11 | 0.99 | 99.11 | 0.99 | 0.99 | 0.99 | 0.99 |

| Dataset | Model | References | Accuracy (%) | Recall (%) | Precision (%) | F1-Score (%) | AUC (%) |
|---|---|---|---|---|---|---|---|
| APTOS-2019 | Xception and SCL | (Islam et al., 2022) [61] | 84.36 | 73.84 | 70.51 | 70.49 | 93.8 |
| APTOS-2019 | AABNet | (Guo et al., 2023) [62] | 86.02 | 75.57 | 67.87 | 71.51 | 86 |
| APTOS-2019 | Baseline with dual attention | (Bodapati & Balaji, 2024) [63] | 86.22 | 72.23 | 80.61 | 75.41 | 96.5 |
| APTOS-2019 | Capsule network and Inception | (Oulhadj et al., 2023) [64] | 86.54 | 87 | 86 | 86 | – |
| APTOS-2019 | CWN | (Han et al., 2023) [65] | 86.12 | – | – | – | – |
| APTOS-2019 | Proposed Model | RefineNet-U | 97.42 | 98.04 | 97.45 | 97.97 | 97.1 |
| DDR | DenseNet-121 with CABNet | (He et al., 2021) [66] | 78.98 | – | – | – | – |
| DDR | AABNet | (Guo et al., 2023) [62] | 77.15 | 58.77 | 63.76 | 59.54 | 82.2 |
| DDR | DeepMT-DR | (Wang et al., 2021) [67] | 83.6 | 83.1 | – | 83 | – |
| DDR | Proposed Model | RefineNet-U | 99.11 | 99.19 | 99.17 | 99.12 | 99 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Singh, A.; Jain, S.; Arora, V. A Multi-Model Image Enhancement and Tailored U-Net Architecture for Robust Diabetic Retinopathy Grading. Diagnostics 2025, 15, 2355. https://doi.org/10.3390/diagnostics15182355
