Governing Artificial Intelligence in Radiology: A Systematic Review of Ethical, Legal, and Regulatory Frameworks

Abstract

1. Introduction

2. Methods

2.1. Reporting Standards

2.2. Protocol and Registration

2.3. Search Strategy

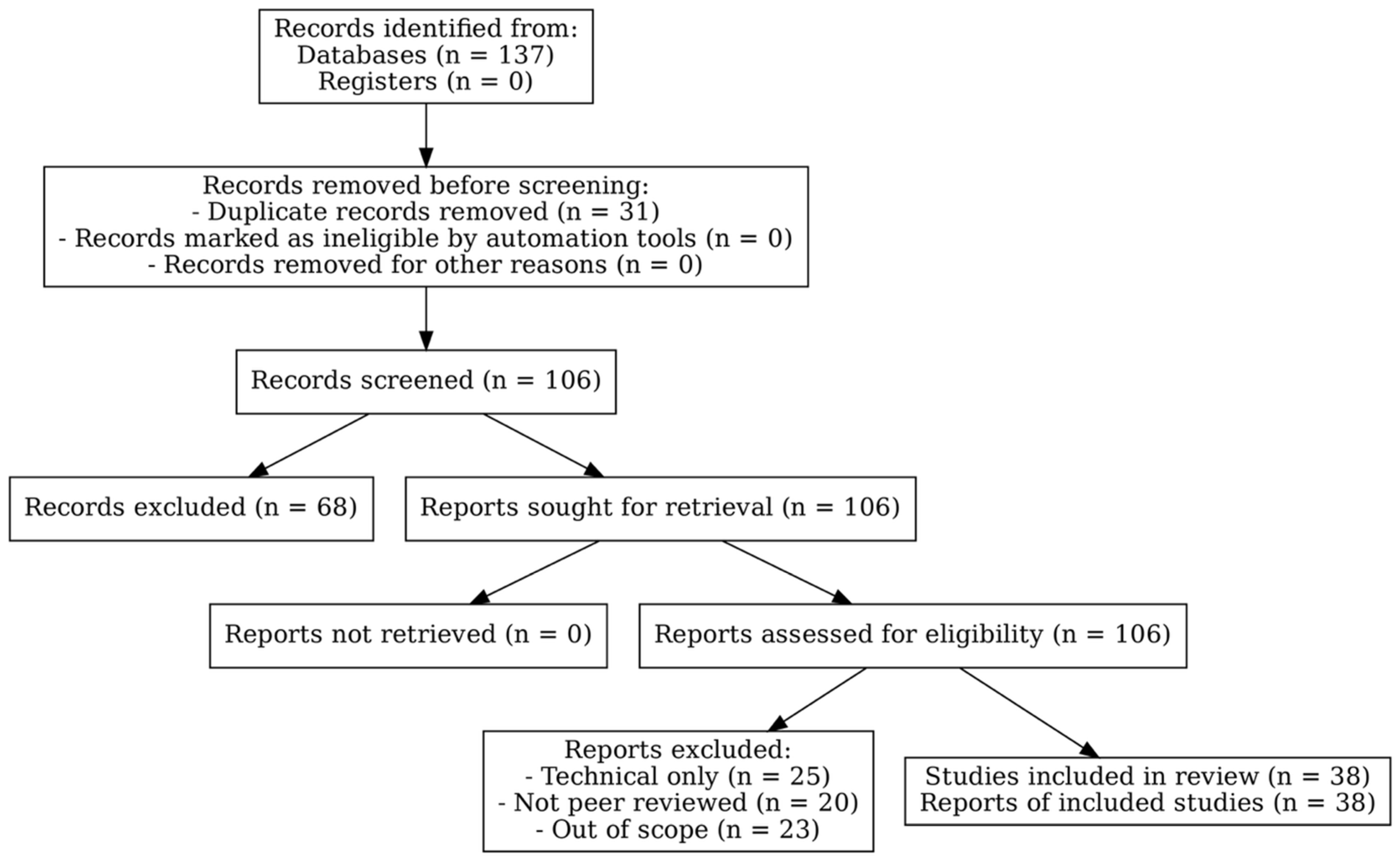

2.4. Study Selection and Inclusion Criteria

2.5. Data Extraction and Content Analysis

- Ethical concerns highlighted within the literature, such as data privacy, trust, accountability, biases, and patient autonomy.

- Legal aspects, including responsibilities concerning AI implementation and the implications of existing regulations.

- Recommendations for regulatory frameworks and governance structures that support ethical AI integration in health systems.

2.6. Synthesis of Findings

- The current state of ethical considerations in AI applications, emphasizing the ongoing challenges that healthcare professionals face in aligning AI technologies with ethical standards.

- An assessment of existing legal frameworks and any potential reforms necessary for better governance of AI technologies.

- The identification of best practices for regulatory oversight of AI in radiology, addressing both local and global concerns about implementation and monitoring.

3. Results

3.1. Ethical Considerations in AI-Driven Radiology

3.2. Legal Considerations and Liability

3.3. Regulatory Frameworks and Governance Models

3.3.1. European Union: GDPR and AI Act

- Explicit Consent: AI tools relying on large retrospective datasets must ensure explicit consent or demonstrate “public interest in public health,” which is challenging for multi-center training datasets.

- Data Minimization and Anonymization: Strict anonymization protocols can reduce the utility of radiology datasets, potentially impacting algorithm accuracy [40].

- Automated Decision-Making Restrictions: Article 22 limits fully automated clinical decisions without human oversight, mandating explainability in AI diagnostics.

- Conformity assessments prior to market approval.

- Human-in-the-loop oversight mechanisms.

- Post-market performance monitoring and traceability of training datasets [54].
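The data-minimization requirement listed above can be illustrated with a minimal sketch, assuming image metadata is available as a plain dictionary. The field names, the `ALLOWED_FIELDS` allow-list, and the salted-hash pseudonym are hypothetical choices made for illustration; this is not a GDPR-compliant de-identification procedure.

```python
import hashlib

# Hypothetical allow-list: only the fields a training pipeline is assumed to need.
ALLOWED_FIELDS = {"Modality", "BodyPartExamined", "PatientSex", "PatientAgeYears"}


def pseudonymize_id(patient_id: str, salt: str) -> str:
    """Return a salted SHA-256 pseudonym, truncated to 16 hex characters."""
    digest = hashlib.sha256((salt + patient_id).encode("utf-8")).hexdigest()
    return digest[:16]


def minimize_record(record: dict, salt: str) -> dict:
    """Keep allow-listed fields and replace the direct identifier with a pseudonym."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimized["PseudoID"] = pseudonymize_id(record["PatientID"], salt)
    return minimized


# Made-up example record; no real patient data.
raw = {
    "PatientID": "12345",
    "PatientName": "DOE^JANE",
    "Modality": "CT",
    "BodyPartExamined": "CHEST",
    "PatientSex": "F",
    "PatientAgeYears": 61,
}
clean = minimize_record(raw, salt="site-secret")
```

Pseudonymized records of this kind can still be linked by anyone holding the salt, so the sketch illustrates minimization rather than full anonymization.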

3.3.2. United States: HIPAA and FDA SaMD

- Scope Restriction: HIPAA does not apply to non-covered entities (e.g., tech companies handling de-identified radiology data).

- No AI-Specific Provisions: HIPAA lacks requirements for algorithmic explainability or adaptive AI oversight [55].

- Operational Implications: AI developers can more easily aggregate large datasets compared with GDPR jurisdictions, facilitating innovation but raising privacy concerns.

- Pre-market validation of safety and efficacy.

- Continuous post-market monitoring.

- For adaptive algorithms, a Predetermined Change Control Plan (PCCP) specifying acceptable model evolution without reapproval [22].
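One way to picture a PCCP is as a pre-specified envelope against which each model update is checked. The sketch below assumes hypothetical field names, change types, and thresholds; the FDA does not prescribe this structure, and an actual plan would be far more detailed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeControlPlan:
    """Hypothetical envelope of pre-authorized model changes."""
    allowed_change_types: frozenset
    min_sensitivity: float
    min_specificity: float


@dataclass(frozen=True)
class ModelUpdate:
    """Hypothetical summary of a proposed model update."""
    change_type: str
    sensitivity: float
    specificity: float


def within_plan(plan: ChangeControlPlan, update: ModelUpdate) -> bool:
    """True if the update stays inside the pre-specified envelope."""
    return (
        update.change_type in plan.allowed_change_types
        and update.sensitivity >= plan.min_sensitivity
        and update.specificity >= plan.min_specificity
    )


plan = ChangeControlPlan(frozenset({"retrain_same_architecture"}), 0.90, 0.85)
retrain = ModelUpdate("retrain_same_architecture", 0.92, 0.88)
new_use = ModelUpdate("new_intended_use", 0.95, 0.90)

print(within_plan(plan, retrain))  # True
print(within_plan(plan, new_use))  # False
```

In this sketch, an update that falls outside the envelope (here, a new intended use) would be routed to a fresh regulatory submission rather than deployed.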

3.3.3. Canada: Hybrid Risk-Based Model

- Mandatory human oversight in diagnostic AI.

- Transparency in training datasets.

- Alignment with both GDPR and FDA standards for cross-border interoperability [26].
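Mandatory human oversight can be enforced in software as a release gate: an AI finding cannot leave the system until a radiologist has confirmed or overridden it. The following is a minimal sketch under that assumption; the class, function names, and identifiers are hypothetical and do not come from any regulation or product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIFinding:
    """Hypothetical AI output awaiting human review."""
    study_id: str
    label: str
    confidence: float
    reviewed_by: Optional[str] = None
    final_label: Optional[str] = None


def sign_off(finding: AIFinding, radiologist: str, label: Optional[str] = None) -> AIFinding:
    """Record the reviewer; passing a label overrides the AI suggestion."""
    finding.reviewed_by = radiologist
    finding.final_label = label if label is not None else finding.label
    return finding


def release_report(finding: AIFinding) -> str:
    """Refuse to release any finding that has not been signed off by a human."""
    if finding.reviewed_by is None:
        raise PermissionError("AI finding requires radiologist sign-off before release")
    return f"{finding.study_id}: {finding.final_label} (reviewed by {finding.reviewed_by})"


finding = AIFinding("study-001", "nodule suspected", 0.81)
sign_off(finding, "dr_a", label="benign granuloma")
report = release_report(finding)
```

Keeping both the AI label and the final label on the record also preserves an audit trail of overrides, which is relevant to the liability questions discussed in Section 3.2.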

3.4. Integration of Ethical, Legal, and Regulatory Frameworks

3.5. Challenges and Future Directions

- For Medical Practitioners

  - Integrate explainable AI (XAI) into workflows: clinicians should prioritize the adoption of interpretable AI tools (e.g., Grad-CAM, SHAP) to strengthen medico-legal defensibility and patient trust.

  - Enhance training and education: radiologists should receive continuous professional development on AI ethics, liability, and regulatory compliance to prepare for evolving responsibilities.

  - Promote patient-centered governance: emphasize informed consent, transparent data stewardship, and equity in the deployment of AI tools.

  - Engage in governance and policy-making: active participation in hospital boards, professional societies, and regulatory discussions is essential to ensure that clinical perspectives shape AI governance frameworks.

- For AI Developers

  - Prioritize dataset diversity and fairness: implement rigorous bias detection and mitigation strategies, particularly for under-represented populations and imaging modalities.

  - Design for explainability and usability: ensure that AI outputs are interpretable for clinicians, balancing technical rigor with clinical applicability.

  - Ensure regulatory alignment: guarantee compliance with GDPR, HIPAA, FDA SaMD, and emerging oversight mechanisms during the development process.

  - Collaborate with clinical experts: engage radiologists throughout the design lifecycle to align algorithms with real-world diagnostic workflows and constraints.

- For Policymakers in the Middle East

  - Regional harmonization: develop unified frameworks across Middle Eastern countries, modeled on successful international initiatives, to ensure consistent standards for safety, accountability, and transparency.

  - Capacity building: invest in training for radiologists, legal scholars, and AI specialists to strengthen regional expertise in governance.

  - Contextual adaptation: tailor global models (GDPR, HIPAA, FDA SaMD) to reflect local healthcare infrastructure, cultural values, and patient rights.

- For Future Research

  - Empirical evaluation of XAI methods: assess how Grad-CAM, SHAP, and similar approaches impact clinical decision making and medico-legal defensibility in real-world radiology settings.

  - Radiology-specific liability frameworks: explore new medico-legal models for shared responsibility between radiologists and AI systems.

  - Comparative regulatory studies: systematically evaluate outcomes under EU, US, and Canadian frameworks to identify best practices.

  - Bias mitigation strategies: develop protocols for equitable performance across populations and imaging modalities.

  - Middle Eastern context: conduct region-specific studies on regulatory readiness, cultural considerations, and policy development.

  - Dynamic oversight models: investigate adaptive monitoring mechanisms for continuously learning AI systems.
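SHAP [48], recommended above as an XAI method, builds on Shapley values from cooperative game theory. A minimal sketch of the underlying idea follows: it computes exact Shapley values by enumerating every feature coalition for a toy scoring function. The scoring function, feature names, and weights are hypothetical, and exact enumeration is feasible only for a handful of features; imaging models rely on approximations instead.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, features):
    """Exact Shapley values by enumerating every coalition of the other features."""
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            # Weight of a coalition of size k in the Shapley formula.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (value_fn(s | {f}) - value_fn(s))
        phi[f] = total
    return phi


def toy_score(present):
    """Hypothetical risk score: two additive terms plus one interaction."""
    score = 0.0
    if "nodule_size" in present:
        score += 0.4
    if "spiculation" in present:
        score += 0.2
    if {"nodule_size", "spiculation"} <= present:
        score += 0.1  # interaction term, shared equally between the two features
    return score


features = ["nodule_size", "spiculation", "patient_age"]
phi = shapley_values(toy_score, features)
```

Here `phi` attributes 0.45 to `nodule_size`, 0.25 to `spiculation`, and 0 to `patient_age` (up to floating-point error), and the attributions sum to the full score of 0.7, which is the additivity property that makes such explanations auditable.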

4. Conclusions

Funding

Institutional Review Board Statement

Conflicts of Interest

References

- Abdullah, Y.I.; Schuman, J.S.; Shabsigh, R.; Caplan, A.; Al-Aswad, L.A. Ethics of Artificial Intelligence in Medicine and Ophthalmology. Asia-Pac. J. Ophthalmol. 2021, 10, 289–298.

- Pesapane, F.; Volonté, C.; Codari, M.; Sardanelli, F. Artificial intelligence as a medical device in radiology: Ethical and regulatory issues in Europe and the United States. Insights Imaging 2018, 9, 745–753.

- D’Antonoli, T.A. Ethical considerations for artificial intelligence: An overview of the current radiology landscape. Diagn. Interv. Radiol. 2020, 26, 504.

- Najjar, R. Redefining Radiology: A Review of Artificial Intelligence Integration in Medical Imaging. Diagnostics 2023, 13, 2760.

- Geis, J.R.; Brady, A.P.; Wu, C.C.; Spencer, J.; Ranschaert, E.; Jaremko, J.L.; Langer, S.G.; Borondy Kitts, A.; Birch, J.; Shields, W.F. Ethics of artificial intelligence in radiology: Summary of the joint European and North American multisociety statement. Radiology 2019, 293, 436–440.

- Singhal, A.; Neveditsin, N.; Tanveer, H.; Mago, V. Toward fairness, accountability, transparency, and ethics in AI for social media and health care: Scoping review. JMIR Med. Inform. 2024, 12, e50048.

- Kenny, L.M.; Nevin, M.; Fitzpatrick, K. Ethics and standards in the use of artificial intelligence in medicine on behalf of the Royal Australian and New Zealand College of Radiologists. J. Med. Imaging Radiat. Oncol. 2021, 65, 486–494.

- He, C.; Liu, W.; Xu, J.; Huang, Y.; Dong, Z.; Wu, Y.; Kharrazi, H. Efficiency, accuracy, and health professional’s perspectives regarding artificial intelligence in radiology practice: A scoping review. iRADIOLOGY 2024, 2, 156–172.

- Olorunsogo, T.; Adenyi, A.O.; Okolo, C.A.; Babawarun, O. Ethical considerations in AI-enhanced medical decision support systems: A review. World J. Adv. Eng. Technol. Sci. 2024, 11, 329–336.

- Nazer, L.H.; Zatarah, R.; Waldrip, S.; Ke, J.X.C.; Moukheiber, M.; Khanna, A.K.; Hicklen, R.S.; Moukheiber, L.; Moukheiber, D.; Ma, H. Bias in artificial intelligence algorithms and recommendations for mitigation. PLoS Digit. Health 2023, 2, e0000278.

- Rony, M.K.K.; Parvin, M.R.; Wahiduzzaman, M.; Debnath, M.; Bala, S.D.; Kayesh, I. “I wonder if my years of training and expertise will be devalued by machines”: Concerns about the replacement of medical professionals by artificial intelligence. SAGE Open Nurs. 2024, 10, 1–17.

- Quazi, F. Ethics & Responsible AI in Healthcare. Int. J. Glob. Innov. Solut. 2024, 42, 180.

- Giansanti, D. The Regulation of Artificial Intelligence in Digital Radiology in the Scientific Literature: A Narrative Review of Reviews. Healthcare 2022, 10, 1824.

- Ardila, D.; Kiraly, A.P.; Bharadwaj, S.; Choi, B.; Reicher, J.J.; Peng, L.; Tse, D.; Etemadi, M.; Ye, W.; Corrado, G.; et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 2019, 25, 954–961.

- Rodriguez-Ruiz, A.; Lång, K.; Gubern-Merida, A.; Broeders, M.; Gennaro, G.; Clauser, P.; Helbich, T.H.; Chevalier, M.; Tan, T.; Mertelmeier, T.; et al. Stand-alone artificial intelligence for breast cancer detection in mammography: Comparison with 101 radiologists. JNCI J. Natl. Cancer Inst. 2019, 111, 916–922.

- Lindsey, R.; Daluiski, A.; Chopra, S.; Lachapelle, A.; Mozer, M.; Sicular, S.; Hanel, D.; Gardner, M.; Gupta, A.; Hotchkiss, R.; et al. Deep neural network improves fracture detection by clinicians. Proc. Natl. Acad. Sci. USA 2018, 115, 11591–11596.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Annarumma, M.; Withey, S.J.; Bakewell, R.J.; Pesce, E.; Goh, V.; Montana, G. Automated Triaging of Adult Chest Radiographs with Deep Artificial Neural Networks. Radiology 2019, 291, 196–202.

- Momin, E.; Cook, T.; Gershon, G.; Barr, J.; De Cecco, C.N.; van Assen, M. Systematic review on the impact of deep learning-driven worklist triage on radiology workflow and clinical outcomes. Eur. Radiol. 2025, 35, 1–15.

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29.

- U.S. Food & Drug Administration. Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan; U.S. Food & Drug Administration: Silver Spring, MD, USA, 2023.

- European Union. Proposal for a Regulation of the European Parliament and of the Council Laying down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts; COM/2021/206 final; European Union: Brussels, Belgium, 2021; pp. 1–107.

- Government of Canada. Guidance Document: Software as a Medical Device (SaMD): Definition and Classification; Health Canada: Ottawa, ON, Canada, 2019.

- European Society of Radiology. What the radiologist should know about artificial intelligence—An ESR white paper. Insights Imaging 2019, 10, 44.

- Canadian Association of Radiologists (CAR) Artificial Intelligence Working Group. Canadian Association of Radiologists white paper on ethical and legal issues related to artificial intelligence in radiology. Can. Assoc. Radiol. J. 2019, 70, 107–118.

- Smith, M.J.; Bean, S. AI and ethics in medical radiation sciences. J. Med. Imaging Radiat. Sci. 2019, 50, S24–S26.

- Badawy, W.; Helal, M.M.; Hashim, A.; Zinhom, H.; Shaban, M. Ethical boundaries and data-sharing practices in AI-enhanced nursing: An Arab perspective. Int. Nurs. Rev. 2025, 72, e70013.

- Ejjami, R. The Holistic Intelligent Healthcare Theory (HIHT): Integrating AI for Ethical, Transparent, and Human-Centered Healthcare Innovation. Int. J. Multidiscip. Res. 2024, 6, 1–16.

- Koçak, B.; Ponsiglione, A.; Stanzione, A.; Bluethgen, C.; Santinha, J.; Ugga, L.; Huisman, M.; Klontzas, M.E.; Cannella, R.; Cuocolo, R. Bias in artificial intelligence for medical imaging: Fundamentals, detection, avoidance, mitigation, challenges, ethics, and prospects. Diagn. Interv. Radiol. 2025, 31, 75.

- Wang, J.; Sourlos, N.; Heuvelmans, M.; Prokop, M.; Vliegenthart, R.; van Ooijen, P. Explainable machine learning model based on clinical factors for predicting the disappearance of indeterminate pulmonary nodules. Comput. Biol. Med. 2024, 169, 107871.

- McKinney, S.M.; Sieniek, M.; Godbole, V.; Godwin, J.; Antropova, N.; Ashrafian, H.; Back, T.; Chesus, M.; Corrado, G.S.; Darzi, A.; et al. International evaluation of an AI system for breast cancer screening. Nature 2020, 577, 89–94.

- McLennan, S.; Meyer, A.; Schreyer, K.; Buyx, A. German medical students’ views regarding artificial intelligence in medicine: A cross-sectional survey. PLoS Digit. Health 2022, 1, e0000114.

- Goisauf, M.; Cano Abadía, M. Ethics of AI in radiology: A review of ethical and societal implications. Front. Big Data 2022, 5, 850383.

- Currie, G.; Hawk, K.E. Ethical and Legal Challenges of Artificial Intelligence in Nuclear Medicine. Semin. Nucl. Med. 2021, 51, 120–125.

- Ueda, D.; Kakinuma, T.; Fujita, S.; Kamagata, K.; Fushimi, Y.; Ito, R.; Matsui, Y.; Nozaki, T.; Nakaura, T.; Fujima, N. Fairness of artificial intelligence in healthcare: Review and recommendations. Jpn. J. Radiol. 2024, 42, 3–15.

- Harvey, H.B.; Gowda, V. Clinical applications of AI in MSK imaging: A liability perspective. Skelet. Radiol. 2022, 51, 235–238.

- Gerke, S.; Minssen, T.; Cohen, G. Ethical and legal challenges of artificial intelligence-driven healthcare. In Artificial Intelligence in Healthcare; Elsevier: Amsterdam, The Netherlands, 2020; pp. 295–336.

- Čartolovni, A.; Tomičić, A.; Lazić Mosler, E. Ethical, legal, and social considerations of AI-based medical decision-support tools: A scoping review. Int. J. Med. Inform. 2022, 161, 104738.

- Voigt, P.; Von dem Bussche, A. The EU General Data Protection Regulation (GDPR): A Practical Guide, 1st ed.; Springer International Publishing: Cham, Switzerland, 2017.

- Price, W.N.; Cohen, I.G. Privacy in the age of medical big data. Nat. Med. 2019, 25, 37–43.

- U.S. Food & Drug Administration. Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD); U.S. Food & Drug Administration: Silver Spring, MD, USA, 2019; pp. 1–20.

- Brown, N.A.; Carey, C.H.; Gerry, E.I. FDA Releases Action Plan for Artificial Intelligence/Machine Learning-Enabled Software as a Medical Device. J. Robot. Artif. Intell. Law 2021, 4, 255–260.

- Hickman, S.E.; Baxter, G.C.; Gilbert, F.J. Adoption of artificial intelligence in breast imaging: Evaluation, ethical constraints and limitations. Br. J. Cancer 2021, 125, 15–22.

- Bianchini, E.; Mayer, C.C. Medical device regulation: Should we care about it? Artery Res. 2022, 28, 55–60.

- Kaissis, G.A.; Makowski, M.R.; Rückert, D.; Braren, R.F. Secure, privacy-preserving and federated machine learning in medical imaging. Nat. Mach. Intell. 2020, 2, 305–311.

- Bellamy, R.K.E.; Dey, K.; Hind, M.; Hoffman, S.C.; Houde, S.; Kannan, K.; Lohia, P.; Martino, J.; Mehta, S.; Mojsilović, A.; et al. AI Fairness 360: An extensible toolkit for detecting and mitigating algorithmic bias. IBM J. Res. Dev. 2019, 63, 4:1–4:15.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations From Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Lundberg, S.M.; Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. (NeurIPS) 2017, 30, 4765–4774.

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Ahmed, M.I.; Spooner, B.; Isherwood, J.; Lane, M.; Orrock, E.; Dennison, A. A systematic review of the barriers to the implementation of artificial intelligence in healthcare. Cureus 2023, 15, e46454.

- Harvey, H.B.; Gowda, V. How the FDA Regulates AI. Acad. Radiol. 2020, 27, 58–61.

- Recht, M.P.; Dewey, M.; Dreyer, K.; Langlotz, C.; Niessen, W.; Prainsack, B.; Smith, J.J. Integrating artificial intelligence into the clinical practice of radiology: Challenges and recommendations. Eur. Radiol. 2020, 30, 3576–3584.

- Price, W.N., II; Gerke, S.; Cohen, I.G. Potential Liability for Physicians Using Artificial Intelligence. JAMA 2019, 322, 1765–1766.

- Smuha, N.A. Regulation 2024/1689 of the Eur. Parl. & Council of June 13, 2024 (EU Artificial Intelligence Act). Int. Leg. Mater. 2025, 13, 1–148.

- Liu, Y.; Liu, C.; Zheng, J.; Xu, C.; Wang, D. Improving Explainability and Integrability of Medical AI to Promote Health Care Professional Acceptance and Use: Mixed Systematic Review. J. Med. Internet Res. 2025, 27, e73374.

- McGraw, D. Building public trust in uses of Health Insurance Portability and Accountability Act de-identified data. J. Am. Med. Inform. Assoc. 2013, 20, 29–34.

- Benjamens, S.; Dhunnoo, P.; Meskó, B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: An online database. NPJ Digit. Med. 2020, 3, 118.

- Mennella, C.; Maniscalco, U.; De Pietro, G.; Esposito, M. Ethical and regulatory challenges of AI technologies in healthcare: A narrative review. Heliyon 2024, 10, e26297.

- Amann, J.; Blasimme, A.; Vayena, E.; Frey, D.; Madai, V.I. Explainability for artificial intelligence in healthcare: A multidisciplinary perspective. BMC Med. Inform. Decis. Mak. 2020, 20, 310.

| Author | Year | Source/Journal | Radiology Domain | Ethical | Legal | Regulatory |
|---|---|---|---|---|---|---|
| Abdullah YI, Schuman JS, Shabsigh R, et al. [1] | 2021 | Asia-Pacific Journal of Ophthalmology | General healthcare (background) | ✔ | ✘ | ✘ |
| Pesapane F, Volonté C, Codari M, et al. [2] | 2018 | Insights into Imaging | General radiology | ✔ | ✘ | ✔ |
| D’Antonoli TA [3] | 2020 | Diagnostic and Interventional Radiology | General radiology | ✔ | ✘ | ✘ |
| Najjar R [4] | 2023 | Diagnostics | General radiology | ✘ | ✘ | ✘ |
| Geis JR, Brady AP, Wu CC, et al. [5] | 2019 | Radiology | General radiology | ✔ | ✘ | ✘ |
| Singhal A, Neveditsin N, Tanveer H, et al. [6] | 2024 | JMIR Medical Informatics | General healthcare (background) | ✔ | ✘ | ✘ |
| Kenny LM, Nevin M, Fitzpatrick K [7] | 2021 | Journal of Medical Imaging and Radiation Oncology | General radiology | ✔ | ✘ | ✘ |
| He C, Liu W, Xu J, et al. [8] | 2024 | iRADIOLOGY | Nuclear medicine | ✘ | ✘ | ✘ |
| Olorunsogo T, Adenyi AO, Okolo CA, et al. [9] | 2024 | World Journal of Advanced Engineering Technology and Sciences | General healthcare (background) | ✔ | ✘ | ✘ |
| Nazer LH, Zatarah R, Waldrip S, et al. [10] | 2023 | PLOS Digital Health | General/other | ✔ | ✘ | ✘ |
| Quazi F [12] | 2024 | Available at SSRN 4942322 | General healthcare (background) | ✔ | ✘ | ✘ |
| Giansanti D [13] | 2022 | Healthcare | General radiology | ✘ | ✘ | ✔ |
| Smith MJ, Bean S [26] | 2019 | Journal of Medical Imaging and Radiation Sciences | General healthcare (background) | ✔ | ✘ | ✘ |
| Badawy W, Helal MM, Hashim A, et al. [27] | 2025 | International Nursing Review | Nuclear medicine | ✔ | ✘ | ✘ |
| Ejjami R [28] | 2024 | Int. J. Multidiscip. Res. | General healthcare (background) | ✔ | ✘ | ✘ |
| Koçak B, Ponsiglione A, Stanzione A, et al. [29] | 2025 | Diagnostic and Interventional Radiology | Nuclear medicine | ✔ | ✘ | ✘ |
| Rodriguez-Ruiz A, Lång K, Gubern-Merida A, et al. [15] | 2019 | Journal of the National Cancer Institute | Mammography/Breast imaging | ✘ | ✘ | ✘ |
| Wang J, Sourlos N, Heuvelmans M, et al. [30] | 2024 | Computers in Biology and Medicine | Chest imaging | ✔ | ✘ | ✘ |
| McKinney SM, Sieniek M, Godbole V, et al. [31] | 2020 | Nature | Mammography/Breast imaging | ✘ | ✘ | ✘ |
| McLennan S, Meyer A, Schreyer K, et al. [32] | 2022 | PLOS Digital Health | Chest imaging | ✘ | ✘ | ✘ |
| Goisauf M, Cano Abadía M [33] | 2022 | Frontiers in Big Data | General radiology | ✔ | ✘ | ✘ |
| Canadian Association of Radiologists (CAR) Artificial Intelligence Working Group [25] | 2019 | Canadian Association of Radiologists Journal | General radiology | ✔ | ✔ | ✘ |
| Currie G, Hawk KE [34] | 2021 | Seminars in Nuclear Medicine | Nuclear medicine | ✔ | ✔ | ✘ |
| Ueda D, Kakinuma T, Fujita S, et al. [35] | 2024 | Japanese Journal of Radiology | General radiology | ✔ | ✘ | ✘ |
| Harvey HB, Gowda V [36] | 2022 | Skeletal Radiology | Nuclear medicine | ✘ | ✔ | ✘ |
| Gerke S, Minssen T, Cohen G [37] | 2020 | Artificial Intelligence in Healthcare (Elsevier), pp. 295–336 | General healthcare (background) | ✔ | ✔ | ✘ |
| Čartolovni A, Tomičić A, Lazić Mosler E [38] | 2022 | International Journal of Medical Informatics | General healthcare (background) | ✔ | ✔ | ✘ |
| European Union [22] | 2021 | COM/2021/206 final | Chest imaging | ✘ | ✘ | ✔ |
| Voigt P, Von dem Bussche A [39] | 2017 | The EU General Data Protection Regulation (GDPR): A Practical Guide, 1st ed. (Springer International Publishing) | Chest imaging | ✘ | ✘ | ✔ |
| Price WN, Cohen IG [40] | 2019 | Nature Medicine | General healthcare (background) | ✔ | ✘ | ✘ |
| Annarumma M, Withey SJ, Bakewell RJ, et al. [18] | 2019 | Radiology | Chest imaging | ✘ | ✘ | ✔ |
| U.S. Food & Drug Administration [41] | 2019 | U.S. Food & Drug Administration | General healthcare (background) | ✘ | ✘ | ✔ |
| Brown NA, Carey CH, Gerry EI [42] | 2021 | The Journal of Robotics, Artificial Intelligence & Law | Chest imaging | ✘ | ✔ | ✔ |
| U.S. Food & Drug Administration [21] | 2023 | U.S. Food & Drug Administration | Chest imaging | ✘ | ✘ | ✔ |
| Ardila D, Kiraly AP, Bharadwaj S, et al. [14] | 2019 | Nature Medicine | Chest imaging | ✘ | ✘ | ✘ |
| Government of Canada [23] | 2019 | Health Canada | General healthcare (background) | ✘ | ✘ | ✔ |
| Hickman SE, Baxter GC, Gilbert FJ [43] | 2021 | British Journal of Cancer | Mammography/Breast imaging | ✔ | ✘ | ✘ |
| Bianchini E, Mayer CC [44] | 2022 | Artery Research | General healthcare (background) | ✘ | ✘ | ✔ |

| Aspect | EU (GDPR + AI Act) | US (HIPAA + FDA SaMD) | Canada (Hybrid) |
|---|---|---|---|
| Legal Scope | GDPR covers all personal health data; AI Act classifies radiology AI as ‘high-risk’ | HIPAA limited to ‘covered entities’; FDA regulates SaMD | Risk-based model under Health Canada; aligns with CAR guidance |
| Operational Requirements | Explicit consent, data minimization, conformity assessment, post-market monitoring | Easier data aggregation, PCCP for adaptive AI, total product lifecycle (TPLC) monitoring | Transparency, mandatory human oversight, cross-border alignment |
| Liability | Strong patient rights; unclear on shared AI liability | Physician-centric with evolving shared liability models | Shared liability framework under development |
| Cross-Border Compatibility | Strictest; GDPR can conflict with US data practices | Flexible; US-trained models may fail GDPR standards | Serves as a harmonization bridge |

| Author (Year) | Imaging Focus | Ethical Concern | Key Findings |
|---|---|---|---|
| Rodriguez-Ruiz et al. (2019) [15] | Mammography | Bias in datasets | A stand-alone AI system showed subgroup variation: higher recall in dense breast tissue but lower specificity in older women. |
| McKinney et al. (2020) [31] | Breast cancer screening | Bias in datasets | International AI evaluation revealed ~12% drop in sensitivity in minority populations due to dataset imbalance. |
| Goisauf & Cano Abadía (2022) [33] | General radiology | Explainability and Consent | Highlighted the ethical implications of opaque AI models and called for dynamic consent to preserve patient autonomy. |
| Amann et al. (2020) [59] | Healthcare AI (incl. radiology) | Explainability | Stressed that lack of interpretability in AI systems undermines clinician accountability and patient trust. |
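Subgroup gaps of the kind summarized in this table can be surfaced with a simple per-group audit of sensitivity. The sketch below uses synthetic records; the group labels and counts are made up for illustration and do not reproduce any cited study.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: TP / (TP + FN)} for records of (group, y_true, y_pred)."""
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:  # only positive cases contribute to sensitivity
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    return {g: tp[g] / (tp[g] + fn[g]) for g in set(tp) | set(fn)}


def max_sensitivity_gap(per_group):
    """Largest difference in sensitivity between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Synthetic data: group A has 9 of 10 cancers detected, group B has 7 of 10.
records = (
    [("A", 1, 1)] * 9 + [("A", 1, 0)] * 1 + [("A", 0, 0)] * 10
    + [("B", 1, 1)] * 7 + [("B", 1, 0)] * 3 + [("B", 0, 0)] * 10
)
per_group = sensitivity_by_group(records)
gap = max_sensitivity_gap(per_group)
```

The same pattern extends to specificity or recall rates by swapping the counted outcomes, and the resulting gap can be compared against a tolerance chosen by the deploying institution.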


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aldhafeeri, F.M. Governing Artificial Intelligence in Radiology: A Systematic Review of Ethical, Legal, and Regulatory Frameworks. Diagnostics 2025, 15, 2300. https://doi.org/10.3390/diagnostics15182300
