An Optimized Machine Learning Model Accurately Predicts In-Hospital Outcomes at Admission to a Cardiac Unit

Abstract

1. Introduction

2. Methods

2.1. Dataset

2.2. Outcomes

2.3. Performance Metrics

2.4. Data Preprocessing

2.5. Machine Learning Algorithm

2.6. Network Optimization

2.7. Performance Evaluation and Feature Selection

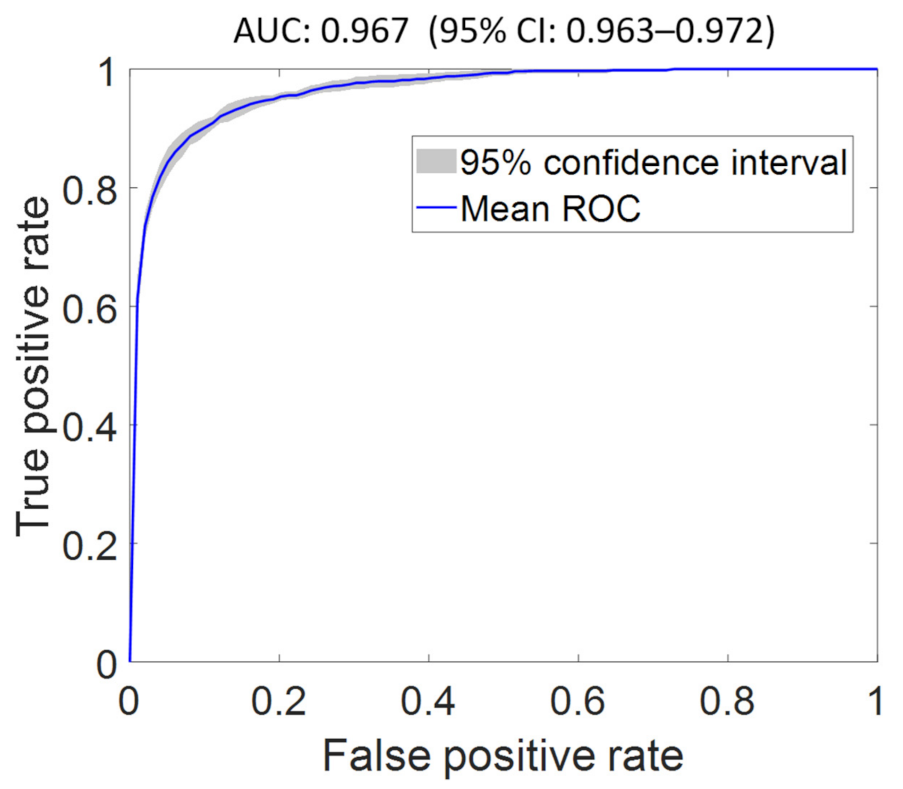

2.8. Mortality

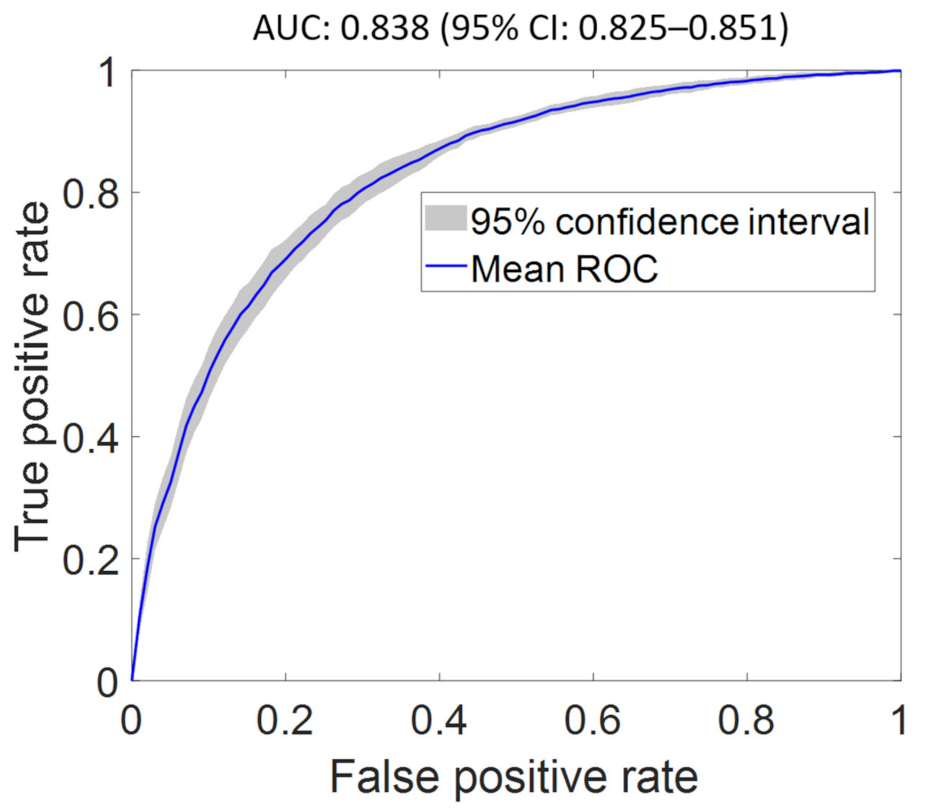

2.9. Heart Failure

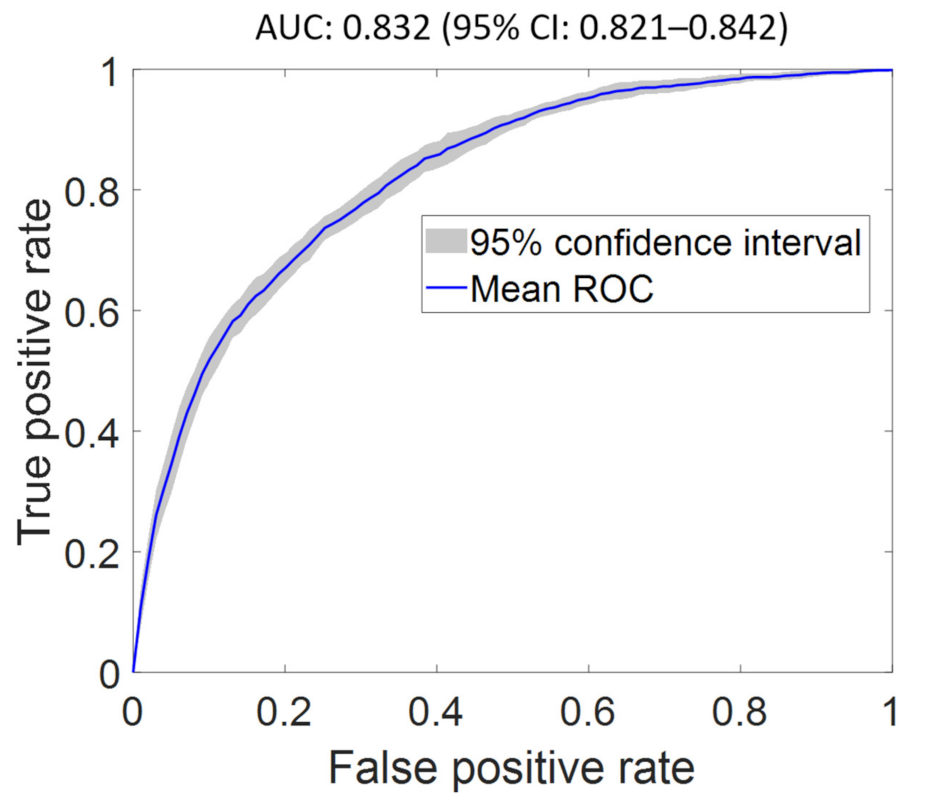

2.10. ST-Segment Elevation Myocardial Infarction

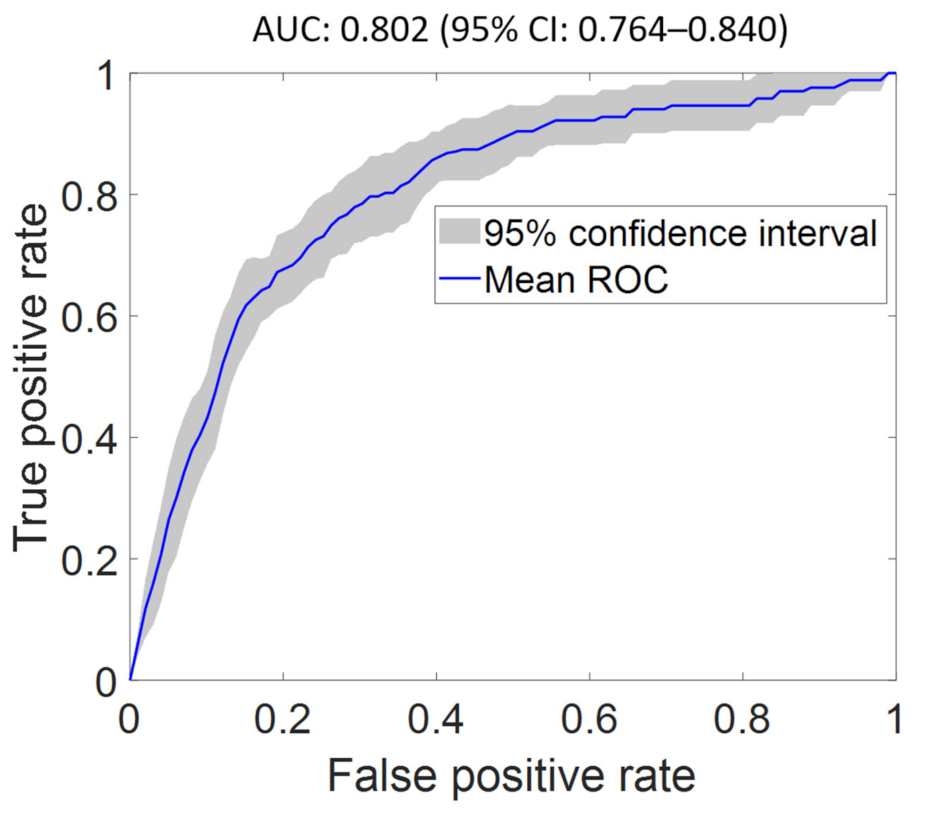

2.11. Pulmonary Embolism

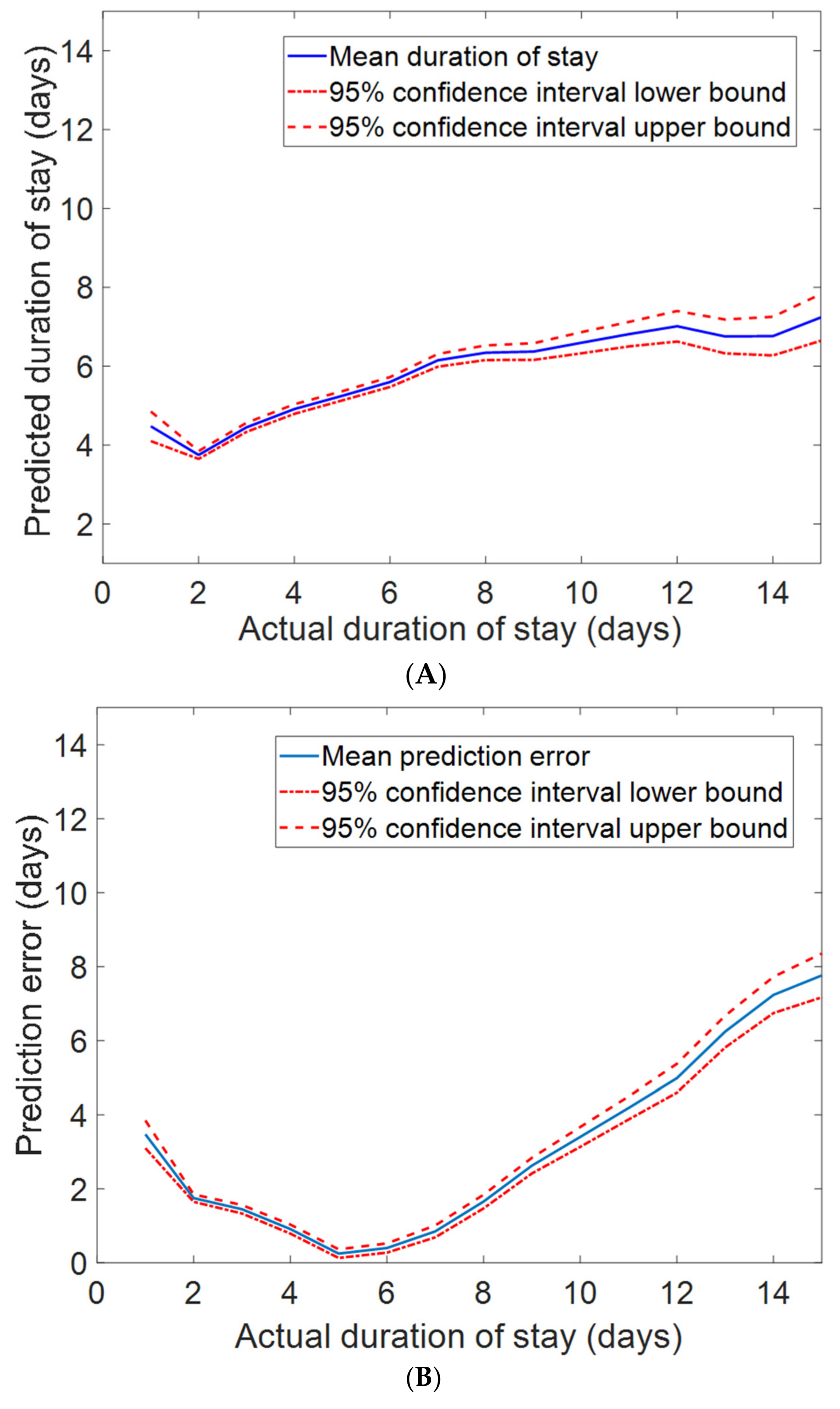

2.12. Duration of Stay

3. Discussion

4. Conclusions

5. Study Limitations

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Total Subjects: 11,498 | Mean (Standard Deviation) or Proportion (%) | Median Value (Interquartile Range) | Missing Values (%) |
|---|---|---|---|
| Demographics | | | |
| Age (years) | 60.81 (13.47) | 62.00 (17) | 0.00 |
| Gender (male %) | 63.58 | | 0.00 |
| Locality (urban %) | 75.84 | | 0.00 |
| Admission type (emergency %) | 67.81 | | 0.00 |
| Duration of stay (days) | 6.35 (4.56) | 5.00 (5) | 0.00 |
| Mortality (expiry %) | 9.40 | | 0.00 |
| History | | | |
| Smoking | 5.06 | | 0.00 |
| Alcohol | 6.77 | | 0.00 |
| Diabetes mellitus | 30.99 | | 0.00 |
| Hypertension | 47.70 | | 0.00 |
| Prior coronary artery disease | 66.69 | | 0.00 |
| Prior cardiomyopathy | 14.33 | | 0.00 |
| Chronic kidney disease | 8.66 | | 0.00 |
| Lab parameters | | | |
| Hemoglobin (g/dL) | 12.32 (2.31) | 12.50 (3.1) | 1.81 |
| Total lymphocyte count (K/uL) | 11.41 (7.08) | 10.00 (5.3) | 1.98 |
| Platelets (K/uL) | 238.38 (103.11) | 226.00 (116) | 2.04 |
| Glucose (mmol/L) | 160.47 (82.67) | 134.00 (88) | 5.28 |
| Urea (mg/dL) | 47.82 (40.57) | 34.00 (29) | 1.69 |
| Creatinine (mg/dL) | 1.30 (1.16) | 0.93 (0.6) | 1.76 |
| Brain natriuretic peptide (pg/mL) | 785.96 (988.89) | 432.00 (934) | 59.91 |
| Raised cardiac enzymes | 20.26 | | 0.00 |
| Ejection fraction | 44.13 (13.42) | 44.00 (28) | 10.51 |
| Comorbidities | | | |
| Severe anemia | 1.79 | | 0.00 |
| Anemia | 16.69 | | 0.00 |
| Stable angina | 9.08 | | 0.00 |
| Acute coronary syndrome | 37.16 | | 0.00 |
| ST-segment elevation myocardial infarction | 14.62 | | 0.00 |
| Atypical chest pain | 3.07 | | 0.00 |
| Heart failure (HF) | 26.75 | | 0.00 |
| HF with reduced ejection fraction | 14.19 | | 0.00 |
| HF with normal ejection fraction | 12.63 | | 0.00 |
| Valvular | 3.41 | | 0.00 |
| Complete heart block | 2.61 | | 0.00 |
| Sick sinus syndrome | 0.70 | | 0.00 |
| Acute kidney injury | 20.51 | | 0.00 |
| Cerebrovascular accident infarct | 2.83 | | 0.00 |
| Cerebrovascular accident bleed | 0.42 | | 0.00 |
| Atrial fibrillation | 4.87 | | 0.00 |
| Ventricular tachycardia | 3.13 | | 0.00 |
| Paroxysmal supraventricular tachycardia | 0.74 | | 0.00 |
| Congenital | 1.13 | | 0.00 |
| Urinary tract infection | 5.87 | | 0.00 |
| Neurocardiogenic syncope | 0.97 | | 0.00 |
| Orthostatic | 0.82 | | 0.00 |
| Infective endocarditis | 0.16 | | 0.00 |
| Deep-vein thrombosis | 1.37 | | 0.00 |
| Cardiogenic shock | 6.78 | | 0.00 |
| Shock | 5.64 | | 0.00 |
| Pulmonary embolism | 1.46 | | 0.00 |
| Chest infection | 2.33 | | 0.00 |
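
The three summary columns of the table above (mean with standard deviation, median with interquartile range, and percentage of missing values) can be computed for any numeric variable with standard-library Python. The sketch below is illustrative only. The function name `summarize`, the use of `None` to mark a missing entry, the sample (n - 1) standard deviation, and the default quartile method of `statistics.quantiles` are all assumptions, since the table does not state how its values were derived.

```python
import statistics


def summarize(values):
    """Return mean, SD, median, IQR and missing % for one numeric column.

    `values` is a list in which None marks a missing entry (an assumption
    made for this sketch, not something the table specifies).
    """
    observed = [v for v in values if v is not None]
    if len(observed) < 2:
        raise ValueError("need at least two observed values")
    # Quartile cut points; the default 'exclusive' method is an assumption.
    q1, _, q3 = statistics.quantiles(observed, n=4)
    return {
        "mean": statistics.mean(observed),
        "sd": statistics.stdev(observed),  # sample SD (n - 1 denominator)
        "median": statistics.median(observed),
        "iqr": q3 - q1,  # reported as a single width
        "missing_pct": 100.0 * (len(values) - len(observed)) / len(values),
    }


# Toy column with one missing entry out of five (hypothetical data).
row = summarize([1, 2, 3, 4, None])
print(
    f"{row['mean']:.2f} ({row['sd']:.2f}) | "
    f"{row['median']:.2f} ({row['iqr']:.1f}) | {row['missing_pct']:.2f}"
)
```

The interquartile range is returned as a single width (Q3 minus Q1), which appears to be how entries such as 62.00 (17) for age are reported. Statistics are computed on observed values only, while the missing percentage uses the full column length.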

| Feature Set | Mortality, AUC (95% CI) | Heart Failure, AUC (95% CI) | STEMI, AUC (95% CI) | Pulmonary Embolism, AUC (95% CI) | Duration of Stay, MAE (95% CI) |
|---|---|---|---|---|---|
| FS1 | 0.955 (0.947–0.963) | 0.833 (0.819–0.846) | 0.832 (0.824–0.839) | 0.779 (0.733–0.826) | 2.561 (2.526–2.596) |
| FS2 | 0.967 (0.963–0.972) | 0.838 (0.825–0.851) | 0.832 (0.821–0.842) | 0.802 (0.764–0.840) | 2.543 (2.499–2.586) |
| FS3 | 0.952 (0.946–0.958) | 0.795 (0.783–0.807) | 0.790 (0.778–0.801) | 0.737 (0.688–0.786) | 2.572 (2.528–2.616) |
| FS4 | 0.938 (0.929–0.947) | 0.767 (0.755–0.779) | 0.731 (0.714–0.748) | 0.630 (0.580–0.680) | 2.623 (2.579–2.667) |
| FS5 | 0.922 (0.912–0.933) | 0.725 (0.715–0.734) | 0.678 (0.666–0.691) | 0.621 (0.585–0.658) | 2.642 (2.598–2.685) |
| FS6 | 0.911 (0.901–0.922) | 0.707 (0.696–0.718) | 0.647 (0.632–0.662) | 0.597 (0.557–0.636) | 2.651 (2.608–2.695) |
| FS7 | 0.907 (0.899–0.915) | 0.670 (0.657–0.684) | 0.624 (0.615–0.633) | 0.589 (0.543–0.636) | 2.694 (2.650–2.737) |
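
The table above reports the area under the ROC curve (AUC) for the four binary outcomes and the mean absolute error (MAE) for duration of stay, each with a 95% confidence interval. As a rough illustration of what these quantities mean, the following sketch computes both metrics and attaches an interval to either one. The table does not say how its intervals were obtained, so the percentile bootstrap used here is an assumption, as are the function names `auc`, `mae`, and `bootstrap_ci` and the toy data.

```python
import random


def auc(labels, scores):
    """AUC as the probability that a positive case outscores a negative one."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    # Count pairwise wins; ties contribute half a win.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mae(y_true, y_pred):
    """Mean absolute error between observed and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(metric, y_true, y_pred, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval (an assumed method, not the paper's)."""
    metric(y_true, y_pred)  # fail early if the metric is undefined on the data
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        try:
            stats.append(metric([y_true[i] for i in idx],
                                [y_pred[i] for i in idx]))
        except ValueError:
            continue  # resample contained a single class; draw again
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Hypothetical labels and predicted risks for a binary outcome.
labels = [0, 0, 1, 1, 0, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9, 0.3, 0.7]
print("AUC:", auc(labels, scores), bootstrap_ci(auc, labels, scores))

# Hypothetical observed and predicted stays, in days.
stay_true = [5, 3, 8, 6, 4]
stay_pred = [4, 3, 10, 5, 6]
print("MAE:", mae(stay_true, stay_pred), bootstrap_ci(mae, stay_true, stay_pred))
```

A higher AUC and a lower MAE are both better, which is why the FS2 row has both the largest AUC values and the smallest MAE in the table.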

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bollepalli, S.C.; Sahani, A.K.; Aslam, N.; Mohan, B.; Kulkarni, K.; Goyal, A.; Singh, B.; Singh, G.; Mittal, A.; Tandon, R.; et al. An Optimized Machine Learning Model Accurately Predicts In-Hospital Outcomes at Admission to a Cardiac Unit. Diagnostics 2022, 12, 241. https://doi.org/10.3390/diagnostics12020241

Bollepalli, S. C., Sahani, A. K., Aslam, N., Mohan, B., Kulkarni, K., Goyal, A., Singh, B., Singh, G., Mittal, A., Tandon, R., Chhabra, S. T., Wander, G. S., & Armoundas, A. A. (2022). An Optimized Machine Learning Model Accurately Predicts In-Hospital Outcomes at Admission to a Cardiac Unit. Diagnostics, 12(2), 241. https://doi.org/10.3390/diagnostics12020241