Automatic Assessment of ASPECTS Using Diffusion-Weighted Imaging in Acute Ischemic Stroke Using Recurrent Residual Convolutional Neural Network

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

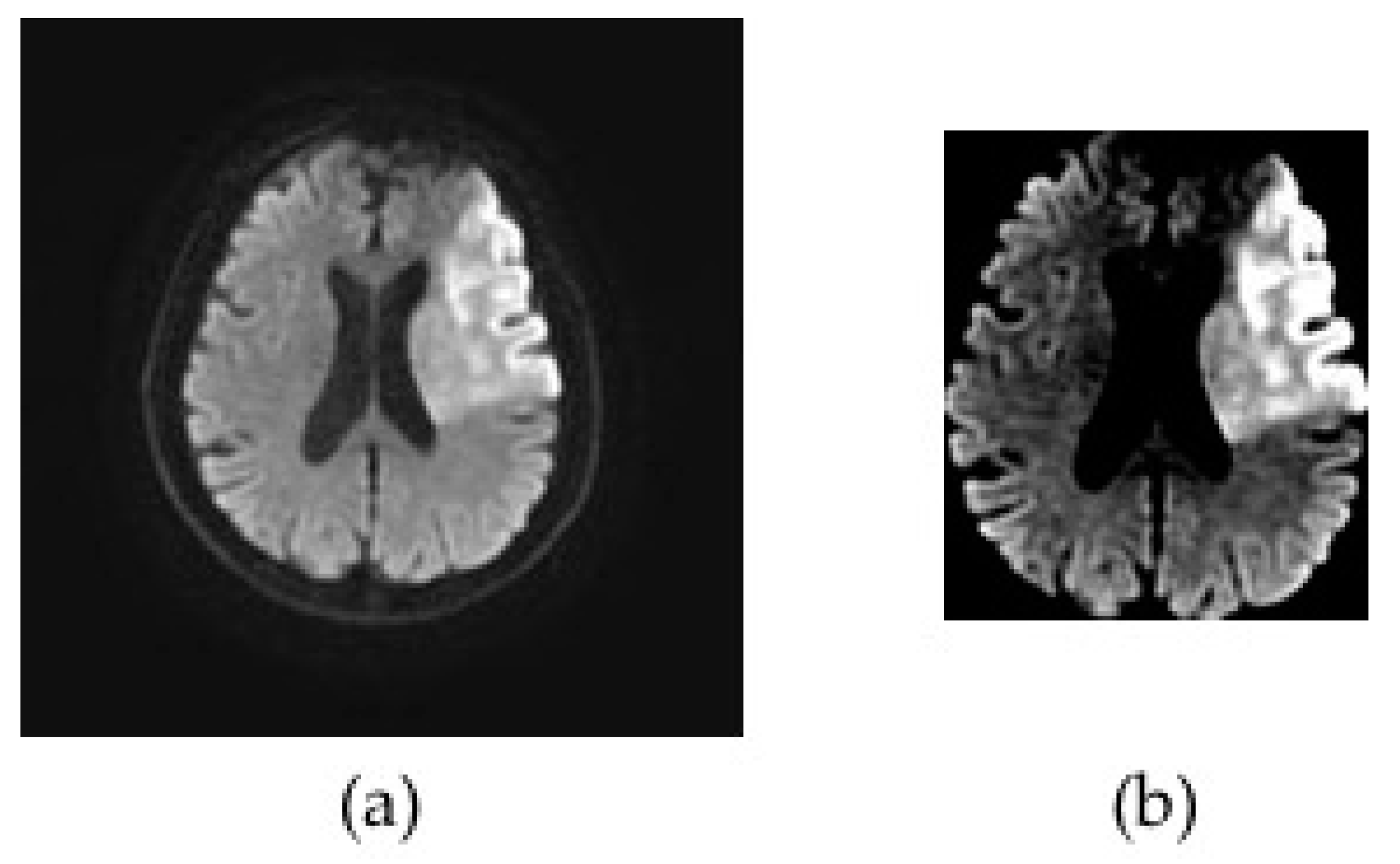

2.2. Slices Filtering

2.3. DWI Preprocessing

2.4. Data Augmentation

2.5. Model Training

2.6. Comparison of RRCNN with Pre-Trained Models and 3DCNN

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Dataset | ASPECTS 1 | ASPECTS 2 | ASPECTS 3 | ASPECTS 4 | ASPECTS 5 | ASPECTS 6 | ASPECTS 7 | ASPECTS 8 | ASPECTS 9 | ASPECTS 10 | Group 1 (ASPECTS 1–6) | Group 2 (ASPECTS 7–10) | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Training | 1 | 4 | 5 | 13 | 35 | 32 | 48 | 65 | 39 | 2 | 90 | 154 | 244 |
| Validation | 0 | 0 | 2 | 3 | 12 | 9 | 14 | 20 | 14 | 1 | 26 | 49 | 75 |
| Testing | 0 | 3 | 4 | 7 | 5 | 12 | 9 | 19 | 9 | 3 | 31 | 40 | 71 |
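The group totals in the table above follow from dichotomizing the per-score counts at ASPECTS 6. The short sketch below recomputes them as a consistency check; the variable and function names (`counts`, `group_totals`) are illustrative and do not come from the original study.

```python
# Per-score case counts copied from the table above (ASPECTS 1..10).
counts = {
    "Training":   [1, 4, 5, 13, 35, 32, 48, 65, 39, 2],
    "Validation": [0, 0, 2, 3, 12, 9, 14, 20, 14, 1],
    "Testing":    [0, 3, 4, 7, 5, 12, 9, 19, 9, 3],
}


def group_totals(per_score):
    """Split counts for ASPECTS 1..10 into Group 1 (1-6) and Group 2 (7-10)."""
    group1 = sum(per_score[:6])   # ASPECTS 1-6
    group2 = sum(per_score[6:])   # ASPECTS 7-10
    return group1, group2, group1 + group2


totals = {name: group_totals(per_score) for name, per_score in counts.items()}
for name, (g1, g2, total) in totals.items():
    print(f"{name}: Group 1 = {g1}, Group 2 = {g2}, Total = {total}")
```

The recomputed totals agree with the Group 1, Group 2, and Total columns for all three datasets.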

| Model | Validation: Sens. (%) | Validation: Spec. (%) | Validation: F1 | Validation: Acc. (%) | Validation: AUC | Independent test: Sens. (%) | Independent test: Spec. (%) | Independent test: F1 | Independent test: Acc. (%) | Independent test: AUC |
|---|---|---|---|---|---|---|---|---|---|---|
| Pre-trained VGG16 | 70.5 | 86.3 | 0.801 | 72.8 | 0.801 | 61.3 | 92.5 | 0.831 | 78.8 | 0.920 |
| Pre-trained Inception V3 | 71.8 | 84.3 | 0.795 | 72.4 | 0.834 | 64.5 | 92.5 | 0.840 | 80.0 | 0.921 |
| 3DCNN | 78.2 | 89.1 | 0.848 | 81.7 | 0.844 | 77.4 | 90.0 | 0.867 | 84.5 | 0.929 |
| Proposed RRCNN | 82.0 | 89.8 | 0.872 | 84.4 | 0.910 | 83.9 | 90.0 | 0.888 | 87.3 | 0.941 |

Sens., sensitivity; Spec., specificity; Acc., accuracy; AUC, area under the curve; 3DCNN, 3D convolutional neural network; RRCNN, recurrent residual convolutional neural network.
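The sensitivity, specificity, and accuracy columns in the table above are standard confusion-matrix ratios. The sketch below shows how they relate, using the proposed RRCNN on the independent test set as a worked example. It rests on two assumptions that the source does not state: that Group 1 (ASPECTS 1–6) is treated as the positive class, and that the underlying counts are 26 of 31 Group 1 cases and 36 of 40 Group 2 cases classified correctly. These counts are inferred from the reported percentages and the test-set group sizes, not reported by the authors; the function name `binary_metrics` is likewise illustrative.

```python
def binary_metrics(tp, fn, tn, fp):
    """Return sensitivity, specificity and accuracy as percentages."""
    sensitivity = 100.0 * tp / (tp + fn)                 # true-positive rate
    specificity = 100.0 * tn / (tn + fp)                 # true-negative rate
    accuracy = 100.0 * (tp + tn) / (tp + fn + tn + fp)   # overall agreement
    return sensitivity, specificity, accuracy


# Assumption: Group 1 (ASPECTS 1-6) is the positive class.
# The independent test set has 31 Group 1 and 40 Group 2 cases.
# The counts 26 and 36 are inferred for illustration, not reported in the source.
sens, spec, acc = binary_metrics(tp=26, fn=5, tn=36, fp=4)
print(f"Sens. {sens:.1f}%, Spec. {spec:.1f}%, Acc. {acc:.1f}%")
# prints "Sens. 83.9%, Spec. 90.0%, Acc. 87.3%"
```

Under these assumptions the three values match the RRCNN row for the independent test dataset. The validation columns do not reduce to whole-number counts in the same way, so no such check is attempted for them here.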

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Do, L.-N.; Baek, B.H.; Kim, S.K.; Yang, H.-J.; Park, I.; Yoon, W. Automatic Assessment of ASPECTS Using Diffusion-Weighted Imaging in Acute Ischemic Stroke Using Recurrent Residual Convolutional Neural Network. Diagnostics 2020, 10, 803. https://doi.org/10.3390/diagnostics10100803
