Robot Grasping System and Grasp Stability Prediction Based on Flexible Tactile Sensor Array

Abstract

1. Introduction

2. Materials and Methods

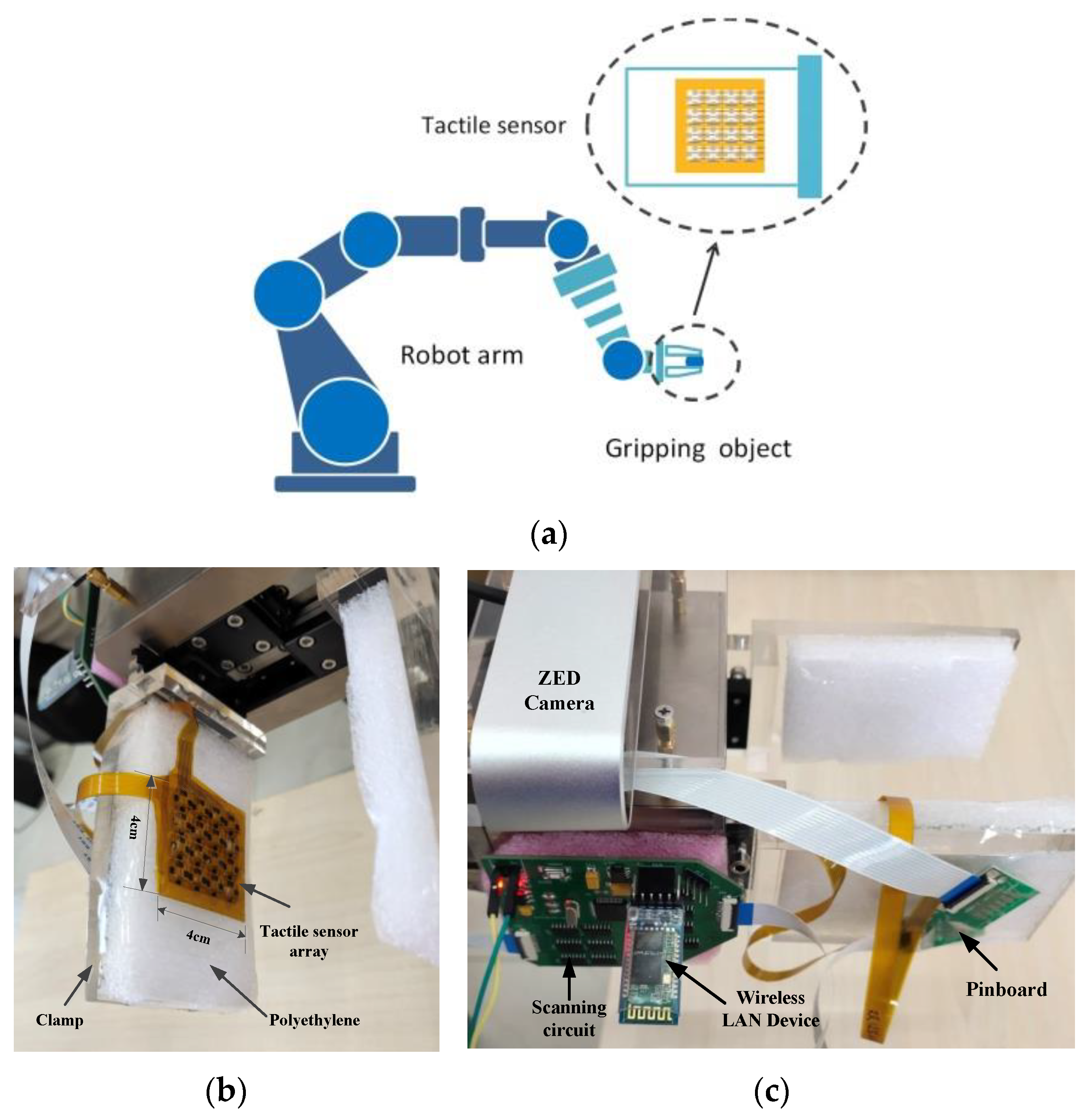

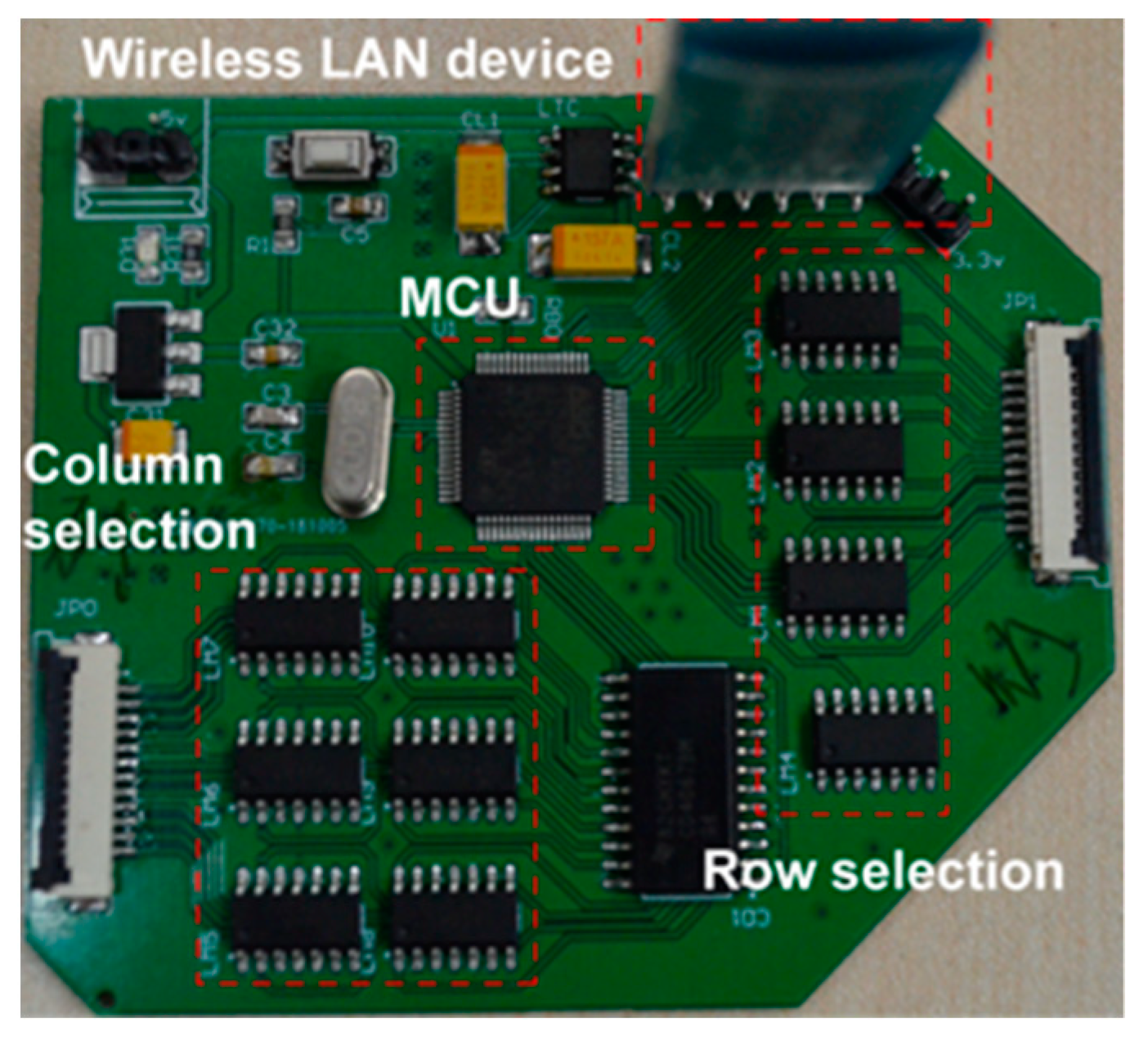

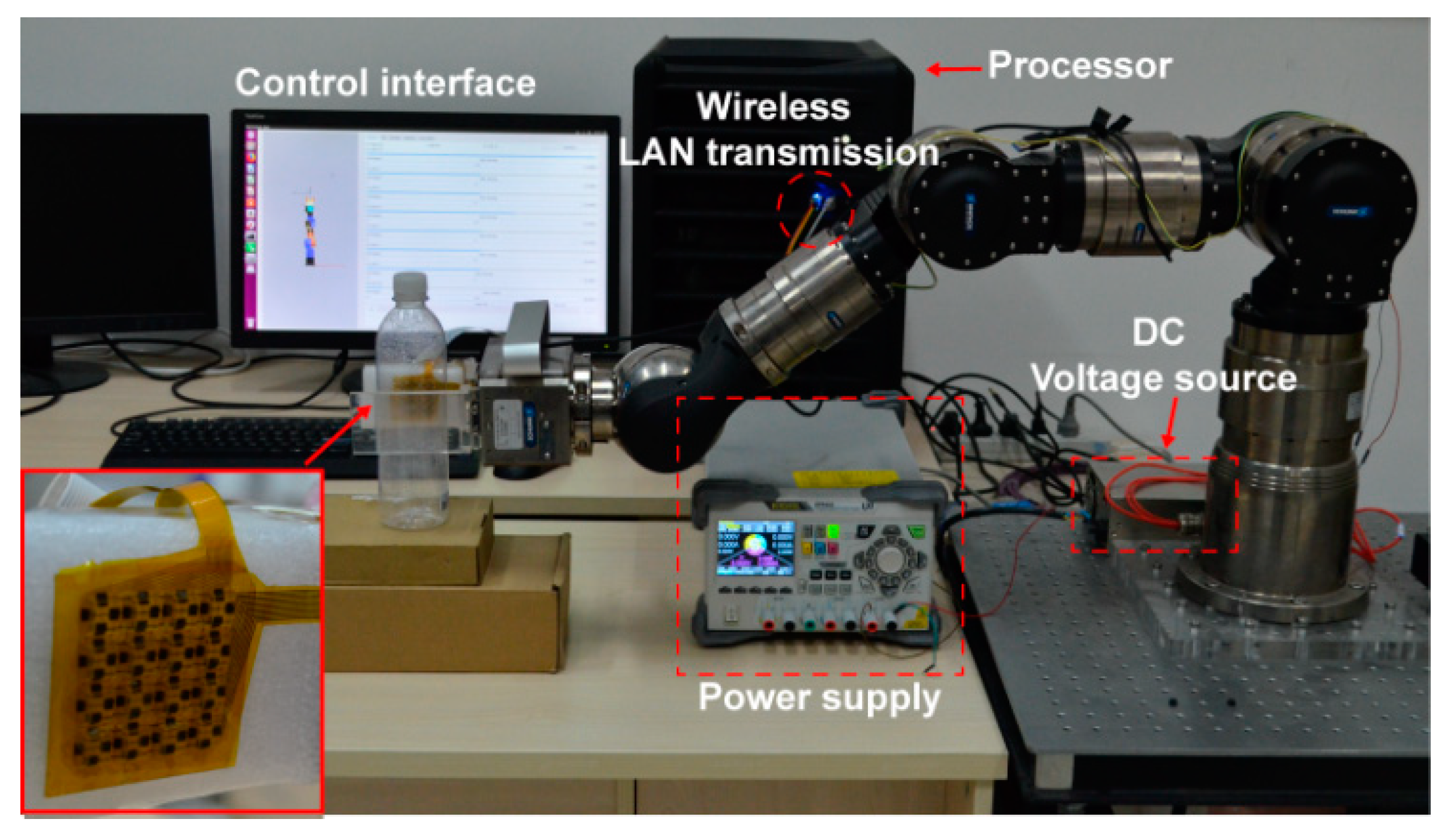

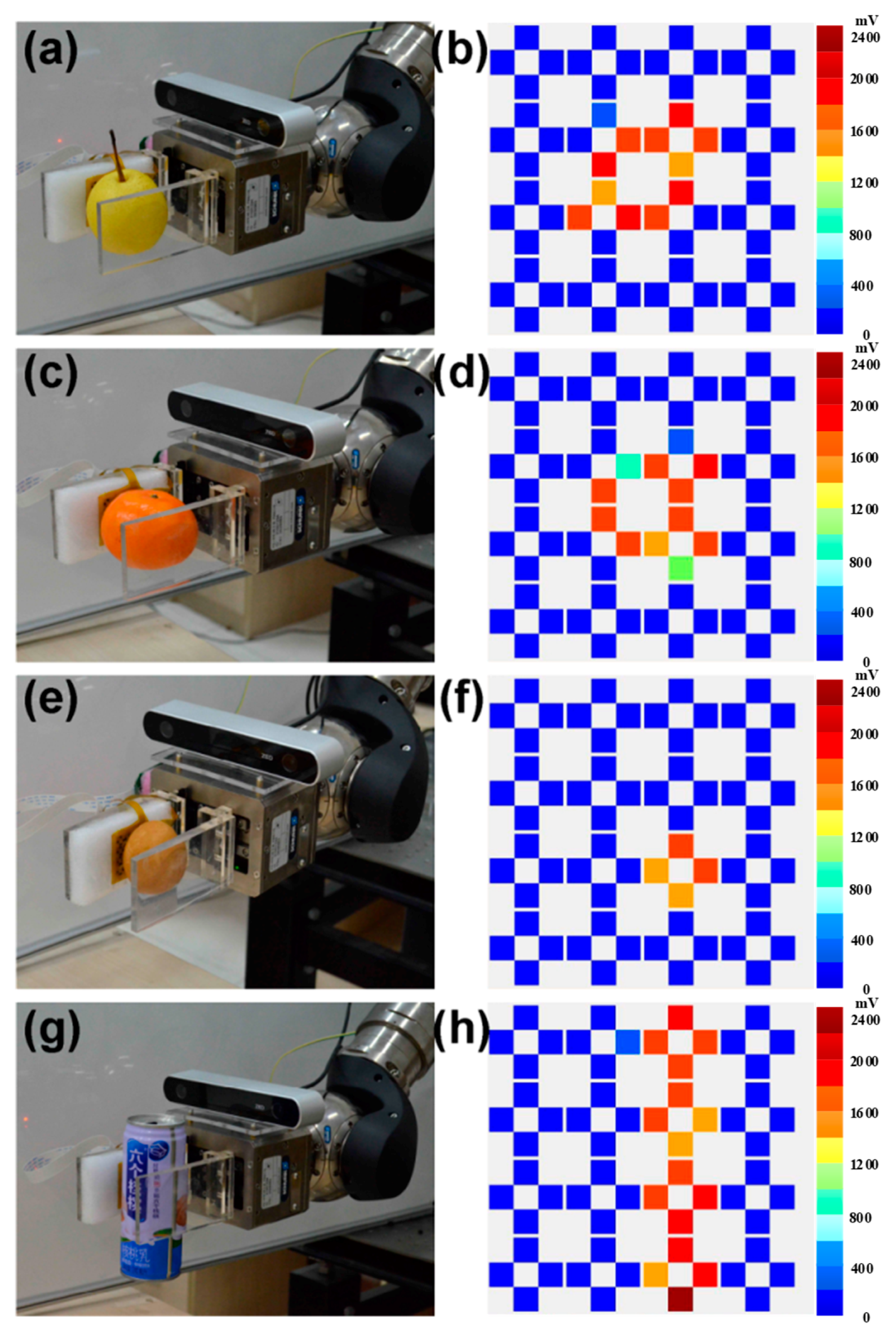

2.1. Robot Grasping System Integrated with Flexible Tactile Sensor Array

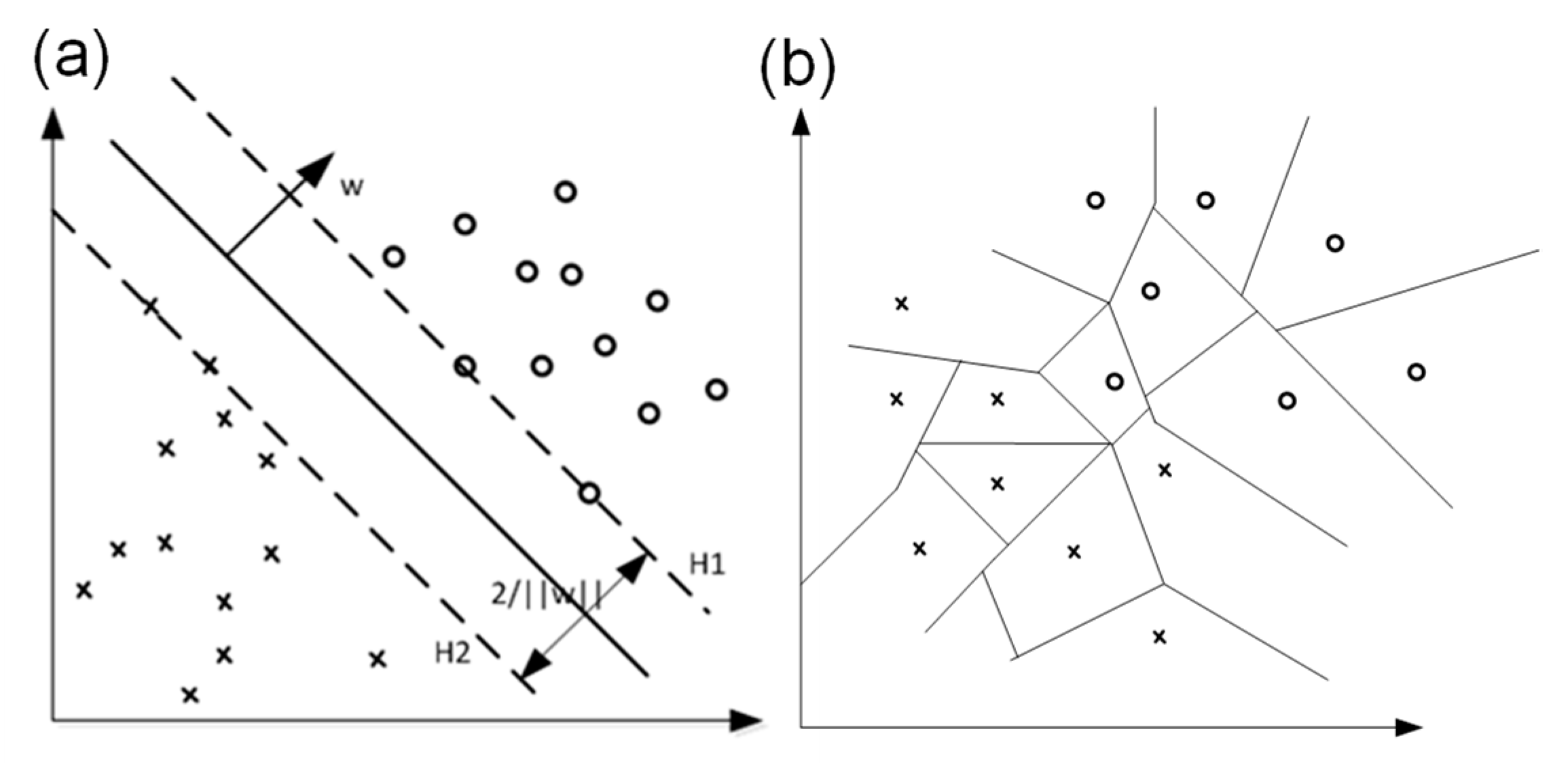

2.2. Grasp Stability Prediction Algorithm Based on Grasping Dataset

3. Results

4. Conclusions

- A highly sensitive flexible tactile sensor array was combined with a robot grasping system that offers high motion resolution.

- A pressure-distribution dataset was built that reflects the contact forces between the grasped objects and the end-effector of the robot.

- A grasp stability prediction model with high judgment accuracy was trained on the pressure-distribution dataset using the SVC algorithm.

- Stable grasps were predicted in real time during actual robot grasping operations.

- The approach can be extended to scenarios that demand high contact-force sensitivity, such as human-machine interaction in nursing and healthcare.
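To make the idea of training a support vector classifier on pressure-distribution data concrete, the following is a minimal sketch rather than the authors' implementation. It assumes a hypothetical 16-taxel frame, synthetic data in which stable grasps show higher pressure than unstable ones, and a hand-written linear soft-margin SVM trained by batch subgradient descent. The names `train_linear_svc`, `predict`, and `synthetic_frame`, as well as all hyperparameters, are illustrative assumptions; the kernel and settings used in the paper are not stated in this section.

```python
import random


def train_linear_svc(X, y, lam=0.01, epochs=200, lr=0.1):
    """Fit a linear soft-margin SVM (w, b) by batch subgradient descent
    on the L2-regularized hinge loss. Labels must be +1 or -1."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # sample lies inside the margin: hinge loss is active
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return +1 (stable) or -1 (unstable) for one flattened pressure frame."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


def synthetic_frame(stable, rng, taxels=16):
    """Hypothetical pressure frame scaled to [0, 1]; NOT data from the paper."""
    base = 0.7 if stable else 0.3
    return [min(1.0, max(0.0, rng.gauss(base, 0.08))) for _ in range(taxels)]


rng = random.Random(0)
labels = [1 if i % 2 == 0 else -1 for i in range(200)]
frames = [synthetic_frame(lab == 1, rng) for lab in labels]

# Hold out the last 50 frames to estimate accuracy on unseen grasps.
train_X, train_y = frames[:150], labels[:150]
test_X, test_y = frames[150:], labels[150:]

w, b = train_linear_svc(train_X, train_y)
accuracy = sum(predict(w, b, x) == t for x, t in zip(test_X, test_y)) / len(test_y)
print(f"held-out accuracy on synthetic frames: {accuracy:.2%}")
```

In practice a library implementation with a tuned kernel would be the more likely choice; the hand-written version is used here only to keep the example dependency-free.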

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Algorithm | Pear | Nectarine | Orange | Plastic Bottle | Egg | Pop-Top Can | Overall |
|---|---|---|---|---|---|---|---|
| SVC | 95.78% | 99.89% | 98.78% | 99.11% | 98.22% | 97.67% | 98.24% |
| KNN | 91.67% | 95.00% | 97.00% | 98.78% | 94.56% | 93.00% | 95.00% |
| LR | 95.00% | 97.22% | 98.44% | 98.78% | 97.30% | 97.67% | 97.40% |
| Ensemble Learning | 95.22% | 99.89% | 99.11% | 99.89% | 99.44% | 98.67% | 98.70% |
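The Overall column appears to be the unweighted mean of the six per-object values in each row. The short check below recomputes it from the figures in the table; the dictionary name `per_object` and the rounding to two decimals are choices made for this illustration, not details taken from the paper.

```python
# Per-object values (%) copied from the table, in column order:
# Pear, Nectarine, Orange, Plastic Bottle, Egg, Pop-Top Can.
per_object = {
    "SVC": [95.78, 99.89, 98.78, 99.11, 98.22, 97.67],
    "KNN": [91.67, 95.00, 97.00, 98.78, 94.56, 93.00],
    "LR": [95.00, 97.22, 98.44, 98.78, 97.30, 97.67],
    "Ensemble Learning": [95.22, 99.89, 99.11, 99.89, 99.44, 98.67],
}

# Unweighted mean over the six objects, rounded to two decimals.
overall = {name: round(sum(acc) / len(acc), 2) for name, acc in per_object.items()}

for name, value in overall.items():
    print(f"{name}: {value:.2f}%")
```

The recomputed means (98.24, 95.00, 97.40, and 98.70) match the reported Overall entries, which suggests that each object contributes equally to the overall figure.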

| Preprocessing | SVC | KNN | Logistic Regression | Ensemble Learning |
|---|---|---|---|---|
| With normalization | 96.63% | 93.77% | 95.76% | 97.06% |
| Without normalization | 94.52% | 93.75% | 94.30% | 94.83% |
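The effect of normalization is easier to compare as a difference in percentage points per model. The snippet below is a small sketch that computes that difference from the table values, assuming the entries are prediction accuracies; the variable names are illustrative only.

```python
models = ["SVC", "KNN", "Logistic Regression", "Ensemble Learning"]
with_norm = [96.63, 93.77, 95.76, 97.06]      # row "With normalization" (%)
without_norm = [94.52, 93.75, 94.30, 94.83]   # row "Without normalization" (%)

# Gain from normalization in percentage points, rounded to two decimals.
gain = {
    model: round(a - b, 2)
    for model, a, b in zip(models, with_norm, without_norm)
}

for model, points in gain.items():
    print(f"{model}: +{points:.2f} percentage points")
```

By this measure KNN is almost unaffected (0.02 points), while SVC, Logistic Regression, and Ensemble Learning gain 2.11, 1.46, and 2.23 points respectively.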

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, T.; Sun, X.; Shu, X.; Wang, C.; Wang, Y.; Chen, G.; Xue, N. Robot Grasping System and Grasp Stability Prediction Based on Flexible Tactile Sensor Array. Machines 2021, 9, 119. https://doi.org/10.3390/machines9060119
