Abstract

Manipulating heavy objects is an essential task in many industries, but manual manipulation is tedious, adversely affects workers' health and safety, and reduces efficiency. Conversely, autonomous robots lack the flexibility needed to manipulate heavy objects. Hence, we proposed human–robot systems, such as power assist systems, for manipulating heavy objects in industries. Selecting appropriate control methods and including human factors in the controls are also important for making such systems human friendly. However, existing power assist systems do not address these issues properly. To address this, we present a 1-DoF (degree of freedom) power assist robotic testbed for lifting different objects. We included a human factor, namely weight perception (a cognitive cue), in the dynamics of the robotic system and derived several position and force control strategies/methods for the system based on these human-centric dynamics. We developed a reinforcement learning method to predict the control parameters that produce the best (optimal) control performance. We also derived a novel adaptive control algorithm based on human characteristics. We experimentally evaluated these control methods and compared the system performance among them. Results showed that both position and force controls produced satisfactory performance, but the position control produced significantly better performance than the force controls. We then propose using these results to design control methods for power assist robotic systems for handling large and heavy materials and objects in various industries, which may improve human–robot interactions (HRIs) and system performance.

1. Introduction

1.1. Importance of Power Assist Robotic Systems for Manipulating Heavy and Large Objects

Workers in various industries frequently need to handle heavy and large materials and objects. For example, they (i) load and unload heavy and large luggage, bags, and so forth to and from buses, trains, trucks, aircraft, ships, and so forth for logistics and transport; and (ii) handle heavy and large bags, objects, materials, and so forth in industries such as agriculture, forestry, automobile, mining, shipbuilding/breaking, military activities, manufacturing/assembly, timber, and construction. However, handling heavy and large materials and objects manually is usually hard, time-consuming, and tiresome; it may reduce work efficiency and cause injuries, back pain, musculoskeletal disorders, and so forth, especially in sick, unskilled, novice, aged, and weak workers [1]. On the other hand, autonomous robotic devices for handling large and heavy objects, such as cranes, may not provide the expected level of flexibility in manipulation [2]. Hence, we posit that robotic systems/devices with human involvement, such as power assist robotic systems, may be the most suitable for handling heavy and large objects in relevant industries, especially those with unstructured environments [3]. In such systems, the strength of the robot and the intelligence of the human coworker may jointly make the human–robot collaborative system far superior to a robot or a human alone [4]. However, power assist robotic systems are currently used mostly for assistive, healthcare, and rehabilitation purposes [5,6]. We thus posit that power assist applications can be diversified, with added novelty, if power assist robotic systems are used to manipulate heavy and large objects in various industries [7]. However, such human-friendly power assist robotic devices have not yet been widely employed in these industries.

1.2. Power Assist Robotic Devices and Systems for Manipulating Heavy and Large Objects: State of the Art

A significant number of power assist robotic systems have already been developed and proposed for object manipulation [8,9,10,11,12,13]. However, those systems do not explicitly aim at handling heavy/large materials and objects in the mentioned industries. They also exhibit various limitations; for example, the systems may be too heavy to use; the amount or level of power assistance may not be measurable or clearly understandable; the systems are usually not properly examined and evaluated for a coworker's comfort, health, adaptability, safety, user-friendliness, and efficiency; and the systems may suffer from the disadvantages of hydraulic/pneumatic actuation [8]. The systems may produce excessive power and unexpected oscillations/vibrations [9,10,11], and they may restrict movement due to various constraints, causing difficulties in path and motion planning [12]. Moreover, power assist robotic devices/systems may share common disadvantages and problems (e.g., human–machine maladjustment, actuator saturation, noise and disturbances, unsuitable or less suitable control methods, inappropriate control strategies, inaccurate motions, limited capacity and low efficiency, inappropriate sensor arrangements, anomalous sensor readings, insufficient or restricted degrees of freedom, instability, and lack of safety) [8,9,10,11,12,13]. Conventional power assist robotic systems/devices usually do not take a holistic approach to overcoming or reducing these limitations, which results in unsatisfactory human–machine interactions and poor system performance. Furthermore, a few power assist robotic devices/systems are already in use, such as the Hybrid Assistive Limb (HAL) [14], Power Loader Light (PLL) [15], and BLEEX [16].
However, these robotic systems are not very suitable for handling heavy and large objects in industries due to their inappropriate suit-like configurations, heavy weight, and so forth. A few power assist robotic devices have been designed to assist old and sick workers to handle heavy and large loads instead of amplifying the ability of normal and skilled workers [17]. Finally, power assist robotic devices that can transfer patients in hospitals have been made available, but they cannot be used to handle heavy and large objects in the mentioned industries due to their unsuitable configurations [18,19].

1.3. Human Factor Issues with Power Assist Robotic Systems for Heavy and Large Object Manipulation

Power assist robots usually reduce perceived object weights; thus, a human user manipulating an object with power assist feels a significant difference between the actual and perceived weights of the manipulated object [3,16]. Figure 1 illustrates this phenomenon. A human user or coworker usually programs (estimates) feedforward manipulative forces (e.g., load and grip forces) based on his/her visual perception of the weight of the object to be manipulated, which usually depends on the object's visual size and other visual cues [20]. Because the haptically perceived weight of the object is usually much less than the visually perceived weight, the load and grip forces applied to the object turn out to be excessive. Consequently, the motions of the power assist robotic system become unexpectedly large, which can result in poor maneuverability and safety [7]. Hence, we posit that a user's weight perception (an example of human cognition) needs to be taken into account when designing and developing the controls of power assist robots for object manipulation, to mitigate these weight perceptual effects. However, state-of-the-art power assist robotic devices usually do not include human features, such as weight perception, in the design of the controls, which may result in unsatisfactory human user–robot interactions and system performance [8,9,10,11,12,13].

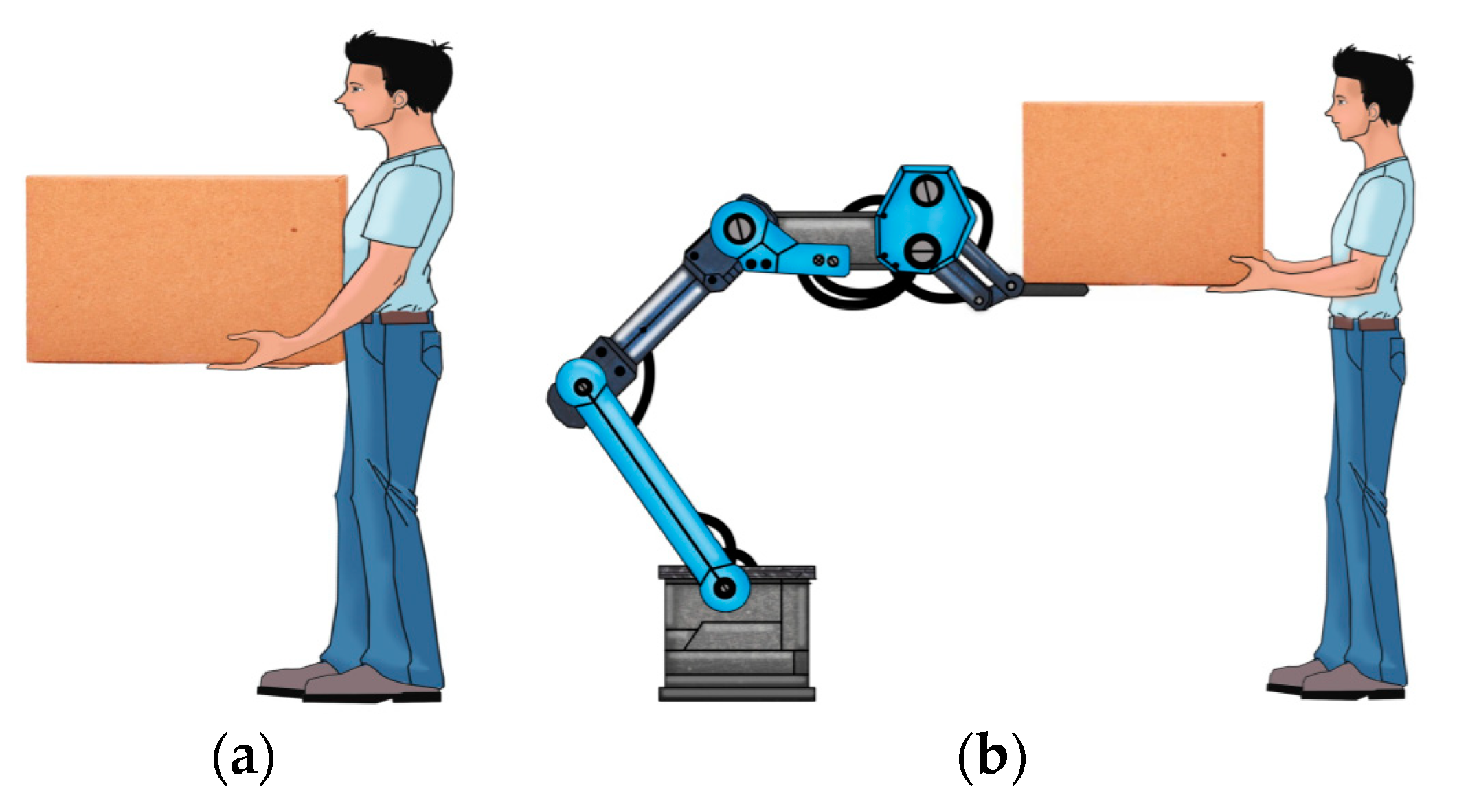

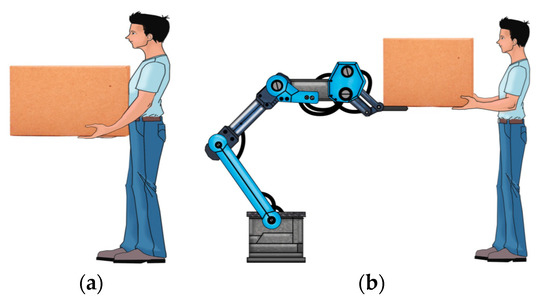

Figure 1.

(a) Manual manipulation of an object, in which a human perceives the actual weight of the object; (b) manipulation of an object with a power assist robot, in which a human perceives a reduced weight of the object. Ideally, the perceived weight of the same object is much smaller in the power assist case because the robot reduces the perceived weight. In the power assist case, the object is carried by the robot, and the human's applied forces only control the motions.

1.4. Human-Friendly Control Strategies for Power Assist Robotic Systems: Position and Force Controls

Selecting appropriate control strategies and methods for power assist robotic devices/systems can strongly affect system performance and interactions with users in terms of stability, maneuverability, efficiency, safety, and so forth [7]. As the literature shows, impedance/admittance-based position and force control strategies and methods are usually used for power assist robotic systems [10,13]. However, the choice between them depends on the application. Position control strategies may be advantageous in some respects (e.g., positional accuracy), whereas force control strategies may be advantageous in others (e.g., overcoming the effects of nonlinearity, friction, inertia, and disturbances) [21,22]. Application-specific complexity and the linearity or nonlinearity of control methods may also affect control selection [7]. The existing literature neither reports weight-perception-based position and force control methods and strategies for power assist robotic devices for object manipulation nor compares the performance and effectiveness of position and force control methods/strategies to help decide appropriate controls for industrial applications [7].

Preliminary results on the effectiveness of weight-perception-based force controls for power assist robotic systems were presented in [7]. However, those results were not compared with results for position control, and the control parameters necessary to produce optimal system–user interactions and system performance were not determined experimentally [23]. We posit that the optimal control parameters could be determined experimentally following a reinforcement learning model [24,25,26,27]; however, no such attempt was made. In addition, we posit that an empirically designed weight-perception-based adaptive control strategy added on top of the position and force control strategies could compensate for the effects of weight perception [13,28]. However, such an approach was not attempted in [7].

1.5. Objectives

Hence, the objectives of this paper were to (i) broaden the scope of power assist applications by proposing these systems for manipulating heavy and large objects in industries; (ii) build an experimental power assist system as a testbed with which human operators lift objects; (iii) include a human user's weight perception (cognition) in the derived dynamics of the experimental system; (iv) derive position and force control methods and strategies, along with an adaptive control strategy, for the same system and the same task (lifting objects) based on the human-centric dynamics, and predict the control parameters producing the best (optimal) control performance following a reinforcement learning method; and (v) implement the position and force controls for lifting objects with the assist system and compare the performance of the control methods. The main contributions and novelties are (i) including a human user's weight perception (cognition) in the dynamics and control of the system; (ii) deriving a novel adaptive control strategy for lifting objects based on the human-centric dynamics, together with a reinforcement-learning-based method for predicting the control parameters that produce the best (optimal) control performance; and (iii) comparing the performance of position and force control methods in the same experimental setting, with the aim of improving the human friendliness and performance of the system [29].

The ultimate objective is to build a multi-degree-of-freedom (multi-DoF) power assist system for manipulating large and heavy objects. However, the experimental testbed was limited to a 1-DoF system suitable for lifting only lightweight, small objects. We propose using the findings from the testbed to design appropriate control methods and strategies for scaled-up industrial power assist robotic systems for manipulating heavy/large objects, thereby improving human–machine interactions and system performance. This paper investigates the proposed control concepts and evaluates the control systems first, before verifying them with large and heavy objects. Because robotic systems can be designed following scaling laws [30], we expect the proposed scale-up to be feasible.

2. Materials: Construction of the Experimental Power Assist Robotic System for Lifting Objects

This section presents the construction (design and development) details of the experimental power assist robotic system and the objects to be lifted (manipulated) with the system [31]. This section also introduces the experimental setup and the communication system of the power assist robotic system.

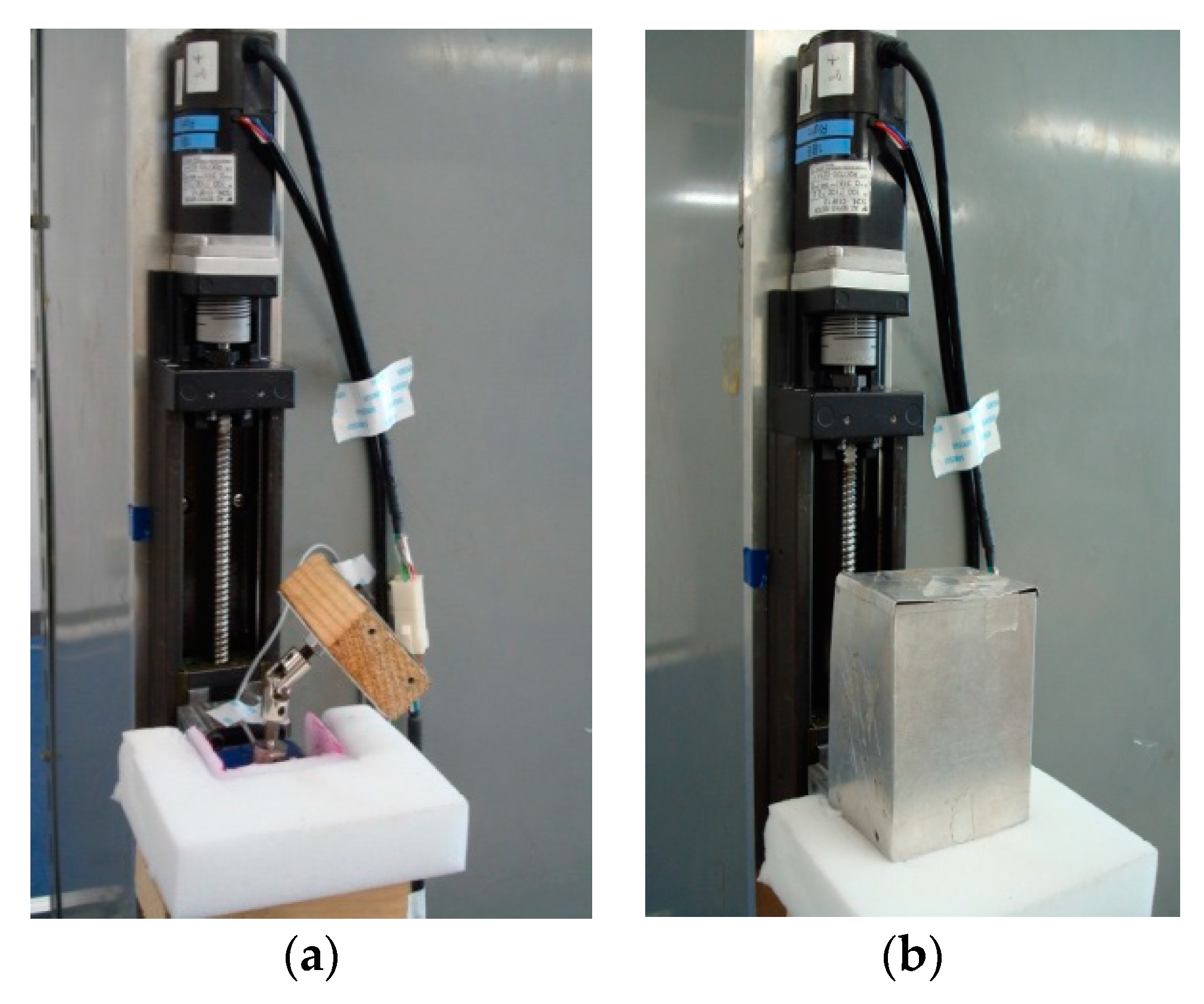

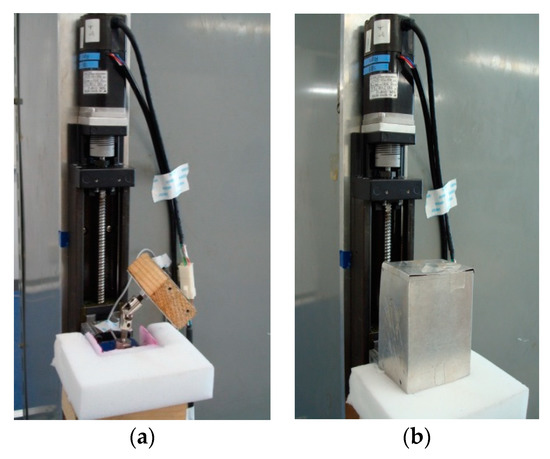

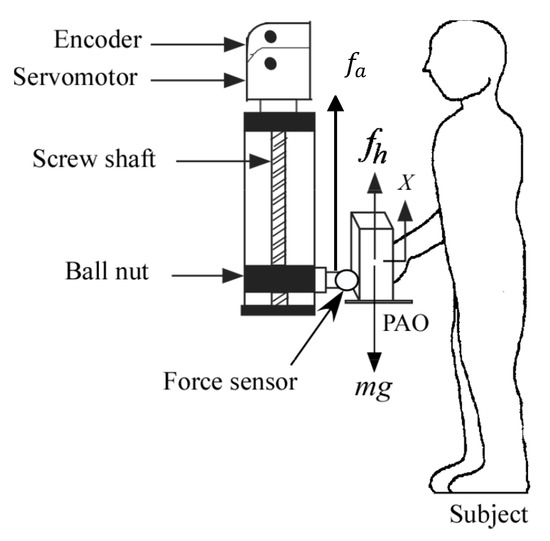

We designed and built a 1-DoF (vertical lifting motion) power assist system using a ball screw actuated by an AC servomotor (see Table 1 and Table 2 for the specifications of the servomotor and the ball screw, respectively). We also installed a noise filter to prevent electrical noise from the power supply from reaching the servo system. We coaxially fixed the servomotor and the ball screw on a metal plate and then attached the plate vertically to a wall, as shown in Figure 2a. The ball screw converted the rotary motion of the servomotor into linear motion. As shown in Figure 2a, we attached a force sensor (see Table 3 for details) to the ball nut of the ball screw through an acrylic resin block. We attached one end of a universal joint to the force sensor and the other end to a wooden block. We lubricated the ball screw with light machine oil and, at times, high-pressure grease to enhance its efficiency and service life. We kept the backlash of the ball screw as small as possible and kept the screw almost free from contamination by wiping it clean properly.

Table 1.

Specifications of the servomotor with encoder.

Table 2.

Specifications of the ball screw system.

Figure 2.

(a) The main power assist device; (b) the complete power assist device with an object (medium size).

Table 3.

Specifications of the force sensor.

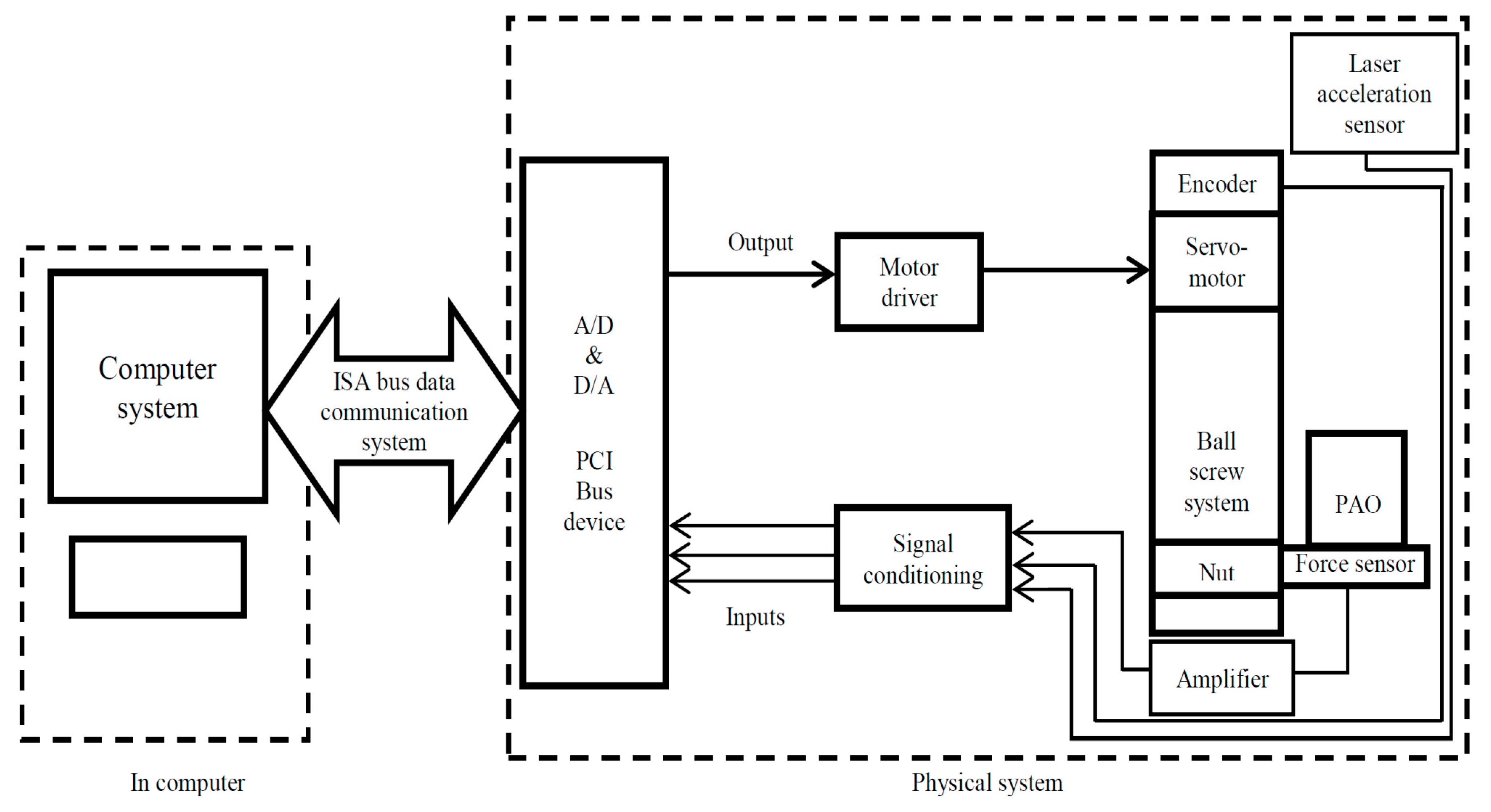

We made three rectangular objects (boxes) of different sizes by bending thin aluminum sheets (thickness: 0.0005 m). We covered the top of each box with a cap made of thin aluminum sheet (thickness: 0.0005 m) and left the bottom and back sides open. These objects, which were lifted with the power assist robotic system, are called power-assisted objects (PAOs). Their dimensions (length × width × height) and self-weights are listed in Table 4. A PAO could be tied to the force sensor through the wooden block and lifted by a subject. Before being lifted, the object rested on a soft surface, as shown in Figure 2b. The servomotor included an encoder (see Table 1) that could measure the position of the object lifted with the power assist system and its derivatives (velocity and acceleration). A dedicated laser-type acceleration sensor, placed above the PAO, measured the acceleration of the lifted PAO directly. Figure 3 shows the experimental setup and the communication system of the power assist system.

Table 4.

Specifications of the power-assisted objects (PAOs).

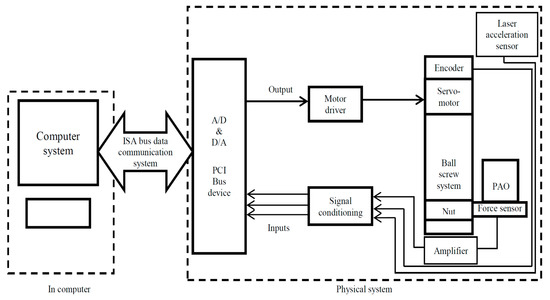

Figure 3.

The experimental setup and the communication channels for the power assist robotic system for lifting objects. A laser-type acceleration sensor is set over the PAO, as the figure shows.

3. Modeling Weight-Perception-Based System Dynamics

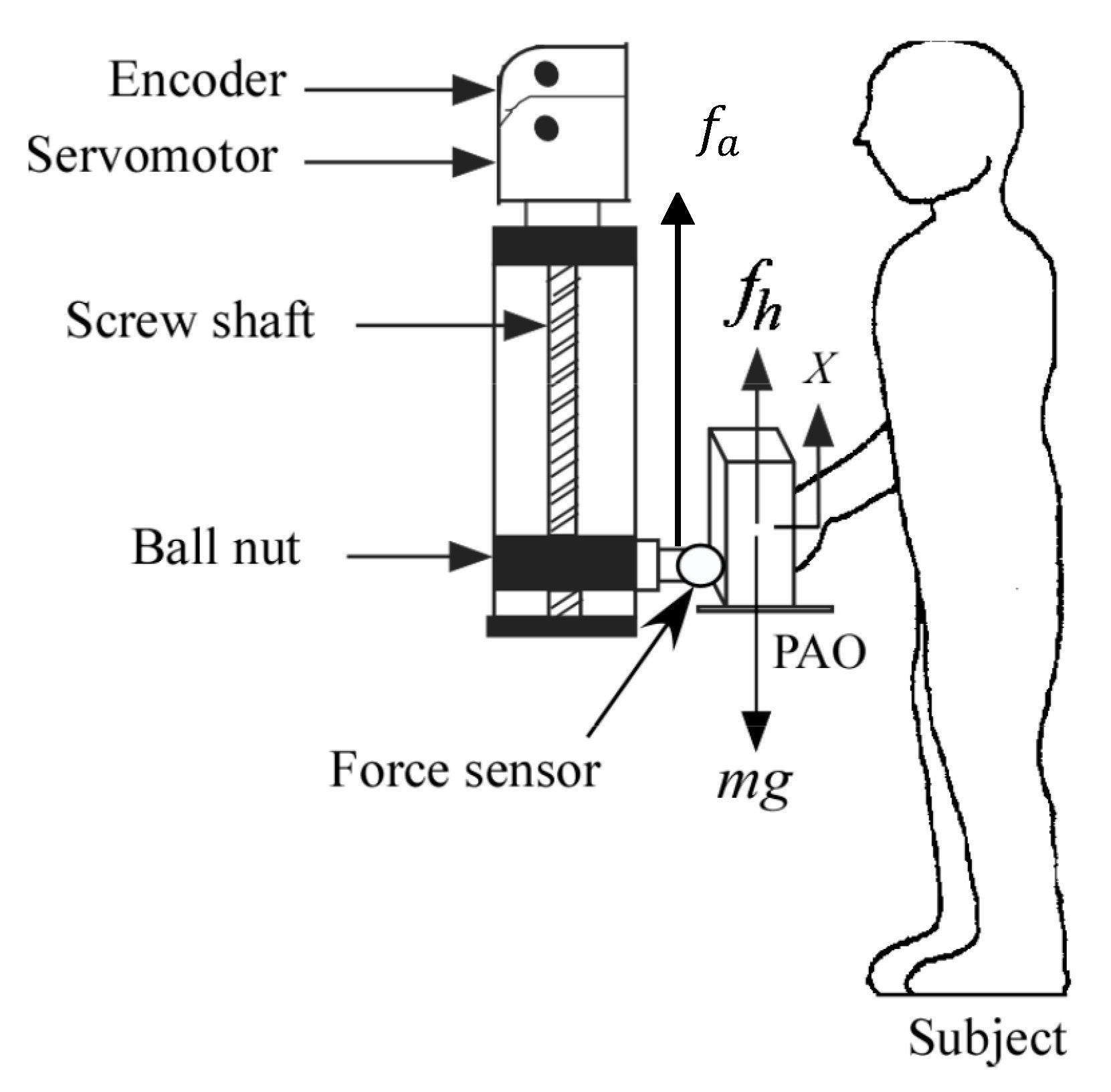

As shown in Figure 4, the dynamics of manipulating (lifting) an object with the power assist system can be expressed by Equation (1):

Figure 4.

The dynamics of lifting a PAO with the power assist robotic system by a subject (human user).

In (1),

If we consider the targeted dynamics, we may ignore friction, viscosity, and disturbances. The dynamics model is thus simplified to Equation (2):

We then included a human user's weight perception in the robot dynamics, which yields Equation (3).

Including a human user's weight perception in the robot dynamics means that the weight perceived due to inertia is expected to differ from the weight perceived due to gravity while a user lifts an object with the power assist robotic system. This may happen because the visually perceived weight differs from the haptically perceived weight. Thus, we assume that the mass parameter used in the inertial force may differ from that in the gravitational force in Equation (2) [31], which is reflected in Equation (3). In Equation (3), m1 is the mass parameter for the inertial force and m2 is the mass parameter for the gravitational force, and we assume that m1 ≠ m2. We then derived dynamics models for the system in three different ways, as follows:

3.1. The First Dynamics Model (Dynamics Model 1)

Treating Equation (3) as the targeted dynamics, we also ignored the actuator force (fa). The dynamics then becomes Equation (4):
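Equation (4) itself is not reproduced in this text. As a minimal sketch, assume it takes the form m1ẍ + m2g = fh, where fh is the human's load force and upward is positive. Under that assumption, the acceleration commanded by a given load force can be computed as below; the function name and the value g = 9.81 m/s² are our own choices, not taken from the original.

```python
G_ACC = 9.81  # gravitational acceleration (m/s^2), assumed value


def model1_acceleration(f_h: float, m1: float = 0.6, m2: float = 0.5) -> float:
    """Object acceleration under the assumed targeted dynamics
    m1 * a + m2 * g = f_h (our reading of Equation (4)).

    f_h: human load force in N, upward positive
    m1:  mass parameter for the inertial force (kg)
    m2:  mass parameter for the gravitational force (kg)
    """
    return (f_h - m2 * G_ACC) / m1


# A load force equal to the "simulated weight" m2 * g holds the object still;
# any excess force accelerates it upward, scaled by 1 / m1.
print(model1_acceleration(0.5 * G_ACC))            # 0.0
print(model1_acceleration(0.5 * G_ACC + 1.2) > 0)  # True
```

Under this form, m2 sets the force needed to hold the object still, while m1 only scales how strongly any excess force accelerates it, which is why the two parameters can be tuned separately.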

3.2. The Second Dynamics Model (Dynamics Model 2)

Subtracting Equation (4) from Equation (2) yields Equation (5), which we rearranged to obtain Equation (6). The resulting model differs from Equation (4), but it still includes weight perception because m1 was assumed to differ from m2.
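As a worked sketch of this step, assume Equation (2) has the friction-free form mẍ + mg = fh + fa and Equation (4) has the form m1ẍ + m2g = fh; these forms are our assumption, since neither equation is reproduced here. Subtracting the second from the first cancels the load force fh:

```latex
\begin{aligned}
(m\ddot{x} + mg) - (m_1\ddot{x} + m_2 g) &= (f_h + f_a) - f_h \\
\Rightarrow\quad f_a &= (m - m_1)\,\ddot{x} + (m - m_2)\,g
\end{aligned}
```

Under these assumptions, the actuator force depends on the acceleration and the mass parameters but not on fh, which is consistent with the later description of dynamics model 2.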

3.3. The Third Dynamics Model (Dynamics Model 3)

Multiplying Equation (2) by m1 and Equation (4) by m, and then subtracting the latter from the former, yields Equation (7). This is a different dynamics model for the power assist robotic system, but it also includes the human's weight perception.
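Under the same assumed forms, mẍ + mg = fh + fa for Equation (2) and m1ẍ + m2g = fh for Equation (4), the multiply-and-subtract step can be written out as follows. This is a sketch of the algebra, not a reproduction of the original Equation (7).

```latex
\begin{aligned}
m_1(m\ddot{x} + mg) - m(m_1\ddot{x} + m_2 g) &= m_1(f_h + f_a) - m f_h \\
m(m_1 - m_2)\,g &= (m_1 - m)\,f_h + m_1 f_a \\
\Rightarrow\quad f_a &= \frac{(m - m_1)\,f_h + m(m_1 - m_2)\,g}{m_1}
\end{aligned}
```

The acceleration terms cancel, so this form needs the load force fh but not the acceleration ẍ.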

One of the objectives of this paper was to compare the applications of the position control with those of the force controls. Furthermore, the force controls may be developed from different perspectives. For example, dynamics model 2 includes the measurement of acceleration but does not include a human’s load force. On the other hand, dynamics model 3 includes a human’s load force but does not include the measurement of acceleration. These differences may have an impact on the performance of the system. Thus, multiple models of the same system give an opportunity to investigate differences in system performance, which may help decide the most favorable system design and performance.

4. Development of Position and Force Control Schemes Based on Weight Perception

4.1. The Position Control Scheme/Method

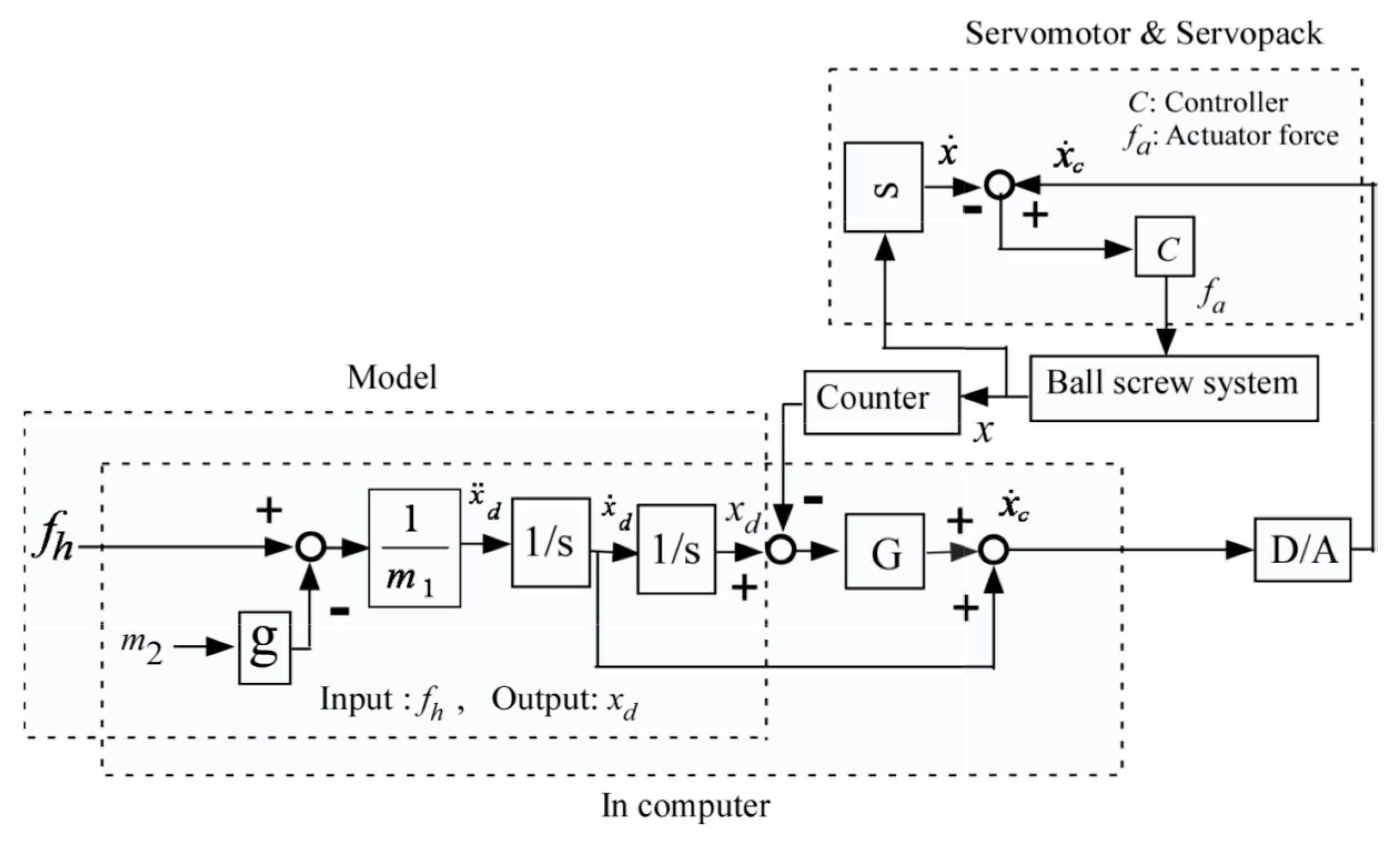

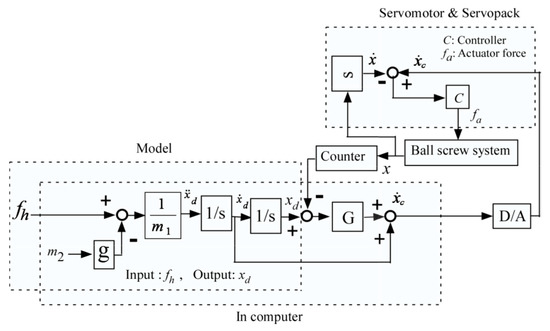

We developed the weight-perception-based feedback position control scheme for the power assist robotic system following Equation (4), as shown in Figure 5. When the position control is simulated in MATLAB/Simulink with the servomotor in velocity control mode, the commanded velocity to the servomotor can be defined by Equation (8), where G denotes the feedback gain; this command is then fed to the servomotor through a digital-to-analog (D/A) converter. The servodrive realizes a control law based on the displacement error (xd − x), using velocity control with positional feedback.
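Equation (8) is not reproduced here. A common form consistent with the description (velocity feedforward plus proportional feedback on the displacement error with gain G) is ẋc = ẋd + G(xd − x), and the sketch below assumes that form. It also assumes an idealized velocity-mode servo that tracks the command exactly, forward-Euler integration of the assumed targeted dynamics m1ẍd + m2g = fh, and hypothetical values for G and the load-force profile.

```python
G_ACC = 9.81  # m/s^2, assumed value


def commanded_velocity(xd_dot: float, xd: float, x: float, gain: float) -> float:
    """Assumed form of Equation (8): velocity feedforward plus proportional
    feedback on the displacement error (xd - x) with gain G."""
    return xd_dot + gain * (xd - x)


def simulate_position_control(load_force, m1=0.6, m2=0.5, gain=50.0,
                              dt=0.001, steps=2000):
    """Discrete-time sketch of the loop in Figure 5.

    load_force: function of time t (s) returning f_h (N); hypothetical input
    gain:       feedback gain G (1/s); hypothetical value
    Returns the desired and actual displacement histories (m).
    """
    xd = xd_dot = x = 0.0
    xd_log, x_log = [], []
    for k in range(steps):
        t = k * dt
        # Desired motion from the assumed targeted dynamics m1*a + m2*g = f_h.
        xd_ddot = (load_force(t) - m2 * G_ACC) / m1
        xd_dot += xd_ddot * dt
        xd += xd_dot * dt
        # Idealized velocity-mode servo: actual velocity equals the command.
        x += commanded_velocity(xd_dot, xd, x, gain) * dt
        xd_log.append(xd)
        x_log.append(x)
    return xd_log, x_log


def push_then_hold(t: float) -> float:
    """Hypothetical load force: 0.3 N above the simulated weight for 0.5 s."""
    return 0.5 * G_ACC + (0.3 if t < 0.5 else 0.0)


xd_log, x_log = simulate_position_control(push_then_hold)
print(f"desired {xd_log[-1]:.3f} m, actual {x_log[-1]:.3f} m")
```

The feedforward term ẋd lets the servo follow the desired motion without waiting for an error to build up, while the G(xd − x) term pulls the actual displacement back toward the desired one.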

Figure 5.

The block diagram of the feedback position control of the power assist robotic system. Here, G denotes the feedback gain, D/A refers to the digital-to-analog converter, and x denotes the actual displacement. The feedback position control is implemented with the servomotor in velocity control mode.

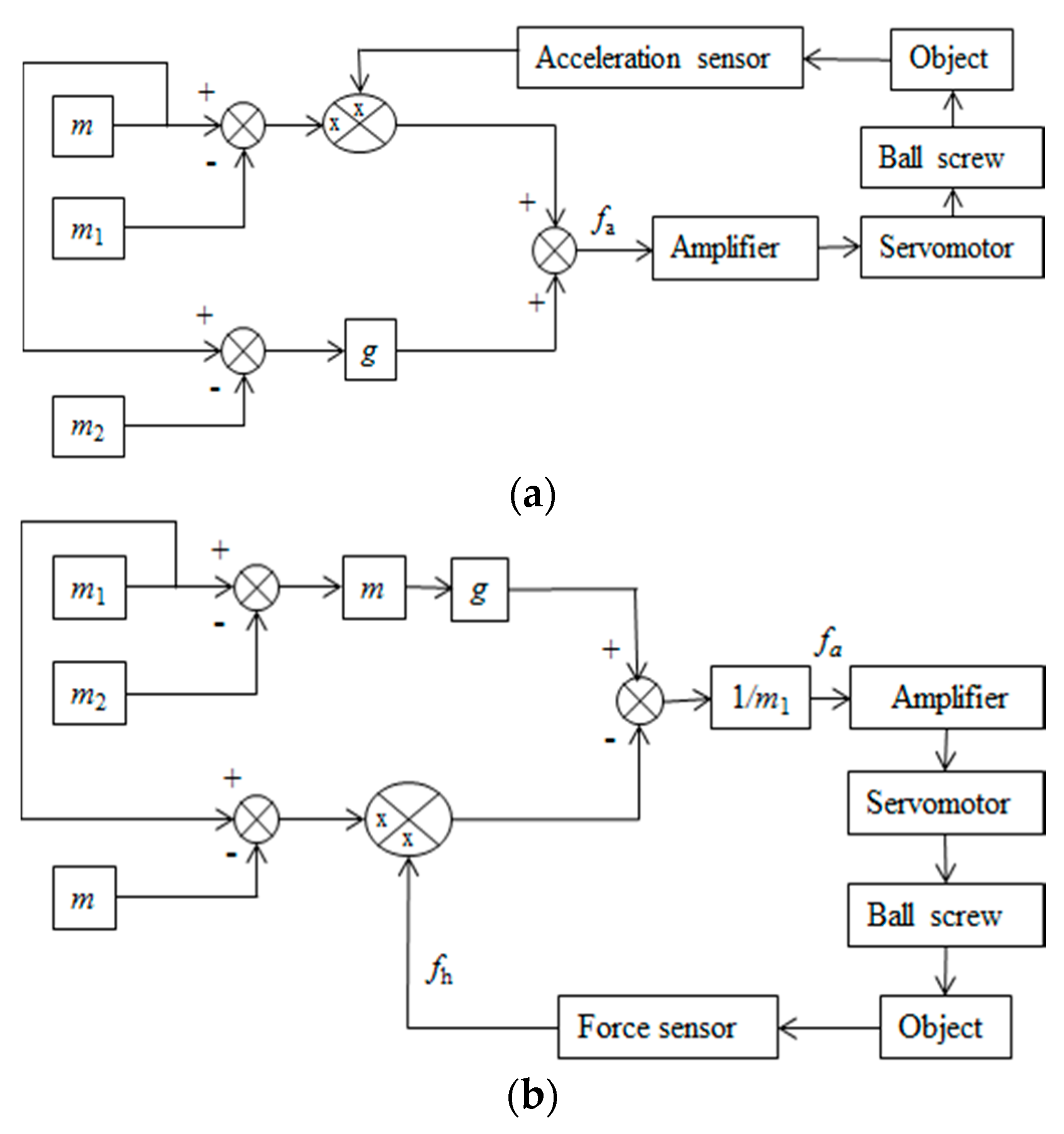

4.2. Force Control Scheme/Method 1

The load force (LF), symbolized by fh, is not included in dynamics model 2 in Equation (6). Therefore, a force sensor is not required to design a force control for the assist system based on this dynamics model, and the scheme can be termed a force-sensorless force control scheme [21]. However, this scheme may require an acceleration sensor. In principle, x (the actual displacement of the object) can be measured with the servomotor's encoder and differentiated to derive the acceleration, but the derived signal may be noisy. Therefore, it seems necessary to use an acceleration sensor for the force control scheme based on this dynamics model. The force control scheme developed from Equation (6) is shown in Figure 6a.
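To illustrate why twice-differentiated encoder positions may be noisy, the sketch below adds a hypothetical ±1 µm position error to a smooth, hypothetical lifting trajectory sampled every 0.001 s and differentiates twice with a central second difference. Dividing by dt² = 10⁻⁶ s² turns micrometer-level position errors into acceleration errors of the order of 1 m/s², comparable to the true signal. The trajectory, error level, and random seed are assumptions, not the encoder's specifications in Table 1.

```python
import math
import random


def second_difference(x, dt):
    """Central second difference a_k = (x[k+1] - 2*x[k] + x[k-1]) / dt**2."""
    return [(x[k + 1] - 2.0 * x[k] + x[k - 1]) / dt ** 2
            for k in range(1, len(x) - 1)]


def rms(values):
    """Root-mean-square value of a list of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


dt = 0.001           # sample time used in the experiments (s)
position_err = 1e-6  # hypothetical +/- position measurement error (m)
rng = random.Random(0)

# Hypothetical smooth lift: x(t) = 0.05 * (1 - cos(2*pi*t)) over 1 s
t = [k * dt for k in range(1001)]
x_true = [0.05 * (1.0 - math.cos(2.0 * math.pi * tk)) for tk in t]
x_meas = [xk + rng.uniform(-position_err, position_err) for xk in x_true]

a_true = second_difference(x_true, dt)
a_meas = second_difference(x_meas, dt)
error = [am - at for am, at in zip(a_meas, a_true)]

print(f"RMS true acceleration:     {rms(a_true):.2f} m/s^2")
print(f"RMS differentiation error: {rms(error):.2f} m/s^2")
```

Low-pass filtering could reduce this noise but would add delay to the acceleration signal, which is one reason a dedicated acceleration sensor is attractive for this scheme.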

Figure 6.

The force control schemes/methods: (a) force control scheme/method 1 (a force control scheme, but it is force sensorless); (b) force control scheme/method 2 (uses a force sensor that measures a human’s load force).

4.3. Force Control Scheme/Method 2

Dynamics model 3 includes fh in Equation (7). Therefore, a force sensor is needed to measure fh for a force control scheme based on this dynamics model, whereas an acceleration sensor is not needed. The force control scheme developed from Equation (7) is shown in Figure 6b.
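The two force control laws can be compared numerically. This sketch assumes that Equation (6) has the form fa = (m − m1)ẍ + (m − m2)g and Equation (7) the form fa = [(m − m1)fh + m(m1 − m2)g]/m1, both derived from the assumed targeted dynamics m1ẍ + m2g = fh; the function names and g = 9.81 m/s² are ours. If the object actually follows the targeted dynamics, both laws should command the same actuator force and differ only in which signal they need.

```python
import math

G_ACC = 9.81  # m/s^2, assumed value


def force_control_1(accel, m=1.0, m1=0.6, m2=0.5):
    """Assumed form of Equation (6): uses acceleration, not load force."""
    return (m - m1) * accel + (m - m2) * G_ACC


def force_control_2(f_h, m=1.0, m1=0.6, m2=0.5):
    """Assumed form of Equation (7): uses load force, not acceleration."""
    return ((m - m1) * f_h + m * (m1 - m2) * G_ACC) / m1


# If the object follows the assumed targeted dynamics m1*a + m2*g = f_h,
# both laws should command the same actuator force.
f_h = 8.0                      # hypothetical load force (N)
a = (f_h - 0.5 * G_ACC) / 0.6  # acceleration implied by f_h
print(math.isclose(force_control_1(a), force_control_2(f_h)))  # True
```

In practice the two laws are fed by different sensors (acceleration versus load force), so their measured behavior can differ even though they agree in this idealized check.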

5. Experiment 1: Evaluation of the Weight-Perception-Based Control Methods

The main objective of this experiment was to evaluate the performance of different control systems derived in Section 4 utilizing the experimental device presented in Figure 2 and the experimental setup shown in Figure 3.

We recruited 20 human subjects (engineering graduate and undergraduate students, mostly male). Their ages ranged from 22 to 30 years (mean = 23.80 years; SD = 2.30 years). The subjects were believed to have no previous knowledge of or experience with the experimental device and the adopted hypothesis. We briefly trained each subject separately before he or she participated in the experimental trials. The study was conducted following local ethical standards and principles for research with human subjects.

At the beginning, each subject separately estimated the weights of three PAOs of different sizes by subjective evaluation based on visual cues [20]. We observed the subjects' evaluations and recorded each subject's estimated weight for each object size separately. We then computed the mean (n = 20) estimated weight for each object size: 2.82, 1.98, and 1.49 kg for the large-, medium-, and small-sized objects, respectively. In this article, we call these visually estimated weights "visual weights." We found that the objects' visual sizes significantly influenced the visually perceived weights [20].

For each object size, we conducted an experimental study (experiment/simulation with the physical robotic system) with each of the three control schemes shown in Figure 5 and Figure 6 separately, using a MATLAB/Simulink environment (solver: ode4, Runge–Kutta; solver type: fixed-step; fundamental sample time: 0.001 s). We used m1 = 0.6 kg, m2 = 0.5 kg, and m = 1.0 kg where applicable. These values were selected because the subjects found the manipulation most favorable when the system was simulated with them, as determined by a reinforcement learning method [7,24,25]. Details of the method are given in Appendix A.
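ode4 is Simulink's fixed-step solver based on the classical fourth-order Runge–Kutta formula. A minimal Python sketch of the same integration scheme is shown below, applied to the assumed targeted dynamics m1ẍ + m2g = fh with m1 = 0.6 kg, m2 = 0.5 kg, and a 0.001 s step. The load-force input is hypothetical, and the sketch does not model the servomotor or the physical device.

```python
G_ACC = 9.81  # m/s^2, assumed value


def targeted_dynamics(t, state, f_h, m1=0.6, m2=0.5):
    """Derivative of (x, v) under the assumed form m1*a + m2*g = f_h(t)."""
    _, v = state
    return v, (f_h(t) - m2 * G_ACC) / m1


def rk4_step(f, t, state, dt, *args):
    """One classical fourth-order Runge-Kutta step (the scheme ode4 uses)."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))
    k1 = f(t, state, *args)
    k2 = f(t + dt / 2, shifted(state, k1, dt / 2), *args)
    k3 = f(t + dt / 2, shifted(state, k2, dt / 2), *args)
    k4 = f(t + dt, shifted(state, k3, dt), *args)
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def simulate(f_h, duration=1.0, dt=0.001):
    """Fixed-step integration from rest; returns the final (x, v)."""
    state, t = (0.0, 0.0), 0.0
    for _ in range(round(duration / dt)):
        state = rk4_step(targeted_dynamics, t, state, dt, f_h)
        t += dt
    return state


# Hypothetical constant load force 0.3 N above the simulated weight
x, v = simulate(lambda t: 0.5 * G_ACC + 0.3)
print(round(x, 3), round(v, 3))  # 0.25 0.5
```

With a constant excess force the acceleration is constant (0.3/0.6 = 0.5 m/s²), so the result matches the closed-form x = ½at² and v = at after 1 s.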

Here, m is the mass value that would be used to simulate the power assist system for lifting different objects if weight perception were not considered (the usual case, as in Equation (2)). In contrast, m2 is the mass value used for the gravitational force when weight perception is considered (the novelty in our case, as in Equation (3)). We call m2 the "simulated weight" of the object when weight perception is considered.

In each experimental trial, each subject lifted a PAO with the assist system up to a target height, held it there for several seconds, and then released it. The subject then estimated the perceived haptic weight of the PAO by comparing it with a set of prearranged reference weights. We observed and recorded the weight perceived by each subject for each trial separately; we call these perceived weights "haptic weights." Immediately after estimating each PAO's haptic weight in a trial, the subject subjectively scored the performance of the assist system in various terms, such as power assist system motion, maneuverability, system stability, user safety, perceived naturalness, and ease of use, on a 7-point bipolar, equal-interval rating scale as follows [23]:

- It is (undoubtedly) the best (the score is +3).

- It is (conspicuously) better (the score is +2).

- It is (moderately) better (the score is +1).

- It should be on the borderline (the score is 0).

- It is (moderately) worse (the score is −1).

- It is (conspicuously) worse (the score is −2).

- It is (undoubtedly) the worst (the score is −3).
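The scoring rule above is a direct mapping from verbal anchors to integers, so each criterion can be summarized by a mean and SD across subjects. A minimal sketch follows; the shortened anchor labels and the five example ratings are hypothetical (the study itself had 20 subjects).

```python
import statistics

# Scores of the 7-point bipolar scale listed above (labels shortened).
SCALE = {
    "best": 3,
    "conspicuously better": 2,
    "moderately better": 1,
    "borderline": 0,
    "moderately worse": -1,
    "conspicuously worse": -2,
    "worst": -3,
}


def summarize(ratings):
    """Return the mean and sample SD of ratings given as verbal anchors."""
    scores = [SCALE[r] for r in ratings]
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical maneuverability ratings from five subjects
avg, sd = summarize(["best", "conspicuously better", "conspicuously better",
                     "moderately better", "best"])
print(avg, round(sd, 2))  # 2.2 0.84
```

Treating the scores as interval data, as the mean and SD do here, relies on the scale being equal-interval, which is how it is described above.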

We recorded all evaluation scores for each trial separately. For the position control scheme, we acquired and recorded both acceleration and load force data for each trial; for force control scheme/method 1, we acquired acceleration data; and for force control scheme/method 2, we acquired load force data. All data were recorded separately for each trial.

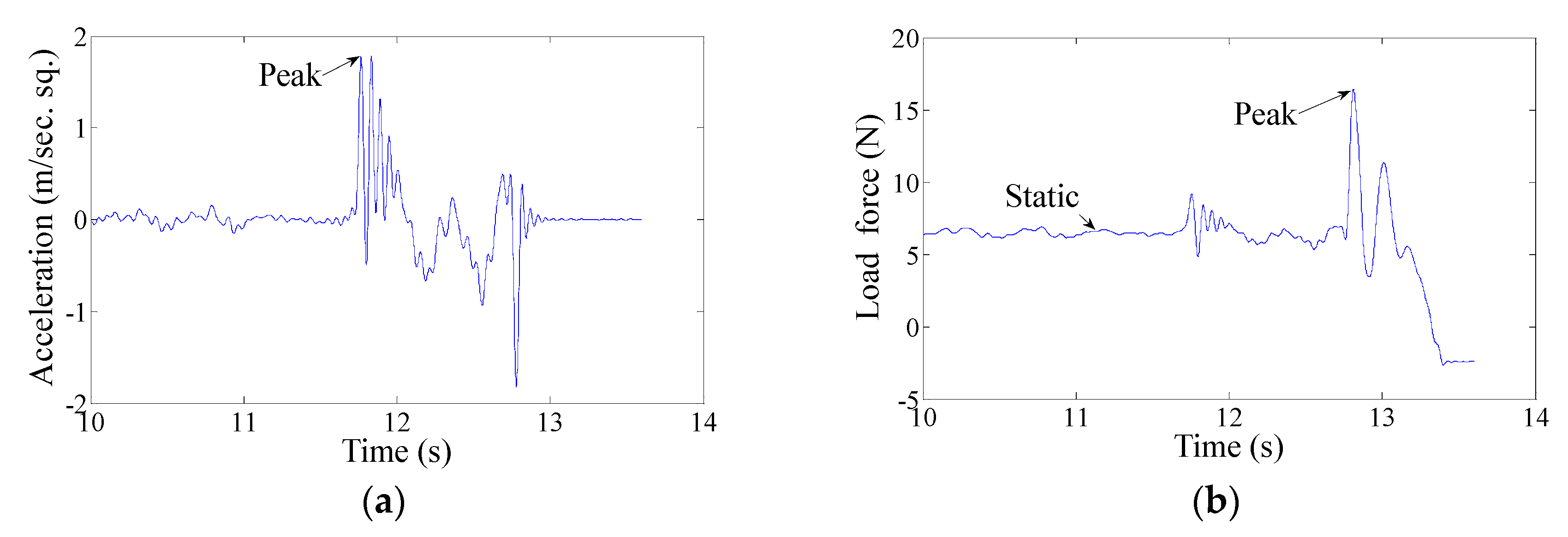

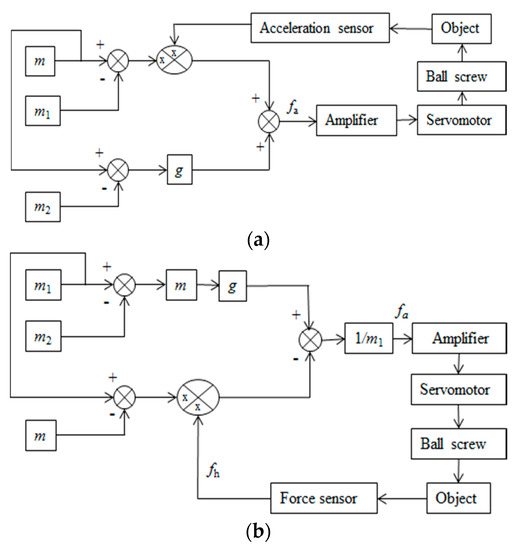

6. Results of Experiment 1

Figure 7 shows sample acceleration data (for force control scheme/method 1) and sample load force data (for force control scheme/method 2). For the load force data, the static force corresponds to the simulated weight of the object, which is about 5 N because m2 = 0.5 kg. However, the figure shows a slightly higher static force (>5 N), probably because the human hand was not stationary while holding the object. The peak load force (PLF) of about 17 N is close to the force corresponding to the mean visually perceived weight (1.98 kg, or about 19 N), which suggests that the feedforward load force is programmed based on the visually perceived weight, in line with our assumption in Section 1 [20]. Because the PLF (about 17 N) far exceeds the static force (about 5 N), which is the load force actually required to lift the object [20], the human evidently applies excessive load force when lifting the object.
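The force values quoted above follow from converting masses to weights with W = mg. A quick check, assuming g = 9.81 m/s² and using the approximate 17 N peak read from Figure 7b:

```python
G_ACC = 9.81  # m/s^2, assumed value of g


def weight_newtons(mass_kg: float) -> float:
    """Weight W = m * g in newtons for a mass given in kilograms."""
    return mass_kg * G_ACC


static_force = weight_newtons(0.5)    # simulated weight, m2 = 0.5 kg
visual_weight = weight_newtons(1.98)  # mean visual weight, medium object
peak_load_force = 17.0                # approximate PLF from Figure 7b (N)

print(f"static force {static_force:.1f} N, visual weight {visual_weight:.1f} N")
# static force 4.9 N, visual weight 19.4 N
print(f"PLF / static force = {peak_load_force / static_force:.1f}")
# PLF / static force = 3.5
```

The ratio of roughly 3.5 quantifies how much more load force the subject applied at the peak than was needed to hold the object.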

Figure 7.

(a) Sample acceleration data for force control scheme/method 1 and (b) sample load force data for force control scheme/method 2 for the medium-sized object. Positive values indicate upward object movement.

For each object size, we computed the mean peak acceleration for force control scheme/method 1 and the mean PLF for force control scheme/method 2; likewise, we computed both the mean PLF and the mean peak acceleration for the position control scheme. The mean PLFs and mean peak accelerations were larger than the actual requirements. We assume that the large PLFs produced large accelerations and that both might degrade system performance, as mentioned in Section 1 [20]. Detailed results for the mean PLFs, mean peak accelerations, and system performance are presented in a later section. These results indicate that an effective strategy is needed to eliminate or reduce excessive PLFs and peak accelerations to improve human–system interactions and system performance [23].
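Computing a mean peak value per object size amounts to taking the maximum of each trial's recorded signal and averaging over subjects. A minimal sketch with hypothetical, heavily downsampled load-force traces follows; with real data, each list would hold all samples recorded in one trial.

```python
import statistics


def peak(trace):
    """Peak (maximum, i.e., largest upward) value of one trial's signal."""
    return max(trace)


def mean_peak(trials):
    """Mean of per-trial peaks, e.g., over the subjects for one object size."""
    return statistics.mean([peak(tr) for tr in trials])


# Hypothetical load-force traces (N) from three subjects lifting one object
trials = [
    [0.0, 6.1, 15.8, 9.0, 5.3],
    [0.0, 7.4, 17.2, 8.1, 5.1],
    [0.0, 5.9, 16.6, 7.7, 5.2],
]
print(round(mean_peak(trials), 2))  # 16.53
```

Taking the maximum assumes the upward direction is positive, as in Figure 7; a signed peak in the other direction would need min() instead.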

Figure 7 shows the magnitude of the measured load force when the control system used m1 = 0.6 kg and m2 = 0.5 kg, the smaller values of m1 and m2 (see Appendix A). These values were the end result of the reinforcement learning algorithm and indicate the best, or most favorable, manipulation condition. However, before these best values were determined through the reinforcement learning procedure, the subjects had to lift the objects with other m1 and m2 values in the control system. Consider the potentially worst situation, in which m1 = 1.5 kg and m2 = 1.5 kg (see Appendix A) were used in the control system. In that condition, the measured load force could be very high. The force magnitude also depends on the human's perception of the object weight [20]: if the human perceives the object to be very heavy, the load force may increase further [20]. This is why a force sensor with a large capacity was needed (Table 3). In addition, the availability of a force sensor with lower capacity but better resolution was also an issue. In any case, using a higher-capacity force sensor, while keeping the sensor and system size within the expected limits, has no adverse effect on the system design and application. This motivated us to choose a force sensor with a relatively large capacity, as listed in Table 3.

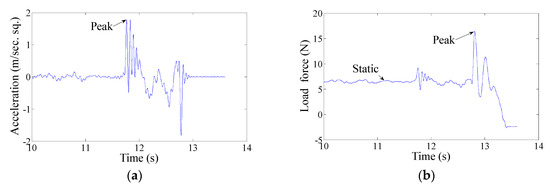

7. Experiment 2: Evaluation of a Novel Adaptive Control Strategy to Improve System Performance

The main objective of this experiment was to derive and evaluate the performance of a newly proposed adaptive control strategy and to compare its performance with that of different control systems derived in Section 4 utilizing the experimental device presented in Figure 2 and the experimental setup shown in Figure 3.

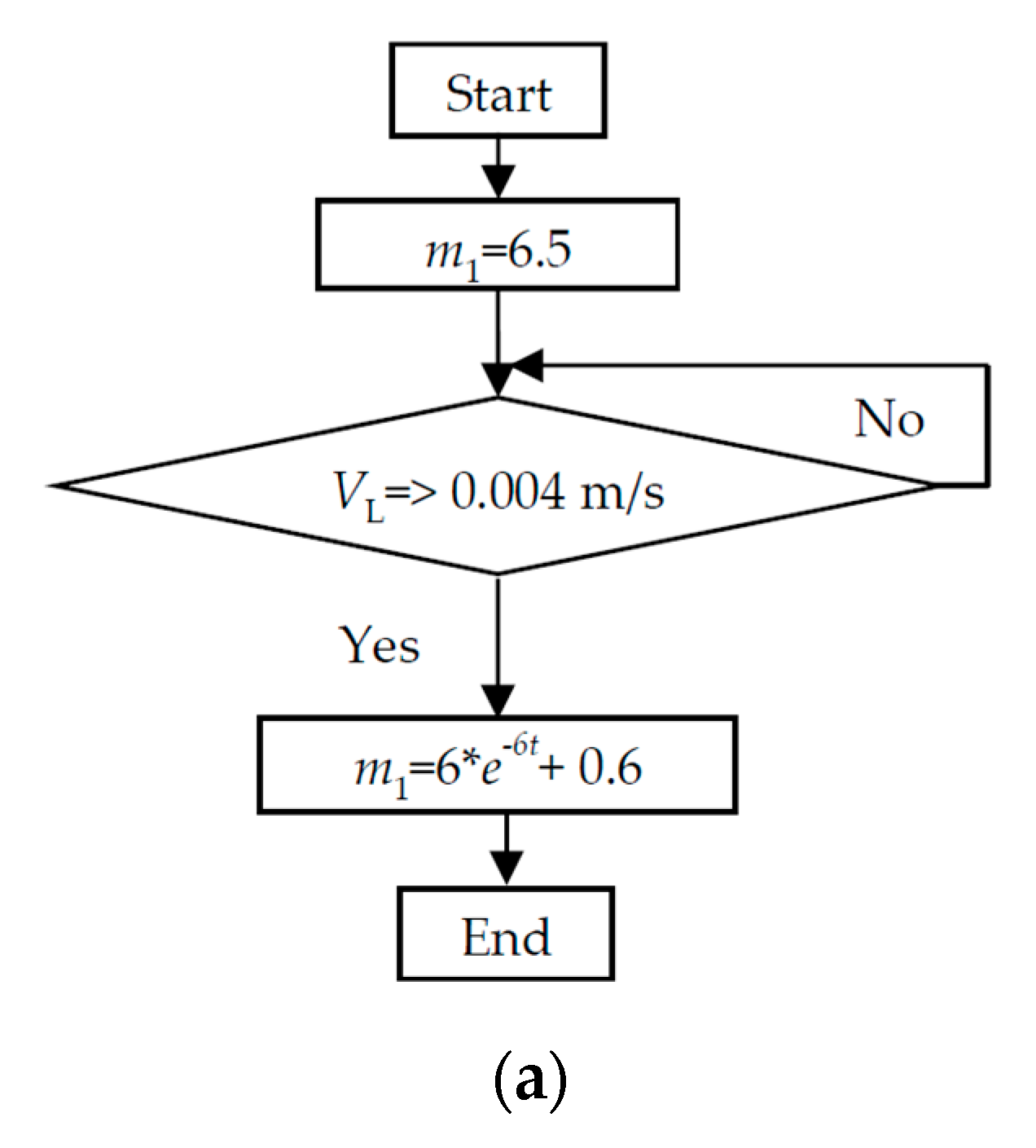

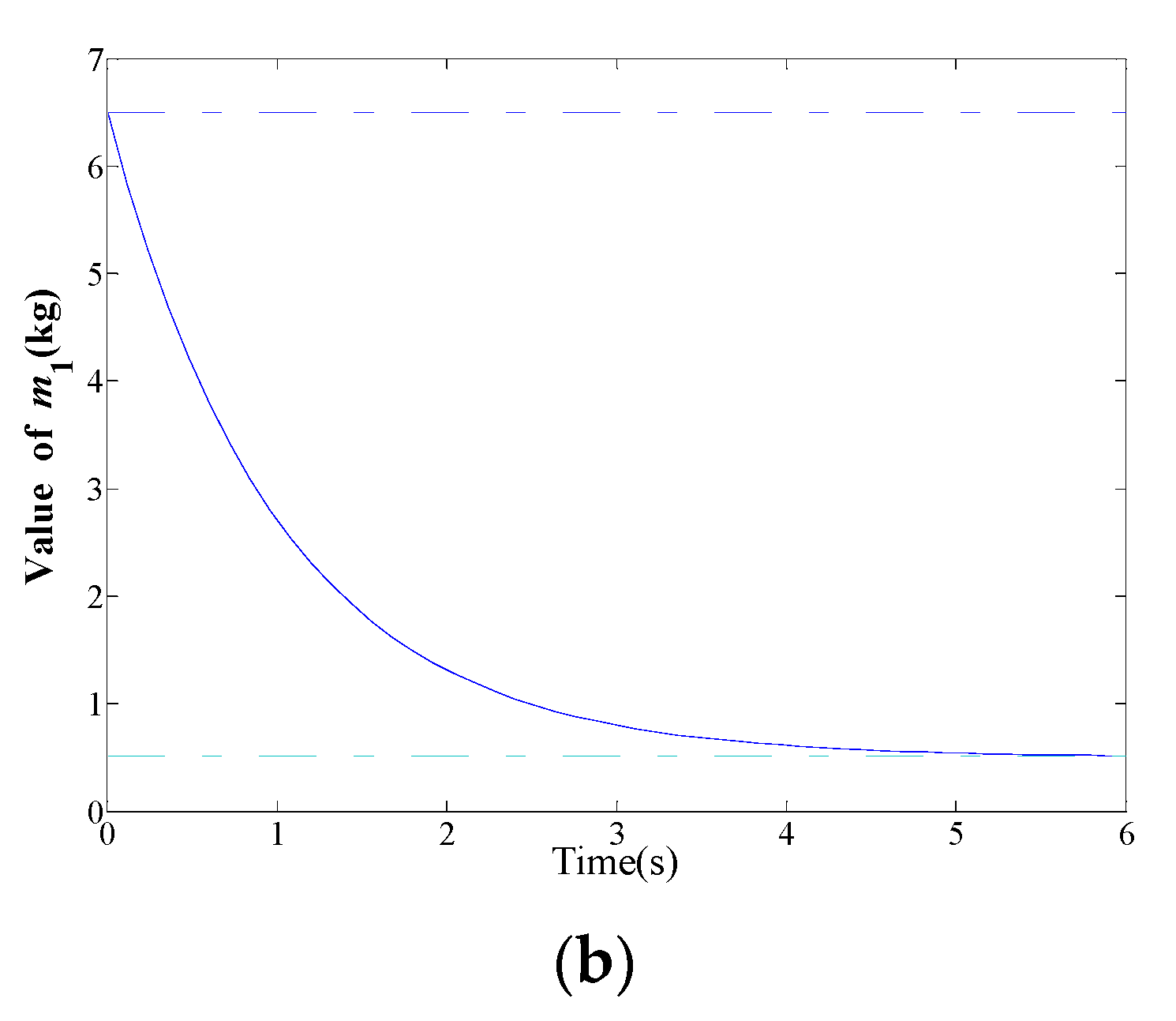

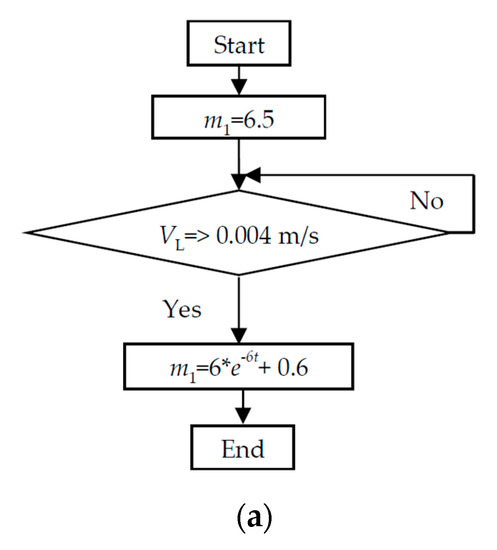

We performed experiment 2 to reduce excessive PLFs and peak accelerations by applying a novel adaptive control strategy. We previously observed in [7] that PLF magnitudes are linearly proportional to m1 values, but human users usually do not perceive such changes in m1. Hence, in the novel adaptive control strategy, the value of m1 declines exponentially from a large value (e.g., m1 = 6.5) to a small value (e.g., m1 = 0.6) once the subject lifts the PAO with the assist system and the lifting velocity of the PAO exceeds a threshold (VL). Because m1 is linearly proportional to the PLFs, reducing m1 should reduce the PLFs (and accelerations) proportionally [7]. Furthermore, reducing the PLFs should not adversely affect the relationships expressed in Equation (3), because a human user does not perceive changes in m1 [7]. Therefore, the novel adaptive control strategy may be defined by the following two equations for m1 and m2. Figure 8 shows the algorithm as a flowchart.

$$m_1 = 6e^{-6t} + 0.6 \qquad (9)$$

$$m_2 = 0.5$$

Figure 8.

The flowchart for the novel adaptive control algorithm (a) and the hypothetical time trajectory of m1 for lifting the PAO when the novel control algorithm is implemented (b).

In the algorithm, the threshold VL, the initial value m1 = 6.5, and the two coefficients of 6 in Equation (9) were determined by trial and error. We conducted experiment 2 with the same procedures and setup as experiment 1 (i.e., the tasks were repeated), but the control systems shown in Figure 5 and Figure 6 were modified by the novel control algorithm shown in Figure 8 (i.e., m1 in Figure 5 and Figure 6 was replaced by m1 as expressed in Equation (9)).
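One way to read the flowchart in Figure 8a as a per-sample update is sketched below. The threshold value, the class and function names, and two behaviors are our assumptions rather than details from the original: t in Equation (9) is taken as the time since the lifting velocity first exceeded VL, and once triggered the decay continues even if the velocity later drops.

```python
import math

M1_INITIAL = 6.5  # m1 before the threshold is exceeded (kg), from the text
M2 = 0.5          # m2 stays constant (kg)


def adaptive_m1(t_since_trigger: float) -> float:
    """Equation (9), m1 = 6 * exp(-6 t) + 0.6, with t assumed to be the time
    in seconds since the lifting velocity first exceeded V_L."""
    return 6.0 * math.exp(-6.0 * t_since_trigger) + 0.6


class AdaptiveMassScheduler:
    """Hypothetical per-sample reading of the flowchart in Figure 8a."""

    def __init__(self, v_threshold: float):
        self.v_threshold = v_threshold  # V_L (m/s), hypothetical value
        self.t_trigger = None           # time when V_L was first exceeded

    def update(self, t: float, lifting_velocity: float):
        """Return the (m1, m2) pair the controller should use at time t."""
        if self.t_trigger is None and lifting_velocity > self.v_threshold:
            self.t_trigger = t
        if self.t_trigger is None:
            return M1_INITIAL, M2
        return adaptive_m1(t - self.t_trigger), M2


sched = AdaptiveMassScheduler(v_threshold=0.05)
print(sched.update(0.0, 0.01))  # (6.5, 0.5): threshold not yet exceeded
sched.update(0.1, 0.08)         # threshold exceeded: decay starts here
m1_late, _ = sched.update(1.1, 0.02)
print(round(m1_late, 3))        # 0.615
```

After about one second, m1 has settled close to its final value of 0.6, so the large initial m1 only affects the early, high-force phase of the lift.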

8. Results of Experiment 2

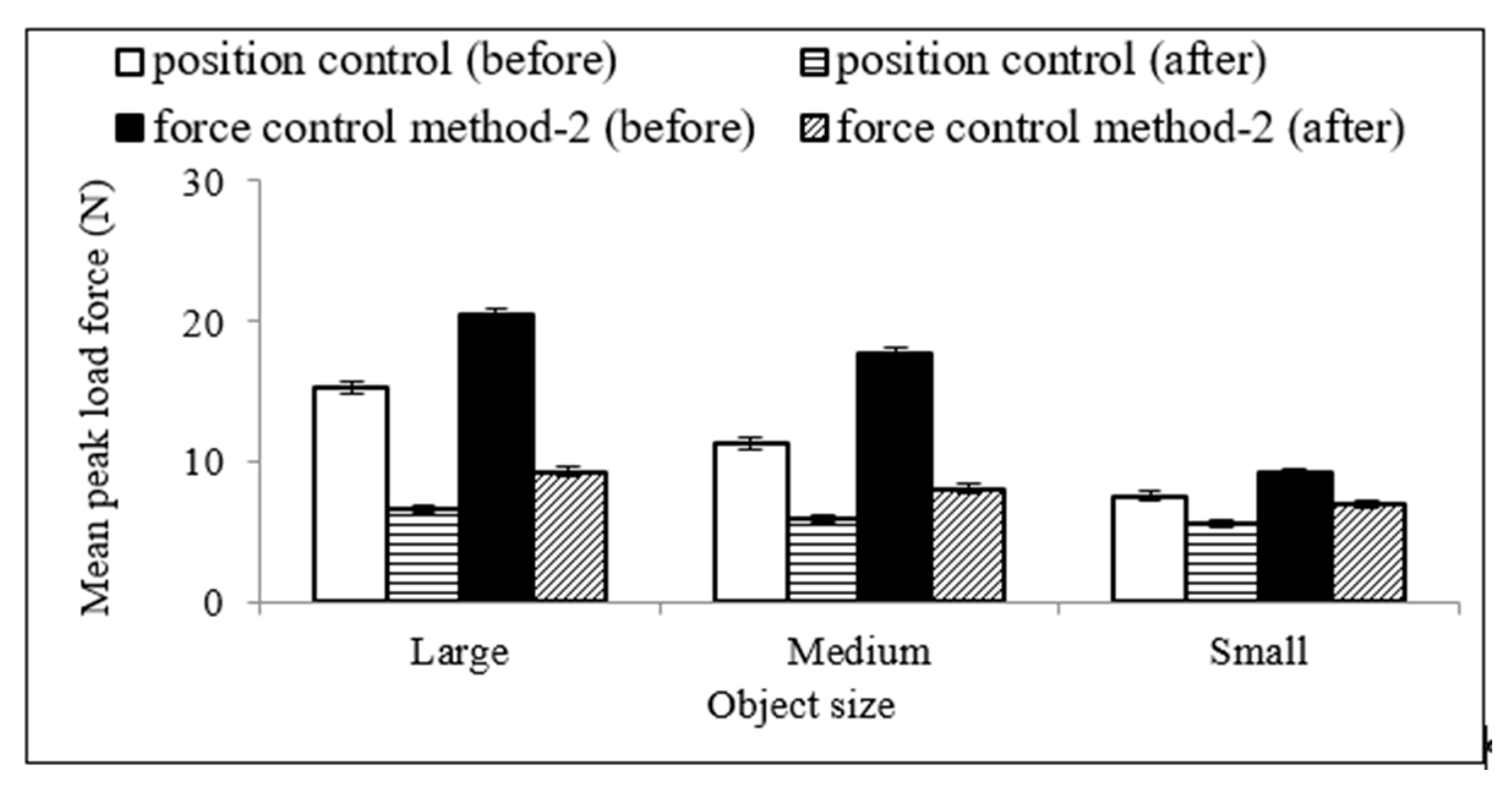

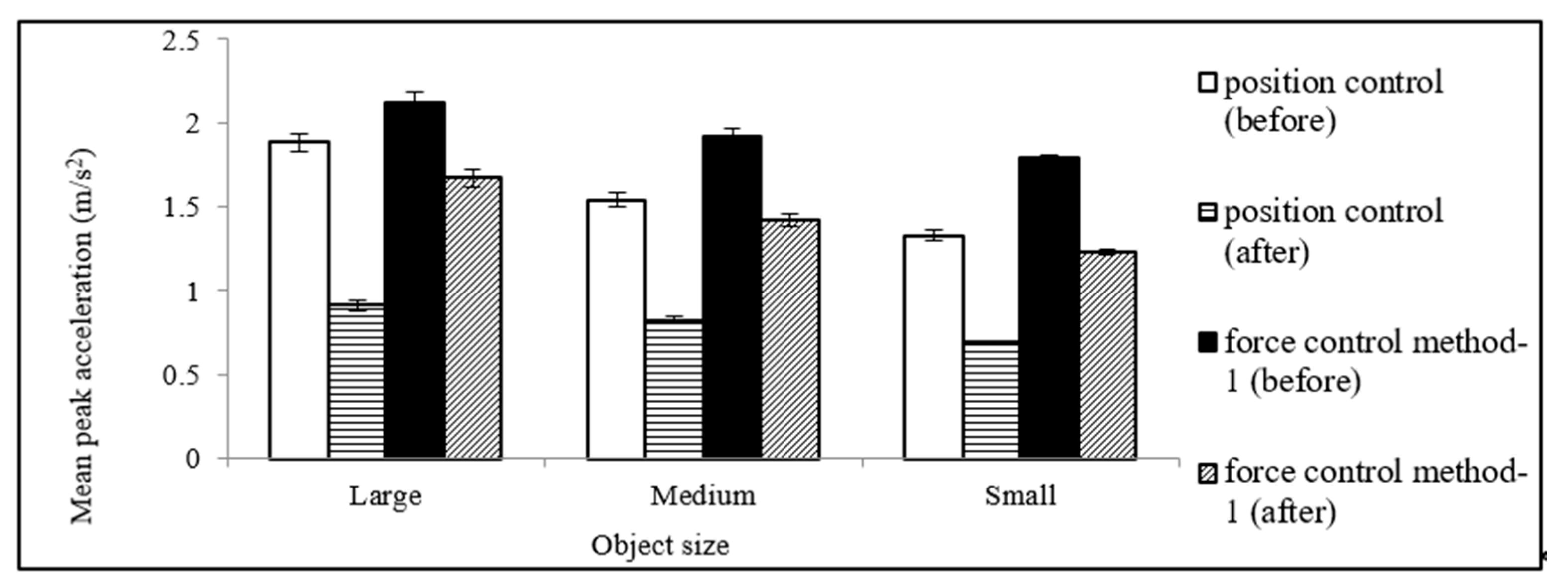

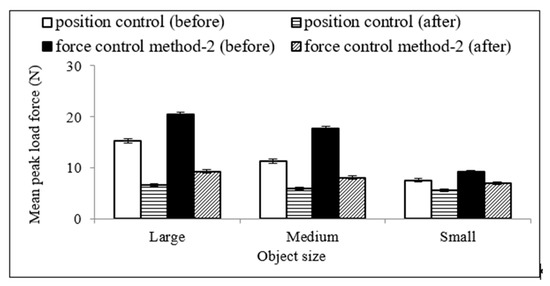

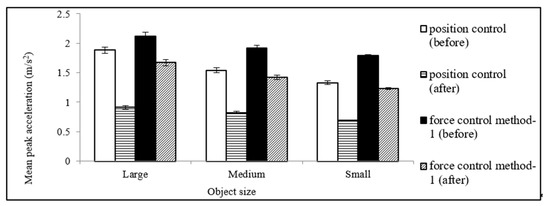

For experiment 2 (after implementing the novel adaptive control algorithm), we determined the mean PLF and peak acceleration for each object size under the position control scheme, the mean peak acceleration for each object size under force control method 1, and the mean PLF for each object size under force control method 2, and compared them with the corresponding values from experiment 1 (before implementing the algorithm). The results are shown in Figure 9 and Figure 10.

Figure 9.

Mean (n = 20) peak load force for the position control and force control scheme/method 2 for objects of different sizes before (experiment 1) and after (experiment 2) the implementation of the novel adaptive control algorithm.

Figure 10.

Mean (here, n = 20) peak accelerations for the position control and force control method 1 for different sizes of lifted objects before (experiment 1) and after (experiment 2) the implementation of the novel adaptive control algorithm.

Figure 9 shows that (i) before the novel control algorithm was implemented, the PLFs for the position control scheme were smaller than those for force control method 2, and the PLFs for both control methods exceeded the minimum load force requirement (about 5 N, equal to the object's simulated weight); (ii) the novel control algorithm reduced the PLFs, but the reduction was larger for the position control, for which the PLF fell almost to the minimum, than for the force control; and (iii) the load force was proportional to the visual object size [20]. Figure 10 shows that (i) before the novel algorithm was implemented, the peak accelerations for the position control scheme were smaller than those for force control method 1, although the accelerations for both control methods were large; (ii) the novel control algorithm reduced the peak accelerations, again more for the position control than for the force control; and (iii) the accelerations were proportional to the visual object size [20].

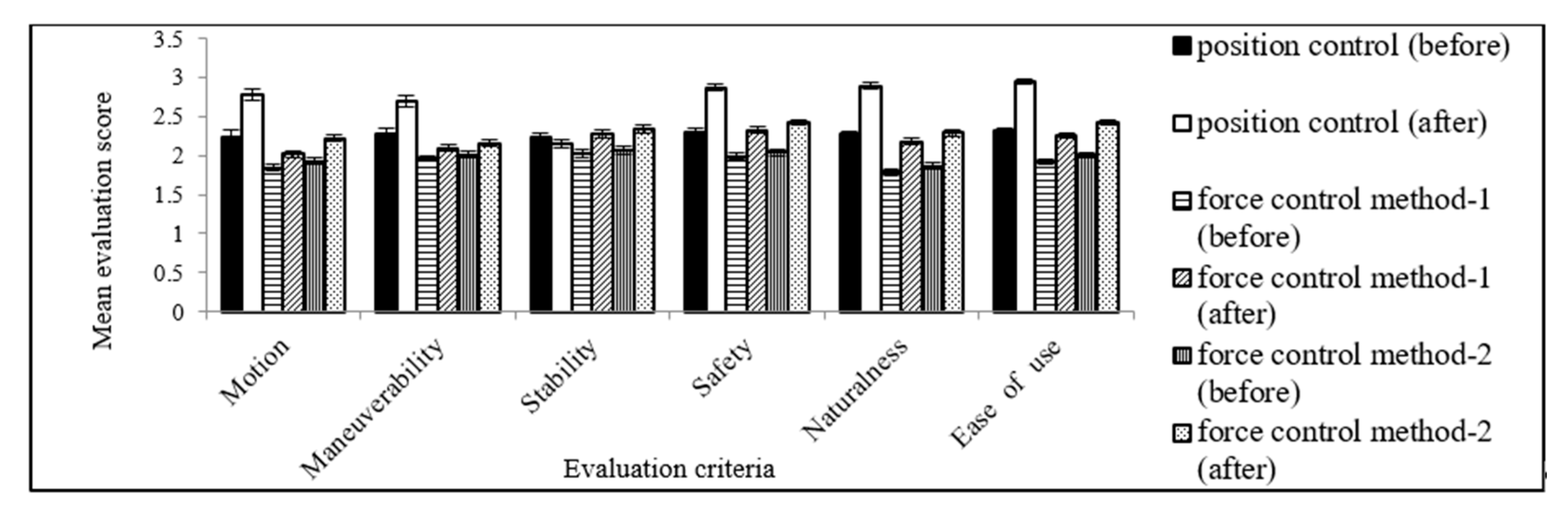

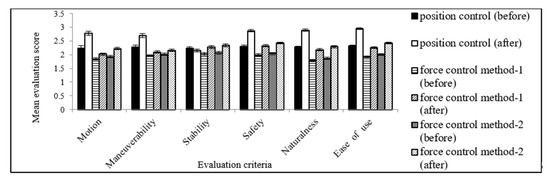

For each object size and each control method, we computed the mean evaluation score for each performance criterion after implementing the novel adaptive control algorithm (experiment 2), and compared the results with those of experiment 1, which was conducted before the algorithm was implemented. The results for the medium-sized object are shown in Figure 11 as representative results. In general, the performance of all three control methods in experiment 1 was satisfactory, even though that experiment did not benefit from the novel adaptive control algorithm. We believe that the favorable results in experiment 1 stem from including the human user's weight perception (cognition) in the dynamics and controls, through the optimum mass values (m1, m2, m) obtained with the machine learning model [26,27]. For experiment 1, the position control scheme produced the best performance on all evaluation criteria, followed by force control method 2 and then force control method 1. In addition, the performance of all three control methods improved significantly with the novel adaptive control algorithm in experiment 2. The improvement was largest for the position control scheme, followed by force control method 2 and then force control method 1.
The analyses showed that the ranking of the control methods by system performance exactly matched their ranking by PLFs and peak accelerations. We therefore conclude that the position control scheme produced the highest performance of the three control methods in both experimental conditions (before and after implementing the novel adaptive control algorithm), probably because its PLFs and peak accelerations were the smallest. Analyses of variance (ANOVAs) showed that the variations in the performance scores between object sizes were not statistically significant (p > 0.05 in each case). A likely reason is that the subjects evaluated the assist system based on their haptic senses, on which the visual object sizes (visual cues) had little or no influence [20]. The variations in the performance scores between subjects were also not statistically significant (p > 0.05 in each case).
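The text does not specify the ANOVA design. As a minimal sketch, assuming a one-way ANOVA with object size as the single factor, the F statistic compares the spread of the group means with the spread of scores within each group. The function name is hypothetical and the scores below are made-up toy numbers, not data from this study.

```python
from statistics import mean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Toy scores for small, medium, and large objects (illustrative only).
    small = [8, 9, 9, 8, 9]
    medium = [9, 8, 9, 9, 8]
    large = [8, 9, 8, 9, 9]
    f_stat, df_b, df_w = one_way_anova_f([small, medium, large])
    print(f"F({df_b}, {df_w}) = {f_stat:.3f}")
```

The p-value is the upper tail of the F distribution with (df_between, df_within) degrees of freedom, for example `scipy.stats.f.sf(F, df_between, df_within)`; `scipy.stats.f_oneway` performs both steps directly. A two-way design (object size × subject) would need a different model.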

Figure 11.

Mean (here, n = 20) performance evaluation scores for the medium-sized object for the three control methods before (experiment 1) and after (experiment 2) the implementation of the novel adaptive control algorithm.

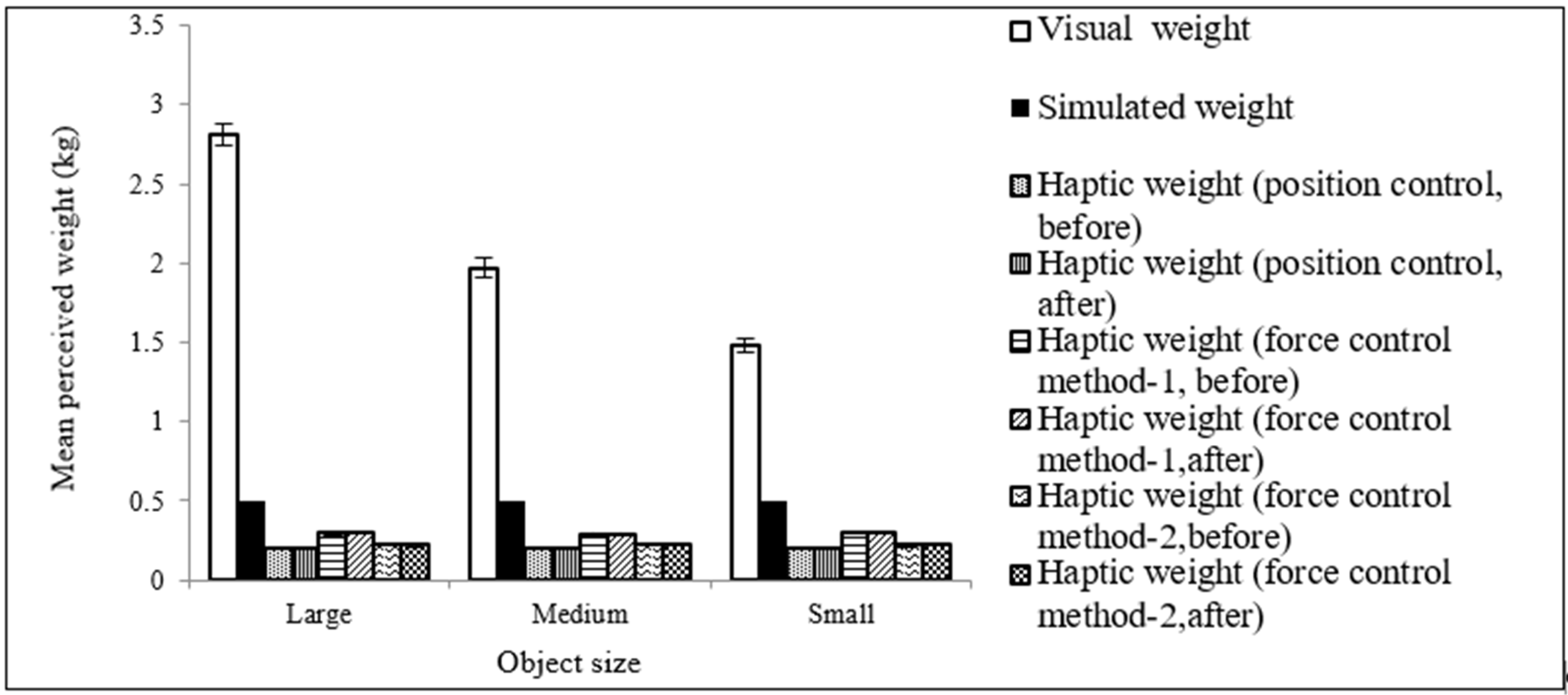

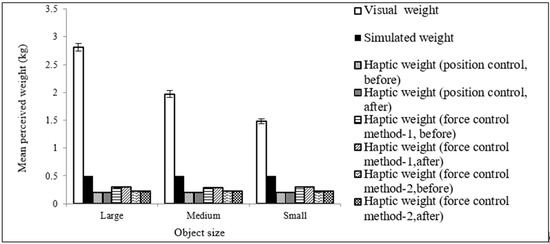

For each object size and each control method, we computed the mean haptic weights for experiment 2 (after the control schemes were modified) and compared them with those of experiment 1 and with the visually perceived and simulated object weights. The comparison is shown in Figure 12. On average, the power-assist-lifted weights (the haptic weights) were about 58%, 50%, and 40% of the simulated weights for force control method 1, force control method 2, and the position control scheme, respectively. These findings indirectly indicate power assistance of about 42%, 50%, and 60% in object lifting for force control methods 1 and 2 and the position control scheme, respectively [7]. On average, the haptic weights were also about 10%, 14%, and 19% of the visually perceived weights of the large, medium, and small objects, respectively. We anticipated such findings in Section 3 when introducing our weight perception hypothesis, and the results thus support the hypothesis. The results also show that the novel adaptive control algorithm in experiment 2 did not change the haptically perceived weights, which indicates that, as expected, it did not adversely affect the relationships expressed in Equation (3). Finally, the haptically perceived weights for the position control scheme were the smallest of the three control methods, suggesting that the position control scheme produced the best system performance because of its lowest haptic weights (i.e., its highest power assistance).
Similarly, the haptic weights for force control method 2 were lower than those for force control method 1. We believe that the lower haptic weights (i.e., the higher power assistance) of force control method 2 produced its better system performance relative to force control method 1. ANOVAs showed that the variations in the haptic weights between object sizes were not statistically significant (p > 0.05 in each case). A probable reason is that the subjects estimated the haptic weights based on their haptic senses, on which the visual object sizes (visual cues) had little or no influence [20]. The ANOVAs also showed that the variations in the haptic weights between subjects were not statistically significant (p > 0.05 in each case).
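The power-assistance percentages above follow from the haptic-to-simulated weight ratios as assistance = 1 − (haptic weight / simulated weight). A minimal sketch of that arithmetic, using the average ratios reported in the text; the function names are illustrative.

```python
# Average haptic weight as a fraction of the simulated weight (from the text).
HAPTIC_FRACTION = {
    "force control method 1": 0.58,
    "force control method 2": 0.50,
    "position control": 0.40,
}


def power_assistance(haptic_fraction: float) -> float:
    """Share of the simulated weight carried by the assist system."""
    return 1.0 - haptic_fraction


def rank_by_assistance(fractions: dict[str, float]) -> list[tuple[str, float]]:
    """Control methods ordered from highest to lowest power assistance."""
    scored = {name: power_assistance(f) for name, f in fractions.items()}
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, assist in rank_by_assistance(HAPTIC_FRACTION):
        print(f"{name}: {assist:.0%} assistance")
```

The resulting order (position control, then force control method 2, then force control method 1) matches the performance ranking reported above.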

Figure 12.

Mean (here, n = 20) weights (visually perceived, simulated, and haptically perceived) for the three control methods for different sizes of lifted objects before (experiment 1) and after (experiment 2) the implementation of the novel adaptive control algorithm.

9. Discussion

9.1. Reliability and Acceptance of Subjective Evaluations

A significant portion of the presented results was based on subjective evaluations (see Figure 11 and Figure 12), although we also conducted objective measurements (see Figure 7, Figure 9 and Figure 10). We believe that the subjective evaluation results are acceptable and reliable because (i) in many human–system interactions it is difficult to generate objective data, so subjective evaluations are necessary, and (ii) the subjective evaluations followed well-established standards. Such subjective evaluations have proven acceptable and reliable in many past studies [23]. The subjective results can also be triangulated with the objective results through mixed-methods analyses [29].

9.2. Validity of the Experimental System Design and the Experimental Results

The ultimate objective of this line of research is to apply the proposed control strategies to power assist robotic devices for handling heavy and/or large objects in various industries, so as to achieve satisfactory human user–robot interaction and performance. However, the presented experimental system was a simple 1-DoF testbed, and the selected object sizes and weights were small. Nonetheless, we posit that the presented experimental system satisfactorily demonstrated the efficacy of (i) considering a user's weight perception in the robot dynamics and controls, as hypothesized; (ii) deriving several alternative control strategies that account for a user's weight perception; (iii) implementing the derived control strategies and evaluating them experimentally; (iv) deriving, implementing, and evaluating a novel adaptive control algorithm based on weight perception; and (v) comparing system performance across the derived control strategies. We posit that the results can help improve existing power assist robotic systems and benefit researchers developing and evaluating real multi-DoF power assist robotic systems for handling heavy and large materials and objects.

9.3. Superiority of Position Control: The Reasoning

We think that the higher power assistance of the position control (Figure 12) is one reason for its superiority. When objects are moved from one position to another with power assist, positional inaccuracy usually causes problems, so positional accuracy is the primary requirement. In addition, human users perceive the characteristics and performance of a robotic system driven by positional commands more ergonomically, comfortably, intensely, and realistically [7]. The position control strategy also appears able to compensate for various dynamic effects in the system configuration, such as inertia, friction, and viscosity, which are not easily compensated through force control. We believe that these dynamic effects and the resulting nonlinear forces may affect the system configuration and performance under force control, especially for multi-degree-of-freedom systems, whereas such effects are less significant under position control [21,22,28]. All these factors favor position control over force control.

On the other hand, if the difference between m and m1 (i.e., m − m1) is large, the proposed position control strategy may place a high load on the servomotor of the assist system, resulting in system instability. In the proposed position control system, however, this difference was not large, and the problem was not observed [7].

10. Conclusions and Future Extension

We presented the development of a 1-DoF testbed power assist robotic system for lifting small objects of different sizes. We reflected a human user's weight perception in the model of the robotic system dynamics and derived a position control strategy and two force control strategies based on the resulting dynamics models. We implemented the control methods separately and evaluated their performance; all of them produced satisfactory performance. We also derived and implemented a novel adaptive control algorithm that further improved the performance of the control methods, and the position control showed the largest improvement with it. The main novelties were (i) including a human user's weight perception (cognition) in the dynamics and control of the system; (ii) deriving a novel adaptive control strategy for lifting objects based on the human-centric dynamics, together with a reinforcement-learning-based method for predicting the control parameters that produce the best control performance; and (iii) comparing the performance of position and force control methods in the same experimental setting, all with the aim of improving the human friendliness and performance of the system. In addition, the amount of power assistance in object manipulation under different conditions was quantified. The derived human-centric control systems were shown to make the assist system more human friendly and to produce better system performance. The results can help in designing and developing control strategies for power assist robotic systems for manipulating heavy and/or large objects in various industries, which can improve manipulation productivity and coworkers' health and safety and ensure better human–machine interaction.

In the future, we plan to verify and validate the results using heavy and/or large objects and a multi-DoF power assist robotic system. We will use more subjects and trials to create a bigger training dataset to achieve more accurate reinforcement learning outcomes. We will design a Q-learning method to further learn the control policies and modify the controls accordingly.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted following local ethical standards and principles for research involving human subjects.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are available from the author on request.

Acknowledgments

The research was partly conducted when the author was with the Mechanical Engineering Department, Mie University, 1577 Kurimamachiya-cho, Tsu City, Mie, 514-8507, Japan. The author acknowledges the support that he received from his past lab members and Ryojun Ikeura of Mie University, Japan.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A

The same reinforcement learning procedure was followed for the position control in Figure 5 and the force controls in Figure 6. For example, the position control for the robotic system in Figure 2 was implemented in a MATLAB/Simulink environment. Table A1 lists the candidate values of m1 and m2, selected based on our experience, which form 36 (6 × 6) pairs. In each experimental trial, a pair of m1 and m2 values from Table A1 was randomly selected and entered into the control system (Figure 5); the exact values were not disclosed to the subject. The assist system was then run, and the subject lifted an object with the system to a height of about 0.1 m, held it there for a few seconds, and then released it. For each pair of m1 and m2 values, the trial was repeated three times by the same subject. The subject then evaluated (scored) the human–robot interaction (HRI) on a rating scale from 1 to 10, where 1 indicated the least favorable HRI and 10 the most favorable HRI. The HRI was defined in terms of maneuverability, safety, and stability, and this definition was communicated to the subject before the lifting. The subject perceived the maneuverability, safety, and stability of the collaborative system during the lifting task; these combined perceptions formed an integrated impression, on which the subject based the HRI rating. In total, 20 subjects participated individually, and each subject lifted the object (e.g., the medium-sized object) 36 × 3 = 108 times (three trials for each of the 36 pairs). The grand total was 36 × 3 × 20 = 2160 trials, yielding 2160 rating values.

Table A1.

Values of m1 and m2 used in the experiment (6 × 6 pairs).


| Parameter | Candidate values (kg) |
|---|---|
| m1 | 0.25, 0.50, 0.60, 1.0, 1.25, 1.5 |
| m2 | 0.25, 0.50, 0.60, 1.0, 1.25, 1.5 |
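The data-collection design described above (36 candidate pairs, three trials per pair, 20 subjects) can be sketched as a trial schedule. This is an illustrative sketch, not the author's MATLAB/Simulink code: the text says only that pairs were selected randomly, so shuffling the pair order per subject, running the three trials of a pair consecutively, and the fixed seed are assumptions, and `build_schedule` is a hypothetical name.

```python
import random
from itertools import product

# Candidate values from Table A1 (kg); the same six values for m1 and m2.
CANDIDATES = [0.25, 0.50, 0.60, 1.0, 1.25, 1.5]
REPEATS_PER_PAIR = 3
N_SUBJECTS = 20


def build_schedule(seed: int = 0) -> list[tuple[int, float, float]]:
    """Return (subject, m1, m2) for every trial in presentation order."""
    rng = random.Random(seed)
    pairs = list(product(CANDIDATES, CANDIDATES))  # 36 (m1, m2) pairs
    schedule = []
    for subject in range(1, N_SUBJECTS + 1):
        order = pairs[:]
        rng.shuffle(order)  # random order of the 36 pairs for this subject
        for m1, m2 in order:
            # The same pair is lifted three times in a row.
            schedule.extend([(subject, m1, m2)] * REPEATS_PER_PAIR)
    return schedule


if __name__ == "__main__":
    schedule = build_schedule()
    print(len(schedule))  # 36 pairs x 3 trials x 20 subjects
```

Each (m1, m2) pair appears 60 times in the full schedule (3 trials for each of 20 subjects), giving the 2160 trials reported in the text.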

The 2160 trials (2160 pairs of m1 and m2 values) and the 2160 corresponding ratings formed the training dataset of a machine learning problem. The training dataset can be written as in Equation (A1) (where n is the number of trials), with the pairs of m1 and m2 values as the input vector (x) and the subjective ratings as the output (y) [24,25]. Table A2 partly illustrates the training dataset. A target function (f), as in Equation (A2), was learned from the input–output relationships in Equation (A3) and used to predict the performance rating for given pairs of m1 and m2 values.

Table A2.

The sample machine learning training dataset (partial list for illustration only).


| Trials | Pairs of m1 and m2 Values (Inputs, x) | Performance Rating (Outputs, y) |
|---|---|---|
| 1 | m1 = 1.0 kg, m2 = 1.0 kg | 5 |
| 2 | m1 = 1.0 kg, m2 = 1.5 kg | 2 |

The 2160 pairs of m1 and m2 values were available from the beginning of the experiments, but how the users felt about and evaluated the HRI for these pairs was not. The 2160 ratings obtained in the trials completed the training dataset; for this reason, this could be considered a reinforcement learning model [24,25]. We then developed a simple frequency-based optimization algorithm to pick the pair (or the few pairs) of m1 and m2 values that produced the highest ratings (e.g., 9 and 10), i.e., the target function f in Equation (A2). The results showed that m1 = 0.6 kg and m2 = 0.5 kg produced most of the 10 ratings, and m1 = 1.0 kg and m2 = 0.5 kg produced most of the 9 and 10 ratings. Hence, m1 = 0.6 kg and m2 = 0.5 kg were taken as the learned control system parameters predicted to give the highest levels of HRI (and thus the best control performance).
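The frequency-based optimization algorithm is not detailed in the text. One plausible minimal reading, shown here as an assumption rather than the author's algorithm, counts how often each (m1, m2) pair received ratings at the chosen top levels and returns the pairs in order of that count. The `Sample` record follows the input/output layout of Table A2; the function name and the toy samples are hypothetical and are not the study's ratings.

```python
from collections import Counter
from typing import NamedTuple


class Sample(NamedTuple):
    m1: float    # input x, first component (kg)
    m2: float    # input x, second component (kg)
    rating: int  # output y, subjective HRI rating on a 1-10 scale


def top_rated_pairs(samples, levels=(10,)):
    """Count, per (m1, m2) pair, the ratings that fall in `levels`."""
    counts = Counter()
    for s in samples:
        if s.rating in levels:
            counts[(s.m1, s.m2)] += 1
    # Most frequent pair first; pairs with no top-level ratings are omitted.
    return counts.most_common()


if __name__ == "__main__":
    toy = [  # hypothetical ratings, for illustration only
        Sample(0.6, 0.5, 10), Sample(0.6, 0.5, 10), Sample(0.6, 0.5, 9),
        Sample(1.0, 0.5, 9), Sample(1.0, 0.5, 9), Sample(1.0, 0.5, 9),
        Sample(1.0, 0.5, 10), Sample(1.0, 1.0, 5), Sample(1.0, 1.5, 2),
    ]
    print(top_rated_pairs(toy, levels=(10,))[0])    # pair with the most 10s
    print(top_rated_pairs(toy, levels=(9, 10))[0])  # pair with the most 9s and 10s
```

Counting at a single level (10) and at a combined level (9 and 10) mirrors the two criteria mentioned in the text, which can single out different pairs.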

In the testing phase, we randomly selected 10 of the 20 original subjects. We set m1 = 0.6 kg and m2 = 0.5 kg in the control system shown in Figure 5, without disclosing these values to the subjects. Each subject separately lifted the object (e.g., the medium-sized object) with the robotic system once and then rated the perceived HRI on the 10-point scale. Nine subjects gave a rating of 10 out of 10, and one subject gave 9 out of 10. This suggests that the control parameters learned in the training phase successfully predicted the highest levels of HRI, as confirmed in the testing phase. We followed similar approaches to learn the best control parameters for the force controls and obtained similar results.
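The testing-phase summary is simple arithmetic on the ten reported ratings (nine 10s and one 9), giving a mean of 9.9 and 90% maximum ratings. A minimal sketch, in which the random draw of 10 of 20 subject IDs, the seed, and the helper names are illustrative assumptions:

```python
import random
from statistics import mean


def pick_test_subjects(n_total: int = 20, n_test: int = 10, seed: int = 0) -> list[int]:
    """Randomly choose test subjects (1-based IDs) without replacement."""
    return sorted(random.Random(seed).sample(range(1, n_total + 1), n_test))


def summarize(ratings: list[int]) -> tuple[float, float]:
    """Return (mean rating, share of maximum ratings) on the 10-point scale."""
    return mean(ratings), sum(r == 10 for r in ratings) / len(ratings)


if __name__ == "__main__":
    print(pick_test_subjects())
    reported = [10] * 9 + [9]  # testing-phase ratings as reported in the text
    avg, share_max = summarize(reported)
    print(f"mean = {avg:.1f}, share of 10/10 ratings = {share_max:.0%}")
```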

References

- Ayoub, M.M. Problems and solutions in manual materials handling: The state of the art. Ergonomics 1992, 35, 713–728.

- Okamura, A.; Smaby, N.; Cutkosky, M. An overview of dexterous manipulation. In Proceedings of the IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 24–28 April 2000; pp. 255–262.

- Kazerooni, H. Extender: A case study for human-robot interaction via transfer of power and information signals. In Proceedings of the 1993 2nd IEEE International Workshop on Robot and Human Communication, Tokyo, Japan, 3–5 November 1993; pp. 10–20.

- Peter, N.; Kazerooni, H. Industrial-strength human-assisted walking robots. IEEE Robot. Autom. Mag. 2001, 8, 18–25.

- Shibata, T.; Murakami, T. Power-assist control of pushing task by repulsive compliance control in electric wheelchair. IEEE Trans. Ind. Electron. 2012, 59, 511–520.

- Seki, H.; Tanohata, N. Fuzzy control for electric power-assisted wheelchair driving on disturbance roads. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012, 42, 1624–1632.

- Rahman, S.M.M.; Ikeura, R.; Hayakawa, S. Novel human-centric force control methods of power assist robots for object manipulation. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO), Shenzhen, China, 12–14 December 2013; pp. 340–345.

- Doi, T.; Yamada, H.; Ikemoto, T.; Naratani, H. Simulation of a pneumatic hand crane power-assist system. J. Robot. Mechatron. 2007, 20, 2321–2326.

- Niinuma, A.; Miyoshi, T.; Terashima, K.; Miyashita, Y. Evaluation of effectiveness of a power-assisted wire suspension system compared to conventional machine. In Proceedings of the 2009 International Conference on Mechatronics and Automation, Changchun, China, 9–12 August 2009; pp. 369–374.

- Hara, S. A smooth switching from power-assist control to automatic transfer control and its application to a transfer machine. IEEE Trans. Ind. Electron. 2007, 54, 638–650.

- Yagi, E.; Harada, D.; Kobayashi, M. Upper-limb power-assist control for agriculture load lifting. Int. J. Autom. Technol. 2009, 3, 716–722.

- Takubo, T.; Arai, H.; Tanie, K.; Arai, T. Human-robot cooperative handling using variable virtual nonholonomic constraint. Int. J. Autom. Technol. 2009, 3, 653–662.

- Dimeas, F.; Koustoumpardis, P.; Aspragathos, N. Admittance neuro-control of a lifting device to reduce human effort. Adv. Robot. 2013, 27, 1013–1022.

- Hayashi, T.; Kawamoto, H.; Sankai, Y. Control method of robot suit HAL working as operator’s muscle using biological and dynamical information. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 3063–3068.

- Power Loader Light. Available online: http://psuf.panasonic.co.jp/alc/en/index.html (accessed on 20 January 2021).

- Kazerooni, H. Exoskeletons for human power augmentation. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 3459–3464.

- Sato, M.; Yagi, E. A study on power assist suit using pneumatic actuators based on calculated retaining torques for lift-up motion. In Proceedings of the 2011 SICE Annual Conference, Tokyo, Japan, 13–18 September 2011; pp. 628–632.

- Takaoka, D.; Iwaki, T.; Yamada, M.; Tsukamoto, K. Development of a transfer supporting equipment-applying power assist control. In Proceedings of the 4th IEEE International Workshop on Advanced Motion Control—AMC’96—MIE, Mie, Japan, 18–21 March 1996; pp. 92–96.

- Hara, H.; Sankai, Y. HAL equipped with passive mechanism. In Proceedings of the 2012 IEEE/SICE International Symposium on System Integration (SII), Fukuoka, Japan, 16–18 December 2012; pp. 1–6.

- Gordon, A.; Forssberg, H.; Johansson, R.; Westling, G. Visual size cues in the programming of manipulative forces during precision grip. Exp. Brain Res. 1991, 83, 477–482.

- Seki, H.; Iso, M.; Hori, Y. How to design force sensorless power assist robot considering environmental characteristics-position control based or force control based. In Proceedings of the IEEE 2002 28th Annual Conference of the Industrial Electronics Society. IECON 02, Sevilla, Spain, 5–8 November 2002; Volume 3, pp. 2255–2260.

- Artemiadis, P.; Kyriakopoulos, K. EMG-based position and force control of a robot arm: Application to teleoperation and orthosis. In Proceedings of the 2007 IEEE/ASME International Conference On Advanced Intelligent Mechatronics, Zurich, Switzerland, 4–7 September 2007; pp. 1–6.

- Ikeura, R.; Inooka, H.; Mizutani, K. Subjective evaluation for maneuverability of a robot cooperating with humans. J. Robot. Mechatron. 2002, 14, 514–519.

- Neftci, E.O.; Averbeck, B.B. Reinforcement learning in artificial and biological systems. Nat. Mach. Intell. 2019, 1, 133–143.

- Botvinick, M.; Ritter, S.; Wang, J.; Kurth-Nelson, Z.; Blundell, C.; Hassabis, D. Reinforcement learning, fast and slow. Trends Cogn. Sci. 2019, 23, 408–422.

- Zhang, Y.; Li, S.; Nolan, K.J.; Zanotto, D. Reinforcement learning assist-as-needed control for robot assisted gait training. In Proceedings of the 2020 8th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), New York, NY, USA, 29 November–1 December 2020; pp. 785–790.

- Khalili, M.; Zhang, Y.; Gil, A.; Zhao, L.; Kuo, C.; Van Der Loos, H.F.M.; Borisoff, J.F. Development of a learning-based intention detection framework for power-assisted manual wheelchair users. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 118–123.

- Raziyev, Y.; Garifulin, R.; Shintemirov, A.; Do, T.D. Development of a power assist lifting device with a fuzzy PID speed regulator. IEEE Access 2019, 7, 30724–30731.

- Hebesberger, D.; Koertner, T.; Gisinger, C.; Pripfl, J.; Dondrup, C. Lessons learned from the deployment of a long-term autonomous robot as companion in physical therapy for older adults with dementia a mixed methods study. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 27–34.

- Thoesen, A.; McBryan, T.; Green, M.; Mick, D.; Martia, J.; Marvi, H. Revisiting scaling laws for robotic mobility in granular media. IEEE Robot. Autom. Lett. 2020, 5, 1319–1325.

- Rahman, S.M.M. Trustworthy power assistance in object manipulation with a power assist robotic system. In Proceedings of the 2019 SoutheastCon, Huntsville, AL, USA, 11–14 April 2019; pp. 1–6.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).