Event-Triggered H∞ Control for Permanent Magnet Synchronous Motor via Adaptive Dynamic Programming

Abstract

1. Introduction

1. For the first time, the ADP algorithm is applied to the optimal control problem of the PMSM, which is formulated as a two-player zero-sum differential game. Unlike the traditional ADP structure, the proposed algorithm requires only a single critic neural network to approximate the solution of the HJI equation online and to learn the optimal controller adaptively, which simplifies the control architecture and reduces the online computational complexity.

2. A collaborative optimization mechanism that combines a feedforward compensation structure with an event-triggering mechanism is proposed to improve the real-time efficiency of the algorithm. The feedforward compensation term removes the need for a traditional disturbance observer, and combining it with the event-triggering mechanism reduces the computational burden while maintaining control accuracy.

3. Zeno behavior is rigorously precluded in theory: the comparison lemma is applied to derive a strictly positive lower bound on the inter-event time.
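For orientation, the zero-sum game formulation named in the contributions can be written in its textbook form. The block below is a sketch under assumed generic notation (input-affine dynamics with drift $f$, input gain $g$, disturbance gain $k$, weights $Q$ and $R$, attenuation level $\gamma$, basis $\varphi$, critic weights $W$, sampled state $\hat{x}_j$); these symbols are not taken from the paper, whose own equations may differ in detail.

```latex
\begin{align*}
\dot{x} &= f(x) + g(x)\,u + k(x)\,w, \\
V(x_0)  &= \int_0^{\infty} \bigl( x^{\top} Q x + u^{\top} R u - \gamma^{2} w^{\top} w \bigr)\,dt, \\
u^{*}(x) &= -\tfrac{1}{2} R^{-1} g^{\top}(x)\,\nabla V^{*}(x), \qquad
w^{*}(x) = \tfrac{1}{2\gamma^{2}}\, k^{\top}(x)\,\nabla V^{*}(x), \\
0 &= x^{\top} Q x + \nabla V^{*\top} f
   - \tfrac{1}{4} \nabla V^{*\top} g R^{-1} g^{\top} \nabla V^{*}
   + \tfrac{1}{4\gamma^{2}} \nabla V^{*\top} k k^{\top} \nabla V^{*}, \\
\hat{V}(x) &= W^{\top} \varphi(x), \qquad
u(t) = -\tfrac{1}{2} R^{-1} g^{\top}(\hat{x}_j)\,\nabla \varphi^{\top}(\hat{x}_j)\, W,
\quad t \in [t_j, t_{j+1}).
\end{align*}
```

The fourth line is the HJI equation obtained by substituting the saddle-point policies into the Hamiltonian. The last line shows how a single critic yields the control law, held constant between consecutive trigger instants $t_j$ and $t_{j+1}$.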

2. System Descriptions and Preliminaries

3. Event-Based Adaptive Control Design for Zero-Sum Games

3.1. Derivation of HJI Equation

3.2. Event-Based Adaptive Critic Design

3.3. Stability Analysis of the Closed-Loop System

Algorithm 1: Adaptive dynamic programming with event-triggered control.

Initialization:

1. Set the PMSM parameters (Table 1), control weights, learning rate, and event-trigger thresholds.
2. Initialize the critic NN weights, sampled state, and trigger counter.

Main loop (for each time step t):

3. Compute the state norm.
4. Check the event-trigger condition. If it is satisfied:
   a. Update the trigger time.
   b. Sample the state.
   c. Update the control input.
   d. Update the critic weights.

   Otherwise, maintain the previous control.
5. Apply the control input to the PMSM dynamics (6).
6. Solve the closed-loop system ODEs via ode45.
7. Record the states, weights, and trigger events.

Termination:

8. Stop when the termination condition is met, then plot the results.
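The steps of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: it uses a hypothetical two-state linear plant instead of the PMSM dynamics (6), a quadratic critic basis, a relative trigger threshold, forward Euler integration as a stand-in for ode45, and a normalized gradient step on the HJI residual. All numerical constants and the external disturbance are assumptions chosen only for illustration.

```python
import numpy as np

# Hypothetical toy plant x' = A x + B u + K w (NOT the PMSM model of Eq. (6)).
A = np.array([[-1.0, 1.0], [0.0, -2.0]])
B = np.array([[0.0], [1.0]])
K = np.array([[0.0], [1.0]])
Q = np.eye(2)
R = 1.0        # control weight (assumed)
GAMMA = 2.0    # disturbance attenuation level (assumed)
ALPHA = 0.5    # critic learning rate (assumed)
THRESH = 0.1   # relative event-trigger threshold (assumed)


def grad_phi(x):
    """Jacobian of the basis phi(x) = [x1^2, x1*x2, x2^2], shape (3, 2)."""
    x1, x2 = x
    return np.array([[2 * x1, 0.0], [x2, x1], [0.0, 2 * x2]])


def policies(x, W):
    """Control and worst-case disturbance derived from V(x) = W @ phi(x)."""
    dV = grad_phi(x).T @ W
    u = -0.5 / R * (B.T @ dV)
    w = 0.5 / GAMMA**2 * (K.T @ dV)
    return u, w


def critic_step(x, W):
    """One normalized gradient step on the HJI residual at state x."""
    u, w = policies(x, W)
    sigma = grad_phi(x) @ (A @ x + B @ u + K @ w)
    residual = x @ Q @ x + R * (u @ u) - GAMMA**2 * (w @ w) + W @ sigma
    return W - ALPHA * sigma * residual / (1.0 + sigma @ sigma) ** 2


def simulate(x0, t_final=5.0, dt=1e-3):
    x = np.array(x0, dtype=float)
    x_hat = x.copy()                  # last sampled state
    W = np.zeros(3)                   # critic weights
    u, _ = policies(x_hat, W)
    events = 0
    steps = int(round(t_final / dt))
    for k in range(steps):
        t = k * dt
        gap = np.linalg.norm(x_hat - x)
        if gap > THRESH * np.linalg.norm(x):   # event-trigger condition
            events += 1
            x_hat = x.copy()                   # sample the state
            u, _ = policies(x_hat, W)          # update the held control
            W = critic_step(x_hat, W)          # update the critic weights
        # Otherwise the previous control u is kept (zero-order hold).
        d = np.array([0.1 * np.exp(-t) * np.sin(t)])   # assumed disturbance
        x = x + dt * (A @ x + B @ u + K @ d)           # forward Euler step
    return x, W, events, steps


if __name__ == "__main__":
    x_final, W, events, steps = simulate([1.0, -1.0])
    print("final state:", x_final)
    print("critic weights:", W)
    print("controller updates:", events, "of", steps, "steps")
```

Counting `events` against `steps` gives a rough measure of how many controller updates the trigger saves relative to time-triggered control, which is the quantity the event-triggering mechanism is meant to reduce.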

| Parameter | Value |
|---|---|
| J (rotor inertia) | |
| B (mechanical damping coefficient) | |
| P (number of pole pairs) | 4 |
| Stator resistance | |
| Stator inductance | |
| Permanent magnet flux linkage | |
| Reference angular velocity | |
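To show where the listed parameters enter, the standard dq-frame model of a surface-mounted PMSM is sketched below. The symbols $R_s$, $L_s$, $\psi_f$, $i_d$, $i_q$, $u_d$, $u_q$, $\omega$, and $T_L$ are assumed notation, with $\omega$ the mechanical rotor speed and $T_L$ the load torque. The paper's Equation (6) may use different states or a tracking-error form, so this is a reference model rather than a reproduction.

```latex
\begin{align*}
J \,\frac{d\omega}{dt} &= \tfrac{3}{2}\, P\, \psi_f\, i_q - B\,\omega - T_L, \\
L_s \frac{d i_d}{dt}   &= -R_s\, i_d + P\,\omega\, L_s\, i_q + u_d, \\
L_s \frac{d i_q}{dt}   &= -R_s\, i_q - P\,\omega\, L_s\, i_d - P\,\omega\, \psi_f + u_q.
\end{align*}
```

In this form the load torque $T_L$ acts as the external disturbance on the speed loop, while $u_d$ and $u_q$ are the control inputs.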

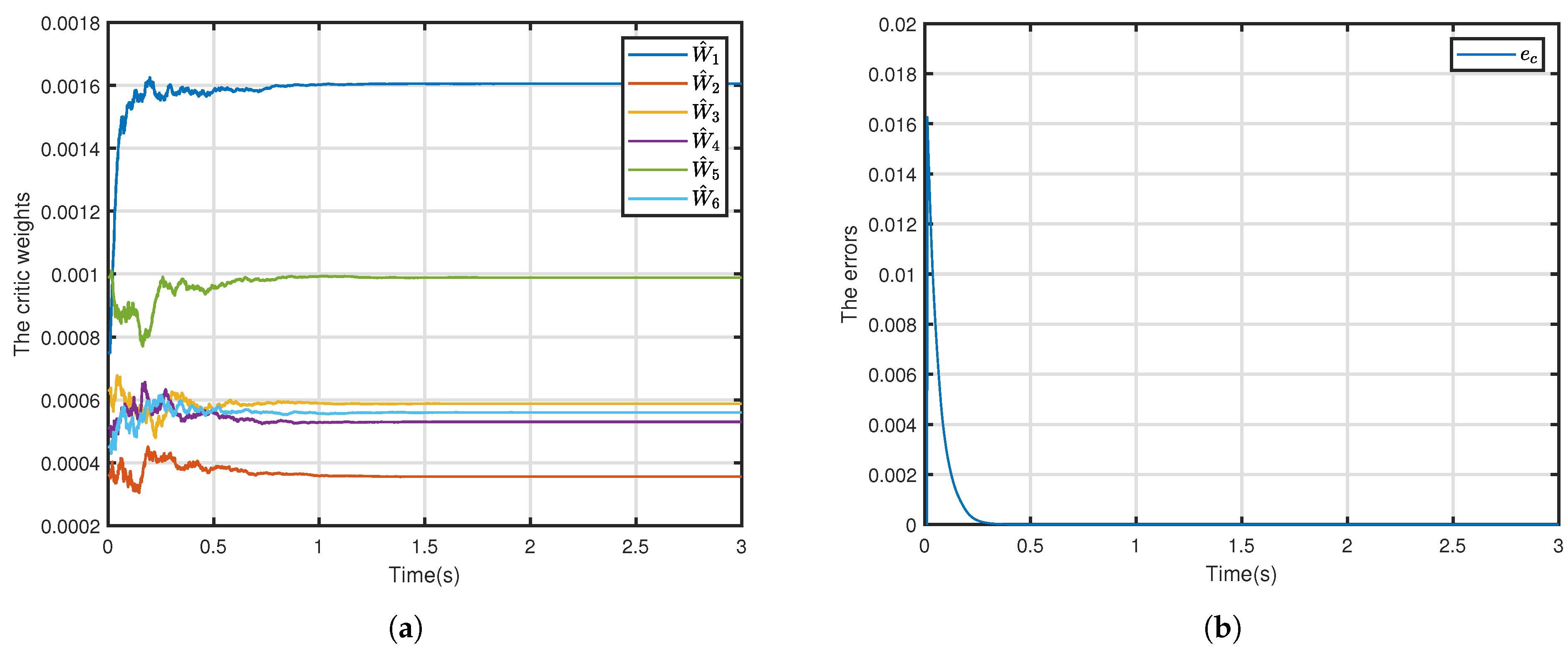

3.4. Lower Bound Analysis on Inter-Event Times

4. Simulation Results and Analysis

4.1. Simulation Parameter Setting

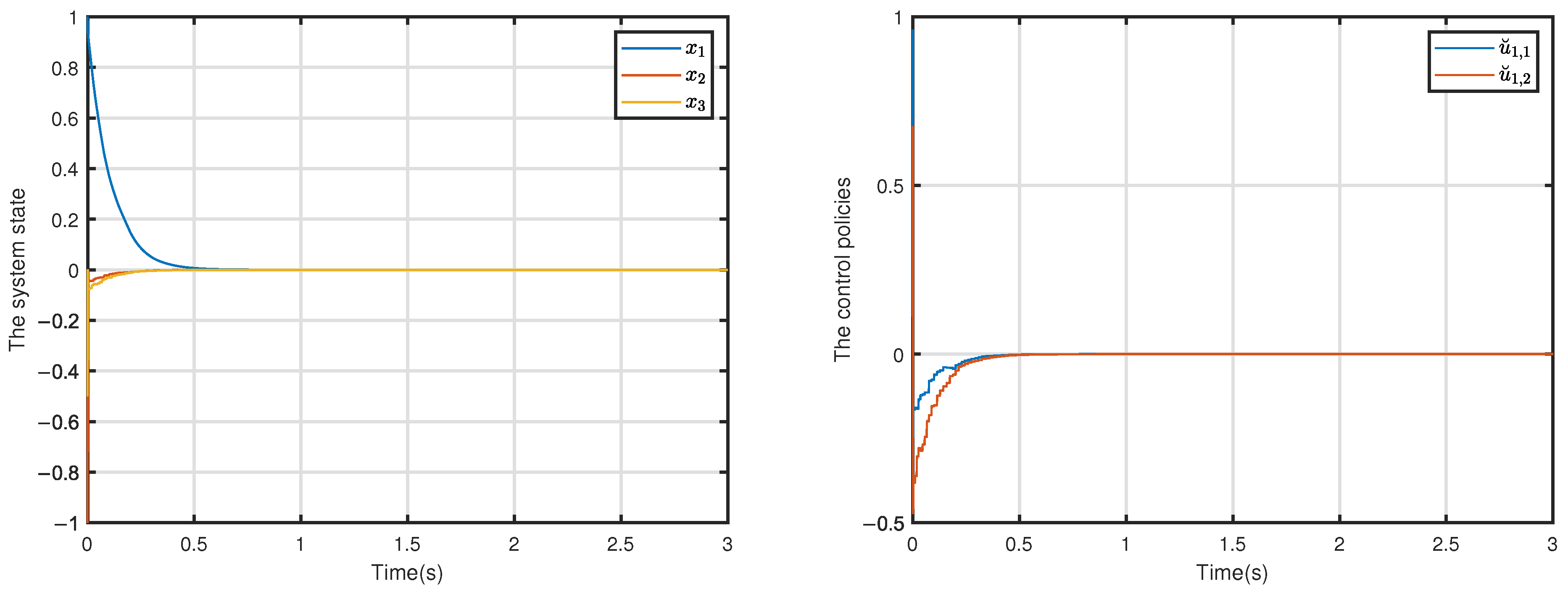

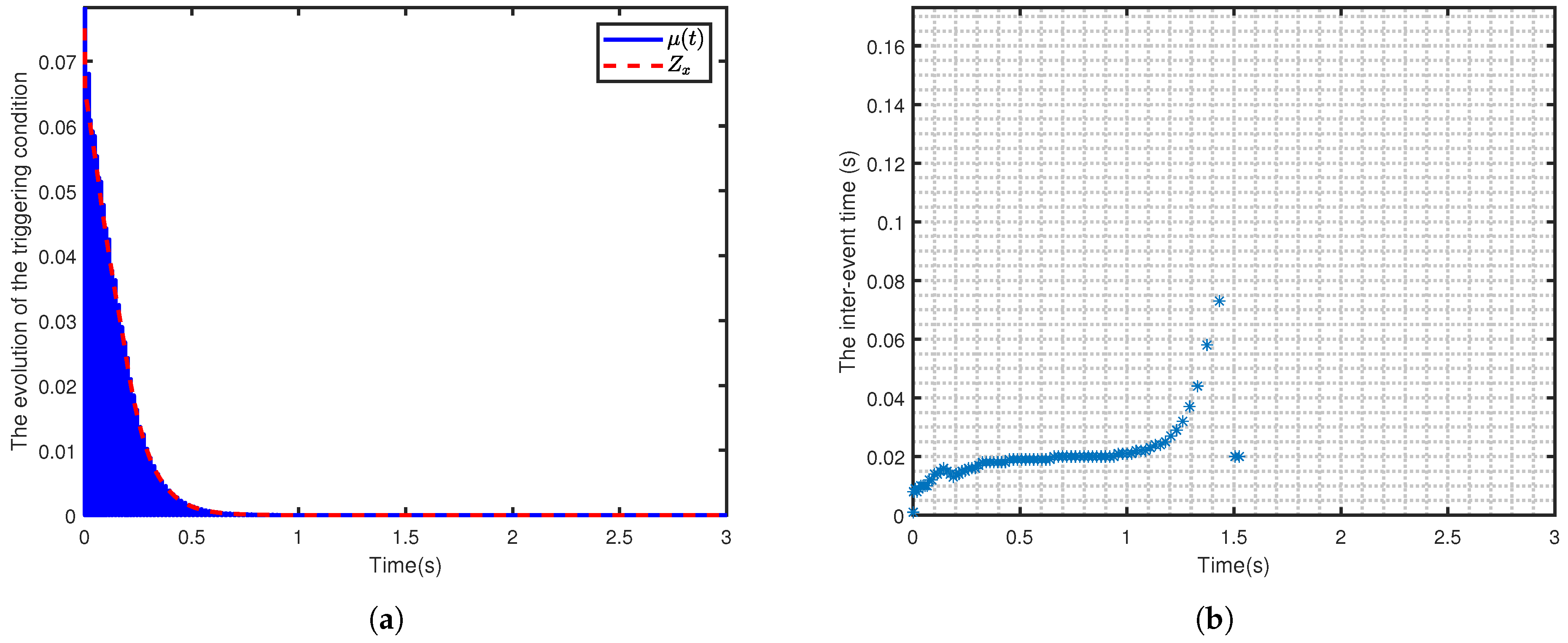

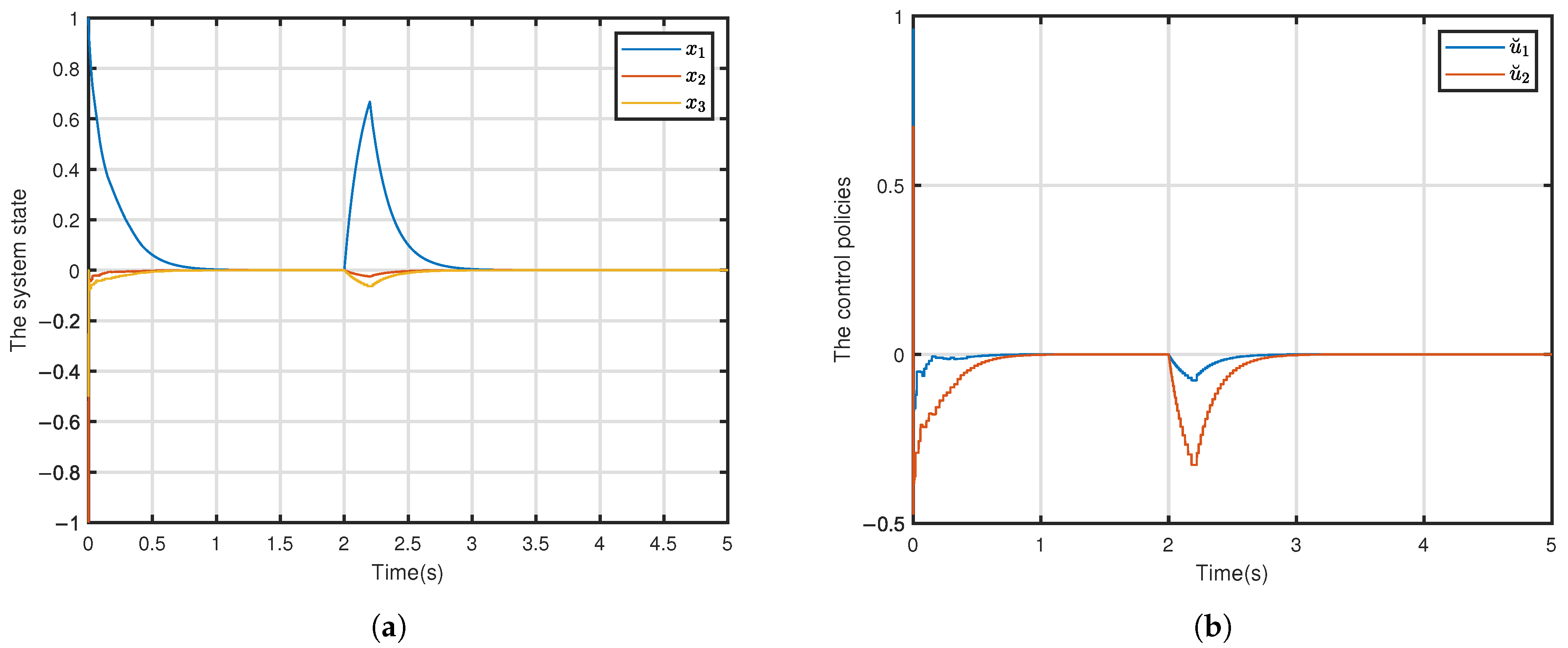

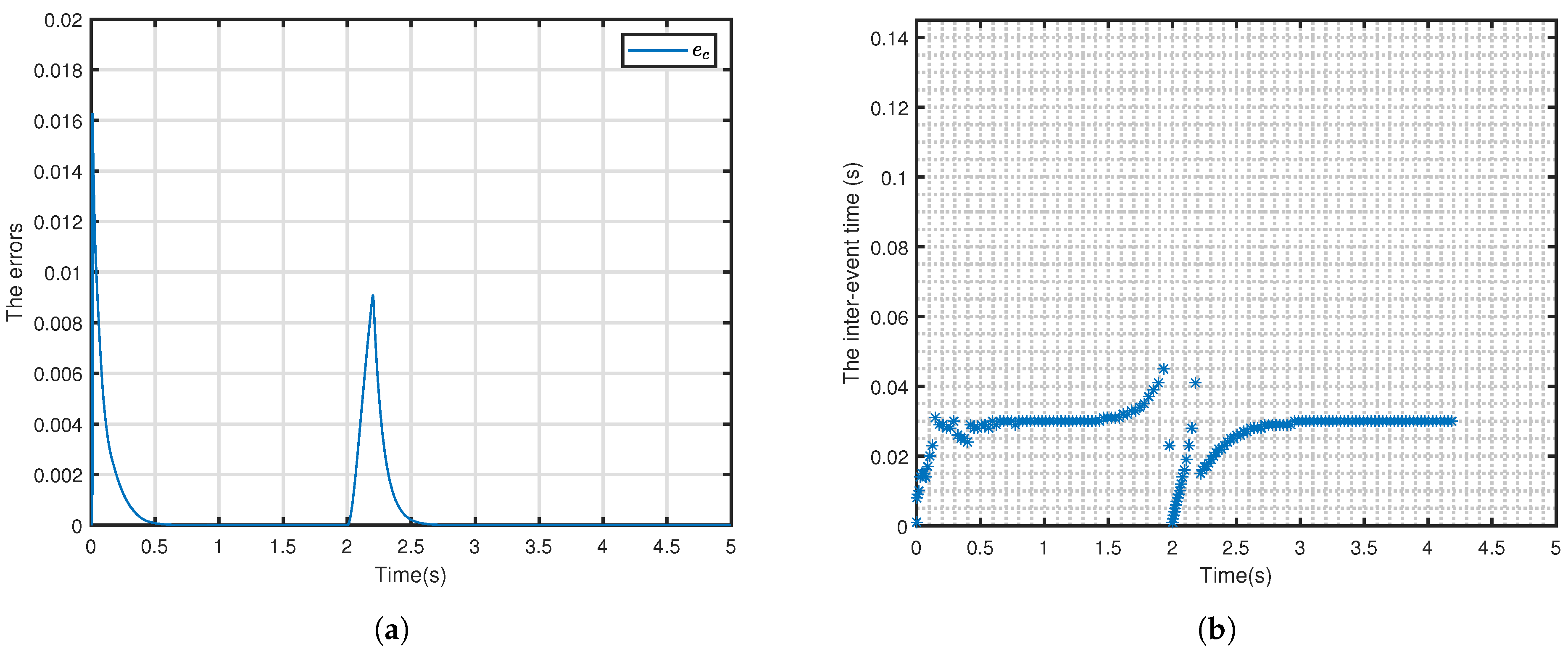

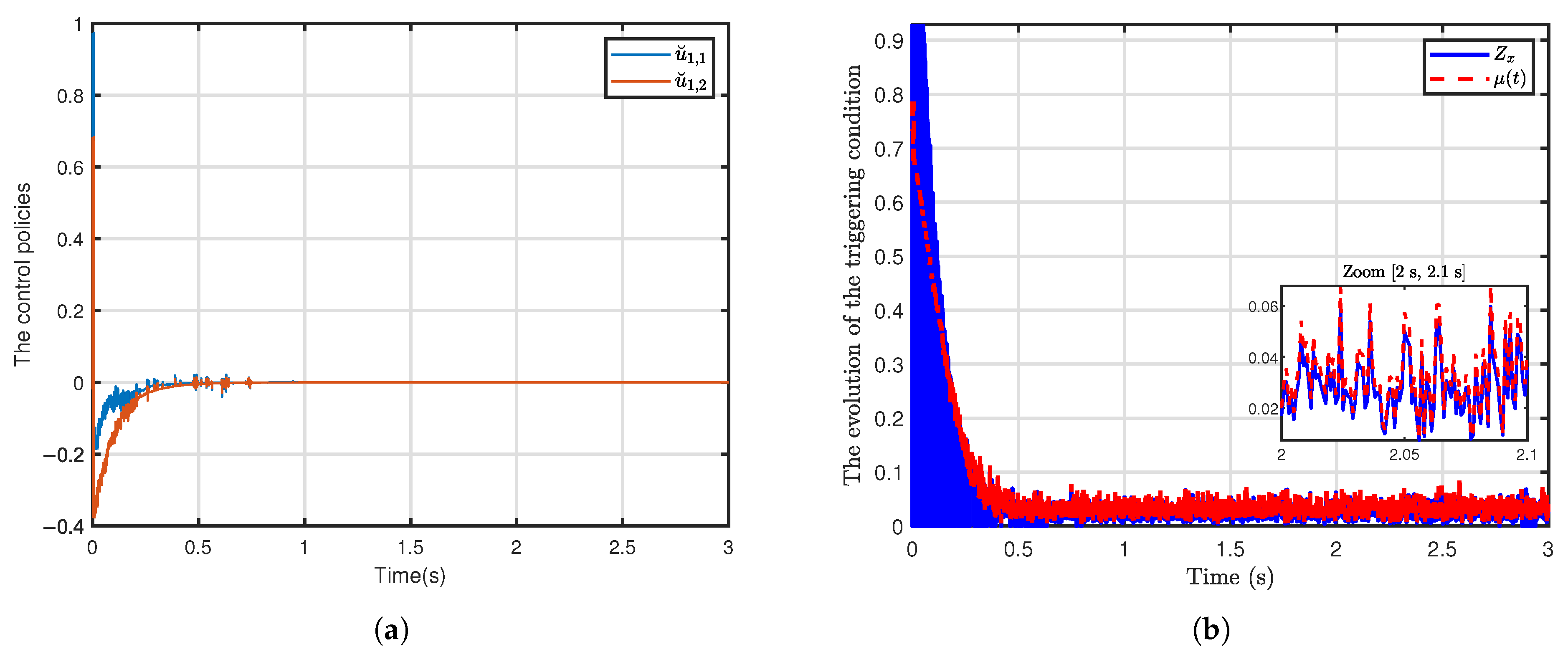

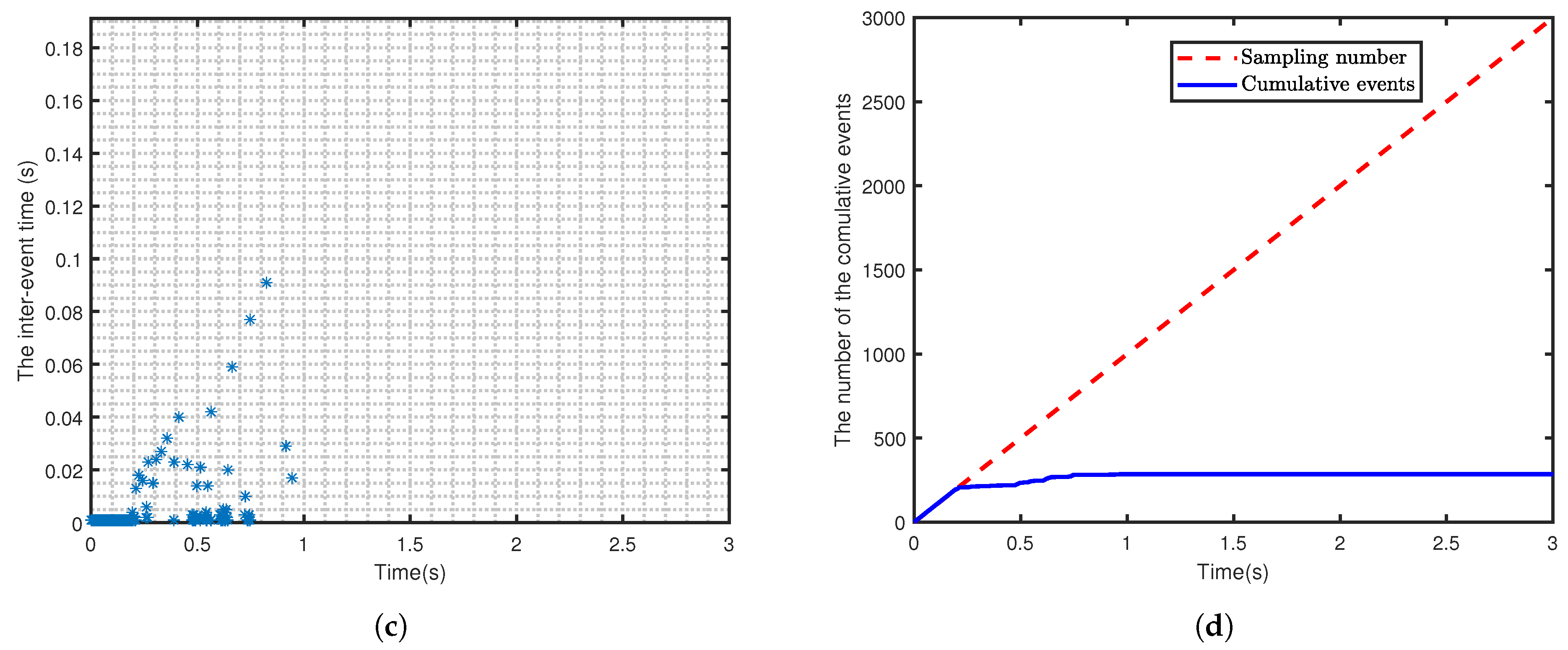

4.2. Simulation Results and Analysis

4.3. Robustness Analysis

4.3.1. Robustness Analysis Against Step Disturbance

4.3.2. Robustness Analysis Under Noise and Delay

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Learning Rate | Steady-State Error | Convergence Time (s) |
|---|---|---|
| 0.0001 | 1.505 | |
| 0.001 | 1.169 | |
| 0.01 | 1.256 | |
| 0.1 | 1.457 | |
| 1.0 | 1.863 | |
| 2.0 | 2.666 | |
| 5.0 | 1.305 | |
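The two metrics in the table above can be computed from a simulated speed response. The sketch below assumes common definitions that the surviving text does not confirm: steady-state error as the mean absolute tracking error over the final 10% of the run, and convergence time as the first instant after which the error stays inside a 2% band around the reference. The function names and default values are hypothetical.

```python
import numpy as np


def steady_state_error(t, y, ref, window=0.1):
    """Mean absolute error over the last `window` fraction of the run (assumed definition)."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    start = t[0] + (1.0 - window) * (t[-1] - t[0])
    tail = t >= start
    return float(np.mean(np.abs(ref - y[tail])))


def convergence_time(t, y, ref, band=0.02):
    """First time after which |ref - y| stays within band * |ref|; NaN if it never settles."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    inside = np.abs(ref - y) <= band * abs(ref)
    outside = np.flatnonzero(~inside)
    if outside.size == 0:
        return float(t[0])            # inside the band from the start
    last_out = outside[-1]
    if last_out == t.size - 1:
        return float("nan")           # still outside the band at the end
    return float(t[last_out + 1])


if __name__ == "__main__":
    # Synthetic first-order response toward a reference of 100 (illustrative only).
    t = np.linspace(0.0, 5.0, 5001)
    y = 100.0 * (1.0 - np.exp(-2.0 * t))
    print("steady-state error:", steady_state_error(t, y, 100.0))
    print("convergence time (s):", convergence_time(t, y, 100.0))
```

Running both functions on the response recorded for each learning rate would give one row of the table, provided the same band and averaging window are used throughout.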

| Control Strategy | Torque Ripple (%) | THD (iq) (%) | Vibration RMS |
|---|---|---|---|
| ADP | 0.035 | 0.03 | |
| PI | 0.553 | 33.34 | |
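Metrics of the kind compared above can be estimated from sampled waveforms. The sketch below uses widely used definitions that are assumptions here, since the paper's exact formulas are not available in this text: torque ripple as peak-to-peak torque over mean torque, and THD as the root-sum-square of harmonic magnitudes over the fundamental magnitude. The function names, the harmonic count, and the requirement that the record spans an integer number of fundamental periods are all illustrative choices.

```python
import numpy as np


def torque_ripple_percent(torque):
    """Peak-to-peak torque divided by mean torque, in percent (assumed definition)."""
    torque = np.asarray(torque, dtype=float)
    return float(100.0 * (torque.max() - torque.min()) / abs(torque.mean()))


def thd_percent(signal, fs, f0, n_harmonics=20):
    """THD in percent: harmonics 2..n_harmonics relative to the fundamental f0.

    Assumes the record covers an integer number of fundamental periods,
    so each harmonic falls on a single FFT bin.
    """
    signal = np.asarray(signal, dtype=float)
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)

    def magnitude(f):
        # Magnitude at the FFT bin closest to frequency f.
        return spectrum[np.argmin(np.abs(freqs - f))]

    fundamental = magnitude(f0)
    harmonics = [magnitude(k * f0) for k in range(2, n_harmonics + 1)
                 if k * f0 <= freqs[-1]]
    return float(100.0 * np.sqrt(np.sum(np.square(harmonics))) / fundamental)


if __name__ == "__main__":
    fs, f0 = 10_000.0, 50.0
    t = np.arange(2000) / fs                      # ten periods of 50 Hz
    current = np.sin(2 * np.pi * f0 * t) + 0.1 * np.sin(2 * np.pi * 5 * f0 * t)
    torque = 10.0 + 0.5 * np.sin(2 * np.pi * f0 * t)
    print("THD (%):", thd_percent(current, fs, f0))
    print("torque ripple (%):", torque_ripple_percent(torque))
```

The synthetic signals in the usage example are test inputs only; they are unrelated to the ADP and PI results reported in the table.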


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gu, C.; Su, H.; Yan, W.; Cui, Y. Event-Triggered H∞ Control for Permanent Magnet Synchronous Motor via Adaptive Dynamic Programming. Machines 2025, 13, 715. https://doi.org/10.3390/machines13080715
