Supporting Human–Robot Interaction in Manufacturing with Augmented Reality and Effective Human–Computer Interaction: A Review and Framework

Abstract

1. Introduction

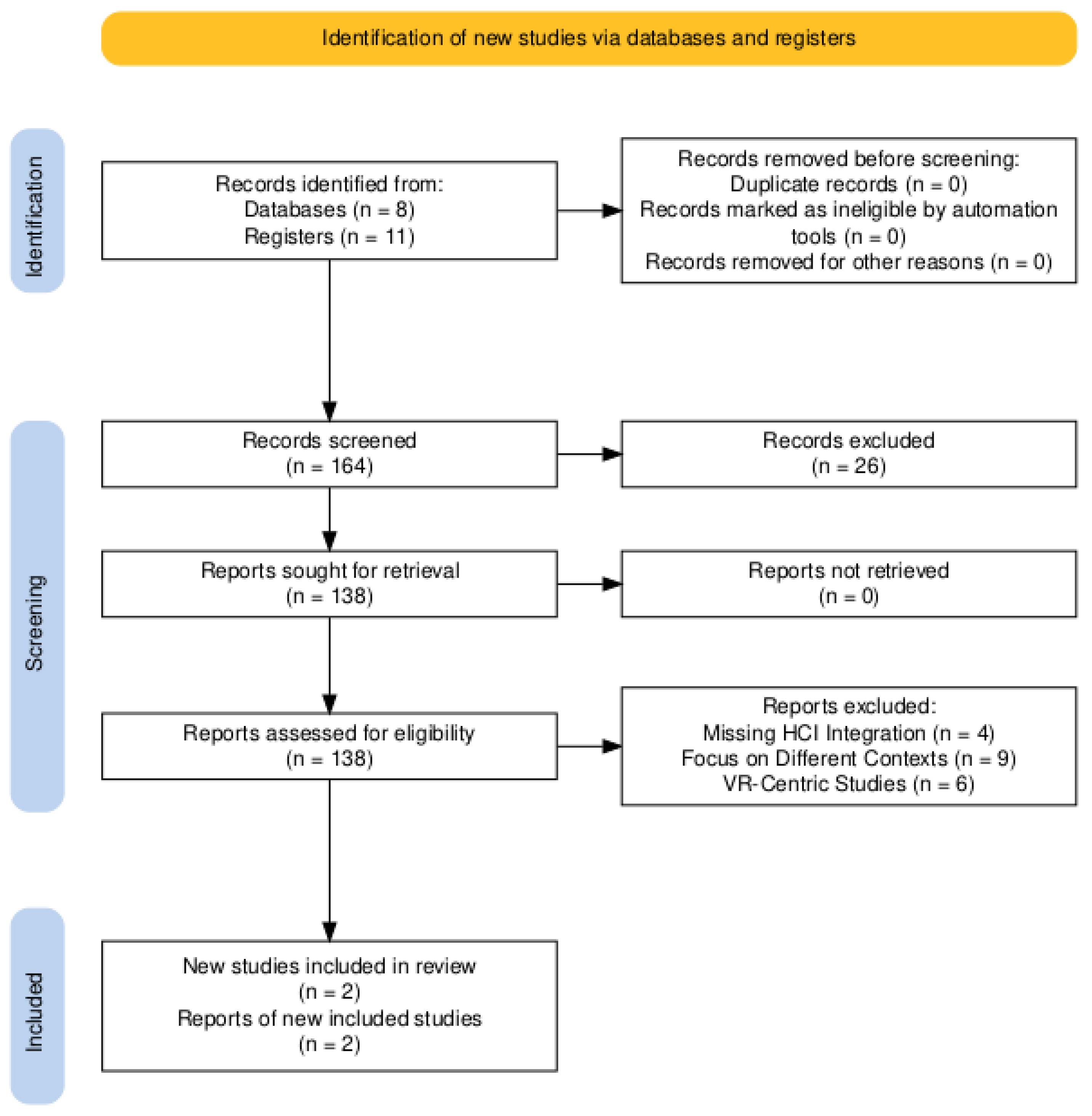

2. Materials and Methods

- The first researcher focused on AR- and HRI-related content, using the following search strings: “HRI Key Principles”, “HRI Foundations”, “AR in HRI”, “Mixed Reality in HRI”, and “Hololens in HRI”.

- The second researcher concentrated on HCI materials, using the following search strings: “UI in HRI”, “UX in HRI”, “HCI in HRI”, “AR UI in HRI”, “HCI Foundations”, and “UX/UI Principles”.

- The third researcher specialized in Situational Awareness, using the following search strings: “Situational Awareness in HCI”, “Situational Awareness in HRI”, “Situational Awareness for AR”, and “Hololens Situational Awareness”.

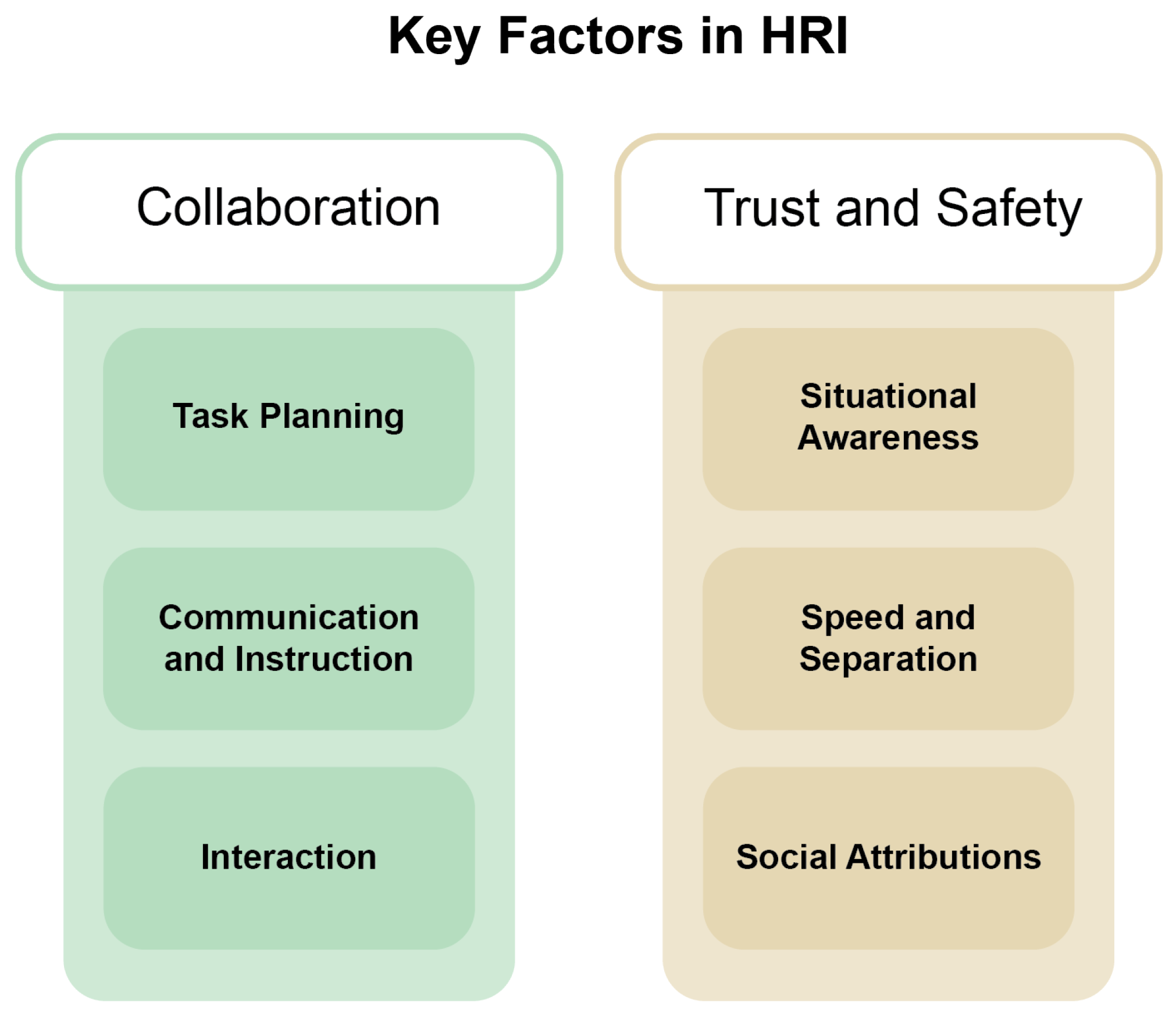

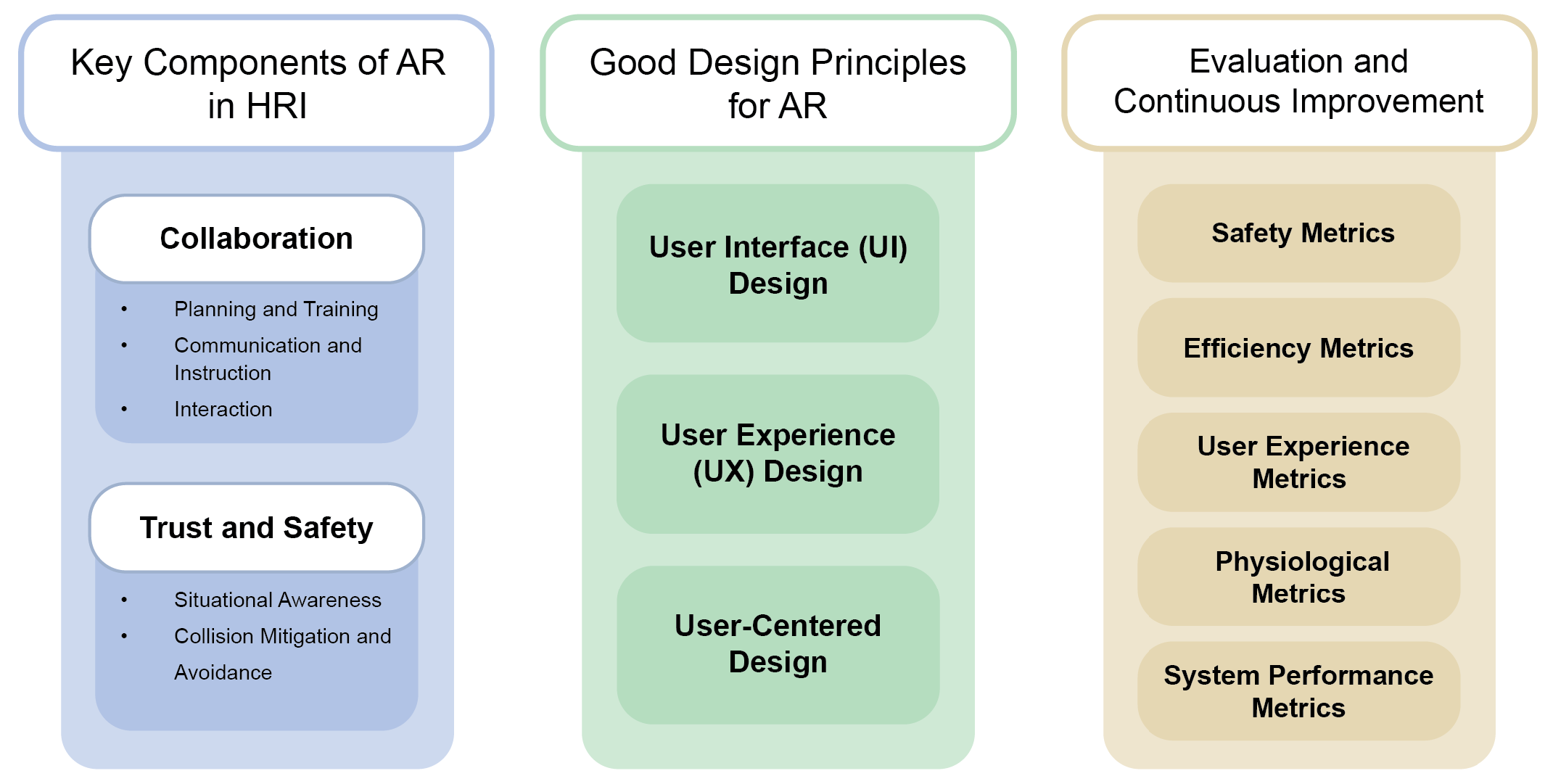

3. Key Factors in HRI

3.1. Collaboration

3.1.1. Task Planning

3.1.2. Communication and Instruction

3.1.3. Interaction

3.2. Trust and Safety

3.2.1. Situational Awareness

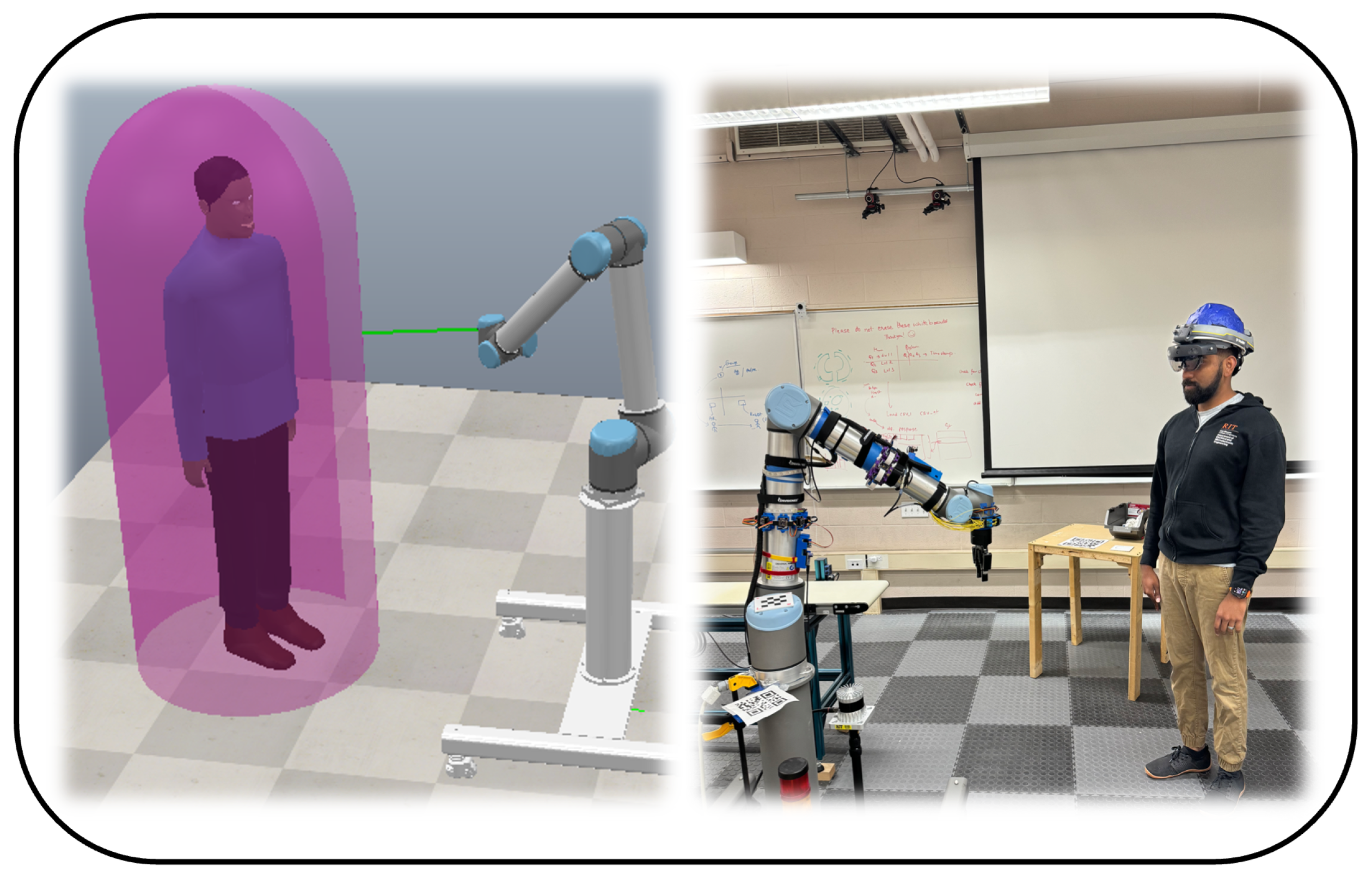

3.2.2. Speed and Separation Monitoring

3.2.3. Social Attributions

4. Challenges in HRI

- Safety Concerns: Human and robot awareness of each other’s actions and intentions must be improved to prevent collisions and minimize the risk of injury.

- Low Levels of Communication and Collaboration: Trust in robotic systems must be built and maintained through predictable, transparent robot actions.

- Necessity for Clear Instruction and Planning: The limitations of pre-planned, rigid robot actions must be overcome by enabling more dynamic, interactive, and responsive robot behaviors.

5. Augmented Visualizations Used to Improve HRI

5.1. Collaboration Utilizing AR

5.1.1. Planning and Training Using AR

5.1.2. Employing AR for Communication and Instruction

5.1.3. AR Promoting Interaction

5.2. Applying AR to Improve Trust and Safety

5.2.1. Situational Awareness with AR

5.2.2. Using AR for Collision Mitigation and Avoidance

6. Good Design Principles for AR

6.1. UI and UX Design

- Simplicity: Designs should be simple, clutter-free, and easy to navigate. Avoid unnecessary elements and keep the interface as straightforward as possible.

- Consistency: Maintain internal and external consistency. Design elements should be consistent throughout the system (internal consistency) and align with widely adopted design conventions (external consistency). For example, a floppy disk icon is widely recognized as signifying “save”.

- Feedback: Provide visual, auditory, or haptic feedback that indicates the outcome of an action, for example that it has been completed successfully. This helps users understand the results of their interactions.

- Visibility: Ensure that the cues are easy to see and understand. Visibility also refers to making the current state of the system visible to users. This helps users stay informed about what is happening within the system.

- Usability: Ensure the user flow of the system is easy to navigate, learn, and use. The design should be intuitive and provide a seamless experience for users.

- Clarity: The design should be straightforward and provide users with only essential information, avoiding unnecessary elements. Clarity ensures that users understand the interface and its functions easily.

- Efficiency: The system should be optimized for performance, enabling users to accomplish their goals quickly. Efficient design minimizes the time and effort required to complete tasks.

- Accessibility: Ensure that the system is accessible to all users, regardless of their abilities. This includes considering different devices, screen sizes, and assistive technologies to create an inclusive user experience.

6.2. User-Centered Design

6.3. Current Design Principles Used for AR in HRI

7. Evaluation and Continuous Improvement

7.1. Safety Metrics

- Collision Count: The number of collisions or near misses between robots and humans. A lower count indicates that the system effectively prevents accidents and keeps human operators safe. This metric quantifies the direct impact of the AR-HRI system on safety; tracking it verifies that the implemented safety features function as intended and reveals areas needing improvement.

- Safe Distance Maintenance: The percentage of time the robot maintains a predefined safe distance from the human operator. This metric shows how consistently the system keeps humans out of harm’s way. Maintaining a safe distance is crucial to prevent injuries and to build trust between human operators and robots. Continuous monitoring of this metric helps verify that the system adapts to dynamic environments while prioritizing human safety.

- Emergency Stops: The frequency of emergency stops triggered by the system to prevent collisions. Although emergency stops are necessary, a high frequency may indicate overly conservative settings that disrupt workflow. Because safety must be balanced against efficiency, tracking emergency stops helps refine the sensitivity and response parameters of the system for optimal performance.
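
The three safety metrics above can be computed from a logged session. The following is a minimal sketch, assuming the system logs the minimum human–robot separation and an emergency-stop flag at a fixed sample rate. The function names and the 0.5 m and 0.1 m thresholds are hypothetical placeholders, not values from the reviewed studies, and entries into a near-miss distance band serve only as a proxy for collisions when no contact sensing is available.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SafetySummary:
    near_miss_events: int     # times the separation dropped into the near-miss band
    safe_distance_pct: float  # % of samples at or above the safe distance
    estops_per_hour: float    # emergency-stop events normalized by session length


def count_events(flags: Sequence[bool]) -> int:
    """Count rising edges, so one continuous violation is counted as one event."""
    events, previous = 0, False
    for flag in flags:
        if flag and not previous:
            events += 1
        previous = flag
    return events


def summarize_safety(
    distances_m: Sequence[float],
    estop_active: Sequence[bool],
    sample_period_s: float,
    safe_distance_m: float = 0.5,  # hypothetical threshold; set per risk assessment
    near_miss_m: float = 0.1,      # hypothetical near-miss threshold
) -> SafetySummary:
    """Summarize one session of uniformly sampled human-robot separation data."""
    if not distances_m or len(distances_m) != len(estop_active):
        raise ValueError("logs must be non-empty and of equal length")
    near_misses = count_events([d < near_miss_m for d in distances_m])
    safe_samples = sum(d >= safe_distance_m for d in distances_m)
    duration_h = len(distances_m) * sample_period_s / 3600.0
    return SafetySummary(
        near_miss_events=near_misses,
        safe_distance_pct=100.0 * safe_samples / len(distances_m),
        estops_per_hour=count_events(estop_active) / duration_h,
    )
```

Counting rising edges rather than raw samples keeps a single prolonged violation, or one emergency stop that stays active for several samples, from inflating the counts.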

7.2. Efficiency Metrics

- Task Completion Time: The time taken to complete a task before and after implementing the AR-HRI system. A reduction in task completion time without a loss of safety indicates that the system is efficient [115]. This metric is vital for demonstrating that the system enhances productivity in addition to keeping humans safe, and it indicates how well the AR integration and SSM streamline the workflow.

- Idle Time: The amount of time the robot is idle due to safety interventions. Minimizing idle time indicates that the system is effective without unnecessarily halting operations [115], which is critical for a smooth and efficient workflow. Tracking idle time also shows how well the SSM algorithm distinguishes genuine hazards from false positives, so that the robot is not halted without cause.

- Path Optimization: Changes in the robot’s path efficiency, such as distance traveled and smoothness of movement. Efficient path planning ensures that the robot performs its tasks optimally while avoiding humans [116]. Measuring path optimization helps in understanding the overall efficiency of the system, identifying unnecessary detours or delays caused by the AR-HRI system, and optimizing the robot’s navigation algorithms.
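
The efficiency metrics above could be derived from trial logs roughly as follows. This is a minimal sketch, assuming per-trial completion times, a per-sample robot state label, and sampled end-effector positions are available. The state label "safety_stop" and all function names are hypothetical. Mean squared jerk is one common smoothness proxy among several and is not prescribed by the cited works.

```python
import math
from statistics import mean
from typing import Sequence, Tuple


def completion_time_change_pct(before_s: Sequence[float], after_s: Sequence[float]) -> float:
    """Relative change in mean task completion time; negative means faster."""
    before, after = mean(before_s), mean(after_s)
    return 100.0 * (after - before) / before


def idle_fraction(robot_states: Sequence[str], idle_label: str = "safety_stop") -> float:
    """Share of samples in which the robot was halted by a safety intervention."""
    return sum(state == idle_label for state in robot_states) / len(robot_states)


def path_length(points: Sequence[Tuple[float, ...]]) -> float:
    """Total distance traveled along a sequence of sampled positions."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def mean_squared_jerk(positions: Sequence[float], dt: float) -> float:
    """Smoothness proxy for one axis: mean squared third finite difference.

    Lower values indicate smoother motion; at least four samples are needed.
    """
    if len(positions) < 4:
        raise ValueError("need at least four samples")
    jerks = [
        (positions[i + 3] - 3 * positions[i + 2] + 3 * positions[i + 1] - positions[i]) / dt**3
        for i in range(len(positions) - 3)
    ]
    return mean(j * j for j in jerks)
```

Comparing `path_length` and `mean_squared_jerk` for runs with and without AR-triggered replanning would expose detours and jerky avoidance maneuvers separately.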

7.3. User Experience Metrics

- Perceived Safety: Users’ feedback on how safe they feel working alongside the robot. Perceived safety is crucial for user acceptance of robotic systems [117]. This subjective metric captures the psychological impact of the system on human operators. Gathering user feedback through surveys and interviews helps assess how effectively the AR visualizations and the SSM make users feel secure.

- NASA TLX Scores: Scores from the NASA Task Load Index, assessing perceived workload across six dimensions: mental demand, physical demand, temporal demand, performance, effort, and frustration [44]. Assessing workload with the NASA TLX provides insights into how the system affects the cognitive and physical demands on human operators [118]. Lower scores indicate lower perceived workload and thus a more user-friendly, less stressful interaction. This metric is essential for understanding how the AR-HRI system affects overall user workload and for identifying areas that can be improved to reduce user strain.

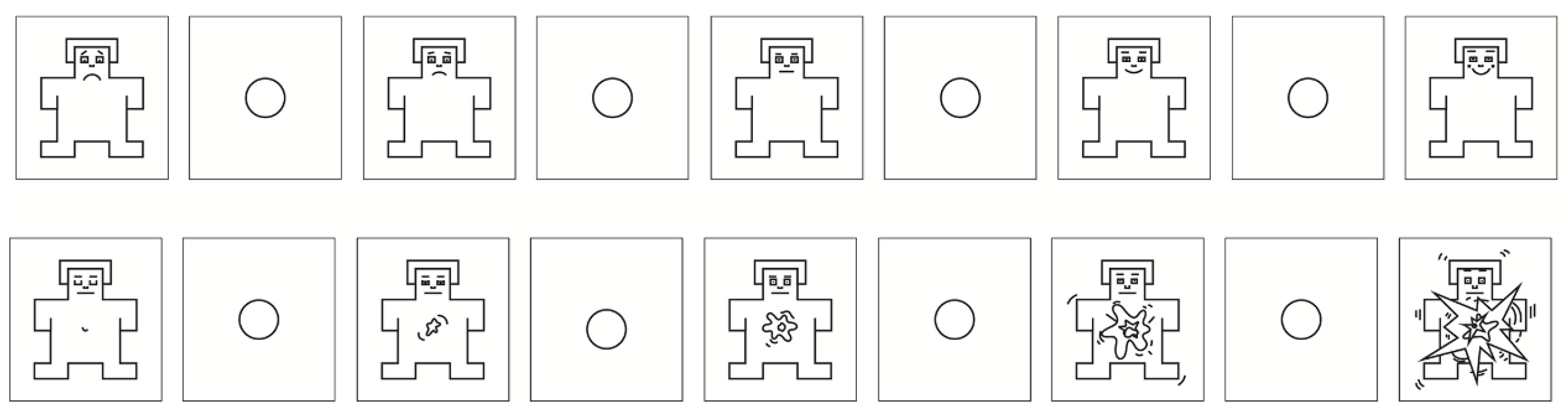

- SAM Scale Scores: Measurements from the Self-Assessment Manikin (SAM) scale [119], assessing user arousal and valence. The SAM scale provides a subjective measure of users’ emotional responses [120], and these scores can be used to understand how workers feel while interacting with robots through AR. Positive emotional experiences are essential for user satisfaction and acceptance. Correlating these scores with physiological data (described in the next section) can provide a holistic view of user experience and emotional state [121]. Figure 14 depicts how subjects convey their subjective state on the SAM scale.

- User Experience Questionnaire (UEQ): The UEQ consists of 26 items, each a pair of opposite terms rated on a 7-point scale, with which participants evaluate the user experience of a system across six attributes: attractiveness, perspicuity, efficiency, dependability, stimulation, and novelty [97].
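
NASA TLX results are usually reported either as a raw (unweighted) score or as a weighted score. The sketch below computes both, assuming each subscale has been rated on the standard 0–100 scale and, for the weighted variant, that the weights are the tallies from the 15 pairwise comparisons between subscales (so they sum to 15). On the standard form, the performance subscale runs from perfect (low) to failure (high), so all six subscales point the same way and no reversal is applied. The dictionary keys are hypothetical labels.

```python
from typing import Mapping

# The six NASA TLX subscales, each rated on a 0-100 scale (hypothetical key names).
DIMENSIONS = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def _check(ratings: Mapping[str, float]) -> None:
    """Reject incomplete or out-of-range ratings."""
    missing = set(DIMENSIONS) - set(ratings)
    if missing:
        raise ValueError(f"missing subscales: {sorted(missing)}")
    if any(not 0 <= ratings[d] <= 100 for d in DIMENSIONS):
        raise ValueError("ratings must lie in 0-100")


def raw_tlx(ratings: Mapping[str, float]) -> float:
    """Raw (unweighted) TLX: mean of the six subscale ratings."""
    _check(ratings)
    return sum(ratings[d] for d in DIMENSIONS) / len(DIMENSIONS)


def weighted_tlx(ratings: Mapping[str, float], weights: Mapping[str, int]) -> float:
    """Weighted TLX: weights are tallies from the 15 pairwise comparisons."""
    _check(ratings)
    if sum(weights.get(d, 0) for d in DIMENSIONS) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[d] * weights.get(d, 0) for d in DIMENSIONS) / 15
```

The raw score avoids the extra pairwise-comparison step, which is often preferred in repeated trials. The weighted score lets each participant emphasize the subscales they consider most relevant to the task.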

7.4. Physiological Metrics

- ECG and Galvanic Skin Response (GSR): Electrocardiogram (ECG) and galvanic skin response (GSR) signals recorded by physiological sensors on the human operator can be used to train models that estimate arousal and valence [117]. The resulting estimates of users’ emotional states can be correlated with subjective SAM scale ratings to validate reported user experiences. Running these models in real time enables continuous monitoring of user states and proactive adjustment of the AR-HRI system to improve user comfort and performance.
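
Training arousal and valence models as in [117] is beyond a short example, but the validation step, correlating per-trial physiological features with SAM ratings, can be sketched. The sketch assumes that R-R intervals have already been extracted from the ECG and that GSR is sampled in microsiemens. The feature choices (mean heart rate, mean skin conductance level) and function names are illustrative assumptions, not the pipeline of the cited study.

```python
import math
from statistics import mean
from typing import Sequence


def heart_rate_bpm(rr_intervals_s: Sequence[float]) -> float:
    """Mean heart rate from ECG R-R intervals (seconds between successive beats)."""
    return 60.0 / mean(rr_intervals_s)


def mean_skin_conductance(gsr_microsiemens: Sequence[float]) -> float:
    """Tonic GSR level for one trial: mean skin conductance in microsiemens."""
    return mean(gsr_microsiemens)


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between a per-trial feature and per-trial SAM ratings."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least two items")
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)
```

In use, one would compute a feature such as `heart_rate_bpm` for each trial and pass the resulting list, together with the matching SAM arousal ratings, to `pearson_r`. A clear positive correlation would be consistent with the physiological signal tracking self-reported arousal.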

7.5. System Performance Metrics

- Response Time: The time it takes for the system to detect a potential collision and respond. Faster response times are crucial for preventing accidents, so this metric reflects the system’s effectiveness in real-time monitoring and intervention. Reducing response time ensures that safety interventions are timely and effective, thereby minimizing the risk of accidents.

- Positional Accuracy: The accuracy with which the system detects the positions of both the robot and the human. High positional accuracy allows the system to reliably monitor and react to the positions of humans and robots; it is vital for the SSM algorithm to function correctly and for rendering precise AR visualizations.
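
Response time and positional accuracy both enter the separation distance that an SSM system must maintain. As an illustration, the sketch below uses the simplified constant-speed form of the ISO/TS 15066 protective separation distance, S_p = v_h(T_r + T_s) + v_r·T_r + S_s + C + Z_d + Z_r. Here v_h and v_r are the human and robot speeds, T_r is the system reaction (response) time, T_s the robot stopping time, S_s the robot stopping distance, C an intrusion distance, and Z_d and Z_r the position uncertainties of the human and robot. The function names and example values are assumptions for illustration; an actual deployment must follow the standard and its own risk assessment.

```python
def protective_separation_m(
    v_h: float,        # human approach speed (m/s)
    v_r: float,        # robot speed toward the human (m/s)
    t_r: float,        # system reaction/response time (s)
    t_s: float,        # robot stopping time (s)
    s_s: float,        # robot stopping distance (m)
    c: float = 0.0,    # intrusion distance (m)
    z_d: float = 0.0,  # human position uncertainty (m)
    z_r: float = 0.0,  # robot position uncertainty (m)
) -> float:
    """Simplified constant-speed protective separation distance (metres)."""
    human_term = v_h * (t_r + t_s)  # human keeps moving while the robot reacts and stops
    robot_term = v_r * t_r          # robot keeps moving until the system responds
    return human_term + robot_term + s_s + c + z_d + z_r


def separation_is_safe(measured_m: float, **params: float) -> bool:
    """True if the measured separation is at least the protective distance."""
    return measured_m >= protective_separation_m(**params)
```

With example values v_h = 1.6 m/s and v_r = 0.5 m/s, raising the response time by 0.1 s enlarges the required separation by (1.6 + 0.5) × 0.1 = 0.21 m, and every centimetre of position uncertainty adds a centimetre directly. This is why both response time and positional accuracy are tracked as system performance metrics.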

8. Framework for Developing Augmented Reality Applications in Human–Robot Collaboration

8.1. Improving Collaboration, Safety, and Trust Using AR in HRI

8.2. Collision Warnings Using Virtual Elements

9. Limitations

9.1. Scope Limitation

9.2. Technical Limitations and User Acceptance

9.3. Plan for Long-Term Impact Assessment of AR in HRI

10. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Thrun, S. Toward a Framework for Human-Robot Interaction. Human–Computer Interact. 2004, 19, 9–24.

- Scholtz, J. Theory and evaluation of human Robot Interactions. In Proceedings of the 36th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 6–9 January 2003; p. 10.

- Sidobre, D.; Broquère, X.; Mainprice, J.; Burattini, E.; Finzi, A.; Rossi, S.; Staffa, M. Human–Robot Interaction. In Advanced Bimanual Manipulation: Results from the DEXMART Project; Siciliano, B., Ed.; Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2012; pp. 123–172.

- Ong, S.K.; Yuan, M.L.; Nee, A.Y.C. Augmented Reality applications in manufacturing: A survey. Int. J. Prod. Res. 2008, 46, 2707–2742.

- Suzuki, R.; Karim, A.; Xia, T.; Hedayati, H.; Marquardt, N. Augmented Reality and Robotics: A Survey and Taxonomy for AR-enhanced Human-Robot Interaction and Robotic Interfaces. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (CHI ’22), New Orleans, LA, USA, 29 April 2022–5 May 2022; pp. 1–33.

- Ruiz, J.; Escalera, M.; Viguria, A.; Ollero, A. A simulation framework to validate the use of head-mounted displays and tablets for information exchange with the UAV safety pilot. In Proceedings of the 2015 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED-UAS), Cancun, Mexico, 23–25 November 2015; pp. 336–341.

- Chan, W.P.; Hanks, G.; Sakr, M.; Zhang, H.; Zuo, T.; van der Loos, H.F.M.; Croft, E. Design and Evaluation of an Augmented Reality Head-mounted Display Interface for Human Robot Teams Collaborating in Physically Shared Manufacturing Tasks. ACM Trans. Hum.-Robot Interact. 2022, 11, 31:1–31:19.

- Kalpagam Ganesan, R.; Rathore, Y.K.; Ross, H.M.; Ben Amor, H. Better Teaming Through Visual Cues: How Projecting Imagery in a Workspace Can Improve Human-Robot Collaboration. IEEE Robot. Autom. Mag. 2018, 25, 59–71.

- Woodward, J.; Ruiz, J. Analytic Review of Using Augmented Reality for Situational Awareness. IEEE Trans. Vis. Comput. Graph. 2023, 29, 2166–2183.

- Rogers, Y.; Sharp, H.; Preece, J. Interaction Design: Beyond Human-Computer Interaction, 6th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2023; Available online: http://id-book.com (accessed on 9 September 2024).

- Blaga, A.; Tamas, L. Augmented Reality for Digital Manufacturing. In Proceedings of the 2018 26th Mediterranean Conference on Control and Automation (MED), Zadar, Croatia, 19–22 June 2018; pp. 173–178.

- Caudell, T.; Mizell, D. Augmented Reality: An application of heads-up display technology to manual manufacturing processes. In Proceedings of the Twenty-Fifth Hawaii International Conference on System Sciences, Kauai, HI, USA, 7–10 January 1992; Volume 2, pp. 659–669.

- Novak-Marcincin, J.; Barna, J.; Janak, M.; Novakova-Marcincinova, L. Augmented Reality Aided Manufacturing. Procedia Comput. Sci. 2013, 25, 23–31.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Syst. Rev. 2021, 10, 89.

- Haddaway, N.R.; Page, M.J.; Pritchard, C.C.; McGuinness, L.A. PRISMA2020: An R package and Shiny app for producing PRISMA 2020-compliant flow diagrams, with interactivity for optimised digital transparency and Open Synthesis. Campbell Syst. Rev. 2022, 18, e1230.

- Ajoudani, A.; Zanchettin, A.M.; Ivaldi, S.; Albu-Schäffer, A.; Kosuge, K.; Khatib, O. Progress and prospects of the human–robot collaboration. Auton. Robot. 2018, 42, 957–975.

- Baratta, A.; Cimino, A.; Gnoni, M.G.; Longo, F. Human Robot Collaboration in Industry 4.0: A literature review. Procedia Comput. Sci. 2023, 217, 1887–1895.

- Bauer, A.; Wollherr, D.; Buss, M. Human-Robot Collaboration: A Survey. Int. J. Humanoid Robot. 2008, 5, 47–66.

- Semeraro, F.; Griffiths, A.; Cangelosi, A. Human–robot collaboration and machine learning: A systematic review of recent research. Robot. Comput.-Integr. Manuf. 2023, 79, 102432.

- Kumar, S.; Savur, C.; Sahin, F. Survey of Human–Robot Collaboration in Industrial Settings: Awareness, Intelligence, and Compliance. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 280–297.

- Matheson, E.; Minto, R.; Zampieri, E.G.G.; Faccio, M.; Rosati, G. Human–Robot Collaboration in Manufacturing Applications: A Review. Robotics 2019, 8, 100.

- Lamon, E.; De Franco, A.; Peternel, L.; Ajoudani, A. A Capability-Aware Role Allocation Approach to Industrial Assembly Tasks. IEEE Robot. Autom. Lett. 2019, 4, 3378–3385.

- Rahman, S.M.; Wang, Y. Mutual trust-based subtask allocation for human–robot collaboration in flexible lightweight assembly in manufacturing. Mechatronics 2018, 54, 94–109.

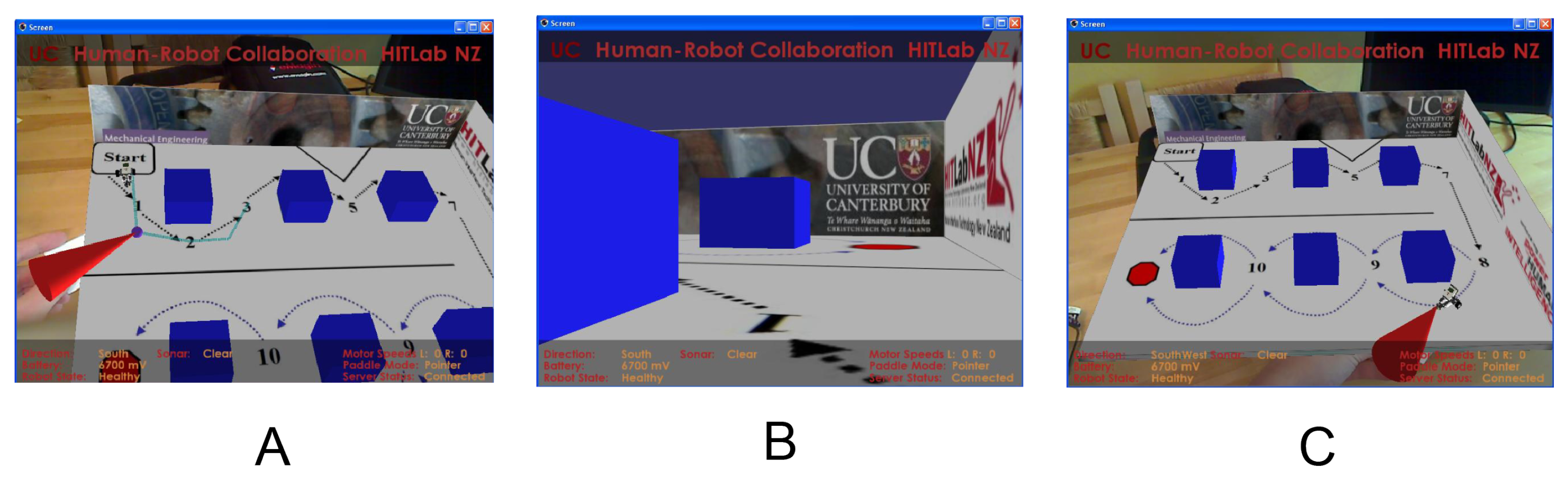

- Green, S.A.; Chase, J.G.; Chen, X.; Billinghurst, M. Evaluating the Augmented Reality Human–Robot Collaboration System. In Proceedings of the 2008 15th International Conference on Mechatronics and Machine Vision in Practice, Auckland, New Zealand, 2–4 December 2008.

- Glassmire, J.; O’Malley, M.; Bluethmann, W.; Ambrose, R. Cooperative manipulation between humans and teleoperated agents. In Proceedings of the 12th International Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, 2004. HAPTICS ’04. Proceedings, Chicago, IL, USA, 27–28 March 2004; pp. 114–120.

- Hoffman, G.; Breazeal, C. Effects of anticipatory action on Human–Robot teamwork: Efficiency, fluency, and perception of team. In Proceedings of the 2007 2nd ACM/IEEE International Conference on Human-Robot Interaction (HRI), Arlington, VA, USA, 10–12 March 2007; pp. 1–8.

- Lasota, P.A.; Shah, J.A. Analyzing the Effects of Human-Aware Motion Planning on Close-Proximity Human–Robot Collaboration. Hum. Factors J. Hum. Factors Ergon. Soc. 2015, 57, 21–33.

- Yao, B.; Zhou, Z.; Wang, L.; Xu, W.; Yan, J.; Liu, Q. A function block based cyber-physical production system for physical human–Robot Interaction. J. Manuf. Syst. 2018, 48, 12–23.

- IEC 61499; Standard for Distributed Automation. International Electrotechnical Commission: Geneva, Switzerland, 2012. Available online: https://iec61499.com/ (accessed on 9 September 2024).

- Stark, R.; Fresemann, C.; Lindow, K. Development and operation of Digital Twins for technical systems and services. CIRP Ann. 2019, 68, 129–132.

- Liu, Q.; Leng, J.; Yan, D.; Zhang, D.; Wei, L.; Yu, A.; Zhao, R.; Zhang, H.; Chen, X. Digital-twin-based designing of the configuration, motion, control, and optimization model of a flow-type smart manufacturing system. J. Manuf. Syst. 2021, 58, 52–64.

- Rosen, R.; Von Wichert, G.; Lo, G.; Bettenhausen, K.D. About The Importance of Autonomy and Digital Twins for the Future of Manufacturing. IFAC-PapersOnLine 2015, 48, 567–572.

- Sahin, M.; Savur, C. Evaluation of Human Perceived Safety during HRC Task using Multiple Data Collection Methods. In Proceedings of the 2022 17th Annual System of Systems Engineering Conference (SOSE), Rochester, NY, USA, 7–11 June 2022; pp. 465–470.

- Soh, H.; Xie, Y.; Chen, M.; Hsu, D. Multi-task trust transfer for human–Robot Interaction. Int. J. Robot. Res. 2020, 39, 233–249.

- ISO 10218-1:2011; Robots and Robotic Devices—Safety Requirements for Industrial Robots—Part 1: Robots. ISO: Geneva, Switzerland, 2011.

- ISO/TS 15066:2016; Robots and Robotic Devices—Collaborative Robots. ISO: Geneva, Switzerland, 2016.

- Haddadin, S.; Albu-Schäffer, A.; Hirzinger, G. Safe Physical Human-Robot Interaction: Measurements, Analysis and New Insights. In Robotics Research; Kaneko, M., Nakamura, Y., Eds.; Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2011; pp. 395–407.

- Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80.

- Maurtua, I.; Ibarguren, A.; Kildal, J.; Susperregi, L.; Sierra, B. Human–robot collaboration in industrial applications: Safety, interaction and trust. Int. J. Adv. Robot. Syst. 2017, 14, 172988141771601.

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.C.; De Visser, E.J.; Parasuraman, R. A Meta-Analysis of Factors Affecting Trust in Human-Robot Interaction. Hum. Factors J. Hum. Factors Ergon. Soc. 2011, 53, 517–527.

- Endsley, M. Theoretical underpinnings of situation awareness: A critical review. In Situation Awareness Analysis and Measurement; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2000; pp. 3–32.

- Endsley, M.; Kiris, E. The Out-of-the-Loop Performance Problem and Level of Control in Automation. Hum. Factors J. Hum. Factors Ergon. Soc. 1995, 37, 381–394.

- Unhelkar, V.V.; Siu, H.C.; Shah, J.A. Comparative performance of human and mobile robotic assistants in collaborative fetch-and-deliver tasks. In Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction, Bielefeld, Germany, 3–6 March 2014; pp. 82–89.

- Endsley, M. Direct Measurement of Situation Awareness: Validity and Use of SAGAT. In Situation Awareness: Analysis and Measurement; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2000.

- Endsley, M. Situation awareness global assessment technique (SAGAT). In Proceedings of the IEEE 1988 National Aerospace and Electronics Conference, Dayton, OH, USA, 23–27 May 1988; Volume 3, pp. 789–795.

- Bew, G.; Baker, A.; Goodman, D.; Nardone, O.; Robinson, M. Measuring Situational Awareness at the small unit tactical level. In Proceedings of the 2015 Systems and Information Engineering Design Symposium, Charlottesville, VA, USA, 24 April 2015; pp. 51–56.

- Endsley, M.R. A Systematic Review and Meta-Analysis of Direct Objective Measures of Situation Awareness: A Comparison of SAGAT and SPAM. Hum. Factors 2021, 63, 124–150.

- Marvel, J.A.; Norcross, R. Implementing Speed and Separation Monitoring in collaborative robot workcells. Robot. Comput.-Integr. Manuf. 2017, 44, 144–155.

- Kumar, S.; Arora, S.; Sahin, F. Speed and Separation Monitoring using On-Robot Time-of-Flight Laser-ranging Sensor Arrays. In Proceedings of the 2019 IEEE 15th International Conference on Automation Science and Engineering (CASE), Vancouver, BC, Canada, 22–26 August 2019; pp. 1684–1691.

- Rosenstrauch, M.J.; Pannen, T.J.; Krüger, J. Human robot collaboration—using kinect v2 for ISO/TS 15066 Speed and Separation Monitoring. Procedia CIRP 2018, 76, 183–186.

- Ganglbauer, M.; Ikeda, M.; Plasch, M.; Pichler, A. Human in the loop online estimation of robotic speed limits for safe human robot collaboration. Procedia Manuf. 2020, 51, 88–94.

- Oh, K.; Kim, M. Social Attributes of Robotic Products: Observations of Child-Robot Interactions in a School Environment. Int. J. Design 2010, 4, 45–55.

- Sauppé, A.; Mutlu, B. The Social Impact of a Robot Co-Worker in Industrial Settings. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; pp. 3613–3622.

- Bruce, A.; Nourbakhsh, I.; Simmons, R. The role of expressiveness and attention in Human–Robot Interaction. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No. 02CH37292), Washington, DC, USA, 11–15 May 2002; Volume 4, pp. 4138–4142.

- Lu, L.; Xie, Z.; Wang, H.; Li, L.; Xu, X. Mental stress and safety awareness during Human–Robot collaboration—Review. Appl. Ergon. 2022, 105, 103832.

- Evans, G.; Miller, J.; Pena, M.; MacAllister, A.; Winer, E. Evaluating the Microsoft HoloLens through an Augmented Reality assembly application. In Proceedings of the SPIE Defense + Security, Anaheim, CA, USA, 9–13 April 2017; p. 101970V.

- Sääski, J.; Salonen, T.; Liinasuo, M.; Pakkanen, J.; Vanhatalo, M.; Riitahuhta, A. Augmented Reality Efficiency in Manufacturing Industry: A Case Study. In Proceedings of the DS 50: Proceedings of NordDesign 2008 Conference, Tallinn, Estonia, 21–23 August 2008.

- Palmarini, R.; del Amo, I.F.; Bertolino, G.; Dini, G.; Erkoyuncu, J.A.; Roy, R.; Farnsworth, M. Designing an AR interface to improve trust in Human-Robots collaboration. Procedia CIRP 2018, 70, 350–355.

- Tsamis, G.; Chantziaras, G.; Giakoumis, D.; Kostavelis, I.; Kargakos, A.; Tsakiris, A.; Tzovaras, D. Intuitive and Safe Interaction in Multi-User Human Robot Collaboration Environments through Augmented Reality Displays. In Proceedings of the 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Vancouver, BC, Canada, 8–12 August 2021; pp. 520–526.

- Vogel, C.; Schulenburg, E.; Elkmann, N. Projective-AR Assistance System for shared Human-Robot Workplaces in Industrial Applications. In Proceedings of the 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020; Volume 1, pp. 1259–1262.

- Choi, S.H.; Park, K.B.; Roh, D.H.; Lee, J.Y.; Mohammed, M.; Ghasemi, Y.; Jeong, H. An integrated mixed reality system for safety-aware Human–Robot collaboration using deep learning and digital twin generation. Robot. Comput.-Integr. Manuf. 2022, 73, 102258.

- Bassyouni, Z.; Elhajj, I.H. Augmented Reality Meets Artificial Intelligence in Robotics: A Systematic Review. Front. Robot. AI 2021, 8, 724798.

- Costa, G.D.M.; Petry, M.R.; Moreira, A.P. Augmented Reality for Human–Robot Collaboration and Cooperation in Industrial Applications: A Systematic Literature Review. Sensors 2022, 22, 2725.

- Franze, A.P.; Caldwell, G.A.; Teixeira, M.F.L.A.; Rittenbruch, M. Employing AR/MR Mockups to Imagine Future Custom Manufacturing Practices. In Proceedings of the 34th Australian Conference on Human-Computer Interaction (OzCHI ’22), New York, NY, USA, 6 April 2023; pp. 206–215.

- Fang, H.; Ong, S.; Nee, A. Robot Path and End-Effector Orientation Planning Using Augmented Reality. Procedia CIRP 2012, 3, 191–196.

- Doil, F.; Schreiber, W.; Alt, T.; Patron, C. Augmented Reality for manufacturing planning. In Proceedings of the Workshop on Virtual Environments 2003 (EGVE ’03), New York, NY, USA, 22–23 May 2003; pp. 71–76.

- Wang, Q.; Fan, X.; Luo, M.; Yin, X.; Zhu, W. Construction of Human-Robot Cooperation Assembly Simulation System Based on Augmented Reality. In Virtual, Augmented and Mixed Reality. Design and Interaction; Chen, J.Y.C., Fragomeni, G., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; pp. 629–642.

- Andersson, N.; Argyrou, A.; Nägele, F.; Ubis, F.; Campos, U.E.; Zarate, M.O.d.; Wilterdink, R. AR-Enhanced Human-Robot-Interaction—Methodologies, Algorithms, Tools. Procedia CIRP 2016, 44, 193–198.

- Michalos, G.; Karagiannis, P.; Makris, S.; Tokçalar, Ö.; Chryssolouris, G. Augmented Reality (AR) Applications for Supporting Human-robot Interactive Cooperation. Procedia CIRP 2016, 41, 370–375.

- Lunding, R.; Hubenschmid, S.; Feuchtner, T. Proposing a Hybrid Authoring Interface for AR-Supported Human–Robot Collaboration. 2024. Available online: https://openreview.net/forum?id=2w2ynC3yrM&noteId=ritvr8VKmu (accessed on 6 July 2024).

- Tabrez, A.; Luebbers, M.B.; Hayes, B. Descriptive and Prescriptive Visual Guidance to Improve Shared Situational Awareness in Human–Robot Teaming. In Proceedings of the 21st International Conference on Autonomous Agents and Multiagent Systems, Online, 9–13 May 2022.

- De Franco, A.; Lamon, E.; Balatti, P.; De Momi, E.; Ajoudani, A. An Intuitive Augmented Reality Interface for Task Scheduling, Monitoring, and Work Performance Improvement in Human-Robot Collaboration. In Proceedings of the 2019 IEEE International Work Conference on Bioinspired Intelligence (IWOBI), Budapest, Hungary, 3–5 July 2019; pp. 75–80.

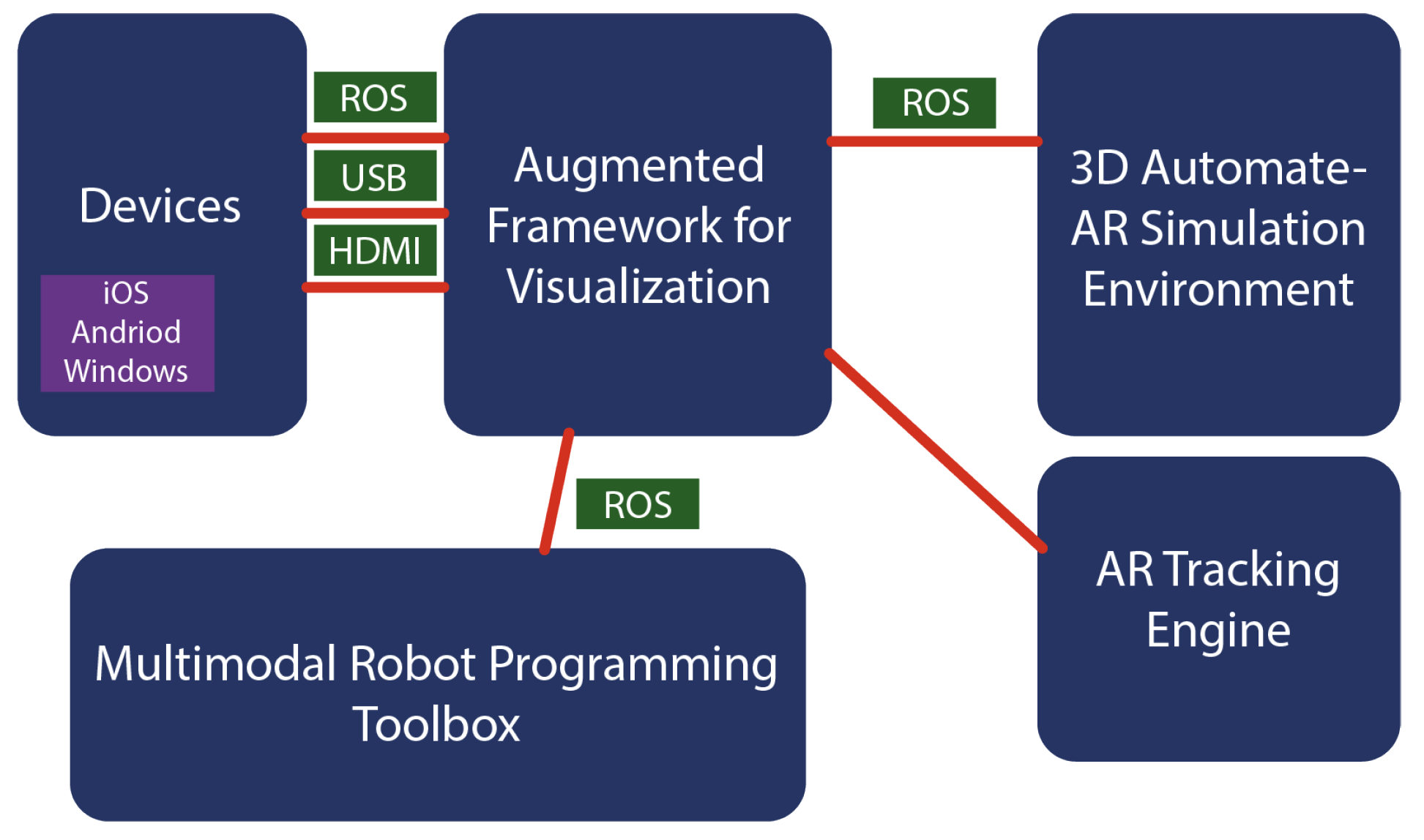

- Andronas, D.; Apostolopoulos, G.; Fourtakas, N.; Makris, S. Multi-modal interfaces for natural Human-Robot Interaction. Procedia Manuf. 2021, 54, 197–202.

- Gkournelos, C.; Karagiannis, P.; Kousi, N.; Michalos, G.; Koukas, S.; Makris, S. Application of Wearable Devices for Supporting Operators in Human-Robot Cooperative Assembly Tasks. Procedia CIRP 2018, 76, 177–182.

- Qiu, S.; Liu, H.; Zhang, Z.; Zhu, Y.; Zhu, S.C. Human-Robot Interaction in a Shared Augmented Reality Workspace. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 11413–11418.

- Sonawani, S.; Amor, H.B. When and Where Are You Going? A Mixed-Reality Framework for Human Robot Collaboration. 2022. Available online: https://openreview.net/forum?id=BSrx_Q2-Akq (accessed on 21 June 2024).

- Hietanen, A.; Latokartano, J.; Pieters, R.; Lanz, M.; Kämäräinen, J.K. AR-based interaction for safe Human–Robot collaborative manufacturing. arXiv 2019.

- Lunding, R.S.; Lunding, M.S.; Feuchtner, T.; Petersen, M.G.; Grønbæk, K.; Suzuki, R. RoboVisAR: Immersive Authoring of Condition-based AR Robot Visualisations. In Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’24), New York, NY, USA, 11–15 March 2024; pp. 462–471.

- Eschen, H.; Kötter, T.; Rodeck, R.; Harnisch, M.; Schüppstuhl, T. Augmented and Virtual Reality for Inspection and Maintenance Processes in the Aviation Industry. Procedia Manuf. 2018, 19, 156–163.

- Papanastasiou, S.; Kousi, N.; Karagiannis, P.; Gkournelos, C.; Papavasileiou, A.; Dimoulas, K.; Baris, K.; Koukas, S.; Michalos, G.; Makris, S. Towards seamless human robot collaboration: Integrating multimodal interaction. Int. J. Adv. Manuf. Technol. 2019, 105, 3881–3897.

- Makris, S.; Karagiannis, P.; Koukas, S.; Matthaiakis, A.S. Augmented Reality system for operator support in human–robot collaborative assembly. CIRP Ann. 2016, 65, 61–64.

- Matsas, E.; Vosniakos, G.C.; Batras, D. Prototyping proactive and adaptive techniques for Human–Robot collaboration in manufacturing using virtual reality. Robot. Comput.-Integr. Manuf. 2018, 50, 168–180.

- Bischoff, R.; Kazi, A. Perspectives on Augmented Reality based Human–Robot Interaction with industrial robots. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No. 04CH37566), Sendai, Japan, 28 September–2 October 2004; Volume 4, pp. 3226–3231.

- Matsas, E.; Vosniakos, G.C. Design of a virtual reality training system for human–robot collaboration in manufacturing tasks. Int. J. Interact. Des. Manuf. (IJIDeM) 2017, 11, 139–153.

- Liu, H.; Wang, L. An AR-based Worker Support System for Human-Robot Collaboration. Procedia Manuf. 2017, 11, 22–30.

- Janin, A.; Mizell, D.; Caudell, T. Calibration of head-mounted displays for Augmented Reality applications. In Proceedings of the IEEE Virtual Reality Annual International Symposium, Seattle, WA, USA, 18–22 September 1993; pp. 246–255.

- Mitaritonna, A.; Abásolo, M.J.; Montero, F. An Augmented Reality-based Software Architecture to Support Military Situational Awareness. In Proceedings of the 2020 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Istanbul, Turkey, 12–13 June 2020; pp. 1–6.

- Sheikh Bahaei, S.; Gallina, B. Assessing risk of AR and organizational changes factors in socio-technical robotic manufacturing. Robot. Comput.-Integr. Manuf. 2024, 88, 102731.

- Feddoul, Y.; Ragot, N.; Duval, F.; Havard, V.; Baudry, D.; Assila, A. Exploring human-machine collaboration in industry: A systematic literature review of digital twin and robotics interfaced with extended reality technologies. Int. J. Adv. Manuf. Technol. 2023, 129, 1917–1932.

- Maruyama, T.; Ueshiba, T.; Tada, M.; Toda, H.; Endo, Y.; Domae, Y.; Nakabo, Y.; Mori, T.; Suita, K. Digital Twin-Driven Human Robot Collaboration Using a Digital Human. Sensors 2021, 21, 8266.

- Shaaban, M.; Carfì, A.; Mastrogiovanni, F. Digital Twins for Human-Robot Collaboration: A Future Perspective. In Intelligent Autonomous Systems 18; Lee, S.G., An, J., Chong, N.Y., Strand, M., Kim, J.H., Eds.; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2024; Volume 795, pp. 429–441.

- Carroll, J.M. HCI Models, Theories, and Frameworks: Toward a Multidisciplinary Science; Elsevier: Amsterdam, The Netherlands, 2003.

- Nazari, A.; Alabood, L.; Feeley, K.B.; Jaswal, V.K.; Krishnamurthy, D. Personalizing an AR-based Communication System for Nonspeaking Autistic Users. In Proceedings of the 29th International Conference on Intelligent User Interfaces (IUI ’24), New York, NY, USA, 18–21 March 2024; pp. 731–741.

- von Sawitzky, T.; Wintersberger, P.; Riener, A.; Gabbard, J.L. Increasing trust in fully automated driving: Route indication on an Augmented Reality head-up display. In Proceedings of the 8th ACM International Symposium on Pervasive Displays (PerDis ’19), New York, NY, USA, 12–14 June 2019.

- Chang, C.J.; Hsu, Y.L.; Tan, W.T.M.; Chang, Y.C.; Lu, P.C.; Chen, Y.; Wang, Y.H.; Chen, M.Y. Exploring Augmented Reality Interface Designs for Virtual Meetings in Real-world Walking Contexts. In Proceedings of the 2024 ACM Designing Interactive Systems Conference (DIS ’24), New York, NY, USA, 1–5 July 2024; pp. 391–408.

- Norman, D.A. The Design of Everyday Things, Revised Edition; Basic Books: New York, NY, USA, 2013.

- Laugwitz, B.; Held, T.; Schrepp, M. Construction and Evaluation of a User Experience Questionnaire. In HCI and Usability for Education and Work; Holzinger, A., Ed.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2008; Volume 5298, pp. 63–76.

- Brooke, J. SUS—A quick and dirty usability scale. In Usability Evaluation in Industry; CRC Press: Boca Raton, FL, USA, 1996.

- Vredenburg, K.; Mao, J.Y.; Smith, P.W.; Carey, T. A survey of user-centered design practice. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’02), New York, NY, USA, 20–25 April 2002; pp. 471–478.

- Abras, C.; Maloney-Krichmar, D.; Preece, J. User-Centered Design. In Berkshire Encyclopedia of Human-Computer Interaction; Bainbridge, W., Ed.; Sage Publications: Thousand Oaks, CA, USA, 2004.

- Geerts, D.; Vatavu, R.D.; Burova, A.; Vinayagamoorthy, V.; Mott, M.; Crabb, M.; Gerling, K. Challenges in Designing Inclusive Immersive Technologies. In Proceedings of the 20th International Conference on Mobile and Ubiquitous Multimedia (MUM ’21), New York, NY, USA, 5–8 December 2021; pp. 182–185.

- Tanevska, A.; Chandra, S.; Barbareschi, G.; Eguchi, A.; Han, Z.; Korpan, R.; Ostrowski, A.K.; Perugia, G.; Ravindranath, S.; Seaborn, K.; et al. Inclusive HRI II: Equity and Diversity in Design, Application, Methods, and Community. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’23), New York, NY, USA, 13–16 March 2023; pp. 956–958.

- Ejaz, A.; Syed, D.; Yasir, M.; Farhan, D. Graphic User Interface Design Principles for Designing Augmented Reality Applications. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 0100228.

- Knowles, B.; Clear, A.K.; Mann, S.; Blevis, E.; Håkansson, M. Design Patterns, Principles, and Strategies for Sustainable HCI. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA ’16), New York, NY, USA, 7–12 May 2016; pp. 3581–3588.

- Nebeling, M.; Oki, M.; Gelsomini, M.; Hayes, G.R.; Billinghurst, M.; Suzuki, K.; Graf, R. Designing Inclusive Future Augmented Realities. In Proceedings of the Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems (CHI EA ’24), New York, NY, USA, 11–16 May 2024; pp. 1–6.

- Samaradivakara, Y.; Ushan, T.; Pathirage, A.; Sasikumar, P.; Karunanayaka, K.; Keppitiyagama, C.; Nanayakkara, S. SeEar: Tailoring Real-time AR Caption Interfaces for Deaf and Hard-of-Hearing (DHH) Students in Specialized Educational Settings. In Proceedings of the Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems (CHI EA ’24), New York, NY, USA, 11–16 May 2024; pp. 1–8.

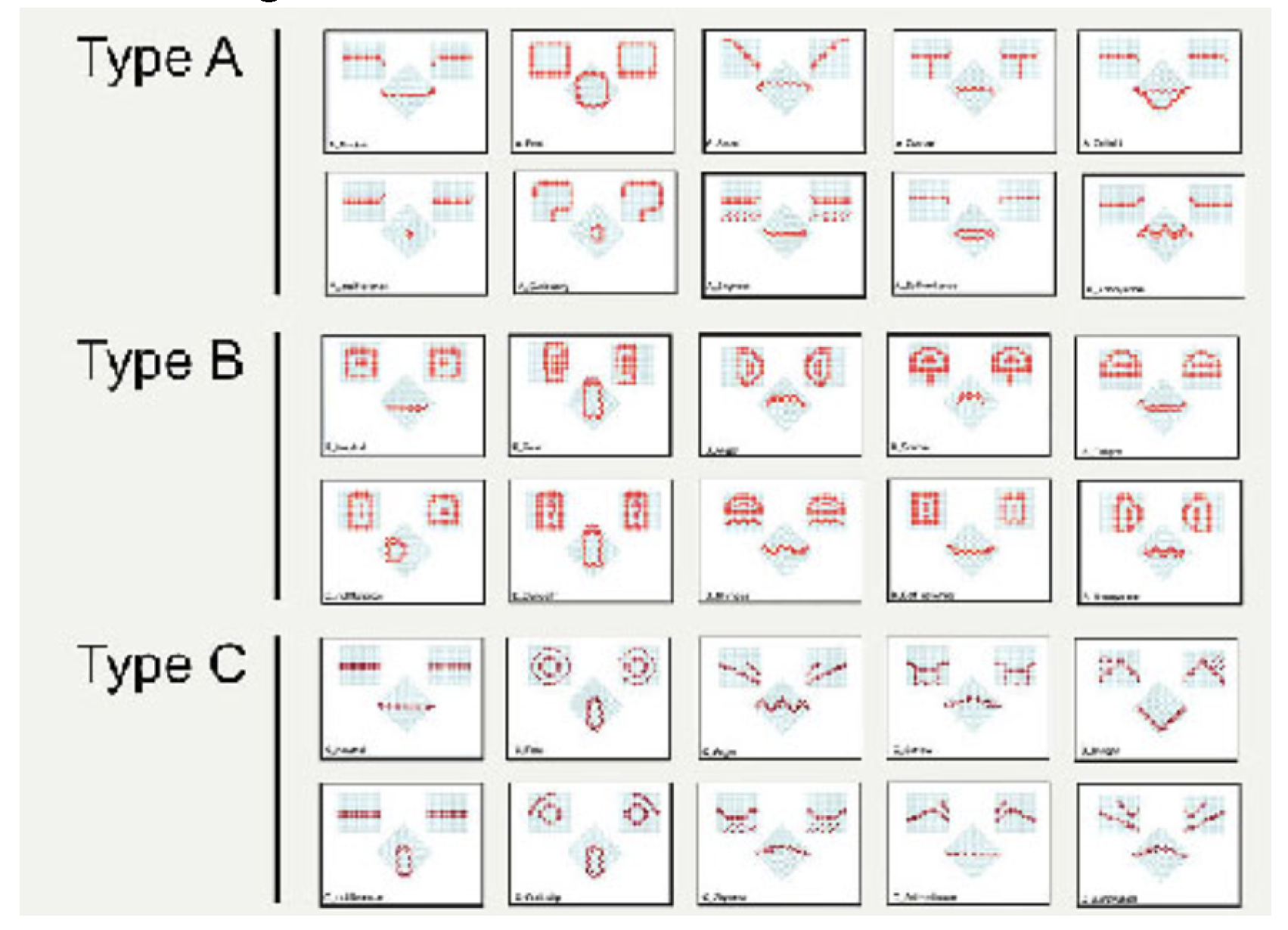

- Fang, H.C.; Ong, S.K.; Nee, A.Y.C. Novel AR-based interface for Human–Robot Interaction and visualization. Adv. Manuf. 2014, 2, 275–288.

- Alt, B.; Zahn, J.; Kienle, C.; Dvorak, J.; May, M.; Katic, D.; Jäkel, R.; Kopp, T.; Beetz, M.; Lanza, G. Human-AI Interaction in Industrial Robotics: Design and Empirical Evaluation of a User Interface for Explainable AI-Based Robot Program Optimization. arXiv 2024.

- Lindblom, J.; Alenljung, B. The ANEMONE: Theoretical Foundations for UX Evaluation of Action and Intention Recognition in Human-Robot Interaction. Sensors 2020, 20, 4284.

- Jeffri, N.F.S.; Rambli, D.R.A. Guidelines for the Interface Design of AR Systems for Manual Assembly. In Proceedings of the 2020 4th International Conference on Virtual and Augmented Reality Simulations (ICVARS ’20), New York, NY, USA, 14–16 February 2020; pp. 70–77.

- Wewerka, J.; Micus, C.; Reichert, M. Seven Guidelines for Designing the User Interface in Robotic Process Automation. In Proceedings of the 2021 IEEE 25th International Enterprise Distributed Object Computing Workshop (EDOCW), Gold Coast, Australia, 25–29 October 2021; pp. 157–165.

- Zhao, Y.; Masuda, L.; Loke, L.; Reinhardt, D. Towards a Design Toolkit for Designing AR Interface with Head-Mounted Display for Close-Proximity Human–Robot Collaboration in Fabrication. In Collaboration Technologies and Social Computing; Takada, H., Marutschke, D.M., Alvarez, C., Inoue, T., Hayashi, Y., Hernandez-Leo, D., Eds.; Springer: Cham, Switzerland, 2023; pp. 135–143.

- Marvel, J.A. Performance Metrics of Speed and Separation Monitoring in Shared Workspaces. IEEE Trans. Autom. Sci. Eng. 2013, 10, 405–414.

- Kumar, S.P. Dynamic Speed and Separation Monitoring with On-Robot Ranging Sensor Arrays for Human and Industrial Robot Collaboration. Ph.D. Thesis, Rochester Institute of Technology, Rochester, NY, USA, 2020.

- Scalera, L.; Giusti, A.; Vidoni, R.; Gasparetto, A. Enhancing fluency and productivity in Human–Robot collaboration through online scaling of dynamic safety zones. Int. J. Adv. Manuf. Technol. 2022, 121, 6783–6798.

- Zanchettin, A.M.; Lacevic, B. Safe and minimum-time path-following problem for collaborative industrial robots. J. Manuf. Syst. 2022, 65, 686–693.

- Savur, C. A Physiological Computing System to Improve Human–Robot Collaboration by Using Human Comfort Index. Ph.D. Thesis, Rochester Institute of Technology, Rochester, NY, USA, 2022.

- Chacón, A.; Ponsa, P.; Angulo, C. Cognitive Interaction Analysis in Human–Robot Collaboration Using an Assembly Task. Electronics 2021, 10, 1317.

- Bradley, M.M.; Lang, P.J. Measuring emotion: The self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 1994, 25, 49–59.

- Betella, A.; Verschure, P.F.M.J. The Affective Slider: A Digital Self-Assessment Scale for the Measurement of Human Emotions. PLoS ONE 2016, 11, e0148037.

- Legler, F.; Trezl, J.; Langer, D.; Bernhagen, M.; Dettmann, A.; Bullinger, A.C. Emotional Experience in Human–Robot Collaboration: Suitability of Virtual Reality Scenarios to Study Interactions beyond Safety Restrictions. Robotics 2023, 12, 168.

- Savur, C.; Sahin, F. Survey on Physiological Computing in Human–Robot Collaboration. Machines 2023, 11, 536.

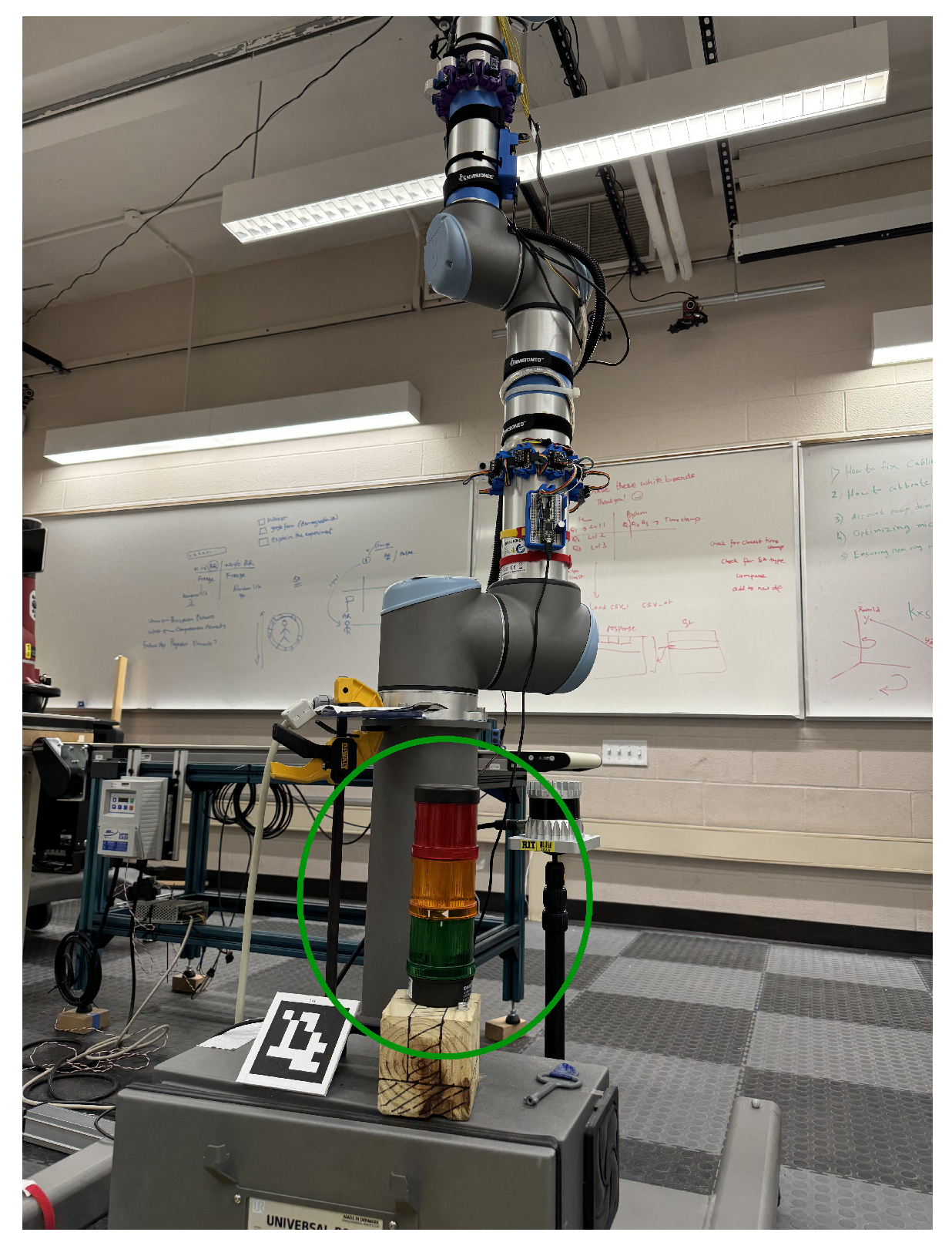

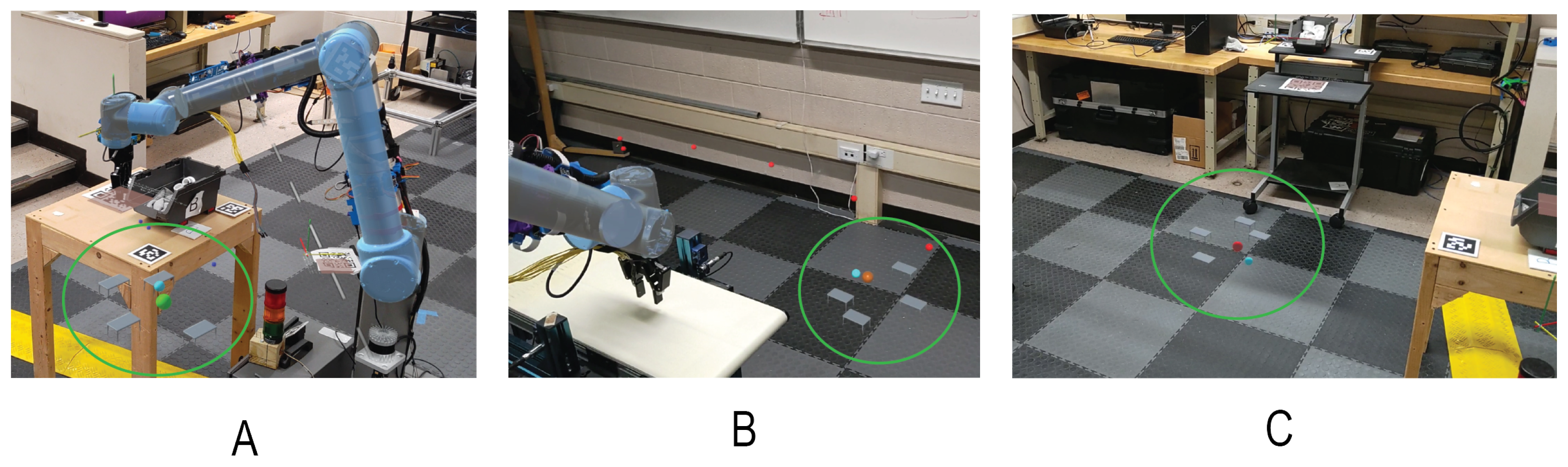

- Sahin, M.; Subramanian, K.; Sahin, F. Using Augmented Reality to Enhance Worker Situational Awareness in Human-Robot Interaction. In Proceedings of the 2024 IEEE Conference on Telepresence, California Institute of Technology, Pasadena, CA, USA, 16–17 November 2024. Accepted for Presentation.

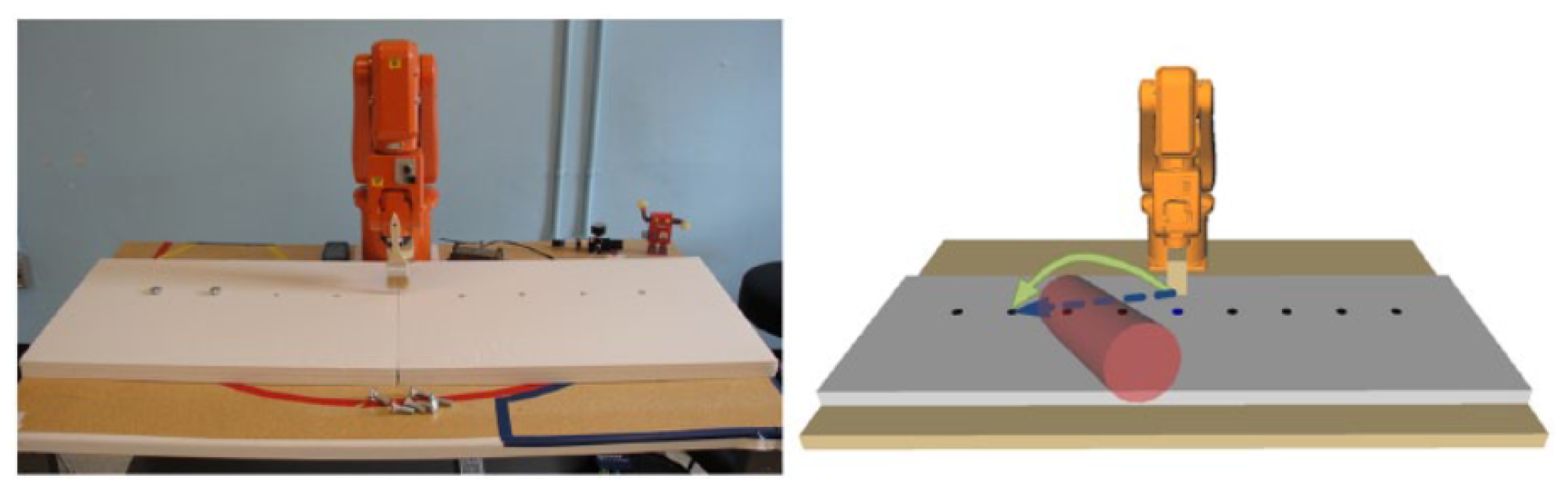

- Subramanian, K.; Arora, S.; Adamides, O.; Sahin, F. Using Mixed Reality for Safe Physical Human–Robot Interaction. In Proceedings of the 2024 IEEE Conference on Telepresence, California Institute of Technology, Pasadena, CA, USA, 16–17 November 2024. Accepted for Presentation.

| Reference | Category | Use of AR | Hardware |

|---|---|---|---|

| Fang et al. [65] | Collaboration | Path Plan | Display |

| Doil et al. [66] | Collaboration | Task Plan | HMD+ |

| Wang et al. [67] | Collaboration | Task Plan | HMD |

| Andersson et al. [68] | Collaboration | Task Train | HMD |

| Saaski et al. [57] | Collaboration | Task Instr | HMD |

| Michalos et al. [69] | Collaboration | Task Instr | HMD+ |

| Liu et al. [31] | Collaboration | Task Instr | Display |

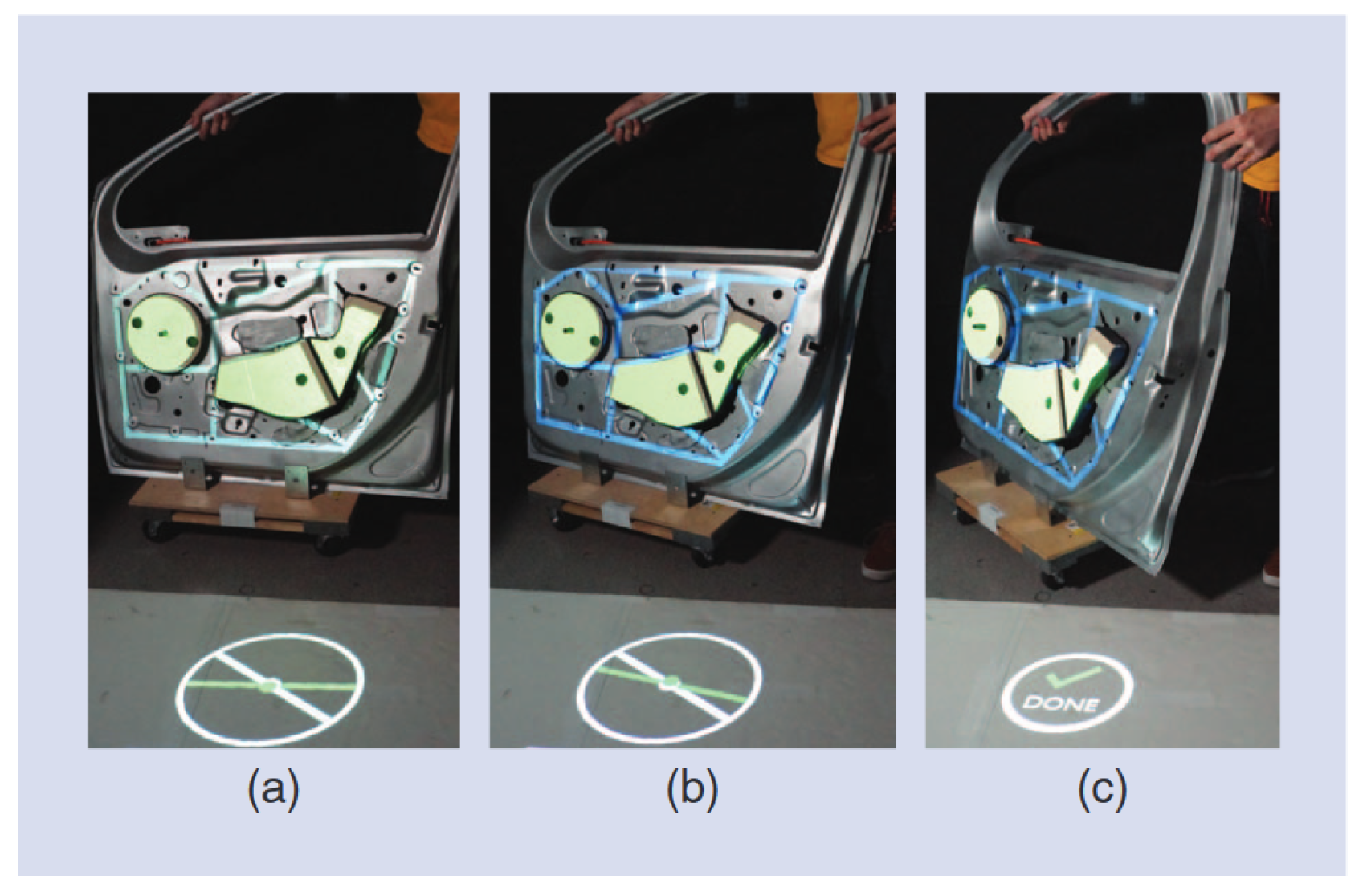

| Kalpangam et al. [8] | Collaboration | Task Instr | Projection |

| Lunding et al. [70] | Collaboration | Communication | HMD |

| Tabrez et al. [71] | Collaboration | Communication | HMD |

| De Franco et al. [72] | Collaboration | Task Collab | HMD |

| Andronas et al. [73] | Collaboration | Interact. Cues | HMD |

| Gkournelos et al. [74] | Collaboration | Interact. Cues | Smartwatch |

| Qui et al. [75] | Collaboration | Interact. Cues | Web |

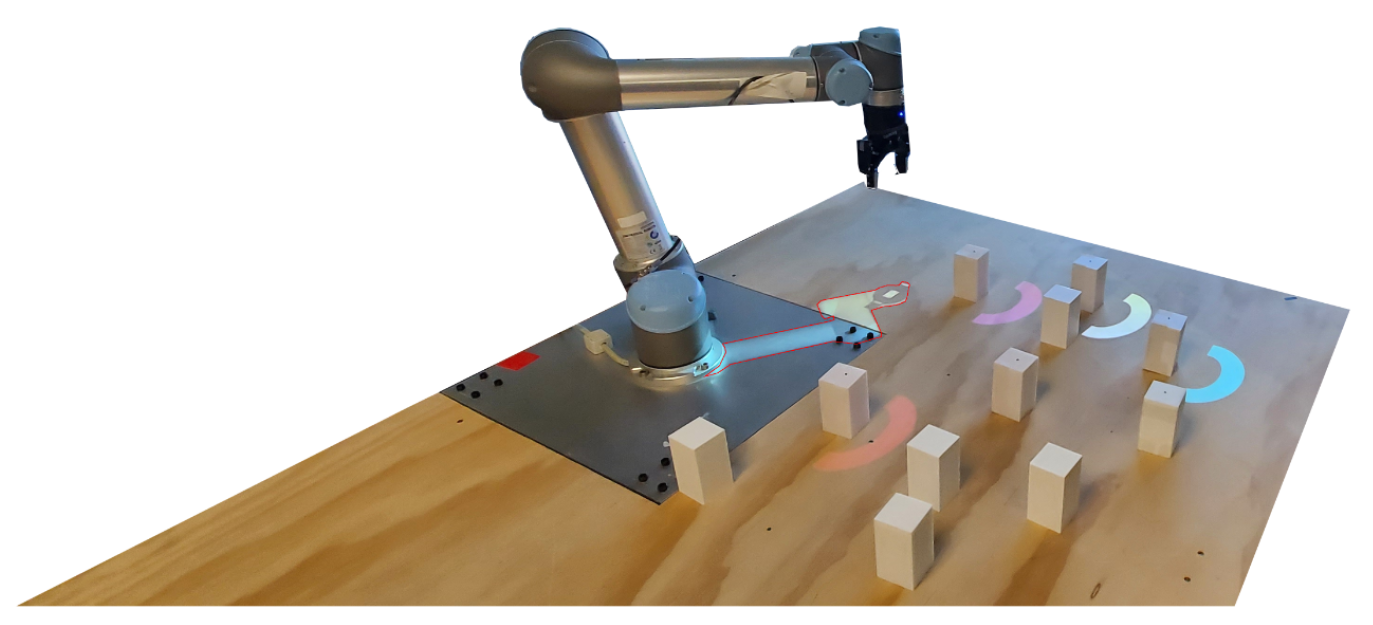

| Sonawwani et al. [76] | Collaboration | Interact. Cues | Projection |

| Tsamis et al. [59] | Trust and Safety | Visual. Actions | HMD |

| Palmarini et al. [58] | Trust and Safety | Visual. Actions | Tablet |

| Vogel et al. [60] | Trust and Safety | Safe Zone | Projection |

| Choi et al. [61] | Trust and Safety | Safe Zone | HMD |

| Hietanen et al. [77] | Trust and Safety | Safe Zone | HMD+ |

| Lunding et al. [78] | Trust and Safety | Safe Zone | HMD |

| Eschen et al. [79] | Trust and Safety | Safe Maint | HMD |

| Papanastasiou et al. [80] | Trust and Safety | Safe OS | HMD+ |

| Makris et al. [81] | Trust and Safety | Safe OS | HMD |

| Matsas et al. [82] | Trust and Safety | Collis. Avd | Projection |
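The hardware column of the summary table can be tallied directly. The following is a minimal Python sketch that encodes each row as a record and counts the hardware labels. The record layout and the names `STUDIES`, `tally_hardware` and `head_mounted_share` are hypothetical. Only the reference numbers, categories and hardware labels come from the table, and the "Use of AR" column is left out for brevity.

```python
from collections import Counter

# Hypothetical encoding of the summary table: (reference number, category, hardware).
STUDIES = [
    (65, "Collaboration", "Display"),
    (66, "Collaboration", "HMD+"),
    (67, "Collaboration", "HMD"),
    (68, "Collaboration", "HMD"),
    (57, "Collaboration", "HMD"),
    (69, "Collaboration", "HMD+"),
    (31, "Collaboration", "Display"),
    (8, "Collaboration", "Projection"),
    (70, "Collaboration", "HMD"),
    (71, "Collaboration", "HMD"),
    (72, "Collaboration", "HMD"),
    (73, "Collaboration", "HMD"),
    (74, "Collaboration", "Smartwatch"),
    (75, "Collaboration", "Web"),
    (76, "Collaboration", "Projection"),
    (59, "Trust and Safety", "HMD"),
    (58, "Trust and Safety", "Tablet"),
    (60, "Trust and Safety", "Projection"),
    (61, "Trust and Safety", "HMD"),
    (77, "Trust and Safety", "HMD+"),
    (78, "Trust and Safety", "HMD"),
    (79, "Trust and Safety", "HMD"),
    (80, "Trust and Safety", "HMD+"),
    (81, "Trust and Safety", "HMD"),
    (82, "Trust and Safety", "Projection"),
]


def tally_hardware(rows, category=None):
    """Count hardware labels, optionally restricted to one category."""
    return Counter(hw for _, cat, hw in rows if category is None or cat == category)


def head_mounted_share(rows):
    """Fraction of rows using a head-mounted display ('HMD+' counts as head-mounted)."""
    head_mounted = sum(1 for _, _, hw in rows if hw.startswith("HMD"))
    return head_mounted / len(rows)


if __name__ == "__main__":
    print(tally_hardware(STUDIES).most_common())
    print(tally_hardware(STUDIES, "Trust and Safety"))
    print(f"{head_mounted_share(STUDIES):.0%} of listed studies use a head-mounted display")
```

Counted this way, plain HMDs appear in 12 rows and HMD+ setups in 4. Together they account for 16 of the 25 listed studies. Projection (4 rows) is the next most common hardware.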

| Metric | T-Statistic | p-Value |
|---|---|---|
| Average Perception Error | −3.5871 | 0.002106 |
| Percent Correct | 2.4179 | 0.02643 |
| Mean Confidence | 4.0171 | 0.008085 |
| Mean Response Time | −1.7847 | 0.09115 |
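Each T-Statistic in the table above compares two experimental conditions. This section does not say which t-test produced the values. Purely as an illustration, the sketch below assumes a paired (within-subjects) design and computes the statistic from per-participant scores as t = mean(d) / (sd(d) / √n), where d is the per-participant difference. The function name and the sample scores are hypothetical and are not data from the study.

```python
import math
import statistics


def paired_t_statistic(condition_a, condition_b):
    """Paired-samples t statistic for per-participant scores.

    Differences are taken as condition_a - condition_b, so a negative t means
    condition_a scored lower on average. Returns (t, degrees_of_freedom).
    """
    if len(condition_a) != len(condition_b):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(n)), n - 1


if __name__ == "__main__":
    # Hypothetical scores for four participants, not data from the study.
    with_ar = [0.80, 0.90, 0.70, 0.85]
    baseline = [0.60, 0.75, 0.65, 0.70]
    t, df = paired_t_statistic(with_ar, baseline)
    print(f"t = {t:.3f}, df = {df}")
```

The sign depends on the order of subtraction. If differences were taken as AR minus baseline, a negative t for an error metric would mean lower error with AR. To get a two-sided p-value, refer |t| to the Student t distribution with `df` degrees of freedom, for example `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available.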

| Error Metric | Value |
|---|---|
| Max | 0.07435 |
| Min | 0.00607 |
| Median | 0.03087 |
| Mean | 0.03149 |
| RMSE | 0.03382 |
| Std Dev | 0.01234 |
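The summary statistics in the error table above are not independent of one another. The mean of the squared errors equals the squared mean plus the variance, so when Std Dev is the population standard deviation, RMSE² = Mean² + Std Dev². With the reported values, √(0.03149² + 0.01234²) ≈ 0.03382, which matches the RMSE row. The sketch below computes all six metrics from a list of per-trial errors and checks this identity. The function names are hypothetical, and this section does not state how the errors were sampled or in what units they are expressed.

```python
import math
import statistics


def error_summary(errors):
    """Max, min, median, mean, RMSE, and population standard deviation of errors."""
    if not errors:
        raise ValueError("need at least one error value")
    return {
        "max": max(errors),
        "min": min(errors),
        "median": statistics.median(errors),
        "mean": statistics.fmean(errors),
        "rmse": math.sqrt(statistics.fmean(e * e for e in errors)),
        "std_dev": statistics.pstdev(errors),  # population SD (divides by n)
    }


def rmse_from_mean_and_std(mean, std_dev):
    """RMSE implied by a mean and a population standard deviation: sqrt(mean^2 + sd^2)."""
    return math.hypot(mean, std_dev)


if __name__ == "__main__":
    # Consistency check using the Mean and Std Dev values reported in the table.
    print(round(rmse_from_mean_and_std(0.03149, 0.01234), 5))  # 0.03382
```

If the table's Std Dev had instead been the sample standard deviation (n − 1 denominator), the identity would hold only approximately, with the gap shrinking as the number of trials grows.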

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Subramanian, K.; Thomas, L.; Sahin, M.; Sahin, F. Supporting Human–Robot Interaction in Manufacturing with Augmented Reality and Effective Human–Computer Interaction: A Review and Framework. Machines 2024, 12, 706. https://doi.org/10.3390/machines12100706

Subramanian K, Thomas L, Sahin M, Sahin F. Supporting Human–Robot Interaction in Manufacturing with Augmented Reality and Effective Human–Computer Interaction: A Review and Framework. Machines. 2024; 12(10):706. https://doi.org/10.3390/machines12100706

Chicago/Turabian Style: Subramanian, Karthik, Liya Thomas, Melis Sahin, and Ferat Sahin. 2024. "Supporting Human–Robot Interaction in Manufacturing with Augmented Reality and Effective Human–Computer Interaction: A Review and Framework" Machines 12, no. 10: 706. https://doi.org/10.3390/machines12100706

APA Style: Subramanian, K., Thomas, L., Sahin, M., & Sahin, F. (2024). Supporting Human–Robot Interaction in Manufacturing with Augmented Reality and Effective Human–Computer Interaction: A Review and Framework. Machines, 12(10), 706. https://doi.org/10.3390/machines12100706