Fuzzy-Based Image Contrast Enhancement for Wind Turbine Detection: A Case Study Using Visual Geometry Group Model 19, Xception, and Support Vector Machines

Abstract

1. Introduction

- Implementation of the proposed fuzzy contrast enhancement (FCE) image preprocessing step for VGG19, Xception and SVM algorithms. Additionally, the performances of all algorithms without and with the proposed FCE were analyzed and compared.

- Creation of a novel RGB dataset of 4500 aerial images from a Primus Air Max small wind turbine mimicking the environment of a wind turbine farm using a small-scale wind turbine prototype to compare the performance of the three ML algorithms.

- Detailed analyses and implementation of the VGG19, Xception, and SVM algorithms using different optimization methods, model training, and hyperparameter tuning technologies.

2. Convolutional Neural Networks (CNNs)

2.1. VGG19

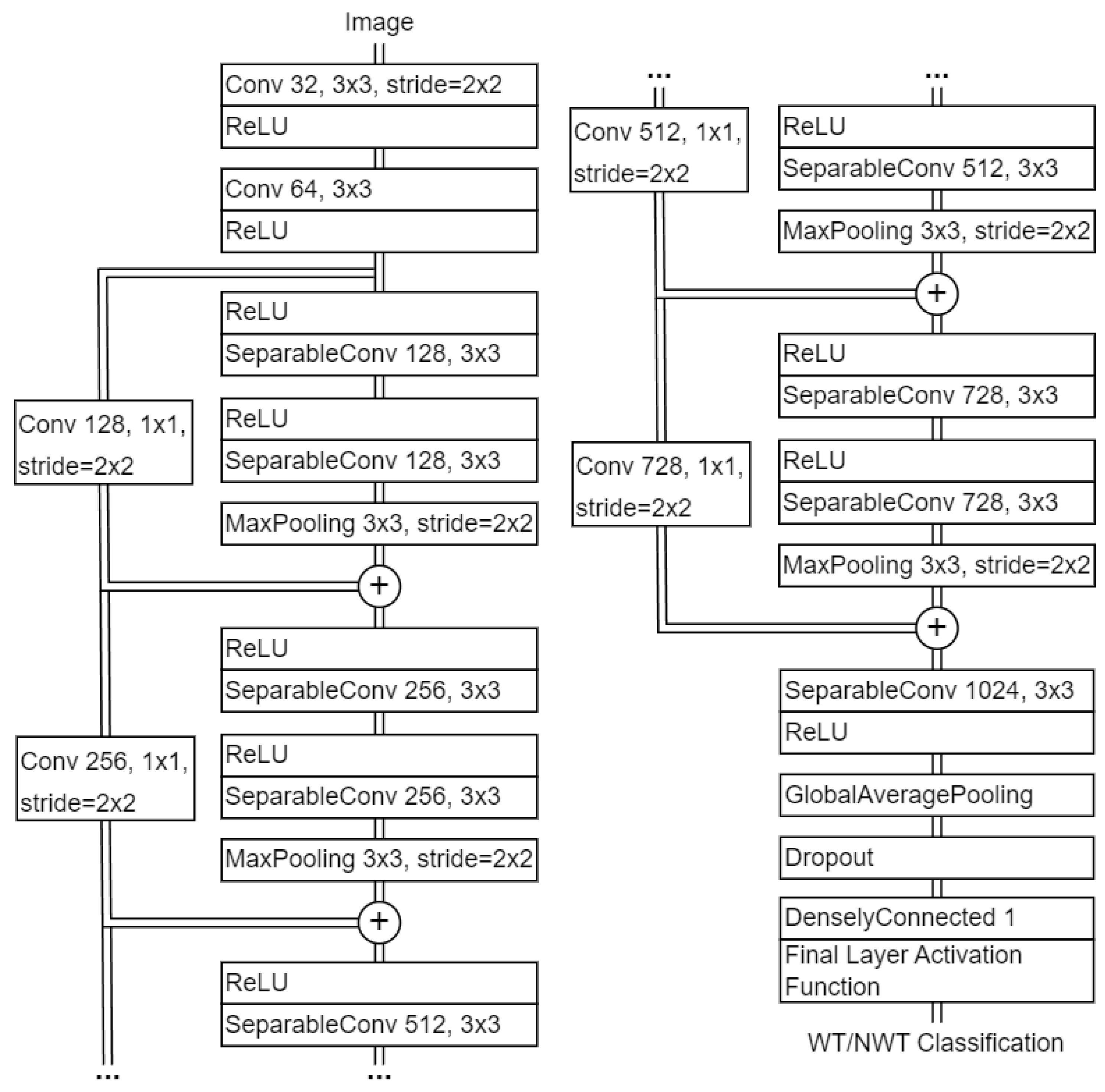

2.2. Xception

3. Support Vector Machine (SVM)

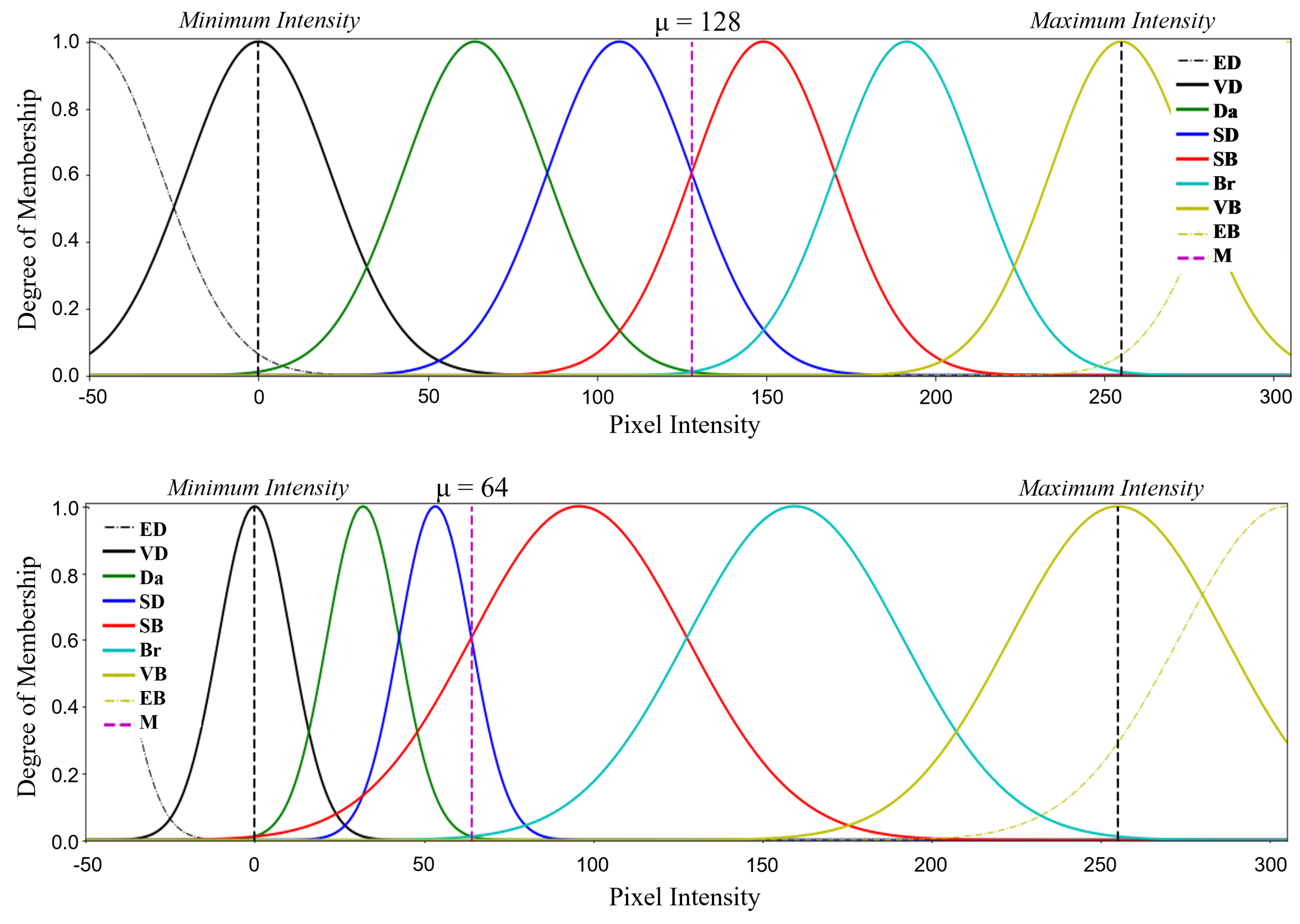

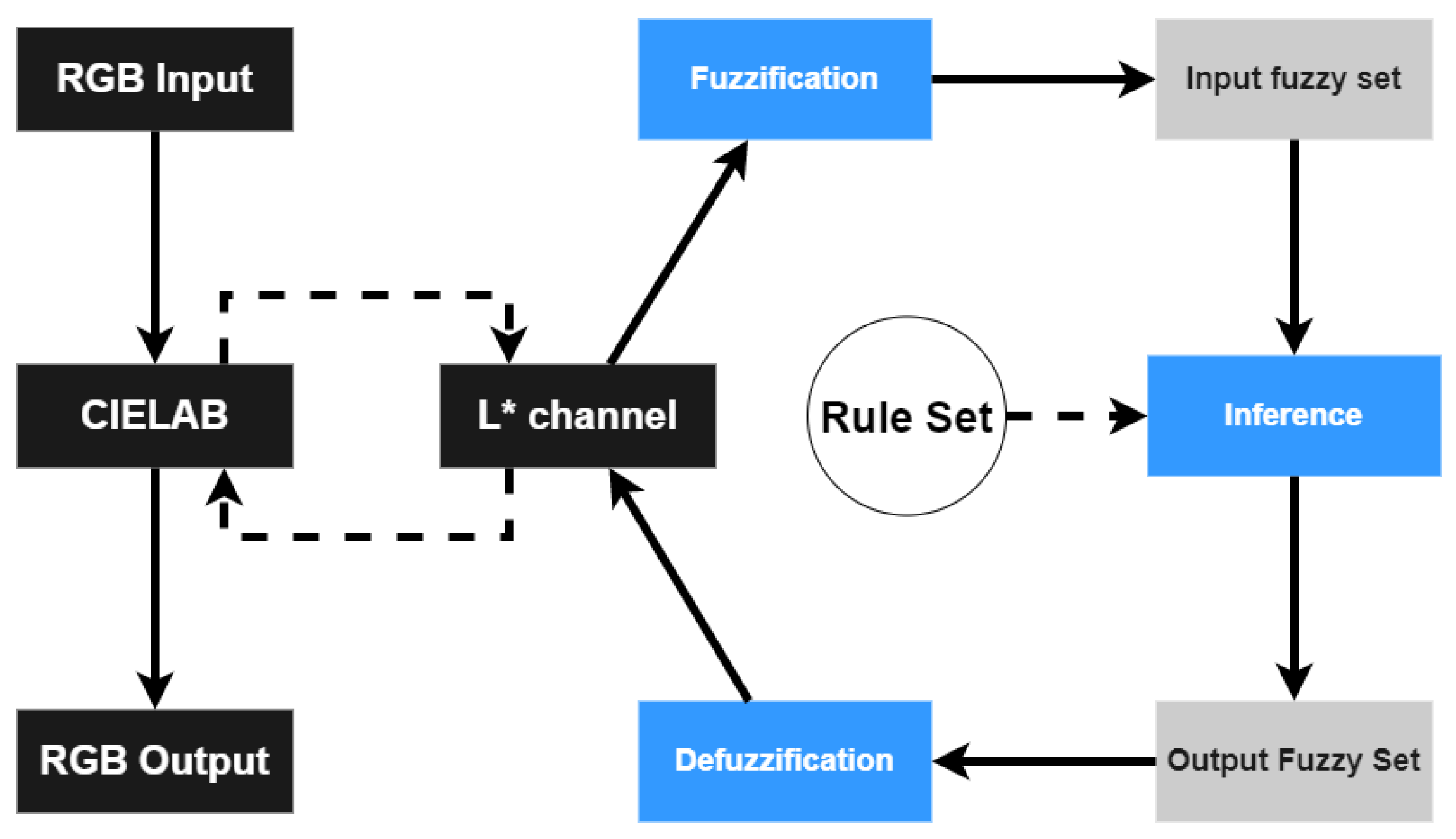

4. Proposed Image Preprocessing with Fuzzy Contrast Enhancement (FCE)

4.1. CIELAB Color Space

4.2. Fuzzification of Image Pixel Intensity

| Algorithm 1 Fuzzy Logic-Based Image Contrast Enhancement |

| Input: Aerial RGB image Output: Aerial RGB image with fuzzy contrast enhancement

|

5. Hyperparameter Tuning

5.1. Hyperparameter Categories

5.1.1. Optimizer

5.1.2. Activation Functions

5.1.3. Batch Size

5.1.4. Loss Function

5.1.5. Dropout

6. Experimental Results

6.1. Dataset Overview and Characteristics

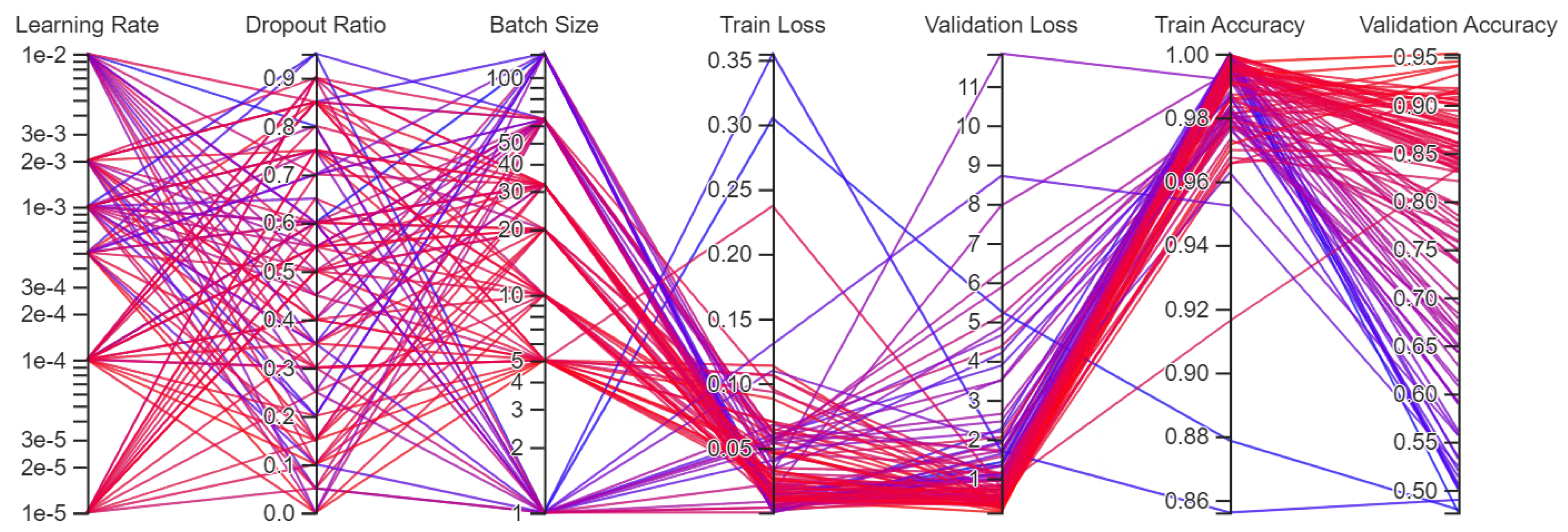

6.2. VGG19 Experimental Results

6.2.1. VGG19 Case Study A1

6.2.2. VGG19 Case Study A2

6.2.3. VGG19 Case Study A3

6.2.4. VGG19 Case Study A4

6.2.5. VGG19 Case Study A5

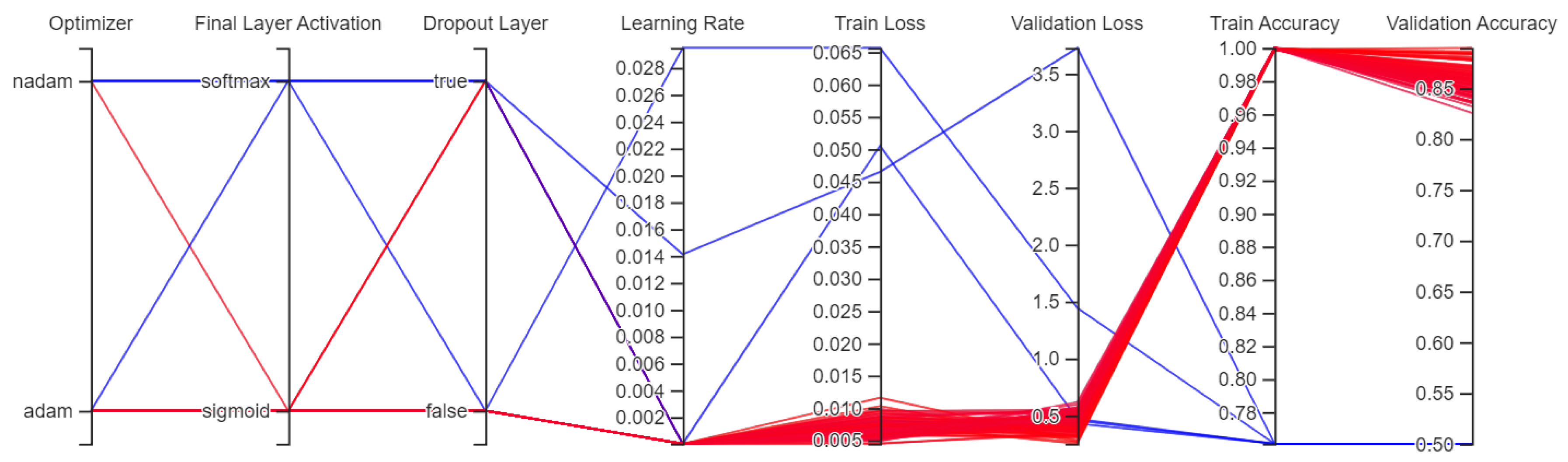

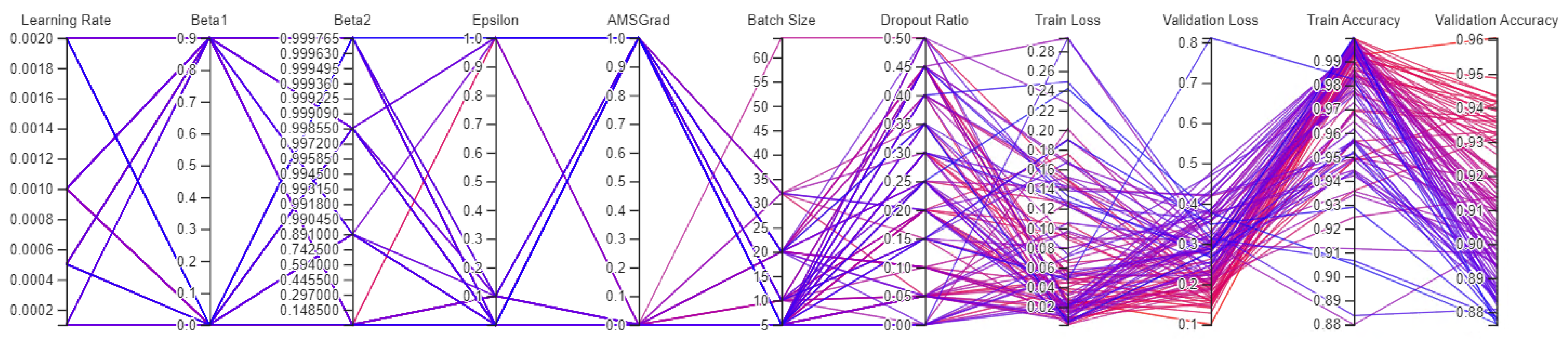

6.3. Xception Experimental Results

6.3.1. Xception Case Study B1

6.3.2. Xception Case Study B2

6.3.3. Xception Case Study B3

6.3.4. Xception Case Study B4

6.3.5. Xception Case Study B5

6.3.6. Xception Case Study B6

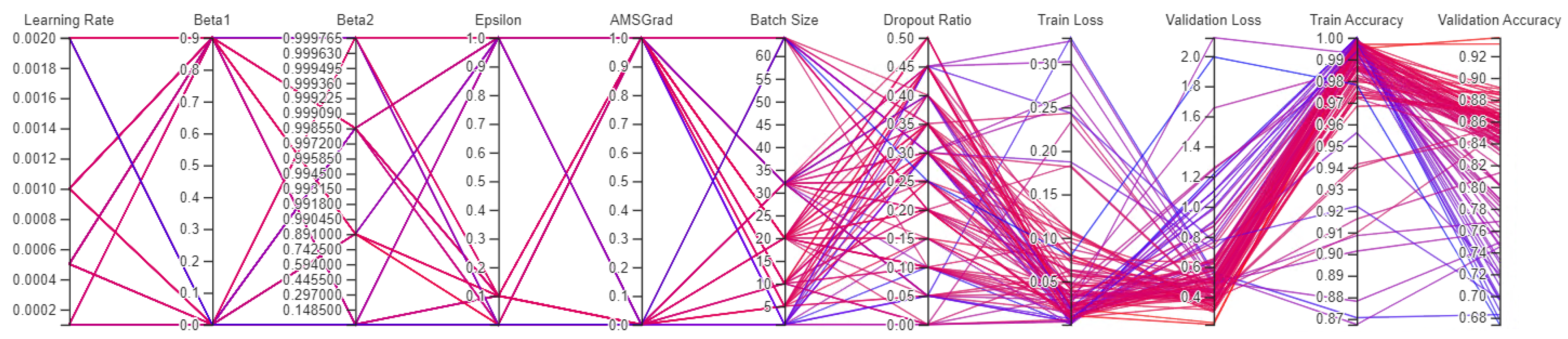

6.4. SVM Experimental Results

6.4.1. SVM Case Study C1

6.4.2. SVM Case Study C2

6.4.3. SVM Case Study C3

6.4.4. SVM Case Study C4

7. Conclusions

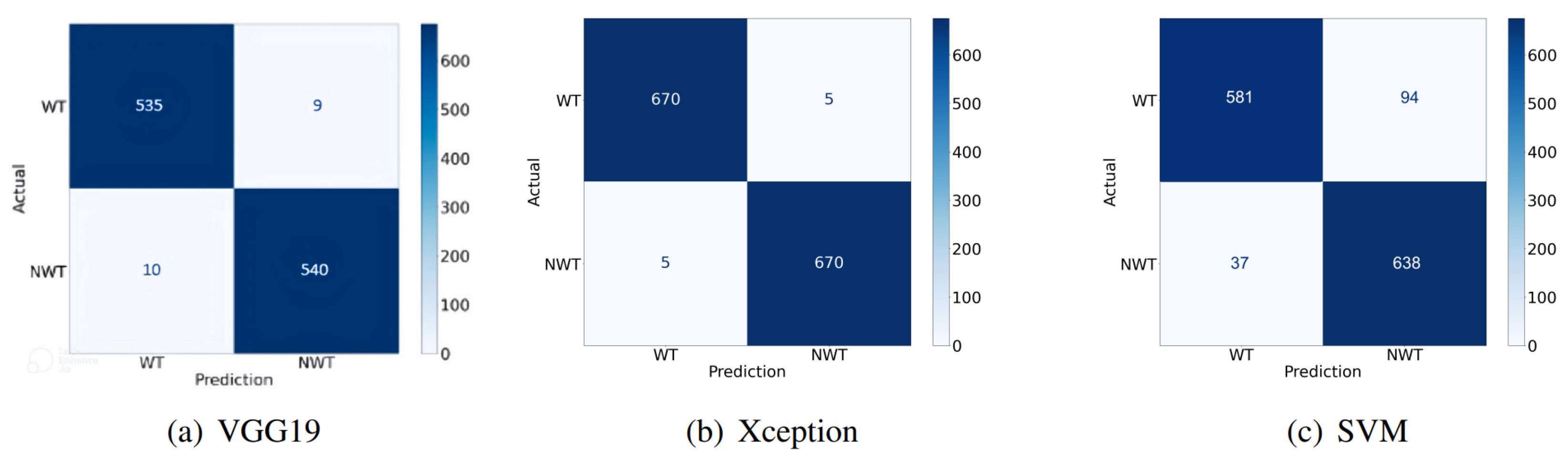

- A comprehensive exploration, implementation, and comparison of three distinct machine learning algorithms, comprising two convolutional neural networks and the SVM algorithm. These algorithms are utilized to classify whether RGB images contain wind turbines.

- The application of the proposed fuzzy contrast enhancement (FCE) data preprocessing step to the VGG19, Xception, and SVM machine learning algorithms, accompanied by a comparison of their conventional performance against their performance when augmented by this preprocessing step.

- The creation of a novel Primus Air Max wind turbine classification dataset, consisting of 4500 RGB images, and its utilization to assess the performance of the implemented VGG19, Xception, and SVM algorithms.

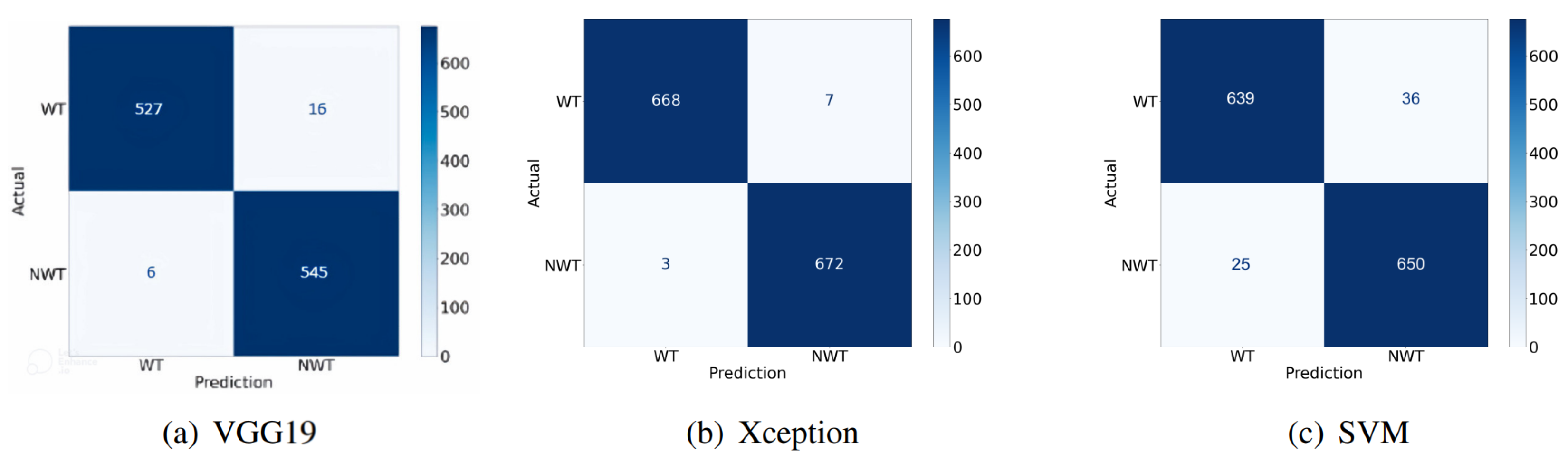

- The assessed convolutional neural networks, namely Xception and VGG19, demonstrated commendable performances with accuracies above 98%. In contrast, as anticipated, the SVM algorithm exhibited a less favorable accuracy of around 90%.

- The implementation of the proposed FCE results in enhanced accuracy for Xception and SVM, while VGG19 does not experience similar improvements.

- The results presented in Figure 7 and Figure 8 and Table 2 and Table 3 demonstrate that implementing the proposed FCE leads to improvements in accuracy, precision, and F1 score. Specifically, for the Xception model, these metrics increase from 99%, 99.2%, and 98.99% to 99.18%, 99.5%, and 99.18%, respectively. Similarly, for the SVM algorithm, the application of FCE raises accuracy, precision, and F1 score from 90.3%, 91%, and 90.12% to 95.48%, 95.63%, and 95.48%, respectively.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lee, J.; Zhao, F. GWEC | Global Wind Report 2022. 2022. Available online: https://gwec.net/wp-content/uploads/2022/03/GWEC-GLOBAL-WIND-REPORT-2022.pdf/ (accessed on 4 September 2022).

- Wang, L.; Zhang, Z.; Long, H.; Xu, J.; Liu, R. Wind Turbine Gearbox Failure Identification With Deep Neural Networks. IEEE Trans. Ind. Inform. 2017, 13, 1360–1368. [Google Scholar] [CrossRef]

- Long, H.; Wang, L.; Zhang, Z.; Song, Z.; Xu, J. Data-Driven Wind Turbine Power Generation Performance Monitoring. IEEE Trans. Ind. Electron. 2015, 62, 6627–6635. [Google Scholar] [CrossRef]

- Ribrant, J.; Bertling, L.M. Survey of Failures in Wind Power Systems With Focus on Swedish Wind Power Plants During 1997–2005. IEEE Trans. Energy Convers. 2007, 22, 167–173. [Google Scholar] [CrossRef]

- Honjo, N. Detail survey of wind turbine generator and electric facility damages by winter lightning. In Proceedings of the 31st Wind Energy Utilization Symposium, 2013; Volume 35, pp. 296–299. Available online: https://www.jstage.jst.go.jp/article/jweasympo/35/0/35_296/_article/-char/en (accessed on 5 December 2023).

- Hahn, B.; Durstewitz, M.; Rohrig, K. Reliability of wind turbines. In Wind Energy; Springer: Berlin/Heidelberg, Germany, 2007; pp. 329–332. [Google Scholar]

- Qiao, W.; Lu, D. A Survey on Wind Turbine Condition Monitoring and Fault Diagnosis—Part I: Components and Subsystems. IEEE Trans. Ind. Electron. 2015, 62, 6536–6545. [Google Scholar] [CrossRef]

- GEV Wind Power. 2021 Wind Turbine Blade Inspection. Available online: https://www.gevwindpower.com/blade-inspection/ (accessed on 4 September 2022).

- Industrial Rope Access Trade Association. IRATA International. Available online: https://irata.org/ (accessed on 26 December 2023).

- Society of Professional Rope Access Technicians. SPRAT. Available online: https://sprat.org/ (accessed on 26 December 2023).

- Coffey, B. Taking Off: Nevada Drone Testing Brings Commercial UAVs Closer to Reality. 2019. Available online: https://www.ge.com/news/reports/taking-off-nevada-drone-testing-brings-commercial-uavs-closer-to-reality (accessed on 4 September 2022).

- Clobotics. Wind Services. Available online: https://clobotics.com/wind/ (accessed on 26 December 2023).

- Arthwind. Arthwind—Visibility and Prediction. Available online: https://arthwind.com.br/en/ (accessed on 26 December 2023).

- N’Diaye, L.M.; Phillips, A.; Masoum, M.A.S.; Shekaramiz, M. Residual and Wavelet based Neural Network for the Fault Detection of Wind Turbine Blades. In Proceedings of the 2022 Intermountain Engineering, Technology and Computing (IETC), Orem, UT, USA, 13–14 May 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Seibi, C.; Ward, Z.; Masoum, M.A.S.; Shekaramiz, M. Locating and Extracting Wind Turbine Blade Cracks Using Haar-like Features and Clustering. In Proceedings of the 2022 Intermountain Engineering, Technology and Computing (IETC), Orem, UT, USA, 13–14 May 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Seegmiller, C.; Chamberlain, B.; Miller, J.; Masoum, M.A.S.; Shekaramiz, M. Wind Turbine Fault Classification Using Support Vector Machines with Fuzzy Logic. In Proceedings of the 2022 Intermountain Engineering, Technology and Computing (IETC), Orem, UT, USA, 13–14 May 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Pinney, B.; Duncan, S.; Shekaramiz, M.; Masoum, M.A.S. Drone Path Planning and Object Detection via QR Codes; A Surrogate Case Study for Wind Turbine Inspection. In Proceedings of the 2022 Intermountain Engineering, Technology and Computing (IETC), Orem, UT, USA, 13–14 May 2022; pp. 1–6. [Google Scholar] [CrossRef]

- IBM. What Is Machine Learning? Available online: https://www.ibm.com/cloud/learn/machine-learning (accessed on 17 September 2022).

- IBM. What Are Convolutional Neural Networks. Available online: https://www.ibm.com/cloud/learn/convolutional-neural-networks (accessed on 8 September 2022).

- O’Shea, K.; Nash, R. An Introduction to Convolutional Neural Networks. arXiv 2015, arXiv:1511.08458. [Google Scholar] [CrossRef]

- Rahman, R.; Tanvir, S.; Anwar, M.T. Unique Approach to Detect Bowling Grips Using Fuzzy Logic Contrast Enhancement. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 13–15 September 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Chutani, G.; Bohra, H.; Diwan, D.; Garg, N. Improved Alzheimer Detection using Image Enhancement Techniques and Transfer Learning. In Proceedings of the 2022 3rd International Conference for Emerging Technology (INCET), Belgaum, India, 27–29 May 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Keras. 2015. Available online: https://keras.io (accessed on 24 June 2022).

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-scale Image Recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Sarkar, D. A comprehensive hands-on guide to transfer learning with real-world applications in deep learning. Towards Data Sci. 2018, 20, 4. [Google Scholar]

- Yalcin, O.G. 4 Pre-Trained CNN Models to Use for Computer Vision with Transfer Learning. Towards Data Sci. 2020. Available online: https://towardsdatascience.com/4-pre-trained-cnn-models-to-use-for-computer-vision-with-transfer-learning-885cb1b2dfc (accessed on 5 December 2023).

- Chollet, F. Deep Learning with Python; Simon and Schuster: New York, NY, USA, 2021. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar] [CrossRef]

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456. [Google Scholar]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826. [Google Scholar] [CrossRef]

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807. [Google Scholar] [CrossRef]

- Rahman, M.R.; Tabassum, S.; Haque, E.; Nishat, M.M.; Faisal, F.; Hossain, E. CNN-based Deep Learning Approach for Micro-crack Detection of Solar Panels. In Proceedings of the 2021 3rd International Conference on Sustainable Technologies for Industry 4.0 (STI), Dhaka, Bangladesh, 18–19 December 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Roopashree, S.; Anitha, J. DeepHerb: A Vision Based System for Medicinal Plants Using Xception Features. IEEE Access 2021, 9, 135927–135941. [Google Scholar] [CrossRef]

- Chen, B.; Liu, X.; Zheng, Y.; Zhao, G.; Shi, Y.Q. A Robust GAN-Generated Face Detection Method Based on Dual-Color Spaces and an Improved Xception. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 3527–3538. [Google Scholar] [CrossRef]

- Hui, J.; Du, M.; Ye, X.; Qin, Q.; Sui, J. Effective Building Extraction From High-Resolution Remote Sensing Images With Multitask Driven Deep Neural Network. IEEE Geosci. Remote Sens. Lett. 2019, 16, 786–790. [Google Scholar] [CrossRef]

- Pupale, R. Support Vector Machines (SVM)—An Overview. 2018. Available online: https://towardsdatascience.com/https-medium-com-pupalerushikesh-svm-f4b42800e989 (accessed on 24 June 2022).

- Kobaiz, A.R.; Ghrare, S.E. Behavior of LSSVM and SVM channel equalizers in non-Gaussian noise. In Proceedings of the 2014 International Conference on Computer, Communications, and Control Technology (I4CT), Langkawi, Malaysia, 2–4 September 2014; pp. 407–410. [Google Scholar] [CrossRef]

- Saha, N.; Show, A.K.; Das, P.; Nanda, S. Performance Comparison of Different Kernel Tricks Based on SVM Approach for Parkinson’s Disease Detection. In Proceedings of the 2021 2nd International Conference for Emerging Technology (INCET), Belagavi, India, 21–23 May 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Jiu, M.; Pustelnik, N.; Chebre, M.; Janaqv, S.; Ricoux, P. Multiclass SVM with graph path coding regularization for face classification. In Proceedings of the 2016 IEEE 26th International Workshop on Machine Learning for Signal Processing (MLSP), Vietri sul Mare, Italy, 13–16 September 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Murata, M.; Mitsumori, T.; Doi, K. Overfitting in protein name recognition on biomedical literature and method of preventing it through use of transductive SVM. In Proceedings of the Fourth International Conference on Information Technology (ITNG’07), Las Vegas, NV, USA, 2–4 April 2007; pp. 583–588. [Google Scholar] [CrossRef]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 25, 120–123. [Google Scholar]

- Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array programming with NumPy. Nature 2020, 585, 357–362. [Google Scholar] [CrossRef] [PubMed]

- Zeljkovic, V.; Druzgalski, C.; Mayorga, P. Quantification of acne status using CIELAB color space. In Proceedings of the 2023 Global Medical Engineering Physics Exchanges/Pacific Health Care Engineering (GMEPE/PAHCE), Songdo, Republic of Korea, 27–31 March 2023; pp. 1–6. [Google Scholar]

- Vuong-Le, M.N. Fuzzy Logic—Image Contrast Enhancement. 2020. Available online: https://www.kaggle.com/code/nguyenvlm/fuzzy-logic-image-contrast-enhancement/notebook (accessed on 24 June 2022).

- Talashilkar, R.; Tewari, K. Analyzing the Effects of Hyperparameters on Convolutional Neural Network & Finding the Optimal Solution with a Limited Dataset. In Proceedings of the 2021 International Conference on Advances in Computing, Communication, and Control (ICAC3), Mumbai, India, 3–4 December 2021; pp. 1–5. [Google Scholar] [CrossRef]

- O’Malley, T.; Bursztein, E.; Long, J.; Chollet, F.; Jin, H.; Invernizzi, L.; Bursztein, L. KerasTuner. 2019. Available online: https://github.com/keras-team/keras-tuner (accessed on 1 July 2022).

- Snoek, J.; Larochelle, H.; Adams, R.P. Practical Bayesian Optimization of Machine Learning Algorithms. Adv. Neural Inf. Process. Syst. 2012, 25. [Google Scholar] [CrossRef]

- Li, L.; Jamieson, K.; DeSalvo, G.; Rostamizadeh, A.; Talwalkar, A. Hyperband: A Novel Bandit-Based Approach to Hyperparameter Optimization. J. Mach. Learn. Res. 2016, 18, 1–52. [Google Scholar] [CrossRef]

- Lecun, Y.; Bottou, L.; Orr, G.; Müller, K.R. Efficient BackProp. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2000. [Google Scholar]

- Reddi, S.J.; Kale, S.; Kumar, S. On the Convergence of Adam and Beyond. arXiv 2019, arXiv:1904.09237. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar] [CrossRef]

- Kamalov, F.; Nazir, A.; Safaraliev, M.; Cherukuri, A.K.; Zgheib, R. Comparative analysis of activation functions in neural networks. In Proceedings of the 2021 28th IEEE International Conference on Electronics, Circuits, and Systems (ICECS), Dubai, United Arab Emirates, 28 November–1 December 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Fukushima, K. Visual Feature Extraction by a Multilayered Network of Analog Threshold Elements. IEEE Trans. Syst. Sci. Cybern. 1969, 5, 322–333. [Google Scholar] [CrossRef]

- Ramachandran, P.; Zoph, B.; Le, Q.V. Searching for Activation Functions. arXiv 2017, arXiv:1710.05941. [Google Scholar] [CrossRef]

- Clevert, D.A.; Unterthiner, T.; Hochreiter, S. Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs). arXiv 2015, arXiv:1511.07289. [Google Scholar] [CrossRef]

- Klambauer, G.; Unterthiner, T.; Mayr, A.; Hochreiter, S. Self-Normalizing Neural Networks. arXiv 2017, arXiv:1706.02515. [Google Scholar] [CrossRef]

- Jiang, T.; Cheng, J. Target Recognition Based on CNN with LeakyReLU and PReLU Activation Functions. In Proceedings of the 2019 International Conference on Sensing, Diagnostics, Prognostics, and Control (SDPC), Beijing, China, 15–17 August 2019; pp. 718–722. [Google Scholar] [CrossRef]

- Lin, R. Analysis on the Selection of the Appropriate Batch Size in CNN Neural Network. In Proceedings of the 2022 International Conference on Machine Learning and Knowledge Engineering (MLKE), Guilin, China, 25–27 February 2022; pp. 106–109. [Google Scholar] [CrossRef]

- Koech, K.E. Cross-Entropy Loss Function. 2020. Available online: https://towardsdatascience.com/cross-entropy-loss-function-f38c4ec8643e (accessed on 24 June 2022).

- MacKay, D.; Kay, D.; Press, C.U. Information Theory, Inference and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958. [Google Scholar]

- Şen, S.Y.; Özkurt, N. Convolutional Neural Network Hyperparameter Tuning with Adam Optimizer for ECG Classification. In Proceedings of the 2020 Innovations in Intelligent Systems and Applications Conference (ASYU), Istanbul, Turkey, 15–17 October 2020; pp. 1–6. [Google Scholar] [CrossRef]

| Layer # | Layer Details | Layer # | Layer Details |

|---|---|---|---|

| 1 | Conv (64) | 11 | Conv (512) |

| 2 | Conv (64) | 12 | Conv (512) |

| - | MaxPool | - | MaxPool |

| 3 | Conv (128) | 13 | Conv (512) |

| 4 | Conv (128) | 14 | Conv (512) |

| - | MaxPool | 15 | Conv (512) |

| 5 | Conv (256) | 16 | Conv (512) |

| 6 | Conv (256) | - | MaxPool |

| 7 | Conv (256) | 17 | Fully Connected (4096) |

| 8 | Conv (256) | 18 | Fully Connected (4096) |

| - | MaxPool | 19 | Fully Connected (1000) |

| 9 | Conv (512) | - | SoftMax |

| 10 | Conv (512) | - | - |

| Algorithm | Accuracy | Precision | Hit Rate | Miss Rate | Specificity | Fall-Out | F1 Score |

|---|---|---|---|---|---|---|---|

| Xception | 99.00% | 99.21% | 98.78% | 1.21% | 99.21% | 0.78% | 98.99% |

| VGG19 | 98.26% | 98.34% | 98.16% | 1.83% | 98.36% | 1.64% | 98.25% |

| SVM | 90.31% | 91.00% | 87.15% | 12.84% | 94.01% | 5.98% | 90.12% |

| Algorithm | Accuracy | Precision | Hit Rate | Miss Rate | Specificity | Fall-Out | F1 Score |

|---|---|---|---|---|---|---|---|

| Xception | 99.18% | 99.50% | 98.84% | 1.15% | 99.51% | 0.49% | 99.17% |

| VGG19 | 97.99% | 97.05% | 98.87% | 1.12% | 97.14% | 2.85% | 97.95% |

| SVM | 95.48% | 95.63% | 94.75% | 5.25% | 96.23% | 3.76% | 95.48% |

| Case Study | No. | BS | LR | DR | Validation Accuracy (VA) |

|---|---|---|---|---|---|

| Case Study A1 | 1 | 30 | 0.0005 | 30% | 98.36% |

| 2 | 60 | 0.0001 | 30% | 98.35% | |

| 3 | 30 | 0.001 | 20% | 98.32% | |

| Case Study A2 | 1 | 20 | 0.0005 | 20% | 98.35% |

| 2 | 60 | 0.0005 | 30% | 98.30% | |

| 3 | 60 | 0.0001 | 20% | 98.29% | |

| Case Study A3 | 1 | 300 | 0.0001 | 30% | 98.41% |

| 2 | 60 | 0.0001 | 20% | 98.39% | |

| 3 | 150 | 0.0001 | 20% | 98.25% |

| Case Study | No. | BS | L | O | DL | DR | LR | B1 | B2 | E | AMSG | Accuracy |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Case B1 | 1 | 20 | BC | Adam | True | 60% | 0.9 | 0.999 | False | 95.321% | ||

| 2 | 5 | BC | Adam | True | 10% | 0.9 | 0.999 | False | 94.509% | |||

| 3 | 5 | BC | Adam | True | 80% | 0.9 | 0.999 | False | 94.209% | |||

| Case B2 | 1 | 32 | BC | Adam | False | - | 0.9 | 0.999 | False | 88.953% | ||

| 2 | 32 | BC | Adam | False | - | 0.9 | 0.999 | False | 88.590% | |||

| 3 | 32 | BC | Adam | False | - | 0.9 | 0.999 | False | 88.462% | |||

| Case B3 | 1 | 10 | BC | Adam | True | 45% | 0.9 | 0.99 | True | 93.686% | ||

| 2 | 20 | BC | Adam | True | 35% | 0.0 | 0.99 | True | 93.103% | |||

| 3 | 5 | BC | Adam | True | 25% | 0.0 | 0.0 | True | 89.103% | |||

| Case B4 | 1 | 5 | BC | Adam | True | 45% | 0.0 | 0.0 | True | 96.058% | ||

| 2 | 10 | BC | Adam | True | 20% | 0.0 | 0.999 | False | 94.872% | |||

| 3 | 5 | BC | Adam | True | 20% | 0.9 | 0.9999 | True | 94.359% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ward, Z.; Miller, J.; Engel, J.; Masoum, M.A.S.; Shekaramiz, M.; Seibi, A. Fuzzy-Based Image Contrast Enhancement for Wind Turbine Detection: A Case Study Using Visual Geometry Group Model 19, Xception, and Support Vector Machines. Machines 2024, 12, 55. https://doi.org/10.3390/machines12010055

Ward Z, Miller J, Engel J, Masoum MAS, Shekaramiz M, Seibi A. Fuzzy-Based Image Contrast Enhancement for Wind Turbine Detection: A Case Study Using Visual Geometry Group Model 19, Xception, and Support Vector Machines. Machines. 2024; 12(1):55. https://doi.org/10.3390/machines12010055

Chicago/Turabian StyleWard, Zachary, Jordan Miller, Jeremiah Engel, Mohammad A. S. Masoum, Mohammad Shekaramiz, and Abdennour Seibi. 2024. "Fuzzy-Based Image Contrast Enhancement for Wind Turbine Detection: A Case Study Using Visual Geometry Group Model 19, Xception, and Support Vector Machines" Machines 12, no. 1: 55. https://doi.org/10.3390/machines12010055

APA StyleWard, Z., Miller, J., Engel, J., Masoum, M. A. S., Shekaramiz, M., & Seibi, A. (2024). Fuzzy-Based Image Contrast Enhancement for Wind Turbine Detection: A Case Study Using Visual Geometry Group Model 19, Xception, and Support Vector Machines. Machines, 12(1), 55. https://doi.org/10.3390/machines12010055