Abstract

The growing technological complexity of modern engineering systems requires compound uncertainty quantification from both a quantitative and a qualitative perspective. This paper presents a Compound Uncertainty Quantification and Aggregation (CUQA) framework that determines compound outputs and identifies the greatest uncertainty contribution via global sensitivity analysis. The framework was validated in two case studies: a bespoke heat exchanger test rig and a simulated turbofan engine. The results demonstrated effective measurement of compound uncertainty and of the impact of individual uncertainties on system reliability. Further work will derive methods to predict uncertainty in-service and incorporate the framework into more complex case studies.

1. Introduction

Uncertainty Quantification (UQ) concerning the maintenance of engineering systems is growing in recognition and rigour as the complexity of such systems surges in the modern world. Complex Engineering Systems (CESs) are comprised of multiple sub-elements including equipment and operators that interact simultaneously and nonlinearly with each other and the environment on multiple levels [1,2]. The consideration of the relationships between elements is vital to understand emergent behaviour to aid decision-making [3]. Complex systems science is a field in itself, the theory of which is widely discussed in the literature [3,4,5,6], but is outside the scope of this paper.

The maintenance of complex and non-complex engineering systems exhibits a range of uncertainties from interconnected factors such as quality and the availability of quantitative equipment data, as well as the qualitative influence of operators, expert opinion, experience, and environmental conditions [7]. These uncertainties are represented by varying Probability Distribution Functions (PDFs) and can lead to underestimation or overestimation of maintenance costs, reliability measurement, equipment availability, and delays in maintenance scheduling. Recent research in CESs has explored UQ in micro gear measurements [2], structured surfaces using metrological characteristics [8], correlation uncertainty in gear conformity [9], grey-box energy models for office buildings [10], uncertainty in disassembly line design [11], and others reviewed in various related studies. Many of these approaches only consider quantitative uncertainty given by variability in measured data, rather than the compound aggregation of quantitative and qualitative uncertainties [2,8,10,11]. Methodologies to do this are growing in many areas, but are limited from an industrial maintenance perspective. This is necessary to obtain a comprehensive understanding of system reliability, as well as the inherent risks and knock-on effects imposed by altering elements within the system. Limited research guiding the aggregation of compound uncertainty sets the focus for this paper.

A six-step framework is presented to quantify and aggregate compound uncertainties to enhance system performance assessment. This will provide maintenance planners with a comprehensive view of the parameters surrounding the above factors to improve decision-making capabilities.

A literature review into uncertainty classification in the context of this paper and techniques to combine quantitative and qualitative uncertainties is given in Section 2. The proposed framework is detailed in Section 3 along with key mathematical formulae, functions, and the assumptions made. Section 4 applies the framework to two case studies: a bespoke heat exchanger test rig comprised of multiple sub-systems, developed at Cranfield University [12], and a simulated dataset for turbofan engine degradation. Individual uncertainties from quantitative and qualitative sources and correlations between them are assessed and aggregated to give a confident indication of system performance. Section 5 discusses the results, strengths, and limitations of the framework along with conclusions and future work in this area.

2. Literature Review

2.1. Deriving Uncertainty and Risk

The distinction between uncertainty and risk is well documented in the literature, though often blurred in practice. Uncertainty is the degree of—or lack of—knowledge held concerning a given entity, be it measured data, equipment state, environmental conditions, or the accuracy of expert opinion. The resulting risk is the negative impact of uncertainty [13,14,15,16,17,18,19].

A confident uncertainty estimate can be positively utilised to aid decision-making. Two key types of uncertainty are described in the Guide to the Expression of Uncertainty in Measurement (GUM): Type A, sourced from quantitative measured data; and Type B, which considers qualitative technical and expert knowledge or experience, as well as environmental conditions [1,20,21,22,23,24,25]. The uncertainty of a measured input is given by its standard deviation around the mean value, termed standard uncertainty, set within distribution parameters [1,26]. The distinction between types of uncertainty helps to reduce risk and avoid underestimation or overestimation of the probability of failure in a system [13,27,28].

Uncertainties can be further derived as epistemic and aleatory. The former emanates from knowledge about the measured entity and can be reduced by obtaining additional data or by refining measurement models. The latter represent variables that can differ each time they are recorded and, therefore, cannot be reduced [10,27,28,29,30,31,32,33].

Risk can be defined from numerous perspectives [13,14,34,35]. In a broad sense, it is the probability of the loss or gain of a quantity that holds value. In this context, uncertainty is the lack of knowledge about the degree of risk that exists. It is necessary to distinguish types of uncertainty to reduce risk and avoid underestimation or overestimation of the probability of failure in a system, which could have significant negative knock-on effects [13,27,28]. Risk assessments in conjunction with uncertainty analysis are highly beneficial in decision-making, but it is necessary to consider other principles, methods, and instruments involved [14]. There is a requirement to look beyond the probabilistic world and embrace subjective and expert opinions.

2.2. Combining Quantitative and Qualitative Uncertainty

The GUM has been implemented in numerous applications utilising UQ [1,2,9,20,21,23,25,36,37,38,39]. The main process defined involves five core stages: (1) identify the measurand; (2) identify uncertainty sources and associated probability distributions; (3) quantify uncertainties (simulation); (4) aggregate uncertainties; (5) report analysis results. UQ is not always considered a core task, especially considering qualitative factors [40]. Coverage factors are applied to account for purely qualitative estimates or a combination of the two types. While proficient for purely quantitative analysis, the coverage factors have been found to lead to underestimation and cannot be realistically applied in dynamic complex systems [41,42].

UQ in CESs involves the propagation of errors around the sample mean of each parameter via simulation [39]. The three most common and best-validated propagation techniques are Taylor series expansion, Monte Carlo simulation, and Latin Hypercube Sampling (LHS). Taylor series expansion is not suitable for complex nonlinear models, but its normalised sensitivity coefficients can help to identify the most significant parameters [43]. Monte Carlo simulation is widely used, relatively simple, adaptable, and applicable to more complex applications [9,32,44,45,46]. LHS refines simple Monte Carlo sampling by stratifying the input space, which helps to assess the convergence of cumulative probability distributions for output variables [6,10,25].
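The difference between simple Monte Carlo sampling and LHS can be illustrated with a minimal sketch. It assumes inputs scaled to the unit interval, and the function name `latin_hypercube` is illustrative rather than taken from any of the cited works. Each dimension is split into equally probable strata and exactly one point is drawn per stratum, which gives more even coverage than independent random draws.

```python
import random


def latin_hypercube(n_samples, n_dims, rng):
    """Draw n_samples points in [0, 1)^n_dims with one point per stratum."""
    columns = []
    for _ in range(n_dims):
        # One uniform draw inside each of the n_samples equal-width strata.
        points = [(k + rng.random()) / n_samples for k in range(n_samples)]
        # Shuffle so that strata are paired at random across dimensions.
        rng.shuffle(points)
        columns.append(points)
    return [tuple(col[i] for col in columns) for i in range(n_samples)]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
samples = latin_hypercube(10, 2, rng)
```

Mapping each unit-interval coordinate through the inverse cumulative distribution of the chosen PDF would then give samples of the physical parameter.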

Clarke et al. [25] reviewed the application of these techniques and applied them in a thermodynamic analysis of heat exchanger designs, which highlighted the need to consider both quantitative and qualitative uncertainty and the identification of parameters that pose the greatest influence on uncertainty through Sensitivity Analysis (SA). The approaches used were influenced by Vasquez and Whiting [47]. Similarly, Tatara and Lupia [48] examined heat exchanger performance through temperature measurement uncertainty, with a spotlight on the effect data acquisition methods and measurement devices have on the resulting uncertainty. The heat transfer coefficient was calculated considering the quantified uncertainties.

2.2.1. Qualitative Contributions

The consideration of qualitative uncertainty factors can have significant effects on the overall estimate. This is often overlooked in practice or assigned a general bias element considering data acquisition and aleatory factors. The pedigree approach is a widely renowned and verified approach to equate qualitative estimates in line with quantitative data. First proposed by Funtowicz and Ravetz [49], the approach comprises a matrix to score expert knowledge and opinion according to predefined criteria to permit quantitative reliability assessment. This has been applied in a range of fields including oil and gas, meteorology, and genealogy [7,24,38,40,50,51]. It can be applied on its own or through an encompassing approach to standardise combined uncertainty dimensions via five qualifiers: Numeral, Unit, Spread, Assessment, and Pedigree (NUSAP) [24,38,50,52]. The first three terms consider quantitative factors: quantity value, acquisition date, and the random error of the variance of the dataset (addressed by SA and Monte Carlo simulation), respectively.

Ciroth et al. [38] presented a process to improve uncertainty estimation by gauging qualitative uncertainty factors through the pedigree approach for flow data in a multidimensional database. Estimates are attributed by their Geometric Standard Deviation (GSD), where inputs fit the multiplicative lognormal distribution (Equation (1)) [38,53,54]. It is stated that the arithmetic standard deviation used to attribute uncertainty in quantitative data has the disadvantage of relying on the scale (unit) of data in a linear manner [38,53]. Therefore, for the analysis of data from varying sources and measured in different units, uncertainty factors need to be independent of scaling effects. Using the GSD as the uncertainty measure overcomes scale dependency.

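$$\sigma_g = \exp\left(\sqrt{\frac{\sum_{i=1}^{n}\left(\ln\frac{x_i}{\mu_g}\right)^{2}}{n}}\right) \qquad (1)$$

where: $\sigma_g$ = GSD; $n$ = number of inputs; $x_i$ = dataset; $\mu_g$ = geometric mean of dataset.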
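As a minimal sketch of the GSD calculation, the snippet below uses the standard definition (the exponential of the root-mean-square log deviation from the geometric mean, with divisor $n$). The input values are hypothetical, and the choice of divisor is an assumption that should be checked against [38]. The second call illustrates the scale independence discussed above.

```python
import math


def geometric_mean(values):
    """Geometric mean of strictly positive values."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def geometric_std(values):
    """GSD: exp of the root-mean-square log deviation from the geometric mean."""
    mu_g = geometric_mean(values)
    mean_sq = sum(math.log(v / mu_g) ** 2 for v in values) / len(values)
    return math.exp(math.sqrt(mean_sq))


# Hypothetical uncertainty indicators gathered from several sources.
indicators = [1.0, 2.0, 4.0]
gsd = geometric_std(indicators)

# Rescaling the data (a change of unit) leaves the GSD unchanged.
gsd_rescaled = geometric_std([1000.0 * v for v in indicators])
```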

To enable aggregation where data sources do not follow a lognormal distribution, GSD ratios are obtained via the Coefficient of Variation (CV) [53,55]. This is a dimensionless measure of variability defined as the ratio between the standard deviation and the mean [55,56]. Muller et al. [53] provided formulas to apply the CV to various distributions to allow the user to select the most-appropriate types for analysis. This is a key method to aggregate compound uncertainties through different PDFs, given in Table 1, the robustness of which was tested for each parameter PDF using Monte Carlo simulation.

Table 1.

Probability Distribution Function (PDF) and relative Coefficient of Variation (CV) calculations [38,53].

Given as a dimensionless measure of variability, the CV can be used as a measure of uncertainty for each input and aggregated to give a representative total. The application of the CV and pedigree aims to convert quality and lack of knowledge into uncertainty figures [53].
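The conversion to a CV can be sketched for the three distribution types used later in this paper. The formulas below are standard identities for the normal, uniform, and lognormal distributions. They are assumed, not confirmed, to match the corresponding entries of Table 1, and the function names are illustrative.

```python
import math


def cv_normal(mean, std):
    """CV of a normal distribution: standard deviation over mean."""
    return std / mean


def cv_uniform(low, high):
    """CV of a uniform distribution on [low, high].

    std = (high - low) / sqrt(12) and mean = (low + high) / 2.
    """
    return (high - low) / (math.sqrt(3.0) * (low + high))


def cv_lognormal(gsd):
    """CV of a lognormal distribution expressed through its GSD."""
    return math.sqrt(math.exp(math.log(gsd) ** 2) - 1.0)


# Hypothetical inputs: a uniform reading of 5 +/- 2 and a pedigree GSD of 1.2.
cv_flow = cv_uniform(3.0, 7.0)
cv_pedigree = cv_lognormal(1.2)
```

Because every result is dimensionless, inputs recorded in different units can be compared and aggregated on the same scale.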

2.2.2. Correlation and Sensitivity Analysis

Dependencies between input parameters should be accounted for through correlation [1,8,26,39,48,57]. Qualitative uncertainties given by subjective opinion are intuitively correlated in terms of rank rather than linear relationships [6]. Spearman’s rank correlation ($\rho$) is, therefore, best suited to consider the correlation between compound uncertainties, given by Equation (2).

The significance of positive and negative correlations on the aggregated uncertainty estimate will vary with system complexity, as well as the coefficient value. It is important to remember that correlation is not causation and while two parameters can show a significant correlation, they may not be impacted by one another in practice.
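A minimal sketch of Spearman’s rank correlation is given below, computed as the Pearson correlation of the ranked data with tied values sharing their average rank. The data are hypothetical, the function names are illustrative, and the p-value calculation is omitted.

```python
import math


def average_ranks(values):
    """Ranks starting at 1; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value is tied with this one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2.0 + 1.0
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical measured temperatures and pedigree scores for the same periods.
temperature = [60.1, 62.4, 65.0, 70.2, 79.8]
reading_score = [1, 1, 2, 3, 5]
rho = spearman(temperature, reading_score)
```

Because only ranks enter the calculation, any monotonic but nonlinear relationship still yields a coefficient of magnitude 1.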

Sensitivity Analysis (SA) identifies parameters whose uncertainty has the greatest relative impact on the system [1,39,58,59,60,61,62]. It gives an illustration of the relationships between different inputs of various PDFs and parameters, as well as those with negligible effects that can be removed. An important tool in uncertainty assessment, design optimisation, and reliability measurement, SA is performed in two ways—local and global. Local Sensitivity Analysis (LSA) explores the change of the quantity of interest around a certain reference point, such as nominal values via partial derivatives. This is the simplest approach, but can prove arduous when applied for a large number of parameters. Global Sensitivity Analysis (GSA) studies the effect over the full range of the input space, typically adopting Monte Carlo techniques.

Groen [63] compared five GSA methods in environmental life cycle assessment: squared standardized regression coefficient, squared Spearman correlation coefficient, key issue analysis, Sobol’ indices and random balance design. Spearman correlation coefficients and Sobol’ indices were found to give the best overall performance. Generally, the best method depends on the available data, the uncertainty magnitude, and the goal of the study. Spearman correlation coefficients assume a monotonic relationship between input and output, which is not always the case in practice. Sobol’ indices allow for nonlinearity, but assume all parameters to be independent to identify the influence of each input parameter on the output [6,10,58,59,60,61,62,63,64,65,66]. Correlation coefficients should ideally be established between input parameters [63,67]. Discounting correlation is acceptable when the sensitivity of one parameter is significantly greater than that of another, rendering the effect of their correlation negligible [68]. Where it is not, discounting correlation can lead to underestimation or overestimation of the resulting uncertainty estimate.

Beyond the selection of the best sensitivity approach, Groen [68] compared an analytical and a sampling approach to consider dependent variables in GSA, achievable with small datasets through adjusted regression models devised by Xu and Gertner [67]. Both approaches resulted in relatively equal output variance and sensitivity indices for the applied case study. The sampling approach assumed all inputs to be normally distributed. Knowledge of parameter PDFs, means, standard deviations, and correlations is required prior to sampling, which is a prerequisite of the model proposed in this paper.

2.2.3. Compound Aggregation

The aggregated uncertainty ($u_c$) due to the uncertainty in the quantitative parameters is equal to the Root-Sum-Square (RSS) of those uncertainties ($u_i$) added to significant correlation coefficients (Equation (3)) [69] ($x$ and $y$ are parameter x and parameter y, where $i = 1$ is the starting index for the sum of those parameters; $u_i$ is the uncertainty for parameter $i$). If parameters are independent ($\rho_{xy} = 0$), the second half of the equation is zero and vanishes. The widely used propagation of error model uses Taylor series expansion to consider local sensitivity coefficients within the aggregation, given by partial derivatives [1,25,39,69]. While suitable for non-complex models, the use of partial derivatives in complex nonlinear models can give a large degree of error and lead to underestimation or overestimation of the uncertainty propagation [25]. This paper, therefore, propagates uncertainties via Monte Carlo simulation and assesses their effect on the output response through GSA, as discussed previously.
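The RSS aggregation with a correlation term can be sketched as follows. The sketch assumes the common GUM-style form with unit sensitivity coefficients, $u_c = \sqrt{\sum_i u_i^2 + 2\sum_{x<y}\rho_{xy}u_xu_y}$, which may differ in detail from Equation (3). The function name and the dictionary layout for the correlations are illustrative.

```python
import math


def aggregate_rss(uncertainties, correlations=None):
    """Root-sum-square aggregation with optional pairwise correlation terms.

    uncertainties: list of standard uncertainties u_i.
    correlations: dict mapping index pairs (x, y) with x < y to rho_xy.
    Pairs that are absent are treated as independent (rho = 0).
    """
    total = sum(u * u for u in uncertainties)
    for (x, y), rho in (correlations or {}).items():
        total += 2.0 * rho * uncertainties[x] * uncertainties[y]
    return math.sqrt(total)


# Two hypothetical uncertainties under three correlation assumptions.
independent = aggregate_rss([3.0, 4.0])
fully_positive = aggregate_rss([3.0, 4.0], {(0, 1): 1.0})
fully_negative = aggregate_rss([3.0, 4.0], {(0, 1): -1.0})
```

The three cases show why ignoring correlation can bias the estimate in either direction: a positive coefficient raises the aggregate above the independent value and a negative one lowers it.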

To combine quantitative, recorded parameters with qualitative factors, recorded standard deviations are converted to their respective CVs according to their PDF type. The arithmetic mean of symmetric PDFs such as normal and uniform is equal to the mode and, as such, does not change when uncertainty increases [53]. They can, therefore, be aggregated additively by the RSS (Equation (3)). Lognormal distributions are asymmetric; the arithmetic mean will change with increasing or decreasing uncertainty. CVs represented by the lognormal distribution, CVLn, are aggregated multiplicatively by Equation (4) [53]. To combine these with symmetric distributions, a new arithmetic mean needs to be calculated to account for the shifting uncertainty, given by Equation (5) [53]. The proposed approach to aggregate compound uncertainty is discussed in Section 3.
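The multiplicative aggregation of lognormal CVs can be sketched with the identity $CV_{total} = \sqrt{\prod_i (CV_i^2 + 1) - 1}$, which holds exactly for a product of independent lognormal variables. Equation (4) is assumed, not confirmed, to take this form, and the function names are illustrative.

```python
import math


def cv_from_gsd(gsd):
    """CV of a lognormal distribution with the given GSD."""
    return math.sqrt(math.exp(math.log(gsd) ** 2) - 1.0)


def aggregate_lognormal_cv(cvs):
    """Multiplicative aggregation of independent lognormal CVs."""
    product = 1.0
    for cv in cvs:
        product *= cv * cv + 1.0
    return math.sqrt(product - 1.0)


# Hypothetical GSDs for two qualitative factors.
gsds = [1.2, 1.5]
cv_total = aggregate_lognormal_cv([cv_from_gsd(g) for g in gsds])

# Cross-check: log-variances of independent lognormal factors add.
log_variance = sum(math.log(g) ** 2 for g in gsds)
cv_check = math.sqrt(math.exp(log_variance) - 1.0)
```

The cross-check reflects the underlying reason for the multiplicative form: each factor contributes its log-variance additively, so the product of the $(CV_i^2 + 1)$ terms equals the exponential of the summed log-variances.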

2.3. Research Gaps

The GUM method is widely adopted for UQ. Along with the propagation of error method, this provides highly confident depictions of purely quantitative uncertainty. However, methods of deriving qualitative uncertainty using the GUM have been found to lead to inaccurate depictions [37,70]. Qualitative uncertainties are best accounted for through the pedigree approach, the criteria for which should be well established when designing the analysis architecture [24,50,51]. The identification of the most-appropriate PDF to represent each input is key to assess its uncertainty [51,71]. This can be achieved visually by comparing fits against a plotted histogram of the data. The representation of uncertainty through the respective CV, as described by Ciroth et al. [38] and Muller et al. [53], enables the quantification and aggregation of compound uncertainties and can be applied to a range of symmetric and asymmetric PDFs. While formulae to denote the inputs of varying PDFs by their respective CVs are defined, a method to aggregate CVs from a mix of symmetric and asymmetric PDFs in a compound manner is unclear. This is necessary to establish compound uncertainty estimates represented by different PDFs with a high degree of confidence.

This compound aggregation can then be used in GSA to calculate sensitivity indices. Correlations should be considered where suitable to avoid underestimation or overestimation in the estimate; however, the majority of applied studies assume input variables to be independent. It is logical to assume there will be significant correlations between quantitative, measured variables and the qualitative influence on how those variables are recorded. Emerging techniques have been proposed to account for dependent variables in SA, with varying success [66,67,68]. Incorporation with qualitative uncertainties also requires further research at this stage [6,58,61,66]. The risks in ignoring correlation in uncertainty propagation and SA were explored extensively by Groen [63,68]. The role of GSA is to identify variables that have a significant impact on the system, which ties in closely with correlation, though at different stages in the analysis. The consideration of correlation through the sampling GSA approach allows for increased accuracy in the determination of which variables have the most significant impact on the overall uncertainty and is therefore incorporated in this study [67,68]. The ability to consider PDFs other than normal will further enhance this capability in the aggregation framework.

The research gaps are therefore summarised as:

- Approaches to quantify and aggregate compound uncertainties represented by different distributions, considering dependencies between them, applicable to increasingly complex engineering systems.

- Application of GSA to determine the impact of individual uncertainties on the aggregated total, accounting for compound parameters and significant correlation.

3. Compound Uncertainty Quantification and Aggregation Framework

Every measurement or estimate is subject to a degree of error, which in turn contributes a level of uncertainty. Quantifying this uncertainty enables a thorough assessment of the scale of risk each component might inflict on the system [1,20]. The level of uncertainty and associated risk can directly or indirectly influence system reliability for maintenance planning, corresponding turnaround times, and system performance.

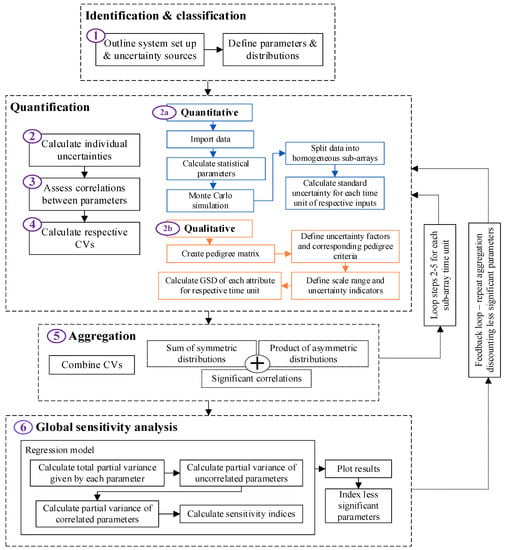

This paper contributes a holistic assessment of compound uncertainties in dynamic data represented by different distributions with an integrated assessment of correlations and sensitivity. This addresses the research gaps above and was achieved through a six-step modelling approach developed in MATLAB (version 2022b), described below and illustrated in Figure 1.

Figure 1.

CUQA framework overview.

The framework was designed as an extension and amalgamation of existing methodologies from the literature [1,20,33,70,72,73,74]. An initial version was presented in Grenyer et al. [75]. Here, it is further developed and validated in two distinct case studies to illustrate the framework’s flexibility in contextual application, considering key parameter variables identified within a system. While maintenance practices are not discussed directly in the case studies, the compound uncertainty consideration enables greater coherence in system behaviour, reliability, and maintenance requirements. The framework steps were developed from the traditional approach in the GUM, extended to consider compound uncertainty and GSA—detailed as follows:

Step 1: Outline system setup and uncertainty sources. The inputs were grouped according to their uncertainty type—quantitative or qualitative. This includes all measured data, assumptions made, and environmental predictions. Distribution types were established by “goodness-of-fit” tests. Selected types were indexed for later calculation.

Step 2: Calculate individual uncertainties. Statistical parameters were calculated for each input according to their relative distribution via Monte Carlo simulation and the pedigree matrix. These were grouped for each subsystem, for which the standard uncertainties and correlations were determined separately before combining with the whole system, elaborated as follows:

Step 2a: Quantitative, recorded data were concatenated in a cell array to allow inputs with a varying number of data points to be considered. Any non-numeric values (including gaps and non-formatted values) were removed. Monte Carlo simulations were run for the relative indexed PDF over a user-defined number of points (default 10,000) or to the size of the largest input parameter. This propagates the input data to a homogeneous array size. It was assumed that individual non-numeric values will not have a significant impact on the statistical parameters of the sub-arrays (such as outliers). In order to consider the uncertainty in the measured values, each dataset was split into sub-arrays over the recorded time period. The number of rows for each sub-array can be selected by the user or defined automatically. Possible values for the number of rows are the factors of the dataset length. The automatic selection is given by Equation (6). This aimed to select the middle factor, providing enough values to determine the uncertainty at each point while allocating enough sub-arrays to determine the change in uncertainty for the recorded period. Each dataset was then reshaped into the resulting sub-array dimension according to Equation (7).
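The sub-array split in Step 2a can be sketched as follows. The framework itself was developed in MATLAB; Python is used here for illustration only. Equations (6) and (7) are not reproduced, so the tie-breaking rule (taking the upper of the two central factors when the number of factors is even) and all function names are assumptions.

```python
def factors(n):
    """All positive integer factors of n, in ascending order."""
    return [d for d in range(1, n + 1) if n % d == 0]


def middle_factor(n):
    """Central factor of n (upper-middle when the factor count is even)."""
    candidates = factors(n)
    return candidates[len(candidates) // 2]


def split_into_subarrays(data, rows=None):
    """Split data into consecutive sub-arrays of equal length.

    rows defaults to the middle factor of the dataset length, so the
    split never leaves a partial sub-array.
    """
    rows = rows or middle_factor(len(data))
    if len(data) % rows != 0:
        raise ValueError("rows must be a factor of the dataset length")
    return [data[i:i + rows] for i in range(0, len(data), rows)]


# Hypothetical dataset of 5590 readings (placeholder values).
readings = [float(i) for i in range(5590)]
subarrays = split_into_subarrays(readings)
```

For 5590 points this rule selects 86 rows per sub-array, giving 65 sub-arrays, which balances the number of values per sub-array against the number of time units.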

The arithmetic and geometric mean and deviation were calculated for each sub-array and the full dataset, along with the maximum and minimum values of each input variable. The standard deviation of each time unit was then calculated using the simulated data for each distribution type. For lognormal variables, the mean and standard deviation are given as geometric. Normal and uniform distribution variables are arithmetic [38]. To visualise the data, boxplots for each sub-array were overlaid on the initial dataset. These plots give more detailed information than standard error bars on the change in uncertainty over time with dynamic datasets.

Step 2b: Qualitative factors are defined through pedigree criteria. Based on the example implemented by Ciroth et al. [38], the matrix defines uncertainty indicators based on expert judgement. Criteria are defined for each score for each factor, which relates to predefined case-dependent uncertainty measures. The ideal case has a pedigree score of 1, corresponding to minimal uncertainty. Scores of 2 to n have progressively higher uncertainties owing to their representative criteria. While there is no limit to the number of scores, typically a maximum of 5–7 was used. The scores for each factor correspond to an uncertainty indicator, the GSD of which was obtained from one or multiple sources (interviews, surveys etc.). These scores will not be fixed over time, and so were pseudo-randomly applied ±1 of the defined score for each sub-array. If the uncertainty indicators were obtained from a single source, the GSD is given as its square root. If they were obtained from multiple sources, the GSD is given by Equation (1), modelled by the lognormal distribution [38,53,54]. The GSD of less ideal indicators is given as the ratio of the calculated GSD and that of the ideal score for each input, meaning that it is always equal to or greater than 1 [38].

Step 3: Determine significant correlations between input parameters. To best determine correlation, the input parameters must be of equal length. For quantitative data, initial recordings prior to Monte Carlo were sampled to the size of the largest parameter length around their respective PDF type. Qualitative parameters were sampled using their uncertainty score as the respective mean and GSD as the standard deviation under a lognormal distribution to achieve a homogeneous sample size. Spearman’s correlation coefficient (Equation (2)) was calculated between each pairwise input parameter, along with their corresponding p-values. These were the result of the null hypothesis significance test that determines whether what is observed in the data sample is likely to be true for a wider population. A default significance level ($\alpha$) of 0.05 determines that, for p-values below $\alpha$, there is a 5% chance that a significant correlation does not exist between those parameters [6,39]. In addition, the user defines an ideal limit and a cut-off limit for the magnitude of a significant coefficient. If there was not at least one pairwise coefficient with an absolute value at or above the ideal limit, the ideal limit was reduced in increments of 0.01 via a “while” loop until the condition was true or the defined cut-off was reached. This enables the user to define the degree of correlation to be included in the aggregation with the assurance that the resulting coefficients are statistically significant. The corresponding input parameters for which the final condition is true were plotted in a correlation matrix and stored for use in Step 5. This matrix provides a visualisation of the correlation magnitude for each parameter with a significantly correlated pair [76].
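The threshold search in Step 3 can be sketched as below. The default ideal limit (0.7) and cut-off (0.3), the function name, and the example coefficients are hypothetical; only the significance level of 0.05 and the 0.01 decrement come from the description above.

```python
def select_significant(pairs, ideal=0.7, cutoff=0.3, alpha=0.05, step=0.01):
    """Relax the ideal limit until a significant pair qualifies.

    pairs: dict mapping a parameter pair to (rho, p_value).
    Returns the final limit and the pairs retained for aggregation.
    """
    tol = 1e-12  # guards against floating-point drift in the decrements
    # Keep only coefficients that pass the significance test.
    significant = {pair: rho for pair, (rho, p) in pairs.items() if p < alpha}
    limit = ideal
    while limit > cutoff + tol and not any(
        abs(rho) >= limit - tol for rho in significant.values()
    ):
        limit = round(limit - step, 10)
    limit = max(limit, cutoff)
    selected = {
        pair: rho for pair, rho in significant.items() if abs(rho) >= limit - tol
    }
    return limit, selected


# Hypothetical pairwise results: (Spearman coefficient, p-value).
pairs = {
    ("T1", "T2"): (0.62, 0.001),
    ("T1", "P1"): (0.91, 0.20),   # strong but not statistically significant
    ("P1", "flow"): (-0.35, 0.03),
}
limit, selected = select_significant(pairs)
weak_limit, weak_selected = select_significant({("a", "b"): (0.1, 0.01)})
```

In this example the limit relaxes from 0.70 to 0.62, at which point only the T1 and T2 pair is retained. When no coefficient reaches the cut-off, the search stops there and nothing is carried forward.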

Step 4: Calculate the CV for each input. Uncertainties from different data types represented by different PDFs must be considered on an equal scale in order to be aggregated. This was achieved through the CV, explained in Section 2.2, the formulae for which are given in Table 1 [53]. These were calculated within the framework by a sequential algorithm according to the specified input and distribution type. Summary tables were then generated for the compound inputs and correlation, as calculated in Steps 2–3.

Step 5: Aggregate respective CVs and correlated parameters. As discussed in Section 2.2.3, symmetric distributions were aggregated additively by the RSS (Equation (3)). Asymmetric distributions, given by lognormal distributions, CVLn, were aggregated multiplicatively by Equation (4) [53]. The framework splits the calculated CVs of quantitative inputs according to the distribution type. The sum of symmetric attributes was added to the product of the lognormal attributes. Comparing this with Equation (3), the aggregated uncertainty is given by Equation (8):

where $\rho_{xy}$ is the Spearman correlation coefficient of two parameters $x$ and $y$, multiplied by their respective CVs.

Individual CVs were plotted as bars against the aggregated total, along with a colour bar to visualise the acceptability of relative factors according to predefined scales. The correlation coefficient standardizes the variables and is, therefore, unaffected by changes in scale or units. The formulae allow the aggregated CV of quantitative and qualitative data to be determined as a measure of total uncertainty. Given that CV is the ratio between the standard deviation and the mean, the output follows a normal distribution. The uncertainty can, therefore, be expressed back as the standard deviation via Equation (9).

Steps 2–5 were repeated for each sub-array unit. Summary variables including the individual and aggregated CV were stored and used to calculate the sensitivity indices in Step 6.

Step 6: Conduct GSA and visualise results. The relative influence of individual uncertainties on the aggregated total was calculated as the response vector over each sub-array time unit. The sampling approach proposed by Groen [68], influenced by Xu and Gertner [67], was applied to consider the effect of correlated parameters using an adjusted regression model. Results were visualised by a 3D bar plot to show dependent and independent effects against the total, with the same colour scale applied as for Step 5 to illustrate the severity. A feedback loop was then taken back to Step 2 where parameters with total effects below a defined threshold (default 5%) were discounted. The aggregated uncertainty and sensitivity indices were updated to determine the parameters contributing the greatest impact to the aggregated uncertainty, visualised in the same manner.
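The screening feedback loop in Step 6 can be sketched as follows. This is not the adjusted regression model of Xu and Gertner [67]: as a deliberately simplified stand-in, each parameter's total effect is approximated by its share of the summed squared CVs, which ignores correlation. The CV values and function names are hypothetical.

```python
import math


def variance_shares(cvs):
    """Share of each parameter in the summed squared CVs (simplified index)."""
    total = sum(cv * cv for cv in cvs.values())
    return {name: cv * cv / total for name, cv in cvs.items()}


def screen_parameters(cvs, threshold=0.05):
    """Discount parameters below the threshold, then update the results.

    Returns the retained CVs, their updated shares, and the updated
    aggregated CV (root-sum-square of the retained CVs).
    """
    shares = variance_shares(cvs)
    kept = {name: cv for name, cv in cvs.items() if shares[name] >= threshold}
    aggregated = math.sqrt(sum(cv * cv for cv in kept.values()))
    return kept, variance_shares(kept), aggregated


# Hypothetical CVs for four inputs.
cvs = {"T1": 0.04, "T2": 0.01, "P1": 0.06, "flow": 0.23}
kept, updated_shares, aggregated_cv = screen_parameters(cvs)
```

With these values T1 and T2 fall below the 5% threshold and are discounted, leaving P1 and the flow rate as the dominant contributors.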

4. Stepped Implementation and Results of CUQA Framework

4.1. Case Study 1: Heat Exchanger Test Rig

The framework was first applied to a bespoke heat exchanger test rig, developed from an initial design by Addepalli et al. [12] with the installation of a motorised pump and digital sensors. The combination of digital and analogue recording, along with qualitative factors discussed below, manifests compound uncertainty in heat exchanger performance. These uncertainties need to be quantified and aggregated to assess their impact on the system, assessed via the heat transfer coefficient [25,48,77]. This was calculated with the resulting uncertainty, derived alongside the CUQA framework as follows:

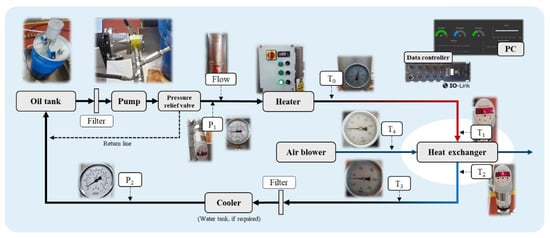

Step 1: Outline system setup and uncertainty sources. The system comprised a hot closed-loop system and a cold open-loop system, illustrated in Figure 2. Component specifications are described in Table 2. Notation relating to Figure 2 is defined in Table 3.

Figure 2.

Heat exchanger test rig: system design [12].

Table 2.

Heat exchanger test rig: component specifications of the initial design.

Table 3.

Heat exchanger test rig: uncertainty sources—measured parameters.

The experimental setup comprised seven quantitative parameters, summarised in Table 3 along with their corresponding reading interval and error, and five qualitative factors, each modelled by the lognormal distribution: (1) reliability of data, (2) basis of estimate, (3) reading accuracy, (4) environmental conditions, and (5) sample size. Oil temperature at the inlet (T1) and outlet (T2) was measured by dual-temperature sensors. A constant flow rate was maintained by a motorised pump. Oil pressure (P1) was regulated by a pressure relief valve, recorded by a dual pressure sensor at the pump outlet. The sensors fed real-time data to the PC controller via IO-Link, logged to a CSV file in 1 s intervals along with a timestamp.

The heat transfer coefficient is given by the heat load of the hot ($Q_h$) and cold ($Q_c$) fluid (Equation (10)):

$$Q = \dot{m}\, c_p\, \Delta T \qquad (10)$$

where $\dot{m}$ = mass flow rate, given by the product of the volumetric flow rate $\dot{V}$ and density $\rho$; $c_p$ = specific heat capacity; $\Delta T$ is the fluid temperature differential in and out of the heat exchanger.

The heat balance error (HBE) and the composite heat load considering associated uncertainty are given by Equations (11) and (12), respectively, as derived by Tatara and Lupia [48]. Contributing measurement uncertainties and additional qualitative bias in the system were calculated separately using the propagation of error method [39].

While the heat balance error remains within its uncertainty (Equation (13)), the overall heat transfer coefficient can be found and the associated measurement uncertainties are considered valid [48].

where $U$ = uncertainty in the respective parameter.
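For a product of independent quantities, such as a heat load formed from mass flow rate, specific heat capacity, and temperature differential, first-order propagation of error combines the relative uncertainties in quadrature. The following is a minimal sketch of that general rule only; it does not reproduce Equations (11) to (13), and the function name and input values are hypothetical.

```python
import math


def product_relative_uncertainty(values, uncertainties):
    """Relative uncertainty of a product of independent quantities (first order)."""
    return math.sqrt(sum((u / v) ** 2 for v, u in zip(values, uncertainties)))


# Hypothetical inputs: mass flow rate (kg/s), specific heat (J/kg.C), delta T (C)
values = [0.08, 1800.0, 5.0]
uncertainties = [0.008, 18.0, 0.5]  # assumed absolute uncertainties, for illustration

q = math.prod(values)                                           # heat load in W
u_q = q * product_relative_uncertainty(values, uncertainties)   # absolute uncertainty in W
print(q, u_q)
```

The quadrature sum assumes uncorrelated inputs; correlated inputs would need an additional covariance term.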

The focus of this study was on the uncertainty in the measured values over time, not the uncertainty of the overall recording period.

The heating system was set to switch off at 80 °C to prevent overheating. However, due to its design, the heater was not able to sustain the temperature at 0.02 °C/min for 10 min, as recommended by Tatara and Lupia [48] to determine the steady-state. While this is unsuitable for thorough thermodynamic assessment of heat transfer efficiency from the heat exchanger, it contributed further qualitative uncertainty to the system, which was reflected in the application of the CUQA framework.

The steady-state region was, therefore, defined by the time of the first and last peak temperature readings at T1. Two cycles were completed, with a total of 85 min recorded, giving 5590 data points for the three digital parameters. The temperature recorded over the period had an overall range of 6.8 °C at T1 and 1.2 °C at T2. The pressure, P1, was set at 1.8 bar and followed a lognormal distribution with a range of 0.32 bar.

Aside from these readings, all variable measurements were recorded via in-line analogue dials. Many of these dials gave readings on different interval scales with varying measurement accuracy and, therefore, resulted in increased uncertainty. Additional attributes such as parallax error and ambient temperature further increased the uncertainty in the measurement.

The volumetric flow rate of the oil (hot fluid) was held at 5 L/min (8.33 × 10−5 m3/s) with a uniform distribution. A reading error of ±2 L/min was assigned owing to the scale of the flowmeter. At a maximum temperature of 80 °C, the oil density was 0.95 kg/L (950 kg/m3). Therefore, for the hot fluid, the mass flow rate was 0.08 kg/s, and the specific heat capacity was given as 1800 J/(kg·°C). For the air (cold fluid), the mass flow rate was given as 1.12 kg/s and the specific heat capacity as 1005 J/(kg·°C). Further thermodynamic analysis involving parameters such as oil viscosity and temperature loss through connecting pipes was out of the scope of the framework application. The uncertainty contributed by these factors was accounted for in the pedigree matrix.
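The unit conversion behind the hot-fluid mass flow rate can be checked with a short sketch. It uses only the flow rate and density quoted above; the function and variable names are illustrative and not taken from the study.

```python
def mass_flow_rate(vol_flow_l_per_min: float, density_kg_per_m3: float) -> float:
    """Mass flow rate in kg/s from a volumetric flow in L/min and a density in kg/m^3."""
    vol_flow_m3_per_s = vol_flow_l_per_min / 1000.0 / 60.0  # 1 L = 1e-3 m^3, 1 min = 60 s
    return vol_flow_m3_per_s * density_kg_per_m3


def heat_load(m_dot: float, c_p: float, delta_t: float) -> float:
    """Heat load in W as the product of mass flow rate, specific heat and delta T."""
    return m_dot * c_p * delta_t


m_dot_hot = mass_flow_rate(5.0, 950.0)  # oil: 5 L/min at 950 kg/m^3
print(round(m_dot_hot, 3))              # 0.079, i.e. 0.08 kg/s to two decimal places
```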

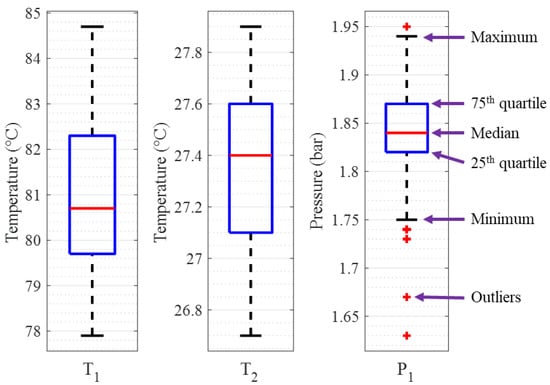

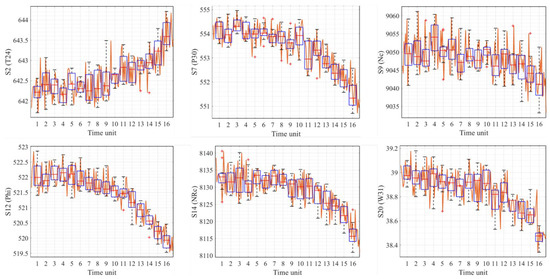

Step 2a: Calculate quantitative uncertainties. A summary of the seven quantitative parameters is given in Table 5. Summary statistics from the logged data for T1, T2, and P1 are given by the boxplots in Figure 3. The outliers were values greater than $q_3 + w(q_3 - q_1)$ or less than $q_1 - w(q_3 - q_1)$, where $w = 1.5$ sets the maximum whisker length as a multiple of the interquartile range, and $q_1$ and $q_3$ are the 25th and 75th percentiles of the respective dataset [78].
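The boxplot outlier rule (values lying more than 1.5 times the interquartile range beyond the quartiles) can be sketched as follows. This is an illustration under stated assumptions: the percentile interpolation is that of Python's `statistics.quantiles` with `method="inclusive"`, which may differ slightly from the plotting tool used in the study, and the readings are hypothetical.

```python
import statistics


def iqr_outliers(data, w=1.5):
    """Return the values lying more than w * IQR beyond the first or third quartile."""
    q1, _, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - w * iqr, q3 + w * iqr
    return [x for x in data if x < lower or x > upper]


# Hypothetical temperature readings in degrees C, with one obvious outlier
readings = [78.1, 78.4, 78.6, 78.9, 79.0, 79.2, 79.3, 79.5, 85.0]
print(iqr_outliers(readings))  # [85.0]
```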

Figure 3.

Heat exchanger test rig: boxplots for T1, T2, and P1.

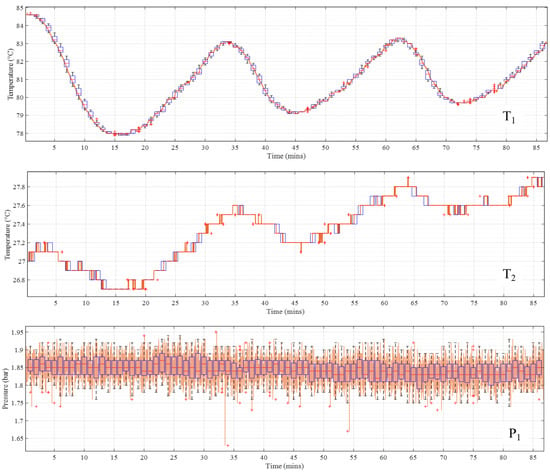

The three digitally recorded parameters were split into 65 homogeneous sub-arrays over the 5590 data points. The overlaid boxplots are shown in Figure 4 (coloured as for Figure 3), plotted over the time series of the logged data. Owing to the multimodal shape of the data, the sub-array standard deviation for T1 was low to negligible at the peaks and troughs and high for temperature increases or decreases. The temperature at T2 was more constant with respect to T1, showing a step change over time owing to the heat transfer coefficient of the heat exchanger.

Figure 4.

Heat exchanger test rig: sub-array boxplots over time series data.

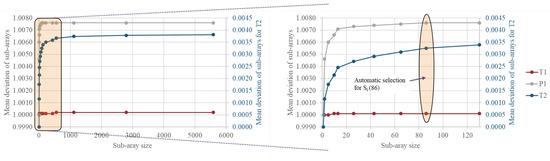

The greater the sub-array size, the greater the uncertainty in the measurement. This is illustrated in Appendix A (Figure A1) for all possible factors (a), with a focus on values of 0–130 and the automatically selected value, 86, highlighted (b). This procedure enables a mean uncertainty estimate to be obtained where the recorded data are not able to meet the criterion for steady-state readings. As the sub-array size increased, the number of sub-arrays decreased, resulting in greater uncertainty. This was accounted for by the basis of estimate factor in the pedigree matrix.
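The "possible factors" are the sizes that divide the record into equal, whole sub-arrays. A minimal sketch of how these candidates could be enumerated for the 5590 logged points is given below; the automatic selection by Equation (6) is not reproduced, and the function name is illustrative.

```python
def candidate_sizes(n_points: int) -> list:
    """All sub-array sizes that split n_points into equal, whole sub-arrays."""
    return [k for k in range(1, n_points + 1) if n_points % k == 0]


sizes = candidate_sizes(5590)
for size in sizes:
    if size <= 130:  # the range of values highlighted in the text
        print(f"size {size:>3} -> {5590 // size} sub-arrays")
```

Each candidate trades temporal resolution (many small sub-arrays) against the number of points available to estimate the deviation within each one.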

The four remaining quantitative parameters were acquired by analogue dials with varying reading intervals (Table 3). These were taken every 30 min over the recording period, resulting in limited data in comparison to the automated recording. Using Monte Carlo simulation, the readings were propagated to match the array size of the three digital parameters according to their statistical range and rounded to their corresponding reading intervals.
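A minimal sketch of this propagation step is shown below. It assumes uniform sampling across the observed range and that the range limits are multiples of the reading interval; the sampling distribution, function name, and example values are assumptions for illustration rather than the study's implementation.

```python
import random


def simulate_dial_readings(low, high, interval, n_samples, seed=0):
    """Draw n_samples values in [low, high] and round each to the dial's reading interval."""
    rng = random.Random(seed)  # seeded so that a run can be repeated
    samples = []
    for _ in range(n_samples):
        value = rng.uniform(low, high)                      # assumed uniform over the range
        samples.append(round(value / interval) * interval)  # snap to the dial resolution
    return samples


# Hypothetical dial: readings between 40 and 44 degrees C on a 2 degree C scale
t3_samples = simulate_dial_readings(40.0, 44.0, 2.0, n_samples=5590)
print(len(t3_samples), sorted(set(t3_samples)))
```

Fixing the seed makes a run repeatable; without it, each run yields a different sample set.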

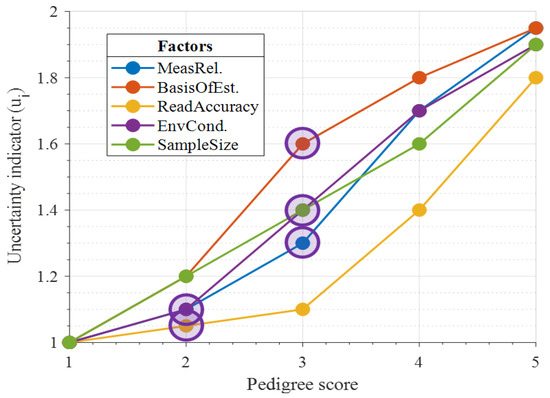

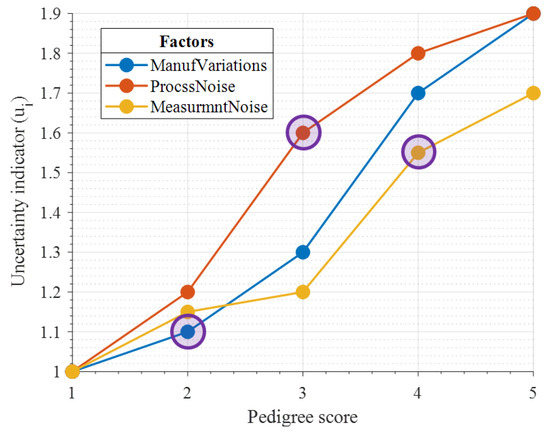

Step 2b: Calculate qualitative uncertainties. The five qualitative factors were scored by the defined pedigree criteria detailed in Table 4. These were based on examples from the literature, adjusted to apply to the case study [7,24,52]. Uncertainty indicators for each factor for increasing pedigree scores corresponding to the criteria are illustrated in Figure 5. For this case study, the uncertainty indicators were obtained from a single source (the authors’ opinion) and applied to the full dataset. Their GSDs are, therefore, given as the square root of the uncertainty indicator. These scores will not remain fixed over time and were, therefore, applied pseudo-randomly within ±1 of the defined score circled in Figure 5 for each sub-array.
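The score allocation can be sketched as follows. The indicator values are hypothetical placeholders (the actual values are those shown in Figure 5), and treating "±1" as an equal-probability choice between -1, 0, and +1, clipped to the 1 to 5 range, is an assumption made for illustration.

```python
import math
import random

# Hypothetical uncertainty indicators for pedigree scores 1 to 5 (not the Figure 5 values)
INDICATORS = {1: 1.00, 2: 1.05, 3: 1.10, 4: 1.20, 5: 1.50}


def jittered_score(base_score, rng, lowest=1, highest=5):
    """Shift the defined score by -1, 0 or +1 and keep it inside the score range."""
    score = base_score + rng.choice((-1, 0, 1))
    return min(max(score, lowest), highest)


def gsd_from_indicator(indicator):
    """GSD taken as the square root of the uncertainty indicator (single source)."""
    return math.sqrt(indicator)


rng = random.Random(42)                               # seeded for repeatability
scores = [jittered_score(3, rng) for _ in range(86)]  # one score per sub-array
gsds = [gsd_from_indicator(INDICATORS[s]) for s in scores]
```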

Table 4.

Heat exchanger test rig: pedigree criteria.

Figure 5.

Heat exchanger test rig: uncertainty indicators for increasing pedigree scores.

The resulting CV calculated in Step 4 was significantly greater than that of the lognormal recorded data. This was most likely due to the small number of data points in the sub-arrays. To give a closer comparison of the uncertainty, the pedigree factors were rescaled by Equation (14). The following results up to Step 6 illustrate an example for the first sub-array time unit.

where = uncertainty indicator.

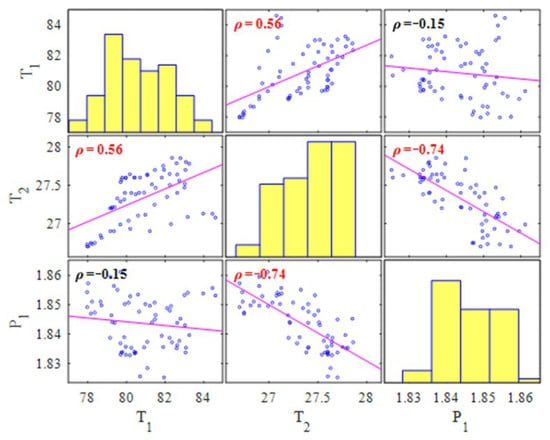

Step 3: Assess correlations between parameters. The ideal limit of the Spearman’s rank correlation coefficient was set to 0.5, with a cut-off at 0.2. As expected, a significant positive correlation was identified between T1 and T2, highlighted in red (Figure 6). The negative correlation to P1 reflects the pressure drop due to oil viscosity with increasing temperature. This shows the effectiveness of selecting the desired limit to remove minor correlations from the analysis.
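Spearman's rank coefficient is the Pearson correlation of the ranked data. The sketch below is a dependency-free illustration of that definition with average ranks for ties; the sample series are hypothetical, and the comparison against the 0.5 limit is only an example of how a threshold could be applied.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical sub-array readings for two temperature sensors
t1 = [78.2, 78.9, 79.4, 80.0, 79.1, 78.5]
t2 = [61.0, 61.2, 61.5, 61.6, 61.3, 61.1]
rho = spearman(t1, t2)
print(rho, abs(rho) >= 0.5)  # coefficient and whether it meets the ideal limit
```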

Figure 6.

Heat exchanger test rig: significant correlations between parameters.

Step 4: Calculate respective CVs. The summary tables with the calculated CV for each input are given in Table 5 and Table 6 for the quantitative and qualitative factors, respectively. Uniformly distributed parameters had a negligible deviation and, therefore, a CV of zero and did not contribute to the aggregated uncertainty total.
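The two CV conversions can be sketched as follows. For normally distributed data, the CV is the standard deviation divided by the mean; for lognormal data, the textbook relation between the GSD and the CV is used. Whether this matches Equation (9) exactly is an assumption, since that equation is not reproduced in this section, and the function names are illustrative.

```python
import math
import statistics


def cv_normal(data):
    """Coefficient of variation of a sample: standard deviation over mean."""
    return statistics.stdev(data) / statistics.mean(data)


def cv_lognormal_from_gsd(gsd):
    """CV of a lognormal distribution from its geometric standard deviation."""
    sigma = math.log(gsd)  # standard deviation of the underlying normal
    return math.sqrt(math.exp(sigma ** 2) - 1.0)


print(cv_normal([9.0, 10.0, 11.0]))    # close to 0.1
print(cv_lognormal_from_gsd(1.0))      # 0.0: a GSD of 1 means no spread
```

A GSD of 1 gives a CV of zero, which matches the treatment of parameters with negligible deviation as non-contributing.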

Table 5.

Heat exchanger test rig: recorded data and calculated parameters.

Table 6.

Heat exchanger test rig: pedigree factors with relating GSD and CV.

Step 5: Combine CVs. The combined CV of each PDF was calculated by Equation (8) and is summarised in Table 7, aggregated for symmetric and asymmetric distributions, together with the total CV including the correlation between T1 and T2.
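As a generic illustration of how a correlation term enters an aggregated CV, the sketch below applies first-order propagation of error with a pairwise correlation term. It is not a reproduction of Equation (8), which also handles asymmetric distributions; the function name and the CV values are hypothetical.

```python
import math


def combine_cvs(cvs, correlations=None):
    """Root-sum-square of CVs with optional pairwise correlation terms.

    cvs: mapping of input name to CV.
    correlations: mapping of (name_a, name_b) to correlation coefficient.
    """
    total = sum(cv ** 2 for cv in cvs.values())
    for (a, b), r in (correlations or {}).items():
        total += 2.0 * r * cvs[a] * cvs[b]
    return math.sqrt(total)


cvs = {"T1": 0.03, "T2": 0.04}                 # hypothetical CVs
print(combine_cvs(cvs))                        # independent inputs: about 0.05
print(combine_cvs(cvs, {("T1", "T2"): 1.0}))   # fully correlated inputs: about 0.07
```

A positive correlation raises the aggregated value above the independent case, and a negative correlation lowers it.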

Table 7.

Heat exchanger test rig: CV aggregation results.

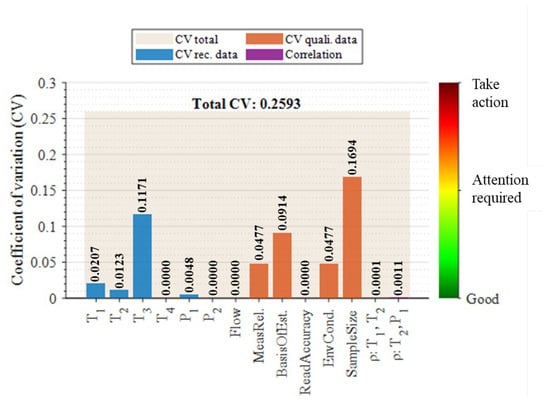

The visualisation in Figure 7 illustrates the relative CV of each quantitative (blue), qualitative (orange), and correlated (purple) input against the aggregated total (cream) for 1 of the 86 sub-array time units. When calculated for only the quantitative parameters, the aggregated CV fell to 0.1293, a percentage decrease of 50.1%. This illustrates the significance of accounting for qualitative factors alongside quantitative parameters—providing a holistic view of factors that manifest uncertainty in the system. While the depiction of these factors is subjective, the compound consideration reduced the risk of underestimating the aggregated uncertainty, which can occur if only accounting for quantitative parameters [38].

Figure 7.

Heat exchanger test rig: aggregated total CV against individual factors for one time unit.

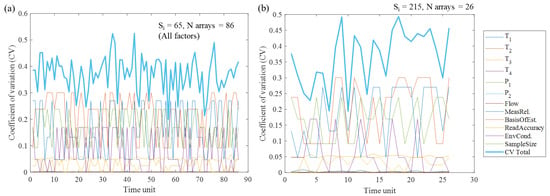

Individual uncertainties were then expressed as variances by the square of Equation (9) to feed into Step 6. The change in individual and aggregated CV over all time units for 86 sub-arrays is given in Figure 8a and compared with that for 26 sub-arrays in Figure 8b. This demonstrates the effect of sub-array size on the resulting uncertainty estimate.

Figure 8.

Heat exchanger test rig: aggregated total CV against individual factors over all time units for 86 sub-arrays (a) and 26 sub-arrays (b).

Calculating the heat load parameters from Equations (10)–(13) gave [48]: Qh = 366.2 MW, UQh = 16.31 MW, Qc = 4.52 kW, UQc = 97.56 W, and a resulting Q = 4.52 kW. The heat balance error (HBE) = 99.98%, and the composite load uncertainty UHBE = 311%. This passed the validity test, as the HBE was lower than UHBE, indicating that the measurements were valid.

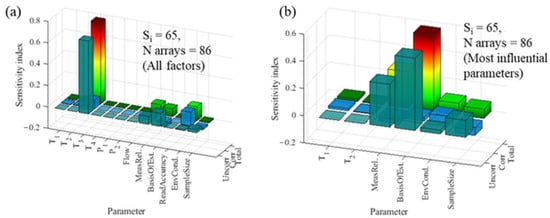

Step 6: GSA and visualisation. The relative influence of individual uncertainties on the aggregated total is plotted in Figure 9a. The uncertainty in T3, the oil temperature after being cooled by the heat exchanger, had an overwhelmingly greater effect (76%) on the aggregated uncertainty than any other parameter. This was due to the large error margin of ±2 °C given by the reading interval on the dial. When T3 was discounted, along with parameters with an impact below 5% (uniformly distributed), the basis of estimate was deemed to have the greatest effect at 56% (Figure 9b). The influence of T1 and T2 was minimal due to the comparatively equal deviation for each sub-array time unit. The qualitative factors saw greater variability owing to the pseudorandom score allocation (Figure 5).
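One simple sampling-based way to rank inputs is the squared correlation between each input's uncertainty series and the aggregated response, normalised so that the shares sum to one. The sketch below illustrates that generic measure only; it is an assumption for illustration and not a reproduction of the GSA method applied in this study, and all names and data are hypothetical.

```python
def squared_correlation(x, y):
    """Squared Pearson correlation; zero when either series has no variation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy ** 2 / (sxx * syy)


def sensitivity_shares(inputs, response):
    """Normalised squared correlations of each input series against the response."""
    raw = {name: squared_correlation(series, response) for name, series in inputs.items()}
    total = sum(raw.values())
    return {name: (value / total if total else 0.0) for name, value in raw.items()}


# Hypothetical per-sub-array uncertainties and aggregated totals
inputs = {"varying": [1.0, 2.0, 3.0, 4.0], "constant": [5.0, 5.0, 5.0, 5.0]}
response = [2.0, 4.0, 6.0, 8.0]
print(sensitivity_shares(inputs, response))  # {'varying': 1.0, 'constant': 0.0}
```

A constant input receives a share of zero, which mirrors the removal of uniformly distributed parameters from the analysis.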

Figure 9.

Heat exchanger test rig: GSA results of individual to aggregated uncertainty for all factors (a) and most-influential parameters (b) for test example.

Altering the pedigree score allocation of the qualitative factors changed the degree of uncertainty each factor contributed to the aggregated total, according to the defined uncertainty indicators in Figure 5. Applying higher pedigree scores will apply a higher representative level of uncertainty. The difference between one uncertainty indicator and another will influence the respective factor’s sensitivity index owing to the pseudorandom score allocation. Increasing the degree of allocation (e.g., from ±1 to ±2) will also influence the respective sensitivity indices, though this was not deemed necessary in this study for the score range of 1–5. While the uncertainty indicator scores were subjective, they were expected to increase linearly or exponentially. Therefore, lower scores would have less influence on the aggregated total.

4.2. Case Study 2: Turbofan Engine Degradation

The framework was applied to a turbofan engine degradation dataset from the Commercial Modular Aero-Propulsion System Simulation (C-MAPSS) tool, developed by NASA [79,80]. This publicly available dataset has been widely applied in Prognostics and Health Management (PHM) [80,81,82]. The C-MAPSS data consist of four datasets simulated under different operating conditions. The FD001 training dataset, simulating the degradation of the High-Pressure Compressor (HPC), was applied to the CUQA framework to analyse the aggregated uncertainty in the measurements over time:

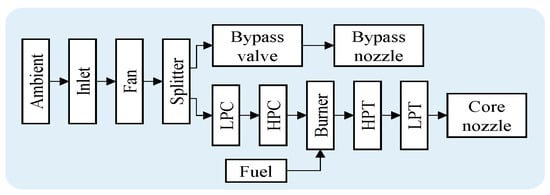

Step 1: Outline system setup and uncertainty sources. The FD001 dataset consisted of 21 sensors measuring temperature, pressure, and speed for 100 engine units, each with a random start time and normal operating level, running to failure. For this study, one engine unit was selected with 192 cycles to failure. The system design is illustrated in Figure 10.

Figure 10.

C-MAPSS turbofan engine: system design as simulated in C-MAPSS [79].

Previous work using this dataset focused on Remaining Useful Life (RUL) prediction [81,82]. In these studies, sensor data were divided into three categories according to the data trend: ascending, descending, and irregular/constant. Data that did not exhibit an ascending or descending trend over time (uniform) were not viable for RUL prediction and were, therefore, discounted from the dataset. The previous case study showed that constant, uniform parameters did not contribute to the uncertainty. Therefore, the same approach was applied here. A description of the 14 included sensors is given in Table 8.

Table 8.

C-MAPSS turbofan engine: detailed description of sensors [79].

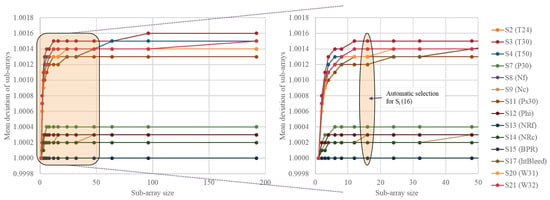

Step 2a: Calculate quantitative uncertainties. The sensor data were indexed and divided into 16 sub-arrays consisting of 12 rows by Equation (6). The mean and deviation of each array were calculated up to the point of failure. This is illustrated for 4 of the 14 inputs in Figure A2. A comparison of the sub-array size to the mean deviation is given in Figure A3. Other than for the derivation of pedigree factors in Step 2b, the illustrated results up to Step 6 give an example for the first sub-array unit. A summary of the quantitative sensor data for this example is given in Table 10.
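The split of 192 cycles into 16 sub-arrays of 12 rows, with a mean and deviation per sub-array, can be sketched as below. The series is a placeholder ramp rather than C-MAPSS data, the use of the sample standard deviation is an assumption, and the function name is illustrative.

```python
import statistics


def sub_array_stats(series, size):
    """Mean and sample standard deviation of consecutive, equal-sized sub-arrays."""
    if len(series) % size != 0:
        raise ValueError("series length must be a whole multiple of the sub-array size")
    stats = []
    for start in range(0, len(series), size):
        chunk = series[start:start + size]
        stats.append((statistics.mean(chunk), statistics.stdev(chunk)))
    return stats


series = [float(i) for i in range(192)]  # placeholder for one sensor over 192 cycles
stats = sub_array_stats(series, 12)
print(len(stats), stats[0])              # 16 sub-arrays; mean and deviation of the first
```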

Step 2b: Calculate qualitative uncertainties. Random noise models with a mix of distributions were used in the composition of the C-MAPSS dataset to propagate associated qualitative factors and give realistic results [79,81]. This was given as a combination of three core factors applied to all sensors: manufacturing and assembly variations (resulting in varying degrees of initial wear), process noise (factors not taken into account in modelling), and measurement noise. More in-depth factors concerning maintenance between flights and environmental operating conditions could be considered in practice. For this study, they were incorporated in the three core factors for the simulated data, scored against the pedigree criteria detailed in Table 9 [79]. Uncertainty indicators for each factor are illustrated in Figure 11, with the GSD given as the square root of the uncertainty indicator. As for the previous study, the scores were applied pseudo-randomly within ±1 of the defined score circled in Figure 11 for each sub-array, scaled by Equation (14).

Table 9.

C-MAPSS turbofan engine: pedigree criteria.

Figure 11.

C-MAPSS turbofan engine: uncertainty indicators for increasing pedigree scores.

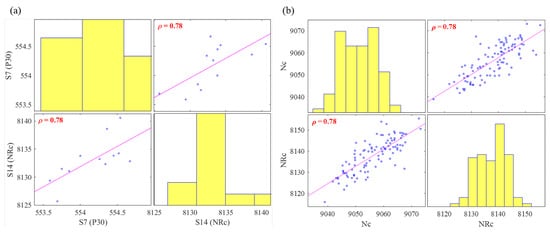

Step 3: Assess correlations between parameters. Each sub-array consisted of 12 data points. The ideal limit of the Spearman’s rank correlation coefficient, therefore, needed to be set to a high level of 0.8, with a cut-off at 0.6. No significant correlations were present above 0.8, so the value was reduced incrementally to 0.78, at which a significant correlation was detected between the pressure at the HPC outlet and turbine core speed (Figure 12a). While it is logical to expect a positive relationship between these parameters, as is notable in the plot, it was not maintained through the other 15 sub-arrays. This does not mean the relationship was not present, but that other dependencies were more prevalent below the limit of 0.8. When run for all data points, a positive trend was identified between the physical and corrected core speed of the engine (Figure 12b).
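The incremental reduction of the limit can be sketched as a simple search from the ideal limit down to the cut-off. This is one possible reading of the procedure, stated as an assumption; the step size of 0.01, the function name, the sensor labels, and the coefficient values are all hypothetical.

```python
def relax_limit(coefficients, ideal, cutoff, step=0.01):
    """Lower the limit from `ideal` towards `cutoff` until a coefficient passes it.

    coefficients: mapping of (input_a, input_b) to correlation coefficient.
    Returns the limit reached and the pairs whose magnitude meets it.
    """
    n_steps = int(round((ideal - cutoff) / step))
    for k in range(n_steps + 1):
        limit = round(ideal - k * step, 10)  # rounding avoids floating-point drift
        hits = {pair: r for pair, r in coefficients.items() if abs(r) >= limit}
        if hits:
            return limit, hits
    return cutoff, {}


# Hypothetical coefficients for one sub-array
coefficients = {("HPC outlet pressure", "core speed"): 0.785, ("T24", "T30"): 0.41}
limit, hits = relax_limit(coefficients, ideal=0.8, cutoff=0.6)
print(limit, hits)
```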

Figure 12.

C-MAPSS turbofan engine: significant correlations for the HPC outlet only (a) and all data points (b).

Step 4: Calculate respective CVs. Summary tables with the calculated CV for each input are given in Table 10 and Table 11 for the quantitative and qualitative factors, respectively. The majority of factors here were lognormally distributed according to the goodness-of-fit tests.

Table 10.

C-MAPSS turbofan engine: recorded data and calculated parameters.

Table 11.

C-MAPSS turbofan engine: pedigree factors with related GSD and CV.

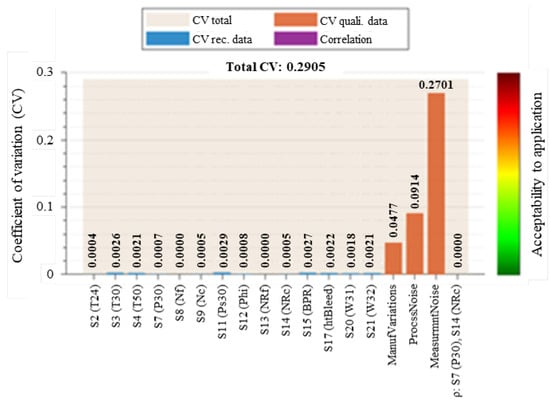

Step 5: Combine CVs. The combined CV is summarised in Table 12, aggregated for symmetric and asymmetric distributions and the total CV with correlation. The visualisation in Figure 13 illustrates the relative CV of each input against the aggregated total for the example time unit.

Table 12.

C-MAPSS turbofan engine: CV aggregation results.

Figure 13.

C-MAPSS turbofan engine: aggregated total CV against individual factors for one time unit.

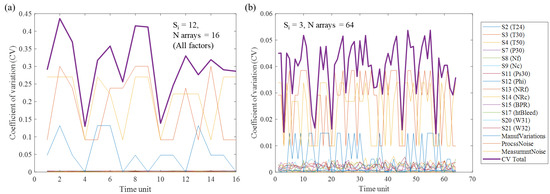

For the example time unit, the measured data had minimal uncertainty compared to the qualitative factors. Discounting the qualitative factors here resulted in a 97.8% decrease in the aggregated CV from 0.2905 to 0.0065. The minimal quantitative uncertainty was due to the spread of the 12 data points in the sub-array. Increasing the number of data points increased the mean uncertainty depending on the variability in the dataset, but reduced the number of sub-arrays (Figure A3). The change in individual and aggregated CV over all time units for 16 sub-arrays is given in Figure 14a and compared with that for 64 sub-arrays in Figure 14b.
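The quoted reduction follows directly from the two aggregated CV values, as the short check below shows. The function name is illustrative.

```python
def percentage_decrease(before: float, after: float) -> float:
    """Percentage decrease from `before` to `after`."""
    return (before - after) / before * 100.0


print(round(percentage_decrease(0.2905, 0.0065), 1))  # 97.8
```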

Figure 14.

C-MAPSS turbofan engine: aggregated total CV against individual factors over all time units for 16 sub-arrays (a) and 64 sub-arrays (b).

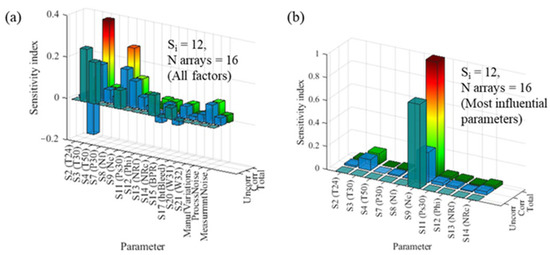

Step 6: GSA and visualisation. The relative influence of individual uncertainties on the aggregated total is plotted in Figure 15a, and the result after discounting the less influential factors is plotted in Figure 15b. The quantitative parameters had a greater influence than the qualitative factors, despite having a lower CV for all sub-array units. The most-influential parameter uncertainty was T50 (temperature at LPT inlet) at 37%. Discounting parameters with an impact <5% resulted in Nc (turbine core speed) having a dominating influence, while T50 dropped to 9%. This was again due to the variation in the data points of each sub-array. As for Case Study 1, the difference between one uncertainty indicator and another, defined in Figure 11, will influence the respective factor’s sensitivity index owing to the pseudorandom score allocation.

Figure 15.

C-MAPSS turbofan engine: GSA results for all factors (a) and most-influential parameters (b) for test example.

5. Discussion and Conclusions

The CUQA framework presented in this paper was designed to enhance system reliability measurement in a manner applicable to complex and non-complex engineering systems through quantification and aggregation of compound uncertainties. These develop as a result of the recording methods and assumptions made about the system and were modelled by different distribution types. The framework builds on existing literature to aggregate compound uncertainty considering dependent variables in the analysis, as well as the identification of the greatest contributing factors through the GSA. The benefits of this framework include enhancements to performance assessment and corresponding maintenance planning for complex and non-complex engineering systems and respective subsystems.

The framework was first applied to a bespoke heat exchanger test rig, which contributed a range of uncertainties that impacted measurement quality and accuracy. Three distributions were considered: lognormal, normal, and uniform. All qualitative factors were lognormal [38]. The measured parameters were deemed valid, though the true steady-state was not obtainable owing to the heating system [48]. The second case study implemented a simulated engine degradation dataset [79]. The majority of the selected sensors exhibited a lognormal distribution up to failure. The following paragraphs critique the effectiveness of the framework through the results of the two case studies, concluding with a summary of the contributions and recommendations for future work.

The CUQA framework is capable of assessing uncertainty for nonhomogeneous input data. The user can view and select the best-suited distribution for each input via “goodness-of-fit” tests. While effective for a small number of inputs, an automated method would prove more efficient for more complex systems. Monte Carlo simulation was used in Step 2a to give a homogeneous array size, enabling level consideration of each input. Monte Carlo was selected due to its flexibility with multiple distributions [6]. The inherently random nature of the simulation, though within the respective distribution parameters, caused different results each time the experiment was run, which may impact the accuracy of the parameter values. Other techniques such as Latin Hypercube Sampling (LHS) and Taylor series expansion may provide samples tighter to the respective mean, but do not show the same flexibility as Monte Carlo for multiple distribution types.

Splitting the input data into sub-arrays enabled uncertainty in the measured values to be determined over time. The greater the number of rows in each sub-array, the fewer arrays were allocated over the time series. The more arrays allocated, the more loops were performed between Steps 2 and 5, increasing the execution time. It is, therefore, necessary to find a balance with optimum values in each sub-array, which was the purpose of the automatic selection by Equation (6) (comparisons of mean deviation with increasing sub-array size are illustrated in Appendix A and Appendix B, respectively, for the two case studies). The input parameters that did not maintain a positive or negative trend required more sub-arrays to account for their variation. The framework allocated the same number of sub-arrays to each input to maintain equal consideration throughout the analysis. Flexible size allocation by individual input trend or average variance rather than sample size warrants further investigation.

Step 2b defined uncertainty indicators associated with qualitative inputs. These are ideally defined by multiple sources such as surveys, interviews, and historical trends. The mean indicator is taken to calculate the Geometric Standard Deviation (GSD). Naturally, high uncertainty reflects low confidence in the measured parameter. While the use of the GSD overcame scale dependency in the measured data, the resulting coefficient of variation (CV) was found to be considerably lower than that of normally distributed data and the qualitative factors attributed by the pedigree matrix. This was due to the number of data points in the sub-array unit. Uncertainty indicators for the qualitative factors were initially assigned on a scale between 1 and 2 and the square root calculated to give the GSD [38]. These were rescaled by Equation (14) to give a more equal comparison to the quantitative data. This, however, artificially reduced the aggregated total and resulted in normally distributed parameters such as T3 in Case Study 1 having the greatest influence on the aggregated total.

Significant correlations between input variables are defined via Spearman’s rank coefficient in Step 3. The ability to define the ideal coefficient limit allows the user to define the desired level of detail of the dependent variables. This can have a significant impact on the resulting estimate. The dependencies identified between the parameter values did not impact the aggregated total of the two case studies in Step 5. However, the influence attributed by individual CVs to the aggregated total in Step 6 was shown to exhibit dependencies that warrant further investigation. Stronger dependencies between parameter values will have a greater influence on emergent behaviour in more complex systems.

The CV was adopted as the uncertainty measure in Step 4 to allow the inputs of varying distribution types to be represented on an equal scale, enabling effective uncertainty quantification. Representing uncertainty by the CV proved effective to aggregate uncertainties represented by different distributions in Step 5, but further research is required into the scaling of geometric against arithmetic standard deviations. Acceptable levels of uncertainty are user-defined according to the application and visualised by the colour scale. Conversion of further distribution types such as Weibull and non-parametric derivations will allow for the consideration of more complex datasets. Aggregating the individual CVs by a combination of the propagation of error method for symmetric CVs and the product of asymmetric CVs allowed an aggregated total estimate to be obtained. This can be used to determine how the aggregated uncertainty changes over time, which is converted back to the standard deviation and used as the response vector in Step 6.

Global Sensitivity Analysis (GSA) was employed to identify which individual uncertainties contribute the greatest influence to the aggregated total. The sampling method was applied by Groen [68] using matrix-based LCA. It was applied in this study using the individual uncertainties of each sub-array as the inputs and the aggregated uncertainty at each point as the response. It was deemed the best-suited GSA method for the CUQA framework because it can be implemented with relatively small datasets and illustrates the influence of correlated and uncorrelated uncertainties against the total effects. While the sub-array derivation in Step 2a was more accurate with a greater number of rows in each sub-array, the number of sub-arrays affected the quality of the GSA over each unit. The removal of factors that do not contribute to the aggregated total (uniformly distributed or negligible for each iteration) allowed for a focused analysis on influential parameters in a second pass through the feedback loop. The risks formed as a result of these uncertainties can then be mitigated. More in-depth GSA at each time using methods such as Sobol’ indices would require the derivation of model process equations for the system application, which is out of the scope of this study.

Compared to complex engineering systems used in operational environments, Case Study 1 represented a relatively simple laboratory system setup, but served to prove the functionality of the CUQA framework as it exhibited uncertainties akin to those faced in such environments and presented comparable challenges to UQ. While the coefficients of the correlated parameters fell between negligible error margins with minimal risk, they may have a significant impact in real-world environments where operating conditions such as atmospheric temperatures or wind speeds will impact the accuracy of recorded data or subjective opinion.

Applications to complex engineering systems will feature a great many more parameters than those exhibited in the two case studies. While the CUQA framework is able to account for additional parameters in the computation, it will take more time to produce actionable results. In addition, the visualisations resulting from Steps 2–5 would be more cumbersome to decipher with a large number of variables. This was already overcome in Step 3 by the function to only display significant correlations. The illustration of aggregated CV against individual factors for one time unit produced in Step 5 would become cumbersome with many additional parameters; however, this was only used as an example result. The use of GSA in Step 6 would be even more beneficial in high-dimensional cases, where it identifies the individual uncertainties that contribute the greatest influence to the aggregated total.

The core contributions of the CUQA framework are:

- Use of the CV to enable effective quantification and aggregation of compound uncertainties represented by different distribution types;

- Assessment of the correlation between compound parameters;

- GSA for dependent compound parameters;

- Intuitive visualisation of results showing the most-significant parameters and dominant sensitivity indices.

The authors have drawn four key conclusions as a result of this work:

- Deriving the uncertainty measure as the CV proved effective for the aggregation of uncertainties represented by different PDFs, but further research into the scaling of geometric against arithmetic standard deviations is required. Aggregating individual CVs by a combination of the propagation of error method for symmetric CVs and the product of asymmetric CVs allowed an aggregated total estimate to be obtained. This can be used to determine how the aggregated uncertainty changes over time.

- Dependencies between compound parameters were not found to impact the aggregated total for the two case studies. However, the influence attributed by individual CVs to the aggregated total was shown to exhibit dependencies that warrant further investigation. Such dependencies may have a significant impact in real-world environments where operating conditions such as atmospheric temperatures or wind speeds impact the accuracy of recorded data or subjective opinion.

- The case studies served to prove the functionality of the CUQA framework, exhibiting uncertainties akin to those faced in operational environments and comparable challenges to UQ. User-defined ideal limits to identify significant correlations between compound parameters enabled the definition of the desired levels of detail for the dependent variables. Stronger dependencies between parameter values will have a greater influence on emergent behaviour in more complex systems.

- The GSA method applied by Groen [68] was deemed the best-suited approach for the CUQA framework because it can be implemented with relatively small datasets and illustrated the influence of dependent and independent uncertainties against the aggregated total. Intuitive visualisation of the results at each stage further boosted the framework’s usability and enabled rapid identification of uncertainties outside of acceptable levels and where mitigation may be required.

The authors propose future work to derive uncertainty from non-parametric and stochastic distributions through clustering techniques. Further assessment of aggregated compound uncertainty is necessary, incorporating additional distribution types and improving the rigour of the GSA approach in variance decomposition for each sub-array time unit. The emergent behaviour of uncertainties should be forecast through the in-service life to determine when and where further mitigation may be required.

Author Contributions

Conceptualisation, A.G., J.A.E., S.A. and Y.Z.; methodology, A.G. and J.A.E.; software, A.G.; validation, A.G., J.A.E. and S.A.; formal analysis, A.G. and S.A.; investigation, A.G. and S.A.; data curation, A.G.; writing—original draft preparation, A.G.; writing—review and editing, A.G., J.A.E., S.A. and Y.Z.; visualization, A.G.; supervision, J.A.E., S.A. and Y.Z.; project administration, A.G. and J.A.E.; funding acquisition, J.A.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Engineering and Physical Sciences Research Council (EPSRC), Project Reference 1944319, and the Doctoral Training Partnership (DTP).

Data Availability Statement

For access to the data underlying this paper, please see the Cranfield University repository, CORD, at DOI:10.17862/cranfield.rd.13550561.

Acknowledgments

This research was conducted as part of a Ph.D. with collaboration between the Centre for Digital Engineering and Manufacturing (CDEM) at Cranfield University (U.K.) and BAE Systems.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Heat Exchanger Test Rig

Figure A1.

Heat exchanger test rig: increasing deviation (uncertainty) with sub-array size.

Appendix B. C-MAPSS Turbofan Engine

Figure A2.

C-MAPSS turbofan engine: sub-array boxplots over time series data (example for six input parameters).

Figure A3.

C-MAPSS turbofan engine: increasing deviation (uncertainty) with sub-array size.

References

- NASA. Measurement Uncertainty Analysis Principles and Methods; NASA: Washington, DC, USA, 2010.

- Lanza, G.; Viering, B. A novel standard for the experimental estimation of the uncertainty of measurement for micro gear measurements. CIRP Ann. Manuf. Technol. 2011, 60, 543–546.

- Newman, M.E.J. Complex Systems: A Survey. Am. J. Phys. 2011, 79, 800–810.

- Stevens, R. Profiling Complex Systems. In Proceedings of the 2nd Annual IEEE Systems Conference, Montreal, QC, Canada, 7–10 April 2008; pp. 1–6.

- Efthymiou, K.; Mourtzis, D.; Pagoropoulos, A.; Papakostas, N.; Chryssolouris, G. Manufacturing systems complexity analysis methods review. Int. J. Comput. Integr. Manuf. 2016, 29, 1025–1044.

- Helton, J.C.; Davis, F.J. Latin hypercube sampling and the propagation of uncertainty in analyses of complex systems. Reliab. Eng. Syst. Saf. 2003, 81, 23–69.

- Grenyer, A.; Dinmohammadi, F.; Erkoyuncu, J.A.; Zhao, Y.; Roy, R. Current practice and challenges towards handling uncertainty for effective outcomes in maintenance. Procedia CIRP 2019, 86, 282–287.

- MacAulay, G.D.; Giusca, C.L. Assessment of uncertainty in structured surfaces using metrological characteristics. CIRP Ann. Manuf. Technol. 2016, 65, 533–536.

- Dantan, J.; Vincent, J.; Goch, G.; Mathieu, L. Correlation uncertainty—Application to gear conformity. CIRP Ann. Manuf. Technol. 2010, 59, 509–512.

- Shamsi, M.H.; Ali, U.; Mangina, E.; O’donnell, J. A framework for uncertainty quantification in building heat demand simulations using reduced-order grey-box energy models. Appl. Energy 2020, 275, 115141.

- Bentaha, M.L.; Battaïa, O.; Dolgui, A.; Hu, S.J. Dealing with uncertainty in disassembly line design. CIRP Ann. Manuf. Technol. 2014, 63, 21–24.

- Addepalli, S.; Eiroa, D.; Lieotrakool, S.; François, A.-L.; Guisset, J.; Sanjaime, D.; Kazarian, M.; Duda, J.; Roy, R.; Phillips, P. Degradation Study of Heat Exchangers. Procedia CIRP 2015, 38, 137–142.

- Andretta, M. Some Considerations on the Definition of Risk Based on Concepts of Systems Theory and Probability. Risk Anal. 2014, 34, 1184–1195.

- Aven, T. On how to define, understand and describe risk. Reliab. Eng. Syst. Saf. 2010, 95, 623–631.

- Savage, S. The flaw of averages. Harv. Bus. Rev. 2002, 80, 20.

- Krane, H.P.; Johansen, A.; Alstad, R. Exploiting Opportunities in the Uncertainty Management. Procedia Soc. Behav. Sci. 2014, 119, 615–624.

- Perminova, O.; Gustafsson, M.; Wikström, K. Defining uncertainty in projects–a new perspective. Int. J. Proj. Manag. 2008, 26, 73–79.

- Ward, S.; Chapman, C. Transforming project risk management into project uncertainty management. Int. J. Proj. Manag. 2003, 21, 97–105.

- Erkoyuncu, J.A.; Durugbo, C.; Roy, R. Identifying uncertainties for industrial service delivery: A systems approach. Int. J. Prod. Res. 2013, 51, 6295–6315.

- Lequin, R.M. Guide to the Expression of Uncertainty of Measurement: Point/Counterpoint. Clin. Chem. 2004, 50, 977–978.

- Willink, R. A procedure for the evaluation of measurement uncertainty based on moments. Metrologia 2005, 42, 329–343.

- Ratcliffe, C.; Ratcliffe, B. Doubt-Free Uncertainty in Measurement; Springer International Publishing: Cham, Switzerland; Berlin/Heidelberg, Germany, 2015.

- Willink, R. Measurement Uncertainty and Probability; Cambridge University Press: Cambridge, UK, 2013.

- Van Der Sluijs, J.P.; Craye, M.; Funtowicz, S.; Kloprogge, P.; Ravetz, J.; Risbey, J. Combining Quantitative and Qualitative Measures of Uncertainty in Model-Based Environmental Assessment: The NUSAP System. Risk Anal. 2005, 25, 481–492.

- Clarke, D.D.; Vasquez, V.R.; Whiting, W.B.; Greiner, M. Sensitivity and uncertainty analysis of heat-exchanger designs to physical properties estimation. Appl. Therm. Eng. 2001, 21, 993–1017.

- Minkina, W.; Dudzik, S. Infrared Thermography: Errors and Uncertainties; John Wiley and Sons: Hoboken, NJ, USA, 2009.

- Kiureghian, A.; Ditlevsen, O. Aleatoric or Epistemic? Does it matter? Spec. Workshop Risk Accept. Risk Commun. 2009, 31, 105–112.

- Flage, R.; Baraldi, P.; Zio, E.; Aven, T. Probability and Possibility-Based Representations of Uncertainty in Fault Tree Analysis. Risk Anal. 2013, 33, 121–133.

- Soundappan, P.; Nikolaidis, E.; Haftka, R.T.; Grandhi, R.; Canfield, R. Comparison of evidence theory and Bayesian theory for uncertainty modeling. Reliab. Eng. Syst. Saf. 2004, 85, 295–311.

- Chalupnik, M.J.; Wynn, D.C.; Clarkson, P.J. Approaches to mitigate the Impact of Uncertainty in Development Processes. In Proceedings of the 16th International Conference on Engineering Design, Stanford, CA, USA, 24–27 August 2009; pp. 459–470.

- Hogan, R. Calculating Effective Degrees of Freedom, ISO Budgets. 2014. Available online: http://www.isobudgets.com/calculating-effective-degrees-of-freedom/ (accessed on 24 August 2017).

- Helton, J.C. Uncertainty and sensitivity analysis in the presence of stochastic and subjective uncertainty. J. Stat. Comput. Simul. 1997, 57, 3–76.

- Helton, J.C.; Johnson, J.D. Quantification of margins and uncertainties: Alternative representations of epistemic uncertainty. Reliab. Eng. Syst. Saf. 2011, 96, 1034–1052.

- Xu, Y.; Reniers, G.; Yang, M.; Yuan, S.; Chen, C. Uncertainties and their treatment in the quantitative risk assessment of domino effects: Classification and review. Process Saf. Environ. Prot. 2023, 172, 971–985.

- Aven, T.; Renn, O. The Role of Quantitative Risk Assessments for Characterizing Risk and Uncertainty and Delineating Appropriate Risk Management Options, with Special Emphasis on Terrorism Risk. Risk Anal. 2009, 29, 587–600.

- Ciroth, A. Quantitative Inventory Uncertainty. Greenh. Gas Protoc. 2013, 2, 89. Available online: https://www.ghgprotocol.org/sites/default/files/ghgp/QuantitativeUncertaintyGuidance.pdf (accessed on 6 April 2019).

- Ellison, S.L.; Williams, A. Quantifying Uncertainty in Analytical Measurement; EURACHEM/CITAC Working Group: Teddington, UK, 2012; Volume 126, Available online: https://www.eurachem.org/index.php/publications/guides/quam (accessed on 25 August 2022).

- Ciroth, A.; Muller, S.; Weidema, B.; Lesage, P. Empirically based uncertainty factors for the pedigree matrix in ecoinvent. Int. J. Life Cycle Assess. 2016, 21, 1338–1348.

- Coleman, H.W.; Steele, W.G. Experimentation, Validation, and Uncertainty Analysis for Engineers; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2009.

- Kloprogge, P.; van der Sluijs, J.P.; Petersen, A.C. A method for the analysis of assumptions in model-based environmental assessments. Environ. Model. Softw. 2011, 26, 289–301.

- Willink, R. An improved procedure for combining Type A and Type B components of measurement uncertainty. Int. J. Metrol. Qual. Eng. 2013, 4, 55–62.

- Azene, Y.T.; Roy, R.; Farrugia, D.; Onisa, C.; Mehnen, J.; Trautmann, H. Work roll cooling system design optimisation in presence of uncertainty and constrains. CIRP J. Manuf. Sci. Technol. 2010, 2, 290–298.

- Iman, R.L.; Helton, J.C. An Investigation of Uncertainty and Sensitivity Analysis Techniques for Computer Models. Risk Anal. 1988, 8, 71–90.

- Fleeter, C.M.; Geraci, G.; Schiavazzi, D.E.; Kahn, A.M.; Marsden, A.L. Multilevel and multifidelity uncertainty quantification for cardiovascular hemodynamics. Comput. Methods Appl. Mech. Eng. 2020, 365, 113030.

- Valdez, A.R.; Rocha, B.M.; Chapiro, G.; Santos, R.W.D. Uncertainty quantification and sensitivity analysis for relative permeability models of two-phase flow in porous media. J. Pet. Sci. Eng. 2020, 192, 107297.

- Cardin, M.-A.; Nuttall, W.J.; de Neufville, R.; Dahlgren, J. Extracting Value from Uncertainty: A Methodology for Engineering Systems Design. INCOSE Int. Symp. 2007, 17, 668–682.

- Vasquez, V.R.; Whiting, W.B. Accounting for Both Random Errors and Systematic Errors in Uncertainty Propagation Analysis of Computer Models Involving Experimental Measurements with Monte Carlo Methods. Risk Anal. 2005, 25, 1669–1681.

- Tatara, R.; Lupia, G. Assessing heat exchanger performance data using temperature measurement uncertainty. Int. J. Eng. Sci. Technol. 2012, 3, 1–12.

- Funtowicz, S.O.; Ravetz, J.R. Uncertainty and Quality in Science for Policy; Springer: Dordrecht, The Netherlands, 1990.

- Berner, C.L.; Flage, R. Comparing and integrating the NUSAP notational scheme with an uncertainty based risk perspective. Reliab. Eng. Syst. Saf. 2016, 156, 185–194.

- Erkoyuncu, J.A. Cost Uncertainty Management and Modelling for Industrial Product-Service Systems; Cranfield University: Cranfield, UK, 2011.

- Durugbo, C.; Erkoyuncu, J.A.; Tiwari, A.; Alcock, J.R.; Roy, R.; Shehab, E. Data uncertainty assessment and information flow analysis for product-service systems in a library case study. Int. J. Serv. Oper. Inform. 2010, 5, 330.

- Muller, S.; Lesage, P.; Ciroth, A.; Mutel, C.; Weidema, B.P.; Samson, R. The application of the pedigree approach to the distributions foreseen in ecoinvent v3. Int. J. Life Cycle Assess. 2016, 21, 1327–1337.

- Limpert, E.; Stahel, W.A.; Abbt, M. Log-normal Distributions across the Sciences: Keys and Clues. BioScience 2001, 51, 341.

- Smart, C. Bayesian Parametrics: How to Develop a CER with Limited Data and Even Without Data. Int. Cost Estim. Anal. Assoc. 2014, 1, 1–23.

- Hochbaum, D.S.; Wagner, M.R. Production cost functions and demand uncertainty effects in price-only contracts. IIE Trans. Inst. Ind. Eng. 2015, 47, 190–202.

- Groen, E.A. An Uncertain Climate: The Value of Uncertainty and Sensitivity Analysis in Environmental Impact Assessment of Food; Wageningen University: Wageningen, The Netherlands, 2016.

- Saltelli, A.; Ratto, M.; Andres, T.; Campolongo, F.; Cariboni, J.; Gatelli, D.; Saisana, M.; Tarantola, S. Global Sensitivity Analysis. In The Primer; John Wiley & Sons, Ltd.: Chichester, UK, 2007.

- Saltelli, A. Sensitivity analysis for importance assessment. Risk Anal. 2002, 22, 579–590.

- Brune, A.J.; West, T.K.; Hosder, S. Uncertainty quantification of planetary entry technologies. Prog. Aerosp. Sci. 2019, 111, 100574.

- Iooss, B.; Lemaître, P. A Review on Global Sensitivity Analysis Methods. In Operations Research/Computer Science Interfaces Series; Dellino, G., Meloni, C., Eds.; Springer: Boston, MA, USA, 2015; pp. 101–122.

- Patelli, E.; Pradlwarter, H.J.; Schuëller, G.I. Global sensitivity of structural variability by random sampling. Comput. Phys. Commun. 2010, 181, 2072–2081.

- Groen, E.A.; Bokkers, E.A.M.; Heijungs, R.; de Boer, I.J.M. Methods for global sensitivity analysis in life cycle assessment. Int. J. Life Cycle Assess. 2017, 22, 1125–1137.

- Saltelli, A.; Bolado, R. An alternative way to compute Fourier amplitude sensitivity test (FAST). Comput. Stat. Data Anal. 1998, 26, 445–460.

- Sobol, I.M. Sensitivity analysis for nonlinear mathematical models, M.V. Keldysh Institute of Applied Mathematics. Russ. Acad. Sci. Mosc. 1993, 1, 407–414.

- DeCarlo, E.C.; Mahadevan, S.; Smarslok, B.P. Efficient global sensitivity analysis with correlated variables. Struct. Multidiscip. Optim. 2018, 58, 2325–2340.

- Xu, C.; Gertner, G.Z. Uncertainty and sensitivity analysis for models with correlated parameters. Reliab. Eng. Syst. Saf. 2008, 93, 1563–1573.

- Groen, E.; Heijungs, R. Ignoring correlation in uncertainty and sensitivity analysis in life cycle assessment: What is the risk? Environ. Impact Assess. Rev. 2017, 62, 98–109.

- Castrup, H. Estimating and Combining Uncertainties. In Proceedings of the 8th Annual ITEA Instrumentation Workshop, Lancaster, CA, USA, 5 May 2004.

- Grote, G. Management of Uncertainty: Theory and Application in the Design of Systems and Organisations, Decision Engineering, London; Springer: Zurich, Switzerland, 2009.

- Stockton, D.; Wang, Q. Developing cost models by advanced modelling technology, Proceedings of the Institution of Mechanical Engineers. Part B J. Eng. Manuf. 2004, 218, 213–224.

- Limbourg, P.; de Rocquigny, E. Uncertainty analysis using evidence theory–confronting level-1 and level-2 approaches with data availability and computational constraints. Reliab. Eng. Syst. Saf. 2010, 95, 550–564.

- Schwabe, O.; Shehab, E.; Erkoyuncu, J. Uncertainty quantification metrics for whole product life cycle cost estimates in aerospace innovation. J. Prog. Aerosp. Sci. 2015, 77, 1–24.

- Schwabe, O.; Shehab, E.; Erkoyuncu, J.A. A framework for geometric quantification and forecasting of cost uncertainty for aerospace innovations. Prog. Aerosp. Sci. 2016, 84, 29–47.

- Grenyer, A.; Erkoyuncu, J.A.; Addepalli, S.; Zhao, Y. An Uncertainty Quantification and Aggregation Framework for System Performance Assessment in Industrial Maintenance. In Proceedings of the TESConf 2020–9th International Conference on Through-life Engineering Services, Cranfield, UK, 3–4 November 2020.

- Mathworks Documentation, Corrplot, Econometrics Toolbox. 2012. Available online: https://uk.mathworks.com/help/econ/corrplot.html (accessed on 25 August 2022).

- Thulukkanam, K. Heat Exchanger Design Handbook, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2013.

- Langford, E. Quartiles in Elementary Statistics. J. Stat. Educ. 2006, 14, 3.