1. Introduction

With the rapid development of social computing and social manufacturing (SM), the traditional manufacturing mode is shifting toward customized, small-batch, digital production [1]. The cost of rapid prototyping equipment, represented by 3D printers, is decreasing, and the technology is becoming increasingly popular. Desktop and industrial-grade 3D printers make it possible for individuals to produce products independently. As long as they have valid 3D model data (or other types of manufacturing instructions), users can personalize physical objects and manufacture them in small batches without relying on complex factories. Each 3D printer is an independent manufacturing node and is naturally distributed and decentralized. Anyone with a product idea, even without knowledge of 3D modeling, can design a digital model with the help of AI technology and then realize it with manufacturing facilities. With a well-developed design platform, innovative ideas can be realized over the Internet, and distributed manufacturing tools such as 3D printers can deliver personalized, customized products to users worldwide.

Industry 4.0 combines the cyber-physical system (CPS) with additive manufacturing, the Internet of Things, machine learning, and artificial intelligence technologies to realize smart manufacturing [2]. It aims to raise the intelligence level of the manufacturing industry and to establish a smart manufacturing model that is adaptable and resource-efficient. Cyber-physical-social systems (CPSS) further strengthen the integration of Internet information with social manufacturing systems and fully integrate industry with human society [3]. In the CPSS industrial environment, social manufacturing seamlessly connects the Internet of Things with 3D printers. The public can participate fully in the entire lifecycle of the product manufacturing process, achieving real-time, personalized, large-scale innovation and “agile mobile intelligence”.

Social manufacturing is a paradigm that uses 3D printing to involve the community in producing products. The first step in 3D printing is to obtain a 3D model. Currently, most 3D models are created with computer-aided design (CAD), which is costly in time and human resources. It is therefore essential to design a user-friendly, end-to-end 3D content generation model that lets users personalize 3D models through text descriptions. Furthermore, a cloud platform can be built to put this model into practical use.

The rest of the paper is organized as follows. In Section 2, we present the literature review of SM. Section 3 introduces the theoretical concepts. Section 4 reviews the current difficulties in product customization. Finally, in Section 5, the multimodal data-based product customization manufacturing framework is proposed and illustrated with practical application examples, followed by concluding remarks in Section 6.

2. Literature Review

SM is a practical way to achieve intelligent, social, and personalized manufacturing. It allows all parts of society to participate actively in customization and enables deep personalization of every component of every product. The term SM was proposed by Professor Fei-Yue Wang at the “Workshop on Social Computing and Computational Social Studies” in 2010 [3], and its formal definition was given in his 2012 article [4]: “The social manufacturing refers to the personalized, real-time and economic production and consumption mode for which a consumer can fully participate in the whole lifetime of manufacturing in a crowdsourcing fashion by utilizing the technologies, such as 3D printing, network, and social media.” SM is thus inspired by the social computing concept and has been hailed as a cutting-edge manufacturing solution for the coming era of personalized customization. Just as social computing [5] enables everyone in society to obtain computing capacity, SM enables everyone to obtain innovation and manufacturing capacity.

In the context of SM, the critical feature is that everyone participates in product design, manufacturing, and consumption in social production, so everyone can turn their ideas into reality. SM is a further development and continuation of the existing crowdsourcing model. Xiong et al. [6] summarize this feature as “from mind to product”. Based on this concept, Shang et al. [7,8,9] provided a vision of smart, engaging, connected online manufacturing services. Mohajeri et al. [10,11,12] argued for using social computing and other Internet technologies to realize personalized, real-time, and socialized production. Jiang et al. [13,14,15,16] focused on the mechanisms for crowdsourcing and outsourcing services throughout the entire lifecycle of individualized production. As for the SM service mode, Xiong et al. [17] presented a social value chain system that applies the SM mode to the entire value chain, creating more value-adding potential for all participants while reducing waste. Cao et al. [18] provided a manufacturing-capability estimation model for the service level of the SM mode based on ontology, the semantic web, rough set theory, and a neural network; it includes models of production service capability and machining service capability. In the evaluation system proposed by Xiong et al. [19], the best supplier is selected using the analytic hierarchy process (AHP) and the fuzzy comprehensive evaluation method. These techniques can help prosumers assess their suitability for a given manufacturing requirement and can also support prosumers in searching for and matching capabilities. Ming et al. [20] proposed a data-driven view in the context of SM. Xiong et al. [21] summarized the five layers that make up an SM system architecture, and each layer is discussed in detail next.

(1) Resource Layer: The production and service resources in this layer can be combined to form a social resource network. These resources include 3D printers, sophisticated logistics networks, information networks using 3D intelligent terminals, and heterogeneous operating systems. In this layer, 3D intelligent terminals offer interaction and perception capabilities, and a modern logistics network offers logistics capability. Together with a range of physical links, the logistics network also provides transparent information transmission. This layer is essential for terminal perception, information communication, and production and product logistics.

(2) Support Layer: Service module encapsulation, service discovery, service registration, service management, service data management, and middleware management are all part of this layer. Enterprise information can be transferred reliably, efficiently, and safely in accordance with user needs using the service registration and service discovery modules.

(3) Environment Layer: This layer includes the computing environment, the information analysis environment, and the monitoring and management environment, which operate in coordination.

(4) Application Layer: This layer includes 3D modeling, operational monitoring tools, a platform for collaborative manufacturing management, a data collection and analysis platform, an optimization tool for allocating socialized manufacturing resources (SMRs), an assessment mechanism for system management, real-time monitoring, and resource scheduling.

(5) User Layer: This layer is composed of manufacturers, prosumers, and SMRs. Crowdsourcing is used to connect manufacturers and prosumers, and SMRs can be tailored for production.
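The AHP step in the supplier evaluation of Xiong et al. [19] can be illustrated with the minimal Python sketch below. It is an assumption-laden illustration, not the procedure of [19]: it uses the common principal-eigenvector method with Saaty's consistency ratio, omits the fuzzy comprehensive evaluation stage, and the criteria (cost, quality, delivery), judgement matrix, and supplier scores are all hypothetical.

```python
import numpy as np

# Saaty's random consistency index (RI) for matrix orders 1..10
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(pairwise):
    """Priority weights and consistency ratio of a reciprocal pairwise-comparison matrix."""
    a = np.asarray(pairwise, dtype=float)
    n = a.shape[0]
    eigvals, eigvecs = np.linalg.eig(a)
    k = int(np.argmax(eigvals.real))          # index of the principal eigenvalue lambda_max
    w = np.abs(eigvecs[:, k].real)
    w = w / w.sum()                           # normalise priorities so they sum to 1
    lam_max = eigvals[k].real
    ci = (lam_max - n) / (n - 1) if n > 1 else 0.0   # consistency index
    ri = RANDOM_INDEX[n]                              # table covers n <= 10 only
    cr = ci / ri if ri > 0 else 0.0                   # consistency ratio
    return w, cr


def rank_suppliers(pairwise, scores):
    """Weighted sum of per-criterion supplier scores; scores[s][c] lies in [0, 1]."""
    w, cr = ahp_weights(pairwise)
    return np.asarray(scores, dtype=float) @ w, cr


# Hypothetical criteria: cost, quality, delivery (a perfectly consistent judgement matrix)
criteria_matrix = [[1, 5 / 3, 2.5], [0.6, 1, 1.5], [0.4, 2 / 3, 1]]
supplier_scores = [[0.9, 0.5, 0.7],   # supplier A
                   [0.6, 0.9, 0.8]]   # supplier B
totals, cr = rank_suppliers(criteria_matrix, supplier_scores)
```

A consistency ratio below about 0.1 is the usual rule of thumb for accepting a judgement matrix; inconsistent matrices should be revised before the weights are used.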

3. Concepts

3.1. 3D Modeling

Additive manufacturing (AM) is a fabrication method that uses a digital prototype file as the foundation for constructing objects by printing layer by layer using material, such as powdered metal or plastic. Without the need for traditional tools, fixtures, or multiple machining processes, this technology enables the rapid and precise manufacturing of parts with a complex shape in a single machine, thereby allowing “free-form” manufacturing. Moreover, the more complex the structure of the product, the more significant the accelerating effect of its manufacturing. Currently, AM technology has many applications in the aerospace, medicine, transportation and energy, civil engineering, and other fields. With the development of AM technology and the popularity of 3D printers, there is an increasing demand for 3D modeling. The traditional modeling approach uses forward modeling software—3DMax, AutoCAD, etc. [

22]—which is highly technical. It takes a long time to design a model, making it difficult to build complex, arbitrarily shaped objects. Roberts et al. [

23] proposed a method to obtain 3D information from 2D images, known as reverse modeling. Since then, vision-based 3D reconstruction developed rapidly, and many new methods have emerged. Image-based rendering (IBR) methods could be divided into two categories depending on whether they rely on a geometric prior or not. Those methods that rely on geometric priors generally require a multi-view stereo (MVS) algorithm first to calculate the geometric information of the scene, and then to guide the input image for view composition. The methods that do not rely on geometric priors can generate new views directly from the input images. In 2018, Yao proposed an unstructured multi-view 3D reconstruction network (MVSNet) [

24], an end-to-end framework using deep neural networks (DNNs). IBR methods that rely on geometric a priori information perform better when geometric information is abundant and accurate. Still, when the geometry is missing or incorrect, it produces artifacts and degrades the quality of the view. The light field is the main area of research that does not rely on geometric a priori methods. Traditional methods for drawing light fields require dense and regular image captures, making them difficult to apply in practice. In recent years, with the development of DNN, researchers have discovered that it is possible to synthesize new views by fitting a neural network to a light sample of the scene, thereby implicitly encoding the light field of the input image. Compared to traditional light fields, the neural reflectance field (NeRF) [

25] method can be used for handheld captures with a small number of input images, greatly extending the applicability. The main advantages and disadvantages of the mainstream 3D modeling approach are shown in

Table 1. As shown in

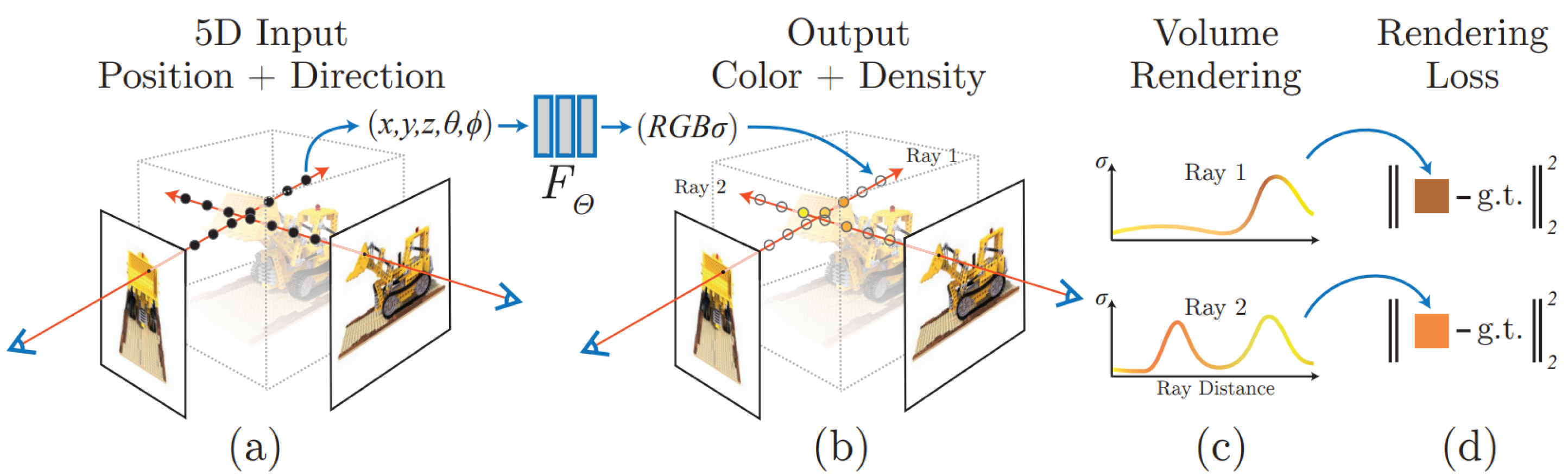

Figure 1, NeRF can be briefly summarized as using a multi-layer preceptron (MLP) neural network that learns a static 3D scene implicitly. While the input is the spatial coordinates, the view direction output is the bulk density of the spatial location under that view direction and the view-related camera light radiation field. In its basic form, a NeRF model represents scenes as a radiance field approximated by a neural network. The radiance field describes color and volume density for every point and for every viewing direction in the scene. This is written as,

where

are the in-scene coordinates,

represent the azimuthal and polar viewing angles,

represents color, and

represents the volume density. This 5D function is approximated by one or more MLP, sometimes denoted as

F. The two viewing angles

are often represented by

, a 3D Cartesian unit vector. This neural network representation is constrained to be multi-view consistent by restricting the prediction of

, the volume density (i.e., the content of the scene), to be independent of viewing direction, whereas the color

is allowed to depend on both viewing direction and in-scene coordinates. NeRF utilizes a neural network as an implicit representation of a 3D scene instead of the traditional explicit modeling of point clouds, meshes, voxels, etc. Through such a network, it is possible to directly render a projection image from any angle at any location. For this purpose, NeRF introduces the concept of radiation field, which is a very important concept in graphics, and here we give the definition of the rendering equation,

The neural radiation field represents the scene as volumetric density and directional radiation brightness at any point in space. Using the principles of classical stereoscopic rendering, we can render the color of any ray passing through the scene. The bulk density

can be interpreted as the derivable probability that the ray stays at position

X for an infinitesimal particle. Under the conditions of the nearest boundaries

and farthest boundaries

, the color

of the camera light is:
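NeRF evaluates the volume rendering integral for the ray color $C(\mathbf{r})$ numerically. Following the original NeRF paper, with $N$ samples $t_1 < \dots < t_N$ along the ray and $\delta_i = t_{i+1} - t_i$, the estimate is $\hat{C}(\mathbf{r}) = \sum_{i} T_i \,(1 - e^{-\sigma_i \delta_i})\, \mathbf{c}_i$ with $T_i = \exp(-\sum_{j<i} \sigma_j \delta_j)$. The NumPy sketch below evaluates this sum for one ray. It assumes the per-sample densities and colors have already been produced by the MLP $F_\Theta$ (not implemented here), and treating the last interval as effectively infinite is a common implementation convention rather than something specified in this text.

```python
import numpy as np


def render_ray(sigmas, colors, t_vals):
    """Quadrature estimate of the ray color for a single ray.

    sigmas : (N,) volume densities sigma_i at the samples
    colors : (N, 3) RGB colors c_i at the samples
    t_vals : (N,) sorted sample depths between t_n and t_f
    """
    # delta_i = t_{i+1} - t_i; the last interval is treated as effectively infinite
    deltas = np.append(np.diff(t_vals), 1e10)
    alphas = 1.0 - np.exp(-sigmas * deltas)              # opacity of each segment
    # T_i = exp(-sum_{j<i} sigma_j delta_j) = prod_{j<i} (1 - alpha_j)
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas                             # contribution of each sample
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights
```

The same `weights` can also be used to estimate per-ray depth (as `(weights * t_vals).sum()`), which is one way a mesh or depth map can later be extracted from a trained model.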

In practice, many industries urgently need a fully automated, end-to-end 3D reconstruction tool chain, which also opens up many ideas and opportunities for our 3D reconstruction research. Two key issues in this process deserve consideration. The first concerns how to integrate end-to-end deep learning into the construction of a 3D reconstruction system, given that traditional geometric vision offers clear interpretability. Whether deep learning should replace the existing pipeline end to end or be embedded in it remains inconclusive and needs further exploration. The second concerns practicality: how can reverse reconstruction in computer vision be combined with forward modeling in graphics so that the highly structured, semantically rich 3D models required by industry can truly be obtained from massive image collections? Generation is also an important future trend. Only by solving these problems can image-based 3D reconstruction systems effectively support business requirements in practical settings, such as digital city planning, VR content production, and high-precision mapping.

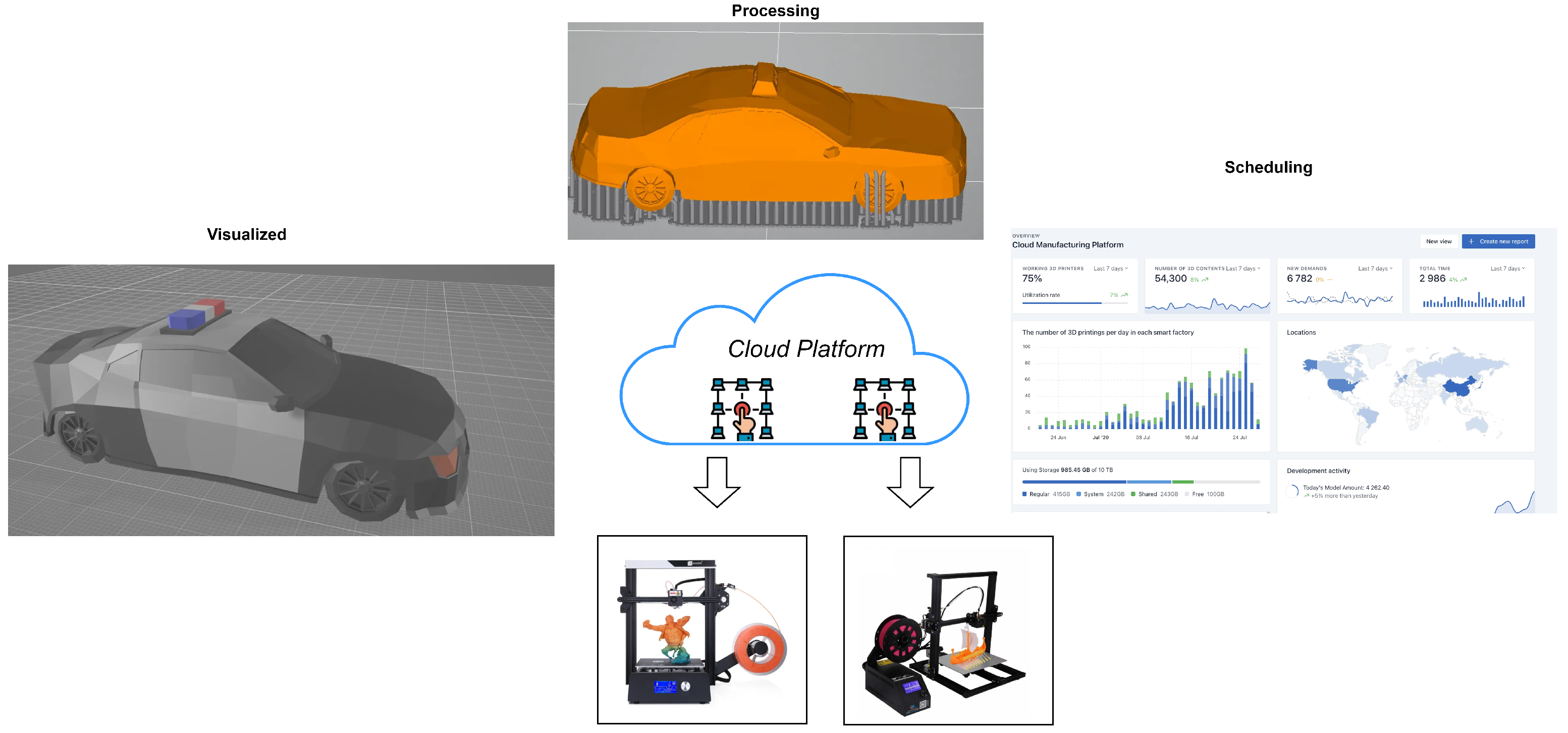

3.2. Cloud Manufacturing

Cloud manufacturing is an intelligent, efficient, and service-oriented manufacturing model proposed in recent years. We aim to combine the 3D content generation model with the cloud manufacturing mode so that this technology can be successfully implemented and applied to actual production. Cloud manufacturing combines cloud computing, big data, and the Internet of Things to enable service-oriented, networked, and intelligent manufacturing. It provides customized production based on widely distributed, on-demand manufacturing services that meet dynamic and diverse individual needs and support socialized production [26,27,28,29]. Cloud manufacturing emphasizes embedding computing resources, capabilities, and knowledge into networks and environments, allowing manufacturing companies to shift (or return) their focus to users’ needs. It is committed to building a communal manufacturing environment in which manufacturing companies, customers, intermediaries, and others can communicate fully. In the cloud manufacturing model, user involvement is not limited to formulating requirements and evaluating products, as in traditional manufacturing, but permeates every stage of the manufacturing lifecycle. Moreover, the user’s role is not fixed: a user is a consumer of cloud services but also a provider or developer of them, reflecting a form of user participation in manufacturing [30]. The advanced 3D-printing cloud model integrates 3D printing technology with the cloud manufacturing paradigm, and the 3D-printing cloud platform built on it offers a service-oriented architecture, personalized customization technology, and scalable services [31]. The 3D-printing cloud platform is not a standalone information-sharing platform but an open, shared, and scalable platform that offers both 3D printing and other high-value-added knowledge services. It can power a 3D printing community based on collaborative innovation and promote the growth of 3D-printing-related industries. Manufacturing resources (such as 3D printers and robots) from various regions and enterprises are encapsulated into different types of manufacturing services on the cloud platform, with the goal of providing service demanders with on-demand service compositions. Yang et al. [32] provided a cloud-edge cooperation mechanism for cloud manufacturing that offers customers on-demand manufacturing services, greatly improving the utilization of distributed manufacturing resources and the responsiveness to customized product demands. Tamir et al. [33] proposed a robot-assisted AM and control system framework that combines 3D printing with robotic-arm control to better support cloud printing tasks.
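To make on-demand service composition concrete, the minimal Python sketch below picks one encapsulated service per process step (printing, finishing, shipping) and returns the cheapest combination that fits a delivery-time budget. The step names, service IDs, costs, and hours are hypothetical, the steps are assumed to run sequentially, and exhaustive search is used only for clarity; it is not the mechanism of Yang et al. [32] or of any specific platform.

```python
from itertools import product

# Hypothetical candidate services per process step: (service_id, cost, hours)
CANDIDATES = {
    "print": [("fdm-node-a", 40.0, 10.0), ("sla-node-b", 65.0, 6.0)],
    "finish": [("sanding-local", 10.0, 3.0), ("paint-studio", 25.0, 2.0)],
    "ship": [("courier-express", 15.0, 24.0), ("courier-economy", 6.0, 72.0)],
}


def compose(candidates, max_hours):
    """Pick one service per step; return (plan, cost, hours) of the cheapest feasible plan, or None."""
    steps = list(candidates)
    best = None
    for combo in product(*(candidates[s] for s in steps)):
        cost = sum(c for _, c, _ in combo)
        hours = sum(h for _, _, h in combo)   # steps assumed to run one after another
        if hours <= max_hours and (best is None or cost < best[1]):
            best = ({s: svc[0] for s, svc in zip(steps, combo)}, cost, hours)
    return best


plan, cost, hours = compose(CANDIDATES, max_hours=48)
```

Exhaustive search grows exponentially with the number of steps and candidates, which is one reason practical platforms fall back on heuristic or metaheuristic composition methods.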

4. The Difficulties in Product Customization

Product customization means offering products whose colors, components, and features are changed to suit consumers’ preferences. Owing to technological and resource limitations, practical implementations of NeRF-based product customization currently face several difficulties. This section reviews the research and divides the challenges into three groups:

(1) The accuracy of 3D modeling: We need to understand and render 3D scenes because we live in a 3D world and interact with others in it. When creating a virtual scene, we want to reconstruct objects from different perspectives and then analyze and observe them. In the medical field, we want to reconstruct parts of each patient’s body to guide doctors’ decisions. At the same time, we want seamless, realistic interaction with the virtual world. For the next generation of artificial intelligence, we want systems that understand 3D scenes so that they can better serve, and interact with, humans. This forms a pipeline: after a 3D representation is reconstructed from 2D observations, realistic renderings can be produced, and on top of this, 3D scenes can be generated so that a generative model learns the entire process. However, realizing this pipeline is difficult, because 3D scene generation is a very complicated process. Take NeRF as an example: it requires multi-view images, yet the available multi-view data are far from sufficient. The Internet mostly offers single-view images, which lack viewpoint information. We therefore hope to use existing pre-trained models to provide prior knowledge.

(2) Security and supervision: AM files can easily be transferred from the design stage to the shop floor for final production. Because AM is digital, parts and products can be shared and communicated easily, which makes digital supply networks and chains possible. However, these digital advantages come with drawbacks. Without a robust data protection framework, a digital design-and-manufacturing process increases the likelihood of data theft or tampering. As the SM production system grows, data leakage and identity theft will pose significant security risks, and the participation of dishonest or malicious nodes will jeopardize the interests of honest nodes. At the same time, when physical products are delivered in the form of product data, the data containing all important information must be secured, stored, and shared safely. The digital thread for AM (DTAM) mitigates these risks by creating a single, seamless data link from initial design concepts to finished products. The primary obstacle in this challenge is integrating multiple printers and printing technologies across a number of distinct and disjointed physical manufacturing facilities. Additionally, because parts must be inspected throughout the process rather than only at the end, businesses may struggle to keep track of events that occur during the additive process, and such tracking may be necessary for part certification and qualification.

(3) Production efficiency: The uneven distribution and low utilization of manufacturing resources have seriously hindered the development of the manufacturing industry. To integrate scattered manufacturing resources effectively and raise their utilization, cloud manufacturing, a model that delivers manufacturing as services through information technology, has emerged. As one of the key research issues for cloud manufacturing platforms, the scheduling of manufacturing resources in the cloud manufacturing environment affects the overall operational efficiency of the platform. In both academia and industry, resource scheduling problems in cloud computing are regarded as NP-hard. Therefore, algorithms that solve conventional, small-scale scheduling problems may suffer from the curse of dimensionality as the problem scale grows. With the development of cloud computing and its increasing complexity, this problem has become even more challenging.
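Because exact scheduling is intractable at scale, heuristics are commonly used instead. As one illustration (our choice, not a method from the works cited here), the Python sketch below applies Graham’s longest-processing-time-first (LPT) rule to assign print jobs to identical printers, always giving the next-longest job to the currently least-loaded printer. Job names and durations are hypothetical; for m identical machines, LPT is guaranteed to produce a makespan within a factor of 4/3 - 1/(3m) of the optimum.

```python
import heapq


def lpt_schedule(job_hours, printer_ids):
    """Greedy LPT assignment of jobs (name -> hours) to identical printers."""
    heap = [(0.0, p) for p in printer_ids]     # (current load in hours, printer id)
    heapq.heapify(heap)
    assignment = {p: [] for p in printer_ids}
    # Longest jobs first; ties keep their original order (sorted is stable)
    for job, hours in sorted(job_hours.items(), key=lambda kv: kv[1], reverse=True):
        load, p = heapq.heappop(heap)          # least-loaded printer
        assignment[p].append(job)
        heapq.heappush(heap, (load + hours, p))
    makespan = max(load for load, _ in heap)
    return assignment, makespan


jobs = {"a": 5, "b": 4, "c": 3, "d": 3, "e": 2}   # hypothetical print times in hours
assignment, makespan = lpt_schedule(jobs, ["P1", "P2"])
```

LPT runs in O(n log n + n log m) time, so it scales to large job pools; metaheuristics can then refine such a greedy schedule when heterogeneous printers, deadlines, or transport costs matter.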

5. The Efficient Product Customization Framework

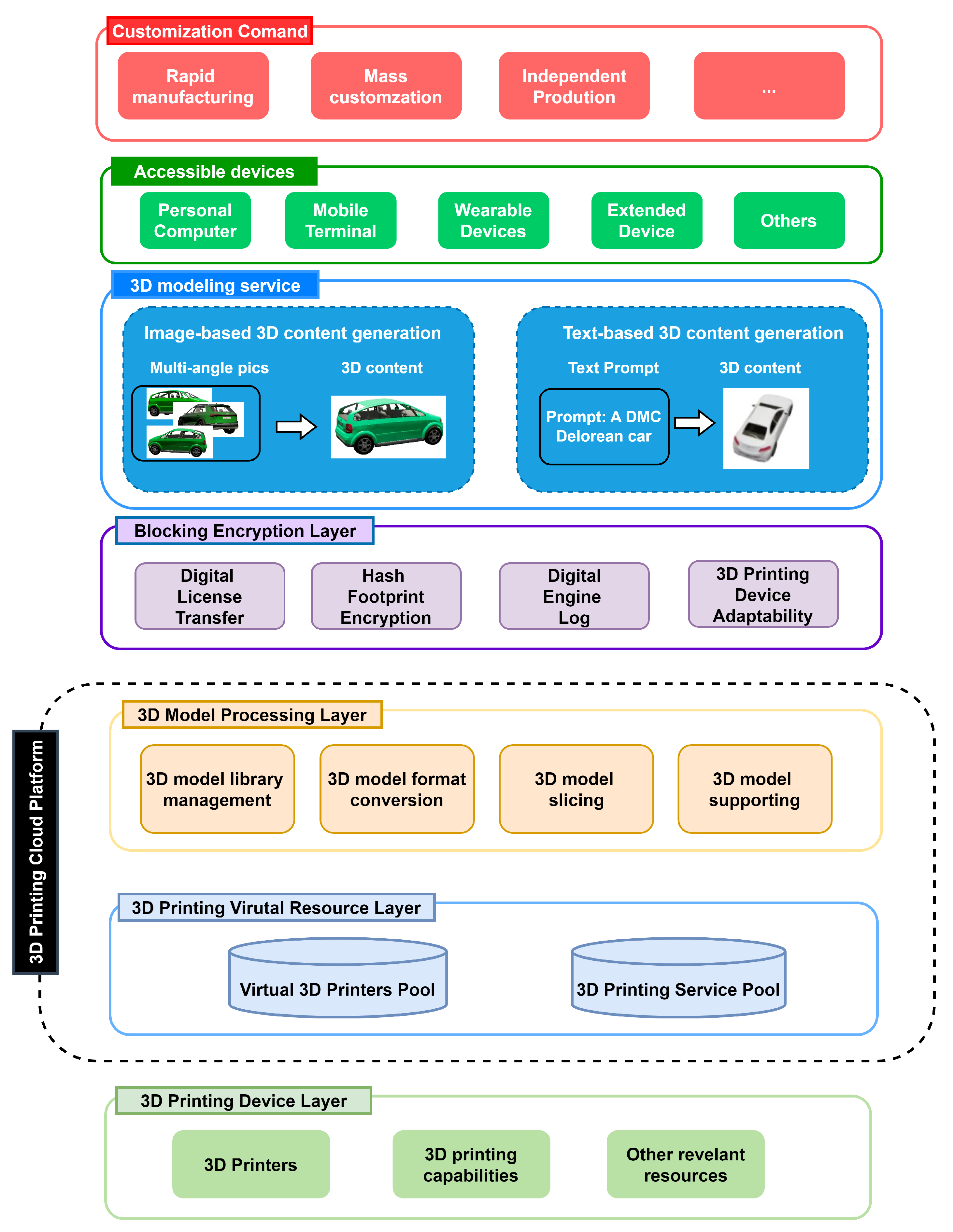

Considering that NeRF and other artificial intelligence technologies have great potential for solving the difficulties of SM, this paper proposes a customized 3D-printing production framework that uses NeRF and ultra-large-scale pre-trained multimodal models as its baseline. As shown in Figure 2, the framework is organized according to the multimodal data-based customized production process and value flow; from bottom to top, it is divided into three parts: the 3D modeling service, the blockchain encryption service, and the cloud management service.

5.1. 3D Modeling Service

3D content customization is challenging because the content is a 3D representation, whereas existing stylization methods operate in 2D. 2D stylization can therefore provide a form of supervision, but this supervision carries no 3D information, so mutual learning between 2D and 3D is needed. A 2D neural network provides a stylized reference; more importantly, we supply 3D spatial-consistency information to NeRF, which is then stylized. In this way, scenes captured with mobile phones can be converted into stylized 3D results.

To address the difficulties in 3D modeling, the service includes two modules, as shown in Figure 3: image-based 3D digital-asset reconstruction and text-based 3D digital-asset generation. In the image-based module, the end-to-end model automatically generates a 3D geometric model without human intervention from multiple 2D pictures of the object taken from different angles with a device such as a mobile phone. The model is implemented in the following steps. First, 360° surrounding images are captured at a fixed focal length. Next, camera poses are estimated with a sparse reconstruction tool. The poses recovered from the sparse reconstruction are then converted into local light field fusion (LLFF)-format data and rendered by NeRF. NeRF combines light-field sampling theory with neural networks, using an MLP to implicitly learn the scene from samples of all rays, and it achieves good view synthesis results. In addition, NeRF uses the images themselves for self-supervised learning, which makes it applicable to a wide range of datasets, from synthetic to real-world. Finally, the corresponding mesh model is exported. To make NeRF practical for 3D modeling in AM, we build the module on instant neural graphics primitives (Instant-NGP) [34], a fast variant of NeRF. In addition, only the target object is modeled: image segmentation algorithms in the pre-processing stage extract the reconstruction target accurately, which reduces redundant image information during reconstruction.
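The segmentation pre-processing step can be sketched as below. The text does not name the segmentation model, so its output is represented here by a hypothetical per-pixel foreground probability map; background pixels are blanked and an alpha channel marks the target object. Images are kept at full resolution so that the camera intrinsics estimated during pose recovery remain valid, and whether a particular NeRF implementation consumes the alpha channel depends on that implementation.

```python
import numpy as np


def mask_to_rgba(image, fg_prob, threshold=0.5):
    """Keep only the target object: alpha = 255 on foreground, 0 on background.

    image   : (H, W, 3) uint8 RGB frame from the capture sequence
    fg_prob : (H, W) foreground probability from a segmentation model (hypothetical input)
    """
    mask = fg_prob >= threshold
    alpha = (mask * 255).astype(np.uint8)
    rgb = image.copy()
    rgb[~mask] = 0                      # blank out background pixels in all channels
    return np.dstack([rgb, alpha])      # (H, W, 4) RGBA, same size as the input
```

Applying the same threshold to every frame keeps the masks consistent across views, which matters because NeRF otherwise tries to explain inconsistently masked background as scene content.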

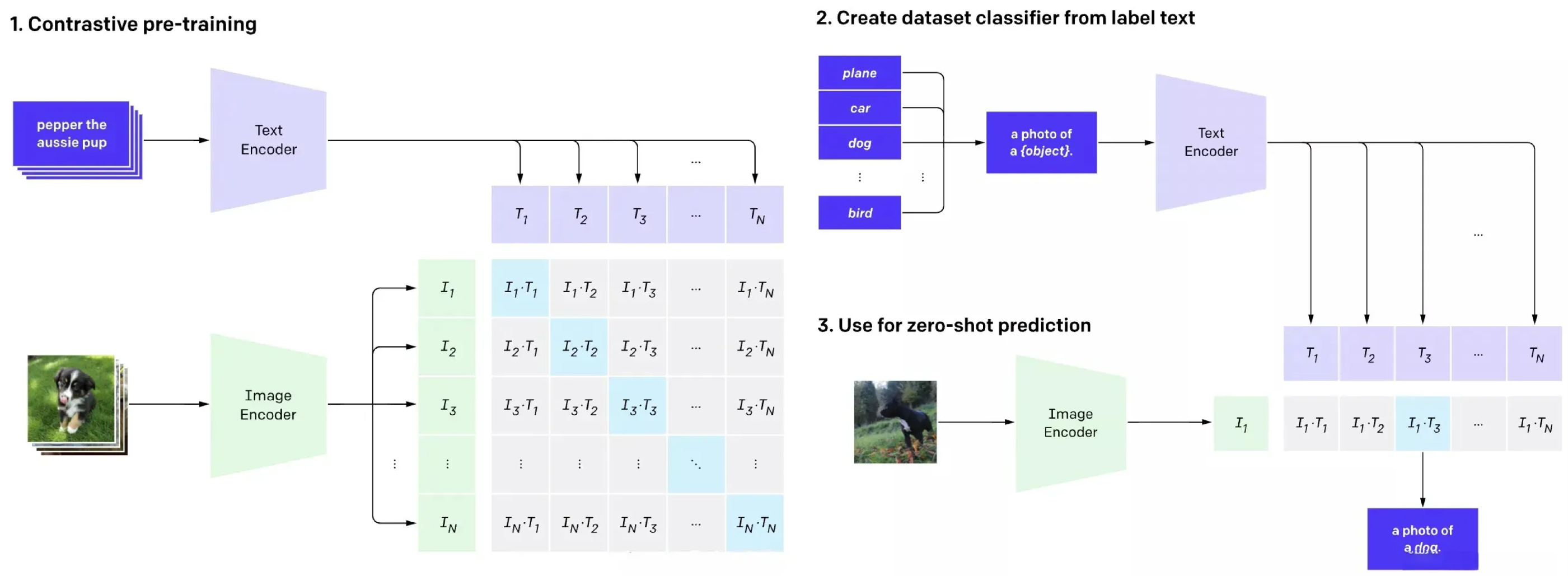

Reconstructing 3D models from image sequences is rather complex. To further enhance the convenience and versatility of product customization, we propose a text-guided 3D digital-asset generation module. Recent breakthroughs in text-to-image synthesis have been driven by multimodal models trained on billions of image–text pairs. CLIP (contrastive language-image pre-training) [35] is a multimodal model pre-trained by contrasting paired text and images. CLIP uses text as the supervisory signal to train the visual model, which gives the final model very good zero-shot performance and strong generalization. Training proceeds as follows. The input to CLIP is a batch of image–text pairs. The text encoder and the image encoder produce text features and image features, respectively, and the two sets of features are then compared in a contrastive objective. If the input is a batch of n image–text pairs, the n matched pairs are positive samples (the diagonal entries of the output feature matrix, marked in blue in Figure 4), and all other pairings are negative samples. Training therefore maximizes the similarity of positive samples and minimizes the similarity of negative samples. However, applying this approach to 3D synthesis would require large-scale datasets of labeled 3D assets and efficient methods for denoising 3D data.
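The symmetric contrastive objective described above can be sketched in NumPy as follows. This is a simplified illustration, not CLIP’s training code: the encoders are omitted (feature matrices are passed in directly), and `logit_scale` is fixed at 100 here, whereas CLIP learns its temperature during training.

```python
import numpy as np


def _diag_cross_entropy(logits):
    # Row-wise log-softmax; the correct "class" for row i is column i (the matched pair)
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))


def clip_contrastive_loss(image_feats, text_feats, logit_scale=100.0):
    """Symmetric InfoNCE loss over a batch of n paired (image, text) features."""
    img = image_feats / np.linalg.norm(image_feats, axis=1, keepdims=True)
    txt = text_feats / np.linalg.norm(text_feats, axis=1, keepdims=True)
    logits = logit_scale * img @ txt.T          # (n, n) scaled cosine similarities
    # Average of image-to-text (rows) and text-to-image (columns) cross-entropy
    return 0.5 * (_diag_cross_entropy(logits) + _diag_cross_entropy(logits.T))
```

When every image embedding points in the same direction as its own caption and away from the others, the diagonal dominates and the loss approaches zero; shuffling the captions makes the loss large.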

The traditional NeRF scheme requires multiple photographs of a scene to achieve 360° visual reconstruction. In contrast, the Dream Fields [36] algorithm used in this paper requires no photos to generate 3D models and can produce entirely new 3D content. The algorithm is guided by a deep neural network (DNN) that produces geometric and color information from the user’s textual description of the 3D object, with some simple adjustments. During optimization, 2D renderings from multiple viewpoints serve as the supervisory signal; once training is complete, a 3D model is obtained and new views can be synthesized. The role of the CLIP multimodal pre-training model is to evaluate how well the rendered images match the text. After the text is fed into the network, the untrained NeRF model renders a view from a random single perspective, and CLIP evaluates how well the rendered image matches the text. In other words, as shown in Figure 3, NeRF renders an image from a random pose, and CLIP measures the similarity between the text description $y$ and the image rendered with NeRF parameters $\theta$ and camera pose (position and orientation) $p$. This process is repeated 20,000 times from different views until a 3D model matching the text description is generated. The corresponding loss function is

$$\mathcal{L}_{\text{total}}(\theta, p, y) = \mathcal{L}_{\text{CLIP}}(\theta, p, y) + \lambda\, \mathcal{L}_{T}(\theta, p),$$

$$\mathcal{L}_{\text{CLIP}}(\theta, p, y) = -\,g\big(R(\theta, p)\big)^{\top} h(y), \qquad \mathcal{L}_{T}(\theta, p) = -\min\big(\tau,\ \operatorname{mean}\,T(\theta, p)\big),$$

where $R(\theta, p)$ is the image rendered by NeRF, $g(\cdot)$ is the image encoder and $h(\cdot)$ is the text encoder (both producing normalized embeddings), $T(\theta, p)$ is the transmittance of the rendered rays, $\tau$ is a target transmittance, and $\lambda$ weights the two terms. The $\mathcal{L}_{\text{CLIP}}$ objective maximizes the cosine similarity of the rendered-image embedding to the text embedding, and the $\mathcal{L}_{T}$ objective maximizes the average transmittance (up to $\tau$). Inspired by Dream Fields, this paper leverages prior knowledge from large pre-trained models to generate 3D digital assets more effectively. With Dream Fields as the baseline, rendered images are encoded by a CLIP image encoder and compared with the text input encoded by a CLIP text encoder. To support Chinese prompts, we replaced CLIP with Taiyi-CLIP [37], a visual-language model that uses Chinese-RoBERTa-wwm [38] as the language encoder and the vision transformer (ViT) [39] from CLIP as the vision encoder. Its developers froze the vision encoder and tuned the language encoder to speed up and stabilize pre-training, and used Noah-Wukong [40] and Zero-Corpus [41] as the pre-training datasets. The Wukong dataset contains 100 million image–text pairs collected from the web and was compiled from a query list of 200,000 words to cover a sufficiently diverse set of visual concepts. The encoded text input is mapped by the CLIP model into the shared image–text embedding space, and this embedding is used in the loss function. For each text prompt, a new NeRF model must be trained; it is optimized on 2D renderings from multiple viewpoints, and once training is complete it yields a 3D model from which new perspectives can be synthesized. The role of CLIP remains to evaluate how well the generated images match the text.
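For a single rendered view, the Dream Fields objective can be evaluated as in the NumPy sketch below, following the published formulation $\mathcal{L}_{\text{CLIP}} = -g(R(\theta, p))^{\top} h(y)$ and $\mathcal{L}_T = -\min(\tau, \operatorname{mean}\,T(\theta, p))$. It is a hedged illustration: the embeddings and transmittance map are passed in as arrays rather than produced by Taiyi-CLIP and a NeRF renderer, no gradients are computed, and the defaults for `tau` and `lam` are arbitrary placeholders, not the settings used in this work.

```python
import numpy as np


def dream_fields_loss(image_emb, text_emb, transmittance, tau=0.8, lam=0.5):
    """Return (L_total, L_CLIP, L_T) for one rendered view (tau and lam are illustrative)."""
    g = image_emb / np.linalg.norm(image_emb)      # normalized image embedding g(R(theta, p))
    h = text_emb / np.linalg.norm(text_emb)        # normalized text embedding h(y)
    l_clip = -float(g @ h)                         # negative cosine similarity
    # Rewards mean transmittance only up to tau, discouraging spurious floating density
    l_t = -min(tau, float(np.mean(transmittance)))
    return l_clip + lam * l_t, l_clip, l_t
```

In a full optimization loop this loss would be computed for a freshly sampled random pose at every step and minimized with respect to the NeRF parameters $\theta$ through an automatic-differentiation framework.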

5.2. Blockchain Encryption Service

A 3D printing project passes through several stages: from concept, to CAD file, to generated design (if available), to actual 3D printing. Each of these steps is a loophole through which the process could be compromised or the design stolen, putting the company’s intellectual property at risk. Every project, from start to finish, can be carried out on a blockchain, from project communication through production and data transfer to 3D printing and delivery. Everything would be accessible on the chain, and each party would have all the data. In this context, “blockchain infrastructure” refers primarily to off-chain, cross-chain, off-chain management, and off-chain certification facilities, which serve as the foundation for communication between blockchains [42]. As shown in Figure 5, the 3D-printing file is first processed with a cryptographic hash algorithm, which derives a unique hash value, a digital fingerprint, from the file’s content. The blockchain stores this hash value, which verifies the authenticity of the file, rather than the file itself. In the second step, the hash value (the digital fingerprint) is uploaded to the blockchain. Finally, when someone wants to print the 3D content, the service uploads key printing information, such as the operator and location, to the blockchain. A blockchain transaction ID is then generated, through which the origin of each operation on the 3D content can be traced. Trusted printers can communicate with the blockchain through so-called Secure Elements [43] installed on AM machines.
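The fingerprint-and-trace workflow described above can be illustrated with the Python sketch below. It is a toy, hash-chained append-only log standing in for a blockchain, not a real blockchain client: consensus, digital signatures, and Secure Elements are out of scope, and the class name and record fields (`file_sha256`, `operator`, `location`) are hypothetical. SHA-256 is assumed as the hash algorithm.

```python
import hashlib
import json

GENESIS = "0" * 64


def fingerprint(data: bytes) -> str:
    """Digital fingerprint of a 3D-printing file (SHA-256 hex digest)."""
    return hashlib.sha256(data).hexdigest()


class ToyLedger:
    """Append-only log in which each entry's ID hashes the previous ID plus the record."""

    def __init__(self):
        self.blocks = []

    @staticmethod
    def _tx_id(prev: str, record: dict) -> str:
        body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
        return hashlib.sha256(body.encode()).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.blocks[-1]["tx_id"] if self.blocks else GENESIS
        tx_id = self._tx_id(prev, record)
        self.blocks.append({"prev": prev, "record": record, "tx_id": tx_id})
        return tx_id                              # plays the role of a transaction ID

    def verify_chain(self) -> bool:
        """Recompute every link; any edited record breaks the chain."""
        prev = GENESIS
        for b in self.blocks:
            if b["prev"] != prev or self._tx_id(prev, b["record"]) != b["tx_id"]:
                return False
            prev = b["tx_id"]
        return True

    def is_registered(self, data: bytes) -> bool:
        fp = fingerprint(data)
        return any(b["record"].get("file_sha256") == fp for b in self.blocks)


ledger = ToyLedger()
model = b"solid toy_car ... endsolid toy_car"     # placeholder file content
tx1 = ledger.append({"event": "register", "file_sha256": fingerprint(model), "designer": "alice"})
tx2 = ledger.append({"event": "print", "file_sha256": fingerprint(model),
                     "operator": "op-7", "location": "node-a"})
```

Only the digest is recorded, so a verifier can check a received file against the ledger without the file itself ever being stored on-chain.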

The characteristics of blockchain and their relevance to AM are shown in Table 2. The most significant advantage of applying blockchain technology to AM is the development of trust. Designers would no longer need to worry about their designs being used illegally and could instead focus on improving their models. Individual users could choose how to obtain files, either paying per print or gaining access to the files and downloading them directly, both at relatively lower prices and with full disclosure of the models’ origin. On-demand production can also be environmentally friendly and potentially carbon neutral, and manufacturers could produce locally, close to their customers, thereby reducing storage and transportation costs. In addition to physical goods, brand manufacturers could offer their customers printable files for customization, accessories, and replacement parts. In short, blockchain for 3D printing could make it possible to trade 3D printing in the form of “tokens” and promote 3D printing as a technology more widely.

5.3. Cloud Management Service

In theory, 3D printing is the ideal production method for cloud manufacturing. Because digital files are easy to transfer and are not bound by geography, they can be printed anywhere with only a 3D printer and suitable materials. The cloud-based 3D-printing product personalization service platform uses a browser/server model that allows users to access the platform through a web browser. The Internet serves as the medium between the browser and the server, enabling the cloud service platform to provide a variety of cloud 3D-printing services. The platform’s architecture consists primarily of three layers [30]: the virtual resources layer, the 3D-printing-resource layer, and the technology support layer. The technology support layer is the foundation of the cloud service platform. It serves as the platform’s management and execution framework and is intended to provide consistent, smooth technical support for the operation of the cloud manufacturing service platform. The primary function of the virtual resources layer is to abstract and simplify the 3D-printing resources connected to the cloud service platform: the platform’s cloud computing technology describes the various physical 3D-printing resources as virtual resources [31], yielding virtual data resources. A virtual cloud pool is created when these virtual data resources are encapsulated and published to the resource service center module of the cloud platform. Users can then select the printing resources they require from the cloud. For the personalized service platform, the 3D-printing resource layer provides software, material, and equipment resources. The purpose of the user interface layer is to provide the cloud service platform with user-friendly application interfaces, allowing users to invoke various cloud services freely.
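The virtual resources layer can be sketched in Python, assuming that each physical printer reports its technology, materials, build volume, and busy state. `PhysicalPrinter`, `VirtualResource`, and `ResourcePool` are hypothetical names: `publish` encapsulates a printer into a uniform capability record in the virtual cloud pool, and `match` plays the role of a user selecting the printing resources they need.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PhysicalPrinter:
    """A physical 3D printer as reported by a connected manufacturing node."""
    node_id: str
    technology: str           # e.g. "FDM" or "SLA"
    materials: List[str]
    build_volume_mm: tuple    # (x, y, z)
    busy: bool = False


@dataclass
class VirtualResource:
    """Hypothetical device-independent description published to the cloud pool."""
    resource_id: str
    capabilities: dict
    available: bool


@dataclass
class ResourcePool:
    resources: List[VirtualResource] = field(default_factory=list)

    def publish(self, printer: PhysicalPrinter) -> VirtualResource:
        # Encapsulate: hide device specifics behind a uniform capability record.
        vr = VirtualResource(
            resource_id=f"vr-{printer.node_id}",
            capabilities={"technology": printer.technology,
                          "materials": set(printer.materials),
                          "build_volume_mm": printer.build_volume_mm},
            available=not printer.busy,
        )
        self.resources.append(vr)
        return vr

    def match(self, technology: str, material: str,
              part_size_mm: tuple) -> List[VirtualResource]:
        """Return available resources able to print a part of the given size."""
        out = []
        for vr in self.resources:
            cap = vr.capabilities
            fits = all(p <= v for p, v in zip(part_size_mm, cap["build_volume_mm"]))
            if (vr.available and cap["technology"] == technology
                    and material in cap["materials"] and fits):
                out.append(vr)
        return out
```

Keeping the capability record separate from the device lets the pool mix printers from many nodes while users query only by what they need to print.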

6. Case Study

Recreational equipment manufacturing was selected as the practice object to verify the effectiveness of the product customization framework proposed in this paper. The toy manufacturing industry is an integral part of the traditional manufacturing industry, with a high demand for labor and a large export volume. Surveys projected the 2020 toy and game market at US$135 billion [44]. 3D printing creates physical objects from geometric representations by successively adding material. It can respond effectively to customers’ needs and help achieve service-oriented manufacturing. In China, the traditional toy market includes interactive electronic toys with high technology content, high-tech intelligent toys, and educational toys; these mainstream products can foster children’s imagination, creativity, and hands-on skills. The traditional peak season for the toy industry is June to October each year. Thanks to government measures to promote consumption and to the upgrading of urban consumption, China’s toy retail market has maintained steady growth. The main export markets are the United States, the United Kingdom, Japan, and other countries; exports to the United States amounted to US$8.57 billion in 2021, an increase of 6.8% over the previous year, accounting for 25.6% of China’s total toy exports. According to a Made-in-China research report, China’s toy exports in November amounted to US$2.44 billion, up 21.18%.

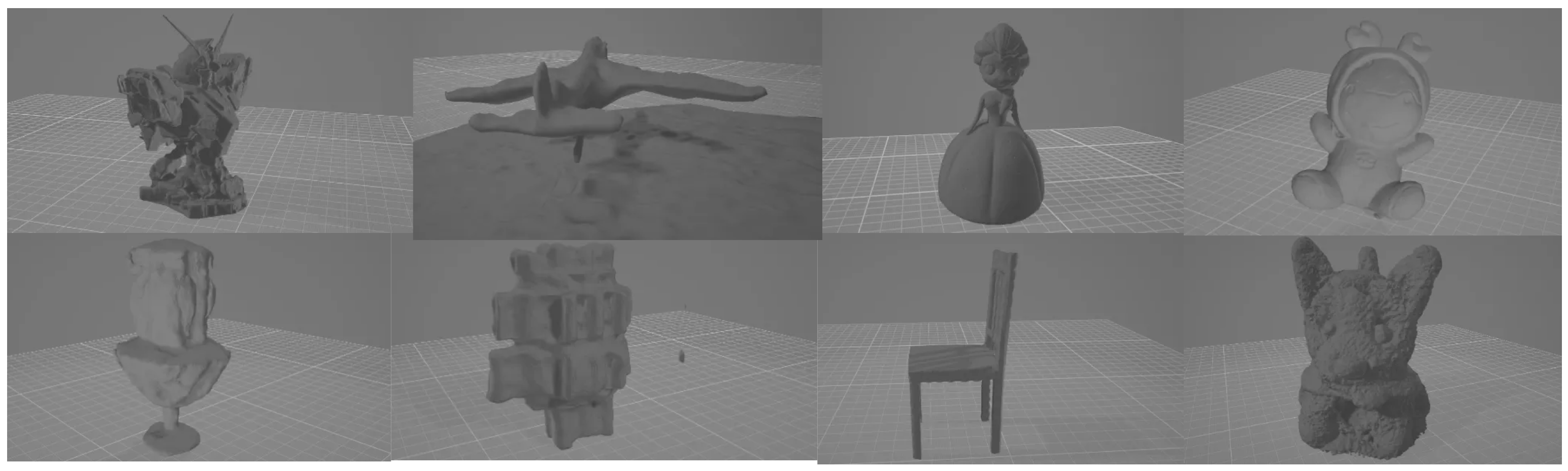

In this paper, the images used to build the dataset were obtained by recording videos with mobile phones and then extracting frames. The dataset consists of 86 different scenes, most of which were captured in four rotations at 90-degree intervals, covering the model through 360 degrees. Producing the dataset involves three steps. First, feature matching is performed on the images to obtain the camera poses. Second, the recovered poses are converted into the LLFF format. Finally, the required files are uploaded to the corresponding NeRF folder and the configuration file is set up. LLFF-format data store the corresponding image parameters: camera positions and camera parameters are kept in a single, simple file that is easy to read in Python. The NeRF source code also includes the configuration and modules needed to train directly on LLFF-format datasets, making it easy for researchers to use.

COLMAP is a general-purpose Structure-from-Motion (SfM) and Multi-View Stereo (MVS) pipeline with graphical and command-line interfaces. It provides a wide range of functions for reconstructing ordered and unordered image collections. We used COLMAP’s sparse reconstruction to estimate and recover the position and orientation of each photo, and then converted these poses into the LLFF format used for NeRF training. The whole process is end-to-end trainable with the dataset as input, and the trained NeRF-based model is used to build an end-to-end module.

Users can provide multiple photos of the same object taken from different angles, for example one image per angle and three to four angles in total. To reconstruct regions that cannot be photographed in the object’s normal pose, such as the bottom, users need to provide additional pictures of the model lying on its side. When moving around an object, adjacent photos must overlap by at least 70%. We recommend taking at least 30 photos, since more pictures help generate higher-quality 3D models.
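The pose-conversion step can be sketched in Python with NumPy. The sketch assumes COLMAP's convention of storing each image's world-to-camera rotation as a unit quaternion (w, x, y, z) plus a translation, and LLFF's `poses_bounds.npy` layout, in which each row holds a flattened 3×5 matrix (a 3×4 camera-to-world pose followed by a [height, width, focal] column) and then the near/far depth bounds. The column reordering follows our reading of LLFF's `imgs2poses` conversion; the function names are hypothetical, and computing the depth bounds (LLFF derives them from the sparse points) is left out.

```python
import numpy as np


def qvec_to_rotmat(q):
    """Unit quaternion (w, x, y, z), as stored by COLMAP, to a 3x3 rotation matrix."""
    w, x, y, z = q
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def colmap_to_c2w(qvec, tvec):
    """COLMAP stores world-to-camera (R, t); invert to camera-to-world [R^T | -R^T t]."""
    R = qvec_to_rotmat(qvec)
    t = np.asarray(tvec, dtype=np.float64)
    return np.hstack([R.T, (-R.T @ t)[:, None]])  # shape (3, 4)


def to_llff_axes(c2w):
    """Reorder axes from COLMAP's [right, down, forward] to LLFF's [down, right, backward]."""
    return np.stack([c2w[:, 1], c2w[:, 0], -c2w[:, 2], c2w[:, 3]], axis=1)


def pack_poses_bounds(c2ws, hwf, bounds):
    """Build the (N, 17) poses_bounds array: flattened 3x5 [c2w | hwf] plus (near, far)."""
    rows = []
    for c2w, (near, far) in zip(c2ws, bounds):
        pose = np.hstack([c2w, np.asarray(hwf, dtype=np.float64)[:, None]])  # (3, 5)
        rows.append(np.concatenate([pose.ravel(), [near, far]]))  # 15 + 2 values
    return np.stack(rows)
```

The resulting array would then be written with `np.save("poses_bounds.npy", arr)` in the scene folder next to its `images/` directory, which is where NeRF's LLFF data loader looks for it.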

For the text-to-3D module, we use a PyTorch implementation of the original Dream Fields algorithm as the baseline. We mainly change the back end of Dream Fields from the original NeRF to our model and replace the original CLIP encoder with the Taiyi-CLIP [37] encoder. In addition, to improve the quality of the generated content, we apply R-Drop [45] for regularization in the forward propagation of the pre-trained CLIP model; these methods enhance the expressiveness and generalization ability of our model. We used search engines to crawl about 10,000 image–text pairs related to the toy manufacturing industry to form the fine-tuning dataset. Each image has up to five associated descriptions. The model takes a batch of captions and a batch of images, which are passed through the CLIP text encoder and image encoder, respectively. Training uses contrastive learning to learn a joint embedding of images and captions. In this embedding space, images lie close to their own descriptions, and similar images and similar descriptions lie close to each other; conversely, images and the descriptions of other images are pushed apart. To regularize training and prevent overfitting given the small size of the dataset, we used both image and text augmentation. Image augmentation was applied online using the built-in transforms of PyTorch’s torchvision package: random cropping, random resized cropping, color jitter, and random horizontal and vertical flipping.
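The contrastive objective described above, and one way R-Drop could be combined with it, can be sketched in NumPy. This is an illustration under stated assumptions, not the training code: `clip_loss` is the standard symmetric cross-entropy over image–caption similarity logits with a fixed temperature (CLIP itself learns this scale), and `clip_rdrop_loss` assumes the logits of two dropout-perturbed forward passes over the same batch are available, adding R-Drop's symmetric KL term between them. Where exactly R-Drop is applied inside the CLIP forward pass is not specified in the text, so this placement is an assumption.

```python
import numpy as np


def log_softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # shift for numerical stability
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def clip_logits(img_emb, txt_emb, temperature=0.07):
    """Cosine-similarity logits between every image and every caption in a batch."""
    img = img_emb / np.linalg.norm(img_emb, axis=1, keepdims=True)
    txt = txt_emb / np.linalg.norm(txt_emb, axis=1, keepdims=True)
    return img @ txt.T / temperature  # (B, B); the diagonal holds matching pairs


def clip_loss(logits):
    """Symmetric InfoNCE: image->text and text->image cross-entropy on the diagonal."""
    idx = np.arange(logits.shape[0])
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()
    return (loss_i2t + loss_t2i) / 2


def sym_kl(logits_a, logits_b, axis=1):
    """0.5 * (KL(P_a || P_b) + KL(P_b || P_a)) along `axis`, averaged over the batch."""
    la, lb = log_softmax(logits_a, axis), log_softmax(logits_b, axis)
    pa, pb = np.exp(la), np.exp(lb)
    kl_ab = (pa * (la - lb)).sum(axis=axis)
    kl_ba = (pb * (lb - la)).sum(axis=axis)
    return 0.5 * (kl_ab + kl_ba).mean()


def clip_rdrop_loss(logits_pass1, logits_pass2, alpha=1.0):
    """R-Drop: task loss on both dropout passes plus a consistency term between them."""
    task = clip_loss(logits_pass1) + clip_loss(logits_pass2)
    # Consistency in both directions: image->text rows and text->image columns.
    consistency = (sym_kl(logits_pass1, logits_pass2, axis=1)
                   + sym_kl(logits_pass1, logits_pass2, axis=0)) / 2
    return task + alpha * consistency
```

In an actual PyTorch training loop, the two forward passes would run with dropout enabled so that their outputs differ, and `alpha` weights the consistency term against the contrastive loss.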

As shown in Tables 3 and 4, we performed experiments to measure the print time, quality, and corresponding actual printing time of models generated from different inputs. The results indicate that the models generated within this framework are reliable in terms of efficiency and quality. Some results are shown in Figure 6.

The resource scheduling interface of the cloud platform follows a development model that separates the front end from the back end. The back end of the cloud platform was developed with the Spring Boot, MyBatis, and Spring Cloud frameworks, and data are persisted in MongoDB and MySQL databases. The cloud platform runs on a cloud server with 16 GB of memory and eight NVIDIA V100 GPUs. We deployed the pre-trained large model described above on a TensorFlow Serving (TF-Serving) server, which deploys the model automatically given the incoming port and model path. The server hosting the model therefore does not need a Python environment, even if, for example, the model was trained in Python. The application service then calls the model server directly via gRPC.
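The call from the application service to the model server can be sketched with Python's standard library. Note that the platform uses gRPC; this sketch swaps in TF-Serving's REST predict endpoint (`POST /v1/models/<name>:predict` with an `instances` payload), which is easier to show without generated stubs. The model name `text2shape` and the host are hypothetical. A server started with, for example, `tensorflow_model_server --port=8500 --rest_api_port=8501 --model_name=text2shape --model_base_path=/models/text2shape` would expose gRPC on port 8500 and REST on port 8501.

```python
import json
import urllib.request


def build_predict_request(host, model_name, instances, rest_port=8501):
    """Return the URL and JSON body for TF-Serving's REST predict API."""
    url = f"http://{host}:{rest_port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return url, body


def predict(host, model_name, instances, rest_port=8501, timeout=30.0):
    """POST the request and return the "predictions" field of the response."""
    url, body = build_predict_request(host, model_name, instances, rest_port)
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["predictions"]
```

Because the client only speaks HTTP and JSON, the application service needs neither TensorFlow nor a Python training environment on its side, which mirrors the decoupling described above.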

The 3D models in the platform’s 3D model file library are uploaded by designers on the cloud platform. These designers create 3D models with market value according to their own capabilities and market demand and then upload them to the platform. The cloud platform is responsible for optimizing and managing these model files, and users can quickly search for suitable ones. Management of the 3D model file library mainly covers deduplication, classification, evaluation, and security management of the model files.
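Deduplication of uploaded models can be sketched in Python. The sketch assumes ASCII STL uploads and treats two files as duplicates when they contain the same set of triangles, regardless of facet order, solid name, whitespace, or number formatting. `stl_canonical_key` and `deduplicate` are hypothetical helpers; detecting copies that have been translated, rotated, or rescaled would require a geometry-aware comparison beyond this sketch.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def stl_canonical_key(text: str, decimals: int = 6) -> str:
    """Hash an ASCII STL by its triangle set; facet normals and names are ignored."""
    verts = []
    for line in text.splitlines():
        parts = line.split()
        if parts and parts[0] == "vertex":
            # Round to absorb formatting differences; "+ 0.0" turns -0.0 into 0.0.
            verts.append(tuple(round(float(v), decimals) + 0.0 for v in parts[1:4]))
    tris = []
    for i in range(0, len(verts) - len(verts) % 3, 3):
        tri = verts[i:i + 3]
        k = tri.index(min(tri))
        # Rotate so the smallest vertex comes first; rotation keeps the winding order.
        tris.append(tuple(tri[k:] + tri[:k]))
    tris.sort()  # facet order does not matter
    return hashlib.sha256(repr(tris).encode("utf-8")).hexdigest()


def deduplicate(uploads: Iterable[Tuple[str, str]]):
    """Keep the first upload of each distinct model.

    Returns (kept, duplicates): kept maps key -> first file name, and
    duplicates maps key -> names of later uploads with the same triangles.
    """
    kept: Dict[str, str] = {}
    duplicates: Dict[str, List[str]] = defaultdict(list)
    for name, text in uploads:
        key = stl_canonical_key(text)
        if key in kept:
            duplicates[key].append(name)
        else:
            kept[key] = name
    return kept, dict(duplicates)
```

Preserving the winding order means a model whose facets are flipped inside out is not treated as a duplicate, since it would print differently.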

The overall framework of the cloud platform is shown in Figure 7. It supports data processing throughout the 3D printing process, including data format conversion, support design, slicing, print path planning, structural analysis, and model optimization. These steps are interdependent and together determine both the printing efficiency and the quality of the finished product. In addition, to improve the computational efficiency of the support-generation process in 3D printing, a parallelized slicing method, model placement orientation optimization and its parallelization method [46], and GPU-based parallelized support generation were successfully applied to this platform.
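The parallelized slicing step can be illustrated with a minimal Python sketch that slices a triangle mesh layer by layer. It is not the method of [46]; it only shows why slicing parallelizes well: each layer's plane–triangle intersections depend on no other layer. The mesh is assumed to be a list of triangles with (x, y, z) vertices, cutting planes are placed at mid-layer heights, vertices lying exactly on a plane are skipped, and segments are not yet joined into closed contours.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]
Segment = Tuple[Tuple[float, float], Tuple[float, float]]


def slice_triangle(tri: Triangle, z: float) -> Optional[Segment]:
    """Intersect one triangle with the plane Z = z; return a 2D segment or None."""
    pts = []
    for i in range(3):
        p, q = tri[i], tri[(i + 1) % 3]
        if (p[2] - z) * (q[2] - z) < 0:  # edge strictly crosses the plane
            t = (z - p[2]) / (q[2] - p[2])
            pts.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return (pts[0], pts[1]) if len(pts) == 2 else None


def slice_layer(triangles: Sequence[Triangle], z: float) -> List[Segment]:
    """All intersection segments of the mesh with one horizontal plane."""
    segs = (slice_triangle(t, z) for t in triangles)
    return [s for s in segs if s is not None]


def slice_mesh(triangles: Sequence[Triangle], layer_height: float, workers: int = 4):
    """Slice every layer independently and return [(z, segments), ...]."""
    zs = [v[2] for tri in triangles for v in tri]
    zmin, zmax = min(zs), max(zs)
    n = max(1, math.ceil((zmax - zmin) / layer_height - 1e-9))
    # Mid-layer planes avoid cutting exactly through vertices at layer boundaries.
    heights = [zmin + (i + 0.5) * layer_height for i in range(n)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        layers = list(pool.map(lambda z: slice_layer(triangles, z), heights))
    return list(zip(heights, layers))
```

A thread pool keeps the sketch simple, but CPython's global interpreter lock limits its speedup on pure-Python work; a process pool or a GPU kernel, as the platform uses for support generation, would be the practical choices for real meshes.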

7. Conclusions

In SM, providing a user-friendly approach to product customization has been a focus of research. As an early attempt to apply NeRF and large pre-trained vision-language models such as CLIP, we propose an efficient product customization framework in the SM paradigm. It provides a new method of collaborative product design that can efficiently address problems in current manufacturing systems, such as the accuracy of 3D modeling, security, supervision, anti-counterfeiting, and efficient allocation of resources. However, the key technologies used in this paper, such as neural volume rendering and multimodal data processing, still need further study and improvement. The main limitations can be summarized as follows. First, choosing a suitable resolution is very important for printing a high-quality model: too low a resolution inevitably degrades the finished print and leaves its surface uneven. With the model presented in this paper, it is currently difficult to obtain high-resolution geometry or textures for 3D models. To address this issue, a coarse-to-fine optimization approach could be adopted, in which diffusion priors at multiple resolutions optimize the 3D representation, yielding view-consistent geometry and high-resolution detail. Second, in the current implementation of the 3D model generation algorithm, a NeRF network must be retrained for each text prompt, which makes model generation slow and requires a large amount of GPU resources. In the future, an existing generic mesh model could be loaded and then iteratively modified by text prompts to generate the desired 3D model. A forward-looking production framework that anticipates such future integration would also be instructive. Accordingly, we believe this work is a significant breakthrough toward solving the core problems of 3D modeling in SM.