Abstract

This work addresses the problem of dynamic consensus, which consists of estimating the dynamic average of a set of time-varying signals distributed across a communication network of multiple agents. This problem has many applications in robotics, with formation control and target tracking being some of the most prominent ones. In this work, we propose a consensus algorithm to estimate the dynamic average in a distributed fashion, where discrete sampling and event-triggered communication are adopted to reduce the communication burden. Compared to other linear methods in the state of the art, our proposal can obtain exact convergence under continuous communication even when the dynamic average signal is persistently varying. Contrary to other sliding-mode approaches, our method reduces chattering in the discrete-time setting. The proposal is based on the discretization of established exact dynamic consensus results that use high-order sliding modes. The convergence of the protocol is verified through formal analysis, based on homogeneity properties, as well as through several numerical experiments. Concretely, we numerically show that an advantageous trade-off exists between the maximum steady-state consensus error and the communication rate. As a result, our proposal can outperform other state-of-the-art approaches, even when event-triggered communication is used in our protocol.

1. Introduction

The problem of dynamic consensus, also referred to as dynamic average tracking, consists of computing the average of a set of time-varying signals distributed across a communication network. This problem has recently attracted a lot of attention due to its applications in general cyber-physical systems and robotics. For instance, dynamic consensus algorithms can be used to coordinate multi-robot systems, formation control, and target tracking, as in [1,2]. A more detailed overview of the common problems in the field of multi-robot coordination can be found in [3].

Many dynamic consensus approaches in continuous time exist in the literature [4]. For example, a high-order linear dynamic consensus approach is proposed in [5], which is capable of achieving arbitrarily small steady-state errors when persistently varying signals are used by tuning a protocol parameter. The protocol in [6] adopts a similar linear approach and analyses its performance against bounded cyber-attacks in the communication network. In [7], another linear consensus protocol is proposed to address the problem of having active and passive sensing nodes with time-varying roles. While these approaches obtain good theoretical results in continuous time, only discrete-time communication is possible in practice. In contrast, other works are designed directly in a discrete-time setting where local time-varying signals are sampled with a fixed sampling step shared across the network. For example, a high-order discrete-time linear dynamic consensus algorithm is proposed in [8] with similar performance to those in continuous time. The proposal in [9] extends the previous work to ensure it is robust to initialization errors. Similar ideas are applied to distributed convex optimization in [10].

Besides having discrete communication, maintaining a low communication burden in the network is desirable from the perspectives of both power consumption and bandwidth usage. For this purpose, an event-triggered approach can be used to decide when agents should communicate instead of doing so at every sampling instant. Several works use event-triggered conditions in which the estimate for the average signal for a particular agent is only shared when it changes sufficiently with respect to the last transmitted value. For example, an event-triggered communication rule is applied to a continuous-time dynamic consensus problem in [11]. In [12], an event-triggered dynamic consensus approach is analysed for homogeneous and heterogeneous multi-agent systems. These ideas have also proven helpful in distributed state estimation. For instance, deterministic [13] and stochastic [14] event-triggering rules have been used to construct distributed Kalman filters. The proposal in [15] borrows these ideas to construct an extended Kalman filter. Distributed set-membership estimators have also been studied in the context of event-triggered communication [16]. Event-triggered schemes for estimation typically result in a trade-off between the quality of the estimates and the number of triggered communication events, as pointed out in [17]. This allows the user to tune the parameters of the event-triggering condition to reduce communication while achieving a desired estimation error for the consensus estimates. A more in-depth look into event-triggered strategies can be found in [18].

However, most event-triggered and discrete-time approaches for dynamic consensus are based on linear techniques, even in recent works [15]. This means that the estimates cannot be exact when the dynamic average is persistently varying, e.g., when the local signals are sinusoidal. Concretely, these approaches typically attain a bounded steady-state error, which can become arbitrarily small by increasing some parameters in the algorithm [4]. However, as is typical with high-gain arguments, increasing such parameters can reduce the algorithm’s robustness when only imperfect measurements or noisy local signals are available [19]. In contrast, exact dynamic consensus algorithms can achieve exact convergence towards the dynamic average under reasonable assumptions for the local signals, including the persistently varying case. For example, a discontinuous First-Order Sliding Mode (FOSM) approach is used in [20] to achieve exact convergence in this setting. Similar ideas are applied in [21] for continuous-time state estimation. The work in [22] proposes a High-Order Sliding Mode (HOSM) approach for exact dynamic consensus, which was then extended in [23] to account for initialization errors. Note that these approaches rely mainly on sliding modes to achieve such exact convergence. As a result, mostly continuous-time analysis is available for these protocols.

Unfortunately, the performance of sliding modes deteriorates in the discrete-time setting due to so-called chattering ([24], Chapter 3). In particular, the performance of the FOSM approaches for dynamic consensus in [20,21] deteriorates by an amount proportional to the time step in the discrete-time setting. A standard solution to attenuate chattering is to use HOSM [24]. To the best of our knowledge, the only methods that use HOSM for dynamic consensus are [22,23]. However, neither discrete-time analysis nor event-triggered extensions of these approaches have been discussed so far in the literature.

As a result of this discussion, we contribute a novel distributed dynamic consensus algorithm with the following features. First, the algorithm uses discrete-time samples of the local signal. We also propose the adoption of event-triggered communication between agents to reduce the communication burden. Our proposal is consistently exact, which means that the exact estimation of the average signal is obtained when the time step approaches zero and when events are triggered at each sampling step. We show the advantage of our method against linear approaches, in which the permanent consensus error cannot be eliminated. Moreover, in the general discrete-time case, we show that our proposal reduces chattering when compared to exact FOSM approaches.

This article is organized as follows. Section 2 provides a description of the problem of interest and our proposal to solve the consensus problem in a discrete-time setting using event-triggered communication. A formal analysis of the convergence of our protocol can be found in Section 3. In Section 4, we validate our proposal and compare it to other methods through simulation experiments. Furthermore, Section 5 provides a qualitative discussion comparing our proposal to other related works. The conclusions and future work are presented in Section 6.

2. Problem Statement and Protocol Proposal

In this Section, we describe the problem of interest and our proposed consensus protocol to solve it using event-triggered communication between the agents.

2.1. Notation

Let , then . Let , the matrix with unit elements and the identity matrix. Let if if and . Moreover, if , let for and . When , then for . Let represent the nth order derivative of x. The notation , with some , refers to the interval .

2.2. Problem Statement

Consider a multi-agent system distributed in a network with node set and edge set , modelling communication links between agents. In the following, is an undirected connected graph of agents characterised by an adjacency matrix and equally described by an incidence matrix , choosing an arbitrary orientation ([25], Chapter 8). Let denote the index set of neighbours for an agent .

We consider that each agent has access to a local time-varying signal at some fixed sampling instants where is the sampling period. We write for simplicity. The goal is for all the agents to estimate the dynamic average signal [4]:

in a distributed fashion, by sharing information between them.
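As a point of reference for the problem statement, the following minimal sketch evaluates the sampled dynamic average in a centralised way, that is, with access to every local signal at once. This is exactly what no single agent can do in the distributed setting, so the sketch only serves as a ground truth to compare consensus estimates against. The function name `dynamic_average`, the three example signals, and the sampling period are illustrative assumptions and do not come from the original experiments.

```python
import math


def dynamic_average(signals, k, delta):
    """Average of all local signals at the sampling instant t = k * delta."""
    t = k * delta
    return sum(u(t) for u in signals) / len(signals)


# Hypothetical local signals: amplitudes, frequencies and phases are illustrative only.
signals = [
    lambda t: 1.0 * math.sin(0.5 * t),
    lambda t: 0.5 * math.sin(1.0 * t + 1.0),
    lambda t: 2.0 * math.cos(0.2 * t),
]

delta = 0.01  # assumed sampling period
avg_at_start = dynamic_average(signals, 0, delta)
```

Since the average changes at every sampling instant, any estimator has to track a moving target rather than converge to a constant.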

2.3. Protocol Proposal

Our proposal to solve the multi-agent consensus problem is a protocol that adopts event-triggered communication to reduce the communication burden in the network of agents. It also uses High-Order Sliding Modes to achieve precise consensus results.

Event-triggered communication: To reduce the communication burden in the network, an event-triggered approach is used to decide when an agent communicates with its neighbours. Denote with the estimation that agent has for the signal at . Then, the communication link is updated at the sequence of instants provided by the recursive triggering rule:

where is a design parameter and by setting without loss of generality. The interpretation of (2) is that both agents for a link have stored local copies of each other’s estimation from the last time the link was updated. If agent i detects that its current estimation satisfies , it then sends to agent j, which responds by sending its current estimation back. Subsequently, both agents update . A similar protocol occurs when agent j detects .
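The handshake described above can be sketched for a single link as follows. This is only an illustration under stated assumptions: the class name `Link`, its fields, and the use of a non-strict inequality against the threshold `eps` are hypothetical choices, since the exact form of the triggering rule (2) is not reproduced here. Under this choice, a zero threshold fires at every step, which would correspond to communicating at every sampling instant.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """State shared by the two agents i and j of one communication link."""

    eps: float            # triggering threshold (design parameter)
    last_i: float = 0.0   # copy of agent i's estimate at the last link update
    last_j: float = 0.0   # copy of agent j's estimate at the last link update
    messages: int = 0     # total messages exchanged over this link

    def step(self, y_i: float, y_j: float) -> bool:
        """Check the trigger for current estimates y_i, y_j and update the link."""
        # Assumed rule: either agent drifted at least eps from its stored copy.
        triggered = (
            abs(y_i - self.last_i) >= self.eps
            or abs(y_j - self.last_j) >= self.eps
        )
        if triggered:
            # One message in each direction, then both stored copies are refreshed.
            self.last_i, self.last_j = y_i, y_j
            self.messages += 2
        return triggered
```

For example, with `link = Link(eps=0.1)`, the call `link.step(0.05, 0.0)` leaves the link untouched, while `link.step(0.2, 0.0)` refreshes both stored copies and counts two messages.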

The proposed algorithm to estimate in this setting is of the following form. Each agent has internal states from which the local estimation is computed according to:

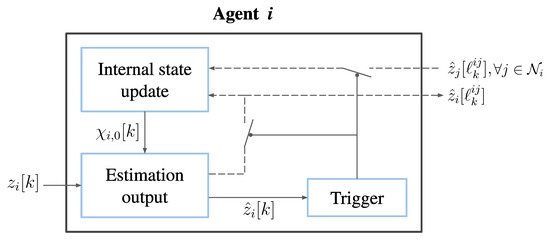

with gains and . The proposal for the exact dynamic consensus algorithm with the event-triggered communication between the agents is summarized in Algorithm 1. Figure 1 illustrates the flow of information for each agent .

Algorithm 1: Exact dynamic consensus with event-triggered communication

Figure 1.

Block diagram for each agent . The internal states that the agent computes are updated using the last transmitted estimates of the agent and its neighbours , which are denoted by and , respectively. The current estimate is computed using the internal state and the local signal . This estimate is evaluated by the event trigger, which decides if the current value should be transmitted to the neighbours.

To ensure the convergence of (3), we require the following assumption for the signals , regardless of the sampling period :

Assumption 1.

Given , then it follows that:

Theorem 1.

Let be a connected graph and Assumption 1 hold for some fixed . Then, for any there exist sufficiently high gains and some , such that if then:

for some constant .

The condition in the previous theorem has been used in other works in the literature [4,22] and is trivially satisfied by setting . The proof of Theorem 1 is provided in Section 3.1.

In addition, if samples of the derivatives are also available, either by assumption or through a differentiation algorithm [26], then additional outputs can be obtained as:

which estimate the derivatives at . The following result regarding the outputs (4) is obtained as a consequence of the proof of Theorem 1 in Section 3.1.

Corollary 1.

Consider the assumptions of Theorem 1 and the outputs in (4). Then, in addition to the conclusion of Theorem 1, there also exist such that:

Remark 1.

While the performance of our proposal in (3) is not exact as a result of Theorem 1, we say that it is consistently exact. This means that the exact convergence is obtained when , even under persistently varying local signals , recovering the performance of the continuous-time protocol in [22].

Remark 2.

Note that if , our proposal in (3) is similar to the approaches in [20,21], which use FOSM. In this case, considering , the error bound performance of the protocol is proportional to Δ as a result of Theorem 1, as expected due to chattering. Moreover, by increasing the order of the sliding modes in (3), the effect of chattering is attenuated by having an error bound performance proportional to , which is smaller than Δ for small sampling steps.
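To make the chattering argument more tangible, the following sketch simulates a discretized first-order sliding-mode consensus iteration with full communication. It is not Algorithm 1 and not the exact protocol of [20,21]: the sign-based update, the path graph, the gain, and the three signals are all assumptions chosen for illustration. Each agent keeps one internal state `x[i]` and outputs `y[i] = u[i] - x[i]`; the antisymmetric edge updates keep the internal states summing to zero, so the outputs always average to the true dynamic average.

```python
import math


def sign(v):
    """Sign of v as -1, 0 or 1."""
    return (v > 0) - (v < 0)


def simulate_fosm(delta, gain=5.0, horizon=20.0):
    """Return the worst consensus error over the second half of the run."""
    edges = [(0, 1), (1, 2)]  # assumed path graph with three agents
    signals = [               # hypothetical local signals with slopes below 1
        lambda t: math.sin(t),
        lambda t: 0.5 * math.cos(0.5 * t),
        lambda t: math.sin(0.3 * t + 1.0),
    ]
    n = len(signals)
    x = [0.0] * n             # internal states, initialised so that they sum to zero
    steps = int(round(horizon / delta))
    worst = 0.0
    for k in range(steps):
        t = k * delta
        u = [s(t) for s in signals]
        y = [u[i] - x[i] for i in range(n)]
        if k >= steps // 2:   # skip the transient
            avg = sum(u) / n
            worst = max(worst, max(abs(y_i - avg) for y_i in y))
        for i, j in edges:
            s = sign(y[i] - y[j])
            x[i] += delta * gain * s  # pushes y[i] towards y[j]
            x[j] -= delta * gain * s  # opposite update keeps sum(x) unchanged
    return worst


fosm_error = simulate_fosm(delta=1e-3)
```

In this sketch every step moves the edge disagreement by an amount of order `delta * gain`, so the outputs cannot settle exactly and instead oscillate in a band whose width scales with the sampling period. Calling `simulate_fosm` with different values of `delta` gives a quick way to observe that dependence.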

Remark 3.

In light of Theorem 1 and Corollary 1, it can be concluded that the event-triggering threshold ε affects the maximum error bound. Hence, its value can be tuned for a particular application according to the magnitude of the admissible consensus error. Moreover, recalling (2), the parameter ε represents the admissible error one node can have in its knowledge of its neighbours’ estimates. For this reason, ε should be small enough to guarantee that the magnitude of the difference is acceptable in comparison to the amplitude of the signal according to the application requirements.

Remark 4.

Theorem 1 ensures the existence of sufficiently high gains such that the convergence of (3) is obtained. However, the proof of the theorem reveals that these gains can be extracted precisely from a continuous-time EDCHO protocol. Hence, we refer the reader to [22], where a design procedure for the EDCHO gains is described in detail based on well-established techniques.

3. Protocol Convergence

In this Section, we provide a formal analysis of the convergence of our consensus protocol. To do so, we introduce three technical lemmas before providing the proof of Theorem 1 in Section 3.1.

First, define and . Moreover, define:

where , as well as . In addition, note that where by construction from (2). Hence, with and .

Thus, the protocol in (3) can be written in vector form as:

for which the condition in Theorem 1 can be written as .

The purpose of the internal variables is to approximate the disagreement between local signals expressed as with recalling that such that if exactly, then all the agents reach consensus towards:

The first step to show that approximates is showing that it is always orthogonal to the consensus direction in the following result.

Lemma 1.

Let be connected and hold . Then, (5) satisfies .

Proof.

The proof follows by strong induction. Note that, from (5) since . Hence, due to the initial condition . Now, use this as an induction base for the rest of the . Assume from the induction hypothesis that with and . Then, from (5):

from which it follows that similarly as before, completing the proof. □
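The mechanism behind this kind of invariance can be checked numerically. The sketch below assumes, as an illustration, that the internal states are updated by adding an incidence matrix times some edge-wise vector; since every column of an incidence matrix contains one `+1` and one `-1`, the sum of the states cannot change. The graph, the helper names, and the edge-wise values are hypothetical and only stand in for whatever the protocol injects along each link.

```python
import math


def incidence_matrix(num_nodes, edges):
    """Incidence matrix with an arbitrary orientation: +1 at the tail, -1 at the head."""
    D = [[0.0] * len(edges) for _ in range(num_nodes)]
    for col, (i, j) in enumerate(edges):
        D[i][col] = 1.0
        D[j][col] = -1.0
    return D


def apply_edge_update(x, D, v):
    """Return x + D v for node states x and edge-wise values v."""
    return [
        x_i + sum(row[c] * v[c] for c in range(len(v)))
        for x_i, row in zip(x, D)
    ]


edges = [(0, 1), (1, 2), (2, 3), (3, 0)]  # assumed ring of four agents
D = incidence_matrix(4, edges)
column_sums = [sum(D[i][c] for i in range(4)) for c in range(len(edges))]

x = [0.0, 0.0, 0.0, 0.0]  # initial states summing to zero
for step in range(100):
    v = [math.sin(step + c) for c in range(len(edges))]  # arbitrary edge values
    x = apply_edge_update(x, D, v)
total = sum(x)
```

Here `column_sums` is identically zero and `total` stays at zero up to floating-point rounding, regardless of the edge-wise values used.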

Now, let the error and recall from Corollary A2 in Appendix B that can be expanded as:

where . Therefore, combining (5) and (6), the error dynamics for can be written as:

using due to . Rearranging (7) leads to:

To show the convergence of the error system in (9), we write an equivalent continuous-time system as follows. First, we extend solutions to (7) as for any according to:

where is an arbitrary vector such that (11) complies with (9) at . Hence, it follows that:

Finally, note that satisfies and hence . Thus, we have:

In the following, we derive some important properties for (13).

Lemma 2.

Given any , the differential inclusion (13) is invariant under the transformation:

Proof.

Write with and note that:

and . The same reasoning applies to the case where . Now, compute the dynamics of the transformed variables in the new time :

which completes the proof. □

Lemma 3.

Let Assumption 1 hold. Hence, if , then there exist sufficiently high gains such that (13) is finite-time stable towards the origin.

Proof.

The proof follows by noting that:

for and:

Hence, (13) with is equivalent to (A2) in Appendix A, which is finite-time stable towards the origin according to Corollary A1, completing the proof. □

With these results, we are ready to show Theorem 1.

3.1. Proof of Theorem 1

We use a similar reasoning as in Theorem 2 in [27] where the asymptotics in Theorem 1 are obtained through an argument of continuity of solutions for differential inclusions with respect to the parameter [28]. First, let the transformation:

and note that the dynamics of the new variables comply with:

according to Lemma 2. Lemma 3 implies that if then (15) complies with for some . Hence, by the continuity of the solutions of the inclusion (15), there exists a sufficiently small such that (15) complies for some .

Using , the original variables comply that for :

with , which is a constant since is fixed. Therefore, . This means that:

and, thus, . Consequently, evaluating the previous inequality component-wise and at , then for some where we used , completing the proof of Theorem 1. A similar argument is used for the additional outputs in (4) in order to show Corollary 1.

4. Numerical Experiments

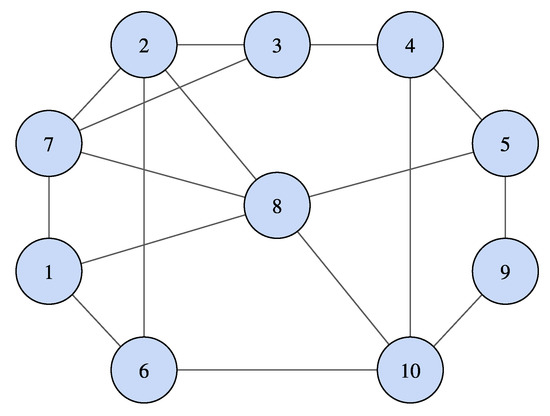

To validate our proposal, we show experiments on a simulation scenario. The communication network for the agents is described by the graph shown in Figure 2. As an example, we consider . For this case, we set the gains for all agents, which were chosen as established in Remark 4. We have arbitrarily chosen initial conditions as and as well as to ensure . The internal reference signals for each agent have been provided as . For the sake of generality, we have arbitrarily chosen the following amplitudes , frequencies , and phases , respectively:

Figure 2.

Graph describing the communication network of the agents.

Our goal is to show that we can achieve consistently exact dynamic consensus on the average of the reference signals, , as defined in Remark 1. For our protocol, the error due to the discretization and event-triggered communication is in an arbitrarily small neighbourhood of zero. The desired neighbourhood can be tuned with and , according to Theorem 1. Thus, we have tested our proposal with different sampling times and event thresholds .

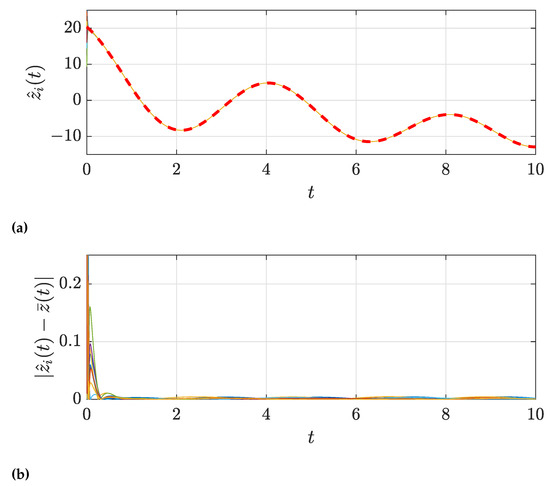

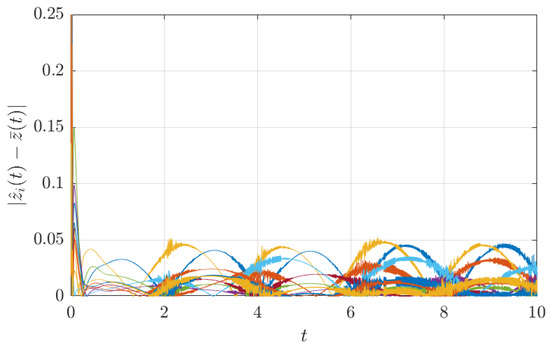

Figure 3 shows the consensus results for our protocol with a sampling period of and no event-triggered communication, as an approximation of the ideal continuous-time case with full communication. For the figures, we define for . The desired dynamic average of the signals, , is plotted as a dashed red line. It can be observed that, after some time, the consensus error falls close to zero.

Figure 3.

Consensus results for our protocol with (full communication). By using a small time step and communication at every step, our protocol obtains highly precise results. (a) Consensus estimates for the average signal using our proposal. The desired value is plotted as a dashed red line. The estimates quickly converge to the average signal. (b) Consensus error of the estimates with respect to the average signal. The error converges to a neighbourhood of zero, which can be made arbitrarily small by tuning the value of the sampling period.

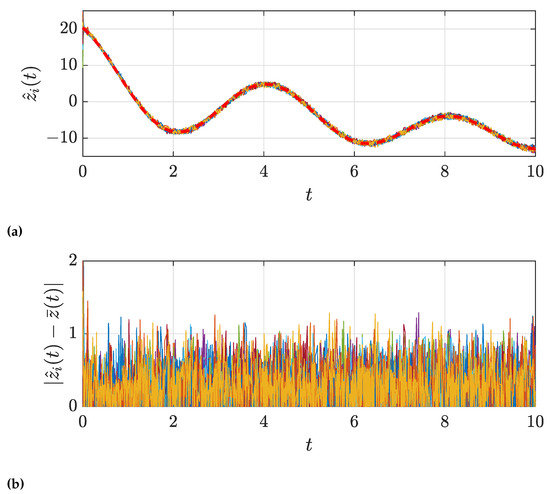

When we add event-triggered communication, the consensus error increases with the value of the triggering threshold . This can be observed in Figure 4 and Figure 5, which show the results with and , respectively, keeping . Note that, especially for a small , the event-triggered error is more apparent in the instants where the measured signal changes its slope. This is due to the shape of the event-triggering condition (2), since events are triggered when the current estimate differs from the last transmitted one by more than some threshold . In the instants around the change in slope, this difference remains smaller than the triggering threshold for a longer time, with the signal around the flat region. Hence, a more noticeable trigger-induced error appears.

Figure 4.

Consensus error for our protocol with . The addition of event-triggered communication increases the steady-state consensus error with respect to the full communication case (see Figure 3) and can be tuned with .

Figure 5.

Consensus results for our protocol with . Due to the increase in the event-triggering threshold, the error is higher than in the case with , shown in Figure 4. (a) Consensus estimates for the average signal using our proposal. The desired value is plotted as a dashed red line. The estimates quickly converge to the average signal, but with an increased steady-state error due to the event-triggered communication. (b) Consensus error of the estimates with respect to the average signal. Increasing the event-triggering threshold causes a higher steady-state error.

The sampling period also affects the magnitude of the steady-state consensus error. Figure 6 shows the consensus results with . When compared to Figure 4, it is apparent that the consensus error has increased with the sampling period. Note that the oscillating shape of the error is due to the sinusoidal shape of the signal of interest.

Figure 6.

Consensus error for our protocol with . Compared to the case shown in Figure 4, with , the consensus error has increased.

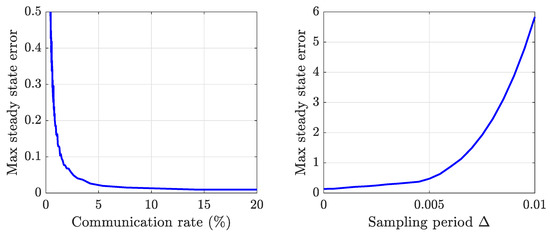

The increase in error due to is common in event-triggered setups where there is a trade-off between the number of triggered events and the quality of the results. When we set a higher value of , we achieve a reduction in communication between agents since information is only exchanged at event instants in the corresponding links, at the cost of an increase in the resulting error. To further analyse the said trade-off, Figure 7 shows the effect that the reduction in communication has on the consensus error. This figure shows that the communication through the network of agents can be significantly reduced with respect to the full communication case without producing a high increase in the steady-state consensus error. The communication rate has been computed as follows:

Figure 7.

Trade-off between the consensus error and the communication rate (with fixed ) and trade-off with the sampling period (with fixed ). As the value of the triggering threshold increases, the communication through the network is reduced, at the cost of a higher consensus error. However, using the event-triggering mechanism, communication can be significantly reduced with respect to the full communication case (communication rate of 100%), with a relatively small increase in the steady-state error. The consensus error also increases with the sampling period . When , the parameter has a higher impact than on the magnitude of the steady-state error.

A communication rate of 100% represents full communication, where each agent transmits to all its neighbours at every sampling time. For our proposal, note that when an event is triggered in the link , a message is sent from i to its neighbour j and agent j replies with another message to agent i. Thus, for every event that is triggered, two messages are sent through the network. We have considered that when events are triggered in the links and simultaneously, only one message is sent in each direction.
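The counting convention described above can be sketched as follows. The function name `communication_rate` and the data layout (one collection of triggered links per sampling step) are hypothetical, and the normalisation is an assumption derived from the description: full communication is taken as two messages per undirected link per step, one in each direction.

```python
def communication_rate(events_per_step, num_links):
    """Percentage of messages sent relative to full communication.

    events_per_step: one iterable per sampling step with the links (i, j)
    on which an event was triggered at that step.
    num_links: number of undirected links in the network.
    """
    if not events_per_step or num_links <= 0:
        raise ValueError("need at least one step and one link")
    messages = 0
    for events in events_per_step:
        # (i, j) and (j, i) triggering at the same step count as a single exchange.
        links = {frozenset(e) for e in events}
        messages += 2 * len(links)  # one message in each direction per exchange
    full = 2 * num_links * len(events_per_step)
    return 100.0 * messages / full


# Two links, four steps; at the third step both directions of link (0, 1) trigger.
rate = communication_rate([[(0, 1)], [], [(0, 1), (1, 0), (1, 2)], []], num_links=2)
```

In this example three exchanges take place over four steps, so 6 of the 16 possible messages are sent and `rate` evaluates to 37.5.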

A similar trade-off is found for the sampling time. Figure 7 also shows the evolution of the consensus error with different values of the sampling period. Note that, if event-triggered communication is used (), the value of has a higher impact on the steady-state error than the sampling period when . In that case, the steady-state error does not asymptotically tend to zero but rather remains in a neighbourhood of zero that is bounded according to .

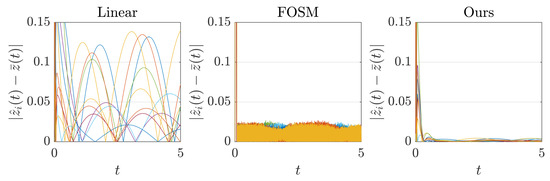

To compare with the other approaches in the literature, we have obtained consensus results for the same simulation scenario using a linear protocol and a First-Order Sliding Modes (FOSM) protocol, which are two existing options for dynamic consensus in the state of the art as described in the following. Note that by performing some adaptations, the linear and FOSM protocols can be obtained as particular cases of our protocol. To implement the linear protocol, we use (3) with , which is a similar setting as in [4,9]. For the FOSM protocol, we use the equations in (3) setting , which correspond to one of the proposals in [20]. For both cases, we set and to simulate the case with full communication.

Figure 8 compares the consensus error for the linear and FOSM protocols against our proposal. The FOSM protocol can eliminate the steady-state consensus error in continuous-time implementations, but the discretized version suffers from chattering. In contrast, we can see that our method reduces chattering, which lowers the steady-state error. The linear approach cannot eliminate the permanent consensus error in the estimates of the dynamic average , regardless of the value of (note that the shape of the error is due to the sinusoidal nature of the signals used in the experiment).

Figure 8.

Comparison of consensus error with linear protocol, FOSM, and our protocol, under full communication (). With the linear protocol, there exists a permanent steady-state error, which is higher than for the other protocols. Both the FOSM and our protocol can eliminate the error in a continuous-time implementation, but in the discretized setup our method improves the error caused by chattering with respect to the FOSM protocol.

We show the numerical results in Table 1 to summarize our comparison. We include the maximum steady-state error obtained for the linear and FOSM protocols and for our method. We include the results with full communication () for comparison and the values obtained with our event-triggered setup (). To highlight the advantage of event-triggered communication, we include the level of communication in the network of each approach. The results from Table 1 show that our proposal can significantly reduce the steady-state error compared to the linear and FOSM protocols. Moreover, including event-triggered communication instead of having each agent transmit to all neighbours at every sampling instant produces a drastic reduction in the amount of communication through the network while still performing better than the linear and FOSM protocols with full communication.

Table 1.

Comparison of numerical results for different protocols. Our proposal reduces the estimation error with respect to linear and FOSM protocols in the full communication case. Moreover, adding event-triggered communication to our proposal produces a significant reduction in communication, while still achieving better error values than other protocols.

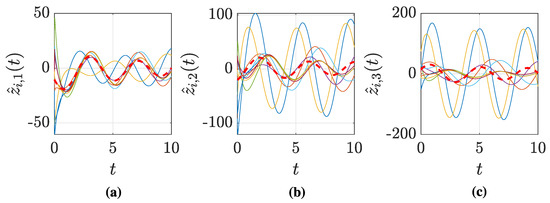

Finally, we showed in Corollary 1 that our proposal could also recover the derivatives of the signal of interest with some bounded error. We compare the results obtained for the derivatives against those of a linear protocol. For the FOSM protocol, recall that is used in (3) to compute it and, therefore, it only obtains the internal state , but not the corresponding internal states for the derivatives. Although the linear approach can recover the derivatives of the desired average signal, our proposal is more accurate, as shown in Figure 9 and Figure 10. With our protocol, the derivatives are recovered with some bounded error due to the sampling and event-triggered communication. It can be seen in Figure 10 that this error is more apparent in high-order derivatives and its magnitude can be tuned according to the parameters , as shown in Corollary 1. Hence, the improvement obtained with our protocol with respect to the linear one is also evident.

Figure 9.

Consensus results for the linear protocol with , showing the estimates of the derivatives of first (a), second (b), and third (c) order. The derivatives are plotted as a dashed red line. High-order derivatives are not accurately computed using a linear protocol.

Figure 10.

Consensus results for our protocol with , showing the estimates of the derivatives of first (a), second (b), and third (c) order. The derivatives are plotted as a dashed red line. The error induced by the discretization and event-triggered communication is more apparent in higher-order derivatives, but the estimates are greatly improved with respect to the linear protocol (compare to Figure 9).

5. Comparison to Related Work

In this Section, we offer a qualitative comparison of our work with other methods in the literature. Note that our work is related to the EDCHO protocol presented in [22], which provides a continuous-time formulation of an exact dynamic consensus algorithm. Both works are similar in that they use HOSM to achieve exact convergence. However, since discrete communication is used in practice, our work contributes a discrete-time formulation that guarantees exact convergence when the sampling step approaches zero. Moreover, we have included event-triggered communication to alleviate the communication load in the network of agents.

Other exact dynamic consensus approaches appear in [20,21], which use FOSM. In the discrete-time consensus problem, FOSM protocols suffer from chattering, a steady-state error caused by discretization. The chattering deteriorates the performance of the consensus algorithm in an order of magnitude proportional to the sampling step when using FOSM. Using HOSM, we have shown in Theorem 1 that our protocol diminishes the effect of chattering. In particular, the error bound is proportional to , with m being the order of the sliding modes. We showcased this improvement in the experiment results from Figure 8 and Table 1 in Section 4.

Even though the use of sliding modes results in the exact convergence of the consensus algorithm in continuous-time implementations, several works still rely on linear protocols. A high-order, discrete-time implementation of linear dynamic consensus protocols appears in [9], whose performance does not depend on the sampling step, contrary to our work. Nonetheless, this approach requires the agents to communicate at each sampling instant. Our approach relaxes this requirement through the use of event-triggered communication.

Many recent works featuring event-triggered schemes to reduce communication among the agents [13,15,29] attain a similar trade-off between the consensus quality and communication rate. However, they also rely on linear consensus protocols. When persistently varying signals are used, this always results in a non-zero steady-state error, which cannot be eliminated even in continuous-time implementations. Hence, even though our event-triggering mechanism is similar to those from other works, our HOSM approach can achieve a vanishing error when are arbitrarily small.

6. Conclusions

We have proposed a dynamic consensus algorithm to estimate the dynamic average of a set of time-varying signals in a distributed fashion. By construction, discrete sampling and event-triggered communication are used to reduce the communication burden. The algorithm uses High-Order Sliding Modes, resulting in exact convergence when continuous communication and sampling are used. We have shown that reduced chattering is obtained in the discrete-time setting compared to the First-Order Sliding Modes approach. We have also shown the advantage of our proposal against linear protocols because our protocol can recover the average signal’s derivatives. Finally, we have highlighted the important communication savings that can be achieved by using event-triggered communication between the network agents while maintaining a good trade-off between error and communication. A formal convergence analysis and multiple numerical examples are provided, which verify the proposal’s advantages. As future work, we consider removing the constraint on the initial conditions, which would make the proposal robust to the connection or disconnection of agents from the network.

Author Contributions

Conceptualization, R.A.-L.; data curation, validation and experimentation, I.P.-S.; investigation and software, I.P.-S. and R.A.-L.; writing, review and editing, I.P.-S., R.A.-L. and C.S.; project administration, supervision and funding acquisition, C.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported via projects PID2021-124137OBI00 and TED2021-130224B-I00 funded by MCIN/AEI/10.13039/501100011033, by ERDF "A way of making Europe" and by the European Union NextGenerationEU/PRTR, by the Gobierno de Aragón under Project DGA T45-20R, by the Universidad de Zaragoza and Banco Santander, by the Consejo Nacional de Ciencia y Tecnología (CONACYT-Mexico) with grant number 739841, and by Spanish grant FPU20/03134.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. The EDCHO Protocol

The EDCHO protocol, presented in [22], is a distributed algorithm designed to compute, at each agent, the dynamic average of the local time-varying signals held by the agents together with its first m derivatives. EDCHO can be written as:

where is the index set of neighbours for agent i.

Proposition A1

([22] adapted from Theorem 7). Let Assumption 1 hold for given L, and a fixed connected communication network . Then, there exists a time that depends on the initial conditions and gains such that (A1) comply with:

Given a value of , the design rules for the parameters are detailed in Section 6 in [22]. Now, let . Hence, Proposition A1 implies that , which, combining with , implies with recalling that . This means that reaches the origin in finite time and has dynamics:

written as a differential inclusion since it applies to any given Assumption 1 and since due to . In this case, the solutions to (A2) are understood in the sense of Filippov [28] in which we set . This leads to the following result.

Corollary A1.

If the assumptions of Proposition A1 hold, then all the trajectories of the differential inclusion in (A2) reach the origin in finite time.

Appendix B. Taylor Theorem with Integral Remainder

Proposition A2

([30], Theorem 7.3.18, page 217). Suppose that exist on for some and that is Riemann integrable on the same interval. Then, we have:

where the remainder is provided by:
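For reference, the classical form of Taylor's theorem with integral remainder can be written as below. The symbols $f$, $a$, $b$ and $m$ are assumed here for illustration and may differ from the notation used in [30]. The last line is the standard consequence for a bounded derivative, stated for the scalar case only.

```latex
% Assumption: f', ..., f^{(m+1)} exist on [a, b] and f^{(m+1)} is Riemann integrable there.
f(b) = f(a) + \frac{f'(a)}{1!}(b-a) + \cdots + \frac{f^{(m)}(a)}{m!}(b-a)^{m} + R_m,
\qquad
R_m = \frac{1}{m!}\int_a^b f^{(m+1)}(t)\,(b-t)^{m}\,\mathrm{d}t .

% If, in addition, |f^{(m+1)}(t)| \le L on [a, b], then
|R_m| \le \frac{L}{m!}\int_a^b (b-t)^{m}\,\mathrm{d}t = \frac{L\,(b-a)^{m+1}}{(m+1)!} .
```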

Corollary A2.

Consider some integer m such that the same assumptions as in Proposition A2 hold for each of the components of some vector signal with . Moreover, assume that for some L. Then, we have:

where the remainder satisfies:

Proof.

First, let be any component of and set in Proposition A2 to recover (A3) with vector of remainders:

Next:

which completes the proof. □
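As a numerical sanity check of this kind of remainder bound, the sketch below compares the degree-$m$ Taylor remainder of a scalar test signal with the standard bound $L\,h^{m+1}/(m+1)!$, where $L$ bounds the $(m+1)$-th derivative and $h$ is the step. The test signal $\sin(t)$, for which $L = 1$, the expansion point, and the function names are assumptions chosen for illustration. Only the scalar case is checked, so the constant may differ from the vector bound of Corollary A2.

```python
import math

def taylor_remainder_sin(a, h, m):
    """Absolute error of the degree-m Taylor polynomial of sin,
    expanded at a and evaluated at a + h."""
    # The k-th derivative of sin at a equals sin(a + k*pi/2).
    poly = sum(
        math.sin(a + k * math.pi / 2) * h**k / math.factorial(k)
        for k in range(m + 1)
    )
    return abs(math.sin(a + h) - poly)

def remainder_bound(L, h, m):
    """Standard scalar bound L * |h|^(m+1) / (m+1)! on the remainder."""
    return L * abs(h) ** (m + 1) / math.factorial(m + 1)

a = 0.3      # hypothetical expansion point
L = 1.0      # every derivative of sin is bounded by 1
checks = []
for m in range(5):
    for h in (0.5, 0.1, 0.01):
        r = taylor_remainder_sin(a, h, m)
        b = remainder_bound(L, h, m)
        # Small tolerance to absorb floating-point rounding.
        checks.append(r <= b + 1e-15)
        print(f"m={m}, h={h}: remainder={r:.3e}, bound={b:.3e}")
```

The bound shrinks as $h^{m+1}$, which is why a smaller sampling step or a higher order $m$ tightens the discretization error in this scalar setting.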

References

1. Aldana-Lopez, R.; Gomez-Gutierrez, D.; Aragues, R.; Sagues, C. Dynamic Consensus with Prescribed Convergence Time for Multileader Formation Tracking. IEEE Control Syst. Lett. 2022, 6, 3014–3019.

2. Chen, F.; Ren, W. A Connection Between Dynamic Region-Following Formation Control and Distributed Average Tracking. IEEE Trans. Cybern. 2018, 48, 1760–1772.

3. Ren, W.; Beard, R.W.; Atkins, E.M. A survey of consensus problems in multi-agent coordination. In Proceedings of the American Control Conference, Portland, OR, USA, 8–10 June 2005; Volume 3, pp. 1859–1864.

4. Kia, S.S.; Van Scoy, B.; Cortes, J.; Freeman, R.A.; Lynch, K.M.; Martinez, S. Tutorial on Dynamic Average Consensus: The Problem, Its Applications, and the Algorithms. IEEE Control Syst. Mag. 2019, 39, 40–72.

5. Sen, A.; Sahoo, S.R.; Kothari, M. Distributed Average Tracking With Incomplete Measurement Under a Weight-Unbalanced Digraph. IEEE Trans. Autom. Control 2022, 67, 6025–6037.

6. Iqbal, M.; Qu, Z.; Gusrialdi, A. Resilient Dynamic Average-Consensus of Multiagent Systems. IEEE Control Syst. Lett. 2022, 6, 3487–3492.

7. Peterson, J.D.; Yucelen, T.; Sarangapani, J.; Pasiliao, E.L. Active-Passive Dynamic Consensus Filters with Reduced Information Exchange and Time-Varying Agent Roles. IEEE Trans. Control Syst. Technol. 2020, 28, 844–856.

8. Zhu, M.; Martínez, S. Discrete-time dynamic average consensus. Automatica 2010, 46, 322–329.

9. Montijano, E.; Montijano, J.I.; Sagüés, C.; Martínez, S. Robust discrete time dynamic average consensus. Automatica 2014, 50, 3131–3138.

10. Kia, S.S.; Cortés, J.; Martínez, S. Distributed convex optimization via continuous-time coordination algorithms with discrete-time communication. Automatica 2015, 55, 254–264.

11. Kia, S.S.; Cortés, J.; Martínez, S. Distributed Event-Triggered Communication for Dynamic Average Consensus in Networked Systems. Automatica 2015, 59, 112–119.

12. Zhao, Y.; Xian, C.; Wen, G.; Huang, P.; Ren, W. Design of Distributed Event-Triggered Average Tracking Algorithms for Homogeneous and Heterogeneous Multiagent Systems. IEEE Trans. Autom. Control 2022, 67, 1269–1284.

13. Battistelli, G.; Chisci, L.; Selvi, D. A distributed Kalman filter with event-triggered communication and guaranteed stability. Automatica 2018, 93, 75–82.

14. Yu, D.; Xia, Y.; Li, L.; Zhai, D.H. Event-triggered distributed state estimation over wireless sensor networks. Automatica 2020, 118, 109039.

15. Rezaei, H.; Ghorbani, M. Event-triggered resilient distributed extended Kalman filter with consensus on estimation. Int. J. Robust Nonlinear Control 2022, 32, 1303–1315.

16. Ge, X.; Han, Q.L.; Wang, Z. A dynamic event-triggered transmission scheme for distributed set-membership estimation over wireless sensor networks. IEEE Trans. Cybern. 2019, 49, 171–183.

17. Wu, J.; Jia, Q.S.; Johansson, K.H.; Shi, L. Event-based sensor data scheduling: Trade-off between communication rate and estimation quality. IEEE Trans. Autom. Control 2013, 58, 1041–1046.

18. Peng, C.; Li, F. A survey on recent advances in event-triggered communication and control. Inf. Sci. 2018, 457–458, 113–125.

19. Vasiljevic, L.K.; Khalil, H.K. Error bounds in differentiation of noisy signals by high-gain observers. Syst. Control. Lett. 2008, 57, 856–862.

20. George, J.; Freeman, R.A. Robust Dynamic Average Consensus Algorithms. IEEE Trans. Autom. Control 2019, 64, 4615–4622.

21. Ren, W.; Al-Saggaf, U.M. Distributed Kalman–Bucy Filter With Embedded Dynamic Averaging Algorithm. IEEE Syst. J. 2018, 12, 1722–1730.

22. Aldana-López, R.; Aragüés, R.; Sagüés, C. EDCHO: High order exact dynamic consensus. Automatica 2021, 131, 109750.

23. Aldana-López, R.; Aragüés, R.; Sagüés, C. REDCHO: Robust Exact Dynamic Consensus of High Order. Automatica 2022, 141, 110320.

24. Perruquetti, W.; Barbot, J.P. Sliding Mode Control in Engineering; Marcel Dekker, Inc.: New York, NY, USA, 2002.

25. Godsil, C.; Royle, G. Algebraic Graph Theory; Graduate Texts in Mathematics; Springer: Berlin/Heidelberg, Germany, 2001; Volume 207.

26. Levant, A. Higher-order sliding modes, differentiation and output-feedback control. Int. J. Control 2003, 76, 924–941.

27. Levant, A. Homogeneity approach to high-order sliding mode design. Automatica 2005, 41, 823–830.

28. Filippov, A.F. Differential equations with discontinuous righthand sides; Mathematics and its Applications (Soviet Series); Kluwer Academic Publishers Group: Dordrecht, The Netherlands, 1988; Volume 18, p. x+304, Translated from the Russian.

29. Tan, X.; Cao, J.; Li, X. Consensus of Leader-Following Multiagent Systems: A Distributed Event-Triggered Impulsive Control Strategy. IEEE Trans. Cybern. 2019, 49, 792–801.

30. Rudin, W. Principles of Mathematical Analysis, 3rd ed.; McGraw-Hill Book Company, Inc.: New York, NY, USA; Toronto, ON, Canada; London, UK, 1976.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).