Abstract

The binary logistic regression model (LRM) is useful when the response variable (RV) is dichotomous. The maximum likelihood estimator (MLE) is generally used to estimate the LRM parameters. However, in the presence of multicollinearity (MC), the MLE is not a good choice because the standard deviations (SD) and standard errors (SE) of its estimates are inflated. To combat MC, biased estimators such as the ridge estimators (RE) and Liu estimators (LEs) are commonly preferred. However, the traditional LE frequently attains a negative value for its Liu parameter (LP), which is considered a major drawback. To overcome this issue, we propose a new adjusted LE for the binary LRM. For numerical evaluation, a Monte Carlo simulation (MCS) study is performed under different conditions, with bias and mean squared error as the performance criteria. The findings show that the proposed estimator outperforms the other estimation methods in the presence of high but imperfect multicollinearity, indicating that it performs reliably when the regressors are multicollinear. Furthermore, the findings demonstrate that the MLE is not the best choice whenever MC is present. Finally, a real-data application is presented as further evidence of the advantage of the proposed estimator. The MCS and application findings indicate that the adjusted LE for the binary logistic regression model is a more efficient estimation method whenever the regressors are highly multicollinear.

Keywords:

bias; binary logistic regression model; Liu estimator; multicollinearity; maximum likelihood estimator; ridge regression

MSC:

62J02; 62J05; 62J07; 62J20

1. Introduction

The binary LRM (BLRM) is a common regression model (RM), and it is preferred when the RV is dichotomous or binary. Predicting a dichotomous RV is vital in epidemiology and medicine [1,2]; for example, a set of risk factors such as cholesterol and blood pressure affects the probability of having a heart attack. The MLE method is commonly employed to estimate the BLRM [2,3]. Under MC, the variance of the MLE becomes inflated and the standard errors become large; consequently, the t-statistics and F-ratios tend to be insignificant [3,4,5]. Different biased techniques are available in the literature to overcome the issue of MC. One of them is ridge regression (RR) for the linear RM [6]. Logistic RR was first introduced in [7] based on the results of [6]. For the same purpose, three biased estimators were considered in [3]. Lee and Silvapulle [8] introduced two ridge parameters for the logistic RR estimator (LRRE). Because the ridge parameter plays a crucial role in the LRRE, many researchers subsequently focused on its choice [9,10,11,12,13,14]. Liu [15] discussed some limitations of the RR estimator and proposed an alternative, the LE, to handle multicollinearity more effectively. The LE was adapted to the BLRM as the logistic LE (LLE) [16], where its theoretical and numerical properties and its comparison with the MLE and the LRRE were discussed.

In the LLE, the LP $d$ plays a central role and should lie between 0 and 1, i.e., $0 < d < 1$. However, the estimated Liu parameter sometimes takes negative or zero values, which reduces the efficiency of the LLE [17]. Several authors introduced various Liu-type estimators in the BLRM to improve the efficiency of the LE [18,19,20,21,22], but these modified estimators also often produce zero values of the Liu parameter. To overcome the limitations of the available LEs, Lukman et al. [23] introduced the modified one-parameter LE for the linear RM.

In this article, we propose a new adjusted LLE (ALLE) for the BLRM. Its theoretical properties are investigated, and it is compared theoretically with other estimation methods. The matrix mean squared error (MMSE) and the scalar mean squared error (MSE) are used as the performance evaluation criteria. An MCS study is performed to evaluate the new estimator numerically. The remainder of the article is organized as follows: Section 2 presents the construction of the proposed estimator for the BLRM together with a theoretical comparison. An MCS study is presented in Section 3. Section 4 is devoted to analyzing prostate cancer data. Section 5 ends with some conclusions.

2. Statistical Method

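Following [7,24,25], the BLRM is defined as

$$y_i = \pi_i + \varepsilon_i, \quad i = 1, \dots, n, \tag{1}$$

where $\varepsilon_i$ has zero mean and variance $\pi_i(1 - \pi_i)$, $E(y_i) = \pi_i$, and the $\varepsilon_i$ are independent for each $i$. The $i$th value of the RV is assumed to follow a Bernoulli distribution, $y_i \sim \mathrm{Be}(\pi_i)$, with

$$\pi_i = \frac{\exp(x_i^{\prime}\beta)}{1 + \exp(x_i^{\prime}\beta)},$$

where $x_i^{\prime}$ is the $i$th row of the $n \times p$ data matrix $X$ of $p$ explanatory variables, and $\beta$ is the $p \times 1$ vector of regression coefficients. The vector $\beta$ is usually estimated by the MLE method. The logarithm of the likelihood function for Equation (1) is

$$\ell(\beta) = \sum_{i=1}^{n} \left[ y_i \log(\pi_i) + (1 - y_i) \log(1 - \pi_i) \right]. \tag{2}$$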

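Let $\hat{\beta}^{(t)}$ be the estimate of $\beta$ at the $t$th step, and let $\hat{\pi}^{(t)}$ and $\hat{W}^{(t)} = \operatorname{diag}\{\hat{\pi}_i^{(t)}(1 - \hat{\pi}_i^{(t)})\}$ be computed from it. The following iterative algorithm is well defined [26]:

$$\hat{\beta}^{(t+1)} = \hat{\beta}^{(t)} + \left(X^{\prime}\hat{W}^{(t)}X\right)^{-1} X^{\prime}\left(y - \hat{\pi}^{(t)}\right). \tag{3}$$

At convergence, Equation (3) can be written as

$$\hat{\beta}_{\mathrm{MLE}} = \left(X^{\prime}\hat{W}X\right)^{-1} X^{\prime}\hat{W}\hat{z}, \tag{4}$$

with $\hat{W} = \operatorname{diag}\{\hat{\pi}_i(1 - \hat{\pi}_i)\}$ and $\hat{z} = (\hat{z}_1, \dots, \hat{z}_n)^{\prime}$, where $\hat{z}_i = \log\{\hat{\pi}_i/(1 - \hat{\pi}_i)\} + (y_i - \hat{\pi}_i)/\{\hat{\pi}_i(1 - \hat{\pi}_i)\}$. The estimator $\hat{\beta}_{\mathrm{MLE}}$ is asymptotically normally distributed as $N\left(\beta, (X^{\prime}\hat{W}X)^{-1}\right)$ [8].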
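The iteration in (3) and the closed form in (4) can be sketched in Python with NumPy. This is a minimal illustration, not the authors' R code: the function names `irls_mle` and `log_likelihood`, the starting value $\hat{\beta}^{(0)} = 0$ and the convergence tolerance are assumptions, and the simulated data are purely hypothetical.

```python
import numpy as np


def logistic(eta):
    """Inverse logit: pi = exp(eta) / (1 + exp(eta))."""
    return 1.0 / (1.0 + np.exp(-eta))


def log_likelihood(beta, X, y):
    """Equation (2), rewritten as sum(y * eta - log(1 + exp(eta)))."""
    eta = X @ beta
    return float(np.sum(y * eta - np.logaddexp(0.0, eta)))


def irls_mle(X, y, tol=1e-10, max_iter=100):
    """Iterate Equation (3) from beta = 0; return beta_MLE, W-hat weights and z-hat of (4)."""
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        pi = logistic(X @ beta)
        w = pi * (1.0 - pi)                      # diagonal of W^(t)
        XtWX = X.T @ (w[:, None] * X)            # X' W^(t) X
        step = np.linalg.solve(XtWX, X.T @ (y - pi))
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    pi = logistic(X @ beta)
    w = pi * (1.0 - pi)                          # diagonal of W-hat
    z = X @ beta + (y - pi) / w                  # working response z-hat
    return beta, w, z


# Hypothetical data for illustration only.
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 3))
beta_true = np.array([0.5, -1.0, 0.8])
y = rng.binomial(1, logistic(X @ beta_true)).astype(float)

beta_hat, w_hat, z_hat = irls_mle(X, y)
# Equation (4): (X' W X)^{-1} X' W z reproduces the converged estimate.
beta_eq4 = np.linalg.solve(X.T @ (w_hat[:, None] * X), X.T @ (w_hat * z_hat))
```

Since $\log\{\hat{\pi}_i/(1 - \hat{\pi}_i)\} = x_i^{\prime}\hat{\beta}$, the working response is computed as `X @ beta + (y - pi) / w`; at convergence the score $X^{\prime}(y - \hat{\pi})$ vanishes, so (3) and (4) agree.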

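Let $Q$ be the orthogonal matrix whose columns are the eigenvectors of $X^{\prime}\hat{W}X$, so that $Q^{\prime}X^{\prime}\hat{W}XQ = \Lambda = \operatorname{diag}(\lambda_1, \dots, \lambda_p)$. Since the MLE is (asymptotically) unbiased, its covariance and MMSE coincide:

$$\operatorname{Cov}(\hat{\beta}_{\mathrm{MLE}}) = \operatorname{MMSE}(\hat{\beta}_{\mathrm{MLE}}) = \left(X^{\prime}\hat{W}X\right)^{-1} = Q\Lambda^{-1}Q^{\prime}. \tag{5}$$

The scalar MSE of the MLE is

$$\operatorname{MSE}(\hat{\beta}_{\mathrm{MLE}}) = \operatorname{tr}\left\{\left(X^{\prime}\hat{W}X\right)^{-1}\right\} = \sum_{j=1}^{p} \frac{1}{\lambda_j}, \tag{6}$$

where $\lambda_j$ is the $j$th eigenvalue of $X^{\prime}\hat{W}X$.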
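As a numerical illustration of (5) and (6), the Python/NumPy sketch below (assumed tooling) computes $Q$, the $\lambda_j$ and the scalar MSE of the MLE for two hypothetical designs, one with uncorrelated and one with highly correlated regressors. The weights are fixed at $\pi_i(1 - \pi_i) = 0.25$ purely for illustration; in practice they come from the fitted model.

```python
import numpy as np


def mle_scalar_mse(XtWX):
    """Scalar MSE of the MLE, Equation (6): sum_j 1 / lambda_j."""
    lam, Q = np.linalg.eigh(XtWX)    # XtWX = Q diag(lam) Q', Q orthogonal
    return float(np.sum(1.0 / lam)), lam, Q


rng = np.random.default_rng(0)
n, p = 100, 3
Z = rng.standard_normal((n, p + 1))
w = np.full(n, 0.25)   # assumed weights: every pi_i = 0.5 (illustration only)


def design(rho):
    """Columns sharing a common component, so pairwise correlation is about rho**2."""
    return np.sqrt(1.0 - rho**2) * Z[:, :p] + rho * Z[:, [p]]


results = {}
for rho in (0.0, 0.99):
    X = design(rho)
    XtWX = X.T @ (w[:, None] * X)
    mse, lam, Q = mle_scalar_mse(XtWX)
    results[rho] = (mse, XtWX, lam, Q)
```

Near-collinear columns produce some very small $\lambda_j$, and the corresponding terms $1/\lambda_j$ then dominate the sum in (6), which is why the MSE of the MLE inflates under multicollinearity.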

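When the regressors are highly correlated, the matrix $X^{\prime}\hat{W}X$ becomes ill-conditioned; this is the problem of multicollinearity. To reduce the effects of multicollinearity, Schaefer et al. [7] defined the LRRE as a simple extension of Hoerl and Kennard [6]:

$$\hat{\beta}_{\mathrm{LRRE}} = Z_k \hat{\beta}_{\mathrm{MLE}}, \tag{7}$$

where $Z_k = (X^{\prime}\hat{W}X + kI)^{-1} X^{\prime}\hat{W}X$, with ridge parameter $k$ and $p \times p$ identity matrix $I$. The bias vector (BV), covariance matrix (CM) and MMSE of (7) are

$$b_{\mathrm{LRRE}} = E(\hat{\beta}_{\mathrm{LRRE}}) - \beta = -k\left(X^{\prime}\hat{W}X + kI\right)^{-1}\beta = -kQ\Lambda_k^{-1}\alpha, \tag{8}$$

$$\operatorname{Cov}(\hat{\beta}_{\mathrm{LRRE}}) = Z_k\left(X^{\prime}\hat{W}X\right)^{-1}Z_k^{\prime} = Q\Lambda_k^{-1}\Lambda\Lambda_k^{-1}Q^{\prime}, \tag{9}$$

and

$$\operatorname{MMSE}(\hat{\beta}_{\mathrm{LRRE}}) = Q\Lambda_k^{-1}\Lambda\Lambda_k^{-1}Q^{\prime} + k^2 Q\Lambda_k^{-1}\alpha\alpha^{\prime}\Lambda_k^{-1}Q^{\prime}, \tag{10}$$

where $\Lambda_k = \operatorname{diag}(\lambda_1 + k, \dots, \lambda_p + k)$ and $\alpha = Q^{\prime}\beta$. Applying the trace operator to (10), we obtain the scalar MSE of the LRRE as

$$\operatorname{MSE}(\hat{\beta}_{\mathrm{LRRE}}) = \sum_{j=1}^{p} \frac{\lambda_j}{(\lambda_j + k)^2} + k^2 \sum_{j=1}^{p} \frac{\alpha_j^2}{(\lambda_j + k)^2}, \tag{11}$$

where $\alpha_j$ is the $j$th element of $\alpha$ and $k$ ($k > 0$) is the Hoerl and Kennard [6] ridge parameter. Minimizing Equation (11) with respect to $k$, term by term, yields

$$k_j = \frac{1}{\alpha_j^2}, \quad j = 1, \dots, p. \tag{12}$$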
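The sketch below (Python/NumPy; an illustration, not the authors' code) evaluates (7)–(12) for a hypothetical ill-conditioned $X^{\prime}\hat{W}X$ built from assumed eigenvalues and a random orthogonal $Q$. It checks that the trace of the matrix-form MMSE in (10) equals the eigenvalue form (11) and that each $k_j$ minimizes its own summand. The true $\beta$, and hence $\alpha$, is unknown in practice and is fixed here only to evaluate the formulas.

```python
import numpy as np


def lrre(XtWX, beta_mle, k):
    """LRRE of Equation (7): (X'WX + kI)^{-1} X'WX beta_MLE."""
    p = XtWX.shape[0]
    return np.linalg.solve(XtWX + k * np.eye(p), XtWX @ beta_mle)


def lrre_mmse(XtWX, beta, k):
    """MMSE of Equation (10), assembled from the matrix forms of (8) and (9)."""
    p = XtWX.shape[0]
    A = np.linalg.inv(XtWX + k * np.eye(p))
    Zk = A @ XtWX
    cov = Zk @ np.linalg.inv(XtWX) @ Zk.T        # Equation (9)
    bias = -k * A @ beta                         # Equation (8)
    return cov + np.outer(bias, bias)


def lrre_terms(lam, alpha, k):
    """Per-component summands of Equation (11); k may be a scalar or a vector."""
    return lam / (lam + k) ** 2 + k**2 * alpha**2 / (lam + k) ** 2


def lrre_scalar_mse(lam, alpha, k):
    """Scalar MSE of Equation (11)."""
    return float(np.sum(lrre_terms(lam, alpha, k)))


# Hypothetical ill-conditioned X'WX and coefficient vector (illustration only).
rng = np.random.default_rng(2)
Q, _ = np.linalg.qr(rng.standard_normal((3, 3)))
lam = np.array([50.0, 2.0, 0.05])
XtWX = Q @ np.diag(lam) @ Q.T
beta = np.array([0.6, -0.5, 0.62])
alpha = Q.T @ beta

k = 0.5
k_opt = 1.0 / alpha**2                           # Equation (12), one value per component
```

Each $k_j = 1/\alpha_j^2$ minimizes only the $j$th summand of (11); in practice a single $k$ must be chosen, and how the $k_j$ are combined is a modelling choice. Setting $k = 0$ returns the MLE.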

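Another estimator that treats multicollinearity better than the LRRE is the LE of Liu [15], which Mansson et al. [16] adapted to the BLRM as the LLE:

$$\hat{\beta}_{\mathrm{LLE}} = Z_d \hat{\beta}_{\mathrm{MLE}}, \tag{13}$$

where $Z_d = (X^{\prime}\hat{W}X + I)^{-1}(X^{\prime}\hat{W}X + dI)$ and $0 < d < 1$ is the LP. The BV and CM of (13) are given, respectively, by

$$b_{\mathrm{LLE}} = (d - 1)\left(X^{\prime}\hat{W}X + I\right)^{-1}\beta = (d - 1)Q\Lambda_1^{-1}\alpha, \tag{14}$$

$$\operatorname{Cov}(\hat{\beta}_{\mathrm{LLE}}) = Z_d\left(X^{\prime}\hat{W}X\right)^{-1}Z_d^{\prime} = Q\Lambda_1^{-1}\Lambda_d\Lambda^{-1}\Lambda_d\Lambda_1^{-1}Q^{\prime}. \tag{15}$$

By using (14) and (15), the MMSE is

$$\operatorname{MMSE}(\hat{\beta}_{\mathrm{LLE}}) = Q\Lambda_1^{-1}\Lambda_d\Lambda^{-1}\Lambda_d\Lambda_1^{-1}Q^{\prime} + (d - 1)^2 Q\Lambda_1^{-1}\alpha\alpha^{\prime}\Lambda_1^{-1}Q^{\prime}, \tag{16}$$

where $\Lambda_1 = \operatorname{diag}(\lambda_1 + 1, \dots, \lambda_p + 1)$ and $\Lambda_d = \operatorname{diag}(\lambda_1 + d, \dots, \lambda_p + d)$. The scalar MSE of the LLE is

$$\operatorname{MSE}(\hat{\beta}_{\mathrm{LLE}}) = \sum_{j=1}^{p} \frac{(\lambda_j + d)^2}{\lambda_j(\lambda_j + 1)^2} + (d - 1)^2 \sum_{j=1}^{p} \frac{\alpha_j^2}{(\lambda_j + 1)^2}. \tag{17}$$

Minimizing Equation (17) with respect to $d$ yields

$$d_{\mathrm{opt}} = \frac{\sum_{j=1}^{p} (\alpha_j^2 - 1)/(\lambda_j + 1)^2}{\sum_{j=1}^{p} (1 + \lambda_j\alpha_j^2)/\{\lambda_j(\lambda_j + 1)^2\}},$$

and for an individual component $j$ we obtain $d_j = (\alpha_j^2 - 1)/(1/\lambda_j + \alpha_j^2)$. Hence $d_j$ is negative whenever $\alpha_j^2 < 1$ (and $d_{\mathrm{opt}}$ is negative when this holds for every $j$), which is the drawback noted in Section 1.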
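The following Python/NumPy sketch (illustrative; the eigenvalues and $\alpha$ are hypothetical) implements (13), (17) and the minimizers of (17). With every $\alpha_j^2 < 1$, the optimal $d$ comes out negative, reproducing the drawback of the LLE that motivates Section 2.1.

```python
import numpy as np


def lle(XtWX, beta_mle, d):
    """LLE of Equation (13): (X'WX + I)^{-1} (X'WX + dI) beta_MLE."""
    I = np.eye(XtWX.shape[0])
    return np.linalg.solve(XtWX + I, (XtWX + d * I) @ beta_mle)


def lle_scalar_mse(lam, alpha, d):
    """Scalar MSE of Equation (17)."""
    return float(np.sum((lam + d) ** 2 / (lam * (lam + 1) ** 2))
                 + (d - 1) ** 2 * np.sum(alpha**2 / (lam + 1) ** 2))


def lle_d_opt(lam, alpha):
    """Common d minimizing Equation (17)."""
    num = np.sum((alpha**2 - 1) / (lam + 1) ** 2)
    den = np.sum((1 + lam * alpha**2) / (lam * (lam + 1) ** 2))
    return float(num / den)


# Hypothetical eigenvalues and alpha = Q' beta with every alpha_j^2 < 1.
lam = np.array([5.0, 1.0, 0.05])
alpha = np.array([0.3, 0.2, 0.1])

d_opt = lle_d_opt(lam, alpha)
d_j = (alpha**2 - 1) / (1 / lam + alpha**2)      # per-component values
```

Setting $d = 1$ returns the MLE, since then $Z_d = I$; for a single component, the common minimizer reduces to $d_j$.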

2.1. The Proposed Estimator

In the LLE, the estimated value of $d$ is frequently negative, which adversely affects BLRM estimation under multicollinearity. An adjusted Liu estimator addressing this issue has been proposed for the Poisson RM [28]. Following [28], we therefore define the adjusted logistic Liu estimator (ALLE) as

The ALLE has its own Liu parameter, whose estimation is discussed in Section 2.3. The new estimator is intended to improve the efficiency of the estimated BLRM coefficients. The BV, CM, MMSE and scalar MSE of the ALLE are given in Equations (19)–(22), respectively.

2.2. Theoretical Comparison

Proposition 2.1.

Let $M$ be a $p \times p$ positive definite matrix, i.e., $M > 0$, and let $a$ be some $p \times 1$ vector. Then $M - aa^{\prime} \geq 0$ if and only if $a^{\prime}M^{-1}a \leq 1$ [29].
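Proposition 2.1 can be checked numerically. The Python/NumPy sketch below (an illustration with a randomly generated positive definite $M$, not part of the original analysis) rescales a vector $a$ so that $a^{\prime}M^{-1}a$ takes values on both sides of 1, and tests whether $M - aa^{\prime}$ is positive semidefinite via its smallest eigenvalue.

```python
import numpy as np


def is_psd(A, tol=1e-12):
    """True if the symmetric matrix A is positive semidefinite (up to tol)."""
    return bool(np.linalg.eigvalsh(A).min() >= -tol)


rng = np.random.default_rng(3)
B = rng.standard_normal((4, 4))
M = B @ B.T + 0.5 * np.eye(4)              # a positive definite M
a0 = rng.standard_normal(4)
q0 = a0 @ np.linalg.solve(M, a0)           # a0' M^{-1} a0

checks = []
for target in (0.5, 0.9, 1.1, 2.0):
    a = a0 * np.sqrt(target / q0)          # rescale so that a' M^{-1} a = target
    q = a @ np.linalg.solve(M, a)
    checks.append((q, is_psd(M - np.outer(a, a))))
```

In every case the semidefiniteness of $M - aa^{\prime}$ agrees with the condition $a^{\prime}M^{-1}a \leq 1$.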

Proposition 2.2.

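Let $\hat{\beta}_1$ and $\hat{\beta}_2$ be two estimators of $\beta$. Suppose that $D = \operatorname{Cov}(\hat{\beta}_1) - \operatorname{Cov}(\hat{\beta}_2) > 0$, where $\operatorname{Cov}(\hat{\beta}_1)$ and $\operatorname{Cov}(\hat{\beta}_2)$ represent the CMs of $\hat{\beta}_1$ and $\hat{\beta}_2$, respectively. Then $\Delta = \operatorname{MMSE}(\hat{\beta}_1) - \operatorname{MMSE}(\hat{\beta}_2) > 0$ if and only if $b_2^{\prime}(D + b_1b_1^{\prime})^{-1}b_2 < 1$, where $\operatorname{MMSE}(\hat{\beta}_i) = \operatorname{Cov}(\hat{\beta}_i) + b_ib_i^{\prime}$ and $b_i$ is the BV of $\hat{\beta}_i$, $i = 1, 2$ [3].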
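The sketch below illustrates Proposition 2.2 with a pair of estimators whose forms are given above: $\hat{\beta}_1$ = MLE (unbiased, so $b_1 = 0$) and $\hat{\beta}_2$ = LRRE. It is a Python/NumPy illustration with a hypothetical $X^{\prime}\hat{W}X$ and $\beta$; it compares the criterion $b_2^{\prime}D^{-1}b_2 < 1$ with a direct check of whether the MMSE difference is positive definite, for a small and a large ridge parameter.

```python
import numpy as np


def is_pd(A):
    """True if the symmetric matrix A is positive definite."""
    return bool(np.linalg.eigvalsh(A).min() > 0.0)


# Hypothetical X'WX = Q diag(lam) Q' and true beta (illustration only).
rng = np.random.default_rng(4)
Q, _ = np.linalg.qr(rng.standard_normal((3, 3)))
lam = np.array([10.0, 1.0, 0.1])
XtWX = Q @ np.diag(lam) @ Q.T
beta = Q @ np.ones(3)                      # alpha = Q' beta = (1, 1, 1)
I = np.eye(3)


def compare_mle_lrre(k):
    """Proposition 2.2 with beta_1 = MLE (b_1 = 0) and beta_2 = LRRE."""
    A = np.linalg.inv(XtWX + k * I)
    Zk = A @ XtWX
    cov_mle = np.linalg.inv(XtWX)
    cov_lrre = Zk @ cov_mle @ Zk.T
    b2 = -k * A @ beta                                # LRRE bias, Equation (8)
    D = cov_mle - cov_lrre                            # positive definite for k > 0
    criterion = float(b2 @ np.linalg.solve(D, b2))   # b_2' D^{-1} b_2, since b_1 = 0
    delta = cov_mle - (cov_lrre + np.outer(b2, b2))  # MMSE(MLE) - MMSE(LRRE)
    return criterion, is_pd(D), is_pd(delta)


small = compare_mle_lrre(0.01)
large = compare_mle_lrre(10.0)
```

With $k = 0.01$ the criterion is far below 1 and the LRRE dominates the MLE in the MMSE sense; with $k = 10$ the criterion exceeds 1 and the MMSE difference is no longer positive definite.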

Theorem 2.1.

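For the BLRM, suppose that $D_1 = \operatorname{Cov}(\hat{\beta}_{\mathrm{MLE}}) - \operatorname{Cov}(\hat{\beta}_{\mathrm{ALLE}})$ is positive definite. Then the ALLE is better than the MLE; that is, $\operatorname{MMSE}(\hat{\beta}_{\mathrm{MLE}}) - \operatorname{MMSE}(\hat{\beta}_{\mathrm{ALLE}}) > 0$ if and only if $b_{\mathrm{ALLE}}^{\prime}D_1^{-1}b_{\mathrm{ALLE}} < 1$, where $b_{\mathrm{ALLE}}$ is the BV of the ALLE.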

Proof:

See Appendix A. □

Theorem 2.2.

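Under the BLRM, with $k > 0$, suppose that $D_2 = \operatorname{Cov}(\hat{\beta}_{\mathrm{LRRE}}) - \operatorname{Cov}(\hat{\beta}_{\mathrm{ALLE}})$ is positive definite. Then the ALLE is better than the LRRE; that is, $\operatorname{MMSE}(\hat{\beta}_{\mathrm{LRRE}}) - \operatorname{MMSE}(\hat{\beta}_{\mathrm{ALLE}}) > 0$ if and only if $b_{\mathrm{ALLE}}^{\prime}(D_2 + b_{\mathrm{LRRE}}b_{\mathrm{LRRE}}^{\prime})^{-1}b_{\mathrm{ALLE}} < 1$, where $b_{\mathrm{LRRE}} = -k(X^{\prime}\hat{W}X + kI)^{-1}\beta$.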

Proof:

See Appendix A. □

Theorem 2.3.

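Under the BLRM, with $0 < d < 1$, suppose that $D_3 = \operatorname{Cov}(\hat{\beta}_{\mathrm{LLE}}) - \operatorname{Cov}(\hat{\beta}_{\mathrm{ALLE}})$ is positive definite. Then the ALLE is better than the LLE; that is, $\operatorname{MMSE}(\hat{\beta}_{\mathrm{LLE}}) - \operatorname{MMSE}(\hat{\beta}_{\mathrm{ALLE}}) > 0$ if and only if $b_{\mathrm{ALLE}}^{\prime}(D_3 + b_{\mathrm{LLE}}b_{\mathrm{LLE}}^{\prime})^{-1}b_{\mathrm{ALLE}} < 1$, where $b_{\mathrm{LLE}} = (d - 1)(X^{\prime}\hat{W}X + I)^{-1}\beta$.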

Proof:

See Appendix A. □

2.3. Parameter Estimation

Different methods for choosing the biasing parameters have been employed in the literature; see [17,20,29,30]. Setting the derivative of Equation (22) with respect to the Liu parameter of the ALLE equal to zero, we obtain its $j$th term as

In (23), replacing the unknown parameters by their unbiased estimators, we obtain

For practical purposes, we take the minimum of the values in (24) as the estimated Liu parameter:

3. Simulation

3.1. Design

This subsection describes the general layout of the simulation study. The comparison criterion is the simulated MSE of the estimators (MLE, LRRE, LLE and ALLE) when the regressors are multicollinear. Let $z_{ij}$ be independent standard normal pseudo-random numbers. Following [2,14,23], the explanatory variables (EVs) with various levels of correlation are generated as

$$x_{ij} = (1 - \rho^2)^{1/2} z_{ij} + \rho z_{i,p+1}, \quad i = 1, \dots, n, \; j = 1, \dots, p,$$

where $\rho$ is chosen so that $\rho^2$ is the correlation between any two EVs. We select four values of $\rho$: 0.80, 0.90, 0.95 and 0.99. Four different sample sizes $n$ are considered, up to $n = 400$. Moreover, we consider different numbers of EVs, $p = 3, 6$ and $12$, and $\beta$ is selected as the eigenvector corresponding to the largest eigenvalue of the cross-product matrix [14].
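The data-generating step can be sketched in Python/NumPy as follows. This mirrors the formula above; the `true_beta` helper is hypothetical and assumes the cross-product matrix is $X^{\prime}X$, which the description above does not pin down. With a large $n$, the empirical pairwise correlations should be close to $\rho^2$.

```python
import numpy as np


def generate_regressors(n, p, rho, rng):
    """x_ij = sqrt(1 - rho^2) z_ij + rho z_{i,p+1}, with z_ij iid N(0, 1)."""
    z = rng.standard_normal((n, p + 1))
    return np.sqrt(1.0 - rho**2) * z[:, :p] + rho * z[:, [p]]


def true_beta(X):
    """Unit eigenvector of X'X for its largest eigenvalue (assumed choice of matrix)."""
    eigvals, eigvecs = np.linalg.eigh(X.T @ X)   # eigenvalues in ascending order
    return eigvecs[:, -1]


rng = np.random.default_rng(5)
X = generate_regressors(n=100_000, p=3, rho=0.9, rng=rng)
corr = np.corrcoef(X, rowvar=False)
beta = true_beta(X)
```

Each column has unit variance, $(1 - \rho^2) + \rho^2 = 1$, and any two columns share the common component with covariance $\rho^2$, so with $\rho = 0.9$ the off-diagonal correlations should be close to 0.81.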

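The observations are generated from the Bernoulli distribution, $y_i \sim \mathrm{Be}(\pi_i)$, where $\pi_i = \exp(x_i^{\prime}\beta)/\{1 + \exp(x_i^{\prime}\beta)\}$ and $x_i^{\prime}$ is the $i$th row of the data matrix $X$. The experiment is repeated $R = 1000$ times by generating new responses $y$. The bias and MSE of each estimator are computed as

$$\operatorname{Bias}(\hat{\beta}) = \frac{1}{R}\sum_{r=1}^{R}\left(\hat{\beta}_r - \beta\right), \qquad \operatorname{MSE}(\hat{\beta}) = \frac{1}{R}\sum_{r=1}^{R}\left(\hat{\beta}_r - \beta\right)^{\prime}\left(\hat{\beta}_r - \beta\right),$$

where $\hat{\beta}_r - \beta$ is the deviation between the parameter and its estimate in the $r$th replication for each of the BLRM estimators, and $R$ is the number of replications. All computations are carried out in the R language.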
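A compact version of the Monte Carlo loop is sketched below in Python/NumPy, not the R code used in the article, for the MLE and the LRRE only (the ALLE is omitted). All of the following are hypothetical assumptions: the design is held fixed while responses are regenerated, the true $\beta$ is the leading eigenvector of $X^{\prime}X$, the ridge parameter is fixed at $k = 1$, $R = 200$ for speed, and the bias is summarized by the Euclidean norm of the mean deviation vector.

```python
import numpy as np


def logistic(eta):
    """Inverse logit."""
    return 1.0 / (1.0 + np.exp(-eta))


def irls_mle(X, y, tol=1e-8, max_iter=50):
    """MLE via the IRLS update of Equation (3); also returns X' W-hat X."""
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        pi = logistic(X @ beta)
        XtWX = X.T @ ((pi * (1 - pi))[:, None] * X)
        step = np.linalg.solve(XtWX, X.T @ (y - pi))
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    pi = logistic(X @ beta)
    return beta, X.T @ ((pi * (1 - pi))[:, None] * X)


def monte_carlo(n=100, p=3, rho=0.99, k=1.0, R=200, seed=6):
    """Return {estimator: (bias summary, MSE)} averaged over R replications."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n, p + 1))
    X = np.sqrt(1 - rho**2) * z[:, :p] + rho * z[:, [p]]   # fixed design
    beta = np.linalg.eigh(X.T @ X)[1][:, -1]               # assumed true beta
    dev = {"MLE": [], "LRRE": []}
    for _ in range(R):
        y = rng.binomial(1, logistic(X @ beta)).astype(float)       # new responses
        b_mle, XtWX = irls_mle(X, y)
        b_lrre = np.linalg.solve(XtWX + k * np.eye(p), XtWX @ b_mle)  # Equation (7)
        dev["MLE"].append(b_mle - beta)
        dev["LRRE"].append(b_lrre - beta)
    out = {}
    for name, d in dev.items():
        d = np.array(d)                                     # R x p deviations
        out[name] = (float(np.linalg.norm(d.mean(axis=0))),  # bias summary
                     float(np.mean(np.sum(d**2, axis=1))))   # MSE
    return out


results = monte_carlo()
```

With $\rho = 0.99$, the simulated MSE of the LRRE should be well below that of the MLE, in line with the pattern reported in Section 3.2.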

3.2. Discussion

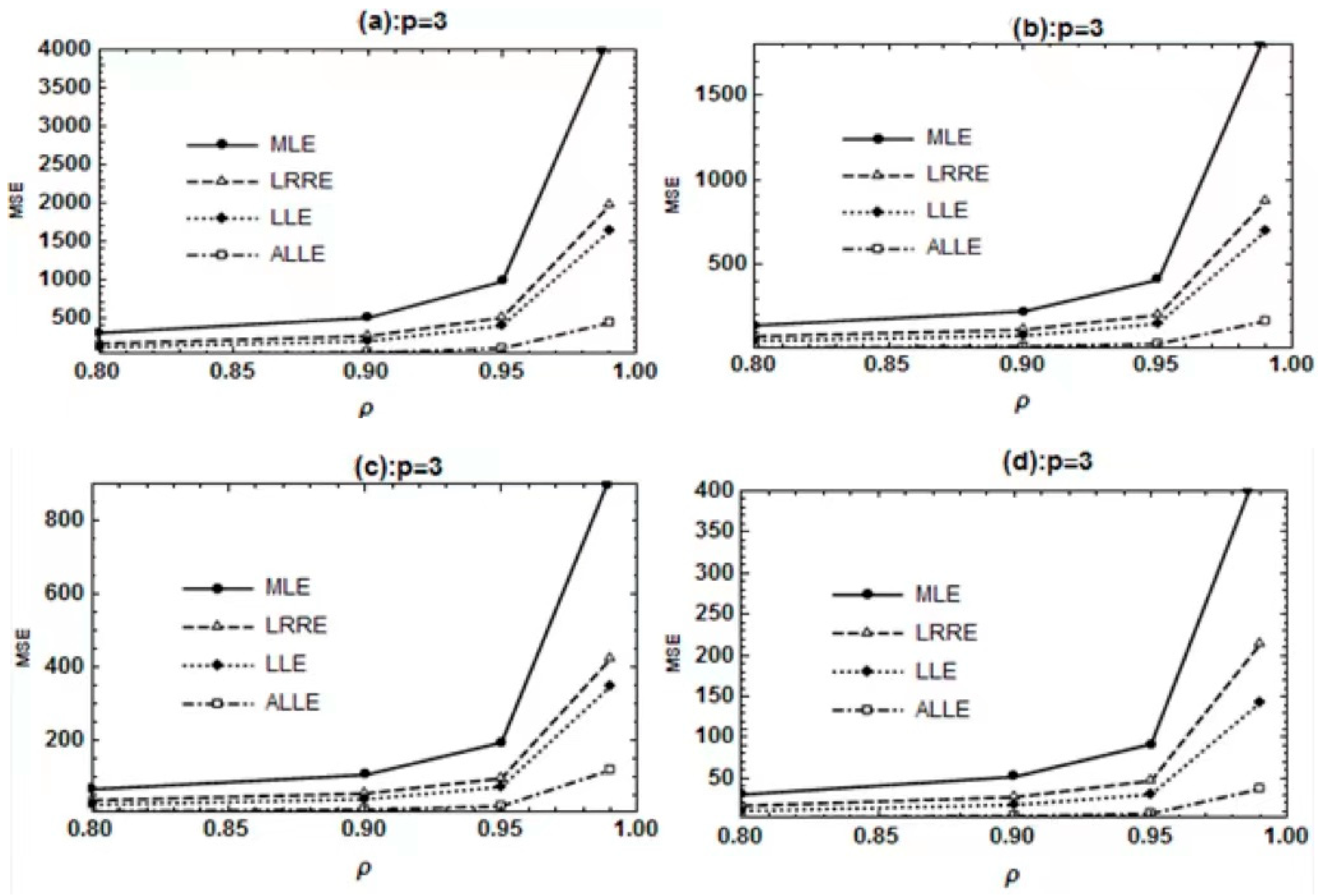

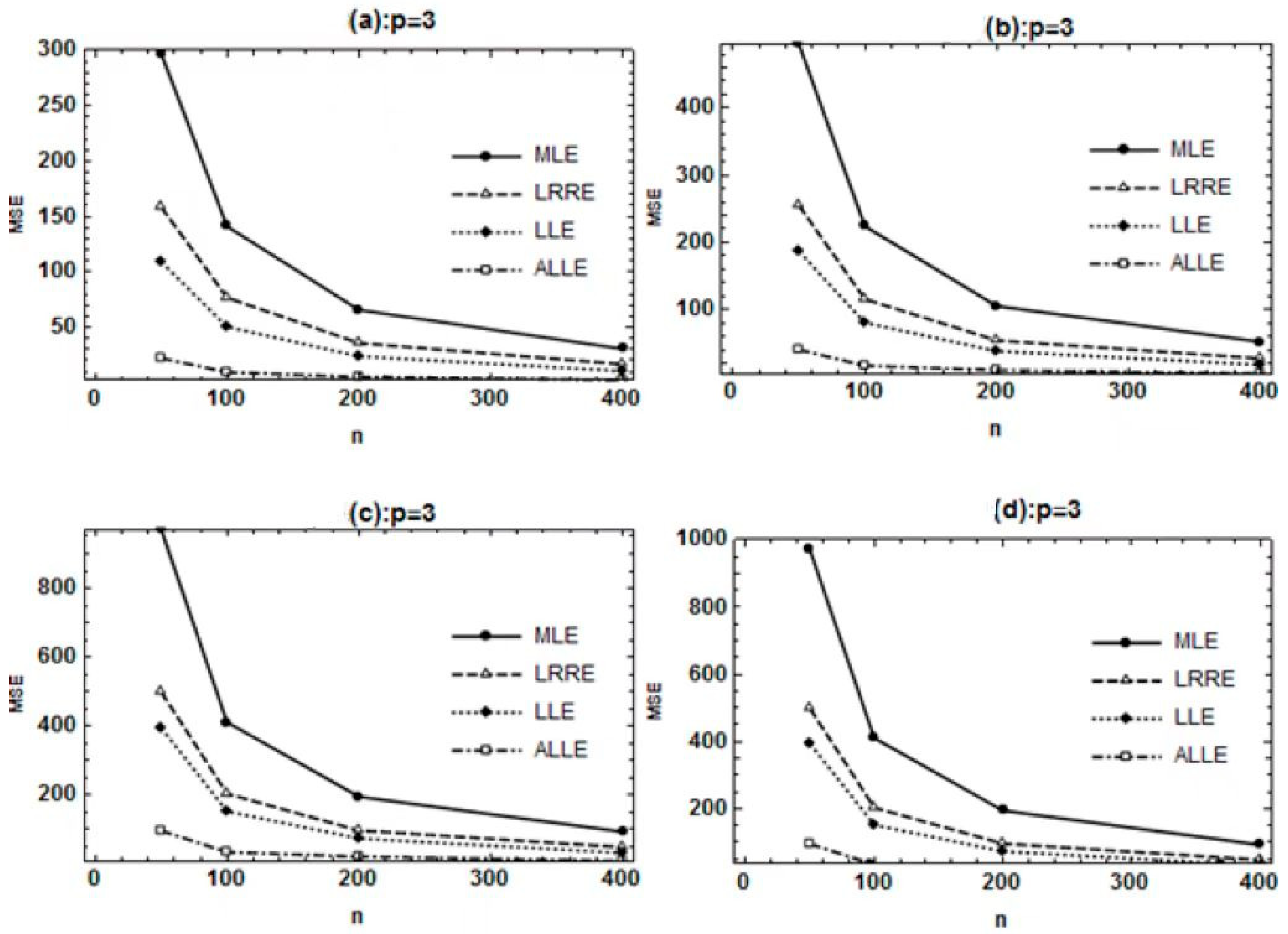

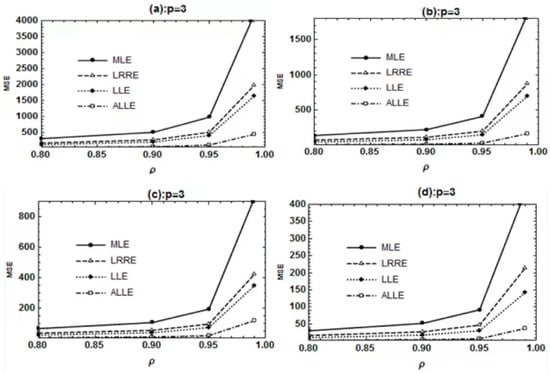

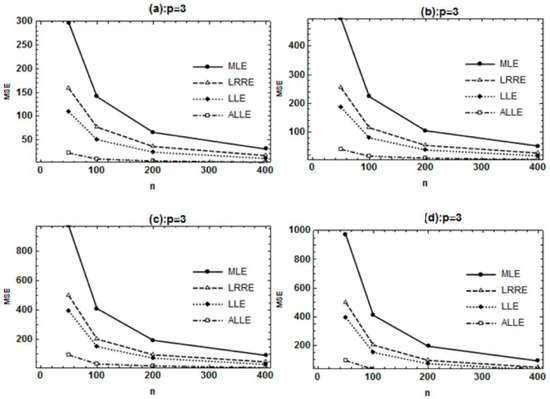

The estimated biases and MSEs of the BLRM estimators under different controlled conditions are summarized in Table 1, Table 2 and Table 3 for p = 3, 6 and 12, respectively. The simulated results in Table 1, Table 2 and Table 3 clearly demonstrate that the constructed ALLE outperforms the other estimators in terms of both bias and MSE. In all cases, the MLE performs worst, since its estimated MSE is larger than those of the other estimators. The simulation results also show a direct relationship between the scalar MSEs and the level of multicollinearity: as the multicollinearity level changes from mild to severe, the estimated MSEs increase for a given sample size and number of explanatory variables. Nevertheless, the proposed ALLE attains the minimum bias as well as the minimum estimated MSE. We also provide a graphical representation to give readers a clear picture of the constructed and other considered estimators. Figure 1a–d shows the effect of collinearity on the performance of the estimators under study for different sample sizes, and it clearly indicates that the proposed ALLE performs best at all levels of multicollinearity. In addition, with respect to the sample size, the estimated MSE values of all the estimators decrease as n increases; for more details, see Figure 2a–d. With regard to the number of explanatory variables, Table 1, Table 2 and Table 3 show that the estimated MSEs increase as p increases. Based on these results, the ALLE is the most suitable and stable option whenever the RV is binary and the explanatory variables are correlated, owing to its minimum bias and estimated MSE. Other biased estimators also attain a lower MSE than the MLE, but the variation in the MSE of the proposed ALLE is considerably smaller than that of the other estimators in all evaluated settings.

Table 1.

Bias and MSE values of the estimators for p = 3.

Table 2.

Bias and MSE values of the estimators for p = 6.

Table 3.

Bias and MSE values of the estimators for p = 12.

Figure 1.

The BLRM estimators in relation to multicollinearity, panels (a)–(d).

Figure 2.

The BLRM estimators for various sample sizes $n$, panels (a)–(d).

4. Application

Here, a real application is considered to assess the performance of the constructed estimator. For this purpose, prostate cancer data taken from Kutner et al. [31] are used. The RV is seminal vesicle invasion: it equals 1 if seminal vesicle invasion is present and 0 otherwise. The descriptions of the seven regressors are given in Table 4.

Table 4.

Description of the regressors.

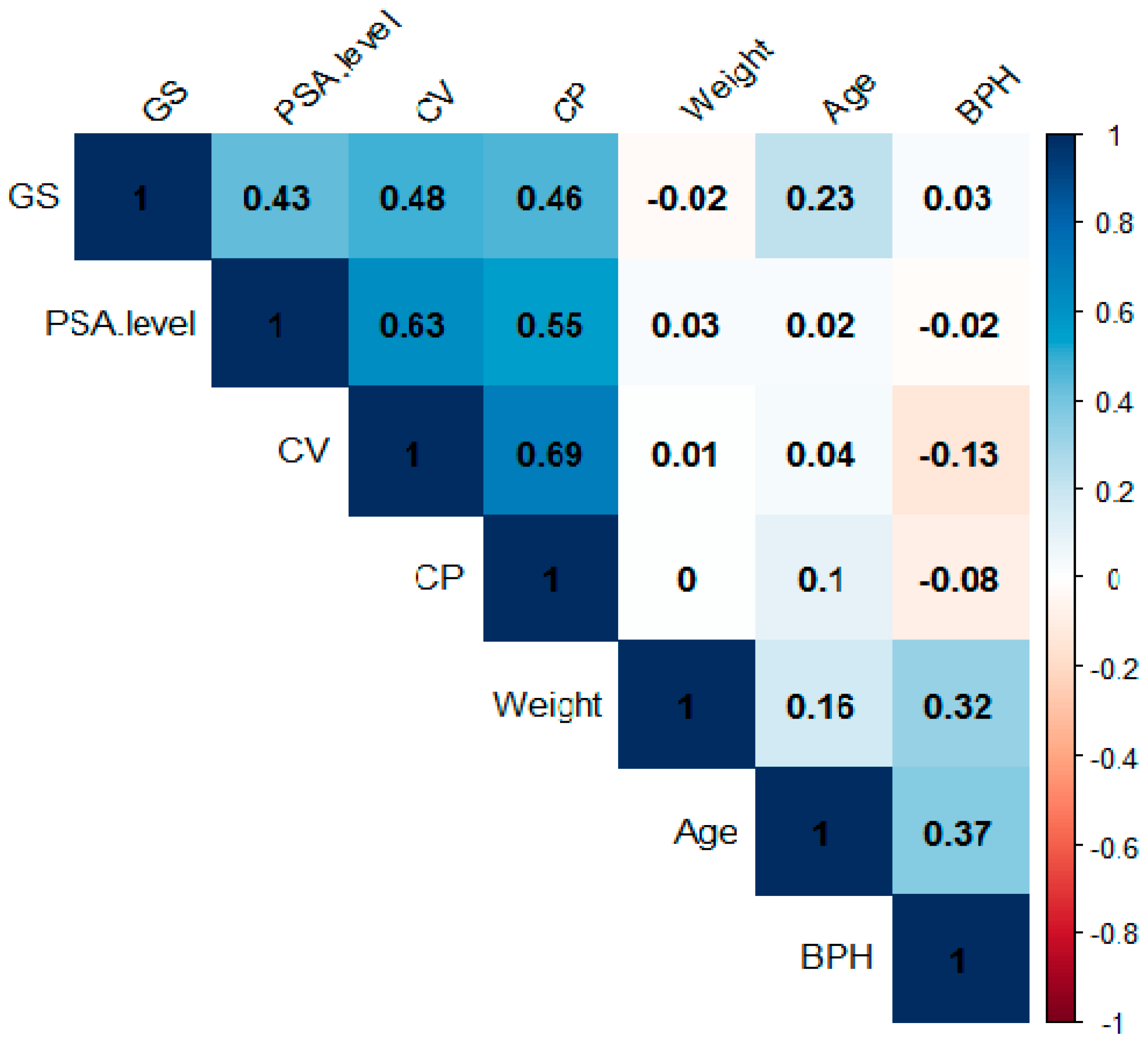

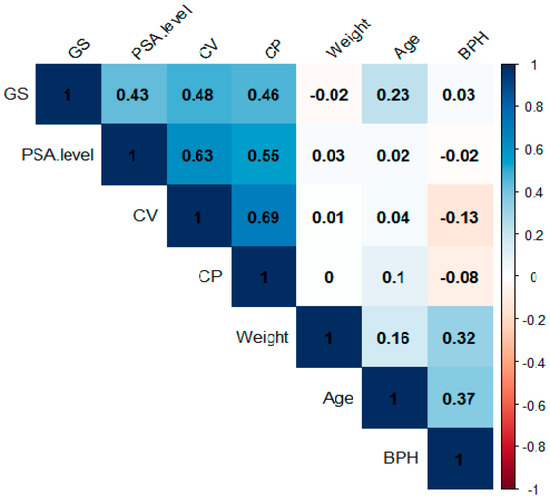

The multicollinearity of the regressors is assessed via the condition index (CI), which is computed from the eigenvalues of the relevant matrix without the intercept term; the eigenvalues of the corresponding matrix that includes the intercept term were also computed. The resulting CI confirms that there is a multicollinearity issue among the regressors. Moreover, we also compute the correlations among the regressors; the results, displayed in Figure 3, reveal moderate correlations between CV and PSA level, between CP and PSA level, and between CV and CP.
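As an illustration of how a CI can be computed from eigenvalues, the Python/NumPy sketch below returns both the eigenvalue ratio $\lambda_{\max}/\lambda_{\min}$ and its square root. Both quantities appear in the literature under the names condition number or condition index, and which one is used above is left open here as an assumption; the matrices are hypothetical, not the prostate cancer data.

```python
import numpy as np


def condition_measures(M):
    """Return (lambda_max / lambda_min, sqrt(lambda_max / lambda_min)) for a symmetric PD matrix."""
    lam = np.linalg.eigvalsh(M)          # eigenvalues in ascending order
    ratio = lam[-1] / lam[0]
    return float(ratio), float(np.sqrt(ratio))


# Hypothetical matrices for illustration (not the prostate cancer data).
well_conditioned = np.eye(3)
ill_conditioned = np.array([[1.0, 0.99, 0.98],
                            [0.99, 1.0, 0.99],
                            [0.98, 0.99, 1.0]])

cn_good, ci_good = condition_measures(well_conditioned)
cn_bad, ci_bad = condition_measures(ill_conditioned)
```

For the square-root version, values above roughly 30 are often read as a sign of strong collinearity; this rule of thumb is a convention, not a formal test.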

Figure 3.

Correlation matrix among the seven regressors of a prostate cancer data.

The estimated coefficients (EC) and the MSE values are presented in Table 5. The EC of the MLE, LRRE, LLE and ALLE are obtained from (4), (7), (13) and (18), respectively, and the scalar MSE values of the MLE, LRRE, LLE and ALLE are calculated using Equations (6), (11), (17) and (22), respectively. The ridge parameter $k$ of the LRRE is found to be 0.077, the Liu parameter of the LLE is 0.5689, and the shrinkage parameter of the proposed ALLE is 0.0693. Table 5 reveals that the ALLE shrinks the EC and reduces the MSE more effectively than the competing estimators. The MLE attains the largest MSE, which indicates that it is the most sensitive estimator when the regressors are multicollinear. The performance of the LRRE is better than that of the LLE and the MLE, while the ALLE performs better than both the other biased estimators and the MLE. Therefore, we recommend the ALLE when fitting the BLRM with correlated regressors because of its stable and robust behavior under multicollinearity.

Table 5.

Regression estimates and MSEs of the logistic regression estimators for the prostate cancer data.

5. Concluding Remarks

We constructed a new adjusted logistic LE (ALLE) for the binary LRM (BLRM) to handle the multicollinearity issue. The MLE is not a good choice whenever the regressors are multicollinear because of its inflated variance and standard errors. The efficacy of the constructed estimator was assessed via an MCS under various controlled conditions, covering different correlation levels, sample sizes and numbers of EVs. The findings showed that the ALLE performs better than the competing estimators and the MLE. Further, the MSE values of the MLE, LRRE, LLE and ALLE increase as the multicollinearity level increases. This effect is particularly pronounced for small sample sizes and high correlation; however, the increase is considerably smaller for the proposed ALLE. The superiority of the proposed estimator over the others is also supported by a real-data application. The findings from both the simulation and the application clearly support the constructed estimator as a good and robust estimation method whenever there is high but imperfect multicollinearity among the regressors. We therefore strongly advise practitioners to use the new estimator when estimating the unknown BLRM regression coefficients in the presence of multicollinearity.

Author Contributions

Authors contributed equally. All authors have read and agreed to the published version of the manuscript.

Funding

The author Nahid Fatima acknowledges Prince Sultan University for its support in paying the Article Processing Charges (APC) of this publication. This research was also supported by the Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R 299), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Data Availability Statement

The data are included within the article.

Acknowledgments

The author Nahid Fatima acknowledges Prince Sultan University for its support in paying the Article Processing Charges (APC) of this publication. The authors are thankful to the Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R 299), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Conflicts of Interest

There is no conflict of interest.

Appendix A

Proof of Theorem 2.1

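The difference between the MMSE matrices of the MLE and the ALLE can be written as

$$\Delta_1 = \operatorname{MMSE}(\hat{\beta}_{\mathrm{MLE}}) - \operatorname{MMSE}(\hat{\beta}_{\mathrm{ALLE}}) = D_1 - b_{\mathrm{ALLE}}b_{\mathrm{ALLE}}^{\prime},$$

where $D_1 = \operatorname{Cov}(\hat{\beta}_{\mathrm{MLE}}) - \operatorname{Cov}(\hat{\beta}_{\mathrm{ALLE}})$, $b_{\mathrm{ALLE}}$ is the bias of our proposed estimation method, and the MLE is (asymptotically) unbiased. Under the condition of Theorem 2.1, $D_1$ is positive definite, and $\Delta_1$ is positive definite if and only if $b_{\mathrm{ALLE}}^{\prime}D_1^{-1}b_{\mathrm{ALLE}} < 1$. Thus, the proof follows from Propositions 2.1 and 2.2. □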

Proof of Theorem 2.2

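The difference between the MMSE matrices of the LRRE and the ALLE can be written as

$$\Delta_2 = \operatorname{MMSE}(\hat{\beta}_{\mathrm{LRRE}}) - \operatorname{MMSE}(\hat{\beta}_{\mathrm{ALLE}}) = D_2 + b_{\mathrm{LRRE}}b_{\mathrm{LRRE}}^{\prime} - b_{\mathrm{ALLE}}b_{\mathrm{ALLE}}^{\prime},$$

where $D_2 = \operatorname{Cov}(\hat{\beta}_{\mathrm{LRRE}}) - \operatorname{Cov}(\hat{\beta}_{\mathrm{ALLE}})$, and $b_{\mathrm{LRRE}}$ and $b_{\mathrm{ALLE}}$ are the biases of the LRRE and our proposed ALLE, respectively. Under the condition of Theorem 2.2, $D_2$ is positive definite, so $D_2 + b_{\mathrm{LRRE}}b_{\mathrm{LRRE}}^{\prime}$ is also positive definite, and $\Delta_2$ is positive definite if and only if $b_{\mathrm{ALLE}}^{\prime}(D_2 + b_{\mathrm{LRRE}}b_{\mathrm{LRRE}}^{\prime})^{-1}b_{\mathrm{ALLE}} < 1$. Thus, the proof follows from Propositions 2.1 and 2.2. □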

References

- Lin, E.; Lin, C.-H.; Lane, H.-Y. Logistic ridge regression to predict bipolar disorder using mRNA expression levels in the N-methyl-D-aspartate receptor genes. J. Affect. Disord. 2022, 297, 309–313.

- Afzal, N.; Amanullah, M. Dawoud–Kibria Estimator for the Logistic Regression Model: Method, Simulation and Application. Iran. J. Sci. Tech. Trans. A Sci. 2022, 46, 1483–1493.

- Schaefer, R.L. Alternative estimators in logistic regression when the data are collinear. J. Stat. Comput. Simul. 1986, 25, 75–91.

- Lukman, A.F.; Ayinde, K. Review and classifications of the ridge parameter estimation techniques. Hacet. J. Math. Stat. 2017, 46, 953–967.

- Frisch, R. Statistical confluence analysis by means of complete regression systems. Econ. J. 1934, 45, 741–742.

- Hoerl, A.E.; Kennard, R.W. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 1970, 12, 55–67.

- Schaefer, R.L.; Roi, L.D.; Wolfe, R.A. A ridge logistic estimator. Commun. Stat.—Theor. Methods 1984, 13, 99–113.

- Lee, A.H.; Silvapulle, M.J. Ridge estimation in logistic regression. Commun. Stat. Simul. Comput. 1988, 17, 1231–1257.

- Cessie, S.L.; Houwelingen, J.C.V. Ridge estimators in logistic regression. J. R. Stat. Soc. C 1992, 41, 191–201.

- Segerstedt, B. On ordinary ridge regression in generalized linear models. Commun. Stat. Theor. Method. 1992, 21, 2227–2246.

- Akram, M.N.; Amin, M.; Elhassanein, A.; Aman Ullah, M. A new modified ridge-type estimator for the beta regression model: Simulation and application. AIMS Math. 2022, 7, 1035–1057.

- Kibria, B.M.G.; Månsson, K.; Shukur, G. Performance of some logistic ridge regression estimators. Comput. Econ. 2012, 40, 401–414.

- Asar, Y. Some new methods to solve multicollinearity in logistic regression. Commun. Stat. Simul. Comput. 2017, 46, 2576–2586.

- Hadia, M.; Amin, M.; Akram, M.N. Comparison of link functions for the estimation of logistic ridge regression: An application to urine data. Commun. Stat. Simul. Comput. 2022.

- Liu, K. A new class of biased estimate in linear regression. Commun. Stat. 1993, 22, 393–402.

- Mansson, K.; Kibria, B.M.G.; Shukur, G. On Liu estimators for the logit regression model. Econ. Model. 2012, 29, 1483–1488.

- Qasim, M.; Amin, M.; Amanullah, M. On the performance of some new Liu parameters for the gamma regression model. J. Stat. Comput. Simul. 2018, 88, 3065–3080.

- İnan, D.; Erdoğan, B.E. Liu-type logistic estimator. Commun. Stat. Simul. Comput. 2013, 42, 1578–1586.

- Şiray, G.Ü.; Toker, S.; Kaçiranlar, S. On the restricted Liu estimator in the logistic regression model. Commun. Stat. Simul. Comput. 2015, 44, 217–232.

- Asar, Y.; Genc, A. New shrinkage parameters for the Liu-type logistic estimators. Commun. Stat. Simul. Comput. 2016, 45, 1094–1103.

- Wu, J. Modified restricted Liu estimator in logistic regression model. Comput. Stat. 2016, 31, 1557–1567.

- Wu, J.; Asar, Y. More on the restricted Liu estimator in the logistic regression model. Commun. Stat. Simul. Comput. 2017, 46, 3680–3689.

- Lukman, A.F.; Kibria, B.M.G.; Ayinde, K.; Jegede, S.L. Modified one-parameter Liu estimator for the linear regression model. Model. Simul. Eng. 2020, 2020, 9574304.

- Wu, J.; Asar, Y.; Arashi, M. On the restricted almost unbiased Liu estimator in the logistic regression model. Commun. Stat. Theor. Method. 2018, 47, 4389–4401.

- Varathan, N.; Wijekoon, P. Logistic Liu estimator under stochastic linear restrictions. Stat. Pap. 2019, 60, 595–612.

- Pregibon, D. Logistic regression diagnostics. Ann. Stat. 1981, 9, 705–724.

- Li, Y.; Asar, Y.; Wu, J. On the stochastic restricted Liu estimator in logistic regression model. J. Stat. Comput. Simul. 2020, 90, 2766–2788.

- Amin, M.; Akram, M.N.; Kibria, B.M.G. A new adjusted Liu estimator for the Poisson regression model. Concurr. Comput. Pract. Exper. 2021, 33, e6340.

- Farebrother, R.W. Further results on the mean square error of ridge regression. J. R. Stat. Soc. 1976, 38, 248–250.

- Mustafa, S.; Amin, M.; Akram, M.N.; Afzal, N. On the performance of link functions in the beta ridge regression model: Simulation and application. Concurr. Comput. Pract. Exper. 2022, 34, e7005.

- Kutner, M.H.; Nachtsheim, C.J.; Neter, J.; Li, W. Applied Linear Statistical Models, 5th ed.; McGraw Hill: New York, NY, USA, 2005.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).