3.1. Dataset

We used two datasets containing half-hourly operational data for two independent SAG mills, covering 340 and 331 days, respectively. Each SAG mill receives fresh feed and operates in an open-circuit configuration (SABC-B), in which the pebble crusher product is sent to the ball mills. At each time $t$, the dataset contains feed tonnage (FT) (ton/h), energy consumption (EC) (kWh), bearing pressure (BPr) (psi) and spindle speed (SSp) (rpm). The data are split into two main subsets, training and testing (Table 2). A validation dataset is not considered because the optimum LSTM architecture is drawn from previous work [14]. This division is arbitrary, and we aim for a proportion of approximately 50/50.

As can be seen in Table 2, the predictive methods are trained on the first 50% of the data and tested on the subsequent 50%; during testing, they are not fed the preceding 50% of historical data.
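The chronological split described above can be sketched as follows. This is a minimal illustration, assuming the records are already sorted in time; the function name `chronological_split` is hypothetical. No shuffling is applied, so every test record lies strictly after every training record.

```python
def chronological_split(records, train_fraction=0.5):
    """Split a time-ordered sequence into contiguous training and testing blocks.

    The first `train_fraction` of the records is used for training and the
    remainder, which follows it in time, for testing. No shuffling is done.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must lie strictly between 0 and 1")
    cut = int(len(records) * train_fraction)
    return records[:cut], records[cut:]


# Hypothetical example: 340 days of half-hourly samples (48 samples per day).
n_samples = 340 * 48
train, test = chronological_split(list(range(n_samples)))
print(len(train), len(test))  # 8160 8160
```

Keeping the split contiguous, rather than random, mirrors the forecasting setting: the models never see data from the testing period during training.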

Note that the comminution properties of the ore, such as or BWi, are not included in the datasets; therefore, the relationship between forecasted ORH and comminution properties is not explored in this work. The results herein presented, however, serve as a basis to examine such a relationship if those properties were known.

3.2. Assumptions

SAG mills are fundamental pieces of equipment in comminution circuits. As no information regarding upstream or downstream processes is available, identifying bottlenecks in the dataset becomes subjective. We assume that the SAG mills may shift from steady-state operation to under-capacity operation, and vice versa, throughout the dataset. Thus, stationarity of the distributions of all operational variables, including ore grindability, is assumed throughout this work. This means that the entire dataset belongs to a known and planned combination of ore characteristics (geometallurgical units). As a consequence, the present models are not applicable beyond the period covered by the dataset unless they are properly retrained.

As explained in the problem statement section, we use the temporal averages of energy consumption and feed tonnage as input for operational relative-hardness prediction. We therefore assume that these variables are additive: because their units are kWh and ton/h, respectively, and the temporal discretization is constant, averaging adjacent data points is mathematically consistent.

In the operation from which the datasets were obtained, the SAG mill liners are replaced every 5–7 months. Since the datasets cover almost a year, the liners were replaced in each SAG mill at least once during the recorded period. This may alter the relationship between energy consumption and other operational variables and induce a discontinuity in the temporal plots. However, since the temporal window for ORH evaluation in this work is eight hours, the local discontinuity associated with liner replacement is not expected to affect the forecast at that time scale: the ORH depends on what happened in the corresponding mill within the last few hours, not on the mill behaviour prior to the last liner replacement.

3.3. Problem Statement

The aim is to forecast the operational relative-hardness (ORH). To do so, each data point in the datasets must be labelled with its associated ORH category. As shown in Equation (1), the ORH labelling process requires as input (i) the one-step forward differences in energy consumption ($\Delta EC$) and feed tonnage ($\Delta FT$), and (ii) a value of $\lambda$. In addition, we are interested in forecasting the ORH at different time supports.

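Since the information is collected every 30 min, the upcoming energy consumption $EC^{0.5}_{t+1}$ and feed tonnage $FT^{0.5}_{t+1}$ at 0.5 h support are denoted simply as $EC_{t+1}$ and $FT_{t+1}$, relative to the current values $EC_t$ and $FT_t$. The upcoming EC and FT at 1 h support, $EC^{1}_{t+1}$ and $FT^{1}_{t+1}$, are computed by averaging the next two energy consumption values, $EC_{t+1}$ and $EC_{t+2}$, and the next two feed tonnage values, $FT_{t+1}$ and $FT_{t+2}$. Similarly, other supports are obtained by averaging a larger number of upcoming EC and FT values. Let $s$ be the time support in hours, i.e., the duration of the temporal interval over which the values are averaged. Since an interval of $s$ hours contains $2s$ half-hourly data points, $EC^{s}_{t+1}$ and $FT^{s}_{t+1}$ are calculated as:

$$EC^{s}_{t+1} = \frac{1}{2s}\sum_{i=1}^{2s} EC_{t+i}, \qquad FT^{s}_{t+1} = \frac{1}{2s}\sum_{i=1}^{2s} FT_{t+i}$$

In this experiment, three different supports ($s$) are considered: 0.5, 2 and 8 h.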
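The averaging over a time support $s$ can be sketched as below. This is a minimal illustration under the stated assumption of a constant 0.5 h sampling step; the function name `upcoming_average` and the toy values are hypothetical. It returns the mean of the $2s$ values that follow index $t$, so a 0.5 h support reduces to the next single value.

```python
def upcoming_average(series, t, support_h, step_h=0.5):
    """Mean of the n = support_h / step_h values following index t.

    With half-hourly data, n = 2s, so this computes EC^s_{t+1} (or FT^s_{t+1}).
    Assumes a constant sampling step of step_h hours.
    """
    n = round(support_h / step_h)  # number of samples inside the support
    if n < 1 or abs(n * step_h - support_h) > 1e-9:
        raise ValueError("support must be a positive multiple of the sampling step")
    window = series[t + 1 : t + 1 + n]
    if len(window) < n:
        raise IndexError("not enough upcoming samples for this support")
    return sum(window) / n


ec = [100.0, 110.0, 120.0, 130.0, 140.0]  # toy half-hourly EC values (kWh)
print(upcoming_average(ec, 0, 0.5))  # 110.0
print(upcoming_average(ec, 0, 1.0))  # 115.0
print(upcoming_average(ec, 0, 2.0))  # 125.0
```

An 8 h support would need 16 upcoming samples, so the last 16 time steps of a series cannot be labelled at that support.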

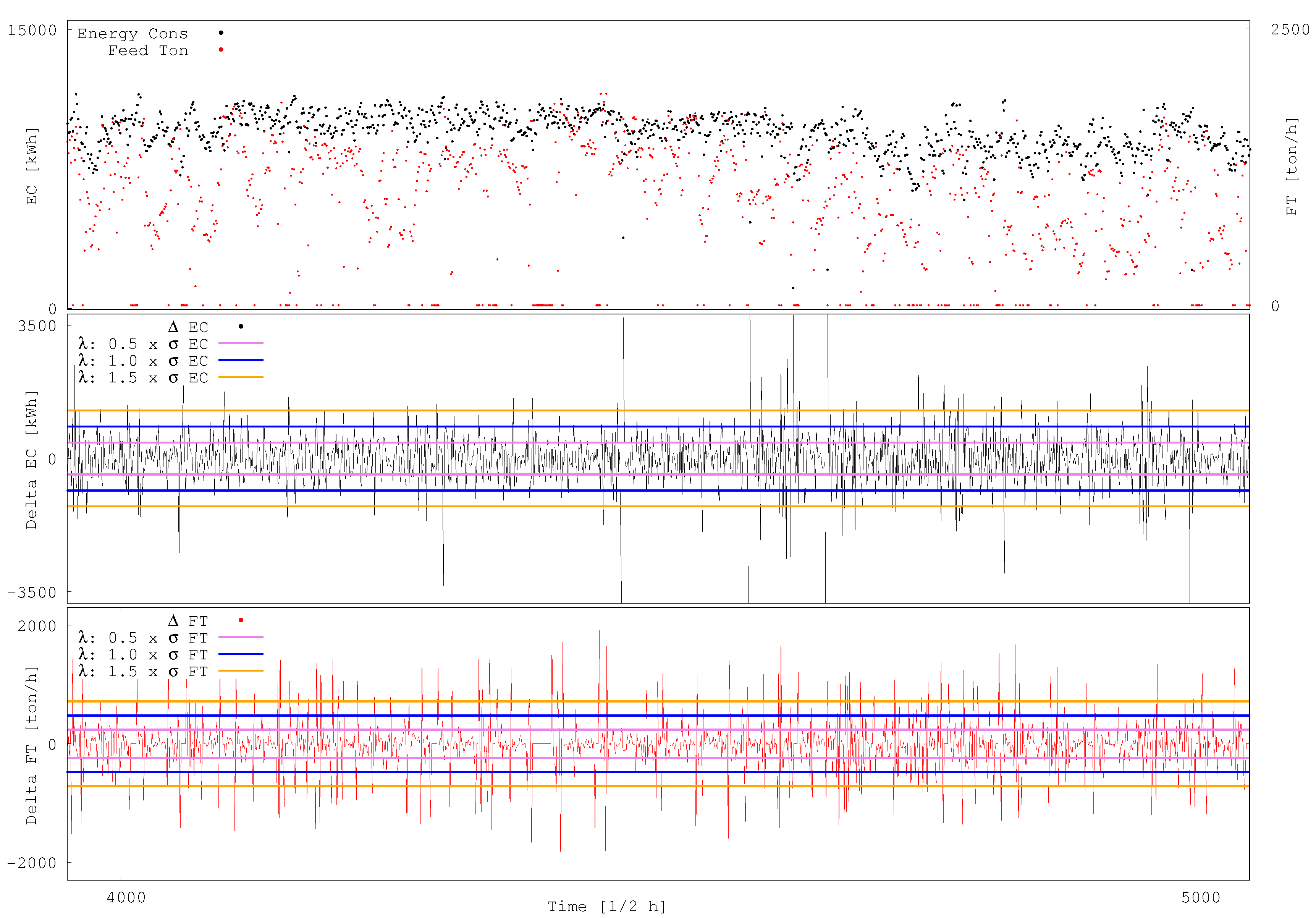

Figure 2 illustrates the ORH criteria using a half-hour time support on SAG mill 1 dataset. From the daily graph of EC

and FT

at the top, the graph of

EC

and

FT

are extracted and presented at the centre and bottom, respectively. Three different bands, corresponding to

: 0.5, 1.0 and 1.5, are shown. The values that are above the band are considered as increasing, the ones below it are considered as decreasing and inside as undefined (relatively constant). The corresponding categories for EC and FT are used to define the operational relative-hardness (as in

Table 1). It can be seen that, when

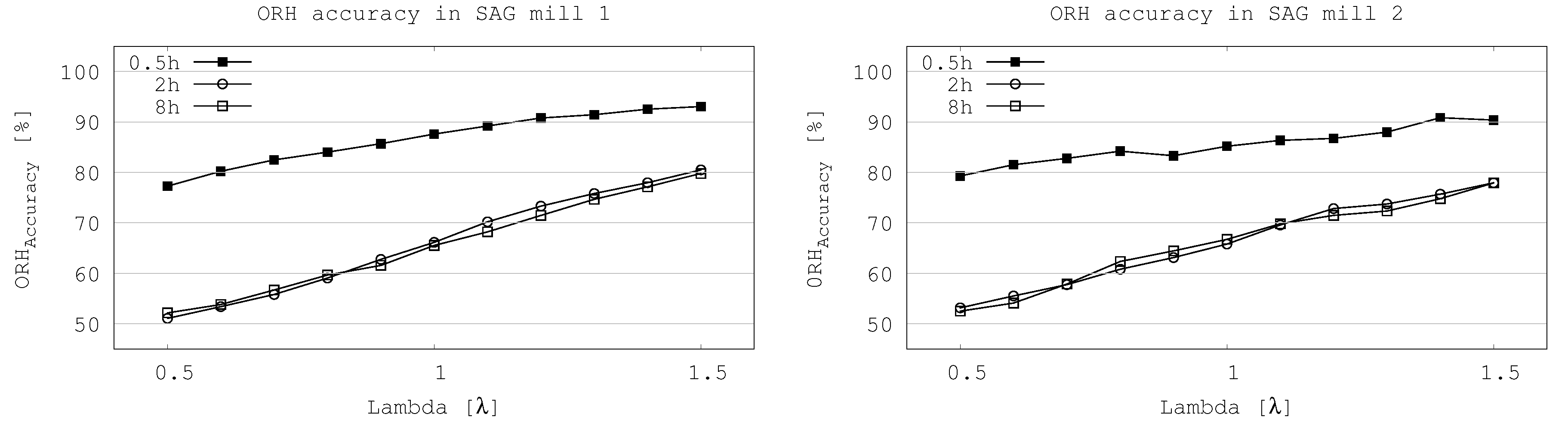

increases, the proportions of hard and soft instances decrease. Since

is an arbitrary parameter, a sensitivity analysis is performed in the range

to capture its influence on the resulting LSTM accuracy to suitably learn to predict the ORH at the different time supports.
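The band criterion and the effect of $\lambda$ can be sketched as follows. This is a minimal illustration, not the authors' code, and it rests on two assumptions: the band is taken as $[-\lambda, \lambda]$ applied directly to the differences as supplied (e.g. already standardized), and `ORH_TABLE` is a hypothetical stand-in for Table 1 that must be replaced by the actual mapping. All function names and toy values are hypothetical.

```python
from collections import Counter


def band_category(delta, lam):
    """Classify a one-step difference against the band [-lam, lam]."""
    if delta > lam:
        return "increasing"
    if delta < -lam:
        return "decreasing"
    return "undefined"


# HYPOTHETICAL stand-in for Table 1: (EC category, FT category) -> ORH.
# Any combination not listed is treated as "undefined".
ORH_TABLE = {
    ("increasing", "decreasing"): "hard",
    ("decreasing", "increasing"): "soft",
}


def forward_differences(series):
    """One-step forward differences x_{t+1} - x_t."""
    return [b - a for a, b in zip(series, series[1:])]


def label_orh(ec, ft, lam):
    d_ec = forward_differences(ec)
    d_ft = forward_differences(ft)
    return [
        ORH_TABLE.get((band_category(a, lam), band_category(b, lam)), "undefined")
        for a, b in zip(d_ec, d_ft)
    ]


# Toy standardized series, invented for illustration only.
ec = [0.0, 1.2, 0.1, -1.1, 0.6, 2.4, 1.0]
ft = [0.0, -0.9, 0.3, 1.4, 0.5, -1.3, -0.2]
for lam in (0.5, 1.0, 1.5):
    counts = Counter(label_orh(ec, ft, lam))
    print(lam, counts["hard"], counts["soft"], counts["undefined"])
```

Because a wider band can only move differences into the "undefined" category, the hard and soft counts are non-increasing in $\lambda$, which is the trend reported for Figure 2.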

At each time $t$, the input variables considered to predict the ORH are $FT_t$, $BPr_t$ and $SSp_t$. To account for trends, and since FT and SSp are operational decisions, the differences $FT_t - FT_{t-1}$ and $SSp_t - SSp_{t-1}$ are also considered as inputs. Therefore, at each time support $s$, the dataset of predictors $\mathbf{x}_t$ and output $y^{s}_{t+1}$ consists of samples made of $FT_t$, $BPr_t$, $SSp_t$, $FT_t - FT_{t-1}$, $SSp_t - SSp_{t-1}$ and $y^{s}_{t+1}$. We also tried several other combinations of input variables, but all of them led to lower-quality results. A temporal window covering the previous four hours (eight consecutive data points) is used as input for training and testing the LSTM models.
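Assembling the five predictors per time step can be sketched as below. This is a minimal illustration assuming the trend terms are backward differences ($FT_t - FT_{t-1}$, $SSp_t - SSp_{t-1}$), so that only information available at time $t$ is used; the function name and toy values are hypothetical.

```python
def build_features(ft, bpr, ssp):
    """Return one input vector per time t >= 1:
    [FT_t, BPr_t, SSp_t, FT_t - FT_{t-1}, SSp_t - SSp_{t-1}].

    The first time step is dropped because it has no predecessor.
    """
    if not len(ft) == len(bpr) == len(ssp):
        raise ValueError("all series must have the same length")
    return [
        [ft[t], bpr[t], ssp[t], ft[t] - ft[t - 1], ssp[t] - ssp[t - 1]]
        for t in range(1, len(ft))
    ]


# Toy values (ton/h, psi, rpm), invented for illustration only.
rows = build_features([1000, 1020, 1010], [900, 905, 910], [9.0, 9.5, 9.5])
print(rows)
```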

3.4. Preprocessing Dataset

A preprocessing step is performed on the raw datasets to make them suitable for deep neural network training and inference. The aim is to bring all input attributes into a suitable range of the non-linear transfer functions via normalization, and to encode the categorical outputs via one-hot encoding. To this end, we normalize the entire raw dataset with the mean and standard deviation of the training dataset.

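Let $x_t$ be one of the five input variables in $\mathbf{x}_t$ at time $t$; its normalized value is computed as $\tilde{x}_t = (x_t - \mu_x)/\sigma_x$, where $\mu_x$ and $\sigma_x$ are the mean and standard deviation of $x$ in the training dataset. We normalize the first three attributes of $\mathbf{x}_t$ ($FT_t$, $BPr_t$ and $SSp_t$), while the last two attributes, the differences between the original values $FT_t - FT_{t-1}$ and $SSp_t - SSp_{t-1}$, are replaced by the corresponding differences between the normalized values, $\widetilde{FT}_t - \widetilde{FT}_{t-1}$ and $\widetilde{SSp}_t - \widetilde{SSp}_{t-1}$.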
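The normalization rule above can be sketched as follows. This is a minimal illustration that assumes the population standard deviation (`statistics.pstdev`); the text does not say which estimator is used. The statistics are fitted on the first `n_train` samples only and then applied to the whole series, and the two trend attributes are built from the normalized FT and SSp. Function names and toy values are hypothetical.

```python
from statistics import mean, pstdev


def standardize(series, n_train):
    """Standardize a whole series with mean/std of its first n_train values."""
    mu = mean(series[:n_train])
    sigma = pstdev(series[:n_train])  # assumption: population std
    if sigma == 0:
        raise ValueError("constant training series cannot be standardized")
    return [(v - mu) / sigma for v in series]


def normalized_inputs(ft, bpr, ssp, n_train):
    """Five inputs per t >= 1, with the differences taken on normalized values."""
    z_ft = standardize(ft, n_train)
    z_bpr = standardize(bpr, n_train)
    z_ssp = standardize(ssp, n_train)
    return [
        [z_ft[t], z_bpr[t], z_ssp[t], z_ft[t] - z_ft[t - 1], z_ssp[t] - z_ssp[t - 1]]
        for t in range(1, len(ft))
    ]


rows = normalized_inputs([1.0, 3.0, 5.0, 7.0], [2.0, 4.0, 6.0, 8.0],
                         [0.0, 2.0, 2.0, 4.0], n_train=2)
print(rows[0])  # [1.0, 1.0, 1.0, 2.0, 2.0]
```

Fitting the statistics on the training block only avoids leaking information from the testing period into the preprocessing.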

The known operational relative-hardness at time $t$ ($y_t$) is one-hot encoded, so that soft, undefined and hard are each represented by a distinct three-element binary vector.
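One-hot encoding of the three ORH categories, and the inverse mapping from a predicted probability vector back to a label, can be sketched as below. The class order in `CLASSES` is an assumption; any fixed order works as long as encoding and decoding share it.

```python
CLASSES = ("soft", "undefined", "hard")  # assumed class order


def one_hot(label, classes=CLASSES):
    """Encode an ORH label as a one-hot list, e.g. 'soft' -> [1, 0, 0]."""
    vector = [0] * len(classes)
    vector[classes.index(label)] = 1
    return vector


def decode(scores, classes=CLASSES):
    """Map a score or probability vector back to the most likely ORH label."""
    return classes[max(range(len(scores)), key=scores.__getitem__)]


print(one_hot("hard"))          # [0, 0, 1]
print(decode([0.2, 0.1, 0.7]))  # hard
```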

3.5. Optimal LSTM Architecture

From the training dataset, sequences of length $L$ are extracted to train the LSTM model to forecast the operational relative-hardness at the next time step, $t+1$, at different time supports. The chosen length is four hours ($L = 8$).
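Extracting training sequences can be sketched as a sliding window. This is a minimal illustration assuming `labels[t]` holds the ORH category at time `t`, so that the window ending at `t` is paired with `labels[t + 1]`; the function name is hypothetical.

```python
def make_sequences(features, labels, length=8):
    """Pair each window of `length` consecutive input vectors ending at t
    with the ORH label at t + 1 (the next time step)."""
    if len(features) != len(labels):
        raise ValueError("features and labels must be aligned in time")
    xs, ys = [], []
    for end in range(length - 1, len(features) - 1):
        xs.append(features[end - length + 1 : end + 1])
        ys.append(labels[end + 1])
    return xs, ys


# Toy example: 10 time steps with a single feature each.
xs, ys = make_sequences([[i] for i in range(10)], list(range(10)))
print(len(xs), ys)  # 2 [8, 9]
```

With half-hourly data, `length=8` corresponds to the four-hour window used here.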

The external hyper-parameter to be optimized in any LSTM architecture is the number of hidden units, $n_h$. The optimum number of hidden units found in previous work [14] is used here; the values are displayed in Table 3.
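To make the role of $n_h$ concrete, the forward pass of a single-layer LSTM classifier can be sketched in plain Python. This is a generic illustration, not the architecture from [14]: it assumes one LSTM layer followed by a dense softmax layer over the three ORH classes, with random weights, so it shows only the shapes and the gate equations. The class name and the placeholder `n_hidden=4` are hypothetical; the actual value would come from Table 3.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Plain-Python matrix-vector product."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


class TinyLSTMClassifier:
    """One LSTM layer (n_hidden units) + dense softmax over the ORH classes.

    Weights are random: this illustrates shapes and the forward pass only.
    """

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, n_inputs=5, n_hidden=4, n_classes=3, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.n_hidden = n_hidden
        # Each gate acts on the concatenation [x_t, h_{t-1}].
        self.W = {g: init(n_hidden, n_inputs + n_hidden) for g in self.GATES}
        self.b = {g: [0.0] * n_hidden for g in self.GATES}
        self.W_out = init(n_classes, n_hidden)
        self.b_out = [0.0] * n_classes

    def _gate(self, name, z, act):
        pre = matvec(self.W[name], z)
        return [act(p + b) for p, b in zip(pre, self.b[name])]

    def forward(self, sequence):
        """Return class probabilities after reading the whole input window."""
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in sequence:
            z = list(x) + h
            i = self._gate("input", z, sigmoid)
            f = self._gate("forget", z, sigmoid)
            o = self._gate("output", z, sigmoid)
            g = self._gate("candidate", z, math.tanh)
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        logits = [s + b for s, b in zip(matvec(self.W_out, h), self.b_out)]
        top = max(logits)  # subtract the max for a numerically stable softmax
        exps = [math.exp(v - top) for v in logits]
        total = sum(exps)
        return [e / total for e in exps]


model = TinyLSTMClassifier(n_hidden=4)  # placeholder; use the Table 3 value
window = [[0.1, -0.2, 0.3, 0.0, 0.05]] * 8  # 8 half-hour steps x 5 inputs
probs = model.forward(window)
print(len(probs), round(sum(probs), 6))
```

Only the last hidden state feeds the softmax layer, which matches a many-to-one setup where an eight-step window produces a single forecast for $t+1$.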

The Adam optimizer is used to train the LSTM, with its hyper-parameters set as recommended by [17].
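A single Adam update can be sketched as below. This is a minimal illustration of the standard Adam rule with bias-corrected moment estimates; the defaults shown ($\alpha = 10^{-3}$, $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 10^{-8}$) are those proposed in the original Adam paper, and assuming they coincide with the values recommended by [17] is an assumption. The function name and the toy objective are hypothetical.

```python
import math


def adam_step(params, grads, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update over lists of parameters; t is the 1-based step count.

    Returns the updated parameters and first/second moment estimates.
    """
    new_params, new_m, new_v = [], [], []
    for p, g, mk, vk in zip(params, grads, m, v):
        mk = beta1 * mk + (1 - beta1) * g       # first moment (mean of gradients)
        vk = beta2 * vk + (1 - beta2) * g * g   # second moment (uncentred variance)
        m_hat = mk / (1 - beta1 ** t)           # bias corrections
        v_hat = vk / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(mk)
        new_v.append(vk)
    return new_params, new_m, new_v


# Toy usage: minimize f(x) = x^2 (gradient 2x) starting from x = 1.
x, m, v = [1.0], [0.0], [0.0]
for step in range(1, 101):
    x, m, v = adam_step(x, [2.0 * x[0]], m, v, step)
print(round(x[0], 3))
```

Because of the bias correction, the first step moves each parameter by almost exactly the learning rate, regardless of the gradient scale.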