Abstract

The Multiple Mobile Robot (MMR) cooperative system is becoming a focus of study in various fields due to its advantages, such as high efficiency and good fault tolerance. However, the uncertainty and nonlinearity problems severely limit the cooperative localization accuracy of the MMR system. Thus, to solve the problems mentioned above, this manuscript presents a cooperative localization algorithm for MMR systems based on Cubature Kalman Filter (CKF) and adaptive Variance Component Estimation (VCE) methods. In this novel algorithm, a nonlinear filter named CKF is used to enhance the cooperative localization accuracy and reduce the computational load. On the other hand, the adaptive VCE method is introduced to eliminate the effects of unknown system noise. Furthermore, the performance of the proposed algorithm is compared with that of the cooperative localization algorithm based on normal CKF by utilizing the real experiment data. In addition, the results demonstrate that the proposed algorithm outperforms the CKF cooperative localization algorithm both in accuracy and consistency.

1. Introduction

In recent years, with the development of robot technology, network communication technology and automatic control technology, the Multiple Mobile Robot (MMR) cooperative system has attracted the attention of many researchers. Using multiple mobile robots to execute the task, the MMR cooperative system can not only enhance operation efficiency but also improve the capability of fault tolerance and reconfiguration. Therefore, the MMR cooperative system has shown broad application prospects and is becoming a focus of study in various fields, such as the military, space exploration, traffic control, medical and service trades [1,2].

Exactly knowing the position, velocity, attitude and other motion information of each mobile robot is one of the basic premises of the MMR cooperative system [3,4]. This means that it requires the ability of self-localization for each mobile robot. The MMR cooperative system could achieve the goal of localization by each mobile robot separately and the localization accuracy just depends on the accuracy of the navigation system that is equipped on each mobile robot. Although this kind of localization scheme is simple to achieve, the localization accuracy is limited by the insufficiency of information sharing [5,6]. The relative range or relative bearing between different mobile robots, observed by binocular cameras, monocular cameras or other kinds of sensors, is exchanged among the mobile robot group [1,7]. In addition, the performance of this kind of navigation scheme, which is called cooperative navigation, is superior to the one of the separate navigation scheme [8,9]. In a cooperative navigation system, the performance of a low accuracy navigation system will be improved significantly by utilizing the navigation information from a high accuracy navigation system [10,11]. Furthermore, when some mobile robots lose their capacity of self-localization, the cooperative navigation will help them to recover it [12]. Since the cooperative navigation has so many potential advantages in robotics, it is becoming a key research direction.

The cooperative navigation algorithm is the core and infrastructure of cooperative navigation [13,14]. Typically, the structure of a cooperative navigation algorithm can be implemented in the central centralized manner [15] or the distributed manner [16]. In the centralized manner, all measurement data are collected and processed in a central agent. In addition, in the distributed manner, each agent exchanges information with its neighbors and estimates its own position by itself. Taking computational cost, survivability, and reliability into account, the distributed manner is superior to the centralized manner [17,18].

1.1. Problem Statements

A cooperative navigation algorithm is essentially a data fusion technique that is used to estimate the pose of each mobile robot by utilizing the output of the sensors equipped on each mobile robot. Due to the characteristics of the nonlinearity of MMR systems, nonlinear estimation methods, such as the extended Kalman filter (EKF) and the unscented Kalman filter (UKF), have proven to be effective methods for MMR cooperative localization problems. In the EKF, the nonlinear system can be linearized utilizing the Taylor series expansion for variance propagation, while the prediction of the state vector and measurement vector are conducted using the nonlinear system. Although EKF has been used in many MMR cooperative localization systems for its simplicity, the precision is limited in the systems with strong nonlinearity and the fussy Jacobian matrix should be calculated, which will inevitably increase the computational load. With the Unscented Transformation (UT), the UKF method can approximate the mean and the variance of the Gaussian state distribution using the nonlinear function, avoiding the local linearization and calculation of the Jacobian matrix effectively. However, the covariance matrix sometimes is easily non-positive in high-dimensional systems, which will lead to filtering divergence.

In addition, the above nonlinear filters are optimal only when the mathematical model is exactly known and system noises are of white Gaussian type. However, in actual MMR systems, the measurement noise is not white Gaussian noise. For example, the noise is quantitative in a vision-based MMR system, which is caused by the limited resolution and is relative to distance between the depth from the viewpoint. In [19], the authors separated the measurement depths into different segments according to the quantized measurement error of stereo vision camera firstly. For each segment, the measurement error is modeled as a combination of a fixed constant error and white Gaussian noise. Finally, an adaptive EKF is established, taking the measurement error segment into consideration. Moreover, the experiment result illustrated the effectiveness of the proposed algorithm. However, the key problem of this method is that the quantized measurement error model of the camera should be known as priori knowledge and the quantized error needs accurate calibration before operation. For actual MMR systems, this is difficult to satisfy because of the vast workload. It is well known that the adaptive filter can be utilized to estimate and correct the model parameters and the noise characteristics at the same time [20]. As a result, the adaptive filter has been considered to enhance the estimation accuracy in robotics [21,22]. A Sage–Husa adaptive filter is an outstanding adaptive filter method that is widely used to estimate the variance matrices of the process and measurement noises [23]. Nevertheless, it cannot estimate these two variance matrices simultaneously, which means that one of them should be known as prior knowledge. By adjusting or restraining the impacts of the current observations on the parameter estimation using the fading factor, an adaptive filter makes full use of the current observations to improve the filtering accuracy [24]. However, it is difficult to obtain the optimal fading factor, and the computational complexity will accordingly be increased as well. Furthermore, the weights of the a priori reliable information would be decreased to a certain extent if the current observation is abnormal, and then the filtering accuracy would also be degraded.

1.2. Contributions

By taking the high dimensionality, nonlinearity and the colored measurement noise into account, a novel cooperative localization algorithm is proposed in this paper based on an adaptive CKF method. In order to overcome the influence on cooperative localization accuracy caused by the nonlinearity and high-dimension, the Cubature Kalman filter (CKF) [25,26] is used in the novel algorithm. CKF is based on the Cubature Transform to conduct the prediction using the Cubature points that have the same weight. It has better performance compared with EKF and UKF, especially for the high-dimensional and strong nonlinear system [25,26]. Moreover, in order to overcome the influence on cooperative localization accuracy caused by the uncertainty of measurement noise, the Variance-Covariance Components Estimation (VCE) is used to estimate the noise adaptively [27,28]. At present, various adaptive and robust estimations have been widely used in statistics and geodesy. In addition, one of the famous methods is an a posteriori VCE method on the basis of Helmert, in which the weights of different observations can be calculated by the adaptive iteration. Since the matrix will be negative sometimes during the calculation, some improved methods were proposed. Wang et al. proposed an adaptive Kalman filter (AKF) based on the VCE method, and verified its effectiveness using the actual experiments [27,29]. The state estimation algorithm based on VCE has many advantages and has been widely used in linear systems, but few researchers have applied VCE to nonlinear systems. Since the novel algorithm cannot only estimate the statistical property of the system’s noise, but also be applied in nonlinear MMR systems, the cooperative localization accuracy could be further improved by utilizing the novel algorithm.

2. Basic Knowledge

2.1. Nonlinear Model of MMR Cooperative Localization System

2.1.1. State Model

The state model equation of n mobile robots system can be expressed as

where represents the pose of the i-th mobile robot in the MMR group, and it can be expressed as

2.1.2. Observation Model

In an MMR system, the bearing and range measurement information is used as the observation and the observation equations are represented as

if mobile robot i measures the range and bearing relative to mobile robot j or

if mobile robot i measures the range and bearing relative to landmark k, where

where Equation (5) represents the observation equation if the mobile robot measures the relative range and bearing relative to another mobile robot, while Equation (6) represents the observation equation if the mobile robot measures the relative range and bearing relative to landmarks; and represent the poses of the i-th mobile robot and the j-th mobile robot, respectively; represents the position of k-th landmark.

2.2. MMR Cooperative Localization Algorithm Based on CKF

2.2.1. Structure of MMR Cooperative Localization

Without a loss of generality, it is usually assumed that state transitions and observations occur in alternating order, i.e., after each state transition from to an observation is recorded. However, the observation information cannot be obtained at each time index for mobile robot systems because of the occlusion. As a result, the cooperative localization algorithm under the framework of an extended Kalman filter is re-established as Algorithm 1. In Algorithm 1, the measurement update is carried out only when measurement information exists. Otherwise, the predicted state estimate and covariance estimate act as the updated state estimate and covariance estimate, respectively.

| Algorithm 1 Cooperative localization of MMR |

|

In Algorithm 1, , and denote the previous covariance estimate, the predicted covariance estimate and the updated covariance estimate, respectively; , and indicate the previous state estimate, the predicted state estimate, the updated state estimate; and is the state transition model and its Jacobian matrix, respectively; and mean the observation model and its Jacobian matrix, respectively; and denote the covariance of process noise observation noise, respectively; and signify the measurement residual and its covariance, respectively; denotes the near-optimal Kalman gain.

2.2.2. Cubature Kalman Filter Algorithm

Since the CKF is not only simple and easy to be implemented but also highly precise and converges well, it is widely used in nonlinear estimations, especially for the MMR system.

Let us consider the MMR system model:

where and , as introduced in Section 2.1 well, are the state vector and the measurement vector, respectively; and are the nonlinear state and measurement vector functions; and represent the process noise and the measurement noise vectors, respectively; denotes the noise driven matrix.

CKF is proposed to solve the nonlinear filtering problem on the basis of the spherical radial cubature criterion [25,26]. CKF approximates the mean and variance of probability distribution through a set of (where m is the dimension of the nonlinear system) Cubature points with the same weight, propagates the above cubature points through the nonlinear functions, and then calculates the mean and variance of the current approximate Gaussian distribution by the propagated cubature points. A set of Cubature points are given by , where is the i-th cubature point and is the corresponding weight:

wherein m is the dimension of the nonlinear system, , and n is the number of mobile robots in the whole system.

Without a loss of generality, it is assumed that state transitions and observations occur in alternating order, i.e, after each state transition from to , an observation is recorded. When the observation matrix is full rank, which means that all of the mobile robots could observe all of the other mobile robots and landmarks, the estimation process is as follows:

The time update:

The measurement update:

In addition, the gain matrix is:

The estimation of the state vector can be obtained:

where is the system innovation vector of the nonlinear system.

The variance matrix of the estimated state vector is:

CKF uses the cubature rule and cubature point sets to compute the mean and variance of probability distribution without any linearization of a nonlinear model. Thus, the modeling can reach the third order or higher. Furthermore, this filtering solution does not demand Jacobians and Hessians so that the computational complexity will be alleviated to a certain extent. Therefore, compaered with EKF and UKF, CKF is widely used in attitude estimation and navigation due to its high accuracy and low calculation load.

3. Cooperative Localization Algorithm Based on VCKF

3.1. Adaptive Filter Based on the VCE Method

The adaptive filter based on the VCE method can estimate the variance components of the process noise and the measurement noise vectors in real time using the residual vectors to decompose the system innovation vector. On the basis of the estimated variance components, the weighting matrices of the process noise and the measurement noise vectors can be adjusted and then their effects on the state vector can be adjusted accordingly. The principle of the adaptive filter based on the VCE will be introduced as follows.

Considering the standard linear system model, the state and measurement equations are:

where and are the state vector and the measurement vector, respectively; and denote the state-transition matrix and the coefficient matrix of the process noise vector, respectively; and are the process noise vector and the measurement noise vector, respectively. Furthermore, and are the zero mean Gaussian noises and expressed as follows:

where and are positive definite matrices.

Thus, the two-step update process of the original Kalman filter is as follows:

The time update:

The measurement update:

where and denote the gain matrix and the system innovation vector, respectively:

The estimated state vector is optimal only if and are exactly known. However, it is difficult to know them from the prior knowledge in practice. Thus, it would be great if they could be estimated by utilizing the adaptive filter in real time.

According to the sources, the random information in a system can normally be divided into three independent parts: the process noise vector , the measurement noise vector and the predicted states noise vector [29]. They are defined as follows respectively:

Considering their residual equations, the system in Equation (23) can be rewritten as:

with their measurement variance matrices as follows:

Thus, we can estimate the covariance matrices of the system as long as we calculate the covariance matrices of the residual vectors. According to the frame of the Kalman filter, the estimations of the residual vectors can be calculated by Equation (33):

In addition, the corresponding variance matrices are then represented as:

Therefore, the innovation vector is projected into three residual vectors associated with the three groups of the measurements. Hence, we can estimate the variance factors.

Assume that all components in and are uncorrelated, so both and become diagonal. In this case, the redundancy index of each measurement noise factor is given by [29]

Similarly, the redundancy index of each process noise factor is equal to

Furthermore, individual group redundancy contributions, equal to the totals of each group redundant index, can be expressed as follows:

On the basis of the Herlmet VCE method, the individual group variance factors of unit weight are estimated by the residual vector, and the corresponding redundant index is as follows:

Thus, at time k, the individual variance factors of can be calculated by:

In addition, the covariance matrix of the measurement noise is as follows:

3.2. An MMR Cooperative Localization Algorithm Based on VCKF

To solve the nonlinear problem and improve the stochastic model simultaneously in MMR system cooperative localization, a novel improved adaptive nonlinear state estimation algorithm by considering both CKF and the VCE adaptive method, called VCKF, is proposed here.

Considering the nonlinear system described in Equation (23), three pseudo measurement groups are defined as follows:

The residual equation of the nonlinear system is represented as

According to the steps of the CKF, Equation (34) can be rewritten as:

The corresponding variance matrices are:

The individual group redundant indices are equal to:

Here, the covariance factors and the variance matrices for the nonlinear system can be calculated by Equations (44)–(47).

After combining the VCKF algorithm and MMR cooperative localization framework shown as Algorithm 1 together, a novel MMR cooperative localization algorithm is proposed in this manuscript, solving the nonlinearity and the uncertainty problems at the same time. Its performance is analyzed in the following section.

4. Experiment and Analysis

Real data is used to examine the effectiveness and superiority of the proposed cooperative localization algorithm based on VCKF. In this section, the experiment environment is introduced first. In addition, then taking CKF as a reference, the experiment data is processed by utilizing CKF and VCKF, respectively. The experiment results has also been analyzed in this section.

4.1. Experiment Setup

The prerecorded dataset, captured by the University of Toronto, the Institute of Aerospace Studies (UTIAS) [30], used a fleet of five identically iRobot Creates. Shown in Figure 1, each mobile robot was equipped with a monocular camera and an encoder. The monocular camera is used to capture its range-bearing relative to other mobile robots and landmarks, which is shown in Figure 2, while the encoder is used to measure its own motion information. A Vicon system with m accuracy is used as ground truth, whose sampling frequency is 100 Hz.

Figure 1.

Experiment environment and the mobile robots fleet of the University of Toronto, the Institute of Aerospace Studies.

Figure 2.

Top-view of mobile robots fleet to illustrate the relative measurements between different mobile robots and landmarks.

4.2. Cooperative Localization Accuracy Analysis

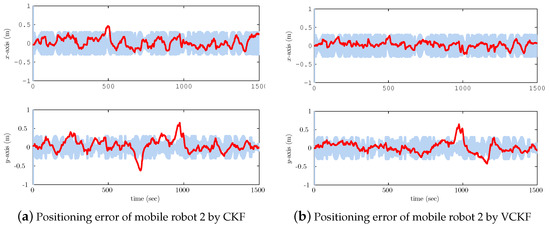

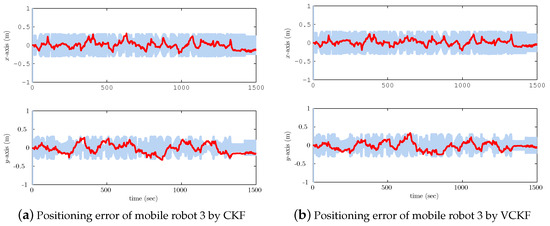

The normal CKF and the proposed VCKF methods are used to estimate the system state here. State estimation error and associated 3- error boundaries of each mobile robot are shown in Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7. It can be seen obviously that estimation errors of x- and y-directions with the proposed VCKF algorithm are always sandwiched within associated 3- error boundaries, while the errors with CKF are out of the associated 3- error boundaries at some epochs. This implies that, although both the localization scheme based on CKF and the localization scheme based on VCKF are capable of generating a state estimation for each mobile robots, the VCKF algorithm has a better performance.

Figure 3.

(a) Positioning estimation error curves of mobile robot 1 by utilizing Cubature Kalman Filter (CKF); (b) Positioning estimation error curves of mobile robot 1 by utilizing improved algorithm based on CKF and VCE methods, denoted by VCKF. The red solid line indicates the estimation error while the shaded regions indicate double-sided 3- error boundaries.

Figure 4.

(a) Positioning estimation error curves of mobile robot 2 by utilizing CKF; (b) Positioning estimation error curves of mobile robot 2 by utilizing VCKF. The red solid line indicates the estimation error while the shaded regions indicate double-sided 3- error boundaries.

Figure 5.

(a) Positioning estimation error curves of mobile robot 3 by utilizing CKF; (b) Positioning estimation error curves of mobile robot 3 by utilizing VCKF. The red solid line indicates the estimation error while the shaded regions indicate double-sided 3- error boundaries.

Figure 6.

(a) Positioning estimation error curves of mobile robot 4 by utilizing CKF; (b) Positioning estimation error curves of mobile robot 4 by utilizing and VCKF. The red solid line indicates the estimation error while the shaded regions indicate double-sided 3- error boundaries.

Figure 7.

(a) Positioning estimation error curves of mobile robot 5 by utilizing CKF; (b) Positioning estimation error curves of mobile robot 5 by utilizing and VCKF. The red solid line indicates the estimation error while the shaded regions indicate double-sided 3- error boundaries.

In order to evaluate the superiority of the proposed cooperative localization algorithm based on VCKF quantitatively, the root mean square (RMS) of each robot’s estimation error has been calculated and summarized in Table 1. From Table 1, it can be seen that the Root Mean Square (RMS) of x- and y-directions by utilizing the cooperative localization algorithm based on VCKF are smaller than the ones by using algorithms based on CKF. Therefore, this offers further proof that the proposed localization algorithm based on VCKF has better performance in localization accuracy than the algorithm based on normal CKF methods.

Table 1.

Comparison of the estimation error RMS by CKF and VCKF methods.

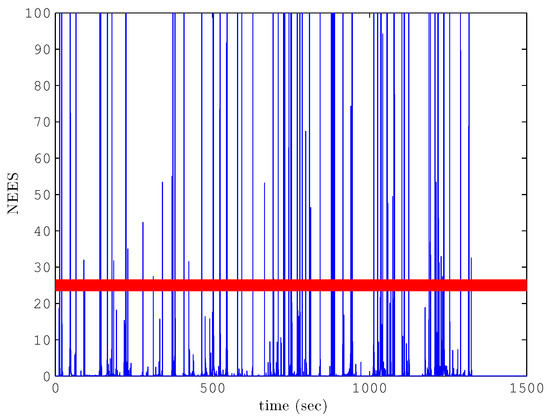

4.3. Algorithm Consistency Analysis

To examine the consistency of the proposed cooperative localization algorithm, Normalized Estimation Error Squared (NEES) is calculated by utilizing

where is the computed NEES; denotes the pose estimation error at time step k and represents the estimated error covariance matrix. For a single run, the estimation is consistent if the computed NEES can make the following inequality hold:

where represents the chi-square distribution with Degree of Freedom (DOF) and is the significance level [31]. Therefore, the upper-bound of the acceptance region for the 15-DOF stochastic process is given by and is equal to 24.95.

Figure 8 and Figure 9 give the situations in which the NEES values fall outside of the acceptance region of the cooperative localization algorithm based on CKF and proposed VCKF methods, respectively. In addition, the percentage of the NEES values falling outside the acceptance region based on the CKF method is , while the one based on VCKF method is . Thus, it can be seen that the percentages are both less than , which is acceptable. Furthermore, we also can see that the cooperative localization algorithm based on VCKF has better performance in consistency than the algorithm based on CKF.

Figure 8.

The Normalized Estimation Error Squared (NEES) of the cooperative localization algorithm based on VCKF. The red straight line indicates and the blue solid line indicates ; 3.78 percent of are above the red straight line.

Figure 9.

NEES of the cooperative localization algorithm based on CKF. The red straight line indicates and the blue solid line indicates ; 8.24 percent of are above the red straight line.

5. Conclusions

In order to overcome the influence on cooperative localization accuracy caused by the uncertainty of measurement noise and the nonlinearity for the MMR system at the same time, the adaptive VCE method was introduced to cubature Kalman filter, proposing a novel cooperative localization algorithm based on the adaptive VCKF method in this manuscript. By using the adaptive VCE method, the statistical property of the system’s noise can be estimated well, and this proposed algorithm can also be applied in nonlinear MMR systems owing to the nonlinear CKF method. Therefore, this proposed algorithm can not only avoid the influence on state estimation caused by the uncertainty of measurement noise availably, but also improve the cooperative localization accuracy for MMR systems significantly. Real experiment data was also used to verify the effectiveness and superiority of the novel cooperative localization algorithm. Furthermore, the experiment results showed that the cooperative localization algorithm based on VCKF has better performance than the one based on CKF both in accuracy and in consistency.

Acknowledgments

The authors would like to thank the open source data set from University of Toronto, Institute of Aerospace Studies (UTIAS). This work is also supported by the National Natural Science Foundation of China (Nos. 51509049 and 51679047) and the Postdoctoral Foundation of Heilongjiang Province (No.LBH-Z16044).

Author Contributions

Ming Diao and Ya Zhang conceived and designed the experiments; Qian Sun performed the experiments; Ya Zhang analyzed the data; Qian Sun wrote the paper; and Yibing Li revised the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sharma, R.; Quebe, S.; Beard, R.W.; Taylor, C.N. Bearing-only Cooperative Localization. J. Intell. Robot. Syst. 2013, 72, 429–440. [Google Scholar] [CrossRef]

- Wanasinghe, T.R.; Mann, G.K.I.; Gosine, R.G. Distributed Leader-Assistive Localization Method for a Heterogeneous Multirobotic System. IEEE Trans. Autom. Sci. Eng. 2015, 12, 795–809. [Google Scholar] [CrossRef]

- Wanasinghe, T.R.; Mann, G.K.I.; Gosine, R.G. Decentralized Cooperative Localization Approach for Autonomous Multirobot Systems. J. Robot. 2016, 2016, 2560573. [Google Scholar] [CrossRef]

- Leung, K.Y.K.; Barfoot, T.D.; Liu, H.H.T. Decentralized Localization of Sparsely-Communicating Robot Networks: A Centralized-Equivalent Approach. Robot. IEEE Trans. 2010, 26, 62–77. [Google Scholar] [CrossRef]

- Fox, D.; Burgard, W.; Kruppa, H.; Thrun, S. A Probabilistic Approach to Collaborative Multi-Robot Localization. Auton. Robot. 2000, 8, 325–344. [Google Scholar] [CrossRef]

- Leonard, J.J.; Teller, S. Robust Range-Only Beacon Localization. Ocean. Eng. IEEE J. 2006, 31, 949–958. [Google Scholar]

- Wanasinghe, T.R.; Mann, G.K.I.; Gosine, R.G. Relative Localization Approach for Combined Aerial and Ground Robotic System. J. Intell. Robot. Syst. 2015, 77, 113–133. [Google Scholar] [CrossRef]

- Michael, N.; Shen, S.; Mohta, K.; Kumar, V.; Nagatani, K.; Okada, Y.; Kiribayashi, S.; Otake, K.; Yoshida, K.; Ohno, K. Collaborative Mapping of an Earthquake Damaged Building via Ground and Aerial Robots. J. Field Robot. 2014, 29, 832–841. [Google Scholar] [CrossRef]

- Roumeliotis, S.I.; Bekey, G.A. Distributed Multirobot Localization. IEEE Trans. Robot. Autom. 2002, 18, 781–795. [Google Scholar] [CrossRef]

- Howard, A.; Parker, L.E.; Sukhatme, G.S. Experiments with a Large Heterogeneous Mobile Robot Team: Exploration, Mapping, Deployment and Detection. Int. J. Robot. Res. 2006, 25, 431–447. [Google Scholar] [CrossRef]

- Li, H.; Nashashibi, F.; Yang, M. Split Covariance Intersection Filter: Theory and Its Application to Vehicle Localization. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1860–1871. [Google Scholar] [CrossRef]

- Wang, Y.; Duan, X.; Tian, D.; Chen, M.; Zhang, X. A DSRC-based Vehicular Positioning Enhancement Using a Distributed Multiple-Model Kalman Filter. IEEE Access 2016, 4, 8338–8350. [Google Scholar] [CrossRef]

- Müller, F.D.P. Survey on Ranging Sensors and Cooperative Techniques for Relative Positioning of Vehicles. Sensors 2017, 17, 271. [Google Scholar] [CrossRef] [PubMed]

- Masehian, E.; Jannati, M.; Hekmatfar, T. Cooperative mapping of unknown environments by multiple heterogeneous mobile robots with limited sensing. Robot. Auton. Syst. 2016, 87, 188–218. [Google Scholar] [CrossRef]

- Howard, A.; Matark, M.J.; Sukhatme, G.S. Localization for Mobile Robot Teams using Maximum Likelihood Estimation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; Volume 1, pp. 434–439. [Google Scholar]

- Wu, N.; Li, B.; Wang, H.; Xing, C.; Kuang, J. Distributed Cooperative Localization based on Gaussian Message Passing on Factor Graph in Wireless Networks. Sci. China Inf. Sci. 2015, 58, 1–15. [Google Scholar] [CrossRef]

- Chiang, S.Y.; Wei, C.A.; Chen, C.Y. Real-Time Self-Localization of a Mobile Robot by Vision and Motion System. Int. J. Fuzzy Syst. 2016, 18, 1–9. [Google Scholar] [CrossRef]

- Zhao, S.; Huang, B.; Liu, F. Localization of Indoor Mobile Robot Using Minimum Variance Unbiased FIR Filter. IEEE Trans. Autom. Sci. Eng. 2016, 99, 1–10. [Google Scholar] [CrossRef]

- Shan, J.; Wang, X. Experimental Study on Mobile Robot Navigation using Stereo Vision. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO), Shenzhen, China, 12–14 December 2013; pp. 1958–1965. [Google Scholar]

- Hu, C.; Chen, W.; Chen, Y.; Liu, D. Adaptive Kalman Filtering for Vehicle Navigation. J. Glob. Position. Syst. 2003, 2, 42–47. [Google Scholar] [CrossRef]

- Aghili, F.; Salerno, A. Driftless 3-D Attitude Determination and Positioning of Mobile Robots By Integration of IMU With Two RTK GPSs. IEEE/ASME Trans. Mechatron. 2013, 18, 21–31. [Google Scholar] [CrossRef]

- Yu, F.; Sun, Q.; Lv, C.; Ben, Y.; Fu, Y. A SLAM Algorithm Based on Adaptive Cubature Kalman Filter. Math. Probl. Eng. 2014, 2014, 1–11. [Google Scholar] [CrossRef]

- Sun, J.; Xu, X.; Liu, Y.; Zhang, T.; Li, Y. FOG Random Drift Signal Denoising Based on the Improved AR Model and Modified Sage-Husa Adaptive Kalman Filter. Sensors 2016, 16, 1073. [Google Scholar] [CrossRef] [PubMed]

- Chang, G.; Liu, M. An Adaptive Fading Kalman Filter based on Mahalanobis Distance. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2015, 229, 1114–1123. [Google Scholar] [CrossRef]

- Arasaratnam, I.; Haykin, S. Cubature Kalman Filters. IEEE Trans. Autom. Control 2009, 54, 1254–1269. [Google Scholar] [CrossRef]

- Arasaratnam, I.; Haykin, S.; Hurd, T.R. Cubature Kalman Filtering for Continuous-Discrete Systems: Theory and Simulations. IEEE Trans. Signal Process. 2010, 58, 4977–4993. [Google Scholar] [CrossRef]

- Wang, J.G. Reliability Analysis in Kalman Filtering. J. Glob. Position. Syst. 2009, 8, 101–111. [Google Scholar] [CrossRef]

- Moghtased-Azar, K.; Tehranchi, R.; Amiri-Simkooei, A.R. An Alternative Method for Non-Negative Estimation of Variance Components. J. Geod. 2014, 88, 427–439. [Google Scholar] [CrossRef]

- Wang, J.G.; Gopaul, N.; Scherzinger, B. Simplified Algorithms of Variance Component Estimation for Static and Kinematic GPS Single Point Positioning. J. Glob. Position. Syst. 2009, 8, 43–52. [Google Scholar] [CrossRef]

- Leung, K.Y.; Halpern, Y.; Barfoot, T.D.; Liu, H.H. The UTIAS Multi-Robot Cooperative Localization and Mapping Dataset. Int. J. Robot. Res. 2011, 30, 969–974. [Google Scholar] [CrossRef]

- Bahr, A.; Walter, M.R.; Leonard, J.J. Consistent Cooperative Localization. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3415–3422. [Google Scholar]

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).