Urban Traffic Congestion Evaluation Based on Kernel the Semi-Supervised Extreme Learning Machine

Abstract

1. Introduction

- Reliable labeled data are difficult to obtain. The data come from more than 23,000 GPS-equipped taxicabs in the city. The floating cars produce more than 15,000,000 samples each week, and there are around 700,000 road traffic samples. Reliable congestion labels are derived from real-time observations by Transportation Department staff, which costs considerable manpower and working time. The city's traffic network is also complex, with many kinds of bridges, tunnels, main roads, sub roads and intersections. Evaluation models based on supervised learning are therefore ineffective, because labeled samples are sparse.

- For many semi-supervised learning algorithms, large-scale data result in a huge computation cost. Because traffic conditions change continuously, the evaluation model needs to be retrained frequently, so this application demands a machine learning framework that trains more stably and efficiently.

- Although the congestion value of unlabeled data is unknown, these data cover different traffic conditions and therefore reflect the distribution of the traffic data. Kernel-SSELM improves the recognition accuracy of the evaluation model by involving unlabeled data in training.

- The extreme learning machine trains efficiently and is easy to implement. With large data volumes, fast training ensures that the congestion evaluation model can be updated promptly as the data change.

- Using a kernel function removes the need to set the number of hidden-layer nodes, which improves the stability of SSELM.
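
The idea in the list above (training on labeled and unlabeled samples together, with a kernel in place of an explicit hidden layer) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes the usual manifold-regularised SSELM objective with the hidden-layer product replaced by an RBF kernel matrix K, which leads to the linear system (I + CK + λLK)α = CỸ. The function names, the k-nearest-neighbour graph Laplacian, and the parameters `C`, `lam`, `gamma` and `k` are all assumptions chosen for illustration.

```python
import numpy as np


def rbf_kernel(A, B, gamma=1.0):
    """Gaussian kernel matrix between the rows of A and the rows of B."""
    sq_dist = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))


def knn_laplacian(X, k=5, gamma=1.0):
    """Unnormalised Laplacian of a symmetrised k-nearest-neighbour graph."""
    W = rbf_kernel(X, X, gamma)
    np.fill_diagonal(W, 0.0)
    nearest = np.argsort(-W, axis=1)[:, :k]       # k largest weights per row
    keep = np.zeros(W.shape, dtype=bool)
    keep[np.arange(len(X))[:, None], nearest] = True
    W = np.where(keep | keep.T, W, 0.0)           # keep an edge if either end picks it
    return np.diag(W.sum(axis=1)) - W


def fit_kernel_sselm(X_lab, y_lab, X_unl, n_classes,
                     C=10.0, lam=0.1, gamma=1.0, k=5):
    """Solve (I + C K + lam L K) alpha = C Y over labeled + unlabeled samples."""
    X = np.vstack([X_lab, X_unl])
    n, n_lab = len(X), len(X_lab)
    K = rbf_kernel(X, X, gamma)
    L = knn_laplacian(X, k, gamma)
    Y = np.zeros((n, n_classes))
    Y[np.arange(n_lab), y_lab] = 1.0              # one-hot targets; unlabeled rows stay zero
    c = np.zeros(n)
    c[:n_lab] = C                                 # penalty applies to labeled rows only
    A = np.eye(n) + c[:, None] * K + lam * (L @ K)
    alpha = np.linalg.solve(A, c[:, None] * Y)
    return {"X": X, "alpha": alpha, "gamma": gamma}


def predict(model, X_new):
    """Class index with the largest kernel-expansion score for each new sample."""
    scores = rbf_kernel(X_new, model["X"], model["gamma"]) @ model["alpha"]
    return scores.argmax(axis=1)
```

Training reduces to one dense linear solve whose size is the total number of samples, so no hidden-layer width has to be tuned. For data volumes like those quoted above, a subsample or a low-rank kernel approximation would be needed in practice.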

2. Traffic Congestion Eigenvalue

2.1. Road Section Information

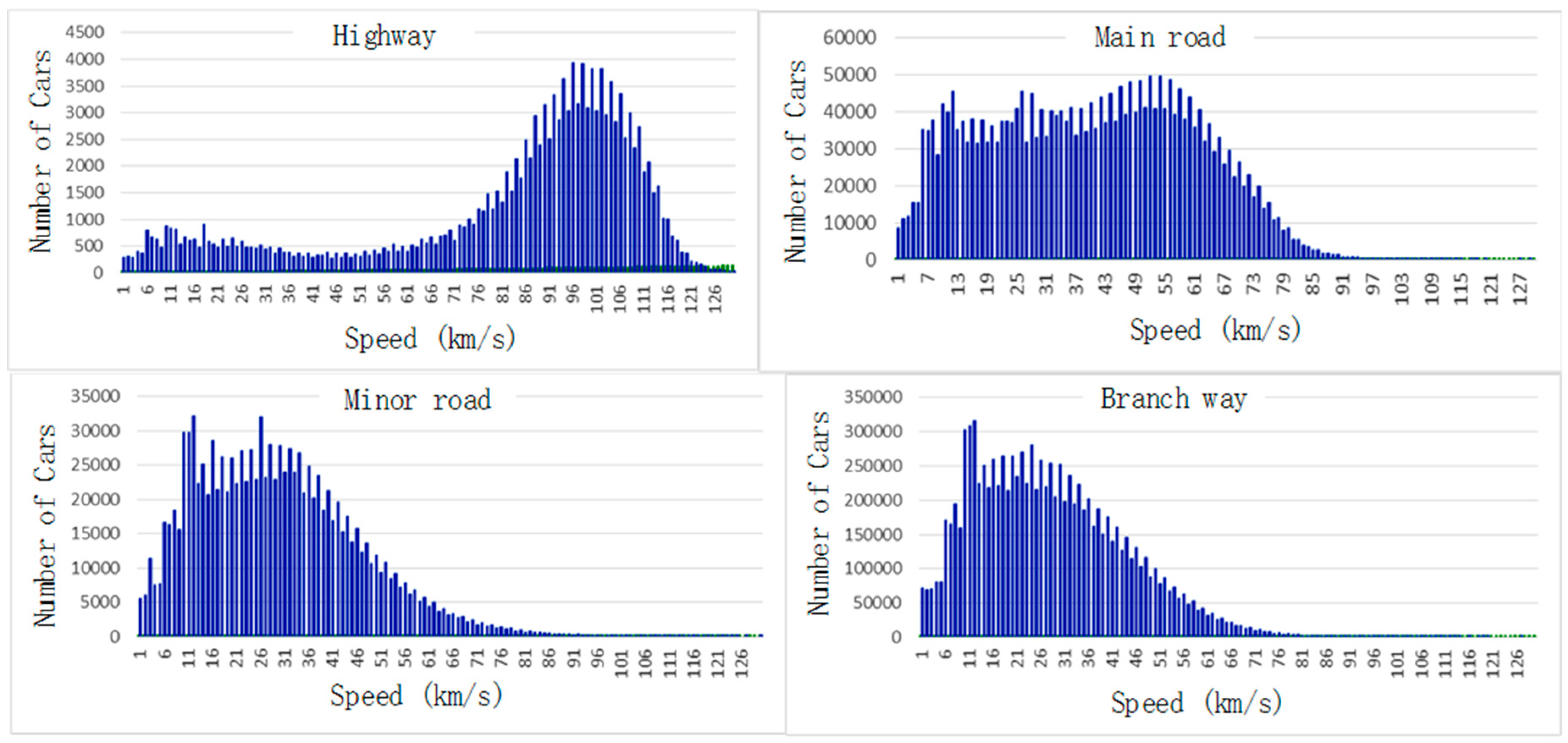

2.2. Speed Information Based on Floating Car Data

2.3. Congestion Value

3. Kernel-Based SSELM

4. Evaluation Performance Results

4.1. Experimental Setup

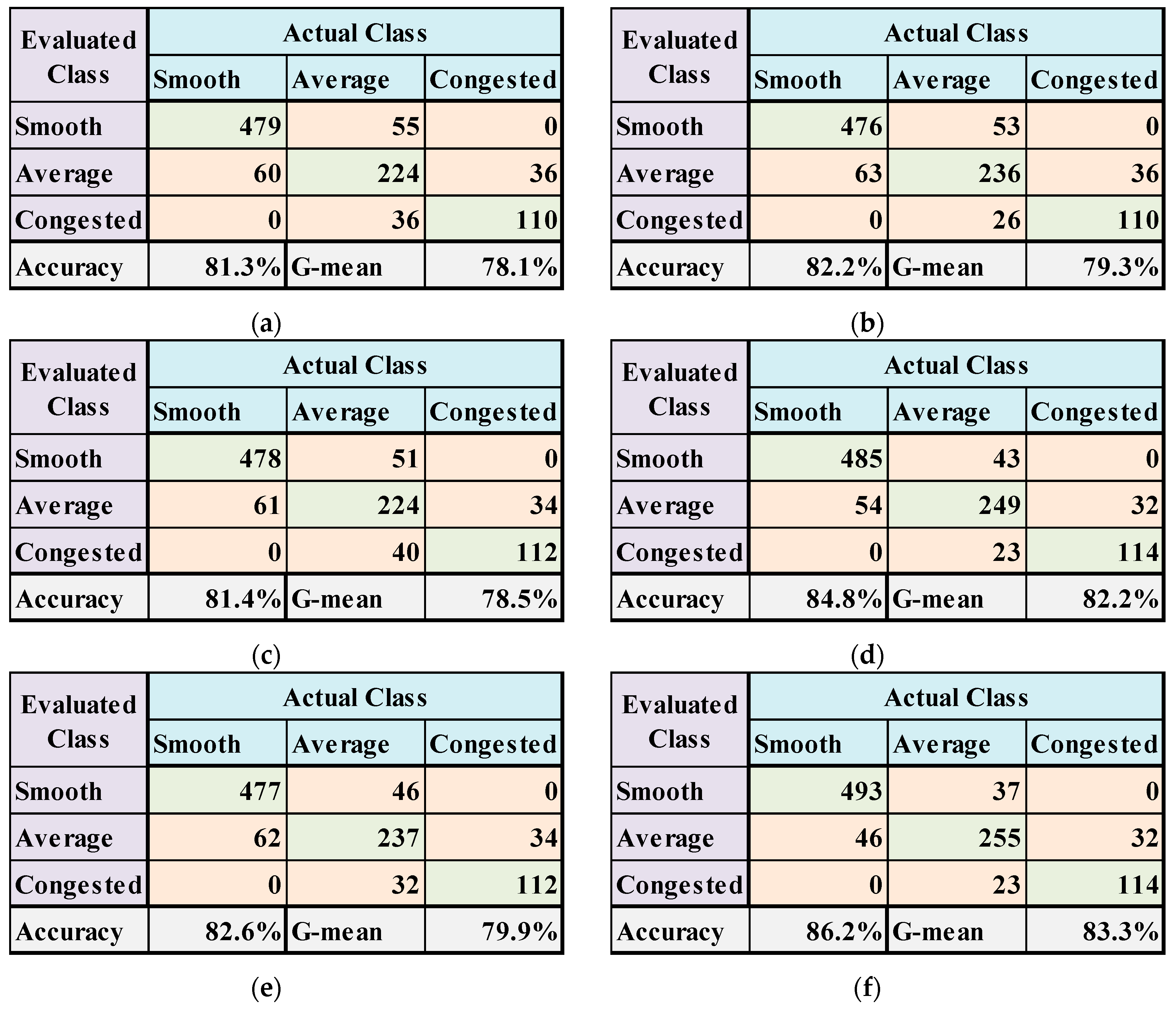

4.2. Comparisons with Related Algorithms

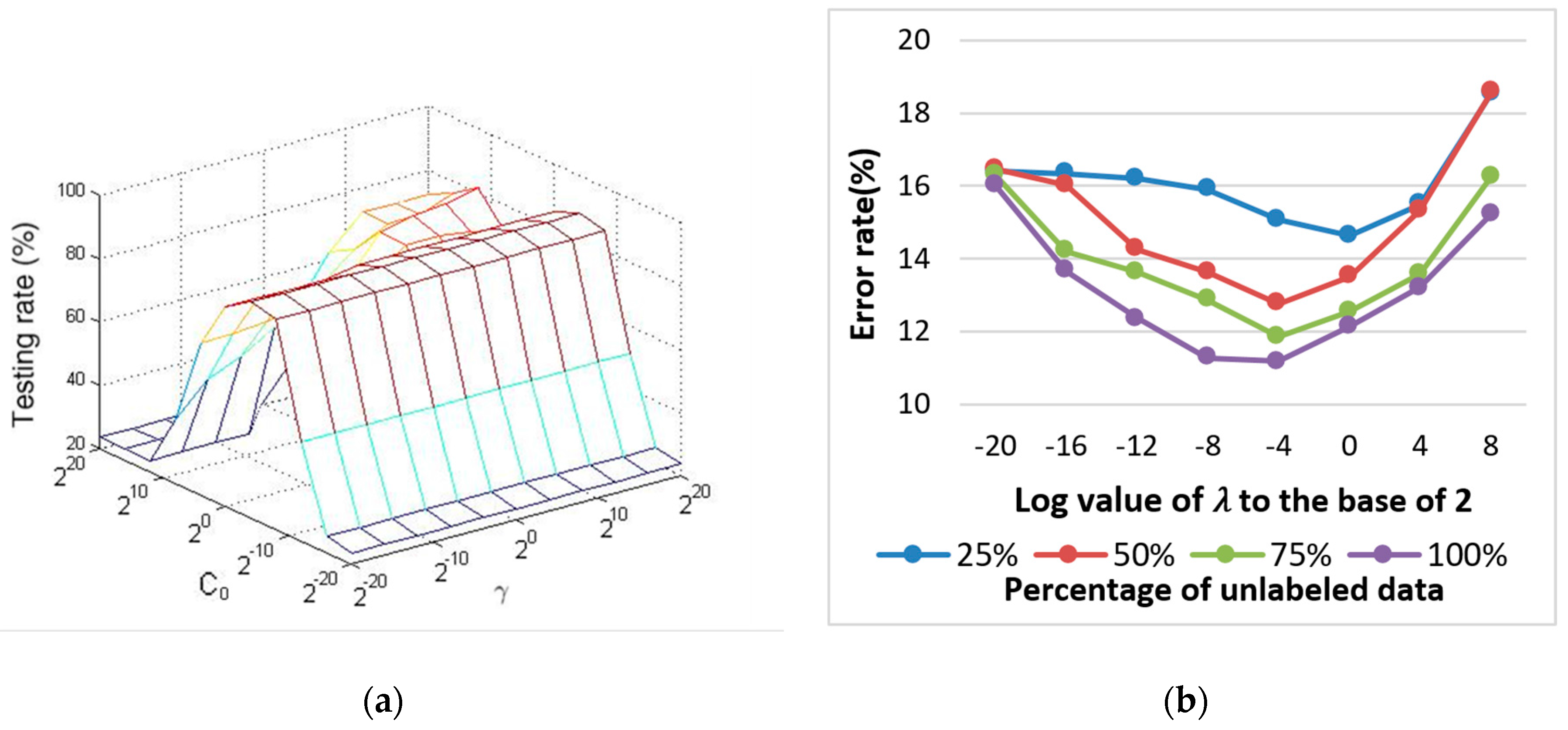

4.3. Performance Sensitivity on Parameters

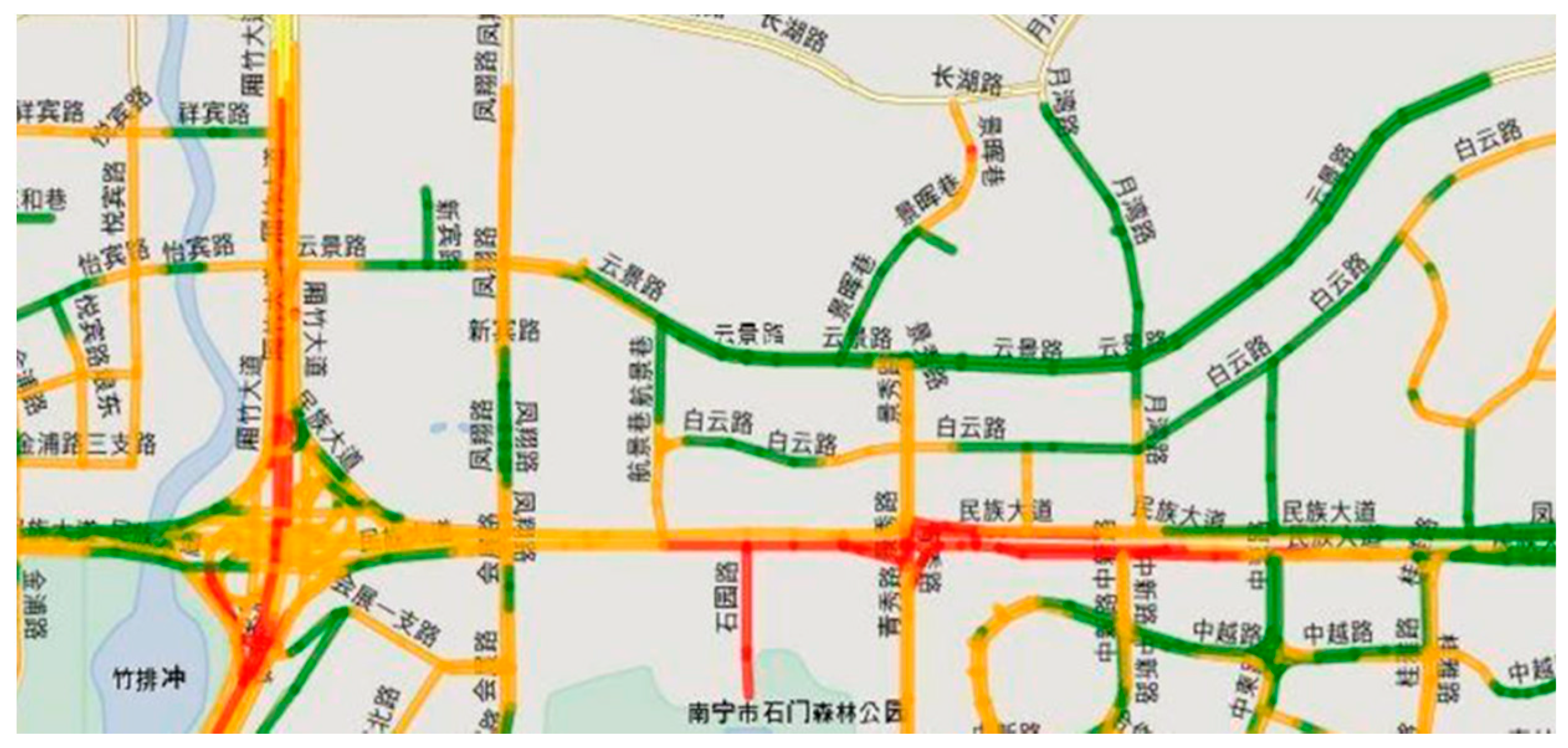

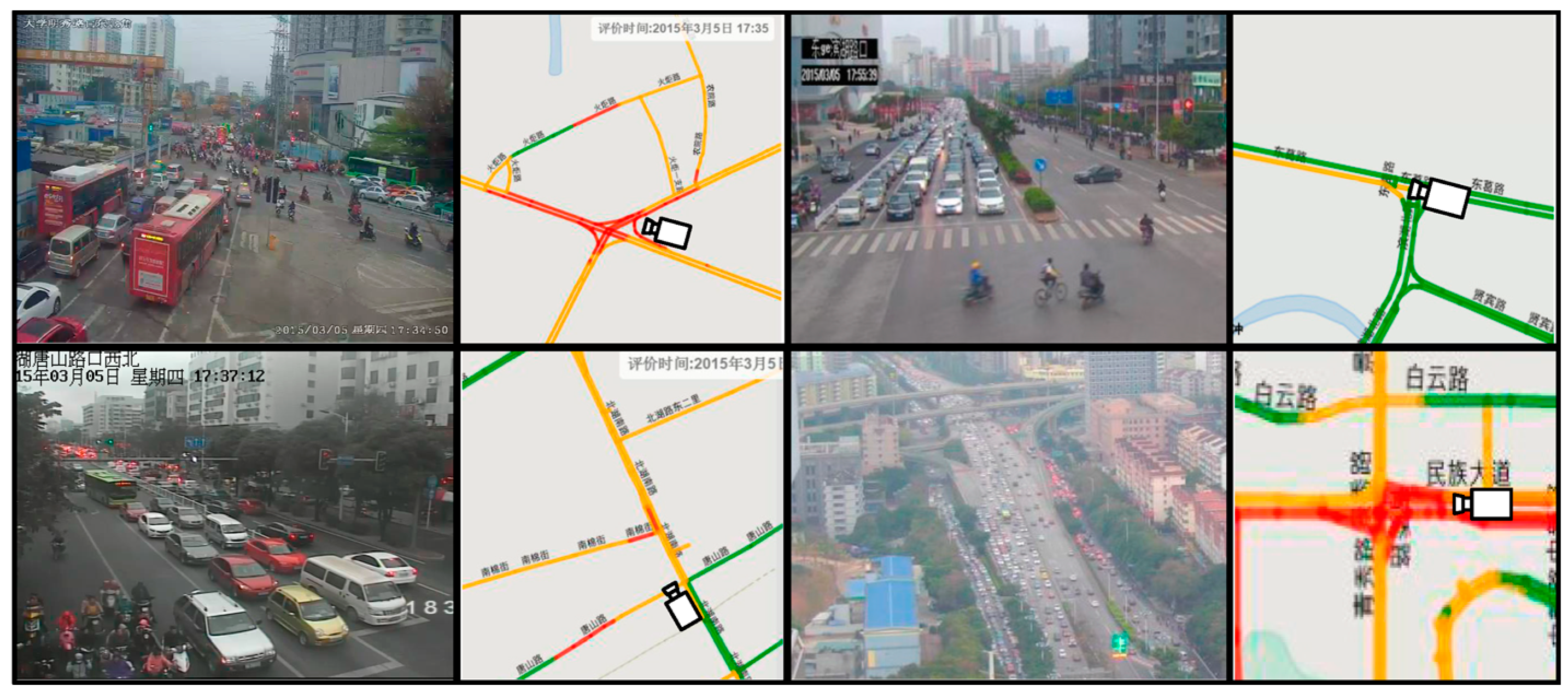

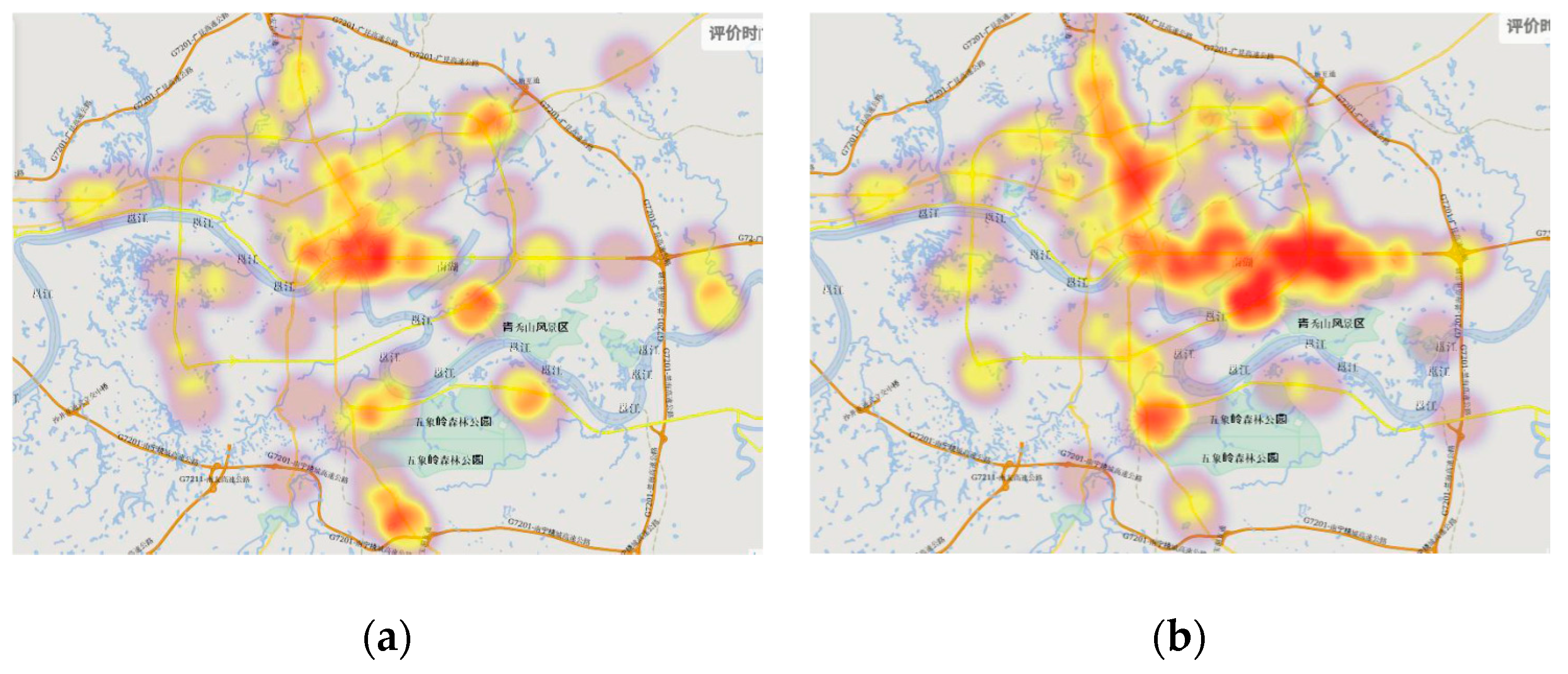

5. Evaluation on the Realistic Traffic Data

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Road type | Smooth | Average | Congested |
|---|---|---|---|
| Highway | >65 | 35~65 | <35 |
| Main road | >40 | 30~40 | <20 |
| Minor road and Branch road | >35 | 25~35 | <10 |
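
The thresholds in the table above can be applied as a simple lookup. The sketch below is illustrative only: it assumes the values are speeds in km/h (the table does not state a unit), assigns boundary values to the "average" class, and returns `None` for speeds that fall in the gaps the table leaves open (for example 20 to 30 on a main road). The names `THRESHOLDS` and `congestion_level` are hypothetical.

```python
from typing import Optional

# Per road type: (smooth if above, average if at least, congested if below).
# Values copied from the table; the km/h unit is an assumption.
THRESHOLDS = {
    "highway": (65, 35, 35),
    "main road": (40, 30, 20),
    "minor road": (35, 25, 10),
}


def congestion_level(road_type: str, speed: float) -> Optional[str]:
    """Return 'smooth', 'average' or 'congested', or None if the table has no entry."""
    smooth_above, average_from, congested_below = THRESHOLDS[road_type]
    if speed > smooth_above:
        return "smooth"
    if speed >= average_from:
        return "average"
    if speed < congested_below:
        return "congested"
    return None  # speed lies in a range the table does not cover
```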

| Speed Grades | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Range (km/h) | <15 | 15~35 | 35~55 | 55~75 | >75 |
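
The speed grades above amount to a binning of the floating-car speed. A minimal sketch follows. The function name `speed_grade` is hypothetical, and since the table does not say which grade the shared boundaries 35 and 55 belong to, assigning them to the higher grade is an assumption.

```python
def speed_grade(speed_kmh: float) -> int:
    """Map a speed in km/h to a grade from 1 (slowest) to 5 (fastest)."""
    if speed_kmh < 15:
        return 1
    if speed_kmh < 35:
        return 2
    if speed_kmh < 55:    # 35 itself is placed in grade 3 (assumption)
        return 3
    if speed_kmh <= 75:   # 55 is placed in grade 4 (assumption); 75 is not ">75"
        return 4
    return 5
```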

| | Empirical Rule | TSVM | LDS | LapRLS | LapSVM | SSELM | Kernel-SSELM |
|---|---|---|---|---|---|---|---|
| Average Accuracy | 68.9% | 81.3% | 82.2% | 81.4% | 84.8% | 82.6% | 86.2% |
| Best Accuracy | 73.0% | 87.0% | 86.0% | 86.0% | 88.0% | 87.0% | 88.0% |
| Std. Dev. | 3.79% | 2.15% | 2.71% | 2.35% | 1.97% | 2.43% | 1.55% |
| Training Time (s) | - | 18,437 | 35,334 | 931 | 825 | 41.6 | 48.2 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shen, Q.; Ban, X.; Guo, C. Urban Traffic Congestion Evaluation Based on Kernel the Semi-Supervised Extreme Learning Machine. Symmetry 2017, 9, 70. https://doi.org/10.3390/sym9050070
