1. Introduction

As modern digital infrastructure grows increasingly complex and ubiquitous, cybersecurity has emerged as one of the most pressing challenges across both public and private domains. As networked systems become integral to critical infrastructure and real-time services, they face increasing risks from cyber threats such as malware, DDoS attacks, advanced persistent threats (APTs), and adversarial attacks [1, 2, 3]. Intrusion detection systems (IDSs) are essential for timely and adaptive monitoring, serving as a vital defense against these evolving threats.

Broadly, IDS methodologies can be categorized into four major paradigms: signature-based detection [4], anomaly-based detection [5], regex-based detection [6], and auto-encoder-based detection [7, 8, 9]. Signature-based systems rely on predefined rules or known attack signatures (such as byte patterns, protocol violations, or heuristically derived markers) to identify threats. These approaches are highly effective at detecting previously encountered attacks and are computationally efficient, due to their deterministic nature. However, their reliance on a static signature database renders them ineffective against unknown or polymorphic threats, and they require frequent updates to remain current with emerging attack techniques. Anomaly-based systems, on the other hand, aim to construct models of normal network behavior using statistical profiles, machine learning, or clustering techniques. Any significant deviation from the learned baseline is flagged as potentially malicious. While this paradigm enables the detection of novel and zero-day attacks, it introduces new challenges. These include high false positive rates, where benign deviations are mistaken for attacks, as well as sensitivity to environmental noise, concept drift, and diverse traffic patterns. Moreover, anomaly-based IDSs often require extensive training data and computational resources, limiting their practicality in low-resource settings such as edge environments. Regex-based detection approaches leverage regular expressions to define malicious traffic patterns with flexible matching rules, offering a lightweight solution but requiring careful rule engineering. Auto-encoder-based methods employ deep learning techniques to learn compressed representations of normal behavior, enabling the detection of deviations in a data-driven manner.

To balance these trade-offs, hybrid intrusion detection models [10, 11] have been proposed that combine the strengths of multiple paradigms. However, designing systems that are both accurate and efficient, especially in distributed or real-time environments, remains a non-trivial challenge. The growing complexity of modern networks and the evolving sophistication of adversaries further demand intrusion detection methods that are adaptable, lightweight, and capable of handling heterogeneous data sources.

With the proliferation of edge computing and the explosion of IoT devices, traditional IDS solutions face significant new challenges.

Figure 1 shows the architecture of our distributed framework. Edge computing shifts data processing from centralized cloud servers to the network edge, closer to where data is generated. This paradigm supports latency-sensitive applications and enhances privacy preservation, but also introduces unique constraints. Edge nodes such as smart sensors and mobile devices operate under strict resource limitations, and the edge network itself is highly dynamic and heterogeneous. Recent research has applied machine learning (ML) and deep learning (DL) [12, 13] to network intrusion detection, achieving impressive accuracy in traffic classification. However, most of these methods require centralized data collection, raising concerns about privacy, bandwidth, and scalability. In addition, such models may not generalize well to distributed, real-world edge environments. Federated learning (FL) [14] has emerged as a decentralized alternative, enabling collaborative model training across distributed clients without sharing raw data. While FL mitigates privacy risks and supports scalability, it faces practical challenges such as unstable convergence and communication overhead. Recent variants incorporating attention-based aggregation and hierarchical architectures have been proposed to enhance FL robustness in heterogeneous settings. Meanwhile, hashing techniques [15] have shown great potential for efficient data representation and retrieval in resource-constrained environments. Hashing neural networks learn compact binary codes that preserve semantic similarity, allowing for lightweight storage and fast nearest neighbor searching via Hamming distance.

Despite advances in federated learning and hashing, existing IDS methods often fail to address the key constraints of edge deployment, including limited computational resources, non-independent and identically distributed (non-IID) data, high communication overhead, and the need for real-time response. Recent IDS approaches based on deep learning or Transformer architectures achieve high detection accuracy, but they often incur high computational costs and lack efficient retrieval mechanisms, making them less suitable for edge scenarios. Most approaches focus on either accuracy or efficiency, but not both, and few attempt to integrate lightweight feature modeling, distributed training, and fast retrieval in a unified system. Moreover, few works simultaneously consider privacy preservation and real-time response in resource-constrained environments, leaving a significant gap between research prototypes and practical edge deployments. There remains a critical need for edge-friendly IDS frameworks that are accurate, efficient, and privacy-preserving.

To bridge this gap, we propose a unified intrusion detection framework for edge environments that combines spatiotemporal hashing, attention-driven federated learning, and multi-index nearest neighbor retrieval. Our approach first models traffic behavior using a hashing neural network that encodes spatiotemporal features into compact binary (0/1) codes. These binary codes are then compared using Hamming distance in a multi-index search structure, allowing efficient retrieval of the most similar entries in the database. The retrieved neighbors are subsequently used to determine the final intrusion classification result. The framework also exhibits structural and functional symmetry: symmetric operations are applied across distributed edge nodes, and the attention mechanism ensures consistent model aggregation. For training, we introduce a hierarchical FL architecture that clusters edge nodes into subnets and uses attention-based aggregation to balance client contributions. Compared to existing methods, our framework achieves a better trade-off between accuracy and efficiency while reducing retrieval latency and maintaining strong privacy guarantees. The main contributions of this work are as follows:

We develop a spatiotemporal hashing neural network that transforms raw network traffic into compact binary codes by capturing both spatial and temporal features. These codes preserve behavioral similarity and enable fast, low-cost Hamming distance comparison on edge devices.

We propose a hierarchical federated learning framework with attention-based aggregation, where edge clients are grouped into subnetworks to update model parameters. An attention mechanism adaptively weighs client contributions based on model similarity, improving performance under non-IID data and reducing communication overhead.

We implement a multi-index hashing retrieval method for efficient KNN-based intrusion detection. By splitting each binary code into subcodes and indexing them across multiple hash tables, the system supports scalable, low-latency retrieval using bitwise operations, ensuring high accuracy with lower memory and delay.

2. Related Work

2.1. Intrusion Detection in Edge Environments

Traditional intrusion detection systems are predominantly designed for deployment in centralized data centers, where traffic can be aggregated and analyzed using powerful computational resources. However, with the increasing shift toward edge computing and IoT architectures, there is a growing need to move intrusion detection closer to the data source. Edge environments consist of highly distributed, resource-constrained devices—such as sensors, embedded systems, and mobile nodes—that continuously generate and process network traffic.

Early efforts to adapt intrusion detection to edge scenarios focused on lightweight variants of traditional models. For example, Kolosnjaji et al. [16] proposed a simplified deep learning-based IDS for packet inspection on limited hardware. Ferdowsi and Roy [17] introduced a distributed GAN-based IDS that eliminated centralized coordination, showing promise for decentralized learning. Burcu et al. [18] evaluated classical classifiers such as KNN and decision trees under edge constraints, focusing on feature selection and sampling strategies to reduce computation. Recent works have further explored IDS design tailored for smart city and IoT environments. Elsaeidy et al. [19] proposed an unsupervised IDS based on Restricted Boltzmann Machines, capable of detecting anomalies from sensor grids in smart infrastructure. Other studies have explored IDSs based on edge–cloud collaboration, where preliminary detection is performed at the edge and refined in the cloud [20]. More recently, blockchain and smart contract-based IDS frameworks have emerged, using decentralized verification to enhance transparency and resilience in edge settings [21].

Despite these advancements, edge-based IDSs face persistent limitations. The high dimensionality and heterogeneity of network traffic make efficient modeling difficult, especially on low-power devices. Moreover, handcrafted features often fail to generalize across protocols and deployment contexts. To address these challenges, we develop a lightweight intrusion detection framework designed for scalable deployment across heterogeneous edge environments.

2.2. Federated Learning

Federated learning (FL) is a decentralized machine learning paradigm that enables multiple clients to collaboratively train a global model without sharing their raw data. Each device performs local updates and transmits only model parameters or gradients to a central server, preserving privacy and reducing communication overhead. FL is particularly suited to edge environments, where data is inherently distributed, heterogeneous, and non-IID.

FL frameworks can be categorized into horizontal, vertical, and hybrid types, depending on data distribution. Horizontal FL is the most common in edge computing, where participants share the same feature space but differ in data samples. Vertical FL handles scenarios where participants hold complementary features over shared users, often in cross-organization collaborations. Both aim to balance privacy with model utility in decentralized settings. In recent years, FL has been applied to intrusion detection [22] with promising results. Friji et al. [23] proposed a GNN-based IDS using IP and port graphs for lightweight anomaly detection. Bhavsar et al. [24] proposed a federated intrusion detection system leveraging logistic regression and CNN classifiers, achieving high accuracy and privacy preservation in transportation IoT environments using the NSL-KDD and Car-Hacking datasets. Jin et al. [25] proposed FL-IIDS, a federated intrusion detection framework that addresses catastrophic forgetting through gradient-balanced and knowledge-distilled loss functions. Ceviz et al. [26] proposed a decentralized FL-based intrusion detection system tailored for UAV networks, effectively reducing computation costs and enhancing privacy without sacrificing detection performance. Olanrewaju-George et al. [27] employed both supervised and unsupervised deep learning models trained via federated learning for IoT intrusion detection, demonstrating that FL-based auto-encoders outperform non-FL counterparts.

Although FL provides privacy by keeping raw data on local devices, it remains vulnerable to security risks such as model poisoning attacks and gradient leakage, which can compromise the integrity of the global model. Recent studies have suggested robust aggregation strategies, anomaly detection of malicious updates, and differential privacy to mitigate these threats. Despite these advancements, FL-based IDS frameworks still face key limitations, including uneven data distributions, varying computational capacities, and high communication costs. Most existing works focus on either learning accuracy or communication reduction, with limited consideration of real-time constraints and retrieval efficiency. To address these gaps, our work introduces an attention-guided aggregation strategy within a federated learning framework, enabling efficient intrusion detection across decentralized edge nodes.

2.3. Hash-Based Representation and Retrieval

Hashing has been widely used for compact data representation and efficient similarity searching in large-scale scenarios. It maps high-dimensional inputs into fixed-length binary codes that preserve semantic similarity, allowing fast nearest neighbor retrieval in Hamming space with low memory and computational cost. This is especially advantageous for real-time intrusion detection on resource-constrained edge devices.

Locality-Sensitive Hashing (LSH) [28] is a well-known data-independent method using random projections but suffers from limited accuracy and adaptability. To address this, data-dependent methods learn hash functions from data distributions. Gong and Lazebnik [29] proposed Iterative Quantization (ITQ) for minimizing quantization error. Zhou et al. [30] introduced Kernel Supervised Hashing (KSH), and Norouzi and Fleet [31] developed Minimal Loss Hashing (MLH) to preserve semantic relations more effectively. Deep learning further advanced hashing by jointly learning feature representations and binary codes. CNN Hashing [32] and Deep Semantic Ranking Hashing (DSRH) [33] are representative approaches. Recent studies have leveraged hashing techniques in various directions, including accelerating retrieval, enhancing privacy protection, and reducing communication costs. To improve retrieval speed, Wan et al. [34] proposed multi-index hashing (MIH), which partitions hash codes to support sublinear KNN search. Hashing has also been explored in distributed and privacy-sensitive contexts. Kapoor et al. [35] proposed HashFed, combining hashing with federated learning to reduce communication costs. In intrusion detection, hash-based representations enable lightweight traffic encoding and fast matching, making them suitable for low-power edge nodes. However, existing hashing-based IDS approaches rarely consider integration with federated settings or adapt to edge environments. In this work, we address this gap by designing a spatiotemporal hashing model integrated with multi-index retrieval.

3. Methodology

To address the challenges of scalability, efficiency, and adaptability in deploying Network Intrusion Detection Systems (NIDS) at the edge, we propose a distributed and hash-driven detection framework that integrates compact traffic representation, communication-aware training, and collaborative retrieval. Specifically, we first design a hashing neural network to encode high-dimensional traffic features into compact binary codes, enabling lightweight and similarity-preserving modeling. Then, we introduce a multi-level federated learning architecture that employs attention-based aggregation to improve model convergence and reduce communication overhead. Finally, we develop a collaborative detection strategy that constructs a distributed spatiotemporal hash code database across edge nodes and performs efficient KNN-based anomaly detection via multi-index hashing.

3.1. Discrete Hashing Network for Intrusion Detection

We adopt a deep supervised discrete hashing neural network to model high-dimensional network traffic samples with compact binary representations, as illustrated in Figure 2. Given $N$ traffic instances $X = \{x_i\}_{i=1}^{N}$, where each $x_i \in \mathbb{R}^{d}$ and $d$ denotes the number of traffic features, our goal is to learn a set of $K$-bit binary hash codes $B = \{b_i\}_{i=1}^{N}$ through an end-to-end learnable network. For each input $x_i$, its corresponding binary code is denoted as $b_i = f(x_i)$, where $f(\cdot)$ is the learned hash function.

The network architecture consists of an input layer, two hidden layers, and an output layer. The number of input neurons matches the traffic feature dimension $d$, and the number of output neurons equals the target hash length $K$. Each layer is fully connected, and the hidden layers use ReLU activation to enhance non-linearity. This compact structure balances representation capability and computational efficiency, making it suitable for real-time edge deployment. The learning objective is twofold: (1) to preserve semantic similarity by ensuring that hash codes of similar samples have minimal Hamming distance, and (2) to enable discriminative classification through a linear classifier $Y = W^{\top} B$, where $Y$ is the ground truth label matrix and $W$ is the classifier weight. We define the similarity matrix $M = \{m_{ij}\}$. Here, $M$ is a binary similarity matrix where $m_{ij} = 1$ indicates that samples $x_i$ and $x_j$ belong to the same class (semantically similar), and $m_{ij} = 0$ otherwise. This matrix serves as the supervision signal for preserving semantic similarity in the learned hash codes. The training procedure of our deep supervised discrete hashing network is summarized in Algorithm 1, where a neural network is optimized to learn a hash function $f$ that maps inputs into compact binary codes. The algorithm consists of four main update steps, described as follows.

Algorithm 1: Neural Network Training Algorithm Based on Deep Supervised Discrete Hashing

In Algorithm 1, $\operatorname{sgn}(\cdot)$ denotes the sign function, which outputs 1 for positive values and 0 otherwise, producing binary sequences in $\{0,1\}^{K}$.
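The architecture described above (fully connected layers, two ReLU hidden layers, $K$ output neurons, and binarization by the sign function) can be sketched in a few lines of NumPy. This is only an illustrative forward pass under stated assumptions: the layer sizes 78, 64, and 48 are taken from the experimental settings, while the random initialization and the names `init_params`, `continuous_hash`, `binarize`, and `similarity_matrix` are hypothetical and not the authors' implementation.

```python
import numpy as np

def init_params(d, hidden=(64, 48), k=48, seed=0):
    """Randomly initialise (weight, bias) pairs for d -> hidden -> k (assumed init)."""
    rng = np.random.default_rng(seed)
    sizes = [d, *hidden, k]
    return [(rng.normal(0.0, 0.1, size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def continuous_hash(x, params):
    """Forward pass: ReLU on the hidden layers, linear output of length K."""
    a = x
    for w, b in params[:-1]:
        a = np.maximum(a @ w + b, 0.0)  # ReLU activation
    w, b = params[-1]
    return a @ w + b

def binarize(h):
    """Sign function as described in the text: 1 for positive values, 0 otherwise."""
    return (h > 0).astype(np.uint8)

def similarity_matrix(labels):
    """Binary similarity matrix: entry (i, j) is 1 when samples i and j share a class."""
    labels = np.asarray(labels)
    return (labels[:, None] == labels[None, :]).astype(np.uint8)

# Usage: five synthetic samples with 78 features, encoded into 48-bit codes.
params = init_params(d=78, k=48)
x = np.random.default_rng(1).normal(size=(5, 78))
codes = binarize(continuous_hash(x, params))
M = similarity_matrix([0, 1, 0])
```

Each row of `codes` is one 48-bit code; in training, `M` would supply the pairwise supervision signal.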

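Step 1: Loss function construction. We define a loss function that jointly preserves semantic similarity and enables discriminative classification. For training samples $\{(x_i, y_i)\}_{i=1}^{N}$, where $y_i$ is a one-hot class label, the total objective is given by

$$\mathcal{L} = -\sum_{i,j} \left( m_{ij}\,\Theta_{ij} - \log\left(1 + e^{\Theta_{ij}}\right) \right) + u \sum_{i=1}^{N} \left\lVert y_i - W^{\top} b_i \right\rVert_2^2 + v \left\lVert W \right\rVert_F^2,$$

where $\Theta_{ij} = \tfrac{1}{2} h_i^{\top} h_j$ measures the pairwise similarity between continuous hash outputs $h_i$ and $h_j$, $m_{ij}$ indicates whether $x_i$ and $x_j$ are semantically similar, $W$ is the linear classification weight matrix, and $b_i$ is the $K$-bit binary hash code obtained from $h_i$ via the sign function. Hyperparameters $u$ and $v$ control the trade-off between the classification loss and the Frobenius-norm regularization term on $W$.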

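Step 2: Feature mapping update. The continuous hash representation is computed as $h_i = G^{\top} \phi(x_i; g) + n$, where $\phi(x_i; g)$ is the output of intermediate layers with parameters $g$, $G$ is a projection matrix, and $n$ is a bias term. During training, we update $G$, $n$, and $g$ via standard backpropagation based on the gradient of $\mathcal{L}$ with respect to $h_i$. We update the feature mapping via:

$$\frac{\partial \mathcal{L}}{\partial G} = \phi(x_i; g) \left( \frac{\partial \mathcal{L}}{\partial h_i} \right)^{\top}, \qquad \frac{\partial \mathcal{L}}{\partial n} = \frac{\partial \mathcal{L}}{\partial h_i}, \qquad \frac{\partial \mathcal{L}}{\partial \phi(x_i; g)} = G\, \frac{\partial \mathcal{L}}{\partial h_i}.$$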

Step 3: Classifier weight update. To minimize the supervised loss, we update the classifier weights using ridge regression:

$$W = \left( B B^{\top} + \frac{v}{u} I \right)^{-1} B\, Y^{\top},$$

where $B$ contains current binary codes and $Y$ holds label vectors.
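The ridge-regression step can be illustrated with a short NumPy sketch. It assumes the standard closed-form minimizer of $u \lVert Y - W^{\top} B \rVert_F^2 + v \lVert W \rVert_F^2$, namely $W = (B B^{\top} + \tfrac{v}{u} I)^{-1} B Y^{\top}$, with $B$ of shape $K \times N$ and $Y$ of shape $C \times N$; the function name `update_classifier` and the default hyperparameter values are hypothetical.

```python
import numpy as np

def update_classifier(B, Y, u=1.0, v=0.1):
    """Closed-form ridge solution for the classifier weights W (shape K x C).

    B: (K, N) matrix of current binary codes.
    Y: (C, N) matrix of one-hot label vectors.
    u, v: loss weights (illustrative defaults, not values from the paper).
    """
    B = B.astype(np.float64)
    K = B.shape[0]
    A = B @ B.T + (v / u) * np.eye(K)
    # Solving the linear system is more stable than forming the inverse.
    return np.linalg.solve(A, B @ Y.T)

# Usage on synthetic data: 8-bit codes, 3 classes, 20 samples.
rng = np.random.default_rng(0)
B_demo = rng.integers(0, 2, size=(8, 20))
Y_demo = np.eye(3)[rng.integers(0, 3, size=20)].T
W_demo = update_classifier(B_demo, Y_demo, u=1.0, v=0.1)
```

Because the regularizer makes the system matrix positive definite, the solve is well posed even when some bits are constant across the batch.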

Step 4: Binary code optimization. We update the $K \times N$ binary hash matrix $B$ using discrete cyclic coordinate descent to jointly preserve semantic similarity and enhance classification. The optimization objective is

$$\min_{B} \; \left\lVert W^{\top} B \right\rVert_F^2 - 2 \operatorname{Tr}\left( B^{\top} P \right), \qquad P = W Y + \frac{\eta}{u} H,$$

where $\lVert \cdot \rVert_F$ is the Frobenius norm, $\operatorname{Tr}(\cdot)$ is the matrix trace (sum of diagonal elements), $W$ is the classifier weight matrix, $Y$ is the label matrix, $H$ is the continuous hash output, and $P$ integrates the label prediction term ($W Y$) with the quantization adjustment term ($\tfrac{\eta}{u} H$). Here, $\eta$ is a hyperparameter controlling the influence of the quantization adjustment, encouraging $H$ to align with $B$, and $u$ is a hyperparameter balancing the classification loss term in the overall objective. Each bit row $b_k$ is updated by fixing all others and applying

$$b_k = \operatorname{sgn}\left( p_k - B'^{\top} W' w_k \right),$$

where $p_k$ is the $k$-th row of $P$, $B'$ and $W'$ denote $B$ and $W$ with the $k$-th row removed, and $w_k$ is the $k$-th row of $W$. This process is iterated over all bits until convergence.
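The bit-wise update can be sketched as one sweep of discrete cyclic coordinate descent over the rows of $B$. This is a minimal illustration, assuming the objective $\lVert W^{\top} B \rVert_F^2 - 2 \operatorname{Tr}(B^{\top} P)$ and codes in $\{-1, +1\}$, the convention under which the sign update is the exact minimizer for each row; mapping $-1$ to $0$ afterwards gives the 0/1 codes used in the text. The names `objective` and `dcc_sweep` are hypothetical.

```python
import numpy as np

def objective(B, W, P):
    """||W^T B||_F^2 - 2 Tr(B^T P) for B (K x N), W (K x C), P (K x N)."""
    return np.linalg.norm(W.T @ B) ** 2 - 2.0 * np.trace(B.T @ P)

def dcc_sweep(B, W, P):
    """Update each bit row in turn while holding the other rows fixed."""
    B = B.copy()
    K = B.shape[0]
    for k in range(K):
        rest = np.arange(K) != k
        B_rest, W_rest = B[rest], W[rest]   # shapes (K-1, N) and (K-1, C)
        w_k = W[k]                          # k-th row of W, shape (C,)
        score = P[k] - B_rest.T @ (W_rest @ w_k)
        B[k] = np.where(score >= 0, 1.0, -1.0)
    return B

# Usage on synthetic data: each sweep cannot increase the objective.
rng = np.random.default_rng(0)
K_bits, C, N = 8, 3, 30
W_d = rng.normal(size=(K_bits, C))
Y_d = np.eye(C)[rng.integers(0, C, size=N)].T
H_d = rng.normal(size=(K_bits, N))
P_d = W_d @ Y_d + 0.5 * H_d                 # 0.5 stands in for eta / u
B0 = np.where(rng.normal(size=(K_bits, N)) >= 0, 1.0, -1.0)
B1 = dcc_sweep(B0, W_d, P_d)
B01 = ((B1 + 1) // 2).astype(np.uint8)      # map {-1, +1} to {0, 1}
```

Repeating `dcc_sweep` until `B` stops changing corresponds to iterating over all bits until convergence.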

3.2. Multi-Level Attention-Based Aggregation

To mitigate communication overhead and address data heterogeneity in distributed intrusion detection, we design a multi-level federated learning framework combined with an attention-based aggregation strategy. As illustrated in Figure 2, the framework organizes edge nodes into hierarchical subnetworks to enable multi-level model aggregation. Furthermore, as shown in Figure 3, an attention mechanism is employed to dynamically assign aggregation weights at each level, allowing more adaptive and fine-grained model updates. This joint design significantly improves the efficiency and scalability of the system.

In our federated learning framework, the server begins by initializing a global parameter vector $\theta^{0}$ and distributing it to all participating clients. Each client $c$ receives $\theta^{t}$ at round $t$ and trains a local model on its private dataset $D_c$, producing updated parameters $\theta_c^{t+1}$. After completing local training, the client sends $\theta_c^{t+1}$ to the server. Upon receiving updates from a subset of $m_t$ clients in round $t$, the attention mechanism scores each update for relevance. The resulting relevance scores are then normalized to obtain attention weights $\alpha_c^{t}$, and the server aggregates the updates as:

$$\theta^{t+1} = \sum_{c=1}^{m_t} \alpha_c^{t}\, \theta_c^{t+1}, \qquad \sum_{c=1}^{m_t} \alpha_c^{t} = 1,$$

where $\theta^{t+1}$ is the updated global model and $m_t$ is the number of clients participating in aggregation at round $t$. After each aggregation, the server sends $\theta^{t+1}$ back to the participating clients. Each client then updates its local parameters by blending the new global model with its current local model:

$$\theta_c^{t+1} \leftarrow \beta\, \theta^{t+1} + (1 - \beta)\, \theta_c^{t+1},$$

where $\beta \in [0, 1]$ is a mixing coefficient controlling the balance between global guidance and local adaptation. A higher $\beta$ places more weight on the global model, while a lower $\beta$ allows the client to retain more of its locally learned features. This partial participation and blending strategy improves robustness in heterogeneous environments, as the server can proceed with updates without waiting for all clients, and clients can flexibly adjust the influence of global updates according to their local data characteristics.
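One round of the aggregation and blending procedure described above can be sketched as follows. The relevance score used here (negative Euclidean distance between a client update and the current global model, normalized with a softmax) is an assumption chosen for illustration; the text only states that weights depend on model similarity. The function names are hypothetical, and the default mixing value 0.28 is the mixing factor reported in the experimental settings.

```python
import numpy as np

def attention_weights(global_params, client_params):
    """Softmax-normalised relevance scores (assumed: negative distance to the global model)."""
    scores = np.array([-np.linalg.norm(p - global_params) for p in client_params])
    scores -= scores.max()                  # numerical stability for exp
    w = np.exp(scores)
    return w / w.sum()

def aggregate(global_params, client_params):
    """Attention-weighted average of the received client updates."""
    w = attention_weights(global_params, client_params)
    return np.tensordot(w, np.stack(client_params), axes=1)

def blend(new_global, local_params, mix=0.28):
    """Client-side blending: mix * global + (1 - mix) * local."""
    return mix * new_global + (1.0 - mix) * local_params

# Usage: three participating clients with flattened 4-parameter models.
theta = np.zeros(4)
updates = [np.full(4, 0.1), np.full(4, 0.2), np.full(4, 2.0)]
alpha = attention_weights(theta, updates)
theta_next = aggregate(theta, updates)
local_next = [blend(theta_next, u) for u in updates]
```

In this toy run the third client, whose update lies far from the global model, receives the smallest weight, which is the intended damping effect under non-IID data.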

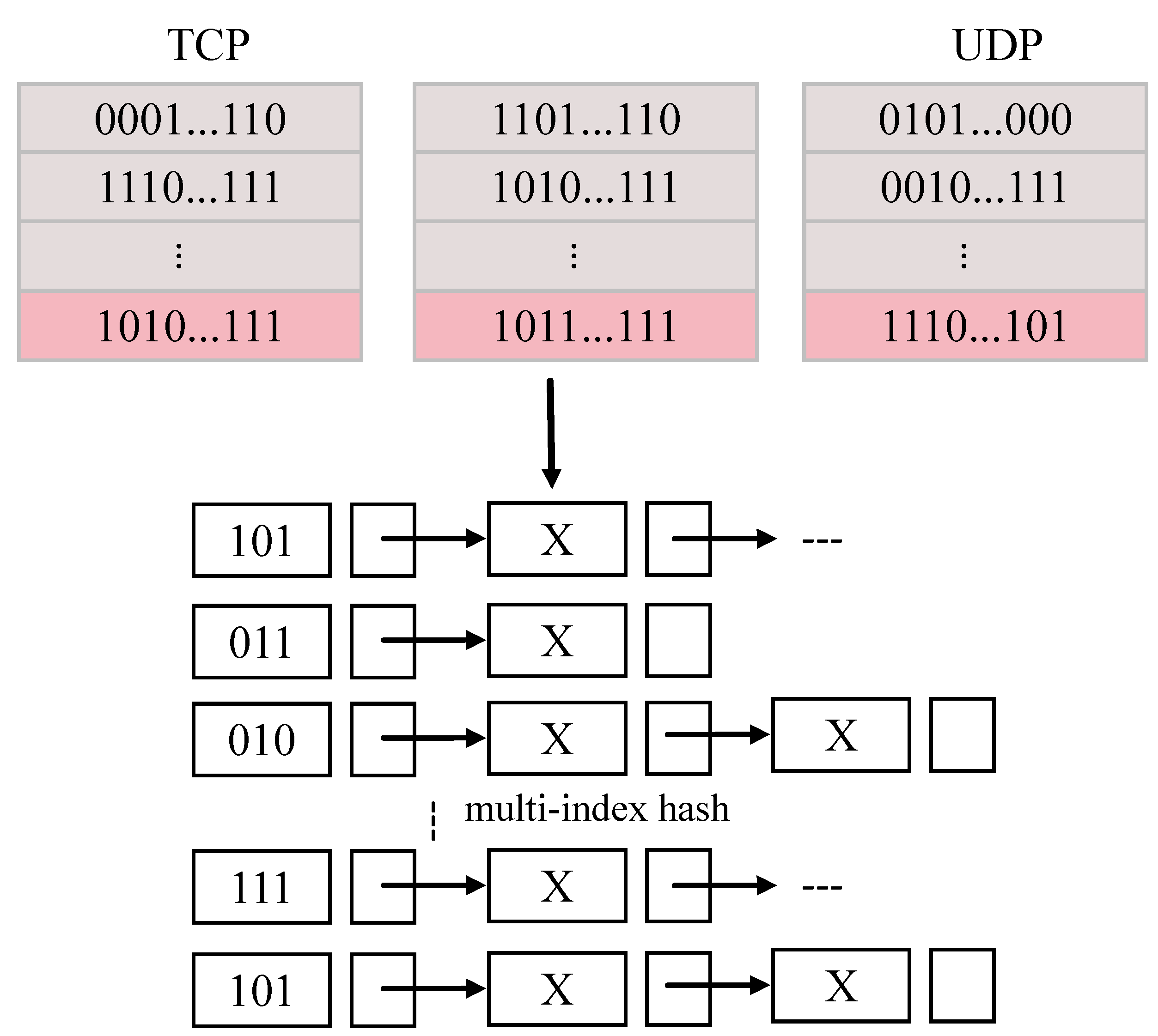

3.3. Federated Multi-Index Hashing

To enable efficient intrusion detection in distributed and large-scale network environments, we propose a collaborative detection strategy that integrates hash-based representation with multi-index retrieval across multiple edge nodes. In this framework, each edge node trains a hashing model to encode raw network traffic into fixed-length binary codes, while preserving semantic similarity among similar traffic patterns. The encoded hash codes are uploaded to the node’s sub-server, which synchronizes with the central server to construct a global spatiotemporal hash codebase covering multiple domains.

To support fast retrieval, we adopt the multi-index hashing (MIH) scheme, as illustrated in Figure 4. MIH partitions each $K$-bit hash code into $m$ disjoint subcodes and stores them in separate hash tables. During inference, a query code is similarly split, and each subcode is used to search its corresponding hash table in parallel. The retrieved candidates are then ranked by Hamming distance, and their labels are aggregated to determine the most likely traffic category.
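The indexing and lookup procedure described above can be sketched in plain Python. This is a simplified illustration, not the authors' implementation: codes are stored as integers, $K$ is assumed to be divisible by $m$, every substring table is probed with radius $\lfloor r/m \rfloor$ (which is sufficient for completeness by the pigeonhole argument), and the helper names are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations

def split(code, k_bits, m):
    """Split a K-bit integer code into m disjoint substrings of K/m bits each."""
    s = k_bits // m
    mask = (1 << s) - 1
    return [(code >> (j * s)) & mask for j in range(m)]

def build_index(codes, k_bits, m):
    """One hash table per substring position, mapping substring value -> code ids."""
    tables = [defaultdict(list) for _ in range(m)]
    for idx, code in enumerate(codes):
        for j, sub in enumerate(split(code, k_bits, m)):
            tables[j][sub].append(idx)
    return tables

def probes(sub, s, radius):
    """Enumerate every s-bit value within the given Hamming radius of sub."""
    for r in range(radius + 1):
        for bits in combinations(range(s), r):
            flipped = sub
            for b in bits:
                flipped ^= 1 << b
            yield flipped

def hamming(a, b):
    """Hamming distance via bitwise XOR and a population count."""
    return bin(a ^ b).count("1")

def search(query, codes, tables, k_bits, m, r):
    """Return (distance, id) pairs for all codes within Hamming radius r of query."""
    s = k_bits // m
    candidates = set()
    for j, sub in enumerate(split(query, k_bits, m)):
        for probe in probes(sub, s, r // m):
            candidates.update(tables[j].get(probe, ()))
    # Candidates are verified against the full code before being returned.
    return sorted((hamming(codes[i], query), i) for i in candidates
                  if hamming(codes[i], query) <= r)

def knn_label(results, labels, k):
    """Majority vote over the labels of the k closest retrieved neighbours."""
    votes = Counter(labels[i] for _, i in results[:k])
    return votes.most_common(1)[0][0] if votes else None
```

For example, with 24-bit codes and `m = 4`, a radius-5 query probes each 6-bit table with radius 1, so only 7 buckets per table are inspected instead of scanning every stored code.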

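Theorem 1. Given two binary codes $h$ and $g$ with Hamming distance $\lVert h - g \rVert_H \le r$, if the codes are divided into $m$ substrings, then there exists at least one substring index $z$ such that the Hamming distance between the $z$-th substrings is bounded by $\lfloor r/m \rfloor$, i.e.,

$$\exists\, z \in \{1, \dots, m\}: \quad \left\lVert h^{(z)} - g^{(z)} \right\rVert_H \le \left\lfloor \frac{r}{m} \right\rfloor.$$

This property ensures retrieval completeness [36]. Based on Theorem 1, MIH retrieves, for each substring position $j$, a candidate set $N_j(g)$ consisting of codes whose $j$-th substring lies within Hamming distance $\lfloor r/m \rfloor$ or $\lfloor r/m \rfloor - 1$ of $g^{(j)}$. The union of these sets forms $N(g) = \bigcup_{j=1}^{m} N_j(g)$, and all elements in $N(g)$ are compared with $g$ to determine the final $r$-neighbors.

Theorem 2. To find any binary code within a Hamming radius $r = m r' + a$ (with $r' = \lfloor r/m \rfloor$ and $0 \le a < m$) in $q$-bit binary space, it is sufficient to search only $a + 1$ substrings with radius $r'$, and the remaining $m - (a + 1)$ substrings with radius $r' - 1$. That is,

$$\lVert h - g \rVert_H \le r \;\Longrightarrow\; \exists\, z \le a + 1: \left\lVert h^{(z)} - g^{(z)} \right\rVert_H \le r' \quad \text{or} \quad \exists\, z > a + 1: \left\lVert h^{(z)} - g^{(z)} \right\rVert_H \le r' - 1.$$

Let $s = q/m$ be the length of each substring, where $q$ is the total bit length. The number of bucket lookups per query is given by:

$$n_{\text{lookups}} = \frac{q}{s} \sum_{z=0}^{\lfloor r/m \rfloor} \binom{s}{z} \le \frac{q}{s}\, 2^{H(r/q)\, s},$$

where $H(\cdot)$ denotes the binary entropy function. The corresponding retrieval cost is:

$$\text{cost}(s) \le \left( 1 + \frac{n}{2^{s}} \right) \frac{q}{s}\, 2^{H(r/q)\, s},$$

where $n$ is the number of codes in the database. Since the number of lookups grows much more slowly than in exhaustive search and the number of candidates per bucket decreases exponentially with $s$, the overall cost remains low even in large-scale databases. This design preserves privacy by avoiding raw traffic transmission, enables rapid retrieval via bitwise XOR operations, and ensures generalization across diverse traffic types, protocols, and temporal patterns.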

4. Experiments

To validate the effectiveness of our proposed intrusion detection framework in edge environments, we conducted a series of experiments on widely used benchmark datasets. This section describes the datasets used, the experimental setup, performance results, system resource overhead, and communication efficiency.

4.1. Datasets

We conducted our experiments on three widely used benchmark datasets: NSL-KDD [37], UNSW-NB15 [38], and CIC-IDS2017 [39]. Among these, CIC-IDS2017 served as the primary dataset for performance comparison and analysis, due to its comprehensive coverage of real-world network traffic scenarios. The CIC-IDS2017 dataset contains both benign and malicious traffic, including various types of modern attacks, such as DoS, DDoS, Web Attack, Port Scan, Botnet, and Infiltration. For our experiments, a subset of the dataset was selected and sampled for training and testing, as shown in Table 1.

For all the datasets (NSL-KDD, UNSW-NB15, and CIC-IDS2017), we performed a unified preprocessing pipeline including feature selection, normalization, and encoding of categorical attributes. We first removed redundant or highly correlated features, using correlation analysis and domain knowledge to reduce dimensionality. Then, continuous features were normalized to zero mean and unit variance using z-score normalization to prevent scale bias during training. For categorical fields such as protocol type and service, one-hot encoding was applied. These preprocessing steps have been shown to improve the convergence of the hashing neural network and the performance of the attention-based KNN retrieval.
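The preprocessing pipeline described above (correlation-based feature pruning, z-score normalization, and one-hot encoding) can be sketched with NumPy. This is an illustrative outline only: the correlation threshold of 0.95, the greedy pruning order, and all function names are assumptions, since the text does not specify them.

```python
import numpy as np

def drop_correlated(x, threshold=0.95):
    """Greedily keep columns whose absolute correlation with every kept column is below threshold."""
    corr = np.abs(np.corrcoef(x, rowvar=False))
    keep = []
    for j in range(x.shape[1]):
        if all(corr[j, i] < threshold for i in keep):
            keep.append(j)
    return keep

def zscore_fit(x):
    """Per-feature mean and standard deviation, estimated on the training split."""
    mean = x.mean(axis=0)
    std = x.std(axis=0)
    std[std == 0] = 1.0                     # avoid division by zero for constant features
    return mean, std

def zscore_apply(x, mean, std):
    """Normalise features to zero mean and unit variance."""
    return (x - mean) / std

def one_hot(values, categories):
    """One-hot encode a categorical field such as protocol type or service."""
    index = {c: i for i, c in enumerate(categories)}
    out = np.zeros((len(values), len(categories)))
    for row, v in enumerate(values):
        out[row, index[v]] = 1.0
    return out

# Usage on synthetic data: column 2 duplicates column 0 up to scale and is dropped.
rng = np.random.default_rng(0)
raw = rng.normal(size=(100, 3))
raw[:, 2] = 2.0 * raw[:, 0]
kept = drop_correlated(raw)
mean, std = zscore_fit(raw[:, kept])
numeric = zscore_apply(raw[:, kept], mean, std)
proto = one_hot(["tcp", "udp", "tcp"], ["tcp", "udp", "icmp"])
```

Fitting the mean and standard deviation on the training split only, then reusing them on the test split, avoids leaking test statistics into training.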

4.2. Experimental Settings

To simulate real-world network attack scenarios, we set up a controlled test environment comprising six emulated attack hosts and one designated target server. The attack hosts used the hping3 tool to launch Denial of Service (DoS) attacks by sending crafted TCP packets to the target server. Specifically, each attack machine transmitted a packet every 500 to 2000 milliseconds, continuously sending large volumes of spoofed requests to saturate the server’s bandwidth and computational resources. This setup effectively mimicked a Distributed Denial of Service (DDoS) environment, which is one of the most prevalent and representative forms of network attacks in real-world scenarios. The target server is equipped with a packet capture utility that monitors and records all incoming traffic, including both attack traffic and normal background traffic (labeled as BENIGN). The collected packets contained essential TCP-related header fields, such as source and destination IP addresses, source and destination ports, and TCP flags. Additionally, a set of time-based traffic statistical features was extracted from the captured flows, providing a comprehensive representation of network behavior for both benign and malicious scenarios.

In our experiments, the neural network was configured with an input layer of 78 nodes, followed by two hidden layers with 64 and 48 neurons, respectively. The output layer size corresponds to the hash code length, which was chosen from {12, 24, 48, 96}. During training, the base model on each edge node was optimized using a batch size of 8 and a learning rate of . The mixing factor was set to 0.28, and the total number of communication rounds between the clients and the server was fixed at 80. To ensure a comprehensive evaluation, each dataset was split into a training set and a test set with an 80/20 ratio. The training samples were distributed across five edge nodes. For the IID setting, the training data was randomly divided into five equally sized subsets and assigned to the edge nodes, ensuring that each node had a representative distribution of the entire dataset. For the non-IID setting, each edge node received samples belonging to only two randomly selected classes from the training set, thereby simulating data heterogeneity across the devices. We evaluated the performance of the intrusion detection model using three widely accepted metrics: accuracy (AC), false positive rate (FPR), and F1-score. These metrics offer a balanced view of classification precision, recall, and the rate of false alarms, which are crucial for reliable intrusion detection.
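The two data-partitioning schemes described above can be sketched as follows. The sketch assumes five nodes and two classes per node, as stated in the text; the function names are hypothetical, and in this simplified non-IID variant two nodes that draw the same class receive the same samples of that class, which the text does not specify.

```python
import numpy as np

def partition_iid(n_samples, n_nodes=5, seed=0):
    """Shuffle sample indices and split them into equally sized node subsets."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), n_nodes)

def partition_non_iid(labels, n_nodes=5, classes_per_node=2, seed=0):
    """Give each node only the samples of a few randomly selected classes."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    parts = []
    for _ in range(n_nodes):
        chosen = rng.choice(classes, size=classes_per_node, replace=False)
        parts.append(np.flatnonzero(np.isin(labels, chosen)))
    return parts

# Usage on synthetic labels with six traffic classes.
labels_demo = np.random.default_rng(1).integers(0, 6, size=600)
iid_parts = partition_iid(len(labels_demo))
non_iid_parts = partition_non_iid(labels_demo)
```

Each entry of `iid_parts` or `non_iid_parts` is an index array that selects the local training set of one edge node.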

4.3. Experimental Results

We evaluated the intrusion detection performance of our proposed model across various datasets and compared it against state-of-the-art baselines.

Table 2 reports the results on four datasets (CIC-IDS2017, UNSW-NB15, NSL-KDD, and a self-collected synthetic dataset) under both IID and non-IID settings. The synthetic dataset was constructed from network traffic traces collected in our controlled laboratory environment, containing both benign flows and simulated attack scenarios. The metrics included accuracy (AC), F1-score (F1), and false positive rate (FPR).
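For reference, the three metrics can be computed from confusion-matrix counts as in the sketch below. It treats detection as a binary problem (1 for attack, 0 for benign), which is a simplifying assumption; the multi-class averaging used for the reported numbers is not stated here, and the function name is hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, false positive rate, and F1-score for binary labels (1 = attack)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    fpr = fp / (fp + tn) if (fp + tn) else 0.0            # benign flows flagged as attacks
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    return accuracy, fpr, f1

# Usage: two true positives, two true negatives, one false positive, one false negative.
acc, fpr, f1 = binary_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0])
```

In this example the accuracy is 4/6, the FPR is 1/3, and the F1-score is 2/3.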

The results demonstrate that our model consistently achieves high performance across all datasets. For example, on the CIC-IDS2017 dataset, it reached an accuracy of 98.97% and an F1-score of 96.73% under IID conditions. Even in the more challenging non-IID setting, it maintained strong performance with 94.13% accuracy and 92.59% F1. Furthermore, Table 3 and Table 4 compare our method with several existing intrusion detection approaches on the CIC-IDS2017 and UNSW-NB15 datasets, respectively. On the UNSW-NB15 dataset, our model achieved the highest accuracy (98.52%) and F1-score (97.12%), while maintaining a lower FPR (2.32%) than NE-GConv and GatedGraphConv. On the CIC-IDS2017 dataset, it outperformed CoWatch by over 5% in accuracy and reduced the false positive rate from 3.85% to 1.97%. These results indicate that our attention-driven federated learning framework with hash-based modeling offers both high detection accuracy and reduced false alarm rates across diverse real-world and synthetic datasets.

To further evaluate the classification performance of our method, we visualize confusion matrices for the CIC-IDS2017 and UNSW-NB15 datasets in Figure 5, each including six representative categories of network traffic. From Figure 5a, we observe that the proposed model achieved extremely high classification accuracy across most classes in the CIC-IDS2017 dataset. Specifically, benign traffic (class 1), DoS (class 2), and Port Scan (class 5) show near-perfect accuracy exceeding 99%, with very limited misclassification. Figure 5b shows the results on the UNSW-NB15 dataset, where overall classification performance remained strong, with most classes above 97% accuracy. Nonetheless, class 6 experienced a slightly lower performance (97.63%), with a small number of instances misclassified as benign traffic or class 5. This indicates a minor similarity in feature space, highlighting the challenge of precisely identifying certain rare attack types in realistic and noisy network environments.

4.4. Time and Space Efficiency Analysis

We evaluated the time efficiency by measuring the search latency of linear scan and multi-index hashing (MIH) on the CIC-IDS2017 and UNSW-NB15 datasets for various $k$ values. As shown in Table 5, MIH delivered significant acceleration over linear scan, with speedups ranging from an order of magnitude to over one thousand times, depending on the dataset characteristics and hash code distribution. When performing a 1-NN search on 1 billion 48-bit binary codes, linear scan required 11.63 seconds, while MIH completed the same task in just 0.018 seconds, a roughly 640-fold speedup. On the CIC-IDS2017 dataset, the speedup ranged from 239× (with 12-bit codes) to 1013× (with 24-bit codes). On the UNSW-NB15 [38] dataset, the corresponding improvement ranged from 407× to 806×. Notably, the distribution of the hash codes, which is determined by the dataset and the hash bit length, directly affected the retrieval performance. As the value of $k$ increased (i.e., more neighbors were retrieved), the speedup tended to decrease. This is because larger $k$ values expand the search radius, increasing the number of candidate neighbors that must be checked. In general, however, MIH consistently outperformed linear scan by one to three orders of magnitude in terms of speed, demonstrating its efficiency and suitability for real-time intrusion detection on large-scale binary hash databases.

To evaluate the space efficiency of our hash-based modeling approach, we selected subsets of three datasets (CIC-IDS2017, UNSW-NB15, and NSL-KDD), whose original data sizes were 17.2 MB, 15.7 MB, and 23.8 MB, respectively. The results are visualized in Figure 6. The line plot in the figure shows the ratio of the hash code storage to the original data size across four encoding lengths: 12, 24, 48, and 96 bits. The corresponding storage proportions were approximately 1.2%, 11%, 32%, and 63%, respectively. As expected, longer hash codes provided finer-grained representations of the original data but also consumed more storage. When combined with the accuracy results in Table 5, we observe that extremely short codes (e.g., 12-bit) tend to sacrifice detection performance, while moderate-length codes (e.g., 48-bit) offer a good trade-off between accuracy and storage.