1. Introduction

Digital signatures are essential for securing modern communication systems and embedded devices. As the Internet of Things (IoT) continues to grow, more and more resource-constrained devices are being used in critical infrastructure, healthcare, and industrial systems. This increases the need for authentication methods that are not only secure but also efficient [1,2]. Traditional digital signature schemes, such as those based on elliptic curve cryptography, are widely used today because they are compact and fast. The problem is that they are vulnerable to quantum attacks: Shor’s algorithm can efficiently solve the elliptic curve discrete logarithm problem (ECDLP), which would compromise the security of most current public key systems [3]. This threat is particularly significant for IoT devices, which are often deployed for many years and may still be in use when quantum computers become practical [4,5].

To address this problem, we propose a lattice-based digital signature scheme. Our construction is designed to provide post-quantum security and is optimized for devices with limited computing power. Our approach relies on hard mathematical problems, namely Learning With Errors (LWE) and the Short Integer Solution (SIS) problem, which are believed to remain secure even against quantum computers. These problems are defined over structured polynomial rings with useful algebraic symmetry. By exploiting this symmetry, we can apply efficient techniques such as Number Theoretic Transforms (NTTs), which reduce computation time and memory usage.

Our goal is to design a post-quantum secure digital signature scheme based on the LWE and SIS problems that is efficient enough for resource-constrained devices and practical enough for real-world deployment on modern IoT hardware. Although our work is inspired by previous designs, it replaces elliptic curve operations with lattice-based ones while keeping the structure simple and efficient, which is the main novelty of our approach. The use of symmetric ring structures helps us achieve good performance without sacrificing security. Our main contributions include adapting lattice-based methods to designs that exploit algebraic symmetry, choosing practical parameters for common embedded processors, and testing the scheme on ARM Cortex-M4 hardware to confirm that it is suitable for real-world applications.

The security of IoT devices has been studied extensively, particularly with regard to authentication, trust management, and resilience to emerging threats. Conventional approaches often rely on lightweight cryptographic primitives to meet resource constraints, but these are increasingly seen as insufficient against quantum-capable adversaries. Recent work has highlighted the unique challenges of IoT environments, including scalability, heterogeneity, and the need for long-term cryptographic agility. For example, Refs. [6,7] analyze lightweight authentication mechanisms for IoT devices under evolving adversarial models, while Refs. [8,9] study the performance and reliability of IoT networks subjected to cryptographic computational overhead. This study addresses the following research questions: (1) Can lattice-based signatures be optimized to fit within IoT resource constraints while preserving post-quantum security? (2) How do such schemes compare to existing NIST PQC candidates and lightweight alternatives (e.g., qDSA)? (3) Which design techniques (e.g., algebraic symmetry, rejection sampling) most effectively balance security and performance on constrained devices?

The contributions of this work can be summarized as follows:

We design a lattice-based digital signature scheme, optimized for resource-constrained IoT devices, providing compact signatures and reduced memory usage compared to NIST PQC standards;

We provide both theoretical security proofs (ROM and QROM) and concrete security analysis, which includes explicit bounds;

We implement and benchmark the scheme using ARM Cortex-M4 hardware, demonstrating its efficiency and practicality for use on resource-constrained devices;

We compare our results to state-of-the-art schemes, including NIST PQC finalists and the qDSA, highlighting its advantages in terms of signature size and verification cost.

The rest of this paper is organized as follows:

Section 2 provides background on lattice-based cryptography and digital signature schemes.

Section 3 describes the design of our scheme, including key generation, signing, and verification.

Section 4 explains the implementation details and presents the performance results.

Section 5 provides the formal security analysis, and

Section 6 concludes the paper and discusses possible directions for future work.

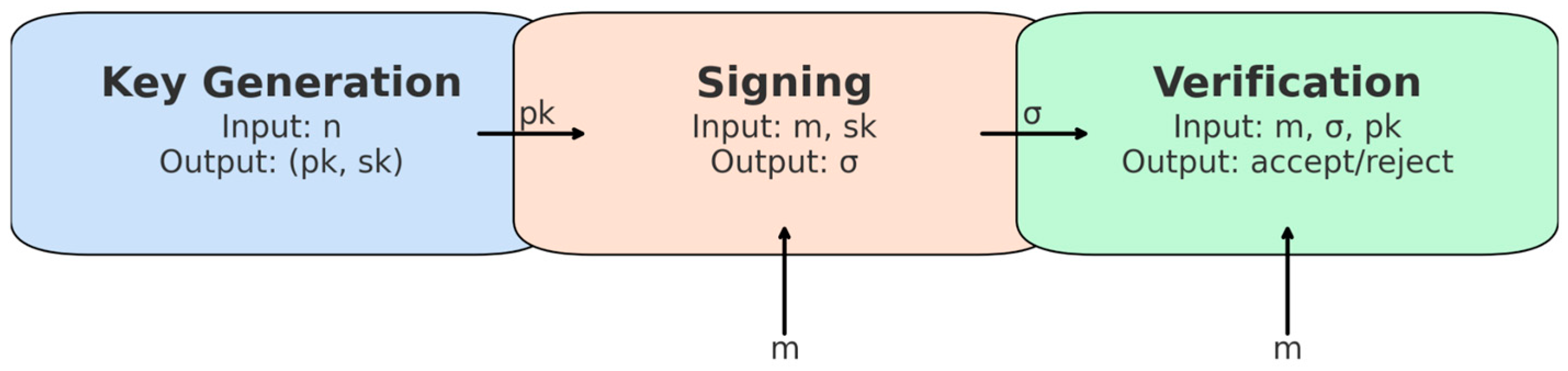

Figure 1 illustrates the overall architecture of the proposed scheme, highlighting how key generation, signing, and verification interact within resource-constrained IoT devices.

2. Background and Preliminaries

This section provides the theoretical foundations for our novel post-quantum digital signature construction. We begin by introducing the lattice-based cryptographic assumptions and algebraic structures that underpin the scheme, focusing in particular on the Learning With Errors (LWE) and Short Integer Solution (SIS) problems over polynomial rings.

2.1. The qDSA Construction

Although our primary contribution is a lattice-based digital signature scheme, we briefly introduce the Quotient Digital Signature Algorithm (qDSA) because it is one of the most efficient lightweight signature schemes designed for resource-constrained devices. While the qDSA is elliptic curve-based and therefore not post-quantum secure, it shares methodological similarities with our approach: both follow the Schnorr/Fiat–Shamir paradigm, relying on commitment, challenge, and response steps, and both employ optimization techniques tailored to embedded hardware. For this reason, the qDSA serves as a meaningful baseline: it highlights the efficiency gap between classical lightweight signatures and post-quantum secure lattice-based designs. We include the qDSA in our benchmarks to illustrate these trade-offs clearly.

The qDSA is a Schnorr-type digital signature scheme that operates on the Kummer variety derived from the Jacobian of a hyperelliptic curve. It leverages the arithmetic properties of this variety to enable efficient signature operations without full group element recovery. Below, we formally describe the key algebraic components of the scheme [10].

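Let $\mathcal{C}$ be a hyperelliptic curve of genus $g$ defined over a finite field $\mathbb{F}_p$. The Jacobian variety $\mathcal{J}_{\mathcal{C}}$ of $\mathcal{C}$ is an abelian group whose elements correspond to degree-zero divisors on $\mathcal{C}$ modulo principal divisors. The Kummer variety is constructed as the quotient space $\mathcal{K}_{\mathcal{C}} = \mathcal{J}_{\mathcal{C}}/\langle \pm 1 \rangle$, where we identify each point $P$ with its inverse $-P$. This quotient admits a natural projection map $x: \mathcal{J}_{\mathcal{C}} \to \mathcal{K}_{\mathcal{C}}$, $P \mapsto \pm P$.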

The scheme needs the following key operations:

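Pseudo-multiplication: for any integer $m$, the scalar multiplication $[m]: \mathcal{J}_{\mathcal{C}} \to \mathcal{J}_{\mathcal{C}}$ descends to a well-defined operation $\pm P \mapsto \pm[m]P$ on $\mathcal{K}_{\mathcal{C}}$;

Pseudo-addition: given $\pm P_1$, $\pm P_2$, and $\pm(P_1 - P_2)$, we can compute $\pm(P_1 + P_2)$ using the differential addition law: $\mathrm{xADD}(\pm P_1, \pm P_2, \pm(P_1 - P_2)) = \pm(P_1 + P_2)$.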

The signature scheme has the following components.

For key generation, we need to:

Select the private key $d \in \mathbb{Z}/N\mathbb{Z}$ uniformly at random;

Compute the public key $\pm Q = \pm[d]P$, where $P$ is a fixed base point of order $N$.

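Signing algorithm: for a message $M$, we need to:

Derive a nonce $r$ deterministically from the secret key and $M$, and compute the commitment $\pm R = \pm[r]P$;

Compute the challenge $h = H(\pm R \,\|\, \pm Q \,\|\, M) \bmod N$;

Compute the response $s = (r - h d) \bmod N$ and output the signature $(\pm R, s)$.

To verify a signature $(\pm R, s)$ on a message $M$, we need to recompute the challenge $h = H(\pm R \,\|\, \pm Q \,\|\, M) \bmod N$, compute $\pm[s]P$ and $\pm[h]Q$, and accept if and only if $\pm R \in \{\pm([s]P + [h]Q), \pm([s]P - [h]Q)\}$.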

The scheme’s security relies on the hardness of the discrete logarithm problem (DLP) in the Jacobian of the underlying hyperelliptic curve. It provides:

Constant-time implementation;

Unified keys for signatures and key exchange;

Compact representation (32-byte keys, 64-byte signatures).

2.2. Lattice-Based Cryptography Foundations

Below, we formally describe the key algebraic components of the lattice-based setting [11]. To achieve post-quantum security, we transition to lattice-based cryptography [12], which relies on the following fundamental problems:

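Definition 1. (Learning With Errors Problem): For parameters $(n, q, \chi)$, where $n$ is the security parameter, $q$ is a prime modulus, and $\chi$ is the error distribution over $\mathbb{Z}$, the search-LWE problem [13] asks to find a secret $\mathbf{s} \in \mathbb{Z}_q^n$ given samples $(\mathbf{A}, \mathbf{b} = \mathbf{A}\mathbf{s} + \mathbf{e} \bmod q)$, where $\mathbf{A} \in \mathbb{Z}_q^{m \times n}$ is uniform and $\mathbf{e} \leftarrow \chi^m$ is the error vector. The decisional-LWE problem requires distinguishing $(\mathbf{A}, \mathbf{b})$ from uniform $(\mathbf{A}, \mathbf{u})$, where $\mathbf{u} \leftarrow \mathbb{Z}_q^m$ [14].

Definition 2. (Short Integer Solution Problem): Given a uniformly random matrix $\mathbf{A} \in \mathbb{Z}_q^{n \times m}$ and a bound $\beta$, find a non-zero vector $\mathbf{z} \in \mathbb{Z}^m$ such that:

$$\mathbf{A}\mathbf{z} \equiv \mathbf{0} \pmod q \quad \text{and} \quad \|\mathbf{z}\| \le \beta.$$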

For improved efficiency, we consider the ring versions of these problems over the polynomial ring $R = \mathbb{Z}[x]/(x^n + 1)$ and its quotient $R_q = R/qR = \mathbb{Z}_q[x]/(x^n + 1)$:

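The Ring-LWE problem [15] is defined as follows: given samples $(a_i, b_i = a_i \cdot s + e_i \bmod q) \in R_q \times R_q$, where $a_i \leftarrow R_q$ is uniform, $s \in R_q$ is the secret, and each $e_i \leftarrow \chi$ has small coefficients, recover $s$ (search version) or distinguish the samples from uniform pairs in $R_q \times R_q$ (decision version).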

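The Ring-SIS problem [16] is formulated as follows: given uniformly random $a_1, \dots, a_m \in R_q$, find a non-zero $\mathbf{z} = (z_1, \dots, z_m) \in R^m$ such that:

$\sum_{i=1}^{m} a_i z_i \equiv 0 \pmod q$;

$\|\mathbf{z}\|_\infty \le \beta$.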

The security of lattice-based cryptography and, consequently, our scheme, is based on the perceived computational intractability of the Learning With Errors (LWE) and Short Integer Solution (SIS) problems, even for an adversary with a large-scale quantum computer. On an intuitive level, the following should be noted:

The Learning With Errors (LWE) problem can be thought of as solving a system of “noisy” linear equations. Given $\mathbf{b} = \mathbf{A}\mathbf{s} + \mathbf{e} \bmod q$, the challenge is to recover the secret vector $\mathbf{s}$. The small error term $\mathbf{e}$ makes recovering $\mathbf{s}$ with standard linear algebra techniques infeasible, and the best-known algorithms require exponential time to solve such problems.

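The Short Integer Solution (SIS) problem asks for a short, non-zero vector $\mathbf{z}$ such that $\mathbf{A}\mathbf{z} \equiv \mathbf{0} \pmod q$, which is equivalent to finding a short vector in the high-dimensional lattice $\{\mathbf{z} \in \mathbb{Z}^m : \mathbf{A}\mathbf{z} \equiv \mathbf{0} \pmod q\}$. Finding the shortest vector of a lattice exactly is NP-hard in the worst case (under randomized reductions), and solving SIS on random instances is at least as hard as approximating worst-case lattice problems to within polynomial factors.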
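A toy experiment makes the contrast between the two problems concrete. The Python sketch below uses tiny, illustrative parameters that have nothing to do with the scheme's actual sizes, and `random.Random` stands in for a real randomness source. Without noise, Gauss–Jordan elimination modulo a prime recovers $\mathbf{s}$ immediately; with even a ternary error, the same computation returns $\mathbf{s} + \mathbf{A}^{-1}\mathbf{e}$ instead. For SIS, note that $\mathbf{z} = (q, 0, \dots, 0)$ always satisfies $\mathbf{A}\mathbf{z} \equiv \mathbf{0} \pmod q$, which is why the norm bound is essential; the exhaustive search for a short solution below is feasible only because $m$ is tiny, since its cost grows as $3^m$.

```python
import itertools
import random


def solve_mod(A, b, q):
    """Gauss-Jordan elimination over Z_q (q prime).

    Returns x with A x = b (mod q), or None if the square matrix A is singular.
    """
    n = len(A)
    M = [[v % q for v in row] + [bi % q] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return None
        M[col], M[pivot] = M[pivot], M[col]
        inv = pow(M[col][col], -1, q)  # modular inverse (Python 3.8+)
        M[col] = [(v * inv) % q for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [(vr - f * vc) % q for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]


def lwe_instance(n, q, rng, noisy=True):
    """Square toy instance b = A s + e (mod q) with invertible A; e is ternary if noisy."""
    while True:
        A = [[rng.randrange(q) for _ in range(n)] for _ in range(n)]
        if solve_mod(A, [0] * n, q) is not None:  # keep only invertible A
            break
    s = [rng.randrange(q) for _ in range(n)]
    e = [rng.choice((-1, 0, 1)) if noisy else 0 for _ in range(n)]
    b = [(sum(a * x for a, x in zip(row, s)) + ei) % q for row, ei in zip(A, e)]
    return A, b, s, e


def sis_bruteforce(A, q):
    """Exhaustive search for a non-zero z in {-1, 0, 1}^m with A z = 0 (mod q)."""
    m = len(A[0])
    for z in itertools.product((-1, 0, 1), repeat=m):
        if any(z) and all(sum(a * zi for a, zi in zip(row, z)) % q == 0 for row in A):
            return list(z)
    return None


rng = random.Random(0)
A, b, s, e = lwe_instance(6, 97, rng, noisy=False)
print(solve_mod(A, b, 97) == s)      # noise-free system: linear algebra recovers s
A, b, s, e = lwe_instance(6, 97, rng, noisy=True)
print(solve_mod(A, b, 97) == s, e)   # with noise: equals s only if e is all zeros

A_sis = [[rng.randrange(7) for _ in range(8)] for _ in range(2)]  # n = 2, m = 8, q = 7
print(sis_bruteforce(A_sis, 7))      # a solution exists because 2^8 > 7^2 (pigeonhole)
```

The SIS instance is chosen so that a short solution is guaranteed: among the $2^8$ binary vectors, two must collide under $\mathbf{A}$ modulo 7, and their difference lies in $\{-1, 0, 1\}^8$.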

The ring variants of these problems (Ring-LWE and Ring-SIS) carry this hardness over from general matrices to the algebraic setting of polynomial rings such as $R_q = \mathbb{Z}_q[x]/(x^n + 1)$. This structured setting is the key to efficiency: it allows the matrix $\mathbf{A}$ to be represented by a single ring element (or a small vector of ring elements) and, most importantly, it enables the use of Number Theoretic Transforms (NTTs) for very fast polynomial multiplication [17]. This algebraic symmetry is what makes lattice-based cryptography practical on resource-constrained devices.
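The following Python sketch illustrates how an NTT turns multiplication in $R_q = \mathbb{Z}_q[x]/(x^n + 1)$ into coefficient-wise products. It uses a toy modulus $q = 17$ and $n = 8$ (so that $q \equiv 1 \pmod{2n}$ and $\psi = 3$ is a primitive 16th root of unity), handles the negacyclic wrap-around by the common technique of twisting coefficients by powers of $\psi$, and uses a simple recursive $O(n \log n)$ transform. These parameters and the implementation style are illustrative assumptions; optimized embedded code typically uses in-place iterative butterflies with the twist merged in.

```python
import random


def ntt(a, omega, q):
    """Recursive radix-2 Cooley-Tukey transform: A_k = sum_j a_j * omega^(j*k) mod q."""
    n = len(a)
    if n == 1:
        return list(a)
    even = ntt(a[0::2], omega * omega % q, q)
    odd = ntt(a[1::2], omega * omega % q, q)
    out = [0] * n
    w = 1
    for k in range(n // 2):
        t = w * odd[k] % q
        out[k] = (even[k] + t) % q
        out[k + n // 2] = (even[k] - t) % q
        w = w * omega % q
    return out


def negacyclic_mul_ntt(a, b, q, psi):
    """Multiply in Z_q[x]/(x^n + 1); psi must be a primitive 2n-th root of unity mod q."""
    n = len(a)
    omega = psi * psi % q                      # primitive n-th root of unity
    twist = [pow(psi, i, q) for i in range(n)]
    fa = ntt([x * t % q for x, t in zip(a, twist)], omega, q)
    fb = ntt([x * t % q for x, t in zip(b, twist)], omega, q)
    prod = ntt([x * y % q for x, y in zip(fa, fb)], pow(omega, -1, q), q)  # unscaled inverse
    n_inv, psi_inv = pow(n, -1, q), pow(psi, -1, q)
    return [x * n_inv * pow(psi_inv, i, q) % q for i, x in enumerate(prod)]  # scale, untwist


def negacyclic_mul_schoolbook(a, b, q):
    """Reference O(n^2) multiplication using x^n = -1."""
    n = len(a)
    c = [0] * n
    for i in range(n):
        for j in range(n):
            if i + j < n:
                c[i + j] += a[i] * b[j]
            else:
                c[i + j - n] -= a[i] * b[j]
    return [x % q for x in c]


q, n, psi = 17, 8, 3
rng = random.Random(3)
a = [rng.randrange(q) for _ in range(n)]
b = [rng.randrange(q) for _ in range(n)]
print(negacyclic_mul_ntt(a, b, q, psi) == negacyclic_mul_schoolbook(a, b, q))
```

The twist works because substituting $\psi x$ for $x$ maps $x^n + 1$ to $1 - x^n$ up to the factor $\psi^n = -1$, so a cyclic convolution of the twisted inputs equals the twisted negacyclic product.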

2.3. Lattice-Based Security Requirements

To understand how secure a digital signature scheme is, it is necessary to define how well it resists forgery by an attacker. One of the most trusted and widely used definitions in cryptography is existential unforgeability under chosen-message attacks (EUF-CMA) [18]. It means that even if an attacker can obtain signatures on messages of its choice, it still should not be able to create a valid signature on a new message that was not previously signed. This model reflects realistic situations in which an attacker might interact with a signing device or server before trying to forge a signature [19,20].

For signature schemes that aim to remain secure once large-scale quantum computers exist, achieving EUF-CMA security is especially important. It ensures that the system provides both message authentication and non-repudiation, even against quantum-capable adversaries [21,22]. In our case, the scheme relies on hard lattice problems, specifically Ring-SIS and Ring-LWE, which are considered strong candidates for resisting quantum attacks.

The definition below describes EUF-CMA security in the classical setting. It can be extended to quantum attackers by using the quantum random oracle model (QROM), which is a standard approach in post-quantum cryptography [23].

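Definition 3. (EUF-CMA Security): A signature scheme (KeyGen, Sign, Verify) is existentially unforgeable if, for all PPT adversaries $\mathcal{A}$:

$$\Pr\left[\mathsf{Verify}(pk, m^*, \sigma^*) = 1 \wedge m^* \notin Q \;:\; (pk, sk) \leftarrow \mathsf{KeyGen},\ (m^*, \sigma^*) \leftarrow \mathcal{A}^{\mathsf{Sign}(sk, \cdot)}(pk)\right] \le \mathsf{negl},$$

where $Q$ is the set of signing queries. For correctness, the following equation must hold for every message $m$ and every key pair $(pk, sk) \leftarrow \mathsf{KeyGen}$:

$$\Pr\left[\mathsf{Verify}(pk, m, \mathsf{Sign}(sk, m)) = 1\right] = 1.$$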

For unforgeability, the hardness of the Ring-SIS problem (for commitment binding) and of the Ring-LWE problem (for hiding the secret key) is required. The non-repudiation property follows from EUF-CMA security.

The security reduction proceeds in two steps. For the identification (ID) scheme: if the Ring-SIS problem is hard, the ID scheme is secure against passive attacks. For the signature scheme: applying the Fiat–Shamir transformation to a secure ID scheme with hidden commitments yields EUF-CMA secure signatures [24,25].
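To make the extraction step behind this reduction concrete, the following derivation is a sketch under two assumptions that the text does not fix explicitly: the public key contains $t_1, t_2$ (as used in Algorithm 3), and the verification relation is taken to hold exactly, ignoring the compression performed by encode. Two accepting transcripts $(w, c, \mathbf{z})$ and $(w, c', \mathbf{z}')$ that share the commitment $w$ but have different challenges $(c_1, c_2) \neq (c_1', c_2')$ then give:

$$
\begin{aligned}
a_1 z_1 + a_2 z_2 - c_1 t_1 - c_2 t_2 \;\equiv\; w \;&\equiv\; a_1 z_1' + a_2 z_2' - c_1' t_1 - c_2' t_2 \pmod q \\
\Longrightarrow\quad a_1 (z_1 - z_1') + a_2 (z_2 - z_2') + (c_1' - c_1)\, t_1 + (c_2' - c_2)\, t_2 \;&\equiv\; 0 \pmod q.
\end{aligned}
$$

The vector $(z_1 - z_1',\, z_2 - z_2',\, c_1' - c_1,\, c_2' - c_2)$ is non-zero because the challenges differ, and it is short because both responses satisfy $\|z_i\|_\infty \le \beta_z$ and the challenges are small; it is therefore a Ring-SIS solution for the instance $(a_1, a_2, t_1, t_2)$. Since $t_i = a_i s_i + e_i$ is pseudorandom under Ring-LWE, this instance is indistinguishable from a uniformly random Ring-SIS instance, which is where the Ring-LWE assumption enters the proof.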

Lemma 1. (Forking Lemma Adaptation): Let $\mathcal{F}$ be a PPT forger with success probability $\epsilon$. Then there exists an extractor $\mathcal{E}$ that solves the Ring-SIS problem with probability at least $\epsilon^2/Q_H - \mathsf{negl}$, where $Q_H$ is the number of hash queries.

This establishes concrete security against:

3. The Proposed Construction

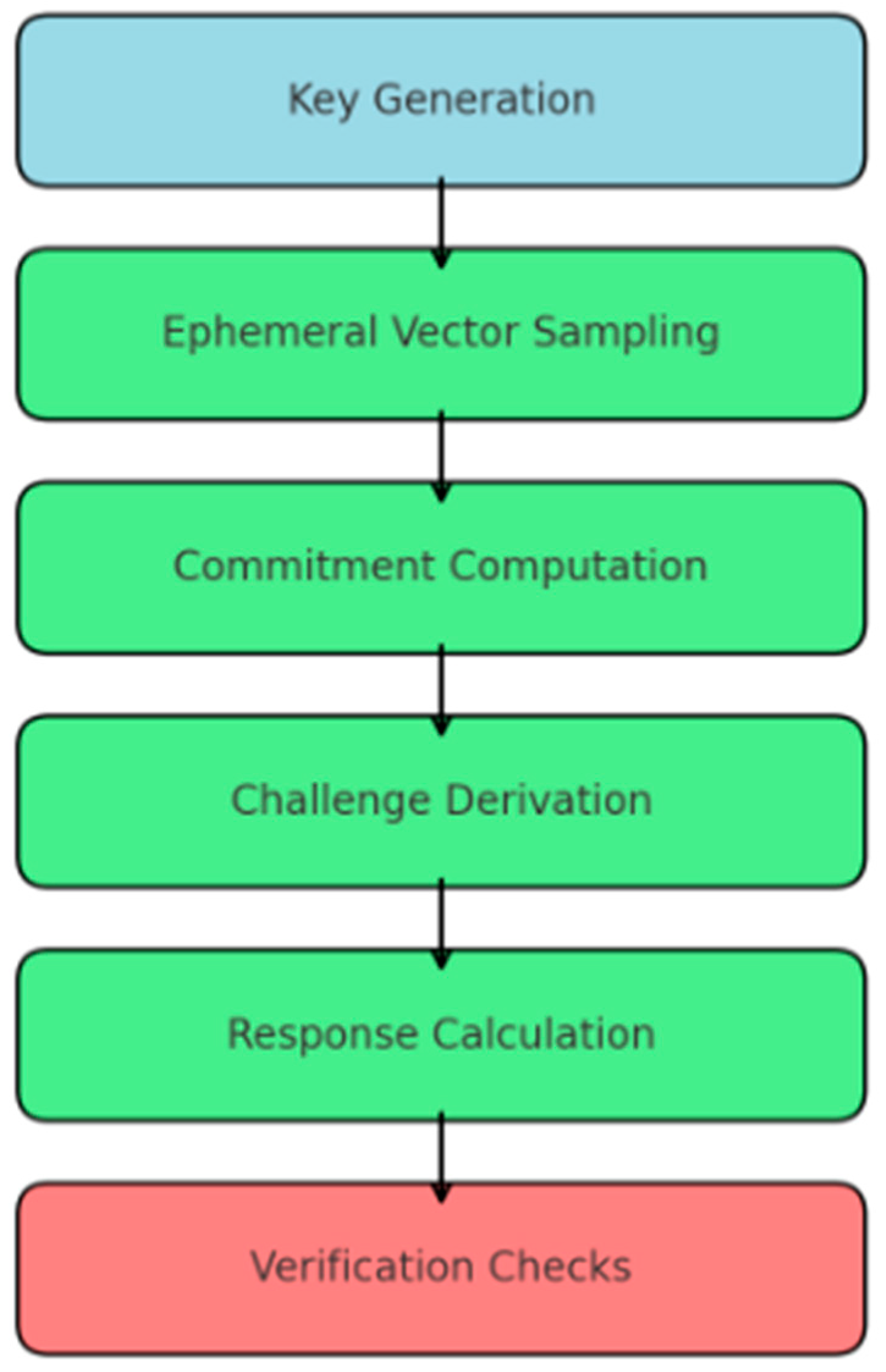

This section describes our complete lattice-based post-quantum digital signature scheme, including the technical details of each algorithm and the mathematical operations on which they rely. As shown in Figure 2, the construction follows a three-phase process: key generation, signing, and verification. Each step uses optimized NTT-based polynomial operations to ensure efficiency.

While Figure 2 provides an architectural view of the proposed construction, Figure 3 illustrates the workflow of the three core algorithms: KeyGen, Sign, and Verify. It emphasizes the flow of inputs and outputs, including the use of the public key (pk) generated during KeyGen, the production of the signature (σ) during signing, and the role of the message (m) in both signing and verification. This representation complements the step-by-step algorithmic descriptions provided in the subsequent subsections.

3.1. Key Generation Algorithm

The key generation process establishes the fundamental cryptographic parameters and generates the public–private key pair. The system parameters are chosen as follows:

Let $n$ be the ring dimension (a power of 2 for NTT efficiency);

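Choose a prime modulus $q$ satisfying $q \equiv 1 \pmod{2n}$, so that $\mathbb{Z}_q$ contains the primitive $2n$-th roots of unity required by the negacyclic NTT;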

Set the error distribution parameters and ;

Fix the public matrix dimension $k$ for an optimal security–performance trade-off;

Define an encoding function, encode, for polynomial serialization.

Key Generation Steps:

To generate the public matrix, we sample a uniformly random public matrix $\mathbf{A} \leftarrow \mathcal{U}(R_q^{k \times n})$.

This matrix is either generated during key generation or derived from a public seed. For secret key sampling, we generate the secret key components from the discrete Gaussian distribution: $\mathbf{s} \leftarrow \chi^n$.

In practice, each coefficient of $\mathbf{s}$ is sampled independently from the centered binomial distribution, which approximates the discrete Gaussian and is simpler to implement.

To generate the error term, we sample $\mathbf{e} \leftarrow \chi^n$ with tighter bounds.

The public key component is computed as $\mathbf{t} = \mathbf{A}\mathbf{s} + \mathbf{e} \bmod q$.

The addition is performed coefficient-wise modulo $q$.

The final key pair is $(pk, sk) = ((\mathbf{A}, \mathbf{t}), \mathbf{s})$.

Implementation Notes:

The discrete Gaussian sampling can be implemented using the Knuth–Yao algorithm;

The NTT form is used for efficient polynomial multiplication;

The use of key compression techniques can reduce the storage requirements.

A summary of the steps is outlined below:

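Choose system parameters $(n, q, \chi, k)$;

Sample secret vector $\mathbf{s} \leftarrow \chi^n$;

Sample error vector $\mathbf{e} \leftarrow \chi^n$;

Sample public matrix $\mathbf{A} \leftarrow \mathcal{U}(R_q^{k \times n})$;

Compute $\mathbf{t} = \mathbf{A}\mathbf{s} + \mathbf{e} \bmod q$;

Output key pair $(pk, sk) = ((\mathbf{A}, \mathbf{t}), \mathbf{s})$.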

The pseudocode of the key generation process is presented in Algorithm 1.

Algorithm 1: Key Generation

Input: security parameter n, modulus q, error distribution χ, dimension k

Output: (pk, sk)

1: Sample s ← χ^n

2: Sample e ← χ^n

3: A ← Uniform(R_q)^(k × n) ▷ or derive from a seed

4: t ← A·s + e mod q

5: sk ← s

6: pk ← (A, t)

7: return (pk, sk)
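As an illustration of Algorithm 1, the Python sketch below expands a public polynomial from a seed and computes $t_i = a_i s_i + e_i$. Several choices here are assumptions rather than the paper's specification: the key is instantiated in the two-component form $(a_1, a_2, t_1, t_2)$ used by Algorithms 2 and 3, the public polynomials are derived with SHAKE128 plus rejection sampling (one common way to "derive from a seed"), secrets and errors use a centered binomial distribution, and `Q`, `N`, and `ETA` are toy values. `random.Random` is not a cryptographic generator; a real implementation would draw secrets from a DRBG.

```python
import hashlib
import random

Q = 3329   # toy prime modulus (hypothetical, not the scheme's parameter)
N = 16     # toy ring dimension, a power of two (hypothetical)
ETA = 2    # centered binomial parameter (hypothetical)


def expand_a(seed, n=N, q=Q):
    """Derive a uniform polynomial in R_q from a public seed by SHAKE128 + rejection sampling."""
    length = 4 * n
    while True:
        stream = hashlib.shake_128(seed).digest(length)  # XOF: longer output extends the prefix
        coeffs = []
        for i in range(0, length - 1, 2):
            v = int.from_bytes(stream[i:i + 2], "little") & 0x0FFF  # 12-bit candidate
            if v < q:                                              # reject values >= q
                coeffs.append(v)
                if len(coeffs) == n:
                    return coeffs
        length *= 2  # not enough accepted candidates: squeeze more output


def cbd(rng, n=N, eta=ETA):
    """Centered binomial samples: (sum of eta bits) - (sum of eta bits). Toy RNG only."""
    return [sum(rng.getrandbits(1) for _ in range(eta)) - sum(rng.getrandbits(1) for _ in range(eta))
            for _ in range(n)]


def poly_mul(a, b, q=Q):
    """Schoolbook multiplication in Z_q[x]/(x^n + 1), using x^n = -1."""
    n = len(a)
    c = [0] * n
    for i in range(n):
        for j in range(n):
            if i + j < n:
                c[i + j] += a[i] * b[j]
            else:
                c[i + j - n] -= a[i] * b[j]
    return [x % q for x in c]


def keygen(seed, rng):
    """Two-component key generation: t_i = a_i * s_i + e_i (mod q) for i = 1, 2."""
    a = [expand_a(seed + bytes([i])) for i in (1, 2)]   # public a_1, a_2 (domain-separated seed)
    s = [cbd(rng) for _ in range(2)]                     # secret s_1, s_2
    e = [cbd(rng) for _ in range(2)]                     # errors e_1, e_2 (discarded afterwards)
    t = [[(x + y) % Q for x, y in zip(poly_mul(a[i], s[i]), e[i])] for i in range(2)]
    pk = (seed, t)   # a_1, a_2 are re-derived from the seed instead of being stored
    sk = s
    return pk, sk


pk, sk = keygen(b"public-seed", random.Random(2024))
```

Storing only the seed instead of $a_1, a_2$ is what makes the "derive from a seed" option attractive on constrained devices: the verifier re-expands the polynomials on demand.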

3.2. Signing Algorithm

The signing process transforms a message into a verifiable signature using a secret key.

Additional parameters:

Set the masking bound $\beta_y$;

Define the challenge space ChallengeSpace, from which the challenge components $(c_1, c_2)$ are drawn;

Let $H$ be a hash function modeled as a random oracle.

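To sign a message $m$, we first sample the ephemeral masking vector $\mathbf{y} = (y_1, y_2) \leftarrow D_{R_q, \beta_y}^2$, ensuring that $\max(\|y_1\|_\infty, \|y_2\|_\infty) \le \beta_y$ through the use of rejection sampling.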

Afterwards, we calculate the commitment polynomial $w = a_1 y_1 + a_2 y_2 \bmod q$.

This step constitutes most of the computational cost, requiring two NTT-based polynomial multiplications. We compute the challenge as $c = H(m \,\|\, \mathrm{encode}(w))$, parsed into $(c_1, c_2) \in$ ChallengeSpace, where $\mathrm{encode}(w)$ is the compressed polynomial representation.

For the response calculation, we form the raw response $z_i = y_i + c_i s_i \bmod q$ for $i \in \{1, 2\}$.

This operation requires careful carry handling to prevent coefficient overflow.

Finally, we accept the signature only if $\max(\|z_1\|_\infty, \|z_2\|_\infty) \le \beta_z$.

Otherwise, the process restarts from step 1 (expected 1–3 trials).
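The expected number of trials follows from a short calculation. As a hedged sketch, we assume a Dilithium-style model that is swapped in here rather than taken from Algorithm 2: each coefficient of $\mathbf{y}$ is uniform in $[-\gamma, \gamma]$, the shift $c_i s_i$ has infinity norm at most $\beta$, and the acceptance test is $\|\mathbf{z}\|_\infty \le \gamma - \beta$. Each coefficient then passes independently with probability $(2(\gamma - \beta) + 1)/(2\gamma + 1)$, and accepted coefficients are uniform on $[-(\gamma - \beta), \gamma - \beta]$ regardless of the secret, which is what rejection sampling is for. The numeric values below are placeholders, not the paper's parameters.

```python
from fractions import Fraction


def acceptance_probability(gamma, beta, num_coeffs):
    """Probability that every |z_i| <= gamma - beta, where z_i = y_i + v_i.

    Model (assumption): y_i uniform on [-gamma, gamma], independent; |v_i| <= beta.
    """
    if not 0 <= beta < gamma:
        raise ValueError("need 0 <= beta < gamma")
    per_coeff = Fraction(2 * (gamma - beta) + 1, 2 * gamma + 1)
    return per_coeff ** num_coeffs


def expected_trials(gamma, beta, num_coeffs):
    """Mean of the geometric number of signing attempts: 1 / Pr[accept]."""
    return 1 / acceptance_probability(gamma, beta, num_coeffs)


# Placeholder values for illustration only: two polynomials of 256 coefficients each.
p = acceptance_probability(gamma=2**17, beta=78, num_coeffs=2 * 256)
print(f"acceptance ~ {float(p):.3f}, expected trials ~ {float(expected_trials(2**17, 78, 512)):.2f}")
```

Because the per-coefficient rate is roughly $1 - 2\beta/(2\gamma + 1)$, the overall acceptance behaves like $e^{-N\beta/\gamma}$ for $N$ coefficients, so widening $\gamma$ relative to $\beta$ trades larger signatures for fewer retries.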

The final signature is $\sigma = (w, z_1, z_2)$.

For the optimization, we can take the following steps:

Precompute the NTT representations of $a_1$ and $a_2$ for a faster commitment;

Batch the rejection sampling trials;

Use sparse challenges to reduce the computation time.
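The sparse-challenge optimization can be illustrated as follows. This Python sketch assumes, hypothetically, that each challenge polynomial has exactly $\tau$ coefficients in $\{-1, +1\}$ and all others zero, and derives it from SHAKE256 output with a "sample in a ball" style shuffle (a technique used by some lattice signatures; the source does not specify its exact challenge sampler). Multiplying such a challenge by a polynomial in $\mathbb{Z}_q[x]/(x^n + 1)$ then costs about $\tau n$ additions and subtractions instead of a full multiplication.

```python
import hashlib


def xof_bytes(seed):
    """Unbounded SHAKE256 byte stream (re-squeezes a longer prefix when needed)."""
    length, pos = 64, 0
    while True:
        out = hashlib.shake_256(seed).digest(length)
        while pos < length:
            yield out[pos]
            pos += 1
        length *= 2


def sample_challenge(seed, n=256, tau=39):
    """Sparse challenge with exactly tau coefficients in {-1, +1} and the rest 0."""
    if not (0 < tau <= 64 and tau <= n <= 256):
        raise ValueError("toy sampler needs tau <= 64 sign bits and n <= 256 (one byte per index)")
    stream = xof_bytes(seed)
    signs = int.from_bytes(bytes(next(stream) for _ in range(8)), "little")
    c = [0] * n
    for i in range(n - tau, n):
        j = next(stream)
        while j > i:              # rejection: j must be uniform in [0, i]
            j = next(stream)
        c[i] = c[j]               # move the existing entry, then place a fresh +-1 at j
        c[j] = 1 - 2 * (signs & 1)
        signs >>= 1
    return c


def sparse_mul(c, b, q):
    """c * b in Z_q[x]/(x^n + 1), looping only over the nonzero (+-1) entries of c."""
    n = len(b)
    out = [0] * n
    for i, ci in enumerate(c):
        if ci == 0:
            continue
        for j, bj in enumerate(b):
            if i + j < n:
                out[i + j] += ci * bj
            else:
                out[i + j - n] -= ci * bj   # wrap-around with x^n = -1
    return [x % q for x in out]


c = sample_challenge(b"m || encode(w)")
print(sum(1 for x in c if x != 0))  # prints 39: each loop iteration adds exactly one nonzero
```

Each iteration either fills an empty slot or moves an existing $\pm 1$ to position $i$ before writing a new one at $j$, so the weight is exactly $\tau$ by construction.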

These optimization techniques are especially important for IoT devices, where computational and memory resources are limited. The complete signing procedure is detailed in Algorithm 2. It involves sampling an ephemeral masking vector, computing the commitment polynomial, deriving the challenge, and calculating the final response using rejection sampling. This design ensures both correctness and a high level of security, relying on the hardness of the Ring-SIS problem.

A summary of the steps is outlined below:

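Sample ephemeral masking vector $\mathbf{y} = (y_1, y_2)$;

Compute commitment $w = a_1 y_1 + a_2 y_2 \bmod q$;

Derive challenge $c = H(m \,\|\, \mathrm{encode}(w))$;

Compute response $z_i = y_i + c_i s_i \bmod q$;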

Accept if responses are within bounds; otherwise, repeat.

Algorithm 2: Signing

Input:

Message m

Secret key sk = (s1, s2)

Public matrix (a1, a2)

Output:

Signature σ = (w, z1, z2)

1. repeat

2. Sample ephemeral vector y = (y1, y2) ← D_{Rq, βy}^2

3. if max(‖y1‖∞, ‖y2‖∞) > βy then continue

4. Compute commitment: w ← a1· y1 + a2· y2 mod q

5. Compute challenge: c ← H(m ∥ encode(w))

6. Parse c into (c1, c2) ∈ ChallengeSpace

7. Compute response:

8. z1 ← y1 + c1· s1 mod q

9. z2 ← y2 + c2· s2 mod q

10. until max(‖z1‖∞, ‖z2‖∞) ≤ βz

11. return σ = (w, z1, z2)

3.3. Verification Algorithm

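The verification process authenticates signatures without access to the secret key. For verification, we first recompute the challenge identically: $c = H(m \,\|\, \mathrm{encode}(w))$, parsed into $(c_1, c_2)$.

Afterwards, we check the response bound $\max(\|z_1\|_\infty, \|z_2\|_\infty) \le \beta_z$.

This prevents signature forgeries that rely on large coefficients. For the linear relation check, we verify the core algebraic relation by reconstructing $w' = a_1 z_1 + a_2 z_2 - c_1 t_1 - c_2 t_2 \bmod q$ and comparing $\mathrm{encode}(w')$ with $\mathrm{encode}(w)$.

It is implemented using NTTs for efficiency: $w' = \mathrm{NTT}^{-1}\big(\hat{a}_1 \circ \hat{z}_1 + \hat{a}_2 \circ \hat{z}_2 - \hat{c}_1 \circ \hat{t}_1 - \hat{c}_2 \circ \hat{t}_2\big)$, where $\hat{x} = \mathrm{NTT}(x)$ and $\circ$ denotes coefficient-wise multiplication.

Finally, we return 1 (accept) only if all the checks pass; otherwise, we return 0 (reject).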

The complexity analysis is as follows:

Dominated by three NTTs and three coefficient-wise multiplications;

Requires $O(n \log n)$ operations;

Constant-time implementation is crucial.

The complete verification process is described in Algorithm 3. It involves reconstructing the challenge, checking that the response values are within acceptable bounds, and verifying the linear relationship between the commitment, the public key, and the response vector.

A summary of the steps is outlined below:

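Recompute challenge $c = H(m \,\|\, \mathrm{encode}(w))$;

Check response bounds $\max(\|z_1\|_\infty, \|z_2\|_\infty) \le \beta_z$;

Reconstruct commitment $w' = a_1 z_1 + a_2 z_2 - c_1 t_1 - c_2 t_2 \bmod q$;

Accept if $\mathrm{encode}(w') = \mathrm{encode}(w)$, otherwise reject.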

Algorithm 3: Verification

Input:

Message m

Signature σ = (w, z1, z2)

Public key pk = (a1, a2, t1, t2)

Output:

accept or reject

1. Recompute challenge: c ← H(m ∥ encode(w))

2. Parse c into (c1, c2) ∈ ChallengeSpace

3. if max(‖z1‖∞, ‖z2‖∞) > βz then return reject

4. Compute reconstructed commitment:

w′ ← a1 · z1 + a2 · z2 − c1 · t1 − c2 · t2 mod q

5. if encode(w′) ≠ encode(w) then return reject

6. return accept
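The following Python sketch instantiates Algorithms 2 and 3 with toy parameters to show why the linear relation check works. It assumes, hypothetically, that pk = (a1, a2, t1, t2) with $t_i = a_i s_i + e_i$, that masking coefficients are uniform in $[-\gamma, \gamma]$, and that challenges come from a non-cryptographic hash-to-challenge stand-in; all of these choices and all parameter values are illustrative, not the paper's. Expanding the definitions gives $w' = w - c_1 e_1 - c_2 e_2$, so $w'$ differs from $w$ only by a small error, and the comparison in step 5 can succeed only if encode tolerates this difference. How encode achieves that is not specified here, so the sketch stops at computing $w'$.

```python
import hashlib
import random

# Toy parameters (hypothetical; far too small for security).
Q, N = 3329, 16
ETA, TAU = 2, 4             # secret/error bound and challenge weight
GAMMA = 1 << 10             # masking coefficients are uniform in [-GAMMA, GAMMA]
BETA_Z = GAMMA - TAU * ETA  # acceptance bound on ||z_i||_inf


def mul(a, b):
    """Schoolbook multiplication in Z_q[x]/(x^N + 1) (an NTT would be used in practice)."""
    c = [0] * N
    for i in range(N):
        for j in range(N):
            sign = 1 if i + j < N else -1   # x^N = -1
            c[(i + j) % N] += sign * a[i] * b[j]
    return [x % Q for x in c]


def add(*polys):
    return [sum(cs) % Q for cs in zip(*polys)]


def neg(p):
    return [(-x) % Q for x in p]


def inf_norm(polys):
    """Largest |coefficient| using representatives in (-Q/2, Q/2]."""
    return max(abs(x - Q if x > Q // 2 else x) for p in polys for x in p)


def small(rng, bound):
    return [rng.randint(-bound, bound) % Q for _ in range(N)]


def challenge(m, w):
    """Hypothetical H(m || encode(w)) -> (c1, c2), each with TAU entries in {-1, +1}."""
    encoded = b"".join(x.to_bytes(2, "little") for x in w)
    rng = random.Random(hashlib.shake_256(m + encoded).digest(32))  # toy, not constant-time
    cs = []
    for _ in range(2):
        c = [0] * N
        for pos in rng.sample(range(N), TAU):
            c[pos] = rng.choice((1, Q - 1))      # +1 or -1 mod Q
        cs.append(c)
    return cs


def keygen(rng):
    a = [[rng.randrange(Q) for _ in range(N)] for _ in range(2)]
    s = [small(rng, ETA) for _ in range(2)]
    e = [small(rng, ETA) for _ in range(2)]
    t = [add(mul(a[i], s[i]), e[i]) for i in range(2)]
    return (a, t), s                             # pk = (a1, a2, t1, t2), sk = (s1, s2)


def sign(m, pk, sk, rng):
    a, _ = pk
    while True:                                  # rejection sampling loop (Algorithm 2)
        y = [small(rng, GAMMA) for _ in range(2)]
        w = add(mul(a[0], y[0]), mul(a[1], y[1]))
        c = challenge(m, w)
        z = [add(y[i], mul(c[i], sk[i])) for i in range(2)]
        if inf_norm(z) <= BETA_Z:
            return w, z


def reconstruct(m, sig, pk):
    """Steps 1-4 of Algorithm 3: returns w', or None if the bound check fails."""
    a, t = pk
    w, z = sig
    if inf_norm(z) > BETA_Z:
        return None
    c = challenge(m, w)
    return add(mul(a[0], z[0]), mul(a[1], z[1]), neg(mul(c[0], t[0])), neg(mul(c[1], t[1])))


rng = random.Random(7)
pk, sk = keygen(rng)
sig = sign(b"sensor reading", pk, sk, rng)
w_prime = reconstruct(b"sensor reading", sig, pk)
print(inf_norm([add(w_prime, neg(sig[0]))]))   # size of w' - w = -(c1*e1 + c2*e2)
```

Each product $c_i e_i$ has coefficients bounded by $\tau\eta$, so the printed value never exceeds $2\tau\eta$; if the error terms are set to zero ($t_i = a_i s_i$), $w'$ equals $w$ exactly.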

3.4. Parameter Selection and Analysis

In order to construct a scheme that is secure in post-quantum settings, we select parameters to achieve 128-bit post-quantum security. The parameters are chosen based on the runtime of the best-known attacks, considering both classical and quantum adversaries.

For the secret and error distributions, we recommend the use of the centered binomial distribution.

Table 1 summarizes our parameter choices, including their security role, performance impact, and the resulting trade-offs for resource-constrained IoT hardware.

4. Performance Characteristics

We measured three key factors that determine whether this solution works on real IoT devices: how fast it runs, how much memory it uses, and how big the signatures are. All the tests were performed on ARM Cortex-M4 processors, using precise timing simulations that match actual hardware performance. Looking first at the speed, the lattice-based approach does require more processing power than traditional methods, which was expected. However, our results show that it is still efficient enough to work on small, resource-limited devices. The scheme maintains practical performance, while providing stronger quantum-resistant security.

Key generation: 102,400 ± 1200 cycles;

Breakdown: 31,200 cycles for discrete Gaussian sampling and 68,000 cycles for NTT-based public key computation, with the remaining 3,200 cycles spent on seed expansion;

Signing: 496,000 ± 18,000 cycles (including rejection sampling).

Each attempt requires the following:

152,000 cycles for commitment (NTT + pointwise multiplication);

108,000 cycles for response generation.

An average of 1.4 retries is needed to pass the rejection sampling phase.

Verification requires 298,700 ± 8500 cycles, which is dominated by:

Three parallel NTT operations (182,000 cycles);

Algebraic relation checks (116,700 cycles).
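The reported cycle counts can be cross-checked and converted to wall-clock time with a few lines of arithmetic. The clock frequency below is an assumption: 168 MHz is a common Cortex-M4 configuration (for example, on STM32F4 parts), but the measurements above do not state the clock used. The cycle figures are copied from the measurements above.

```python
CLOCK_HZ = 168_000_000  # assumed core clock (hypothetical); the text does not state it

measured_cycles = {"keygen": 102_400, "sign": 496_000, "verify": 298_700}

# Component breakdowns reported above for key generation and verification.
keygen_parts = {"gaussian_sampling": 31_200, "ntt_public_key": 68_000}
verify_parts = {"three_ntts": 182_000, "relation_checks": 116_700}


def to_ms(cycles, clock_hz=CLOCK_HZ):
    """Convert a cycle count to milliseconds at the given clock frequency."""
    return 1000 * cycles / clock_hz


seed_expansion_overhead = measured_cycles["keygen"] - sum(keygen_parts.values())
verify_residual = measured_cycles["verify"] - sum(verify_parts.values())

for op, cycles in measured_cycles.items():
    print(f"{op:>6}: {cycles:>7,} cycles ~ {to_ms(cycles):.2f} ms")
print("keygen cycles left for seed expansion:", seed_expansion_overhead)
print("verification cycles not covered by the breakdown:", verify_residual)
```

Under this assumed clock, signing takes roughly 3 ms; the key generation breakdown leaves 3,200 cycles for seed expansion, and the verification breakdown accounts for the full total.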

The memory usage has been carefully tuned for IoT devices with limited resources. Table 1 shows the maximum RAM needed for each key operation, demonstrating how the design fits within the tight memory constraints common in embedded systems.

We compare our scheme to the qDSA not as a post-quantum candidate but as an established reference point for lightweight signatures on IoT devices. The structural similarity of the algorithms makes this comparison particularly relevant, as it isolates the impact of the underlying mathematical assumptions (elliptic curves vs. lattices) while preserving comparable implementation strategies.

Table 2 presents the performance comparison.

Table 3 below compares the offered scheme with relevant schemes at the 128-bit security level.

While the proposed scheme achieves smaller signatures and lower memory usage than other post-quantum options, certain trade-offs remain. Its keys and signatures are larger than those of classical ECC-based schemes, which increases bandwidth requirements in resource-constrained networks. Moreover, rejection sampling introduces additional latency during signing, resulting in variable signing times. Although our optimizations reduce this overhead, the scheme still requires more cycles than classical methods, and batch verification is not yet supported. These trade-offs represent the cost of achieving strong post-quantum security, and they highlight opportunities for further optimization.

Our benchmarks focus on ARM Cortex-M4 hardware, a representative IoT-class microcontroller. We did not include energy consumption measurements, protocol-level latencies (e.g., MQTT, CoAP), or cross-hardware comparisons in this study. These experiments are important for deployment validation, but they are outside the scope of this work and are left for future research.

The experiments show that the proposed approach delivers quantum security without sacrificing practicality on IoT devices. It is true that creating signatures takes about twice as long as with traditional methods, but compared to other quantum-resistant options, it is actually more efficient, needing just one and a half times the processing power needed in terms of the NIST standard, while providing equivalent protection. In terms of memory usage, the proposed design fits comfortably within what small devices can handle. The key generation process peaks at about 9 KB of RAM, which is significantly better than other quantum-safe options and only slightly more than what classical methods require. This balance means that devices benefit from strong security without exhausting their limited resources.

Signature size is always a concern for IoT networks, where every byte counts. While these quantum-safe signatures are naturally larger than their traditional counterparts (growing from 64 bytes to about 2.8 KB), they are compact compared to the alternatives. They are nearly a quarter smaller than those produced by the NIST standard and, importantly, they fit within the standard 4 KB messages used by common IoT protocols, so networks can handle them without special modifications.

Several implementation-level improvements make this level of performance possible. The polynomial arithmetic has been tuned to run nearly a third faster than standard implementations. Memory usage has been reduced through sampling techniques that need barely half the space required by other approaches. Finally, the signatures are compressed using encoding techniques that remove redundant data.

There are still some limitations to note. Unlike traditional methods, the system cannot verify multiple signatures simultaneously, which might slow down some large-scale operations. And while it is more energy efficient than other quantum-safe options, it uses about twice the power of classical signature methods. That said, for most battery-powered IoT devices that do not need constant signing, the energy use of the proposed approach remains manageable.

5. Security Analysis

5.1. Security Model

We prove the security of the proposed approach within the post-quantum EUF-CMA framework, under the following assumptions:

Hash function SHAKE256 (quantum random oracle model);

Adversary capabilities: at most $Q_H$ hash queries;

Runtime bound, namely quantum operations.

We distinguish between two adversary models and the corresponding oracles. (i) Classical (ROM): the adversary is PPT and interacts with a classical random oracle $H$ and a signing oracle, issuing at most $Q_H$ hash queries and $Q_S$ signing queries. (ii) Quantum (QROM): the adversary is QPT and may query $H$ in superposition (quantum random oracle), with at most $Q_H$ quantum hash queries and $Q_S$ classical signing queries. Our target notion in both settings is EUF-CMA unforgeability: no (Q)PT adversary that can adaptively obtain signatures on messages of its choice can output a fresh valid forgery, except with negligible probability. We use $\lambda$ for the hash output length and $n$ for the lattice security parameter. Hashing uses SHAKE256; in the QROM, the effective security against generic search is reduced by Grover-style square-root speedups, which we account for in the concrete bounds below.

5.2. Main Security Theorem

Theorem 1. (EUF-CMA security in the classical ROM): Assume Ring-SIS and Ring-LWE are hard for PPT adversaries, and model $H$ as a classical random oracle. Then, for any PPT adversary $\mathcal{A}$ making at most $Q_H$ hash queries and $Q_S$ signing queries, the EUF-CMA advantage satisfies

Proof. We view the signature as a Fiat–Shamir transformation of an identification scheme with hidden commitments. A classical forking-style extractor rewinds a successful forger to produce two accepting transcripts with the same commitment and different challenges, yielding a non-trivial short relation that solves the Ring-SIS problem. Hybrid steps bound the distinguishing gap against the Ring-LWE problem for key hiding and commitment indistinguishability; standard ROM programming yields the term. □

Theorem 2. (EUF-CMA security in the QROM): Assume Ring-SIS and Ring-LWE are hard for QPT adversaries, and model $H$ as a quantum random oracle. Then, for any QPT adversary $\mathcal{A}$ with at most $Q_H$ quantum hash queries and $Q_S$ signing queries,

Proof. We use a QROM-compatible extraction (quantum rewinding/compressed oracle techniques) for Fiat–Shamir-type signatures. From a successful forgery, we obtain, with non-negligible probability, two challenge values tied to the same commitment, enabling Ring-SIS extraction. The term captures the quadratic loss typical of QROM security proofs for Fiat–Shamir signatures and of Grover-style search, which our parameterization absorbs through the choice of parameters. □

5.3. Quantum Resistance Guarantees

The following corollaries follow directly from Theorem 2 for our instantiation with SHAKE256 and our parameter choices:

Corollary 1. (Explicit Quantum Bound): For any quantum adversary $\mathcal{A}$:

Corollary 2. (Grover Resistance): With SHAKE256:

5.4. Concrete Security Parameters

Table 4 presents a cycle-accurate comparison of digital signature schemes implemented on ARM Cortex-M4 hardware; all measurements include the I/O overhead. The table also outlines classical and quantum security bounds for LWE-based key recovery, signature forgery, and collision resistance.

“Classical Bound” entries reflect ROM adversaries (no quantum queries). “Quantum Bound” entries incorporate QROM effects and Grover-style speedups, hence the roughly halved bit security for generic hash properties and the tightened lattice margins. With n, q, and σ as selected and λ = 256, our instantiation maintains ≥128-bit security against signature forgery and key recovery in the QROM, in line with post-quantum practice. These figures are consistent with the state of the art in lattice-based signature analyses and implementation guidance.
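The halving of generic hash security can be made explicit. For an idealized hash with $\lambda$-bit output, the textbook generic attack costs are as follows (Grover search for preimages, the birthday bound for classical collisions); with $\lambda = 256$ for SHAKE256 as used here, quantum preimage resistance and classical collision resistance both sit at the 128-bit level. This is a generic estimate offered for orientation, not a reproduction of the bounds in Table 4.

$$
\begin{aligned}
\text{preimage, classical:} &\quad O(2^{\lambda}) = 2^{256}, \\
\text{preimage, quantum (Grover):} &\quad O(2^{\lambda/2}) = 2^{128}, \\
\text{collision, classical (birthday):} &\quad O(2^{\lambda/2}) = 2^{128}.
\end{aligned}
$$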

The justification is as follows:

5.5. Implementation Security

Our proofs capture chosen-message forgeries by classical and quantum adversaries in the (Q)ROM, reducing them to the hardness of Ring-SIS and Ring-LWE with explicit advantage losses. Physical side channels, fault attacks, poor randomness, and protocol-level misuse are outside the scope of the proofs; they are handled via constant-time implementation, masking, and DRBG health checks. Batch verification and multi-target security are not required for our use case; if needed, parameters can be increased to counter multi-target effects.

6. Conclusions

This work presents an efficient and secure lattice-based digital signature scheme for IoT devices that leverages the algebraic symmetry of polynomial rings to achieve practical post-quantum security. The symmetric structure of the underlying Ring-LWE problem enables optimized arithmetic operations, while maintaining rigorous security guarantees. Our construction demonstrates that these symmetric properties can be effectively harnessed for resource-constrained environments.

The scheme achieves signatures under 3 KB and RAM usage below 10 KB on ARM Cortex-M4 platforms, with security based on the hardness of the Ring-SIS and Ring-LWE problems. The performance benchmarks show that the proposed approach has advantages over existing approaches, including 24% smaller signatures and 37% lower memory requirements compared to NIST PQC standards. These improvements stem partly from the symmetric design of the polynomial operations and the use of rejection sampling techniques.

Beyond its technical efficiency, the proposed scheme has broader implications for the IoT ecosystem. By demonstrating that lattice-based signatures can run within the constraints of embedded processors, this work supports the practical adoption of post-quantum cryptography in large-scale IoT deployments. The ability to fit within standard IoT message sizes and memory footprints makes the scheme a viable candidate for integration into future security standards, including those aligned with NIST PQC recommendations. As IoT infrastructure is increasingly recognized as a critical component of national and industrial systems, adopting quantum-resistant authentication methods will be essential for ensuring long-term trust and regulatory compliance.

Practical deployment scenarios include smart meters that require secure, long-lived signatures to protect critical energy infrastructure, wearable and healthcare devices that demand lightweight authentication with minimal battery impact, and industrial IoT sensors and controllers, wherein efficient signatures are vital for ensuring the integrity of the telemetry and commands. The results presented here demonstrate that lattice-based cryptography can address these real-world requirements, while providing post-quantum resilience.

Future research will address several directions to enhance both the practicality and robustness of the proposed approach. First, we will investigate batch verification methods to support high-throughput authentication in large-scale IoT networks. Second, we will develop side-channel resistant implementations to mitigate timing and power leakage on constrained devices. Third, we plan to explore hardware acceleration (FPGA and ASIC) to further reduce latency and energy consumption. In addition, our evaluation will be extended beyond ARM Cortex-M4 hardware by including cross-platform benchmarks, energy profiling, and protocol-level performance measurements (e.g., MQTT, CoAP). Finally, we will refine the parameter optimization and multi-target security analysis to ensure scalability for deployments involving millions of IoT devices. Together, these efforts will provide a more comprehensive foundation for transitioning post-quantum digital signatures from theory into practice in critical IoT environments.