1. Introduction

Recent advances in artificial intelligence and sensor technologies have significantly propelled the development of unmanned aerial vehicles (UAVs), enabling widespread applications in aerial monitoring, environmental inspection, traffic management, and disaster rescue [1]. However, the misuse of small low-altitude UAVs, such as unauthorized flights and illegal reconnaissance, poses serious challenges to airspace management and public safety. In high-risk areas in particular, the remote controllability, high maneuverability, and low observability of UAVs raise dual-use concerns for border security, critical infrastructure protection, and tactical reconnaissance [2]. Developing efficient and reliable counter-UAV detection technologies has therefore become a worldwide priority.

Current counter-UAV detection methods include radar detection, radio frequency (RF) signal analysis, electro-optical/infrared (EO/IR) imaging, and acoustic recognition [3,4]. Radar systems offer long detection range and wide coverage independent of lighting conditions. However, they are susceptible to electromagnetic interference, struggle to detect low-altitude, slow-moving small UAVs, and lack precise target classification [5]. RF analysis detects communication and video transmission signals and can effectively locate active transmitters. It fails against radio-silent or frequency-hopping UAVs and suffers from environmental noise [6]. EO/IR imaging provides intuitive visual information suitable for target identification and tracking, but it depends heavily on lighting and weather conditions and struggles with small, distant targets [7]. Acoustic methods exploit UAV-specific noise and are passive, which keeps the detector covert, but they have limited range and are vulnerable to ambient noise [8]. Overall, these approaches suffer from insufficient detection accuracy, high false alarm rates, poor environmental adaptability, limited multi-target handling, and weak performance on low-observability and micro-UAVs, which limits their applicability in complex scenarios.

Deep learning-based object detection algorithms have become the dominant methods in vision-based counter-UAV detection, owing to their ability to hierarchically extract abstract features from complex images and videos for accurate recognition and localization [9]. These methods fall into two main categories: single-stage detectors (e.g., the YOLO series [10,11] and SSD [12,13]) and two-stage detectors (e.g., Faster R-CNN) [14]. Considerable effort has been devoted to improving small object detection and suppressing background interference. For instance, Wang et al. added a high-resolution detection head at the P2 layer of YOLOv8 to preserve fine details [15]. To reduce noise, IRWT-YOLO integrates image segmentation techniques [16]. Yang et al. proposed IASL-YOLO, which optimizes YOLOv8s to balance accuracy and lightweight design [17]. Huang et al. developed EDGS-YOLOv8, which incorporates ghost convolutions, efficient multi-scale attention (EMA) modules, and DCNv2 for real-time lightweight detection [18].

As summarized in Table 1, three critical challenges persist in counter-UAV detection research. (1) Target confusion in complex backgrounds: in low-altitude scenes, UAVs often look visually similar to surrounding elements such as sky, vegetation, or buildings. This distracts attention and leads to false positives and missed detections. (2) Inefficient multi-scale feature fusion: drastic scale variation and small-object scenarios hinder accurate localization and boundary modeling [19,20]. (3) Limited accuracy and robustness for small targets: small UAVs typically occupy very few pixels, which makes their features hard to preserve, especially under motion blur or at long range [21,22].

To tackle these challenges, we propose a lightweight and effective YOLO11-based detection framework incorporating three dedicated components: C3K2-FB, designed to suppress background interference and enhance key region features; MS_FPN, which improves cross-scale fusion and edge-aware localization; and RFCBAM, an attention-enhanced detection head that preserves fine-grained features for small-object detection. These modules are collaboratively designed to address the identified limitations and form a unified framework optimized for real-time UAV detection in complex environments.

The main contributions are summarized as follows:

The C3K2 with Fusion Block (C3K2-FB) module employs a gating mechanism to dynamically suppress complex background interference and enhance features within key target regions, effectively improving robustness and feature discrimination under challenging environmental conditions.

A Multi-Scale Edge-Enhanced Feature Pyramid (MS_FPN) is designed to integrate edge information with an improved cross-scale bidirectional fusion strategy, enabling efficient fusion of deep semantic and shallow detailed features to enhance detection robustness and localization accuracy across multiple scales.

An attention-based small object detection head is constructed by adding a high-resolution detection layer and integrating receptive field and convolutional attention modules, which effectively preserves and highlights small object features, significantly improving detection accuracy and stability for small targets.

2. YOLO-Based Multi-Scale and Small Object Detection Framework

2.1. Overview of YOLO11

YOLO11 [

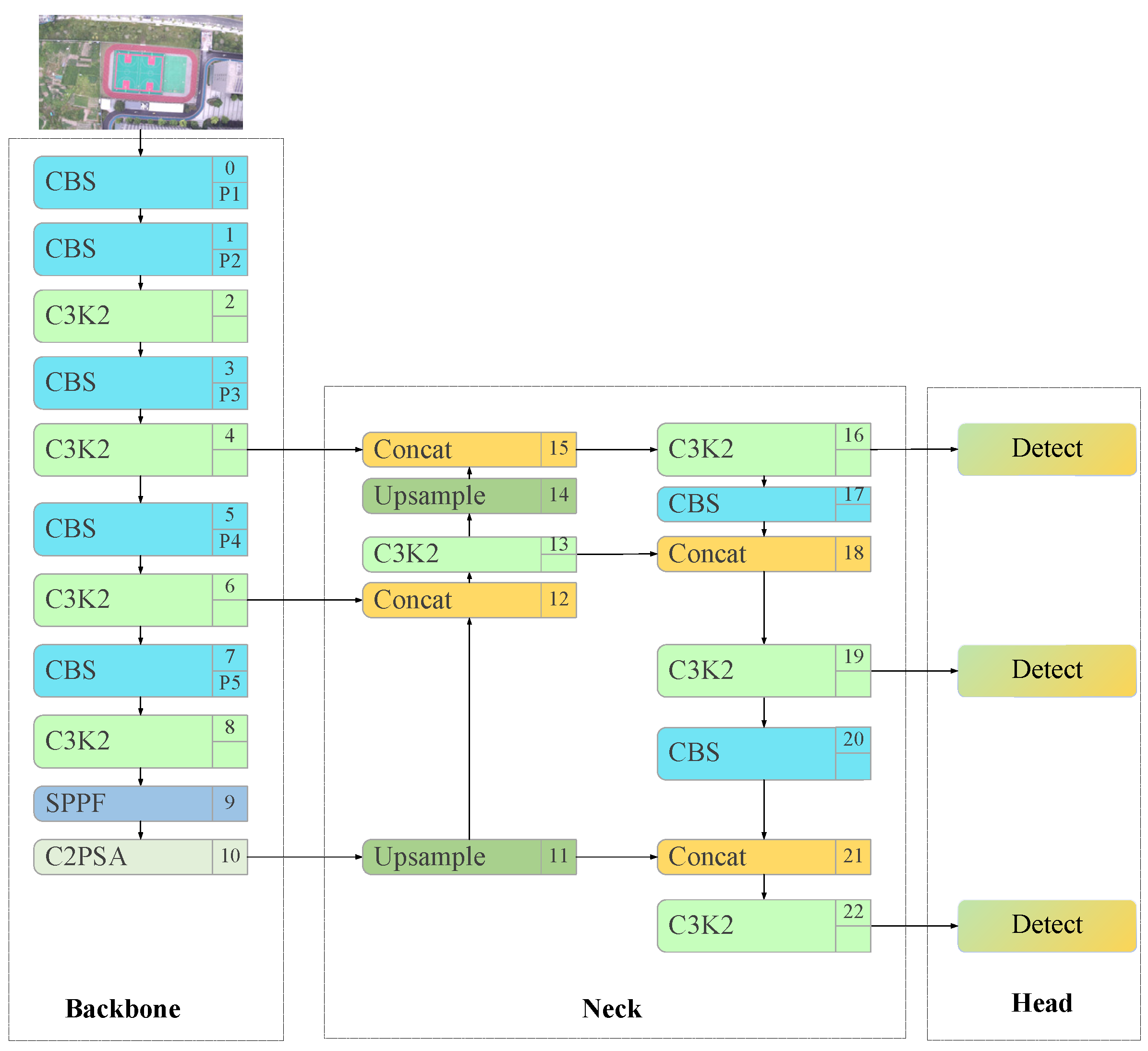

23], developed by the Ultralytics team and released in September 2024, represents a next-generation object detection framework that preserves the conventional four-component YOLO architecture while introducing several architectural innovations. The structure is shown in

Figure 1. The input stage employs a grid-based direct prediction approach to enhance multi-scale adaptability. The backbone network incorporates an optimized C3K2 module (demonstrating 30% faster computational speed and 40% fewer parameters than YOLOv8’s C2f module), integrated with both an SPFF (Spatial Pyramid Fast Fusion) module for multi-scale feature extraction and a C2PSA (Cross-Partial Self-Attention) dual-branch attention mechanism, significantly improving small object detection performance and critical region awareness. The neck architecture utilizes upsampling operations combined with channel-wise concatenation to achieve efficient feature fusion. For final detection, an end-to-end Detect module enables parallel prediction of multiple targets. This optimized architecture achieves substantial improvements in computational efficiency while maintaining high detection accuracy.
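The "Fast" in SPPF refers to a serial design. As implemented in Ultralytics, a single 5 × 5 max pool is applied three times in succession and the four intermediate maps are concatenated. This yields the same receptive fields as parallel 5, 9, and 13 pools. The toy below checks that equivalence on a 1D signal in pure Python. It illustrates the pooling arithmetic only; the surrounding 1 × 1 convolutions and the 2D layout are omitted.

```python
def max_pool_1d(x, k):
    """Stride-1 max pooling with output length equal to input length.
    Windows are clipped at the borders, which matches padding with -inf."""
    r = k // 2
    n = len(x)
    return [max(x[max(0, i - r):min(n, i + r + 1)]) for i in range(n)]


def sppf_1d(x, k=5):
    """SPPF-style: pool the previous result three times and keep all four stages."""
    stages = [x]
    for _ in range(3):
        stages.append(max_pool_1d(stages[-1], k))
    return stages


def spp_1d(x, kernels=(5, 9, 13)):
    """SPP-style: pool the original input once per kernel size, in parallel."""
    return [x] + [max_pool_1d(x, k) for k in kernels]


signal = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3, 2, 3, 8, 4]
print(sppf_1d(signal) == spp_1d(signal))  # True: serial 5-pools reproduce 9- and 13-pools
```

Each extra pass widens the window radius by 2. The three serial passes therefore cover radii 2, 4, and 6, which correspond to kernels 5, 9, and 13. Only one small kernel is ever evaluated.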

2.2. Overall Framework

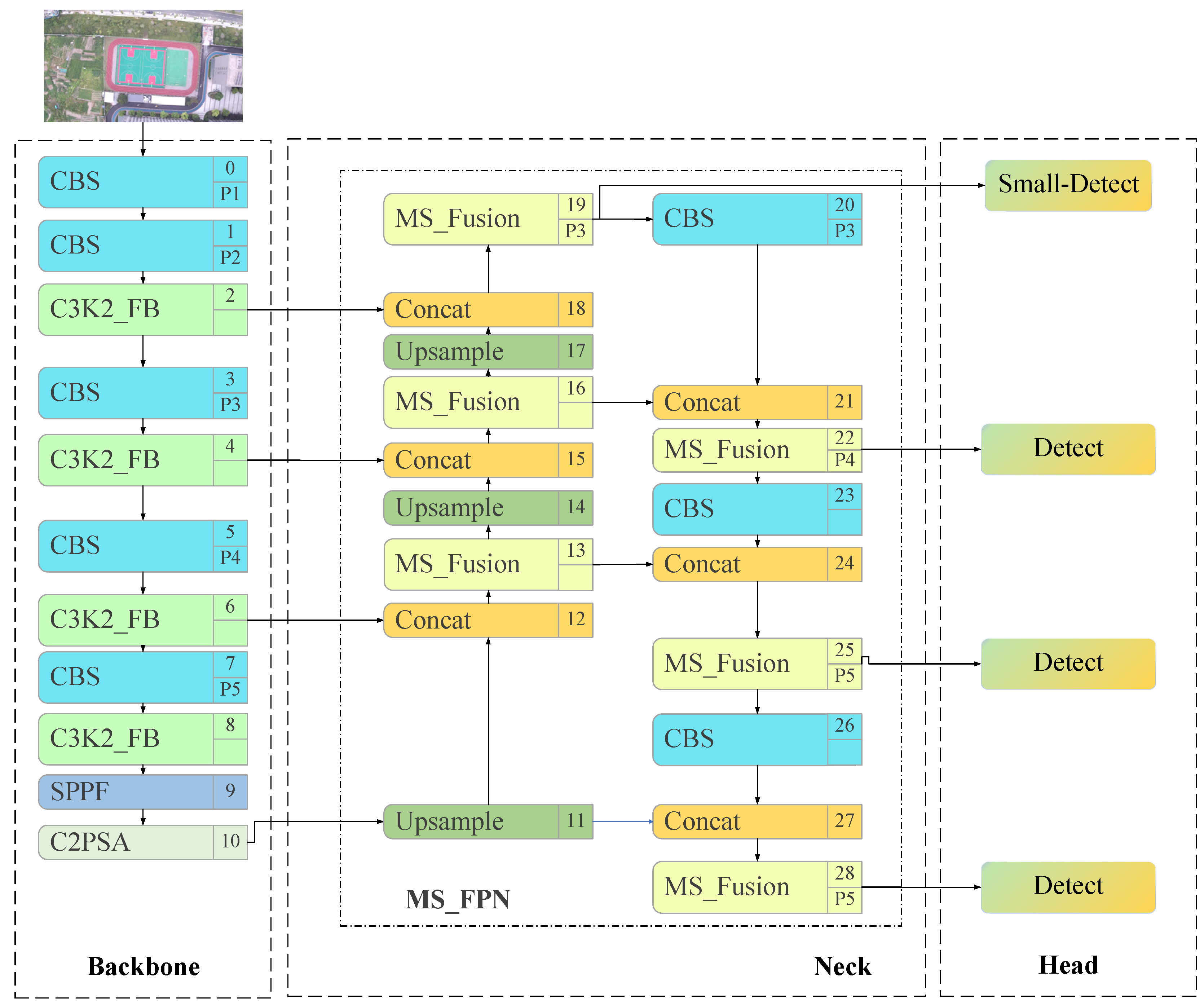

To address the challenge of small object detection for low-altitude UAVs in cluttered environments, this paper proposes a multi-scale and small object detection framework based on the YOLO11 architecture. The framework is designed to systematically tackle three key issues: background interference, low multi-scale feature fusion efficiency, and the loss of small object information during feature extraction. The overall architecture of the proposed framework is illustrated in Figure 2.

In the Backbone, a novel feature extraction module, C3K2-FB, is introduced. This module employs a gated mechanism to dynamically suppress background noise and enhance the feature representation of key target regions. It improves the model’s robustness and feature discriminability in complex environments and enhances the early-stage response to small targets.

In the Neck, a network named MS_FPN is proposed. This network incorporates edge information to support feature expression and adopts an improved cross-scale bidirectional fusion mechanism to effectively integrate deep semantic and shallow detail features. This design enhances detection robustness and localization accuracy across targets of various scales.

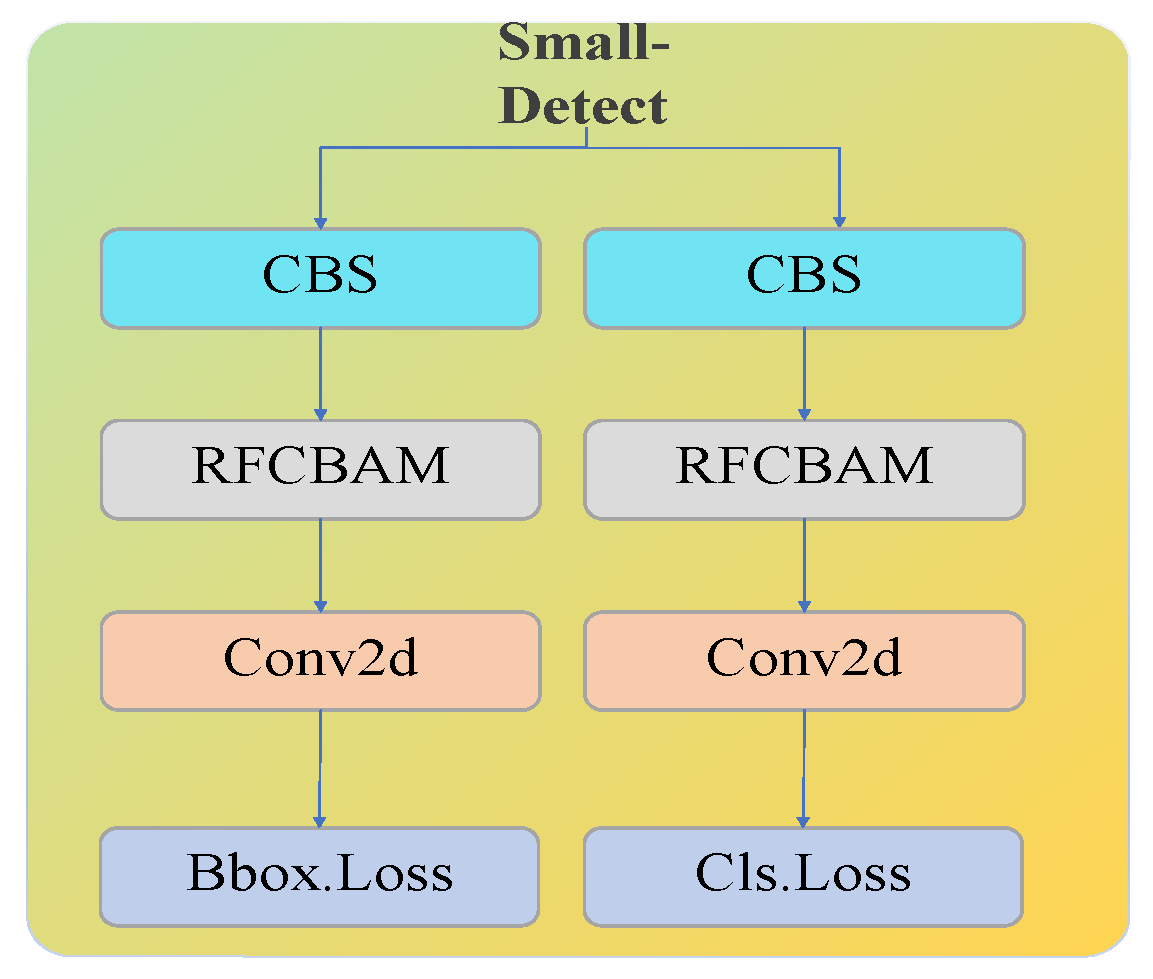

In the Head, a high-resolution detection layer dedicated to small objects is added (Small-Detect in Figure 2). In addition, the standard convolution is replaced by a newly designed Receptive Field and Convolutional Block Attention Module (RFCBAM). This module integrates Receptive Field Attention (RFA) and the Convolutional Block Attention Module (CBAM). By jointly modeling the importance of channels and spatial positions, RFCBAM guides the network to focus more precisely on the key regions and feature channels of small UAV targets. This improves both the accuracy and the stability of small object detection.

2.3. C3K2-FB Module Design

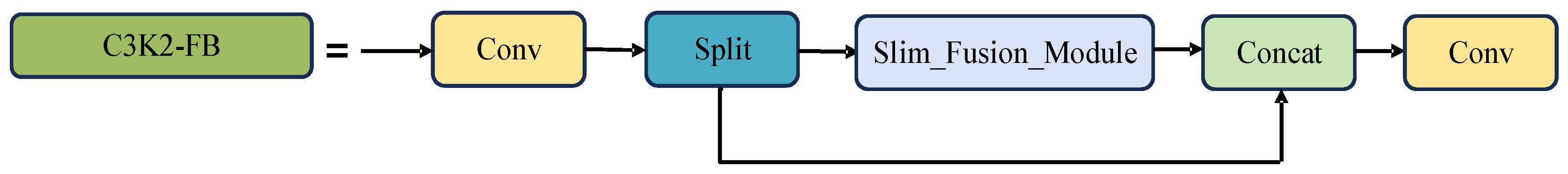

The C3K2 module in the YOLO11 backbone incorporates an efficient residual structure and multi-scale convolutional combinations to enhance feature extraction efficiency. However, its core operations still rely on standard convolutions. Standard convolutions have significant limitations in complex scenarios because their fixed kernel parameters cannot adapt to spatial structural variations. When target and background share similar textures or colors, this often leads to distracted attention and blurred object boundaries. It also makes it difficult to suppress irrelevant interference while focusing on critical discriminative regions. To address these issues, a novel feature extraction and fusion module, C3K2-FB, is proposed to replace the original C3K2 structure. As shown in Figure 3, the C3K2-FB module integrates the Slim Fusion Module (SFM) [24]. SFM introduces a gating mechanism and wide-receptive-field convolution to dynamically enhance target features and suppress background interference in complex environments. This design integrates multi-scale semantic information efficiently while keeping computational overhead low. As a result, it significantly improves the model's ability to detect small, occluded, and scale-varying targets in cluttered scenes.

C3K2-FB draws on the feature stream optimization concept of C2f and adopts a restructured fusion strategy to improve the learning efficiency of deep features. Specifically, the input feature maps are first processed by deep convolution to extract basic spatial and semantic representations of multi-scale targets. These features are then reshaped and evenly grouped along the channel dimension, ensuring a balanced distribution and diversity of semantic information such as edges and textures. Each group is passed through the SFM module (as shown in Figure 4), where a residual enhancement mechanism is used to emphasize key regions and suppress background noise. The outputs from SFM are then fused with the input features (via concatenation or weighted summation). A convolutional integration step then generates the optimized features, forming a closed-loop enhancement of the feature stream.

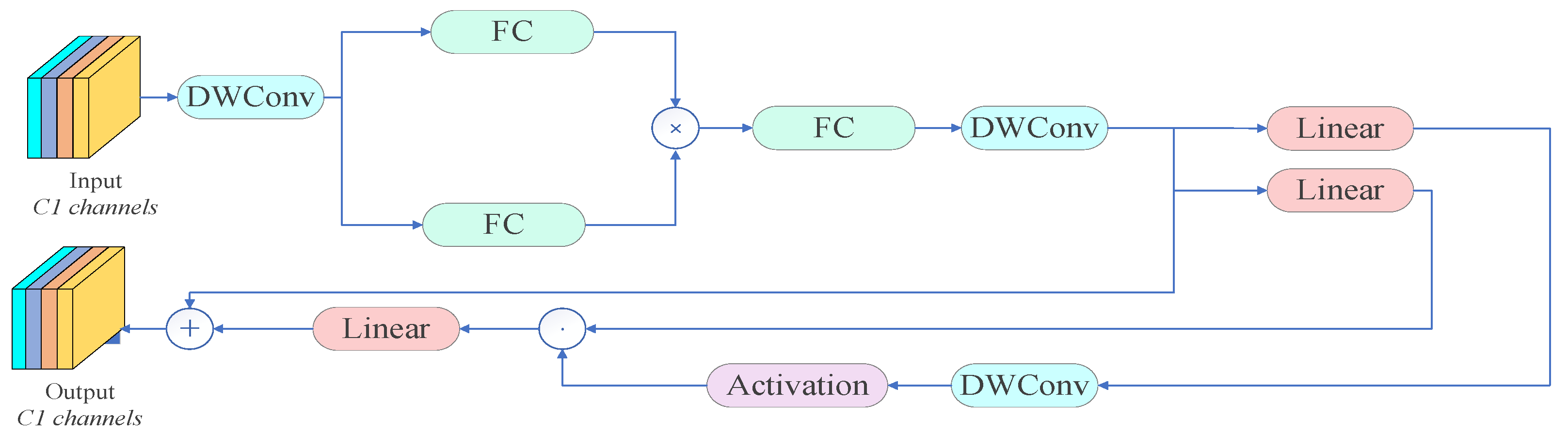

SFM is the core structural component of the C3K2-FB module. It combines an efficient element-wise multiplication mechanism (referred to as the "star operation") with a Gated Linear Unit (GLU)-like structure, achieving dynamic feature modulation and spatial enhancement at minimal computational cost. First, SFM uses depthwise separable convolution to split the input into two sub-feature groups, which are processed by two parallel branches. The main branch applies standard convolution to extract fundamental features. The gating branch generates dynamic weight maps that are element-wise multiplied with the main branch output. This "star operation" replaces traditional summation to enable high-dimensional nonlinear feature modeling and adaptive enhancement of key information. The outputs of both branches are then fused via concatenation or element-wise operations followed by convolution, enhancing the representation of fine-grained spatial information. The fused features are subsequently reconstructed using depthwise separable convolution, and a GLU-like gating mechanism is applied to adjust channel importance dynamically. Specifically, the reconstructed features are split into two linear projections. One undergoes a 3 × 3 depthwise convolution followed by SiLU activation [25] and is then element-wise multiplied with the other, allowing dynamic control of channel responses. Finally, a residual connection merges the module output with the original input. This alleviates gradient vanishing while preserving information integrity and enhancing feature representation.
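The order of operations described above can be illustrated with a small pure-Python sketch that operates on a single per-pixel channel vector. This is not the authors' SFM. Plain matrix-vector products with hypothetical weights (`w_main`, `w_gate`, `w_proj`) stand in for the convolutions. The 3 × 3 depthwise convolution in the GLU-like branch is also dropped because the toy has no spatial dimension. The sketch shows three steps in sequence: a star (element-wise product) fusion of a main and a gating branch, then a GLU-like split in which one half passes through SiLU and multiplies the other, then a residual connection back to the input.

```python
import math


def silu(v):
    """SiLU (swish): v * sigmoid(v), with sigmoid written via tanh for stability."""
    return v * 0.5 * (1.0 + math.tanh(0.5 * v))


def matvec(weights, x):
    """Plain matrix-vector product, standing in for a 1x1 convolution at one pixel."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def star_fusion(x, w_main, w_gate):
    """'Star operation': element-wise product of a main branch and a gating branch."""
    main = matvec(w_main, x)
    gate = matvec(w_gate, x)
    return [m * g for m, g in zip(main, gate)]


def glu_like(x, w_proj):
    """Project to 2C channels, split in half, gate one half with SiLU, multiply.
    (The 3x3 depthwise convolution before SiLU is omitted: no spatial axis here.)"""
    proj = matvec(w_proj, x)
    c = len(proj) // 2
    value, gate = proj[:c], proj[c:]
    return [v * silu(g) for v, g in zip(value, gate)]


def sfm_like_block(x, w_main, w_gate, w_proj):
    """Toy SFM-like block: star fusion -> GLU-like gating -> residual add."""
    fused = star_fusion(x, w_main, w_gate)
    gated = glu_like(fused, w_proj)
    return [xi + gi for xi, gi in zip(x, gated)]


identity = [[1.0, 0.0], [0.0, 1.0]]
w_proj = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]  # hypothetical 2C x C weights
print(sfm_like_block([1.0, 2.0], identity, identity, w_proj))
```

With all-zero projection weights the gated path contributes nothing and the block reduces to the identity. This is the property that lets the residual connection preserve the input signal.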

2.4. Multi-Scale Edge-Enhanced Feature Pyramid Design

Although traditional pyramid structures in YOLO-based object detection models possess certain capabilities for multi-scale feature processing, they still exhibit significant limitations in feature extraction and fusion. Typically, these structures rely on progressive downsampling of images and extract features independently at each scale. This approach struggles to simultaneously preserve fine-grained details present in high-resolution feature maps and global semantic context available in low-resolution maps. This limitation is particularly detrimental for small object detection, where high-level semantic features tend to lose edge and texture details, while low-level features lack sufficient contextual information, ultimately reducing detection accuracy. Moreover, conventional pyramid designs employ fixed-scale processing, which is inflexible to the diverse object scale variations encountered in complex scenes. This often results in feature discontinuities and inconsistencies across scales, thereby constraining the model’s robustness and generalization ability for multi-scale object detection.

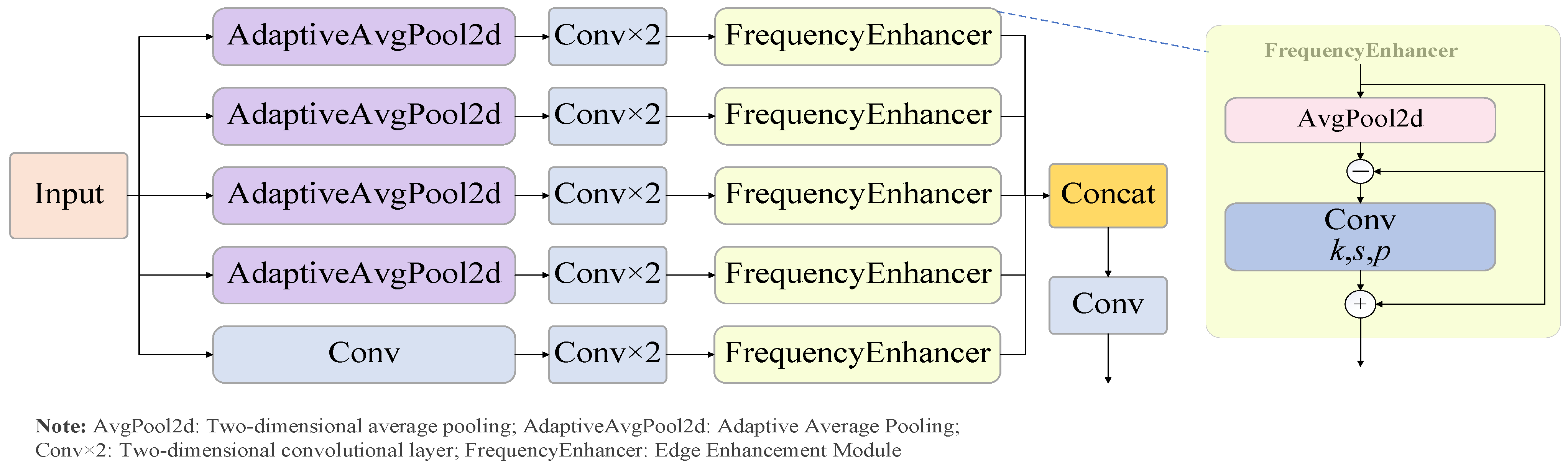

To overcome these limitations, we propose a novel multi-scale edge-enhanced feature pyramid network, termed MS_FPN, inspired by the design philosophy of SlimNeck. MS_FPN integrates a newly designed MS_Fusion_Block (Multi-scale Selective Fusion Block), which incorporates the FrequencyEnhancer edge enhancement module. This design enables precise modeling of object contours and effective fusion of multi-scale semantic information. MS_FPN substantially improves the model's ability to preserve fine details and its robustness in detecting small, distant UAV targets, while maintaining computational efficiency.

Structurally, the MS_Fusion_Block, shown in Figure 5, serves as the core unit of MS_FPN and consists of four primary processing stages. (1) Multi-scale Context Extraction: adaptive average pooling generates feature maps at multiple resolutions to capture cross-scale contextual information. (2) Efficient Feature Modeling: each scale's features undergo parallel 1 × 1 convolutions for channel compression and grouped 3 × 3 convolutions for spatial detail extraction, balancing feature richness and computational cost. (3) Edge Saliency Enhancement: the embedded FrequencyEnhancer module first extracts the low-frequency background via average pooling and then isolates high-frequency edge information through residual calculation. Convolution followed by Sigmoid activation enhances edge saliency, and residual fusion generates the edge-enhanced feature maps. (4) Cross-scale Feature Fusion: the enhanced multi-scale features are upsampled, aligned in the channel dimension, concatenated, and fused via convolution to produce the final refined feature map. This module highlights object contours and improves semantic consistency and boundary localization precision under complex background conditions. It thereby provides a stable and robust feature foundation for the subsequent detection heads.
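Stage (3) can be sketched on a single-channel 2D map. In this hypothetical pure-Python version:

- A 3 × 3 stride-1 average pool supplies the low-frequency background.
- The residual `x - low` isolates edges.
- A scalar weight `alpha` followed by a sigmoid stands in for the learned convolution that scores edge saliency.

The residual fusion `x + gate * high` is an assumed form, since the exact fusion is not specified here.

```python
import math


def sigmoid(v):
    return 0.5 * (1.0 + math.tanh(0.5 * v))  # numerically stable logistic


def avg_pool_same(img, k=3):
    """Stride-1 k x k average pooling; border windows are clipped (same output size)."""
    h, w, r = len(img), len(img[0]), k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            vals = [img[a][b]
                    for a in range(max(0, i - r), min(h, i + r + 1))
                    for b in range(max(0, j - r), min(w, j + r + 1))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def frequency_enhance(img, alpha=4.0):
    """low = avg_pool(x); high = x - low; out = x + sigmoid(alpha * |high|) * high.
    alpha is a hypothetical scalar standing in for a learned convolution."""
    low = avg_pool_same(img)
    out = []
    for row_x, row_low in zip(img, low):
        new_row = []
        for x, lo in zip(row_x, row_low):
            high = x - lo                      # high-frequency (edge) residual
            gate = sigmoid(alpha * abs(high))  # edge saliency in [0.5, 1)
            new_row.append(x + gate * high)    # residual fusion
        out.append(new_row)
    return out


step = [[0, 0, 0, 1, 1, 1] for _ in range(5)]  # vertical step edge between columns 2 and 3
enhanced = frequency_enhance(step)
print([round(v, 3) for v in enhanced[2]])
```

Flat regions have no high-frequency residual and pass through unchanged. Pixels on either side of the step are pushed apart, so the contrast across the edge grows beyond the original unit step.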

2.5. Small Detection Head Based on Hybrid Attention Convolution

Low-altitude UAV detection typically falls into the category of small object detection. The original YOLO11 architecture employs three detection heads; however, due to the progressive downsampling operations in deep networks, detailed features of small objects contained in shallow layers are largely lost during transmission. This compromises the model’s ability to accurately detect small-scale UAV targets. To address this issue and better preserve fine-grained visual information, an additional high-resolution detection head specifically designed for small object detection is introduced into the framework. Moreover, standard convolution modules commonly used in traditional detection heads treat all spatial positions and channel features equally, lacking the ability to adaptively focus on key spatial regions (e.g., object contours) and discriminative channels (e.g., texture or shape-related features). This limits the effectiveness of feature extraction and fusion for small object detection.
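A quick calculation shows why progressive downsampling hurts small UAVs. The example makes two hypothetical assumptions:

- A 3840 × 2160 frame is resized to a 640-pixel-wide network input. The actual input size is a training setting not given in this section.
- Detection levels sit at strides 4, 8, 16, and 32. Stride 4 is the P2 level commonly used by an added high-resolution head.

Under these assumptions, a UAV 48 pixels wide in the original frame shrinks to 8 pixels:

```python
def cells_per_level(obj_px, src_w=3840, input_w=640, strides=(4, 8, 16, 32)):
    """Size of an object after resizing, and its extent in grid cells at each stride."""
    obj_in = obj_px * input_w / src_w        # width in pixels at the network input
    return obj_in, {s: obj_in / s for s in strides}


size, cells = cells_per_level(48)  # hypothetical 48-pixel-wide UAV
print(f"after resize: {size:.1f} px")
for stride, n in cells.items():
    print(f"stride {stride:>2}: {n:.2f} cells")
# after resize: 8.0 px; stride 4 -> 2.00 cells, 8 -> 1.00, 16 -> 0.50, 32 -> 0.25
```

At stride 32 such a target covers a quarter of a single grid cell, while at stride 4 it still spans two cells.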

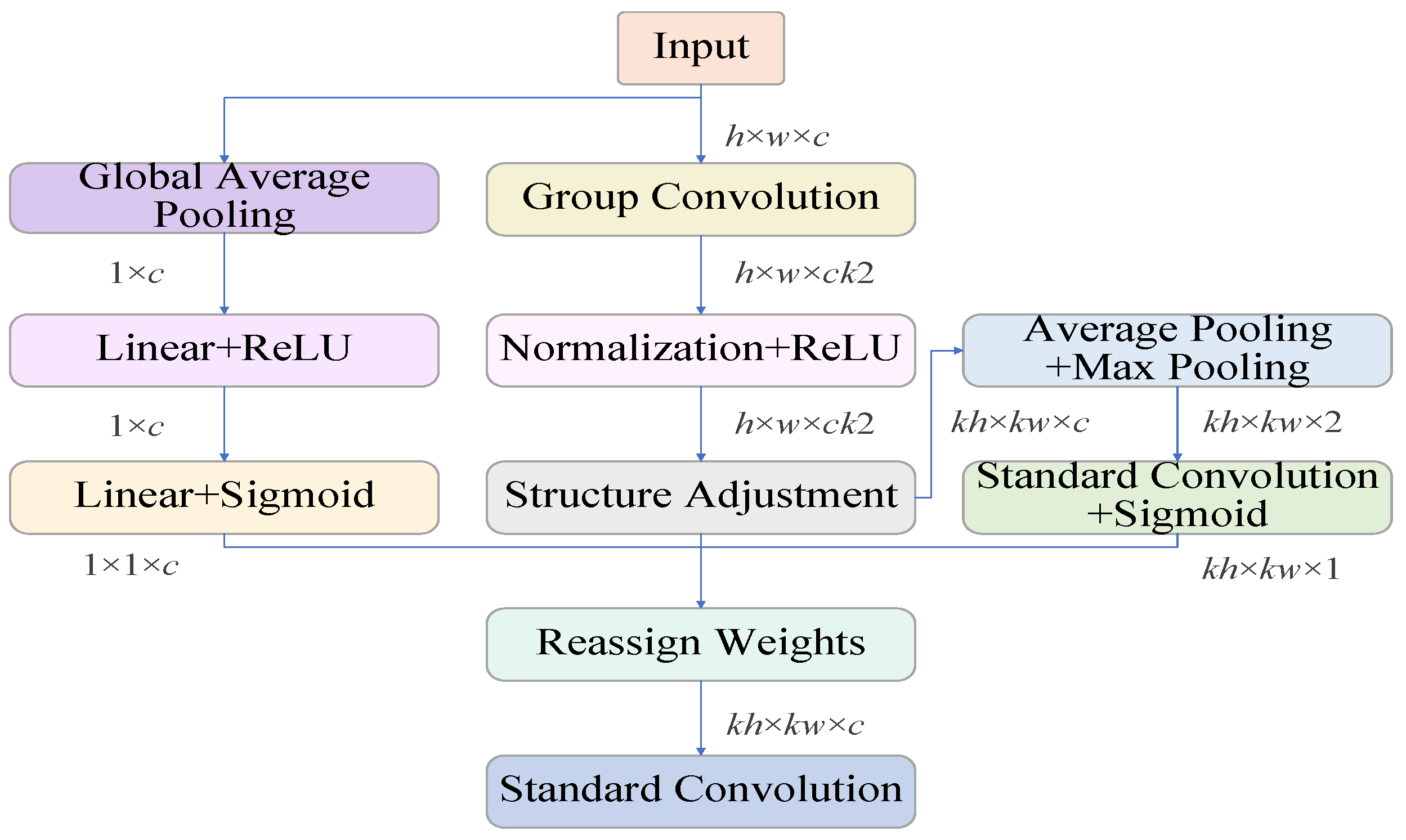

To overcome these limitations, a hybrid attention convolution module, named RFCBAM, is proposed to replace the standard convolution in the newly added detection head. RFCBAM integrates the Receptive Field Attention (RFA) mechanism [26] with the Convolutional Block Attention Module (CBAM) [27] to enhance the model's capability in both the spatial and channel dimensions. RFA dynamically adjusts the receptive field to better capture small targets with diverse spatial distributions. However, it lacks the ability to suppress irrelevant channel-wise information and is susceptible to background clutter. In contrast, CBAM excels at channel attention and enhances target-related features. Its spatial attention, however, is constrained by fixed receptive fields, which limits its effectiveness for objects with large scale variations.

RFCBAM overcomes these limitations through a synergistic combination of the two mechanisms. Specifically, RFA provides adaptive spatial anchoring that guides CBAM’s attention focus, effectively mitigating background interference. Meanwhile, CBAM’s channel attention further refines the features within the dynamically localized regions identified by RFA. This dual enhancement strategy enables more accurate spatial localization and more discriminative feature representation for small targets in complex scenes. As a result, RFCBAM significantly improves the model’s ability to extract and utilize relevant features while maintaining strong robustness against background noise.
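For reference, CBAM applies two attention maps in sequence:

- A channel attention map σ(MLP(AvgPool(F)) + MLP(MaxPool(F))), computed with a shared MLP.
- A spatial attention map, obtained by passing per-pixel channel-wise average and max maps through a 7 × 7 convolution and a sigmoid.

The pure-Python sketch below follows that order on a tiny tensor with two simplifications:

- The 7 × 7 convolution is replaced by two scalar weights.
- The MLP weights `w1`, `w2` are hypothetical.

It also does not model RFA or the specific way RFCBAM couples the two mechanisms.

```python
import math


def sigmoid(v):
    return 0.5 * (1.0 + math.tanh(0.5 * v))


def channel_attention(fmap, w1, w2):
    """CBAM channel attention on fmap[C][H][W]: shared MLP (w1, ReLU, w2) applied to
    average- and max-pooled channel descriptors, summed, then sigmoid."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]
    mx = [max(map(max, ch)) for ch in fmap]

    def mlp(v):
        hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    weights = [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]
    return [[[x * wc for x in row] for row in ch] for ch, wc in zip(fmap, weights)]


def spatial_attention(fmap, a=1.0, b=1.0):
    """Simplified CBAM spatial attention: per-pixel channel mean and max, mixed by
    two scalars a and b (a stand-in for CBAM's 7x7 convolution), then sigmoid."""
    h, w = len(fmap[0]), len(fmap[0][0])
    out = [[[0.0] * w for _ in range(h)] for _ in fmap]
    for i in range(h):
        for j in range(w):
            column = [ch[i][j] for ch in fmap]
            s = sigmoid(a * sum(column) / len(column) + b * max(column))
            for c, ch in enumerate(fmap):
                out[c][i][j] = ch[i][j] * s
    return out


def cbam_like(fmap, w1, w2):
    """Channel attention first, then spatial attention, as in CBAM."""
    return spatial_attention(channel_attention(fmap, w1, w2))


fmap = [[[1.0, 1.0], [1.0, 1.0]],   # a strongly activated channel
        [[0.0, 0.0], [0.0, 0.0]]]   # an inactive channel
eye = [[1.0, 0.0], [0.0, 1.0]]      # hypothetical MLP weights (no channel reduction)
print(cbam_like(fmap, eye, eye))
```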

The structure of the hybrid attention-based small object detection head is shown in Figure 6. Its input features are taken directly from the first shallow layer of the backbone to maximally retain raw spatial detail and positional cues. The architecture of the proposed RFCBAM module is illustrated in Figure 7.

3. Experiments and Results

3.1. Dataset Details

This study adopts the DeTFly dataset as the primary benchmark for training and evaluating the proposed model. DeTFly [28] is specifically designed for low-altitude UAV detection in cluttered environments and covers a variety of challenging scenes such as urban, sky, and mountain. These scenarios introduce significant background interference and target occlusion. Representative sample images are shown in Figure 8. Although the dataset focuses on a single object category (UAVs), it presents significant intra-class diversity in object scale, background complexity, and visibility. Images vary in lighting (from morning to evening), background type (e.g., sky, urban, mountain, field), relative distance, and UAV perspective (top, front, bottom). Annotations were manually created by trained annotators and verified via double-checking to ensure bounding box accuracy and consistency.

The dataset contains over 10,000 high-resolution images (3840 × 2160 pixels) with corresponding bounding box annotations. All labeled objects are small low-altitude UAVs, with most targets occupying less than 5% of the image area, reflecting the typical challenges of small object detection. The dataset is split into training (8000 images), validation (1000 images), and test (1000 images) sets in an 8:1:1 ratio to support model training, hyperparameter tuning, and final evaluation.
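An 8:1:1 split of 10,000 images gives exactly 8000/1000/1000. This section does not say how images were assigned to partitions. If a random split is used, fixing the shuffle seed makes the partition reproducible. A minimal sketch with hypothetical image IDs:

```python
import random


def split_8_1_1(items, seed=0):
    """Deterministic random split into train/val/test with an 8:1:1 ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)   # fixed seed -> reproducible partition
    n = len(items)
    n_train, n_val = n * 8 // 10, n // 10
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])


image_ids = [f"img_{i:05d}" for i in range(10_000)]  # hypothetical file stems
train_ids, val_ids, test_ids = split_8_1_1(image_ids)
print(len(train_ids), len(val_ids), len(test_ids))  # 8000 1000 1000
```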

3.2. Experimental Configuration

To ensure training stability and reproducibility, all experiments were conducted under the hardware and software configuration listed in Table 2. Data augmentation consisted of rotational symmetry (probability 0.2), symmetric MixUp (probability 0.1), and horizontal flipping (probability 0.5).

The training hyperparameter settings are listed in Table 3.
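Of the augmentations listed, horizontal flipping and MixUp have simple effects on YOLO-format labels (class, x_center, y_center, width, height, normalized to [0, 1]). A flip maps x_center to 1 − x_center. MixUp blends two images while keeping both sets of boxes. The sketch below is a hypothetical pure-Python illustration on tiny grayscale images using the listed probabilities. The rotation step is omitted. Drawing the MixUp ratio from Beta(32, 32) is an assumption, not a documented setting of this work.

```python
import random


def hflip(img, labels):
    """Mirror a 2D image left-right; YOLO labels (cls, xc, yc, w, h) map xc -> 1 - xc."""
    return ([row[::-1] for row in img],
            [(c, 1.0 - xc, yc, w, h) for c, xc, yc, w, h in labels])


def mixup(img_a, labels_a, img_b, labels_b, lam):
    """Blend two same-sized images with weight lam and keep the boxes of both."""
    mixed = [[lam * a + (1.0 - lam) * b for a, b in zip(ra, rb)]
             for ra, rb in zip(img_a, img_b)]
    return mixed, labels_a + labels_b


def augment(img, labels, pool, rng, p_flip=0.5, p_mixup=0.1):
    """Apply flip and MixUp with the stated probabilities (rotation omitted)."""
    if rng.random() < p_flip:
        img, labels = hflip(img, labels)
    if rng.random() < p_mixup:
        img_b, labels_b = rng.choice(pool)
        lam = rng.betavariate(32.0, 32.0)   # assumed mixing distribution
        img, labels = mixup(img, labels, img_b, labels_b, lam)
    return img, labels


rng = random.Random(0)
img = [[0.0, 0.2, 0.4], [0.1, 0.3, 0.5]]
labels = [(0, 0.25, 0.5, 0.1, 0.1)]  # hypothetical single UAV box
pool = [([[1.0] * 3, [1.0] * 3], [(0, 0.75, 0.5, 0.1, 0.1)])]
aug_img, aug_labels = augment(img, labels, pool, rng)
```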

3.3. Evaluation Metrics

To comprehensively evaluate the detection performance of the proposed framework in cluttered low-altitude scenarios, the following widely adopted object detection metrics are used:

Precision (P): the proportion of predicted positives that are true positives, reflecting the accuracy of the detection results.

Recall (R): the proportion of actual positive instances that are correctly detected, indicating the model's ability to find all targets.

AP (Average Precision): the average of precision values across recall levels, i.e., the area under the precision-recall curve, used to measure the detection capability for each category. In this study, AP is computed for the single UAV class.

mAP (mean Average Precision): the mean of AP across all classes. Since only one class is considered, mAP is equivalent to AP. The mAP@0.5 metric uses an Intersection over Union (IoU) threshold of 0.5. The mAP@0.5:0.95 metric averages AP over IoU thresholds from 0.5 to 0.95 in steps of 0.05. It therefore provides a more rigorous assessment of both localization accuracy and detection robustness. This is particularly critical in small UAV detection, where even minor localization errors substantially reduce the IoU because the target regions are small.

F1 Score (F1): the harmonic mean of Precision and Recall, providing a balanced measure of performance. It is defined as:

$$F1 = \frac{2 \times P \times R}{P + R}$$
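The sketch below computes P, R, and F1 and illustrates the IoU sensitivity argument with two hypothetical boxes shifted by 2 pixels. For a 10 × 10 target, the IoU drops to about 0.67, so the detection counts as correct at only 4 of the 10 mAP@0.5:0.95 thresholds. A 100 × 100 target keeps an IoU of about 0.96 and passes all 10. The sketch also checks that the final model's reported Precision (97.1%) and Recall (89.9%) are consistent with its reported F1 Score (93.4%).

```python
def f1_score(p, r):
    """Harmonic mean of precision and recall; 0 when both are 0."""
    return 2 * p * r / (p + r) if p + r else 0.0


def precision_recall_f1(tp, fp, fn):
    """P = TP/(TP+FP), R = TP/(TP+FN), F1 = 2PR/(P+R); zero when undefined."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r, f1_score(p, r)


def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


THRESHOLDS = [t / 100 for t in range(50, 100, 5)]  # 0.50, 0.55, ..., 0.95 (10 values)

for size in (10, 100):                  # hypothetical box sizes in pixels
    gt = (0, 0, size, size)
    pred = (2, 0, size + 2, size)       # same box shifted right by 2 pixels
    v = iou(gt, pred)
    passed = sum(v >= t for t in THRESHOLDS)
    print(f"{size}x{size}: IoU={v:.3f}, correct at {passed}/10 thresholds")

# Reported P = 97.1% and R = 89.9% for the final model imply an F1 of about 93.4%.
print(round(100 * f1_score(0.971, 0.899), 1))  # 93.4
```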

3.4. Ablation Experiments

To evaluate the contribution of each proposed module to the overall model performance, a series of ablation experiments were conducted by progressively integrating specific structural components, followed by comparative evaluation on the DeTFly dataset. The main evaluation metrics include Precision, Recall, F1 Score, mAP@0.5, mAP@0.5:0.95, and Parameters. The detailed results are presented in

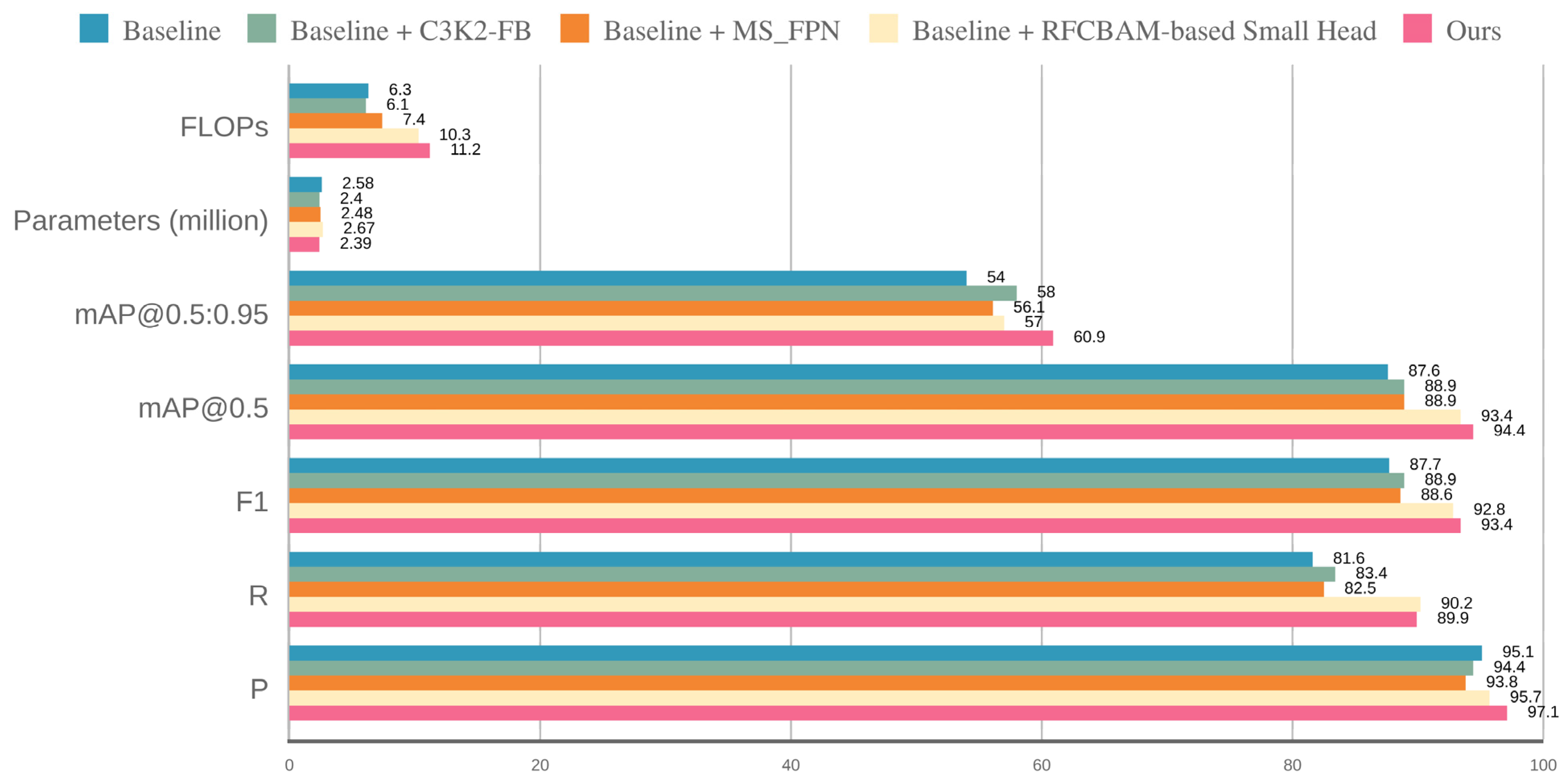

Table 1. Baseline: The original YOLO11n model, serving as the comparison reference. Baseline + C3K2-FB: Replaces the standard C3K2 module in the backbone with the proposed C3K2-FB, to assess its impact on feature extraction and background suppression. Baseline + MS_FPN: Introduces the Multi-Scale Feature Pyramid Network with Edge Enhancement (MS_FPN) in the neck to evaluate its effectiveness in multi-scale fusion and small object boundary preservation. Baseline + RFCBAM-based Small Head: Adds an additional high-resolution detection head tailored for small objects and replaces the standard convolution with the RFCBAM hybrid attention module, to assess its influence on small object detection precision. Ours: Integrates all the proposed modules into a unified Multi-Scale and Small Object Detection Framework, serving as the final model for evaluating the overall architecture’s performance. The ablation experiment results are shown in

Table 4. For ease of visual comparison among different module combinations, a bar graph of ablation experiment results is as shown in

Figure 9.

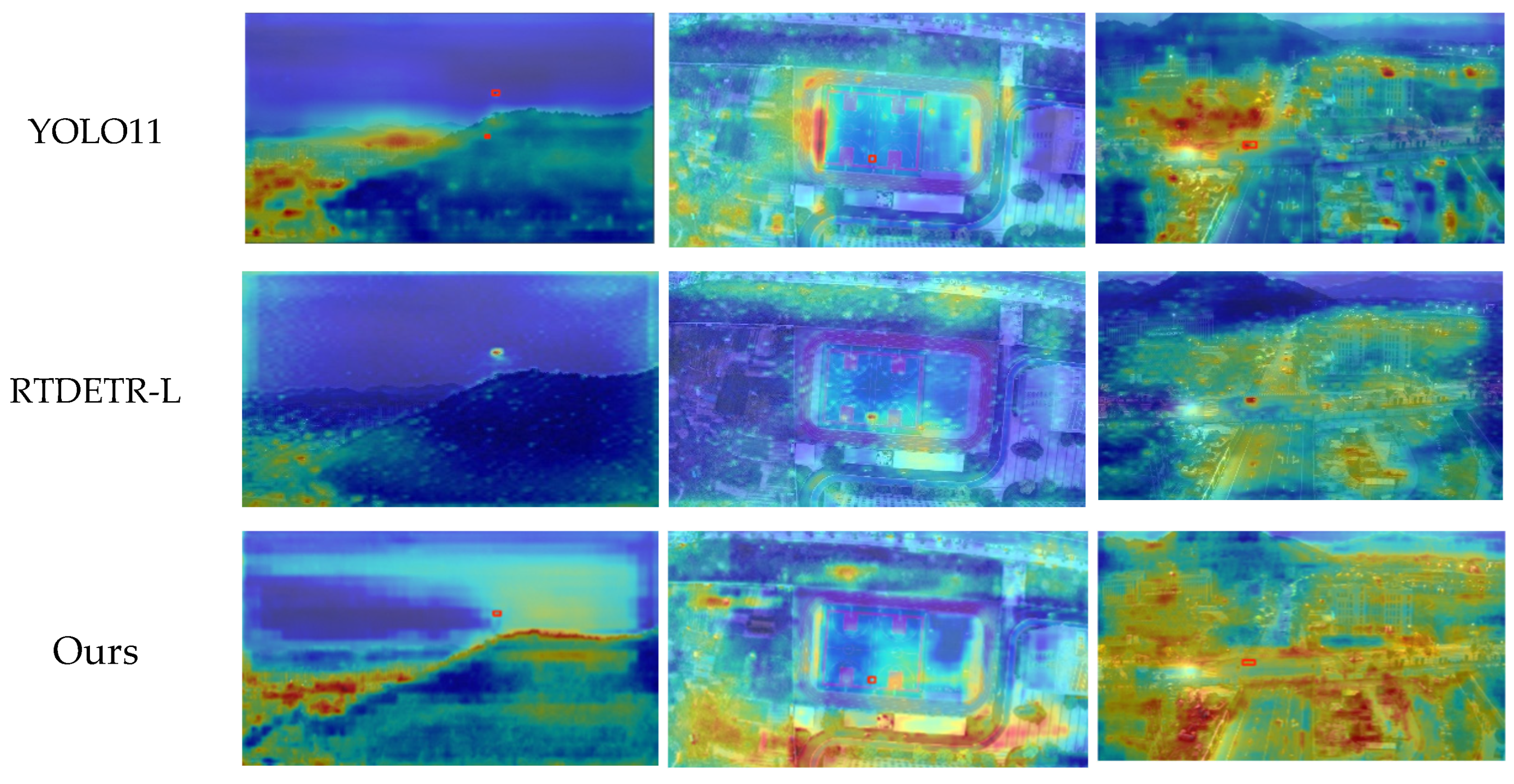

Compared with the baseline model, introducing the C3K2-FB module increases mAP@0.5 from 87.6% to 88.9% and mAP@0.5:0.95 from 54.0% to 58.0%, and raises the F1 score to 88.9%. These results indicate that C3K2-FB effectively enhances feature extraction while suppressing background interference in complex scenes. With the addition of the MS_FPN structure, mAP@0.5:0.95 improves to 56.1%. The Grad-CAM heatmaps (as shown in Figure 10) demonstrate the module's effectiveness in multi-scale feature fusion and fine-grained boundary preservation, particularly in small object detection. The RFCBAM-based small object detection head yields the most significant performance gains, increasing Recall to 90.2%, F1 Score to 92.8%, and mAP@0.5 to 93.4%. These results highlight the module's strong capability in enhancing the model's sensitivity to small targets. The final integrated model (Ours) achieves the best overall performance, with mAP@0.5 and mAP@0.5:0.95 reaching 94.4% and 60.9%, respectively, and the F1 Score rising to 93.4%. Precision and Recall also improve to 97.1% and 89.9%. Notably, these improvements are achieved with only 2.39 million parameters, even fewer than the baseline model, demonstrating the lightweight and efficient design of the proposed framework.
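Because all metrics are percentages, the gains above are absolute differences in percentage points. The snippet below recomputes them from the values quoted in this paragraph. Entries the paragraph does not report are left as `None`.

```python
# Values (%) quoted in this paragraph; None marks a metric the paragraph does not quote.
ABLATION = {
    "Baseline": (87.6, 54.0),
    "Baseline + C3K2-FB": (88.9, 58.0),
    "Baseline + MS_FPN": (None, 56.1),
    "Baseline + RFCBAM head": (93.4, None),
    "Ours": (94.4, 60.9),
}


def gains_pp(results, baseline="Baseline"):
    """Absolute gains over the baseline in percentage points, rounded to 0.1."""
    base = results[baseline]
    return {name: tuple(None if v is None else round(v - b, 1) for v, b in zip(vals, base))
            for name, vals in results.items()}


for name, (d50, d5095) in gains_pp(ABLATION).items():
    print(f"{name:<24} mAP@0.5: {d50}  mAP@0.5:0.95: {d5095}")
```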

In summary, all three modules contribute positively to the model’s performance. Among them, the RFCBAM head shows the most significant improvement in small object detection, while the C3K2-FB module enhances overall feature representation under a lightweight design. The final integrated framework achieves a well-balanced trade-off among accuracy, robustness, and computational efficiency.

3.5. Comparison Experiment with Mainstream Models

To further validate the overall performance of the proposed model, comparative experiments were conducted against several mainstream object detection models, including the two-stage detector Faster R-CNN, the transformer-based detector RT-DETR-L, and multiple versions of lightweight YOLO series models (YOLOv8n, YOLOv9n, YOLOv10n, and YOLO11n). All baseline models were implemented using their official or widely adopted open-source versions, and training pipelines were aligned in terms of augmentation, learning rates, batch sizes, and epochs to ensure fair and reproducible comparisons.

The results of the comparison with mainstream models are shown in Table 5.

The experimental results demonstrate that the proposed method achieves a Precision of 97.1% and a Recall of 89.9%, representing improvements of 2.0 and 8.3 percentage points over YOLO11n, respectively. This indicates a significant enhancement in the model’s detection robustness under complex background conditions. The mAP@0.5:0.95 reaches 60.9%, surpassing YOLO11n’s 54.0% and approaching the performance of RT-DETR-L, while requiring only about one-twelfth of its parameters and significantly reducing computational complexity. Compared with earlier models such as YOLOv10n, YOLOv9n, and YOLOv8n, the proposed framework achieves mAP@0.5 improvements of 8.0%, 14.5%, and 13.2%, respectively, while maintaining similar or lower parameter counts. Similarly, the mAP@0.5:0.95 improves by 11.0%, 20.3%, and 17.6%, respectively. Compared to Faster R-CNN, the model improves mAP@0.5 by 11.6% and mAP@0.5:0.95 by 12.5%, with over 95% reduction in parameters. In summary, the proposed Multi-Scale and Small Object Detection Framework demonstrates superior detection accuracy, enhanced sensitivity to small objects, and excellent model efficiency in low-altitude UAV detection tasks. It significantly outperforms the selected baseline models, highlighting its broad potential for practical deployment in complex real-world scenarios.
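Gains in a comparison like this can be read either as absolute differences (percentage points) or as relative changes, and the two differ noticeably. For example, the stated Recall of 89.9% is 8.3 percentage points above YOLO11n. That implies a YOLO11n Recall of 81.6%, which corresponds to a relative gain of roughly 10.2%:

```python
def gains(new, old):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    return new - old, 100.0 * (new - old) / old


ours_recall = 89.9
yolo11n_recall = ours_recall - 8.3     # implied by the stated 8.3-point gain
abs_gain, rel_gain = gains(ours_recall, yolo11n_recall)
print(f"YOLO11n recall {yolo11n_recall:.1f}%: +{abs_gain:.1f} pp, +{rel_gain:.1f}% relative")
```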

3.6. Visualization Results

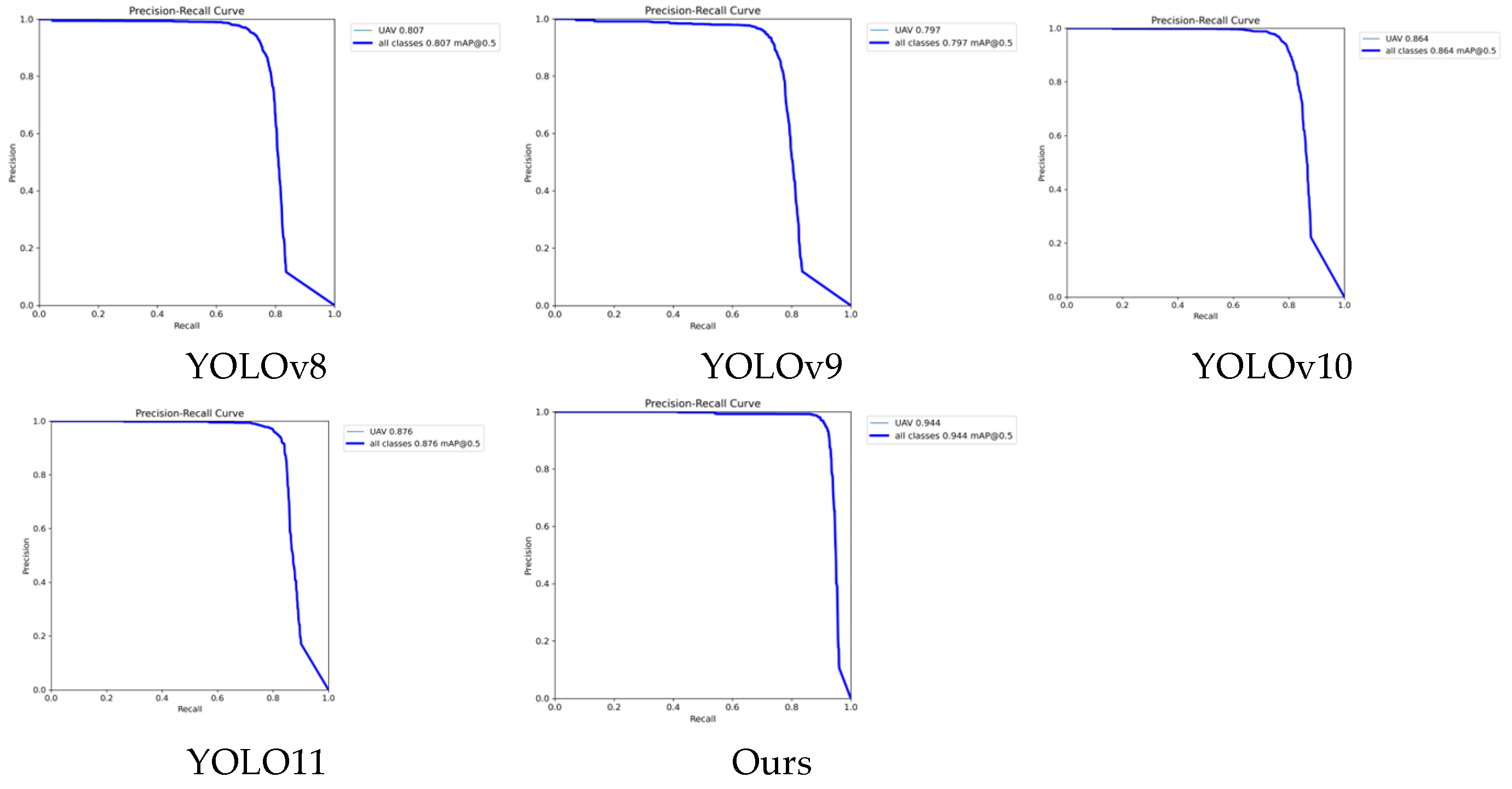

To further validate the effectiveness of the proposed model in detecting small UAV targets under complex background conditions, this study presents a series of visual analyses from multiple perspectives. The visualization includes three main aspects: Precision–Recall (P-R) curves, detection result visualizations, and heatmaps.

Figure 11 shows the P-R curves of different models evaluated on the DeTFly test set. Compared with YOLOv8n, YOLOv9n, YOLOv10n, and YOLO11n, the proposed method yields a noticeably better P-R curve that lies closer to the ideal top-right corner. This indicates that the proposed model achieves superior detection performance and robust discrimination across confidence thresholds. This property is particularly valuable in complex scenarios where high recall must be maintained at low confidence thresholds.
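A P-R curve is traced by sorting detections by confidence and accumulating true and false positives; AP is then the area under the curve. The sketch below uses all-point interpolation over a non-increasing precision envelope. This is one common convention; evaluation toolkits differ, and some sample 101 recall points instead. The sketch assumes detections have already been matched to ground truth at a fixed IoU threshold.

```python
def pr_curve(detections, num_gt):
    """detections: (confidence, is_true_positive) pairs, already IoU-matched.
    Returns precision and recall after each detection in descending confidence."""
    tp = fp = 0
    precisions, recalls = [], []
    for _, is_tp in sorted(detections, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    return precisions, recalls


def average_precision(precisions, recalls):
    """All-point interpolated AP: area under the non-increasing precision envelope."""
    mrec = [0.0] + recalls + [1.0]
    mpre = [1.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):       # make precision non-increasing
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


dets = [(0.9, True), (0.8, False), (0.7, True)]   # hypothetical detections
p, r = pr_curve(dets, num_gt=2)
print(p, r, average_precision(p, r))
```

A curve that stays near precision 1.0 up to high recall, i.e., one hugging the top-right corner, drives this area toward 1.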

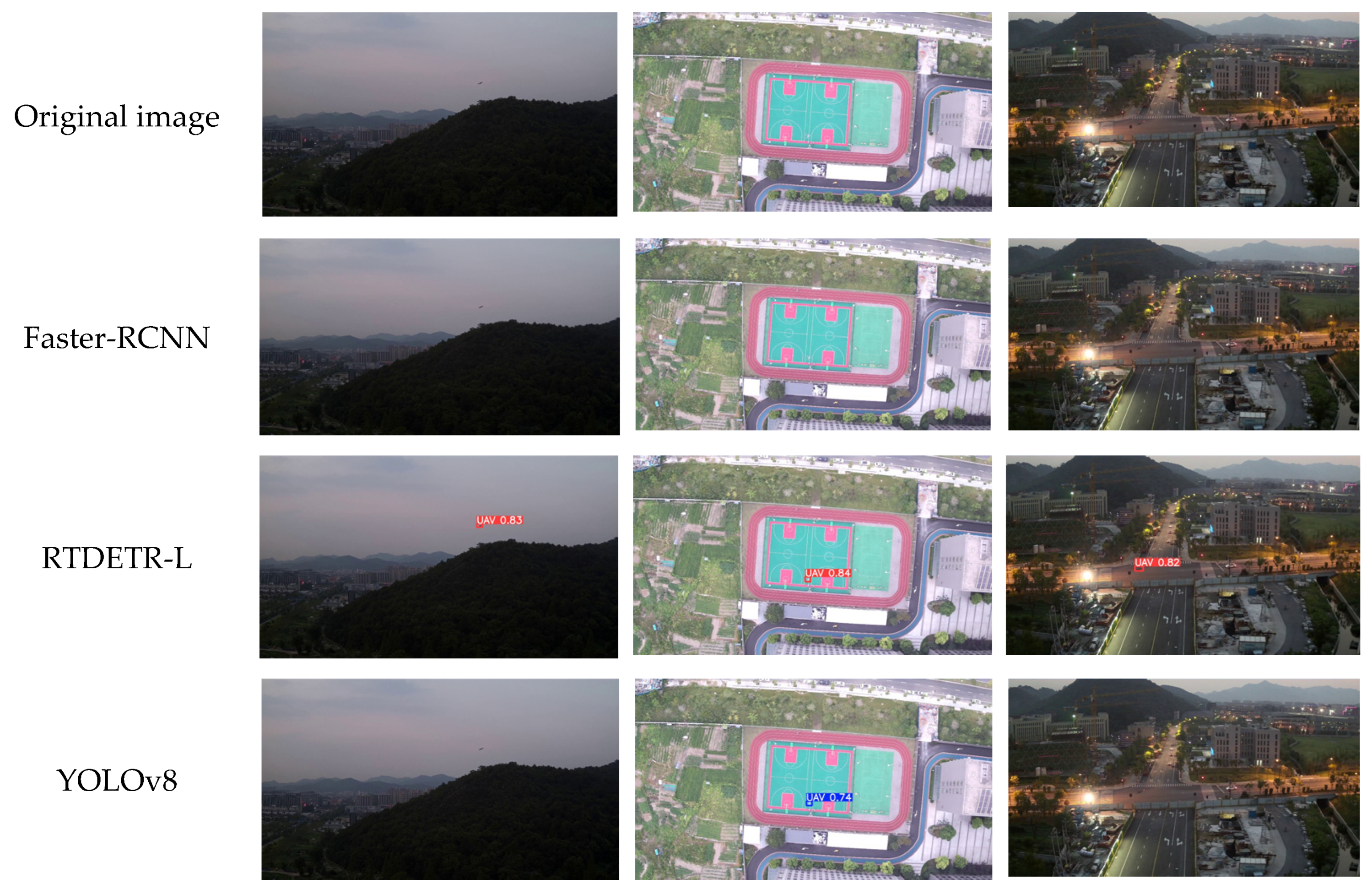

Figure 12 visualizes the detection results on representative test images, including bounding boxes and their confidence scores. The proposed model accurately locates small UAV targets even under severe background clutter and occlusion, and it assigns higher confidence scores than the other models. In contrast, competing models often exhibit unstable confidence distributions or miss detections in similar scenes. As shown in Figure 12, in urban scenarios, Faster R-CNN, YOLOv8, YOLOv9, and the baseline model failed to detect the UAV target, whereas our method identified it. Similar failure cases were observed in other complex environments. These results indicate that integrating the C3K2-FB and RFCBAM modules enhances the model's ability to focus on discriminative regions while suppressing background noise. This reduces false positives and false negatives in complex scenes.

Figure 13 presents heatmaps generated using the Grad-CAM method to visualize the model’s attention distribution across feature maps at different levels. The proposed model exhibits clear attention focus on the UAV’s contour and body region in the high-level semantic features, rather than being misled by irrelevant background textures such as trees, buildings, or sky. This result further validates the contribution of the MS_FPN module in enhancing edge sensitivity and spatial awareness, thereby improving the model’s perception accuracy for small or indistinct targets.
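Grad-CAM computes a heatmap in three steps:

1. Weight each activation map A_k of a chosen layer by the spatial average of the gradient of the target score with respect to that map.
2. Sum the weighted maps.
3. Keep only positive evidence with a ReLU.

The pure-Python sketch below takes precomputed activations and gradients as inputs. The section does not specify which layer or which detection score is backpropagated, so those choices are left open here.

```python
def grad_cam(activations, gradients):
    """activations, gradients: K maps of size H x W (nested lists) for one layer.
    alpha_k = spatial mean of d(score)/dA_k; CAM = ReLU(sum_k alpha_k * A_k), scaled to [0, 1]."""
    h, w = len(activations[0]), len(activations[0][0])
    alphas = [sum(map(sum, g)) / (h * w) for g in gradients]
    cam = [[max(0.0, sum(a * A[i][j] for a, A in zip(alphas, activations)))
            for j in range(w)]
           for i in range(h)]
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


acts = [[[1.0, 0.0], [0.0, 0.0]],    # channel 0 fires at the top-left pixel
        [[0.0, 0.0], [0.0, 1.0]]]    # channel 1 fires at the bottom-right pixel
grads = [[[1.0] * 2] * 2,            # score increases with channel 0
         [[-1.0] * 2] * 2]           # score decreases with channel 1
print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 0.0]]
```

Only the region whose activation raises the score survives the ReLU. This is why a well-trained detector's heatmap concentrates on the UAV body rather than on background textures.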

4. Conclusions

This paper proposes a multi-scale and small object detection framework tailored for low-altitude UAV detection in cluttered environments, where small object size, background complexity, and scale variation present significant challenges. The framework builds on the YOLO11 architecture and introduces three key enhancements:

- the C3K2-FB module, which improves feature extraction and suppresses irrelevant background noise;
- the MS_FPN module, which provides effective multi-scale fusion and enhanced edge representation of small objects;
- the RFCBAM detection head, which integrates spatial and channel attention to improve focus on discriminative features.

Experimental results show that our model achieves an mAP@0.5 of 94.4% and an mAP@0.5:0.95 of 60.9%, outperforming the baseline YOLO11n by 6.9 percentage points in mAP@0.5:0.95. Compared with other state-of-the-art models such as RT-DETR-L and YOLOv10n, our framework offers a better trade-off between detection accuracy and model complexity, with only 2.7 M parameters and 11.2 GFLOPs. These characteristics make the framework a promising candidate for deployment in real-time UAV monitoring and security defense applications, such as airport perimeter protection, sensitive facility surveillance, and public event safety.

Despite these encouraging results, current limitations remain. The proposed model has not been extensively validated under dynamic tracking conditions or in extreme weather environments, where motion blur, occlusion, and lighting variance may degrade performance. In future work, we plan to perform comprehensive cross-dataset evaluation using benchmarks like UAVSwarm and UAVFly, as well as extend the model to real-time multi-target tracking, UAV swarm detection, and multi-sensor fusion scenarios to further enhance its robustness and application potential in real-world deployments.