Transformer-Driven GAN for High-Fidelity Edge Clutter Generation with Spatiotemporal Joint Perception

Abstract

1. Introduction

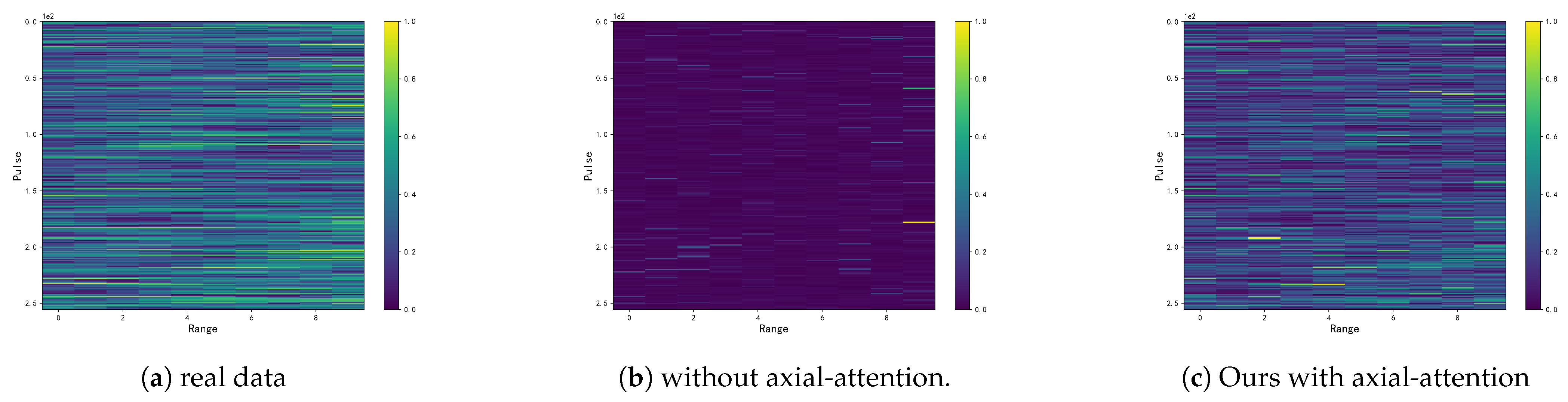

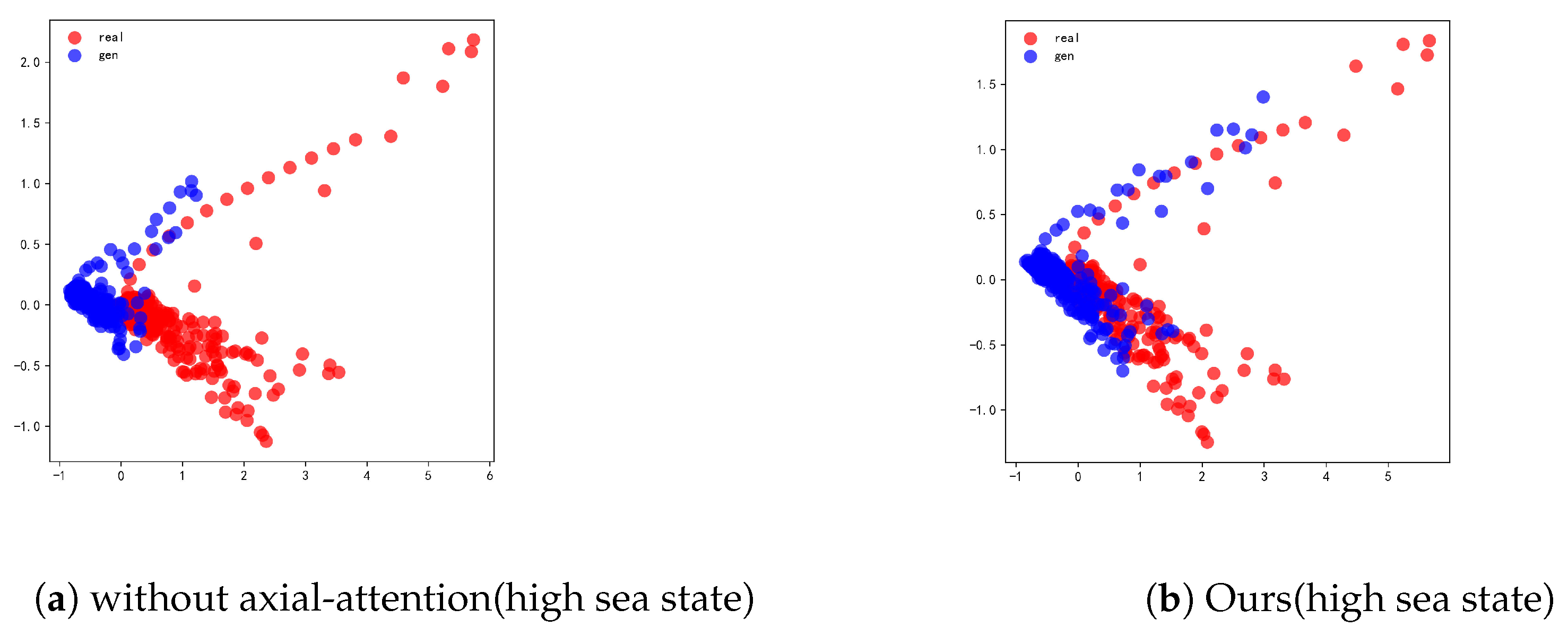

- We propose an approach that combines a transformer network with a GAN to extract spatiotemporal features from sea clutter data and generate realistic clutter samples. Specifically, by replacing standard attention mechanisms with axial attention, we effectively extract long-range spatiotemporal dynamics from sea clutter data.

- We propose a technique for creating and using two-dimensional variable-length vectors to maintain the spatiotemporal properties of sea clutter data, improving the realism of the generated samples.

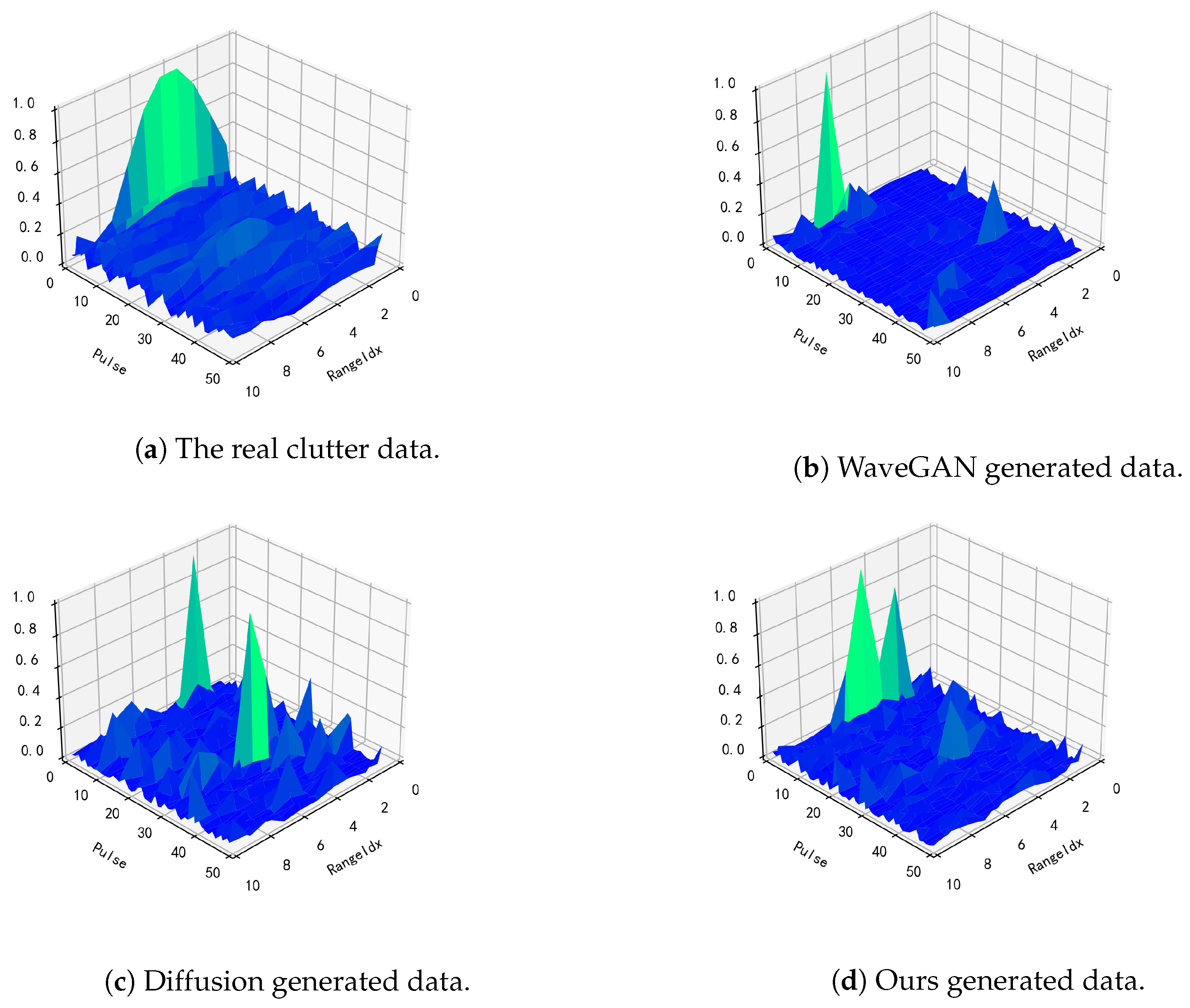

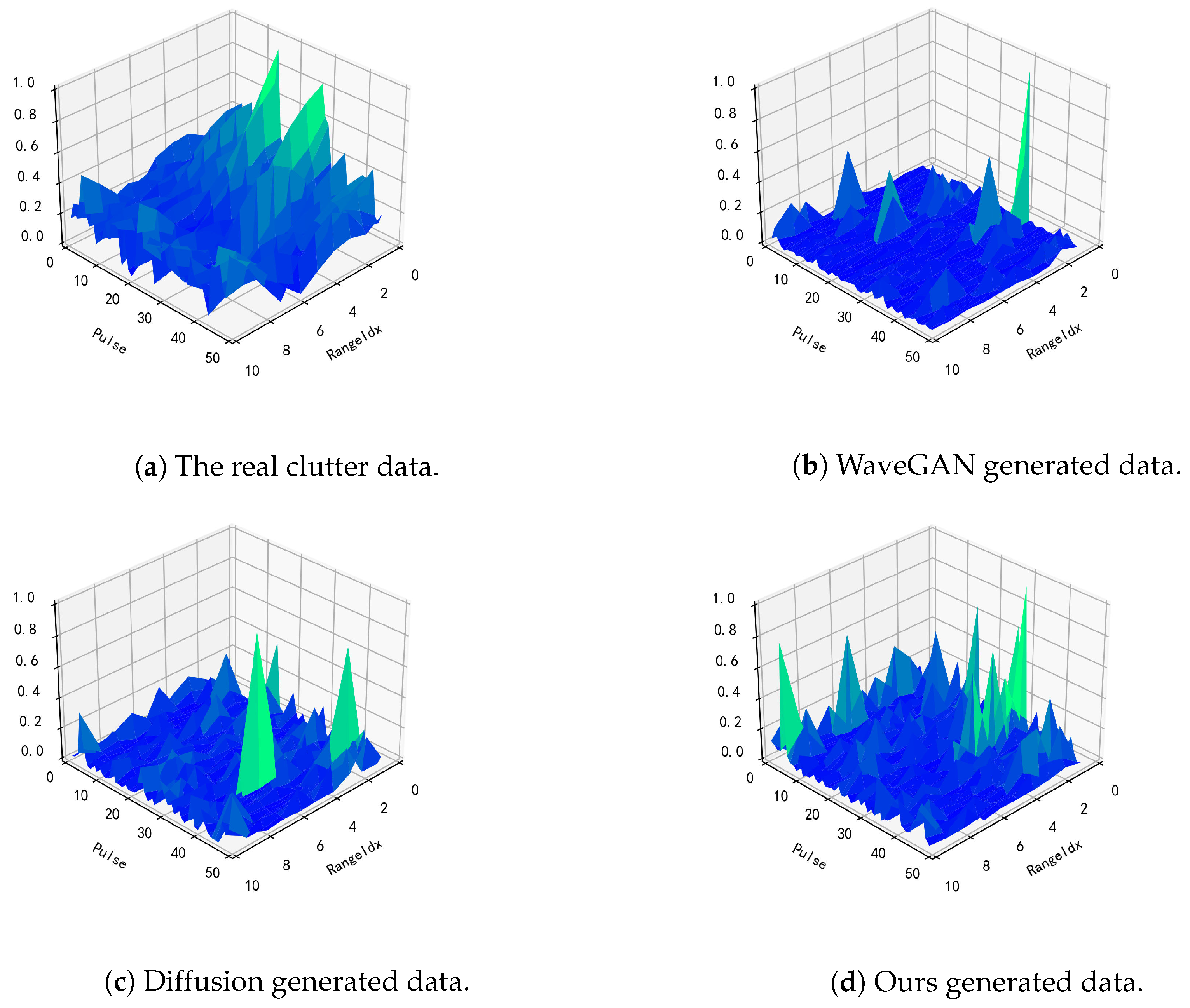

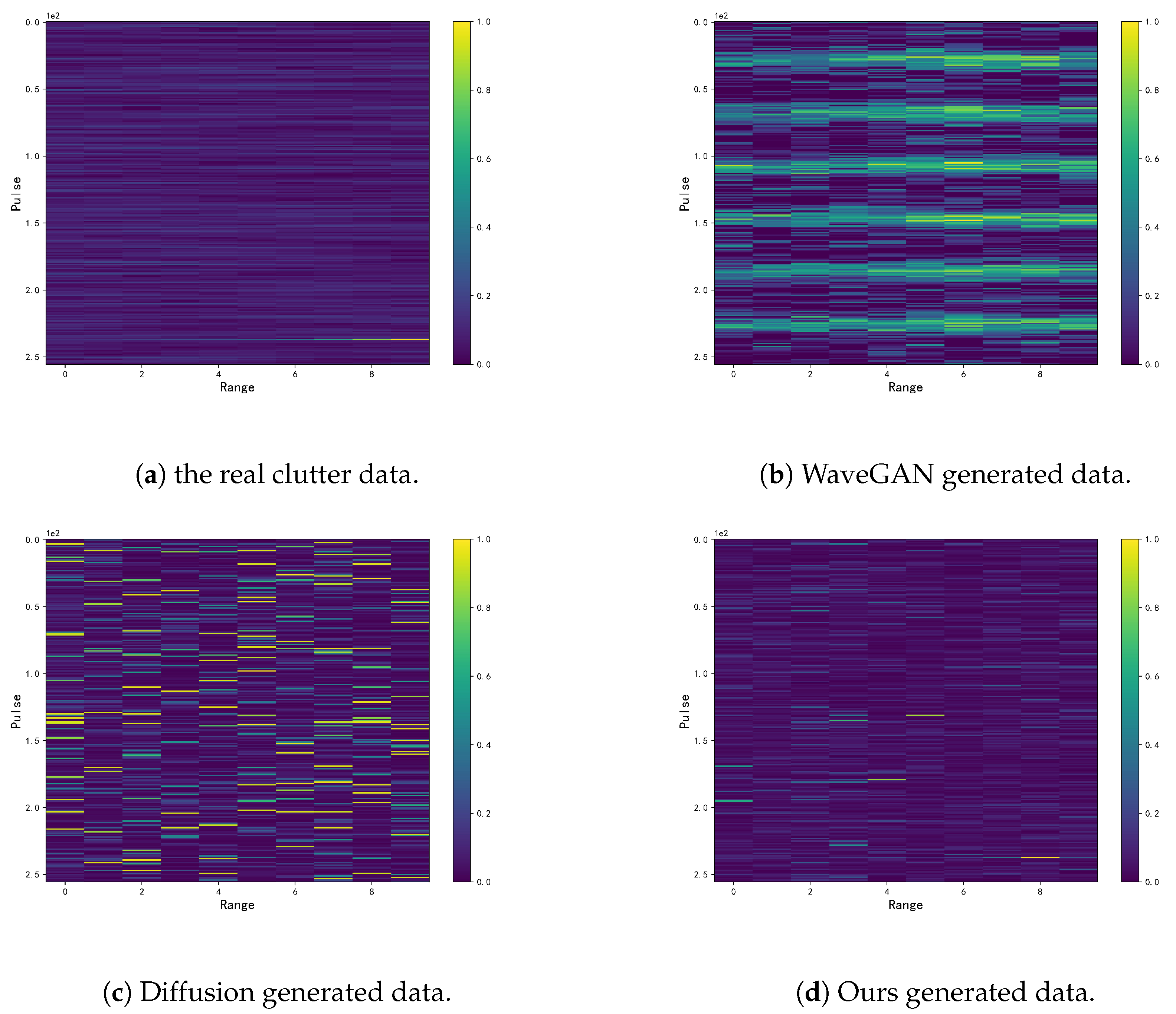

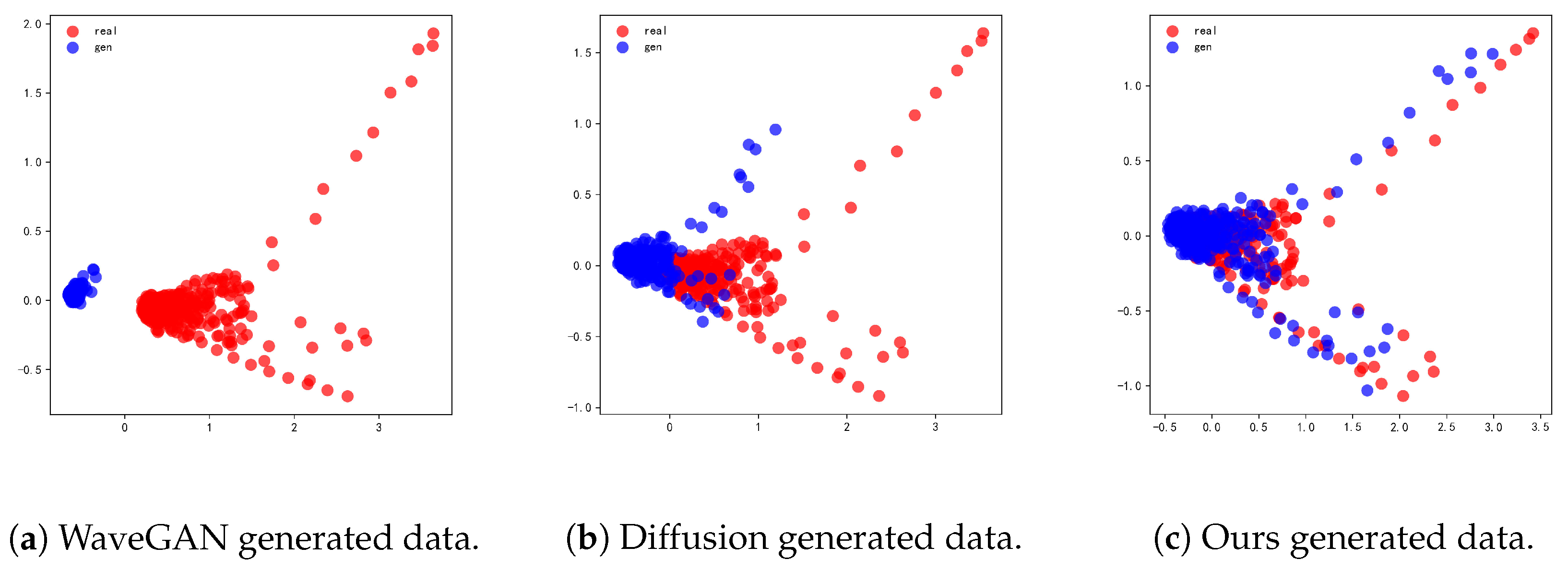

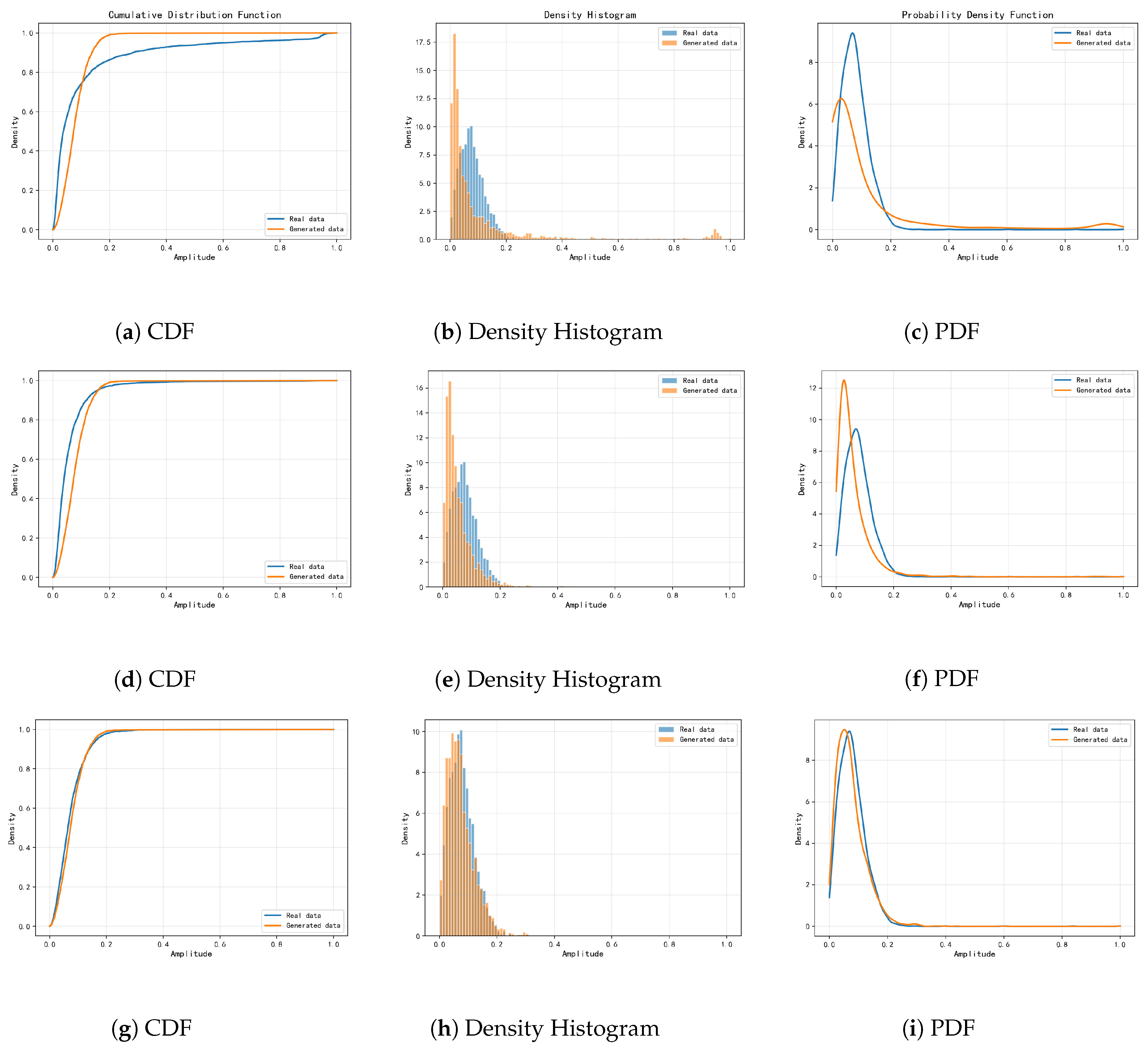

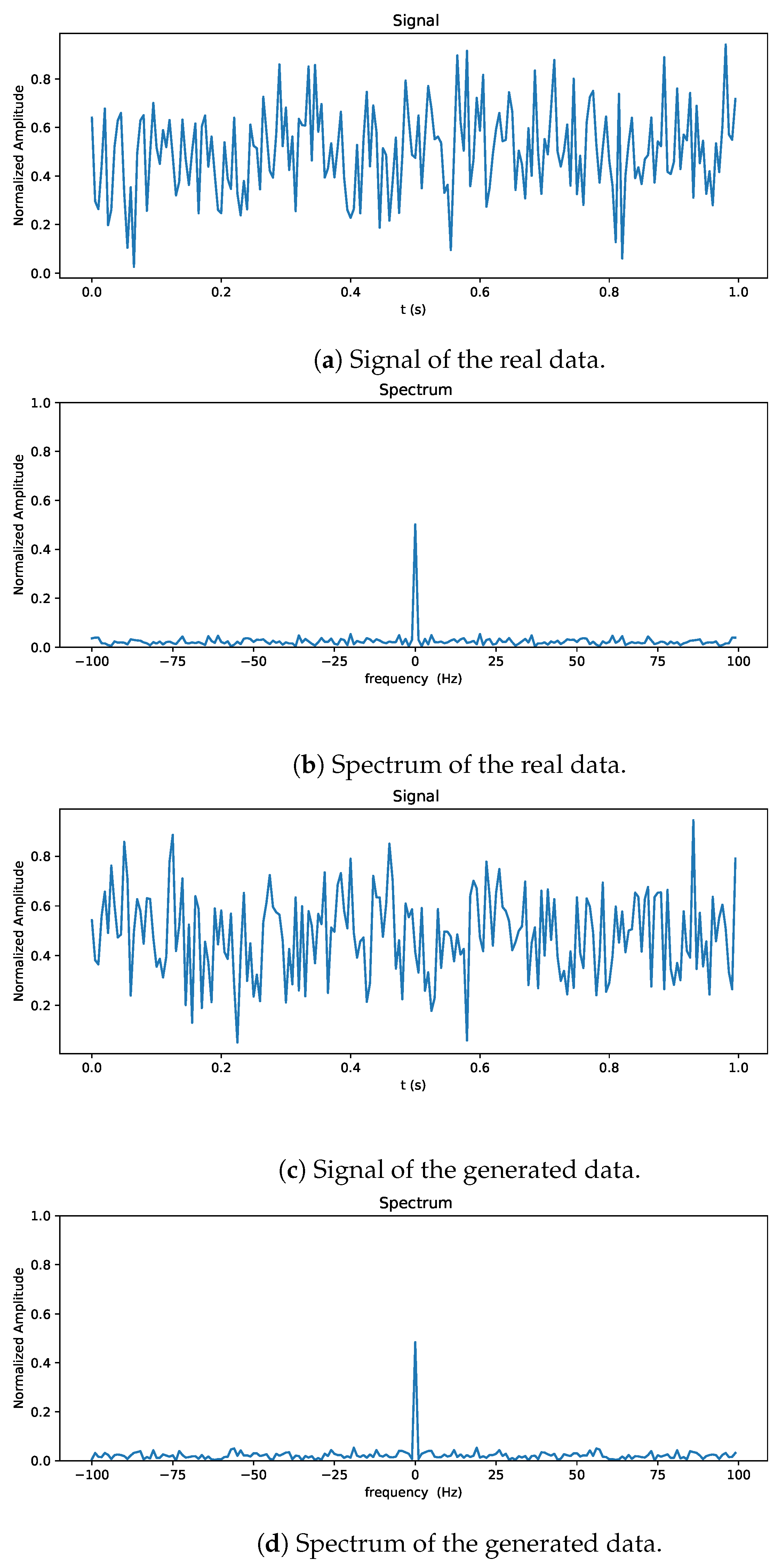

- We conducted qualitative and quantitative experiments that compare the generated data with real data and with traditional, GAN-based, and other mainstream clutter generation methods, confirming the superior accuracy of the proposed approach.

2. Background

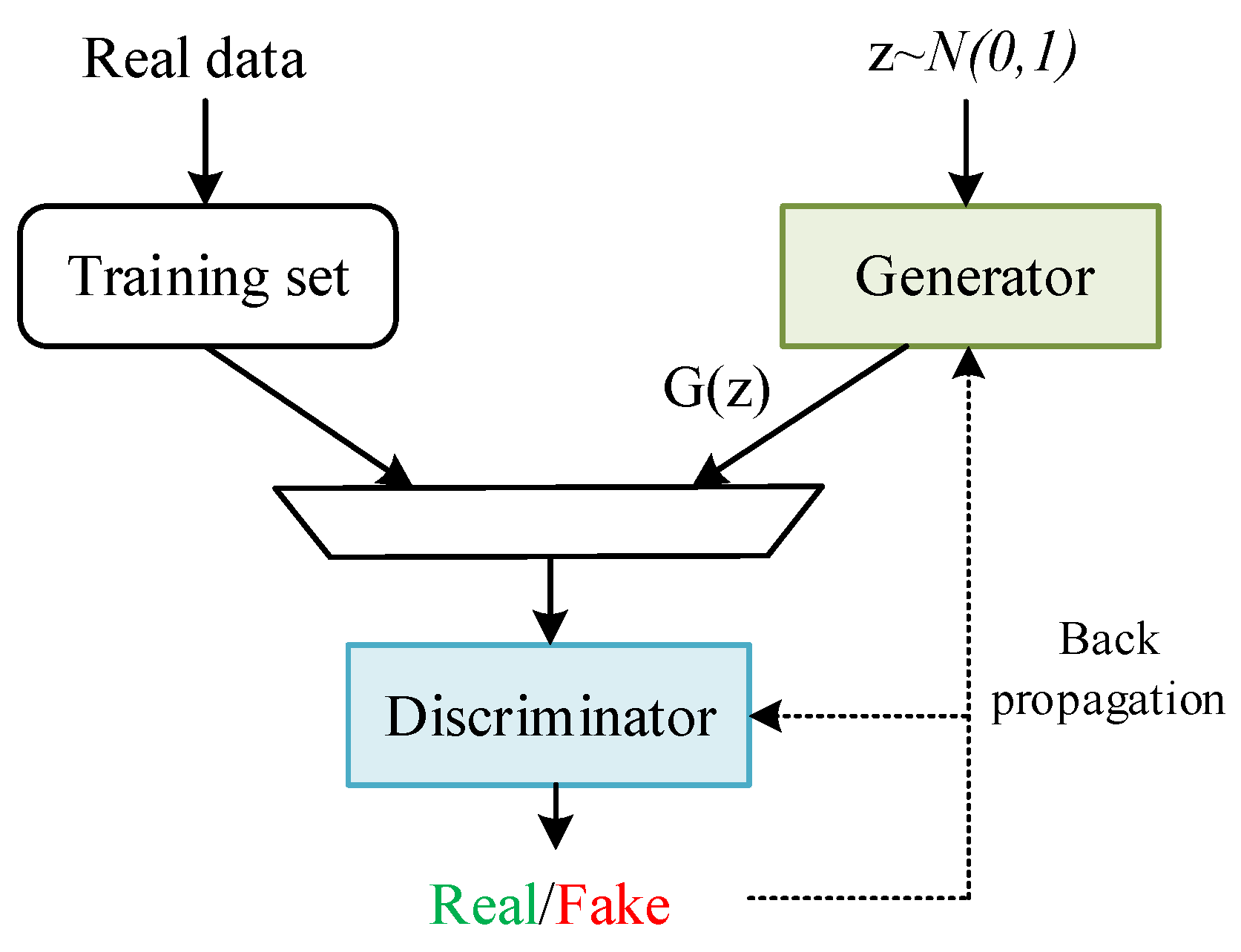

2.1. Generative Adversarial Network

2.2. Transformer

2.3. Characteristics of Radar Clutter

2.4. Evaluation Metrics in Sea Clutter Generation

2.4.1. Limitations of Traditional Metrics

2.4.2. Sim Metric Design (Multi-Moment Cosine Similarity)

2.4.3. Sufficiency and Necessity of the Similarity Metric

- Sufficiency: Sim jointly constrains first-order (location), second-order (spread and covariance), and higher-order (tail heaviness and asymmetry) statistics, thus capturing central tendency, dispersion, heavy tails, asymmetry, and inter-channel coupling within a single, stable criterion—unlike single-aspect distances (KL/KS/JS).

- Necessity: Robust comparison across sea states and polarizations requires higher-order moments in addition to mean/variance; omitting skewness or kurtosis sharply reduces sensitivity to heavy-tailed or asymmetric differences. Therefore, combining multi-moment features under cosine similarity is necessary to distinguish distributions with similar low-order moments but different shapes.
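The sufficiency and necessity arguments above can be made concrete with a small sketch. The exact Sim formula is not reproduced in this section. The feature layout used here is therefore an assumption for illustration, not the authors' definition. It takes the per-channel mean, standard deviation, skewness and excess kurtosis, plus the pairwise inter-channel correlations. The helper names `moment_features` and `sim` are also hypothetical.

```python
import numpy as np


def moment_features(x: np.ndarray) -> np.ndarray:
    """Multi-moment feature vector for samples of shape (n_samples, n_channels).

    Hypothetical layout: per-channel mean, standard deviation, skewness and
    excess kurtosis, followed by the pairwise inter-channel correlations.
    Requires at least two channels (e.g. real, imaginary, amplitude).
    """
    x = np.asarray(x, dtype=float)
    mu = x.mean(axis=0)                    # first order: location
    sigma = x.std(axis=0)                  # second order: spread
    z = (x - mu) / sigma                   # standardized samples
    skew = (z ** 3).mean(axis=0)           # third order: asymmetry
    kurt = (z ** 4).mean(axis=0) - 3.0     # fourth order: tail heaviness
    corr = np.corrcoef(x, rowvar=False)    # inter-channel coupling
    upper = np.triu_indices(x.shape[1], k=1)
    return np.concatenate([mu, sigma, skew, kurt, corr[upper]])


def sim(real: np.ndarray, generated: np.ndarray) -> float:
    """Cosine similarity between the multi-moment feature vectors."""
    a, b = moment_features(real), moment_features(generated)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))
```

As an example, take real, imaginary, and amplitude channels stacked as columns. A compound-Gaussian (heavy-tailed) sample whose real and imaginary parts have the same mean and variance as Gaussian clutter still scores lower than a second Gaussian draw does. This is the "similar low-order moments, different shape" case the necessity argument describes.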

3. Methodology

3.1. Spatiotemporal Vectors

- Dataset: This study employs an X-band radar dataset collected for maritime detection tasks [47]. The radar is equipped with a solid-state power amplifier and uses pulse compression. The transmitted single-pulse widths range from 40 ns to 100 μs, yielding a maximum range resolution of 6 m at a nominal transmit power of 100 W. A total of 160,000 pulses, each sampled at 950 range bins, were selected for training and evaluating our high-fidelity sea clutter generation framework. The dataset covers a sufficiently diverse range of pulse widths to let the model cope with varying range resolutions and energy scaling effects during training. Furthermore, building on evidence that end-to-end trained deep convolutional and transformer-based architectures can autonomously learn sensor-specific parameters [48,49], we rely on the network to implicitly internalize pulse width dependencies. The detailed radar operating parameters are summarized in Table 1.
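As a quick consistency check, assume the 6 m figure follows from the 25 MHz bandwidth listed in Table 1 through the standard pulse-compression relation $\Delta R = c/(2B)$. The numbers then agree:

$$
\Delta R = \frac{c}{2B} = \frac{3 \times 10^{8}\ \mathrm{m/s}}{2 \times 25 \times 10^{6}\ \mathrm{Hz}} = 6\ \mathrm{m}
$$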

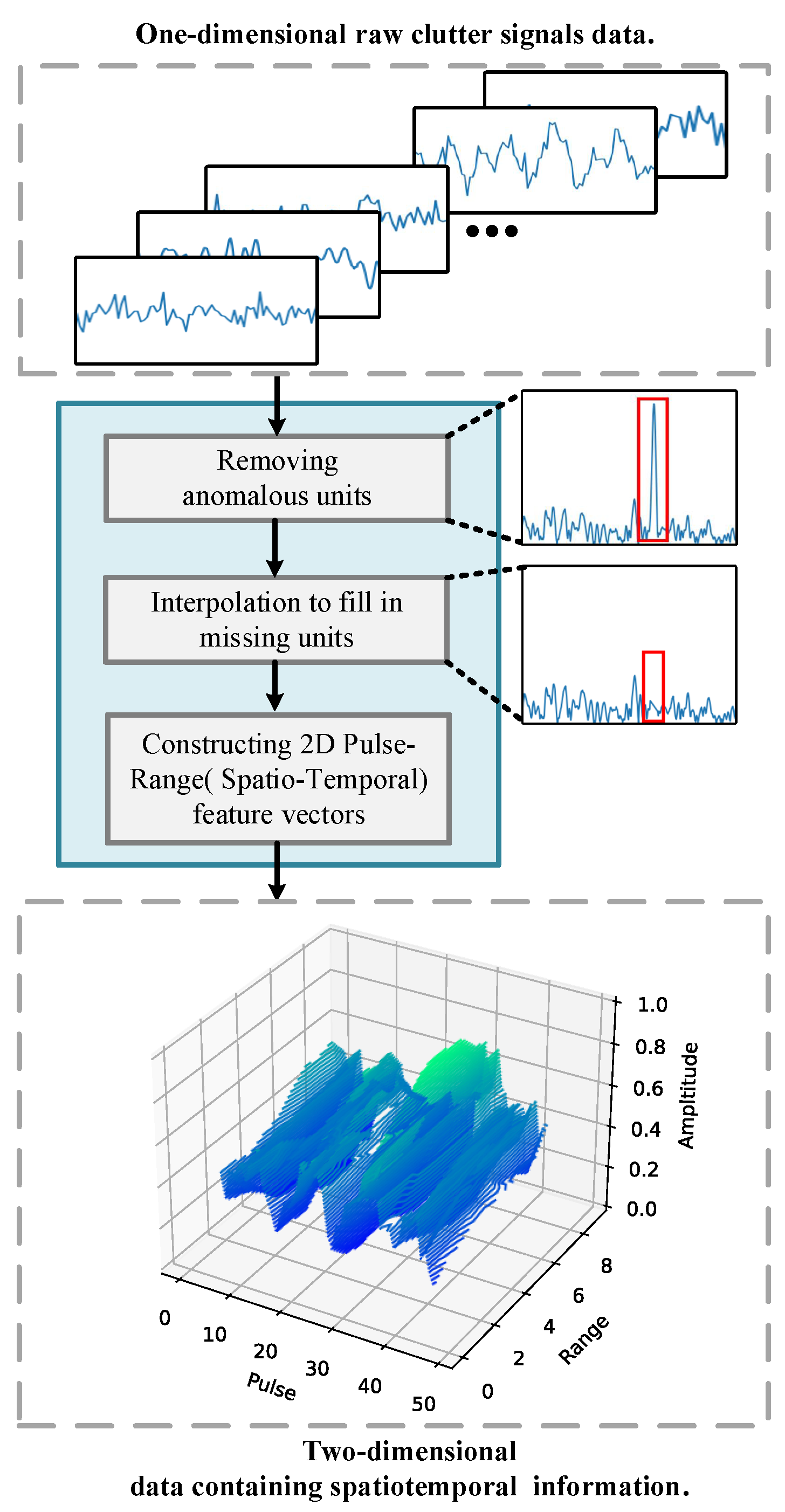

- Outlier removal and interpolation: Small or cooperative objects on the sea surface, as well as potential sea spikes, frequently appear in sea clutter datasets. These units are referred to as anomalous units because their amplitudes differ markedly from those of neighboring units. To obtain a pure clutter dataset, these anomalous units must be removed so that they do not interfere with the experimental results. To maintain data continuity, the missing units are then filled using three interpolation techniques: linear interpolation, mean interpolation, and polynomial interpolation. Using several filling schemes preserves the diversity of the filled data.

- Construction of spatiotemporal feature vectors: Because the sea clutter data are partitioned into range cells according to the correlation length of the sea clutter, multiple time-domain signals are stacked within each range unit. This partitioning yields a two-dimensional vector representation of sea clutter that helps retain the spatiotemporal properties of the data. Figure 3 shows the procedure for creating the spatiotemporal feature vectors.

- Data preprocessing and spatiotemporal feature construction: The goal is a pure sea clutter dataset that keeps its intrinsic spatiotemporal structure. Let the raw complex echoes be $\mathbf{X} \in \mathbb{C}^{M \times P}$, with M range cells and P pulses. We construct a three-channel real tensor $\mathbf{T} \in \mathbb{R}^{3 \times M \times P}$ from $\mathbf{X}$ and process it in the following steps.
  - Outlier removal: Small targets or sea spikes, whose amplitudes deviate sharply from their neighbors, are marked by index intervals. Every entry inside a marked interval is set to missing, which excises the anomalous units from the dataset.
  - Interpolation: To restore continuity, missing values are filled along the range (spatial) dimension using three complementary schemes: linear interpolation, mean interpolation, and polynomial interpolation. Using all three preserves statistical diversity and avoids bias toward any single filling strategy.
  - Global window determination: For each sample, we estimate the time-domain autocorrelation length (in pulses) and select a window length w that matches this scale. Each window then spans at least one estimated correlation length, which preserves the coherence between pulses within the sample. In addition, all samples are anchored to a common minimum correlation scale, so that statistics are more comparable across samples.
  - Spatiotemporal feature extraction: During iteration, a random file is loaded and a sub-tensor $\mathbf{T}_{:,\,r:r+d,\,t:t+w}$ of shape $3 \times d \times w$ is extracted. Here $r \in \{0, \dots, M-d\}$ is the range offset and $t \in \{0, \dots, P-w\}$ is the pulse offset. This block preserves temporal correlation over w pulses and spatial correlation over d range cells, yielding a spatiotemporal patch (tensor) for downstream learning.
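The outlier-removal and interpolation steps above can be sketched in NumPy as follows. The source does not state how anomalous units are detected. The rule used here (amplitude above `k` times the local median over neighboring range cells) is therefore a hypothetical choice, as are its parameters `k` and `half_win`. Other assumptions are picking one filling scheme at random per pulse, and using real, imaginary, and amplitude as the three output channels.

```python
import numpy as np


def mark_outliers(x: np.ndarray, k: float = 5.0, half_win: int = 5) -> np.ndarray:
    """Flag anomalous units in complex echoes x of shape (M, P) (range x pulse).

    Hypothetical rule: a cell is anomalous when its amplitude exceeds k times
    the median amplitude of the surrounding range cells in the same pulse.
    """
    amp = np.abs(x)
    M = amp.shape[0]
    mask = np.zeros(amp.shape, dtype=bool)
    for m in range(M):
        lo, hi = max(0, m - half_win), min(M, m + half_win + 1)
        local_median = np.median(amp[lo:hi], axis=0)   # one value per pulse
        mask[m] = amp[m] > k * local_median
    return mask


def fill_range_profile(y: np.ndarray, method: str, deg: int = 2, win: int = 8) -> np.ndarray:
    """Fill NaNs in one real-valued range profile with the chosen scheme."""
    idx = np.arange(y.size)
    good = ~np.isnan(y)
    out = y.copy()
    if good.all() or not good.any():
        return out
    if method == "linear":
        out[~good] = np.interp(idx[~good], idx[good], y[good])
    elif method == "mean":
        out[~good] = y[good].mean()
    elif method == "poly":
        for i in idx[~good]:
            nb = idx[good][np.abs(idx[good] - i) <= win]   # valid neighbors
            if nb.size > deg:
                # Local polynomial centered at i; its constant term is the estimate at i
                out[i] = np.polyfit(nb - i, y[nb], deg)[-1]
            else:
                out[i] = np.interp(i, idx[good], y[good])
    else:
        raise ValueError(f"unknown method: {method}")
    return out


def preprocess(x: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Outlier removal + interpolation, returning an assumed (3, M, P) tensor."""
    mask = mark_outliers(x)
    re, im = x.real.astype(float), x.imag.astype(float)
    re[mask], im[mask] = np.nan, np.nan                  # excise anomalous units
    methods = ("linear", "mean", "poly")
    for p in range(x.shape[1]):
        method = methods[rng.integers(len(methods))]     # hypothetical: one scheme per pulse
        re[:, p] = fill_range_profile(re[:, p], method)
        im[:, p] = fill_range_profile(im[:, p], method)
    filled = re + 1j * im
    # Assumed channel order: real, imaginary, amplitude
    return np.stack([filled.real, filled.imag, np.abs(filled)])
```

Real and imaginary parts are filled separately with the same scheme, so each filled cell stays consistent across channels. The amplitude channel is recomputed from the filled complex values.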

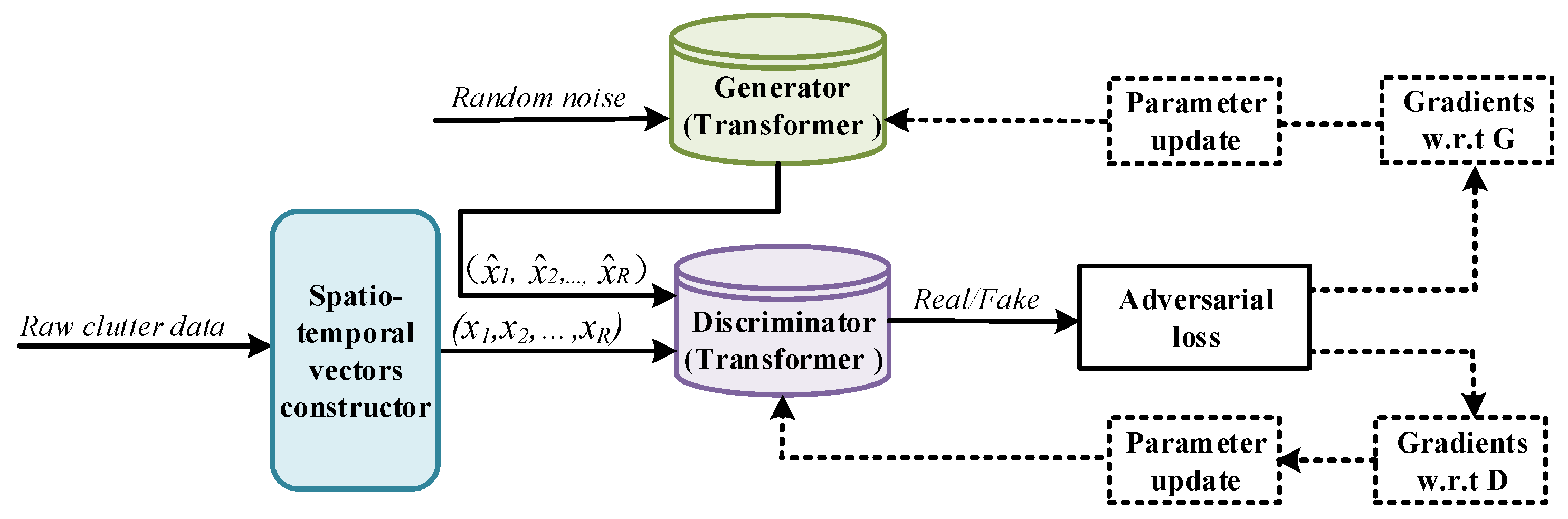
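The window-determination and patch-extraction steps described in this subsection can be sketched as follows. Several choices here are assumptions rather than the authors' definitions:

- the correlation length is defined as the first lag at which the range-averaged magnitude of the normalized autocorrelation falls below 1/e;
- the "common minimum correlation scale" is modeled as a hypothetical floor `w_min`;
- the offsets r and t are drawn uniformly.

```python
import numpy as np


def correlation_length(x: np.ndarray, threshold: float = np.exp(-1)) -> int:
    """Estimate the temporal correlation length (in pulses) of echoes x of shape (M, P).

    Assumed definition: the first lag at which the range-averaged magnitude of
    the normalized autocorrelation along the pulse axis drops below 1/e.
    """
    x = x - x.mean(axis=1, keepdims=True)
    P = x.shape[1]
    spec = np.fft.fft(x, n=2 * P, axis=1)            # zero-pad: linear, not circular, ACF
    acf = np.fft.ifft(np.abs(spec) ** 2, axis=1)[:, :P]
    rho = np.abs(acf / acf[:, :1]).mean(axis=0)      # normalize per range cell, then average
    below = np.flatnonzero(rho < threshold)
    return int(below[0]) if below.size else P


def window_length(x: np.ndarray, w_min: int = 16) -> int:
    """Hypothetical rule: cover at least one correlation length, never below w_min."""
    return max(correlation_length(x), w_min)


def random_patch(T: np.ndarray, d: int, w: int, rng: np.random.Generator) -> np.ndarray:
    """Extract T[:, r:r+d, t:t+w] at a random range offset r and pulse offset t."""
    _, M, P = T.shape
    r = rng.integers(0, M - d + 1)
    t = rng.integers(0, P - w + 1)
    return T[:, r:r + d, t:t + w]
```

Computing the autocorrelation through a zero-padded FFT keeps the estimate linear (no wrap-around) and costs O(P log P) per range cell rather than O(P^2).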

3.2. Transformer GAN Model Architecture

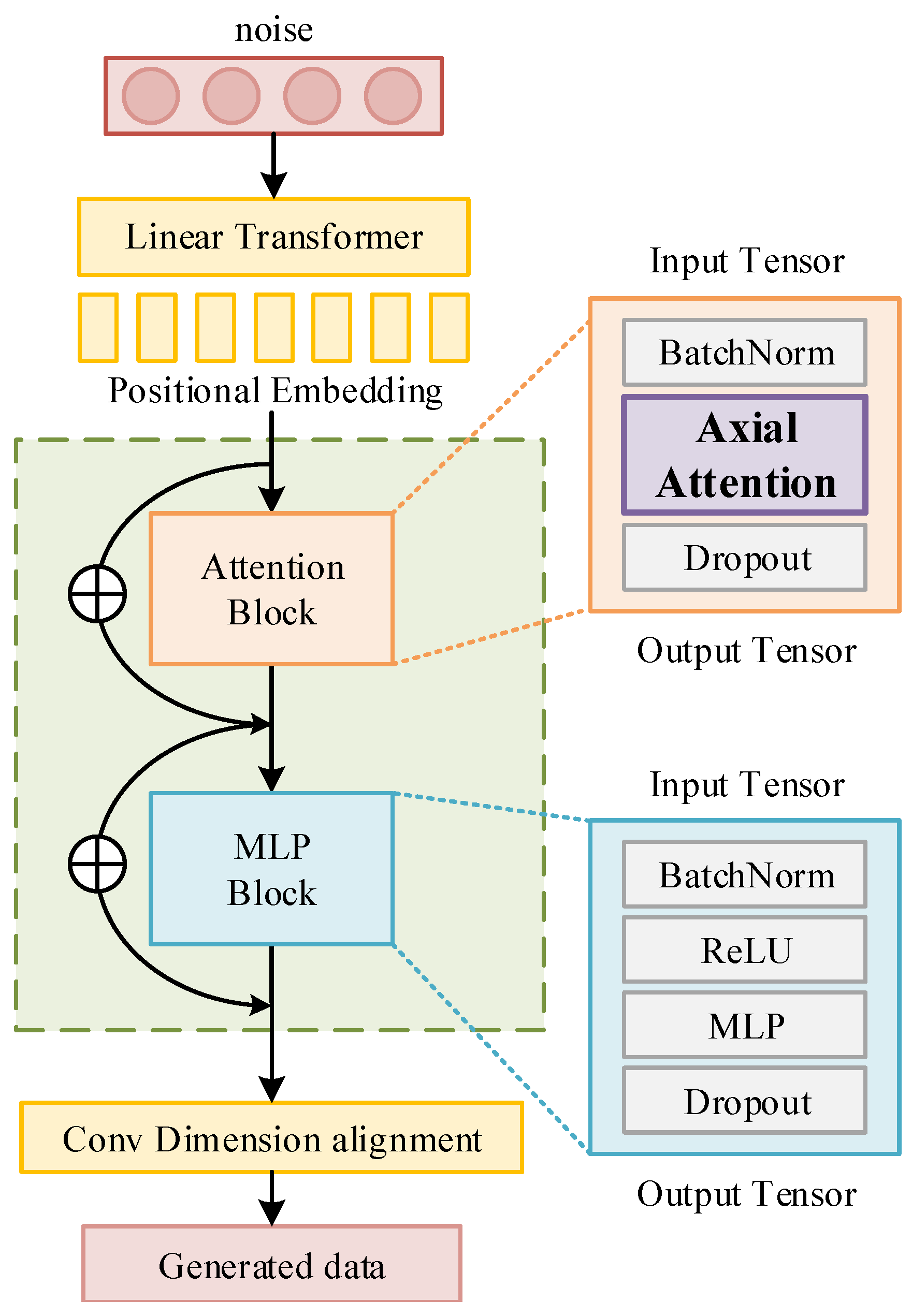

- Normalization, activation, and regularization: Each block adopts a GELU nonlinearity followed by a feedforward MLP, with residual connections between blocks to preserve information and stabilize gradient flow. To mitigate covariate shift, we use a pre-normalization strategy: grid-based branches processed by convolutions use batch normalization (BN), whereas transformer sublayers use layer normalization (LN) [50]. Dropout is applied after the main transforms to reduce overfitting. The combination of BN, LN, GELU, MLP, and dropout yields stable optimization for sea clutter generation and maintains generalization across sea states [51,52,53].

The architecture of the generator is illustrated in Figure 4. The generator takes a noise sequence as its input. To help the model capture the spatiotemporal correlations present in the data, the noise sequence is split into several patches, and each patch is augmented with a positional encoding. These patches are then passed through the encoder blocks. By segmenting the noise sequence into patches and incorporating positional encoding, the generator can efficiently capture the spatiotemporal properties of the data and produce high-quality samples.

The discriminator follows the Vision Transformer (ViT) design, using an encoder to classify input data as real or generated [54]. Owing to the special nature of clutter signals and the characteristics of neural networks, real data must be converted into real numbers and normalized before being fed into the discriminator. Consider a spatiotemporal vector $\mathbf{x} \in \mathbb{C}^{P \times R}$ obtained from the processing in Section 3.1, where P is the number of pulses and R is the number of range cells. To retain the information in the complex signal, we map the real and imaginary parts into two channels, i.e., $\mathbf{x}' \in \mathbb{R}^{C \times P \times R}$ with $C = 2$, where C is the number of channels.
Because the signal amplitudes span a large range, which can hinder or even prevent model convergence, the real data must be normalized. ViT divides an image into many patches of equal width and height. Following the same strategy, we evenly partition the sea clutter data into multidimensional segments, using the time step as the width and the correlation length of the sea clutter as the height. Each segment also receives a positional encoding to help the discriminator grasp the spatial and temporal characteristics of the clutter.
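The conversion, normalization, and patch-plus-position step described above can be sketched in NumPy. It is shown for the discriminator input; the generator's noise patches would be tokenized analogously. The following are all assumptions, not the authors' settings:

- the max-magnitude normalization;
- the patch sizes `pw` (time step) and `ph` (correlation length in range cells);
- the linear projection `proj`;
- the fixed sinusoidal position encoding, swapped in for simplicity (ViT itself uses learned position embeddings).

```python
import numpy as np


def to_dual_channel(x: np.ndarray) -> np.ndarray:
    """Map a complex (P, R) spatiotemporal vector to a real (2, P, R) tensor."""
    return np.stack([x.real, x.imag]).astype(np.float32)


def normalize(x2: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Hypothetical normalization: divide by the peak magnitude so values lie in [-1, 1]."""
    return x2 / (np.abs(x2).max() + eps)


def patchify(x2: np.ndarray, pw: int, ph: int) -> np.ndarray:
    """Split (C, P, R) into non-overlapping pw-pulse x ph-range-cell patches -> (N, C*pw*ph)."""
    C, P, R = x2.shape
    if P % pw or R % ph:
        raise ValueError("P and R must be divisible by the patch size")
    blocks = x2.reshape(C, P // pw, pw, R // ph, ph).transpose(1, 3, 0, 2, 4)
    return blocks.reshape(-1, C * pw * ph)


def sinusoidal_positions(n: int, dim: int) -> np.ndarray:
    """Sine/cosine positional encoding for n tokens (dim must be even)."""
    pos = np.arange(n)[:, None]
    freq = 1.0 / 10000 ** (np.arange(0, dim, 2) / dim)
    pe = np.zeros((n, dim), dtype=np.float32)
    pe[:, 0::2] = np.sin(pos * freq)
    pe[:, 1::2] = np.cos(pos * freq)
    return pe


def embed(x: np.ndarray, pw: int, ph: int, proj: np.ndarray) -> np.ndarray:
    """Complex clutter -> normalized patches -> linear projection + positions."""
    tokens = patchify(normalize(to_dual_channel(x)), pw, ph) @ proj
    return tokens + sinusoidal_positions(*tokens.shape)
```

Patches are ordered pulse-block first, then range-block, so token index n corresponds to pulse block n // (R / ph) and range block n % (R / ph).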

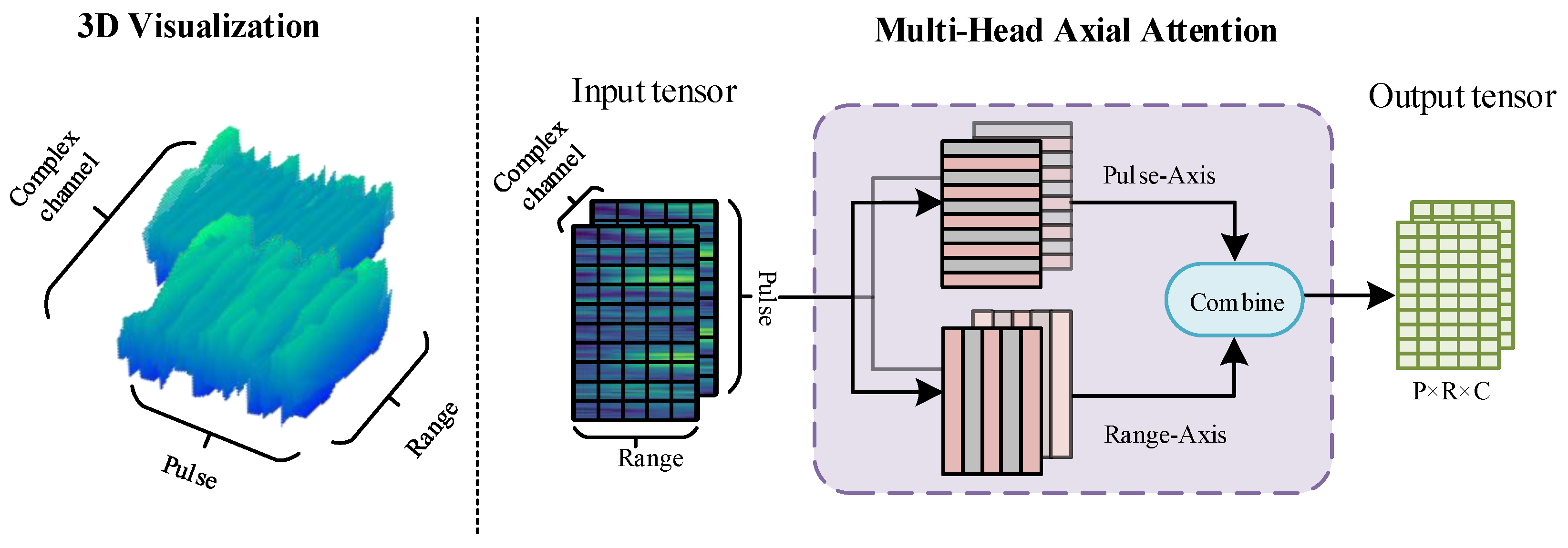

- Pulse-range axial attention: Unlike flat images, sea clutter data exhibit different properties along different dimensions, so the correlations between units along each dimension differ from those between pixels in a 2D image. We break down the multihead attention mechanism into two modules so that the network learns the correlations along each dimension separately and avoids the cost of computing global attention. The first module performs self-attention along the pulse dimension, whereas the second performs self-attention along the spatial (range) dimension; we refer to this operation as pulse-range axial attention. Axial attention captures the relevant information in both dimensions without the computational burden of global attention, and it lets the network focus on the important relationships within each dimension. Applying axial attention to both the pulse and spatial dimensions approximates the full attention mechanism while substantially improving computational efficiency. Figure 5 illustrates the axial attention designed in this paper, which is a parallelized spatiotemporal axial attention mechanism. Because attention is computed independently along each dimension, the computational complexity per position in the spatiotemporal vector drops from $\mathcal{O}(HW)$ to $\mathcal{O}(H+W)$, where H and W are the numbers of tokens along the pulse and range dimensions. Furthermore, the feature vectors after the attention operation still preserve global information, and the size of the input tensor is unchanged.
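For a specific position $(i, j)$, the output of axial attention is computed as follows:

$$
\mathbf{y}_{i,j} = \operatorname{softmax}\!\left(\frac{\mathbf{q}_{i,j}\,(\mathbf{K}^{\mathrm{p}}_{j})^{\top}}{\sqrt{d_k}}\right)\mathbf{V}^{\mathrm{p}}_{j} + \operatorname{softmax}\!\left(\frac{\mathbf{q}_{i,j}\,(\mathbf{K}^{\mathrm{s}}_{i})^{\top}}{\sqrt{d_k}}\right)\mathbf{V}^{\mathrm{s}}_{i}
$$

Here, $\mathbf{q}_{i,j}$ represents the query vector corresponding to position $(i, j)$. $\mathbf{K}^{\mathrm{p}}_{j}$ and $\mathbf{V}^{\mathrm{p}}_{j}$ are the key and value matrices along the pulse dimension (all pulses at range index j). Similarly, $\mathbf{K}^{\mathrm{s}}_{i}$ and $\mathbf{V}^{\mathrm{s}}_{i}$ are the key and value matrices along the spatial unit dimension (all range cells at pulse index i), and $d_k$ is the key dimension. To enable the network to learn the characteristics of different dimensions separately, the parameters of the two modules are not shared. This design lets the network independently learn the important feature information in the pulse and spatial dimensions, so it better models sea clutter data along each dimension.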
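A minimal NumPy sketch of one pre-norm block can tie together the parallel pulse/range attention and the LN, GELU-MLP, and residual structure described earlier. It rests on several simplifying assumptions and is not the authors' implementation:

- single-head attention, with no output projection or dropout;
- LayerNorm without learned scale and shift;
- separate query projections for each axis, reflecting the unshared parameters in place of the single query in the equation;
- hypothetical parameter names (`pulse`, `range`, `w1`, `w2`).

```python
import numpy as np


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def softmax(a: np.ndarray) -> np.ndarray:
    a = a - a.max(axis=-1, keepdims=True)           # numerical stability
    e = np.exp(a)
    return e / e.sum(axis=-1, keepdims=True)


def gelu(x: np.ndarray) -> np.ndarray:
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def axis_attention(x, wq, wk, wv, axis):
    """Single-head self-attention along one axis of a token grid x of shape (H, W, D).

    axis=0 attends over the H pulse tokens within each range column;
    axis=1 attends over the W range tokens within each pulse row.
    """
    seq = x.transpose(1, 0, 2) if axis == 0 else x   # (batch, length, D)
    q, k, v = seq @ wq, seq @ wk, seq @ wv
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    out = softmax(scores) @ v
    return out.transpose(1, 0, 2) if axis == 0 else out


def axial_block(x, params):
    """Pre-norm block: parallel pulse/range attention with unshared weights, then a GELU MLP."""
    h = layer_norm(x)
    x = x + axis_attention(h, *params["pulse"], axis=0) + axis_attention(h, *params["range"], axis=1)
    h = layer_norm(x)
    return x + gelu(h @ params["w1"]) @ params["w2"]
```

Each token's attention weights span only its own column (H entries) and row (W entries). That is where the per-position $\mathcal{O}(H+W)$ cost comes from; the output keeps the input shape (H, W, D).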

- Axial attention efficiency analysis in sea clutter generation: In two-dimensional sea clutter generation, standard multi-head self-attention (MHSA) computes attention over the entire sequence of length $N = HW$ at once. This incurs time complexity $\mathcal{O}(H^2W^2d)$ and attention-map memory $\mathcal{O}(H^2W^2)$, where H and W are the token counts along the temporal and spatial dimensions and d is the embedding dimension. For large windows, this quadratic growth becomes prohibitive. Axial attention splits the 2D attention into two 1D attentions: row-wise (length W) and column-wise (length H). Its combined complexity is $\mathcal{O}(HW(H+W)d)$, and the memory for attention weights reduces to $\mathcal{O}(HW(H+W))$ instead of $\mathcal{O}(H^2W^2)$. When $H = W$, MHSA has complexity $\mathcal{O}(H^4d)$, whereas axial attention is $\mathcal{O}(2H^3d)$, a reduction by a factor of $H/2$. In summary, axial attention delivers substantial efficiency improvements over standard MHSA in sea clutter generation. It reduces computational complexity from $\mathcal{O}(H^2W^2d)$ to $\mathcal{O}(HW(H+W)d)$ and attention-map memory from $\mathcal{O}(H^2W^2)$ to $\mathcal{O}(HW(H+W))$, while maintaining identical parameter counts. For typical operational window sizes ($256 \le HW \le 16{,}384$), axial attention achieves order-of-magnitude speed-ups (roughly 10-fold) and drastically reduces storage requirements for attention maps.

- Training procedure: The loss of the generator is

$$
L_G = -\mathbb{E}_{\mathbf{z} \sim p_z}\left[D(G(\mathbf{z}))\right]
$$

The loss of the discriminator is

$$
L_D = \mathbb{E}_{\mathbf{z} \sim p_z}\left[D(G(\mathbf{z}))\right] - \mathbb{E}_{\mathbf{x} \sim p_r}\left[D(\mathbf{x})\right] + \lambda\, \mathbb{E}_{\hat{\mathbf{x}}}\left[\left(\lVert \nabla_{\hat{\mathbf{x}}} D(\hat{\mathbf{x}}) \rVert_2 - 1\right)^2\right]
$$

Overall, the generator minimizes its loss so that real and generated sea clutter data become hard to tell apart, while the discriminator learns to distinguish real from generated data. The discriminator loss includes a gradient penalty term weighted by λ. Here $\hat{\mathbf{x}} = \epsilon\mathbf{x} + (1-\epsilon)G(\mathbf{z})$, with $\epsilon \sim U[0,1]$, is a random linear interpolation between real and generated sea clutter samples. The penalty keeps the discriminator's gradient norm close to 1, which keeps gradients well scaled and stabilizes training. In this experiment, λ is set to 10.

The Adam optimizer is used, with a learning rate of 0.0001 for the generator and 0.003 for the discriminator, together with its decay rates $\beta_1$ and $\beta_2$. Additionally, a weight decay of 0.01 is applied. Both the generator and the discriminator use a batch size of 64.

To balance the generator and the discriminator, the following training strategy is employed. The discriminator is first trained for five iterations. After that, in each cycle the generator and the discriminator are trained alternately for three iterations each. This process is repeated to keep the training of the two networks balanced.
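The efficiency analysis of axial attention above can be checked with simple arithmetic. The sketch below counts leading-order operations and attention-map entries with all constant factors dropped. The function name `attention_cost` and the embedding size `d=64` are illustrative assumptions.

```python
def attention_cost(H: int, W: int, d: int) -> dict:
    """Leading-order operation counts and attention-map sizes, constants dropped."""
    N = H * W
    return {
        "mhsa_ops": N * N * d,          # every token attends to all N tokens
        "axial_ops": N * (H + W) * d,   # every token attends to H + W tokens
        "mhsa_map": N * N,
        "axial_map": N * (H + W),
    }


for side in (16, 32, 64, 128):
    c = attention_cost(side, side, d=64)
    print(f"H=W={side:4d}  N={side * side:6d}  "
          f"theoretical speed-up {c['mhsa_ops'] / c['axial_ops']:.0f}x")
```

The ratio is simply HW/(H+W), which equals H/2 for square grids. The theoretical reduction therefore grows from 8x at 256 tokens to 64x at 16,384 tokens. Measured wall-clock speed-ups are typically smaller, because constant factors and memory traffic are ignored here.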
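The gradient penalty and the alternating schedule in the training procedure can be illustrated with a framework-free sketch. Two points are assumptions. First, gradients of the discriminator are taken by central finite differences purely for illustration; a real implementation would use automatic differentiation. Second, the schedule is read as five discriminator warm-up steps followed by cycles of three discriminator steps and then three generator steps. The function names are hypothetical.

```python
import numpy as np


def gradient_penalty(critic, real, fake, lam=10.0, rng=None, h=1e-4):
    """Gradient penalty: lam * E[(||grad_x D(x_hat)||_2 - 1)^2] on random interpolates.

    critic maps a (B, F) batch to (B,) scores, one per row. Gradients use central
    finite differences purely for illustration; a framework would use autograd.
    """
    rng = np.random.default_rng() if rng is None else rng
    eps = rng.uniform(size=(real.shape[0], 1))
    x_hat = eps * real + (1.0 - eps) * fake          # random points between real and fake
    grads = np.zeros_like(x_hat)
    for f in range(x_hat.shape[1]):
        step = np.zeros_like(x_hat)
        step[:, f] = h
        grads[:, f] = (critic(x_hat + step) - critic(x_hat - step)) / (2.0 * h)
    norms = np.linalg.norm(grads, axis=1)
    return lam * float(np.mean((norms - 1.0) ** 2))


def training_schedule(n_cycles, warmup_d=5, d_steps=3, g_steps=3):
    """Hypothetical reading of the schedule: 5 discriminator warm-up steps, then
    cycles of 3 discriminator steps followed by 3 generator steps."""
    steps = ["D"] * warmup_d
    for _ in range(n_cycles):
        steps += ["D"] * d_steps + ["G"] * g_steps
    return steps
```

For a linear critic $D(\mathbf{x}) = \mathbf{w}^{\top}\mathbf{x}$, the gradient is $\mathbf{w}$ everywhere. The penalty is therefore zero when $\lVert\mathbf{w}\rVert_2 = 1$, and equals $\lambda$ when $\lVert\mathbf{w}\rVert_2 = 2$.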

4. Experiments

4.1. Training Setup and Resource Consumption

4.2. Result and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

References

- Ward, K.D.; Tough, R.J.; Watts, S. Sea clutter: Scattering, the k distribution and radar performance. Waves Random Complex Media 2007, 17, 233–234.

- Kim, J.; Kim, T.; Ryu, J.-G.; Kim, J. Spatiotemporal graph neural network for multivariate multi-step ahead time-series forecasting of sea temperature. Eng. Appl. Artif. Intell. 2023, 126, 106854.

- Fernández, J.R.M.; de la Concepción Bacallao Vidal, J. Fast selection of the sea clutter preferential distribution with neural networks. Eng. Appl. Artif. Intell. 2018, 70, 123–129.

- Pérez-Fontán, F.; Vazquez-Castro, M.A.; Buonomo, S.; Poiares-Baptista, J.P.; Arbesser-Rastburg, B. S-band lms propagation channel behaviour for different environments, degrees of shadowing and elevation angles. IEEE Trans. Broadcast. 1998, 44, 40–76.

- Jie, Z.; Dong, C.; Dewei, S. K distribution sea clutter modeling and simulation based on zmnl. In Proceedings of the 2015 8th International Conference on Intelligent Computation Technology and Automation (ICICTA), Nanchang, China, 14–15 June 2015; pp. 506–509.

- Yi, L.; Yan, L.; Han, N. Simulation of inverse gaussian compound gaussian distribution sea clutter based on sirp. In Proceedings of the 2014 IEEE Workshop on Advanced Research and Technology in Industry Applications (WARTIA), Ottawa, ON, Canada, 29–30 September 2014; pp. 1026–1029.

- Ye, L.; Xia, D.; Guo, W. Comparison and analysis of radar sea clutter k distribution sequence model simulation based on zmnl and sirp. Model. Simul. 2018, 7, 8–13.

- Guo, S.; Zhang, Q.; Shao, Y.; Chen, W. Sea clutter and target detection with deep neural networks. In Proceedings of the 2nd International Conference on Artificial Intelligence and Engineering Applications, Guilin, China, 23–24 September 2017; pp. 316–326.

- Baek, M.-S.; Kwak, S.; Jung, J.-Y.; Kim, H.M.; Choi, D.-J. Implementation methodologies of deep learning-based signal detection for conventional mimo transmitters. IEEE Trans. Broadcast. 2019, 65, 636–642.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2014; Volume 27.

- Balouji, E.; Salor, Ö.; McKelvey, T. Deep learning based predictive compensation of flicker, voltage dips, harmonics and interharmonics in electric arc furnaces. IEEE Trans. Ind. Appl. 2022, 58, 4214–4224.

- Guo, S.; Zhou, B.; Yang, Y.; Wu, Q.; Xiang, Y.; He, Y. Multi-source ensemble learning with acoustic spectrum analysis for fault perception of direct-buried transformer substations. IEEE Trans. Ind. Appl. 2022, 59, 2340–2351.

- Yamamoto, R.; Song, E.; Kim, J.-M. Parallel wavegan: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6199–6203.

- Yoon, J.; Jarrett, D.; Van der Schaar, M. Time-series generative adversarial networks. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2019; Volume 32.

- Jing, J.; Li, Q.; Ding, X.; Sun, N.; Tang, R.; Cai, Y. Aenn: A generative adversarial neural network for weather radar echo extrapolation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 89–94.

- Saarinen, V.; Koivunen, V. Radar waveform synthesis using generative adversarial networks. In Proceedings of the 2020 IEEE Radar Conference (RadarConf20), Florence, Italy, 21–25 September 2020; pp. 1–6.

- Truong, T.; Yanushkevich, S. Generative adversarial network for radar signal synthesis. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–7.

- Ma, X.; Zhang, W.; Shi, Z.; Zhao, X. Clutter simulation based on wavegan. In Proceedings of the International Conference on Radar Systems, Edinburgh, UK, 24–27 October 2022; pp. 605–611.

- Donahue, C.; McAuley, J.; Puckette, M. Adversarial audio synthesis. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Chen, H.; Chen, F.; He, H. A sea–land clutter classification framework for over-the-horizon radar based on weighted loss semi-supervised generative adversarial network. Eng. Appl. Artif. Intell. 2024, 133, 108526.

- Guo, M.-F.; Liu, W.-L.; Gao, J.-H.; Chen, D.-Y. A data-enhanced high impedance fault detection method under imbalanced sample scenarios in distribution networks. IEEE Trans. Ind. Appl. 2023, 59, 4720–4733.

- Thomas, J.A.; Cover, T.M. Elements of Information Theory; Tsinghua University Press: Beijing, China, 2006.

- Smirnoff, N.W. On the estimation of the discrepancy between empirical curves of distribution for two independent samples. Bull. Mathématique L’Université Mosc. 1939, 2, 3–11.

- Walker, D. Doppler modelling of radar sea clutter. IEE Proc.-Radar Sonar Navig. 2001, 148, 73–80.

- Angelliaume, S.; Rosenberg, L.; Ritchie, M. Modeling the amplitude distribution of radar sea clutter. Remote Sens. Target Detect. Mar. Environ. 2019, 11, 319.

- Vondra, B.; Bonefacic, D. Mitigation of the Effects of Unknown Sea Clutter Statistics by Using Radial Basis Function Network. Radioengineering 2020, 29, 215–227.

- Wen, B.; Wei, Y.; Lu, Z. Sea clutter suppression and target detection algorithm of marine radar image sequence based on spatio-temporal domain joint filtering Entropy. Signal Data Anal. 2022, 24, 250.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, Online, 13–18 July 2020; pp. 1597–1607.

- Skolnik, M.I. Radar handbook. IEEE Aerosp. Electron. Syst. Mag. 2008, 23, 41.

- Greco, M.S.; Gini, F. Statistical analysis of high-resolution SAR ground clutter data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 566–575.

- Smith, G.E.; Woodbridge, K.; Baker, C.J. Radar micro-Doppler signature classification using dynamic time warping. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 1078–1096.

- Li, G.; Song, Z.; Fu, Q. A convolutional neural network based approach to sea clutter suppression for small boat detection. Front. Inf. Technol. Electron. Eng. 2020, 21, 1504–1520.

- Wang, P.; Zhang, H.; Patel, V.M. Generative adversarial network-based restoration of speckled SAR images. In Proceedings of the 2017 IEEE 7th International Workshop on Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP), Curaçao, The Netherlands, 10–13 December 2017; pp. 1–5.

- Yang, H.; Lin, Y.; Zhang, J.; Qian, Y.; Liu, Y.; Kuang, H. Diffusion model in sea clutter simulation. In IET Conference Proceedings CP874; The Institution of Engineering and Technology: Stevenage, UK, 2023; Volume 2023, pp. 3664–3669.

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of wasserstein gans. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-attention generative adversarial networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7354–7363.

- Jiang, Y.; Chang, S.; Wang, Z. Transgan: Two pure transformers can make one strong gan, and that can scale up. Adv. Neural Inf. Process. Syst. 2021, 34, 14745–14758.

- Lee, K.; Chang, H.; Jiang, L.; Zhang, H.; Tu, Z.; Liu, C. Vitgan: Training gans with vision transformers. arXiv 2021, arXiv:2107.04589.

- Li, X.; Metsis, V.; Wang, H.; Ngu, A.H.H. Tts-gan: A transformer-based time-series generative adversarial network. In Proceedings of the International Conference on Artificial Intelligence in Medicine, Halifax, NS, Canada, 14–17 June 2022; Springer: Cham, Switzerland, 2022; pp. 133–143.

- Zhao, M.; Tang, H.; Xie, P.; Dai, S.; Sebe, N.; Wang, W. Bidirectional transformer gan for long-term human motion prediction. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–19.

- Xu, L.; Xu, K.; Qin, Y.; Li, Y.; Huang, X.; Lin, Z.; Ye, N.; Ji, X. Tganad: Transformer-based gan for anomaly detection of time series data. Appl. Sci. 2022, 12, 8085.

- Lv, Z.; Huang, X.; Cao, W. An improved gan with transformers for pedestrian trajectory prediction models. Int. J. Intell. Syst. 2022, 37, 4417–4436.

- Lombardo, P.; Greco, M.; Gini, F.; Farina, A.; Billingsley, J. Impact of clutter spectra on radar performance prediction. IEEE Trans. Aerosp. Electron. Syst. 2001, 37, 1022–1038.

- Lin, J. Divergence measures based on the Shannon entropy. IEEE Trans. Inf. Theory 2002, 37, 145–151.

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein generative adversarial networks. Int. Conf. Mach. Learn. 2017, 70, 214–223.

- Jin, Y.; Chen, Z.; Fan, L.; Zhao, C. Spectral Kurtosis–Based Method for Weak Target Detection in Sea Clutter by Microwave Coherent Radar. J. Atmos. Ocean. Technol. 2015, 32, 310–317.

- Guan, J.; Liu, N.; Wang, G.; Ding, H.; Dong, Y.; Huang, Y.; Tian, K.; Zhang, M. Sea-detecting radar experiment and target feature data acquisition for dual polarization multistate scattering dataset of marine targets. J. Radars 2023, 12, 1–14.

- Jiang, W.; Haimovich, A.M.; Simeone, O. End-to-end learning of waveform generation and detection for radar systems. arXiv 2019, arXiv:1912.00802.

- Mateos-Ramos, J.M.; Song, J.; Wu, Y.; Häger, C.; Keskin, M.F.; Yajnanarayana, V.; Wymeersch, H. End-to-End Learning for Integrated Sensing and Communication. arXiv 2021, arXiv:2111.02106.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the ICML, Lille, France, 6–11 July 2015; pp. 448–456.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. In Proceedings of the NeurIPS 2016, Barcelona, Spain, 5–10 December 2016; pp. 2234–2242.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and Improving the Image Quality of StyleGAN. In Proceedings of the CVPR 2020, Seattle, WA, USA, 13–19 June 2020; pp. 8110–8119.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Croitoru, F.-A.; Hondru, V.; Ionescu, R.T.; Shah, M. Diffusion Models in Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10850–10869.

- Ramakrishnan, D.; Krolik, J. Adaptive radar detection in doubly nonstationary autoregressive doppler spread clutter. IEEE Trans. Aerosp. Electron. Syst. 2009, 45, 484–501.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

| Technical Specification | Parameter |
|---|---|
| Band | X |
| Frequency Range | 9.3–9.5 GHz |
| Range Coverage | 0.0625–96 nmi |
| Scan Bandwidth | 25 MHz |
| Range Resolution | 6 m |
| Pulse Repetition Frequency | 1.6, 3, 5, 10 kHz |
| Peak Transmit Power | 100 W |
| Antenna Rotation Speed | 2, 12, 24, 48 rpm |
| Antenna Length | 1.8 m (HH), 2.4 m (VV) |
| Horizontal Beamwidth | 1.2° |
| Vertical Beamwidth | 22° |
| Transmit Time | 40 ns–100 μs |

| Method | Real MSE | Real K-S | Imaginary MSE | Imaginary K-S | Amplitude MSE | Amplitude K-S |
|---|---|---|---|---|---|---|
| Without Axial Attention | 0.073 | 0.073 | 0.128 | 0.071 | 0.221 | 0.129 |
| All kept (Ours) | 0.030 | 0.039 | 0.052 | 0.031 | 0.192 | 0.096 |

| Attention Type | Complexity | Time per Epoch (s) | Memory (GB) | Inference Time (s) |
|---|---|---|---|---|
| Standard MHSA | $\mathcal{O}(H^2W^2d)$ | 5979 | | |
| Axial Attention | $\mathcal{O}(HW(H+W)d)$ | 4325 | | |

| Name | a | | |
|---|---|---|---|
| Without Axial Attention | 0.0342 | 0.2496 | 0.0382 |
| All kept (Ours) | 0.0636 | 0.1047 | 0.0338 |

| Method | Real MSE | Real K-S | Imaginary MSE | Imaginary K-S | Amplitude MSE | Amplitude K-S |
|---|---|---|---|---|---|---|
| AR | 0.103 | 0.106 | 0.105 | 0.139 | 0.113 | 0.201 |
| WaveGAN | 0.095 | 0.096 | 0.205 | 0.089 | 0.316 | 0.188 |
| Diffusion | 0.046 | 0.051 | 0.144 | 0.071 | 0.302 | 0.112 |
| Ours | 0.030 | 0.039 | 0.052 | 0.031 | 0.192 | 0.096 |

| Name | a | | |
|---|---|---|---|
| AR | 0.1536 | 0.3421 | 0.1718 |
| WaveGAN | 0.1398 | 0.2812 | 0.1516 |
| Diffusion | 0.0886 | 0.2675 | 0.1018 |
| Ours | 0.0636 | 0.1047 | 0.0338 |

| Method | Real Cos-Sim | Real JS-Dis | Imaginary Cos-Sim | Imaginary JS-Dis | Amplitude Cos-Sim | Amplitude JS-Dis |
|---|---|---|---|---|---|---|
| Without Axial Attention | 0.9763 | 0.0370 | 0.9918 | 0.0162 | 0.9086 | 0.0248 |
| Ours | 0.9914 | 0.0066 | 0.9925 | 0.0087 | 0.9952 | 0.0137 |

| Method | Real Cos-Sim | Real JS-Dis | Imaginary Cos-Sim | Imaginary JS-Dis | Amplitude Cos-Sim | Amplitude JS-Dis |
|---|---|---|---|---|---|---|
| AR | 0.8565 | 0.1432 | 0.9037 | 0.1073 | 0.9112 | 0.1055 |
| WaveGAN | 0.9679 | 0.0881 | 0.9764 | 0.0647 | 0.9271 | 0.0371 |
| Diffusion | 0.9494 | 0.0767 | 0.9287 | 0.0575 | 0.9799 | 0.359 |
| Ours | 0.9914 | 0.0066 | 0.9925 | 0.0087 | 0.9952 | 0.0137 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, X.; Ren, J.; Tao, W.; Chen, A.; Liu, X.; Wu, C.; Ji, C.; Zhou, M.; Xu, X. Transformer-Driven GAN for High-Fidelity Edge Clutter Generation with Spatiotemporal Joint Perception. Symmetry 2025, 17, 1489. https://doi.org/10.3390/sym17091489