1. Introduction

Cantonese is one of the major Sinitic varieties, spoken predominantly in Guangdong, Guangxi, Hong Kong, Macau, and overseas Chinese communities. As a representative language of southern China, it carries a rich historical and cultural heritage and differs markedly from Mandarin in phonology, vocabulary, and syntax. Cantonese has a comparatively intricate sound system with nine lexical tones and several distinctive syllable structures; many of its phonemes have no direct Mandarin counterparts. In addition, Cantonese exhibits distinctive phonological phenomena, such as diverse coda and nucleus realizations, which together with its tonal diversity further heighten the difficulty of automatic speech recognition (ASR).

Although automatic speech recognition has progressed rapidly for high-resource languages such as English and Mandarin [1], Cantonese and other low-resource languages still pose formidable challenges. Resource scarcity is only one obstacle; Cantonese’s tone system and complex syllable structures also impede recognition accuracy. Deep learning-based ASR has achieved remarkable results, yet the shortage of annotated data continues to limit performance for low-resource languages, especially for tone-sensitive Cantonese tasks [2,3]. Nevertheless, advancing Cantonese ASR is essential for preserving and revitalizing Cantonese culture and for promoting digital technology uptake in Cantonese-speaking regions.

To mitigate data scarcity, the pre-train–fine-tune (PT-FT) paradigm has gained wide adoption in low-resource ASR [4,5]. During pre-training, multilingual audio is combined to learn language-agnostic representations; subsequent fine-tuning adapts the model to the target language with its limited transcriptions. While effective, existing augmentation strategies have yet to resolve Cantonese tone-recognition errors: tone-character error rate (Tone-CER) remains high even as data size increases.

Current mainstream pre-training strategies fall into three categories: (i) self-supervised pre-training [6,7]; (ii) subword-based supervised pre-training [8,9]; and (iii) phoneme-based supervised pre-training [10]. Self-supervised methods exploit large volumes of unlabeled audio and learn cross-lingual features via contrastive or masked-prediction objectives, eliminating manual annotation and offering strong transferability. Subword-based approaches build a shared cross-lingual subword vocabulary so that multilingual transcriptions fit into a common coding scheme, enhancing text modeling across languages. Phoneme-based approaches employ the International Phonetic Alphabet (IPA) for unified labeling and explicit alignment of speech units, enabling efficient knowledge sharing across languages. IPA’s language-neutral, standardized phoneme representation improves generalization and yields superior multilingual recognition.

Compared with self-supervised and subword methods, phoneme-level pre-training offers distinct advantages. Fine-grained phoneme labels capture language-specific pronunciation patterns, helping the model learn cross-language phoneme mappings. A unified phonemic annotation framework also facilitates knowledge transfer, raising learning efficiency and accuracy in low-resource settings. However, practical deployment faces hurdles: acquiring high-quality IPA annotations for Cantonese and other regional dialects is arduous due to the lack of systematic resources, the need for deep phonological expertise, and difficulties in standardizing annotation protocols. Establishing an efficient, standardized phoneme annotation pipeline under low-resource conditions has thus become a critical bottleneck.

Several promising solutions have emerged. Feng et al. proposed a multilingual IPA-supervised pre-training framework that augments HuBERT with IPA transcriptions, outperforming standard HuBERT and XLSR-53 while reducing labeled-data requirements by up to 75% [11]. Xue et al.’s TranUSR couples UniData2Vec’s continuous speech representations with a phoneme-to-word Transcoder, achieving a two-stage pipeline from phoneme pre-training to direct word generation and lowering PER by 5.3% on Common Voice [12]. Martin et al. investigated HuBERT’s layer-wise phoneme representations, demonstrating that lower layers capture fine-grained acoustic cues whereas higher layers encode linguistic information, offering new insights for phoneme-level representation learning [13].

In response to these challenges, we propose a Cantonese ASR framework that integrates phoneme-aware augmentation with weak phoneme supervision. First, an IPA-level self-supervised pre-training stage enables the model to share fine-grained articulatory features across languages. To address Cantonese’s tonal complexity, we introduce two lightweight phoneme-aware augmentation strategies: (1) Phoneme Dropout, a dynamic masking mechanism that adaptively adjusts the probability of masking entire phoneme spans according to their duration and training iteration, allowing the model to learn redundant and robust representations under “variable-omission” scenarios and thus mitigating errors arising from tone deletion or reduction; and (2) Phoneme-Aware SpecAugment, which applies time masking at whole-phoneme granularity, preserving internal tonal contours while promoting contextual inference. Finally, we adopt the Whistle model, which employs weak phoneme supervision by using automatically generated IPA sequences as training targets, thereby lowering human annotation costs.

2. Fundamental Principles

With the rapid evolution of multilingual pre-training techniques, phoneme-level modeling has become increasingly pivotal in speech recognition systems. In low-resource scenarios, incorporating phonemic information not only enhances a model’s generalization ability but also markedly improves recognition accuracy. To clarify the theoretical underpinnings of the phoneme-aware augmentation strategies proposed in this paper, this section outlines the key technological frameworks and core principles on which our study relies. We first analyze the architecture and training mechanics of Wav2Vec 2.0, a widely adopted self-supervised speech representation model. We then introduce the two-stage Whistle model, with emphasis on its approach to phoneme representation and the advantages it offers in multilingual ASR tasks.

2.1. Wav2Vec 2.0 Model

Wav2Vec 2.0, proposed by Facebook AI Research (FAIR) [14], is a self-supervised framework for speech representation learning. By pre-training on large volumes of unlabeled audio, it substantially boosts ASR performance under low-resource conditions. During pre-training, the model masks portions of the latent feature sequence and is forced to predict the masked content, thereby capturing rich acoustic patterns and contextual information without costly human annotations. Convolutional layers extract local time-domain features, while a Transformer network captures long-range dependencies, yielding higher-level, more generalizable representations.

The overall architecture of Wav2Vec 2.0 can be divided into three main components: the Feature Encoder, the Context Network, and the Self-Supervised Objective Module (Contrastive Loss). The Feature Encoder consists of a stack of one-dimensional convolutional layers that perform subsampling and feature extraction on raw audio waveforms. Its primary function is to transform the continuous speech signal, typically sampled at 16 kHz, into latent representations at a much lower frame rate. The Context Network is composed of multiple Transformer encoder layers, which model both local context and global semantic structure. It takes the latent representations from the feature encoder and generates contextualized representations that capture temporal and semantic dependencies across the input sequence.

The Self-Supervised Objective governs the pre-training process. During this phase, Wav2Vec 2.0 randomly masks certain time steps of the latent sequence and tasks the model with identifying the correct latent vector from a set of distractors (negative samples). This contrastive objective, known as Contrastive Loss, aims to maximize the agreement between the true latent vector and its contextual representation while minimizing similarity with the negatives.

In the fine-tuning stage, Wav2Vec 2.0 appends a CTC output layer that maps contextualized representations directly to characters, subword units, or phoneme labels. By leveraging a small amount of labeled data, the model can be rapidly adapted to specific language recognition tasks, making it particularly suitable for low-resource languages. To address the challenges of low-resource speech recognition, the wav2vec2-large-XLSR-53 variant is pre-trained on speech data from 53 languages. By sharing cross-lingual acoustic representations, it offers superior initialization for downstream ASR tasks and delivers significant gains in recognition accuracy for under-resourced languages.
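The contrastive objective described above can be illustrated with a minimal sketch. It assumes cosine similarity and a temperature of 0.1 (neither is stated in this section), and the function names `cosine` and `contrastive_loss` are hypothetical; it is not the reference implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(context, target, distractors, temperature=0.1):
    """Negative log-probability of picking the true target among the distractors.

    ASSUMPTION: cosine similarity and temperature=0.1 are illustrative choices.
    """
    candidates = [target] + list(distractors)
    logits = [cosine(context, q) / temperature for q in candidates]
    max_logit = max(logits)  # subtract the maximum for numerical stability
    log_denominator = max_logit + math.log(
        sum(math.exp(value - max_logit) for value in logits)
    )
    return log_denominator - logits[0]


# A context vector that agrees with its target gives a small loss ...
c_t = [1.0, 0.0]
loss_easy = contrastive_loss(c_t, [0.9, 0.1], [[-1.0, 0.0], [0.0, 1.0]])
# ... whereas a target that looks less similar than the distractors gives a large one.
loss_hard = contrastive_loss(c_t, [0.0, 1.0], [[0.9, 0.1], [1.0, 0.1]])
```

Minimizing this quantity pulls the contextual representation toward the true latent vector and away from the negatives, which is the behavior the paragraph above describes.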

2.2. Whistle Model

Whistle, proposed in 2024 by the Speech Processing and Machine Intelligence (SPMI) Laboratory at Tsinghua University [15], is a unified framework for multilingual and cross-lingual ASR. Its key innovation is the use of weak phoneme supervision (WPS) to replace costly manual phoneme annotation. Leveraging off-the-shelf grapheme-to-phoneme (G2P) converters, Whistle automatically generates IPA sequences as training targets, thereby lowering the barrier to building large-scale multilingual ASR systems. Compared with purely self-supervised representation learning, WPS preserves phoneme-level interpretability while still enabling cross-language information sharing, offering an attractive trade-off between modeling efficiency and transferability in low-resource settings.

Whistle comprises three core modules: a feature encoder, a phoneme-alignment network, and a cross-lingual mapping layer. The feature encoder adopts a Conformer architecture, where convolutional layers capture local temporal cues and self-attention layers model long-range dependencies. Input log-Mel filterbank features are transformed through multiple encoder layers into high-dimensional acoustic representations. The phoneme-alignment network introduces a hybrid CTC–CRF design in which both modules share the same encoder: the CTC branch provides weak supervision to align acoustic frames with phoneme sequences and facilitates fast convergence, while the CRF layer, formulated as a hidden Markov model, explicitly learns phoneme-state transition probabilities to increase robustness against pronunciation variation.

Whistle jointly optimizes a hybrid objective, Equation (1), that combines the CTC and CRF losses through a weight λ. The weight λ is dynamically adjusted according to the validation-set word error rate (WER), balancing the contributions of the two supervision signals at different training stages: λ is increased when the model underfits tonal or pronunciation variability and decreased once convergence stabilizes, so that training balances faster convergence against robustness to phonetic variation. The CTC component is computed using the standard forward algorithm, whereas the CRF component is optimized with the forward–backward algorithm. This hybrid CTC–CRF design was chosen over pure CTC and attention-based decoding because it can explicitly model phoneme-level transitions while maintaining low decoding latency. The design is particularly well suited to our phoneme-aware augmentation framework, which requires precise frame–phoneme alignment and benefits from CRF’s sequence-level modeling capabilities.

The cross-lingual mapping layer constructs a Multilingual Shared Phonetic Space (MSPS) covering the phoneme union of ten high-coverage foundation languages. During pre-training, the model leverages unlabeled speech from these languages and employs phoneme-level contrastive learning to build language-agnostic encodings. It reconstructs masked speech spans in a self-supervised fashion and enforces a phoneme-distribution consistency constraint, encouraging the encoder to disregard language-specific pronunciation variants. All languages share a single phoneme embedding matrix to promote cross-lingual knowledge transfer.

During fine-tuning, the Conformer feature encoder is frozen, and only the cross-lingual mapping layer and the CRF transition matrix are updated, following a dynamic freezing strategy. For low-resource settings, a dynamic curriculum learning schedule is additionally adopted to accelerate convergence and stabilize training.

Whistle’s lightweight encoder, combined with its hybrid CTC–CRF alignment, offers both efficiency and compatibility with phoneme-aware augmentation strategies. The CRF’s sequence-level modeling enhances the robustness of phoneme predictions, which is particularly beneficial for Cantonese, where tonal contrasts and complex syllable structures require precise modeling. Preliminary experiments also showed that Whistle achieved comparable or better PER than a Conformer baseline of similar size while training faster and decoding with lower latency—properties that align well with the low-resource and edge deployment focus of this work.

2.3. The Zipformer Model

Zipformer is an efficient encoder model tailored for ASR tasks [16]. Building on the classic Conformer architecture, it blends self-attention with convolutional operations and introduces a sparse-attention mechanism, substantially reducing the computational burden of Transformers on long input sequences while preserving the ability to capture global contextual information with high precision.

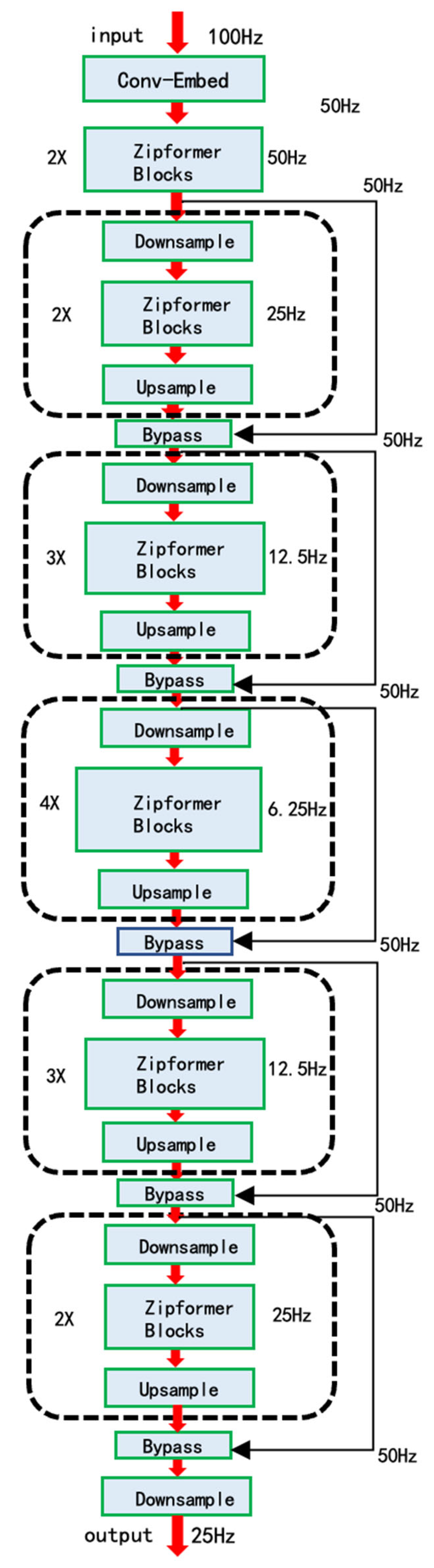

Figure 1 depicts Zipformer’s U-Net-style, multi-resolution encoder. A single convolutional embedding layer first subsamples the raw 100 Hz acoustic sequence to 50 Hz while projecting it into the model’s hidden dimension. The 50 Hz features then pass through a symmetric cascade of six blocks that operate successively at 50 Hz, 25 Hz, 12.5 Hz, 6.25 Hz, 12.5 Hz, and 25 Hz. Each block performs a paired downsampling/upsampling operation that fuses sparse self-attention with convolution, enabling the network to capture dependencies over progressively broader time spans. The outputs from all resolutions are subsequently cropped or zero-padded to a common length, concatenated, and fed through a final downsampling layer that standardizes the frame rate to 25 Hz, yielding the encoder’s unified representation.
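To make the multi-resolution layout concrete, the following sketch computes the frame rate and an approximate sequence length for each of the six blocks. The function names are hypothetical, and rounding lengths up with `ceil` is an assumption; real implementations handle edges and padding in their own way.

```python
import math

# Downsampling factor of each block relative to the 50 Hz embedding output,
# matching the 50 / 25 / 12.5 / 6.25 / 12.5 / 25 Hz cascade described above.
STACK_FACTORS = (1, 2, 4, 8, 4, 2)


def zipformer_frame_rates(input_hz=100.0, embed_factor=2, stack_factors=STACK_FACTORS):
    """Frame rate (Hz) seen by each encoder block."""
    base_hz = input_hz / embed_factor
    return [base_hz / factor for factor in stack_factors]


def frames_per_stack(num_input_frames, embed_factor=2, stack_factors=STACK_FACTORS):
    """Approximate number of frames per block (ASSUMPTION: lengths round up)."""
    base_frames = math.ceil(num_input_frames / embed_factor)
    return [math.ceil(base_frames / factor) for factor in stack_factors]


rates = zipformer_frame_rates()
lengths = frames_per_stack(1000)  # a 10 s utterance at 100 frames per second
```

Because self-attention cost grows quadratically with sequence length, the block running at 6.25 Hz attends over roughly one eighth of the frames seen at 50 Hz, which is where most of the savings on long inputs come from.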

Unlike standard multi-head self-attention, Zipformer splits the attention computation into two critical stages. (1) It first forms the attention weight matrix $A$ for the input sequence. The formulation is given by

$$A = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right), \qquad Q = XW_Q, \quad K = XW_K, \tag{2}$$

where $X \in \mathbb{R}^{N \times D}$ is the feature matrix, $N$ is the number of time steps, $D$ is the feature dimension, and $d_k$ is the query/key dimension. This procedure follows the conventional scaled dot-product formulation but keeps only the weight matrix $A$. The queries $Q$ and keys $K$ are derived from linear projections (with projection matrices $W_Q$ and $W_K$), capturing pairwise dependencies across time. (2) Once $A$ is obtained, Zipformer reuses it across subsequent self-attention (SA) and non-linear attention (NLA) blocks, enabling multiple feature transformations without recomputing the expensive $QK^{\top}$ product. This reuse substantially reduces computational overhead while maintaining modeling capacity.
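The weight-reuse idea can be sketched in a few lines of plain Python. This is an illustration only: it uses a single head and tiny hand-written matrices, and the second module is a plain value projection standing in for Zipformer's non-linear attention, whose gating is omitted here.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    out = []
    for row in m:
        row_max = max(row)
        exps = [math.exp(v - row_max) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention_weights(x, w_q, w_k):
    """Scaled dot-product attention weights A = softmax(Q K^T / sqrt(d_k))."""
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    d_k = len(q[0])
    scores = matmul(q, transpose(k))
    return softmax_rows([[s / math.sqrt(d_k) for s in row] for row in scores])


# Toy input with N = 3 time steps and D = 2 features (illustrative values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[0.5, 0.0], [0.0, 0.5]]
w_v_sa = [[1.0, 2.0], [3.0, 4.0]]   # value projection of the first module
w_v_nla = [[0.0, 1.0], [1.0, 0.0]]  # value projection of the second module

a = attention_weights(x, w_q, w_k)      # computed once
out_sa = matmul(a, matmul(x, w_v_sa))   # first use of A
out_nla = matmul(a, matmul(x, w_v_nla)) # second use of A, no new Q K^T product
```

Only the value projections differ between the two outputs; the N × N weight matrix, the most expensive part for long sequences, is shared.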

Zipformer also introduces sparse attention in the encoder stage, which lowers complexity but maintains the ability to capture long-range dependencies. In addition, BiasNorm is adopted instead of conventional LayerNorm to alleviate potential normalization instability during early training. Zipformer incorporates hierarchical down-sampling and systematic reuse of attention weights. Together with improved normalization, these designs allow efficient modeling of long speech sequences. Its multi-scale frame-rate design provides broad receptive fields at low frame rates while preserving fine-grained details at higher frame rates, thereby enabling the model to simultaneously capture global context and short-term dynamics.

2.4. Principles of Phoneme-Aware Augmentation

Conventional end-to-end ASR systems typically rely on random augmentation techniques such as SpecAugment to improve generalization [17]. These methods, however, disregard phoneme boundaries and linguistic structure: the masked spans lack semantic interpretability, may disrupt phonemic integrity, and can even weaken the model’s ability to learn key tonal units in tone languages such as Cantonese. To address these limitations, this study embeds two phoneme-aware augmentation strategies into the Whistle framework, targeting both regularization-driven generalization and robustness-oriented training. Each strategy presupposes accurate phoneme-boundary information, which is obtained with Montreal Forced Aligner (MFA) [18]. Together, the proposed augmentations enable the model to cope more effectively with phoneme variation or deletion while preserving essential tonal cues.

2.5. Phoneme Dropout: A Boundary-Aware Dynamic Dropping Mechanism

To more faithfully reflect Cantonese conversational phenomena—such as elision, lenition, and tonal drift—this study introduces Phoneme Dropout, a boundary-aware regularization technique applied at the input-feature level (or at a selected intermediate layer). Unlike conventional frame-based time masking, Phoneme Dropout treats an entire phoneme segment as the minimal unit; selected segments are masked while label consistency is maintained, thereby enhancing the model’s resilience to missing or blurred speech cues.

The method relies on phoneme-level forced alignment (obtained via Montreal Forced Aligner) to extract precise start- and end-time boundaries for each phoneme. A probabilistic schedule then chooses segments for masking. To avoid unstable convergence caused by excessive context reliance in early training, a dynamic drop-rate scheme is employed: the proportion of masked phonemes starts low and is gradually increased as training proceeds, yielding a curriculum in which perturbation strength grows progressively stronger.

The global dropout upper bound at the $t$-th parameter update, denoted $p(t)$, is defined by Equation (3), where $p_{\max}$ is the target maximum drop rate (set to 0.25 in this work), $k$ controls the growth slope, and $T_w$ denotes the warm-up step count.

For the $i$-th phoneme in the same utterance, whose start and end frame indices are denoted $s_i$ and $e_i$ and where $N$ is the total number of phonemes, the duration-based weight $w_i$ is computed by Equation (4).

The actual sampling probability $p_i(t)$ for the $i$-th phoneme at step $t$ is defined by Equation (5), where $Z$ is a normalization factor ensuring that the expected number of masked phonemes per batch remains constant and $p_{\mathrm{cap}}$ is a fixed maximum probability threshold.

Phoneme selection is then performed via Equation (6), and the frame-level mask is constructed by Equation (7), where $\tau$ is the frame index and $m_{\tau}$ denotes the binary mask.

Finally, perturbation is applied in two modes, chosen with equal probability (Equation (8)), where $x_{\tau}$ is the original acoustic feature at frame $\tau$ and $\sigma$ is the standard deviation of the Gaussian noise.

Step-by-step procedure: (1) Boundary extraction: obtain phoneme start–end indices from MFA; (2) Weight computation: calculate duration-based weights via Equation (4); (3) Probability assignment: compute the sampling probabilities using Equation (5); (4) Phoneme selection: sample phonemes using Equation (6); (5) Frame masking: generate the mask via Equation (7); (6) Perturbation: apply zeroing or Gaussian noise as in Equation (8).
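The six steps can be sketched as follows. The exact functional forms are assumptions made for illustration, not the paper's equations: a sigmoid ramp toward the 0.25 ceiling, duration weights normalized to sum to one, a per-phoneme probability of N · p(t) · w_i capped at a threshold, additive Gaussian noise, and one perturbation mode drawn per utterance. All function and parameter names are hypothetical.

```python
import math
import random


def drop_upper_bound(step, p_max=0.25, slope=0.001, warmup_steps=5000):
    """ASSUMED schedule: sigmoid ramp from near 0 toward p_max around warmup_steps."""
    return p_max / (1.0 + math.exp(-slope * (step - warmup_steps)))


def duration_weights(boundaries):
    """ASSUMED weights: phoneme duration in frames divided by the total duration."""
    durations = [end - start + 1 for start, end in boundaries]
    total = sum(durations)
    return [d / total for d in durations]


def sampling_probs(boundaries, step, p_cap=0.5, **schedule):
    """ASSUMED form: p_i = min(p_cap, N * p(t) * w_i), so the mean is about p(t)."""
    n = len(boundaries)
    p_t = drop_upper_bound(step, **schedule)
    return [min(p_cap, n * p_t * w) for w in duration_weights(boundaries)]


def phoneme_dropout(features, boundaries, step, rng, noise_std=0.1, **schedule):
    """Mask whole phoneme segments; return (perturbed features, frame mask)."""
    probs = sampling_probs(boundaries, step, **schedule)
    selected = [rng.random() < p for p in probs]  # one Bernoulli draw per phoneme
    mask = [0] * len(features)
    for (start, end), chosen in zip(boundaries, selected):
        if chosen:
            for frame in range(start, end + 1):
                mask[frame] = 1
    zero_mode = rng.random() < 0.5  # zeroing or noise with equal probability
    out = []
    for frame, masked in zip(features, mask):
        if not masked:
            out.append(list(frame))
        elif zero_mode:
            out.append([0.0] * len(frame))
        else:
            out.append([v + rng.gauss(0.0, noise_std) for v in frame])
    return out, mask


rng = random.Random(0)
feats = [[1.0] * 4 for _ in range(10)]  # 10 frames, 4 feature bins
bounds = [(0, 2), (3, 6), (7, 9)]       # inclusive frame ranges of 3 phonemes
augmented, mask = phoneme_dropout(feats, bounds, step=10_000, rng=rng)
```

Because the mask is built per phoneme, every frame inside a segment is either kept or perturbed together, which is the property that distinguishes this scheme from frame-level time masking.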

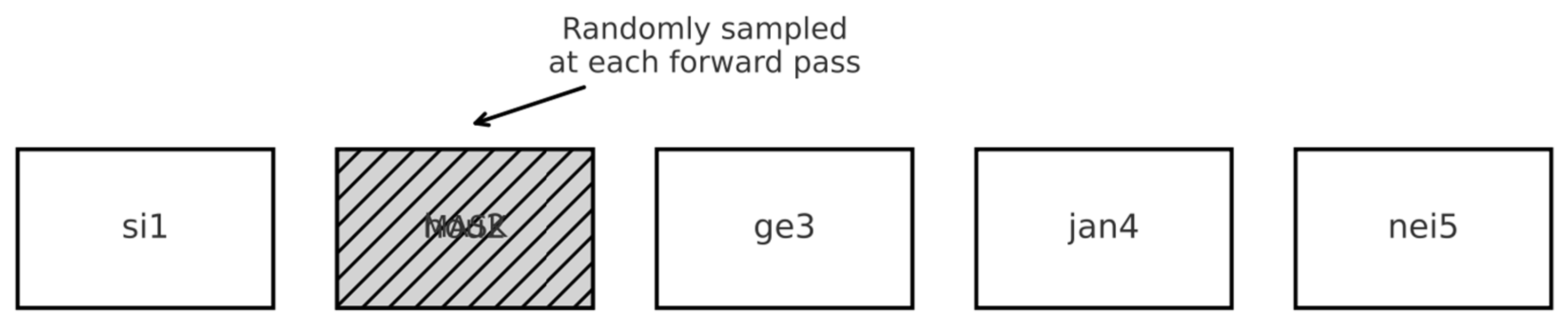

As shown in Figure 2, Phoneme Dropout randomly masks phonemes at the phoneme level during each forward pass, with different masking results across layers, thereby enhancing the model’s robustness in weakly supervised scenarios. Additional representative examples of target vs. predicted IPA sequences (with and without augmentation) are provided in Appendix A.

2.6. Phoneme-Aware SpecAugment

Phoneme-Aware SpecAugment extends the original SpecAugment framework by imposing phoneme-boundary constraints: every time- or frequency-masking operation is aligned with the full duration of a phoneme segment rather than with arbitrary frame spans. By guaranteeing segment-level alignment, the method preserves intra-phonemic pitch contours and formant trajectories, preventing the corruption of tonal shape and resonant structure that can otherwise occur in tone languages such as Cantonese.

The masking budget and dynamic schedule are defined as follows. For an utterance $u$ that contains $N$ phonemes, the global masking budget at training step $t$, denoted $\rho(t)$, follows a warm-up schedule in which $T_w$ is the warm-up horizon and $\gamma$ controls the growth rate. The number of phoneme segments to be masked, $K$, is then derived from this budget and $N$.

To concentrate masking on the regions most relied upon by the model, an attention-weighted sampling procedure is used. For each phoneme $i$, the average attention score $a_i$ is computed from layer $\ell$ of a baseline model by averaging over all $H$ heads. After normalization, the scores form a sampling distribution $q_i$ over the phonemes, and the set of segments to mask, $\mathcal{S}$, is drawn from a multinomial distribution.
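A minimal sketch of the attention-weighted segment selection is given below. Several details are assumptions for illustration: the input `head_attn[h][t]` (attention mass received by frame `t` under head `h`) is a hypothetical representation of the baseline model's attention, segments are drawn without replacement so that distinct phonemes are masked, and masked frames are zeroed.

```python
import random


def phoneme_attention_scores(head_attn, boundaries):
    """Average attention per phoneme, over its frames and over all heads."""
    num_heads = len(head_attn)
    scores = []
    for start, end in boundaries:
        n_frames = end - start + 1
        total = sum(sum(head[start:end + 1]) for head in head_attn)
        scores.append(total / (num_heads * n_frames))
    return scores


def normalize(scores):
    total = sum(scores)
    return [s / total for s in scores]


def sample_segments(probs, k, rng):
    """Draw k distinct phoneme indices with probability proportional to probs.

    ASSUMPTION: sampling without replacement.
    """
    remaining = list(range(len(probs)))
    weights = list(probs)
    chosen = []
    for _ in range(min(k, len(remaining))):
        pos = rng.choices(range(len(remaining)), weights=weights, k=1)[0]
        chosen.append(remaining.pop(pos))
        weights.pop(pos)
    return sorted(chosen)


def time_mask(features, boundaries, chosen):
    """Zero every frame of the chosen phoneme segments (whole-segment masking)."""
    out = [list(frame) for frame in features]
    for index in chosen:
        start, end = boundaries[index]
        for t in range(start, end + 1):
            out[t] = [0.0] * len(out[t])
    return out


# Two heads, ten frames, three phonemes (illustrative numbers).
head_attn = [[0.1] * 3 + [0.4] * 3 + [0.05] * 4,
             [0.2] * 3 + [0.3] * 3 + [0.1] * 4]
bounds = [(0, 2), (3, 5), (6, 9)]
feats = [[1.0, 2.0] for _ in range(10)]

probs = normalize(phoneme_attention_scores(head_attn, bounds))
chosen = sample_segments(probs, k=1, rng=random.Random(0))
masked = time_mask(feats, bounds, chosen)
```

Phonemes that attract more attention are more likely to be masked, so the model is pushed to infer them from context rather than from the frames it relies on most.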

As shown in Figure 3, the proposed Phoneme-Aware SpecAugment performs time masking aligned with phoneme boundaries, thus avoiding the disruption of tonal or phonemic structures caused by random masking.

As shown in Figure 4, a comparison between random SpecAugment and Phoneme-Aware SpecAugment is presented. It can be observed that random masking may damage the completeness of phonemes, whereas the phoneme-aware approach guarantees strict alignment of masked regions with phoneme boundaries, thereby better reflecting the linguistic structure of speech.

3. Training Procedure

3.1. Data Pre-Processing and Phoneme Alignment

The training corpus is the Cantonese subset of the Chinese portion of Mozilla Common Voice, which comprises volunteer recordings from Hong Kong, Guangdong, and other Cantonese-speaking regions. The subset is highly heterogeneous—covering a broad spectrum of accents, genders, age groups, and speaking styles—and therefore provides natural, informal utterances that are well suited for assessing real-world ASR performance.

To support the phoneme-aware augmentation strategies introduced later, the raw speech and accompanying transcripts undergo systematic cleaning and standardization. After text normalization (punctuation removal, full-/half-width unification, numeral expansion, and script consistency checks), we employ Montreal Forced Aligner (MFA) to obtain frame-level phoneme boundaries for every utterance. The resulting time-aligned phoneme annotations serve as the basis for Phoneme Dropout, Phoneme-Aware SpecAugment, and subsequent model training stages.

Text normalization is first applied to improve data quality and modeling consistency. Because the raw transcriptions contain noisy symbols, inconsistent punctuation, and non-linguistic characters, we remove all extraneous symbols, standardize punctuation marks, convert full-width to half-width forms, and normalize multilingual code points. A joint character-level and phoneme-level vocabulary is then constructed, incorporating the full set of IPA labels so that the model’s output space is fully compatible with the phoneme-aware augmentation strategies introduced later.

For phoneme annotation, an IPA-based Cantonese romanization workflow is adopted. Open-source G2P tools map standard Cantonese text to the corresponding phoneme sequences. To improve coverage and accuracy, we supplement these tools with a custom pronunciation lexicon generated via a longest-match algorithm, producing high-fidelity IPA sequences that serve as training targets.

Frame-level phoneme boundaries are obtained with MFA. Speech recordings, cleaned transcripts, and the word-to-IPA lexicon are supplied to MFA, which outputs TextGrid files containing phoneme start–end times. These TextGrids are parsed into JSON format, yielding precise boundary metadata that the Phoneme Dropout and Phoneme-Aware SpecAugment modules can access during training. To assess the accuracy of MFA, we manually inspected 100 randomly selected utterances in Praat. Over 90% of phoneme boundaries fell within ±20 ms of the human reference, indicating sufficient precision for the proposed phoneme-level augmentations.
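The conversion from time-stamped phoneme intervals to frame-level boundary metadata might look like the sketch below. It assumes the intervals have already been read from a TextGrid into `(phone, start_sec, end_sec)` tuples and that frames advance every 10 ms; the record field names, the phone labels, and the helper `boundary_within_tolerance` are hypothetical.

```python
import json


def intervals_to_frames(intervals, frame_shift_s=0.010):
    """Map (phone, start_sec, end_sec) intervals to inclusive frame ranges."""
    records = []
    for phone, start, end in intervals:
        start_frame = int(round(start / frame_shift_s))
        # The end frame is the last frame that starts before the interval ends.
        end_frame = max(start_frame, int(round(end / frame_shift_s)) - 1)
        records.append(
            {"phone": phone, "start_frame": start_frame, "end_frame": end_frame}
        )
    return records


def boundary_within_tolerance(auto_s, ref_s, tol_s=0.020):
    """True if an automatic boundary lies within the tolerance of the reference."""
    return abs(auto_s - ref_s) <= tol_s + 1e-12


# Placeholder intervals; the phone labels are illustrative, not real alignments.
intervals = [("n", 0.00, 0.08), ("ei", 0.08, 0.25), ("h", 0.25, 0.31)]
records = intervals_to_frames(intervals)
serialized = json.dumps(records, ensure_ascii=False)
```

Storing inclusive frame indices rather than seconds lets the augmentation modules slice the feature matrix directly, and the tolerance helper mirrors the ±20 ms criterion used in the manual check.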

3.2. Model Architecture and Training Workflow

The core modeling framework is built on Whistle, adopting phoneme-level modeling to exploit the representational power of IPA phonemes in multilingual ASR. Unlike conventional end-to-end systems, Whistle decomposes speech recognition into two stages: (1) a CTC network first predicts a phoneme sequence and (2) a separate language-model component (or a word-level decoder) produces the final textual output. This study concentrates on the first stage, training a pure phoneme recognizer in order to assess how different augmentation strategies affect recognition accuracy.

The input to the Whistle model consists of log-Mel filterbank (Fbank) features extracted from raw speech, and the output is a sequence of IPA phoneme labels. The overall architecture comprises three main components: a feature extraction layer, a Transformer encoder, and a CTC output layer. Feature extraction is implemented using Lhotse and Torchaudio, with the Fbank dimension set to 80, a frame shift of 10 ms, and a frame length of 25 ms. The encoder consists of six Transformer layers, each with a hidden dimension of 512 and 8 attention heads. The output layer applies a linear transformation followed by a Softmax function, used to predict IPA phoneme labels including the CTC blank token.

During training, the Whistle model is optimized with the CTC loss, whose objective is defined as

$$\mathcal{L}_{\mathrm{CTC}} = -\log \sum_{\pi \in \mathcal{B}^{-1}(\mathbf{y})} P(\pi \mid \mathbf{x}),$$

where $\mathbf{x}$ denotes the input sequence of acoustic features, $\mathbf{y}$ is the corresponding sequence of IPA phoneme labels, $\mathcal{B}$ is the CTC collapse function that maps an alignment path $\pi$ to its label sequence, and $\pi$ ranges over all possible alignment paths compatible with $\mathbf{y}$.
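For intuition, the sum over alignment paths can be computed with the standard CTC forward recursion. The sketch below works in the probability domain on a toy example and checks itself against brute-force enumeration; production toolkits use log-space arithmetic, and the function names here are hypothetical.

```python
import itertools
import math

BLANK = 0


def ctc_neg_log_likelihood(probs, labels, blank=BLANK):
    """-log P(labels | x), where probs[t][k] is the frame-t posterior of symbol k."""
    ext = [blank]
    for lab in labels:
        ext += [lab, blank]  # interleave blanks around every label
    T, S = len(probs), len(ext)
    alpha = [[0.0] * S for _ in range(T)]
    alpha[0][0] = probs[0][ext[0]]
    if S > 1:
        alpha[0][1] = probs[0][ext[1]]
    for t in range(1, T):
        for s in range(S):
            total = alpha[t - 1][s]
            if s >= 1:
                total += alpha[t - 1][s - 1]
            # A blank may be skipped only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total += alpha[t - 1][s - 2]
            alpha[t][s] = total * probs[t][ext[s]]
    likelihood = alpha[T - 1][S - 1] + (alpha[T - 1][S - 2] if S > 1 else 0.0)
    return -math.log(likelihood)


def collapse(path, blank=BLANK):
    """CTC collapse: merge repeated symbols, then drop blanks."""
    out, prev = [], None
    for sym in path:
        if sym != prev and sym != blank:
            out.append(sym)
        prev = sym
    return out


def brute_force_nll(probs, labels, blank=BLANK):
    """Enumerate every path that collapses to labels (tiny inputs only)."""
    num_symbols = len(probs[0])
    total = 0.0
    for path in itertools.product(range(num_symbols), repeat=len(probs)):
        if collapse(path, blank) == list(labels):
            total += math.prod(probs[t][k] for t, k in enumerate(path))
    return -math.log(total)


# Four frames, three symbols (blank plus two phonemes); illustrative posteriors.
probs = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.1, 0.2, 0.7], [0.5, 0.25, 0.25]]
loss_fast = ctc_neg_log_likelihood(probs, [1, 2])
loss_slow = brute_force_nll(probs, [1, 2])
```

The recursion visits T × S cells instead of enumerating all paths, which is what makes the loss tractable for utterances with hundreds of frames.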

To enhance the model’s sensitivity to phonemic structure and bolster its robustness to pronunciation blurring or deletion, two phoneme-aware augmentation strategies—Phoneme Dropout and Phoneme-Aware SpecAugment—are injected at the front end of the network. For every training mini-batch, complete phoneme segments are randomly selected according to the alignment information provided by MFA; the chosen segments are then masked at the feature level (Phoneme Dropout) or along the temporal axis (Phoneme-Aware SpecAugment), respectively.

The Whistle model is trained with a mini-batch size of 8 and an initial learning rate of 3 × 10⁻⁴ using the Adam optimizer. A warm-up of 500 steps precedes the dynamic learning-rate schedule, and the network is trained for 15 epochs in total. Throughout training, mixed-precision computation (fp16) is enabled to improve memory efficiency and throughput. The Wav2Vec 2.0 and Zipformer baselines use the same 80-dimensional log-Mel Fbank features (25 ms window, 10 ms shift) as Whistle, with a batch size of 8, the Adam optimizer, a peak learning rate of 3 × 10⁻⁴, 500 warm-up steps, and 15 training epochs.

3.3. Evaluation Metric

In speech recognition, the most widely used metric is the error rate. Depending on the modeling unit, common variants include the phoneme error rate (PER), character error rate (CER), and word error rate (WER). Because this study focuses on phoneme recognition, PER is adopted as the primary evaluation metric. PER measures the discrepancy between the model-predicted phoneme sequence and the reference sequence, following the same formulation as WER:

$$\mathrm{PER} = \frac{S + D + I}{N} \times 100\%,$$

where $S$ denotes the number of substituted phonemes, $D$ denotes the number of deleted phonemes, $I$ denotes the number of inserted phonemes, and $N$ denotes the total number of phonemes in the reference transcription. PER reflects recognition accuracy at the phoneme level: the lower the value, the better the performance. Compared with WER, PER is more appropriate for phoneme recognition tasks and is particularly representative in our setting, where the Whistle model outputs only IPA phoneme sequences rather than word-level transcriptions.
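As a worked illustration of the metric, the sketch below counts substitutions, deletions, and insertions with a standard edit-distance alignment and converts them to PER. The function names and the toy phone sequences are hypothetical.

```python
def edit_counts(ref, hyp):
    """Return (substitutions, deletions, insertions) of a minimum-cost alignment."""
    rows, cols = len(ref) + 1, len(hyp) + 1
    # dp[i][j] = (cost, S, D, I) for aligning ref[:i] with hyp[:j]
    dp = [[None] * cols for _ in range(rows)]
    dp[0][0] = (0, 0, 0, 0)
    for i in range(1, rows):
        dp[i][0] = (i, 0, i, 0)  # delete every reference phoneme
    for j in range(1, cols):
        dp[0][j] = (j, 0, 0, j)  # insert every hypothesis phoneme
    for i in range(1, rows):
        for j in range(1, cols):
            cost, s, d, ins = dp[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                best = (cost, s, d, ins)          # match
            else:
                best = (cost + 1, s + 1, d, ins)  # substitution
            cost, s, d, ins = dp[i - 1][j]
            best = min(best, (cost + 1, s, d + 1, ins))  # deletion
            cost, s, d, ins = dp[i][j - 1]
            best = min(best, (cost + 1, s, d, ins + 1))  # insertion
            dp[i][j] = best
    _, s, d, ins = dp[-1][-1]
    return s, d, ins


def per(ref, hyp):
    """Phoneme error rate in percent: 100 * (S + D + I) / N."""
    s, d, ins = edit_counts(ref, hyp)
    return 100.0 * (s + d + ins) / len(ref)


# Toy sequences: one substitution (ei -> ai) and one deletion (ou).
reference = ["n", "ei", "h", "ou"]
hypothesis = ["n", "ai", "h"]
counts = edit_counts(reference, hypothesis)
score = per(reference, hypothesis)
```

With four reference phonemes and two errors, the score for this toy pair is 50%. Note that PER can exceed 100% when the hypothesis contains many insertions.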

4. Results

4.1. Training Stability Analysis

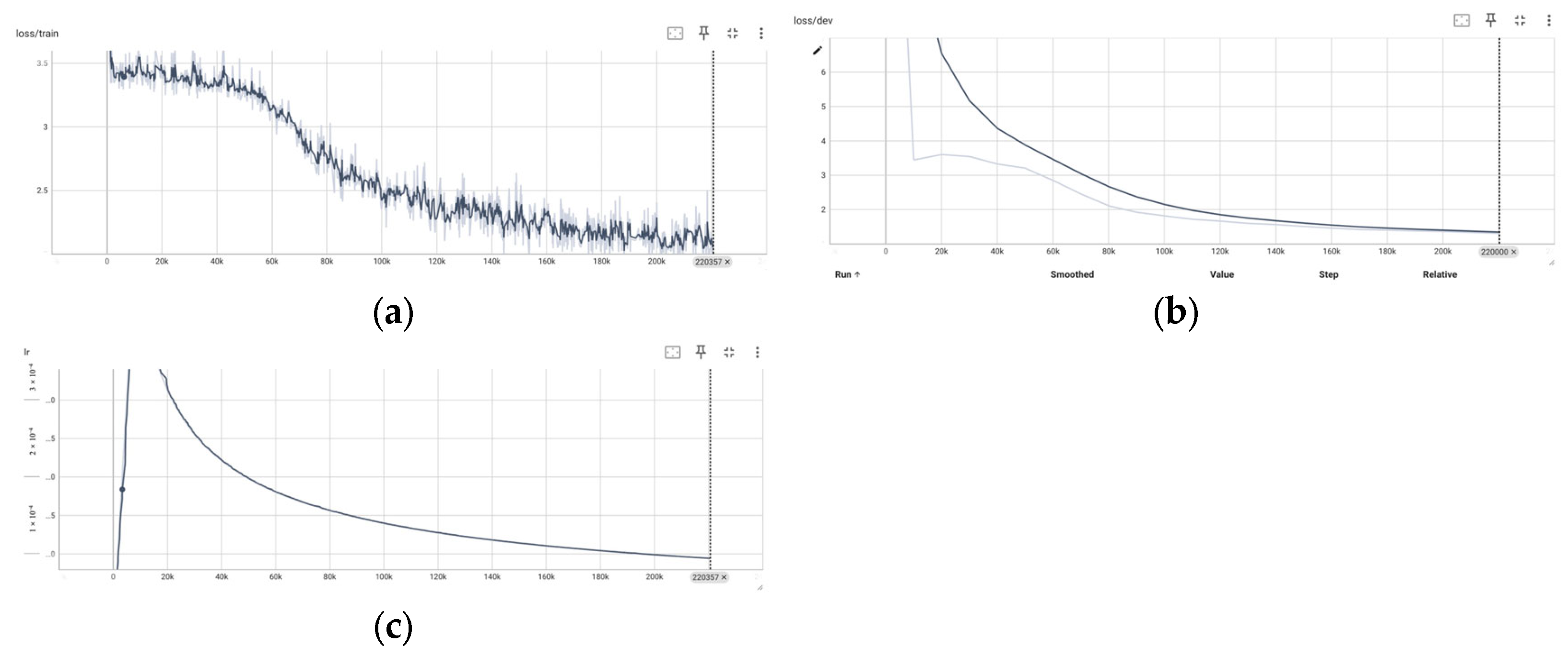

To evaluate the training dynamics and robustness of the proposed Whistle model, we present the training and validation loss curves, along with the corresponding learning rate schedule, as illustrated in Figure 5.

As shown in Figure 5a, the training loss exhibits a clear downward trend, decreasing from approximately 3.5 to below 1.5 over 220 k steps. After an initial plateau during the first 50 k iterations, the model enters a rapid convergence phase, followed by stable and gradual refinement. The presence of minor oscillations reflects the model’s sensitivity to phoneme-level variation, yet no signs of divergence or collapse are observed, indicating strong optimization stability.

Figure 5b shows the validation loss, which decreases steadily throughout the training process. The continuous decline without overfitting suggests that the phoneme-aware augmentation and dynamic masking strategies contribute to better generalization, especially under limited supervision. Notably, the validation loss aligns well with the training loss trajectory, further confirming convergence consistency.

The learning rate schedule, illustrated in Figure 5c, follows a polynomial decay strategy. The learning rate peaks at approximately 3.5 × 10⁻⁴ and gradually decreases over time. This smooth decay complements the curriculum learning framework, helping the model transition from coarse to fine-grained phoneme representations while maintaining training stability.

Collectively, the results demonstrate that the proposed training framework—featuring dynamic augmentation, contrastive regularization, and scheduled learning rate decay—ensures both convergence efficiency and robustness under low-resource conditions.

4.2. Recognition Performance at Different Data Scales

Table 1 summarizes the ASR results obtained on the 50 h and 100 h Cantonese subsets of Common Voice. Both CER and PER are computed on the official test split. A lower score indicates better recognition accuracy.

Under the 50 h training condition, the baseline Wav2Vec 2.0 model yields a CER of 26.17% (PER: 28.12%), whereas Zipformer reaches 38.83% (PER: 40.32%); both PER figures are substantially higher than the 17.8% achieved by Whistle. When the amount of labeled data is doubled to 100 h, all systems improve, the character-based models most markedly: Wav2Vec 2.0’s CER drops to 6.29% (PER: 7.19%), Zipformer’s drops to 4.16% (PER: 4.98%), and Whistle’s PER decreases from 17.8% to 16.4%.

It should be noted that only the Whistle model natively outputs phoneme sequences. For Zipformer and Wav2Vec2, which generate character-level transcriptions, we further map each recognized character into its canonical phoneme sequence using a lexicon built from the training set. This ensures that all models are evaluated consistently in terms of PER, while CER results are also reported for continuity with prior Cantonese ASR research.

Comparing like-for-like metrics, the 100 h Zipformer configuration attains the lowest character-level error, while Whistle attains the lowest PER only under the 50 h condition (17.8% versus 28.12% for Wav2Vec 2.0 and 40.32% for Zipformer), where its phoneme-level modeling of tones and fine-grained articulatory detail is most beneficial. Increasing the training data from 50 h to 100 h reduces the CER of the character-based models by roughly 76% (Wav2Vec 2.0) and 89% (Zipformer) in relative terms, underscoring Cantonese ASR’s strong reliance on annotated data. Zipformer leverages sparse attention and multi-resolution pathways to secure the best CER at 100 h, whereas Whistle, thanks to WPS and phoneme-aware augmentation, retains an advantage in PER under the 50 h scenario.

Note: Whistle natively outputs phonemes and is evaluated with PER, whereas Wav2Vec 2.0 and Zipformer natively output characters and are evaluated primarily with CER; their PER values are derived through the lexicon mapping described above. CER and PER are not directly comparable; CER results are provided only as a general reference.

4.3. Impact of Dynamic Phoneme Dropout on Whistle-ASR

Here, static dropout refers to masking the same phoneme segments across all training epochs with a fixed probability and position, while dynamic dropout samples new segments in each epoch according to the specified dropout probability, often aligned with phoneme boundaries.
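The distinction can be sketched as follows, assuming phoneme segments are given as frame intervals from a forced alignment. The seeding scheme and function names are illustrative assumptions, not the paper's implementation.

```python
import random
from typing import List, Sequence, Tuple

Segment = Tuple[int, int]  # (start_frame, end_frame), end exclusive


def sample_dropped_segments(segments: Sequence[Segment], p: float,
                            rng: random.Random) -> List[int]:
    """Return indices of phoneme segments to drop, each with probability p."""
    return [i for i in range(len(segments)) if rng.random() < p]


def static_dropout_plan(segments: Sequence[Segment], p: float,
                        utt_seed: int, num_epochs: int) -> List[List[int]]:
    """Static: sample once per utterance, reuse the same positions every epoch."""
    fixed = sample_dropped_segments(segments, p, random.Random(utt_seed))
    return [list(fixed) for _ in range(num_epochs)]


def dynamic_dropout_plan(segments: Sequence[Segment], p: float,
                         utt_seed: int, num_epochs: int) -> List[List[int]]:
    """Dynamic: re-sample the dropped segments in every epoch."""
    plans = []
    for epoch in range(num_epochs):
        # Assumed seeding scheme: a distinct random stream per (utterance, epoch).
        rng = random.Random(utt_seed * 1_000_003 + epoch)
        plans.append(sample_dropped_segments(segments, p, rng))
    return plans


# Toy alignment: 20 phoneme segments of 5 frames each.
segments = [(i * 5, i * 5 + 5) for i in range(20)]
static = static_dropout_plan(segments, p=0.5, utt_seed=7, num_epochs=10)
dynamic = dynamic_dropout_plan(segments, p=0.5, utt_seed=7, num_epochs=10)
print("distinct static plans: ", len({tuple(plan) for plan in static}))
print("distinct dynamic plans:", len({tuple(plan) for plan in dynamic}))
```

In both variants the dropped units are whole phoneme segments; only the dynamic variant exposes the model to a different set of missing phonemes for the same utterance across epochs.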

Table 2 compares the performance of static and dynamic Phoneme Dropout in the Whistle-ASR model, evaluated on training sets of 50 h and 100 h. Across both data sizes, the dynamic mechanism consistently outperforms the static one in terms of phoneme error rate (PER).

For the 50 h dataset, dynamic dropout reduces PER from 17.8% to 16.7%, achieving an absolute improvement of 1.1 percentage points (≈6.2% relative reduction). On the 100 h dataset, the gain is even larger, with PER dropping from 16.4% to 15.1%, corresponding to a 1.3 percentage-point absolute reduction (≈8% relative). This consistent advantage suggests that dynamic dropout scales better in larger training scenarios, with stronger capacity to model phoneme-level variability and uncertainty.
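For reference, the absolute and relative reductions can be recomputed directly from the reported PER endpoints. The helper below is only an arithmetic check; its name is an illustrative choice.

```python
from typing import Tuple


def per_reduction(baseline: float, improved: float) -> Tuple[float, float]:
    """Return (absolute reduction in percentage points, relative reduction in %)."""
    absolute = baseline - improved
    relative = 100.0 * absolute / baseline
    return round(absolute, 2), round(relative, 2)


# PER values (in %) for static vs. dynamic Phoneme Dropout, as reported in the text.
for hours, static_per, dynamic_per in [(50, 17.8, 16.7), (100, 16.4, 15.1)]:
    abs_pp, rel_pct = per_reduction(static_per, dynamic_per)
    print(f"{hours} h: {abs_pp} pp absolute, {rel_pct}% relative")
```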

The advantage of the dynamic approach lies in its ability to avoid the overfitting risk of static dropout, which masks fixed positions and may limit the modeling of structural variations in phonetic context. By introducing boundary-aware perturbations, the dynamic mechanism better simulates natural speech phenomena such as coarticulation, weak articulation, and tonal drift. This leads to closer alignment with the distribution of phonemes in real speech and ultimately improves recognition accuracy.

4.4. The Impact of Phoneme-Aware SpecAugment on Whistle-ASR

As shown in Table 3, we analyze the impact of different masking strategies in Phoneme-Aware SpecAugment on the performance of the Whistle-ASR model. Compared to traditional static masking (i.e., randomly applied time-frequency masking), the proposed dynamic masking strategy significantly improves phoneme recognition accuracy across different training data scales.

With 50 h of training data, the PER is reduced from 17.8% to 16.3%, achieving an absolute improvement of 1.5 percentage points, corresponding to a relative reduction of approximately 8.4%. When the training data are increased to 100 h, dynamic masking further lowers the PER from 16.4% to 14.6%, resulting in an absolute reduction of 1.8 points or a relative improvement of about 11%.

This improvement can be attributed to the fact that dynamic masking incorporates phoneme boundary information along the time axis, allowing the model to selectively mask non-critical segments while preserving semantically important regions. This targeted augmentation enhances the model’s ability to learn robust speech representations. In contrast, static masking lacks structural awareness during augmentation and may disrupt semantic integrity, potentially leading to overfitting or insufficient generalization.
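A minimal sketch of boundary-aligned time masking is given below. It assumes that each phoneme segment comes with an importance score in [0, 1] and that less important segments are sampled for masking more often. The scoring, the inverse-importance weighting, and the `mask_ratio` parameter are assumptions made for illustration; the source does not specify them here.

```python
import random
from typing import List, Sequence, Tuple

Segment = Tuple[int, int]  # (start_frame, end_frame), end exclusive


def phoneme_aware_time_mask(
    spec: Sequence[Sequence[float]],   # frames x frequency bins
    segments: Sequence[Segment],       # phoneme boundaries from forced alignment
    importance: Sequence[float],       # assumed per-segment scores in [0, 1]
    mask_ratio: float,
    rng: random.Random,
    mask_value: float = 0.0,
) -> Tuple[List[List[float]], List[int]]:
    """Mask whole phoneme segments, favoring segments with low importance."""
    n_mask = min(int(round(mask_ratio * len(segments))), len(segments))
    candidates = list(range(len(segments)))
    weights = [1.0 - importance[i] + 1e-6 for i in candidates]
    chosen: List[int] = []
    for _ in range(n_mask):
        # Weighted sampling without replacement over the remaining segments.
        pos = rng.choices(range(len(candidates)), weights=weights, k=1)[0]
        chosen.append(candidates.pop(pos))
        weights.pop(pos)
    masked = [list(frame) for frame in spec]  # copy; the input is left untouched
    for idx in chosen:
        start, end = segments[idx]
        for t in range(start, min(end, len(masked))):
            masked[t] = [mask_value] * len(masked[t])
    return masked, sorted(chosen)


# Toy example: 12 frames x 4 bins, three phoneme segments.
spec = [[1.0] * 4 for _ in range(12)]
segments = [(0, 3), (3, 7), (7, 12)]
importance = [0.9, 0.1, 0.8]
masked, dropped = phoneme_aware_time_mask(spec, segments, importance,
                                          mask_ratio=0.34, rng=random.Random(0))
print("masked segment indices:", dropped)
```

Unlike a randomly placed time mask, every masked span here starts and ends on a phoneme boundary, so neighboring phonemes are left fully intact.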

4.5. Recognition Results of Whistle-ASR Under the Full Augmentation Strategy

Table 4 presents the final recognition performance of the Whistle-ASR model under the combined application of Dynamic Phoneme Dropout and Phoneme-Aware SpecAugment across different training data scales.

On the 50 h training set, the model achieves a test-set PER of 15.9% (95% confidence interval: ±0.5 percentage points), an absolute reduction of 1.9 percentage points compared to the original unaugmented baseline (17.8%). With 100 h of training data, the PER further drops to 14.4%, a 2.0-point absolute improvement over the baseline of 16.4%.

These results demonstrate that in low-resource speech recognition scenarios, the integration of structure-aware and semantic-sensitive augmentation strategies can significantly enhance both the robustness and generalization capability of the model. Dynamic Phoneme Dropout more effectively simulates natural phonetic variations in spontaneous speech, while Phoneme-Aware SpecAugment helps preserve critical speech units by selectively masking non-essential regions. The two methods complement each other and jointly contribute to consistent and superior performance under varying training conditions.

In summary, Section 4.3, Section 4.4 and Section 4.5 present a systematic ablation study, covering four settings: the baseline without augmentation, using only Phoneme Dropout, using only Phoneme-Aware SpecAugment, and the combined full strategy. The results indicate that each augmentation individually contributes significant improvements, while their combination further enhances robustness and generalization.

4.6. Qualitative Analysis of Augmentation

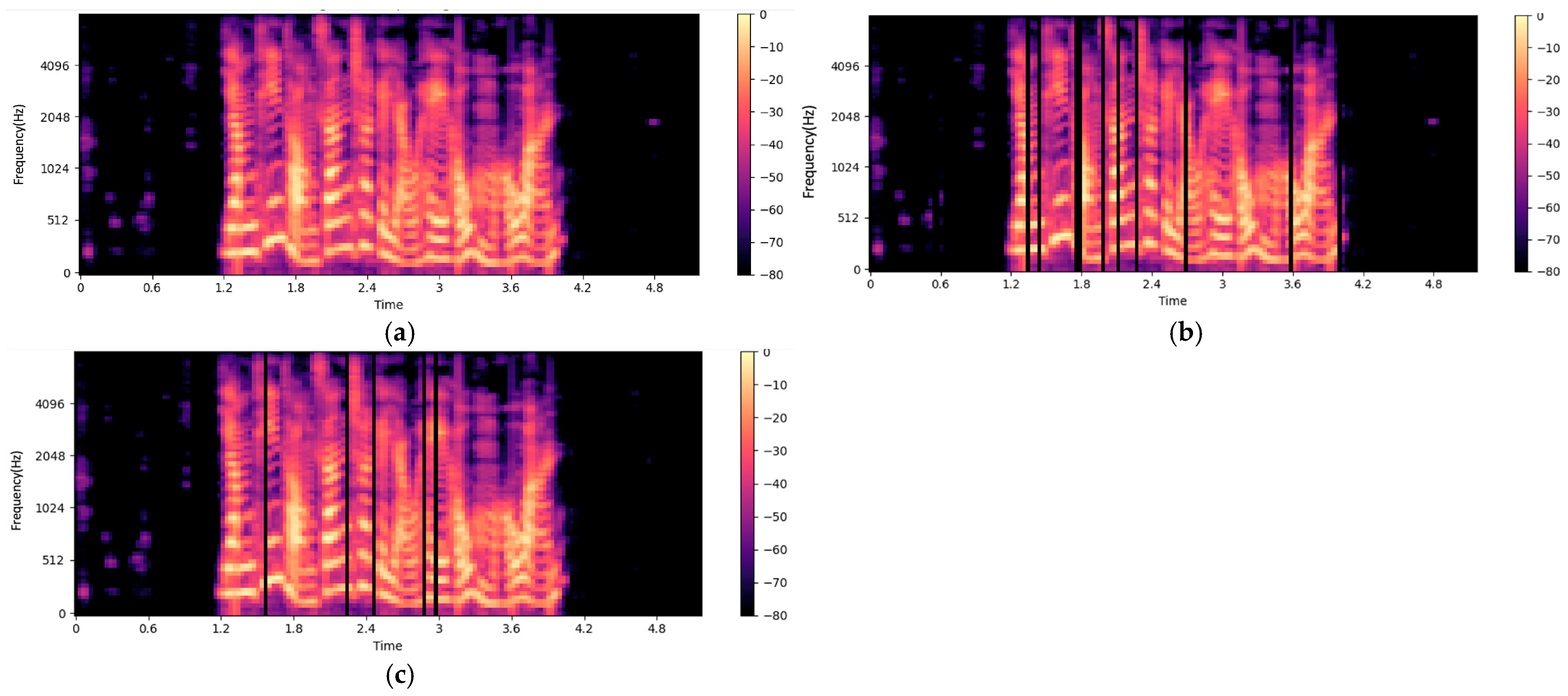

To complement the quantitative results, we further provide qualitative comparisons of spectrograms under different augmentation strategies.

As shown in Figure 6, random masking often introduces irregular gaps across time and frequency, which may disrupt phonemic boundaries and hinder the model’s ability to capture phoneme-level structures. In contrast, phoneme-aware masking is aligned with phoneme boundaries and selectively removes less informative regions, thereby preserving essential phonemic cues. This leads to augmented samples that are linguistically more consistent and closer to real-world pronunciation variations such as phoneme reduction or deletion.

5. Discussion

This study proposed two phoneme-aware augmentation strategies—Dynamic Phoneme Dropout and Phoneme-Aware SpecAugment—and demonstrated their effectiveness in improving low-resource Cantonese ASR using the Whistle framework. The experimental results support our central hypothesis that linguistically structured augmentation can significantly enhance model robustness and generalization under limited data conditions.

Compared with traditional static masking, the dynamic dropout mechanism yielded consistent gains by introducing phoneme boundary awareness. This design not only reflects the natural variability found in spontaneous Cantonese speech (e.g., phoneme reduction, tonal drift) but also preserves semantic integrity by avoiding indiscriminate masking. Similarly, the integration of phoneme-level constraints into SpecAugment allowed the model to retain important phonetic information while perturbing less relevant segments, thereby reducing overfitting and improving accuracy.

The combined augmentation strategy further amplified these benefits. As shown in Table 4, the joint application of both methods lowered the PER to 15.9% and 14.4% on the 50 h and 100 h datasets, respectively, representing an absolute improvement of up to two percentage points over the baseline. These results confirm that structured perturbations at the phoneme level, especially when guided by alignment boundaries and attention-aware heuristics, provide a powerful alternative to purely random augmentation.

Compared to prior works in low-resource ASR, our approach requires no additional human annotation or pronunciation dictionaries, relying only on forced alignment (MFA) and a G2P lexicon. This makes the method more practical and scalable, particularly for dialects and tonal languages where annotation cost is high and phonetic variation is complex.

In summary, this study confirms that combining structural and semantic awareness at the phoneme level offers substantial advantages for end-to-end ASR in tonal low-resource languages, paving the way for more linguistically informed data augmentation in future speech recognition systems.

6. Conclusions

6.1. Limitations

Despite the progress achieved in this study, several limitations remain. The use of a single dataset—Common Voice—limits domain diversity, as it does not cover real-world scenarios such as telephone speech, background noise, or multi-speaker conversations. As a result, the out-of-domain robustness of the model remains unverified. In addition, the scope of model experimentation has yet to be extended to include more acoustic architectures.

6.2. Summary

Experimental results demonstrate that structured phoneme-aware augmentation, especially the combination of boundary-aligned dynamic Phoneme Dropout and semantically sensitive PA-SpecAugment, significantly improves the robustness of Whistle-ASR in low-resource Cantonese recognition. Without requiring extra manual annotation, this framework leverages MFA alignment and G2P conversion to reduce the PER to 14.4% with only 100 h of labeled data (Table 4), offering a lightweight and efficient engineering pathway for end-to-end recognition of tone-rich dialects like Cantonese.

6.3. Future Work

Future research will extend in several directions. First, we plan to expand the coverage of the framework by incorporating a wider range of acoustic models and encoder architectures (e.g., Conformer, Zipformer, Paraformer) and conducting systematic training and inference comparisons across multiple mainstream open-source toolkits (e.g., CAT, ESPnet, Fairseq). This will enable a comprehensive evaluation of how different model architectures and implementation paradigms affect ASR performance in low-resource settings.

Second, we will focus on the deployability of the framework in practical applications, with an emphasis on evaluating lightweight architectures for deployment on edge devices. In this process, we will further assess the feasibility and optimization of key augmentation components used in this study (such as MFA-based phoneme alignment and attention-weighted sampling) for on-device use. Although these operations are only applied during training and are not involved in inference, their complexity could add overhead to the deployment pipeline. Therefore, we will explore more efficient alternatives. Additionally, we will systematically measure deployment-time performance metrics, including latency, throughput, and memory usage, to better support low-resource ASR in real-world scenarios.

Third, we will develop and train an end-to-end system dedicated to phoneme-level modeling to strengthen the explicit modeling of phoneme-level structures. This system will be used to analyze phoneme boundaries, phonological variations, and tonal shifts in speech signals, thereby providing a more accurate foundation for phoneme-aware augmentation strategies (e.g., TTS-based synthesis, phoneme substitution). It may also serve as a front-end module to be jointly optimized with the main ASR decoder, further improving overall recognition accuracy.

Finally, we will explore more fine-grained multilingual transfer learning strategies, combining cross-lingual phoneme mapping with domain adaptation methods. Special attention will be given to extending the proposed framework to other low-resource and tonal languages (e.g., Vietnamese, Thai). Once suitable phoneme-aligned corpora are available, we will conduct cross-lingual experiments on these languages to verify the framework’s applicability and robustness in broader linguistic contexts.

6.4. Practical Implications and Future Applications

Given the lightweight and modular design of the proposed augmentation framework, it is well-suited for deployment in embedded systems and edge devices where computational resources are constrained. In future work, we plan to explore the integration of the Whistle-ASR model—augmented with phoneme-aware strategies—into low-power microcontrollers, smart IoT terminals, and custom speech recognition chips for Cantonese and other tonal languages.

Potential application scenarios for the proposed framework include its deployment in smart wearable devices equipped with on-device speech recognition capabilities, such as hearing aids, smart glasses, and fitness trackers. It can also be applied to voice-controlled nanoscale instruments in biomedical or industrial inspection environments, where accurate command recognition is required under conditions of noise or data scarcity. Furthermore, the framework is well suited for multilingual, edge-based ASR modules used in public service robots or smart city terminals deployed in Cantonese-speaking regions. In addition, it can support real-time speech interfaces in mobile and vehicular embedded platforms, which demand low-latency and noise-robust Cantonese interaction. By reducing reliance on large-scale training data and external linguistic resources, the proposed approach contributes to scalable and hardware-friendly ASR solutions, particularly in resource-constrained or privacy-sensitive environments.