1. Introduction

Driven by the energy transition and the “dual carbon” goals, photovoltaic (PV) systems significantly reduce air pollution by producing clean energy while reducing reliance on primary raw materials and traditional energy sources, thus providing strong support for the development of a circular economy [1,2]. However, the intermittent and unpredictable nature of PV power generation poses significant challenges to the stable operation of power systems, to electricity market transactions, and to the operational management of new energy power plants [3]. Consequently, accurate prediction of PV power generation is of paramount practical importance for optimizing grid operation, ensuring power system stability, and ultimately advancing sustainable development goals [4,5].

PV power generation forecasting can be categorized by time scale into ultra-short-term forecasting (0–24 h), short-term forecasting (24–72 h), and medium- to long-term forecasting (1–12 months) [6]. Compared with medium- to long-term forecasting, short-term forecasting covers a narrower time horizon and offers higher prediction accuracy, allowing it to capture rapid fluctuations in PV power and provide precise data support for real-time grid dispatch [7].

Currently, PV power prediction methods can be classified into physical models, statistical models, and artificial intelligence methods [8]. Physical models depend on complex meteorological parameters and equipment characteristics, and their generalization capability is limited in unstructured scenarios [9]. Statistical models, such as ARIMA [10] and SVM [11], excel at handling linear relationships but struggle to capture nonlinear features and multivariate coupling relationships. With the rapid advancement of artificial intelligence technologies, AI methods have progressively become a focal point of research in PV power prediction [12]. References [13,14] use long short-term memory (LSTM) networks for PV power prediction, and their results indicate that LSTM models can reduce prediction errors. However, LSTM has inherent issues when processing extremely long sequences, such as information loss, vanishing and exploding gradients, and slow training. The recently proposed xLSTM architecture addresses these limitations by introducing an exponential gating mechanism and a matrix memory unit. It substantially improves both expressive capability and parallel computing efficiency, making it better suited to the complex fluctuation characteristics of PV power. Reference [15] further integrates convolutional neural networks to extract spatial features and combines them with LSTM to form a hybrid model. While this approach enhances gradient stability, it overlooks the importance of feature selection, which ultimately reduces prediction accuracy. Reference [16] constructed a model embedding layer by extracting relevant features and employed the Informer model for PV generation prediction. However, the Informer model places high demands on input data quality, and its predictive performance can degrade under extreme conditions, such as very small data volumes or high noise levels. To address these challenges, recent studies have primarily adopted hybrid prediction models that combine the complementary strengths of multiple methods. Relevant research has mainly concentrated on two key areas: data preprocessing and prediction model construction. By enhancing data quality and feature representation, these studies have significantly improved prediction accuracy and robustness.

In the data preprocessing stage, PV power generation is influenced by both meteorological factors and equipment operating factors. From the perspective of power generation principles, meteorological factors directly or indirectly affect power generation capacity by influencing key stages of PV conversion; equipment operating factors, on the other hand, impact output power by altering the performance of light absorption or electrical energy conversion pathways. This results in raw data containing a wealth of relevant features, but also containing redundant information, which can lead to reduced prediction accuracy [

17]. Therefore, effective feature selection methods are crucial for improving the performance of PV prediction models. Reference [

18] employed the Pearson correlation coefficient (PCC) to filter the original input features, which effectively eliminated the influence of weakly correlated variables while conserving computational resources. However, this approach faces limitations when dealing with data containing strongly correlated variables. Reference [

19] utilized the Maximal Information Coefficient (MIC) to extract features relevant to PV power generation, though its parameter selection process is somewhat subjective. Reference [

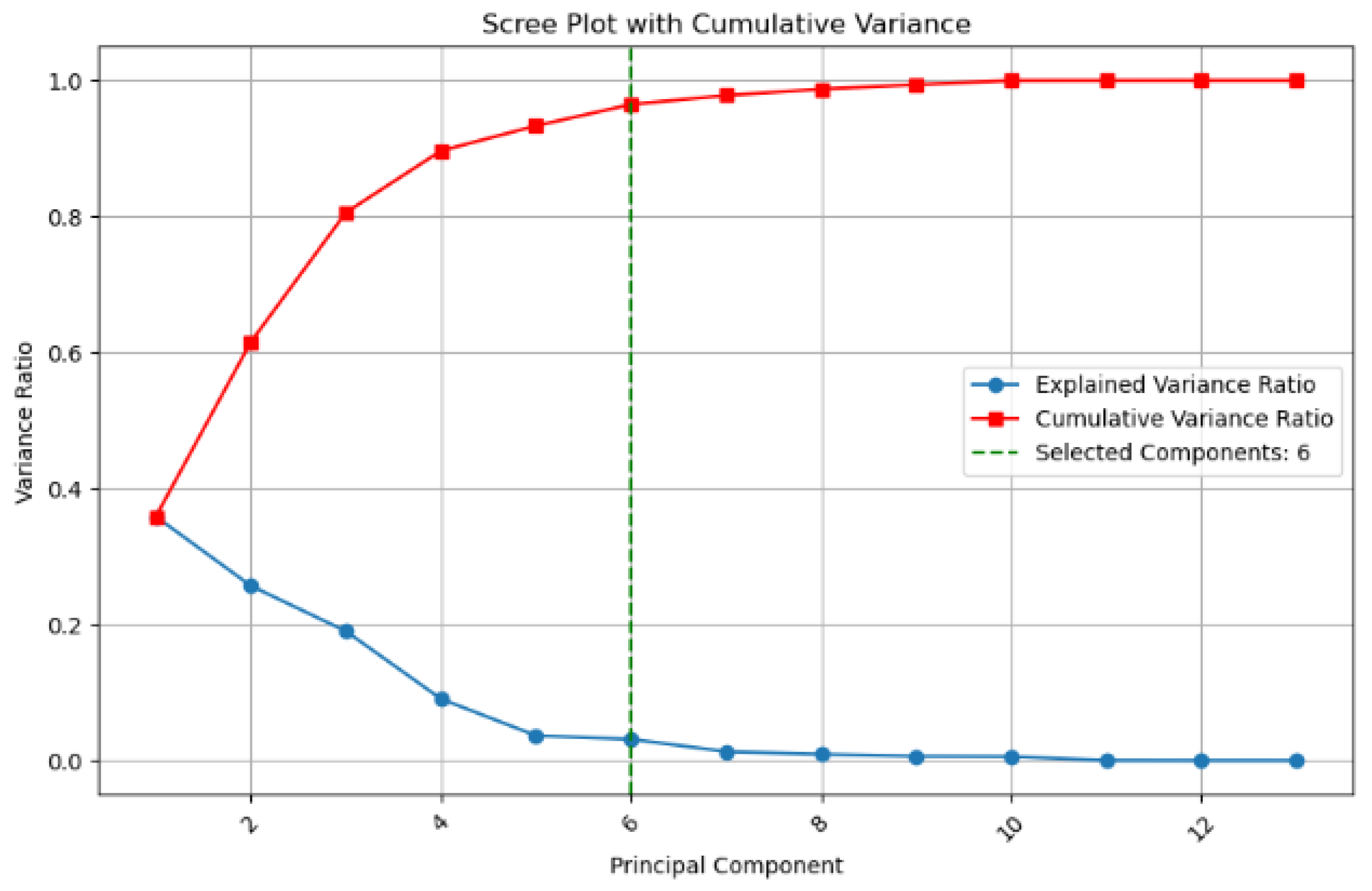

20] proposes the use of principal component analysis (PCA) to construct a mixed prediction model, but it has limitations such as the inability to effectively identify key features and difficulty in capturing nonlinear relationships. Reference [

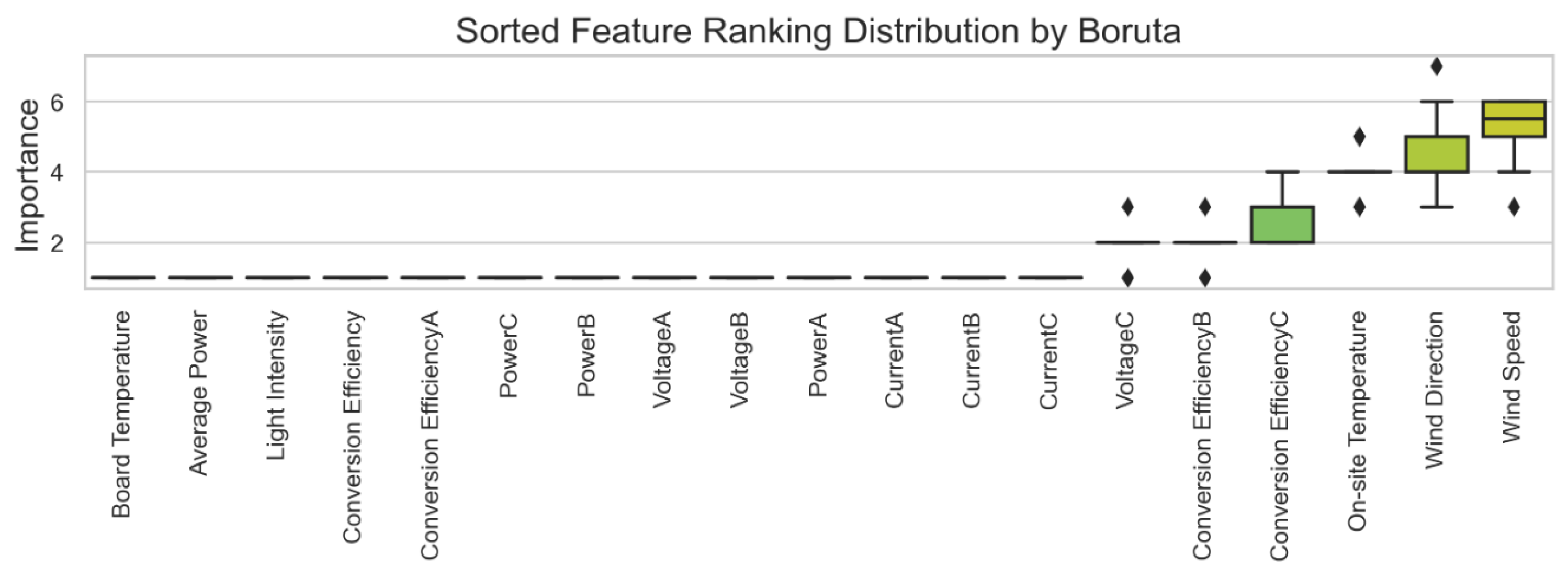

21] employs the Boruta algorithm based on the wrapper method for feature selection, which improves wind speed prediction performance. However, the feature set selected by the Boruta algorithm may contain redundant features, necessitating further optimization. Considering a single feature selection method alone is insufficient to balance feature importance and redundant feature removal, adopting a feature combination strategy emerges as a viable solution direction.

PV power generation exhibits inherent temporal patterns with approximate time-translation symmetry. To improve prediction accuracy by addressing both complex temporal dependencies and the exploitation of this symmetry, we propose the Bi-xLSTM-Informer model. The main contributions of this work are as follows:

- (1)

To address the issue of redundant features in PV data, this study proposes a Boruta-PCA feature selection method. The Boruta algorithm evaluates feature importance using random forests and screens out the feature variables that are significantly related to the target variable. A PCA transformation then constructs an orthogonal feature subspace, removing redundant correlations and creating a symmetric, decorrelated representation that enhances model generalization. This two-stage method reduces interference from redundant information while overcoming the limitations of traditional single-method feature selection.

- (2)

A Bi-xLSTM-Informer hybrid model is proposed that explicitly incorporates temporal symmetry through a bidirectional mLSTM layer that processes time-flipped sequences. Within the xLSTM framework, the sLSTM structure is retained and a bidirectional mLSTM processing layer is constructed: sequence flipping generates reversed inputs, and feature concatenation followed by convolutional fusion strengthens bidirectional feature extraction. The sparse self-attention mechanism of the Informer module captures long-term dependencies in the time series, enhancing the model's robustness in modeling nonlinear temporal features. Together, these two components allow the model to extract effective information across different time scales, thereby improving prediction accuracy.

- (3)

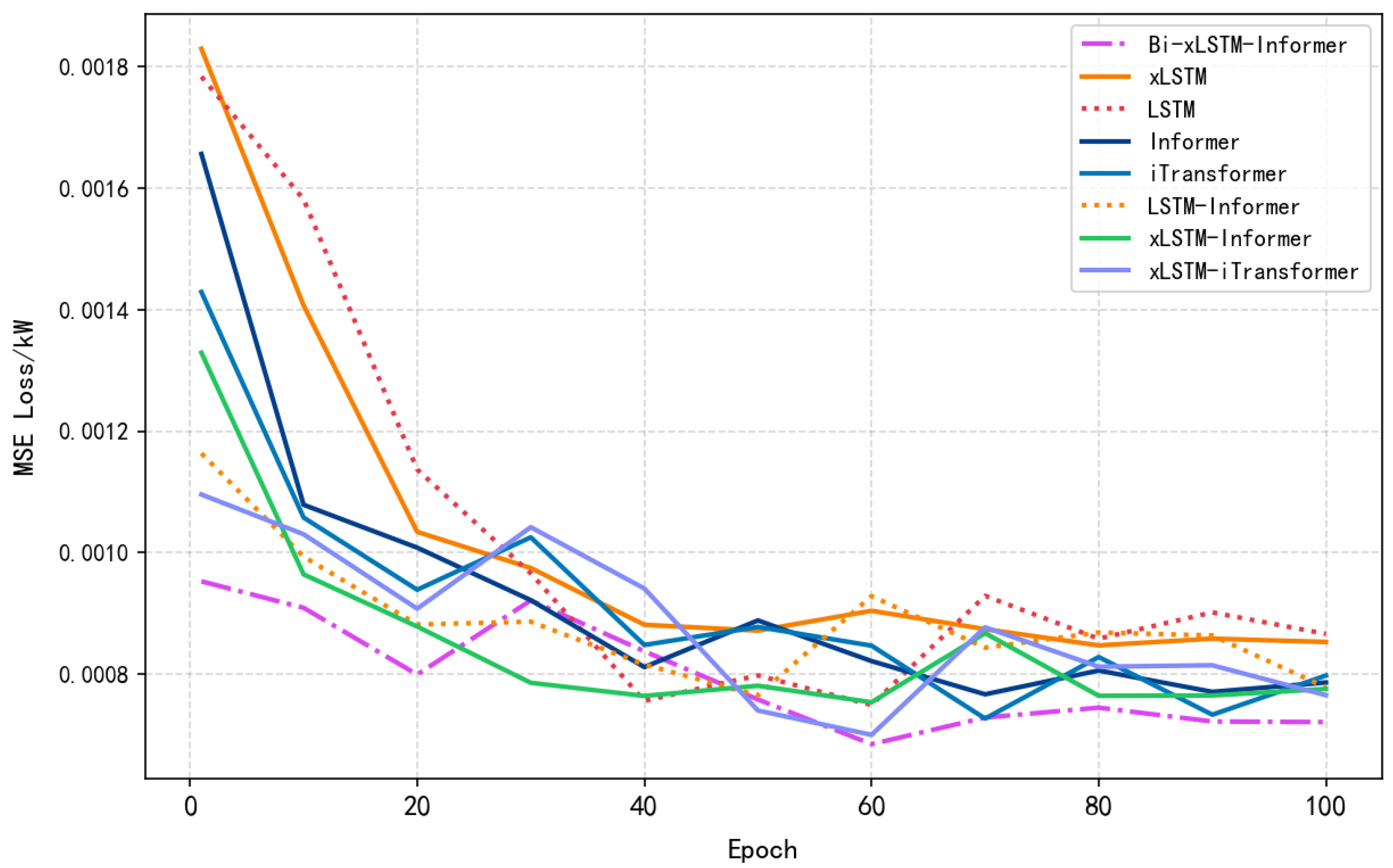

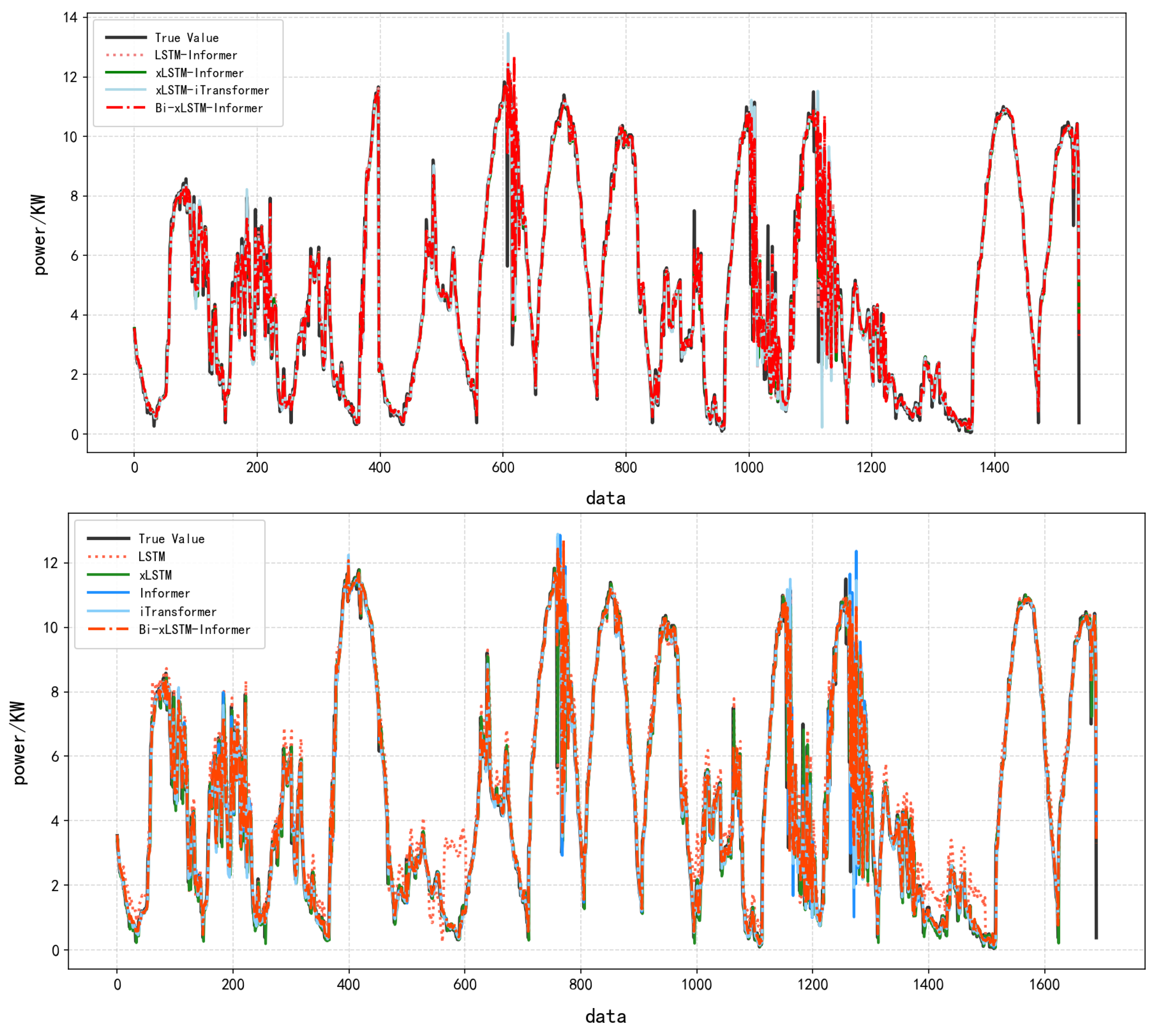

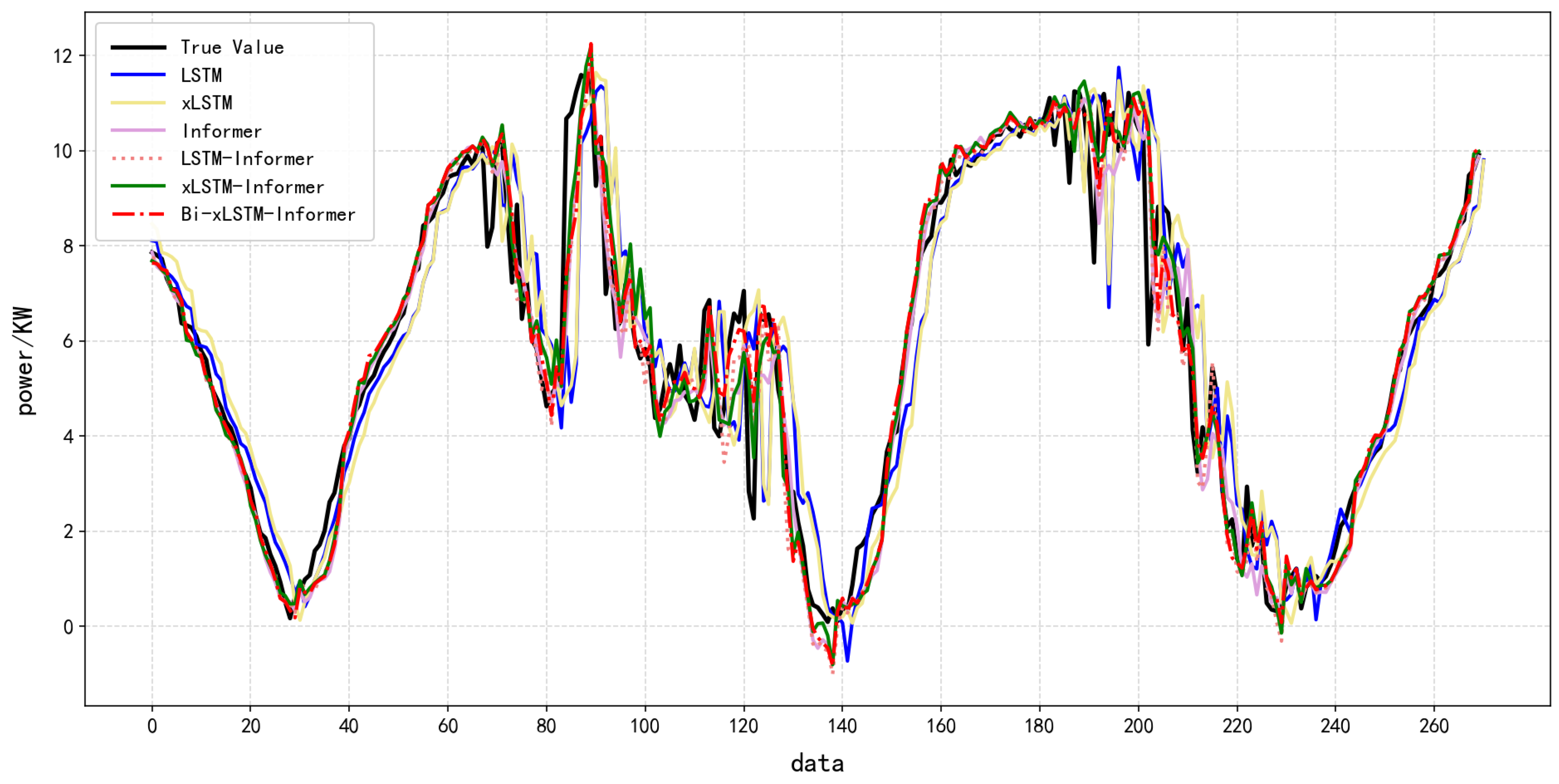

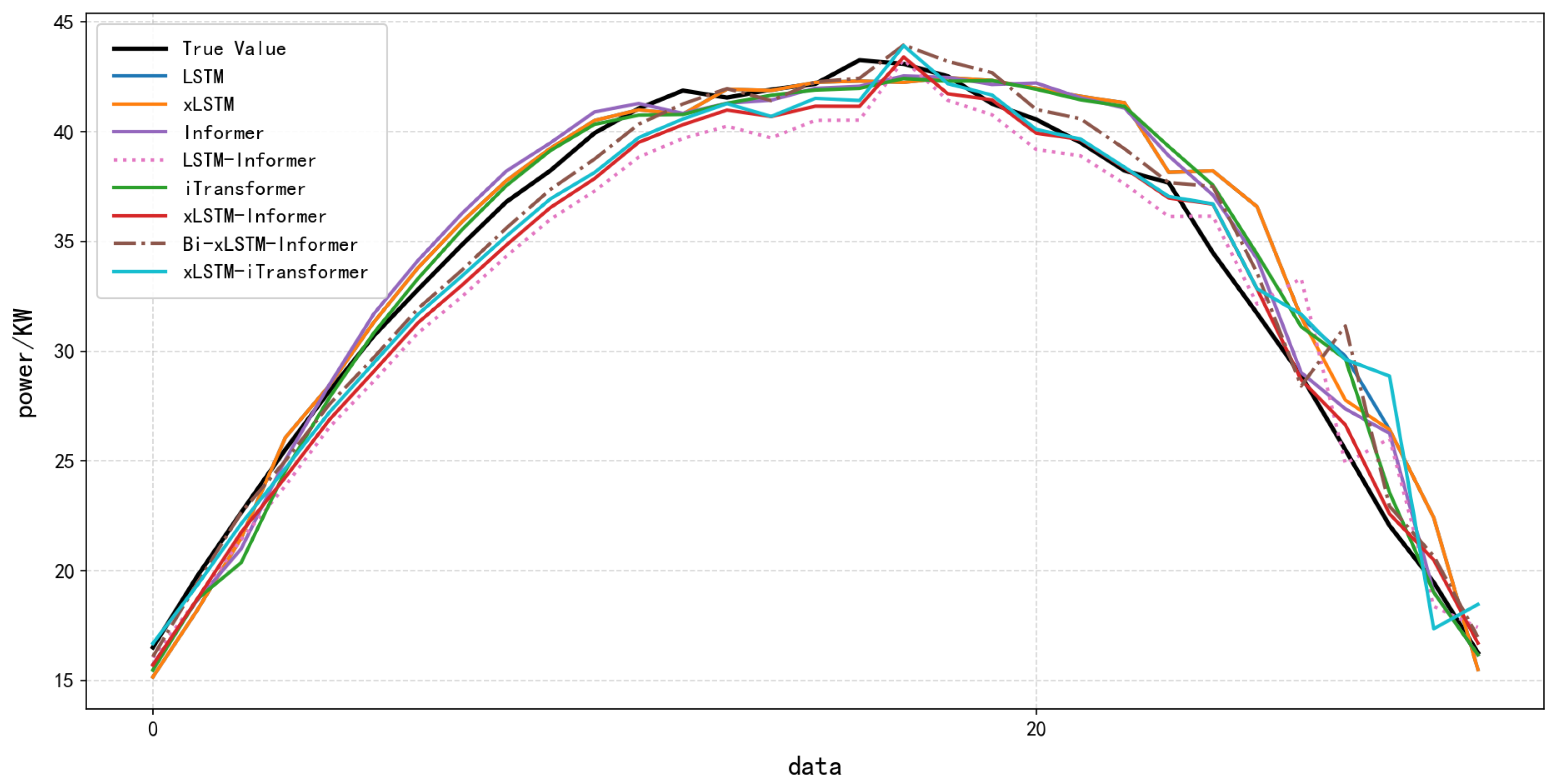

A comparative experiment was conducted using data from the PV Power Station Artificial Intelligence Operations and Maintenance Big Data Processing and Analysis Competition, comparing and analyzing eight benchmark models (LSTM-Informer, xLSTM, LSTM, xLSTM-Informer, xLSTM-iTransformer, iTransformer and Informer) to validate that the proposed prediction method achieves higher accuracy.
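Contribution (1) describes a two-stage Boruta-PCA selection. The sketch below covers only the second (PCA) stage, assuming the Boruta stage has already returned the retained columns; the function name `pca_decorrelate`, the 0.95 explained-variance threshold, and the synthetic irradiance, temperature, and humidity data are hypothetical choices for illustration, not the authors' settings.

```python
import numpy as np

def pca_decorrelate(X_selected, var_ratio=0.95):
    """PCA stage of a Boruta-PCA pipeline (sketch).

    X_selected: (n_samples, n_features) array holding only the columns kept
    by a Boruta run, which is assumed to have been performed beforehand.
    var_ratio: hypothetical cumulative explained-variance threshold.
    """
    # Standardize each feature so that units (W/m2, degC, %) do not dominate.
    mu = X_selected.mean(axis=0)
    sigma = X_selected.std(axis=0)
    sigma[sigma == 0] = 1.0
    Z = (X_selected - mu) / sigma
    # SVD of the standardized data: rows of Vt are the principal axes.
    _, s, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    # Smallest number of components whose cumulative variance reaches var_ratio.
    k = min(int(np.searchsorted(np.cumsum(explained), var_ratio)) + 1, len(s))
    components = Vt[:k]                      # orthonormal rows, shape (k, n_features)
    return Z @ components.T, components, explained[:k]

# Hypothetical data: irradiance, a temperature strongly tied to it, and humidity.
rng = np.random.default_rng(0)
irr = rng.uniform(0, 1000, 500)
temp = 0.02 * irr + rng.normal(0, 1, 500)
hum = rng.uniform(20, 90, 500)
X = np.column_stack([irr, temp, hum])
scores, comps, ev = pca_decorrelate(X)
```

Because the retained components are orthonormal and the data are centered, the covariance matrix of `scores` is diagonal, which is the decorrelated representation the contribution refers to.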

2. Bi-xLSTM-Informer Prediction Model

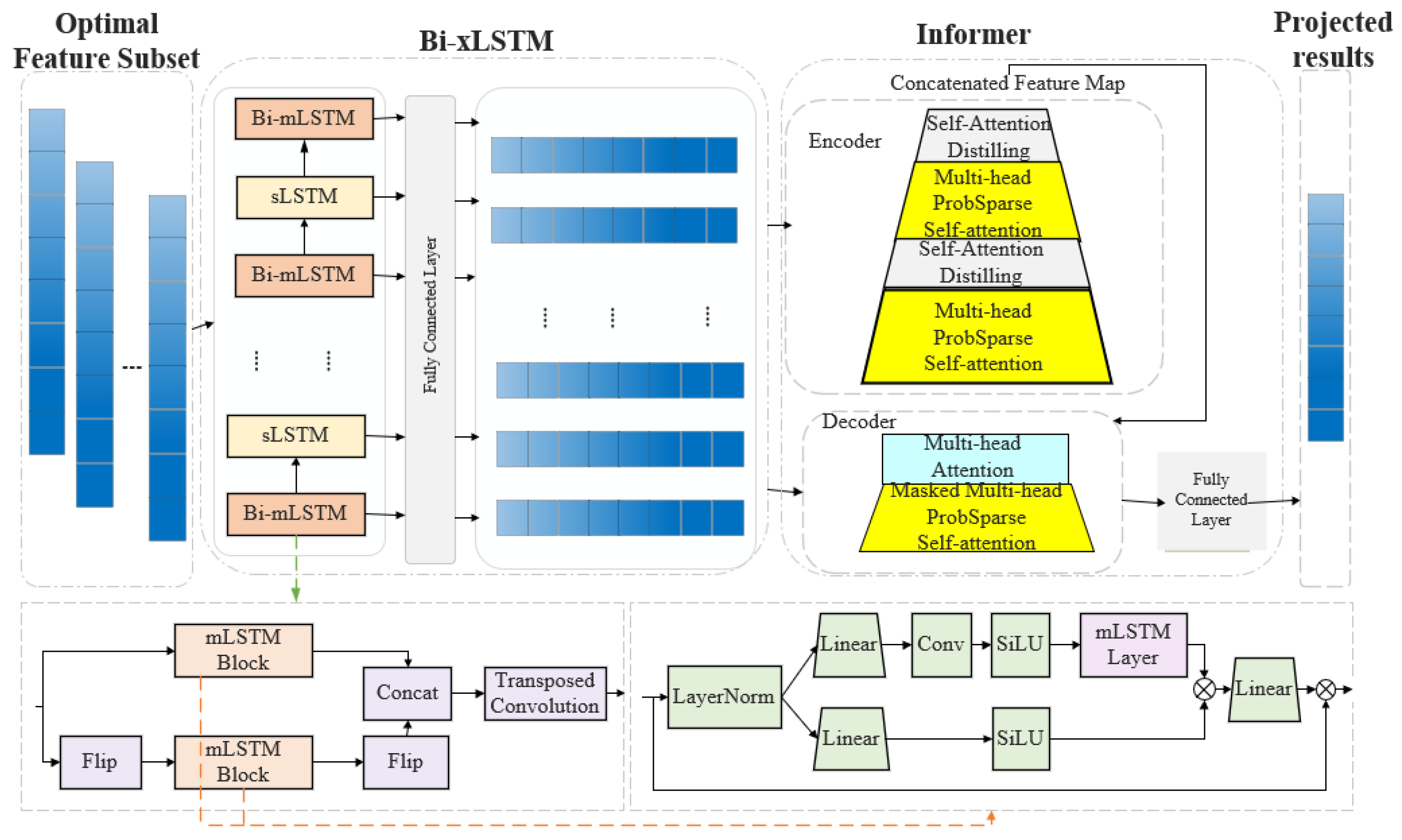

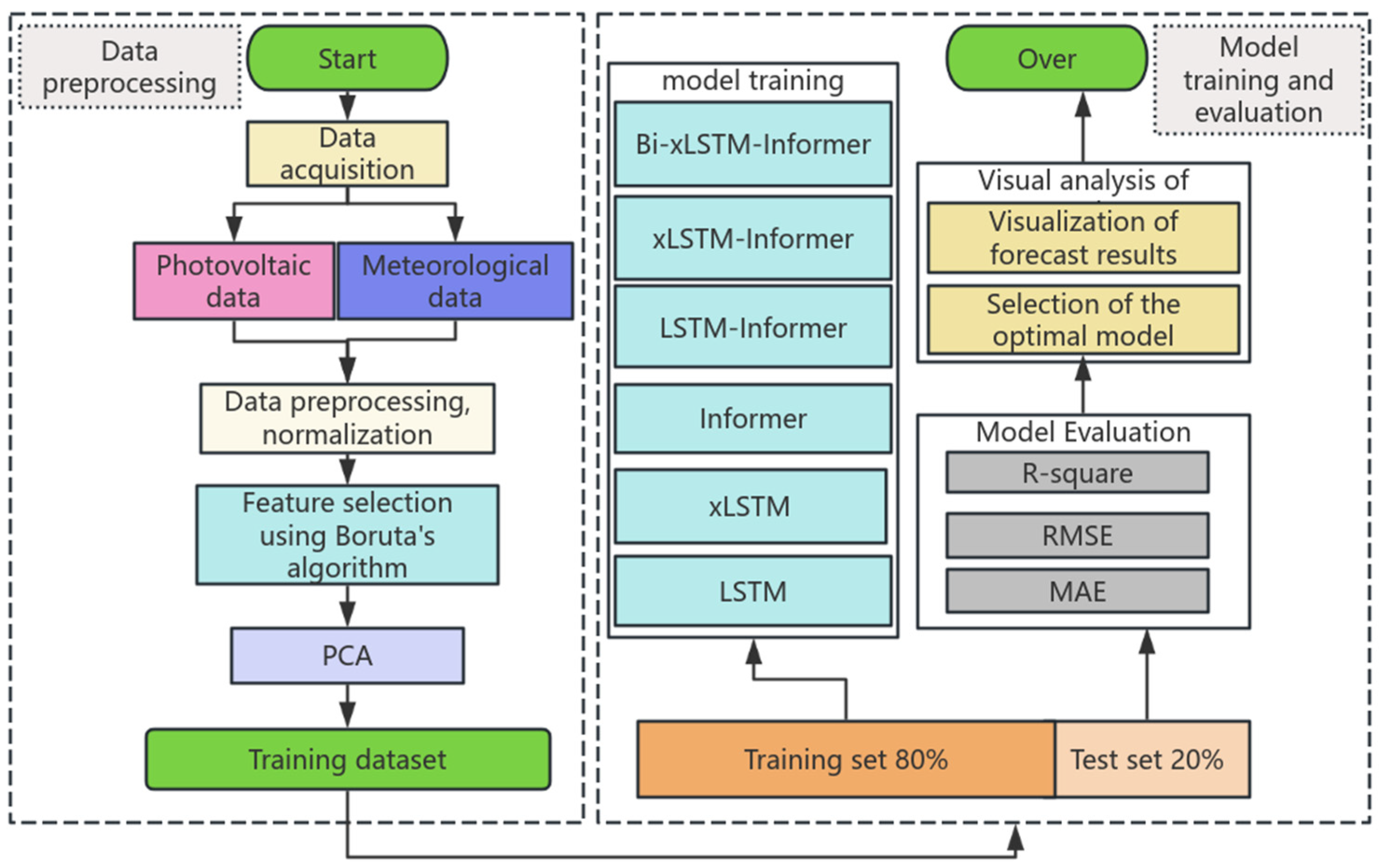

This study introduces the Bi-xLSTM-Informer model to address feature redundancy and the limited gains in prediction accuracy in PV power forecasting. The overall architecture of the model is illustrated in Figure 1. The prediction process is outlined as follows:

The Bi-xLSTM module, consisting of sLSTM and Bi-mLSTM components, is employed to extract local features from the input sequence. Specifically, sLSTM captures short-term dependencies within the sequence, while the Bi-mLSTM layer enhances contextual awareness by processing both the forward sequence and the reversed sequence (generated by the Flip operation) with the same mLSTM block. The fused local features are then fed into the Informer module, which performs global dependency analysis on the sequence data. This module also reduces prediction errors through a dynamic compensation mechanism, further enhancing overall prediction accuracy. Finally, the global features are mapped by a fully connected layer to produce the PV power prediction values.

4. Bi-xLSTM and Informer Models

4.1. Bi-xLSTM Model

To clarify the core recurrent neural network architectures discussed in this paper and their evolutionary relationship, Table 1 compares the core concepts and features of LSTM, its extended versions (sLSTM and mLSTM), and the overall xLSTM framework.

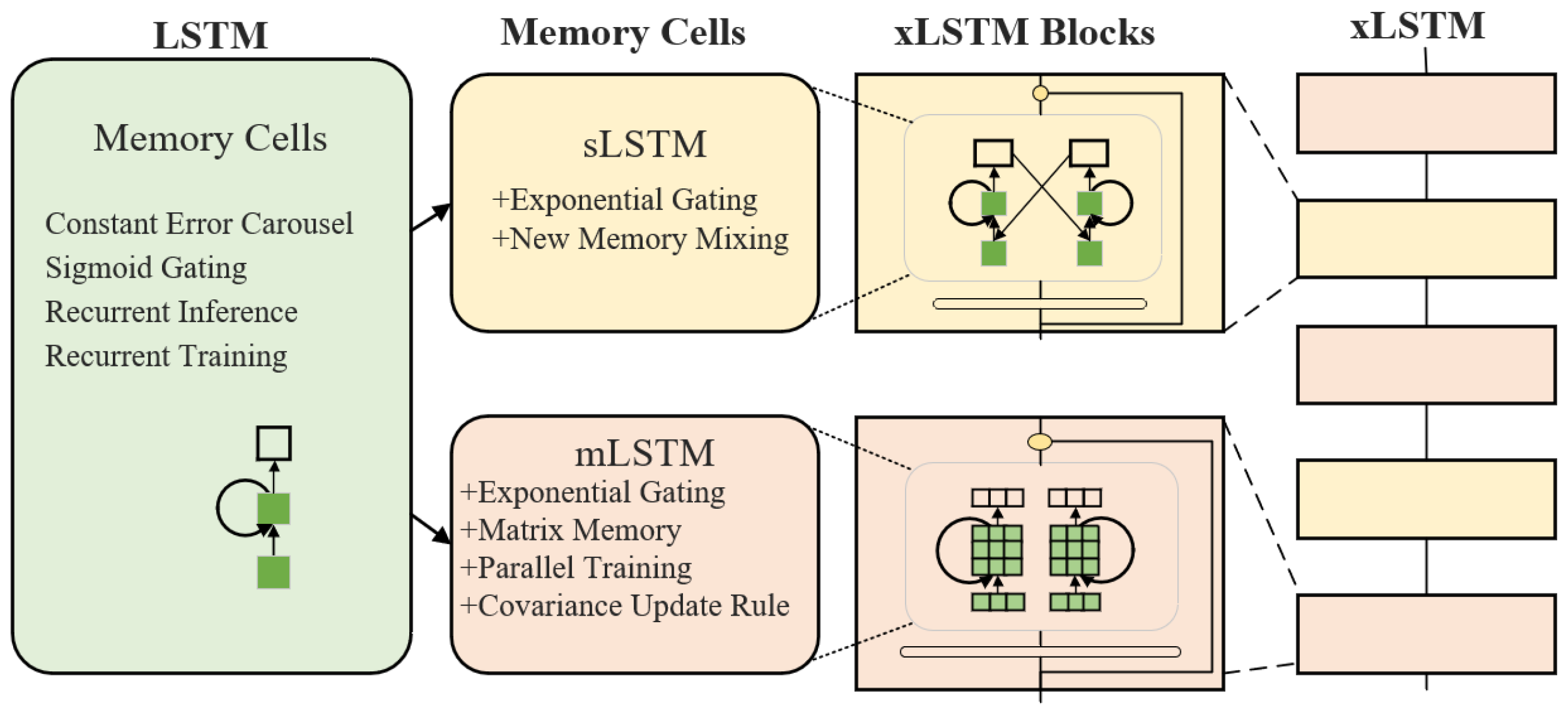

xLSTM is an extended architecture based on the traditional LSTM network. By incorporating an exponential gating mechanism, a novel memory structure, and a residual stacking architecture, it significantly enhances performance in sequence prediction tasks [25]. xLSTM introduces two new variants: sLSTM and mLSTM.

The complete xLSTM architecture is composed of several xLSTM blocks arranged sequentially, with each block constructed by alternately stacking sLSTM and mLSTM blocks. The overall configuration is illustrated in Figure 2.

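sLSTM retains the scalar memory cell while introducing a new memory mixing technique and a normalizer state, allowing memory cells to mix information through recurrent connections and improving the efficiency of information storage and use. In contrast, mLSTM uses a matrix-form memory cell updated by a covariance update rule, so that the matrix memory adapts dynamically to the data, increasing storage capacity and improving the retrieval of sparse information. Additionally, mLSTM removes the hidden-to-hidden connections between time steps, breaking the sequential computation pattern of traditional LSTMs and enabling fully parallel processing. The forward propagation of mLSTM is as follows [26]:

$$
\begin{aligned}
\mathbf{C}_t &= f_t\,\mathbf{C}_{t-1} + i_t\,\mathbf{v}_t\mathbf{k}_t^{\top} \\
\mathbf{n}_t &= f_t\,\mathbf{n}_{t-1} + i_t\,\mathbf{k}_t \\
\mathbf{h}_t &= \mathbf{o}_t \odot \frac{\mathbf{C}_t\mathbf{q}_t}{\max\left\{\left|\mathbf{n}_t^{\top}\mathbf{q}_t\right|,\,1\right\}} \\
\mathbf{q}_t &= \mathbf{W}_q\mathbf{x}_t + \mathbf{b}_q \\
\mathbf{k}_t &= \tfrac{1}{\sqrt{d}}\,\mathbf{W}_k\mathbf{x}_t + \mathbf{b}_k \\
\mathbf{v}_t &= \mathbf{W}_v\mathbf{x}_t + \mathbf{b}_v \\
i_t &= \exp\left(\mathbf{w}_i^{\top}\mathbf{x}_t + b_i\right) \\
f_t &= \sigma\left(\mathbf{w}_f^{\top}\mathbf{x}_t + b_f\right) \ \text{or} \ \exp\left(\mathbf{w}_f^{\top}\mathbf{x}_t + b_f\right) \\
\mathbf{o}_t &= \sigma\left(\mathbf{W}_o\mathbf{x}_t + \mathbf{b}_o\right)
\end{aligned}
$$

where $\mathbf{C}_t$ is the current cell state, $\mathbf{n}_t$ is the normalizer state, $\mathbf{h}_t$ is the hidden state, $\mathbf{o}_t$ is the output gate, $\mathbf{v}_t$ is the value vector, $\mathbf{k}_t$ is the key vector, $\mathbf{q}_t$ is the query vector, $i_t$ is the input gate, and $f_t$ is the forget gate; $\mathbf{x}_t$ is the input at time step $t$, $\mathbf{W}$, $\mathbf{w}$, $\mathbf{b}$, and $b$ denote learnable weights and biases, $d$ is the key dimension, and $\sigma(\cdot)$ is the sigmoid function.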
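To make the mLSTM recurrence concrete, here is a minimal NumPy sketch of one unstabilized step, written from the mLSTM forward pass in [26] as we understand it: exponential input gate, sigmoid forget gate, covariance update of the matrix memory, and normalized retrieval with the query. The parameter dictionary, its key names, the dimensions, and the fixed biases are hypothetical; the biases of the query, key, value, and output projections are omitted, and the log-space stabilizer used in practice is left out.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def mlstm_step(x_t, C_prev, n_prev, p):
    """One recurrent mLSTM step (unstabilized sketch, hypothetical parameter names)."""
    d = p["W_k"].shape[0]
    q = p["W_q"] @ x_t                         # query vector
    k = p["W_k"] @ x_t / np.sqrt(d)            # key vector, scaled by 1/sqrt(d)
    v = p["W_v"] @ x_t                         # value vector
    i_t = np.exp(p["w_i"] @ x_t + p["b_i"])    # exponential input gate (scalar)
    f_t = sigmoid(p["w_f"] @ x_t + p["b_f"])   # sigmoid forget gate (scalar)
    o_t = sigmoid(p["W_o"] @ x_t)              # output gate (vector)
    C = f_t * C_prev + i_t * np.outer(v, k)    # matrix memory, covariance update
    n = f_t * n_prev + i_t * k                 # normalizer state
    h = o_t * (C @ q) / max(abs(n @ q), 1.0)   # normalized retrieval with the query
    return h, C, n

rng = np.random.default_rng(0)
d_in, d, T = 4, 8, 24                          # e.g. 24 hourly steps with 4 features
p = {name: rng.normal(size=(d, d_in)) for name in ("W_q", "W_k", "W_v", "W_o")}
p.update(w_i=0.1 * rng.normal(size=d_in), w_f=0.1 * rng.normal(size=d_in), b_i=0.0, b_f=1.0)
C, n = np.zeros((d, d)), np.zeros(d)
H = []
for x_t in rng.normal(size=(T, d_in)):
    h, C, n = mlstm_step(x_t, C, n, p)         # note: h is never fed back as an input
    H.append(h)
H = np.stack(H)
```

Because no previous hidden state enters any gate, every gate and projection can be computed for all time steps at once, which is what permits the parallel formulation mentioned above.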

Standard xLSTM networks process PV power time series in a single forward direction, which limits their ability to fully exploit the temporal dependencies within the data. A bidirectional architecture processes sequence data in both the forward and backward directions, enabling more comprehensive capture of contextual dependencies in the input sequence. Among the xLSTM variants, sLSTM relies on memory mixing through recurrent connections and supports a multi-head architecture.

However, memory mixing within each head requires sequential processing, and a bidirectional adaptation would require cross-head communication, which may increase design complexity. In contrast, mLSTM is based on a matrix memory structure and covariance update rules. Its key-value storage and query mechanism is direction-independent, so a bidirectional extension does not disrupt its original mathematical structure. This property has allowed bidirectionally adapted mLSTM to significantly improve language modeling performance.

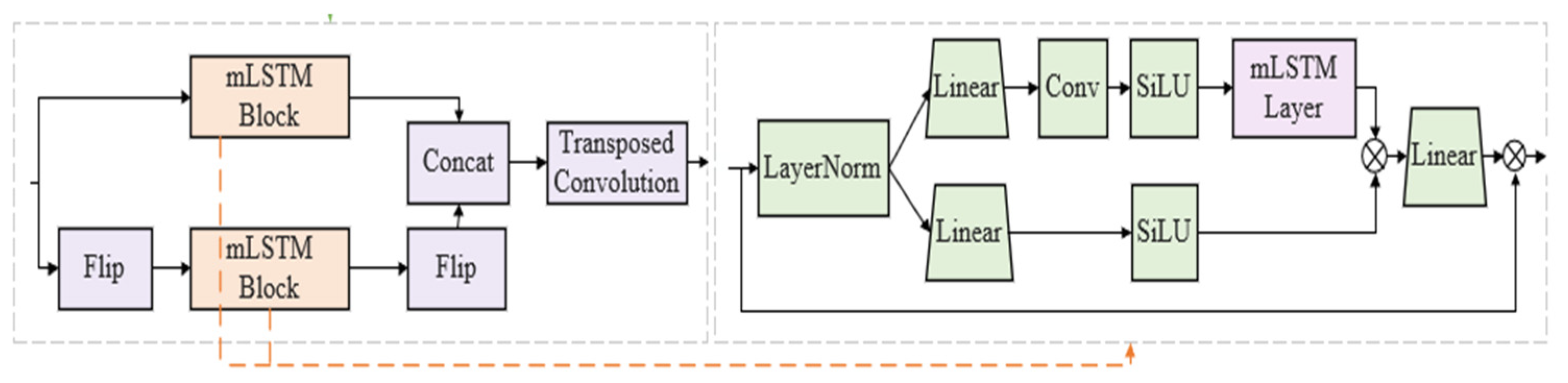

Based on this analysis, this study proposes a Bi-xLSTM model consisting of an sLSTM network and a Bi-mLSTM network. In the Bi-mLSTM network, a single mLSTM block with shared parameters processes both the original sequence and the reversed sequence produced by a flip operation. The bidirectional outputs are concatenated and fused by a Conv1D convolution for feature extraction. This mechanism enables the Bi-xLSTM model to capture forward and reverse temporal features simultaneously, uncovering the intrinsic connections between each time step and both the preceding and following data within the input window, thereby improving data utilization and prediction accuracy. The computational formula for the Bi-xLSTM network structure is

where the Flip(·) operation generates a time-reversed copy of the input sequence, enabling the model to learn temporally symmetric representations. This is critical for capturing patterns that are invariant under time-direction transformations. The structure of the Bi-mLSTM model is illustrated in Figure 3.
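The Bi-mLSTM data flow (one shared mLSTM block applied to the sequence and to its Flip, concatenation, then Conv1D fusion) can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: the backward outputs are flipped back so that both branches are aligned in time (the text does not specify this), the Conv1D uses zero "same" padding with a hypothetical kernel width of 3, the forget-gate bias is fixed at 1.0, and all weights and names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def mlstm_scan(X, p):
    """Run one unstabilized mLSTM block over X of shape (T, d_in); returns (T, d)."""
    d = p["W_k"].shape[0]
    C, n, H = np.zeros((d, d)), np.zeros(d), []
    for x in X:
        q, k, v = p["W_q"] @ x, p["W_k"] @ x / np.sqrt(d), p["W_v"] @ x
        i_t = np.exp(p["w_i"] @ x)             # exponential input gate
        f_t = sigmoid(p["w_f"] @ x + 1.0)      # forget-gate bias fixed at 1.0 (assumption)
        C = f_t * C + i_t * np.outer(v, k)
        n = f_t * n + i_t * k
        H.append(sigmoid(p["W_o"] @ x) * (C @ q) / max(abs(n @ q), 1.0))
    return np.stack(H)

def conv1d_same(Z, W):
    """1-D convolution over time with zero 'same' padding.
    Z: (T, c_in); W: (K, c_in, c_out) with odd K."""
    K = W.shape[0]
    Zp = np.pad(Z, ((K // 2, K // 2), (0, 0)))
    return np.stack([np.einsum("kc,kco->o", Zp[t:t + K], W) for t in range(Z.shape[0])])

def bi_mlstm(X, p, W_conv):
    fwd = mlstm_scan(X, p)                     # forward direction
    bwd = mlstm_scan(np.flip(X, axis=0), p)    # same weights applied to Flip(X)
    bwd = np.flip(bwd, axis=0)                 # re-align to forward time order (assumption)
    fused = np.concatenate([fwd, bwd], axis=1) # feature concatenation, (T, 2d)
    return conv1d_same(fused, W_conv)          # Conv1D fusion, (T, c_out)

rng = np.random.default_rng(1)
d_in, d, c_out, T = 4, 8, 8, 24
p = {name: rng.normal(size=(d, d_in)) for name in ("W_q", "W_k", "W_v", "W_o")}
p["w_i"], p["w_f"] = 0.1 * rng.normal(size=d_in), 0.1 * rng.normal(size=d_in)
W_conv = 0.1 * rng.normal(size=(3, 2 * d, c_out))   # hypothetical kernel width 3
X = rng.normal(size=(T, d_in))
Y = bi_mlstm(X, p, W_conv)
```

Sharing `p` between the two directions keeps the parameter count of a single mLSTM block, while the convolution lets each output step mix forward and backward features from its neighbors.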

In addition, traditional LSTMs rely on sigmoid gate functions to control information flow, and their outputs are constrained to the range (0, 1), which limits how strongly a gate can revise stored information. xLSTM instead adopts an exponential activation function, so that the input and forget gates control memory updates exponentially, enabling more efficient information flow and memory adjustment. This allows the model to make larger changes to the memory cell state, quickly integrating new information and adjusting its memory accordingly.
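The effect of exponential gating can be seen on a single scalar memory cell. The sketch below uses exponential input and forget gates with a normalizer state and the log-space stabilizer state `m` described for xLSTM, as we understand it; the function name and the toy inputs are hypothetical. A strong input-gate pre-activation at the last step pulls the normalized memory close to the new value in one step, and the stabilized form gives the same result as the plain form while avoiding overflow.

```python
import numpy as np

def exp_gated_memory(z, i_pre, f_pre, stabilize=True):
    """Scalar memory with exponential input and forget gates (sketch).

    z: cell inputs; i_pre, f_pre: gate pre-activations, so that
    i_t = exp(i_pre) and f_t = exp(f_pre). Returns c_t / n_t for every step.
    """
    c, n, m = 0.0, 0.0, -np.inf      # m is the log-space stabilizer state
    out = []
    for z_t, log_i, log_f in zip(z, i_pre, f_pre):
        if stabilize:
            m_new = max(log_f + m, log_i)
            i_t = np.exp(log_i - m_new)        # exp(log_i) rescaled by exp(-m_new)
            f_t = np.exp(log_f + m - m_new)    # exp(log_f) rescaled consistently
            m = m_new
        else:
            i_t, f_t = np.exp(log_i), np.exp(log_f)
        c = f_t * c + i_t * z_t               # cell state
        n = f_t * n + i_t                     # normalizer state
        out.append(c / n)                     # rescaling cancels in the ratio
    return np.array(out)

z = np.array([1.0, 1.0, 1.0, 1.0, 1.0, 10.0])
i_pre = np.array([0.0, 0.0, 0.0, 0.0, 0.0, 5.0])   # strong write at the last step
f_pre = np.zeros(6)                                 # exp(0) = 1: keep old memory
stable = exp_gated_memory(z, i_pre, f_pre)
plain = exp_gated_memory(z, i_pre, f_pre, stabilize=False)
big = exp_gated_memory(z, 200.0 * i_pre, f_pre)     # exp(1000) would overflow unstabilized
```

After five steps of input 1.0 the normalized memory is 1.0; the single strongly gated input of 10.0 moves it to about 9.71, whereas a sigmoid input gate could weight the new value by at most 1.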

4.2. Informer

Because certain components are inherently difficult to parallelize when handling long sequences, the performance of xLSTM is somewhat constrained. Consequently, this study incorporates the Informer model to achieve better prediction results. Informer is built on the Transformer architecture and replaces the canonical self-attention mechanism with the ProbSparse self-attention mechanism. This modification substantially streamlines computation, reducing both time and space complexity from $\mathcal{O}(L^2)$ to $\mathcal{O}(L\log L)$, where $L$ is the input sequence length, thereby improving efficiency and mitigating resource constraints when processing long sequences [27].

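In long-sequence modeling, only a small subset of key vectors has a substantial influence on each query vector; that is, the attention distribution is sparse. Leveraging this property, Informer introduces the ProbSparse self-attention mechanism, which uses a measure derived from the KL divergence between a query's attention distribution and the uniform distribution to assess the sparsity of the $i$-th query vector $\mathbf{q}_i$:

$$
M(\mathbf{q}_i,\mathbf{K}) = \ln \sum_{j=1}^{L_K} \exp\left(\frac{\mathbf{q}_i\mathbf{k}_j^{\top}}{\sqrt{d}}\right) - \frac{1}{L_K}\sum_{j=1}^{L_K}\frac{\mathbf{q}_i\mathbf{k}_j^{\top}}{\sqrt{d}}
$$

where $\mathbf{q}_i$ and $\mathbf{k}_j$ represent the $i$-th row of $\mathbf{Q}$ and the $j$-th row of $\mathbf{K}$, respectively, $L_K$ is the number of keys, and $d$ is the dimension of the query and key vectors.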

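After the sparsity scores are computed, a sparse query matrix is formed from the top-$u$ query vectors with the highest scores, where $u = c \cdot \ln L_Q$ for a constant sampling factor $c$; the remaining (lazy) queries are directly assigned the mean of the value vectors as their output. The ProbSparse self-attention is then computed as

$$
\mathcal{A}(\mathbf{Q},\mathbf{K},\mathbf{V}) = \mathrm{Softmax}\left(\frac{\overline{\mathbf{Q}}\mathbf{K}^{\top}}{\sqrt{d}}\right)\mathbf{V}
$$

where $\overline{\mathbf{Q}}$ is the sparse query matrix, which has the same size as $\mathbf{Q}$ but contains only the top-$u$ queries.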
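The query-selection logic of ProbSparse self-attention can be sketched in NumPy as below: compute the log-sum-exp-minus-mean sparsity score for each query, keep the top-u queries with u = ceil(c·ln L_Q), give them full softmax attention, and give every other query the mean of V. For simplicity the score is computed exactly from all keys; the original Informer approximates it from a random sample of keys, which is what actually yields the reduced complexity, so this sketch shows the selection rule rather than the speed-up. The function names and sizes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)    # shift for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def probsparse_attention(Q, K, V, c=5.0):
    """ProbSparse self-attention (sketch) using the exact sparsity score."""
    L_Q, d = Q.shape
    S = Q @ K.T / np.sqrt(d)                              # scaled dot products
    s_max = S.max(axis=1, keepdims=True)
    lse = np.log(np.exp(S - s_max).sum(axis=1)) + s_max[:, 0]   # log-sum-exp per query
    M = lse - S.mean(axis=1)                              # sparsity score per query
    u = min(L_Q, int(np.ceil(c * np.log(L_Q))))           # number of active queries
    top = np.argsort(-M)[:u]
    out = np.tile(V.mean(axis=0), (L_Q, 1))               # lazy queries -> mean(V)
    out[top] = softmax(S[top]) @ V                        # active queries -> full attention
    return out, top

rng = np.random.default_rng(0)
L, d = 16, 8
Q, K, V = (rng.normal(size=(L, d)) for _ in range(3))
out, top = probsparse_attention(Q, K, V, c=1.0)
```

With `c=1.0` and 16 queries, only ceil(ln 16) = 3 queries receive full attention; a large `c` makes every query active and recovers canonical scaled dot-product attention.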

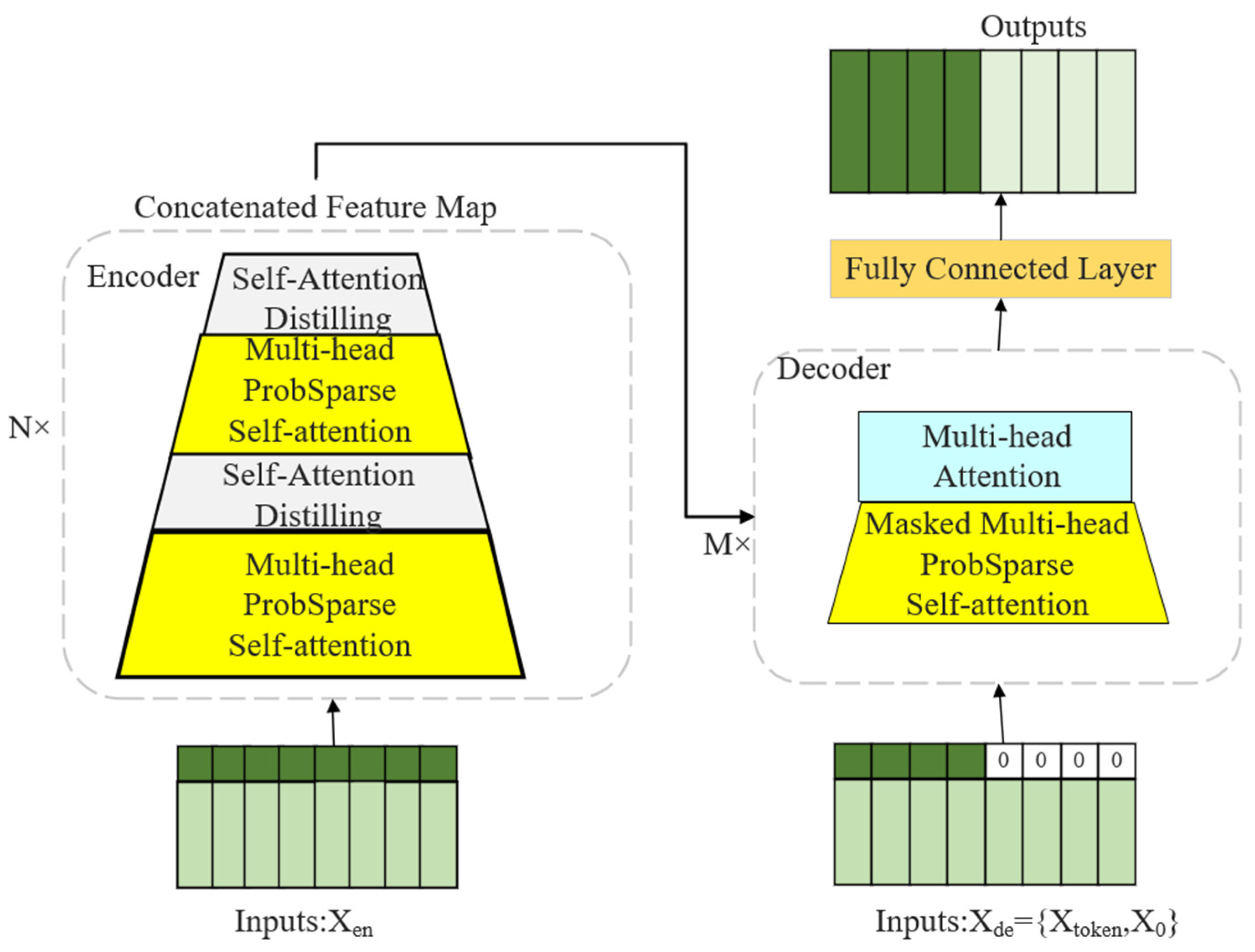

Informer consists of an encoder and a decoder; the model architecture is depicted in Figure 4. The encoder is made up of several stacked encoding layers, each primarily comprising a ProbSparse self-attention module and a feedforward neural network module. The encoder processes the input long-sequence time series data to capture long-term dependency features.

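Furthermore, a self-attention distilling operation is incorporated in the encoder: the length of the input to each subsequent layer is halved to extract the dominant attention, allowing Informer to handle exceptionally long input sequences effectively. The distilling operation from the $j$-th layer to the $(j+1)$-th layer is

$$
\mathbf{X}_{j+1}^{t} = \mathrm{MaxPool}\left(\mathrm{ELU}\left(\mathrm{Conv1d}\left([\mathbf{X}_j^{t}]_{\mathrm{AB}}\right)\right)\right)
$$

where $[\cdot]_{\mathrm{AB}}$ denotes the output of the attention block, $\mathrm{Conv1d}(\cdot)$ is the one-dimensional convolution operation, $\mathrm{ELU}(\cdot)$ is the activation function, and $\mathrm{MaxPool}(\cdot)$ is the maximum pooling operation.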
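A minimal NumPy sketch of one distilling step shows why each encoder layer receives half as many time steps: a Conv1d over time, an ELU activation, then max pooling with kernel 3, stride 2, and padding 1. The kernel width of 3, the pooling settings, and the input length of 96 are assumptions loosely modeled on the public Informer code as we recall it; that code also applies batch normalization and circular padding, which are simplified away here.

```python
import numpy as np

def elu(x, alpha=1.0):
    # np.minimum keeps exp from overflowing on the positive branch.
    return np.where(x > 0, x, alpha * (np.exp(np.minimum(x, 0.0)) - 1.0))

def distil(X, W, b):
    """One distilling step: Conv1d (zero padding) -> ELU -> MaxPool(3, stride 2, pad 1).

    X: (T, c) output of an attention block; W: (K, c, c) with odd K; b: (c,).
    """
    K = W.shape[0]
    Xp = np.pad(X, ((K // 2, K // 2), (0, 0)))
    Y = np.stack([np.einsum("kc,kco->o", Xp[t:t + K], W) for t in range(X.shape[0])]) + b
    Y = elu(Y)
    Yp = np.pad(Y, ((1, 1), (0, 0)), constant_values=-np.inf)   # -inf never wins a max
    n_out = (X.shape[0] + 2 - 3) // 2 + 1
    return np.stack([Yp[2 * s:2 * s + 3].max(axis=0) for s in range(n_out)])

rng = np.random.default_rng(0)
c = 8
X = rng.normal(size=(96, c))          # hypothetical encoder input of 96 steps
lengths = [X.shape[0]]
for _ in range(2):                    # two distilling steps: 96 -> 48 -> 24
    W, b = 0.1 * rng.normal(size=(3, c, c)), np.zeros(c)
    X = distil(X, W, b)
    lengths.append(X.shape[0])
```

Halving the length at every layer makes the total encoder memory roughly a geometric series over the layers instead of growing linearly with depth.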

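The decoder is likewise composed of multiple stacked decoding layers, each containing a masked ProbSparse self-attention module, a cross-attention module, and a feedforward neural network module. Rather than decoding step by step, the decoder generates all future values in a single forward pass from the encoder output and a decoder input that combines a start token with placeholders for the target values. That is,

$$
\mathbf{X}_{\mathrm{de}} = \mathrm{Concat}\left(\mathbf{X}_{\mathrm{token}}, \mathbf{X}_{\mathbf{0}}\right)
$$

where $\mathbf{X}_{\mathrm{de}}$ is the input sequence of the decoder, $\mathbf{X}_{\mathrm{token}}$ is the start token, and $\mathbf{X}_{\mathbf{0}}$ is the target placeholder.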
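Assembling the decoder input amounts to taking a slice of the most recent known steps as the start token and appending zero-filled placeholders for the horizon to be predicted. The sketch assumes the start token is the last `L_token` rows of the encoder input and uses hypothetical sizes (96 input steps, 48 token steps, 24 predicted steps, 7 features).

```python
import numpy as np

def build_decoder_input(X_enc, L_token, L_y):
    """Start token (last L_token known steps) followed by L_y zero placeholders."""
    X_token = X_enc[-L_token:]                                 # known history slice
    X_0 = np.zeros((L_y, X_enc.shape[1]), dtype=X_enc.dtype)   # target placeholder
    return np.concatenate([X_token, X_0], axis=0)

X_enc = np.arange(96 * 7, dtype=float).reshape(96, 7)   # hypothetical: 96 steps, 7 features
X_de = build_decoder_input(X_enc, L_token=48, L_y=24)
```

The decoder's outputs at the 24 placeholder positions are read off as the forecast, which is how all horizon steps are produced in one forward pass.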

6. Conclusions

To improve the accuracy and reliability of short-term PV power forecasting, this study proposed a novel PV forecasting framework that explicitly leverages symmetry principles. The method first applies a two-stage Boruta-PCA feature selection mechanism to extract key features, eliminate multicollinearity, and reduce the input dimension. It then constructs a Bi-xLSTM-Informer hybrid model, which uses sequence reversal and feature fusion to form a bidirectional processing layer and integrates the local temporal modeling capability of Bi-xLSTM with the global information extraction capability of Informer. Comparisons and validation on public datasets from the Photovoltaic (PV) Power Plant AI Competition show that explicitly embedding symmetry considerations significantly improves prediction accuracy and generalization performance. The Bi-xLSTM-Informer model achieves the best prediction performance among all compared models, with an R² of 98.76% and an RMSE of 0.3776, a 5.81% reduction in RMSE relative to the best-performing benchmark model. The model demonstrates strong stability and adaptability when handling high-dimensional features and complex temporal patterns, indicating significant application potential in real-world PV power prediction scenarios.
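For readers checking the reported figures, the sketch below gives the standard RMSE and R² definitions and a relative-reduction helper. It assumes the 5.81% figure is computed as (baseline − proposed) / baseline; the text does not state the formula, so the implied benchmark RMSE of roughly 0.401 derived here is an inference under that assumption, not a reported value.

```python
import numpy as np

def rmse(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def r2(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)             # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)      # total sum of squares
    return float(1.0 - ss_res / ss_tot)

def relative_reduction(baseline, proposed):
    """Fractional reduction of an error metric relative to a baseline (assumed definition)."""
    return (baseline - proposed) / baseline

# Benchmark RMSE implied by the reported 0.3776 and 5.81%, under the definition above.
implied_baseline = 0.3776 / (1.0 - 0.0581)
```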

Future research will explore the transferability of this symmetry-based forecasting framework to multi-energy scenarios such as wind power and hydropower, and will further integrate cutting-edge technologies to enhance the universality, intelligence, and symmetry-aware adaptability of predictions.