1. Introduction

Steel plates, as fundamental structural materials in modern industry, are widely used across various sectors such as construction, transportation, and machinery manufacturing [1,2]. However, during actual production, various factors such as manufacturing processes, raw material quality, and production environments may lead to the formation of surface defects on steel plates, which can compromise their quality and service life and potentially pose safety risks [3,4]. Therefore, the detection and classification of surface defects on steel plates are of great significance.

Early methods for defect classification typically relied on hand-crafted image features, using classifier models to categorize surface defects on steel plates [5]. These approaches are effective in scenarios with well-defined features and ample data samples, but they remain limited in handling complex backgrounds and detecting subtle defects [6]. With the rapid advancement of deep learning technologies, convolutional neural networks (CNNs) have achieved groundbreaking results in image classification tasks and have been widely adopted in the field of steel surface defect classification [7,8]. Representative CNN models such as VGG [9], ResNet [10], and EfficientNet [11] are capable of automatically learning hierarchical and discriminative features directly from raw images, significantly improving classification accuracy. Compared with traditional methods based on manually engineered features, deep learning-based defect classification approaches demonstrate clear advantages in terms of accuracy and have become the mainstream direction and a major research focus in steel surface defect classification [12].

In recent years, ConvNeXt [13], a convolutional model that incorporates modern architectural design principles, has gained considerable attention from researchers in the field of steel surface defect detection. Owing to its efficient capability in capturing multiscale textures and boundary features, ConvNeXt demonstrates more pronounced performance advantages compared to earlier deep convolutional neural network models as well as recent popular vision Transformer (ViT) architectures such as Vision Transformer [14] and Swin Transformer [15].

Although ConvNeXt has gained popularity in steel surface defect classification tasks, several challenges remain. On the one hand, ConvNeXt focuses more on aggregating global information, which may lead to the omission of critical local details such as subtle defects and fine-grained structures [13]. To address this limitation, this paper introduces an improved Symmetric Dual-dimensional Attention Module (SDAM) into the ConvNeXt backbone. Inspired by the channel and spatial attention mechanisms in the Convolutional Block Attention Module (CBAM) [16], SDAM adopts a structurally symmetric and parallel design that independently models salient features along both the channel and spatial dimensions. This symmetric and parallel architecture avoids the sequential bias inherent in cascaded attention mechanisms, thereby enhancing the integrity and discriminative capability of feature representations. As a result, the model’s ability to attend to local regions and respond to defect-prone areas in both channel and spatial dimensions is significantly improved.

On the other hand, ConvNeXt exhibits limited feature fusion capabilities when dealing with defects of varying scales, particularly under complex backgrounds or in scenarios involving large disparities in defect sizes. This may lead to insufficient expression of multi-scale features. To mitigate this limitation, this paper proposes the Transformer-Fused Feature Pyramid Network (TF-FPN), which integrates a Feature Pyramid Network (FPN) [17] into the ConvNeXt backbone to strengthen the fusion of multi-scale features. Moreover, a lightweight Transformer is introduced after the multi-scale feature fusion stage in the FPN to enhance global modeling capability across scales and improve the efficiency of contextual information propagation. This design further strengthens the model’s ability to identify defects that are sparsely distributed or structurally ambiguous. Here, “lightweight” refers to a single-layer Transformer with eight attention heads and a hidden size of 256, introducing only ~1.42 million additional parameters, significantly fewer than those of conventional multi-layer Transformer architectures.
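As a rough sanity check on the scale of this figure, the parameter count of one standard Transformer encoder layer can be tallied by hand. The sketch below is illustrative only: the hidden size (256) and head count (8) come from the text, while the feed-forward width `D_FF = 2048` is an assumption, since the text does not state it, and the helper name `encoder_layer_params` is hypothetical.

```python
def encoder_layer_params(d_model: int, d_ff: int) -> int:
    """Count learnable parameters of one standard Transformer encoder layer."""
    # Q, K, V and output projections, each d_model x d_model with a bias vector.
    attn = 4 * (d_model * d_model + d_model)
    # Two linear layers of the feed-forward network, with biases.
    ffn = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)
    # Two LayerNorms, each with a scale and a shift vector.
    norms = 2 * (2 * d_model)
    return attn + ffn + norms


D_MODEL = 256    # hidden size stated in the text
NUM_HEADS = 8    # stated in the text; heads split d_model and do not change the count
D_FF = 2048      # ASSUMPTION: the feed-forward width is not given in the text

assert D_MODEL % NUM_HEADS == 0  # each head operates on 32 dimensions
total = encoder_layer_params(D_MODEL, D_FF)
print(f"{total:,} parameters")   # prints 1,315,072 parameters
```

Under this assumed width the layer has about 1.32 million parameters, the same order of magnitude as the ~1.42 million reported; the text does not itemize what accounts for the difference.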

In summary, this paper proposes a method named FAX-Net for the classification of surface defects on steel plates, which is built upon the ConvNeXt architecture and incorporates SDAM and TF-FPN to enhance classification accuracy by improving the model’s ability to capture critical local details and integrate multi-scale features. The main contributions of this paper are as follows:

- (1) The introduction of FAX-Net, which presents a novel method for the classification of surface defects on steel plates.

- (2) The introduction of the SDAM, which enhances the model’s ability to focus on local defect regions.

- (3) TF-FPN is proposed, which integrates a lightweight Transformer to improve the model’s classification performance on defects with large scale variations and complex structures.

The remainder of this paper is organized as follows. Section 2 reviews related work on defect classification methods, attention mechanisms, and feature fusion strategies. Section 3 provides a detailed description of the FAX-Net architecture, including the design of its key components, the SDAM and TF-FPN. Section 4 presents the experimental setup, including the dataset, evaluation metrics, and results. Finally, Section 5 concludes the paper.

3. Method

In this section, we provide a detailed introduction to our proposed method, FAX-Net. Section 3.1 presents the overall architecture of the network, including the basic configuration of the backbone ConvNeXt and the integration of the proposed modules. Section 3.2 focuses on the design and improvements of the proposed SDAM. Section 3.3 further elaborates on the implementation of the TF-FPN module. Section 3.4 describes the training implementation details of the model, including the loss function and training setup.

3.1. The Framework of the Proposed FAX-Net

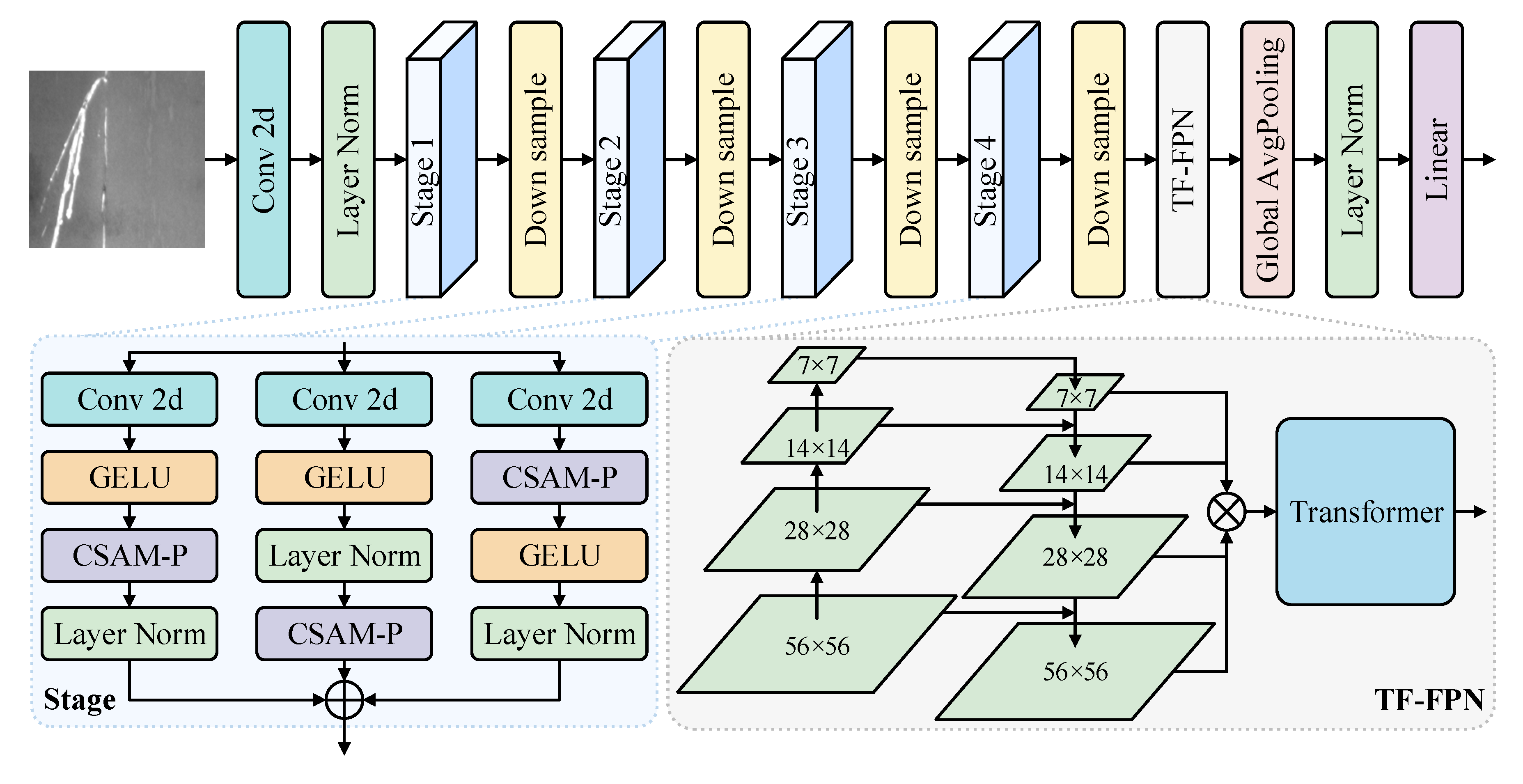

In this study, we propose an improved convolutional neural network architecture for the task of steel surface defect image classification. The network is built upon ConvNeXt as the backbone, into which the SDAM and TF-FPN modules are integrated. This design balances the capability of capturing local fine details with global contextual modeling. Unlike conventional convolutional networks, the proposed model aims to simultaneously enhance attention to key feature regions, strengthen multi-scale feature representation, and improve long-range dependency modeling. This makes it particularly suitable for industrial surface image classification tasks characterized by complex textures and blurred boundaries. The overall architecture of the network is illustrated in Figure 1. The network consists of four principal stages (Stage 1 to Stage 4). Initially, a 4 × 4 convolution with a stride of 4 is applied to downsample the 224 × 224 input into a 56 × 56 feature map, followed by layer normalization. Each stage comprises a Conv2D operation, GELU activation, the SDAM, and layer normalization, all integrated with residual connections. Downsampling is performed between successive stages. Upon completion of Stage 4, multi-scale feature maps (e.g., 56 × 56, 28 × 28) are processed by the TF-FPN module using 7 × 7 convolutions and subsequently fused via ⊗ operations. The fused features are then passed to a Transformer module. Finally, the output is generated through global average pooling, layer normalization, and a linear projection layer.

In the backbone network, ConvNeXt adopts a pure convolutional architecture while incorporating several design strategies inspired by the ViT, such as large convolutional kernels (7 × 7), GELU activation functions, and LayerNorm. These enhancements significantly improve the depth and representational power of feature extraction without introducing attention mechanisms. In this study, the four-stage output structure of ConvNeXt is retained, producing feature maps with channel dimensions of 96, 192, 384, and 768, respectively. These multi-level semantic features serve as the foundation for subsequent modules.
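The feature-map sizes implied by this backbone can be worked out with a few lines of arithmetic. The sketch below assumes a 224 × 224 input (the training resolution given in Section 3.4), a stride-4 stem, and a 2× downsampling between consecutive stages as in the standard ConvNeXt design; the function name `convnext_stage_shapes` is hypothetical.

```python
def convnext_stage_shapes(input_size=224, channels=(96, 192, 384, 768)):
    """Return (channels, height, width) for the output of each backbone stage."""
    shapes = []
    size = input_size // 4          # 4 x 4 stem convolution with stride 4
    for index, ch in enumerate(channels):
        if index > 0:
            size //= 2              # ASSUMPTION: 2x downsampling between stages
        shapes.append((ch, size, size))
    return shapes


shapes = convnext_stage_shapes()
for stage, shape in enumerate(shapes, start=1):
    print(f"Stage {stage}: {shape}")
```

With these assumptions the four stages yield 56 × 56, 28 × 28, 14 × 14, and 7 × 7 maps with 96, 192, 384, and 768 channels, respectively.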

To further guide the network to focus on more discriminative channel and spatial regions for the classification task, an SDAM is embedded after each block of the ConvNeXt backbone. This module first extracts statistical information along the channel dimension using global average pooling and max pooling to generate a channel attention map. A 1 × 1 convolution followed by a nonlinear activation function is then applied to model inter channel dependencies. For the spatial attention, the module constructs a saliency map based on the mean and max values along the channel axis and applies a convolution layer with a large receptive field to produce the spatial attention map. The channel and spatial attention maps are then fused via element-wise multiplication and applied to the input feature map. A residual connection is used to retain the original features. To enhance the adaptability of this mechanism across different image samples, two learnable scaling parameters, α and β, are introduced to adaptively modulate the channel and spatial attention, respectively. This allows the attention mechanism to remain flexible and effective across different network stages.
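The data flow described above can be illustrated with a small dependency-free sketch on nested lists. It is not the authors' implementation: the ReLU in the channel branch, the 3 × 3 toy kernel (the text calls for a large receptive field), the random weights, and the exact placement of α and β as multipliers on the two attention maps are all assumptions, and every function name is hypothetical. On pooled 1 × 1 descriptors a 1 × 1 convolution is equivalent to the fully connected layers used here.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(F, W1, W2):
    """Channel branch: spatial avg/max pooling -> concat -> two-layer MLP -> sigmoid."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in F]
    mx = [max(map(max, ch)) for ch in F]
    z = avg + mx  # list concatenation, length 2C
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in W1]  # reduce + ReLU
    return [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in W2]  # restore C


def spatial_attention(F, kernel):
    """Spatial branch: mean/max along channels -> k x k convolution -> sigmoid."""
    C, H, W = len(F), len(F[0]), len(F[0][0])
    mean_map = [[sum(F[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    max_map = [[max(F[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    maps = [mean_map, max_map]
    k = len(kernel[0])
    pad = k // 2
    out = []
    for i in range(H):
        row = []
        for j in range(W):
            s = 0.0
            for m in range(2):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < H and 0 <= x < W:  # zero padding at the borders
                            s += kernel[m][di][dj] * maps[m][y][x]
            row.append(sigmoid(s))
        out.append(row)
    return out


def sdam(F, W1, W2, kernel, alpha=1.0, beta=1.0):
    """Parallel branches fused by multiplication, applied to F with a residual path."""
    Mc = channel_attention(F, W1, W2)
    Ms = spatial_attention(F, kernel)
    return [[[F[c][i][j] * (1.0 + alpha * Mc[c] * beta * Ms[i][j])
              for j in range(len(F[0][0]))]
             for i in range(len(F[0]))]
            for c in range(len(F))]


random.seed(0)
C, H, W, R = 4, 5, 5, 2  # R is the reduced hidden width of the MLP (toy value)
F = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
W1 = [[random.uniform(-1, 1) for _ in range(2 * C)] for _ in range(R)]
W2 = [[random.uniform(-1, 1) for _ in range(R)] for _ in range(C)]
kernel = [[[random.uniform(-0.1, 0.1) for _ in range(3)] for _ in range(3)] for _ in range(2)]
out = sdam(F, W1, W2, kernel)
print(len(out), len(out[0]), len(out[0][0]))  # prints 4 5 5
```

Because both branches read the same input and are only combined at the end, swapping their order leaves the result unchanged, which is the property the symmetric design relies on. Setting `alpha=0.0` in this sketch switches the attention term off and returns the input unchanged through the residual path.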

To effectively fuse multi-scale features from different depths, the TF-FPN module is introduced into the model. Drawing inspiration from the FPN architecture, this module first applies 1 × 1 convolutions to the output feature maps from all four stages of ConvNeXt to unify the channel dimensions to 256. Subsequently, a top–down pyramid structure is used to hierarchically fuse features: high-level feature maps are upsampled to match the resolution of the lower-level maps and are then fused via element-wise addition with their corresponding lower-level features. Finally, a 3 × 3 convolution is applied to refine the fused features at each scale. This design effectively preserves low-level spatial details and high-level semantic information, mitigates the information loss caused by resolution discrepancies across feature maps, and provides a structurally consistent input for subsequent global modeling.
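The top-down pathway can be sketched on single-channel toy maps. This minimal example assumes nearest-neighbour 2× upsampling (the text does not name the interpolation method) and omits the 1 × 1 lateral and 3 × 3 smoothing convolutions, so it shows only the upsample-and-add step; all function names are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a 2D list-of-lists map."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat each row vertically
    return out


def add_maps(a, b):
    """Element-wise addition of two maps with identical shapes."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def top_down_fuse(laterals):
    """Fuse maps ordered from highest to lowest resolution, each half the previous size."""
    fused = [None] * len(laterals)
    fused[-1] = laterals[-1]                       # the coarsest level is passed through
    for i in range(len(laterals) - 2, -1, -1):
        fused[i] = add_maps(laterals[i], upsample2x(fused[i + 1]))
    return fused


def constant_map(size, value):
    return [[value] * size for _ in range(size)]


# Toy laterals standing in for the four channel-unified stage outputs.
laterals = [constant_map(8, 1.0), constant_map(4, 10.0),
            constant_map(2, 100.0), constant_map(1, 1000.0)]
pyramid = top_down_fuse(laterals)
print(pyramid[0][0][0])  # prints 1111.0
```

Each digit of the printed value comes from a different level, which makes it easy to see that the finest map ends up carrying contributions from every coarser level.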

Building upon the above modules, a lightweight Transformer layer is further introduced to enhance the modeling of global contextual information. The Transformer module first rearranges the 2D feature map into a sequential format suitable for self-attention operations. After Layer Normalization, the sequence is fed into a multi-head self-attention module, where global attention weights are computed to enable cross region feature interaction. This is followed by a feed-forward neural network (FFN) that further transforms the attended features. Residual connections and additional layer normalization are applied throughout to stabilize training and improve the model’s generalization capability. Finally, the output features are aggregated via global average pooling to generate an image-level representation, which is then passed through a fully connected layer to produce the final defect classification output.

FAX-Net adopts a stacked integration of the SDAM and TF-FPN to achieve an effective synergy between local refinement and global contextual modeling. The SDAM, embedded across all stages of the ConvNeXt backbone, employs parallel channel and spatial attention to enhance salient features, thereby improving sensitivity to fine-grained and small-scale defects. The refined features are then passed to TF-FPN, which fuses multi-scale information and leverages a lightweight Transformer to capture long-range dependencies across spatial and semantic levels. This progressive interaction—from local discrimination via the SDAM to global integration via TF-FPN—significantly enhances the network’s representational capacity and is central to the superior performance of FAX-Net.

3.2. Symmetric Dual-Dimensional Attention Module

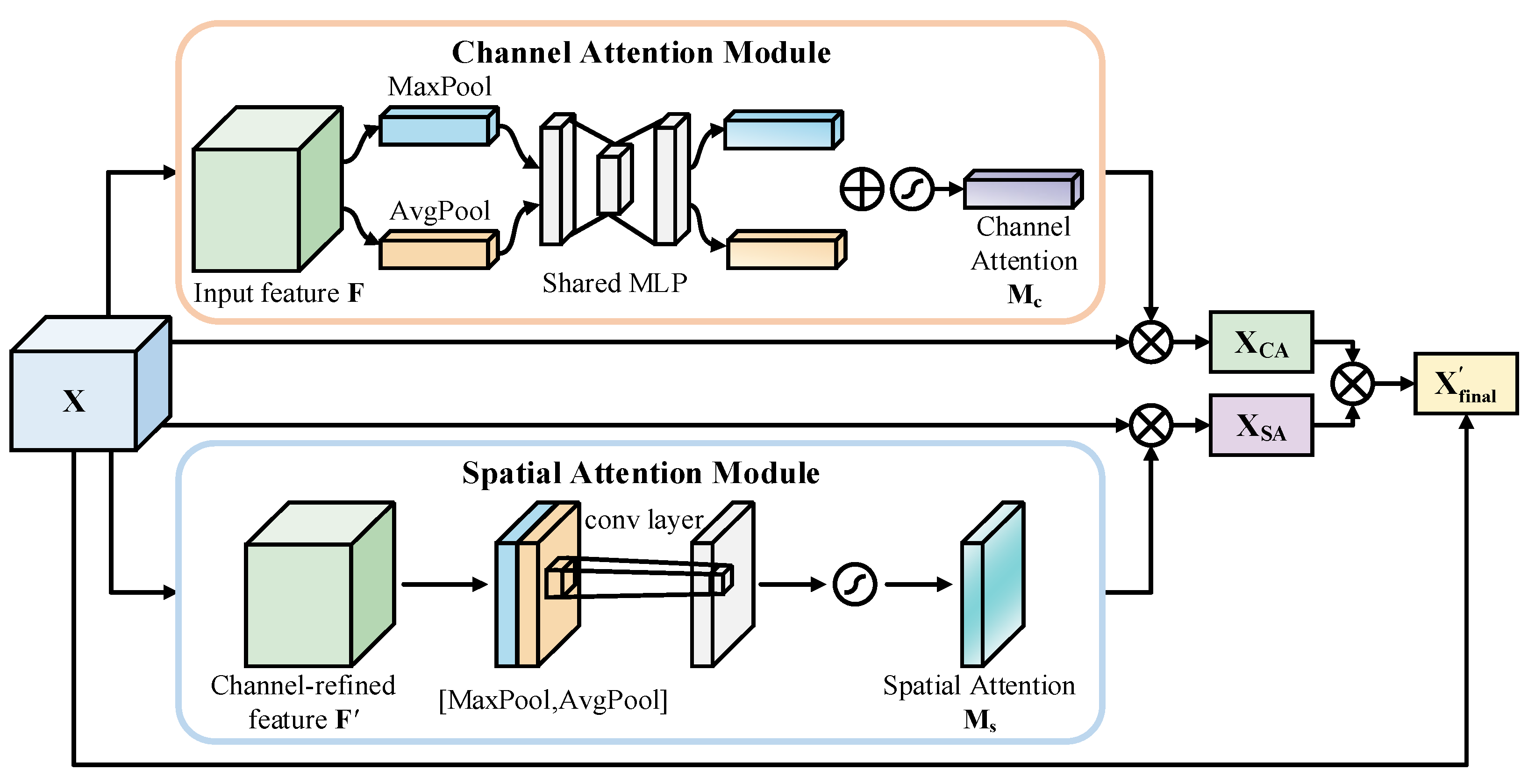

In CNNs, attention mechanisms enhance model performance by assigning different weights to various regions of the feature map, which is particularly beneficial in visual tasks. This study proposes an improved convolutional block attention module, termed the SDAM, which integrates the strengths of the original CBAM while introducing a symmetric architecture, a learnable weighting mechanism, and residual connections to further optimize attention modeling. The aim is to enhance the model’s ability to perceive critical features. A schematic illustration of the SDAM is shown in Figure 2. The module comprises symmetric and parallel branches for channel and spatial attention, which independently model the saliency information along the channel and spatial dimensions, respectively. The channel attention branch generates attention weights through global pooling operations followed by a shared multi-layer perceptron (MLP), while the spatial attention branch derives spatial weights based on the weighted feature maps. The outputs of both branches are subsequently fused to produce an enhanced and more discriminative feature representation.

The input feature map $F \in \mathbb{R}^{H \times W \times C}$ is a 3D tensor, where H denotes the height, W the width, and C the number of channels. The conventional CBAM applies independent channel and spatial attention mechanisms to weight the feature map along the channel and spatial dimensions, respectively. However, to enhance the adaptability of the model, this study introduces an improved version of the original CBAM by incorporating two learnable weighting parameters, α and β, which dynamically adjust the contributions of the channel and spatial attention mechanisms, respectively.

The core idea of the channel attention mechanism is to weight the feature maps across different channels based on their global contextual information, thereby enabling the network to focus more on the most informative channel features. To achieve this, global pooling operations are applied to the input feature map, where both average pooling and max pooling are used to capture global average and maximum information, respectively, as shown in Equation (1).

The two pooled feature maps are then concatenated to form a new tensor. This tensor is subsequently fed into a two-layer fully connected (FC) network (or convolutional layers) to learn the importance of each channel. The first layer reduces the dimensionality of the concatenated features, while the second layer restores the original number of channels, C. Finally, a Sigmoid activation function is applied to produce the channel attention map, as defined in Equation (2).

Here, $W_1$ and $W_2$ are the weight matrices of the two fully connected layers, $\sigma$ denotes the Sigmoid activation function, and the resulting channel attention map $M_c$ is used to weight each channel of the input feature map.

To enhance the adaptability of the model, we introduce a learnable scaling parameter, α, to weight the channel attention map, as defined in Equation (3).

Here, $F_c$ represents the feature map after channel weighting, and α is a learnable parameter used to control the importance of the channel attention.

The goal of the spatial attention mechanism is to weight different spatial locations of the input feature map based on the importance of regions along the spatial dimension. To achieve this, global pooling operations are first applied to the input feature map, as shown in Equation (1), to obtain a global spatial information representation.

Next, the average-pooled and max-pooled feature maps are concatenated to form a new tensor, which is then passed through a convolutional operation to generate the spatial attention map $M_s$, as shown in Equation (4).

Here, $f$ denotes the convolutional kernel, and $\sigma$ represents the Sigmoid activation function. The resulting spatial attention map reflects the importance of each spatial location in the input feature map.

Unlike the traditional CBAM, we introduce a learnable weighting parameter, β, to adaptively adjust the spatial attention map, as defined in Equation (5).

Here, β is a learnable parameter used to control the importance of spatial attention, and $F_s$ represents the feature map after spatial weighting.

In the improved CBAM, the channel attention map and spatial attention map are not applied independently to the feature map. Instead, they are combined through element-wise multiplication. Specifically, the channel attention map $M_c$ and the spatial attention map $M_s$ are multiplied to generate the final attention map, as defined in Equation (6).

This mechanism maintains the structural symmetry, computational independence, and balanced integration between the channel and spatial attention branches, enabling the model to simultaneously capture semantic features along the channel dimension and positional cues along the spatial dimension without being affected by processing order. In this design, the shallow stages rely more on fine-grained texture and edge information, resulting in relatively higher α values. In contrast, the deeper stages focus increasingly on semantic structures, with β values gaining prominence. This adaptive weighting across semantic depths reflects the effective coordination between channel and spatial attention, thereby enhancing the representational discriminability of the model.
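For reference, the relations described in this subsection can be written compactly as follows. This is a sketch consistent with the verbal description rather than a verbatim reproduction of Equations (1)–(6): the symbol names ($F_{avg}$, $F_{max}$, $W_1$, $W_2$, $f$, $M_c$, $M_s$, $F_c$, $F_s$, $M$), the ReLU $\delta$ between the two fully connected layers, and the exact position of α and β are assumptions. For the channel branch the pooling runs over the spatial dimensions; for the spatial branch it runs along the channel axis, as described in Section 3.1.

```latex
\begin{align}
F_{avg} &= \mathrm{AvgPool}(F), \qquad F_{max} = \mathrm{MaxPool}(F) \tag{1}\\
M_c &= \sigma\!\left(W_2\,\delta\!\left(W_1\,[F_{avg};\,F_{max}]\right)\right) \tag{2}\\
F_c &= \alpha \cdot \left(M_c \otimes F\right) \tag{3}\\
M_s &= \sigma\!\left(f\!\left([F_{avg};\,F_{max}]\right)\right) \tag{4}\\
F_s &= \beta \cdot \left(M_s \otimes F\right) \tag{5}\\
M &= M_c \otimes M_s \tag{6}
\end{align}
```

Here $[\cdot\,;\cdot]$ denotes concatenation and $\otimes$ element-wise multiplication with broadcasting. With the residual connection mentioned in Section 3.1, one plausible form of the module output is $F + (\alpha M_c) \otimes (\beta M_s) \otimes F$; because multiplication is commutative, this expression is unaffected by the order in which the two branches are evaluated.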

3.3. Feature Fusion Module

In this paper, the integration of the FPN and Transformer forms the core of the model design. The FPN provides multi-scale feature maps, while the Transformer further enhances global contextual modeling based on these features. Through this combination, the model achieves significant performance improvements in handling multi-scale objects and capturing global dependencies.

Specifically, let the feature maps extracted from different layers of the backbone network be denoted as $\{F_i\}$, where $F_i \in \mathbb{R}^{H_i \times W_i \times C_i}$ represents the feature map from the $i$-th layer, $H_i$ and $W_i$ denote the height and width, respectively, and $C_i$ is the number of channels. The FPN applies lateral convolution operations to these feature maps, as defined in Equation (7).

Then, top–down feature propagation is performed through layer-by-layer upsampling and feature fusion, as defined in Equation (8).

Each level of the feature map is then smoothed using a 3 × 3 convolution, resulting in a feature pyramid, $\{P_i\}$, under a unified semantic scale. These feature maps capture multi-scale information ranging from high-level abstractions to low-level details.

Next, the multi-scale feature maps output by the FPN are used as input to the Transformer module for global contextual modeling. To handle these features uniformly, each scale’s feature map, $P_i$, is flattened and linearly projected into a sequence of vectors, as defined in Equation (9).

Here, $Z_i$ denotes the resulting sequence and $d$ represents the embedding dimension of the Transformer module. The sequence vectors from all scales are then concatenated into a single input sequence, $Z$, as defined in Equation (10).

To preserve the spatial structure of the fused multi-scale features, the model relies on the Transformer’s inherent positional encoding mechanism when passing the concatenated sequence into the Transformer module. This mechanism introduces implicit spatial cues into the token representations, thereby enabling the Transformer to infer the relative positions of features within the original maps. Consequently, the model is better equipped to capture long-range dependencies and global contextual relationships, as illustrated in Equation (11).

The Transformer uses a multi-head self-attention mechanism, where each attention head is computed as defined in Equation (12).

Here, Q, K, and V are the linear transformations of the input sequence $Z$, and $\sqrt{d_k}$ is the scaling factor, where $d_k$ is the per-head key dimension. Multi-head attention captures dependencies between different positions in parallel, thereby enhancing the model’s understanding of the global structure of the image.
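For reference, the operations described in this subsection can be summarized as below. This is a sketch that follows the verbal description and the standard FPN and Transformer formulations rather than a verbatim reproduction of Equations (7)–(12); the symbols $L_i$, $W_p$, $Z_i$, $Z$, $Z'$, and $d_k$ are assumed notation.

```latex
\begin{align}
L_i &= \mathrm{Conv}_{1\times 1}(F_i) \tag{7}\\
P_i &= L_i + \mathrm{Upsample}(P_{i+1}) \tag{8}\\
Z_i &= \mathrm{Flatten}(P_i)\,W_p, \qquad Z_i \in \mathbb{R}^{H_i W_i \times d} \tag{9}\\
Z &= \mathrm{Concat}(Z_1, Z_2, Z_3, Z_4) \tag{10}\\
Z' &= \mathrm{Transformer}(Z) \tag{11}\\
\mathrm{Attention}(Q, K, V) &= \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{12}
\end{align}
```

The coarsest level has no higher-level input, so $P_4 = L_4$, and each $P_i$ is subsequently smoothed by a 3 × 3 convolution (the same symbol is kept for the smoothed map). With a hidden size of 256 split across eight heads, $d_k = 32$.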

The multi-scale feature maps output by the FPN provide the Transformer with richer input information, containing semantic features from different hierarchical levels. Under the self-attention mechanism of the Transformer layers, these feature maps can better capture dependencies between different spatial positions within the image, especially excelling in global semantic consistency and spatial context modeling. In this way, the Transformer not only enhances the global modeling capability of the features but also further improves the semantic representation effectiveness of the features extracted by the FPN.

This integration enables the model to extract rich information from multiple scales and to perform unified modeling of this information through the self-attention mechanism, thereby exhibiting stronger discriminative capability in complex scenes. The FPN enhances the model’s adaptability to multi-scale objects, while the Transformer improves its ability to model complex patterns and long-range dependencies through global information interaction.
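To give a sense of the sequence length the Transformer operates on, the token count after concatenating all scales can be computed directly. The sketch assumes a 224 × 224 input and the standard ConvNeXt strides, giving pyramid levels of 56, 28, 14, and 7 pixels per side, and assumes that every spatial position of every level becomes one token; the function name is hypothetical.

```python
def fused_sequence_length(sizes=(56, 28, 14, 7)):
    """Number of tokens when each position of each pyramid level is one token."""
    return sum(side * side for side in sizes)


D_MODEL = 256                                   # embedding dimension stated in the text
n_tokens = fused_sequence_length()              # 3136 + 784 + 196 + 49
attn_entries_per_head = n_tokens ** 2           # size of one self-attention matrix

print(n_tokens)                # prints 4165
print(attn_entries_per_head)   # prints 17347225
```

Under these assumptions the finest level contributes about three quarters of the tokens, so it dominates the quadratic cost of self-attention.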

3.4. Training Implementation

The loss function employed in our approach is the cross-entropy loss, as defined in Equation (13).

In the definition of the cross-entropy loss function, $y$ represents the discrete probability distribution of the true labels, which is typically characterized as a one-hot encoded vector. Its component $y_c$ acts as an indicator for class $c$, taking a value of 1 if and only if the sample belongs to class $c$.

In contrast, $\hat{y}$ corresponds to the predicted probability distribution output by the model. It satisfies $\hat{y}_c \geq 0$ and $\sum_{c=1}^{C} \hat{y}_c = 1$, where the component $\hat{y}_c$ denotes the model’s probability estimate for the sample belonging to class $c$.

Here, $C$ signifies the total number of classes, and log denotes the natural logarithm (base e).

This function enables the quantitative evaluation of classification performance by measuring the discrepancy between the true distribution $y$ and the predicted distribution $\hat{y}$.
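With these definitions, the standard cross-entropy loss for a single sample is $L = -\sum_{c=1}^{C} y_c \log \hat{y}_c$. The following minimal sketch evaluates it for one six-class example; the probabilities are made up for illustration, and the small `eps` guard against $\log 0$ is an implementation convenience rather than part of the definition.

```python
import math


def cross_entropy(y_true, y_pred, eps=1e-12):
    """L = -sum_c y_c * log(y_hat_c) for a single sample."""
    assert len(y_true) == len(y_pred)
    assert abs(sum(y_pred) - 1.0) < 1e-6, "predicted probabilities must sum to 1"
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


# One-hot label for the third of six classes and an illustrative prediction.
y = [0, 0, 1, 0, 0, 0]
y_hat = [0.02, 0.03, 0.90, 0.02, 0.02, 0.01]

loss = cross_entropy(y, y_hat)
print(round(loss, 4))  # prints 0.1054
```

Because the label is one-hot, only the term for the true class survives, so the loss reduces to $-\log 0.90$.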

All input images were resized to 224 × 224 resolution and converted to 3-channel RGB format. We implemented the model using PyTorch 2.2.1 with CUDA 12.7 acceleration, employing the Adam optimizer at a learning rate of 0.0001. Training was conducted for 100 epochs with a mini-batch size of 16 on a single NVIDIA GeForce RTX 4060 GPU.
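The stated hyperparameters can be collected into a small configuration, from which the number of optimizer steps follows. The sketch assumes the whole 80% training portion of the 1800-image NEU-CLS dataset described in Section 4.1 is used in every epoch, with no separate validation subset held out; the dictionary keys are hypothetical names.

```python
import math

# Hyperparameters as stated in the text.
config = {
    "image_size": 224,
    "channels": 3,
    "optimizer": "Adam",
    "learning_rate": 1e-4,
    "epochs": 100,
    "batch_size": 16,
}

num_images = 6 * 300                      # six classes, 300 images each
train_images = num_images * 8 // 10       # ASSUMPTION: full 80% split used for training
steps_per_epoch = math.ceil(train_images / config["batch_size"])
total_steps = steps_per_epoch * config["epochs"]

print(train_images, steps_per_epoch, total_steps)  # prints 1440 90 9000
```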

4. Experiments

In this section, we describe how we systematically evaluated the performance of the proposed FAX-Net model on the task of steel surface defect classification through a series of experiments.

Section 4.1 introduces the defect dataset used and the evaluation metrics. Section 4.2 analyzes the cross-entropy loss function employed in the model training process. In Section 4.3, we describe how we conducted a comparative analysis between FAX-Net and several mainstream classification models, demonstrating its classification accuracy across different defect types. Section 4.4 further verifies the practical contribution of the proposed key modules to performance improvement through ablation studies.

4.1. Defect Dataset and Evaluation Metrics

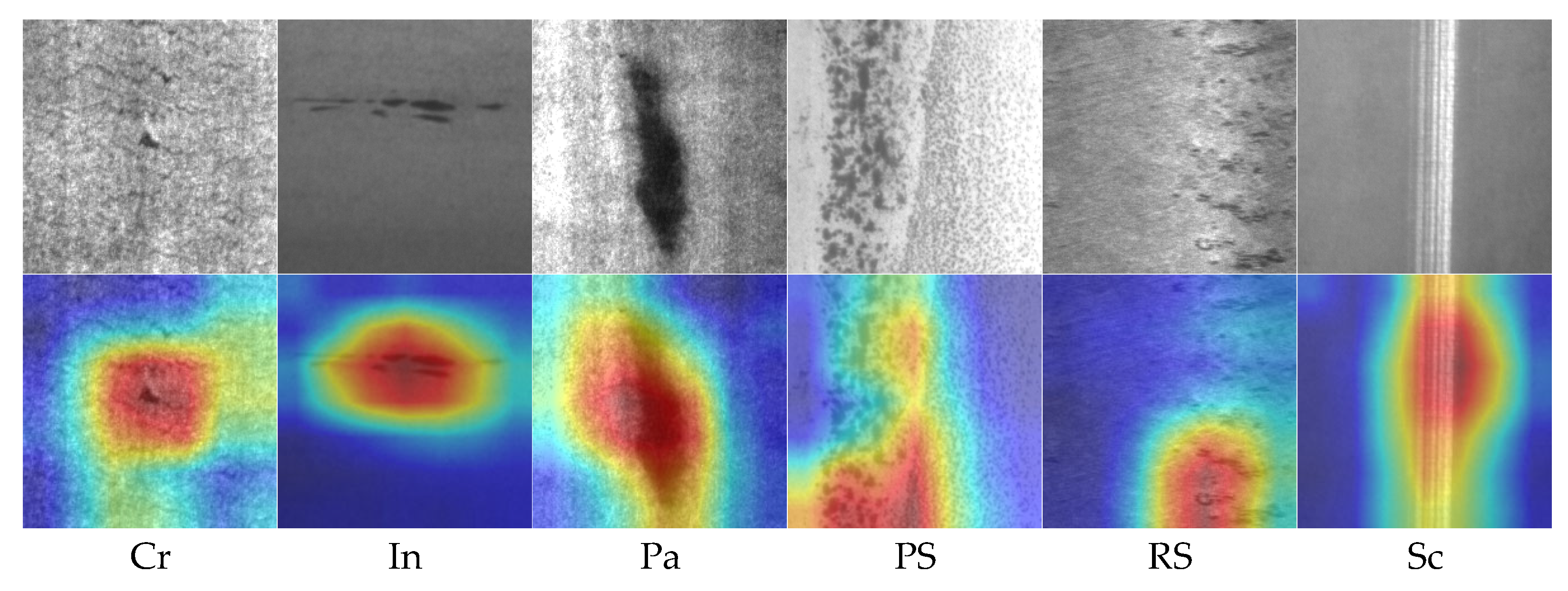

In this study, the NEU-CLS defect dataset [46] was used as the benchmark for steel surface defect classification experiments. Released by Northeastern University, this dataset contains six typical types of steel surface defects: Cracks (Cr), Inclusions (In), Patches (Pa), Pitted Surface (PS), Rolled-in Scale (RS), and Scratches (Sc), with sample images shown in Figure 3. Each defect category consists of 300 grayscale images with a resolution of 200 × 200 pixels, giving 1800 images in total. To train and evaluate model performance, the selected defect samples were divided into training and testing sets in a ratio of 8:2.

In this paper, precision, recall, F1-score, and accuracy are adopted as evaluation metrics to assess the performance of the model. These metrics comprehensively reflect the model’s effectiveness from the perspectives of predictive accuracy and coverage capability. Class-wise accuracy is not reported, as it is relatively insensitive to false positives and false negatives and therefore provides limited interpretive value in such tasks. Specifically, precision measures the proportion of true positive samples among those predicted as positive, reflecting the reliability of the model’s positive predictions. Recall measures the proportion of correctly identified positive samples among all actual positive samples, indicating the model’s coverage of positive instances. F1-score is the harmonic mean of precision and recall, balancing both aspects and being particularly suitable for scenarios with imbalanced class distributions. Accuracy evaluates the proportion of correctly predicted samples among all samples, serving as a direct indicator of overall performance. The calculation formulas are shown in Equations (14)–(17).

True positive (TP) refers to the number of samples correctly predicted as positive by the model; false positive (FP) indicates the number of samples that are actually negative but incorrectly predicted as positive; true negative (TN) represents the number of samples correctly identified as negative; and false negative (FN) refers to the number of samples that are actually positive but incorrectly predicted as negative. These four counts form the foundation for evaluating classification performance and support the calculation and analysis of the composite evaluation metrics such as precision, recall, F1-score, and accuracy.
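The four metrics follow directly from these counts using their standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), F1 = 2 · precision · recall / (precision + recall), and accuracy = (TP + TN) / (TP + FP + TN + FN). The sketch below computes them one-vs-rest for a single class; the eight labels are toy data, not results from the paper, and the function names are hypothetical.

```python
def binary_counts(y_true, y_pred, positive):
    """One-vs-rest confusion counts for the class named `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn


def metrics(tp, fp, fn, tn):
    """Precision, recall, F1-score, and accuracy from the four counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, f1, accuracy


# Toy labels: one scratch is missed and one inclusion is mistaken for a scratch.
y_true = ["Sc", "Sc", "Sc", "Sc", "In", "In", "In", "In"]
y_pred = ["Sc", "Sc", "Sc", "In", "In", "In", "In", "Sc"]

counts = binary_counts(y_true, y_pred, positive="Sc")
precision, recall, f1, accuracy = metrics(*counts)
print(counts, precision, recall, f1, accuracy)
```

In this toy case one false positive and one false negative give identical precision and recall, so the F1-score coincides with both.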

4.2. Cross-Entropy Loss Analysis

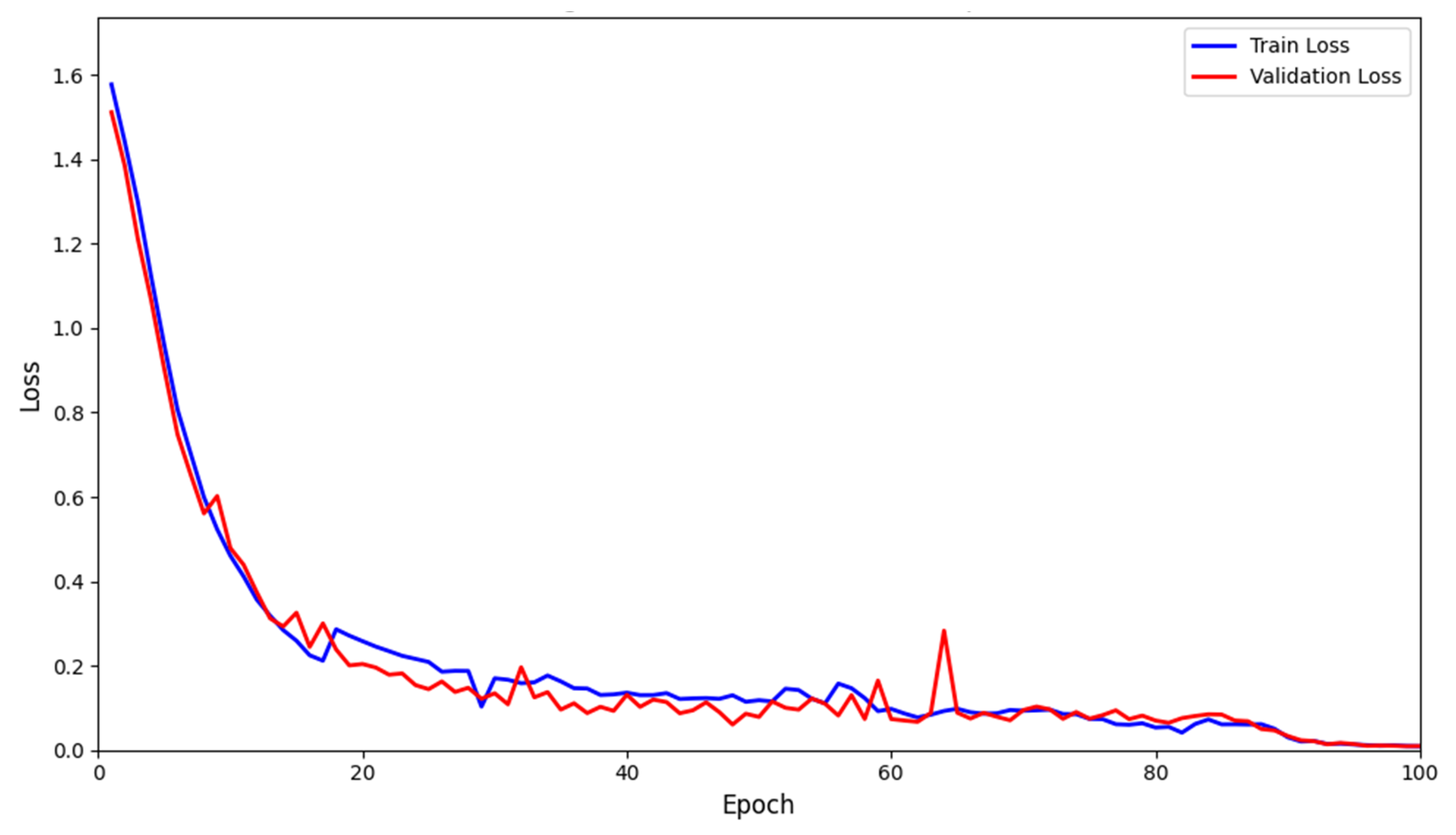

In this paper, the cross-entropy loss function was employed to train the model. This loss function guides the optimization process by quantifying the discrepancy between the predicted class probability distribution and the ground truth labels. As illustrated in Figure 4, the loss value decreased rapidly during the initial training stages, indicating that the model effectively learned discriminative features from the input data. As training progressed, the loss gradually stabilized, suggesting that the model converged toward an optimal solution. Overall, both the training and validation loss curves exhibit consistent downward trends, with no evident signs of overfitting. In the later stages of training, the loss continued to decrease and eventually converged to a relatively low level, further demonstrating the model’s robustness and generalization capability. Although minor fluctuations are observed in some training epochs, the overall loss curve remains smooth, indicating that the training process was stable and the optimization was effective.

4.3. Comparative Experiments

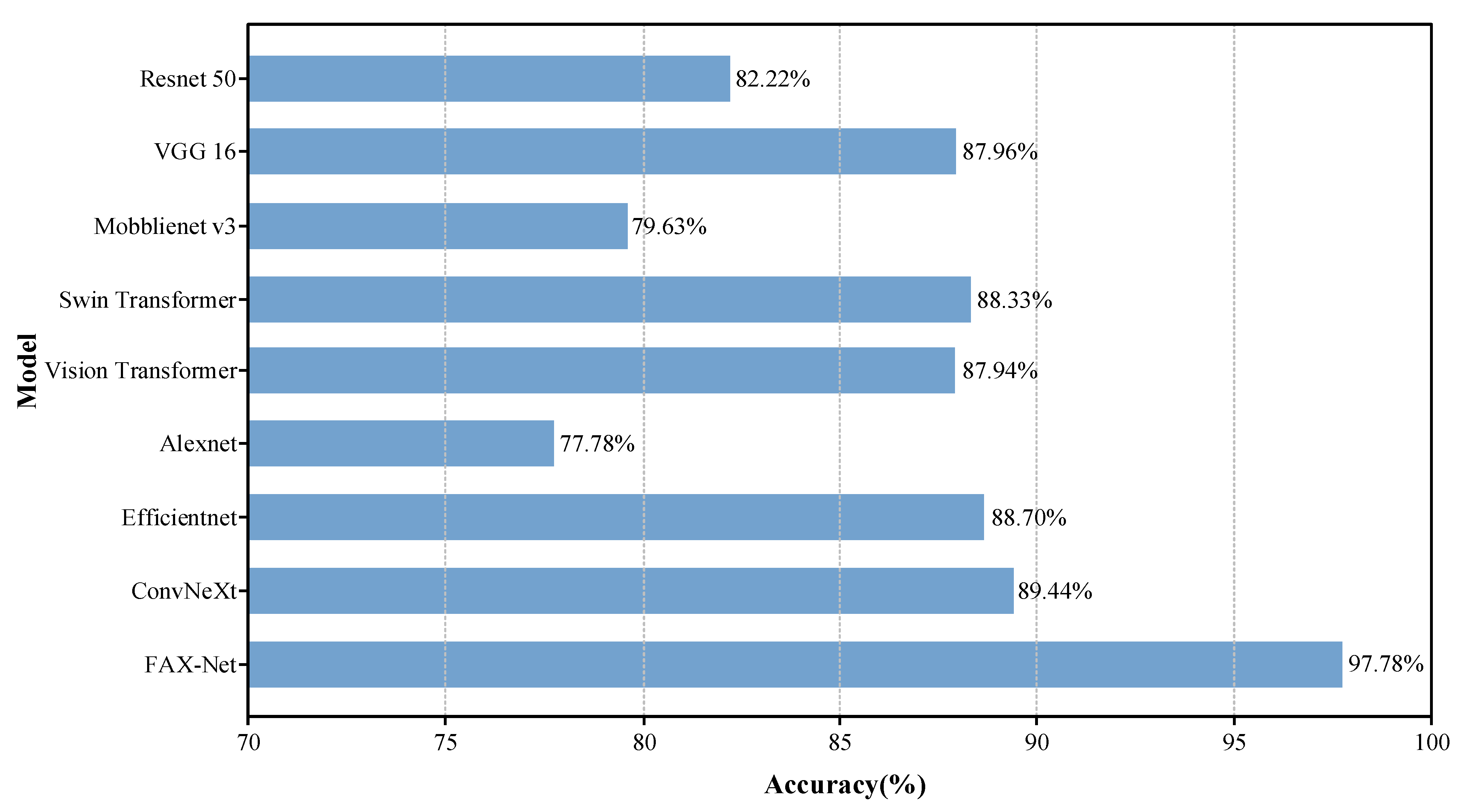

To verify the effectiveness of the proposed FAX-Net model in the task of steel surface defect classification, we conducted systematic comparative experiments on the standard NEU-CLS dataset. The FAX-Net model was compared with several mainstream models, including ResNet50, VGG16, MobileNetV3, Vision Transformer, Swin Transformer, AlexNet, and EfficientNet. In addition, to further validate the effectiveness of the proposed modules, the backbone network used in this study, ConvNeXt, was also included as a baseline model for comparison.

Table 1 presents the classification performance of various models across six types of steel surface defects, including precision, recall, and F1-score. The results demonstrate that FAX-Net performed exceptionally well across all evaluation metrics, achieving an average precision of 97.42%, an average recall of 97.67%, and a high average F1-score of 97.51%. Compared to other mainstream models, FAX-Net shows more consistent and comprehensive recognition capabilities across all defect categories, highlighting its superior feature extraction and discrimination abilities.

Furthermore, as shown in Figure 5, FAX-Net attained the highest overall classification accuracy of 97.78%. By comparison, the next best-performing model, EfficientNet, achieved an accuracy of 88.70% and an F1-score of 88.63%, both substantially lower than those of FAX-Net. These results strongly confirm the significant advantage of the proposed model in steel surface defect classification.

In addition, FAX-Net demonstrated excellent recognition performance across all six categories of steel surface defects, with the best performance observed on the RS class, where both precision and recall reached 100%, and the F1-score was 97.30%. This result indicates that the proposed model possesses superior feature extraction and discrimination capabilities, especially when dealing with complex textures and easily confusable defect categories. It also highlights the model’s enhanced ability to identify subtle defects and samples with blurred boundaries.

4.4. Ablation Experiments

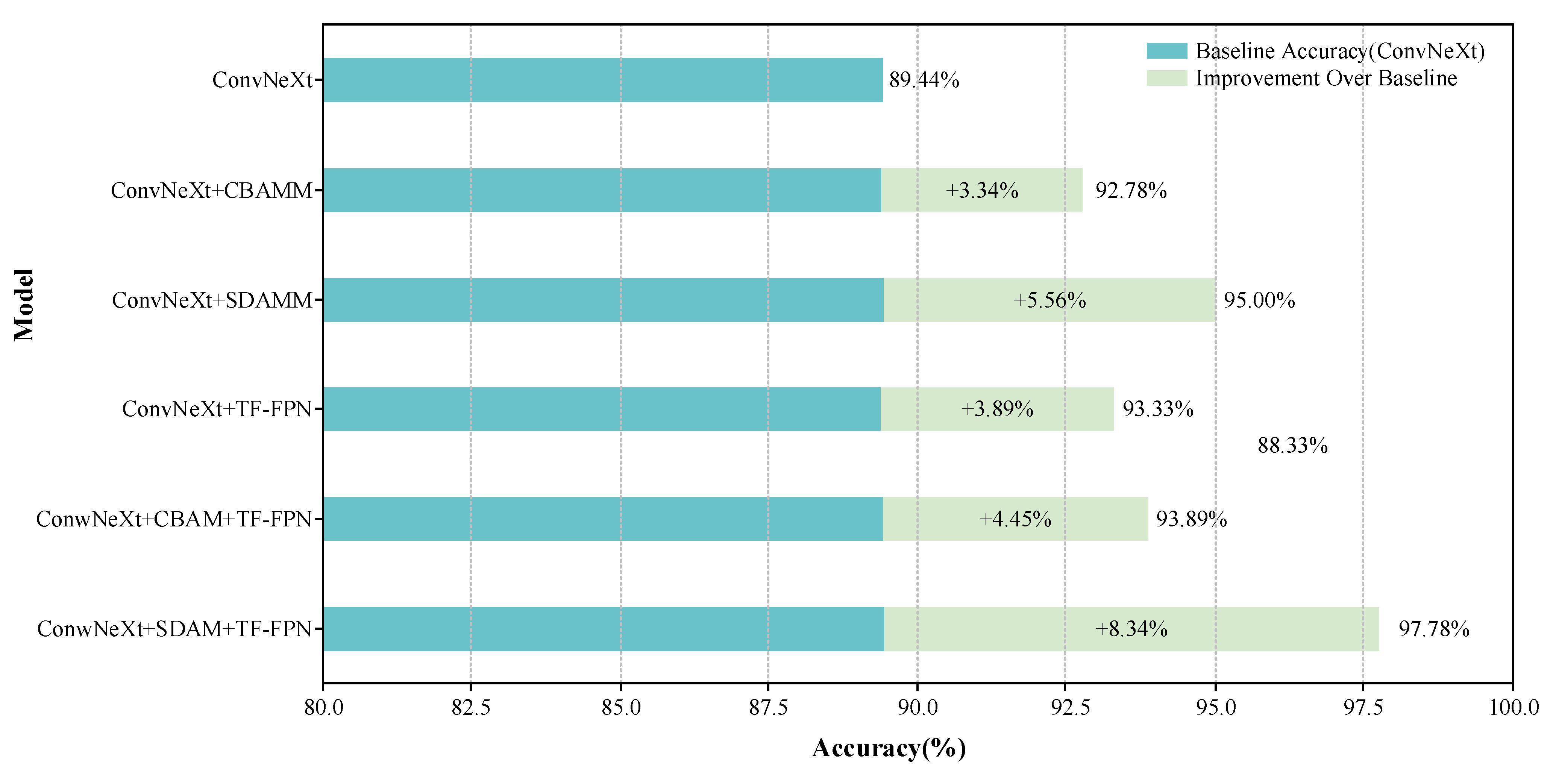

To investigate the impact of different modules on classification performance, we conducted ablation studies by individually incorporating the SDAM and TF-FPN module into the ConvNeXt backbone. The experimental results are presented in Table 2 and Figure 6, where ↑ indicates an improvement in the corresponding metric relative to the baseline model. Under the influence of these modules, our model achieved significant improvements across various evaluation metrics compared to the baseline. Specifically, the introduction of the SDAM led to notable performance gains for several defect types, particularly for the challenging categories Sc and In. The F1-score for Sc improved from 72.53% to 92.31%, and for In, it increased from 82.19% to 92.68%, achieving absolute gains of 19.78 and 10.49 percentage points, respectively. These results indicate that the SDAM effectively enhances the model’s response to key local regions in the feature maps, improving its discriminative ability for low-contrast and complex-texture defects such as inclusions.

The introduction of the TF-FPN module enables the model to more effectively recognize defect types with large scale variations and complex morphologies. It shows clear advantages particularly in categories such as Sc and PS, which are characterized by blurred local textures and indistinct boundaries. For the Sc category specifically, the F1-score increased from 72.53% (baseline) to 90.20%, an improvement of 17.67 percentage points. This significantly enhances the model’s discriminative capability for difficult-to-classify defects. Furthermore, TF-FPN effectively optimizes feature fusion for elongated edge features (e.g., Sc) and multi-scale defects, compensating for the limitations of traditional convolutional networks in modeling geometric features.

To assess the impact of the SDAM on defect-specific attention, Grad-CAM was employed to visualize the model’s focus regions, as illustrated in Figure 7. The results reveal that, for linear defects such as Cr and Sc, the model primarily attends to elongated edge structures; for region-based defects like In and Pa, attention is concentrated on the core areas with pronounced intensity variation; and for texture-complex defects such as PS and RS, the model highlights the transitional zones between defect and background. These observations confirm that the SDAM effectively guides the model to focus on the most informative regions based on the nature of the defect, thereby improving both classification accuracy and interpretability.

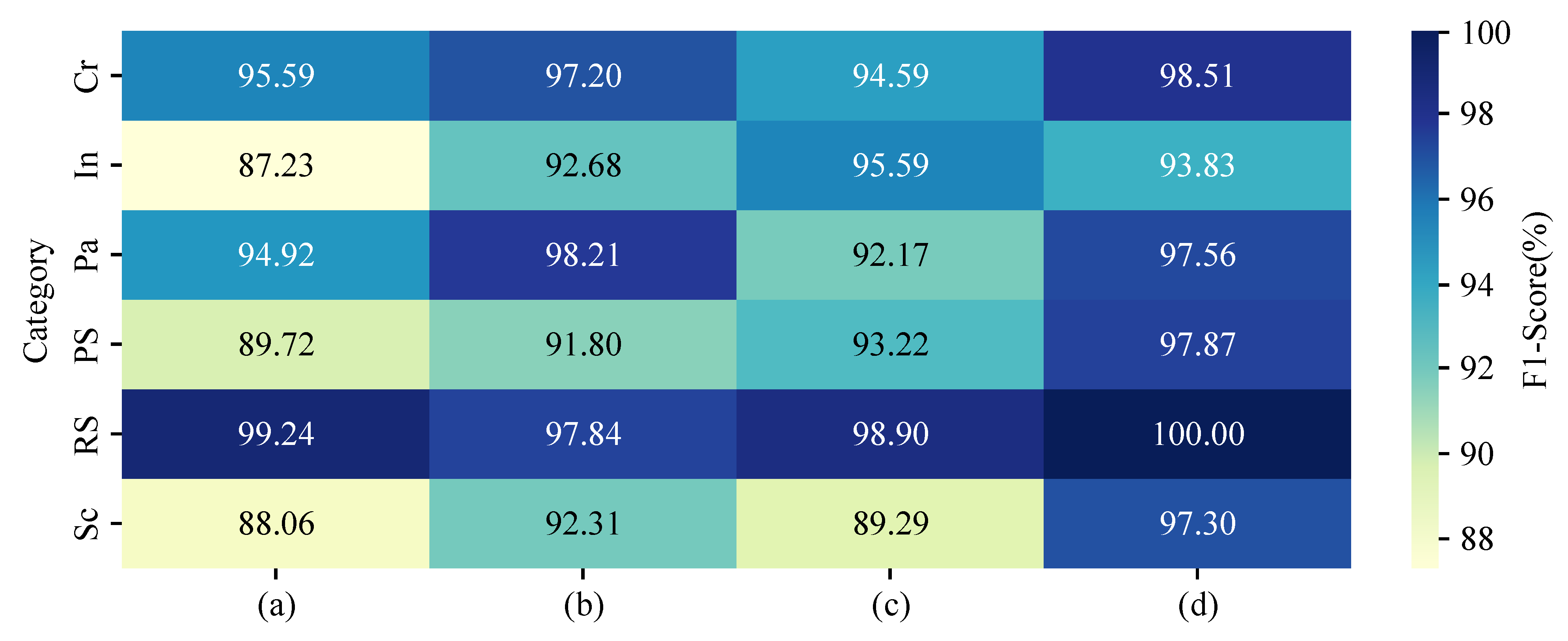

To further validate the effectiveness of the symmetric design in defect region modeling, we visualized the F1-score heatmaps for different models across each defect category, as shown in Figure 8. The classification performance of each model on different defect types can be intuitively compared through the color intensity. It can be clearly observed that, compared with the CBAM, the SDAM achieved performance improvements across most categories, with particularly significant gains in challenging and easily confusable classes such as Sc, In, and Cr. These improvements demonstrate that the symmetric parallel attention structure adopted by the SDAM can more effectively and evenly model salient information across both channel and spatial dimensions, thereby enhancing the model’s perception of complex structures and blurred-edge defects. Compared with the CBAM’s sequential modeling strategy, the SDAM maintains structural symmetry and independence between the channel and spatial attention branches, enabling the attention mechanism to capture multi-dimensional information more evenly and avoiding the interference caused by sequential bias, which highlights the advantages of the symmetric design in attention mechanisms.

5. Conclusions

We propose an improved model for steel surface defect classification, named FAX-Net, which is built upon the ConvNeXt backbone and integrates the SDAM and TF-FPN, thereby enhancing both the discriminative power and the completeness of feature representation. Comparative and ablation experiments on the NEU-CLS dataset demonstrate that FAX-Net outperforms existing mainstream methods across all classification metrics, confirming its effectiveness in defect classification tasks. Notably, FAX-Net exhibits excellent generalization ability when dealing with structurally complex and scale-varying defect types.

In the future, we will focus on the lightweight design and deployment optimization of the model. To meet the demands of real-time defect detection in industrial scenarios, subsequent work will explore techniques such as structural pruning, knowledge distillation, and quantization to reduce model parameters and computational overhead. Additionally, we will investigate the integration of graph optimization and efficient inference engines tailored for edge hardware platforms such as embedded GPUs, ARM processors, and FPGAs, aiming to enhance deployment efficiency and inference speed in resource-constrained environments. Concurrently, the generalizability of FAX-Net will be rigorously evaluated on additional steel defect datasets, such as extended NEU variants and domain-specific private datasets. Its applicability across diverse industrial scenarios, including cold-rolled steel plate and special alloy production lines, will be assessed to further demonstrate robustness and versatility across varying defect morphologies and manufacturing contexts.