1. Introduction

Fake news (disinformation) continues to have an adverse impact on societies across the world, with social media platforms amplifying its echo chamber effect. Information asymmetry is foundational to understanding this rapid expansion: the creators of fake news know the content, its emotional triggers, and the boundaries between fact and fiction that can drive viral spread and the echo chamber effect. In contrast, the recipients or consumers of fake news seek reinforcement of their false and prejudiced world views, a confirmation bias that is directly supported by information asymmetry.

In this context, we define information asymmetry as the imbalance between content creators and content consumers, where creators possess greater control over the narrative, emotional framing, and rhetorical techniques, while consumers often lack the awareness, resources, or motivation to correctly verify what they encounter. This imbalance is amplified by algorithmic dissemination and emotional resonance, enabling disinformation to exploit its consumers rather than engage in open information exchange [1,2,3,4].

Disinformation collectively represents fake news, deception, rumors, hoaxes, clickbait, conspiracy theories, etc. It is distinguished from misinformation, the inadvertent creation and sharing of false information, and from advertising, which may make fictional claims to promote a product, service, or person, but without a news-like format. Ghanem et al. provide a formal definition: “False information that is published with the intent to deceive” [5]. Zhang and Ghorbani define fake news as “all kinds of false stories or news that are mainly published and distributed on the Internet in order to purposely mislead, befool, or lure readers for financial, political, or other gains” [6]. Fake news can be further characterized by the three Vs of Big Data: (1) Volume: due to low barriers to entry, disinformation is voluminous; (2) Variety: disinformation takes different forms and covers almost every aspect of daily life; (3) Velocity: disinformation is short-lived and spreads quickly.

Recent advances in AI, specifically generative AI, have automated the creation of fake news. Following the release of ChatGPT in November 2022, the number of websites and social media accounts generating fake news increased from 49 to 600 by May 2023 [7]. The impact of generative AI on fake news is further noted in the accelerated development of bot farms and off-the-shelf AI tools for fake news creation and propagation via social media platforms [8]. Another study focused on the distinction between AI-generated and human-generated factual news, concluding that AI-generated news content is perceived to be less credible than human-generated content [9]. Despite its role in automating fake news generation, AI has also been used effectively for detecting fake news. Several recent survey articles report how diverse AI techniques have been effective at detection [10,11,12,13].

AI’s ability to learn, reason, and optimize over massive amounts of data to create insights, forecasts, and other aggregates makes it well suited to this task. Although AI has been studied and applied for several decades, in 2023 the OECD presented a renewed definition of AI: “An AI system is a machine-based system that for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment” [14]. AI innovations and applications can be found across diverse industry sectors, including digital health [15,16] and industrial systems [17,18]. The risks and challenges of AI include bias, hallucinations, inaccuracies, lack of transparency, and impact on physical and mental health [19,20]. Responsible AI has emerged as a new field of study aiming to address these challenges while ensuring wider adoption and innovations [21,22,23], as well as the development of energy-efficient AI models and systems [24,25].

In this article, we develop and apply a novel AI approach to address the information asymmetry of fake news detection based on fine-tuned language models and emotion embeddings. Within this approach, the intensity and temperature of emotion, selection of words, and the structure and relationship between words contribute to detecting this information asymmetry. The rest of this paper is organized as follows. Section 2 presents a summary of relevant related work in fake news detection using AI. Section 3 presents the proposed approach, starting with datasets used to build and test the method, followed by separate sections for the three modules of emotion modeling, language modeling, and semantic modeling. Experiments and results are presented in Section 4, followed by the limitations and future directions in Section 5. The paper concludes in Section 6.

2. Related Work

The exponential spread of fake news usually comes in mass waves, mainly at times of heightened interest such as major public events and emergencies, making it difficult for independent systems and fact-checkers alone to combat it. Large Language Models (LLMs) can help meet this need. Since the introduction of BERT [26] by Google in 2018, LLMs have been state-of-the-art for many downstream applications such as question answering, summarization, classification, extraction, and generation [27]. Since 2019, several studies have incorporated these language models into fake news detection. Singhal et al. proposed SpotFake [28], which generates the textual feature for a news article using language models and combines it with the visual features of the article to detect fake news. Jwa et al. proposed exBAKE [29], which pre-trained a language model using a corpus of news from CNN and DailyMail to make the model domain-specific. Then, this model was further fine-tuned using another dataset. Kaliyar et al. proposed FakeBERT [30], which extended the BERT model by adding parallel 1D CNNs for better learning. Schütz showed that a fine-tuned model’s accuracy depends on the preprocessing pipeline as well as the hyperparameters used for tuning [31].

In addition to BERT, other LLMs have also been used effectively in fake news detection. Raza and Ding utilized a transformer-based architecture, drawing inspiration from the BERT architecture, to identify fake news articles at an early stage of their creation [32]. Caramancion evaluated the proficiency of popular LLMs such as OpenAI’s ChatGPT, Google’s Bard/LaMDA, and Microsoft’s Bing AI in detecting fake news. His evaluations revealed an average score of 65% across all models, with GPT-4.0 leading at 71% accuracy [33]. Wu et al. proposed a method of using an LLM, GPT-3.5, as a feature extractor for detecting cheap fakes. The proposed method captures the correlation between two image captions, allowing for a deeper understanding of the relationship between captions [34]. Jiang et al. leveraged pre-trained large language models through the use of knowledge prompt learning to detect fake news [35].

Fake news is intentionally written to mislead readers; it is therefore reasonable to extract emotion or sentiment from news articles and other online texts and posts as a signal for detecting fake news. Ajao et al. proposed a sentiment-aware classification using a ratio called the emoratio, a ratio between the count of negative and positive emotional words in the text [36]. Giachanou et al. proposed an EmoCred approach based on an LSTM neural network that incorporates emotional signals using three different approaches: a lexicon-based approach, an approach that calculates the emotional intensity of the claims, and a neural network approach that assigns an intensity level to the claims and represents the number of emotional reactions that the claim can trigger in the readers [37]. Guo et al. proposed an Emotion-based Fake News Detection framework (EFN), which can exploit content and social emotions simultaneously for fake news detection using deep neural networks [38]. Zhang et al. proposed a similar model built on the relationship between publisher emotion and social emotion, termed dual emotion. They explain how dual emotions, emotion resonances, and dissonances can be used for fake news detection [39]. Ghanem et al. proposed a model called Emotionally Infused Network (EIN) that incorporates word embeddings and affective features such as emotions and hyperbolic expressions to capture the emotional flow of the document in relation to its topical information [5]. While models like EFN, EIN, and EmoCred have advanced emotion-aware fake news detection, they often depend on social signals, lack modularity, or target specific data types like tweets. In contrast, our approach (1) uses a lexicon tuned for fake/real polarity, (2) models emotion, semantics, and language independently, and (3) adopts a modular design for interpretability and real-time use. Unlike EmotionFlow and similar models, which treat emotion as secondary, we center information asymmetry by jointly capturing emotional polarity shifts, stylized phrasing, and simplified semantics, dimensions not concurrently addressed in prior work.

Buntain and Golbeck proposed a methodology incorporating linguistic features along with temporal, structural, and user-specific features (12 input features in total), achieving 65.29% accuracy [40]. However, their research was limited to microblog posts. Also, the use of temporal and structural features is a limitation in real-time detection. Yang et al. proposed a self-explanatory model (“XFake”) that uses semantic-level feature ratios along with n-gram analysis. This model achieved a maximum of 60.7% test accuracy [41]. The main drawback of their approach is that they limited their research to the political domain. Also, the context captured by their model is limited to n neighbors (in their case, a maximum of 3 neighbors). Since their model is self-explanatory, they were able to generate explainability by highlighting the words contributing to the decision-making process. Verma et al. carried out research using only linguistic features and content-based word embeddings (count vector and TF-IDF) [42]. They generalized their detection by incorporating datasets from different domains; their dataset was released to the public as “WELFake”. They followed a hard voting mechanism on three sets of linguistic features.

Several studies have conducted extensive investigations into emotion detection, generation of emotion-based lexicons, and conversational agents from unstructured data. However, recent work on standalone lexicon-based approaches that exploit the fake and true polarities of language components to classify fake news is limited. Mertoğlu et al. proposed a novel lexicon construction model, including a scoring mechanism that scores each word based on its occurrence in true and fake corpora, creating a Turkish lexicon for detecting Turkish fake news [43].

Table 1 compares the existing approaches for fake news detection based on the modality of data used to detect fake news. In addition to highlighting the presence of semantic, emotional, and linguistic features, this table also includes the type of dataset employed (short or long text) and the reported accuracy of each approach, offering a more comprehensive comparison. While various models incorporate emotion and linguistic features, few, if any, explicitly address or model information asymmetry as a multi-faceted concept involving emotional manipulation, semantic deception, and strategic framing. Our work fills this gap by embedding asymmetry-aware features across the emotion, language, and semantic layers.

Although some prior work has explored the combination of emotion and language models (e.g., EmoCred, EmotionFlow), these approaches often lack cross-domain generalizability and do not explicitly ground their design in the broader notion of information asymmetry. Our work addresses this gap by modeling asymmetry as a multi-faceted phenomenon encompassing emotional manipulation, semantic simplification, and persuasive language through three independently developed modules (emotion, semantic, and linguistic), later fused in a decision-level ensemble. This modular design embeds asymmetry-aware features at each layer and enables more robust, interpretable, and generalizable detection.

Most of the literature proposes approaches that are trained and tested on a specific dataset. Since we focus on fake news originating on different platforms (social media, news organizations, forums, etc.), our research is not limited to one dataset but rather utilizes different types of datasets (short text: tweets, long text: news posts) in order to build a generalizable model. Hence, typical evaluation measures such as accuracy, precision, and recall are insufficient to evaluate the performance of our approach; a generalizability metric is needed for this purpose. Nasir et al. [44] proposed a strategy for evaluating model generalizability by training the model on one dataset and validating it on a separate dataset. We have extended this metric as explicated in the experiments section.

3. Methodology

This section presents the proposed approach for fake news detection based on three modules: emotion modeling, language modeling, and semantic modeling. This section begins with an introduction to the datasets used in this study; a total of eight datasets were used for various purposes, such as training models, hyperparameter tuning, fine-tuning language models, and evaluating trained models for generalizability. Each module explains the methods used to extract the module-specific features, associated resources, and how these resources were created or obtained. To implement information asymmetry in our approach, we capture three orthogonal dimensions: (i) emotional intensity and misalignment, indicating manipulative triggers; (ii) lexical framing and word usage patterns, distinguishing real vs. fake narratives; and (iii) semantic complexity, which reflects the gap between credible and misleading content. These modules together quantify the asymmetry between creators and consumers, moving beyond sentiment to model deception. Section 3.5 explains the normalization process implemented on the extracted features and describes the final classifier built to input the separate modeling features, combine them, and produce the final classification outcome.

3.1. Datasets

Five datasets, CONSTRAINT [46], the Fake News Detection Dataset from Kaggle [47], FakeNewsNet [48], LIAR [49], and ISOT [50], were used to train and tune the models, while two different datasets, FA-KES [51] and the COVID-19 fake news dataset [52], were used to evaluate the trained model. Furthermore, the WELFake dataset [42] was used in modeling the language pipeline.

The CONSTRAINT dataset was shared as part of the CONSTRAINT shared task. This dataset is composed of nearly 11,000 posts related to COVID-19, with a near equal split of fake and true posts. The data was extracted directly from social media platforms such as Twitter, Facebook, Instagram, etc. The dataset was preprocessed to remove duplicates, non-English tweets, and irrelevant content, ensuring a clean and balanced dataset for training.

The fake news detection dataset from Kaggle is composed of fake and true news from mainstream media. It comprises 3164 fake news articles and 3171 true news articles. The data was collected from various online news platforms and verified using fact-checking tools. The articles were stored in a tabular format, with fields such as title, content, and label (fake/true).

FakeNewsNet contains short statements collected from Politifact and Gossipcop, which are two popular fact-checking platforms. This dataset contains 5755 fake statements and 17,441 true statements. The data extraction process involved scraping posts from these platforms, along with their corresponding metadata, such as the title, source, URL, and fact-checked label. The dataset was structured as CSV files.

The LIAR dataset contains short statements collected from Politifact. It has data belonging to six different categories: true, mostly true, half true, mostly false, false, and pants on fire. The labels were converted to binary labels by taking the first three as true and the latter three as fake. Accordingly, this dataset has 5657 fake statements and 7134 true statements.

The ISOT dataset contains long texts collected from websites verified as legitimate and fake news spreaders by popular fact-checking engines. It has 21,417 fake news articles and 23,481 true news articles. The data was extracted using web scraping techniques, targeting specific websites identified by fact-checking organizations. The dataset was stored in CSV format, with columns for the article title, content, and label. Preprocessing steps included removing HTML tags, normalizing text, and handling missing values.

The FA-KES dataset is composed of true and fake news articles on the Syrian war. It has 371 fake news articles and 418 true news articles.

The COVID-19 fake news dataset consists of a collection of true and fake news related to COVID-19. It consists of 1058 fake news articles and 2061 true news articles collected between December 2019 and July 2020. The data was gathered from online news platforms, social media, and fact-checking websites. The dataset was structured in a tabular format, with fields for the article content, source, and label. Preprocessing included removing irrelevant articles and standardizing the text format.

WELFake is a combined dataset containing long texts from four different well-cited datasets. It consists of 37,106 fake news articles and 35,028 true news articles. The dataset was created by merging data from multiple sources, ensuring no duplicates and consistent formatting. The final dataset was stored in CSV format, with fields for the article content, source dataset, and label. Preprocessing involved tokenization, stopword removal, and text normalization.
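The binarization of the six LIAR categories described above can be sketched as follows. This is a minimal illustration, not the authors' preprocessing code: the label strings follow the category names as listed in the text, and the function name `binarize_label` is hypothetical.

```python
# Label names follow the six categories listed in the text; the exact strings
# used in the raw LIAR files may differ (assumption for illustration).
TRUE_LABELS = {"true", "mostly true", "half true"}
FAKE_LABELS = {"mostly false", "false", "pants on fire"}


def binarize_label(label: str) -> str:
    """Map a six-way truthfulness rating to a binary 'true'/'fake' label."""
    key = label.strip().lower().replace("-", " ")
    if key in TRUE_LABELS:
        return "true"
    if key in FAKE_LABELS:
        return "fake"
    raise ValueError(f"unknown label: {label!r}")
```

Raising on an unknown label, rather than silently defaulting to one class, keeps a mislabeled row from skewing the fake/true split.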

As shown in Table 2, the datasets used in this study vary significantly in size and scope, ranging from small, topic-specific datasets like FA-KES and COVID-19 fake news to larger, general-purpose datasets like ISOT and WELFake. This diversity ensures that the models are trained and evaluated on a wide range of data, improving their robustness and generalizability. Additionally, some datasets, such as CONSTRAINT and COVID-19 fake news, are time-constrained and focus on specific events, while others, like FakeNewsNet and LIAR, cover broader topics. The table provides a concise summary of the dataset volumes and their respective focuses, offering a clear view of the extent of the work.

To ensure broad representation across diverse fake news typologies, our dataset selection strategy intentionally spans multiple domains (e.g., political, health-related, conflict-based), formats (short-form social media posts, long-form articles), and platforms (Twitter, Facebook, mainstream news websites, curated fact-checking sources). For instance, the CONSTRAINT and COVID-19 datasets capture health misinformation from social media, while FA-KES introduces conflict-related narratives (Syrian war). FakeNewsNet and LIAR include politically themed claims vetted by professional fact-checkers. The inclusion of both general-purpose (e.g., ISOT, WELFake) and domain-specific datasets ensures that our model is exposed to a wide array of linguistic and emotional patterns associated with misinformation. The limitations of the datasets include temporal bias toward specific events (e.g., COVID-19); platform-specific features that could influence model behavior; language bias, as all datasets are in English; and varying label granularity (e.g., binary vs. fine-grained truth ratings). These limitations are partially mitigated through cross-domain evaluations described in Section 4. Future work could address these issues by incorporating multilingual corpora, multimodal content (e.g., image + text), and nuanced truth scales to further improve generalizability.

3.2. Module 1—Emotion Modeling

Figure 1 illustrates the emotion modeling module implemented in this study. Initially, a DistilBERT transformer model (distilbert-base-uncased) from the Hugging Face model library was fine-tuned on a labeled emotions dataset obtained from the Hugging Face dataset library. DistilBERT is a distilled version of the BERT base model: a transformer that is smaller and faster than BERT, pretrained on the same corpus as BERT in a self-supervised fashion using the BERT base model as a teacher. This model is uncased; it does not distinguish between words like english and English. The emotion dataset used to fine-tune the model consists of English Twitter (now X) messages annotated with six basic emotions: anger, fear, joy, love, sadness, and surprise. Although the dataset comes from short social media posts, both short and long news articles often use similarly strong emotional language, especially in headlines. Prior work [53] shows that emotions like fear, outrage, and strong opinions are common in misleading content across formats. Thus, despite structural differences, Twitter data captures the emotional cues found in fake news, making it suitable for fine-tuning emotion models.

The NRC Affect Intensity Emotion Lexicon presented by Mohammad [52] was used. The NRC Emotion Intensity Lexicon is a list of English words with real-valued scores of intensity for eight basic emotions. The lexicon has around 10,000 entries for Robert Plutchik’s eight emotions. It includes common English terms as well as terms that are more prominent on social media platforms, such as Twitter. It also includes terms that are associated with emotions. Each word in the lexicon has an emotion label (one of anger, anticipation, disgust, fear, joy, sadness, surprise, and trust) and an intensity score. To support real-time detection and reduce inference time, we applied a reduction process to the lexicon. First, we removed outlier terms that were rare or contextually inconsistent. Then, we clustered semantically similar emotion words and retained representative terms to reduce redundancy. This process reduced the lexicon from 10,000 to 3000 entries, preserving emotional diversity while improving efficiency and stability during scoring.

Embeddings for the reduced lexicon were computed using the fine-tuned emotion language model. These embeddings were then indexed using an Annoy index. Annoy (Approximate Nearest Neighbors Oh Yeah) is a C++ library with Python bindings used to search for points in space that are close to a given query point. It also creates large read-only file-based data structures that are mapped into memory so that many processes may share the same data. Annoy is used here to speed up the nearest neighbor search between the lexicon and the news item in real time.

A news item that enters the pipeline is first preprocessed by lowercasing it and removing URLs, non-ASCII characters, punctuation, numbers, and null spaces. Next, the embedding of the news item is calculated using the same fine-tuned emotion language model that computed the embeddings of the lexicon. Thereby, the embeddings of the lexicon and the news item are projected into the same embedding space. Next, a nearest neighbor search is performed on the news item, where the 50 closest emotion lexical neighbors to the news item are identified. This choice of 50 neighbors aimed to balance clarity and reliability in the emotion scores. While larger values risk blurring important emotional differences, smaller values can make the results noisy, overly sensitive, or influenced by rare words. Using 50 gave us enough neighbors to smooth the scores while still keeping the emotional meaning clear and focused.
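The preprocessing steps listed above can be sketched with the Python standard library. This is a minimal sketch under stated assumptions: the URL pattern and the order of the steps are illustrative choices, and the function name `preprocess` is hypothetical rather than taken from the authors' pipeline.

```python
import re
import string

# Assumed URL pattern; the pipeline's actual pattern is not given in the text.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def preprocess(text: str) -> str:
    """Lowercase and strip URLs, non-ASCII characters, punctuation, digits, extra spaces."""
    text = text.lower()
    text = URL_RE.sub(" ", text)                                  # remove URLs first
    text = text.encode("ascii", errors="ignore").decode("ascii")  # drop non-ASCII
    text = text.translate(PUNCT_TABLE)                            # remove punctuation
    text = re.sub(r"\d+", " ", text)                              # remove numbers
    return re.sub(r"\s+", " ", text).strip()                      # collapse whitespace
```

URLs are removed before punctuation so that stripping ":" and "/" does not leave URL fragments behind as spurious tokens.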

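A score is calculated for each of the 50 lexical neighbors based on its semantic distance from the news item and its emotion intensity score. The semantic distance is computed as the cosine distance between the embedding vectors of the news item and the lexical neighbor, as shown in Equation (1):

$$ d(\mathbf{n}, \mathbf{l}) = 1 - \frac{\mathbf{n} \cdot \mathbf{l}}{\lVert \mathbf{n} \rVert \, \lVert \mathbf{l} \rVert} \qquad (1) $$

where $\mathbf{n}$ and $\mathbf{l}$ denote the embedding vectors of the news item and the lexical neighbor, respectively.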

The scores of each neighbor are aggregated into eight different emotion buckets based on their emotion label. These eight emotion scores are then converted into the eight features that are outputted from the emotion pipeline.
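The neighbor scoring and bucket aggregation can be illustrated with a small sketch. Two things here are assumptions rather than the paper's method: an exhaustive search stands in for the Annoy index, and each neighbor's score is taken to be its intensity multiplied by its cosine similarity (one minus the cosine distance), since the exact weighting is not stated in the text. The function names are hypothetical, and embedding vectors are assumed to be non-zero.

```python
import math
from collections import defaultdict

EMOTIONS = ("anger", "anticipation", "disgust", "fear",
            "joy", "sadness", "surprise", "trust")


def cosine_distance(a, b):
    """Cosine distance between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def emotion_features(item_vec, lexicon, k=50):
    """lexicon: list of (embedding, emotion_label, intensity) tuples.

    Returns eight aggregated scores, one per emotion in EMOTIONS order.
    """
    # Exhaustive search; an approximate index would replace this at scale.
    ranked = sorted(lexicon, key=lambda entry: cosine_distance(item_vec, entry[0]))
    buckets = defaultdict(float)
    for vec, emotion, intensity in ranked[:k]:
        # Assumed weighting: closer neighbors contribute more of their intensity.
        buckets[emotion] += intensity * (1.0 - cosine_distance(item_vec, vec))
    return [buckets[e] for e in EMOTIONS]
```

Emotions with no neighbor among the top k simply receive a score of zero, so the output always has eight entries.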

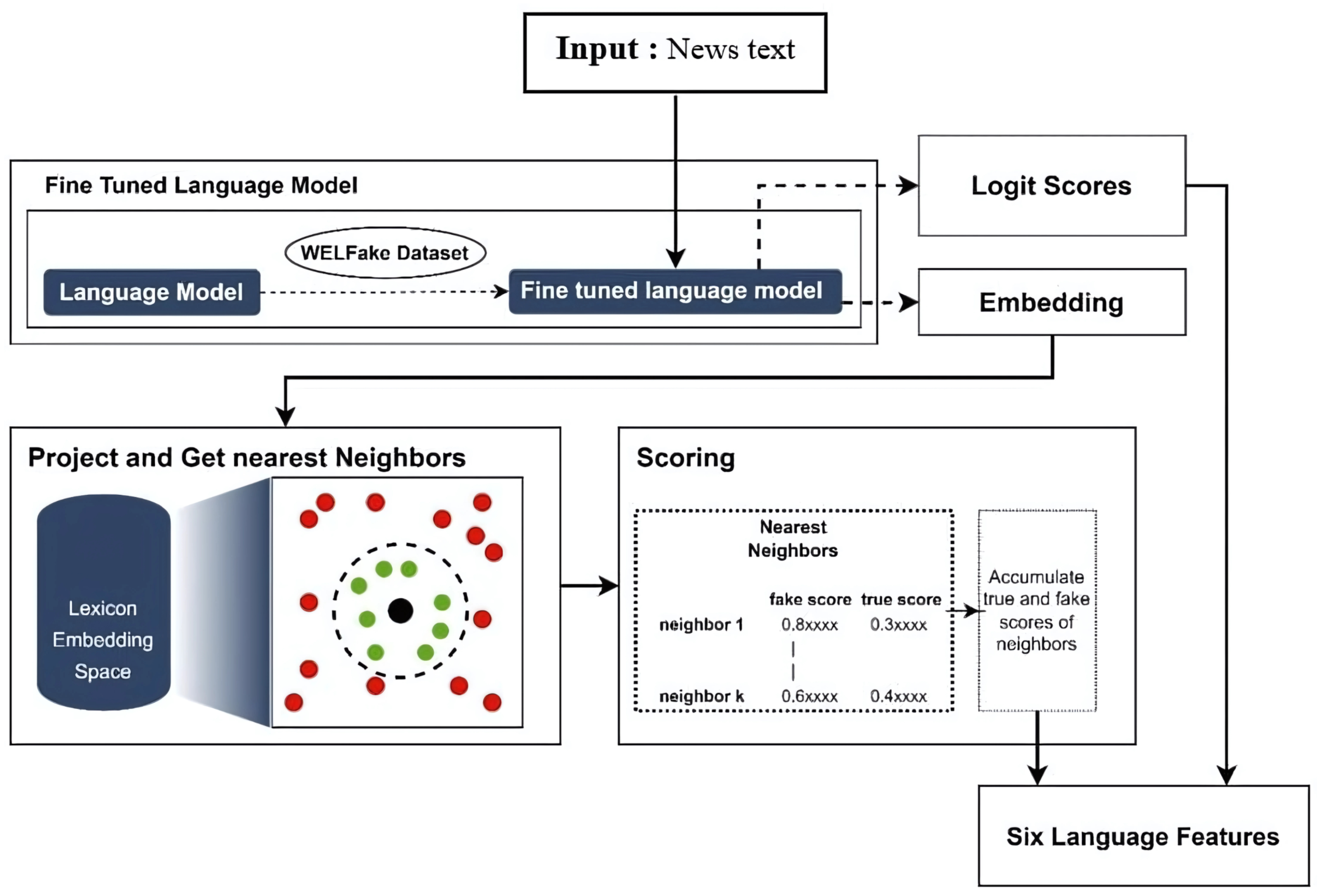

3.3. Module 2—Language Modeling

Figure 2 illustrates the module that extracts the language features from an incoming news item. The WELFake dataset was used in the language modeling pipeline both to fine-tune the DistilBERT embedding model and to create the lexicon. First, the WELFake dataset was preprocessed and cleaned, and duplicates were removed. Next, the title and text columns were concatenated. Finally, the dataset was split into train, test, and validation sets. Using the Trainer API from Hugging Face, a DistilBERT classifier was fine-tuned on the WELFake dataset. This fine-tuned model achieved an accuracy of 0.99 on both the validation set and the test set, reflecting in-distribution performance. This is expected, as WELFake is a synthetically balanced dataset with clear differences between real and fake news, making it commonly used for adapting language models to the fake news domain. Each incoming news item is passed through the tokenizer and then the fine-tuned language model. The model outputs a two-element array of logits, whose elements correspond to the true and fake scores of the news item, together with the embedding of the news item. Here, true and fake scores are defined by Equation (2):

The WELFake dataset was also used to generate the lexicon. The dataset was preprocessed by removing URLs, non-ASCII characters, numbers, and punctuation, and by converting multiple spaces to single spaces. Finally, the following corpora were created from the dataset:

For both news corpora (fake and true), we first extract the most frequently occurring monograms, bigrams, and trigrams from the news corpus (before extracting monograms, stop words are removed using the SMART stopword list). In addition to DistilBERT-based contextual embeddings, we included monogram, bigram, and trigram features to capture surface-level language patterns often linked to fake news, which tends to use repeated phrases, emotional slogans, or clickbait headlines that deep models may overlook. These features help the model spot how the text is written and complement the deeper semantic understanding provided by the embeddings. Each extracted monogram, bigram, and trigram generates four different scores based on the following equations (only the calculation of monogram scores is shown; bigram and trigram scores were calculated similarly):

The created lexicon had a total of 3771 words and phrases (including monograms, bigrams, and trigrams). The lexicon was embedded into a vector space using the same fine-tuned language model that calculated the embeddings of the news item. The outliers from the lexicon were removed using the isolation forest algorithm.
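The n-gram extraction step that feeds the lexicon can be sketched as below. This is an illustration only: the small `STOPWORDS` set is a stand-in for the SMART stopword list, whitespace tokenization is an assumption, and the four per-n-gram scores are not reproduced because they depend on the paper's scoring equations. The function name `top_ngrams` is hypothetical.

```python
from collections import Counter

# Tiny stand-in for the SMART stopword list (assumption for illustration).
STOPWORDS = {"the", "a", "an", "is", "of", "to", "and", "in"}


def top_ngrams(docs, n, top_k=5):
    """Return the top_k most frequent n-grams across docs as (ngram, count) pairs."""
    counts = Counter()
    for doc in docs:
        tokens = doc.lower().split()
        if n == 1:
            # Stopwords are removed only before extracting monograms.
            tokens = [t for t in tokens if t not in STOPWORDS]
        counts.update(" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return counts.most_common(top_k)
```

Running the same function separately on the fake and true corpora yields the candidate words and phrases to which per-corpus scores can then be attached.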

Similar to the emotion modeling module, the embeddings of the news item and the lexicon, created by the same fine-tuned language model, are projected into the same vector space. Next, a nearest neighbor search is performed on the news item using Annoy, where the nearest hundred lexical neighbors to the news item are identified. The selection of the hundred neighbors was determined through empirical evaluation that balanced diversity and noise. Smaller values limited the range of word meanings captured, while larger values brought in less relevant words that added noise. The four different scores of each of the hundred lexical neighbors (fake and true document scores and fake and true occurrence scores) are aggregated into fake and true document scores and fake and true occurrence scores of the news item. Finally, the two logit scores generated by the embedding model and the four aggregated news item scores are outputted from the language pipeline.

3.4. Module 3—Semantic Modeling

This module extracts the semantic features from an incoming news item. Initially, the post is preprocessed by removing non-ASCII characters, URLs, and punctuation. Further lemmatization techniques were applied as part of the feature extraction pipeline. Out of more than 50 different semantic features that can be extracted from a text, we have extracted around 20 of the most prominent and important features. Each extracted feature and the category it belongs to is explained in detail below.

Features such as words per sentence, characters per word, and punctuation per sentence were extracted to compute the complexity of the text. Text complexity can be defined as the level of difficulty in reading and understanding a text based on a series of factors such as the readability of the text, the levels of meaning in the text, the structure of the text, the length of the text, the length of words, etc.

Features such as type-to-token ratio, redundancy, noun ratio, verb ratio, adjective ratio, and adverb ratio were extracted to quantify the diversity of text. Diversity in a text refers to the diverse use of vocabulary and the redundant use of the same tokens. Text and token average length-based features such as number of characters, number of words, number of sentences, number of punctuation marks, number of question marks, and number of exclamation marks were extracted for quantity-based analysis of the text. The uncertainty measure of the text addresses the confidence level of the author in the discussed subject matter. Immediacy refers to communicative behaviors that reduce the physical or psychological distance between individuals. Immediacy behaviors can be verbal or non-verbal. In this study, verbal non-immediacy clues were extracted from the text using the following equations:

These features were selected based on both domain knowledge and their theoretical relevance to information asymmetry in fake news. Specifically, they capture how misinformation creators manipulate structural complexity (e.g., words per sentence, characters per word), emotional tone (e.g., sentiment polarity, question/exclamation marks), and linguistic style (e.g., lexical diversity, part-of-speech ratios, redundancy) to influence perception, often differing from how consumers interpret that information.
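A few of the complexity, diversity, and quantity features named above can be computed as in the following sketch. The sentence splitter and word pattern are simplifying assumptions, the feature names are hypothetical, and the part-of-speech ratios, uncertainty, and non-immediacy features are omitted because they need a tagger or the paper's own equations.

```python
import re


def semantic_features(text: str) -> dict:
    """Compute a handful of surface-level semantic features for one text."""
    # Naive sentence split on terminal punctuation (assumption for illustration).
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    n_sent = max(len(sentences), 1)   # guard against empty input
    n_words = max(len(words), 1)
    return {
        "words_per_sentence": len(words) / n_sent,
        "chars_per_word": sum(len(w) for w in words) / n_words,
        "type_token_ratio": len(set(words)) / n_words,
        "question_marks": text.count("?"),
        "exclamation_marks": text.count("!"),
    }
```

For example, the text "Is this real? No! This is fake news." has eight words in three sentences and six distinct word types, giving a type-to-token ratio of 0.75.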

To refine this set, we applied Recursive Feature Elimination with Cross-Validation (RFECV), which reduced the list to the most predictive 14 features across the datasets. Notably, length-only features (e.g., total word or character count) were excluded, as they skew model learning without offering meaningful semantic patterns, particularly across texts of varying length.

Semantic features are highly influenced by the length of the text (number of words, number of characters, number of sentences, etc.). For example, the number of words in a short text mostly falls between 0 and 50 and the number of unique nouns between 0 and 25, whereas the number of words in a long text mostly falls between 0 and 250 while the number of unique nouns stays in a similarly narrow range (0 to 15). Because the word count takes much larger values, the model will give higher priority to that feature. To neutralize this effect, the features were normalized against the text length by applying IQR (interquartile range) filtering to map any outlier feature values into the IQR region and then applying Z-score normalization to remove the default weight that features gain from their value range. These fourteen normalized semantic features are the output of the semantic pipeline.
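The two normalization steps can be sketched for a single feature column as follows. The text does not specify the exact clipping bounds, so this sketch assumes the common Tukey fences (Q1 - 1.5 IQR and Q3 + 1.5 IQR); the function names and the multiplier `k` are hypothetical.

```python
import statistics


def iqr_clip(values, k=1.5):
    """Clip values to [Q1 - k*IQR, Q3 + k*IQR] (assumed bounds)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def zscore(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]  # constant column carries no signal
    return [(v - mean) / std for v in values]


def normalize_feature(values):
    """Clip outliers first, then standardize, mirroring the order in the text."""
    return zscore(iqr_clip(values))
```

Clipping before standardizing keeps a single extreme value from inflating the standard deviation and compressing every other value toward zero.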

3.5. Module 4—Classification

Before the data are passed to the classifier, outlier filtering is applied to the emotion and semantic features, and both feature sets are then normalized using Z-score normalization. The language features were not standardized, as they performed well without standardization.

Figure 3 presents the final voting classifier used. The voting classifier uses two separate Gaussian naive Bayes models to classify emotion and semantic features and a K nearest neighbor classifier for language features. Following this, the decisions outputted by the three models are considered, and the voting classifier outputs the final results based on a hard voting mechanism. The architecture maintains modular emotion, semantic, and linguistic feature pipelines, which not only enhance classification robustness but also allow for interpretability by enabling us to examine the role of each module in the final prediction.
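The hard voting step can be illustrated with a short sketch. It assumes that each of the three pipeline classifiers has already produced a binary label (here 1 for fake and 0 for real, a labeling convention chosen for this example) and simply returns the majority label. The function name `hard_vote` is hypothetical.

```python
from collections import Counter


def hard_vote(emotion_label: int, semantic_label: int, language_label: int) -> int:
    """Return the label predicted by the majority of the three pipelines."""
    votes = Counter([emotion_label, semantic_label, language_label])
    # With three voters and two classes, a strict majority always exists.
    return votes.most_common(1)[0][0]


# Two of three pipelines vote "fake" (1), so the ensemble predicts fake.
prediction = hard_vote(emotion_label=1, semantic_label=0, language_label=1)
```

Because each vote is visible before aggregation, the per-module labels can be inspected directly to see which pipelines agreed with the final decision.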

4. Experiments and Results

Extensive experiments were carried out to investigate, test, and evaluate the proposed methodology. The usual evaluation strategies and metrics are not sufficient to evaluate the generalizability of an approach. Therefore, a relatively new metric that has been used to evaluate model generalizability in previous research, the Cross Validation Average Accuracy (CVAA), was used to evaluate the experiments. The CVAA of a classifier model (M) across five different datasets (D1, D2, D3, D4, and D5) is calculated according to Equation (15):

where

The initial phase of the study involved an ablation study, which was conducted to evaluate the extent to which each modeling pipeline could effectively detect instances of fake news, hence assessing their generalizability. Separate experiments were undertaken on individual pipelines, employing a variety of algorithms such as tree algorithms, boosting algorithms, and other classification approaches in order to determine the most effective models for each modeling technique and to determine the extent of their importance in detecting fake news. Furthermore, an examination was conducted to assess the influence of the standardization process. These investigations aimed to ascertain the extent to which standardization contributes to the improvement of the overall applicability of fake news detection in each pipeline. This phase of the study involved comparing and contrasting the average generalization (cross-validation) accuracies of these models.
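To make the idea of an average cross-dataset accuracy concrete, the sketch below assumes one plausible protocol: each dataset is held out once, the model is trained on the remaining datasets, and the held-out accuracies are averaged. This protocol, the function name `cvaa`, and the `evaluate` callback are assumptions for illustration; the study's exact definition is the one given by its Equation (15).

```python
from statistics import fmean
from typing import Callable, List, Sequence


def cvaa(datasets: Sequence[str],
         evaluate: Callable[[List[str], str], float]) -> float:
    """Average held-out accuracy, holding out each dataset once (assumed protocol)."""
    scores = []
    for held_out in datasets:
        train = [d for d in datasets if d != held_out]
        # evaluate() is expected to train on `train` and return accuracy on `held_out`.
        scores.append(evaluate(train, held_out))
    return fmean(scores)


# Placeholder accuracies for illustration only; these are not results from the study.
toy_accuracy = {"D1": 0.5, "D2": 0.75, "D3": 0.5, "D4": 0.75, "D5": 0.5}
score = cvaa(list(toy_accuracy), lambda train, test: toy_accuracy[test])
```

In practice the `evaluate` callback would fit a classifier on the training datasets and score it on the held-out one; the toy lookup table only stands in for that step.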

From the experimental results in Table 3, the Gaussian naive Bayes classifier performed best on both emotion and semantic features, attaining accuracies of 0.58 and 0.53, respectively, while the K nearest neighbors classifier performed best on linguistic features, with an accuracy of 0.60. Standardization had a positive effect on the emotion and semantic features, raising their accuracies to 0.584 and 0.58, respectively, whereas the language features performed consistently without standardization. Overall, language features proved the most useful for detecting fake news, followed closely by emotion and semantic features. The limited gains observed in the combined approach can be attributed to partial overlap in the features captured by the three modules. For instance, both the emotion and semantic models encode elements of emotions and narratives, which may result in feature redundancy. Moreover, since each model is already optimized for a specific dimension, their aggregation does not produce a strictly additive gain. Future enhancements could explore deeper feature fusion mechanisms or late-stage decision fusion to better leverage the complementary strengths of each module.

These results highlight the benefits of a modular architecture, where emotion, linguistic, and semantic pipelines are modeled independently and optimized for their respective feature types. The ablation study effectively demonstrates how each module contributes to fake news detection in isolation. Linguistic features achieved the highest CVAA (0.60), followed by emotional (0.584) and semantic (0.58) features after standardization. This independent evaluation provides a broad but informative view of how each pipeline performs individually. Importantly, it provides a basis for understanding how their integration enhances the model’s overall robustness and generalizability. Next, the effect of combining the features was investigated in order to evaluate if the combination of the multiple facets had any significant impact on improving the generalizability of fake news detection. Multiple experiments were conducted where various combination approaches, such as concatenation of features, neural networks, and ensembling techniques such as voting classification, were tested to identify the best-performing technique in combining the features.

From the various feature fusion experiments conducted, the use of the ensembling technique, the voting classifier, to combine the multifaceted features proved to be the best-performing combination approach due to its high accuracy, lower computational cost, and greater interpretability in a real-time detection context. Here, three different classification models were used to classify each modeling pipeline, and finally, a decision was made by the voting classifier using the three classification votes from each pipeline. Further experiments were conducted using different combinations of models for each pipeline and different combinations of hard and soft voting for the voting classifier.

The most accurate predictions were obtained by a voting classifier that used the Gaussian naive Bayes classifier for the normalized emotion and semantic features and the K nearest neighbors classifier for the unnormalized language features, combined through hard voting (the label receiving the majority of the votes is taken as the prediction). This configuration achieved the highest accuracy, with a cumulative CVAA of 0.62, as shown in Table 4. It can be concluded that combining the different facets enabled the detection of fake news more accurately than each pipeline did separately. While the highest CVAA achieved may appear modest in absolute terms, it reflects consistent performance across five diverse datasets with varying formats (tweets, articles), lengths (short/long text), and domains (COVID-19, politics, general news). This level of cross-domain generalizability is particularly relevant for real-world deployment, where fake news characteristics often vary significantly across platforms and topics. Therefore, even incremental gains in CVAA suggest robust model adaptability, rather than overfitting to a specific dataset.

In addition to evaluating the generalizability of the model by using different modeling facets, different combinations of datasets were also used in order to evaluate the generalizability of the approach across data with diverse features. Here, the voting classifier from previous experiments was trained and tested on varying combinations of the five datasets, as listed below:

Trained on short texts and tested on long texts

Trained on long texts and tested on short texts

Trained on a combination of short and long texts; tested on short texts

Trained on a combination of short and long texts; tested on long texts

Trained on a combination of short and long texts; tested on a combination of short and long texts

To support real-time applicability, we designed the system with computational efficiency in mind. The emotion lexicon was reduced from about 10,000 to 3000 entries to enable faster look-ups. Embeddings are pre-computed and indexed using the Annoy algorithm for approximate nearest neighbor search, ensuring low latency. Additionally, the use of lightweight classifiers such as Gaussian naive Bayes and KNN ensures rapid inference. From

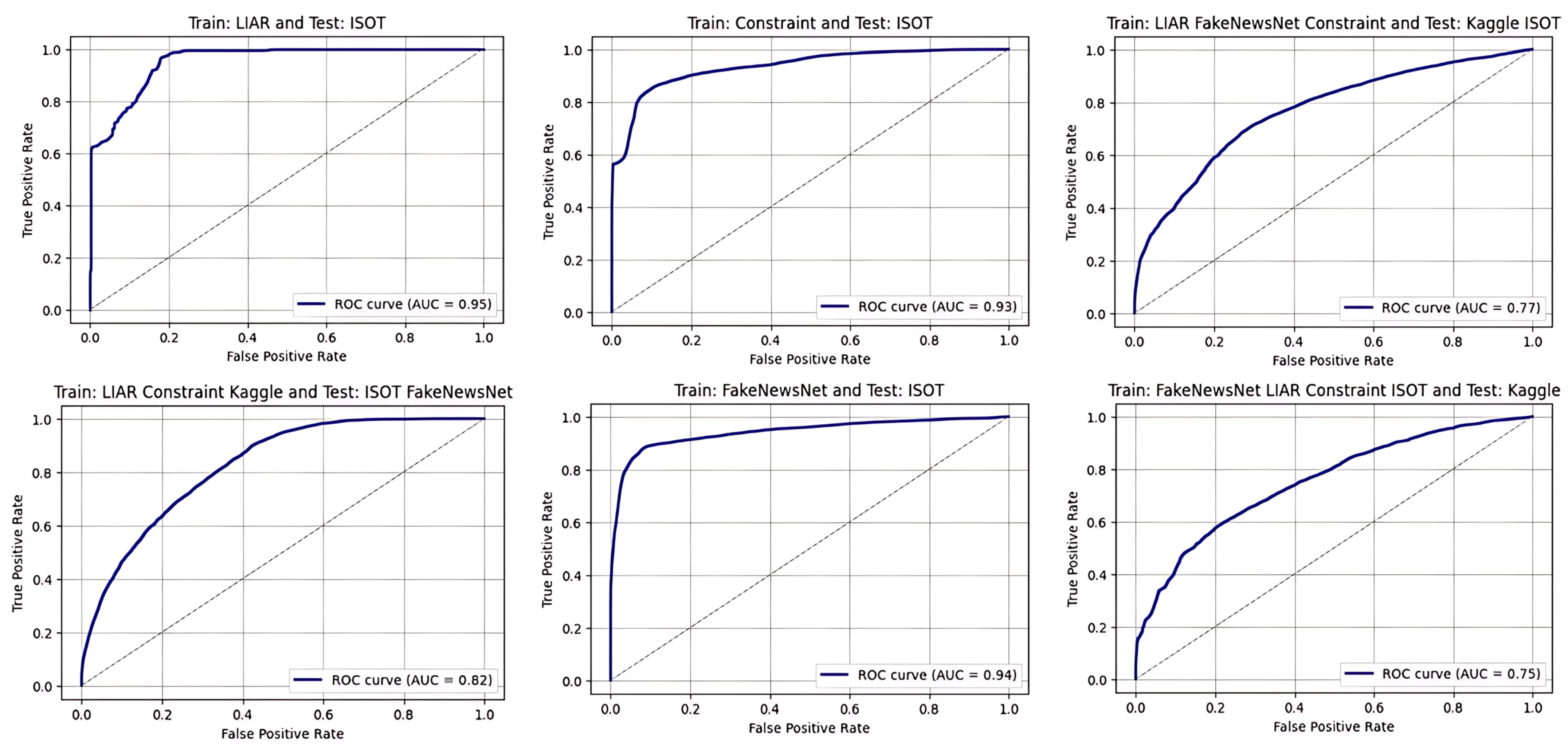

Table 5, it can be observed that when the model was trained on long text only (trained on ISOT and Kaggle, tested on FNN, LIAR, and CONSTRAINT), the accuracy obtained was comparatively low (0.50). But, when a mixture of long and short texts were used, the accuracies were comparatively higher, with an average of 0.68 (trained on FNN, LIAR, CONSTRAINT, and ISOT, tested on Kaggle). This indicates that for a model to achieve good generalizability, it has to be trained on various kinds of training data (short and long). As further demonstration of generalizability across domains, we plotted ROC curves for selected train–test combinations in

Figure 4. These visualizations demonstrate high AUC scores in multiple configurations, supporting the model’s robustness across diverse dataset sources.
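For readers less familiar with the AUC, the sketch below computes it directly from its rank interpretation: the probability that a randomly chosen fake item receives a higher score than a randomly chosen real item, with ties counted as one half. It assumes that a continuous score per item is available (for example, a class probability from one of the classifiers), which a hard-voting ensemble does not provide by itself. The function name, labels, and scores are hypothetical.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    # Count positive-negative pairs ranked correctly; ties count as half.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels (1 = fake, 0 = real) and classifier scores.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.6, 0.4, 0.7, 0.8, 0.2]
auc = roc_auc(labels, scores)
```

The pairwise form is quadratic in the number of items, so it suits small illustrative samples rather than full datasets.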

These findings highlight that generalization across platforms or sources depends not only on model design but also on the diversity of the training data. Using only long-form content limits the emotional and linguistic variation captured during training. In contrast, short texts often exhibit concentrated emotional expressions, rhetorical questions, or polarizing cues, all of which are crucial for detecting manipulation. Therefore, training on a mix of short and long content enhances model robustness and scalability, especially when deployed in dynamic, mixed-format environments such as social media and digital news platforms.

Nasir et al. introduced a model that exhibits generalizability, which was trained using the ISOT dataset and subsequently validated on the FA-KES dataset [44]. In a similar manner, our proposed approach was trained using the ISOT dataset and subsequently validated on the FA-KES dataset. In this experiment, our model achieved results comparable to Nasir et al.’s method when using only the news article titles, yielding an accuracy of 0.50. Furthermore, our methodology performed better when using the complete content, including both the title and text, achieving an accuracy of 0.52. This finding provides additional evidence that our model can predict fake news across a wide range of scenarios more effectively than this existing method. These experiments demonstrate how the proposed multifaceted approach achieves generalizability in addressing the information asymmetry challenge of fake news detection.

To demonstrate the interpretability of our modular architecture, we present a qualitative comparison of two representative news items—one fake and one real—from the dataset. This example shows how each pipeline (emotion, semantic, and linguistic) contributed distinct evidence toward the system’s final prediction.

The fake news item, “Bombshell Obama paid FBI informant over million to do it to Trump even after the election”, exhibits elevated emotional intensities, particularly anger (15.48) and fear (8.15), suggesting an intent to provoke or mislead. In contrast, the real news item, “Johnny Depp has a clear and epic sense of entitlement ex managers say”, has much lower scores in anger (0) and fear (1.48), but a high trust score (30.9), consistent with narrative or neutral reporting. This contrast indicates that the emotion module helped identify emotionally manipulative framing in the fake article.

Semantically, the fake item includes shorter sentences (8.5 words on average), lower lexical diversity (52.94%), and flat sentiment polarity (0.00), traits often linked to oversimplified or exaggerated content. The real article features longer sentences (12 words), greater lexical diversity (58.33%), and positive sentiment polarity (0.38), reflecting linguistic complexity and balance. These differences were captured by the semantic pipeline, which favored the real item for its richer structure.

The linguistic module adds further contrast: the real article shows a balanced word score split (51.1% vs. 48.9%) and moderate logit scores, indicating natural phrasing. The fake item, in comparison, has a skewed word distribution (64.4% vs. 35.6%) and high monogram/trigram values (3.05 and 2.54), reflecting the use of repetitive or emotionally loaded phrases often seen in misinformation.

Together, the modules consistently predicted the correct labels, highlighting the benefit of a modular, multi-perspective approach. By combining emotional, structural, and linguistic cues, the system improves both detection accuracy and transparency in decision-making.

5. Discussion and Future Work

The performance gains reported in Table 3, while moderate in absolute terms, are representative of an architecture designed for real-world generalizability, interpretability, and efficiency. The system was designed to support real-time fake news detection through fast classifiers (Gaussian naive Bayes, KNN), compact emotion lexicons, Annoy indexes for rapid neighbor lookups, and hard voting for low-latency aggregation. The modular architecture enables deeper insight into which facets of information (emotional, semantic, or linguistic) drive predictions, while also supporting independent module tuning or replacement. This design enhances generalizability by capturing multiple dimensions of misinformation, allowing the system to perform robustly across news items with varying styles, topics, and intents.

To ensure the system remains viable in real-time and high-throughput scenarios, several strategies were applied to reduce computational demands. The emotion lexicon was streamlined from 10,000 to approximately 3000 terms by removing rare and contextually ambiguous entries and merging semantically similar terms. This resulted in a 49% reduction in average emotion embedding lookup time (from 71 ms to 36 ms), achieved through cosine similarity and Annoy-based indexing. Further, storing indexed vectors in read-only memory structures facilitated parallel access, reducing overhead during concurrent inference.

These efficiency gains were paired with a robust evaluation strategy. Instead of relying on single-split metrics, we employed Cross-Validation Average Accuracy (CVAA) across multiple datasets to ensure stronger generalizability and resilience to topical or structural variation. This stricter evaluation provides a more reliable picture of the model’s real-world applicability.

As future work, we intend to conduct further experiments with larger and more diverse datasets and compare across pre-existing methods for fake news detection. We will also explore adaptive lexicon growth, that is, expanding the emotion lexicon in response to emerging emotional patterns, as well as dynamic neighbor selection based on content length or emotional intensity. Likewise, SHAP (Shapley Additive Explanations) or similar model-agnostic techniques will be incorporated to quantify the relative contribution of each module, enabling fine-grained interpretability of predictions across diverse input types.

5.1. Comparison with Recent Approaches

Recent studies on fake news detection have increasingly included LLMs, emotion-based analysis, and multi-feature hybrid architectures. LLMs demonstrate high generalizability and robustness, particularly against adversarial attacks and domain shifts. For instance, RumorLLM by Lai et al. [54] effectively detects fake news using LLM-based augmentation, whereas comparative evaluations by Raza et al. [55] reveal that encoder-only models can outperform generative LLMs in terms of accuracy, but there is a trade-off with some robustness and interpretability. Emotion-based approaches improve model transparency and resilience to concept drift. Liu et al. [56] highlight how affective signals (e.g., anger, fear) correlate with misinformation, a trend supported by models incorporating sentiment and emotion features [57,58,59]. These models enhance interpretability and maintain performance over time, particularly on evolving social media content. Hybrid and multifeature models achieve the best overall performance by integrating diverse signals. Notably, BRaG [60] combines BERT, RNN, and GNN layers using user and propagation contexts, and DANES [61] fuses social and textual contexts. These systems achieve state-of-the-art results on multiple datasets. Other systems, such as TLFND [62] and SAMPLE [63], use multimodal fusion and prompt learning to improve cross-domain generalizability.

In contrast to these approaches, our method directly models information asymmetry through a modular pipeline consisting of three independently tuned and explainable modules: emotion, semantic, and language modeling. While emotion has been treated as a secondary feature in some models, we place it at the core, aligning affective, linguistic, and semantic disparities between fake news creators and consumers. Moreover, our model achieves cross-domain generalizability (CVAA of 0.62) and real-time applicability, supported by lightweight classifiers and indexed embeddings. This modular design offers interpretability comparable to that of XAI-based systems but with faster inference and focused attribution of feature contributions. This is further summarized in Table 6.

5.2. Limitations of the Proposed Approach

Despite the strengths of the proposed modular ensemble model in terms of interpretability, performance, and cross-domain adaptability, there are some limitations that will be addressed through future work. First, experiments were conducted on a curated set of publicly available benchmark datasets. Although these datasets offer diversity in topic and structure, they may not fully represent the linguistic, cultural, or contextual diversity encountered in real-world misinformation, particularly in low-resource settings or non-English content. This may limit the generalizability of the model to underrepresented domains.

Second, the effectiveness of the emotion module is highly dependent on the quality of the underlying emotion annotations. In several datasets, emotional cues are inferred using lexicon-based or distant supervision methods, which can introduce labeling noise, particularly in cases involving sarcasm, irony, or subtle affective manipulation. Consequently, the emotional component may be less reliable in ambiguous or adversarial contexts.

Third, although our modular architecture supports interpretability at the component level, it does not yet provide token-level or instance-specific attribution comparable to post hoc explainability tools such as LIME or SHAP. While the model allows for a high-level understanding of feature contributions, further integration of localized explanation mechanisms could enhance interpretability for end-users and auditors. Another important limitation is the challenge of temporal and conceptual drift. Our current evaluation does not include longitudinal data or time-sensitive misinformation trends, and it remains uncertain how well the model will perform in dynamic environments where language use and misinformation strategies evolve rapidly. Addressing this would require real-time or time-split evaluations to assess robustness over time.

In addition, the emotion and linguistic lexicons used by the model are static. As language evolves constantly, especially in online and social media settings, new slang, cultural references, and affective cues emerge regularly. Without regular updates to the lexicon to reflect these changes, the model may miss or misinterpret novel expressions, weakening the performance of the emotion and language modules, particularly in unfamiliar or emerging contexts.

Finally, the current model operates without external factual resources, such as knowledge graphs, retrieval-augmented generation (RAG), or structured fact-checking databases. Although this design allows for lightweight deployment and speed, incorporating external knowledge can significantly enhance the semantic depth and factual grounding of predictions, particularly for claim-based fake news detection.