Enhancing Commentary Strategies for Guandan: A Study of LLMs in Game Commentary Generation

Abstract

1. Introduction

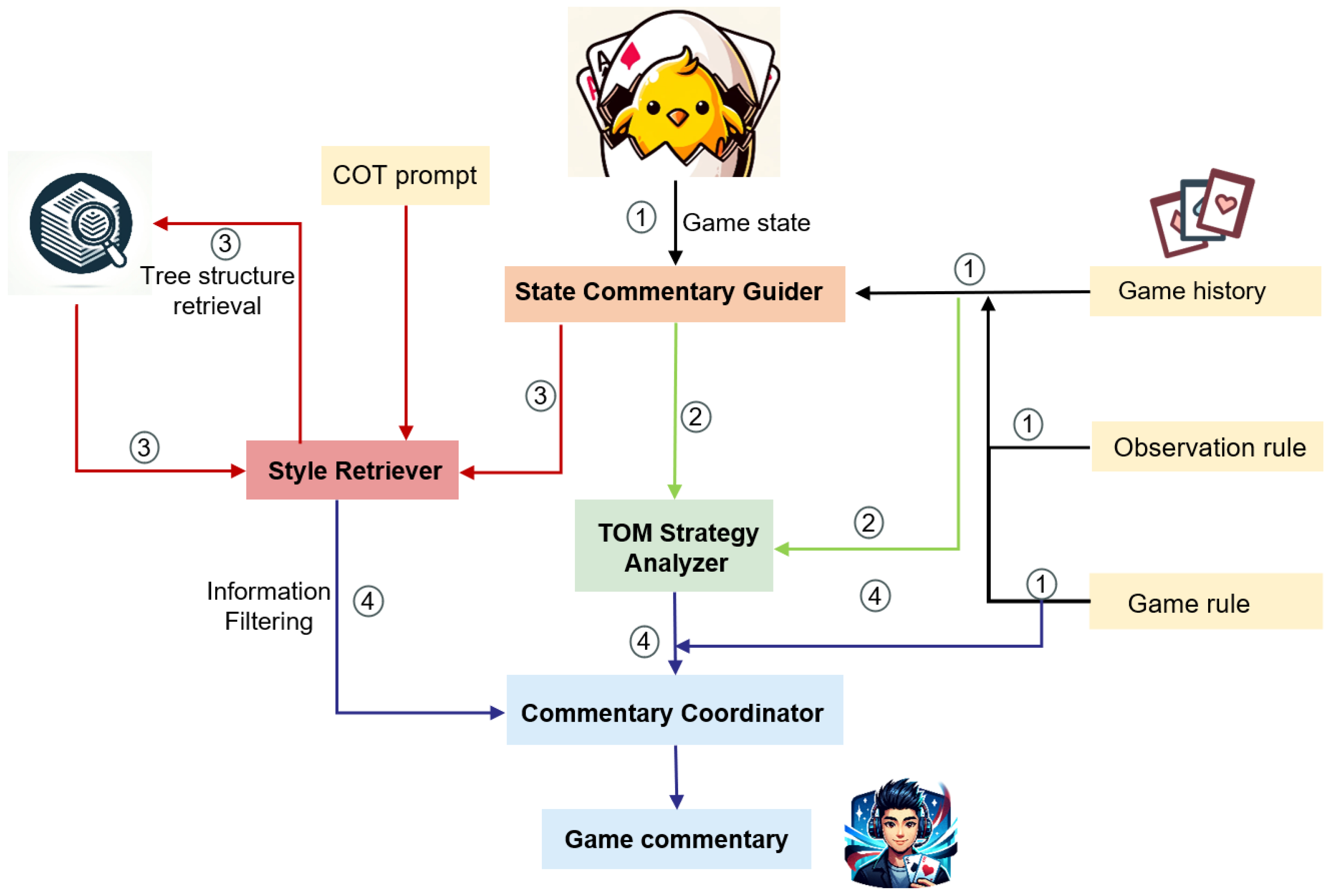

- We equip LLMs with Theory of Mind (ToM) capabilities, optimizing retrieval and information filtering mechanisms. This allows LLMs to effectively analyze the game-playing processes of RL agents and produce more personalized, context-aware commentary.

- We design a specialized commentary agent framework tailored for Guandan, a card game characterized by incomplete information. This framework enables the agent to handle complex game situations and generate insightful commentary without requiring specific training in the game environment.

- Our experimental results demonstrate that the proposed framework significantly enhances the performance of open-source LLMs, outperforming GPT-4 across multiple evaluation metrics.

2. Related Work

2.1. Game Commentary Generation

2.2. Retrieval-Augmented Generation

3. Method

3.1. State Commentary Guider

- General Rules: A brief introduction to the game, team position rules, all single cards, and the card ranking.

- Card types that cannot beat other types: {Description of Card Type 1, Example of Card Type 1}, {Description of Card Type 2, Example of Card Type 2}, ...

- Card types that can beat other types: {Description of Card Type 1, Example of Card Type 1}, {Description of Card Type 2, Example of Card Type 2}, ...

- Single Win/Loss Rule: The scoring rules for the four players in a single game, covering the different combinations of cards played out in different orders.

- Whole Win/Loss Rule: The overall win/loss conditions.

- Input Explanation: The input types, like dictionaries and lists, are clearly specified. A description of every component in the input is also provided.

- Conversion Tips: Additional instructions guide converting the low-level game state representations into natural language descriptions.
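
The conversion from low-level state to natural language described in the Input Explanation and Conversion Tips items can be illustrated with a minimal sketch. The state schema (`player`, `cards`, `card_type`, `cards_left`), the two-part card codes, and the function names are all hypothetical; the actual input format used by the framework is not specified here.

```python
from typing import Dict, List

# Hypothetical card encoding: one suit letter followed by a rank string.
RANK_NAMES = {"T": "10", "J": "Jack", "Q": "Queen", "K": "King", "A": "Ace"}
SUIT_NAMES = {"S": "Spades", "H": "Hearts", "C": "Clubs", "D": "Diamonds"}


def card_to_text(card: str) -> str:
    """Convert a code such as 'HA' (suit + rank) into 'Ace of Hearts'."""
    suit, rank = card[0], card[1:]
    return f"{RANK_NAMES.get(rank, rank)} of {SUIT_NAMES[suit]}"


def describe_action(state: Dict) -> str:
    """Render one move from an assumed state dictionary as a sentence."""
    player = state["player"]
    cards: List[str] = state["cards"]
    if not cards:
        # An empty card list is treated as a pass (an assumption of this sketch).
        return f"Player {player} passes."
    names = ", ".join(card_to_text(c) for c in cards)
    return (
        f"Player {player} plays a {state['card_type']} ({names}) "
        f"and has {state['cards_left']} cards left."
    )


move = {"player": 1, "cards": ["H7", "S7"], "card_type": "pair", "cards_left": 12}
print(describe_action(move))
```

Sentences of this kind could then be placed in the prompt alongside the rule descriptions, so that the LLM reasons over text rather than raw dictionaries and lists.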

3.2. ToM-Based Strategy Analyzer

- First-order ToM: We utilize first-order ToM for basic strategy analysis. By analyzing players’ past actions and the current situation, we infer the possible hand types each player may hold and analyze their potential strategies. This information is utilized to construct commentary content, explaining players’ actions and potential counter-strategies by opponents. For example, if a player consistently chooses to play certain cards, we can infer that they might hold strong cards and possibly intend to suppress their opponents.

- Second-order ToM: We introduce second-order ToM for a deeper level of strategy analysis. At this stage, we not only consider players’ strategies and actions but also predict opponents’ cognition and reactions to these strategies. Through this approach, we can interpret the game progression and players’ strategic tendencies more comprehensively. For instance, if a player adopts a relatively adventurous strategy in a certain situation, we may speculate that they believe their opponents would not anticipate this move, deliberately selecting this strategy.

Algorithm 1. Theory of Mind (ToM) Inference Step
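
The difference between the two orders of inference can be sketched as prompt construction. This is a minimal illustration under stated assumptions, not Algorithm 1 itself: the function name `build_tom_prompt`, the `(player, play)` history format, and the question wording are all hypothetical.

```python
from typing import List, Tuple

Action = Tuple[str, str]  # (player name, description of the play); assumed format


def build_tom_prompt(history: List[Action], target: str, order: int) -> str:
    """Build a first- or second-order ToM question about `target`.

    order=1 asks what the target may hold and intend.
    order=2 additionally asks what the target believes the opponents expect
    and how the opponents might react.
    """
    if order not in (1, 2):
        raise ValueError("order must be 1 or 2")
    lines = ["Past actions:"]
    lines += [f"- {player}: {play}" for player, play in history]
    lines.append(
        f"First-order question: given these actions, what hand types might "
        f"{target} hold, and what strategy are they likely following?"
    )
    if order == 2:
        lines.append(
            f"Second-order question: what does {target} believe the opponents "
            f"expect, and how might the opponents react to this strategy?"
        )
    return "\n".join(lines)


history = [("East", "pair of 7s"), ("South", "pass"), ("West", "pair of Kings")]
print(build_tom_prompt(history, target="West", order=2))
```

In this sketch the second-order prompt strictly extends the first-order one, which mirrors the description above: second-order analysis keeps the hand and strategy inference and adds reasoning about what opponents anticipate.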

3.3. Style Retrieval and Extraction

- Data Retrieval: In the data retrieval phase of Guandan game commentary, we adopt a tree structure retrieval method to efficiently extract information most relevant to user queries from a corpus specifically designed for the Guandan game. In this process, each document is broken down into individual document nodes, each containing the content of the original document and a unique identifier, to facilitate subsequent vector indexing. The content of these document nodes is then converted into vector form and indexed using a vector space model.

- Information Filtering: In the information filtering stage, the model filters the retrieved data. The system examines the relevance of each data item and retains only those that meet the relevance standard for subsequent processing. The Style Retrieval and Extraction module then passes this curated, relevant data to the Commentary Coordinator to support the analysis and explanation of game situations and player strategies during the commentary process.
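
The two stages above can be sketched in a few lines. This is a simplified illustration under stated assumptions: it uses a flat index rather than the tree-structured retrieval described above, bag-of-words counts with whitespace tokenization instead of a learned embedding, and a hypothetical relevance threshold of 0.3. The names `DocumentNode`, `retrieve`, and `filter_relevant` are illustrative only.

```python
import math
import uuid
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class DocumentNode:
    """One node per document: its content plus a unique identifier."""
    text: str
    node_id: str = field(default_factory=lambda: uuid.uuid4().hex)

    def vector(self) -> Counter:
        # Term-count vector; a real system would use an embedding model.
        return Counter(self.text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, nodes: List[DocumentNode], top_k: int = 3) -> List[Tuple[float, DocumentNode]]:
    """Data retrieval: score every node against the query, keep the top_k."""
    q = Counter(query.lower().split())
    scored = [(cosine(q, n.vector()), n) for n in nodes]
    return sorted(scored, key=lambda s: s[0], reverse=True)[:top_k]


def filter_relevant(scored: List[Tuple[float, DocumentNode]], threshold: float = 0.3) -> List[DocumentNode]:
    """Information filtering: keep only nodes at or above the threshold."""
    return [node for score, node in scored if score >= threshold]


corpus = [
    DocumentNode("a bomb beats a straight"),
    DocumentNode("the weather is sunny today"),
]
kept = filter_relevant(retrieve("bomb beats straight", corpus))
print([n.text for n in kept])
```

Separating scoring from filtering keeps the threshold adjustable without re-running retrieval, which is convenient when tuning how much retrieved style material reaches the Commentary Coordinator.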

4. Experiments and Result Analysis

4.1. Implementation Details

4.2. Dataset

- Professional commentary: Transcribed from match videos with two-stage validation:

  - Technical accuracy (≥90% compliance) verified by game experts (2+ years experience)

  - Strategic relevance scored by professionals (avg. 4.5/5)

- Generated commentary: Produced by the commentary model based on actual match data, simulating the style and content of professional commentary.

4.3. Metrics

- Cosine Similarity: We employ the TF-IDF vectorization method [29] to convert processed texts into vector form and calculate the cosine similarity between professional and test texts. This measures the semantic closeness of professional and generated texts, reflecting the model’s capability to capture semantics.

- Sentiment Analysis: Through sentiment analysis [30] tools, we assign sentiment polarity scores to the texts to compare the emotional expression differences between the two types of texts.

- Lexical Diversity: We use the Type-Token Ratio (TTR) [31] to assess the lexical diversity of the texts. This metric measures the proportion of different words in the text relative to the total number of words, reflecting the richness and diversity of the language.

- SNOWNLP: The SNOWNLP [32] score is a sentiment score ranging from 0 (most negative) to 1 (most positive), computed using a Chinese text sentiment analysis library based on Naive Bayes.

- Human Evaluation: We also conduct human evaluations, including match consistency and fluency. These evaluations are completed by human reviewers to verify whether the commentary texts accurately reflect the actual card game situations and assess the readability and naturalness of the texts. Reviewers need to have a certain knowledge and experience of Guandan to accurately judge the accuracy of the text descriptions.

- Match Consistency

  - Key Event Identification (KEI): Assess whether the commentary texts capture key events in the match, such as major turnarounds or critical decision points [33].

  - Detail Accuracy: Check if the text descriptions of card types, scores, and player strategies are precise and correct.

- Fluency

  - Naturalness: Assess if the text language is smooth and free of grammatical errors or unnatural expressions.

  - Information Organization: Evaluate if the text’s information is properly organized and can be understood in a logical order throughout the development of the match.

  - Logical Coherence: Check if the narrative of events in the text is coherent and free from logical jumps or contradictions.
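
The two automatic text metrics defined above, TF-IDF cosine similarity and the Type-Token Ratio, can be sketched with the standard library alone. The exact TF-IDF variant and tokenizer are not stated above, so this sketch assumes a smoothed inverse document frequency, $\ln\frac{1+N}{1+\mathrm{df}} + 1$, and whitespace tokenization; Chinese commentary would need a word segmenter first.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf_vectors(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Return one sparse TF-IDF vector per tokenized document.

    Uses a smoothed IDF (an assumption of this sketch) so that terms
    appearing in every document still receive a non-zero weight.
    """
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}
    return [{t: tf * idf[t] for t, tf in Counter(doc).items()} for doc in docs]


def cosine_similarity(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine of the angle between two sparse vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def type_token_ratio(tokens: List[str]) -> float:
    """Distinct words divided by total words (0.0 for empty input)."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


professional = "east leads a pair of sevens".split()
generated = "east opens with a pair of sevens".split()
vec_pro, vec_gen = tfidf_vectors([professional, generated])
print(cosine_similarity(vec_pro, vec_gen))
print(type_token_ratio(generated))
```

Because the vectors are built from word counts, two texts with no words in common score exactly 0, and a text in which no word repeats has a TTR of exactly 1.0.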

4.4. Results

- Human Evaluation Analysis. We recruit 20 human annotators with Guandan experience for scoring. As shown in Table 2, in terms of match consistency, our model outperforms the other models, particularly in Key Event Identification (KEI) [34], where it scores 0.81 against 0.46 for GPT-4, the next-best model. This indicates that our model captures crucial moments in the game, reflecting its turning points and climactic sections. In Detail Accuracy, our model also scores highest (0.97), accurately describing game elements such as card types, scores, and strategic actions. Regarding fluency, our framework achieves the highest scores in naturalness (0.95) and information organization (0.89), and its logical coherence score (4.34) is second only to GPT-4 (4.46), highlighting the model’s ability to generate commentary text that is grammatically correct, logically structured, and contextually coherent.

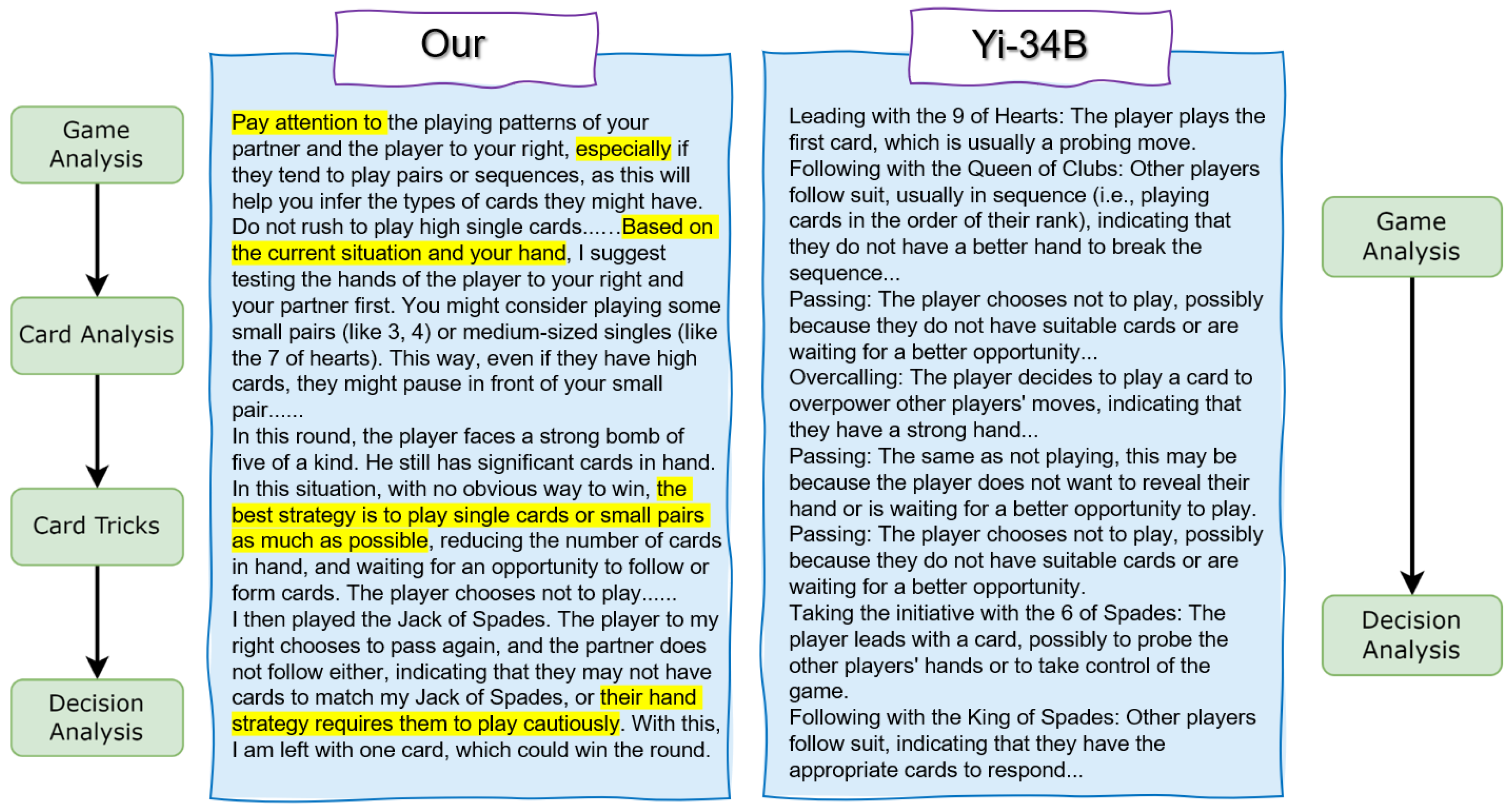

- Case Study. As shown in Figure 2, our method not only focuses on the types of cards played but also delves into detailed analysis of the playing patterns and potential strategies of the players and their opponents. It provides recommendations based on the current and predicted game state, advising when to play high or low cards. This includes discussions on when to play single cards or pairs to maximize gameplay advantage, helping players not only understand the game rules but also master advanced strategies to enhance their gameplay level. In contrast, the Yi-34B model’s commentary seems to focus more on the sequence of cards played without deeply exploring the reasoning or strategic implications behind these choices. It lists actions such as leading with certain cards or choosing to pass, which, while informative, do not delve into the strategic significance of these decisions. See Appendix B for more output examples.

4.5. Ablation Studies

- Ablation Studies on RAG and ToM. Table 3 shows that removing RAG (e.g., “Our(w/o RAG)(Vanilla)”) results in a cosine similarity of 0.0 with the original text, despite producing the maximum SNOWNLP score of 1.0 and a reasonably high lexical diversity (0.87 or 1.0). This outcome implies that, without retrieval, the generated text deviates significantly from the source content, even though it may appear stylistically diverse. In contrast, when RAG is introduced (“Our(w RAG)(1st-ToM)” or “Our(w RAG)(2nd-ToM)”), the cosine similarity rises markedly (up to 0.7955), indicating stronger alignment with the original text. However, this improvement is accompanied by a slight decrease in both lexical diversity and SNOWNLP scores, suggesting that retrieval imposes some constraints on free-form generation. Overall, these results underscore the importance of RAG for achieving higher semantic fidelity in commentary.

- Ablation Result Analysis. The RAG model with the retrieval component displays significant disparities from the model lacking it across various dimensions. In terms of lexical diversity, the model incorporating retrieval exhibits somewhat constrained diversity, suggesting a potential limitation imposed by the retrieval component. Sentiment analysis using the SnowNLP tool reveals that the model without retrieval yields more pronounced sentiment results, diverging notably from the original text. This deviation may arise from the model’s greater freedom in generating emotional expressions, albeit resulting in a less faithful imitation of the source text. Conversely, regarding text semantic similarity, the model integrating retrieval showcases a distinct advantage, effectively highlighting the crucial role of the retrieval component in maintaining semantic coherence and bolstering text relevance.
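
The size of the retrieval effect can be read directly off the cosine similarity column of Table 3. The short calculation below only restates those reported values; the dictionary layout and the helper name `rag_gain` are illustrative.

```python
# Cosine similarity values as reported in Table 3.
cosine_scores = {
    ("w/o RAG", "1st-ToM"): 0.0126,
    ("w/o RAG", "2nd-ToM"): 0.0380,
    ("w RAG", "1st-ToM"): 0.7519,
    ("w RAG", "2nd-ToM"): 0.7955,
}


def rag_gain(tom: str) -> float:
    """Increase in cosine similarity from adding RAG at a fixed ToM order."""
    with_rag = cosine_scores[("w RAG", tom)]
    without_rag = cosine_scores[("w/o RAG", tom)]
    return round(with_rag - without_rag, 4)


for tom in ("1st-ToM", "2nd-ToM"):
    print(tom, rag_gain(tom))

# Gain from moving to second-order ToM when RAG is already present.
tom_gain_with_rag = round(
    cosine_scores[("w RAG", "2nd-ToM")] - cosine_scores[("w RAG", "1st-ToM")], 4
)
print("2nd vs 1st ToM (w RAG):", tom_gain_with_rag)
```

Adding RAG raises cosine similarity by 0.7393 with first-order ToM and by 0.7575 with second-order ToM, whereas moving from first- to second-order ToM with RAG in place adds 0.0436, so retrieval accounts for most of the improvement on this metric.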

5. Conclusions

6. Code Availability

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Guandan Rules

Appendix A.1. Basic Rules

Appendix A.2. Additional Rules

Appendix B. Sample Commentary for Output

Appendix B.1. GPT-4

Appendix B.2. GPT-3.5

Appendix B.3. Qwen-32B

Appendix B.4. Yi-34B

Appendix B.5. GLM-4

Appendix B.6. Our-Part1

Appendix B.7. Our-Part2

References

1. Liao, J.W.; Chang, J.S. Computer Generation of Chinese Commentary on Othello Games. In Proceedings of the Rocling III Computational Linguistics Conference III, Taipei, Taiwan, 21–23 September 1990; pp. 393–415.

2. Sadikov, A.; Možina, M.; Guid, M.; Krivec, J.; Bratko, I. Automated chess tutor. In Proceedings of the Computers and Games: 5th International Conference, CG 2006, Turin, Italy, 29–31 May 2006; pp. 13–25.

3. Kameko, H.; Mori, S.; Tsuruoka, Y. Learning a game commentary generator with grounded move expressions. In Proceedings of the 2015 IEEE Conference on Computational Intelligence and Games (CIG), Tainan, Taiwan, 31 August–2 September 2015; pp. 177–184.

4. Guo, J.; Yang, B.; Yoo, P.; Lin, B.Y.; Iwasawa, Y.; Matsuo, Y. Suspicion-Agent: Playing Imperfect Information Games with Theory of Mind Aware GPT-4. arXiv 2023, arXiv:2309.17277.

5. Kim, B.J.; Choi, Y.S. Automatic baseball commentary generation using deep learning. In Proceedings of the 35th Annual ACM Symposium on Applied Computing, Brno, Czech Republic, 30 March–3 April 2020; pp. 1056–1065.

6. Taniguchi, Y.; Feng, Y.; Takamura, H.; Okumura, M. Generating live soccer-match commentary from play data. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

7. Zhao, Y.; Lu, Y.; Zhao, J.; Zhou, W.; Li, H. DanZero+: Dominating the GuanDan Game through Reinforcement Learning. arXiv 2023, arXiv:2312.02561.

8. Kosinski, M. Evaluating Large Language Models in Theory of Mind Tasks. arXiv 2023, arXiv:2302.02083.

9. Puduppully, R.; Lapata, M. Data-to-text generation with macro planning. Trans. Assoc. Comput. Linguist. 2021, 9, 510–527.

10. Gardent, C.; Shimorina, A.; Narayan, S.; Perez-Beltrachini, L. Creating training corpora for NLG micro-planning. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, ACL 2017. Association for Computational Linguistics (ACL), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 179–188.

11. Wang, Z.; Yoshinaga, N. Esports Data-to-commentary Generation on Large-scale Data-to-text Dataset. arXiv 2022, arXiv:2212.10935.

12. Wei, J.; Bosma, M.; Zhao, V.Y.; Guu, K.; Yu, A.W.; Lester, B.; Du, N.; Dai, A.M.; Le, Q.V. Finetuned language models are zero-shot learners. arXiv 2021, arXiv:2109.01652.

13. Ishigaki, T.; Topić, G.; Hamazono, Y.; Noji, H.; Kobayashi, I.; Miyao, Y.; Takamura, H. Generating racing game commentary from vision, language, and structured data. In Proceedings of the 14th International Conference on Natural Language Generation, Scotland, UK, 20–24 September 2021; pp. 103–113.

14. Jhamtani, H.; Gangal, V.; Hovy, E.; Neubig, G.; Berg-Kirkpatrick, T. Learning to generate move-by-move commentary for chess games from large-scale social forum data. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 1661–1671.

15. Nimpattanavong, C.; Taveekitworachai, P.; Khan, I.; Nguyen, T.V.; Thawonmas, R.; Choensawat, W.; Sookhanaphibarn, K. Am I Fighting Well? Fighting Game Commentary Generation With ChatGPT. In Proceedings of the 13th International Conference on Advances in Information Technology, Bangkok, Thailand, 6–9 December 2023; pp. 1–7.

16. Shahul, E.; James, J.; Anke, L.E.; Schockaert, S. RAGAS: Automated Evaluation of Retrieval Augmented Generation. arXiv 2023, arXiv:2309.15217.

17. Siriwardhana, S.; Weerasekera, R.; Wen, E.; Kaluarachchi, T.; Rana, R.; Nanayakkara, S. Improving the Domain Adaptation of Retrieval Augmented Generation (RAG) Models for Open Domain Question Answering. Trans. Assoc. Comput. Linguist. 2022, 11, 1–17.

18. Chen, W.; Hu, H.; Chen, X.; Verga, P.; Cohen, W.W. MuRAG: Multimodal Retrieval-Augmented Generator for Open Question Answering over Images and Text. arXiv 2022, arXiv:2210.02928.

19. Melz, E. Enhancing LLM Intelligence with ARM-RAG: Auxiliary Rationale Memory for Retrieval Augmented Generation. arXiv 2023, arXiv:2311.04177.

20. Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Networks 1991, 4, 251–257.

21. Horn, R.A.; Johnson, C.R. Matrix Analysis; Cambridge University Press: Cambridge, UK, 1985.

22. Rudin, W. Principles of Mathematical Analysis, 3rd ed.; McGraw-Hill: New York, NY, USA, 1976.

23. Frith, C.; Frith, U. Theory of mind. Curr. Biol. 2005, 15, R644–R646.

24. Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

25. Bai, J.; Bai, S.; Chu, Y.; Cui, Z.; Dang, K.; Deng, X.; Fan, Y.; Ge, W.; Han, Y.; Huang, F.; et al. Qwen technical report. arXiv 2023, arXiv:2309.16609.

26. Young, A.; Chen, B.; Li, C.; Huang, C.; Zhang, G.; Zhang, G.; Li, H.; Zhu, J.; Chen, J.; Chang, J.; et al. Yi: Open Foundation Models by 01.AI. arXiv 2024, arXiv:2403.04652.

27. Zeng, A.; Liu, X.; Du, Z.; Wang, Z.; Lai, H.; Ding, M.; Yang, Z.; Xu, Y.; Zheng, W.; Xia, X.; et al. GLM-130B: An open bilingual pre-trained model. arXiv 2022, arXiv:2210.02414.

28. Porter, M.F. An algorithm for suffix stripping. Program 1980, 14, 130–137.

29. Aizawa, A. An information-theoretic perspective of tf–idf measures. Inf. Process. Manag. 2003, 39, 45–65.

30. Medhat, W.; Hassan, A.; Korashy, H. Sentiment analysis algorithms and applications: A survey. Ain Shams Eng. J. 2014, 5, 1093–1113.

31. Richards, B. Type/token ratios: What do they really tell us? J. Child Lang. 1987, 14, 201–209.

32. isnowfy. SnowNLP. 2013. Available online: https://github.com/isnowfy/snownlp (accessed on 28 March 2025).

33. Renella, N.; Eger, M. Towards automated video game commentary using generative AI. In Proceedings of the AIIDE Workshop on Experimental AI in Games, Salt Lake City, UT, USA, 8 October 2023.

34. Lin, C.Y.; Hovy, E. Manual and automatic evaluation of summaries. In Proceedings of the ACL-02 Workshop on Automatic Summarization. Association for Computational Linguistics, Philadelphia, PA, USA, 11–12 July 2002; pp. 8–13.

Table 1. Automatic evaluation results for each model.

| Model | Sentiment: Neg | Sentiment: Neu | Sentiment: Pos | Sentiment: Compound | Cosine Similarity | Lexical Diversity | SNOWNLP |
|---|---|---|---|---|---|---|---|
| GPT-3.5 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0032 | 0.09 | 0.0 |
| GPT-4 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0380 | 1.0 | 0.0 |
| Yi-34B | 0.0 | 1.0 | 0.0 | 0.0 | 0.0250 | 0.55 | 0.99 |
| GLM-4 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0050 | 0.86 | 0.98 |
| Our | 0.0 | 1.0 | 0.0 | 0.0 | 0.7955 | 0.95 | 0.0 |
| Original | 0.0 | 1.0 | 0.0 | 0.0 | - | 1.0 | 0.0 |

Table 2. Human evaluation results. KEI and Detail Accuracy measure match consistency; Naturalness, Information Organization, and Logical Coherence measure fluency.

| Model | KEI | Detail Accuracy | Naturalness | Information Organization | Logical Coherence |
|---|---|---|---|---|---|
| GPT-3.5 | 0.37 | 0.95 | 0.86 | 0.69 | 3.75 |
| GPT-4 | 0.46 | 0.91 | 0.90 | 0.80 | 4.46 |
| Yi-34B | 0.23 | 0.87 | 0.82 | 0.62 | 3.52 |
| GLM-4 | 0.32 | 0.83 | 0.84 | 0.58 | 2.72 |
| Our | 0.81 | 0.97 | 0.95 | 0.89 | 4.34 |

Table 3. Ablation results for RAG and ToM.

| Model | Sentiment: Neg | Sentiment: Neu | Sentiment: Pos | Sentiment: Compound | Cosine Similarity | Lexical Diversity | SNOWNLP |
|---|---|---|---|---|---|---|---|
| Our(w/o RAG)(Vanilla) | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.87 | 1.0 |
| Our(w/o RAG)(1st-ToM) | 0.0 | 0.0 | 1.0 | 0.0 | 0.0126 | 1.0 | 1.0 |
| Our(w/o RAG)(2nd-ToM) | 0.0 | 1.0 | 0.0 | 0.0 | 0.0380 | 1.0 | 1.0 |
| Our(w RAG)(1st-ToM) | 0.0 | 1.0 | 0.0 | 0.0 | 0.7519 | 0.92 | 0.0 |
| Our(w RAG)(2nd-ToM) | 0.0 | 1.0 | 0.0 | 0.0 | 0.7955 | 0.95 | 0.0 |
| Original | 0.0 | 1.0 | 0.0 | 0.0 | - | 1.0 | 0.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Su, J.; Tao, M.; Liang, X.; He, Y.; Tao, Y.; Zhang, M. Enhancing Commentary Strategies for Guandan: A Study of LLMs in Game Commentary Generation. Symmetry 2025, 17, 1274. https://doi.org/10.3390/sym17081274
