Abstract

In recent years, fake news and misinformation have proliferated rapidly, spreading faster than genuine news and posing a serious threat to social stability. Existing fake news detection research primarily applies contrastive learning with a one-hot labeling strategy. The problem lies not with contrastive learning itself but with how it is currently applied in fake news detection systems. Because these systems use one-hot labels, they penalize all negative samples equally and overlook the semantic relationships among negative samples. As a result, contrastive learning models tend to learn from easy samples while neglecting highly deceptive samples located at the boundary between true and false news. In addition, the heterogeneity of text and image features complicates cross-modal fusion. To mitigate these limitations, this paper proposes a fake news detection method based on contrastive learning and cross-modal interaction. First, a consistency-aware soft-label contrastive learning mechanism based on semantic similarity is designed to provide more fine-grained supervision signals for contrastive learning. Second, a difficult negative sample mining strategy based on a similarity matrix is designed to optimize the symmetric alignment of image and text features, which improves the model’s ability to discriminate boundary samples. Third, to further optimize feature fusion, a cross-modal interaction module is designed to learn the symmetric interaction relationship between image and text features. Finally, an attention mechanism adaptively adjusts the contributions of the text and image features and the interaction features to form the final multimodal feature representation.
Experiments on two datasets from major social media platforms show that, compared with existing methods, the proposed method effectively improves fake news detection performance.

1. Introduction

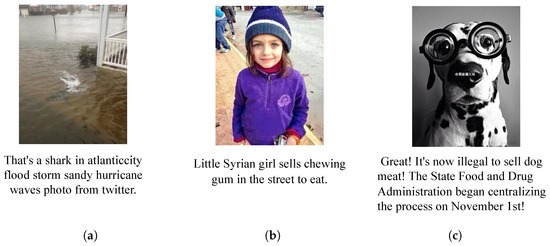

The widespread adoption of the internet and social media platforms has enabled people to access information more efficiently and disseminate it more rapidly [1,2]. In recent years, multimodal content (especially text–image combinations) has increasingly become the primary means of online information dissemination [3]. However, this trend has also created favorable conditions for the proliferation of fake news, whose spread has reached unprecedented levels of complexity and prevalence. Disseminators of false information combine carefully crafted text with misleading visual content, exploiting the apparent coherence of multimodal information to mask its underlying inconsistencies and thereby make the information more deceptive [4]. As shown in Figure 1, the text in (a) does not match the image content, and the image is easily identifiable as a forgery. In (b), the text describes the tragic living conditions of a little girl, while the image shows the girl smiling. In (c), the text and image share a similar subject but differ in details: the text states that “selling dog meat is illegal,” while the image shows a dog wearing large-framed glasses, a mismatch that further exposes the post as fake news. According to research from the Massachusetts Institute of Technology (MIT) [5], fake news spreads about six times faster than real news on social media and has a more far-reaching impact. This phenomenon not only reflects the severe challenges facing the governance of the current online information ecosystem but also indicates that efficient and robust multimodal fake news detection is crucial for enhancing information credibility and ensuring online content security.

Figure 1.

Examples of fake news in Chinese and English social media datasets. (a) The text and image can be easily identified as fake. (b) The text conveys a tragic mood while the little girl in the image is smiling. (c) The text states that “selling dog meat is illegal,” while the image shows a dog wearing large-framed glasses.

Early research on fake news detection primarily relied on text analysis, utilizing traditional machine learning algorithms such as logistic regression and random forests. With the advancement of deep learning technology, researchers began incorporating recurrent neural networks (RNNs) and Transformer architectures to capture temporal features and long-range semantic dependencies in text [6]. Given that content on social media platforms exhibits typical cross-modal characteristics, inconsistencies or subtle misdirection between text and images have become common deceptive tactics in fake news. Researchers have thus begun exploring multimodal fake news detection methods, which integrate image and text information, significantly improving the efficiency and accuracy of fake news detection [7].

In recent years, multimodal pre-training methods based on contrastive learning have achieved significant results in multiple research fields, suggesting that contrastive learning may become an important paradigm for multimodal representation learning [8,9,10,11,12,13,14]. Contrastive learning optimizes feature alignment between images and text by symmetrically pulling positive sample pairs closer together and pushing negative sample pairs further apart, and it has been shown to effectively improve the understanding of visual and textual semantics. However, the one-hot labeling strategy adopted in existing contrastive learning frameworks penalizes all negative predictions indiscriminately, ignoring semantic differences between samples [12]. Additionally, existing methods may cause contrastive learning models to focus on distinguishing relatively easy samples while neglecting samples with high semantic similarity that are hard to discriminate. This learning bias weakens discrimination in boundary regions, making it difficult to identify samples whose individual modal features are normal but whose cross-modal relationships are abnormal. Furthermore, text and image information reside in heterogeneous feature spaces, and how to effectively fuse these features and capture their interrelationships remains an open challenge [15]. Simple concatenation or shallow fusion methods struggle to establish deep semantic connections between modalities, resulting in a significant loss of complementary information and ultimately degrading detection performance.

Considering the above factors, this paper proposes a fake news detection method based on contrastive learning and cross-modal interaction. A dual-encoder structure extracts semantic feature representations of text and images separately and establishes a correspondence between them. A contrastive learning objective is then used to enforce cross-modal alignment between the text and image modalities. As noted above, existing contrastive learning methods for fake news detection primarily employ a one-hot labeling strategy, which fails to capture fine-grained semantic associations between modalities. To address this issue and enhance the model’s discriminative capability, this paper designs a consistency-aware soft label contrastive learning mechanism. This mechanism dynamically generates soft labels based on the actual semantic similarity of modal features and adjusts them with an inconsistency detector, thereby providing the model with more refined supervisory signals.

In addition, this paper designs a difficult negative sample mining strategy based on a similarity matrix. The strategy uses the similarity matrix to select the most challenging negative sample pairs, which improves the symmetric alignment of image and text features, and combines them with a triplet loss to enhance the model’s discriminative ability for boundary samples.

To effectively fuse cross-modal information, this paper designs a cross-modal interaction module that enables deep semantic interaction between text and image features. It uses a multi-head attention mechanism to model the modal features symmetrically and generates correlation features that represent complex relationships between modalities. Finally, a cross-modal fusion module uses an enhanced channel attention network [16] to dynamically adjust the weights of the different features and form the final discriminative features. Together, these components yield more accurate modality alignment and more discriminative cross-modal features, and integrating them improves the performance of multimodal fake news detection.

In summary, the main contributions of this paper are as follows:

- A consistency-aware soft label contrastive learning mechanism is designed, which dynamically generates and adaptively adjusts soft labels based on the actual semantic similarity of modal features, thereby alleviating the issue of insufficient capture of fine-grained semantic associations.

- A similarity-matrix-based difficult negative sample mining strategy is proposed, which actively selects the most challenging negative sample pairs and combines them with a triplet loss to enhance the model’s discriminative ability for boundary samples.

- A feature fusion framework combining cross-modal interaction modules and channel attention mechanisms is constructed. Multi-head attention is used to achieve deep text–image semantic interaction, and channel attention networks are employed to dynamically adjust the weights of different modal features, thereby generating more discriminative fusion representations.

2. Related Work

This section provides an overview of the most relevant prior work in fake news detection and contrastive learning. We first summarize mainstream approaches for detecting fake news, followed by a discussion of recent advances in contrastive learning for multimodal misinformation detection.

2.1. Fake News Detection

Fake news detection has emerged in recent years as a significant research direction in artificial intelligence and natural language processing, attracting widespread attention from the academic community [17]. With the rapid advancement of internet technology, social media platforms have provided convenient channels for disseminating false information, expanding its reach and causing severe social harm. Early fake news detection methods primarily relied on manually designed rule-based features and traditional machine learning algorithms, analyzing the content features, writing style, and emotional tone of news texts to assess their authenticity. Classic works include the feature engineering method based on word frequency statistics proposed by Rubin et al. [18], as well as supervised learning frameworks using classifiers such as naive Bayes and support vector machines. With breakthroughs in deep learning, an increasing number of researchers have adopted end-to-end models such as convolutional and recurrent neural networks to automatically capture the deep semantic features of text. In particular, pre-trained language models such as BERT [19] obtain general semantic representations through large-scale unsupervised pre-training, significantly improving detection performance on the text modality.

With the increasing richness of information types on social media platforms, the field of fake news detection has gradually evolved from single-modal analysis to multimodal fusion research. Multimodal fusion not only utilizes heterogeneous information such as text and images but also improves the accuracy and robustness of fake news detection by modeling the complementary and synergistic relationships between different modalities. To learn cross-modal features, the EANN [20] model innovatively introduces an event discriminator, utilizing an adversarial training mechanism to help the feature extractor remove information related to specific events. MVAE [21] learns a joint latent variable space through a multimodal variational autoencoder, achieving semantic-level reconstruction and fusion of the original features. MCAN [22] designs a multi-layer collaborative attention mechanism, stacking multiple collaborative attention layers to capture cross-modal fine-grained interaction features, thereby achieving more effective information fusion. The CAFE [23] framework measures ambiguity using Kullback–Leibler divergence and dynamically adjusts the weights of single-modal and multimodal features, achieving more flexible information integration. The LIIMR [24] method can automatically identify modality information that is more discriminative in fake news detection, dynamically adjusting the contribution of each modality to the final decision, thereby enhancing the model’s overall performance and interpretability. IFIS [25] combines intra-modal feature aggregation with inter-modal semantic fusion, further enhancing the model’s understanding and discrimination capabilities for complex multimodal information. 
In this paper, a pre-trained CLIP model with frozen parameters is used for feature extraction and semantic alignment of image and text information, and a cross-modal interaction module is designed to generate multimodal correlation features that provide the model with additional semantic information.

2.2. Contrastive Learning

In recent years, with the rapid development of large-scale multimodal pre-trained models, cross-modal alignment and contrastive learning have become important research directions in multimodal fusion [26]. Contrastive learning, a self-supervised learning framework, constructs positive and negative sample pairs and optimizes their similarity distribution in the feature space, enabling the model to learn discriminative representations. In the image–text setting, it aligns image and text features by symmetrically pulling positive pairs closer and pushing negative pairs further apart. This framework has achieved significant results in computer vision and natural language processing, particularly in cross-modal understanding tasks, and provides a new technical approach for multimodal fake news detection. The CLIP model proposed by Radford et al. [27] uses contrastive pre-training on 400 million image–text pairs to learn a well-aligned cross-modal semantic space, laying an important foundation for subsequent research. The ALIGN model developed by Jia et al. [28] further demonstrates that contrastive learning remains effective on large-scale noisy data (over one billion image–alt-text pairs). These breakthroughs have driven the development of contrastive learning in the multimodal domain.

In the field of fake news detection, a variety of approaches have adapted and extended contrastive learning to improve model performance. For example, Zhou et al. [29] proposed the FND-CLIP framework, which combines a pre-trained CLIP model with an attention mechanism and reports improved results on benchmark datasets such as Politifact and GossipCop. Kananian et al. [30] introduced the GRaMuFeN model, which leverages graph neural networks and a contrastive similarity loss to model global multimodal dependencies by constructing a shared space for text graphs and image representations. Wu et al. [31] developed the MFIR model, which uses cross-modal fusion mechanisms and multimodal inconsistency learning modules to explore semantic contradictions between text and images. Yan et al. [11] presented the SARD model, which integrates heterogeneous information networks with an enhanced contrastive learning strategy to improve semantic consistency discrimination on complex datasets such as Fakeddit and MM-COVID. Shen et al. [10] described the MCOT framework, which incorporates cross-modal attention mechanisms, contrastive learning, and optimal transport theory to facilitate feature interaction and embedding alignment. Zhou et al. [8] proposed the Clip-GCN model, which combines CLIP representations with graph convolutional networks to improve adaptability to new fake news patterns. Liu et al. [9] introduced mixture-of-experts networks and inter-modal contrastive objectives in the MIMoE-FND model to reduce modality redundancy and conflicts.

However, existing approaches generally overlook the semantic relationships between negative samples in contrastive learning settings, often treating all negative pairs equally and failing to fully exploit fine-grained semantic information. This paper seeks to address this gap by introducing a soft label contrastive learning mechanism and a difficult negative sample mining strategy, which together encourage the model to focus on samples with inconsistent text and images and enhance its ability to detect challenging boundary cases.

3. Methodology

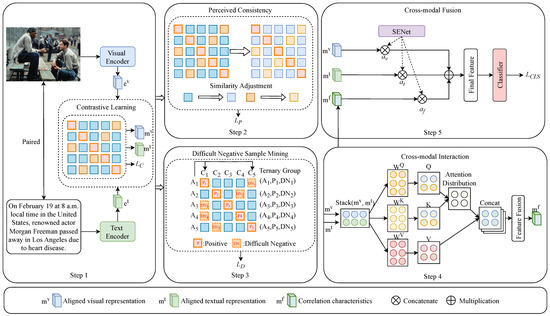

This paper proposes a multimodal fake news detection method that integrates contrastive learning and cross-modal interaction. The model consists of five key stages: feature encoding and alignment, consistency-aware soft label contrastive learning, hard negative mining, semantic interaction across modalities, and final fusion-based classification. The overall architecture is illustrated in Figure 2, with detailed step-by-step descriptions provided below.

Figure 2.

Overview of the proposed model architecture. The numbered components represent the key steps of the multimodal fake news detection pipeline. These steps are explained in detail immediately below the figure.

The architecture in Figure 2 consists of the following key components:

Step 1: Multimodal Feature Representation. Text and image inputs are independently encoded using a pre-trained CLIP model. The resulting modality-specific embeddings are then projected into a shared semantic space via learnable projection layers, enabling effective cross-modal alignment.

Step 2: Consistency-Aware Soft Label Contrastive Learning. A consistency-aware soft label contrastive learning mechanism is introduced. Soft labels are generated from the semantic similarity between text and image and dynamically adjusted by an inconsistency detector to provide fine-grained supervisory signals across modalities.

Step 3: Difficult Negative Sample Mining. A similarity matrix is constructed to identify hard negative samples. A triplet loss is employed to emphasize these challenging cases and improve the model’s ability to discriminate boundary or ambiguous examples.

Step 4: Cross-Modal Interaction. A multi-head attention mechanism is applied to the text and image embeddings, capturing semantic correlations and generating joint representations that reflect complex cross-modal relationships.

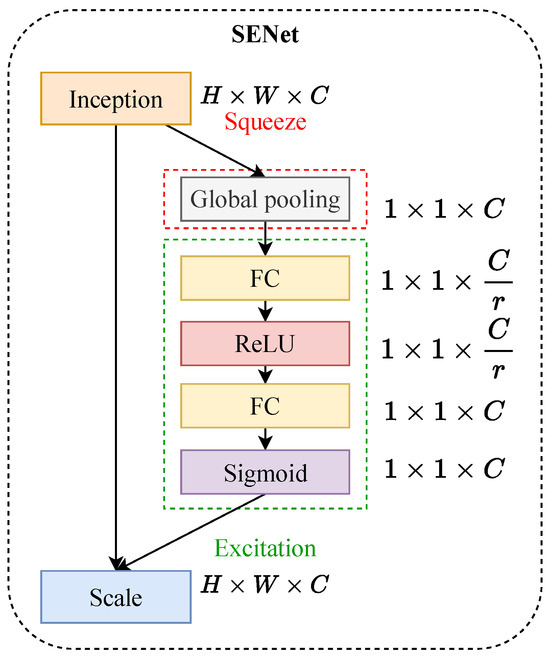

Step 5: Fusion and Classification. The original and interacted features are fused using a squeeze-and-excitation channel attention module (SENet) [16], which adaptively weighs the importance of each modality. The final fused feature is then passed through fully connected layers to produce the prediction output.

3.1. Modality Encoder

This paper employs a multimodal feature encoding framework based on the CLIP model to efficiently extract and semantically align textual and visual features. The benefits of CLIP’s pre-training on large-scale cross-modal data are retained, and a dedicated feature transformation layer maps the original features to a low-dimensional task space, which improves feature stability and model generalization. The framework remains computationally efficient while providing semantically consistent feature representations for the downstream multimodal fake news detection task.

3.1.1. Text Encoder

For text encoding, the input text is first tokenized with standard tokenization into a token sequence that meets the model’s input requirements, and the token sequence is fed into the CLIP text encoder. To optimize the feature representation, the encoded text features are transformed by a multi-layer perceptron (MLP) projection network followed by layer normalization, yielding text representations in a unified semantic space. This encoder captures textual semantic and contextual information, providing high-quality text features for multimodal tasks.

3.1.2. Image Encoder

For image encoding, the input images first undergo standard preprocessing, including resizing to 224 × 224 pixels and per-channel normalization. The preprocessed images are then fed into CLIP’s image encoder, which is based on the Vision Transformer (ViT) architecture [32] and effectively extracts high-level semantic features from images. As in the text pipeline, the image features are transformed by a dedicated projection network and the same normalization, yielding image representations aligned with the text features. These aligned image features lay the foundation for multimodal information fusion and the subsequent discrimination task.
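The projection step shared by the text and image encoders can be sketched as follows. This is a minimal NumPy sketch under stated assumptions: random arrays stand in for frozen CLIP features, 512 is the embedding width of CLIP ViT-B/32, and the hidden and output sizes, the ReLU activation, the initialization, and the helper names (`projection_head`, `layer_norm`, `init_linear`) are hypothetical rather than taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

def layer_norm(x, eps=1e-5):
    # Normalize each row to zero mean and unit variance (learned scale/shift omitted).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def init_linear(d_in, d_out):
    # Hypothetical initialization: scaled Gaussian weights, zero bias.
    return rng.normal(scale=d_in ** -0.5, size=(d_in, d_out)), np.zeros(d_out)

def projection_head(feats, params):
    # Two-layer MLP (Linear -> ReLU -> Linear) followed by LayerNorm.
    (w1, b1), (w2, b2) = params
    hidden = np.maximum(feats @ w1 + b1, 0.0)
    return layer_norm(hidden @ w2 + b2)

clip_dim, hid, out = 512, 256, 128               # 512 = CLIP ViT-B/32 width; others hypothetical
text_feats = rng.normal(size=(4, clip_dim))      # stand-in for frozen CLIP text features
image_feats = rng.normal(size=(4, clip_dim))     # stand-in for frozen CLIP image features

# One head per modality; both map into the same 128-dimensional space.
text_head = (init_linear(clip_dim, hid), init_linear(hid, out))
image_head = (init_linear(clip_dim, hid), init_linear(hid, out))
text_emb = projection_head(text_feats, text_head)
image_emb = projection_head(image_feats, image_head)
print(text_emb.shape, image_emb.shape)  # (4, 128) (4, 128)
```

Keeping separate heads per modality while sharing the output width is what lets the later similarity computations compare text and image embeddings directly.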

3.2. Consistency-Aware Soft Label Contrastive Learning Mechanism

Contrastive learning has proven effective in learning discriminative, semantically aligned representations across modalities, which is critical for multimodal fake news detection. However, the conventional one-hot labeling strategy used in contrastive learning fails to capture fine-grained semantic relationships between modalities and treats all negative samples equally. To address these limitations, we introduce a consistency-aware soft label contrastive learning mechanism. This mechanism dynamically generates soft labels based on semantic similarity and adapts the training objective according to the predicted cross-modal consistency, enabling the model to focus on more challenging sample pairs and providing finer-grained supervision for multimodal alignment.

First, a pre-trained CLIP model with frozen parameters extracts feature representations from the text and the images. A projection layer then maps them into a unified low-dimensional semantic space, yielding text and image features of the same dimension. Contrastive learning is then applied for cross-modal alignment. Assuming a training batch contains N text–image sample pairs, the image-to-text and text-to-image similarities for each sample pair are calculated as follows:
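A standard way to write these batch-level similarities, following the softmax-normalized form used in CLIP-style image–text contrastive learning, is shown below. The notation is assumed for illustration: I and T_m denote an image and the m-th text in the batch (and symmetrically T and I_m), s(·,·) is the dot-product similarity, and τ is the temperature.

$$
p_m^{\mathrm{i2t}}(I) = \frac{\exp\big(s(I, T_m)/\tau\big)}{\sum_{k=1}^{N} \exp\big(s(I, T_k)/\tau\big)},
\qquad
p_m^{\mathrm{t2i}}(T) = \frac{\exp\big(s(T, I_m)/\tau\big)}{\sum_{k=1}^{N} \exp\big(s(T, I_k)/\tau\big)}
$$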

The temperature hyperparameter plays a crucial role in contrastive learning: it scales the logits before the softmax and thereby controls the sharpness of the similarity distribution over positive and negative pairs. A smaller temperature makes the distribution more peaked, which forces the model to focus on distinguishing hard negatives, whereas a larger temperature yields a softer distribution that reduces the influence of challenging samples. We set the temperature to 0.07 in all experiments, consistent with widely adopted practice in seminal works such as CLIP [27], which initializes its learnable temperature to 0.07; this value is commonly regarded as a good balance between training stability and discriminative power.
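The effect of the temperature can be illustrated with a small NumPy sketch. The similarity values below are hypothetical (one positive, one hard negative, two easy negatives); the point is only to compare how a softmax distributes probability at a temperature of 1.0 versus 0.07.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the last axis.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

# Hypothetical similarities of one image to four texts:
# index 0 = positive, 1 = hard negative, 2 and 3 = easy negatives.
sims = np.array([0.80, 0.75, 0.20, 0.10])

for tau in (1.0, 0.07):
    probs = softmax(sims / tau)
    print(f"tau={tau}: {np.round(probs, 4)}")
```

At 0.07 the easy negatives receive almost no probability mass, so nearly all of the non-positive probability, and therefore the gradient pressure, falls on the hard negative.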

The similarity function computes similarity scores as dot products. The image-to-text and text-to-image label vectors are one-hot encoded, assigning 1 to the positive pair and 0 to negative pairs, and serve as targets for the cross-entropy calculation:

Combining the image-to-text and text-to-image cross-entropy terms yields the contrastive learning loss:
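A minimal NumPy sketch of the one-hot image–text contrastive loss is given below. It assumes L2-normalized embeddings, dot-product similarities, and an equal-weight average of the two directional cross-entropy terms, as in CLIP-style training; the function names are illustrative and this is not the paper’s implementation.

```python
import numpy as np

def log_softmax(logits):
    # Stable row-wise log-softmax.
    z = logits - logits.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def itc_loss(img_emb, txt_emb, tau=0.07):
    """One-hot image-text contrastive loss; row i of each input is a matched pair."""
    logits_i2t = img_emb @ txt_emb.T / tau     # dot-product similarities, rows = images
    logits_t2i = logits_i2t.T                  # rows = texts
    targets = np.eye(len(img_emb))             # one-hot labels: 1 for the positive only
    loss_i2t = -(targets * log_softmax(logits_i2t)).sum(axis=1).mean()
    loss_t2i = -(targets * log_softmax(logits_t2i)).sum(axis=1).mean()
    return 0.5 * (loss_i2t + loss_t2i)

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=1, keepdims=True)

rng = np.random.default_rng(0)
img = l2_normalize(rng.normal(size=(8, 16)))
txt = l2_normalize(img + 0.05 * rng.normal(size=img.shape))   # near-copies: pairs match
mismatched = np.roll(txt, 1, axis=0)                           # every pair broken
print(itc_loss(img, txt), itc_loss(img, mismatched))
```

Shifting the texts by one position breaks every pair, so the second loss should be much larger than the first. Note that every negative in a row is pushed down with the same zero target, which is exactly the behavior the soft label mechanism below is meant to refine.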

As discussed above, to overcome the limitations of the one-hot labeling strategy used in traditional contrastive learning for multimodal fake news detection, this paper proposes a consistency-aware soft label contrastive learning mechanism that strengthens the model’s ability to capture fine-grained semantic relationships in multimodal data. As shown in Algorithm 1, a feature enhancement module applies an adaptive transformation to each of the unimodal text and image embeddings obtained above, producing complementary feature representations:

Here, the enhancement functions are multi-layer perceptrons applied to the text and image embeddings, respectively, and serve to better align features from different modalities in a unified space. This equation is proposed in this work. To leverage both the “general representation” and the “enhanced features” of each unimodal embedding, the two are concatenated and fused, and the fused embeddings are used to construct a semantic similarity matrix that serves as the soft labels:

where the concatenation operator joins the two vectors, and the domain feature fusion weight controls how the general and enhanced parts are combined. This equation is proposed in this work.

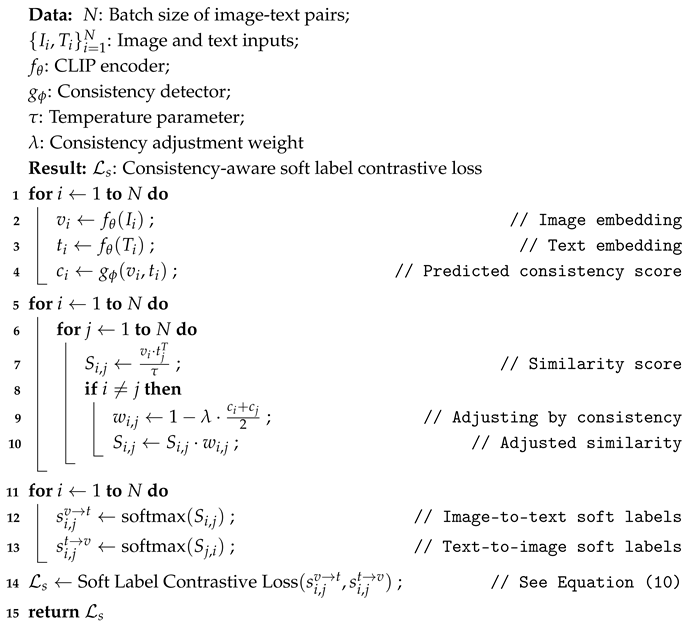

Algorithm 1: Consistency-Aware Soft Label Contrastive Learning Mechanism.

The fused features of each image–text pair are then used to model the degree of semantic matching between images and text via cosine similarity:
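The enhancement, fusion, and cosine-similarity steps above can be sketched in NumPy as follows. This sketch assumes that the domain feature fusion weight (`alpha`) scales the enhanced feature before concatenation and that each enhancement MLP has two layers with a ReLU in between; both choices, the dimensions, and the helper names (`enhance`, `fuse`, `cosine_matrix`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)

def enhance(x, w1, w2):
    # Hypothetical two-layer enhancement MLP (Linear -> ReLU -> Linear).
    return np.maximum(x @ w1, 0.0) @ w2

def fuse(emb, enhanced, alpha):
    # Concatenate the general embedding with the alpha-weighted enhanced feature.
    # Where alpha enters the fusion is an assumption of this sketch.
    return np.concatenate([emb, alpha * enhanced], axis=1)

def cosine_matrix(a, b):
    # S[i, j] = cosine similarity between row i of a and row j of b.
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

N, d = 4, 8
img_emb, txt_emb = rng.normal(size=(N, d)), rng.normal(size=(N, d))
img_mlp = (rng.normal(size=(d, d)), rng.normal(size=(d, d)))
txt_mlp = (rng.normal(size=(d, d)), rng.normal(size=(d, d)))
alpha = 0.5   # hypothetical domain feature fusion weight

img_fused = fuse(img_emb, enhance(img_emb, *img_mlp), alpha)   # (N, 2d)
txt_fused = fuse(txt_emb, enhance(txt_emb, *txt_mlp), alpha)   # (N, 2d)
S = cosine_matrix(img_fused, txt_fused)                       # (N, N), values in [-1, 1]
print(np.round(S, 3))
```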

Additionally, an inconsistency detector is introduced into the soft label generation process to further strengthen the model’s ability to capture inconsistencies between modalities. The detector outputs inconsistency scores from the fused text and image features, and these scores are used as weights to dynamically adjust the similarity matrix, so that sample pairs with higher inconsistency receive higher priority in the loss calculation. An inconsistency-weighted matrix is constructed from the vector of inconsistency scores:

where the inconsistency weight parameter controls the importance of inconsistent samples, and the two inconsistency prediction scores are those of the two samples that form the image–text pair. This equation is proposed in this work.

As a result, the model places greater emphasis on inconsistent sample pairs during training, which enhances its ability to distinguish inconsistent text–image combinations. Taking the image-to-text direction as an example, the similarity for each pair is calculated as follows:

where the temperature is initialized to 0.07. The text-to-image similarity is calculated analogously.
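The inconsistency-weighted soft labels can be sketched as follows. The paper’s weighting equation is its own contribution, so the form used here, W[i, j] = 1 + beta * (s_i + s_j) / 2 multiplied elementwise into the cosine similarity matrix, is a hypothetical stand-in chosen only so that pairs involving samples with higher inconsistency scores receive larger weights; the detector scores and the similarity matrix are made up.

```python
import numpy as np

def softmax_rows(x):
    # Stable row-wise softmax.
    z = x - x.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def inconsistency_weights(scores, beta):
    # Hypothetical form: W[i, j] = 1 + beta * (scores[i] + scores[j]) / 2,
    # so pairs involving samples flagged as inconsistent get larger weights.
    return 1.0 + beta * (scores[:, None] + scores[None, :]) / 2.0

def soft_labels(S, scores, beta=1.0, tau=0.07):
    """S[i, j]: cosine similarity of fused image i and fused text j."""
    weighted = inconsistency_weights(scores, beta) * S   # elementwise reweighting
    q_i2t = softmax_rows(weighted / tau)                 # rows: images, columns: texts
    q_t2i = softmax_rows(weighted.T / tau)               # rows: texts, columns: images
    return q_i2t, q_t2i

S = np.array([[0.9, 0.3, 0.1],
              [0.2, 0.8, 0.4],
              [0.1, 0.5, 0.7]])
scores = np.array([0.1, 0.9, 0.2])   # made-up detector outputs in [0, 1]
q_i2t, q_t2i = soft_labels(S, scores)
print(np.round(q_i2t, 3))
```

With `beta=0.0` the weights are all 1 and the soft labels reduce to a plain temperature softmax over the cosine similarities, which is a useful baseline when tuning the weighting.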

Using these similarity distributions as soft labels, the soft label loss is defined as the cross-entropy between the model’s predicted distribution and the soft label distribution:

Finally, the total loss of cross-modal contrastive learning is defined as:

Here, a weighting coefficient controls the contribution of the soft label mechanism. With this consistency-aware soft label contrastive learning mechanism, the model pays more attention to sample pairs with high inconsistency and receives more fine-grained semantic supervision.
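The soft label loss and the combined contrastive objective can be sketched as below. This assumes the total is the one-hot loss plus `lam` times the soft label loss, with each loss averaging its image-to-text and text-to-image terms; `lam = 0.5`, the uniform placeholder soft labels, and the function names are hypothetical. Writing both losses with one soft cross-entropy helper makes explicit that one-hot labels are simply the special case where the target matrix is the identity.

```python
import numpy as np

def log_softmax_rows(x):
    # Stable row-wise log-softmax.
    z = x - x.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def soft_ce(logits, targets):
    # Cross-entropy between each target row (a distribution) and the predicted row.
    return -(targets * log_softmax_rows(logits)).sum(axis=1).mean()

def contrastive_objective(img_emb, txt_emb, q_i2t, q_t2i, lam=0.5, tau=0.07):
    """Returns (one-hot loss, soft-label loss, hypothetical total = one-hot + lam * soft)."""
    logits = img_emb @ txt_emb.T / tau
    eye = np.eye(len(img_emb))                                          # one-hot targets
    l_itc = 0.5 * (soft_ce(logits, eye) + soft_ce(logits.T, eye))
    l_soft = 0.5 * (soft_ce(logits, q_i2t) + soft_ce(logits.T, q_t2i))
    return l_itc, l_soft, l_itc + lam * l_soft

rng = np.random.default_rng(2)
img, txt = rng.normal(size=(4, 8)), rng.normal(size=(4, 8))
q = np.full((4, 4), 0.25)       # placeholder soft labels (uniform rows)
l_itc, l_soft, total = contrastive_objective(img, txt, q, q)
print(l_itc, l_soft, total)
```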

3.3. Difficult Negative Sample Mining

To optimize the symmetric alignment of image and text features and improve the model’s ability to recognize boundary samples, this paper designs a dynamic difficult negative sample mining mechanism. First, the similarity matrix between images and text is calculated from the unimodal image and text embeddings. To exclude the positive samples on the diagonal so that they are never mistaken for the hardest negatives in the subsequent selection step, a mask matrix M is constructed and only the similarities of negative samples are retained:

Here, M is the mask matrix whose diagonal entries are 0 (positive samples) and whose other entries are 1 (negative samples). The resulting matrix retains only the similarities of negative samples; positive-sample positions are set to the minimum value so that they cannot be selected as negatives. This equation is proposed in this work.

For each sample i, the non-matching sample with the highest similarity in the corresponding row is selected as the hard negative, that is, the negative sample most similar to the anchor. Using the image and the text of each sample as anchors, two triplets (anchor, positive, hard negative) are constructed and the triplet loss is calculated. The loss with the image as the anchor is:

Similarly, the loss with the text as the anchor is:

where the margin hyperparameter enforces a minimum gap between the similarities of positive and negative pairs, which sharpens the discrimination boundary. This equation is proposed in this work.
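In standard hardest-negative triplet notation, the two anchor losses and their average can be written as follows. The symbols are assumed for illustration: S_{ij} is the similarity between image i and text j, m is the margin, and the hardest negatives are taken from the masked matrix; averaging over all 2N triplets is one reading of “normalized and averaged.”

$$
\mathcal{L}^{\mathrm{img}}_i = \max\big(0,\; m - S_{ii} + S_{i\hat{j}}\big), \qquad \hat{j} = \arg\max_{j \neq i} S_{ij}
$$

$$
\mathcal{L}^{\mathrm{txt}}_i = \max\big(0,\; m - S_{ii} + S_{\hat{k}i}\big), \qquad \hat{k} = \arg\max_{k \neq i} S_{ki}
$$

$$
\mathcal{L}_{\mathrm{hard}} = \frac{1}{2N} \sum_{i=1}^{N} \big(\mathcal{L}^{\mathrm{img}}_i + \mathcal{L}^{\mathrm{txt}}_i\big)
$$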

Finally, the triplet losses of all samples are normalized and averaged to obtain the overall loss of difficult negative samples:
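A minimal NumPy sketch of the mining step and the two-directional triplet loss follows. It assumes dot-product similarities, uses negative infinity as the “minimum value” placed on the diagonal, and uses a hypothetical margin of 0.2; the function name is illustrative.

```python
import numpy as np

def hard_negative_triplet_loss(img_emb, txt_emb, margin=0.2):
    """Hardest in-batch negative triplet loss, with image and text anchors.

    S[i, j] is the similarity of image i and text j; positives lie on the diagonal.
    """
    S = img_emb @ txt_emb.T
    n = S.shape[0]
    pos = np.diag(S)
    mask = ~np.eye(n, dtype=bool)             # True (1) for negatives, False (0) for positives
    S_neg = np.where(mask, S, -np.inf)        # positives set to the minimum value
    hard_txt = S_neg.max(axis=1)              # hardest text for each image anchor
    hard_img = S_neg.max(axis=0)              # hardest image for each text anchor
    loss_img_anchor = np.maximum(0.0, margin - pos + hard_txt)
    loss_txt_anchor = np.maximum(0.0, margin - pos + hard_img)
    # Average over all 2n triplets.
    return (loss_img_anchor.sum() + loss_txt_anchor.sum()) / (2 * n)

img = np.eye(3)
txt = np.array([[1.0, 0.0, 0.0],
                [1.0, 0.0, 0.0],    # text 1 duplicates text 0 and misses image 1
                [0.0, 0.0, 1.0]])
print(hard_negative_triplet_loss(img, txt))   # ≈ 0.267 (= 1.6 / 6)
```

Because text 1 duplicates text 0, image 0 finds a hard negative as similar as its positive, and text 1 finds image 0 more similar than its own image, so both anchor directions incur margin violations. Perfectly matched orthogonal pairs (for example, `np.eye(3)` for both inputs) give a loss of zero.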

3.4. Cross-Modal Interaction

Given the highly correlated nature of text and image semantics in multimodal fake news detection, this paper proposes a cross-modal interaction mechanism to effectively capture cross-modal collaborative features. Specifically, pre-trained models with frozen parameters extract the text and image feature representations and project them into a unified space, yielding a unimodal text embedding and a unimodal image embedding for each sample. To unify the input format, the text and image features are stacked into a two-dimensional tensor that serves as the sequence input X. The modal features are then modeled symmetrically with a multi-head attention mechanism, which attends to information in multiple representation subspaces in parallel. Specifically, the input X is first mapped to query (Q), key (K), and value (V) matrices, and the output of each attention head is:

The outputs of multiple attention heads are concatenated and then undergo linear projection to obtain the final interaction representation:
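Assuming the standard scaled dot-product multi-head attention of the Transformer, the per-head outputs and their concatenation can be written as follows, where X stacks the text and image embeddings as two rows, W_i^Q, W_i^K, W_i^V, and W^O are learned projections, and d_k is the per-head dimension (this notation is illustrative):

$$
\mathrm{head}_i = \mathrm{softmax}\!\left(\frac{(X W_i^{Q})(X W_i^{K})^{\top}}{\sqrt{d_k}}\right) X W_i^{V}, \qquad i = 1, \dots, h
$$

$$
\mathrm{MultiHead}(X) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}
$$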

Here, h is the number of attention heads, and the output weight matrix linearly projects the concatenated heads. The result represents the fused text–image context features.

To further enhance the fusion of intermodal representations, the interacted text and image features are concatenated and passed through a lightweight feedforward network for nonlinear compression and feature mapping. After two linear transformations with normalization, the final cross-modal correlation representation is obtained:

The two linear transformation matrices map the concatenated features through a hidden layer of dimension h, forming a lightweight feedforward network; Dropout is applied in this process to mitigate overfitting. This equation is proposed in this work.
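The interaction module can be sketched end to end in NumPy: the text and image embeddings form a two-token sequence, standard multi-head self-attention mixes them, and a small feedforward network compresses the flattened output. The layer order inside the feedforward network (Linear, LayerNorm, ReLU, Linear), all sizes, and the helper names are assumptions, and Dropout is omitted because the sketch only runs a forward pass at inference time.

```python
import numpy as np

rng = np.random.default_rng(3)

def softmax(x):
    z = x - x.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-5):
    mu, var = x.mean(axis=-1, keepdims=True), x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def multi_head_attention(X, Wq, Wk, Wv, Wo, h):
    """Standard multi-head self-attention; X has shape (B, 2, d): token 0 = text, 1 = image."""
    B, L, d = X.shape
    dk = d // h
    def split(t):                                   # (B, L, d) -> (B, h, L, dk)
        return t.reshape(B, L, h, dk).transpose(0, 2, 1, 3)
    Q, K, V = split(X @ Wq), split(X @ Wk), split(X @ Wv)
    attn = softmax(Q @ K.transpose(0, 1, 3, 2) / np.sqrt(dk))   # (B, h, L, L)
    heads = attn @ V                                             # (B, h, L, dk)
    concat = heads.transpose(0, 2, 1, 3).reshape(B, L, d)        # Concat(head_1..head_h)
    return concat @ Wo

def correlation_ffn(Z, W1, W2):
    """Concatenate the two interacted tokens, then Linear -> LayerNorm -> ReLU -> Linear."""
    flat = Z.reshape(Z.shape[0], -1)                 # (B, 2d)
    return np.maximum(layer_norm(flat @ W1), 0.0) @ W2

B, d, h, hidden, out = 4, 16, 4, 32, 16             # hypothetical sizes
text_emb, image_emb = rng.normal(size=(B, d)), rng.normal(size=(B, d))
X = np.stack([text_emb, image_emb], axis=1)         # (B, 2, d) sequence input
Wq, Wk, Wv, Wo = (rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(4))
W1 = rng.normal(scale=(2 * d) ** -0.5, size=(2 * d, hidden))
W2 = rng.normal(scale=hidden ** -0.5, size=(hidden, out))

Z = multi_head_attention(X, Wq, Wk, Wv, Wo, h)      # interacted features, (B, 2, d)
corr = correlation_ffn(Z, W1, W2)                   # cross-modal correlation, (B, out)
print(Z.shape, corr.shape)   # (4, 2, 16) (4, 16)
```

If both tokens are identical, every attention weight is 0.5 and each output token reduces to the value projection followed by the output projection, which is a quick sanity check on the implementation.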

3.5. Cross-Modal Fusion

Given that different modalities contribute differently to the final classification, this paper introduces an attention mechanism that weights the features of each modality to achieve more discriminative feature fusion. The input to this fusion module combines two types of embedded features: the visual and text embeddings extracted by pre-trained models with frozen parameters and projected into a unified space, and the semantic correlation features extracted by the cross-modal interaction module.

As shown in Figure 3, and inspired by the squeeze-and-excitation network [16], this paper designs an attention-weighting module that adaptively models intermodal relationships and assigns appropriate weights to different features. Specifically, the three feature vectors (text, image, and cross-modal correlation), each of length L, are first concatenated into a joint feature matrix. A global average pooling operation then compresses each modality channel into a scalar, producing a global context vector. To capture intermodal dependencies, attention weights are computed through a gating mechanism with a sigmoid activation function. Finally, a scaling function reweights the original features; the resulting attention vector represents the relative importance of each modal feature in the fusion process and guides subsequent feature fusion and decision making.
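A squeeze-and-excitation style weighting over the three feature vectors might look like the following NumPy sketch. Treating each feature type as one channel follows the description above; the bottleneck width `r`, the ReLU in the gating branch (as in the original SE block), the random weights, and the function names are assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def se_modal_weighting(text_f, image_f, corr_f, W1, W2):
    """Squeeze-and-excitation style reweighting with one 'channel' per feature type.

    Each input has shape (B, L); the stacked matrix has shape (B, 3, L).
    """
    F = np.stack([text_f, image_f, corr_f], axis=1)   # (B, 3, L)
    z = F.mean(axis=2)                                 # squeeze: global average pooling -> (B, 3)
    s = sigmoid(np.maximum(z @ W1, 0.0) @ W2)          # excitation: FC -> ReLU -> FC -> sigmoid
    return F * s[:, :, None], s                        # scale: multiply each channel by its weight

rng = np.random.default_rng(4)
B, L, r = 2, 16, 2                  # r: hypothetical bottleneck width
feats = [rng.normal(size=(B, L)) for _ in range(3)]
W1, W2 = rng.normal(size=(3, r)), rng.normal(size=(r, 3))
weighted, s = se_modal_weighting(*feats, W1, W2)
print(s.shape, weighted.shape)      # (2, 3) (2, 3, 16)
```

Each row of `s` holds one weight in (0, 1) per feature type for one post, so the module can down-weight, for example, the image channel for a post whose image carries little evidence.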

Figure 3.

The architecture of SENet.
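The squeeze-and-excitation weighting described above can be sketched as follows, assuming the three modality features share length L and that the gate follows the original SENet design (fully connected, ReLU, fully connected, sigmoid). The function `se_modality_attention` and the weight shapes `W1` (r × 3) and `W2` (3 × r) are hypothetical choices for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def se_modality_attention(f_img, f_txt, f_corr, W1, W2):
    """SE-style weighting over three modality 'channels'.

    f_img, f_txt, f_corr: feature vectors of equal length L.
    W1: (r, 3) squeeze weights, W2: (3, r) excitation weights (hypothetical).
    """
    F = np.stack([f_img, f_txt, f_corr], axis=0)  # joint matrix, shape (3, L)
    z = F.mean(axis=1)                             # squeeze: global average pooling -> (3,)
    s = sigmoid(W2 @ np.maximum(W1 @ z, 0.0))      # excitation: FC -> ReLU -> FC -> sigmoid
    return s[:, None] * F, s                       # rescale each modality row by its weight


rng = np.random.default_rng(0)
feats = [rng.standard_normal(64) for _ in range(3)]
weighted, weights = se_modality_attention(*feats, rng.standard_normal((2, 3)),
                                          rng.standard_normal((3, 2)))
```

With a zero-initialized `W1` the gate outputs 0.5 for every modality, which is a convenient sanity check; training moves the weights apart so that more informative modalities receive larger scales.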

The classification output module then progressively reduces the dimensionality of the feature space through a series of linear transformations and nonlinear activation functions until the output layer maps it to the predetermined number of categories. Specifically, given the unimodal representations, the cross-modal correlations, and the attention weights, the final representation is computed as follows:

Here, the symbol ⊕ denotes concatenation. The fused representation is then fed into a fully connected network to predict the label:

The classifier consists of two fully connected layers combined with Dropout and Layer Normalization to improve the robustness and generalization of the model. It is trained with the cross-entropy loss:

where y is the true label. By using the cross-modal fusion module to assign appropriate attention scores to each modality, the model makes effective use of information from all modalities and thereby improves multimodal fake news detection.
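For concreteness, a sketch of the two-layer classifier head and the cross-entropy loss, assuming two output classes with label 0 for real and 1 for fake news (the label convention and the fused feature size of 192 are assumptions). LayerNorm and Dropout are omitted to keep the example short, and `classify` is a hypothetical name.

```python
import numpy as np


def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def classify(f, W1, b1, W2, b2):
    # Two fully connected layers with a ReLU in between; Dropout and
    # LayerNorm are omitted here for brevity.
    return np.maximum(f @ W1 + b1, 0.0) @ W2 + b2


def cross_entropy(logits, labels):
    """Mean cross-entropy; labels are integer class ids (0 = real, 1 = fake, assumed)."""
    probs = softmax(logits)
    picked = probs[np.arange(len(labels)), labels]  # probability of the true class
    return float(-np.log(picked + 1e-12).mean())


rng = np.random.default_rng(0)
fused = rng.standard_normal((4, 192))  # hypothetical fused feature size
logits = classify(fused, rng.standard_normal((192, 64)) * 0.1, np.zeros(64),
                  rng.standard_normal((64, 2)) * 0.1, np.zeros(2))
loss = cross_entropy(logits, np.array([0, 1, 1, 0]))
```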

The final model’s total loss function is:

Its three terms are, respectively, the comprehensive contrastive learning loss, the difficult negative sample triplet loss, and the classifier cross-entropy loss.
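The difficult negative sample triplet term can be illustrated with the common hardest-negative formulation over an in-batch image–text cosine similarity matrix. This is a sketch under stated assumptions rather than the paper's exact loss: the margin of 0.2, the symmetric image-to-text and text-to-image terms, and the unit weights in the hypothetical `total_loss` helper are all assumptions.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def hardest_negative_triplet_loss(img, txt, margin=0.2):
    """Triplet loss with the hardest in-batch negative, mined from the
    image-text cosine similarity matrix. Row i of img and txt is a matched pair."""
    S = l2_normalize(img) @ l2_normalize(txt).T  # (B, B) cosine similarities
    pos = np.diag(S)                             # similarity of matched pairs
    masked = S - 2.0 * np.eye(len(S))            # push diagonal below any cosine value
    hard_txt = masked.max(axis=1)                # hardest negative text per image
    hard_img = masked.max(axis=0)                # hardest negative image per text
    loss_i2t = np.maximum(0.0, margin - pos + hard_txt)
    loss_t2i = np.maximum(0.0, margin - pos + hard_img)
    return float((loss_i2t + loss_t2i).mean())


def total_loss(l_cl, l_tri, l_ce, lam_cl=1.0, lam_tri=1.0):
    # Hypothetical weighted sum; the weights are assumptions, not values from the paper.
    return l_ce + lam_cl * l_cl + lam_tri * l_tri
```

Because only the most similar non-matching item in each row and column contributes, pairs that are semantically close but mismatched dominate the gradient, which is the behaviour the mining strategy aims for.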

Most of the equations used in this work (e.g., softmax, cross-entropy loss, cosine similarity) are standard in the literature and are commonly presented without specific citations in related studies. Where an equation represents a novel contribution of this paper, we have indicated this explicitly in the text.

4. Experiments

4.1. Datasets

The model in this paper was evaluated on two real-world datasets: Twitter [33] and Weibo [34].

The Twitter dataset, introduced in the MediaEval Multimedia Veracity task, consists of tweets associated with images, where a single image may appear in multiple tweets due to reposting behavior. The dataset exhibits modality imbalance, as not every post contains a unique image. The Weibo dataset was constructed by Jin et al., where fake posts were verified by Weibo’s official fact-checking system, and real news was sourced from the Xinhua News Agency. It covers posts published between May 2012 and January 2016. To ensure fair comparison and data quality, we follow the original preprocessing steps, including the removal of duplicates and low-quality samples. An overview of the dataset composition is presented in Table 1.

Table 1.

The statistics of the pre-processed multimodal fake news datasets.

4.2. Implementation Details

The model takes two modalities as input: images and text. For image preprocessing, we use the CLIPProcessor provided by the Hugging Face Transformers library to ensure consistency with the pretrained CLIP model. All images are first converted to RGB format and resized to 224 × 224 pixels; the processor then normalizes them and converts them into tensors compatible with the CLIP image encoder. Preprocessed image tensors are cached as .pt files to avoid redundant computation. For text preprocessing, we likewise use the CLIPProcessor's built-in tokenizer with a maximum sequence length of 77 tokens. Before tokenization, raw text is cleaned to remove noise such as user mentions, URLs, and special characters. Tokenization applies truncation and padding to ensure a uniform input shape and returns both input IDs and attention masks. Finally, a projection layer maps the image and text features into a shared 64-dimensional space for alignment.
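The text-cleaning step mentioned above might look like the following sketch. The regular expressions are assumptions about what counts as a mention, URL, or special character; Python's Unicode-aware `\w` keeps Chinese characters, which matters for Weibo posts. The subsequent CLIPProcessor tokenization (truncation and padding to 77 tokens) is not shown.

```python
import re

URL = re.compile(r"https?://\S+|www\.\S+")  # http(s) links and bare www links (assumed)
MENTION = re.compile(r"@\w+")               # user mentions such as @name (assumed)
SPECIAL = re.compile(r"[^\w\s]")            # anything that is not a word char or whitespace
SPACES = re.compile(r"\s+")


def clean_text(text: str) -> str:
    # Remove URLs first so that characters inside links are not split up later.
    text = URL.sub(" ", text)
    text = MENTION.sub(" ", text)
    text = SPECIAL.sub(" ", text)
    # Collapse the whitespace left behind by the substitutions.
    return SPACES.sub(" ", text).strip()


print(clean_text("Breaking!! @user see https://t.co/abc #news"))
```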

During training, the initial learning rate is set to 0.001 and the batch size to 64; the model is optimized with AdamW for 50 epochs using a cosine learning rate schedule. All experiments were implemented in PyTorch 2.2.1 and run on an NVIDIA A800 GPU.
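The cosine learning rate schedule can be written in closed form. The sketch below assumes no warm-up, per-epoch updates, and a minimum learning rate of 0; under those assumptions it follows the same closed-form expression as PyTorch's `torch.optim.lr_scheduler.CosineAnnealingLR` with `T_max=50` and `eta_min=0`.

```python
import math


def cosine_lr(epoch: int, base_lr: float = 1e-3, total_epochs: int = 50,
              min_lr: float = 0.0) -> float:
    """Cosine annealing from base_lr at epoch 0 to min_lr at total_epochs."""
    progress = min(epoch, total_epochs) / total_epochs  # clamp past the last epoch
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


schedule = [cosine_lr(e) for e in range(51)]
```

The learning rate falls slowly at the start and end of training and fastest around the midpoint (epoch 25, where it equals half the initial value).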

4.3. Evaluation Metrics

We use four standard evaluation metrics for binary classification: accuracy, precision, recall, and F1 score.

- Accuracy measures the proportion of correctly predicted samples among all samples.

- Precision is the proportion of true positives among all samples predicted as positive.

- Recall is the proportion of true positives among all actual positive samples.

- F1 score is the harmonic mean of precision and recall.

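These metrics are defined as:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP},$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}},$$

where TP, TN, FP, and FN denote the number of true positives, true negatives, false positives, and false negatives, respectively.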

4.4. Baseline Models

The proposed model is compared with the following baseline models:

- (1) EANN [20]: Uses an event discriminator trained adversarially to help the feature extractor remove event-specific information.

- (2) MVAE [21]: Uses a multimodal variational autoencoder to learn shared representations for fake news detection.

- (3) MCAN [22]: Stacks multiple co-attention layers to capture fine-grained interactions between modalities.

- (4) CAFE [23]: Measures cross-modal ambiguity via the KL divergence between unimodal feature distributions and dynamically adjusts the weights of unimodal and multimodal features.

- (5) LIIMR [24]: Exploits intra-modal and inter-modal relationships for fake news detection.

- (6) MFIR [31]: Identifies semantic inconsistencies between text and images in multimodal news to achieve explainable fake news detection.

- (7) MRAN [35]: Hierarchically extracts semantic features and computes intra-modal and cross-modal similarities to generate high-order fusion features, which are then used for fake news detection.

4.5. Performance Comparison

Table 2 shows a performance comparison between the method proposed in this paper and other multimodal methods on the Twitter and Weibo datasets. The following observations can be made:

Table 2.

Comparison of experimental results for methods.

Although the EANN model extracts event-independent features through event adversarial learning, its feature concatenation strategy completely ignores the semantic associations between modalities. The MVAE framework, despite utilizing variational autoencoders for feature learning, also lacks an effective cross-modal interaction mechanism. Both methods fail to model semantic relationships between modalities during feature fusion, leading to the loss of critical discriminative information.

The CAFE method maps features to a shared semantic space through cross-modal alignment and introduces an ambiguity score to achieve adaptive feature aggregation. However, its performance depends heavily on data quality and is sensitive to feature noise, which reduces its stability in practical applications. In contrast, the contrastive learning framework used in this paper maximizes the separation between positive and negative sample pairs in the semantic space through its training objective, significantly narrowing the semantic gap between modalities. Moreover, it not only preserves unimodal features but also reinforces cross-modal associations through deep semantic alignment, thereby improving the discriminative consistency of the features.

MCAN primarily achieves feature interaction through a co-attention network that directly interacts and aligns the modalities, whereas LIIMR considers both intra-modal and inter-modal relationships and employs more detailed, multi-level feature fusion. However, neither explicitly models modal inconsistency during feature fusion, which limits their generalization when text and image differ substantially in meaning. The key innovation of this paper is the consistency-aware soft-label contrastive learning mechanism. This mechanism dynamically generates soft labels from the actual semantic similarity of modal features and adjusts them through an inconsistency detector, providing finer-grained supervision than traditional binary contrastive learning and improving the detection of modal inconsistencies in fake news.

The MFIR framework partially identifies semantic conflicts between text and images through multimodal feature fusion and inconsistency reasoning, and uses this inconsistency as auxiliary evidence for discrimination, which improves the model's interpretability. The MRAN model uses a relationship-aware multimodal attention mechanism to model multi-level associations between text and images. Although the two methods have different technical focuses, both aim to improve detection by strengthening feature fusion and modality interaction, and both have limitations in complex scenarios. MFIR lacks dynamic adaptivity in feature fusion and inconsistency perception, making it difficult to adapt to diverse data distributions. MRAN performs well in modeling modality relationships but does not analyze features in sufficient depth, particularly in mining difficult negative samples, which limits its ability to distinguish boundary samples.

The proposed method addresses these issues as follows. First, a consistency-aware soft-label contrastive learning mechanism provides finer-grained supervision signals for contrastive learning. Second, difficult negative sample mining and dynamic feature regularization gradually increase the weight of difficult negative samples as training progresses, so that the model focuses progressively on more challenging samples; a dynamically adjusted feature masking mechanism further improves robustness. Third, cross-modal interaction based on multi-head attention generates correlation features that capture complex relationships between modalities. Finally, channel attention dynamically adjusts the importance weights of the text representations, image representations, and their correlations to form the final discriminative features. Experimental results show that the proposed method outperforms the baseline models on both datasets (Table 2), providing a new solution for multimodal fake news detection.

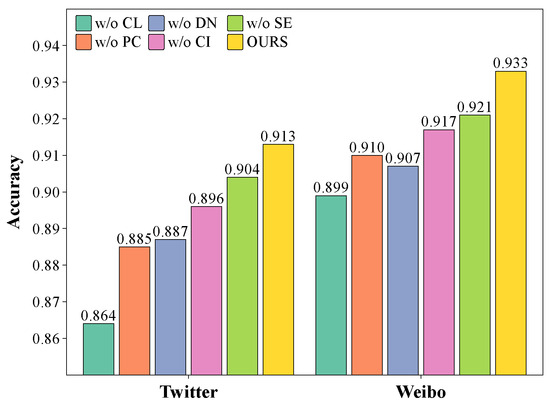

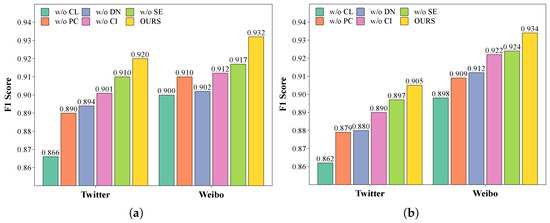

4.6. Ablation Study

To assess the contribution of each component of the proposed model, we remove each module from the full model in turn and compare the resulting variants. The ablation settings are as follows:

- (1) w/o CL: Only CLIP is retained to extract text and image features, and the contrastive learning loss is removed.

- (2) w/o PC: The consistency-aware soft-label contrastive learning mechanism is removed, and only the hard targets of contrastive learning are used to learn aligned unimodal representations.

- (3) w/o DN: The difficult negative sample mining mechanism is removed, and only the soft-label contrastive learning mechanism is used to optimize the contrastive learning loss.

- (4) w/o CI: The cross-modal interaction module is removed, and a simple concatenation of the text and image features is used as the cross-modal correlation feature.

- (5) w/o SE: The channel attention module is removed, and simple concatenation is used to obtain the final features.

Figure 4 and Figure 5 show the accuracy, the F1 score on real news, and the F1 score on fake news for the ablation experiments. Every ablation variant performs worse than the complete model, confirming the necessity and effectiveness of each component. A detailed analysis yields the following key findings:

Figure 4.

Accuracy in Twitter and Weibo datasets.

Figure 5.

F1 scores on the Twitter and Weibo datasets. (a) F1 score of real news. (b) F1 score of fake news.

Removing contrastive learning (w/o CL) leads to a significant performance decrease on both the Twitter and Weibo datasets. This result indicates that the contrastive learning mechanism plays a crucial role in aligning multimodal feature representations.

When the soft label contrastive learning mechanism based on consistency perception (w/o PC) is removed, the model performance decreases by 2.8% and 2.3% on the Twitter and Weibo datasets, respectively. This result validates that the soft label mechanism helps the model capture more granular intermodal consistency information, significantly enhancing detection performance.

Removing the difficult negative sample mining mechanism (w/o DN) reduces performance by 2.6% on both datasets. This indicates that the mechanism enhances the model's discriminative ability by emphasizing challenging samples, which is especially important for text–image content that is similar but differs in authenticity.

Removing the cross-modal interaction module (w/o CI) or the channel attention mechanism (w/o SE) causes smaller performance drops than the other ablations, although performance still declines. This suggests that the cross-modal interaction module captures subtle intermodal associations, while the attention mechanism mainly helps optimize the fusion of complex multimodal features.

We also observe that the performance decline is generally greater on the Twitter dataset than on the Weibo dataset. This may be because many tweets in the Twitter dataset are associated with a single event, which limits the model's ability to learn broad feature representations. In contrast, the larger Weibo dataset provides more abundant training samples, mitigating the impact of data noise.

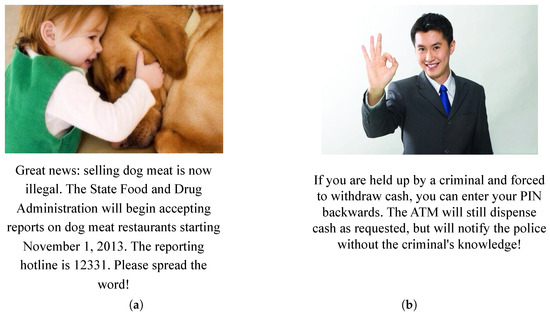

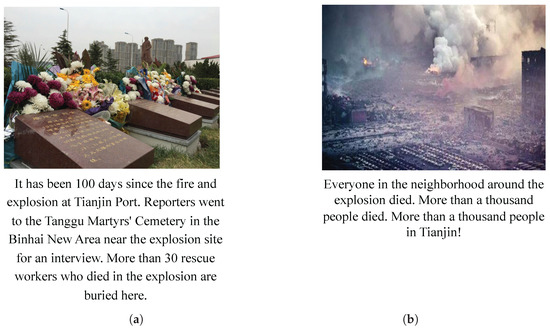

4.7. Case Study

To better demonstrate the effectiveness of the proposed components—particularly the consistency-aware soft label contrastive learning mechanism and the difficult negative sample mining strategy—we present two groups of case studies from the Weibo dataset.

Case Group 1: Detecting Semantic Inconsistency Between Modalities. Figure 6a presents a fake news post where the text reports a serious policy-related topic—the banning of dog meat sales—while the accompanying image depicts a warm and affectionate moment between a girl and a dog. Although the entities in both modalities (dogs) match, the emotional tone of the image is inconsistent with the seriousness of the text, potentially misleading readers through emotional association.

Figure 6.

Examples of fake news in the Weibo dataset. (a) A policy-related text with an emotionally positive image. (b) A misleading financial tip accompanied by a trust-evoking professional portrait.

Similarly, in Figure 6b, the text describes a false security tip regarding ATM emergency alerts, whereas the image shows a professionally dressed man making an “OK” gesture—introducing an impression of authority and credibility. The combination may increase the believability of the claim despite its falsity.

Traditional contrastive learning methods often emphasize coarse semantic similarity, leading them to overlook such mismatches in tone or intent. In contrast, our model leverages soft labels to assign nuanced similarity scores and identify intermodal contradictions. As a result, it successfully identifies both examples as fake news.

Case Group 2: Identifying Fine-Grained Differences Within the Same Event. Figure 7a,b both relate to the same real-world event—the Tianjin Port explosion. Figure 7a includes accurate textual information with verified casualty counts and a respectful image of the cemetery. Figure 7b, however, contains vague and emotionally charged language (e.g., “all nearby residents died,” “1000+ casualties”) and uses an image that, while visually related, was not taken at the verified scene.

Figure 7.

Real vs. fake news under the same event. (a) Verified report with cemetery image. (b) Overstated claims with reused explosion scene.

Despite high text-image similarity at a superficial level, our model distinguishes between these two samples by leveraging the difficult negative sample mining mechanism. Because both examples are semantically close but differ in truthfulness, the model treats them as hard negatives. The use of triplet loss strengthens the model’s sensitivity to subtle textual exaggeration and image relevance, allowing it to accurately classify Figure 7b as fake and Figure 7a as real.

5. Discussion

The proposed model achieves robust and superior performance on both the Weibo and Twitter datasets, reaching detection accuracies of 93.3% and 91.3%, respectively, and consistently outperforming existing multimodal detection approaches (see Table 2). Ablation studies further confirm that each component—contrastive learning, the soft label mechanism, and difficult negative sample mining—contributes significantly to handling complex cross-modal relationships and challenging boundary cases (see Figure 4 and Figure 5). These results highlight the importance of fine-grained semantic modeling and targeted negative sampling for effective multimodal fake news detection.

In addition to the empirical results, we provide a theoretical perspective on why the soft-label contrastive learning mechanism and difficult negative mining jointly improve model generalization and robustness. Traditional hard-label (one-hot) supervision in contrastive learning treats all negative pairs equally, which can cause the model to overfit to easily distinguishable negative samples while neglecting those that are more challenging and semantically similar to the positive pairs. This often results in limited boundary discrimination and increased bias, particularly in complex multimodal scenarios. The soft-label contrastive learning mechanism alleviates these issues by generating supervision signals based on the actual semantic similarity between samples. By assigning soft probabilities rather than absolute binary labels, the model receives finer-grained guidance and is less prone to overfitting to the training data distribution. This enables the model to better capture ambiguous or borderline cases, enhancing its capacity to generalize to unseen examples. Furthermore, the difficult negative sample mining strategy dynamically emphasizes the most challenging negative samples, prompting the model to focus on discriminating subtle semantic differences between hard negatives and positives. This targeted learning process leads to more robust representations and stronger boundary awareness, reducing the risk of bias toward easy or majority-class samples. Together, these two mechanisms make supervision both semantically aware and adaptive, which helps mitigate overfitting and bias and offers a plausible explanation for the observed improvements in generalization and robustness.
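To make the contrast with one-hot supervision concrete, here is a NumPy sketch of a soft-label image–text contrastive loss. The way the soft targets are built (a temperature-scaled softmax over averaged intra-modal similarities) and the temperature of 0.07 are assumptions chosen for illustration; the paper's consistency-aware mechanism additionally adjusts the targets with an inconsistency detector, which is not modeled here.

```python
import numpy as np


def log_softmax(x):
    x = x - x.max(axis=1, keepdims=True)  # row-wise max subtraction for stability
    return x - np.log(np.exp(x).sum(axis=1, keepdims=True))


def soft_label_contrastive_loss(img, txt, tau=0.07):
    """Image-text contrastive loss with soft targets instead of one-hot labels.

    img, txt: L2-normalized embeddings of shape (B, d); row i is a matched pair.
    """
    logits = img @ txt.T / tau                 # cross-modal similarity logits
    intra = (img @ img.T + txt @ txt.T) / 2.0  # semantic similarity between samples
    # Row-wise soft labels (each row sums to 1). Because intra is symmetric,
    # the same matrix serves as targets for both directions.
    targets = np.exp(log_softmax(intra / tau))
    loss_i2t = -(targets * log_softmax(logits)).sum(axis=1).mean()
    loss_t2i = -(targets * log_softmax(logits.T)).sum(axis=1).mean()
    return float((loss_i2t + loss_t2i) / 2.0)


rng = np.random.default_rng(0)
a = rng.standard_normal((5, 16))
a /= np.linalg.norm(a, axis=1, keepdims=True)
b = rng.standard_normal((5, 16))
b /= np.linalg.norm(b, axis=1, keepdims=True)
loss = soft_label_contrastive_loss(a, b)
```

Replacing `targets` with the identity matrix recovers the standard one-hot (InfoNCE-style) objective, which penalizes every negative equally regardless of how semantically close it is to the positive.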

Our experimental results and case analyses demonstrate that the proposed model is robust when handling complex and noisy multimodal social media data, especially in scenarios with semantic inconsistency between text and images. The model consistently outperforms baseline methods even on challenging samples with subtle or misleading relationships. However, its robustness under conditions such as missing modalities, adversarial samples, or rapidly evolving fake news tactics remains to be fully evaluated in future studies.

While the model shows strong performance on both datasets, it is important to note certain limitations in the data. In the Twitter dataset, a significant portion of tweets share the same image or lack images altogether, leading to modality imbalance that may influence the model’s reliance on textual features. Similarly, both datasets exhibit topic bias—Weibo focuses primarily on Chinese-language socio-political content, while Twitter covers a broader range but tends to be noisy. These imbalances and biases may affect generalization to other domains or platforms.

The proposed approach contributes to real-world fake news detection systems by addressing subtle inconsistencies between modalities—an aspect often overlooked by conventional models. The use of soft label contrastive learning allows for nuanced supervision, enabling better detection of semantically incongruent image–text pairs. Furthermore, the difficult negative sampling strategy enhances boundary discrimination, which is critical in complex social environments. From a research perspective, our model challenges the prevalent use of one-hot supervision in contrastive learning for fake news detection and offers a novel direction for multimodal misinformation research under noisy and low-resource conditions.

6. Conclusions

This paper addresses key issues in current multimodal fake news detection, namely insufficient supervision signals in contrastive learning, weak discrimination of boundary samples, and inadequate modality fusion. It proposes a multimodal fake news detection method that integrates consistency-aware soft-label contrastive learning, difficult negative sample mining, and cross-modal interaction fusion. Specifically, the soft-label contrastive learning mechanism uses text–image semantic similarity to construct soft supervision targets and introduces an inconsistency detector for dynamic adjustment, enabling the model to capture subtle semantic differences between text and images more precisely and thereby achieve higher-quality cross-modal feature alignment. The difficult negative sample mining module strengthens the model's discrimination of samples near the true–false boundary through a triplet loss. The cross-modal interaction and cross-modal fusion modules further learn the symmetric interaction relationship between text and image features, optimizing the fusion and weighting of multimodal features. Extensive experiments on two real-world datasets, Weibo and Twitter, show that our method outperforms representative models such as EANN, MVAE, MCAN, LIIMR, MFIR, and MRAN.

Future work will focus on the following areas: first, expanding the model’s ability to process information from more modalities (such as video and audio) to enhance its generalization to complex social content; second, combining external knowledge such as knowledge graphs and user behavior to further enhance the model’s contextual reasoning capabilities and overall effectiveness in detecting fake news. As multimodal information processing technologies continue to advance, fake news detection models will play an increasingly important role in practical applications, providing robust support for maintaining the health and security of cyberspace.

Author Contributions

Conceptualization, Z.H.; methodology, Z.H. and H.W.; software, H.W.; validation, H.W.; formal analysis, H.W. and L.L.; investigation, H.W.; resources, Z.H.; data curation, H.W.; writing—original draft, H.W.; writing—review and editing, Z.H. and H.W.; visualization, H.W.; supervision, Z.H. and L.L.; project administration, Z.H. and H.W.; funding acquisition, Z.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by Gansu Province Higher Education Institutions Industrial Support Program: 2020C-29; National Natural Science Foundation of China: 61562002.

Data Availability Statement

All data used in this study are publicly available online. The Weibo dataset is available through GitHub at https://github.com/yaqingwang/EANN-KDD18 (accessed on 1 August 2024); the Twitter dataset is available through GitHub at https://github.com/cyxanna/CAFE (accessed on 1 August 2024).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Tufchi, S.; Yadav, A.; Ahmed, T. A comprehensive survey of multimodal fake news detection techniques: Advances, challenges, and opportunities. Int. J. Multimed. Inf. Retr. 2023, 12, 28.

- Wang, L. Research and Outlook on Technology for Detecting False Information on the Internet. J. Taiyuan Univ. Technol. 2022, 53, 397–404. (In Chinese)

- Su, J.; Cardie, C.; Nakov, P. Adapting fake news detection to the era of large language models. arXiv 2023, arXiv:2311.04917.

- Liu, Y.; Guo, Y.; Fang, J.; Fan, J.; Hao, Y.; Liu, J. A Review of Deep Learning Research on Cross-Modal Image Retrieval. Comput. Sci. Explor. 2022, 16, 489–511.

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Singh, B.; Sharma, D.K. Predicting image credibility in fake news over social media using multi-modal approach. Neural Comput. Appl. 2022, 34, 21503–21517.

- Zhou, Y.; Pang, A.; Yu, G. Clip-GCN: An adaptive detection model for multimodal emergent fake news domains. Complex Intell. Syst. 2024, 10, 5153–5170.

- Liu, Y.; Liu, Y.; Li, Z.; Yao, R.; Zhang, Y.; Wang, D. Modality interactive mixture-of-experts for fake news detection. In Proceedings of the ACM on Web Conference 2025, Sydney, Australia, 28 April–2 May 2025; pp. 5139–5150.

- Lai, H.; Nissim, M. mCoT: Multilingual instruction tuning for reasoning consistency in language models. arXiv 2024, arXiv:2406.02301.

- Yan, F.; Zhang, M.; Wei, B.; Ren, K.; Jiang, W. SARD: Fake news detection based on CLIP contrastive learning and multimodal semantic alignment. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 102160.

- Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 12888–12900.

- Yang, J.; Li, C.; Zhang, P.; Xiao, B.; Liu, C.; Yuan, L.; Gao, J. Unified contrastive learning in image-text-label space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19163–19173.

- Bao, H.; Wang, W.; Dong, L.; Liu, Q.; Mohammed, O.K.; Aggarwal, K.; Som, S.; Piao, S.; Wei, F. VLMo: Unified vision-language pre-training with mixture-of-modality-experts. Adv. Neural Inf. Process. Syst. 2022, 35, 32897–32912.

- Yifan, Z.; Yuming, F.; Kede, M. Advances in Image Fusion Technology in the Era of Deep Learning. Chin. J. Image Graph. 2023, 28, 102–117.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Shahid, W.; Jamshidi, B.; Hakak, S.; Isah, H.; Khan, W.Z.; Khan, M.K.; Choo, K.K.R. Detecting and mitigating the dissemination of fake news: Challenges and future research opportunities. IEEE Trans. Comput. Soc. Syst. 2022, 11, 4649–4662.

- Conroy, N.K.; Rubin, V.L.; Chen, Y. Automatic deception detection: Methods for finding fake news. Proc. Assoc. Inf. Sci. Technol. 2015, 52, 1–4.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers); Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 4171–4186.

- Wang, Y.; Ma, F.; Jin, Z.; Yuan, Y.; Xun, G.; Jha, K.; Su, L.; Gao, J. EANN: Event adversarial neural networks for multi-modal fake news detection. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 849–857.

- Khattar, D.; Goud, J.S.; Gupta, M.; Varma, V. MVAE: Multimodal variational autoencoder for fake news detection. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2915–2921.

- Wu, Y.; Zhan, P.; Zhang, Y.; Wang, L.; Xu, Z. Multimodal fusion with co-attention networks for fake news detection. In Proceedings of the Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online Event, 1–6 August 2021; pp. 2560–2569.

- Chen, Y.; Li, D.; Zhang, P.; Sui, J.; Lv, Q.; Tun, L.; Shang, L. Cross-modal ambiguity learning for multimodal fake news detection. In Proceedings of the ACM Web Conference 2022, Virtual Event, 25–29 April 2022; pp. 2897–2905.

- Singhal, S.; Pandey, T.; Mrig, S.; Shah, R.R.; Kumaraguru, P. Leveraging intra and inter modality relationship for multimodal fake news detection. In Proceedings of the Companion Web Conference 2022, Virtual Event, 25–29 April 2022; pp. 726–734.

- Zhu, P.; Hua, J.; Tang, K.; Tian, J.; Xu, J.; Cui, X. Multimodal fake news detection through intra-modality feature aggregation and inter-modality semantic fusion. Complex Intell. Syst. 2024, 10, 5851–5863.

- Zhang, M.; Chang, K.; Wu, Y. Multi-modal Semantic Understanding with Contrastive Cross-modal Feature Alignment. arXiv 2024, arXiv:2403.06355.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.

- Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.T.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.H.; Li, Z.; Duerig, T. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 4904–4916.

- Zhou, Y.; Yang, Y.; Ying, Q.; Qian, Z.; Zhang, X. Multimodal fake news detection via CLIP-guided learning. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 2825–2830.

- Kananian, M.; Badiei, F.; Gh. Ghahramani, S.A. GraMuFeN: Graph-based multi-modal fake news detection in social media. Soc. Netw. Anal. Min. 2024, 14, 104.

- Wu, L.; Long, Y.; Gao, C.; Wang, Z.; Zhang, Y. MFIR: Multimodal fusion and inconsistency reasoning for explainable fake news detection. Inf. Fusion 2023, 100, 101944.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

- Boididou, C.; Papadopoulos, S.; Zampoglou, M.; Apostolidis, L.; Papadopoulou, O.; Kompatsiaris, Y. Detection and visualization of misleading content on Twitter. Int. J. Multimed. Inf. Retr. 2018, 7, 71–86.

- Ma, J.; Gao, W.; Wong, K.F. Detect Rumors in Microblog Posts Using Propagation Structure via Kernel Learning; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017.

- Yang, H.; Zhang, J.; Zhang, L.; Cheng, X.; Hu, Z. MRAN: Multimodal relationship-aware attention network for fake news detection. Comput. Stand. Interfaces 2024, 89, 103822.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).