1. Introduction

The lace textile industry faces significant pressure to optimize its dyeing processes, where both efficiency and product quality are critical concerns [1,2]. This process is characterized by sequence-dependent setup times, such as the extensive cleaning required when switching between dark and light color families. In addition, machine eligibility, dictated by differing vessel weight capacities, adds another layer of complexity. Compounding these two elements, the stochastic nature of re-dyeing operations, which are frequently triggered by quality inspection failures, introduces significant variability. Such rework, performed to salvage imperfect products, is not unique to textiles; it is prevalent in manufacturing sectors such as panel furniture processing [3], bearing production [4], and automotive parts manufacturing [5]. Together, these factors can lead to substantial production delays [6] and increased operational costs in the lace dyeing process.

The dyeing optimization process is vital for tackling chronic tardiness issues in the lace textile industry. This research is motivated by a significant challenge faced by a major lace manufacturing facility in Fuzhou, China, which is the nation’s second-largest producer. The factory employs parallel automatic dyeing vessels. Effective scheduling of these vessels requires the simultaneous consideration of deterministic constraints, such as sequence-dependent setups and machine eligibility, as well as the inherent stochasticity of re-dyeing operations. The latter introduces considerable variability in processing times, which can lead to significant delays. The primary challenge lies in scheduling parallel machines to minimize the tardiness criterion under these complex and uncertain conditions.

The prevalence of rework has made it a prominent research theme in manufacturing systems. Recent advances in rework-aware scheduling have focused on single-machine and job-shop problems, with hybrid metaheuristic algorithms emerging as powerful tools for handling their complexity.

Table 1 summarizes the key characteristics of representative studies related to scheduling problems with rework operations and parallel machine environments.

Zhu et al. [7] investigated a single-machine resource-constrained multi-project scheduling problem that focuses on detection and rework. They adopted a mixed-integer programming model and a tabu search framework with a chain-based baseline scheme to support both online and offline scheduling. Mahmoud et al. [8] tackled a stochastic job-shop problem by introducing a Markovian modeling framework that incorporates both job-specific costs and success probability distributions. Zhang et al. [9] proposed a hybrid optimization algorithm that integrates differential evolution (DE) and particle swarm optimization to solve an uncertain remanufacturing scheduling model with rework risk. Owing to its enhanced population diversity mechanisms, this algorithm outperformed the baseline algorithms in 17 of 18 test instances.

In the past two years, Albayrak et al. [10] employed an enhanced non-dominated sorting genetic algorithm II (NSGA-II), a hybrid metaheuristic that combines evolutionary strategies with local search, to address an energy-aware flexible job shop problem with rework processes and new job arrivals. The optimization objectives included minimizing energy consumption, workload, and makespan. To tackle green re-entrant job-shop scheduling with job reworking, Wang et al. [4] designed a multi-objective hybrid algorithm based on local neighborhood search. Quan et al. [11] proposed a two-level virtual workflow model combined with an adaptive evolutionary algorithm for flexible job shop scheduling with rework. Additionally, Kim et al. [12] developed a genetic programming-based reinforcement learning algorithm for dynamic hybrid flow shop scheduling with reworks, which achieves superior total tardiness minimization by integrating variable neighborhood search with deep Q-networks. Peng et al. [13] applied an improved gazelle optimization algorithm with dynamic operators and mutation strategies to multi-skill project scheduling with rework risks.

While these studies contribute to the broader field of rework scheduling, fewer have focused specifically on parallel machine environments. Kao et al. [14] developed mixed-integer programs for unrelated parallel batching machines, comparing static and dynamic equipment health indicators. Wang et al. [15] used a modified genetic algorithm and simulated annealing for unrelated parallel machine scheduling with random rework and showed that simulated annealing was more computationally efficient than the genetic algorithm in minimizing the expected total weighted tardiness. Rezaeian et al. [16] studied unrelated parallel machines with eligibility constraints and release times, optimizing makespan through sensitivity analysis. Wang et al. [17] investigated the problem with random rework and limited pre-emption and proposed a two-stage heuristic algorithm to balance makespan against earliness–tardiness costs. Few of these studies consider the mixed deterministic–stochastic nature of the problem, such as deterministic setup times combined with probabilistic re-dyeing operations in textile manufacturing.

Table 1 synthesizes these key contributions and reveals a critical gap: none of the existing studies simultaneously considers deterministic setup times, machine eligibility, and probabilistic re-dyeing operations in parallel machine scheduling for textile manufacturing.

Moreover, to handle stochastic rework in parallel machine scheduling effectively, the total estimated processing time has emerged as a pivotal metric for tardiness prediction. Building on previous research that minimizes total estimated tardiness in stochastic environments [17,18,19], this metric employs Bayesian estimation to integrate probabilistic processing times and rework cycles. By embedding it into the scheduling model, we directly target the minimization of total estimated tardiness when coordinating parallel dyeing vessels.

This work models the above issue as a parallel machine scheduling problem with sequence-dependent setup times, machine eligibility, and probabilistic re-dyeing operations, which is strongly NP-hard [20]. Therefore, metaheuristics are frequently applied in the related literature to solve practical instances of such problems [21,22,23,24,25,26]. Among metaheuristics, DE [27] is selected for its simplicity, efficiency, and robustness in solving complex optimization problems. Compared with genetic algorithms, DE typically converges faster and performs better in continuous, high-dimensional search spaces, which makes it well suited to scheduling problems with stochastic components [28,29,30,31,32,33]. Its ability to balance exploration and exploitation further enhances its applicability to the considered problem. To the best of our knowledge, DE has not yet been applied to lace dyeing scheduling with total estimated tardiness minimization, which motivates this study.

On the other hand, the hybrid metaheuristic advances above highlight the efficacy of combining complementary optimization mechanisms to tackle stochasticity and complexity in scheduling. However, existing frameworks often lack symmetry-driven coordination between exploration and exploitation [34], which is critical for parallel machine environments with probabilistic re-dyeing. In metaheuristics such as DE, symmetry can be leveraged to design algorithms that explore the search space efficiently while effectively exploiting promising solutions. Motivated by this gap, our work builds on the hybrid metaheuristic paradigm by introducing a symmetry-based dual-subpopulation collaborative structure to address the challenges of lace dyeing scheduling.

Expanding upon our previous work [35], this study introduces a symmetry-driven optimization paradigm for parallel machine scheduling. The novelty and main contributions of our work are as follows:

To minimize the total estimated tardiness, a stochastic integer programming model is developed. This model captures the uncertainty of rework cycles in lace manufacturing and is solved to optimality with CVX (a MATLAB-based convex optimization toolbox, run in MATLAB R2024) on small-scale synthetic and industrial datasets.

A novel symmetry-driven TCDE algorithm is designed, where symmetrically structured subpopulations collaborate to balance exploration and exploitation. Unlike conventional hybrid metaheuristics for rework scheduling, Subpopulation A employs chaotic parameter adaptation to ensure ergodic symmetry in global search, while Subpopulation B uses diversity-convergence adaptive control to maintain convergence symmetry in local exploitation.

A symmetrical collaborative mechanism is proposed, which involves periodic exchange of migrants between subpopulations and guidance from an elite set to facilitate knowledge sharing among subpopulations. This mechanism addresses the lack of symmetry-driven coordination in existing hybrid metaheuristics.

Extensive computational experiments conducted on both synthetic and industrial datasets comprehensively evaluate the performance of TCDE. Ablation studies provide further evidence of the critical role played by the novel components of TCDE, with computational results solidly confirming its advantages in addressing the problem.

The remainder of this paper is organized as follows. The problem description is presented in Section 2. Section 3 outlines the proposed TCDE for solving the problem. Numerical experiments on TCDE are reported in Section 4, and the final section summarizes the conclusions and suggests topics for future research.

2. Problem Description

2.1. Problem Statement

In this paper, the dyeing optimization problem is defined as a parallel machine scheduling problem with sequence-dependent setup times, machine eligibility, and probabilistic re-dyeing operations. The notations used for this problem are presented in Table 2.

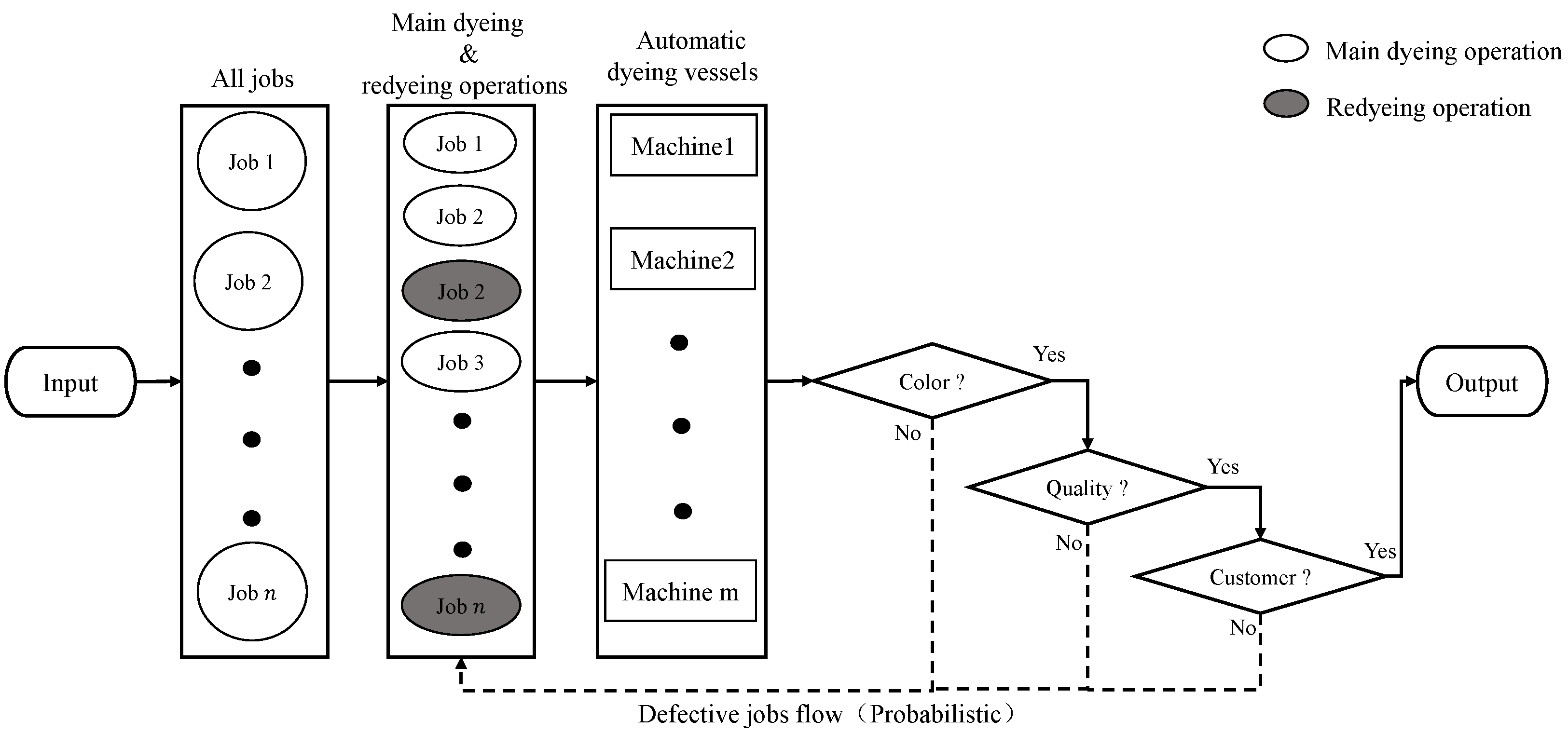

Once the main dyeing operation is completed, the job undergoes three sequential inspections: color, quality, and customer inspection. If a job fails any of these inspections, it is returned for re-dyeing until it passes; no further inspections are required after it passes. The number of re-dyeing operations is bounded by a finite maximum, and the imperfect production probability of each job is estimated from historical data. Each re-dyeing operation shortens the dyeing time linearly through a decreasing coefficient. The first operation is the main dyeing process, while subsequent operations are probabilistic re-dyeing processes.

Figure 1 illustrates the configuration of the problem.

Each job is defined by four attributes: color family, weight, main dyeing time, and due date. In the factory, eight color families are divided into light and dark depths, which determine the setup times (see Table 3). No setup time is needed between successive jobs of the same color family. However, when transitioning between different color families, setup involves cleaning, whose thoroughness (rough or thorough) is determined by the color depth of the preceding job. Setup times are therefore asymmetric with respect to color depth transitions: thorough cleaning (from dark to light) takes three times as long as rough cleaning (from light to dark).
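The setup rule above can be expressed as a small function. The following Python sketch is illustrative only: the function name `setup_time`, the color-family labels, and the 1 h (rough) and 3 h (thorough) durations, which are borrowed from the example in Section 2.3, are assumptions rather than the factory's actual data.

```python
# Hypothetical sketch of the sequence-dependent setup rule (not the authors' code).
# Assumption: rough cleaning takes 1 h and thorough cleaning 3 h, as in the
# illustrative example of Section 2.3.
ROUGH_CLEANING_H = 1.0
THOROUGH_CLEANING_H = 3.0


def setup_time(prev_family, next_family, depth):
    """Return the setup time (h) before next_family on a vessel.

    depth maps each color family to "dark" or "light"; prev_family is None for
    the first job on a vessel.
    """
    if prev_family is None or prev_family == next_family:
        return 0.0  # first job on the vessel, or same family: no cleaning
    # The depth of the *preceding* job decides how thorough the cleaning must be.
    if depth[prev_family] == "dark":
        return THOROUGH_CLEANING_H
    return ROUGH_CLEANING_H


depth = {"navy": "dark", "black": "dark", "ivory": "light", "pink": "light"}
print(setup_time("navy", "ivory", depth))  # 3.0 (dark -> light)
print(setup_time("ivory", "navy", depth))  # 1.0 (light -> dark)
print(setup_time("pink", "pink", depth))   # 0.0 (same family)
```

Keying the rule on the preceding job's depth, rather than on the pair of families, is what produces the asymmetry described in the text.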

Moreover, each vessel can process only one job at a time without pre-emption and has a weight capacity limit; job j can be assigned to vessel k only if its weight does not exceed the capacity of vessel k. Jobs cannot be merged, even within the same color family, and neither pre-emption nor insertion is allowed.
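Machine eligibility reduces to a capacity filter. A minimal sketch, assuming a job fits a vessel when its weight does not exceed the vessel capacity; the function name `eligible_vessels` and the capacities are hypothetical.

```python
def eligible_vessels(weight, capacities):
    """Return the 1-based indices of vessels whose capacity can hold the job."""
    return [k for k, cap in enumerate(capacities, start=1) if weight <= cap]


capacities = [15.0, 25.0]  # hypothetical vessel capacities in kg
print(eligible_vessels(10.0, capacities))  # [1, 2]
print(eligible_vessels(20.0, capacities))  # [2]
```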

2.2. Mathematical Model

The proposed mathematical model for minimizing the total estimated tardiness is as follows:

This is subject to the following:

The goal is to minimize the total estimated tardiness, as stated in Equation (1). The estimated tardiness of job j is the maximum of zero and the difference between its estimated completion time and its due date, as defined in Equation (2). Equation (3) calculates the estimated completion time within a probabilistic framework. Equation (4) ensures that each job is assigned to exactly one machine and that its operations are processed sequentially. Equation (5) introduces a dummy operation that initializes each machine. Equation (6) allows the h-th operation of job j to be empty if the corresponding operation of job i is the last one on a machine, considering three consecutive jobs. Equation (7) sets the setup completion time of the first operation on each machine to 0. Equation (8) makes the big-M constant M scale linearly with the problem size instead of taking arbitrarily large values, which improves both numerical stability and computational efficiency. Equation (9) links the setup completion time of an operation to the completion time of the preceding operation, incorporating the setup time through M and a binary sequencing variable. Equation (10) ensures that the setup completion time is at least the processing time. Equation (11) relates the completion time of an operation to its setup completion time through the setup and processing times. Equation (12) imposes a lower bound on the completion time, and Equation (13) ensures that it is at least the completion time of the previous operation plus the corresponding processing time. Equation (14) enforces the machine capacity constraints. The decision variable is binary, as stated in Equation (15).

It is important to note that we use problem-specific upper bounds derived from actual production constraints, which enhances numerical stability and mitigates the issues associated with large constant values. Furthermore, the maximum number of re-dyeing operations (denoted as H) is fixed based on practical considerations observed in the dyeing factory under investigation. Historical production data and expert insights indicate that excessive re-dyeing is strictly limited in practice, as it can lead to fabric damage, increased energy consumption, and higher production costs. Therefore, setting a fixed upper bound H is both realistic and essential to ensure model tractability and industrial applicability.

2.3. Illustrative Example

To make the problem and constraints concrete, consider the instance summarized in Table 4. Three jobs must be processed on two parallel dyeing vessels. Each job is characterized by its color family, weight, main processing time, and due date. Vessel capacities are

kg and

kg. The maximum number of dyeing operations (main + reworks) is set to

; the processing-time reduction coefficient for any rework is

. Sequence-dependent setup times follow this rule: a dark-to-light transition requires 3 h, a light-to-dark transition 1 h, and identical color families 0 h.

A feasible schedule obtained by the proposed TCDE algorithm is depicted in Figure 2. The Gantt chart reveals the following key observations:

Machine eligibility: Job 3 (20 kg) can only be assigned to the larger vessel, whereas jobs 1 and 2 fit both vessels.

Sequence-dependent setup: Three hours of cleaning are required on the vessel processing job 3 when the color changes from dark (job 3) to light (job 2).

Probabilistic reworks: Job 1 fails the first inspection with a given probability, in which case it returns for a 1-h rework (operation 1–2). Its expected completion time therefore exceeds its due date, yielding an estimated tardiness of 1.67 h.

The fitness of each chromosome is evaluated using the expected job completion time, with the first operation as the main dyeing process and subsequent operations as probabilistic re-dyeing processes. All chromosomes satisfy the precedence constraints. In Figure 2, the estimated tardiness of each job is computed in this way, and summing these values gives a total estimated tardiness of 1.6730 for this solution.
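The expected-value evaluation can be sketched as follows. This is not the paper's Equation (3); it assumes that all operations of a job run consecutively on one vessel, that each inspection fails independently with the job's imperfect production probability (so the (h+1)-th operation runs with probability p^h), and that the h-th re-dyeing lasts a coefficient times the main dyeing time. Following the definition in Section 2.2, tardiness is taken as the maximum of zero and the estimated completion time minus the due date, which differs from the expectation of the tardiness itself. Function names are hypothetical.

```python
def expected_processing_time(main_time, fail_prob, coefficients):
    """Expected dyeing time of one job including probabilistic re-dyeing.

    coefficients[0] == 1.0 is the main dyeing; coefficients[h] scales the main
    time for the h-th re-dyeing (assumed form, for illustration only).
    """
    expected = 0.0
    reach = 1.0  # probability that the current operation is executed
    for coef in coefficients:
        expected += reach * coef * main_time
        reach *= fail_prob  # the next operation runs only if this one fails
    return expected


def total_estimated_tardiness(sequence, setups):
    """Sum of max(E[C_j] - d_j, 0) for jobs processed in order on one vessel.

    sequence: list of (main_time, fail_prob, coefficients, due_date)
    setups:   setup time before each job (same length as sequence)
    """
    clock, total = 0.0, 0.0
    for (main_time, fail_prob, coefs, due), setup in zip(sequence, setups):
        clock += setup + expected_processing_time(main_time, fail_prob, coefs)
        total += max(clock - due, 0.0)
    return total


jobs = [(2.0, 0.5, [1.0, 0.5], 2.0), (1.0, 0.0, [1.0, 0.5], 5.0)]  # hypothetical
print(total_estimated_tardiness(jobs, setups=[0.0, 1.0]))  # 0.5
```

By linearity of expectation, the running clock equals the expected completion time of each job under these assumptions.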

3. TCDE for the Considered Problem

Usually, metaheuristics are favored over mathematical models (e.g., mixed-integer programming models) for their efficiency in solving complex problems within practical timeframes [

26]. Therefore, we propose symmetry-driven TCDE to tackle the scheduling problem. To provide a clear and intuitive understanding of the proposed TCDE algorithm, we present a flowchart and pseudocode that illustrate its workflow. Specifically,

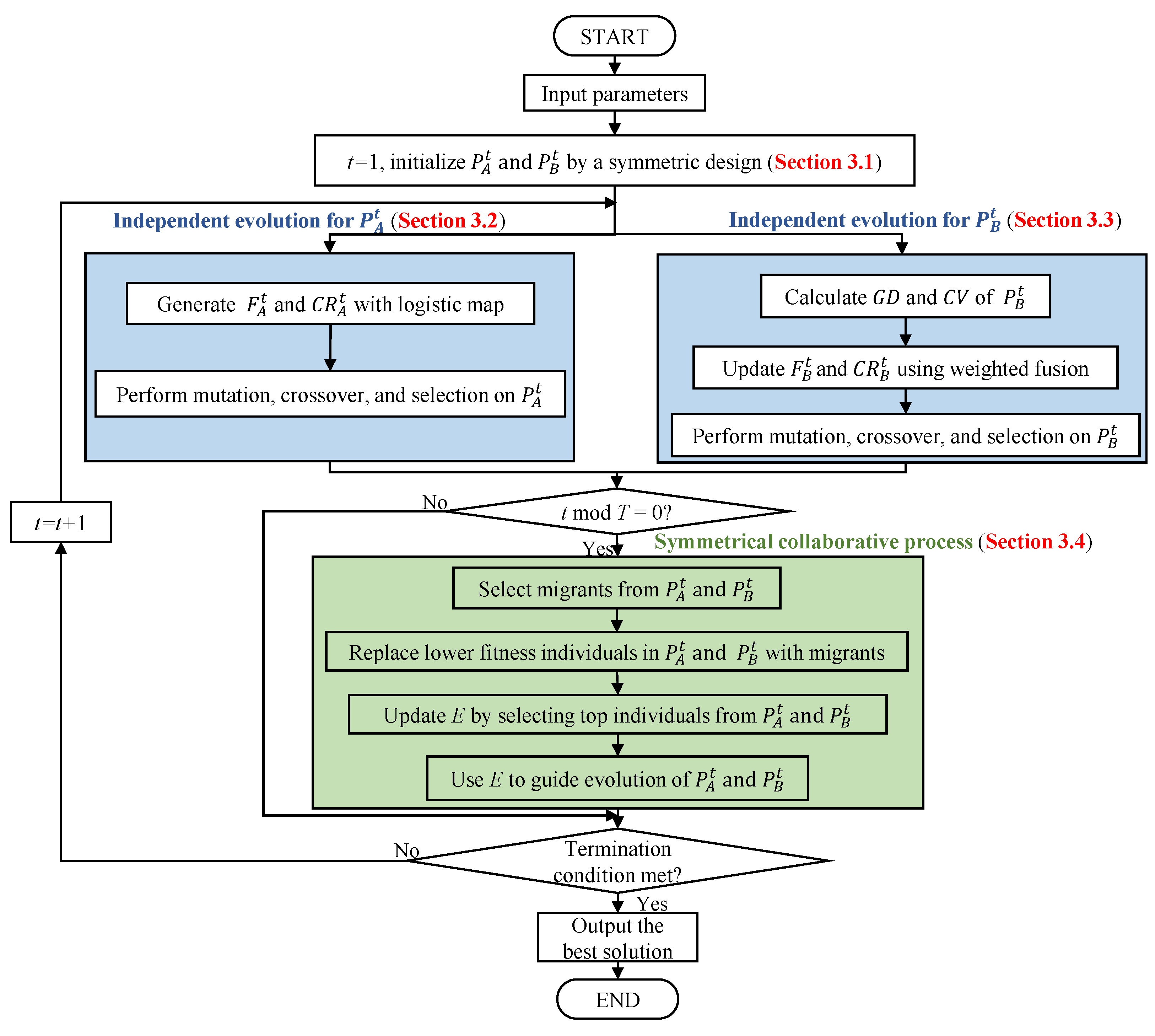

Figure 3 depicts the flow chart, while Algorithm 1 details the step-by-step implementation of TCDE. The key components and their interactions are detailed in subsequent subsections.

| Algorithm 1 Symmetry-driven TCDE |

Input: parameters

1: t ← 0
2: randomly initialize subpopulations A and B by a symmetric design (Section 3.1)
3: while termination condition not met do
4:     /****** Independent evolution for A (Section 3.2) ******/
5:     update the parameters of A using chaos theory:
6:         generate the chaotic scaling factor and crossover rate using the logistic map
7:         perform mutation, crossover, and selection on A with these parameters
8:     /****** Independent evolution for B (Section 3.3) ******/
9:     update the parameters of B by population diversity and convergence speed:
10:        calculate the population diversity and convergence speed of B
11:        update the scaling factor and crossover rate using weighted fusion
12:        perform mutation, crossover, and selection on B with these parameters
13:    /**** Symmetrical collaborative process (Section 3.4) ****/
14:    if t mod T = 0 then
15:        select migrants from A and B based on solution quality
16:        replace lower-fitness individuals in A and B with migrants
17:        update elite set E by selecting the top individuals from A and B
18:        use elite set E to guide the evolution of A and B
19:    end if
20:    t ← t + 1
21: end while

Output: the best solution

|

The TCDE algorithm operates through a structured workflow, as illustrated in Figure 3 and Algorithm 1. It begins by initializing two subpopulations, A and B, using the symmetric design described in Section 3.1. In each generation t, subpopulation A evolves using its own scaling factor and crossover rate, which are dynamically generated via a logistic map rooted in chaos theory (Section 3.2). Correspondingly, subpopulation B adjusts its scaling factor and crossover rate by evaluating its population diversity and convergence speed, as described in Section 3.3. This process creates parameter symmetry through distinct but equivalent adaptation mechanisms. Every T generations, migrants are exchanged between A and B to introduce new diversity, replacing lower-fitness individuals and updating the elite set E, which guides subsequent evolution (Section 3.4). This symmetrical interactive and collaborative mechanism not only enriches population diversity but also enhances global search capability and improves convergence speed.

3.1. Population Initialization

Given a predefined population size N and chromosome length L (where L equals the number of jobs multiplied by H, since each job contributes H operations), the population in generation t is represented by an N × L matrix. Each row of this matrix consists of L real numbers and is decoded into a scheduling solution. To ensure the feasibility of initial solutions, a random key-based encoding scheme is used [1]. Each entry is assigned a value within a range determined by the set of machines capable of processing the corresponding job j, so that decoding always yields an eligible machine.

Moreover, the operation sequence uses a structured encoding in which each main dyeing and re-dyeing operation of a job is marked by a unique symbol. Each job therefore appears exactly H times, representing its H operations. This method ensures that every chromosome corresponds to a feasible solution. For instance, the main operation of job 1 is represented by the number 1, and its re-dyeing operations are numbered sequentially up to H. Similarly, the main operation of job 2 is denoted by H + 1, and its re-dyeing operations are numbered from H + 2 to 2H, and so on.
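A minimal sketch of this encoding in Python. It assumes that a key for an eligible machine k is drawn from (k − 1, k], so that the ceiling of the key recovers k during decoding; the function names and the eligibility lists are hypothetical.

```python
import random


def operation_symbols(num_jobs, H):
    """Map each job j (1-based) to its H operation symbols.

    Job j owns symbols (j-1)*H + 1, ..., j*H; the first one is the main dyeing.
    """
    return {j: list(range((j - 1) * H + 1, j * H + 1)) for j in range(1, num_jobs + 1)}


def random_key_individual(eligible, H, rng):
    """Draw one chromosome of length len(eligible) * H.

    eligible[j-1] lists the machines (1-based) that may process job j.
    Assumption: a key for machine k lies in (k-1, k], so ceil(key) == k.
    """
    keys = []
    for machines in eligible:
        for _ in range(H):
            k = rng.choice(machines)
            keys.append(k - rng.random())  # random() is in [0, 1), so key is in (k-1, k]
    return keys


print(operation_symbols(3, 2))  # {1: [1, 2], 2: [3, 4], 3: [5, 6]}
rng = random.Random(42)
chromosome = random_key_individual([[1, 2], [1, 2], [2]], H=2, rng=rng)
```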

An illustrative example based on the instance in Section 2.3 follows. The initial population is shown as the matrix in Equation (16); for clarity, matrix values are rounded to four decimal places. The intervals for the matrix elements follow from the vessel capacities and job weights of that instance.

Algorithm 2 illustrates the decoding procedure. During decoding, the machine assignment for the h-th operation of job j is given by the ceiling of its key, and the operation sequence on each machine is sorted by the decimal parts of the keys. Operations of the same job are then unified under a single job symbol to form a feasible schedule based on the operation order. The fitness of each chromosome is evaluated using the expected value method for calculating job completion times, considering the first operation as the main dyeing process and subsequent operations as probabilistic re-dyeing processes.

| Algorithm 2 Decoding process |

Input: a chromosome

1: for k = 1 to m do
2:     decide all operations assigned to machine k by using the ceiling values of their keys, and obtain the operation sequence on machine k by sorting according to the decimal parts
3:     unify all operations of a job under the same job symbol, that is, change each operation symbol to its job symbol
4: end for
5: initialize the indices
6: while do
7:     while do
8:         if then
9:             find the unassigned job j, process it on the current machine, and interpret its operation sequence based on its order of occurrence
10:            update the indices
11:        end if
12:        advance the indices
13:    end while
14: end while

Output: the objective value of the solution |
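Steps 1–4 of Algorithm 2 can be sketched in Python as follows. This is a hypothetical illustration, not the authors' implementation: the chromosome below is invented, and the remaining steps (interpreting each job's operation order and building the timed schedule) are not reproduced. The decimal part is computed as `key - (machine - 1)`, which equals the usual decimal part for non-integer keys and places an integer key last on its machine.

```python
import math
from collections import defaultdict


def decode_machine_sequences(keys, H):
    """Assign operations to machines by ceiling and order them by decimal part.

    keys[i] is the random key of operation symbol i + 1; job j owns symbols
    (j-1)*H + 1 ... j*H. Returns (operation sequences, job sequences) per machine.
    """
    buckets = defaultdict(list)
    for idx, key in enumerate(keys):
        machine = math.ceil(key)            # integer part selects the vessel
        position = key - (machine - 1)      # decimal part in (0, 1] orders operations
        buckets[machine].append((position, idx + 1))

    op_seq, job_seq = {}, {}
    for machine in sorted(buckets):
        ordered = [op for _, op in sorted(buckets[machine])]
        op_seq[machine] = ordered
        job_seq[machine] = [(op - 1) // H + 1 for op in ordered]  # unify symbols
    return op_seq, job_seq


keys = [1.7, 0.4, 1.2, 0.9, 1.5, 0.1]  # hypothetical chromosome: 3 jobs, H = 2
ops, jobs = decode_machine_sequences(keys, H=2)
print(ops)   # {1: [6, 2, 4], 2: [3, 5, 1]}
print(jobs)  # {1: [3, 1, 2], 2: [2, 3, 1]}
```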

In the decoding process, each chromosome yields a feasible solution. For example, take the first row of the initial population matrix. The ceiling values of its keys assign operations 2, 4, and 6 to machine 1 and the remaining operations to machine 2. The decimal parts of the keys then order the operations on each machine. To maintain feasibility, the operations of the same job follow their predefined order. Finally, unifying all operations under the same job symbol yields the job sequences on machines 1 and 2, which are used to construct a feasible schedule. The Gantt chart for this decoded solution is shown in Figure 2; its total estimated tardiness is 1.6730.

In TCDE, the symmetric design of the two-population structure is evident from the initialization stage. Subpopulations A and B, of equal size, are randomly initialized to establish a symmetrical duality. This balanced configuration allows the two subpopulations to complement each other, mirroring the interactions in symmetric systems.

3.2. Independent Evolution for Subpopulation A

The evolution of subpopulation A is driven independently by parameters that are dynamically adjusted via a chaos theory-based logistic map. This approach leverages the ergodicity and stochasticity of chaotic dynamics to enhance global exploration and avoid being trapped in local optima, thereby improving algorithmic performance and efficiency in solving complex optimization problems.

3.2.1. Generate Chaotic Scaling Factor and Crossover Rate Using the Logistic Map

In basic DE [27], the parameters (including the scaling factor F and the crossover rate) are constant, yet they significantly affect the performance of DE. To address this, a self-adaptive mechanism based on chaos theory can be embedded into DE [35]. Chaotic sequences, known for their ergodicity, stochasticity, and irregularity, enhance the algorithm's exploitation and convergence in the search space.

In this work, the logistic map, a simple dynamical system exhibiting chaotic behavior, is used as the self-adaptive mechanism for the scaling factor and crossover rate of subpopulation A. These parameters are updated using the logistic map in Equation (17):

$$z_{t+1} = \mu \, z_t \, (1 - z_t) \qquad (17)$$

where $z_t$ is the chaotic number used for the scaling factor and crossover rate, and $\mu$ is a control parameter. Equation (17) generates a deterministic chaotic sequence in the range (0, 1); in particular, with $\mu = 4$ the map is fully chaotic on (0, 1), provided that the initial value avoids degenerate points such as 0.25, 0.5, and 0.75. During evolution, the scaling factor and crossover rate are initialized and then dynamically adjusted in each iteration via this chaotic mechanism. This strategy diminishes the influence of the initial values on the algorithm's performance, enhances global convergence, and improves its capacity to avoid local optima.
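A minimal Python sketch of the logistic-map generator. It assumes, as one common choice, that the chaotic value is used directly as the scaling factor and the crossover rate, and that each parameter gets its own sequence; the function name and seeds are hypothetical.

```python
def logistic_map_sequence(z0, length, mu=4.0):
    """Return `length` iterates of z_{t+1} = mu * z_t * (1 - z_t), starting from z0.

    z0 must lie in (0, 1) and avoid 0.25, 0.5 and 0.75, which lead to fixed or
    degenerate orbits when mu = 4.
    """
    if not 0.0 < z0 < 1.0 or z0 in (0.25, 0.5, 0.75):
        raise ValueError("choose z0 in (0, 1) outside {0.25, 0.5, 0.75}")
    values, z = [], z0
    for _ in range(length):
        z = mu * z * (1.0 - z)
        values.append(z)
    return values


# Hypothetical usage: one chaotic sequence per parameter of subpopulation A.
F_values = logistic_map_sequence(0.31, 5)
CR_values = logistic_map_sequence(0.67, 5)
```

Separate seeds matter: a seed z0 and its mirror 1 − z0 produce identical sequences, so choosing, e.g., 0.3 and 0.7 would make the two parameters move in lockstep.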

3.2.2. Mutation

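In subpopulation A, the 'DE/rand/1' mutation operator is applied to generate the mutant vector $v_i^t$. For each target vector $x_i^t$, the mutant vector is generated using the scaling factor F and three distinct, randomly selected target vectors $x_{r_1}^t$, $x_{r_2}^t$, and $x_{r_3}^t$ (with $r_1$, $r_2$, $r_3$, and $i$ mutually different), as shown in Equation (18):

$$v_i^t = x_{r_1}^t + F \left( x_{r_2}^t - x_{r_3}^t \right) \qquad (18)$$

Here, the scaling factor F controls the amplification of the differential variation $x_{r_2}^t - x_{r_3}^t$, with $F \in [0, 2]$ [27].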

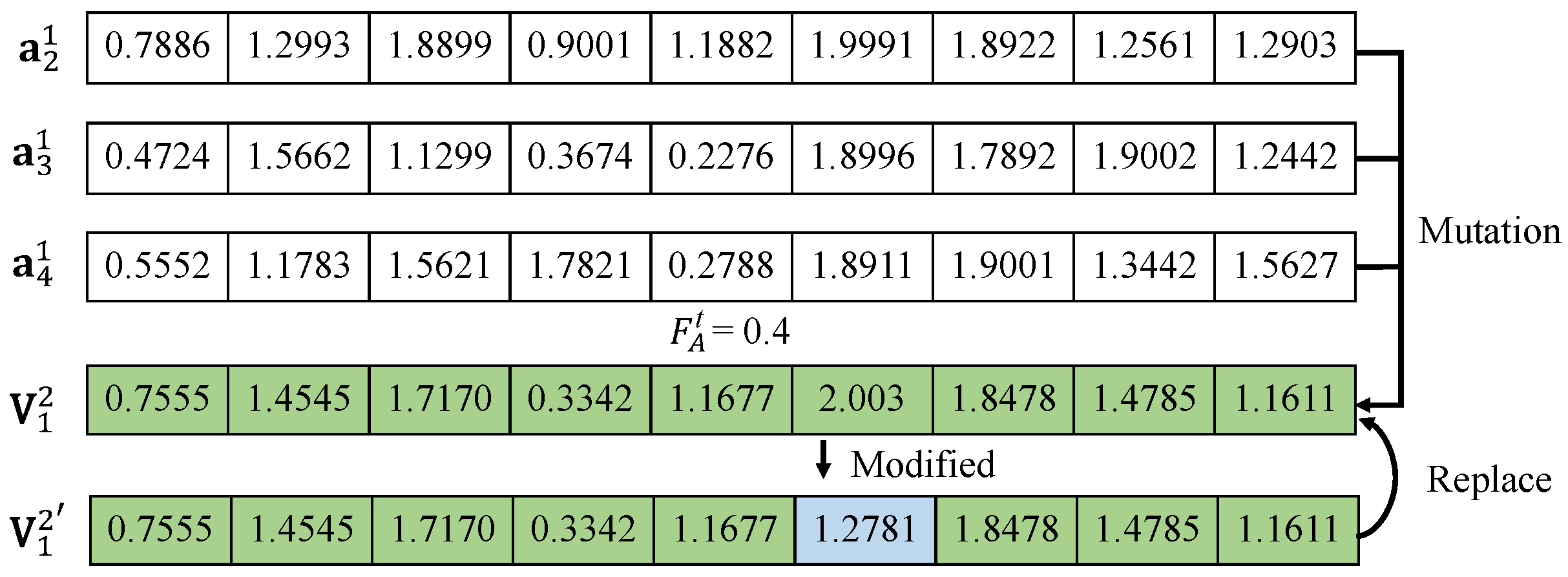

However, the mutation operator may generate vectors that violate capacity constraints, which requires a repair step in which only the violating elements are regenerated. For example, Figure 4 illustrates the mutation operator and the repair step applied to the initial population matrix. With the selected target and random vectors and the given scaling factor, Equation (18) yields an initial mutant vector. Because of the capacity constraint of the job concerned, its elements must fall within the key range of its eligible vessel; one element exceeds this range and is thus infeasible. A new value of 1.2781 is randomly drawn from the feasible range to replace it, after which all elements of the repaired vector satisfy the capacity constraints defined by Equation (14).
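The DE/rand/1 step of Equation (18) and the element-wise repair can be sketched in Python as follows. The repair assumes that each element's feasible keys form a single half-open interval (low, high], for example derived from the vessels eligible for the job owning that operation; the function names are hypothetical.

```python
import random


def rand1_mutation(population, i, F, rng):
    """DE/rand/1: v = x_r1 + F * (x_r2 - x_r3), with r1, r2, r3 distinct and != i."""
    candidates = [k for k in range(len(population)) if k != i]
    r1, r2, r3 = rng.sample(candidates, 3)  # needs a population of at least 4
    x1, x2, x3 = population[r1], population[r2], population[r3]
    return [a + F * (b - c) for a, b, c in zip(x1, x2, x3)]


def repair(vector, bounds, rng):
    """Regenerate only the elements that lie outside their feasible key range.

    bounds[d] = (low, high) is the half-open range (low, high] allowed for element d.
    """
    repaired = []
    for value, (low, high) in zip(vector, bounds):
        if low < value <= high:
            repaired.append(value)  # feasible: keep the mutant value
        else:
            repaired.append(high - rng.random() * (high - low))  # redraw in (low, high]
    return repaired
```

Redrawing only the violating elements keeps as much of the differential information as possible, which matches the repair described above.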

3.2.3. Crossover

After the mutation phase, a crossover operator controlled by the crossover rate CR is applied to obtain a trial vector $u_i^t$, whose elements are selected from the mutant vector $v_i^t$ and the target vector $x_i^t$. Each trial element is generated using Equation (19):

$$u_{i,l}^t = \begin{cases} v_{i,l}^t, & \text{if } \mathrm{rand}_l \le CR \text{ or } l = l_{\mathrm{rand}} \\ x_{i,l}^t, & \text{otherwise} \end{cases} \qquad (19)$$

Here, $\mathrm{rand}_l$ is a random number in (0, 1), and $l_{\mathrm{rand}}$ is an index chosen randomly from {1, …, L}, which ensures that $u_i^t$ includes at least one element from $v_i^t$. For instance, using the example in Section 3.2.2 with a given crossover rate and given random numbers, the trial elements are calculated via Equation (19), as depicted in Figure 5.
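Equation (19) is the standard binomial crossover of DE. A minimal Python sketch, with a hypothetical function name:

```python
import random


def binomial_crossover(target, mutant, CR, rng):
    """Build a trial vector: take mutant[l] if rand_l <= CR or l == l_rand."""
    l_rand = rng.randrange(len(target))  # guarantees at least one mutant element
    return [
        m if (rng.random() <= CR or l == l_rand) else x
        for l, (x, m) in enumerate(zip(target, mutant))
    ]
```

Because every element is copied from either the target or the (repaired) mutant, the trial vector inherits element-wise feasibility from both parents.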

3.2.4. Greedy Selection

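After the crossover phase, a selection process is performed to update the target vector. The target vector $x_i^t$ is compared with its corresponding trial vector $u_i^t$ using a one-to-one greedy criterion: if the objective value of $u_i^t$ is no larger than that of $x_i^t$, then $x_i^t$ is replaced by $u_i^t$; otherwise, $x_i^t$ is retained. This selection process is formalized in Equation (20):

$$x_i^{t+1} = \begin{cases} u_i^t, & \text{if } f(u_i^t) \le f(x_i^t) \\ x_i^t, & \text{otherwise} \end{cases} \qquad (20)$$

where f denotes the total estimated tardiness of the decoded schedule.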
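The greedy rule of Equation (20) is a one-liner. In this sketch, the name `greedy_select` and the toy objective are hypothetical; in TCDE the objective would be the total estimated tardiness of the decoded schedule.

```python
def greedy_select(target, trial, objective):
    """One-to-one greedy selection (minimization): the trial wins ties."""
    return trial if objective(trial) <= objective(target) else target


print(greedy_select([3.0], [1.0], sum))  # [1.0]
```

Letting the trial win ties helps the population drift across plateaus of equal tardiness instead of stagnating.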

3.3. Independent Evolution for Subpopulation B

The evolution of subpopulation B is driven by dynamically adjusting its scaling factor and crossover rate based on population diversity and convergence speed. This adaptive mechanism balances exploration and exploitation, with an emphasis on enhancing local search efficiency. Mutation, crossover, and selection on subpopulation B use the updated parameters and are otherwise performed as for subpopulation A.

3.3.1. Population Diversity Metric

The population diversity of subpopulation B is measured as the average distance between its individuals, which reflects the overall diversity of the subpopulation.

3.3.2. Convergence Speed Metric

The convergence speed is assessed by comparing the best fitness values in the current and previous generations; a smaller value indicates faster convergence.
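Because the exact formulas are not reproduced here, the following Python sketch shows one plausible reading of both metrics, stated as assumptions: diversity as the mean pairwise Euclidean distance between individuals, and convergence speed as the ratio of the current to the previous best objective value (for minimization, a smaller ratio means faster improvement). Function names are hypothetical.

```python
import math
from itertools import combinations


def population_diversity(population):
    """Mean pairwise Euclidean distance between individuals (assumed form)."""
    pairs = list(combinations(population, 2))
    if not pairs:
        return 0.0
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


def convergence_speed(best_now, best_prev):
    """Ratio of best objective values; smaller means faster improvement (assumed form)."""
    if best_prev == 0:
        return 1.0  # a zero-tardiness best cannot improve further
    return best_now / best_prev
```

Note that the pairwise form costs O(N²L) per generation for N individuals of length L; a distance-to-centroid variant would reduce this to O(NL).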

3.3.3. Parameter Adjustment Strategy

Based on the population diversity and convergence speed, the scaling factor and crossover rate are adaptively adjusted using a weighted fusion of the two indicators; the adjustment formula for the crossover rate is derived in the same way as that for the scaling factor. This strategy adjusts both parameters dynamically according to the current state of the population. When diversity is high (above its upper threshold), the scaling factor is decreased to enhance local search ability. Conversely, when diversity is low (below its lower threshold), the scaling factor is increased to improve global search ability. In addition, the crossover rate is adjusted based on convergence speed: it is increased when convergence is fast (below its threshold) to prevent premature convergence, and decreased when convergence is slow (above its threshold) to accelerate the process. The two fusion weights are both set to 0.5 to balance the influences of diversity and convergence speed.

To simplify the parameter space, a relative diversity threshold is introduced to unify the lower and upper diversity thresholds, both of which are defined relative to the average genetic diversity over the past t generations. This keeps the lower threshold positive and bounds the upper threshold, maintaining a reasonable diversity window around the historical average.
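The adjustment directions described above can be illustrated with a rule-based sketch. Everything beyond those directions is a hypothetical assumption: the thresholds are taken as (1 − δ) and (1 + δ) times the historical average diversity, the step size, convergence thresholds, and clipping range are invented defaults, and the paper's weighted-fusion formula is not reproduced.

```python
def adapt_parameters(F, CR, diversity, speed, avg_diversity, delta=0.2,
                     fast=0.95, slow=0.999, step=0.1, lo=0.1, hi=0.9):
    """Illustrative update of the scaling factor F and crossover rate CR of B.

    Only the directions of change follow the text; delta, fast, slow, step and
    the clipping range [lo, hi] are hypothetical defaults.
    """
    d_low = (1.0 - delta) * avg_diversity   # positive as long as 0 < delta < 1
    d_high = (1.0 + delta) * avg_diversity
    if diversity > d_high:
        F *= 1.0 - step   # high diversity: smaller steps for local search
    elif diversity < d_low:
        F *= 1.0 + step   # low diversity: larger steps for global search
    if speed < fast:
        CR *= 1.0 + step  # fast convergence: raise CR to avoid premature convergence
    elif speed > slow:
        CR *= 1.0 - step  # slow convergence: lower CR to accelerate
    return min(max(F, lo), hi), min(max(CR, lo), hi)
```

The single relative parameter `delta` replaces two absolute thresholds, which mirrors the motivation given for the relative diversity threshold.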

By dynamically adjusting and based on the population’s diversity and convergence speed, this self-adaptive mechanism enhances the algorithm’s local search ability while maintaining a balance between global and local search capabilities. This ensures more efficient exploration of the search space and improves the overall performance.

3.4. Symmetrical Collaborative Process

In the symmetrical collaborative process, TCDE employs a coevolution mechanism to strengthen global search capability and accelerate convergence. This mechanism promotes information exchange and maintains diversity through bidirectional migration of individuals and information sharing between subpopulations A and B.

3.4.1. Individual Bidirectional Migration

Bidirectional migration of individuals is conducted at fixed intervals, referred to as migration intervals, to facilitate information exchange between subpopulations A and B. Specifically, at every migration interval T, a fixed number K of individuals is selected from each subpopulation based on fitness. The top K individuals of A are migrated to B, replacing the K individuals with the lowest fitness in B. Similarly, the top K individuals of B are migrated to A, replacing the K individuals with the lowest fitness in A. This bidirectional migration ensures that both subpopulations maintain a high fitness level while receiving fresh genetic material.

Mathematically, the migration process can be described as follows:

$$A \leftarrow (A \setminus \mathcal{W}_A) \cup \mathcal{S}_B, \qquad B \leftarrow (B \setminus \mathcal{W}_B) \cup \mathcal{S}_A$$

where $\mathcal{W}_A$ and $\mathcal{W}_B$ are the subsets of K individuals with lower fitness in A and B, respectively, and $\mathcal{S}_A$ and $\mathcal{S}_B$ are the corresponding subsets of K individuals with higher fitness. To balance simplicity and effectiveness, the number of migrants K is kept fixed. This setting ensures an appropriate exchange of individuals between subpopulations, which promotes diversity while maintaining computational efficiency.
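A minimal Python sketch of the bidirectional migration for a minimization objective (lower total estimated tardiness means fitter). Both selections use the pre-migration state, so the exchange is symmetric; the function name and toy data are hypothetical.

```python
def migrate(pop_a, pop_b, objective, k):
    """The k fittest of each subpopulation replace the k least fit of the other."""
    ranked_a = sorted(pop_a, key=objective)
    ranked_b = sorted(pop_b, key=objective)
    migrants_a = [list(x) for x in ranked_a[:k]]   # copies of A's best
    migrants_b = [list(x) for x in ranked_b[:k]]   # copies of B's best
    new_a = ranked_a[: len(ranked_a) - k] + migrants_b
    new_b = ranked_b[: len(ranked_b) - k] + migrants_a
    return new_a, new_b


pop_a = [[3.0], [1.0], [2.0]]
pop_b = [[30.0], [10.0], [20.0]]
print(migrate(pop_a, pop_b, objective=sum, k=1))
# ([[1.0], [2.0], [10.0]], [[10.0], [20.0], [1.0]])
```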

3.4.2. Information-Sharing Mechanism

The information-sharing mechanism aims to increase subpopulation diversity and prevent premature convergence. It consists of two key components:

Update the elite set E by selecting top individuals from A and B: A shared elite set E is formed by selecting elite individuals from each subpopulation. These elite individuals guide the evolution of both subpopulations, ensuring that high-quality genetic information is propagated.

Use the elite set E to guide the evolution of A and B: During the guide phase, individuals from the shared elite set E are introduced into the guide operation of each subpopulation. This helps maintain diversity and improves the overall search capability.

To make the elite set size adaptive, we propose a self-adaptive mechanism in which the size of the elite set is calculated dynamically from the population diversity relative to the maximum possible diversity. This rule ensures that the elite set size decreases as population diversity increases, which helps maintain a balance between exploration and exploitation.

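The guide operation for an individual x in either subpopulation moves x toward an elite individual e randomly selected from E, with the step size controlled by a scaling factor. If the guided individual has an objective value no larger than that of x, it replaces x; otherwise, x is retained.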
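The elite-set sizing and guide operation can be sketched as follows, under two labeled assumptions: the elite size shrinks linearly with the ratio of current to maximum diversity, and the guide step takes the form x' = x + F_e (e − x) followed by greedy acceptance. Function names and parameters are hypothetical.

```python
import random


def elite_size(diversity, max_diversity, max_size, min_size=1):
    """Elite-set size that shrinks linearly as diversity grows (assumed form)."""
    ratio = min(max(diversity / max_diversity, 0.0), 1.0)  # requires max_diversity > 0
    return max(min_size, round(max_size * (1.0 - ratio)))


def guide(x, elite, F_e, objective, rng):
    """Move x toward a random elite e: x' = x + F_e * (e - x); keep x' if not worse."""
    e = rng.choice(elite)
    candidate = [xi + F_e * (ei - xi) for xi, ei in zip(x, e)]
    return candidate if objective(candidate) <= objective(x) else x
```

A step toward e can land a key between two eligible intervals when a job's eligible vessels are not contiguous, so a repair step such as the one described in Section 3.2.2 may still be needed.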

The symmetrical coevolution process, facilitated by individual bidirectional migration and information sharing, enables the coordinated evolution of both subpopulations. This process not only maintains structural equilibrium through reciprocal knowledge transfer but also enriches diversity and accelerates convergence.

In summary, the proposed TCDE algorithm embodies the concept of symmetry through its dual-subpopulation structure. The two subpopulations, A and B, represent two symmetrical yet distinct exploration strategies: A focuses on global exploration using chaotic parameter adaptation, while B emphasizes local exploitation with adaptive control based on population diversity and convergence speed. The symmetrical collaboration between A and B is further enhanced by the periodic migration of top individuals and the guidance of an elite set, which facilitates the exchange of genetic information and maintains population diversity. This symmetrical design enables the algorithm to navigate the complex search space efficiently and converge on high-quality solutions.

3.5. Computational Complexity Analysis

The computational complexity of the TCDE algorithm is analyzed through its core components. Population initialization creates two subpopulations, A and B, of equal size, and the cost per individual grows linearly with the chromosome length L. During the independent evolution phase, the chaotic parameter adaptation of A via the logistic map requires only a constant number of operations per generation, while mutation, crossover, and selection in A require a number of operations proportional to the size of A times L per generation, in addition to fitness evaluations. For B, calculating the population diversity and convergence speed adds the cost of the distance computations, and parameter adaptation and evolution in B add operations of the same order as those in A. The symmetrical collaboration mechanism, including migrant selection and elite-set updates, adds operations only once every migration interval T. Over G generations, the total cost is therefore G times the per-generation cost. This places TCDE in the same complexity class as other population-based metaheuristics, making it suitable for large-scale instances with parallelization.

4. Computational Experiments and Results

The experiments were conducted in MATLAB R2023a using the CVX solver (version 3.0) for exact solutions in small-scale instances. All tests ran on a workstation with an Intel Core i9-13900K CPU (3.0 GHz) and 64 GB RAM.

MATLAB was selected for implementation primarily because of its strengths in handling the problem’s key components: (1) efficient matrix operations for the iterations of the population-based metaheuristic (TCDE); (2) seamless integration with the CVX toolbox (used for validating small-scale instances via convex optimization); and (3) built-in functions for statistical analysis. These features align well with the needs of our scheduling model, which involves complex numerical computations and comparative experiments.

4.1. Data Description and Comparative Algorithms

The dataset is grounded in the industrial data characteristics from our prior study [35], which analyzed a lace textile factory in Fuzhou, China. Drawing upon factory records and worker expertise, the re-dyeing constraints are modeled to reflect real-world operational limits, allowing up to three re-dyeing operations per job (H = 3). The re-dyeing probabilities follow a uniform distribution, while the processing time-reduction coefficients are set based on operator feedback during repeated rework.
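To make the rework model concrete, the sketch below computes the expected processing time of one job and samples realizations of it. It assumes, for illustration only, that each dyeing fails inspection independently with probability `q`, that the h-th re-dye takes a fraction `alphas[h-1]` of the base time `p`, and that at most H = `len(alphas)` re-dyes occur. The coefficient values in the usage lines are hypothetical.

```python
import random
from typing import Sequence

def expected_time(p: float, q: float, alphas: Sequence[float]) -> float:
    """Expected total dyeing time: base run p plus up to H = len(alphas) re-dyes,
    where re-dye h happens with probability q**h and takes alphas[h-1] * p."""
    return p * (1.0 + sum(q ** h * a for h, a in enumerate(alphas, start=1)))

def sample_time(p: float, q: float, alphas: Sequence[float], rng: random.Random) -> float:
    """Draw one realization: keep re-dyeing while inspection fails, at most H times."""
    total = p
    for a in alphas:
        if rng.random() >= q:  # inspection passed, so no further re-dyeing
            break
        total += a * p
    return total

if __name__ == "__main__":
    alphas = (0.8, 0.6, 0.5)  # hypothetical reduction coefficients, H = 3
    rng = random.Random(42)
    est = sum(sample_time(10.0, 0.2, alphas, rng) for _ in range(100_000)) / 100_000
    print(expected_time(10.0, 0.2, alphas), est)  # analytical value is about 11.88
```

The closed form suits a fast fitness evaluation, while the sampler is useful for checking schedules against simulated rework outcomes.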

To comprehensively evaluate the performance of TCDE, computational experiments are designed using two types of datasets, industrial-derived synthetic data and real-world industrial data, each at small and large scales. Synthetic instances are constructed by abstracting empirical patterns from real industrial operations, while the real-world datasets, collected from the lace textile factory, validate the practical applicability of TCDE. All small-scale instances are additionally solved with CVX to obtain optimal benchmarks.

To the best of our knowledge, no existing method addresses the considered parallel machine scheduling problem with probabilistic re-dyeing operations, sequence-dependent setup times, and machine eligibility constraints. To demonstrate the advantages of the proposed TCDE algorithm, three comparative algorithms are selected: the hybrid differential evolution algorithm (HDE [35]), the fuzzy genetic algorithm (FGA [36]), and the modified metaheuristics for random rework (MMRR [17]).

Both HDE and FGA address parallel machine scheduling problems with multiple constraints. HDE minimizes total tardiness in deterministic environments, whereas FGA minimizes total weighted tardiness by integrating fuzzy logic. Both have been successfully applied to multi-constraint scheduling problems and can therefore be adapted to the considered problem. MMRR, in turn, is designed for the unrelated parallel machine scheduling problem with random rework. For this study, MMRR was adapted to include color-family constraints and sequence-dependent setup times, and its rework model was adjusted to reflect the finite number of re-dyeing operations and the processing-time reduction pattern.

To guarantee a fair comparison, all compared algorithms (HDE, FGA, and MMRR) were re-implemented by the authors strictly according to the parameter settings and procedural descriptions in their original papers. No third-party or built-in solver routines were used for any of the metaheuristics.

4.2. Parameter Settings

In this study, the stopping criterion for all algorithms was a fixed number of consecutive non-improving iterations. This choice was based on preliminary tests showing that TCDE and the comparison algorithms converge effectively within this number of iterations.
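A stopping rule based on consecutive non-improving iterations can be sketched as follows. The stall limit `max_stall` stands in for the limit used in the experiments, and `step` is a hypothetical callback that runs one generation and returns the best objective value found so far.

```python
from typing import Callable, Tuple

def run_until_stall(step: Callable[[], float], max_stall: int) -> Tuple[float, int]:
    """Call `step` until the best objective value (minimization) fails to
    improve for `max_stall` consecutive generations; return (best, generations)."""
    best, stall, generations = float("inf"), 0, 0
    while stall < max_stall:
        value = step()
        generations += 1
        if value < best:
            best, stall = value, 0  # improvement resets the stall counter
        else:
            stall += 1
    return best, generations

if __name__ == "__main__":
    trace = iter([5.0, 4.0, 4.0, 3.0, 3.0, 3.0, 3.0])  # toy best-so-far values
    print(run_until_stall(lambda: next(trace), max_stall=3))
```

Applying the same rule to every algorithm lets each one stop when it stagnates rather than after an arbitrary fixed budget.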

The other parameters of TCDE, namely the population size N, migration interval T, diversity threshold, chaos control parameter, initial scaling factor, and initial crossover rate, were tuned using the Taguchi method [37] on a representative instance. For fairness, the initial scaling factors of the two subpopulations were set to the same value, as were their initial crossover rates.

Table 5 lists three candidate levels for each parameter, arranged in an orthogonal array. Each parameter combination was tested independently 10 times, and the mean TET values were collected as the response variable.

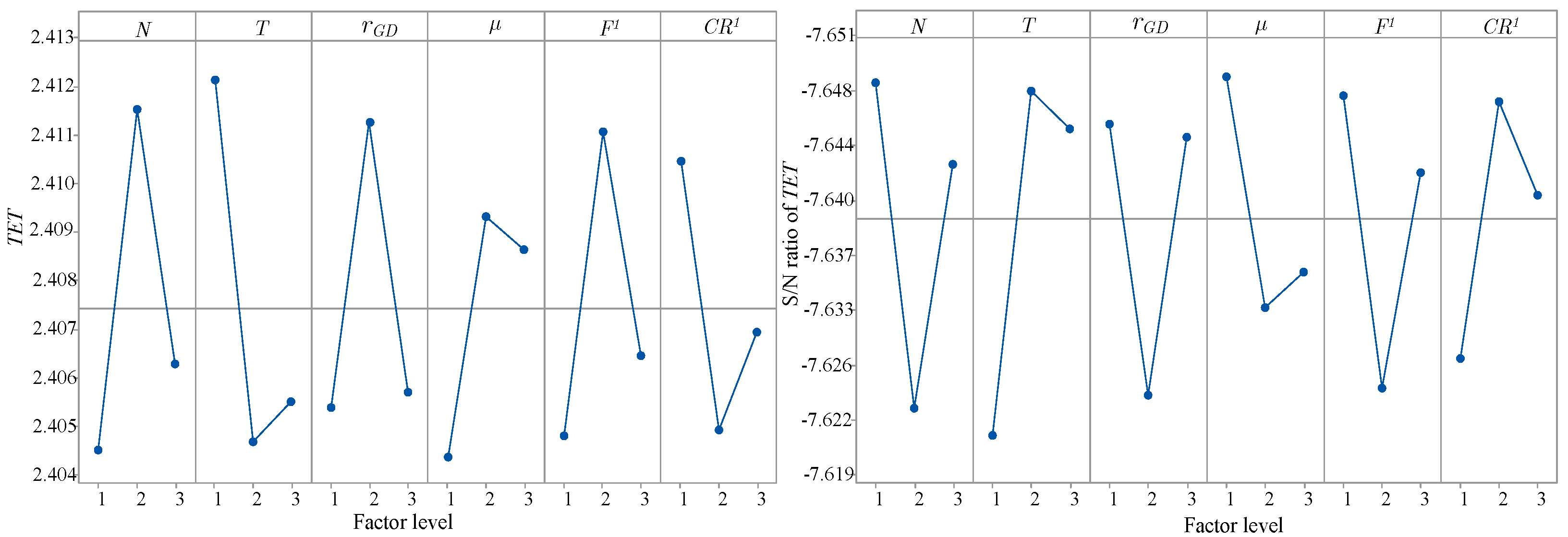

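Figure 6 shows the mean TET and the corresponding S/N ratios. Since a smaller TET is better, the smaller-the-better S/N ratio is used:

$$
\mathrm{S/N} = -10\log_{10}\left(\frac{1}{n}\sum_{i=1}^{n}\mathrm{TET}_i^{2}\right),
$$

where $\mathrm{TET}_i$ is the TET obtained in the $i$-th of the $n = 10$ independent runs.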

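As shown in Figure 6, when the levels of N, T, the diversity threshold, the chaos control parameter, the initial scaling factor, and the initial crossover rate are set to 2, 1, 2, 2, 2, and 1, respectively, TCDE yields a smaller mean TET and a larger S/N ratio than with any other level combination. Consequently, the parameter values corresponding to these levels are adopted as the optimal combination.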
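Selecting levels from a Taguchi experiment amounts to averaging the S/N ratio of all runs at each level of each factor and keeping the level with the largest mean. The sketch below assumes the smaller-the-better S/N ratio commonly used with the Taguchi method and, for brevity, uses a small two-level L4 array rather than the three-level array of Table 5; `best_levels` is a hypothetical helper name.

```python
import math
from typing import Dict, List, Sequence

def sn_smaller_is_better(values: Sequence[float]) -> float:
    """Taguchi smaller-the-better S/N ratio: -10 * log10(mean of squared responses)."""
    return -10.0 * math.log10(sum(v * v for v in values) / len(values))

def best_levels(design: List[Sequence[int]], responses: List[Sequence[float]]) -> List[int]:
    """For each factor (column of the orthogonal array), average the S/N ratios of
    the runs at each level and return the level with the largest mean S/N."""
    sn = [sn_smaller_is_better(r) for r in responses]
    chosen = []
    for f in range(len(design[0])):
        by_level: Dict[int, List[float]] = {}
        for row, ratio in zip(design, sn):
            by_level.setdefault(row[f], []).append(ratio)
        chosen.append(max(by_level, key=lambda lvl: sum(by_level[lvl]) / len(by_level[lvl])))
    return chosen

if __name__ == "__main__":
    l4 = [[1, 1, 1], [1, 2, 2], [2, 1, 2], [2, 2, 1]]  # L4 orthogonal array, 3 factors
    tet_runs = [[4.0], [1.0], [8.0], [4.0]]            # toy TET results per run
    print(best_levels(l4, tet_runs))
```

Because the squared responses are averaged, the S/N ratio penalizes both a high mean TET and a large spread across runs, which is why it complements the mean TET in the selection.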

Regarding HDE, FGA, and MMRR, all parameters except the stopping condition are taken directly from [17, 35, 36]. These settings were validated via Taguchi-based analysis in the original studies and were shown there to deliver the best performance in most instances.

4.3. Ablation Experiment on Synthetic Datasets

To test the effectiveness of TCDE and its components, an ablation experiment was performed on both small-scale and large-scale synthetic datasets. Three variants of TCDE were designed to evaluate the contribution of each key component. DE1 removes the chaotic parameter adaptation in the first subpopulation, specifically disabling the logistic-map-driven dynamic adjustment of the scaling factor and crossover rate; comparing TCDE with DE1 isolates the impact of chaotic exploration on global search performance. DE2 eliminates the diversity-convergence adaptive control mechanism in the second subpopulation, freezing the parameter update rules based on population diversity and convergence speed; this variant quantifies how much adaptive local exploitation contributes to preventing premature convergence. DE3 entirely disables the periodic exchange of elite individuals between the two subpopulations, along with the guidance from the adaptive elite set E; contrasting TCDE with DE3 reveals the synergistic effects of knowledge transfer and coevolution across subpopulations.

4.3.1. Small-Scale Synthetic Datasets

Table 6 reports the CVX optimal solutions and computation times, together with the best objective values of TCDE and its three variants, on the small-scale synthetic datasets. TET is measured in hours and computation time in seconds. Instance size increases gradually across the instances. Each algorithm was run independently 10 times. Instance 21 is marked as lacking a CVX solution because CVX could not find the optimal solution within two hours.

As depicted in Table 6, TCDE matches the optimal solutions derived by CVX in all instances, while DE1 fails to reach optimality in five cases (e.g., Instances 5, 6, and 12) and has a 10.67% higher average TET than TCDE. DE2 suffers from premature convergence, particularly in complex setups such as Instance 15, resulting in a 1.57% higher average TET. Although DE3 reaches optimality in all 20 instances with CVX benchmarks, its performance deteriorates for larger configurations (e.g., Instance 21), yielding a higher average TET than TCDE. Therefore, TCDE achieves the best objective values, albeit with a relatively longer computational time.

4.3.2. Large-Scale Synthetic Datasets

Table 7 presents the average objective values and computational times of TCDE, DE1, DE2, and DE3 on the large-scale synthetic datasets. As shown in Table 7, TCDE significantly outperforms its variants, reducing the average TET by 59.94%, 50.74%, and 43.15% relative to DE1, DE2, and DE3, respectively. For example, in Instance 3, TCDE achieves a TET of 1.0 h, whereas DE1, DE2, and DE3 yield 13.4, 18.1, and 12.7 h, respectively. Although TCDE takes on average 27.33%, 29.26%, and 57.06% longer to compute than DE1, DE2, and DE3, it maintains superior solution quality. In complex scenarios such as Instance 10, TCDE reduces TET by 32.42% compared to DE3.

Overall, the integration of chaos-driven exploration, diversity-convergence exploitation, and the collaborative mechanism allows TCDE to overcome the limitations of its ablated variants. These results confirm that chaotic parameter adaptation, diversity-convergence adaptive control, and the symmetrical collaborative mechanism each contribute to the overall performance of the algorithm. Although computational costs increase moderately, these strategies collectively enable near-optimal solutions with manageable computational overhead.

Although TCDE requires more computational time than some of its variants (e.g., DE3), the additional runtime is well justified by the significant improvement in solution quality. In practical applications such as lace-dyeing production, reducing TET by just one hour can save approximately USD 1200 in penalty costs, whereas the additional computational expense remains below USD 0.10 per hour. Thus, the trade-off strongly favors TCDE in terms of overall economic efficiency.
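The economic argument is simple arithmetic. The sketch below takes the quoted figures (about USD 1200 of penalty per hour of TET, and at most USD 0.10 per hour of extra computation, read here as a cost per hour of computing time) and applies them to large-scale synthetic Instance 3, where TCDE’s TET is 11.7 h lower than DE3’s (1.0 h versus 12.7 h). The one hour of extra computation is a deliberately generous hypothetical, not a measured value.

```python
def net_benefit_usd(tet_saved_h: float, extra_compute_h: float,
                    penalty_per_tet_h: float = 1200.0,
                    compute_cost_per_h: float = 0.10) -> float:
    """Tardiness penalty avoided minus the (upper-bound) cost of extra computation."""
    return tet_saved_h * penalty_per_tet_h - extra_compute_h * compute_cost_per_h

if __name__ == "__main__":
    saved = 12.7 - 1.0  # DE3 vs. TCDE on large-scale Instance 3
    print(net_benefit_usd(saved, extra_compute_h=1.0))  # roughly USD 14,040 minus USD 0.10
```

Even under this generous assumption, the computing cost is several orders of magnitude smaller than the avoided penalty.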

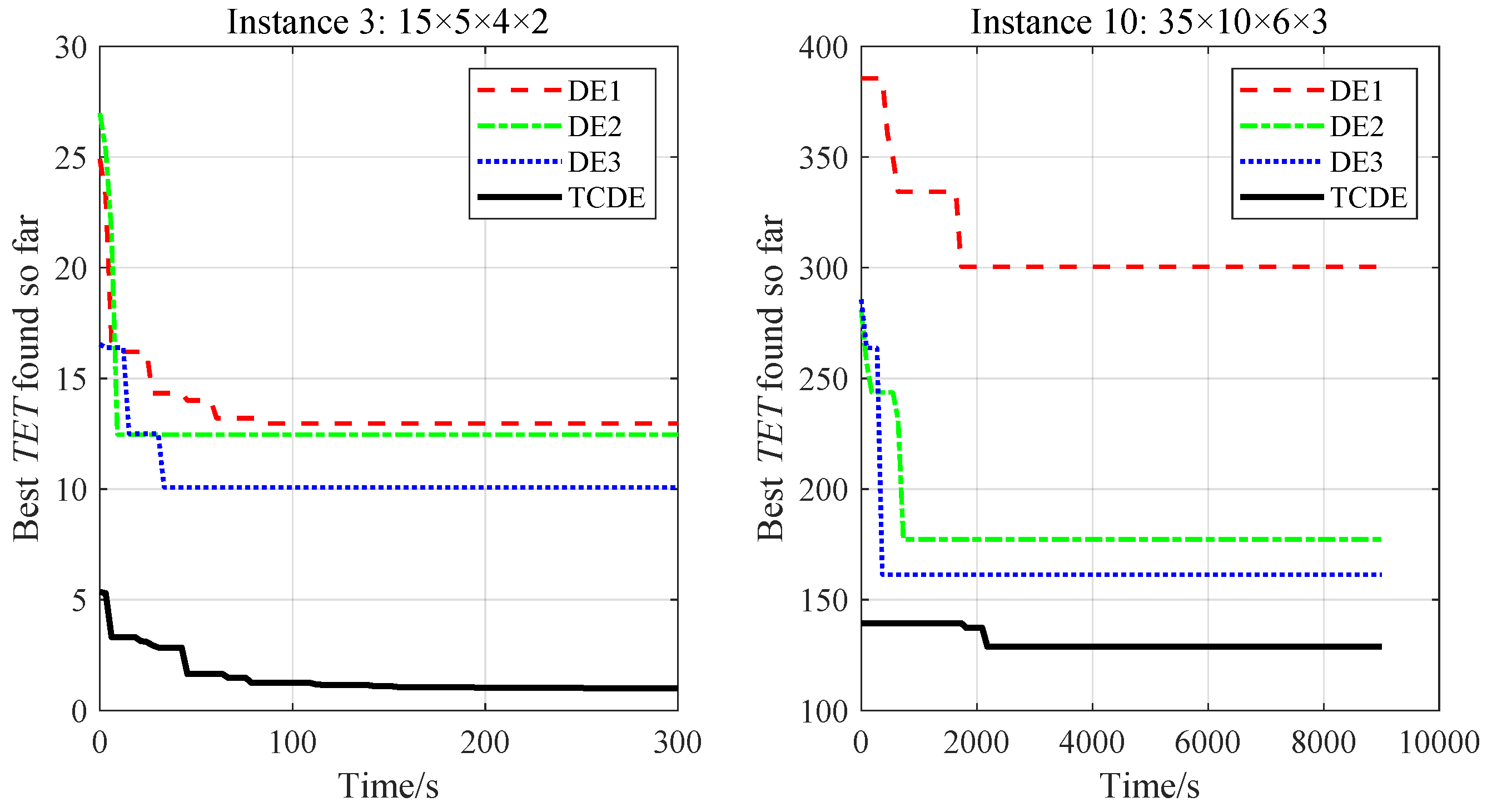

Figure 7 illustrates the convergence behavior of TCDE and its variants on two representative large-scale instances. TCDE’s objective value decreases rapidly in the early stage, reflecting strong global exploration, and stabilizes quickly in the later stage. It converges faster than its variants, further confirming that TCDE effectively balances exploration and exploitation.

4.4. Comparative Experiment on Industrial Datasets

The industrial datasets used in this study are derived from a lace textile factory in Fuzhou, China. The industrial solutions are currently generated manually based on the first-in-first-out (FIFO) rule or the earliest due date (EDD) rule. In the industrial sample tests, the proposed TCDE algorithm is compared against three state-of-the-art algorithms: HDE, FGA, and MMRR, as well as industrial solutions.

4.4.1. Small-Scale Industrial Datasets

Table 8 displays the optimal solutions and computation times for CVX, HDE, FGA, MMRR, TCDE, and the industrial solutions across small-scale industrial datasets.

Figure 8 presents a bar chart comparing each algorithm’s results (HDE, FGA, MMRR, TCDE, and industrial) against the CVX optimal solution for each dataset instance. In Figure 8, ‘CVX Optimal’ denotes the optimal solution obtained via CVX, while ‘CVX N/A’ indicates instances where CVX failed to derive an optimal solution within the time limit.

As outlined in Table 8, TCDE consistently matches the optimal solutions derived by CVX in all instances. In contrast, the industrial solutions exhibit significantly higher TET values, particularly in more complex instances. HDE and FGA also achieve optimal solutions in many cases but show variability in computation time. Although TCDE requires longer computational time than some algorithms, such as FGA, in certain instances, this is justified by its consistent ability to obtain optimal solutions. MMRR, while effective in some cases, generally underperforms TCDE. Figure 8 visually confirms this pattern: TCDE coincides with the CVX optimum, whereas the industrial method yields markedly higher TET values. Therefore, TCDE consistently outperforms the industrial solutions and shows better overall performance than HDE, FGA, and MMRR on small-scale industrial datasets.

In summary, TCDE emerges as the most effective algorithm for small-scale industrial datasets due to its consistent ability to match CVX’s optimal solutions. While it may require longer computational time than some algorithms like FGA, its superior solution quality makes it the preferred choice. The industrial solutions, despite their simplicity, are not competitive in terms of solution quality, particularly as the problem complexity increases.

4.4.2. Large-Scale Industrial Datasets

Table 9 presents the average objective values and average computational times of HDE, FGA, MMRR, and TCDE, along with the industrial method, on the large-scale industrial datasets. “I” denotes the industrial solution.

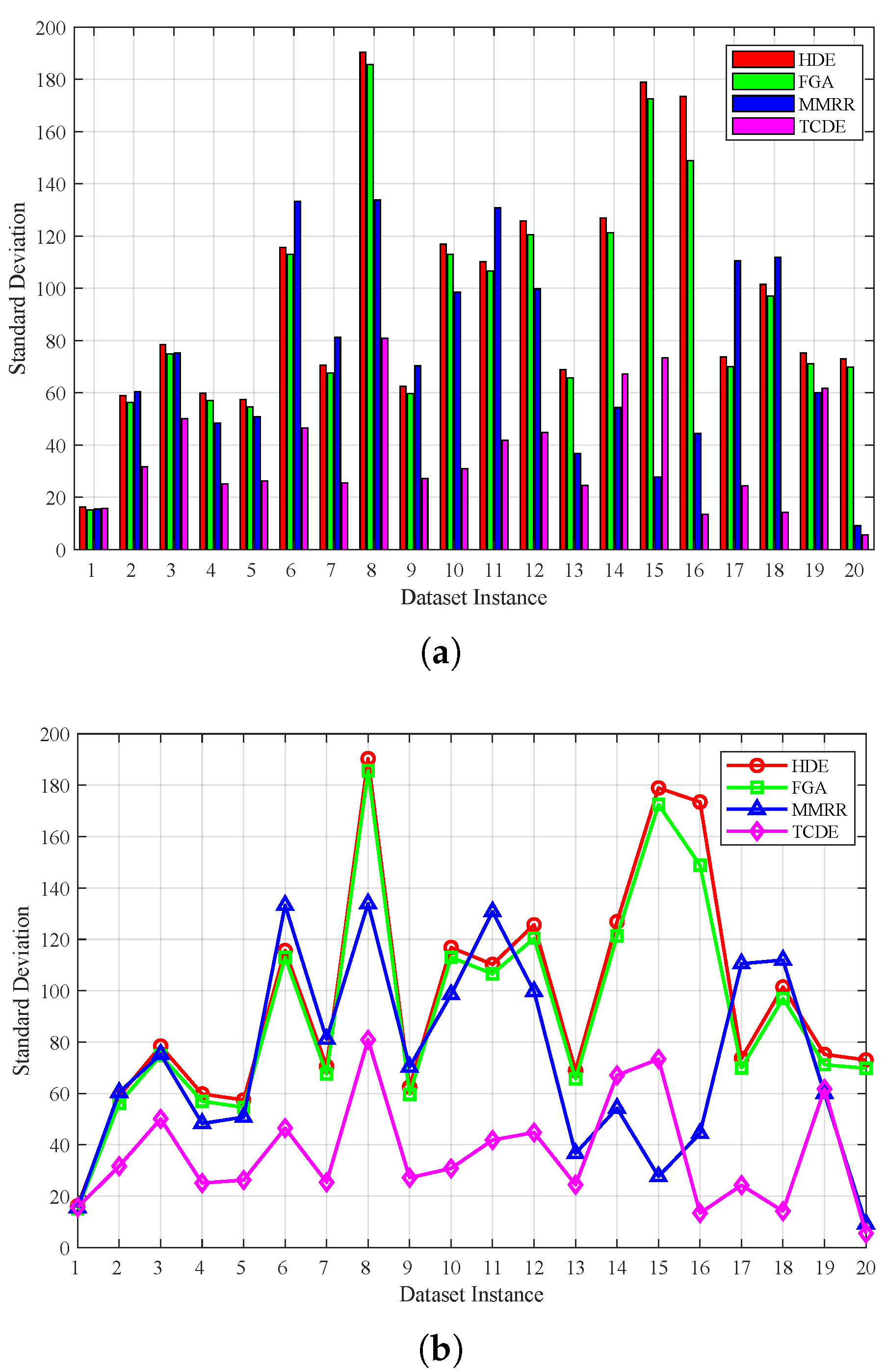

Figure 9 provides a boxplot comparison of TCDE, HDE, FGA, MMRR, and the industrial method. The standard deviations of HDE, FGA, MMRR, and TCDE across the 20 large-scale industrial datasets are shown in Figure 10. Figure 10a displays a bar chart in which each group of bars corresponds to a specific dataset instance, and Figure 10b depicts a line chart in which each line represents the standard deviation of one algorithm across the 20 dataset instances.

As described in Table 9, TCDE consistently outperforms HDE, FGA, MMRR, and the traditional industrial methods in minimizing TET while maintaining acceptable computational efficiency. Compared to the real industrial solutions, HDE, FGA, MMRR, and the proposed TCDE algorithm reduce TET by an average of 59.77%, 22.53%, 23.79%, and 64.81%, respectively, and TCDE achieves the lowest average objective value in all 20 dataset instances. Although TCDE incurs longer computational times than HDE, FGA, and MMRR, its solution quality justifies this trade-off. For example, in Instance 4, TCDE achieves a TET of 5.39 h in 57.67 s, whereas HDE requires 43.33 s to produce a TET of 15.40 h, nearly three times higher. This balance between runtime and performance aligns with industrial requirements, where minimizing tardiness outweighs marginal time savings. As shown in Figure 9, TCDE consistently shows a lower median TET and a narrower interquartile range, further confirming its robust performance across all test instances.

The standard deviation comparison in Figure 10 further supports the reliability of the proposed algorithm. TCDE’s standard deviation is 27% lower than that of HDE, 57% lower than that of FGA, and 49% lower than that of MMRR. Both charts indicate that its performance varies less across dataset instances: the bar chart in Figure 10a shows that TCDE’s standard deviations are lower and fluctuate less from instance to instance, suggesting a more robust optimization process, and the line chart in Figure 10b shows that TCDE maintains a tighter performance range, which reduces the likelihood of obtaining suboptimal solutions in diverse scenarios.

Overall, TCDE performs robustly in reducing TET for large-scale industrial scheduling problems, achieving significant reductions while ensuring stability and reasonable runtimes. Its capacity to address complex scheduling challenges makes it a valuable tool for practical manufacturing systems, offering a reliable means of improving production efficiency and minimizing delays in real-world applications.

4.5. Comprehensive Performance Summary

To systematically compare the performance of TCDE with the benchmark algorithms across all datasets, Table 10 summarizes key metrics, including the average TET, the average computational time, and the relative improvement over the industrial solutions.
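The relative-improvement metric can be sketched as the percentage reduction in TET with respect to the industrial schedule. The exact formula behind the table is not shown in this section, so the sketch assumes this standard definition; the helper names are hypothetical. It also shows why the aggregation order matters: averaging per-instance percentages generally differs from the percentage reduction of averaged TET values.

```python
from typing import Sequence

def relative_improvement(tet_industrial: float, tet_algorithm: float) -> float:
    """Percentage reduction in TET relative to the industrial (FIFO/EDD) schedule."""
    if tet_industrial <= 0:
        raise ValueError("industrial TET must be positive")
    return 100.0 * (tet_industrial - tet_algorithm) / tet_industrial

def mean_of_improvements(industrial: Sequence[float], algorithm: Sequence[float]) -> float:
    """Average of the per-instance percentage improvements."""
    pairs = list(zip(industrial, algorithm))
    return sum(relative_improvement(i, a) for i, a in pairs) / len(pairs)

def improvement_of_means(industrial: Sequence[float], algorithm: Sequence[float]) -> float:
    """Percentage improvement computed from the averaged TET values."""
    return relative_improvement(sum(industrial) / len(industrial),
                                sum(algorithm) / len(algorithm))

if __name__ == "__main__":
    industrial, tcde = [10.0, 20.0], [5.0, 5.0]  # toy TET values in hours
    print(mean_of_improvements(industrial, tcde), improvement_of_means(industrial, tcde))
```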

As shown in Table 10, TCDE outperforms all benchmark algorithms across all dataset types and scales. For synthetic datasets, TCDE achieves the lowest average TET (3.2 h for small-scale and 42.3 h for large-scale) and the highest improvement over industrial solutions (74.6% and 82.3%, respectively), demonstrating its robustness in both simple and complex artificial scenarios.

On industrial datasets, TCDE maintains its superiority: it reduces the average TET to 2.1 h (small-scale) and 189.6 h (large-scale), with improvements over industrial solutions of 78.4% and 64.8%, respectively. Notably, on the large-scale industrial datasets, where real-world complexity is highest, TCDE outperforms HDE by 58.4%, FGA by 64.4%, and MMRR by 61.9%, highlighting its practical applicability.

While TCDE exhibits slightly longer computational times compared to HDE, FGA, and MMRR, this trade-off is justified by its significantly better solution quality, which aligns with industrial priorities of minimizing tardiness over marginal time savings. The low standard deviation values across all metrics further confirm TCDE’s stability across diverse scheduling scenarios.

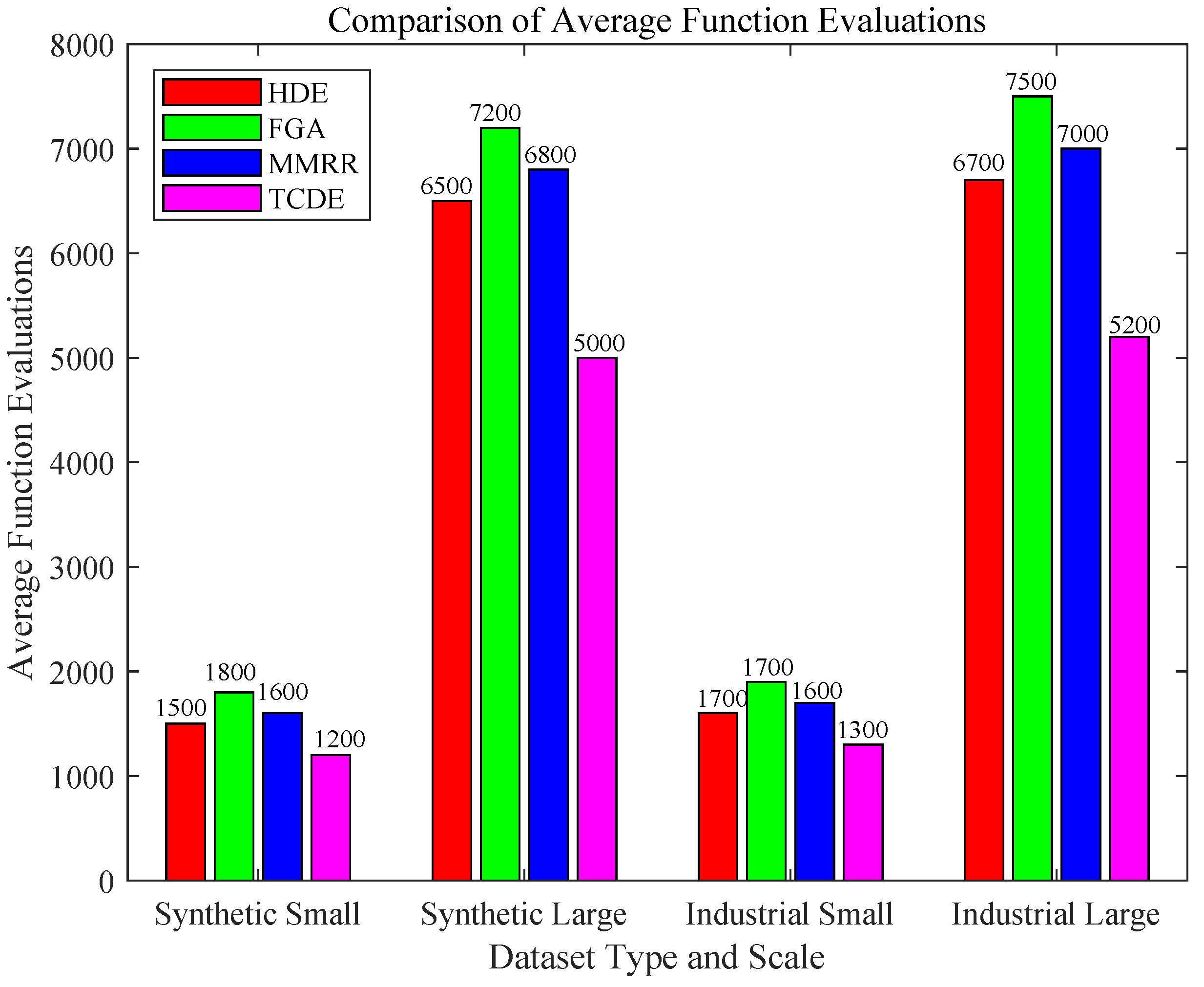

In addition to computational time, algorithmic efficiency was assessed using the number of function evaluations (FEs). This hardware-agnostic metric reflects the optimization effort necessary to achieve convergence.
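Counting function evaluations is straightforward if every algorithm calls the objective through the same wrapper, which keeps the comparison independent of hardware. A minimal sketch, with the hypothetical class name `CountingObjective` and a toy objective standing in for the TET evaluation:

```python
from typing import Callable, Sequence

class CountingObjective:
    """Wrap an objective function and count how many times it is evaluated."""

    def __init__(self, fn: Callable[[Sequence[float]], float]) -> None:
        self.fn = fn
        self.evaluations = 0

    def __call__(self, x: Sequence[float]) -> float:
        self.evaluations += 1
        return self.fn(x)

if __name__ == "__main__":
    objective = CountingObjective(lambda x: sum(v * v for v in x))  # toy objective
    for candidate in ([1.0, 2.0], [0.5, 0.5], [0.0, 1.0]):
        objective(candidate)
    print(objective.evaluations)
```

Repeated evaluations of identical schedules are counted each time by this wrapper; a cached variant would report lower counts, so the same wrapper should be used for all algorithms.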

Figure 11 illustrates the comparison of the average number of function evaluations needed by each algorithm across different dataset instances.

Table 11 presents the average number of function evaluations required by each algorithm across various dataset instances.

As shown in Table 11 and Figure 11, TCDE consistently requires fewer function evaluations than HDE, FGA, and MMRR across all dataset types and scales. TCDE therefore achieves better solution quality with less optimization effort as measured by function evaluations. Because its wall-clock times are nonetheless longer, the extra runtime appears to stem from the per-generation overhead of its adaptive and collaborative mechanisms rather than from additional fitness evaluations. In summary, TCDE not only outperforms the other algorithms in solution quality but also requires fewer function evaluations, making it an efficient and effective choice for the considered scheduling problem.

4.6. Statistical Significance Analysis

To statistically validate the significance of TCDE’s performance superiority, the Friedman test, a non-parametric method suitable for comparing multiple algorithms across diverse datasets, was conducted on the average TET values. The test rejected the null hypothesis of equal performance among all algorithms, indicating statistically significant differences.
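The Friedman test ranks the algorithms within each instance (rank 1 for the lowest TET) and checks whether the mean ranks differ more than chance would allow. The pure-Python sketch below hard-codes four algorithms (for example TCDE, HDE, FGA, and MMRR, giving 3 degrees of freedom), averages tied ranks, applies no tie correction, and uses the chi-square approximation, whose tail has a closed form for 3 degrees of freedom. In practice, `scipy.stats.friedmanchisquare` computes the statistic and additionally applies a tie correction; the function names here are illustrative.

```python
import math
from typing import List, Sequence, Tuple

def average_ranks(row: Sequence[float]) -> List[float]:
    """Rank one instance's results in ascending order (rank 1 = lowest TET), averaging ties."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2.0 + 1.0  # mean of 1-based positions i..j
        i = j + 1
    return ranks

def friedman_four_algorithms(results: List[Sequence[float]]) -> Tuple[float, float, List[float]]:
    """Friedman statistic (no tie correction) for k = 4 algorithms (columns) over
    N instances (rows), with a chi-square (3 df) approximation for the p-value."""
    n, k = len(results), len(results[0])
    if k != 4:
        raise ValueError("this sketch hard-codes k = 4, i.e. 3 degrees of freedom")
    rank_rows = [average_ranks(row) for row in results]
    mean_ranks = [sum(r[j] for r in rank_rows) / n for j in range(k)]
    stat = 12.0 * n / (k * (k + 1)) * (sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4.0)
    stat = max(stat, 0.0)  # guard against tiny negative rounding error
    # Chi-square survival function for 3 df: erfc(sqrt(x/2)) + sqrt(2x/pi) * exp(-x/2).
    p_value = math.erfc(math.sqrt(stat / 2.0)) + math.sqrt(2.0 * stat / math.pi) * math.exp(-stat / 2.0)
    return stat, p_value, mean_ranks

if __name__ == "__main__":
    # Toy average TET values; columns: TCDE, HDE, FGA, MMRR.
    toy = [[5.4, 15.4, 12.1, 11.8], [3.0, 4.2, 6.5, 6.1], [8.8, 10.0, 14.3, 13.9]]
    print(friedman_four_algorithms(toy))
```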

Table 12 summarizes the adjusted p-values and conclusions for all pairwise comparisons.

Post-hoc pairwise comparisons were performed using the Holm–Bonferroni correction to identify specific differences between TCDE and each comparative algorithm. As shown in Table 12, TCDE exhibits a significantly lower average TET than HDE, FGA, and MMRR at the 95% confidence level (adjusted p-values < 0.05). In contrast, no significant differences were found among the comparative algorithms themselves. These results indicate that the performance advantages of TCDE are statistically significant and unlikely to be attributable to random variation.
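The Holm–Bonferroni step-down adjustment can be sketched as follows: sort the raw p-values, multiply the i-th smallest by (m − i + 1), and enforce monotonicity so that adjusted values never decrease. The raw p-values in the usage lines are hypothetical; the sketch covers only the correction, not the pairwise test that produces the raw p-values.

```python
from typing import List, Sequence

def holm_adjust(p_values: Sequence[float]) -> List[float]:
    """Holm-Bonferroni step-down adjusted p-values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        value = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, value)  # adjusted p-values must be non-decreasing
        adjusted[idx] = running_max
    return adjusted

if __name__ == "__main__":
    raw = {"TCDE vs HDE": 0.004, "TCDE vs FGA": 0.001, "TCDE vs MMRR": 0.012}  # hypothetical
    for name, adj in zip(raw, holm_adjust(list(raw.values()))):
        print(name, adj, "significant" if adj < 0.05 else "not significant")
```

Holm’s procedure controls the family-wise error rate like the plain Bonferroni correction but is uniformly less conservative, which is why it is a common post-hoc choice after a Friedman test.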

4.7. Practical Applicability

The proposed TCDE algorithm is designed with practical manufacturing environments in mind. The scheduling problem addressed in this study originates from a real-world lace dyeing factory in Fuzhou, China, where sequence-dependent setup times, machine eligibility, and probabilistic re-dyeing operations are daily challenges.

In addition to minimizing TET, machine utilization is another critical performance indicator in practice. It measures how efficiently the available machine capacity is used. The machine utilization is defined as follows:

where a binary variable indicates whether operation h of job j is assigned to machine k, and a second quantity denotes the completion time of the last job on machine k.

A higher machine utilization indicates a more compact schedule with less idle time, which is desirable in manufacturing environments to reduce operational costs and improve throughput.
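A utilization measure of this kind can be sketched from a finished schedule. The sketch assumes, for illustration only, that utilization is the processing time assigned to each machine divided by the completion time of its last job (with time starting at 0), averaged over the machines in use; setups and idle gaps count as non-productive time. The function name and schedule format are hypothetical.

```python
from typing import Dict, List, Tuple

def machine_utilization(schedule: Dict[str, List[Tuple[float, float]]]) -> float:
    """Average over machines of busy processing time / completion time of the last job.
    `schedule` maps a machine id to its (start, end) processing intervals."""
    ratios = []
    for intervals in schedule.values():
        if not intervals:
            continue  # unused machines are skipped in this sketch
        busy = sum(end - start for start, end in intervals)
        last_completion = max(end for _, end in intervals)
        ratios.append(busy / last_completion)
    return sum(ratios) / len(ratios) if ratios else 0.0

if __name__ == "__main__":
    # Toy schedule: a one-hour setup gap on M1 between its two dyeing operations.
    toy = {"M1": [(0.0, 4.0), (5.0, 10.0)], "M2": [(0.0, 5.0)]}
    print(machine_utilization(toy))
```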

Table 13 summarizes the key performance indicators across different scheduling methods, including average tardiness, machine utilization, and average runtime. These results demonstrate the practical value of TCDE in improving the production efficiency and reducing operational costs.

5. Conclusions, Limitations, and Future Work

5.1. Conclusions

The symmetry-driven TCDE algorithm has demonstrated strong effectiveness in minimizing TET. This can be attributed to the symmetrical collaboration between the two subpopulations: the chaotic parameter adaptation in the first subpopulation equips the algorithm with strong global exploration capabilities, while the adaptive control in the second ensures refined local exploitation. The periodic exchange of individuals between the subpopulations further strengthens this symmetry, enabling the algorithm to maintain a good balance between exploration and exploitation throughout the optimization process. Extensive experiments on both industrial and synthetic datasets show that TCDE outperforms current state-of-the-art methods, indicating significant potential for real-world applications in sustainable textile manufacturing. In particular, its ability to handle the complexity and variability introduced by probabilistic re-dyeing operations in the lace dyeing process makes it a valuable asset for promoting sustainable and intelligent manufacturing practices.

5.2. Research Limitations

Despite its strengths, the TCDE framework has several key limitations. First, it focuses on static scheduling and lacks efficient mechanisms to handle real-time disruptions such as unexpected job arrivals or machine failures. Second, its execution times for large-scale instances (typically minutes to hours) may not meet the subsecond response requirements of real-time cloud-based production systems, especially for rapid rescheduling. Third, it assumes that input data (e.g., re-dyeing probabilities and processing times) are accurate; adversarial noise (e.g., maliciously altered due dates or erroneous re-dyeing probabilities) or data corruption (e.g., sensor errors in job weight measurements) could degrade performance, because the algorithm lacks explicit mechanisms to detect or mitigate such perturbations. Finally, the fixed rework limit H, while simplifying the model, may limit adaptability in highly dynamic or uncertain environments.

5.3. Recommendation for Future Research

Future research will prioritize bridging these gaps through several interconnected pathways. Most notably, deploying TCDE within real-time cloud/edge architectures offers compelling potential for dynamic scheduling. A proposed edge-cloud collaborative framework would position lightweight TCDE variants on edge devices for low-latency response to local disruptions (e.g., machine breakdowns, urgent job insertions), while leveraging cloud resources for heavy computations like predictive scenario analysis and parameter tuning. To handle dynamic job arrivals, we will develop an event-triggered TCDE variant that performs incremental rescheduling instead of full recomputations. This variant will incorporate a dynamic prioritization module that adjusts job weights based on real-time factors such as due date urgency, customer priority, and production bottlenecks. For instance, urgent jobs with tight deadlines could be assigned higher priority weights, guiding the symmetry-driven exploration-exploitation balance in TCDE to prioritize their timely completion.

Moreover, although TCDE is demonstrated in the context of lace dyeing, its core mechanisms, such as symmetry-driven dual-population collaboration, chaotic parameter adaptation, and elite-based migration, are designed to address general scheduling challenges. These include probabilistic operations, sequence-dependent setups, and machine eligibility constraints, which are common in various manufacturing systems (e.g., automotive parts, panel furniture, and electronics). TCDE can therefore be adapted to other scheduling domains with appropriate modeling.

Further extensions include applying TCDE’s symmetry-driven framework to green manufacturing contexts with energy or carbon constraints and to multi-factory supply chain scheduling. Hybridization with complementary metaheuristics, such as artificial bee colony algorithms, could expand its cross-domain applicability. Throughout these developments, robust security protocols for cloud–edge communication and fail-safe mechanisms will be essential for industrial adoption. Collectively, these advancements could position TCDE as a cornerstone of responsive, data-driven manufacturing within Industry 4.0 ecosystems, turning theoretical optimization capabilities into tangible productivity gains in complex production environments such as lace dyeing.

To address vulnerabilities to adversarial noise and corrupted inputs, adaptive mechanisms will also be integrated into TCDE. The diversity metrics of the second subpopulation will be extended to detect outliers in input data (e.g., implausible re-dyeing probabilities) and to trigger adjusted parameter settings that increase exploration and reduce reliance on noisy parameters. During decoding, job attributes such as color family and weight will be cross-checked against historical distributions, with ambiguous values replaced by the consensus of the elite set to preserve feasibility. TCDE will also be trained on synthetic noisy datasets (e.g., perturbed due dates or machine capacities) to enable dynamic adjustment of migration intervals under high noise, accelerating knowledge exchange between subpopulations and maintaining performance in minimizing total estimated tardiness.

Finally, TCDE’s framework shows promise for energy-aware scheduling. By integrating real-time power consumption models, it could optimize both tardiness and energy efficiency. Adaptive parameter control can balance throughput against energy constraints, while edge deployment reduces computational and cloud data transfer costs. Future work will also extend the TCDE framework to multi-objective optimization by incorporating factors such as energy consumption and machine utilization into the fitness function, with a Pareto-based selection mechanism to balance trade-offs among conflicting objectives and enable more comprehensive and sustainable scheduling solutions in real-world industrial settings. To relax the fixed rework limit H, dynamic rework modeling will be introduced, in which real-time quality feedback and Bayesian updating adjust the expected number of reworks per job based on observed defect rates and job history.
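One way such Bayesian updating could work is a Beta–Bernoulli model of the inspection-failure probability. The sketch below is one possible instantiation, not a design taken from this work: it assumes a Beta prior per job family, independent inspections, a plug-in posterior mean for the expected number of re-dyes (an approximation, since E[q^h] differs from E[q]^h), and a data-driven rework cap chosen so that needing one more re-dye is rarer than a tolerance `tail`. All names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ReworkBelief:
    """Beta(alpha, beta) belief about a job family's probability of failing inspection."""
    alpha: float = 1.0
    beta: float = 1.0

    def update(self, failures: int, inspections: int) -> None:
        """Conjugate update after observing `failures` out of `inspections`."""
        if not 0 <= failures <= inspections:
            raise ValueError("failures must lie between 0 and inspections")
        self.alpha += failures
        self.beta += inspections - failures

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    def expected_reworks(self, cap: int) -> float:
        """Plug-in expected number of re-dyes when re-dye h occurs with probability q**h."""
        q = self.mean
        return sum(q ** h for h in range(1, cap + 1))

    def rework_cap(self, tail: float = 0.01, max_cap: int = 10) -> int:
        """Smallest cap H such that needing an (H+1)-th re-dye has probability below `tail`."""
        q = self.mean
        for h in range(max_cap + 1):
            if q ** (h + 1) < tail:
                return h
        return max_cap

if __name__ == "__main__":
    belief = ReworkBelief()
    belief.update(failures=2, inspections=10)  # toy inspection history
    print(belief.mean, belief.expected_reworks(3), belief.rework_cap())
```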