1. Introduction

In medical studies, sampling a dichotomous outcome emulates a Bernoulli trial, and the ratio between the number of desired (positive) outcomes (x) and the number of trials (or sample size, m) gives a proportion (x/m). This is sampling with replacement. The sampling strategy is foundational when dealing with finite populations. In sampling with replacement, an individual (or a case) extracted from a population is returned to it, and so has a non-zero probability of being extracted again in the future, while sampling without replacement does not return it to the population.

The statistical experiment is as follows: taking a bag with green and yellow balls, we draw m balls (the sample) from the bag, returning each ball after it is drawn, and count how many (x) are green (Figure 1).

Figure 1 illustrates a full bag of balls and a half-full bag, after extraction of some of its balls. In sampling with replacement, the bag is always full, since each ball extracted is immediately returned to the bag. This is the binomial experiment. On the other hand, sampling without replacement takes the balls from the bag without returning them. Even if the outcome of the two side-by-side experiments may look the same (at the end of the extraction, in both instances x green and m − x yellow balls may be recorded), the experiments are in fact different, and their associated statistics are different too. For instance, sampling without replacement, if continued indefinitely, will exhaust the balls from the bag, while sampling with replacement will not, even if in the beginning there are, for example, only two balls in the bag.

There usually is no counting function providing the total number of the balls in the bag (finite or not). To the question “How many green balls (x) out of m will be extracted if the experiment is repeated?”, the result can be expressed using a confidence interval (CI) assuming a success rate (the rate of success is usually 95%).

Fundamental in this context is the probability associated with the reproduction of the observed outcome (Equation (1)), which relates two sampling-with-replacement experiments: the first one, already observed, and the second one, in which the outcome is to be reproduced.

Imposing the size of the second sample at the value of the first one, Equation (1) changes to Equation (2).

In Equations (1) and (2), the true proportion rate (from the population) has been replaced by the observed value of it (from the sample). All information from the sampling has been used and no more information is available, so Equations (1) and (2) define sufficient statistics.

In layman’s terms, one will never know what is to come from a draw or a sampling in the case of sampling with replacement. A resampling of size m will have a variable number of successes y. However, Equation (2) defines a probability mass function: it is easy to check that Equation (2) gives the term corresponding to y in the binomial expansion (Equation (3)).
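The check mentioned above can be sketched numerically. The snippet assumes that Equation (2) is the binomial probability of y successes in m trials with the observed proportion x/m plugged in as the success probability; the function name `resample_pmf` is hypothetical and introduced only for illustration.

```python
from math import comb

def resample_pmf(y, x, m):
    """Probability of y successes in a resample of size m.

    Assumption: the unknown population proportion is replaced
    by the observed proportion x/m (plug-in estimate).
    """
    p = x / m
    return comb(m, y) * p**y * (1 - p) ** (m - y)

# Example: x = 3 successes observed in m = 9 trials.
x, m = 3, 9
probabilities = [resample_pmf(y, x, m) for y in range(m + 1)]

# The terms are those of the expansion of (p + (1 - p))**m, so they sum to 1.
total = sum(probabilities)
print(total)
```

Since the terms add up to 1 for any admissible x and m, any set of y values has a well-defined probability, which is what a coverage calculation needs.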

With Equation (2), a CI supporting the drawing of y successes from m trials could be constructed around the y value.

The first approach to the binomial CI can be attributed to Clopper and Pearson (Equation (4)), where the problem was seen as providing an open interval for an observed proportion x/m of units x from a sample of m randomly drawn from a very large population [1]. However, the major limitation of their method is that it gives equal weights to the tails, which symmetrizes the confidence interval, making it more intuitive but biased in the binomial case. The second disadvantage is that it is expressed from a simple summation of the probabilities from the tails. Lastly, the third disadvantage is that it is expressed via an analytical formula from a continuous distribution (Equation (4)).

Here, Equation (4) relies on Fisher’s distribution (the same can be expressed using the Beta distribution), with two constants.

Other alternatives were explored as well. Jeffreys [2] takes different values for the two constants. One can imagine many such corrections. However, the main problem is still unsolved: all of those are patches, and none of them is optimal.

A major step forward was made by Casella [3], who proposed refining the CI by using algorithms instead of mathematical formulas. The solutions proposed here differ from the one proposed by Casella. Here, it is admitted that there is no ideal solution, only optimal ones, for which the optimality condition needs to be specified.

2. Background

In the case of continuous distribution functions, the cumulative distribution function has an inverse, and a calculable CI is extracted from it. Fisher called this case fiducial [4].

Calculating the CI (with a conventional risk of 5% or otherwise) for a discrete probability distribution is a problem of combinatorics, a fact recognized since the beginning [1], but the complexity of the problem prevented an exact method for its calculation from becoming available until much later [5].

Discrete distribution functions play an important role in statistics. Take, for instance, the uniform discrete distribution, which is at the foundation of any random number generator [6]. The Poisson distribution often serves to provide a size for a sample to be drawn [7,8], while the binomial and negative binomial distributions are the discrete counterparts of the Gaussian and Gamma distributions [9,10].

To estimate the CI for a binomial distribution, a series of approximations has been proposed, exploiting the normal asymptotic behavior of the binomial distribution [11,12,13,14,15,16]. The construction of exact intervals has been proposed as well [5,17,18,19,20].

3. From Wald’s to Binomial CI

In many ways, Wald’s CI [11], derived under the assumption of an infinite population, is a scholastic example of a CI.

Let us take an example in which z is the quantile satisfying Φ(z) = 1 − α/2, where Φ is the cumulative function of the normal distribution and α is the imposed level. For α = 0.01, z is about 2.57583. A Wald CI [11] will be calculated by Equation (5).

However, this interval makes no sense with boundaries smaller than 0 or larger than m. Furthermore, any non-integer value of the boundary makes no difference, since x takes only integer values.
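Both objections can be seen in a short numerical sketch. It assumes that Equation (5), not reproduced here, is the usual Wald interval written on the count scale, x ± z·sqrt(x(m − x)/m); the function name `wald_count_interval` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def wald_count_interval(x, m, alpha):
    """Wald interval for the number of successes (assumed form of Equation (5))."""
    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 2.57583 for alpha = 0.01
    half_width = z * sqrt(x * (m - x) / m)
    return x - half_width, x + half_width

lower, upper = wald_count_interval(1, 9, 0.01)
print(lower, upper)
```

For x = 1 and m = 9 the lower boundary falls below 0 and the upper one is not an integer, although the variable can only take the values 0, 1, …, 9.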

A CI for the binomial distribution should be seen as the outcome of an algorithm rather than of a simple mathematical formula.

The outcome of such an algorithm is a series of numbers, indexed from 0 to m. By symmetry, a CI should be constructed with these numbers for x out of m as a closed interval, as in Equation (6).

The numbers of the series depend on the imposed level, but this dependence is not written explicitly, for the sake of simplification. The series should be constructed in such a manner that the CI always contains x.

In Equation (5), the symmetry is realized around x (the two boundaries sum to 2x), while in Equation (7), the antisymmetry is realized around m. This is a major transformation. However, in some instances, some simple functions can be used for the change.

One can try to apply the precepts of Equation (6) to the values provided by Equation (5). If the interval from Equation (5) is expressed by its lower and upper boundaries (as a closed interval), then its symmetrization imposes the transformations given in Equation (7).

In Equation (7), the antisymmetry is realized with the ceil and floor functions along with max and min. However, the solutions provided by Equation (7) are not optimal.
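A minimal sketch of this transformation follows, under two assumptions: that Equation (5) is the Wald interval on the count scale, and that Equation (7) rounds its boundaries inward with ceil and floor and clamps them to [0, m] with max and min. The function names are hypothetical.

```python
from math import ceil, comb, floor, sqrt
from statistics import NormalDist

def wald_integer_interval(x, m, alpha):
    """Wald interval rounded inward to integers and clamped to [0, m]."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = z * sqrt(x * (m - x) / m)
    lower = max(0, ceil(x - half_width))
    upper = min(m, floor(x + half_width))
    return lower, upper

def actual_coverage(lower, upper, x, m):
    """Sum of the binomial probabilities (with p = x/m) of the values in [lower, upper]."""
    p = x / m
    return sum(comb(m, y) * p**y * (1 - p) ** (m - y) for y in range(lower, upper + 1))

low, high = wald_integer_interval(1, 9, 0.01)
print((low, high), actual_coverage(low, high, 1, 9))
```

For x = 1 and m = 9 at the 1% level this gives [0, 3] with an actual coverage of about 98.79%, below the imposed 99%, which illustrates why the transformed intervals are not optimal.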

Table 1 contains CIs calculated with Equation (5), their transformation into ordered number series as in Equation (6), and their coverage probabilities.

The p-value listed in Table 1 is the actual non-coverage probability. One can expect the value of p to be identical with the imposed non-coverage probability. However, it is possible to achieve this coincidence only for continuous distributions. In the case of discrete distributions, the actual coverage probability will always oscillate around the imposed value, and this is the central piece of the problem: how to construct the confidence interval to have the best agreement between the imposed and actual coverages. The solution depends on how the best agreement is defined.

Table 1 lists CIs for the binomial variables (x in Table 1) with nonnegative integers as boundaries.

One should notice the symmetry in the intervals provided in Table 1. The solution provided in Table 1 is not optimal. Thus,

If it is optimal to provide an at least coverage, then should be [0, 4] instead of [0, 3], with a coverage of 99.86% instead of 98.79% and should be [5, 9] instead of [6, 9], with a coverage of 99.86% instead of 98.79%; should be [0, 7] instead of [1, 7], and should be [0, 7] instead of [1, 7], each with a coverage of 95.27% and 96.12% instead of 92.44%;

If it is optimal to provide an at most coverage, then should be [0, 4] instead of [0, 5], and should be [3, 9] instead of [4, 9], each with a coverage of 96.96% instead of 99.46%; should be [0, 5] or [1, 6] instead of [0, 6], and should be [4, 9] or [3, 8] instead of [3, 9], with a coverage of 95.76% or 96.57% instead of 99.17%;

As it can be observed, imposing a single rule (such as “at least” or “at most”) is not enough—see alternatives for and , where further action is to decide among two alternatives; in this instance, the closest one can be picked;

If it is optimal to provide the closest coverage to the imposed, then would be [0, 7] and would be [2, 9], each with a 99.92% coverage instead of 98.67%;

To be closest to the imposed coverage but at least equal to or at most equal to seems reasonable criteria as well.

The example given in Table 1 as well as the discussion above clearly indicate that there is no unique recipe for an ideal CI for the binomial distribution. There are many optimal solutions, depending on what is considered to be optimal.
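The dependence on the optimality criterion can be illustrated with a brute-force search. This is only a sketch and not one of the algorithms discussed in this paper: it enumerates every integer interval containing x and keeps the one whose coverage is closest to the imposed one, optionally restricted to coverages that are at least the imposed one. The function name `interval_search` and its tie-breaking rule (shorter interval first) are assumptions.

```python
from math import comb

def interval_search(x, m, alpha, at_least=False):
    """Best integer interval [lower, upper] containing x, by exhaustive search.

    at_least=False: coverage closest to 1 - alpha.
    at_least=True:  coverage closest to 1 - alpha among those >= 1 - alpha.
    """
    target = 1 - alpha
    p = x / m
    probs = [comb(m, y) * p**y * (1 - p) ** (m - y) for y in range(m + 1)]
    best = None
    for lower in range(0, x + 1):
        for upper in range(x, m + 1):
            coverage = sum(probs[lower:upper + 1])
            if at_least and coverage < target:
                continue  # violates the "at least" constraint
            key = (abs(coverage - target), upper - lower)
            if best is None or key < best[0]:
                best = (key, (lower, upper), coverage)
    return best[1], best[2]

closest = interval_search(1, 9, 0.01)
conservative = interval_search(1, 9, 0.01, at_least=True)
print(closest, conservative)
```

For x = 1 and m = 9 at the 1% level, the closest interval is [0, 3] (coverage about 98.79%), while the "at least" criterion gives [0, 4] (coverage about 99.86%), so the two criteria already disagree on this small case.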

A CI provided by a mathematical formula correcting another mathematical formula, such as Agresti–Coull [14] and Wilson [12], will only chop the interval, without regard to the associated probabilities. Furthermore, this applicability is limited to a given imposed non-coverage error.

4. Algorithms for an Exact Calculation of the Binomial CIs

One should change the way in which one constructs a CI for a discrete distribution. In the case of the binomial distribution, a mathematical formula is not able to encompass the complexity of the issue. Three algorithms have been proposed in [21]. In this instance, for two of the algorithms (out of three), simplified versions are considered (a best balanced and a best conservative), and their solutions are discussed.

In the following descriptions, α is the imposed level, x is the number of successes, m is the number of trials, and q is the actual coverage of the resulting CI.

The differences between the algorithms are as follows. Algorithm 1 systematically keeps the error below the imposed level, while Algorithm 2 systematically keeps the error closest to the imposed level. There is a symmetry in the CIs provided by both algorithms. Their general form is built from a series of integers indexed from 0 to m, where m is the sample size. Some of these series are listed in Section 8 (Raw Data) for the following case studies. The series are monotonic for Algorithm 2, while they are not for Algorithm 1. As an effect of the simplified design of the CIs, which are mirrored relative to the middle, the series of actual coverage probabilities are also symmetric.

Algorithms 1 and 2 have great generality. Both sum the probabilities of the events left out and the probabilities of the events included. Algorithm 2 provides the interval as the closest solution to the imposed level. Algorithm 1 provides the interval as the closest solution to the imposed level under the specified constraint: the sum of the probabilities for the events left out is at most equal to the imposed level. One can feed Algorithms 1 and 2 with arbitrary values for x, m, and the imposed level (under the usual constraints on their domains), and even with another analytical function for the probabilities, without changing the nature of the outcome of the algorithms (the closest solution to the imposed level without (Algorithm 2) or with (Algorithm 1) a constraint regarding the sum of probabilities of the events left out).

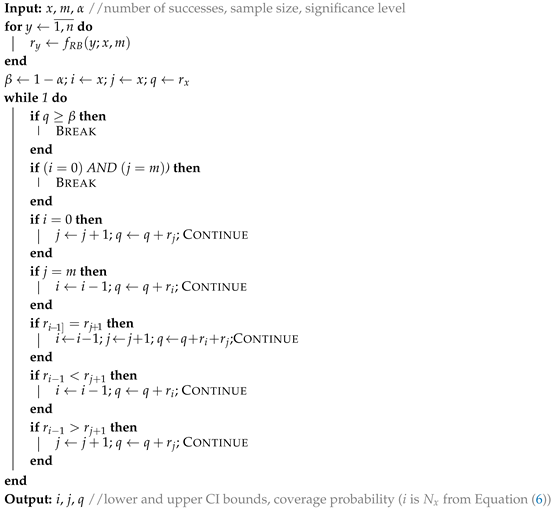

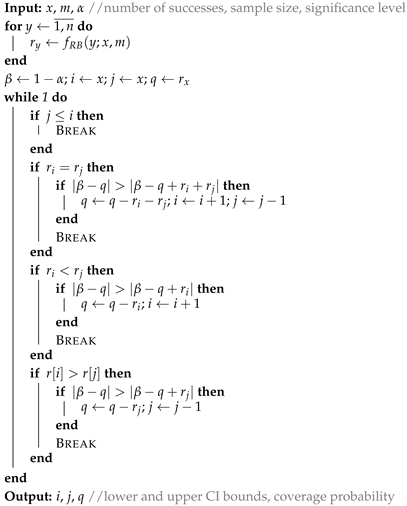

Algorithm 1: Conservative.

5. Case Studies

The CIs, designated CI2 (from Algorithm 2) and CI3 (from Algorithm 1), are calculable for any admissible selection of the imposed level, m, and x. However, differences between their outputs are significant for small sample sizes m. Two small, one medium, and one big sample sizes were chosen as case studies. For those samples, at a significance level of 1% (the risk of being in error), the CIs were generated.

One may ask what the error rate is if the CI is obtained using other approaches. The answer to this question is briefly provided for the following cases, taking into consideration that, when one event fails to be included in the coverage interval, the error is considered to be of type I (false positive, erroneous rejection). When one event is erroneously included in the coverage interval, the error is considered to be of type II (false negative, erroneous failure).

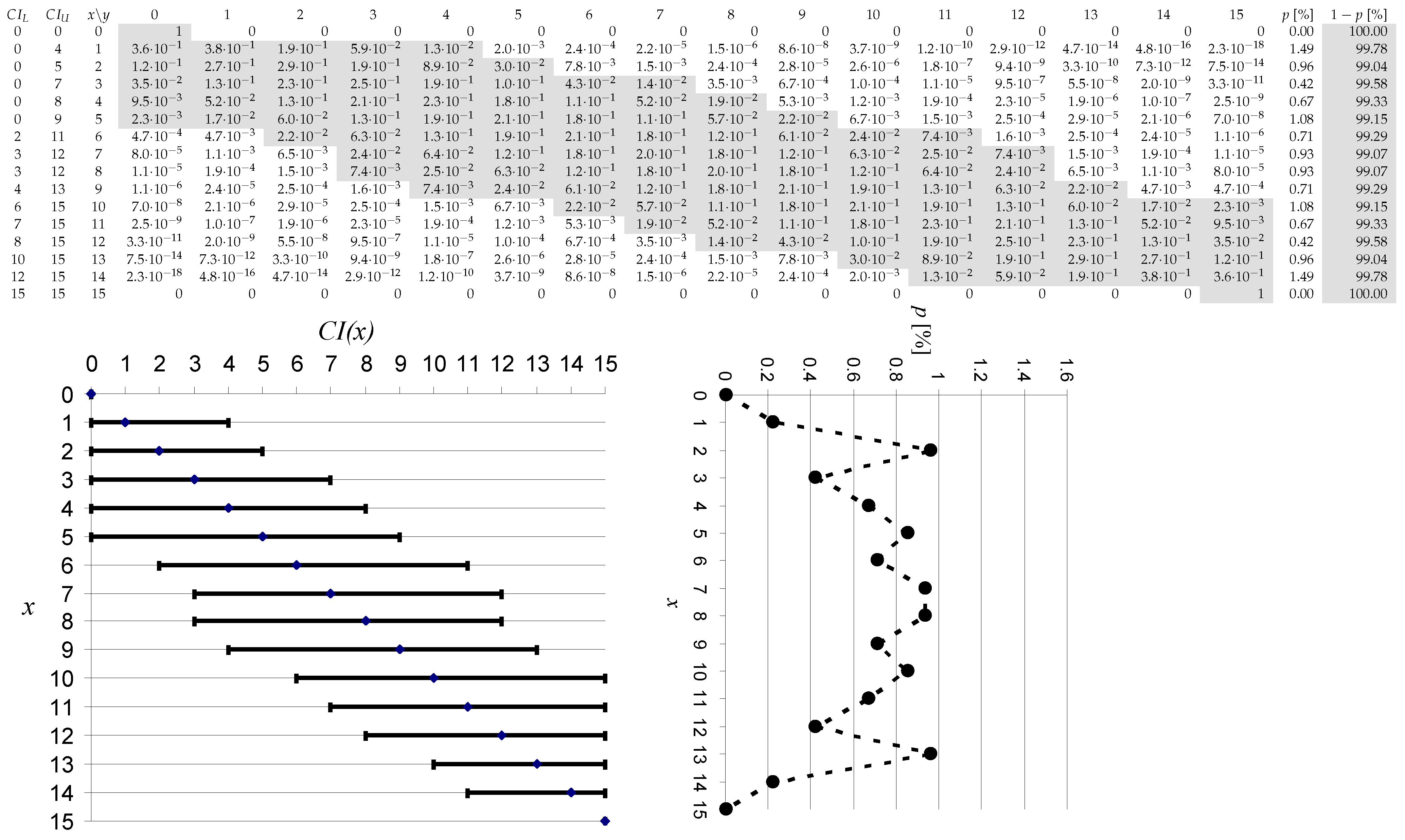

5.1. Case Study 1: m = 15

The series values constructing the CIs are given in the Appendix at the m = 15 entry.

Figure 2 and Figure 3 below are given to illustrate the way in which the CI is constructed. At any number of successes x, the CI contains x, but unlike Wald’s CI, the borders are not equidistant from x.

It can be observed in Figure 2 and Figure 3 that the CI is shortest at the ends of the domain (at x = 0 and x = m), and increases monotonically in width toward the middle of the domain, where it reaches its maximum width. The same behavior holds for any value of m and of the imposed level.

The shaded parts in Figure 2 and Figure 3 represent the series of y values included in the confidence interval, while q (shaded too) is its actual coverage probability (which is the sum of the individual probabilities shaded in its row). The increase in q is caused by enlarging the CI (by adding one unit wherever possible at its ends), while its decrease is caused by shrinking the CI (by subtracting one unit wherever possible at its ends).

There are differences between the solutions proposed by Algorithms 1 and 2. The coverage of the CI proposed by Algorithm 1 is always at least equal to the imposed level. Thus, if CI(1,15,0.01) with Algorithm 2 is [0, 3], with Algorithm 1, it is [0, 4]. The same can be said for CI(14,15,0.01). The CI is likewise enlarged from Algorithm 2 to Algorithm 1 in two further, mirrored cases (from [1, 9] to [0, 9] and from [6, 14] to [6, 15]). The CI proposed by Algorithm 1 is always equal to or contains the CI proposed by Algorithm 2. There are 11 (out of 16, 68.75%) common values.

If one uses Equation (5) instead of Algorithm 2, 5 events will not be included in the CI, while another 5 will be omitted to be included, giving a total error rate of 8.77% (114 events are to be included in total). If one uses Equation (5) instead of Algorithm 1, 7 events will not be included in the CI, while another 7 will be omitted to be included, giving a total error rate of 11.86% (118 events are to be included in total). Furthermore, in this instance, in 10 cases, the CI provided has a coverage smaller than the one imposed by the significance level, providing an error rate of 62.5% (there are 16 cases in total).
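The quoted percentages are consistent with dividing the sum of the two event counts by the total number of events to be included, and the number of under-covering cases by the number of cases. The short sketch below reproduces that arithmetic; the function name `total_error_rate` is hypothetical, and the formula is inferred from the figures above rather than stated in the text.

```python
def total_error_rate(first_count, second_count, events_total):
    """Share of mismatched events among all events to be included (inferred formula)."""
    return (first_count + second_count) / events_total

# Figures quoted for m = 15 at the 1% level.
versus_algorithm_2 = total_error_rate(5, 5, 114)
versus_algorithm_1 = total_error_rate(7, 7, 118)
under_covering_cases = 10 / 16

print(f"{versus_algorithm_2:.2%}  {versus_algorithm_1:.2%}  {under_covering_cases:.1%}")
```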

5.2. Case Study 2: m = 30

The

values constructing CIs (as

) are given in the Appendix at

entry (

, …,

). The plot of actual non-coverage probabilities is given in

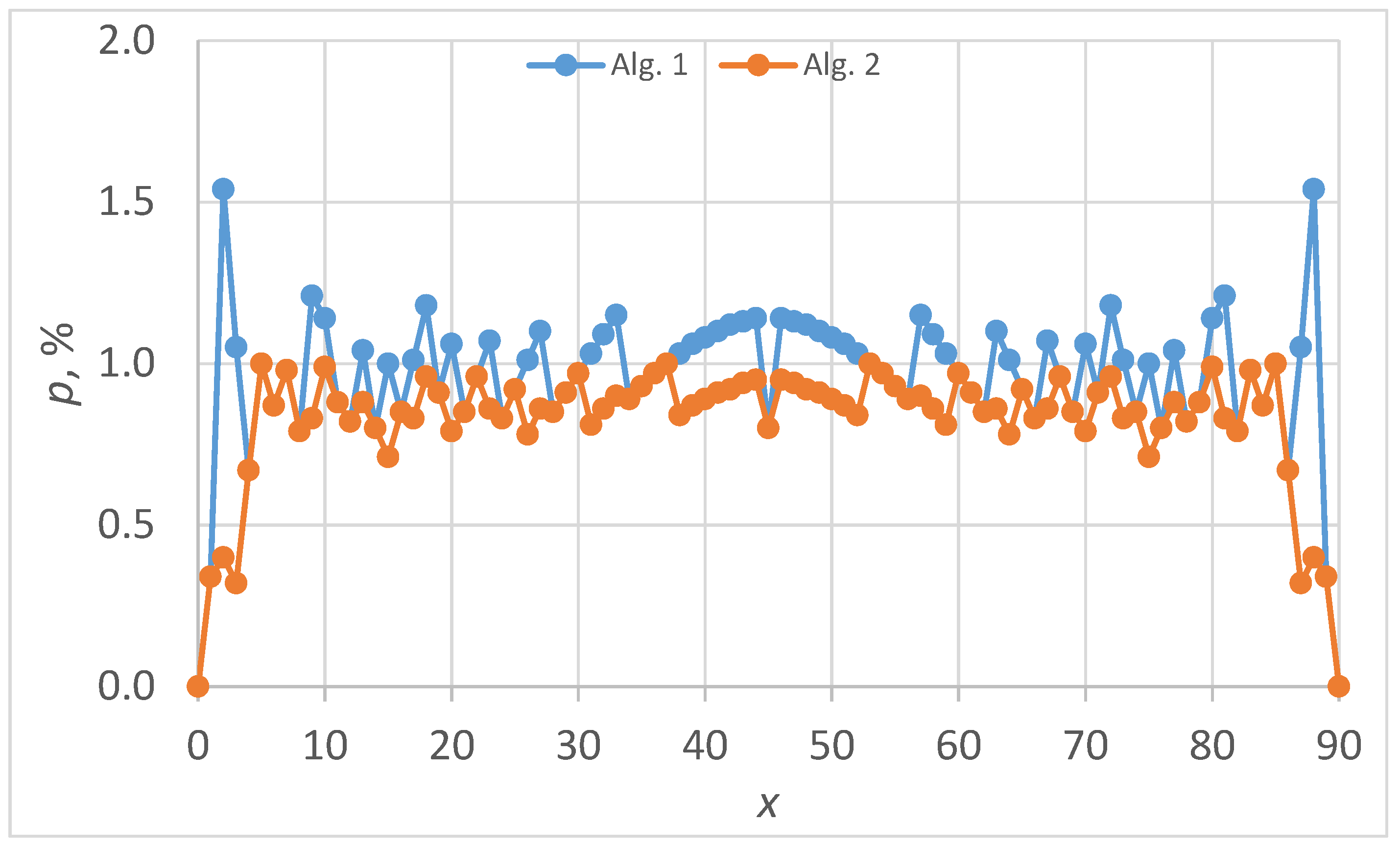

Figure 4.

The alternative proposed by Algorithm 2 in Figure 4 jumps below and above the imposed level of 1%, with the smallest deviation from it, while the one proposed by Algorithm 1 in Figure 4 lies below the imposed level of 1%, being the closest choice to it. There are 13 (out of 31, 41.94%) common values.

If one uses Equation (5) instead of Algorithm 2, 9 events will not be included in the CI, while another 9 will be omitted to be included, giving a total error rate of 5.57% (323 events are to be included in total). If one uses Equation (5) instead of Algorithm 1, 16 events will not be included in the CI, while another 16 will be omitted to be included, giving a total error rate of 9.38% (341 events are to be included in total). Furthermore, in this instance, in 22 cases, the CI provided has a coverage smaller than the one imposed by the significance level, providing an error rate of 71.0% (there are 31 cases in total).

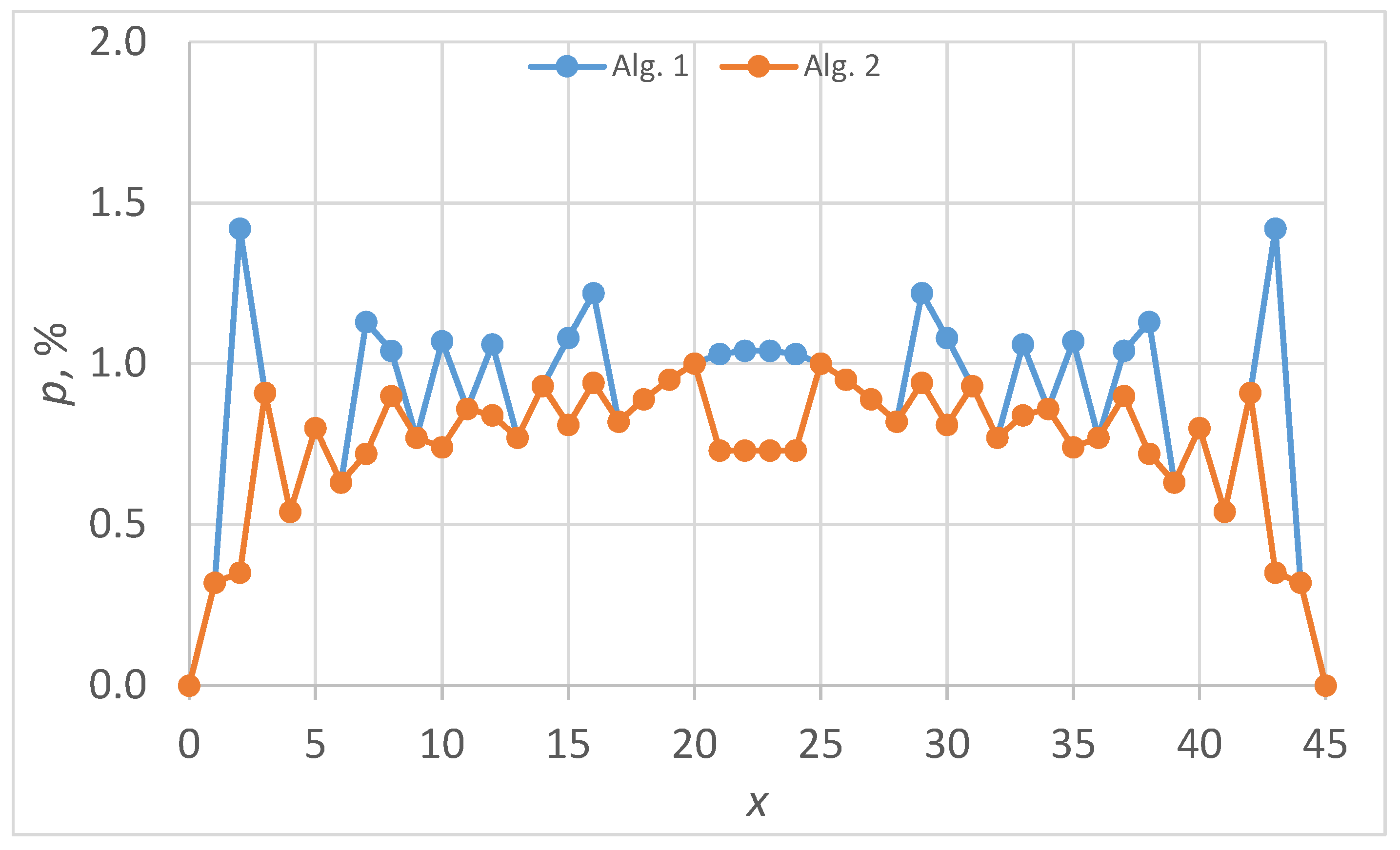

5.3. Case Study 2: m = 45

The series values constructing the CIs are given in the Appendix at the m = 45 entry. The plot of actual non-coverage probabilities is given in Figure 5.

The alternative proposed by Algorithm 2 in Figure 5 jumps below and above the imposed level of 1%, with the smallest deviation from it, while the one proposed by Algorithm 1 in Figure 5 lies below the imposed level of 1%, being the closest choice to it. There are 24 (out of 46, 52.17%) common values.

If one uses Equation (5) instead of Algorithm 2, 15 events will not be included in the CI, while another 15 will be omitted to be included, giving a total error rate of 4.97% (604 events are to be included in total). If one uses Equation (5) instead of Algorithm 1, 22 events will not be included in the CI, while another 22 will be omitted to be included, giving a total error rate of 7.07% (622 events are to be included in total). Furthermore, in this instance, in 19 cases, the CI provided has a coverage smaller than the one imposed by the significance level, providing an error rate of 41.3% (there are 46 cases in total).

5.4. Case Study 3: m = 90

The series values constructing the CIs are given in the Appendix at the m = 90 entry. The plot of actual non-coverage probabilities is given in Figure 6.

The alternative proposed by Algorithm 2 in Figure 6 jumps below and above the imposed level of 1%, with the smallest deviation from it, while the one proposed by Algorithm 1 in Figure 6 lies below the imposed level of 1%, being the closest choice to it. There are 69 (out of 91, 75.82%) common values.

If one uses Equation (5) instead of Algorithm 2, 34 events will not be included in the CI, while another 34 will be omitted to be included, giving a total error rate of 3.97% (1715 events are to be included in total). If one uses Equation (5) instead of Algorithm 1, 54 events will not be included in the CI, while another 54 will be omitted to be included, giving a total error rate of 6.14% (1759 events are to be included in total). Furthermore, in this instance, in 66 cases, the CI provided has a coverage smaller than the one imposed by the significance level, providing an error rate of 72.5% (there are 91 cases in total).

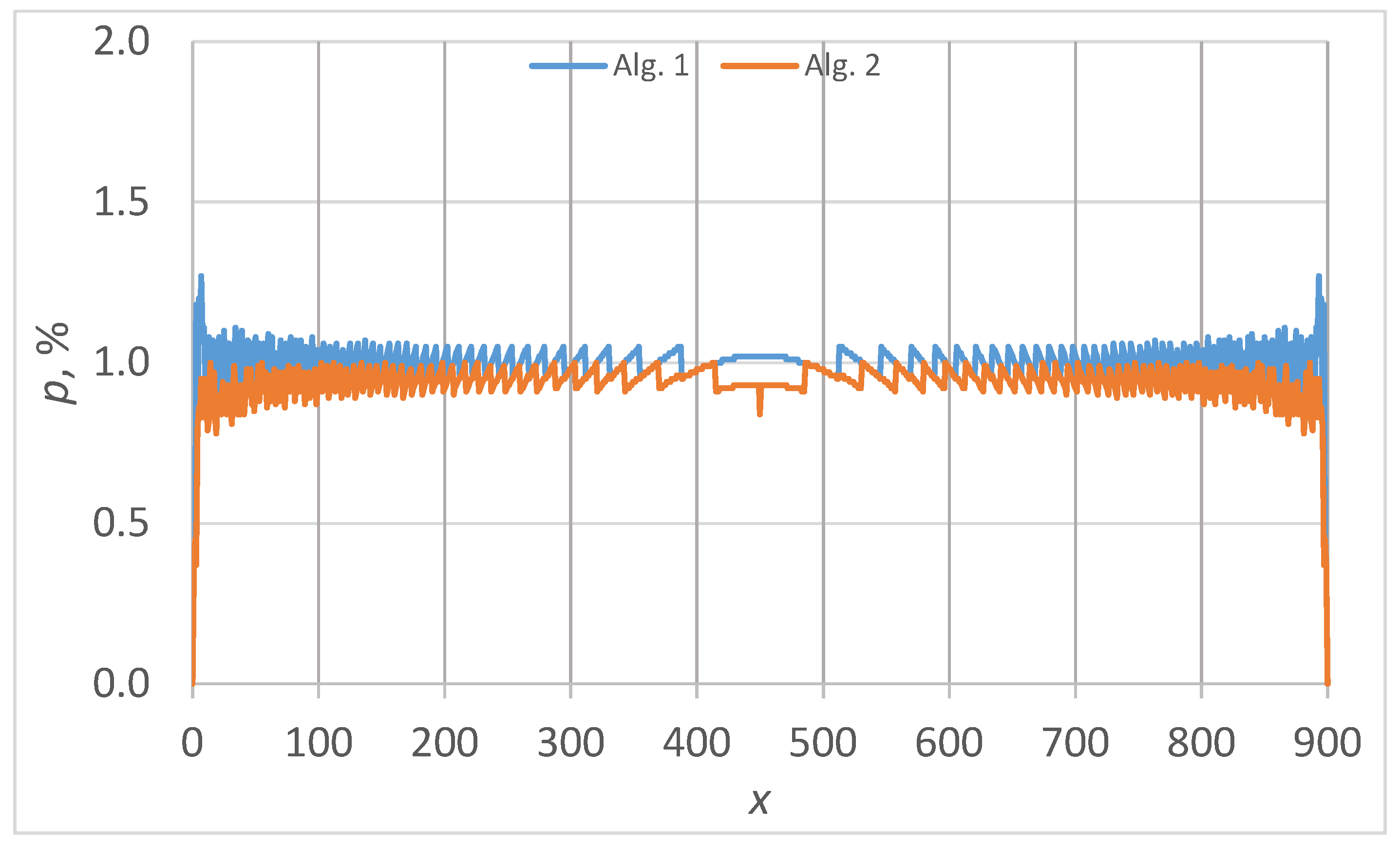

5.5. Case Study 4: m = 900

The values of the numbers constructing the confidence intervals are given in Section 8 for m = 900. The coverages were obtained with Algorithm 2 for CI2 and Algorithm 1 for CI3. The plot of the actual non-coverage probabilities is given in Figure 7.

The points at which CI2 and CI3 differ are hardly visible in Figure 7, confirming the decreasing tendency of their proportion.

If one uses Equation (5) instead of Algorithm 2, 282 events will not be included in the CI, while another 282 will be omitted to be included, giving a total error rate of 1.03% (54,577 events are to be included in total). If one uses Equation (5) instead of Algorithm 1, 452 events will not be included in the CI, while another 452 will be omitted to be included, giving a total error rate of 1.70% (55,037 events are to be included in total). Furthermore, in this instance, in 641 cases, the CI provided has a coverage smaller than the one imposed by the significance level, providing an error rate of 71.1% (there are 901 cases in total).

6. Discussion

The two proposed algorithms (Algorithms 1 and 2) work as intended, and a series of cases were used to emphasize their behavior. On average, the actual non-coverage probability provided by Algorithm 2 is closer to the imposed level than the one provided by Algorithm 1. The averages are given in Table 2.

The average of the actual non-coverage is not monotonically convergent to the imposed level (see the value of actual non-coverage for vs. for and from Algorithm 2). The averages for the actual non-coverage provided by the Algorithm 1 are slightly more departed (in average) from the imposed level, the constraint of always being smaller than the imposed level explaining this behavior. The tendency in convergence to the imposed level is with (about for Algorithm 2 CI average coverages and slightly more accelerated for Algorithm 2—about ).

One major point is to provide the simplest answers to simple questions. Here are three:

6.1. Question Q1

Are there differences when a different significance level is used?

There definitely are differences at any change of the imposed level of significance. As the significance level increases, the CI width decreases. In the following cases, the significance level has been changed from 1% to 5% (a five times bigger risk of being in error).

6.2. Question Q2

Having now two alternatives (Algorithms 1 and 2), which algorithm should be used to express the CI for a binomial variable?

The constraints defined in the algorithms, as well as the run results provided in Figures 4 to 7 and Table 2, indicate that Algorithm 2 is suited for the case when the request is to provide a CI with coverage as close as possible to the imposed value. On the other hand, Algorithm 1 is suited for the case when the request is to provide a CI with coverage closest but always at least equal to the imposed value.

6.3. Question Q3

How should one deal with binomial proportions instead of binomial variables?

The main issue is the habit of simplification. For instance, for , one often writes , for , one writes or , but it is, in fact, not the same thing.

The preference for real numbers is obvious: people are much more comfortable with them and they quickly indicate the order of magnitude (0.0… or 0.1… is close to 0, 0.8… or 0.9… is close to 1, 0.4… or 0.5… is close to 0.5), which allows an instant interpretation. At the same time, however, it lets something slip through the cracks. One can express (with three decimal places, for example) any real number between 0 and 1, but its exact value cannot be obtained as a proportion of two integers, except under certain conditions and in very few situations (one of these being that the sample size is 1000; for the sample size 997, however, there is no non-trivial situation).
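The remark about sample sizes 1000 and 997 can be checked directly. A proportion x/m is written exactly with three decimal places only when 1000·x is divisible by m; the helper name `exact_three_decimal_counts` below is hypothetical.

```python
def exact_three_decimal_counts(m):
    """Counts x in 0..m for which x/m is exactly a multiple of 1/1000."""
    return [x for x in range(m + 1) if (1000 * x) % m == 0]

all_counts_1000 = exact_three_decimal_counts(1000)
all_counts_997 = exact_three_decimal_counts(997)

print(len(all_counts_1000))  # 1001: every count from 0 to 1000 qualifies
print(all_counts_997)        # [0, 997]: only the trivial proportions 0 and 1
```

Since 997 is prime and shares no factor with 1000, only x = 0 and x = 997 pass the test, so any three-decimal value reported for such a sample is a rounding and not the proportion itself.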

Thus, for

positive outcomes from

trials, in order to pass along all information, one would firstly need a convention to express the proportion as is, without simplifying it (binomial proportion is then

), and the CI is expressed accordingly with fractions too. For 95% CI, simply using the data given in

Section 8, with Algorithm 2, CI is

and its actual coverage is 95.63%. Further examples are given in

Table 3 (

series given in

Section 8).

6.4. Real-World Data Analysis

In [22], a study was conducted with 20 patients subject to dermal regeneration template placement over areas with exposed bone/tendon prior to split-thickness skin grafting, all being confirmed clinically and microbiologically negative for previously treated infection. With non-invasive fluorescence imaging, the bacterial load was identified using a defined threshold in eight out of twenty patients. Out of eight, four developed an infection. Three out of the four infections were positive for Pseudomonas. Based on the study data, the following research questions can be formed (the null hypothesis is that the effect being studied does not exist, and the observation can be merely by chance):

Q4: Was the detection of the bacterial load with non-invasive fluorescence imaging statistically significant in the group, or can it be asserted as being observed by chance?

Q5: Was the developing of a bacterial infection a real risk for the patients following the procedure, or can it be asserted as being observed by chance?

Q6: Was Pseudomonas a real threat for the patients following the procedure, or can it be asserted as being observed by chance?

Q7: Was the developing of a bacterial infection a real risk for the patients possessing bacterial load detected by non-invasive fluorescence imaging, or can it be asserted as being observed by chance?

Q8: Was infection with Pseudomonas a real risk for the patients possessing bacterial load detected by non-invasive fluorescence imaging, or can it be asserted as being observed by chance?

In [23], a survey study collected data from 28,346 cases of abortions performed before the ninth week of pregnancy, in some of which the MEFEEGO Pack was used. On 26 April 2023, the Japanese Ministry of Health, Labour and Welfare approved the use of the medical abortion pill package, called MEFEEGO Pack in Japan, with the condition that the patient undergoes either hospitalization or outpatient observation at a facility with beds. It was used in 435 cases, with no serious complications. The question (let Q9 be this question) is whether the patients with MEFEEGO Pack represented a significant proportion within the sample.

Table 5 summarizes this analysis.

Observation by chance increases slowly with sample size. Thus, at a significance level of 1%,

3 out of 9 can be by chance; at 4 out of 9, the null hypothesis that the observation can be merely by chance must be rejected (see Table 1);

4 out of 15 can be by chance; at 5 out of 15, the null hypothesis that the observation can be merely by chance must be rejected (see

series in

Section 8 for

, Algorithm 2, and

);

4 out of 15, 30, 45, of 90, and of 900 can be by chance; at 4 out of 15, 30, 45, of 90, and of 900, the null hypothesis that the observation can be merely by chance must be rejected (see

series in

Section 8 for Algorithm 2 and

) at

,

,

,

, and

;

For data in Table 5: 4 out of 28,346 can be by chance, while at 5 out of 28,346, the null hypothesis that the observation can be merely by chance must be rejected.

Observation by chance increases much faster with significance level. Thus, if at significance level of 5%, 3 out of 28,346 can be by chance while 4 out of 28,346 cannot, at significance level of 1‰, 7 out of 28,346 is still by chance while 8 out of 28,346 is not.

7. Applications

In this section, for a series of data taken from two literature sources, the CIs using the proposed method are calculated in order to provide a clear reproducibility of the results and to make available a comparison with any other existing methods.

In [

24], it was communicated that the primary efficacy end point was observed in 135 of 173 patients in the scaffold group and 48 of 88 patients in the angioplasty group. The authors used the Kaplan–Meier estimate [

25] to get an absolute difference and its associated 95% CI. Using Algorithm 2, 95% CI for

in the scaffold group is

, with a true non-coverage of 5.3%, while with Algorithm 1, 95% CI is

, with a true non-coverage of 4.6%. Using Algorithm 2, 95% CI for

in the angioplasty group is

, with a true non-coverage of 5.5%, while with Algorithm 1, 95% CI is

, with a true non-coverage of 4.6%. In the same study [

24], it was communicated that the primary safety end point was observed in 165 of 170 patients in the scaffold group and 90 of 90 patients in the angioplasty group. Involving Algorithm 2, 95% CI for

in the scaffold group is obtained as

, with a true non-coverage of 3.6%, while with Algorithm 1, 95% CI is obtained the same. Involving either of Algorithms 1 and 2, 95% CI for

in the angioplasty group is obtained as

, with a true non-coverage of 3.6%, while with Algorithm 1, 95% CI is obtained the same. In all cases, there is no overlap between the CIs of the scaffold and angioplasty groups, so there are statistically significant differences. To get an accurate CI for the difference of the two proportions (excess risk), an algorithm-based method (such as the ones reported in [

26]) should be used.

In [27], 3200 women were split into intervention (1593) and control (1607) groups. After assignment, 3195 women remained eligible (1590 in the intervention group and 1605 in the control group). Overall, 3061 live births occurred, with 1536 in the intervention group and 1525 in the control group. A total of 85 severe pneumonia cases were identified in the intervention group of 1498 and 90 severe pneumonia cases in the control group of 1486, as well as 182 pneumonia cases, according to WHO IMCI (World Health Organization Integrated Management of Childhood Illness) guidelines [28] (see also Supplementary Appendix of [27]), in the intervention group of 1498 and 197 in the control group of 1498 (see Figure 2 in [27]). A first concern is whether there is a statistical difference in the split between the two groups, calculating the CI with Algorithms 1 and 2. Thus, with Algorithm 2,

For , 95% CI with Algorithm 2 is , and for , 95% CI is with an obvious overlap, so no statistical difference here;

For , 95% CI with Algorithm 2 is , and for , 95% CI is with an obvious overlap, so no statistical difference here either;

For , 95% CI with Algorithm 2 is , and for , 95% CI is with an obvious overlap, so no statistical difference here either.

Moreover, with Algorithm 1,

For , 95% CI with Algorithm 2 is , and for , 95% CI is , identical with the one provided by Algorithm 2;

For , 95% CI with Algorithm 2 is , and for , 95% CI is , identical with the one provided by Algorithm 2;

For , 95% CI with Algorithm 2 is , and for , 95% CI is with an obvious overlap, so no statistical difference here either.

As the results show, for large samples, there is a smaller likelihood that the CI provided by Algorithm 1 and Algorithm 2 is different (in the 2/3 cases above, it was identical). Continuing with the pneumonia cases, have 95% CI with Algorithm 2 as , and have 95% CI as and with Algorithm 1 as , and have 95% CI as . The CIs overlap, so no statistical difference here either. Finally, for pneumonia cases, according to WHO IMCI guidelines, have 95% CI with Algorithm 2 as , and have 95% CI as , and with Algorithm 1, have 95% CI as , and have 95% CI as . The CIs overlap, so no statistical difference here either, even if the difference (from 182 to 197) seemed significant.

8. Raw Data: Ordered Integer Sequences

8.1. Series for , , , and at

The series of numbers are to be used to generate 99% coverage CIs (

) for the binomial variables provided in

Table 6. For a variable

x from a sample of size

m, the interval is

.

8.2. Series for and

From Algorithm 1: 0 0 0 0 0 1 0 2 1 3 3 4 4 4 6 6 6 8 8 8 10 11 11 11 13 14 14 14 16 17 17 17 19 20 21 21 21 23 24 25 25 25 27 28 29 29 30 30 32 33 34 34 35 35 37 38 39 39 40 40 41 43 44 45 45 46 46 48 49 50 49 51 52 52 53 55 56 55 56 58 59 59 60 62 62 62 63 65 66 66 67 69 69 69 70 71 73 74 74 76 76 77 78 78 79 81 82 83 84 85 85 86 87 87 88 90 91 92 93 94 94 95 96 96 97 98 100 101 102 103 104 105 105 106 107 107 108 109 111 112 113 114 115 116 116 117 118 118 119 120 122 123 124 125 126 127 128 128 129 130 130 131 132 133 135 136 137 138 139 140 141 141 142 143 143 144 145 146 148 149 150 151 152 153 154 155 155 156 157 158 158 159 160 161 163 164 165 166 167 168 169 170 171 171 172 173 174 174 175 176 177 178 180 181 182 183 184 185 186 187 188 189 189 190 191 192 193 193 194 195 196 197 199 200 201 202 203 204 205 206 207 208 209 209 210 211 212 213 213 214 215 216 217 218 220 221 222 223 224 225 226 227 228 229 230 231 232 232 233 234 235 236 237 237 238 239 240 241 242 243 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 259 260 261 262 263 264 265 266 267 267 268 269 270 271 272 273 274 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 294 295 296 297 298 299 300 301 302 303 304 305 305 306 307 308 309 310 311 312 313 314 315 316 318 319 320 321 322 323 324 325 326 327 328 329 330 331 332 333 334 335 336 337 338 339 340 341 342 343 344 345 346 347 348 349 350 350 351 352 353 354 355 356 357 358 359 360 361 362 363 364 365 366 367 368 369 370 371 372 373 374 375 376 376 377 378 379 380 381 382 383 384 385 386 387 388 389 390 391 392 393 394 395 396 397 398 399 400 401 402 403 404 405 406 407 408 409 410 411 413 414 415 416 417 418 419 420 421 422 423 424 425 426 427 428 429 430 431 432 433 434 435 436 437 438 439 440 441 442 443 444 445 446 447 448 449 450 451 452 453 454 455 456 457 458 459 460 461 462 463 464 465 466 467 468 469 470 471 472 473 474 474 475 476 477 478 479 480 481 482 483 484 485 486 487 488 489 490 491 493 
494 495 496 497 498 499 500 501 502 503 504 505 506 507 509 510 511 512 513 514 515 516 517 518 519 520 521 522 523 524 525 526 527 528 529 530 531 532 532 533 534 535 536 537 538 539 540 541 543 544 545 546 547 548 549 550 551 553 554 555 556 557 558 559 560 561 562 563 564 565 566 567 568 569 569 570 571 572 573 574 575 577 578 579 580 581 582 583 584 586 587 588 589 590 591 592 593 594 595 596 597 598 598 599 600 601 602 603 604 606 607 608 609 610 611 613 614 615 616 617 618 619 620 621 622 623 623 624 625 626 627 628 630 631 632 633 634 636 637 638 639 640 641 642 643 644 645 645 646 647 648 649 651 652 653 654 655 657 658 659 660 661 662 663 664 665 665 666 667 668 670 671 672 673 674 676 677 678 679 680 681 682 683 683 684 685 686 688 689 690 691 693 694 695 696 697 698 699 699 700 701 702 704 705 706 708 709 710 711 712 713 714 714 715 716 718 719 720 721 723 724 725 726 727 728 728 729 730 732 733 734 736 737 738 739 740 741 741 742 743 745 746 747 749 750 751 752 753 754 754 755 757 758 759 761 762 763 764 765 765 766 768 769 770 772 773 774 775 776 776 778 779 780 782 783 784 785 785 787 788 789 790 792 793 794 794 796 797 798 799 801 802 802 803 805 806 807 808 810 809 811 812 814 815 816 816 817 819 821 822 823 824 824 826 827 829 830 831 831 832 834 836 837 838 838 840 841 843 844 844 845 847 849 850 850 852 853 855 856 856 858 860 861 861 863 865 866 866 868 870 871 872 874 875 876 878 879 880 882 882 885 885 888 888 891 892 894 896 900

From Algorithm 2: 0 0 0 0 0 1 1 2 2 3 3 4 4 5 6 6 7 8 8 9 10 11 11 12 13 14 14 15 16 17 17 18 19 20 21 21 22 23 24 25 25 26 27 28 29 29 30 31 32 33 34 34 35 36 37 38 39 39 40 41 42 43 44 45 45 46 47 48 49 50 50 51 52 53 54 55 56 56 57 58 59 60 61 62 62 63 64 65 66 67 68 69 69 70 71 72 73 74 75 76 76 77 78 79 80 81 82 83 84 85 85 86 87 88 89 90 91 92 93 94 94 95 96 97 98 99 100 101 102 103 104 105 105 106 107 108 109 110 111 112 113 114 115 116 116 117 118 119 120 121 122 123 124 125 126 127 128 128 129 130 131 132 133 134 135 136 137 138 139 140 141 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 294 295 296 297 298 299 300 301 302 303 304 305 306 307 308 309 310 311 312 313 314 315 316 317 318 319 320 321 322 323 324 325 326 327 328 329 330 331 332 333 334 335 336 337 338 339 340 341 342 343 344 345 346 347 348 349 350 350 351 352 353 354 355 356 357 358 359 360 361 362 363 364 365 366 367 368 369 370 371 372 373 374 375 376 377 378 379 380 381 382 383 384 385 386 387 388 389 390 391 392 393 394 395 396 397 398 399 400 401 402 403 404 405 406 407 408 409 410 411 412 413 414 415 416 417 418 419 420 421 422 423 424 425 426 427 428 429 430 431 432 433 434 435 436 437 438 439 440 441 442 443 444 445 446 447 448 449 450 451 452 453 454 455 456 457 458 459 460 461 462 463 464 465 466 467 468 469 470 471 472 473 474 475 476 477 478 479 480 481 482 483 484 485 486 487 488 489 490 491 492 493 
494 495 496 497 498 499 500 501 502 503 504 505 506 507 509 510 511 512 513 514 515 516 517 518 519 520 521 522 523 524 525 526 527 528 529 530 531 532 533 534 535 536 537 538 539 540 541 542 543 544 545 546 547 548 549 550 551 553 554 555 556 557 558 559 560 561 562 563 564 565 566 567 568 569 570 571 572 573 574 575 576 577 578 579 580 581 582 583 584 586 587 588 589 590 591 592 593 594 595 596 597 598 599 600 601 602 603 604 605 606 607 608 609 610 611 613 614 615 616 617 618 619 620 621 622 623 624 625 626 627 628 629 630 631 632 633 634 636 637 638 639 640 641 642 643 644 645 646 647 648 649 650 651 652 653 654 655 657 658 659 660 661 662 663 664 665 666 667 668 669 670 671 672 673 674 676 677 678 679 680 681 682 683 684 685 686 687 688 689 690 691 693 694 695 696 697 698 699 700 701 702 703 704 705 706 708 709 710 711 712 713 714 715 716 717 718 719 720 721 723 724 725 726 727 728 729 730 731 732 733 734 736 737 738 739 740 741 742 743 744 745 746 747 749 750 751 752 753 754 755 756 757 758 759 761 762 763 764 765 766 767 768 769 770 772 773 774 775 776 777 778 779 780 782 783 784 785 785 787 788 789 790 792 793 794 794 796 797 798 799 801 802 802 803 805 806 807 808 810 810 811 812 814 815 816 817 818 819 821 822 823 824 825 826 827 829 830 831 832 833 834 836 837 838 839 840 841 843 844 845 846 847 849 850 851 852 853 855 856 857 858 860 861 862 863 865 866 867 868 870 871 872 874 875 876 878 879 880 882 883 885 886 888 889 891 893 894 896 900

8.3. Series for , , , and , at

The series of numbers are to be used to generate the 95% coverage CIs ( ) provided in Table 7. For a variable x from a sample of size m, the interval is .

9. Conclusions

The problem of calculating CIs (with the conventional risk of 5% or otherwise) was solved using deterministic algorithms. Two algorithms were proposed: one that minimizes the departure from the imposed level, and another that also minimizes the departure from the imposed level, but under the constraint of always remaining smaller than the imposed level. A series of examples served to illustrate and compare the solutions. When the two solutions are compared, the proportion of identical CIs increases with the sample size. Likewise, the difference between the actual coverage and the imposed coverage shrinks as the sample size increases. As the trend in the error rate (calculated for a series of increasing sample sizes) shows, the CI calculated with Algorithm 2 and the CI calculated with the Wald method converge asymptotically.
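The shrinking gap between actual and imposed coverage can be checked numerically. The sketch below is a minimal illustration and does not reproduce Algorithm 1 or Algorithm 2. It assumes a Wald-type interval expressed on the count scale and assumes that coverage is evaluated as the probability that a repeated binomial draw with p = x/m falls inside the interval. The function names and the normal quantile z = 1.959964 are choices made for this example only.

```python
from math import ceil, comb, floor, sqrt


def binom_pmf(k, m, p):
    """Probability of exactly k successes in m Bernoulli trials."""
    return comb(m, k) * p**k * (1 - p) ** (m - k)


def wald_count_interval(x, m, z=1.959964):
    """Wald-type interval on the count scale (assumed form, not Algorithm 1 or 2)."""
    p = x / m
    half_width = z * sqrt(m * p * (1 - p))
    lower = max(0, ceil(x - half_width))
    upper = min(m, floor(x + half_width))
    return lower, upper


def actual_coverage(x, m, lower, upper):
    """Probability that a binomial draw with p = x/m lands in [lower, upper]."""
    p = x / m
    return sum(binom_pmf(k, m, p) for k in range(lower, upper + 1))


def mean_abs_departure(m, level=0.95):
    """Average |actual - imposed| coverage over x = 1..m-1."""
    departures = []
    for x in range(1, m):
        lower, upper = wald_count_interval(x, m)
        departures.append(abs(actual_coverage(x, m, lower, upper) - level))
    return sum(departures) / len(departures)


if __name__ == "__main__":
    for size in (10, 100, 500):
        print(size, round(mean_abs_departure(size), 4))
```

Because the counts are integers, the actual coverage can only change in steps. Those steps become finer as m grows, which is why the average departure from the imposed 95% level decreases in this sketch.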