A Discrete-Time Neurodynamics Scheme for Time-Varying Nonlinear Optimization with Equation Constraints and Application to Acoustic Source Localization

Abstract

1. Introduction

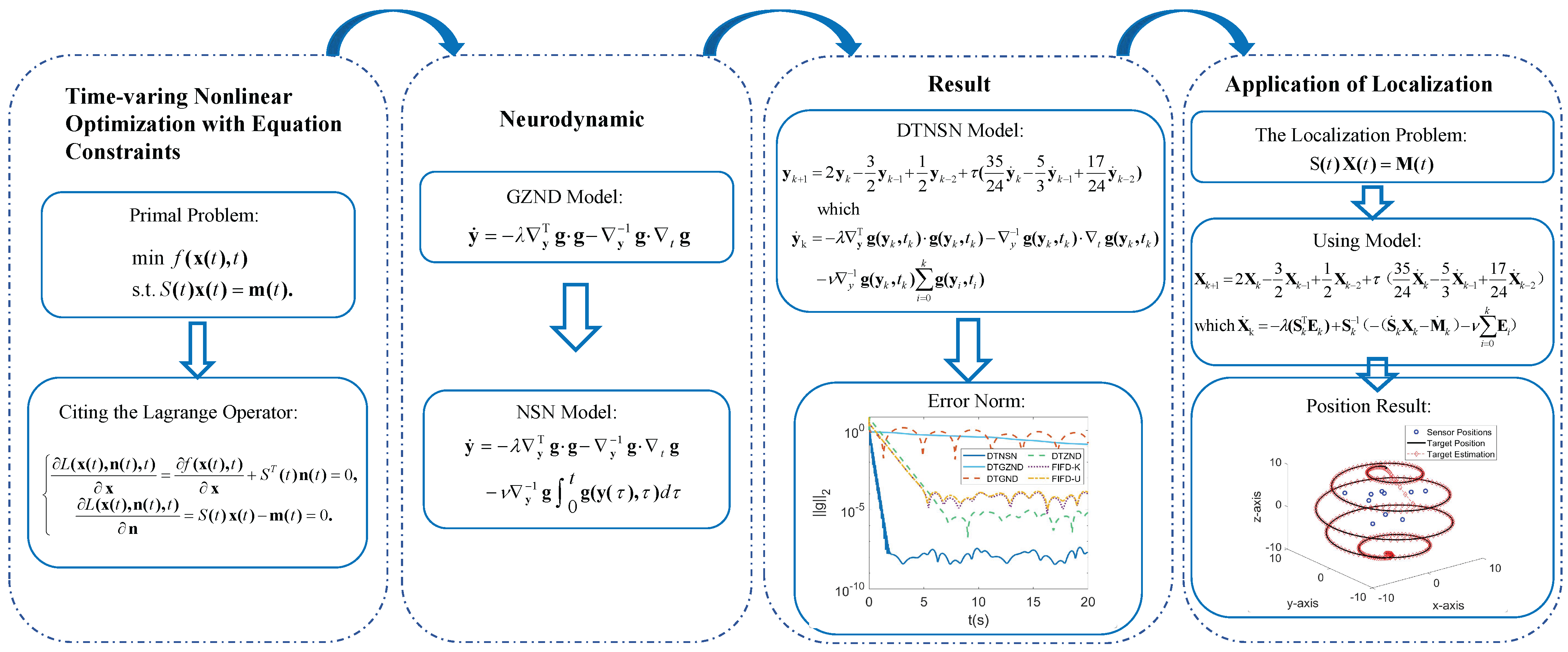

- This paper constructs a DTNSN model for solving time-varying nonlinear optimization with equation constraints. Compared with existing models, the DTNSN model offers better convergence performance and noise suppression.

- The DTNSN model uses an explicit linear three-step discretization method, which makes it easier to implement in hardware.

- In numerical simulations, the DTNSN model shows good convergence performance and noise suppression across several types of time-varying nonlinear optimization problems with equation constraints.

- Applied to acoustic source localization, the DTNSN model outperforms the traditional Kalman filter, which demonstrates its practical utility.
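To illustrate what an explicit linear three-step discretization looks like in practice, the following minimal sketch integrates a scalar test equation with the textbook three-step Adams-Bashforth rule. This rule is used here only as a stand-in and is an assumption; it is not claimed to be the discretization formula behind the DTNSN model. The function name `ab3_solve`, the test equation x' = -x, and the step size are likewise hypothetical choices made for illustration.

```python
import math

def ab3_solve(f, x0, t0, tau, n_steps):
    """Integrate x' = f(t, x) with the explicit three-step Adams-Bashforth rule.

    Stand-in example of an explicit linear three-step method; not the
    paper's own discretization formula.
    """
    ts = [t0 + k * tau for k in range(n_steps + 1)]
    xs = [x0]
    # Warm-up: a three-step rule needs three past values, so the first
    # two steps are taken with explicit Euler.
    for k in range(min(2, n_steps)):
        xs.append(xs[k] + tau * f(ts[k], xs[k]))
    # Main loop: each new value is a fixed linear combination of already
    # computed quantities, so no equation has to be solved per step.
    for k in range(2, n_steps):
        f0 = f(ts[k], xs[k])
        f1 = f(ts[k - 1], xs[k - 1])
        f2 = f(ts[k - 2], xs[k - 2])
        xs.append(xs[k] + tau * (23 * f0 - 16 * f1 + 5 * f2) / 12)
    return ts, xs

# Test equation x' = -x with x(0) = 1, whose exact solution is exp(-t).
ts, xs = ab3_solve(lambda t, x: -x, 1.0, 0.0, 0.01, 100)
error_at_end = abs(xs[-1] - math.exp(-1.0))
print(f"absolute error at t = 1: {error_at_end:.2e}")
```

Because the update uses only stored past values and fixed coefficients, each step reduces to a handful of multiply-add operations, which is the usual reason explicit multi-step rules are regarded as hardware-friendly.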

2. Problem Statement and Model Construction

2.1. Problem Statement

2.2. Continuous Time Modeling

2.3. DTNSN Model

| Algorithm 1 DTNSN (13) for Solving Time-Varying Nonlinear Optimization with Equation Constraints (1) | |
|---|---|
| Require: , , , , | ▹ Time Complexity: |
| Ensure: | |
| 1: Initialize: | ▹ |
| 2: Initialize: | ▹ |
| 3: for to 2 do | ▹ |
| 4: | ▹ |
| 5: | ▹ |
| 6: end for | |
| 7: for to do | ▹ |
| 8: | ▹ |
| 9: | ▹ |
| 10: end for | |
| Note: denotes the time complexity, where . | |
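The two-phase structure of Algorithm 1 (a short start-up loop followed by the main loop) can be sketched on a toy problem. Everything specific below is an assumption made for illustration: the toy objective and constraint, the zeroing-type design rule, the Euler start-up steps, the Taylor-type update coefficients (1.5, -1, 0.5), and the names `h`, `h_t`, `solve_kkt`, `y_dot`, and `track`. None of it is taken from Equation (13), and the sketch does not include any noise-suppression mechanism.

```python
import math

def h(y, t):
    """KKT residual of the toy problem
    min 0.5*(x1^2 + x2^2) - sin(t)*x1 - cos(t)*x2  s.t.  x1 + x2 = sin(2t),
    with y = [x1, x2, lam] (lam is the Lagrange multiplier)."""
    x1, x2, lam = y
    return [x1 - math.sin(t) + lam,
            x2 - math.cos(t) + lam,
            x1 + x2 - math.sin(2 * t)]

def h_t(t):
    """Partial derivative of h with respect to time."""
    return [-math.cos(t), math.sin(t), -2 * math.cos(2 * t)]

def solve_kkt(r):
    """Solve H d = r for the constant Jacobian H = [[1,0,1],[0,1,1],[1,1,0]]."""
    d3 = (r[0] + r[1] - r[2]) / 2
    return [r[0] - d3, r[1] - d3, d3]

def y_dot(y, t, gamma):
    """Zeroing-type dynamics: H * y' = -(gamma * h + dh/dt)."""
    return solve_kkt([-(gamma * a + b) for a, b in zip(h(y, t), h_t(t))])

def track(y0, tau, gamma, n_steps):
    ys = [list(y0)]
    # Start-up loop: two Euler steps provide the history the main rule needs.
    for k in range(2):
        d = y_dot(ys[k], k * tau, gamma)
        ys.append([a + tau * b for a, b in zip(ys[k], d)])
    # Main loop: explicit three-step update built from a Taylor-type
    # difference formula (an assumed choice, not the paper's formula).
    for k in range(2, n_steps):
        d = y_dot(ys[k], k * tau, gamma)
        ys.append([1.5 * a - b + 0.5 * c + tau * e
                   for a, b, c, e in zip(ys[k], ys[k - 1], ys[k - 2], d)])
    return ys

tau, gamma, n_steps = 0.01, 10.0, 1000
ys = track([0.0, 0.0, 0.0], tau, gamma, n_steps)
final_residual = math.sqrt(sum(v * v for v in h(ys[-1], n_steps * tau)))
print(f"final KKT residual norm: {final_residual:.2e}")
```

In this sketch the product `tau * gamma` acts as the effective step size; it is set to 0.1 here, a value for which the assumed update is stable on this toy problem.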

2.4. Discrete Time Modeling

3. Theoretical Analysis and Results

3.1. Convergence Analysis Without Noise Disturbance

- Since , and therefore , and and are both real numbers, we can obtain which further leads to Thus, the vector error can be generalized as

- Since , . The vector error can be derived as

- Since , and are conjugate complex numbers, we obtain
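The three cases above follow the usual trichotomy for the roots of a quadratic characteristic equation. As a hedged reminder, assume that each error component $e_k$ obeys a second-order linear homogeneous recurrence with real coefficients $a$ and $b$ (symbols chosen here for illustration, not taken from the paper). The general solutions then take the following standard forms, with constants $c_1$, $c_2$ fixed by the initial values:

```latex
\begin{align*}
& e_{k+2} + a\,e_{k+1} + b\,e_k = 0, \qquad r^2 + a\,r + b = 0, \qquad \Delta = a^2 - 4b, \\
& \Delta > 0: \quad e_k = c_1 r_1^{k} + c_2 r_2^{k}, \qquad r_{1,2} = \frac{-a \pm \sqrt{\Delta}}{2} \in \mathbb{R}, \\
& \Delta = 0: \quad e_k = (c_1 + c_2 k)\, r^{k}, \qquad r = -\frac{a}{2}, \\
& \Delta < 0: \quad e_k = \rho^{k} \left( c_1 \cos k\theta + c_2 \sin k\theta \right), \qquad r_{1,2} = \rho\, e^{\pm i\theta}, \quad \rho = \sqrt{b}.
\end{align*}
```

In all three cases $e_k \to 0$ as $k \to \infty$ exactly when every root lies strictly inside the unit circle. The same case split applies to a second-order linear differential equation, with powers $r^k$ replaced by exponentials and the condition replaced by negative real parts.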

3.2. Convergence Analysis with Constant Noise Disturbance

3.3. Convergence Analysis with Time-Varying Linear Noise Disturbance

3.4. Convergence Analysis with Random Bounded Noise Disturbance

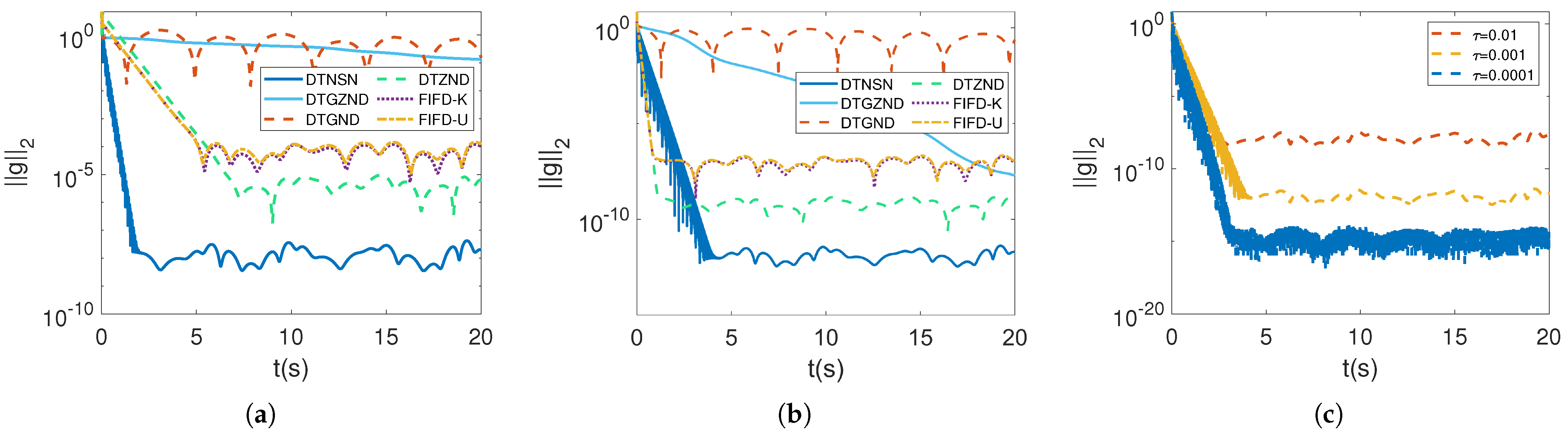

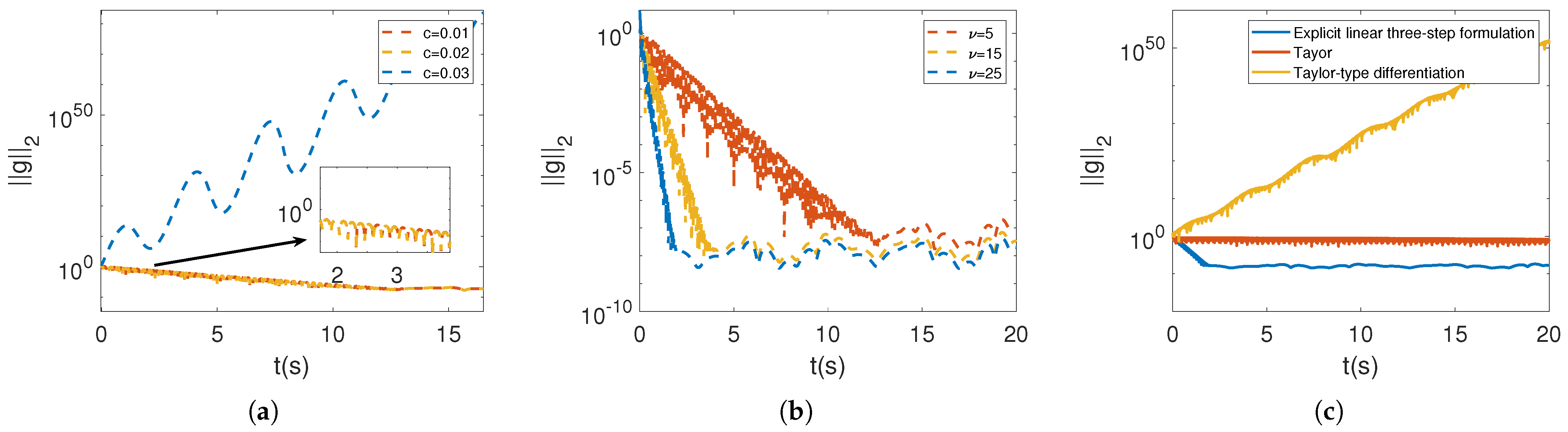

4. Simulative Verification

4.1. Comparison of Performance in a Noiseless Environment

4.2. Performance Comparison in Noisy Environments

4.2.1. Constant Noise Disturbance

4.2.2. Time-Varying Linear Noise Disturbance

4.2.3. Random Bounded Noise Disturbance

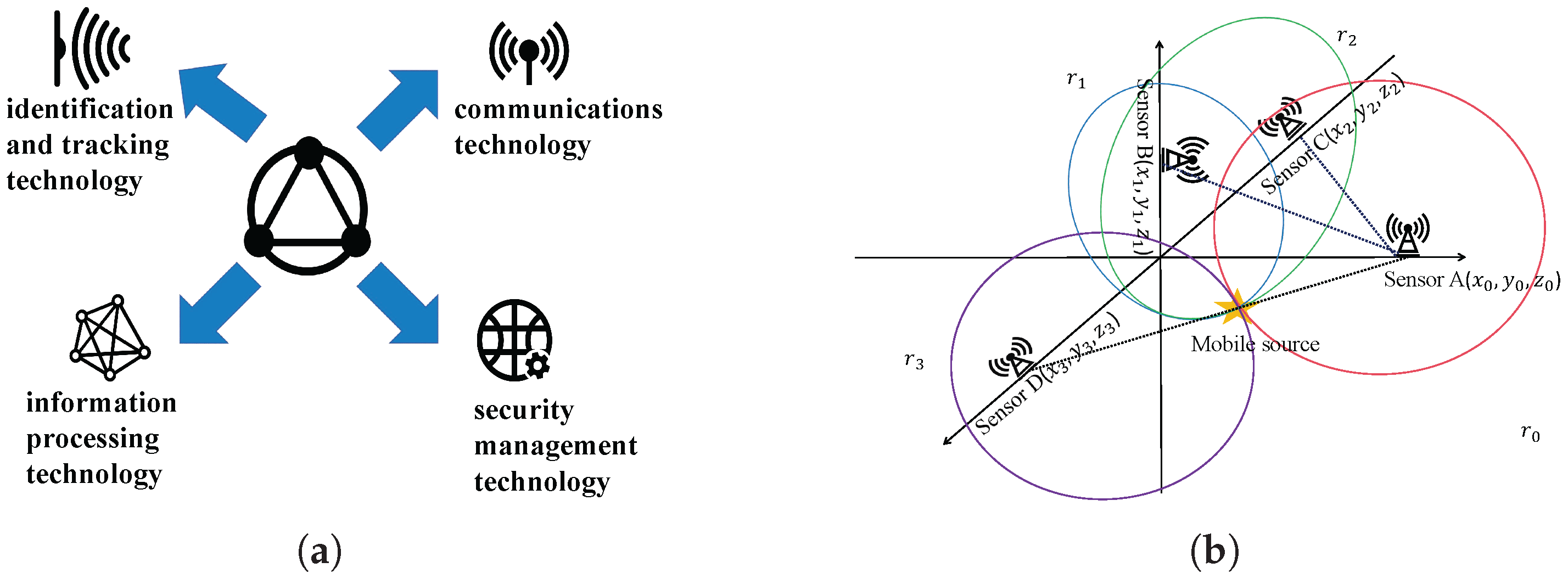

4.3. Example Simulation of Acoustic Source Localization in IIoT

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | EDI | Hyperparameters | MSSRE (Without Noise) | MSSRE (With CN) | MSSRE (With TVLN) | MSSRE (With RBN) |
|---|---|---|---|---|---|---|
| DTGZND (15) | Yes | | | | | |
| DTGND (16) | No | | | | | |
| DTZND (17) | Yes | | | | | |
| FIFD-K (20) | Yes | | | | | |
| FIFD-U (21) | No | | | | | |
| DTNSN (13) | Yes | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cui, Y.; Song, Z.; Wu, K.; Yan, J.; Chen, C.; Zhu, D. A Discrete-Time Neurodynamics Scheme for Time-Varying Nonlinear Optimization with Equation Constraints and Application to Acoustic Source Localization. Symmetry 2025, 17, 932. https://doi.org/10.3390/sym17060932
