4.1. Experimental Environment and Parameter Settings

The experiments were conducted on a 64-bit Windows operating system. The hardware configuration included an NVIDIA 3080Ti GPU with 12 GB of VRAM, an Intel (R) Core (TM) i7-11700K CPU, and 31.9 GB of RAM. The deep-learning framework used was PyTorch 2.3.0, with Python 3.8 as the programming language. The computational platform was CUDA 12.1. During training, the proposed model was developed based on the YOLOv5s architecture. The initial learning rate was set to 0.01, with a momentum of 0.937. The optimizer used was stochastic gradient descent (SGD) with momentum. Weight decay was set to 0.0005. The number of training epochs was 100, and the batch size was 16. All input images were resized to a resolution of 640 × 640 pixels.

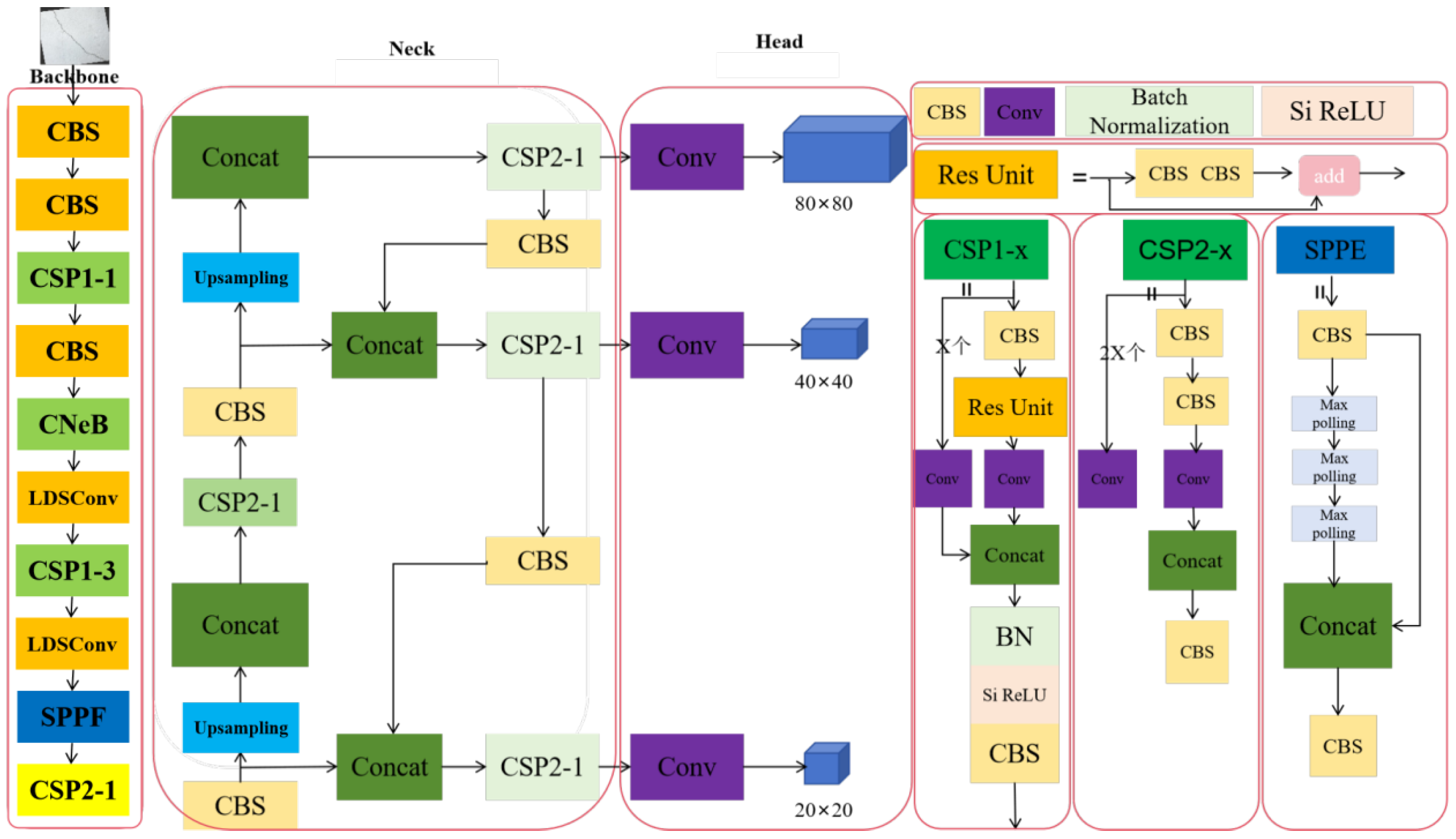

To validate the effectiveness of the improved loss function, we compared it with the original YOLOv5s model (

Figure 6). After approximately 40 iterations, the loss function tended to stabilize, and the model reached its optimization. As shown in

Figure 6, the loss value of the proposed model decays faster, and the final minimum loss is lower compared to the model before optimization. This indicates that the DGFW-IoU loss function significantly enhances the model’s convergence speed.

4.2. Experimental Dataset

Although the proposed CGSW-YOLOv5 algorithm improves the ability to capture fine details, the diversity and complexity of the existing datasets remain limited when handling cracks at different scales and under varying environmental conditions. To further enhance the model’s performance in real-world applications, especially for fine, non-uniform, or occluded cracks, it was necessary to introduce a new dataset that includes more complex backgrounds and multi-scale crack features. Training the model on such a challenging and diverse dataset will significantly improve its adaptability and robustness, enabling it to better handle the complex environmental variations encountered in practice. Therefore, the construction of this dataset was divided into three parts: First, Crack500 and SDNET2018 were selected as benchmark test sets. Second, appropriate images were selected from publicly available datasets, such as “Concrete Crack Images for Classification” and “crack-detection”. Finally, additional images of concrete structural cracks were collected from various structures in Dalian City, as shown in

Figure 7. To ensure annotation accuracy, the collected images were manually filtered during the labeling phase. Non-structural microcracks and surface shrinkage cracks caused by thermal stress were removed to avoid label noise that could negatively impact model training. After merging the public and collected data, a total of 5400 crack images were obtained. The image data covered a variety of concrete structures, including bridge decks, retaining walls, and tunnel linings. All crack images were labeled at the pixel level using Labelme 3.16 software and classified into three categories: transverse cracks, longitudinal cracks, and network (crazing) cracks. The dataset included cracks caused by shrinkage, freeze-thaw cycles, mechanical loads, and material aging, offering high diversity and contributing to improved model generalization and adaptability. The classification of transverse, longitudinal, and network cracks was based on crack orientation, formation mechanism, and morphology. Transverse cracks extend perpendicular to the principal stress direction or material texture, typically caused by tensile or shrinkage stress, and appear straight and evenly distributed. Longitudinal cracks run parallel to the main stress direction, often resulting from shear, compression, or freeze-thaw effects, and extend along or obliquely to the structural axis. Network cracks are formed due to multi-directional stress or material degradation, exhibiting irregular intersecting patterns that are dense, shallow, and lack a dominant direction. The dataset was randomly split into a training set and a validation set with a ratio of 8:2.

4.3. Model Performance Evaluation

To comprehensively evaluate the performance of the improved model, the following metrics were used:

(1) Recall (

R): This measures the proportion of correctly predicted samples among all target samples. (2) Precision (

P): This measures the proportion of correctly predicted samples among all predicted positive samples. And (3) mAP@0.5: This refers to the mean average precision at an Intersection over Union (

IoU) threshold of 0.5. Higher values of Precision, Recall, and mAP@0.5 indicate better model performance. The formulas for each metric are as follows:

In the formulas, TP represents the number of correctly identified cracks, FP represents the number of incorrectly identified cracks, FN represents the number of missed cracks, and AP represents the area under the Precision-Recall (P-R) curve. mAP refers to the mean average precision across multiple IoU thresholds for m class labels. Additionally, the number of parameters is an important indicator for evaluating model performance. Generally, the fewer the parameters, the lighter the model. The computational load is also measured by the number of Floating-Point Operations Per Second (FLOPs).

4.4. Experimental Results

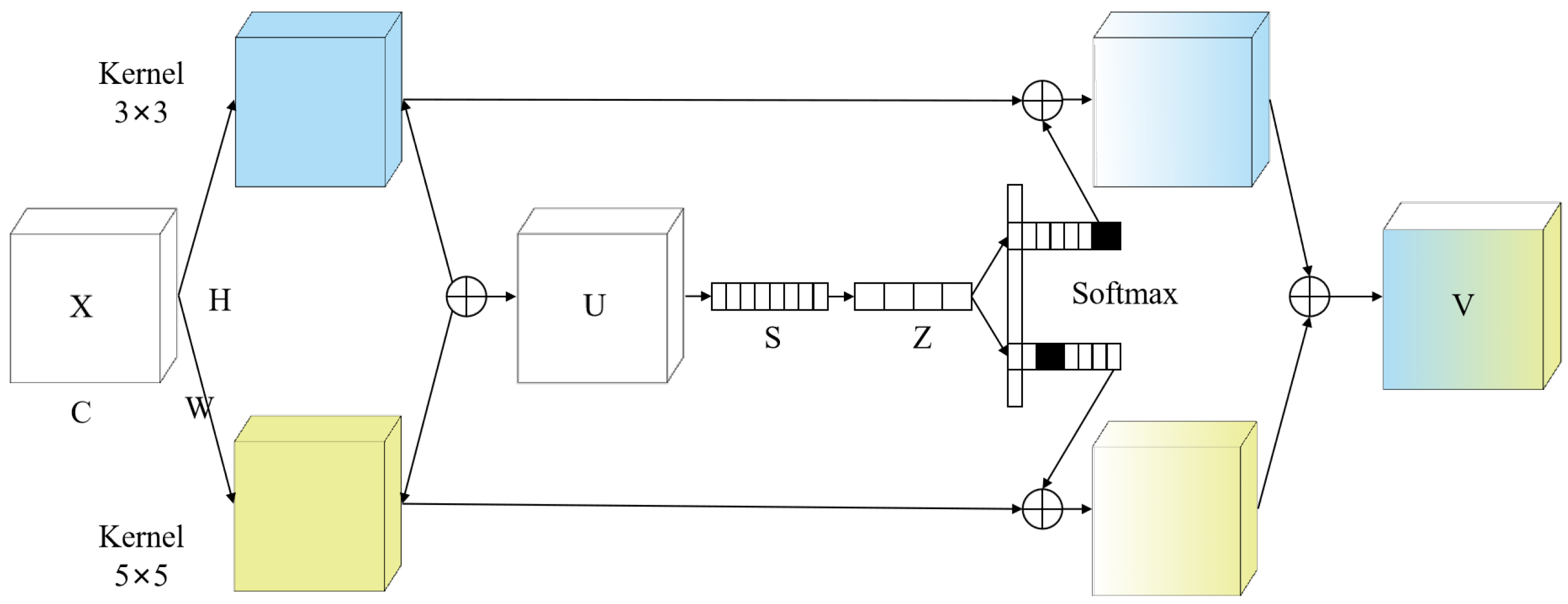

4.4.1. Comparison of Attention Mechanisms

To address the significant variation in feature information across crack targets of different scales, we propose the Adaptive Multi-Scale Feature Aggregation (AMFA) attention mechanism to enhance multi-scale feature interaction. To evaluate the impact of various attention mechanisms on model performance, six attention modules—GAMAttention, S2A-Net, SEAttention, Shuffle-Net, AMFA, and SocaAttention—were integrated into the improved model. The experimental results are summarized in

Table 1.

The results indicate that, although AMFA slightly increases model complexity, it achieves superior detection performance compared to the other methods. Specifically, on the custom concrete crack dataset, AMFA obtained the highest accuracy of 76.54% and mAP50 of 70.24%, outperforming the second-best Soca by 0.77% in accuracy and 1.47% in mAP. While the parameter count of AMFA is higher than that of SEAttention, the improvement in feature extraction capabilities significantly offsets the additional complexity. This is mainly because lightweight attention mechanisms, such as SE, lead to considerable accuracy loss and are insufficient for capturing fine crack details.

Meanwhile, the parameter sizes of GAM, S2A, and Shuffle attention models increased to 8.77 × 106, 9.13 × 106, and 7.03 × 106, respectively. However, their mAP gains were only 1.51%, 1.28%, and 0.37%, indicating that the performance improvement was not proportional to the increase in model size. In terms of computational efficiency, Soca consumes 2.8 fewer FLOPs than AMFA, but fails to meet real-time monitoring requirements. In contrast, AMFA achieves notable accuracy improvements while maintaining a relatively high FLOPs level, demonstrating that effective allocation of computational resources is crucial for performance optimization.

AMFA enables the network to focus more on informative channels during feature extraction and automatically selects optimal convolution operators, thereby improving crack detection performance and supporting subsequent multi-scale detection. These results confirm that AMFA-weighted features, when passed to the Neck for fusion, achieve a better balance between detection accuracy and efficiency. This provides a more effective solution for the detection of fine-scale cracks.

4.4.2. Comparison of Loss Functions

To evaluate the impact of different loss functions on model performance, comparative experiments were conducted based on the improved model. The tested loss functions include CIoU, EIoU, AlphaIoU, SIoU, and the proposed DGFW-IoU. The experimental results are shown in

Table 2.

As observed in

Table 2, although the proposed DGFW-IoU introduces a slight increase in parameters, it achieves the best performance in terms of precision (P), recall (R), and mAP. Specifically, it obtains 73.82% in precision, 57.22% in recall, and 69.55% in mAP. These results demonstrate that DGFW-IoU provides more accurate localization for concrete crack detection tasks.

4.4.3. Ablation Experiment

To evaluate the contribution of each improvement module to the overall model performance, we used YOLOv5s as the baseline and progressively integrated the DGFW-IoU loss function along with other proposed modules. The effectiveness of each component was assessed through a series of ablation experiments. The results are summarized in

Table 3.

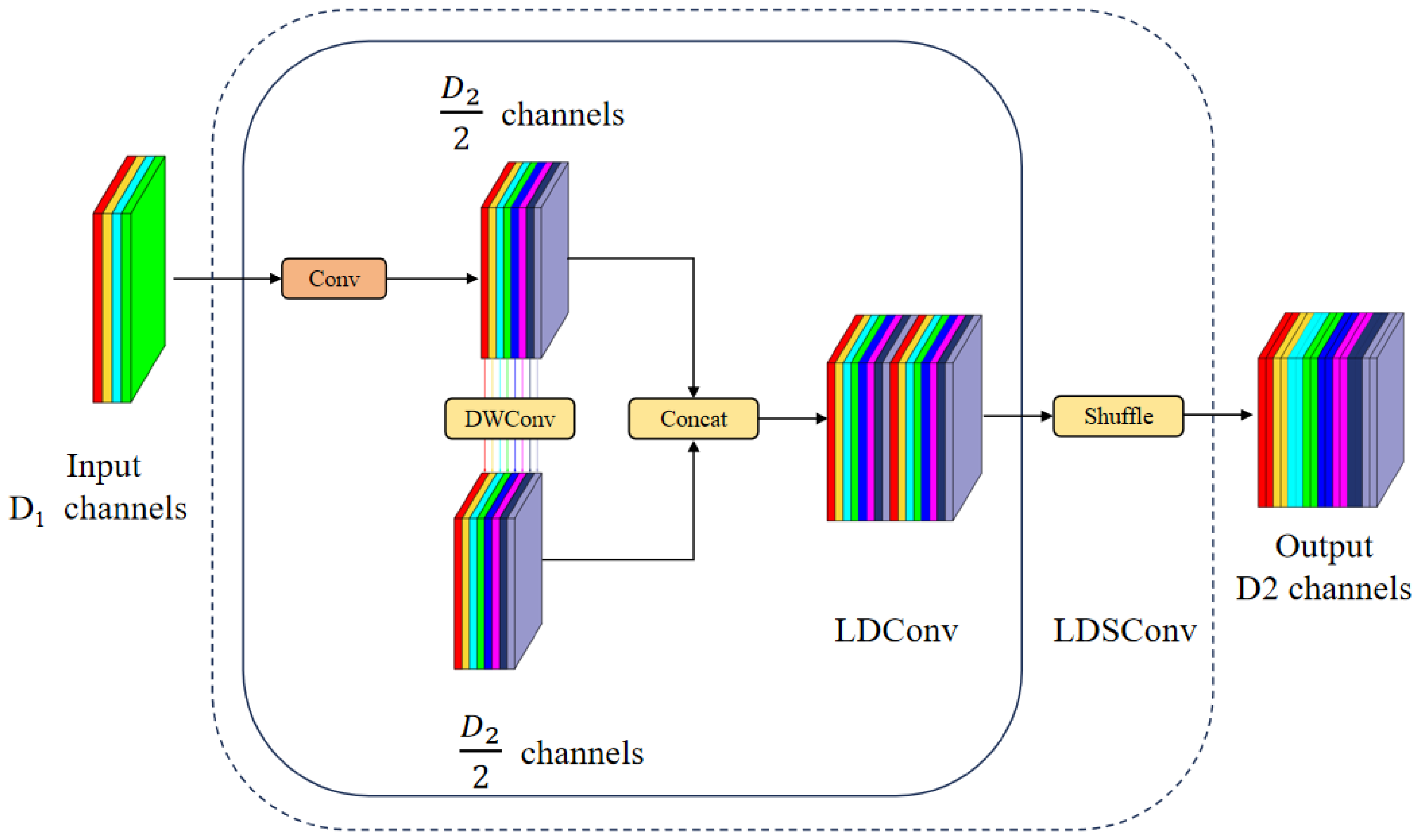

Comparing Experiments 2 and 3 with the baseline (Experiment 1), it is evident that the introduction of the CNeB module and the convolutional attention mechanism AMFA significantly improves model performance, with mAP50 increasing by 1.28% and 0.69%, respectively. From the inference time perspective, the CNeB module optimizes the computation path using depthwise separable convolution, reducing the per-frame inference time from 59.5 ms to 56.7 ms. In contrast, AMFA slightly increases the inference time to 58.6 ms due to its dual-branch attention computation. The performance gains can be attributed to the CNeB module’s ability to better capture fine-grained crack features, while AMFA enhances the model’s perception of multi-scale crack characteristics by adaptively adjusting the receptive field. To further enhance performance, Experiment 4 introduces the lightweight LDSConv module, leading to an mAP50 improvement of 0.89%. Notably, LDSConv reduces the inference time to 54.2 ms—a 9.1% decrease compared to the baseline—while maintaining a strong feature representation. This is achieved through a dual-stream feature reorganization strategy that not only enriches feature diversity but also improves model generalization. Experiments 5 and 6 demonstrate that combining the CNeB module with LDSConv and AMFA results in further performance gains without sacrificing accuracy, despite a reduction in parameter count. When CNeB and LDSConv operate jointly, the inference time is further reduced to 52.9 ms, saving 2.3 ms compared to using LDSConv alone. This indicates a cumulative effect in computation path optimization between the modules.

Finally, as shown in Experiment 8, when all the proposed improvement modules are introduced, the model achieves optimal performance, with the mAP50 increasing to 71.74%, a significant improvement over the baseline in Experiment 1. At this point, the model demonstrates the best time efficiency, with an inference time of only 42.7 ms, a 28.2% speedup compared to the original YOLOv5s. Based on these experimental results, we gradually added the CNeB module, AMFA mechanism, DGFW-IoU loss function, and LDSConv module to assess their contributions to the model’s performance. The results show that the introduction of the CNeB module significantly improved the model’s performance in detecting fine cracks. The AMFA mechanism further enhanced the model’s adaptability to cracks at different scales, while the DGFW-IoU loss function improved the localization ability for small cracks in complex backgrounds. The LDSConv module optimized the feature extraction path, significantly reduced inference time, and maintained high detection accuracy, which substantially improved the model’s generalization ability and practical applicability in engineering.

4.4.4. Comparison Experiment

To further validate the improvements and performance advantages of CGSW-YOLOv5, we conducted comparison experiments on our custom dataset with several mainstream object detection algorithms, including Faster R-CNN [

21], SSD [

22], YOLOv3-tiny [

23], YOLOv4 [

24], YOLOv5s [

25], YOLOv7-tiny [

26], YOLOv8 [

27], and the general-purpose model Segment Anything (SAM) [

28], to verify the necessity of domain-specific designs. All experiments were conducted using the same training parameter settings, and the results are shown in

Table 4.

The experimental results show that, compared to the two-stage detection algorithm Faster R-CNN and the single-stage detection algorithm SSD, our algorithm improves mAP50 by 12.70% and 13.01%, respectively, while reducing single-frame inference time by 29.5 ms and 27.1 ms. Notably, SAM, as a general-purpose segmentation model, achieves an mAP50 of only 41.27% in the zero-shot detection task, with an inference time of 3200 ms. Its FLOPs (4500 G) are 138 times higher than those of CGSW-YOLOv5, which fully demonstrates the limitations of general models in specialized scenarios. From a computational efficiency perspective, CGSW-YOLOv5 has a FLOPs value of 32.5 G, which is higher than YOLOv5s (15.8 G) but significantly lower than the computational load of Faster R-CNN and SSD. Additionally, key metrics, such as parameter count and model weight file size, are significantly reduced—its model weight file is 55.85 MB, only 50.3% of Faster R-CNN’s size, and 97.7% smaller than SAM’s 2400 MB, making it well-suited for embedded devices with storage limitations. YOLOv3-tiny, due to its inherent limitations in multi-object and small-object detection, achieves an mAP50 of only 22.71%, much lower than the other algorithms. Although SAM has a high parameter count of 636 M, its detection accuracy is still 30.47% lower than CGSW-YOLOv5, proving that simply increasing model capacity does not solve the specialized detection problem. Compared to YOLOv4, CGSW-YOLOv5s not only has an advantage in detection accuracy but also reduces inference time from 66.2 ms to 42.7 ms, with a larger speedup compared to SAM. It also outperforms YOLOv4 in terms of FLOPs, parameter count, and model weight file size. Although YOLOv7-tiny achieves a detection accuracy of 59.02%, it is still 12.72% lower than our algorithm. Notably, CGSW-YOLOv5s achieves a 30.4% speedup in inference time while maintaining higher accuracy than YOLOv7-tiny, thanks to the dual-stream feature reorganization mechanism of the LDSConv module, which optimizes feature extraction efficiency through parallel computation paths. In contrast, SAM’s ViT-H architecture, due to its global attention mechanism, faces difficulties in optimizing computational paths, resulting in a frame rate of less than 0.3 FPS on ARM devices. In the comparison with YOLOv8, CGSW-YOLOv5 shows significant advantages: mAP50 increases by 3.1%, inference time decreases by 17.1 ms, and FLOPs reduce by 46.7% compared to YOLOv8. Although YOLOv8 performs well on conventional datasets, it exhibits significant errors when handling fine, complex cracks, especially in areas with crack intersections and occlusions. CGSW-YOLOv5 captures these details more accurately, with a single-frame processing speed of 42.7 ms, meeting real-time detection requirements and maintaining high detection accuracy, even in low computational resource environments.

In summary, CGSW-YOLOv5s achieves a good balance between accuracy and lightweight design in concrete crack detection tasks. By optimizing feature extraction efficiency and resource allocation, it demonstrates advanced performance and practical applicability in the field of object detection.

Based on the

Table 5 results and statistical tests (including the Friedman test, Nemenyi post-hoc test, and critical difference (CD) analysis), the proposed CGSW-YOLOv5 demonstrates clear advantages over other models. Although the Friedman test yielded a

p-value of 0.066, which does not meet the standard threshold for statistical significance, the subsequent Nemenyi test revealed significant differences between CGSW-YOLOv5 and models, such as SAM and YOLOv3-tiny in terms of detection accuracy and inference time. The differences in average ranks exceeded the critical difference (CD = 8.216), confirming the effectiveness of the proposed improvements. Specifically, CGSW-YOLOv5 outperforms both traditional methods and general-purpose models in mAP50 and inference speed. For example, SAM performs poorly under complex environmental conditions, with an inference time of up to 3200 ms, while CGSW-YOLOv5 achieves a much lower inference time of 42.7 ms and a detection accuracy of 72.85%, demonstrating strong adaptability and efficiency. In contrast, no significant difference was observed between YOLOv4 and YOLOv7-tiny, suggesting performance convergence among some lightweight models.

In summary, CGSW-YOLOv5 significantly improves detection accuracy and practical applicability while maintaining a compact model size and low computational cost, making it suitable for intelligent crack detection in concrete structures under resource-constrained conditions.

4.4.5. Generalization Experiment

In practical applications, environmental factors, such as weather changes, lighting conditions, and background noise, can significantly affect crack detection. To investigate the impact of these external variables, this study conducted extensive experiments under various environmental conditions, including wind, rain, and fog. The data were preprocessed and split into training and testing sets in an 8:2 ratio. Under the same experimental setup, the CGSW-YOLOv5s concrete crack detection model was compared with Faster R-CNN [

21], SSD [

22], YOLOv3-tiny [

23], YOLOv4 [

24], YOLOv5s [

25], YOLOv7-tiny [

26], YOLOv8 [

27], and SAM [

28]. The results are shown in

Table 6 and

Figure 8.

The Experiment 6 results show that, compared to Faster R-CNN and SSD, the proposed CGSW-YOLOv5s model achieves the highest detection accuracy while maintaining the lowest number of parameters. Inference speed is improved by 29.1 ms and 26.7 ms, respectively. Notably, SAM performs poorly in complex environments, with an mAP50 of only 41.78% and an inference time of 3200 ms. With 1332 M parameters and a model size of 4570 MB, SAM is not feasible for mobile deployment. Although YOLOv3-tiny and YOLOv7-tiny have an advantage in terms of parameter count, their detection accuracy is 47.24% and 13.34% lower than our algorithm, respectively. Additionally, their actual inference speeds are 8.8 ms and 18.1 ms slower than our model, which fails to meet the detection requirements for various types of concrete cracks.

Compared to the original YOLOv5s, CGSW-YOLOv5s shows a significant improvement of 4.6% in detection accuracy and reduces inference time from 65.1 ms to 43.1 ms. This is due to the depthwise separable convolution design in the CNeB module and the dual-stream feature reorganization strategy of LDSConv. Compared to the latest YOLOv8, our algorithm still achieves a faster inference speed by 16.7 ms while maintaining the accuracy advantage and reducing FLOPs by 45.5%. SAM shows lower accuracy in wind, rain, and fog scenarios, while CGSW-YOLOv5 maintains a detection accuracy of 72.85% under complex environmental conditions, with only a 2.3 ms increase in inference time.

The experimental results demonstrate that environmental factors do not lead to a decrease in detection accuracy. By introducing the AMFA module for enhanced multi-scale feature aggregation and optimizing localization accuracy with the DGFW-IoU loss function, the model’s adaptability in complex environments is significantly improved. Specifically, the AMFA mechanism effectively captures multi-scale crack features, reducing missed and false detections caused by environmental interference, while the DGFW-IoU loss function dynamically adjusts the loss weight for small targets, improving the detection accuracy of small cracks in complex backgrounds.

4.4.6. Visual Comparison Experiment

Figure 9 presents the detection results for three different types of concrete structure cracks: transverse cracks, vertical cracks, and mesh cracks. For each crack type, the figure shows the detection results of seven different models: Faster R-CNN [

21], SSD [

22], YOLOv3-tiny [

23], YOLOv4 [

24], YOLOv5s [

25], YOLOv7-tiny [

26], and YOLOv8 [

27].

The model comparison in

Figure 9 displays three different types of concrete cracks: horizontal cracks, vertical cracks, and mesh cracks. It visually demonstrates the detection advantages of CGSW-YOLOv5, especially in capturing the complete contours of intersecting mesh cracks (highlighted in the red box) in complex backgrounds. For each crack category, the image shows the detection results of seven different models (Faster R-CNN [

21], SSD [

22], YOLOv3-tiny [

23], YOLOv4 [

24], YOLOv5s [

25], YOLOv7-tiny [

26], YOLOv8 [

27], and CGSW-YOLO). From the figure, it can be observed that for the horizontal crack category, the confidence values of CGSW-YOLO are 0.99 and 0.92, showing a significant improvement compared to Faster R-CNN, SSD, YOLOv3-tiny, YOLOv4, YOLOv5s, YOLOv7-tiny, YOLOv8, and SAM. For transverse cracks, CGSW-YOLO achieves high confidence scores of 0.99 and 0.92, outperforming all comparison models in detection accuracy. In the longitudinal crack category, YOLOv3-tiny exhibits missed detections, while CGSW-YOLO not only meets the basic detection requirements but also demonstrates higher confidence scores, making it more suitable for practical applications. In the case of mesh cracks, all baseline models show varying degrees of false negatives and false positives. Notably, CGSW-YOLO attains a confidence score of 1.00, significantly higher than the other models. Overall, CGSW-YOLO demonstrates robust and accurate performance across all crack types, with particularly outstanding results in detecting complex mesh cracks, highlighting its superior reliability and detection precision.

The

Table 7 and

Table 8 results indicate that false positives in transverse cracks often arise from linear texture similarities on the surface, such as formwork joints. The high missed detection rate for reticular cracks is closely related to the non-uniform and asymmetric topology of their structure. When the branching angles deviate from the typical distribution of training samples, current models exhibit insufficient feature coupling at intersection nodes. Regarding the high false positive rate under rainy conditions, although the dilated convolution in the AMFA mechanism helps expand the receptive field, it lacks effective suppression of pseudo-symmetric features caused by water stain reflections.

Figure 10 compares the training curves of the original YOLOv5s model and the improved GSCW-YOLO model in terms of the loss function, mAP50, and mAP50-95. As shown in subfigures (a) and (b), the GSCW-YOLO model exhibits faster convergence and significantly improved training stability compared to YOLOv5s. In subfigures (c) and (d), the mAP50 and mAP50-95 curves of the improved GSCW-YOLO are clearly higher than those of YOLOv5s. The GSCW-YOLO algorithm not only provides high-precision detection results for concrete structural cracks but also demonstrates strong accuracy and robustness in detecting fine cracks. It effectively meets the practical demands for efficiency and accuracy in crack detection under various environmental conditions. This offers strong technical support for quality monitoring and maintenance of concrete structures and holds significant value for advancing the health assessment of concrete structures in the construction industry.