Abstract

A new text matching model based on dynamic gated sparse attention feature distillation with a faithful semantic preservation strategy is proposed. It addresses two problems that reduce accuracy: text matching models are susceptible to interference from weakly relevant information, and they find it difficult to obtain key features that are faithful to the original semantics. In contrast to the traditional attention mechanism, which has high computational complexity and difficulty in discarding weakly relevant features, this study designs a new feature distillation method based on dynamic gated sparse attention that aims to obtain key features. Weakly relevant features are obtained through the synergy of dynamic gated sparse attention, a gradient inversion layer, a SoftMax function, and the projection theorem. Among these, sparse attention enhances the capture of weakly correlated features through multimodal dynamic fusion with adaptive compression. Then, the projection theorem is used to identify and discard the noisy features in the hidden layer information to obtain the key features. This feature distillation strategy, in which the semantic information of the original text is decomposed into key features and noise features, forms an orthogonal decomposition symmetry in the semantic space. A new faithful semantic preservation strategy is designed to make the key features faithful to the original semantic information. This strategy introduces an interval loss function and calculates the angle between the key features and the original hidden layer information with the help of cosine similarity to ensure that the features reflect the semantics of the original text. The key features can then be updated iteratively, which improves accuracy. The strategy builds a feature fidelity verification mechanism whose symmetric core is the bidirectional consideration of semantic accuracy and correspondence to the original text. The experimental results show accuracies of 89.10% and 95.01% on the English datasets MRPC and Scitail, respectively; 87.8% on the Chinese dataset PAWX; and 80.32% and 80.27% on the Ant Gold dataset, respectively. Meanwhile, the accuracy and Macro-F1 on the KUAKE-QTR dataset are 70.10% and 68.08%, respectively, which are better than those of other methods.

1. Introduction

Text matching, a basic topic in the field of natural language processing, centers on determining the semantic relationship between two sentences. This technique is widely used in fields such as retrieval-augmented generation [1], question answering systems [2], and text summarization [3]. In recent years, general-purpose large models such as DeepSeek R1 [4] and GPT-4 [5] have emerged, and they have demonstrated strong question-answering capabilities in general-purpose domains. However, when they lack specialized domain knowledge, these models often struggle to provide accurate and comprehensive answers [6].

To compensate for this deficiency, text matching models are used to match rare, specialized domain knowledge bases against user queries, thus enabling large general-purpose language models to construct accurate responses using this rare knowledge. However, when matching specialized domain knowledge, the model needs to deal with complex and diverse textual knowledge and is often disturbed by weakly relevant information in the text. This makes it difficult for the model to acquire key features that are faithful to the semantics of the original text and thus degrades its performance.

Two main approaches exist to address this problem. The first approach is to add discrete and continuous noise to the corpus of the pre-trained language model in order to enhance the model’s resistance to noise and thus improve its performance [7]. However, this approach is affected by the proportion of noise added, and too much or too little noise may lead to poor noise resistance in the model. The second method, on the other hand, truncates the content with small self-information by calculating the self-information of the sentence [8]. However, with this method, it is difficult to dynamically determine which information is the key information that matches the semantics of the original text.

Given the limitations of the above methods, this study proposes an approach that is not affected by the proportion of introduced noise and that can also dynamically identify the key information that conforms to the semantics of the original text. The core of this approach lies in obtaining key representations that conform to the semantics of the original text, which is crucial for improving the performance of the text matching model. To achieve this goal, this study designs a text matching model based on dynamically gated sparse attention feature distillation with a faithful semantic preservation strategy.

Specifically, the method in this paper is inspired by the idea of improving the factual consistency of news summaries through contrastive preference optimization [9]. In this study, we first design a new feature distillation method based on dynamically gated sparse attention that aims to acquire key features. The method captures features that are weakly correlated with the matching relation through the synergy of dynamically gated sparse attention, a gradient inversion layer [10], a SoftMax function, and the projection theorem [11]. Among these, sparse attention strengthens the capture of weakly correlated features through multimodal dynamic fusion and adaptive compression. Next, the projection theorem is used to identify and discard the noisy features in the hidden layer information to obtain the key features.

Second, to ensure that these key features are faithful to the original semantic information, this study also designs a new faithful semantic preservation strategy. This strategy introduces the interval loss function [12] and calculates the angle between the key features and the hidden layer information of the original text with the help of cosine similarity [13], thereby ensuring that the features accurately reflect the semantics of the original text. This strategy can further update and iterate the key features, thus improving the accuracy of the model.

Finally, this study proves the effectiveness of the proposed method by comprehensively validating it on generic and vertical domain datasets in both Chinese and English domains. The method is easy to combine with pre-trained language models, enabling it to demonstrate higher efficiency and flexibility in real-world application deployment. In summary, by designing a text matching model based on dynamically gated sparse attentional feature distillation with faithful semantic preservation strategies, this study provides new ideas and methods for solving the key problems found in knowledge matching in professional domains.

The main contributions of this study are summarized as follows:

- Dynamic gated projection sparse attention: We propose a mechanism that overcomes the limitations of NSA (native sparse attention), namely static gating, fixed block size compression, and the lack of adaptive padding, by learning gated weights to fuse the branches, compressing feature dimensions with a linear projection, and supporting adaptive padding.

- Feature distillation strategy: Based on dynamic gating sparse attention combined with gradient inversion, SoftMax, and the projection theorem, this strategy accurately captures weakly correlated features, removes noise, and extracts key features.

- Faithful semantic preservation: A new strategy is designed to introduce interval loss and cosine similarity to ensure that the key features are faithful to the original semantics and improve accuracy.

- The method proposed in this study integrates seamlessly with pre-trained language models and has been validated on a wide range of datasets in both specialized and general domains.

2. Related Work

Text matching technology, as a core component in many fields, such as information retrieval enhancement, Q&A systems, patent matching, etc., is of great importance. This technique aims to accurately match the content of the available knowledge base according to the user’s query requirements, which in turn provides key knowledge support for the large language model to achieve comprehensive and accurate answers.

Earlier text matching models mainly relied on neural networks such as LSTM [14] and GRU [15], which work in tandem with attentional mechanisms to capture the semantic information of the text and assign higher weights to important content. For example, methods such as the context-enhanced neural network (CENN) [16], lexical decomposition and combination (L.D.C) [17], BiMPM [14], and pt-DecAttachar.c [18] have achieved remarkable results under this framework.

With the accumulation of neural network technology, significant increases in computational power, and dramatic increases in the amount of training data, pre-trained language models have emerged. These models, such as BERT [19], DeBERTa [20], and RoBERTa [21], are based on the Transformer architecture, adopt a multi-head attention mechanism, and capture deeper representations of the language by pre-training on large-scale text data. In the text-matching task, the pre-trained models only need to be fine-tuned under the sequence classification framework and have a small number of classification layers added to show excellent semantic matching performance.

Given the excellent performance of pre-trained language models, researchers have utilized them to develop new text matching models. For example, the Simple Contrastive Learning Sentence Representation Model based on BERTbase (SimCSE-BERTbase) [22] uses contrastive learning techniques to optimize sentence representations, while the Angular Contrastive Learning Sentence Representation Model based on BERTbase (ArcCSE-BERTbase) [22] makes sentence representations more discriminative through angular contrastive learning. In addition, researchers have developed the DisRoBERTa [23] model based on sentence core information extraction, Divide and Conquer: Text Semantic Matching with Disentangled Keywords and Intents (DC-Match) [24], Multi-Concept Parsed Semantic Matching (MCP-SM) [25], the Combined Attention Transformer for Semantic Sentence Matching (Comateformer) [26], and the cutting-edge Semantic Text Matching Model Augmented with Perturbations (STMAP) [27]. These models enhance the matching effect by improving the attention mechanism or by utilizing the sentence's main idea information.

The core of the SimCSE-BERTbase method lies in the construction of the SimCSE framework, which effectively enhances sentence embeddings with the help of unsupervised and supervised contrastive learning strategies, achieves a significant performance leap in semantic text similarity tasks, and mitigates the anisotropy of the pre-trained embedding space. The ArcCSE method was proposed to solve the sentence representation learning problem. It enhances the discriminative ability for paired sentences with an additive angular margin contrastive loss and designs a self-supervised task to capture the semantic entailment relationships among sentence triplets, ultimately outperforming the existing methods on the STS and SentEval tasks. The DisRoBERTa model, based on sentence core information extraction, is a discrete latent variable model that incorporates topic information for semantic text similarity tasks. The model constructs a shared latent space to represent sentence pairs through vector quantization, enhances semantic information capture using topic modeling, and optimizes the Transformer language model through a semantics-driven attention mechanism, ultimately outperforming a variety of neural network baselines in multi-dataset experiments.

DC-Match proposes a divide-and-conquer strategy to address the different matching granularity requirements of query sentences in text semantic matching. It separates keywords from intents during matching training, can be seamlessly integrated into pre-trained language models without compromising inference efficiency, and achieves significant and stable performance improvements for multiple PLMs on three benchmark datasets. MCP-SM proposes a multi-concept parsing semantic matching framework based on pre-trained language models to address the problems that existing semantic matching methods rely on NER technology to identify keywords and perform poorly in low-resource languages. The framework is versatile and flexible and can parse text into multiple concepts for multilingual semantic matching without depending on NER models. It has been experimentally verified on multilingual datasets covering English, Chinese, and Arabic, where it performs well and proves widely applicable. Comateformer addresses the problem that the traditional Transformer model ignores nuances when evaluating the relevance of sentence pairs by proposing a Transformer-based combined attention network. The model is designed with a quasi-attention mechanism with compositional properties that learns to combine, subtract, or adjust specific vectors when constructing a representation, and it calculates a dual affinity score based on similarity and dissimilarity to represent inter-sentence relations more effectively. Experiments show that the method achieves consistent performance improvements on multiple datasets. The STMAP method aims to address the shortcomings of traditional semantic text matching methods in few-shot learning and the semantic deviations that data augmentation may cause. It performs data augmentation based on Gaussian noise with noise mask signals and uses an adaptive optimization network to dynamically optimize the training targets generated from the augmented data. Experiments show that STMAP achieves excellent results on several Chinese and English datasets; in particular, when the amount of data is reduced, it still significantly outperforms the baseline model.

Based on this, this study innovatively proposes a dynamic gating projection sparse attention mechanism and feature distillation strategy combined with a faithful semantic preservation approach. The mechanism effectively extracts features through dynamic gated weight fusion branching, introducing linear projection to compress feature dimensions and supporting adaptive padding; the feature distillation strategy uses techniques such as dynamic gated sparse attention to accurately capture features that are weakly correlated with the matching relationship and remove noise to extract key information; and the faithful semantic preservation method ensures that the extracted features are faithful to the original text’s semantics. The methods proposed in this study are easy to combine with pre-trained language models, and their effectiveness is verified on a wide range of datasets in both specialized and general domains, further advancing the development of text matching tasks.

3. Design of Text Matching Model Based on Dynamically Gated Sparse Attention Feature Distillation with Faithful Semantic Preservation Strategy

Based on the feature extraction capabilities of dynamic gated sparse attention, gradient reversal layer (GRL) [10], and projection theorem techniques [11], this study argues that these techniques can effectively capture key features in sentences. Specifically, the dynamically gated sparse attention mechanism can accurately focus on the important information in the text through dynamically gated weight fusion branching, the introduction of linear projection to compress the feature dimensions, and the support of adaptive padding, while the synergy between GRL and the projection theorem further enhances the accuracy and robustness of feature extraction. In addition, the faithful semantic preservation strategy proposed in this study combines the interval loss function and the cosine similarity function, demonstrating the unique advantages of measuring the similarity of two features and preventing overfitting. The strategy ensures that the extracted features can faithfully reflect the semantics of the original text by calculating the angle between the key features and the hidden layer information of the original text, thus further iteratively optimizing the key features.

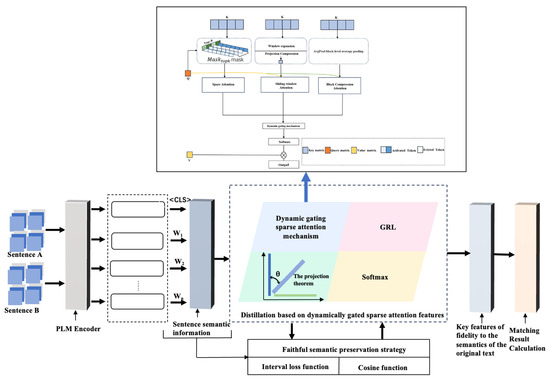

On this basis, this study constructs a text matching model based on dynamic gated sparse attention feature distillation with a faithful semantic preservation strategy. The overall architecture of the model is shown in Figure 1. The semantic representations of text pairs are first obtained using a pre-trained language model, and key features are then extracted by the dynamic gated sparse attention feature distillation strategy. In the attention computation, the sparse attention strategy is first adopted to cut down redundant operations. Subsequently, sliding window attention is used to focus on the local features in the data sequence to achieve finer feature capture. Then, block compression attention is used to optimize the attention computation and improve overall computational efficiency. At the same time, a dynamic gating mechanism is introduced, which dynamically adjusts the attention weights according to the characteristics of the input data and enhances the flexibility and adaptability of the attention mechanism. Finally, the results of the above stages are integrated to obtain the complete attention output. In the end, the faithful semantic preservation strategy calculates the angle between the key features and the hidden layer information of the original text using the interval loss function and cosine similarity to ensure that the features are faithful to the semantics of the original text, and the key features are further updated and iterated accordingly.

Figure 1. Model architecture.

The model contains three explicit training objectives: first, to optimize weakly correlated features and filter out weakly correlated features with potential value for the matching task through the dynamically gated sparse attention mechanism; second, to use the projection theorem to identify and discard noisy features, obtain purer key features, and compute the classification loss for global matching based on these key features, which measures the gap between the model's predictions and the actual labels in the global context; and third, to correct the key features through the interval loss function to ensure that their distribution in feature space is consistent with the semantics of the original text and thereby further improve the matching accuracy and generalization ability of the model. These three training objectives complement each other and jointly drive the model's performance.

The symmetry of this study is mainly reflected in the following ways: (1) In the dynamic gated sparse attention-based feature distillation strategy, weakly correlated features are obtained through dynamic gated sparse attention, a gradient inversion layer, the SoftMax function, and the projection theorem. Then, the projection theorem is used to identify and discard the noisy features in the hidden layer information to obtain the key features. Using the projection theorem, the original text information is decomposed in the direction of useful information in order to obtain key features, while the original text information is decomposed in the direction of weakly relevant features so as to obtain noise features. This feature distillation strategy decomposes the original semantic information into key features and noise features, forming orthogonal decomposition symmetry in semantic space. (2) The symmetry of the faithful semantic preservation strategy. To ensure that the key features are faithful to the original semantic information, this study introduces a new faithful semantic preservation strategy. This strategy calculates the angle between the key features and the hidden layer information of the original text using the interval loss function and cosine similarity in order to ensure that the features accurately reflect the semantics of the original text. The strategy focuses on both the semantic accuracy of the features and the correspondence between the features and the original text, forming a symmetric verification and maintenance mechanism.

3.1. Global Matching Using Key Features

This paper first defines the textual semantic matching task: given two text sequences $S^a = \{w_1^a, w_2^a, \ldots, w_r^a\}$ and $S^b = \{w_1^b, w_2^b, \ldots, w_s^b\}$, a classifier is trained to predict whether they are semantically equivalent. Here, $S^a$ and $S^b$ denote the two text sequences, $w_i^a$ and $w_j^b$ denote the ith and jth words in the sequences, and r and s denote the lengths of the text sequences.

In recent years, pre-trained language models have made significant progress in text comprehension and expression learning, providing powerful tools for natural language processing tasks. To improve the quality of the semantic representation of the text to be matched in the text semantic matching task, pre-trained models such as BERT [19], DeBERTa [20], and RoBERTa [21] are used in this study. These models can capture rich and accurate semantic information and optimize performance on specific tasks through fine-tuning.

For the global text matching task, this study adopts a strategy based on contextual feature distillation. Specifically, the text sequences $S^a$ and $S^b$ are spliced into a continuous sequence $S^{a,b}$, whose representation implies generalized information about the whole sequence. This process can be expressed as shown below.

$$h_{cls} = \mathrm{PLM}\left([\mathrm{CLS}];\, S^{a,b}\right)$$

Here, $[\mathrm{CLS}]$ is a special token in front of the sequence, and $h_{cls}$ represents the aggregated sequence representation after processing by the final hidden layer, which implies generalized information about the whole sequence. PLM represents the pre-trained language model used.

3.1.1. Dynamic Gating Sparse Attention Mechanism

The dynamic gated projection sparse attention mechanism introduces learnable gated weight fusion branches, linear projection compression of feature dimensions, and adaptive padding, thus overcoming the limitations of the NSA [28] mechanism in terms of static gating, fixed block size compression, and a lack of adaptive padding. The attention computation proceeds in five steps: sparse attention first reduces computational redundancy; sliding window attention then captures local features; block compression attention optimizes computational efficiency; the dynamic gating mechanism makes the attention allocation more flexible; and finally the outputs of these steps are combined to complete the overall attention computation.

First, the sparse attention is calculated using a Top-K masking operation, which filters key feature associations by retaining only the k largest attention values in each row (k = S × r, where r is the sparsity rate and S is the length of the sequence). This strategy not only reduces the computational complexity from $O(S^2)$ to $O(S \log S)$, which breaks through the traditional computational bottleneck, but also improves the noise immunity of the model by truncating weak connections, thus optimizing the efficiency and accuracy of feature processing.

$$A_{sparse} = \mathrm{Mask}_{topk}\left(\frac{QK^{T}}{\sqrt{d}}\right), \quad k = S \times r$$

Here, $Q$ denotes the query matrix, $K$ denotes the key matrix, $\mathrm{Mask}_{topk}(\cdot)$ denotes the mask that preserves the k largest values of each row, r is the sparsity rate, $\sqrt{d}$ denotes the scaling factor that prevents the dot product value from becoming too large, d is the dimension of each attention head, and $A_{sparse}$ denotes the sparse attention score matrix.
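The Top-K masking rule can be illustrated with a minimal plain-Python sketch. The function name `topk_sparse_scores` and the toy matrices are hypothetical, and masked entries are set to negative infinity on the assumption that a later SoftMax should give them zero weight. The sketch computes the full score matrix before masking, so it shows the masking rule only, not the reduction in complexity.

```python
import math

def topk_sparse_scores(Q, K, sparsity_rate):
    """Scaled dot-product scores with all but the k largest entries per row masked."""
    S, d = len(Q), len(Q[0])
    k = max(1, int(S * sparsity_rate))  # k = S x r, keeping at least one entry
    masked = []
    for q in Q:
        row = [sum(qi * ki for qi, ki in zip(q, key)) / math.sqrt(d) for key in K]
        # Indices of the k largest scores in this row (ties keep the earlier index).
        keep = set(sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k])
        # Assumption: masked positions become -inf so a later softmax ignores them.
        masked.append([v if j in keep else float("-inf") for j, v in enumerate(row)])
    return masked

# Toy example: sequence length S = 4, head dimension d = 2, sparsity rate r = 0.5.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
scores = topk_sparse_scores(Q, K, sparsity_rate=0.5)
```

With S = 4 and r = 0.5, each row keeps k = 2 scores and masks the other two.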

Second, to compute the sliding window attention, the Unfold operation of the sliding window is used to efficiently extract the local contextual features of the sequence, and a learnable projection matrix $W_p$, whose shape is determined by the window parameter ω (which controls the local perceptual range) and the dimension d of each attention head, achieves dimensionality compression. This process both captures the short-range dependencies of the sequence and significantly reduces the feature dimensions through the projection operation, which effectively mitigates overfitting in high-dimensional space and improves the generalization performance of the model.

Here, $W_p$ denotes the learnable projection matrix, and Unfold denotes the sliding window unfolding operation. $K_{unfold} \in \mathbb{R}^{B \times H \times S \times \omega \times d}$ denotes the key matrix after the sliding window unfolding operation, where B denotes the number of samples processed simultaneously; H denotes the number of attention heads used in the multi-head attention mechanism; S denotes the length of the sequence, that is, the number of input tokens; ω denotes the size of the window, that is, the length of the local context covered by the sliding window; and d denotes the dimension of each attention head. $A_{window}$ denotes the sliding window attention score matrix.
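The unfolding step can be sketched for a single head and a single sample in plain Python. The names `unfold_windows` and `window_scores` are hypothetical, zero padding at the sequence edges is an assumption, and the learnable projection is left out because its exact form is not specified here. Each query is scored only against the keys in its own window.

```python
import math

def unfold_windows(K, window):
    """For each position, gather the `window` key vectors around it (zero-padded)."""
    S, d = len(K), len(K[0])
    half = window // 2
    zero = [0.0] * d  # assumption: positions outside the sequence are zero vectors
    windows = []
    for t in range(S):
        win = []
        for offset in range(-half, window - half):
            j = t + offset
            win.append(K[j] if 0 <= j < S else zero)
        windows.append(win)
    return windows  # shape: S x window x d

def window_scores(Q, K, window):
    """Scaled dot product between each query and the keys inside its window."""
    d = len(Q[0])
    K_unfold = unfold_windows(K, window)
    return [
        [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in win]
        for q, win in zip(Q, K_unfold)
    ]

K = [[1.0], [2.0], [3.0]]
Q = [[1.0], [1.0], [1.0]]
K_unfold = unfold_windows(K, window=3)
A_window = window_scores(Q, K, window=3)
```

The resulting score matrix has shape S × ω rather than S × S, which is where the local attention saves computation.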

Then, block compression attention is computed. Average pooling (AvgPool) with block size b compresses the key matrix and generates low-resolution global feature representations. The technical significance of this method is twofold. On the one hand, it reduces the sequence length from S to $S/b$, which significantly reduces the amount of computation and improves computational efficiency. On the other hand, by introducing coarse-grained global features, it compensates for the limited field of view of the local attention mechanism and provides the model with more comprehensive feature information.

$$A_{block} = \frac{Q\,\mathrm{AvgPool}(K)^{T}}{\sqrt{d}}$$

Here, AvgPool denotes the average pooling operation, $Q$ denotes the query matrix, $\mathrm{AvgPool}(K)^{T}$ denotes the transpose of the pooled key matrix, and d is the dimension of each attention head. $\sqrt{d}$ is the scaling factor, which is used to prevent the gradient from becoming unstable when the dot product is too large. $A_{block}$ denotes the block compression attention score matrix.
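A minimal sketch of block compression, again in plain Python for a single head, may help make the pooling concrete. The function names are hypothetical. Averaging a shorter trailing block over the elements it actually contains is an assumption about how lengths not divisible by b could be handled, not a description of the padding scheme used in the model.

```python
import math

def avg_pool_keys(K, block_size):
    """Average consecutive groups of `block_size` key vectors."""
    pooled = []
    for start in range(0, len(K), block_size):
        block = K[start:start + block_size]  # the last block may be shorter
        pooled.append([sum(col) / len(block) for col in zip(*block)])
    return pooled

def block_scores(Q, K, block_size):
    """Scaled dot product between each query and the pooled keys."""
    d = len(Q[0])
    K_pool = avg_pool_keys(K, block_size)
    return [
        [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K_pool]
        for q in Q
    ]

K = [[1.0, 1.0], [3.0, 3.0], [5.0, 5.0], [7.0, 7.0]]
Q = [[1.0, 0.0]]
K_pool = avg_pool_keys(K, block_size=2)
A_block = block_scores(Q, K, block_size=2)
```

Here four keys are compressed into two pooled keys, so each query is scored against S / b = 2 positions instead of 4.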

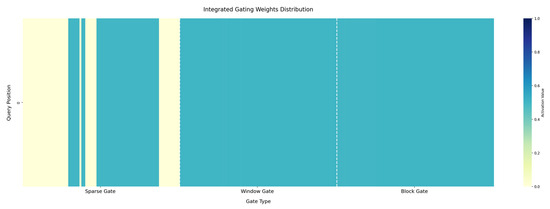

Then, the dynamic gating mechanism is computed. Mean pooling along the sequence dimension S extracts global statistical features, and the Sigmoid function then generates the three-channel gating weights. This provides a sequence-aware measure of feature importance and dynamically regulates the contribution of each branch, thus improving model performance.

$$g = \sigma\left(\mathrm{MeanPool}_{S}\left([A_{sparse};\, A_{window};\, A_{block}]\right)\right)$$

Here, $\sigma$ denotes the Sigmoid excitation function, [;] denotes splicing along the channel dimension, $A_{sparse}$ denotes the sparse attention score matrix, $A_{window}$ denotes the sliding window attention score matrix, $A_{block}$ denotes the block compression attention score matrix, and g denotes the three-channel gating weights generated by the dynamic gating mechanism.

Finally, to calculate the final output of the dynamically gated multimodal attention, the outputs of the three attention branches are first weighted and fused using the dynamic gating weights $g_i$, with element-by-element multiplication used in the fusion to achieve adaptive combination. The method moves beyond the equal-weight splicing of traditional multimodal attention and achieves feature selection through a learnable dynamic gating mechanism, effectively suppressing noise interference and enhancing the expression of key features.

$$A_{fused} = \sum_{i=1}^{3} g_i \odot A_i$$

Here, $A_{fused}$ is the fused attention score matrix, $g_i \in [0,1]$ is the dynamic gating weight, $A_i$ denotes the output of the ith attention mechanism, and ⊙ denotes element-by-element multiplication.
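The gating and fusion steps can be sketched together under simplifying assumptions. The three branch score matrices are assumed to have already been brought to the same shape and to contain only finite values, and each gate is reduced to a single scalar obtained by applying a sigmoid to the branch's mean score. Any learnable parameters of the real gate are omitted, and the names `gate_weights` and `fuse` are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gate_weights(branches):
    """One gate per branch: sigmoid of the mean of that branch's scores."""
    gates = []
    for A in branches:
        values = [v for row in A for v in row]
        gates.append(sigmoid(sum(values) / len(values)))
    return gates

def fuse(branches, gates):
    """Gate-weighted sum of the branch matrices, entry by entry."""
    rows, cols = len(branches[0]), len(branches[0][0])
    return [
        [sum(g * A[i][j] for g, A in zip(gates, branches)) for j in range(cols)]
        for i in range(rows)
    ]

# Toy stand-ins for the sparse, sliding window, and block compression branches.
branches = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 0.0], [0.0, 2.0]],
]
gates = gate_weights(branches)
A_fused = fuse(branches, gates)
```

Because every gate lies strictly between 0 and 1, a branch is attenuated rather than switched off completely, which is the soft selection behaviour the text describes.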

The fused attention score matrix is then normalized by the SoftMax function to generate the attention weight distribution, which is used to compute a weighted sum of the value vector matrix V, finally yielding the context-aware output containing multimodal features. This process introduces a multimodal feature fusion mechanism to achieve synergistic optimization of global and local features.

$$\mathrm{Output} = \mathrm{SoftMax}\left(A_{fused}\right)V$$

Here, Output denotes the multimodal context-aware output, the result of the weighted summation of the value vectors by the attention weights; $A_{fused}$ denotes the fused attention score matrix; V denotes the value vector matrix; and SoftMax denotes the normalization function, which serves to transform the attention score matrix into a probability distribution.
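The final normalization and weighted sum can be sketched as follows. The function names are hypothetical, and the example assumes that masked scores are represented as negative infinity and that every row contains at least one finite score.

```python
import math

def softmax(row):
    """Numerically stable softmax; entries equal to -inf receive weight 0."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attend(A_fused, V):
    """Row-wise softmax of the scores followed by a weighted sum of value vectors."""
    weights = [softmax(row) for row in A_fused]
    d_v = len(V[0])
    return [
        [sum(w * v[c] for w, v in zip(w_row, V)) for c in range(d_v)]
        for w_row in weights
    ]

A_fused = [[0.0, 0.0], [float("-inf"), 1.0]]
V = [[1.0, 0.0], [0.0, 1.0]]
output = attend(A_fused, V)
```

The first row attends equally to both value vectors, while the second row, whose first score is masked, copies the second value vector.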

Based on the multimodal context-aware output, two computations follow. First, the output processed by the gradient inversion layer is multiplied by the weight matrix, the bias is added, and the SoftMax function is applied, yielding the vector of predicted probabilities for the sample over the different categories, which is used for the classification decision. Second, the cross-entropy loss function measures the difference between the predicted probabilities and the true label. During training, the goal is to minimize this loss, which prompts the model to keep adjusting its parameters. In scenarios such as domain adaptation and adversarial learning in particular, this guides the model to learn a better feature representation so that the predictions are closer to the true labels, as shown in the expressions below:

$$\hat{y}_i = \mathrm{Softmax}\left(W \cdot \mathrm{GRL}(\mathrm{Output}) + b\right)$$

$$\mathcal{L}_{GRL}^{i} = \mathrm{CrossEntropy}\left(\hat{y}_i, y_i\right)$$

where $\hat{y}_i$ denotes the vector of predicted probability distributions corresponding to the ith sample. Softmax denotes a common activation function that converts the input values into a probability distribution. GRL denotes the gradient inversion layer. Output denotes the final output of the dynamically gated multimodal attention. W denotes the learnable parameter matrix, b denotes the bias vector, and $\mathcal{L}_{GRL}^{i}$ denotes the loss value based on the gradient inversion layer corresponding to the ith sample. CrossEntropy denotes the cross-entropy loss function, and $y_i$ denotes the true label of the ith sample. During training, the gradient inversion loss $\mathcal{L}_{GRL}$ is minimized, which aims to enhance the model's recognition and learning of weakly correlated features.
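The behaviour of a gradient inversion layer can be illustrated without an autograd framework by writing the forward and backward passes by hand. This is a sketch under stated assumptions: the class `GradientReversal`, the reversal strength `lam`, and the toy feature vector are hypothetical, and the classifier is taken to be the identity (W = I, b = 0) so that only the sign flip is visible.

```python
import math

class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass."""

    def __init__(self, lam=1.0):
        self.lam = lam  # hypothetical reversal strength

    def forward(self, x):
        return list(x)

    def backward(self, grad_output):
        return [-self.lam * g for g in grad_output]

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_with_grad(logits, label):
    """Return the softmax cross-entropy loss and its gradient w.r.t. the logits."""
    p = softmax(logits)
    loss = -math.log(p[label])
    grad = [pi - (1.0 if i == label else 0.0) for i, pi in enumerate(p)]
    return loss, grad

grl = GradientReversal(lam=1.0)
features = [2.0, 0.5]  # stands in for Output; classifier assumed to be identity
logits = grl.forward(features)
loss, grad_logits = cross_entropy_with_grad(logits, label=0)
grad_features = grl.backward(grad_logits)
```

The classifier above the layer receives the ordinary gradient and learns to reduce the loss, whereas everything below the layer receives the negated gradient, which is what makes the training adversarial.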

On this basis, this study highlights the key role of the projection theorem in feature dimensionality reduction, especially its feature decomposition capability, which can effectively remove noise from sentence semantic information. To this end, we design a feature distillation layer based on the projection theorem to reduce the negative impact of weakly correlated components on the matching effect. This layer projects the final hidden layer aggregated representation of the sentence, $h_{cls}$, onto the direction of Output in order to obtain the noise feature. The specific formula is shown below:

$$h_{noise} = \mathrm{Proj}\left(h_{cls}, \mathrm{Output}\right) = \frac{h_{cls} \cdot \mathrm{Output}}{|\mathrm{Output}|} \cdot \frac{\mathrm{Output}}{|\mathrm{Output}|}$$

At the feature distillation layer, we apply the projection operator $\mathrm{Proj}(\cdot,\cdot)$ to the vector $h_{cls}$. This extracts the noise feature $h_{noise}$ in the direction of the multimodal context-aware Output, where $|\mathrm{Output}|$ represents the norm of Output.

3.1.2. Extraction of Core Semantic Features

The noise feature $h_n$ is then removed after the projection operation. This step reduces the interference of noise in the sentence-matching task, which in turn improves the overall performance of the model. The specific expressions are shown below:

$$h_p = h - h_n$$

$$h_k = \mathrm{Proj}(h, h_p)$$

In this step, we first remove the noise feature $h_n$ from the final hidden-layer aggregated representation $h$ of the sentence, obtaining a purer aggregated representation $h_p$. We then apply the projection operator Proj(·,·) in the direction of $h_p$ to obtain the key feature $h_k$.
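The two projection steps can be sketched in a few lines of pure Python. This is an illustrative sketch, not the authors' code: the function `proj` and the variable names `h`, `output`, `h_noise`, `h_pure`, and `h_key` are assumptions, and the three-dimensional toy vectors stand in for real hidden states.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def proj(x, y):
    """Project x onto the direction of y: (x . y / |y|^2) * y."""
    denom = dot(y, y)
    if denom == 0.0:
        # A zero direction carries no information; return a zero vector.
        return [0.0] * len(y)
    scale = dot(x, y) / denom
    return [scale * c for c in y]

# Toy vectors (hypothetical values).
h = [3.0, 4.0, 0.0]          # final hidden-layer aggregated representation
output = [1.0, 0.0, 0.0]     # direction of the attention output

h_noise = proj(h, output)                      # component of h along Output
h_pure = [a - n for a, n in zip(h, h_noise)]   # h with the noise component removed
h_key = proj(h, h_pure)                        # key feature along the purified direction
```

Because `h_pure` is orthogonal to `output` by construction, the key feature contains no component along the noise direction, which is the orthogonal decomposition the text describes.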

Finally, the key feature is used for prediction. The prediction stage contains a single trainable classification layer whose weight matrix is sized by Z, the number of labels, and C, the hidden-layer dimension. This layer receives the key features of sentences a and b and predicts the label y with the following expression:

For fine-tuning, this study uses the standard classification loss $L_J$, which is calculated as shown below.

The classification loss is computed from the final hidden-layer states of sentences a and b. It evaluates sentence-matching quality, and the parameters are optimized by minimizing this error.

3.2. Faithful Original Text Semantic Retention Strategies

The interval loss function is widely used because it is effective in preventing overfitting, and its robustness is particularly important for the semantic preservation computation in this study. Cosine similarity, a common measure of the similarity between vectors, plays a key role in distance calculation. This study uses cosine similarity to regulate the distance between the key features and the hidden-layer information of the original text while maintaining their similarity.

3.2.1. Cosine Similarity Calculation

To ensure that the key semantic feature $h_k$ extracted by the model is faithful to the original semantic representation $h$, this study applies the cosine function to measure the similarity between the final hidden-layer aggregated representation $h$ and the key semantic feature $h_k$. The specific calculation formula is as follows:

$$c(\theta) = \cos(h, h_k) = \frac{h \cdot h_k}{|h|\,|h_k|}$$

In this equation, cos(·,·) is the cosine similarity operator, c(θ) is the degree of similarity between $h$ and $h_k$, and θ denotes the angle between $h$ and $h_k$.
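As a small worked example, the cosine similarity and the corresponding angle can be computed as follows. The function name `cosine_similarity` and the toy vectors are assumptions for illustration; in the model, the two inputs would be the hidden-layer representation and the key feature.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between u and v; returns 0.0 if either vector is zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

# Toy vectors (hypothetical values).
h = [3.0, 4.0, 0.0]        # original hidden-layer representation
h_key = [0.0, 4.0, 0.0]    # key feature

c_theta = cosine_similarity(h, h_key)
# Clamp before acos to guard against tiny floating-point overshoot.
theta_deg = math.degrees(math.acos(max(-1.0, min(1.0, c_theta))))
```

A value of `c_theta` close to 1 (a small angle) means the key feature stays close to the original representation, which is the property the faithful semantic preservation strategy is designed to encourage.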

3.2.2. Similarity and Overfitting Regulation Module

To directly optimize the similarity between the semantic representation of the original text and the key semantic feature while avoiding overfitting, this study selects the interval loss as the core objective function [29]. The specific formulas are presented as follows:

Within this model architecture, the hyperparameter of the interval loss is fixed at 0.006, and max(·,·) is the maximization operator that returns the larger of its inputs. $L_{I_i}$ denotes the interval loss value of the ith sample, and $L_I$ is the interval loss function used throughout model training and optimization.

3.3. Multi-Loss Synergistic Optimization Strategy

To improve performance in the matching task and obtain more accurate matching results, this study jointly trains the model with three loss functions: $L_J$, $L_{GRL}$, and $L_I$. The three losses optimize the model along different dimensions. $L_J$ is the classification loss of the global matching model; it judges the semantic relationship between the key features of the sentences after the distillation layer and helps the model understand the overall meaning of the text. $L_{GRL}$ focuses on optimizing weakly relevant features. $L_I$ is the interval loss, which drives the key semantic features to fit the original text so that the model captures the core semantics. The specific expression is as follows:

$$L = L_J + L_{GRL} + L_I$$

During training, the total loss L consists of the classification loss $L_J$, the gradient reversal loss $L_{GRL}$, and the interval loss $L_I$. These terms are responsible for global matching, optimization of weakly relevant contextual features, and fitting the key semantic features to the semantics of the original text, respectively, and together they drive model performance.

Algorithm 1 gives the pseudo-code of the model. It preserves the core elements of the original code, namely the sparse attention feature distillation technique based on the dynamic gating mechanism and the faithful semantic preservation strategy, while simplifying implementation details with mathematical notation. The pseudo-code is shown below:

| Algorithm 1. Text Matching Model Based on Dynamically Gated Sparse Attention Feature Distillation with Faithful Semantic Preservation Strategy |
| --- |
| Require: input_ids, token_type_ids, attention_mask, labels |
| 1: // Gradient Reversal Components |
| 2: function GradientReversalForward(x, λ) |
| 3: Save λ in context |
| 4: return x |
| 5: end function |
| 6: function GradientReversalBackward(grads) |
| 7: dx ← −λ · grads |
| 8: return dx, None |
| 9: end function |
| 10: // Enhanced Sparse Attention Mechanism |
| 11: function EnhancedSparseAttention(Q, K, V, mask) |
| 12: B, S, D ← shape(K) |
| 13: num_heads ← 4, sparse_ratio ← 0.5 |
| 14: // Sparse Attention Branch |
| 15: |
| 16: |
| 17: |
| 18: // Window Attention Branch |
| 19: |
| 20: |
| 21: // W: window projection |
| 22: // Block Compression Branch |
| 23: |
| 24: // C: compressed blocks |
| 25: // Dynamic Gating Fusion |
| 26: |
| 27: |
| 28: // Mask & Normalization |
| 29: |
| 30: return attn · V |
| 31: end function |
| 32: // Main Model Forward Pass |
| 33: function ModelForward(…) |
| 34: |
| 35: |
| 36: // Common Space Projection |
| 37: |
| 38: // h: pooled_output, c: common_emb |
| 39: final_emb |
| 40: // Multi-Task Loss Calculation |
| 41: |
| 42: |
| 43: |
| 44: |
| 45: return L, logits |
| 46: end function |
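Algorithm 1 names a sparse attention branch with `sparse_ratio` 0.5 and a dynamic gating fusion step. The sketch below illustrates one common way such components are built: top-k sparse attention for a single query, and a sigmoid gate that blends two branch outputs. It is an assumption-laden illustration, not the authors' formulation; the functions `topk_sparse_attention` and `gated_fusion`, the scalar gate, and the toy values are all hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def topk_sparse_attention(q, keys, values, sparse_ratio=0.5):
    """Attend only to the highest-scoring fraction of keys (scaled dot product)."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    k_keep = max(1, int(len(keys) * sparse_ratio))
    # Indices of the k_keep largest scores; the remaining keys are discarded.
    keep = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k_keep]
    weights = softmax([scores[i] for i in keep])
    out = [0.0] * len(values[0])
    for w, i in zip(weights, keep):
        for j, v in enumerate(values[i]):
            out[j] += w * v
    return out, sorted(keep)

def gated_fusion(branch_a, branch_b, gate_logit):
    """Blend two branch outputs with a sigmoid gate g: g * a + (1 - g) * b."""
    g = 1.0 / (1.0 + math.exp(-gate_logit))
    return [g * a + (1.0 - g) * b for a, b in zip(branch_a, branch_b)]

# Toy inputs (hypothetical values): one query, four key/value pairs.
q = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 2.0], [5.0, 5.0]]

sparse_out, kept = topk_sparse_attention(q, keys, values, sparse_ratio=0.5)
fused = gated_fusion(sparse_out, [0.0, 0.0], gate_logit=0.0)
```

With a ratio of 0.5, only the two best-matching keys contribute to the output, so weakly matching positions are dropped before normalization rather than merely down-weighted.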

4. Experimental Setup

4.1. Dataset

To verify the applicability and effectiveness of the proposed text semantic matching method across general and vertical domains, covering paraphrase recognition, textual entailment, semantic equivalence recognition, and Q&A, and to facilitate comparison with other models, five representative datasets were selected for evaluation, as shown in Table 1.

Table 1.

Statistics of the experimental dataset.

The datasets in Table 1 cover both Chinese and English, and each has distinct characteristics, which allows the method to be evaluated across different linguistic and cultural contexts. The English datasets are MRPC [30] for general applications and SciTail [31] for specialized scientific scenarios. MRPC collects sentence pairs from internet sources and uses binary labels to indicate whether two sentences are semantically equivalent. This study uses the official MRPC version from the GLUE project, which contains 5801 sentence pairs. SciTail is the first entailment benchmark built from naturally occurring standalone sentences. It includes 27,026 text pairs labeled as entailment or non-entailment, which makes it suitable for assessing performance on textual entailment.

For Chinese, this study selects PAWS in the general domain, the Ant Financial Services dataset in the finance vertical domain, and KUAKE-QTR in the healthcare vertical domain. PAWS is used for semantic equivalence judgment and paraphrase recognition in text matching. It contains 51,401 sentence pairs labeled as matches or mismatches. Because the acquired test set lacks true labels, the validation set is used for testing. The Ant Financial Services dataset is provided by Ant Financial Services; this paper uses part of this financial-domain collection, comprising 37,334 question-answer pairs labeled as exact matches or non-matches. KUAKE-QTR is a Chinese medical text matching dataset constructed by Aliyun that contains 27,087 annotated sentence pairs. Its labels fall into four categories by degree of semantic relevance: complete topic matching, partial matching, a small amount of matching, and no matching at all. Because the official test set also lacks annotated labels, the original validation set is used as the test set for performance evaluation. Evaluating across these five datasets allows this study to examine the applicability and effectiveness of the method in diverse scenarios and to support subsequent research and practical applications.

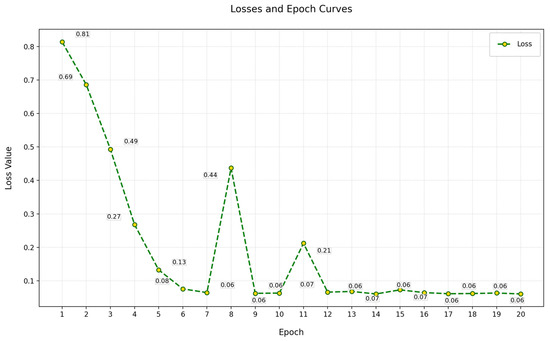

4.2. Parameter Settings

This study ensured fair comparisons by applying identical hyperparameters to all pre-trained models during fine-tuning, including both the baseline models and the proposed model. The key hyperparameter choices are documented here for reproducibility. The optimizer, weight decay rate, and learning rate follow established practice from prior research [32]. The AdamW optimizer [33] was used with β1 = 0.9 and β2 = 0.999; it decouples weight decay from gradient updates to mitigate overfitting, a widely adopted approach in fine-tuning pre-trained models. A weight decay rate of 0.01 keeps the parameters consistent with the comparison models. The learning rate of 2 × 10⁻⁵ balances preservation of pre-trained semantic information with stable fine-tuning and falls within the standard range. Batch sizes were set per dataset: 64 for the PAWS and Ant Financial Services datasets and 16 for MRPC and SciTail. The PAWS and Ant Financial Services datasets underwent 50,000 fine-tuning steps with performance evaluations every 2000 steps, using the Chinese checkpoints from prior work [34]. These settings, spanning optimizer choice, rates, and training protocol, were chosen to keep the comparison methodologically valid and the results reliable.
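For reference, the reported settings can be collected into a small configuration object. This is a sketch for bookkeeping only: the class `FineTuneConfig`, its field names, and the `CONFIGS` dictionary are assumptions, and the number of training steps is left unset for MRPC and SciTail because the text does not report it.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class FineTuneConfig:
    """Fine-tuning hyperparameters as reported in Section 4.2."""
    batch_size: int
    optimizer: str = "AdamW"
    beta1: float = 0.9
    beta2: float = 0.999
    weight_decay: float = 0.01
    learning_rate: float = 2e-5
    max_steps: Optional[int] = None    # None where the text gives no step count
    eval_every: Optional[int] = None   # None where the text gives no interval

    def eval_steps(self) -> List[int]:
        """Steps at which the model is evaluated; empty if not specified."""
        if self.max_steps is None or self.eval_every is None:
            return []
        return list(range(self.eval_every, self.max_steps + 1, self.eval_every))

# Dataset keys are labels chosen for this sketch.
CONFIGS = {
    "MRPC": FineTuneConfig(batch_size=16),
    "SciTail": FineTuneConfig(batch_size=16),
    "PAWS": FineTuneConfig(batch_size=64, max_steps=50_000, eval_every=2_000),
    "AntFinancial": FineTuneConfig(batch_size=64, max_steps=50_000, eval_every=2_000),
}
```

With 50,000 steps and an evaluation every 2000 steps, `CONFIGS["PAWS"].eval_steps()` lists 25 evaluation points, the last at step 50,000.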

This study utilized a high-performance computing platform with specialized hardware and software configurations. The hardware features an NVIDIA TITAN RTX GPU offering 24 GB VRAM and CUDA 10.1.243 architecture acceleration. Processing power comes from an 11th-gen Intel Core i7-11700 CPU with 8 cores, 16 threads, and a 2.50 GHz base clock. The system employs 125.7 GiB DDR4 RAM for memory-intensive tasks and a 1 TB NVMe SSD delivering 3500 MB/s read/write speeds. The Ubuntu 18.04.6 LTS operating system runs on a 64-bit kernel (version 5.4.0-150-generic). The software stack combines the PyTorch 2.0.1 deep learning framework with the Hugging Face Transformers 4.36.2 and Datasets 2.0.0 libraries. Python 3.9.7 serves as the primary programming language. All experiments leveraged CUDA acceleration to optimize parallel computing efficiency.

5. Results and Analyses

5.1. Accuracy Test Results in the English Dataset

- (1) Experimental results on the MRPC dataset

In this study, DeBERTa-base is adopted as the backbone for the English datasets to better capture the characteristics of English. As shown in Table 2, on the MRPC dataset the proposed method improves accuracy by 10.63%, 9.5%, and 4.73% over the traditional supervised learning models L.D.C [17], BiMPM [14], and R2-Net [35], respectively. This indicates that the method is better able to process semantically rich text pairs and to extract key features that match the semantics of the original text. Compared with classical text matching models based on pre-trained language models, namely SimCSE-BERTbase [22], ArcCSE-BERTbase [22], DisRoBERTa [23], DC-Match (DeBERTa-base) [24], and MCP-SM (DeBERTa-base) [25], the method also improves accuracy by 15.08%, 14.73%, 1.45%, 0.3%, and 0.2%, respectively. These gains support the effectiveness of the two strategies: the dynamically gated sparse attention feature distillation strategy extracts key features and reduces the interference of weakly relevant information, while the faithful semantic preservation strategy keeps the extracted key features faithful to the original semantics.

Table 2.

Experimental results of the MRPC text matching dataset (accuracy).

To verify the generality of the proposed method, we apply it to all the pre-trained language models in the third group and observe the change in matching accuracy. In Table 3, the arrows indicate the direction of the change in accuracy from each original pre-trained language model to the same model after applying the proposed method. Comparing accuracy before and after application shows the effect of the method on each model and thus supports its wider applicability.

Table 3.

Experimental results of the MRPC text matching dataset (accuracy and F1).

The experiments use pre-trained language models with different architectures and parameter scales. With the same configuration, we fine-tuned the original version of each pre-trained language model and its improved counterpart without additional hyperparameter tuning. The results show that the matching accuracy of all the pre-trained language models improves on both datasets, which demonstrates the wide applicability of the method. The improvement is more pronounced on the small dataset MRPC. Specifically, compared to the BERT-base [19], RoBERTa-base [21], and DeBERTa [20] models, the method improves accuracy by 0.87%, 0.63%, and 0.64%, respectively, and improves F1 by 0.57%, 0.18%, and 0.31%, respectively. This result highlights the importance in text matching, especially when training data are limited, of key semantic information that is faithful to the original text, which the method extracts effectively.

- (2) Experimental results on the SciTail dataset

The experiments conducted on the SciTail dataset are shown in Table 4. The comparison methods are grouped into four categories: first, supervised matching methods, including the Decomposable Attention Model (DecAtt) [37], Enhanced Sequential Inference Model (ESIM) [38], BiMPM [14], Combined Attribute Alignment Factor Decomposition Encoder (CAFE) [39], Collaborative Stacked Residual Affinity Network (CSRAN) [40], Decomposed Graph Entailment Model (DGEM) [31], Hermitian Co-Attention Recurrent Network (HCRN) [41], RE2 [42], DRr-Net [15], and AL-RE2 [43]; second, matching methods based on pre-trained language models that were state of the art at the time, namely the Knowledge-Enhanced Text Matching model without a knowledge base (KETM-KB∗) [44], the Knowledge-Enhanced Text Matching model (KETM∗) [44], their BERT-based variants KETM-KB (BERT)∗ [44] and KETM (BERT) [44], and the Transformer-based combinatorial attention networks BERT-Base-Comateformer [26] and RoBERTa-Base-Comateformer [26]; third, fine-tuned pre-trained language models, namely BERT-base [19], RoBERTa-base [21], and DeBERTa [20]; and fourth, the approach proposed in this paper.

Table 4.

Accuracy experiment results for the SciTail dataset.

The proposed method performs well in comparison with many mainstream models. Compared to traditional supervised models such as DecAtt [37], BiMPM [14], DRr-Net [15], and AL-RE2 [43], it improves accuracy by 22.71%, 19.71%, 7.61%, and 7.99%, respectively. Against matching methods based on pre-trained language models, namely KETM-KB∗ [44], KETM∗ [44], KETM-KB (BERT)∗ [44], KETM (BERT) [44], BERT-Base-Comateformer [26], and RoBERTa-Base-Comateformer [26], accuracy improves by 5.51%, 4.61%, 2.91%, 2.41%, 2.61%, and 1.81%, respectively. Even compared to the widely used baseline models BERT-base [19], RoBERTa-base [21], and DeBERTa [20], the method still improves accuracy by 1.78%, 1.27%, and 0.37%, respectively. We attribute these improvements to the feature distillation strategy based on dynamically gated sparse attention, which distills key features and weakens the interference of weakly relevant information, together with the faithful semantic preservation strategy, which keeps the extracted features consistent with the original text. These results support the validity of the method for textual entailment.

However, a performance improvement on a single pre-trained language model is not enough to confirm the broad applicability of the method. We therefore extend the method to all the pre-trained language models in the third group. The changes in matching accuracy are shown in Table 5, where the arrows indicate the change in accuracy between the original and improved models. Compared with the mainstream baseline models BERT-base [19], RoBERTa-base [21], and DeBERTa-base [20], the method improves accuracy by 0.18%, 0.43%, and 0.37%, respectively, and improves F1 by 0.22%, 0.56%, and 0.4%, respectively. These results confirm that the method adapts to commonly used baseline architectures and support its effectiveness across different models.

Table 5.

Experimental results of the accuracy of pre-trained models on the SciTail dataset and applying the method of this paper.

5.2. Test Results on the Chinese Dataset

- (1) Experimental results on the PAWS dataset

In the Chinese matching experiments, we choose RoBERTa-base as the backbone and evaluate the proposed method on the binary classification PAWS Chinese dataset. In addition to accuracy, we introduce the F1 value to assess classification performance in more depth. As shown in Table 6, the proposed method is effective in this binary classification scenario, with an improvement of 2.85% in accuracy and 0.8% in F1 value compared to the Mac-BERT [19] and RoBERTa-base [21] models, and an accuracy gain of 6.6% over the Semantic Text Matching Model Augmented with Perturbations [27] (STMAP), which was state of the art at the time. Behind this improvement, the feature distillation strategy based on dynamically gated sparse attention captures the key features and screens out the interference of weakly relevant information, while the faithful semantic preservation strategy keeps the key features consistent with the semantics of the original text; together they strengthen the overall performance of the model. Although optimizing a single pre-trained language model is not enough to confirm the general applicability of the method, the current results indicate that it adapts well to commonly used mainstream baseline models.

Table 6.

Experimental results on the PAWS dataset (accuracy).

To investigate the effectiveness of the method further, it is extended to all the pre-trained language models in the second group. The changes in matching accuracy are presented in Table 7, where the arrows show the change in accuracy before and after applying the method to the original models. Compared to the Mac-BERT [19] and RoBERTa-base [21] models, the proposed method increases accuracy by 0.1% and 0.80% and F1 by 0.21% and 1.06%, respectively. This demonstrates that the two mechanisms work together to improve model performance: the dynamically gated sparse attention feature distillation method isolates key features while suppressing less relevant information, and the semantic preservation strategy maintains consistency between the extracted features and the meaning of the original input. The two strategies complement each other and remain effective under different pre-trained model architectures.

Table 7.

Accuracy and F1 experimental results of pre-trained models on the PAWS dataset and after applying the method of this paper.

- (2) Experimental results on the Ant Financial Services dataset

To evaluate the model further, this study conducted experiments on the Chinese binary classification Ant Financial Services dataset using accuracy as the main evaluation metric. As shown in Table 8, compared to Mac-BERT [19] and RoBERTa-base [21], the proposed method improves accuracy by 1.18% and 0.71%, respectively, which indicates that the method is effective on the Chinese binary classification financial Q&A task.

Table 8.

Accuracy experimental results on the Ant Financial Services dataset.

To verify the broad applicability of the method, this study applies it to two different pre-trained language models and evaluates its performance on the Chinese binary classification Ant Financial Services dataset. As shown in Table 9, compared to Mac-BERT [19] and RoBERTa-base [21], the method improves accuracy by 0.55% and 0.71% and improves F1 by 0.11% and 1.12%, respectively. These results indicate that the method is applicable and effective with both pre-trained language models, which shows its potential for wider use in Chinese binary financial Q&A tasks.

Table 9.

Experimental results of accuracy and F1 metrics for pre-trained models and the application of the proposed method on the Ant Financial Services dataset.

5.3. Ablation Experiments

To validate the effectiveness of each component of the method, ablation experiments were conducted on the SciTail, MRPC, PAWS, and Ant Financial Services datasets, as shown in Table 10 and Table 11. For the DeBERTa model, introducing the gradient reversal loss and enabling the key feature matching mechanism increases accuracy by 0.28% on SciTail and 0.29% on MRPC. This suggests that the dynamically gated sparse attention feature distillation strategy can identify and extract key features while weakening the interference of weakly relevant information, thereby improving text matching accuracy. In terms of F1, SciTail gains 0.35%, which indicates that the dynamic gating mechanism can adapt to the long-tailed distribution of this dataset and improve the balance between precision and recall by extracting domain-critical features (e.g., fine-grained semantic correlations of scientific terminology). On MRPC, however, F1 decreases by 0.06%. This dataset is more sensitive to local syntactic structure, and when filtering redundant information the distillation strategy may mistakenly remove short-range dependencies (e.g., coreference disambiguation or parallel structures) that are crucial for semantic matching, which increases the misclassification rate for a few difficult samples.

Adding the interval loss $L_I$ on top of this configuration improves accuracy by a further 0.09% and 0.35%, respectively, compared to adding only $L_J$ and $L_{GRL}$. This result supports the idea that the faithful semantic preservation strategy keeps the extracted key features close to the semantics of the original text and thereby strengthens the model in the text-matching task. In terms of F1, SciTail and MRPC gain 0.05% and 0.37%, respectively. On SciTail, the interval loss $L_I$ consolidates the robustness of dynamic gating to long-tailed features by constraining intra-class aggregation and inter-class separation in the feature space (e.g., enhancing the semantic differentiation of scientific terms), which alleviates the mismatching of low-frequency terms. On MRPC, it reinforces local semantic alignment (e.g., repairing syntactic dependencies that the sparse attention feature distillation strategy may have over-pruned) and improves the model's ability to discriminate difficult samples (e.g., structurally similar but semantically opposed sentence pairs), which produces the larger F1 gain. The different gains on the two datasets confirm the dual value of the interval loss for global semantic preservation and local structural correction, and show that its synergy with the dynamic gating strategy adapts to the core demands of different tasks.

Table 10.

Results of Scitail and MRPC ablation experiments on the English dataset (accuracy and F1).

Table 11.

Results of the PAWS and Ant Financial Services data ablation experiments on the Chinese dataset.

Table 11 reports the RoBERTa-base model on the Chinese PAWS and Ant Financial Services datasets. After the gradient reversal loss is introduced, accuracy on PAWS increases by 0.1% compared to the original pre-trained language model, while F1 decreases slightly, by 0.07%. This suggests that the dataset is more sensitive to local syntactic structures and that, when filtering redundant information, the dynamically gated sparse attention feature distillation strategy may mistakenly remove short-range dependencies (such as coreference disambiguation or parallel structures) that are crucial for semantic matching, which increases the misclassification rate for a few difficult samples. On the Ant Financial Services dataset, accuracy and F1 increase by 0.54% and 0.75%, respectively, compared to the original pre-trained language model. This shows that the adaptive contextual feature distillation method acts as an information filter that removes noise during sentence matching and lays the foundation for higher accuracy. When the interval loss $L_I$ is added on top of the gradient reversal loss, accuracy on PAWS improves by an additional 0.7%, and accuracy and F1 on the Ant Financial Services dataset improve by an additional 0.16% and 0.37%, respectively. This indicates that the faithful semantic preservation strategy acts as a semantic calibrator: it keeps the extracted key features faithful to the original semantics and further improves the overall performance of the model.

5.4. Performance Analysis of Noisy Data and Low-Resource Scenarios

In this study, three noise generation methods from the TextFlint tool, namely AppendIrr, BackTrans, and Punctuation, are used to construct a systematic evaluation framework. AppendIrr simulates interference from redundant symbols in real-life scenarios by randomly inserting redundant characters that disrupt the local semantic boundaries of the text. BackTrans uses back-translation, whose syntactic reconstruction and lexical substitution produce texts with deeper semantic shifts, to simulate cross-language transmission distortion. Punctuation adds, deletes, and substitutes punctuation marks to disrupt the syntactic logic and clause structure of the text, which stress-tests models that rely on punctuation parsing. Together, the three methods cover character-level redundancy, translation noise, and syntactic variation, which occur frequently in real-life scenarios, along the dimensions of local structural noise (AppendIrr), semantic consistency perturbation (BackTrans), and syntactic boundary blurring (Punctuation), forming a multi-dimensional noise stress test.

On the high-noise datasets, both the DeBERTa- and RoBERTa-based models lose some accuracy, but to different degrees, as shown in Table 12. The accuracy of the DeBERTa-based method on the three noisy datasets (BackTrans, AppendIrr, and Punctuation) is 87.71%, 87.82%, and 88.93%, respectively, an average decrease of only 0.94% compared to its 89.10% accuracy on the original MRPC dataset. The RoBERTa-based method reaches 88.46% on the original MRPC dataset and 86.67%, 86.84%, and 88.29% on the three noisy datasets (BackTrans, AppendIrr, and Punctuation), respectively, so its average accuracy on the noisy datasets is 1.19% lower than on the original dataset. These results indicate that the DeBERTa-based method proposed in this paper is slightly more robust to noise. Both models maintain high performance in the Punctuation scenario (88.93% for DeBERTa and 88.29% for RoBERTa), which is very close to their performance on the original data and suggests that this type of surface noise has a limited impact. Qualitatively, DeBERTa’s disentangled attention mechanism may be more adept at capturing semantic associations under noise interference, while the strong generalization ability that RoBERTa obtains through large-scale pre-training also supports its stability in noisy environments. By contrast, the larger drops under text structure disruption (AppendIrr) and back-translation noise (BackTrans), up to 1.79% for RoBERTa on BackTrans, show that deep semantic distortions remain a real challenge for the models.
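The average and maximum drops quoted above can be reproduced from the reported accuracies. The short Python sketch below uses only the figures given in the text; the dictionary layout and function names are illustrative. Without rounding, the DeBERTa average drop comes to roughly 0.947 percentage points, which the text reports as 0.94%.

```python
# Accuracies (%) on the original MRPC data and on the three noisy variants,
# copied from the paragraph above.
clean = {"DeBERTa": 89.10, "RoBERTa": 88.46}
noisy = {
    "DeBERTa": {"BackTrans": 87.71, "AppendIrr": 87.82, "Punctuation": 88.93},
    "RoBERTa": {"BackTrans": 86.67, "AppendIrr": 86.84, "Punctuation": 88.29},
}

def average_drop(model: str) -> float:
    """Clean accuracy minus the mean accuracy over the three noisy datasets."""
    scores = list(noisy[model].values())
    return clean[model] - sum(scores) / len(scores)

def max_drop(model: str) -> float:
    """Largest single-dataset drop relative to the clean accuracy."""
    return max(clean[model] - s for s in noisy[model].values())

for model in clean:
    print(model, f"avg drop {average_drop(model):.3f}", f"max drop {max_drop(model):.2f}")
```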

Table 12.

Results of MRPC noise experiments on the English dataset (accuracy).

In the Chinese medical text matching task, this study validates the proposed method, based on the RoBERTa-base model, on the four-class dataset KUAKE-QTR, as shown in Table 13. The experiments use macro-F1 as the core metric. The results show that the method improves macro-F1 by 0.62% compared to the original RoBERTa-base [21] and also outperforms the MING-MOE (Medical-Intelligent Networked Generation with Mixture of Experts) family of large models, which is based on a sparse mixture of low-rank adapter experts [45]; the macro-F1 improvements over the compared models are 12.02%, 6%, 3.88%, and 0.62%, respectively. The performance improvement stems from the synergy between the dynamic gating sparse attention mechanism and the faithful semantic preservation strategy: the former accurately focuses on the weakly relevant information through adaptive feature distillation, while the latter keeps the features aligned with the original text through semantic space constraints. Together, they strengthen the model’s ability to parse medical texts at a fine-grained semantic level. Although results obtained with a single pre-trained model cannot yet fully validate the generality of the method, the experiments demonstrate its adaptability on a mainstream baseline model and provide an efficient technical path for text matching in the medical domain.

Table 13.

Experimental results for the Chinese low-resource dataset KUAKE-QTR (macro-F1).

In the ablation experiments on the Chinese medical dataset KUAKE-QTR, the step-by-step optimization of the RoBERTa-base model yields consistent performance improvements, as shown in Table 14. Introducing the gradient inversion loss improves accuracy and macro-F1 on KUAKE-QTR by 0.27% and 0.25%, respectively, which supports the view that the adaptive contextual feature distillation method is an effective information filtering mechanism that strengthens discriminative feature extraction by suppressing noise interference in the sentence matching process. Adding the interval loss $L_I$ on top yields a further improvement of 0.38% in accuracy and 0.37% in macro-F1, for a cumulative gain of 0.65% and 0.62%, respectively. This indicates that the two losses are complementary: the gradient inversion loss focuses on key feature filtering, while the interval loss keeps the feature representations consistent with the original semantics by constraining their distribution in the semantic space. Together, they consolidate the model’s semantic understanding and improve overall performance on the medical text matching task.
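To make the interplay of feature filtering and semantic preservation more concrete, the following Python sketch shows one possible reading of the two ideas: a hidden vector is split into a component along a noise direction and an orthogonal remainder (the key feature), and a hinge-style interval loss penalizes key features whose cosine similarity to the original hidden vector falls below a margin. The vectors, the margin value, and the exact loss form are assumptions made for illustration, not the paper's implementation, and the gradient inversion layer is omitted because it requires an autograd framework.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def decompose(h, r):
    """Split h into its projection onto r (treated as noise) and the orthogonal rest (key)."""
    scale = dot(h, r) / dot(r, r)
    noise = [scale * x for x in r]
    key = [a - b for a, b in zip(h, noise)]
    return key, noise

def cosine(u, v):
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

def interval_loss(key, h, margin=0.95):
    """Assumed hinge form: zero once cos(key, h) reaches the margin, positive otherwise."""
    return max(0.0, margin - cosine(key, h))

h = [2.0, 1.0, 0.5]   # hypothetical hidden-layer vector
r = [0.0, 1.0, 0.0]   # hypothetical weakly relevant (noise) direction
key, noise = decompose(h, r)

print("key:", key, "noise:", noise)
print("key . noise =", dot(key, noise))          # orthogonal by construction
print("interval loss:", interval_loss(key, h))   # positive: key has drifted from h
```

Under these assumptions, the projection step removes the noise direction, while the interval loss pushes back whenever that removal changes the angle to the original representation by too much.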

Table 14.

Experimental results of KUAKE-QTR ablation for Chinese low-resource datasets.

5.5. Time and Space Complexity Analysis

This study analyses the time and memory complexity of this paper’s method based on DeBERTa-base, the original DeBERTa-base, this paper’s method based on RoBERTa-base, the original RoBERTa-base, and DC-Match (RoBERTa-base), as shown in Table 15.

Table 15.

Time and memory complexity analysis.

In Table 15, the improved DeBERTa-base method proposed in this paper has higher time and memory complexity than the original DeBERTa-base model, mainly because of the computational and storage overheads of the additional components. Time complexity rises from the original $O(L(3n^2 d + 2nd^2))$ to $O(L(3n^2 d + 2nd^2) + bh(n^2 + nw + nk))$, primarily because of the sparse attention mechanism and the explicit projection operations. The memory footprint grows from the original $O(L(3n^2 + 2nd) + 150\text{M})$ to $O(L(3n^2 + 2nd) + 3bhS^2)$, which covers storage for the sparse attention branch outputs, the projected intermediate states, and the larger AdamW optimizer parameters. While these additions improve semantic modeling through sparse attention and explicit feature projection, they demand more processing resources. The baseline DeBERTa therefore remains preferable in resource-limited environments, despite potential compromises in capturing complex semantics. This trade-off reflects the balance between improved performance and practical deployment considerations.
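The relative size of the added time term can be read off directly from the two expressions above. As a sketch, and assuming the usual reading of the symbols (n as sequence length, d as hidden size, L as number of layers, b as batch size, h as number of sparse attention heads, w as window size, and k as number of blocks), dividing the added term by the baseline term gives:

```latex
\[
\frac{\Delta T}{T_{\text{base}}}
  = \frac{b h \left(n^2 + n w + n k\right)}{L \left(3 n^2 d + 2 n d^2\right)}
  = \frac{b h \left(n + w + k\right)}{L d \left(3 n + 2 d\right)}
\]
```

For sequences much longer than w, k, and d, this ratio approaches $bh/(3Ld)$, so under these assumptions the extra cost stays a bounded fraction of the baseline cost rather than growing with the sequence length.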

This study’s enhanced RoBERTa-base approach has higher computational and memory demands than the baseline model because of the added architectural components. The time complexity increases from the original $O(L(n^2 d + nd^2))$ to $O(L(n^2 d + nd^2) + bh(n^2 + nw + nk))$, driven by the three-way sparse attention mechanism (with h = 4 heads, w = 4 window size, and k = 4 blocks) and the explicit two-dimensional projection operations. The sparse attention branch, particularly its $n^2$ term, introduces significant computational overhead in long-sequence processing. Memory requirements grow from $O(L(n^2 + nd) + 125\text{M})$ to $O(L(n^2 + nd) + 3bhS^2)$ to accommodate three sparse attention intermediate states ($3bhS^2$), dual explicit projection states, and a cosine similarity matrix. While these additions improve local feature extraction and semantic alignment through sparse attention patterns and projection transformations, they demand more hardware resources. The original RoBERTa-base therefore remains preferable for resource-constrained deployments, despite potential limitations in modeling global dependencies. The choice between the two variants should balance task-specific performance needs against available computational capacity and memory.

This study’s enhanced RoBERTa-base method makes different computational trade-offs from DC-Match (RoBERTa-base), reflecting different architectural priorities. DC-Match employs three parallel encoders, which roughly triples the time complexity of the original single-encoder model, to $O(3L(n^2 d + nd^2) + 4bn^2 d)$, because of the independent encoder computations and the KL divergence loss calculations. The proposed method keeps a lower complexity of $O(L(n^2 d + nd^2) + bh(n^2 + nw + nk))$ through its sparse attention mechanism (4 heads, 4-window locality, 4-block partitioning) and explicit projections, which replace part of the global attention with localized operations. Memory requirements differ sharply: DC-Match needs triple-encoder parameters (375M), with $O(3L(n^2 + nd) + 375\text{M} + 3Lhn^2)$ complexity for storing three attention matrices and joint probability intermediates, while our approach uses single-encoder parameters (125M), with $O(L(n^2 + nd) + 3bhS^2)$ complexity. DC-Match enhances multi-view semantic alignment through encoder interactions at roughly triple the resource cost, which suits well-resourced multi-perspective tasks. Our method instead prioritizes efficiency through localized feature extraction and a single-encoder architecture, balancing performance against a smaller memory footprint, which is particularly advantageous for long-sequence processing or hardware-limited deployments. The contrast reflects a basic design choice between comprehensive multi-encoder systems and an optimized single model.
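To give a feel for the magnitudes involved, the following Python sketch evaluates the three time-complexity expressions above as raw term counts. The values L = 12 and d = 768 correspond to the RoBERTa-base configuration and h = w = k = 4 come from the text, while n = 128 and b = 32 are assumed values chosen only for illustration. The counts ignore constant factors and are not measured runtimes.

```python
def roberta_base_terms(L: int, n: int, d: int) -> int:
    """Evaluate L(n^2 d + n d^2) for the single-encoder baseline."""
    return L * (n**2 * d + n * d**2)

def proposed_terms(L: int, n: int, d: int, b: int, h: int, w: int, k: int) -> int:
    """Baseline term plus the sparse attention term bh(n^2 + nw + nk)."""
    return roberta_base_terms(L, n, d) + b * h * (n**2 + n * w + n * k)

def dc_match_terms(L: int, n: int, d: int, b: int) -> int:
    """Evaluate 3L(n^2 d + n d^2) + 4 b n^2 d for the three-encoder DC-Match."""
    return 3 * L * (n**2 * d + n * d**2) + 4 * b * n**2 * d

# L, d: RoBERTa-base; h, w, k: from the text; n, b: assumed for illustration.
L, d, n, b, h, w, k = 12, 768, 128, 32, 4, 4, 4

base = roberta_base_terms(L, n, d)
ours = proposed_terms(L, n, d, b, h, w, k)
dc = dc_match_terms(L, n, d, b)

print(f"baseline : {base:,}")
print(f"proposed : {ours:,} ({ours / base:.4f}x baseline)")
print(f"DC-Match : {dc:,} ({dc / base:.4f}x baseline)")
```

With these assumed values the added sparse attention term is small next to the encoder term, whereas the DC-Match expression is several times the baseline, which is consistent with the qualitative comparison above.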

5.6. Runtime Comparison

This section examines the trade-off between computational efficiency and model performance of the proposed method through experimental measurements and theoretical complexity modeling. Experiments on the Scitail dataset show that, compared to the original BERT, RoBERTa, and DeBERTa models, the improved method increases training time by 50.75, 54.85, and 21.15 s and testing time by 0.05, 0.18, and 0.05 s, respectively, and the testing time for the DeBERTa baseline even shows an optimization of 0.06 s, as shown in Table 16. This is consistent with the theoretical analysis: the added sparse attention mechanism and explicit projection operations mainly affect the computational load in the training phase. In the inference phase, parallelizing the three-way branches can partially offset the overhead through batch optimization, whereas in the training phase the added parameters must be updated repeatedly, which significantly increases the time cost. In terms of model capability, the improved approach enhances local and global pattern capture through multi-granularity feature extraction with sparse attention and through semantic space alignment with explicit projections, at the cost of an increase in space complexity to $O(L(n^2 + nd) + 3bhS^2)$, which reflects the additional storage needed for the attention intermediate states and projection matrices. This increase in time and space complexity translates into higher hardware resource requirements.

Table 16.

Comparison of runtime on the English dataset Scitail.

Experiments on the Chinese dataset Anthem show that, compared to the Mac-BERT and RoBERTa-base baseline models, the improved method proposed in this paper increases training time by 932.57 s and 1987.87 s, respectively, while testing time increases only slightly, by 0.27 s and 0.98 s, respectively, as shown in Table 17. This stems from the combined effect of the sparse attention mechanism and the explicit projection operation in the model architecture. The sparse attention strengthens local feature modeling through its three-way branching structure (h = 4 heads, w = 4 window size, and k = 4 blocks) but also introduces an additional time complexity term containing $n^2$, which significantly increases the computational burden, especially for long sequences. Meanwhile, the two-dimensional transformations added by the explicit projection operation and the storage of the three-way attention intermediate results raise the memory complexity from the original model’s $O(L(n^2 + nd) + 125\text{M})$ to $O(L(n^2 + nd) + 3bhS^2)$. Although the structural changes enhance semantic alignment and contextual representation, the resulting time complexity of $O(L(n^2 d + nd^2) + bh(n^2 + nw + nk))$ is higher than the original model’s $O(L(n^2 d + nd^2))$, which means that RoBERTa-base remains the more practical choice in resource-constrained scenarios. Model selection therefore needs to weigh the task’s demand for feature capture depth against the available hardware resources in order to balance computational efficiency and semantic modeling accuracy.

Table 17.

Comparison of runtime on the Anthem dataset.

5.7. Performance Comparison of Attention Mechanisms

This section compares the dot-product full attention model, the linear attention variant Linformer, and the dynamically gated sparse attention model on the MRPC and PAWS datasets in order to explore how these three attention mechanisms differ in performance across linguistic tasks.

Specifically, in the experiments on the MRPC and PAWS datasets, we applied the dot-product full attention model, the linear attention variant Linformer, and the dynamically gated sparse attention model to the two baseline models, RoBERTa-base [21] and DeBERTa-base [20]. As shown in Figure 2, the dynamically gated sparse attention model improves accuracy by 0.45% and 0.11%, respectively, compared to the dot-product full attention model. On the MRPC and PAWS datasets, the accuracy of Linformer is 0.2% and 0.69% lower, respectively, than that of the dynamically gated sparse attention model. This gap mainly stems from the fact that the low-rank approximation adopted by Linformer struggles to capture long-range dependency information adequately when processing long texts, which limits its ability to model semantic relevance. The dynamically gated sparse attention model, in contrast, performs better in the MRPC tests based on both the RoBERTa-base [21] and DeBERTa-base [20] architectures. Its advantage mainly comes from extracting features more efficiently by fusing branches with dynamically gated weights, introducing a linear projection to compress the feature dimensions, and supporting adaptive padding.
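The idea of fusing branches with dynamically gated weights can be sketched in a few lines of Python. The version below is a minimal, framework-free illustration for a single token: a gate vector is computed from the token representation by a softmax over per-branch scores, and the branch outputs are mixed with those weights. The function names, the gate parameterization, and the numeric values are assumptions for illustration and do not reproduce the paper's implementation.

```python
import math

def softmax(z):
    m = max(z)                               # subtract the max for numerical stability
    e = [math.exp(x - m) for x in z]
    s = sum(e)
    return [x / s for x in e]

def gated_fusion(token, branch_outputs, gate_weights):
    """Mix branch outputs for one token using input-dependent softmax gates.

    token:          list of d floats (token representation)
    branch_outputs: one list of d_out floats per branch
    gate_weights:   one list of d floats per branch (hypothetical gate parameters)
    """
    logits = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    gates = softmax(logits)
    d_out = len(branch_outputs[0])
    fused = [sum(g * out[i] for g, out in zip(gates, branch_outputs))
             for i in range(d_out)]
    return fused, gates

token = [1.0, 0.5]
branches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]        # e.g. three attention branches
gate_weights = [[2.0, 0.0], [0.0, 1.0], [-1.0, 0.5]]   # hypothetical values

fused, gates = gated_fusion(token, branches, gate_weights)
uniform_fused, uniform_gates = gated_fusion(token, branches, [[0.0, 0.0]] * 3)
print("gates:", [round(g, 3) for g in gates])
print("fused:", [round(x, 3) for x in fused])
```

Because the gates depend on the token, different tokens can weight the branches differently, which is the sense in which the fusion is dynamic. With all-zero gate parameters the sketch reduces to a plain average of the branches.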