1. Introduction

Forests, as one of the most important ecosystems on Earth, play a crucial role in maintaining biodiversity and regulating the climate, among other vital functions [1]. Their spatial structure and functional distribution often exhibit multi-layered self-similarity, and this symmetry appears in remote sensing point cloud data as distinct geometric patterns, such as the radial symmetry of tree trunks and the conical or hemispherical symmetry of tree canopies. However, climate change and human activities are increasingly disrupting this natural symmetry. Illegal logging has increased the spatial variability of forest stands, while extreme weather events have caused asymmetric changes in canopy structure [2,3,4].

Given these threats, efficient forest monitoring has become a particularly important issue, as it provides essential tools for forest conservation and management. Although traditional methods can provide accurate forest management data, they are time-consuming and labor-intensive, which makes large-scale forest monitoring difficult. In addition, complex terrain and dense vegetation pose significant challenges for data acquisition and large-scale data processing. Consequently, developing advanced techniques for efficient and accurate forest monitoring has become a central focus of current forestry research and practice [5].

With the breakthrough progress of deep learning in computer vision, computer graphics, and natural language processing, a growing number of researchers have applied deep learning techniques to remote sensing point cloud processing. Classical networks such as PointNet++ [6] use hierarchical feature extraction to capture the geometric symmetry of trees (e.g., the cylindrical symmetry of trunks and the radial symmetry of canopies), significantly improving the performance of individual tree segmentation.

Despite these advances, several challenges remain in practical applications of deep learning to remote sensing. On the one hand, remote sensing point cloud data are typically high-dimensional, complex, and noisy, which places stricter requirements on the robustness of deep learning models. On the other hand, the vulnerability of deep learning models to adversarial attacks also limits their application in real-world scenarios [7,8]. Attackers may disrupt the symmetric properties of tree point clouds by adding noise points, deleting key points, or locally perturbing point coordinates, leading to mis-segmentation of tree boundaries or missed detection of target trees and thereby compromising the accuracy of forest resource assessments. Therefore, when integrating deep learning into remote sensing, researchers must also attend to model robustness, generalization capability, and interpretability [9], so as to improve model reliability in remote sensing environments and provide better solutions for forest resource management. To this end, this study designs multiple targeted adversarial sample generation strategies to evaluate the segmentation model's performance under attack and proposes two defense strategies, data augmentation defense and adversarial training, to strengthen the model's robustness against adversarial samples.

This study presents a robust individual tree segmentation framework for remote sensing point cloud data, specifically designed to improve model resilience against adversarial perturbations in complex forest environments. Unlike prior works that primarily focus on 2D image domains, our framework is tailored to the structural characteristics of forest point clouds by incorporating both adversarial learning and geometric-aware data augmentation. The proposed method integrates denoising and controlled noise injection into a targeted data augmentation pipeline and introduces a keypoint-guided adversarial attack strategy based on saliency estimation in 3D space. These keypoints are used to guide perturbations such as FGSM and Gaussian noise, as well as point addition and deletion, resulting in structurally meaningful adversarial examples that better reflect realistic challenges in forest segmentation tasks. Furthermore, we introduce a dynamic training strategy that adaptively adjusts the ratio of adversarial to clean samples based on training loss dynamics, striking an effective balance between robustness and generalization.

The main technical contributions of this work can be summarized as follows:

To address the susceptibility of point cloud-based tree segmentation models to adversarial perturbations, we propose a robust training framework that integrates data augmentation and adversarial learning. This strategy significantly enhances model resilience, improving segmentation accuracy and reliability in forest monitoring tasks.

We propose a keypoint-guided adversarial attack strategy that uses salient 3D regions within the point cloud to apply structured perturbations. Specifically, we employ FGSM and Gaussian noise attacks and further strengthen the perturbation by adding or removing points in the vicinity of identified keypoints. This approach disrupts local geometric structure and semantic continuity, generating more realistic and challenging adversarial examples for robust model evaluation.

A dynamic training mechanism is designed to regulate the ratio of adversarial to clean samples based on training loss dynamics. This adaptive scheme maintains a balance between robustness and generalization, mitigating the risk of overfitting or under-training due to excessive noise injection.

2. Related Work

In recent years, the wide application of deep learning across various fields has spurred research on adversarial examples and defenses against them [10]. By adding tiny perturbations to the input data that are difficult for the human eye to recognize, adversarial examples can seriously degrade a model's performance or even trigger completely wrong predictions. During the collection of remote sensing point cloud data, factors such as sensor error, environmental interference, terrain relief, and vegetation occlusion may corrupt the data, producing noisy points, local data loss, and uneven point density. These problems are, in essence, natural adversarial attacks: they reduce segmentation accuracy and ultimately the accuracy of forest resource assessment. Therefore, improving the adversarial robustness of deep learning models has become a key issue in current research [11].

Research on adversarial attacks mainly focuses on methods for generating adversarial samples and their effects on model performance. Goodfellow et al. [12] proposed the fast gradient sign method (FGSM), which generates adversarial samples by computing the gradient of the loss function with respect to the input data and applying a perturbation of fixed magnitude along the sign of this gradient. Madry et al. [13] proposed the projected gradient descent (PGD) method, which uses gradient information to slightly perturb the input sample at each iteration and projects the result back onto a predefined perturbation range. However, the multiple iterations required by PGD incur significant computational overhead, making it difficult to apply to large-scale datasets. In addition, Kurakin et al. [14] proposed a method for generating and testing adversarial examples in the physical world. They created adversarially modified images that could still fool deep learning models even after being printed, exposed to changes in lighting, and captured by a camera. Attacks on LiDAR point cloud data in physical environments have also been explored, in which adding or removing key points prevents autonomous driving systems from correctly recognizing objects. Such attacks may cause vehicles to misjudge obstacles and compromise driving safety.

With the growing threat of adversarial attacks, researchers have increasingly pursued adversarial defense methods to improve model robustness. Research on adversarial defense mainly focuses on improving the resistance of the model to adversarial samples. Adversarial training is one of the most effective adversarial defense methods [15]: adversarial samples are introduced during training so that the model learns the features of adversarial perturbations, thereby improving its robustness. Goodfellow et al. [12] introduced FGSM adversarial training, in which adversarial examples generated by FGSM are added to the training set to help the model build resistance against similar attacks. The PGD adversarial training method proposed by Madry et al. [13] significantly improves model robustness by training on stronger, iteratively generated adversarial samples. In addition, data augmentation techniques are widely used in adversarial defense. In point cloud segmentation, Wang et al. [16] enlarged the dataset and generated more diverse training data through random rotation, translation, and noise injection, which improved the model's ability to adapt to variations in point cloud data. Gradient masking is another defense, which reduces the effect of attacks by limiting the gradient information available to the attacker. Cohen et al. [17] proposed randomized smoothing, which, unlike adversarial training, smooths the model's decision boundary by adding noise to the input data, thereby improving stability under adversarial attacks and providing a mathematically provable robustness guarantee.

3. Methods

This study proposes a defense strategy that combines data augmentation defense and adversarial training. By addressing both data preprocessing and model training, it aims to enhance the model's ability to defend against adversarial samples, ensure that the model can still accurately segment individual trees in complex environments, and thereby improve its robustness.

3.1. Adversarial Sample Generation

3.1.1. Adversarial Point Perturbation-Based Attacks

Here, we employ the FGSM algorithm to implement adversarial attacks based on point perturbation. FGSM, proposed by Goodfellow et al. [12], is a classical adversarial attack method that uses the gradient information of the learning model to generate adversarial samples that induce misclassification by the neural network.

Specifically, FGSM first computes the gradient of the defined loss function with respect to the input data to determine the perturbation direction that maximizes the loss. It then applies a perturbation of controlled magnitude along this direction to generate adversarial samples that cause the neural network to misclassify. The formulation is shown in Equation (1):

$$x_{adv} = x + \varepsilon \cdot \mathrm{sign}\left(\nabla_x J(\theta, x, y)\right) \quad (1)$$

Here, $x$ represents the original point cloud data, $x_{adv}$ the generated adversarial sample, and $\varepsilon$ a hyperparameter that controls the intensity of the perturbation. For high-resolution datasets with dense point distributions, a smaller $\varepsilon$ is chosen to avoid excessive perturbation that could compromise the physical meaning of the data. In contrast, for lower-resolution, noisier datasets, $\varepsilon$ can be increased to improve the effectiveness of the attack. The $\mathrm{sign}(\cdot)$ function determines the direction of the perturbation, and $\nabla_x J(\theta, x, y)$ represents the gradient of the loss function $J$ with respect to the input $x$, given the model parameters $\theta$ and the label $y$.
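As an illustration of Equation (1), the following is a minimal NumPy sketch, assuming the gradient of the loss with respect to the point coordinates has already been computed by the training framework's automatic differentiation and is passed in as an array of the same shape as the point cloud. The function names are hypothetical. Because the experiments constrain the perturbation with an L2 distance, a common L2-normalized variant (often called the fast gradient method) is included for comparison; which form the authors actually used is an assumption the text does not settle.

```python
import numpy as np


def fgsm_attack(points: np.ndarray, grad: np.ndarray, eps: float) -> np.ndarray:
    """FGSM as in Eq. (1): x_adv = x + eps * sign(grad_x J(theta, x, y)).

    points: (N, 3) xyz coordinates of one point cloud sample.
    grad:   (N, 3) loss gradient w.r.t. the coordinates (assumed precomputed).
    """
    points = np.asarray(points, dtype=float)
    grad = np.asarray(grad, dtype=float)
    if points.shape != grad.shape:
        raise ValueError("points and grad must have the same shape")
    # Each coordinate moves by exactly eps in the direction that increases the loss;
    # coordinates with zero gradient stay put, since sign(0) == 0.
    return points + eps * np.sign(grad)


def fgm_l2_attack(points: np.ndarray, grad: np.ndarray, eps: float) -> np.ndarray:
    """Hypothetical L2 variant: a step of total L2 length eps along the raw gradient."""
    points = np.asarray(points, dtype=float)
    grad = np.asarray(grad, dtype=float)
    norm = np.linalg.norm(grad)  # L2 norm over all N * 3 coordinates
    if norm == 0.0:
        return points.copy()
    return points + eps * grad / norm
```

The sign step bounds every coordinate change by ε (an L∞ constraint), whereas the normalized step bounds the overall L2 distance between x and x_adv by ε.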

3.1.2. Gaussian Noise-Based Attacks

Gaussian noise follows a normal probability distribution. During adversarial sample generation, randomly distributed Gaussian noise perturbations are added to the original data to alter its features and induce model mispredictions. For remote sensing point clouds of large-scale tree scenes, Gaussian noise attacks perturb the 3D coordinates of the points; that is, the positions of the sampled points are shifted by random variables drawn from a Gaussian distribution, as shown in Equation (2):

$$x_{adv} = x + \mathcal{N}(0, \sigma^2) \quad (2)$$

Here, $\mathcal{N}(0, \sigma^2)$ denotes a Gaussian random variable with zero mean and variance $\sigma^2$. The variance $\sigma^2$ controls the noise intensity: a larger variance produces more severe noise that shifts the point coordinates substantially and thus degrades the model's segmentation results, while a smaller variance produces a small perturbation with a relatively weaker effect on the model.
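A minimal NumPy sketch of Equation (2) follows; the function name is hypothetical. One detail worth noting is that `numpy.random.Generator.normal` takes the standard deviation σ as its `scale` argument, not the variance σ².

```python
import numpy as np


def gaussian_noise_attack(points, sigma, rng=None):
    """Eq. (2): x_adv = x + N(0, sigma^2), applied independently to every coordinate.

    points: (N, 3) xyz coordinates; sigma: standard deviation of the noise.
    """
    rng = np.random.default_rng() if rng is None else rng
    points = np.asarray(points, dtype=float)
    # `scale` is the standard deviation, so the noise variance is sigma ** 2.
    noise = rng.normal(loc=0.0, scale=sigma, size=points.shape)
    return points + noise
```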

3.1.3. Point Addition-Based Attacks

In this study, we also employ a keypoint-based insertion attack, in which new points are added at critical locations in the point cloud. These key points tend to lie in regions of high curvature or density and play a key role in describing the shape, structure, and boundaries of trees, so adding points in these regions changes the local geometric features.

The point addition-based attack constructs a set of adversarial points, each of which is artificially generated rather than sampled from the original point cloud scene. Because the number of points in a local point cloud $P$ is not fixed, a specified number of adversarial points can be deliberately added to achieve the attack effect [18]. However, given the vast search space of 3D point clouds, randomly added adversarial points may not perturb the model strongly enough to disrupt its predictions. Furthermore, for networks such as PointNet++ [6], points located near the center of the point cloud are often filtered out by max-pooling layers. Since max pooling selects the maximum value along each feature dimension and central points rarely produce maximal feature responses, their impact on the final segmentation result is minimal [19], which makes randomly added points less effective for attacks.

To address this problem, we design a keypoint-based adversarial point generation strategy that follows the saliency map computation method proposed by Zheng [20]. Specifically, we first transform the original point cloud into a spherical coordinate system whose origin is the center of the point cloud. By iteratively moving sampled points toward this center and computing the change in loss before and after each move, we estimate a saliency score for each sampled point and ultimately generate a saliency map of the point cloud. The median of the 3D coordinates of all sampled points is taken as the center of the point cloud, denoted $x_c$, and is computed as follows:

$$x_c = \left(\mathrm{md}(\{x_i\}_{i=1}^{n}),\ \mathrm{md}(\{y_i\}_{i=1}^{n}),\ \mathrm{md}(\{z_i\}_{i=1}^{n})\right) \quad (3)$$

Here, $(x_i, y_i, z_i)$ represents the coordinates of the sampled point $p_i$ along each dimension, $n$ is the number of sampled points, and $\mathrm{md}(\cdot)$ denotes the median operation.

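Then, translating a single sampled point $p_i$ toward the point cloud center by a small distance $\delta$ changes the value of the loss function $L$ by approximately $-\frac{\partial L}{\partial r_i}\delta$, where $r_i$ is the radial coordinate of $p_i$ in the spherical coordinate system. The gradient $\frac{\partial L}{\partial r_i}$ is computed as follows:

$$\frac{\partial L}{\partial r_i} = \sum_{j=1}^{3} \frac{\partial L}{\partial p_i^{(j)}}\,\frac{\partial p_i^{(j)}}{\partial r_i} = \frac{1}{r_i}\sum_{j=1}^{3}\left(p_i^{(j)} - x_c^{(j)}\right)\frac{\partial L}{\partial p_i^{(j)}} \quad (4)$$

Here, $r_i = \lVert p_i - x_c \rVert_2$, and $p_i^{(j)}$ and $x_c^{(j)}$ denote the $j$-th coordinates of $p_i$ and $x_c$, respectively. The saliency value $s_i$ is computed as follows:

$$s_i = -r_i^{1+\alpha}\,\frac{\partial L}{\partial r_i} \quad (5)$$

where $\alpha$ is a hyperparameter that rescales the influence of the distance $r_i$.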

In the saliency map, points with higher scores are regarded as key points because they usually lie in important regions that determine the object category. Adding adversarial points near these key points is therefore more likely to affect the model's predictions and induce mis-segmentation or misclassification.
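The saliency computation can be sketched in NumPy as follows, assuming a Zheng-style formulation in which the center is the per-axis median, r_i is the distance from p_i to that center, and s_i = −r_i^(1+α) ∂L/∂r_i. The per-point loss gradient ∂L/∂p_i is assumed to come from the segmentation network (e.g., via automatic differentiation). The function names and the default α = 1.0 are illustrative assumptions, not values reported in this study.

```python
import numpy as np


def saliency_scores(points, grad, alpha=1.0):
    """Per-point saliency s_i = -r_i^(1 + alpha) * dL/dr_i around the median center.

    points: (N, 3) coordinates; grad: (N, 3) loss gradient dL/dp_i (assumed precomputed).
    alpha:  distance rescaling exponent (illustrative default; assumed >= -1).
    """
    points = np.asarray(points, dtype=float)
    grad = np.asarray(grad, dtype=float)
    center = np.median(points, axis=0)       # x_c: per-axis median of the coordinates
    offsets = points - center
    r = np.linalg.norm(offsets, axis=1)      # radial coordinate r_i = ||p_i - x_c||
    # dL/dr_i = (1 / r_i) * sum_j (p_i^j - x_c^j) * dL/dp_i^j; points at the center get 0.
    radial_grad = np.zeros_like(r)
    away = r > 0
    radial_grad[away] = np.einsum("ij,ij->i", offsets[away], grad[away]) / r[away]
    # A negative dL/dr_i means pulling the point toward the center raises the loss.
    return -np.power(r, 1.0 + alpha) * radial_grad


def top_k_keypoints(scores, k):
    """Indices of the k highest-scoring points, most salient first."""
    return np.argsort(scores)[::-1][:k]
```

The radial derivative follows from writing p_i = x_c + r_i·u_i with a unit direction u_i, so ∂p_i/∂r_i = (p_i − x_c)/r_i, and the gradient is projected onto that direction.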

3.1.4. Point Deletion-Based Attacks

In addition to the attack methods above, this study implements a simple and effective attack: point deletion. This method removes a certain number of points, or points at specific locations, from the original remote sensing tree point cloud. As a result, it changes the overall structure and feature distribution of the point cloud and thereby interferes with the normal operation of the individual tree segmentation model.

In this paper, two strategies are used to select the points to delete. The first is random point deletion, which randomly selects points from the whole point cloud and removes them in a given proportion. Although this approach is simple to implement, it is untargeted and may not effectively degrade model performance. To strengthen the attack, we also adopt a more targeted deletion strategy that selects key points for removal based on the point cloud saliency map. Since the shape of a point cloud is mainly determined by its surface points, points located in the central region contribute less to the model's predictions. Moreover, under certain conditions, moving a point to the point cloud center has the same effect as removing it directly. Therefore, as in the point addition-based attack, we follow the saliency map computation method proposed by Zheng [20]: we generate the saliency map of the point cloud, select the key points with the highest saliency scores, and move these points to the center to achieve the effect of deletion.
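The two deletion strategies can be sketched as below. This is an illustration under stated assumptions: the saliency scores are taken as an input (for instance, from the saliency map described above), the function names are hypothetical, and the salient points are moved onto the per-axis median center so that the point count stays fixed while those points contribute little after max pooling.

```python
import numpy as np


def random_point_deletion(points, ratio, rng=None):
    """Remove a random fraction `ratio` of the points (untargeted baseline)."""
    rng = np.random.default_rng() if rng is None else rng
    points = np.asarray(points, dtype=float)
    n_drop = int(round(ratio * len(points)))
    keep = np.sort(rng.permutation(len(points))[n_drop:])  # preserve original order
    return points[keep]


def saliency_point_deletion(points, scores, k):
    """'Delete' the k most salient points by moving them onto the point cloud center."""
    points = np.asarray(points, dtype=float).copy()
    center = np.median(points, axis=0)          # per-axis median center
    most_salient = np.argsort(scores)[::-1][:k]
    points[most_salient] = center
    return points
```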

Point deletion-based attacks change the density distribution and spatial topology of the point cloud, making it difficult for the model to accurately extract the characteristics of trees when processing the data, which leads to segmentation errors, such as mistakenly dividing part of a tree into multiple trees.

3.2. Adversarial Defense Methods

This study mainly employs two strategies to defend against adversarial samples: data augmentation defense and adversarial training.

3.2.1. Data Augmentation

Data augmentation is a basic and effective way to defend against interference from adversarial samples. By applying a variety of transformations to the original point cloud data, data augmentation can not only enlarge the dataset but also help the model learn more stable and generalizable features, thereby reducing the impact of adversarial samples and improving the robustness of the model.

In the task of image processing, the common data augmentation methods include rotation, translation, and other transformations on images [

11]. Rotating images simulate the appearance of objects from different viewing angles, so that the model can learn the features of the object when presented in multiple directions, thereby improving the recognition ability of the model. However, PointNet++ [

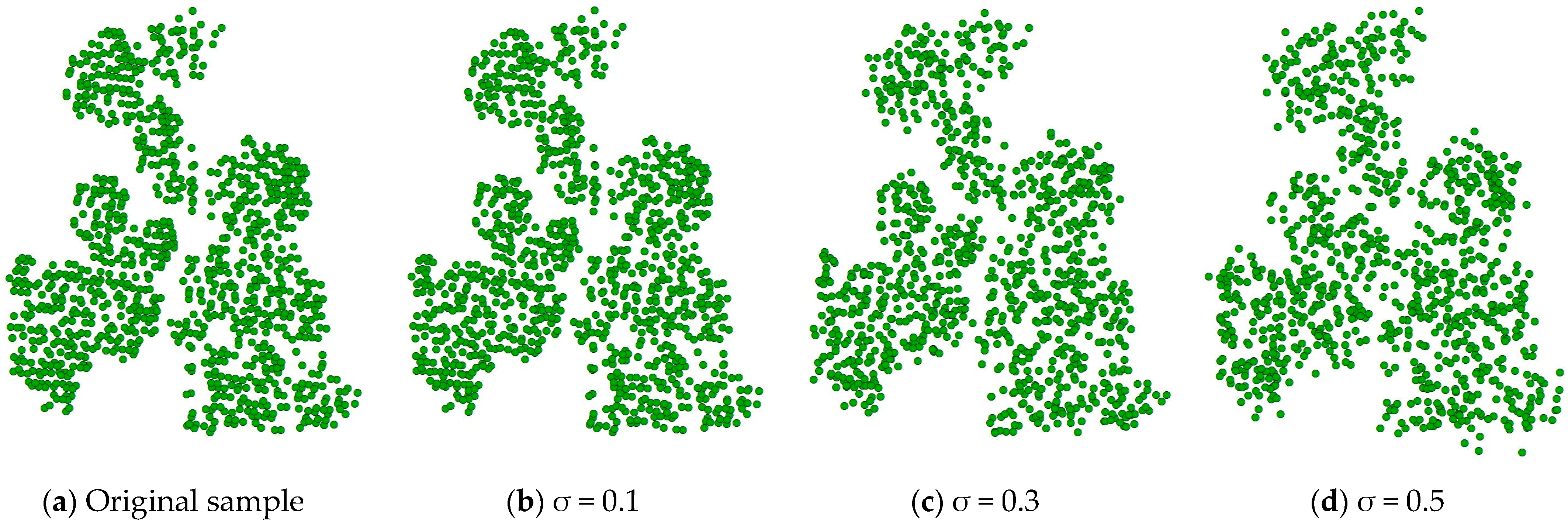

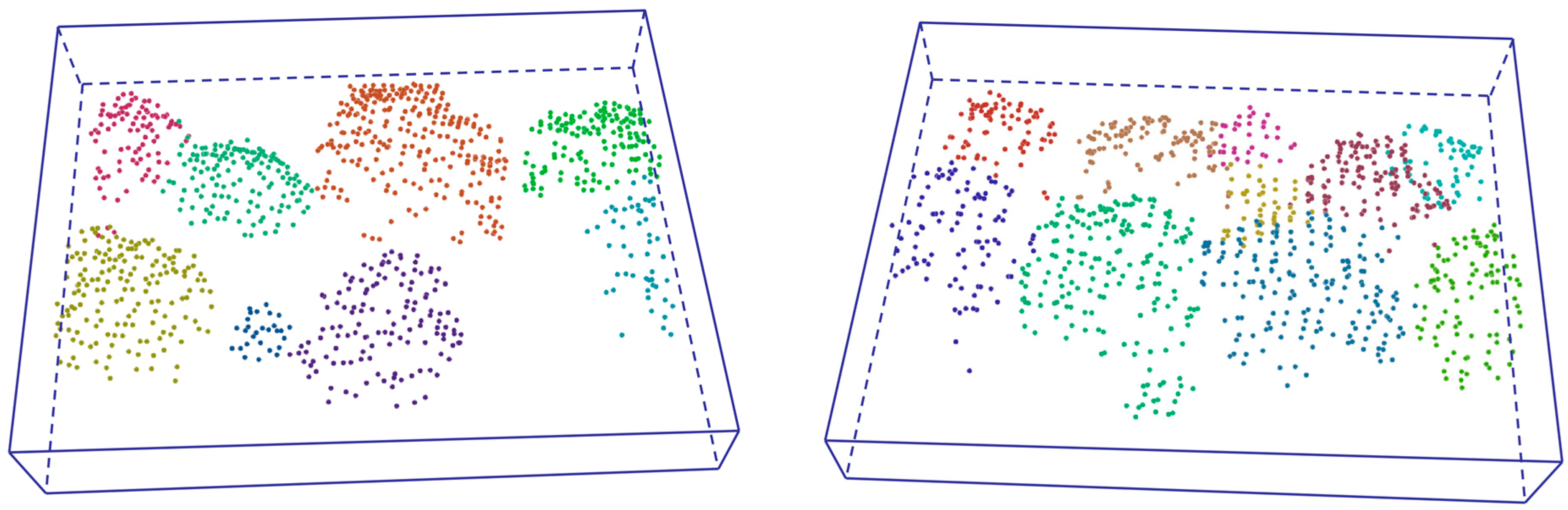

6] adopts a deep learning architecture based on hierarchical feature extraction and selects the most significant features in each local region through max pooling, so that it can maintain the effective extraction of key features after point cloud data rotation. This means that rotating point cloud samples does not significantly help to improve the performance of the proposed model, and it may introduce additional computational costs instead. Therefore, this study employs a targeted data augmentation strategy combining denoising and controlled noise injection, where the injected noise follows two key principles: spatial distribution follows a truncated Gaussian (μ = 0, σ = 0.3) within local neighborhoods, and structural constraints limit maximum displacement to 0.5 m to maintain the original structure. As shown in

Figure 1, our enhanced samples preserve tree morphology while reducing scattered points, yielding cleaner and more compact point clouds. When σ is too small (e.g., σ = 0.1), the perturbation is minimal, resulting in augmented samples that are almost indistinguishable from the original ones, thereby failing to effectively improve the model’s robustness, as shown in

Figure 1b. In contrast, when σ is too large (e.g., σ = 0.5), the point cloud becomes overly scattered, leading to geometric distortion and compromising the semantic features of the trees, as illustrated in

Figure 1d. In addition, controlled noise is added to the enhanced samples to simulate remote sensing point cloud sampling errors or environmental disturbances that may occur in real scenes. This moderate noise enhancement not only improves the diversity of the datasets but also forces the model to pay more attention to the overall morphological characteristics of trees in the learning process, rather than relying too much on the precise location of local points, thus enhancing the robustness of the model to noise.
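One possible reading of the noise-injection rule (σ = 0.3, maximum displacement 0.5 m) is sketched below. The text does not state whether σ applies per axis or whether truncation acts per axis or on the displacement length; this sketch assumes a per-axis σ and truncation on the 3D displacement length, implemented by resampling over-long displacements and, as a safeguard, rescaling any that remain. Coordinates are assumed to be in meters, and the function name is hypothetical.

```python
import numpy as np


def truncated_gaussian_jitter(points, sigma=0.3, max_disp=0.5, rng=None, max_rounds=50):
    """Jitter points with zero-mean Gaussian offsets whose length is at most max_disp."""
    rng = np.random.default_rng() if rng is None else rng
    points = np.asarray(points, dtype=float)
    disp = rng.normal(0.0, sigma, size=points.shape)
    # Rejection sampling: redraw only the offsets that exceed the structural limit.
    for _ in range(max_rounds):
        too_long = np.linalg.norm(disp, axis=1) > max_disp
        if not too_long.any():
            break
        disp[too_long] = rng.normal(0.0, sigma, size=(int(too_long.sum()), points.shape[1]))
    # Safeguard: shrink any offsets still over the limit after max_rounds redraws.
    norms = np.linalg.norm(disp, axis=1)
    over = norms > max_disp
    disp[over] *= (max_disp / norms[over])[:, None]
    return points + disp
```

Resampling keeps the accepted offsets Gaussian-shaped inside the 0.5 m ball, whereas simply clipping every offset would pile probability mass onto the boundary.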

Effective preprocessing before data are fed into the model is crucial for reducing the impact of adversarial perturbations. As a simple and efficient method, low-pass filtering removes part of the adversarial perturbation by suppressing high-frequency noise. In point cloud processing, low-pass filtering can be applied to the coordinates and associated attributes of each point. For the 3D coordinates (x, y, z), a moving average filter computes the average of the coordinates in a neighborhood centered at each point and uses it as the filtered result. In this way, outliers and noise in the data can be effectively reduced, bringing the model input as close as possible to a clean, realistic state.
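A brute-force NumPy sketch of the moving average filter follows. The neighborhood definition is an assumption: the text only refers to the neighborhood centered at each point, so this version averages each point with its k nearest neighbors (including itself), with a hypothetical default k = 8. The O(N²) distance matrix is acceptable for 1024-point samples; for larger clouds, a spatial index such as `scipy.spatial.cKDTree` would be the usual choice.

```python
import numpy as np


def moving_average_filter(points, k=8):
    """Replace each point by the mean of its k nearest neighbors (itself included)."""
    points = np.asarray(points, dtype=float)
    k = min(k, len(points))
    diff = points[:, None, :] - points[None, :, :]   # (N, N, 3) pairwise offsets
    dist2 = np.einsum("ijk,ijk->ij", diff, diff)      # (N, N) squared distances
    # The point itself has distance 0, so it is among its own k nearest neighbors
    # (up to ties with exact duplicates, which have the same coordinates anyway).
    neighbors = np.argsort(dist2, axis=1)[:, :k]
    return points[neighbors].mean(axis=1)            # (N, k, 3) -> (N, 3)
```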

In addition, during training iterations, a moderate amount of noise can be added to the training data so that the model continuously encounters and learns from noisy data, which strengthens its resistance to noise. In this paper, Gaussian noise is used, with the variance controlled between 0.005 and 0.01. In each training iteration, a noise vector drawn from a Gaussian distribution with the set variance is generated and added to the 3D coordinates of the point cloud. The noise intensity, however, must be chosen carefully. Excessive noise may severely damage the basic morphological features of the trees, making it impossible to maintain their general outline and producing unreasonable topological relationships between adjacent points, which prevents the model from learning effective features. Conversely, if the noise intensity is too small, it may fail to achieve the expected augmentation effect or improve the model's robustness.
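The per-iteration noise injection can be sketched as follows. How the variance is chosen within [0.005, 0.01] is not specified, so drawing one variance uniformly per iteration is an assumption, as is the function name; the key detail is converting the variance to a standard deviation before sampling.

```python
import numpy as np


def inject_training_noise(points, var_range=(0.005, 0.01), rng=None):
    """Add N(0, var) noise to xyz coordinates, with var drawn from var_range on each call."""
    rng = np.random.default_rng() if rng is None else rng
    points = np.asarray(points, dtype=float)
    var = rng.uniform(*var_range)                               # assumed: one variance per iteration
    noise = rng.normal(0.0, np.sqrt(var), size=points.shape)    # normal() expects a std dev
    return points + noise, var
```

Calling this once per training iteration means the model is unlikely to see exactly the same noisy copy of a sample twice.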

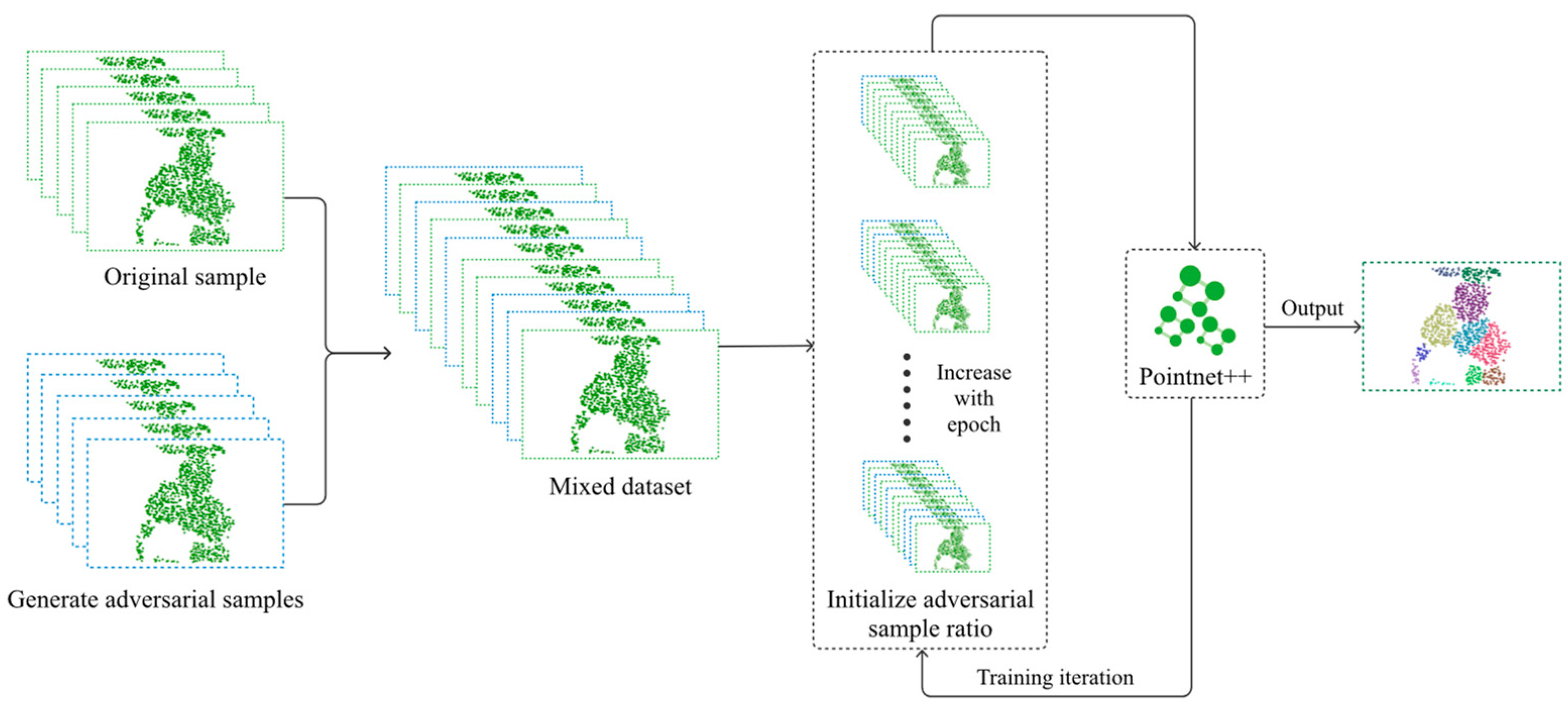

3.2.2. Adversarial Training

Adversarial training is an effective defense specifically targeted at adversarial samples and plays an important role in improving the robustness of individual tree segmentation models for remote sensing point clouds. Its core principle is to feed both original samples and adversarial samples generated by different methods into the training process, so that the model gradually adapts to the interference caused by adversarial samples during continual learning and its defense against them is strengthened. In this paper, we generate adversarial samples using the four attack methods described above to carry out adversarial training and improve the robustness of the model.

Figure 2 shows the flow of adversarial training. Adversarial samples are first generated according to the four attack methods introduced above, and then the generated adversarial samples are mixed with the original samples to form an augmented training dataset. To ensure training effectiveness, we implement a dynamic adjustment strategy for the adversarial sample ratio. In the early stages of training, adversarial samples are not included, allowing the model to focus on learning from the original data and fully understand the feature distribution of normal point cloud data. Since the original samples accurately reflect the features of tree point clouds in real-world scenarios, teaching the model these correct and stable features first helps prevent confusion during feature learning caused by premature exposure to adversarial samples. As training progresses and the model’s performance improves, the proportion of adversarial samples in the training set gradually increases. This allows the model to continuously refine its parameters to handle different types and degrees of adversarial attacks, improving its ability to adapt to adversarial conditions. With this dynamic approach, the model maintains strong performance on original samples while maximizing its defense against adversarial attacks.
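The dynamic ratio strategy is described only qualitatively, so the following is a hypothetical scheduler rather than the authors' exact rule: it keeps the adversarial ratio at zero during a warm-up phase of clean-only training, then raises it by a fixed step whenever the monitored training loss reaches a new minimum and lowers it when the loss fails to improve, capped at a maximum. All parameter names and defaults (`warmup_epochs`, `step`, `max_ratio`) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class AdversarialRatioScheduler:
    """Hypothetical loss-driven schedule for the share of adversarial samples per batch."""
    warmup_epochs: int = 5     # clean-only epochs at the start (assumed value)
    step: float = 0.1          # ratio change per epoch (assumed value)
    max_ratio: float = 0.5     # upper bound on the adversarial share (assumed value)
    ratio: float = 0.0
    best_loss: float = float("inf")

    def update(self, epoch: int, loss: float) -> float:
        if epoch < self.warmup_epochs:
            self.ratio = 0.0                                           # learn clean data first
        elif loss < self.best_loss:
            self.ratio = min(self.max_ratio, self.ratio + self.step)  # model copes: harden
        else:
            self.ratio = max(0.0, self.ratio - self.step)              # loss stalled: ease off
        self.best_loss = min(self.best_loss, loss)
        return self.ratio

    def split(self, batch_size: int) -> tuple[int, int]:
        """Return (n_clean, n_adversarial) for a batch of the given size."""
        n_adv = int(round(self.ratio * batch_size))
        return batch_size - n_adv, n_adv
```

Backing off when the loss stalls is one simple way to avoid the over-injection of noise that the contributions list warns about; a smoothed loss (e.g., an exponential moving average) could replace the raw per-epoch value.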

4. Experiments

4.1. Remote Sensing Point Cloud Dataset

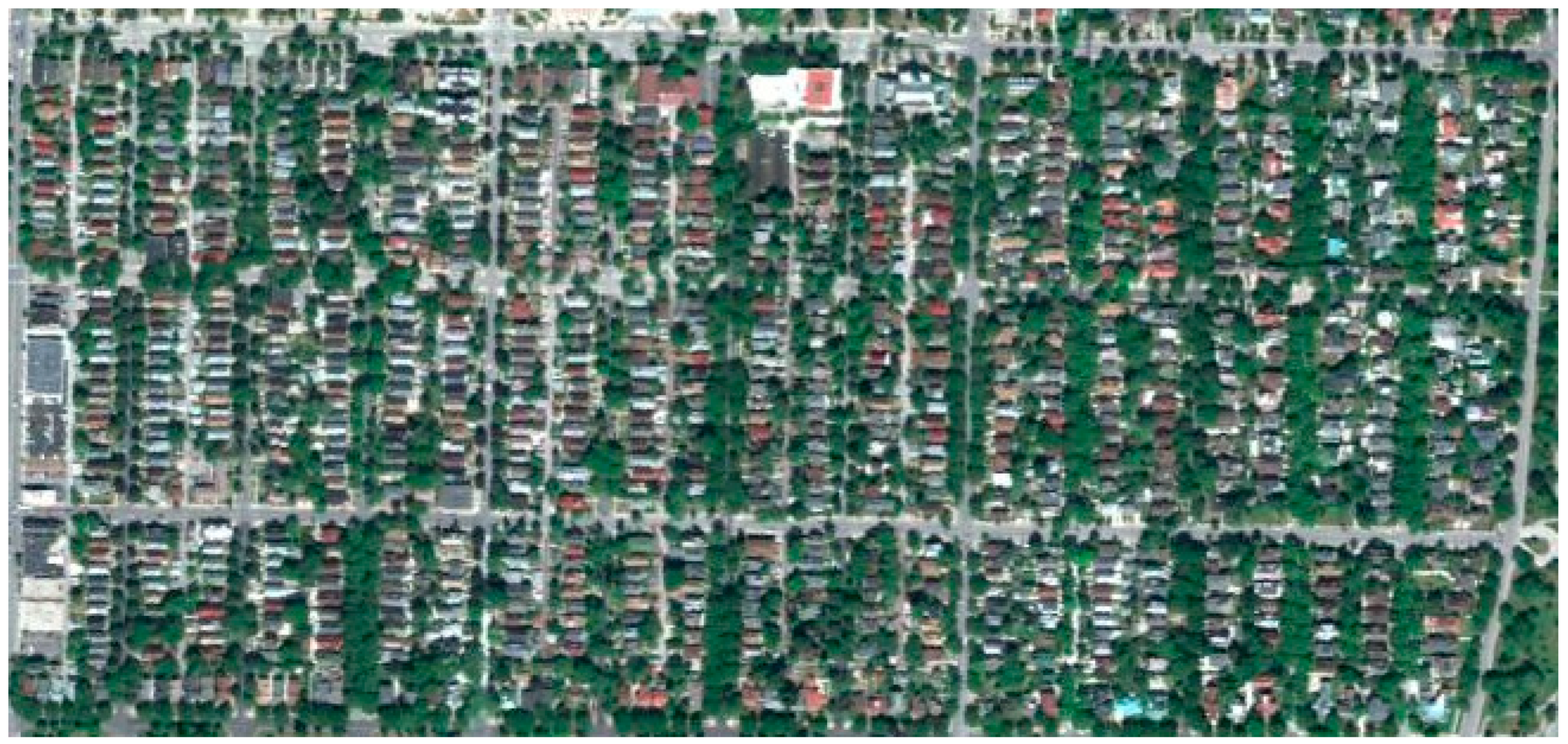

The dataset used in the experiments is located in the eastern part of Milwaukee, Wisconsin, USA, between the Milwaukee River and Lake Michigan (43.07° N, 87.87° W), and covers an area of about 2 km², as shown in Figure 3. To reduce interference from non-vegetation objects in individual tree segmentation, we use the normalized difference vegetation index (NDVI), calculated by dividing the difference between the reflectances of the NIR band (863.56 nm) and the red band (666.71 nm) by their sum. According to reference [21], NDVI values for temperate forests typically range from 0.4 to 0.9. Therefore, we adopted 0.4 as the threshold to distinguish trees (NDVI ≥ 0.4) from other features (NDVI < 0.4). We generated NDVI images from hyperspectral imagery and spatially overlaid them with the object height model (OHM). Pixels with NDVI values below 0.4 were classified as non-vegetation and set to null in the OHM. By applying this NDVI criterion, we removed non-vegetation features, including roads and buildings, and ultimately generated a canopy height model (CHM) for mapping tree heights.
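The NDVI masking step can be sketched in NumPy as follows, assuming the NIR and red reflectance rasters and the OHM are already co-registered arrays of the same shape. Null pixels are represented as NaN here, which is an assumption about how "set to null" is encoded; the function names are hypothetical.

```python
import numpy as np


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); pixels with a zero denominator become NaN."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


def canopy_height_model(ohm, ndvi_img, threshold=0.4):
    """Keep OHM heights where NDVI >= threshold (trees); set other pixels to NaN (null)."""
    ohm = np.asarray(ohm, dtype=float)
    ndvi_img = np.asarray(ndvi_img, dtype=float)
    valid = ~np.isnan(ndvi_img)
    keep = np.zeros(ndvi_img.shape, dtype=bool)
    keep[valid] = ndvi_img[valid] >= threshold   # undefined NDVI pixels stay False
    return np.where(keep, ohm, np.nan)
```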

Given the large volume of point cloud data across scenes, the remote sensing point cloud dataset was first downsampled to ensure efficient processing and training of the point cloud segmentation network. Following [22], the dataset was divided into multiple 100 m × 100 m regions based on spatial location, and each region was sampled to 1024 points. This approach reduces the computational load while preserving the geometric and semantic information of each region, providing sufficient point cloud features for subsequent network training.
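The tiling and sampling step might look like the sketch below. The sampling scheme is not stated in the text, so uniform random sampling is assumed (without replacement when a tile has at least 1024 points, with replacement otherwise); farthest point sampling would be a common alternative. Coordinates are assumed to be projected and in meters, and the function name is hypothetical.

```python
import numpy as np


def tile_and_sample(points, tile_size=100.0, n_samples=1024, rng=None):
    """Split an (N, 3+) cloud into tile_size x tile_size XY tiles and sample n_samples per tile."""
    rng = np.random.default_rng() if rng is None else rng
    points = np.asarray(points, dtype=float)
    tile_ids = np.floor(points[:, :2] / tile_size).astype(np.int64)  # (N, 2) tile indices
    tiles = {}
    for tile in np.unique(tile_ids, axis=0):
        idx = np.flatnonzero(np.all(tile_ids == tile, axis=1))
        # Sample with replacement only when the tile is too sparse to fill n_samples.
        chosen = rng.choice(idx, size=n_samples, replace=len(idx) < n_samples)
        tiles[(int(tile[0]), int(tile[1]))] = points[chosen]
    return tiles
```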

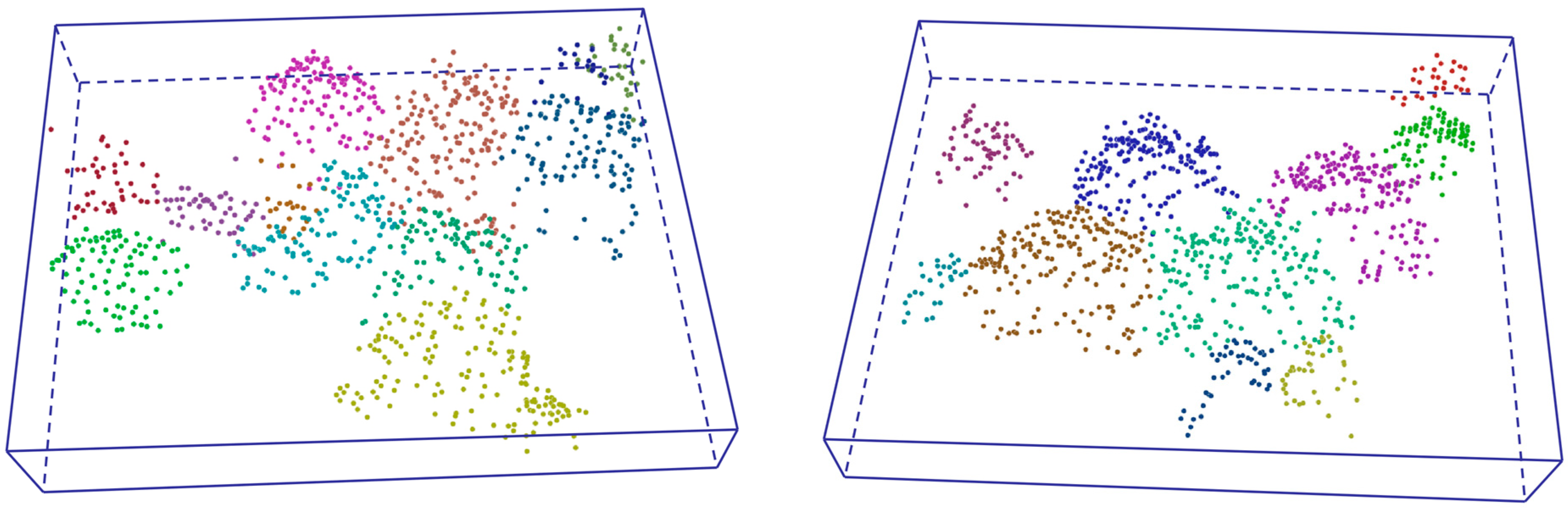

Figure 4 shows four sampled tree-scene examples from the remote sensing data.

4.2. Method Performance of Attack and Defense

This study adopts the individual tree segmentation model based on PointNet++ [6] and DBSCAN [23] as the benchmark model, following the methodologies of [22,24], to evaluate the proposed attack and defense approaches. To better show the individual tree segmentation results, the point cloud scenes are visualized from a top-down perspective.

This study employs mean intersection over union (mIoU) as the evaluation metric for segmentation performance. It measures the degree of overlap between each tree segmented by the model and the corresponding real tree, ranging from 0 to 1, with higher values indicating better segmentation. The mIoU is calculated as in Equation (6):

$$\mathrm{mIoU} = \frac{1}{N}\sum_{i=1}^{N}\frac{|P_i \cap G_i|}{|P_i \cup G_i|} \quad (6)$$

Here, $N$ denotes the total number of trees in the sample, $P_i$ denotes the point set of the $i$-th tree instance predicted by the model, and $G_i$ denotes the point set of the corresponding ground-truth tree. $|P_i \cap G_i|$ is the number of points in the intersection of these two sets, and $|P_i \cup G_i|$ is the number of points in their union.
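Equation (6) can be checked with a small Python sketch. It assumes the predicted instances have already been matched one-to-one to ground-truth trees (the matching procedure is not described here) and represents each tree as a set of point indices; the function name is hypothetical.

```python
def mean_iou(matched_pairs):
    """Eq. (6): average |P_i & G_i| / |P_i | G_i| over N matched (prediction, ground truth) pairs.

    matched_pairs: iterable of (predicted_point_ids, ground_truth_point_ids).
    Each ground-truth tree is assumed to contain at least one point.
    """
    ious = []
    for pred, gt in matched_pairs:
        pred, gt = set(pred), set(gt)
        ious.append(len(pred & gt) / len(pred | gt))
    if not ious:
        raise ValueError("need at least one matched tree")
    return sum(ious) / len(ious)
```

For example, a prediction {1, 2, 3} against ground truth {2, 3, 4} has an IoU of 2/4 = 0.5, and averaging it with a perfectly segmented tree gives an mIoU of 0.75.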

4.2.1. Point-Perturbation-Based Adversarial Attack Analysis

In constructing adversarial samples with FGSM [12], this paper uses the L2 distance as the perturbation constraint to keep the difference between the original sample and the generated adversarial sample within a controlled range. Because the perturbation intensity directly affects the attack effect, it was tuned experimentally by varying the perturbation weight to obtain a stronger attack, and it was finally set to 0.16.

Table 1 shows the results of the adversarial point perturbation attack on the test datasets of eight different scenes and compares the attack effects before and after adversarial training. Without adversarial training, the individual tree segmentation model has no defense against adversarial samples, and its mIoU decreases significantly, which clearly exposes the model's vulnerability to adversarial attacks. With adversarial training, where adversarial samples were included in the training process, the model learned the characteristics of adversarial samples, which strengthened its defense against them and noticeably improved mIoU. These results indicate that adversarial training effectively strengthens the model's robustness and yields more stable performance under adversarial attacks. Moreover, we evaluated the impact of adversarial training on model performance under point-perturbation-based attacks across four remote sensing point cloud scenes using paired t-tests. The results revealed a statistically significant improvement in mIoU (%) after adversarial training (t = 14.62, p = 1.67 × 10⁻⁶). With a 95% confidence interval of 15.22% to 19.93% for the mean improvement, the findings show that adversarial training effectively mitigates the impact of point-level perturbations and maintains segmentation accuracy under coordinate-level adversarial disturbances. This evidence confirms that the proposed method enhances the model's robustness against point-perturbation-based attacks.
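For reference, the paired t statistic and the 95% confidence interval of the mean improvement can be computed as in the following NumPy sketch (function name hypothetical). It takes the two-sided critical value as an argument; `scipy.stats.t.ppf(0.975, n - 1)` provides it, and `scipy.stats.ttest_rel` returns the t statistic and p-value directly if SciPy is available.

```python
import math

import numpy as np


def paired_t_test(before, after, t_crit):
    """Paired t statistic and confidence interval for the mean of (after - before).

    t_crit: two-sided critical value of Student's t with n - 1 degrees of freedom
            (e.g., about 2.365 for n = 8 at the 95% level).
    """
    diff = np.asarray(after, dtype=float) - np.asarray(before, dtype=float)
    n = diff.size
    mean = diff.mean()
    se = diff.std(ddof=1) / math.sqrt(n)   # standard error of the mean difference
    t_stat = mean / se
    half_width = t_crit * se
    return t_stat, (mean - half_width, mean + half_width)
```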

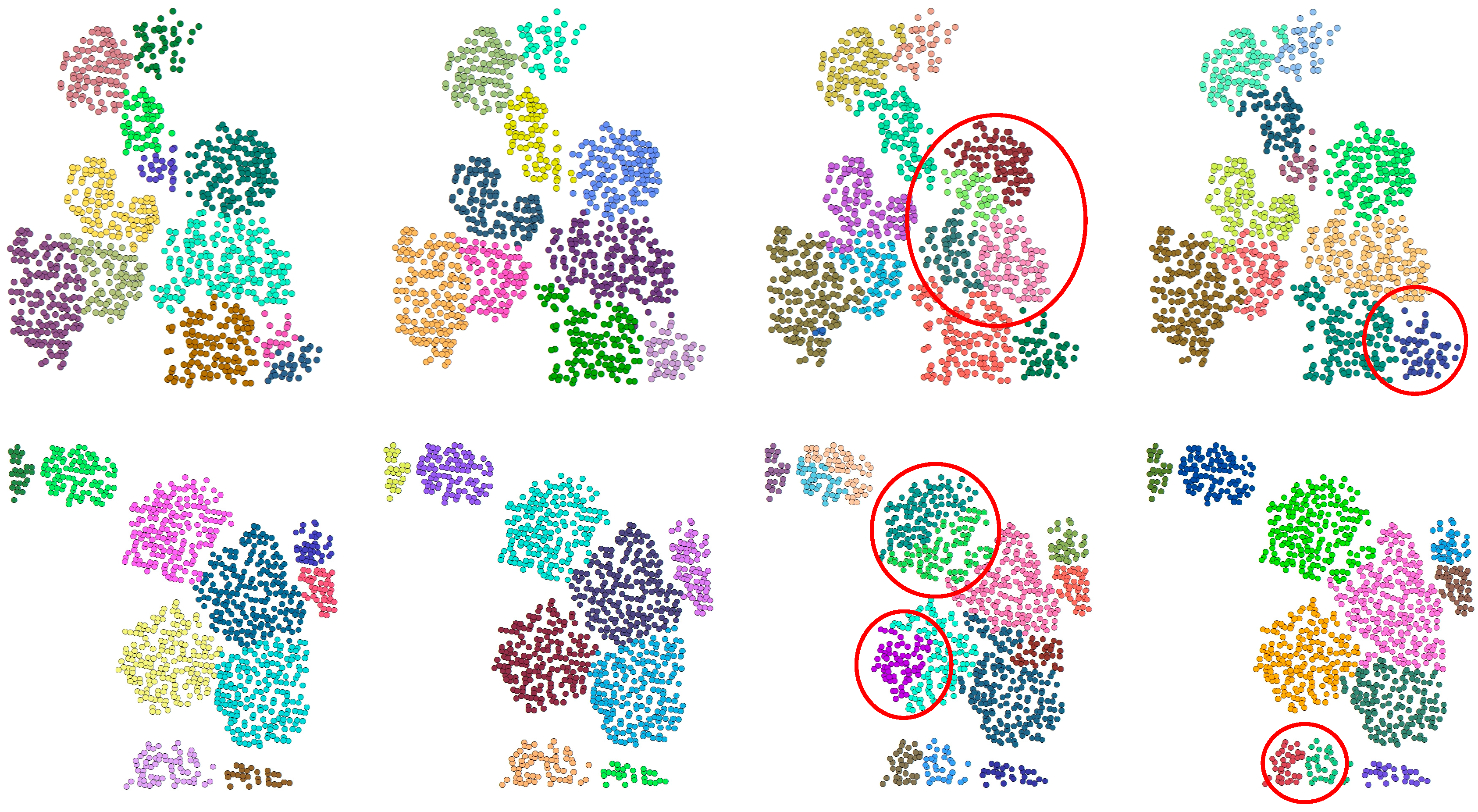

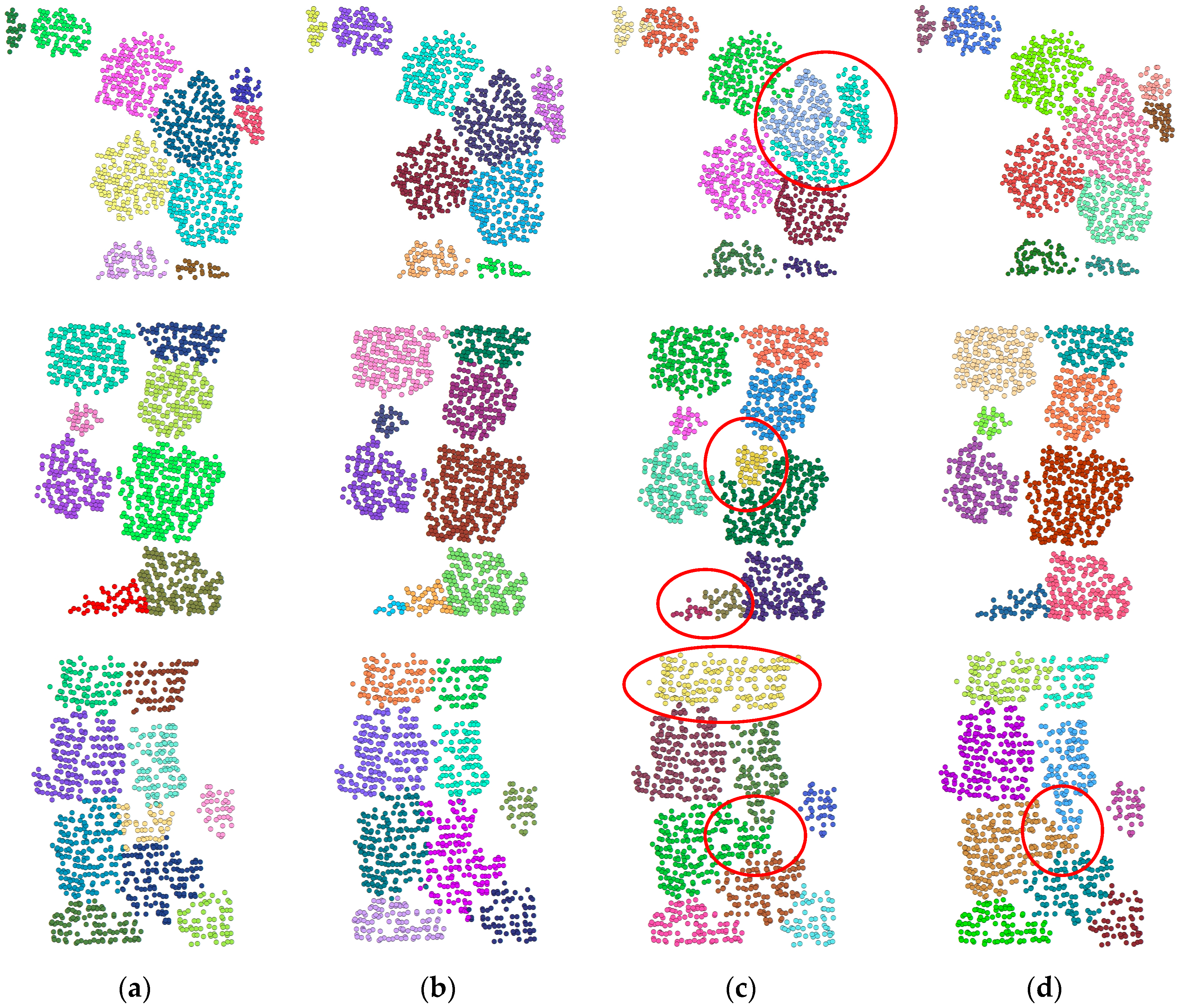

Figure 5 compares the segmentation results for adversarial samples generated by FGSM in the individual tree segmentation task. Although the segmentation results are generally consistent with the ground truth, errors remain, especially blurred contours and misclassified points. Notably, the adversarial examples generated by FGSM significantly reduce the performance of the undefended segmentation model while remaining highly similar to the original point cloud without obvious outliers, resulting in a large drop in mIoU and obvious segmentation errors. After adversarial training, the model's performance improves significantly, with clearer tree boundaries and fewer misclassifications, confirming its enhanced robustness against FGSM attacks. However, differences remain between the segmentation results after training and the original output: in scene 1, the model misclassifies two independent trees as a single object both before and after adversarial training.

4.2.2. Gaussian Noise Attack Analysis

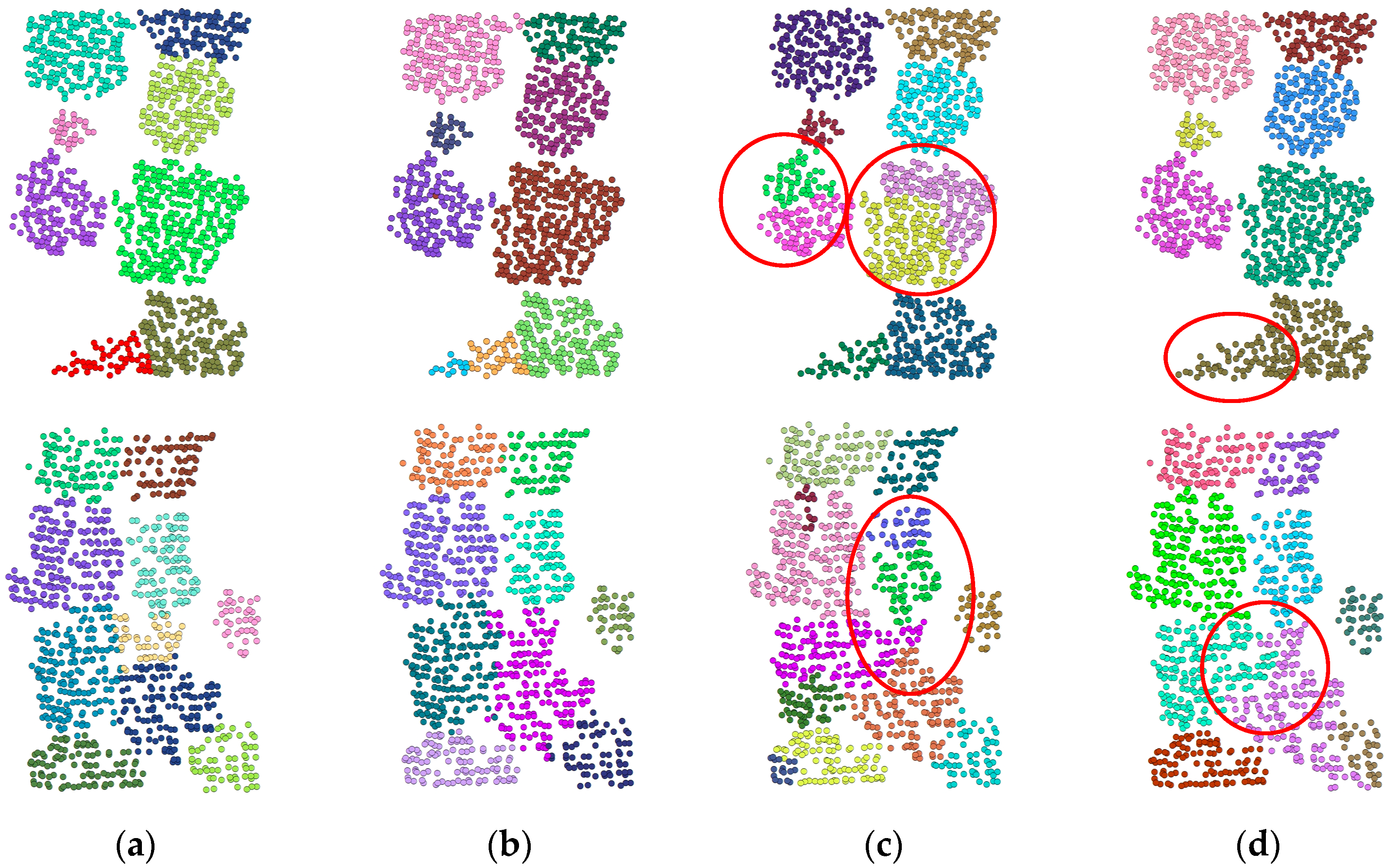

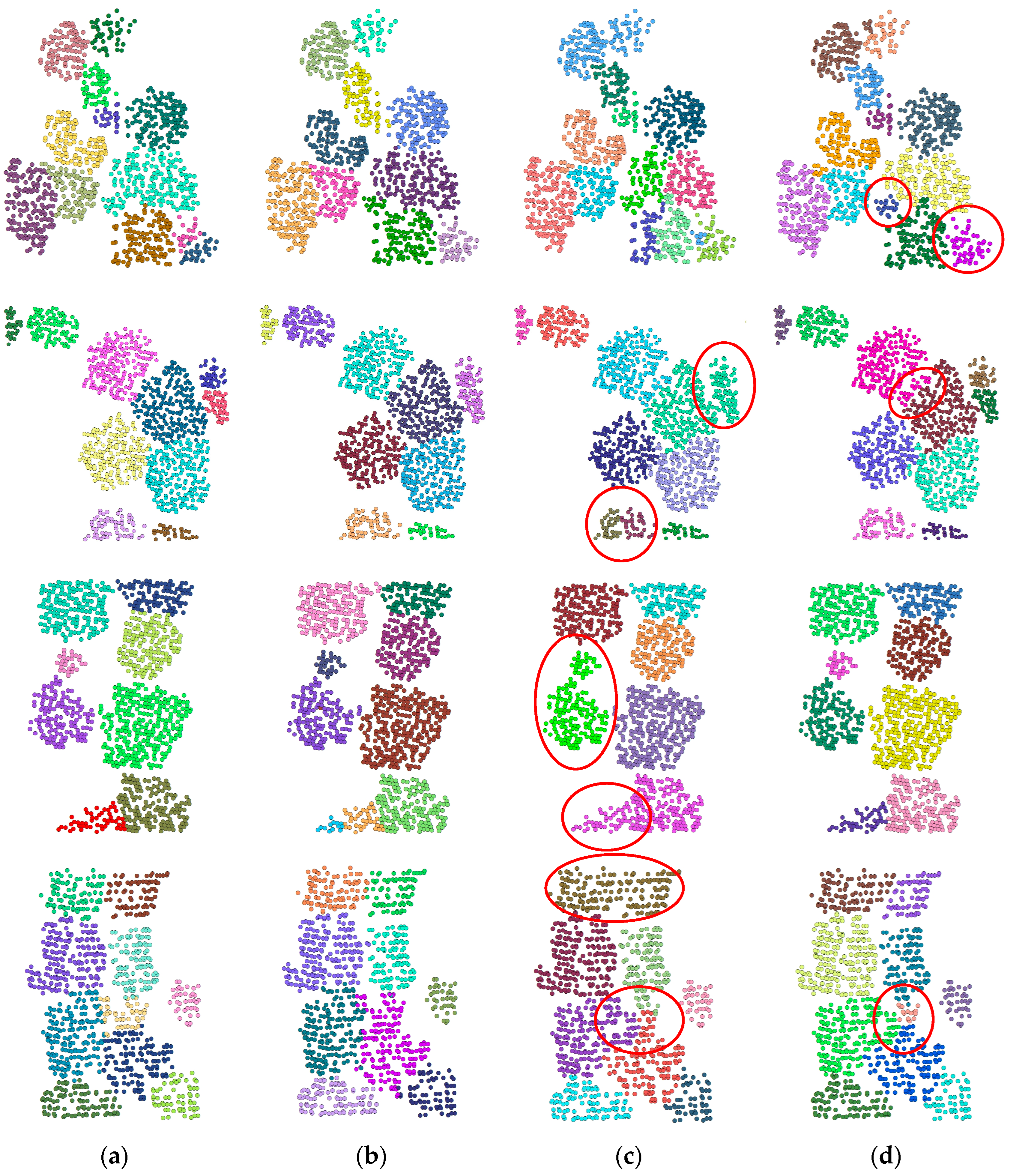

Figure 6 presents a comparison of adversarial samples generated using Gaussian noise in the individual tree segmentation task. Visually, the segmentation results of the original samples are largely consistent with the ground truth annotations, though issues such as jagged crown edges and incorrect classifications of local point clouds remain. While these adversarial examples, affected by Gaussian noise, closely resemble the original point cloud and maintain its shape, they cause significant errors in the undefended segmentation model. This leads to a noticeable drop in mIoU and a sharp rise in misclassifications. For instance, in scene 1, the model mistakenly combines multiple trees with intertwined branches and unclear boundaries into a single tree.

The network performance data in Table 2 highlight the effectiveness of our adversarial training in mitigating Gaussian noise attacks across the eight remote sensing point cloud scenes. Under attack, mIoU drops notably in every scene, with Scene 4 showing the largest decline, from 90.66% to 66.31%, a relative decrease of 26.9%. After adversarial training, mIoU rises by 17.3% to 28.5% relative to the attacked values, a strong improvement. Notably, in Scene 3, the post-defense performance (92.15%) even surpasses the original sample performance (91.02%), suggesting that adversarial training might have enhanced the model's ability to extract features. However, the other seven scenes still fall short of their clean-sample mIoU by 0.08 to 5.72 percentage points, indicating that while adversarial training offers substantial protection, complete immunity to Gaussian noise attacks is difficult to achieve. Nevertheless, all test scenes show positive results, with the smallest improvement (17.3%) in Scene 1, which supports the robustness of the proposed method against such attacks. These results not only expose the vulnerability of segmentation models to random noise perturbations but also underscore the need for proactive defense strategies in critical forestry tasks. A paired t-test on point cloud segmentation under Gaussian noise contamination confirms the effectiveness of adversarial training in enhancing model robustness: the improvement in mIoU is statistically significant (t = 17.03, p = 5.89 × 10⁻⁷), with a tightly bounded 95% confidence interval of [14.52%, 18.30%], indicating both the reliability and magnitude of the performance gain.
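The paired t-test can be recomputed from the "Before adversarial training" and "After adversarial training" rows of Table 2 (n = 8 scenes). This sketch uses only the Python standard library, so the two-sided 95% critical value of Student's t with 7 degrees of freedom (about 2.365) is hardcoded rather than computed; the helper name `paired_t` is hypothetical. With this t-based multiplier the interval comes out as roughly [14.13, 18.69], whereas substituting the normal-approximation multiplier 1.96 gives roughly [14.52, 18.30].

```python
import math
from statistics import mean, stdev

# Table 2, mIoU (%) for Scenes 1-8 under Gaussian noise.
before = [70.32, 70.67, 72.19, 66.31, 71.85, 69.23, 73.64, 68.92]
after = [82.49, 85.91, 92.15, 84.94, 86.72, 84.35, 89.27, 88.56]


def paired_t(before, after, t_crit):
    """Paired t statistic and CI for the mean of (after - before)."""
    diffs = [a - b for a, b in zip(after, before)]
    d_bar = mean(diffs)
    se = stdev(diffs) / math.sqrt(len(diffs))  # sample std (n - 1) / sqrt(n)
    t_stat = d_bar / se
    return d_bar, t_stat, (d_bar - t_crit * se, d_bar + t_crit * se)


T_CRIT_DF7 = 2.365  # two-sided 95% quantile of Student's t, 7 degrees of freedom
d_bar, t_stat, ci = paired_t(before, after, T_CRIT_DF7)
print(f"mean gain = {d_bar:.2f} pts, t = {t_stat:.2f}, "
      f"95% CI = [{ci[0]:.2f}, {ci[1]:.2f}]")
```

If SciPy is available, `scipy.stats.ttest_rel(after, before)` returns the same t statistic together with its p-value.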

4.2.3. Point-Addition Attack Analysis

Extending the saliency map computation method proposed by Zheng et al. [20], this study first calculated the saliency score of each point within local point clouds to generate point-wise saliency maps. High-scoring points are then identified as keypoints; the newly generated adversarial points are initialized at the positions of these keypoints, and the perturbation is achieved by displacing them. The effect of a point-addition attack is closely related to the number of added adversarial points: the more points are added, the more pronounced the disturbance to the original local point cloud. To maintain experimental validity, the number of added points must therefore be constrained within an appropriate range. In our experiments, each point cloud sample contained 1024 points, and the number of added adversarial points was set to 50.
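The two steps above (saliency scoring, then adding displaced copies of keypoints) can be sketched as follows. The score follows our reading of Zheng et al. [20], s_i = −r_i^(1+α) · ∂L/∂r_i, where r_i is the distance from point i to the cloud's median center and ∂L/∂r_i is the loss gradient projected onto the outward radial direction; a high score means shifting the point toward the center would raise the loss. The function names, α = 1, the hand-written gradients, and in particular the displacement rule (one signed-gradient step of size ε) are assumptions for illustration; the actual displacement operation may be optimized differently. The toy cloud has 5 points and k = 2, whereas our experiments used 1024 points and 50 added points.

```python
import math
from statistics import median
from typing import List, Tuple

Point = Tuple[float, float, float]


def saliency_scores(points: List[Point], grads: List[Point],
                    alpha: float = 1.0) -> List[float]:
    """Point-wise saliency s_i = -r_i^(1+alpha) * dL/dr_i (after Zheng et al.)."""
    center = tuple(median(p[k] for p in points) for k in range(3))
    scores = []
    for p, g in zip(points, grads):
        offset = [pc - cc for pc, cc in zip(p, center)]
        r = math.sqrt(sum(o * o for o in offset))
        if r == 0.0:
            scores.append(0.0)  # a point at the center has no radial direction
            continue
        # Chain rule: dL/dr = grad . (x - c) / r
        dl_dr = sum(gc * oc for gc, oc in zip(g, offset)) / r
        scores.append(-(r ** (1.0 + alpha)) * dl_dr)
    return scores


def add_adversarial_points(points, grads, k, eps, alpha=1.0):
    """Append k points initialized at the k most salient points, then displaced.

    ASSUMPTION: displacement = one signed-gradient step of size eps per axis.
    """
    scores = saliency_scores(points, grads, alpha)
    keypoints = sorted(range(len(points)), key=lambda i: scores[i], reverse=True)[:k]
    new_points = [
        tuple(pc + eps * float((gc > 0) - (gc < 0))
              for pc, gc in zip(points[i], grads[i]))
        for i in keypoints
    ]
    return list(points) + new_points, keypoints


# Toy cloud with hand-written (hypothetical) loss gradients.
pts = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 2.0, 0.0),
       (0.0, 0.0, 0.0), (0.0, -2.0, 0.0)]
grd = [(1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, -1.0, 0.0),
       (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]
augmented, keys = add_adversarial_points(pts, grd, k=2, eps=0.05)
print(keys, augmented[-2:])
```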

Table 3 presents the mIoU performance comparison under point-addition attacks before and after adversarial training. Point-addition attacks significantly degrade model performance, causing an average relative mIoU reduction of 22.0% across the eight scenes. Scene 4 shows the largest decrease (26.3%), while Scene 2 is the most robust, with a 16.8% reduction. After adversarial training, model performance improves substantially, recovering on average to 97.0% of its original level. Scene 2 and Scene 3 achieve near-complete recovery at 101.5% and 99.8%, respectively, while Scene 1 and Scene 4 show remaining gaps of 7.8% and 4.6%. These results collectively validate adversarial training as an effective but incomplete solution, reducing attack success rates by 58–92% across different scenarios while highlighting the need for scene-specific defense optimization. A paired t-test comparing segmentation mIoU before and after adversarial training yielded t = 15.52 and p = 1.11 × 10⁻⁶, with a 95% confidence interval of [14.87%, 19.16%] for the mIoU difference. The null hypothesis was therefore rejected, indicating a statistically significant improvement in segmentation mIoU after adversarial training. This improvement demonstrates the model's enhanced capability to correctly segment adversarial samples generated through point-addition attacks, confirming substantially improved model robustness.
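The relative changes for point-addition attacks can be recomputed directly from the three rows of Table 3. The sketch below assumes the definitions drop = (original − attacked) / original and recovery = defended / original, both in percent; these definitions are our assumption about how such figures are derived.

```python
from statistics import mean

# Table 3, mIoU (%) for Scenes 1-8 under point-addition attacks.
original = [86.94, 88.80, 91.02, 90.66, 90.11, 88.23, 89.35, 91.48]
attacked = [67.37, 73.89, 71.64, 66.82, 68.91, 67.53, 70.28, 72.45]
defended = [80.18, 90.16, 90.88, 86.47, 83.27, 89.62, 87.04, 87.39]

# Relative drop caused by the attack, and post-defense level as % of the clean mIoU.
drop = [100 * (o - a) / o for o, a in zip(original, attacked)]
recovery = [100 * d / o for o, d in zip(original, defended)]

for i, (dr, rc) in enumerate(zip(drop, recovery), start=1):
    print(f"Scene {i}: drop {dr:.1f}%, recovered to {rc:.1f}% of original")
print(f"mean drop {mean(drop):.1f}%, mean recovery {mean(recovery):.1f}%")
```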

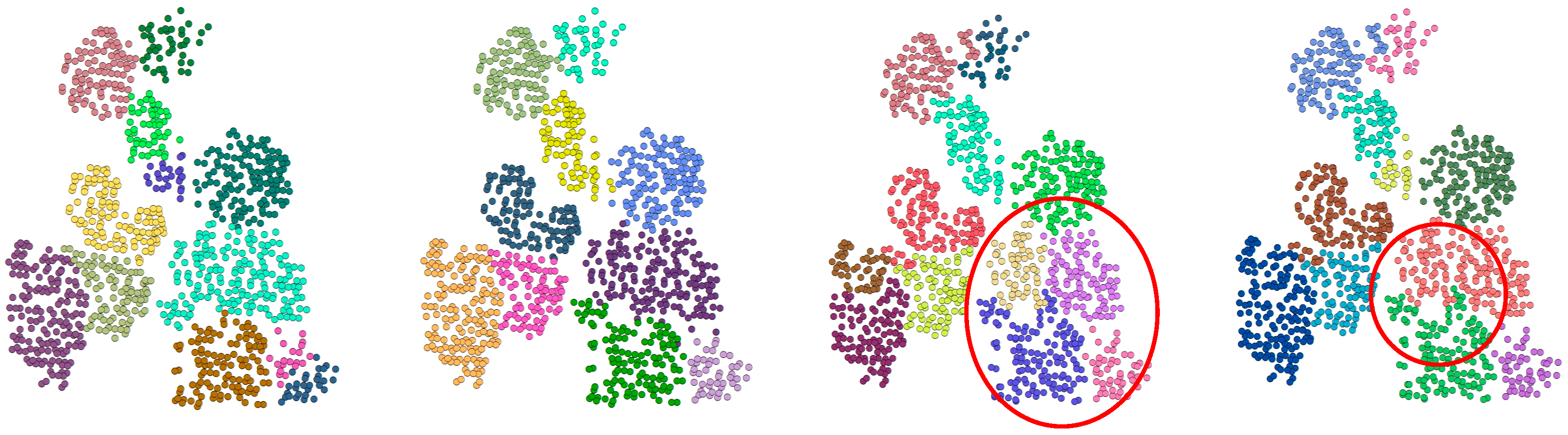

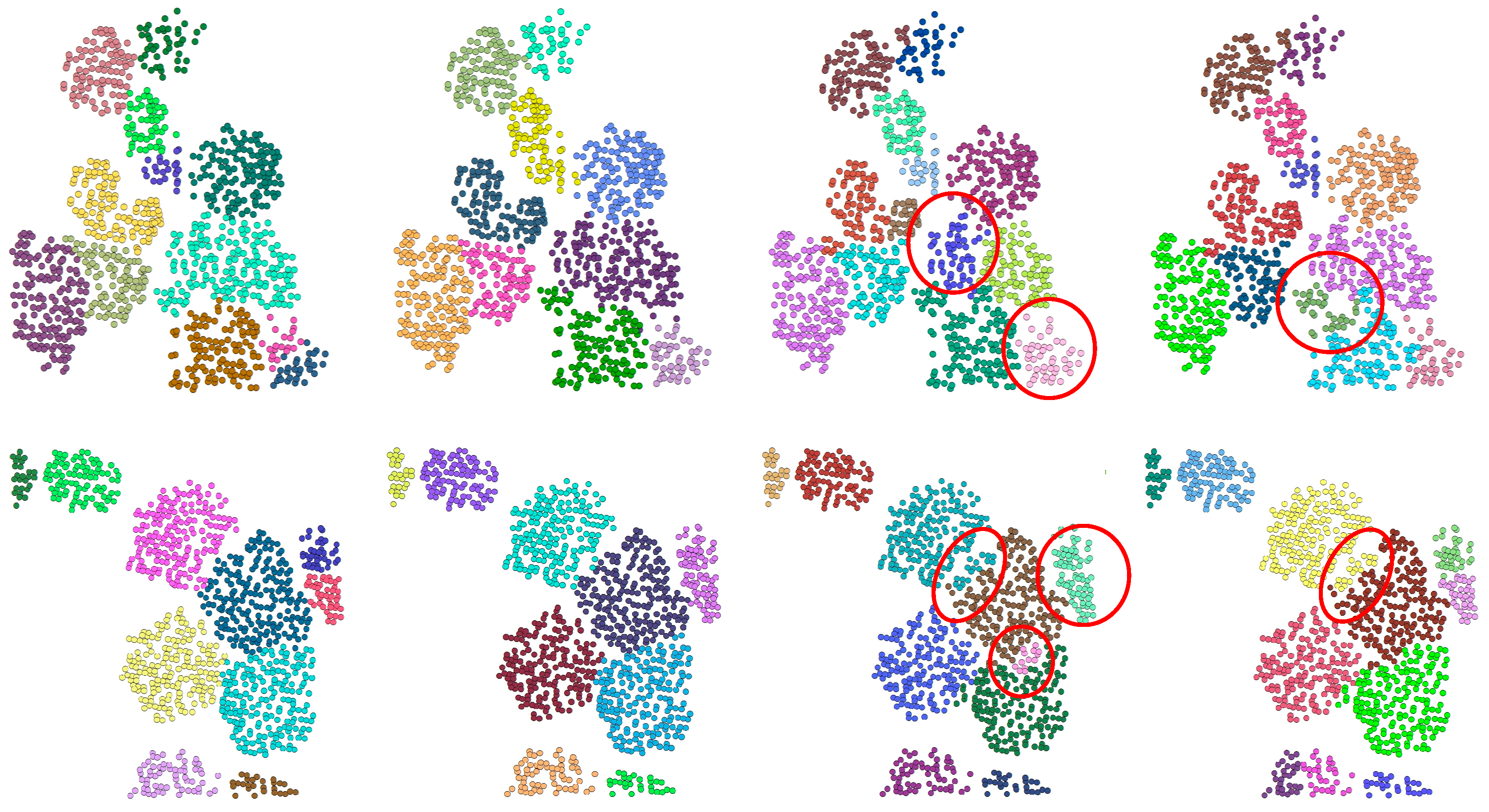

Figure 7 shows the effect of point-addition attacks on individual tree segmentation, comparing the results of the original model and the adversarially trained model. The visualizations show that the added points alter the structure of the underlying point cloud scenes, leading to more misclassifications in the boundary regions and even incomplete segmentation results. In addition, the added points may reduce the model's ability to distinguish adjacent trees, causing different trees to be segmented as the same tree. For example, in Scene 1 of Figure 7, two trees were segmented as one tree, and in Scene 4 of Figure 7, the boundary between two trees was misidentified.

4.2.4. Point-Deletion Attack Analysis

As with point-addition attacks, the efficacy of point-deletion attacks scales with the number of removed points: the more points are eliminated, the stronger the perturbation. Following the same saliency-based methodology, we first compute saliency scores for all points within the target regions and then iteratively remove the 50 most salient points, balancing attack potency against experimental control.
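Iterative deletion can be sketched as a loop that rescores the reduced cloud before each removal step. In this minimal sketch the saliency function is passed in as a callable; the toy scorer (distance from the origin) is a hypothetical stand-in for the gradient-based saliency map, and the granularity `per_step` is an assumption, since the number of points removed per iteration is not fixed by the description above.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]


def delete_salient_points(
    points: List[Point],
    saliency_fn: Callable[[List[Point]], List[float]],
    n_remove: int,
    per_step: int = 1,
) -> Tuple[List[Point], List[Point]]:
    """Iteratively remove the most salient points, rescoring after each step."""
    cloud = list(points)
    removed: List[Point] = []
    while len(removed) < n_remove and cloud:
        scores = saliency_fn(cloud)  # scores for the current, already reduced cloud
        step = min(per_step, n_remove - len(removed))
        ranked = sorted(range(len(cloud)), key=lambda i: scores[i], reverse=True)
        drop = set(ranked[:step])
        removed.extend(cloud[i] for i in ranked[:step])
        cloud = [p for i, p in enumerate(cloud) if i not in drop]
    return cloud, removed


def toy_saliency(cloud: List[Point]) -> List[float]:
    """HYPOTHETICAL stand-in for a gradient-based saliency map."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in cloud]


pts = [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (1.0, 0.0, 0.0),
       (0.0, 5.0, 0.0), (0.0, 0.0, 2.0)]
kept, gone = delete_salient_points(pts, toy_saliency, n_remove=2)
print(gone, kept)
```

Rescoring after every step matters when saliency depends on the whole cloud (for example, on its median center), because removing one keypoint can change the scores of the rest.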

Table 4 compares the mIoU performance under point-deletion attacks before and after adversarial training. Point-deletion attacks significantly degrade segmentation performance: without adversarial training, mIoU decreases by 18.7% on average relative to the clean samples, with Scene 4 showing the largest drop (27.0%) and Scene 7 the smallest (14.0%). Adversarial training substantially improves model robustness; after training, mIoU recovers on average to 96.1% of its original value. Notably, Scene 3's mIoU (93.22%) exceeds its original value (91.02%) by 2.2 percentage points. However, improvements vary across scenes, with Scene 1 and Scene 4 still falling 5.3% and 6.6% short of their original values, respectively, indicating room for improvement in certain scenarios. A paired t-test was performed to evaluate the improvement in mIoU after applying adversarial training under point-deletion attacks across the eight remote sensing scenes. The test yielded t = 10.90 and p = 1.21 × 10⁻⁵, indicating a statistically significant performance gain, and the 95% confidence interval for the mean improvement ranges from 10.82% to 15.56%, confirming both the reliability and magnitude of the enhancement. These findings support the effectiveness of the proposed adversarial training strategy in improving the model's robustness against point-deletion perturbations in 3D point cloud segmentation tasks.

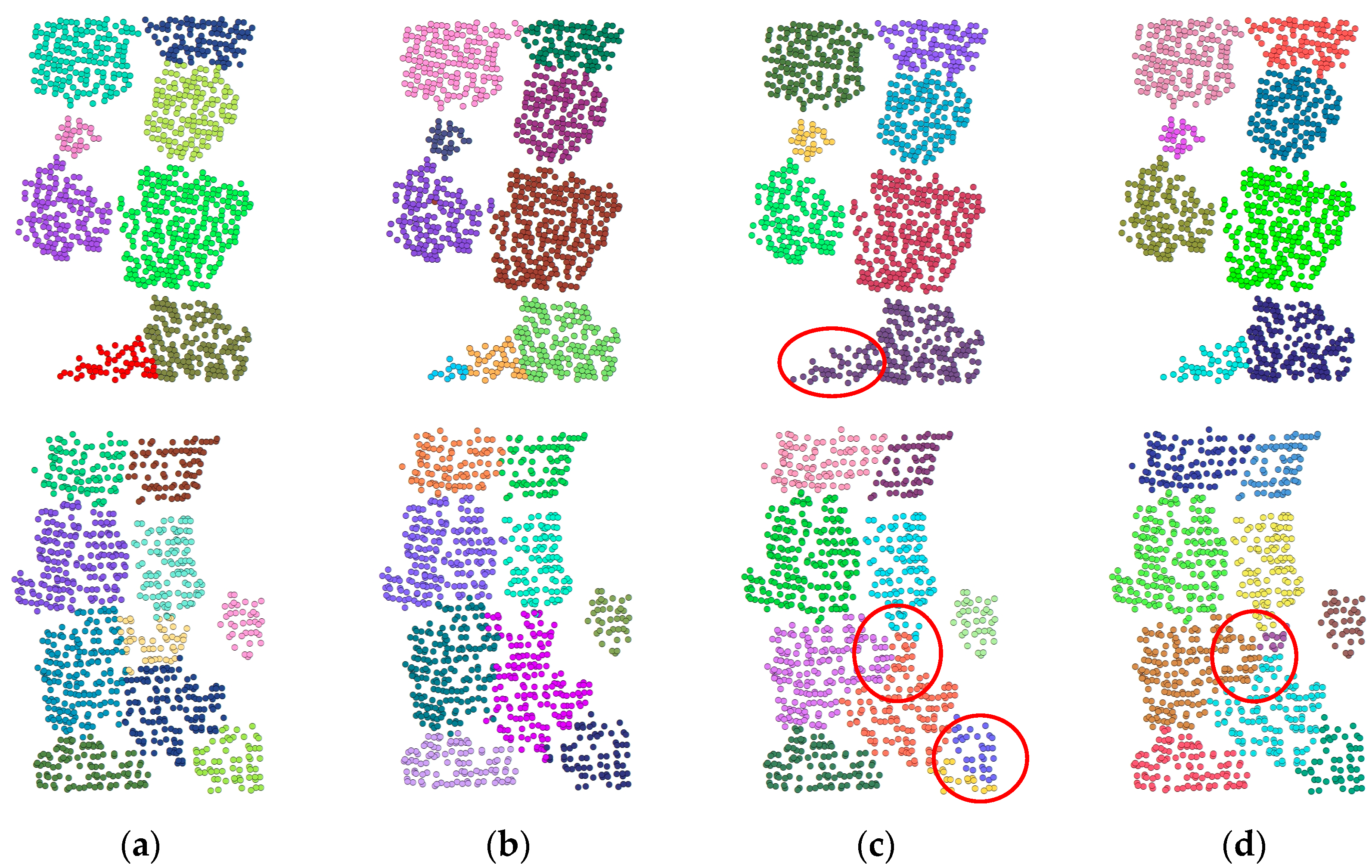

As demonstrated in Figure 8, point-deletion attacks degrade structural integrity by eliminating critical point cloud data, causing significant segmentation errors. Specifically, in the segmentation results of the model without adversarial training, some trees were misclassified because key points were missing, some were severely misjudged, and some regions were missing altogether. In addition, point deletion also impaired the model's ability to recognize tree boundaries, blurring the outlines of some trees or merging them with neighboring trees.

4.3. Cross-Attack Robustness Evaluation of the Proposed Adversarial Training Framework

To comprehensively assess the generalization capability of our adversarial training framework, we conducted a cross-attack study involving four representative adversarial attack methods: FGSM [12], Gaussian noise injection [25], point-addition attack [18], and point-deletion attack [18]. For each attack type, we independently trained a defense model using adversarial samples generated exclusively by that method and evaluated its robustness against all four types of attacks. The results are summarized in Table 5, which presents a cross-attack generalization matrix of mIoU (%) scores: each of the first four rows corresponds to a defense model trained under a specific attack scenario, the last row reports our proposed method, and each column represents the attack method used during testing.
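The evaluation protocol behind such a matrix can be sketched as a double loop: one defense is trained per attack type (one row), and each trained model is then scored against every attack (one column). In this sketch `train_defense` and `evaluate` are hypothetical callables standing in for the actual training and mIoU evaluation; the stubs in the usage example return made-up scores purely to exercise the loop.

```python
from typing import Callable, Dict, List, TypeVar

Model = TypeVar("Model")


def cross_attack_matrix(
    attacks: List[str],
    train_defense: Callable[[str], Model],
    evaluate: Callable[[Model, str], float],
) -> Dict[str, Dict[str, float]]:
    """Row = defense trained on one attack's samples; column = attack at test time."""
    matrix: Dict[str, Dict[str, float]] = {}
    for train_attack in attacks:
        model = train_defense(train_attack)  # one training run per row
        matrix[train_attack] = {
            test_attack: evaluate(model, test_attack) for test_attack in attacks
        }
    return matrix


# Stub callables (hypothetical): a "model" is just the name of its training attack,
# scoring 90 on its own attack and 70 elsewhere.
attacks = ["FGSM", "Gaussian noise", "Point-addition", "Point-deletion"]
m = cross_attack_matrix(
    attacks,
    train_defense=lambda a: a,
    evaluate=lambda model, a: 90.0 if model == a else 70.0,
)
for row, scores in m.items():
    print(row, scores)
```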

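As shown in Table 5, defense models trained on a single attack type perform well against their corresponding attack but are markedly vulnerable to other attack variants; for example, the point-addition defense reaches 90.15% under point-addition attacks but only 65.23% under FGSM. In contrast, our proposed method remains consistently strong across all attack types, with 85.17% for FGSM, 86.35% for Gaussian noise, 88.63% for point-addition, and 87.92% for point-deletion. Although each specialized defense retains a small advantage on its own attack (at most 1.54 percentage points, for point-deletion), our method achieves the highest score under Gaussian noise as well as the best average (87.02%) and worst-case (85.17%) mIoU over the four attacks, demonstrating superior generalization and robustness. This result highlights the effectiveness of our approach in defending against a wide range of adversarial perturbations and underscores its practical value for improving the resilience of individual tree segmentation models in diverse threat scenarios.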
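Summary statistics for the cross-attack matrix can be read off Table 5 with a few lines of code. The sketch below computes, for each defense, the mean and worst-case mIoU over the four attacks, and, for each attack, which defense scores highest; the values are copied from Table 5.

```python
from statistics import mean

attacks = ["FGSM", "Gaussian noise", "Point-addition", "Point-deletion"]
# Table 5, mIoU (%): rows are defenses, columns follow the order of `attacks`.
table5 = {
    "FGSM defense": [85.52, 71.31, 68.70, 75.26],
    "Gaussian defense": [73.82, 85.19, 72.42, 70.92],
    "Point-addition defense": [65.23, 71.08, 90.15, 65.74],
    "Point-deletion defense": [69.63, 78.91, 78.92, 89.46],
    "Ours": [85.17, 86.35, 88.63, 87.92],
}

for name, row in table5.items():
    print(f"{name:24s} mean {mean(row):.2f}  worst-case {min(row):.2f}")

# Best defense per attack column.
best_per_attack = {
    atk: max(table5, key=lambda n: table5[n][j]) for j, atk in enumerate(attacks)
}
print(best_per_attack)
```

Averaging and taking the minimum over columns summarize generalization; the per-column maximum shows where a specialized defense still edges out the combined one.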

5. Conclusions

Individual tree segmentation from remote sensing point clouds has significant theoretical and practical value. However, the vulnerability of existing methods to adversarial sample attacks substantially limits their applicability in complex real-world point cloud scenes. To address this challenge, particularly for segmentation models built on PointNet++ and DBSCAN, we proposed a novel strategy integrating data augmentation and adversarial training, which provides an efficient and robust solution for practical applications. This approach enables the segmentation model to accurately identify tree boundaries even when the symmetric properties of tree point clouds are compromised, thereby enhancing the robustness of individual tree segmentation. Statistical validation using paired t-tests confirms the effectiveness of our method: across multiple test scenarios, adversarial training led to statistically significant improvements in mIoU, with p-values well below 0.01 and 95% confidence intervals showing consistent performance gains. These results provide strong empirical evidence that the proposed method enhances segmentation robustness and generalization under noise and structural perturbations.

Despite the promising results, our method has several limitations that warrant further investigation. First, the use of saliency maps for identifying critical regions in point clouds, while effective in most cases, may suffer from reduced reliability in scenes with extremely high point density or significant real-world noise. In such scenarios, noise points may be mistakenly interpreted as salient, or structurally important regions may be under-emphasized due to the smoothing effects of densely packed points. These distortions can compromise the quality of the adversarial samples generated and impact the accuracy of robustness evaluation. Second, the robustness training framework introduced in this study has been primarily validated on urban forest datasets. Its performance in more complex environments, such as dense natural forests or highly heterogeneous vegetation, remains to be fully explored. These settings may involve occlusions, irregular tree structures, or underrepresented species, all of which can challenge the generalizability of our model.

Future research can further explore the cross-domain applicability of the proposed framework by extending it to more complex forest datasets and broader 3D perception tasks such as autonomous driving and urban mapping. In large-scale forest environments, variations in tree species, topography, and acquisition conditions introduce additional challenges to individual tree segmentation. We can combine multi-scale remote sensing data (such as hyperspectral images and UAV RGB images) and use the complementarity of different sensor data to improve the robustness of the segmentation model. Additionally, modeling the inherent symmetry of trees more deeply and embedding it into the loss function or feature extraction layers can guide the network to learn biologically plausible geometric patterns. For instance, symmetry-aware attention mechanisms may help the model detect and localize asymmetric disturbances caused by wind damage, growth irregularities, or anthropogenic activities. Temporal point cloud data can also be incorporated to develop adaptive training strategies that dynamically adjust symmetry constraints based on seasonal variations and growth cycles, enabling the model to capture the evolving structure of healthy trees.

Beyond forestry, the proposed adversarially trained segmentation framework holds promise for high-stakes domains such as autonomous driving and urban mapping. In autonomous driving, LiDAR-based scene understanding is essential for identifying roadside vegetation and dynamic obstacles under challenging conditions. The adversarial training strategy introduced by our method could enhance the resilience of segmentation models to sensor noise, occlusions, and adversarial perturbations common in real-world driving environments. Similarly, in urban mapping, accurate point cloud segmentation is essential to delineate buildings, street trees, utility structures, and other urban assets. By exploiting the generality of the proposed method, in particular its ability to capture geometric regularities and resist structural distortions, the framework could improve the accuracy and robustness of 3D semantic segmentation in complex urban scenes. These future directions will not only expand the impact of the proposed work but also contribute to safer and more interpretable 3D scene understanding in large-scale smart cities and intelligent transportation applications.

Author Contributions

Conceptualization, R.S. and Y.M.; methodology, R.S.; software, H.L.; validation, R.S.; formal analysis, R.S. and Y.M.; investigation, R.S.; resources, Y.M. and H.L.; data curation, H.L.; writing—original draft preparation, R.S.; writing—review and editing, R.S. and Y.M.; visualization, R.S.; project administration, Y.M.; funding acquisition, Y.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the Zhejiang Provincial Natural Science Foundation of China under grant no. LZ23F020002 and the National Natural Science Foundation of China under grant no. 61972458.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. The data are not publicly available due to privacy issues.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Hua, F.; Bruijnzeel, L.A.; Meli, P.; Martin, P.A.; Zhang, J.; Nakagawa, S.; Miao, X.; Wang, W.; McEvoy, C.; Peña-Arancibia, J.L.; et al. The biodiversity and ecosystem service contributions and trade-offs of forest restoration approaches. Science 2022, 376, 839–844.

2. Chen, Y.; Wang, S.; Li, J.; Ma, L.; Wu, R.; Luo, Z.; Wang, C. Rapid urban roadside tree inventory using a mobile laser scanning system. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3690–3700.

3. Ahmadi, S.A.; Ghorbanian, A.; Golparvar, F.; Mohammadzadeh, A.; Jamali, S. Individual tree detection from unmanned aerial vehicle (UAV) derived point cloud data in a mixed broadleaf forest using hierarchical graph approach. Eur. J. Remote Sens. 2022, 55, 520–539.

4. Picos, J.; Bastos, G.; Míguez, D.; Alonso, L.; Armesto, J. Individual tree detection in a eucalyptus plantation using unmanned aerial vehicle (UAV)-LiDAR. Remote Sens. 2020, 12, 885.

5. Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual tree detection and classification with UAV-based photogrammetric point clouds and hyperspectral imaging. Remote Sens. 2017, 9, 185.

6. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 5099–5108.

7. Naderi, H.; Bajić, I.V. Adversarial attacks and defenses on 3D point cloud classification: A survey. IEEE Access 2023, 11, 144274–144295.

8. Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point-voxel feature set abstraction with local vector representation for 3D object detection. Int. J. Comput. Vis. 2022, 131, 531–551.

9. Sun, J.; Koenig, K.; Cao, Y.; Chen, Q.A.; Mao, Z.M. On adversarial robustness of 3D point cloud classification under adaptive attacks. arXiv 2020, arXiv:2011.11922.

10. Yi, L.; Kim, V.G.; Ceylan, D.; Shen, I.-C.; Yan, M.; Su, H.; Lu, C.; Huang, Q.; Sheffer, A.; Guibas, L. A scalable active framework for region annotation in 3D shape collections. ACM Trans. Graph. 2016, 35, 1–12.

11. Athalye, A.; Carlini, N. On the robustness of the CVPR 2018 white-box adversarial example defenses. arXiv 2018, arXiv:1804.03286.

12. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

13. Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. arXiv 2017, arXiv:1706.06083.

14. Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. In Artificial Intelligence Safety and Security; Chapman and Hall/CRC: London, UK, 2018; pp. 99–112.

15. Ren, K.; Zheng, T.; Qin, Z.; Liu, X. Adversarial attacks and defenses in deep learning. Engineering 2020, 6, 346–360.

16. Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph CNN for learning on point clouds. ACM Trans. Graph. 2019, 38, 1–12.

17. Cohen, J.; Rosenfeld, E.; Kolter, Z. Certified adversarial robustness via randomized smoothing. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 1310–1320.

18. Shi, Y.; Tang, K.; Peng, W.; Wu, J.; Gu, Z.; Fang, M. Adversarial attacks on deep local feature matching models of 3D point clouds. J. Comput. Des. Comput. Graph. 2022, 34, 1379–1390.

19. Nan, B.; Miao, Y. Point cloud replacement adversarial attack based on saliency map. J. Image Graph. 2022, 27, 500–510.

20. Zheng, T.; Chen, C.; Yuan, J.; Li, B.; Ren, K. PointCloud saliency maps. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE Computer Society Press: Los Alamitos, CA, USA, 2019; pp. 1598–1606.

21. Cristiano, P.M.; Madanes, N.; Campanello, P.I.; Di Francescantonio, D.; Rodríguez, S.A.; Zhang, Y.-J.; Carrasco, L.O.; Goldstein, G. High NDVI and potential canopy photosynthesis of South American subtropical forests despite seasonal changes in leaf area index and air temperature. Forests 2014, 5, 287–308.

22. Wang, W.Y.; Yu, R.; Huang, Q.G.; Neumann, U. SGPN: Similarity group proposal network for 3D point cloud instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2569–2578.

23. Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. KDD 1996, 96, 226–231.

24. Jiang, L.; Zhao, H.; Shi, S.; Liu, S.; Fu, C.W.; Jia, J. PointGroup: Dual-set point grouping for 3D instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 4867–4876.

25. Zheltonozhskii, E.; Baskin, C.; Nemcovsky, Y.; Chmiel, B.; Mendelson, A.; Bronstein, A.M. Colored noise injection for training adversarially robust neural networks. arXiv 2020, arXiv:2003.02188.

Figure 1.

Comparison of original and augmented samples under different σ values.

Figure 2.

Adversarial training flowchart.

Figure 3.

Remote sensing point cloud dataset.

Figure 4.

Four sampled remote sensing point cloud scenes of trees, each containing 1024 points; points of the same color belong to the same tree.

Figure 5.

Comparison of adversarial sample segmentation based on FGSM. The red ellipses highlight the difference between the segmentation results. (a) Ground truth of the original sample; (b) segmentation result of the original sample without any perturbation; (c) segmentation result under adversarial attack before adversarial training, where some trees are incorrectly merged or fragmented due to perturbations; (d) segmentation result after adversarial training, showing improved consistency with the ground truth and better resilience to adversarial noise.

Figure 6.

Comparison of adversarial sample segmentation based on Gaussian noise attacks. The red ellipses highlight the difference between the segmentation results. (a) Ground truth of the original sample; (b) segmentation result of the original sample without any perturbation; (c) segmentation result under adversarial attack before adversarial training, where some trees are incorrectly merged or fragmented due to perturbations; (d) segmentation result after adversarial training, showing improved consistency with the ground truth and better resilience to adversarial noise.

Figure 7.

Comparison of adversarial sample segmentation based on point-addition attacks. The red ellipses highlight the difference between the segmentation results. (a) Ground truth of the original sample; (b) segmentation result of the original sample without any perturbation; (c) segmentation result under adversarial attack before adversarial training, where some trees are incorrectly merged or fragmented due to perturbations; (d) segmentation result after adversarial training, showing improved consistency with the ground truth and better resilience to adversarial noise.

Figure 8.

Comparison of adversarial sample segmentation based on point-deletion attacks. The red ellipses highlight the difference between the segmentation results. (a) Ground truth of the original sample; (b) segmentation result of the original sample without any perturbation; (c) segmentation result under adversarial attack before adversarial training, where some trees are incorrectly merged or fragmented due to perturbations; (d) segmentation result after adversarial training, showing improved consistency with the ground truth and better resilience to adversarial noise.

Table 1.

Comparison of mIoU (%) under adversarial point disturbance attack before and after adversarial training.

| Setting | Scene 1 | Scene 2 | Scene 3 | Scene 4 | Scene 5 | Scene 6 | Scene 7 | Scene 8 |
|---|---|---|---|---|---|---|---|---|
| Original sample | 86.94 | 88.80 | 91.02 | 90.66 | 90.11 | 88.23 | 89.35 | 91.48 |
| Before adversarial training | 67.48 | 68.83 | 65.41 | 69.58 | 68.37 | 66.52 | 70.24 | 68.19 |
| After adversarial training | 82.57 | 84.12 | 88.74 | 82.53 | 85.76 | 83.49 | 86.82 | 89.21 |

Table 2.

Comparison of mIoU (%) under Gaussian noise attacks before and after adversarial training.

| Setting | Scene 1 | Scene 2 | Scene 3 | Scene 4 | Scene 5 | Scene 6 | Scene 7 | Scene 8 |
|---|---|---|---|---|---|---|---|---|
| Original sample | 86.94 | 88.80 | 91.02 | 90.66 | 90.11 | 88.23 | 89.35 | 91.48 |
| Before adversarial training | 70.32 | 70.67 | 72.19 | 66.31 | 71.85 | 69.23 | 73.64 | 68.92 |
| After adversarial training | 82.49 | 85.91 | 92.15 | 84.94 | 86.72 | 84.35 | 89.27 | 88.56 |

Table 3.

Comparison of mIoU (%) under point-addition attacks before and after adversarial training.

| Setting | Scene 1 | Scene 2 | Scene 3 | Scene 4 | Scene 5 | Scene 6 | Scene 7 | Scene 8 |
|---|---|---|---|---|---|---|---|---|
| Original sample | 86.94 | 88.80 | 91.02 | 90.66 | 90.11 | 88.23 | 89.35 | 91.48 |
| Before adversarial training | 67.37 | 73.89 | 71.64 | 66.82 | 68.91 | 67.53 | 70.28 | 72.45 |
| After adversarial training | 80.18 | 90.16 | 90.88 | 86.47 | 83.27 | 89.62 | 87.04 | 87.39 |

Table 4.

Comparison of mIoU (%) under point-deletion attacks before and after adversarial training.

| Setting | Scene 1 | Scene 2 | Scene 3 | Scene 4 | Scene 5 | Scene 6 | Scene 7 | Scene 8 |
|---|---|---|---|---|---|---|---|---|
| Original sample | 86.94 | 88.80 | 91.02 | 90.66 | 90.11 | 88.23 | 89.35 | 91.48 |
| Before adversarial training | 72.15 | 74.52 | 77.95 | 66.14 | 73.28 | 73.65 | 76.84 | 67.92 |
| After adversarial training | 82.37 | 84.87 | 93.22 | 84.69 | 83.46 | 85.91 | 89.05 | 85.37 |

Table 5.

Cross-attack generalization matrix for mIoU (%). Rows indicate the defense models trained with different types of adversarial samples, and columns indicate the attack type used during evaluation.

| Defense/Attack | FGSM | Gaussian Noise | Point-Addition | Point-Deletion |
|---|---|---|---|---|
| FGSM defense [12] | 85.52 | 71.31 | 68.70 | 75.26 |
| Gaussian defense [25] | 73.82 | 85.19 | 72.42 | 70.92 |
| Point-addition defense [18] | 65.23 | 71.08 | 90.15 | 65.74 |
| Point-deletion defense [18] | 69.63 | 78.91 | 78.92 | 89.46 |
| Ours | 85.17 | 86.35 | 88.63 | 87.92 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).