Abstract

With the rapid advancement of intelligent transportation systems (ITS), behavior detection within the Internet of Vehicles (IoV) has become increasingly critical for maintaining system security and operational stability. However, existing detection approaches face significant challenges related to data privacy, node trustworthiness, and system transparency. To address these limitations, this study proposes a blockchain-driven federated learning framework for anomaly detection in IoV environments. A reputation evaluation mechanism is introduced to quantitatively assess the credibility and contribution of connected and autonomous vehicles (CAVs), thereby enabling more effective node management and incentive regulation. In addition, a multi-level model aggregation strategy based on dynamic vehicle selection is developed to integrate local models efficiently, with the optimal global model securely recorded on the blockchain to ensure immutability and traceability. Furthermore, a reputation-based prepaid reward mechanism is designed to improve resource utilization, enhance participant loyalty, and strengthen overall system resilience. Experimental results confirm that the proposed framework achieves high anomaly detection accuracy and selects participating nodes with up to 99% reliability, thereby validating its effectiveness and practicality for deployment in real-world IoV scenarios.

1. Introduction

The concept of the Internet of Vehicles (IoV) was first introduced in the 1990s [1], with the goal of enabling comprehensive interconnection within transportation networks [2] through advanced Information and Communication Technology (ICT). The IoV facilitates real-time collection and processing of traffic-related data (such as road congestion, weather conditions, and vehicle trajectories) and plays a crucial role in enhancing traffic efficiency, optimizing vehicle scheduling, and advancing intelligent transportation management. In recent years, as autonomous driving technology has matured and the number of connected vehicles has continued to grow, the scale and complexity of IoV systems have expanded accordingly. However, this growth has also introduced increasingly severe cybersecurity challenges [3]. In particular, with the widespread deployment of connected and autonomous vehicles (CAVs), the risk of cyberattacks in IoV environments has increased significantly. According to data from Upstream [4], over 900 automotive cyberattacks have occurred in the past decade, underscoring the critical importance of cybersecurity in the IoV ecosystem. In response, many countries and regions have introduced dedicated vehicle cybersecurity regulations, highlighting the central role of intrusion detection mechanisms in safeguarding IoV systems [5].

Behavior detection mechanisms are widely regarded as effective tools for enhancing the security of in-vehicle information systems [6]. Traditional machine learning [7,8] and deep learning techniques [9,10] have made notable progress in detecting abnormal behaviors, such as unauthorized access, irregular driving patterns, and communication attacks. However, these methods typically rely on centralized architectures, where distributed data are uploaded to a central server for processing. In the context of IoV, such architectures face multiple challenges, including high communication costs, privacy risks, and limitations in storage resources. These issues are especially pronounced when dealing with large-scale, distributed data and complex models, as terminal devices often lack sufficient computational resources.

Federated learning (FL) [11,12,13], as a distributed machine learning paradigm, addresses these challenges by allowing participants to train models locally and transmit only the model update parameters to a central server for aggregation and optimization. This approach effectively reduces data transmission requirements and significantly alleviates the storage and computational burdens associated with traditional centralized learning.

Nevertheless, existing FL frameworks still face critical challenges. On one hand, their reliance on a central server for model aggregation exposes them to single points of failure (SPOF) and distributed denial-of-service (DDoS) attacks. On the other hand, the current mechanisms lack transparency in the model update process and offer insufficient traceability, making them vulnerable to data poisoning and model inversion attacks. To address these issues, blockchain technology—owing to its decentralized, tamper-resistant, and transparent characteristics—has emerged as a promising complementary solution. Integrating blockchain into FL systems can not only eliminate the risk of SPOF but also enable transparent and immutable logging of local model updates on-chain [14,15], thereby enhancing the trustworthiness and auditability of the system. Furthermore, by incorporating smart contracts, blockchain allows for the implementation of reputation-based reward and punishment mechanisms that incentivize participants to contribute high-quality updates and deter malicious behavior, thus strengthening the overall security and stability of federated learning in IoV environments.

Although recent studies have integrated blockchain technology with federated learning to enhance privacy protection and intrusion detection in decentralized environments, several critical challenges remain unaddressed. First, the absence of a dynamic incentive mechanism hinders the sustained motivation of resource-contributing nodes, thereby limiting active and long-term participation. Second, the model aggregation process often lacks transparency and is susceptible to manipulation or disruption by malicious participants. Third, existing node selection strategies are typically simplistic and fail to incorporate a holistic evaluation of node capability, reputation, and task complexity, resulting in suboptimal model performance and reduced system robustness. To address these challenges, this paper introduces a blockchain-based federated learning game mechanism for the Internet of Vehicles (IoV). The mechanism requires FL participants to pay a fee before joining the training process and incorporates a reputation value [16], so that only nodes with both the willingness and the capability to participate are included. It is worth noting that blockchain and federated learning systems inherently reflect the principle of symmetry in node interactions and model aggregation, wherein nodes maintain equal status and consistent behavior, contributing to overall system stability and fairness. Maintaining functional, incentive, and trust symmetry in mechanism design is therefore crucial for improving system performance. By introducing economic incentives and rational selection criteria, the proposed method enhances node activity and loyalty, achieves internal system symmetry, and promotes efficient resource utilization and collaborative system stability. The main contributions of this paper are as follows:

(1) Introduction of a reputation value assessment mechanism: This study introduces a reputation-based evaluation mechanism designed to quantitatively assess the trustworthiness and contribution levels of connected and autonomous vehicles (CAVs). By incorporating this mechanism, the system is capable of incentivizing active and reliable participants while effectively filtering out potentially malicious or low-contributing nodes, thereby facilitating the training of higher-quality global models.

(2) Dynamic anomaly detection method: A dynamic anomaly detection method based on federated learning is proposed to support real-time, decentralized security monitoring. Leveraging a distributed CAV node selection algorithm, this method enables the adaptive aggregation of local models while ensuring traceability and integrity through blockchain-based storage, thereby enhancing system robustness and transparency.

(3) Blockchain pre-payment game mechanism: This work further proposes a novel prepayment game mechanism grounded in blockchain technology, wherein CAV nodes are required to deposit a participation fee prior to engaging in federated learning. Through a carefully designed reward and penalty scheme, the mechanism effectively promotes long-term participation, improves the reliability of node selection, and enhances overall resource utilization efficiency within the system.

2. Related Work

Federated learning is a framework that facilitates collaborative machine learning across multiple client devices while ensuring data privacy through decentralized local training. This approach utilizes a central server to aggregate model updates from the clients, enabling coordinated learning without the necessity of exchanging raw data [17]. Previous intrusion detection research has predominantly relied on centralized methods, including machine learning and deep learning, wherein data from multiple sources is aggregated on a central server for processing and analysis. This approach enhances detection accuracy and efficiency while enabling streamlined model updates. However, with increasing concerns regarding data privacy, federated learning has gained prominence in the field of intrusion detection. Federated learning enables model training and the generation of global models on distributed devices without the requirement to share raw data, thereby mitigating the privacy and security risks inherent in centralized approaches.

Lu et al. [18] proposed a federated learning-based architecture that facilitates data sharing by reducing the transmission load and addressing the privacy concerns of the providers. An asynchronous federated learning scheme that uses Deep Reinforcement Learning (DRL) for node selection improves efficiency, and the reliability of shared data is ensured by integrating the learning model into the blockchain and applying two-stage verification. For intrusion detection in maritime transportation systems, Liu et al. [19] proposed a Fed Batch model based on a CNN-MLP model and federated learning. The model preserves local data privacy on ships by training locally through federated learning and updating the global model via model parameter exchange. Rahman et al. [20] proposed an FL-based intrusion detection scheme for IoT that preserves data privacy by training the detection model locally on each device. The accuracy of the FL aggregation model is approximately 83.09%, and the federated approach shows a significant advantage over self-learning methods because it aggregates knowledge across multiple devices. Khan et al. [21] developed a privacy-preserving federated reinforcement learning system called Fed-Inforce-Fusion for breach detection in IoMT networks. This system identifies cyber-attacks and uncovers potential relationships in healthcare data through reinforcement learning. It enables distributed healthcare nodes to collaboratively train a comprehensive breach detection model while preserving privacy. Furthermore, a fusion strategy is employed to enhance model performance and reduce communication overhead by allowing clients to dynamically participate in the federation process. However, this approach has a limitation: because the model update depends on the cooperative work of distributed devices, response measures typically cannot be implemented promptly after an anomaly is detected, which can delay responses or increase complexity.

Federated learning addresses the challenges of data privacy and communication overhead in the IoV by enabling vehicles to train intrusion detection models locally, thus eliminating the need to transmit raw data to a central server. This distributed approach aligns with the decentralized nature of the IoV, enhancing data privacy protection while providing a secure and adaptive solution for intrusion detection. Driss et al. [22] proposed a federated learning-based framework for Vehicular Sensor Networks (VSNs), designed to enhance attack detection. The framework incorporates gated recurrent units and integrated Random Forest-based models, achieving a maximum detection accuracy of 99.52%. Hammoud et al. [23] demonstrated a fog federation-based federated learning architecture that uses hedonic games to ensure stability by independently considering the preferences of each fog provider within the federation. The intermodal transportation system was modeled using a road traffic sign dataset, and simulation results demonstrated the model’s high accuracy and quality of service. Yang et al. [24] designed an in-vehicle network (IVN) intrusion detection method based on ConvLSTM that uses the federated learning framework for model training. Model accuracy and system overhead are optimized by a PPO-based federated client selection (FCS) scheme. FedWeg [25] is a sparse federated training method that minimizes communication costs and privacy risks in IoV object detection through dynamic model aggregation. Sparse training is performed on edge devices, with lightweight models uploaded to the server. A dynamic sparsity adjustment scheme gradually increases the sparsity ratio, and the inverse of these ratios is used to compute aggregation weights, mitigating the impact of sparse training on learning performance. While these methods have achieved notable success in the field of IoV, model security can still be improved.

Blockchain technology has recently been applied across various fields, including IoT [26], Industrial IoT [27], IDS [28], and numerous other domains. For instance, data transmission is safeguarded by integrating blockchain technology with FL, ML, and Deep Reinforcement Learning (DRL) to optimize the sharing mechanism of IDS, thereby enhancing the efficiency and overall effectiveness of data collection [29]. He et al. [30] proposed a collaborative intrusion detection algorithm that integrates CGAN with a blockchain-enhanced distributed federated learning framework. By incorporating LSTM networks, the data generation capability of CGAN is improved, and differential privacy is employed to enable secure collaborative training across multiple distributed datasets. Blockchain is used to store and share training models, ensuring the security of the global model aggregation and update process. The proposed method demonstrates strong generalization ability and performs well in intrusion detection tasks. Chai et al. [31] designed a hierarchical blockchain framework combined with a hierarchical federated learning algorithm for knowledge sharing in large-scale IoV environments. This framework enables vehicles to acquire and disseminate environmental data through machine learning. The knowledge-sharing process is modeled as a multi-leader, multi-follower game-based market transaction to incentivize participation. Simulation results show that the proposed scheme improves sharing efficiency, enhances learning quality, and demonstrates strong resilience against malicious attacks. Xie et al. [5] proposed the IoV-BCFL framework, which also integrates blockchain and federated learning for intrusion detection in the IoV, with a particular focus on secure storage and post-event traceability of intrusion logs. This method adopts a FedAvg variant to achieve distributed training and employs RSA encryption and IPFS technology to store detection logs on the blockchain. Smart contracts are used to implement dual-chain recording and querying of both models and logs. Ullah et al. [32] proposed the SecBFL-IoV framework, which embeds blockchain and homomorphic encryption (HE) mechanisms into the federated learning system to enhance the privacy and robustness of model updates. The framework introduces a Certificate Authority (CA) to distribute keys, enabling end-to-end encryption, and designs a blockchain-based secure aggregation process to defend against label-flipping and model poisoning attacks.

In summary, federated learning and blockchain technologies have been widely applied in recent years to domains such as intrusion detection, privacy preservation, and resource optimization. By enabling distributed local training, federated learning mitigates the data privacy risks and high communication overhead inherent in traditional centralized approaches. It is particularly effective in decentralized data environments, such as the Internet of Vehicles (IoV) and the Internet of Things (IoT). When integrated with deep learning, reinforcement learning, and related techniques, federated learning offers notable advantages in dynamic intrusion detection, node selection, and resource management. Moreover, the incorporation of blockchain technology further enhances system transparency and security, providing a trustworthy framework for data sharing and model aggregation throughout the federated learning process. In this paper, we propose a blockchain-driven federated learning framework for anomaly detection in IoV systems. A reputation-based reward and punishment mechanism is introduced to improve participant loyalty and engagement, thereby ensuring the effectiveness and stability of the federated learning process. In addition, a prepayment game-theoretic mechanism is designed to optimize resource allocation and enhance the motivation of participating nodes, ultimately improving the overall performance of the federated learning system.

3. System Model

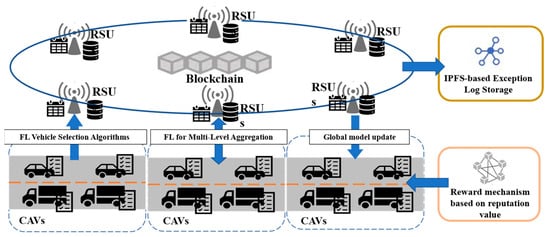

To address the issues identified in the introduction regarding the integration of blockchain and federated learning (specifically, the lack of effective incentive mechanisms, insufficient transparency in the aggregation process, and suboptimal node selection strategies), this paper proposes the system architecture illustrated in Figure 1, drawing inspiration from existing frameworks such as IoV-BCFL and SecBFL-IoV. This section explains the proposed system model in detail, with a focus on the collaborative mechanism between CAV nodes and RSUs in the IoV environment. With the support of RSUs, CAV nodes can dynamically participate in model selection and training, thereby improving the flexibility and adaptability of the federated learning framework. In addition, an enhanced Federated Averaging (FedAvg) algorithm, augmented by the reputation values of CAV nodes, strengthens the trustworthiness and robustness of the model aggregation process.

Figure 1. System model of blockchain-driven federated learning for intrusion detection in the Internet of Vehicles.

The integration of blockchain technology further reinforces the security of the federated learning central server by effectively mitigating the risk of single points of failure. Through the immutable recording of model updates and operational logs on the blockchain, the system achieves enhanced transparency and overall integrity.

The proposed system model involves four primary roles, each with distinct functional responsibilities, which are summarized as follows:

(1) Connected and Autonomous Vehicles (CAVs): CAVs are primarily responsible for participating in local intrusion detection model training within the federated learning framework by utilizing their own local datasets. These datasets consist exclusively of normal traffic data to ensure privacy preservation. Each CAV operates as a federated learning client node and performs model training via its On-Board Unit (OBU). Upon completing local training, CAVs transmit the model parameters to the corresponding roadside units (RSUs). Furthermore, CAVs may submit requests to access various models from the RSUs. Once authorized, they can download the latest block from the blockchain to obtain the most recent global model and are obligated to participate in the global training process accordingly.

(2) Roadside Units (RSUs): RSUs serve as the core coordinators in the model assignment, parameter aggregation, and CAV management within the federated learning process. They perform three essential functions. First, RSUs implement a federated learning-based vehicle selection algorithm to dynamically identify CAV nodes that are most suitable for participation in model training, ensuring training effectiveness and data diversity. Second, RSUs manage the synthesis and approval of model requests from CAVs and distribute the required models using smart contracts. Upon receiving trained parameters from CAVs, RSUs execute model aggregation and validation using the improved federated averaging method and subsequently initiate consensus processes to generate new blockchain blocks. Third, RSUs dynamically calculate and collect prepayment fees based on CAVs’ reputation scores and training history prior to initiating federated tasks. After task completion, RSUs allocate rewards according to a reputation-based reward and punishment mechanism, thereby promoting active and trustworthy participation.

(3) Blockchain: Blockchain technology is integrated into the system to ensure the immutability, transparency, and security of federated learning operations. Each RSU maintains a distributed ledger for securely recording model updates and consensus results. The decentralized nature of blockchain prevents any single node from monopolizing control over the IoV network, thereby significantly reducing the risks of data tampering, malicious manipulation, and single-point failures.

(4) IPFS: IPFS is employed in conjunction with blockchain to store anomaly detection logs. Upon detecting anomalous events in the IoV network, the system stores the relevant log data in IPFS and generates a corresponding hash, which is then recorded on the blockchain. This ensures that the logs remain tamper-resistant and verifiable. Administrators of the anomaly detection system periodically retrieve and analyze these logs from IPFS to optimize system performance and continuously improve the federated learning models, thereby enhancing the overall security and responsiveness of the IoV anomaly detection framework.
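The log-anchoring idea in role (4) can be illustrated with a minimal Python sketch. It is only a toy model under stated assumptions: a SHA-256 digest stands in for an IPFS content identifier, an in-memory dictionary stands in for IPFS, and a plain list stands in for the blockchain ledger. The names `content_hash` and `LogAnchor` are hypothetical and do not come from the paper.

```python
import hashlib
import json


def content_hash(log):
    """Deterministically hash a log entry (assumed stand-in for an IPFS content identifier)."""
    payload = json.dumps(log, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class LogAnchor:
    """Toy off-chain store plus on-chain hash list; both are hypothetical stand-ins."""

    def __init__(self):
        self.off_chain = {}  # plays the role of IPFS: digest -> log content
        self.on_chain = []   # plays the role of the blockchain: recorded digests

    def store(self, log):
        """Store the log off-chain and record only its digest on-chain."""
        digest = content_hash(log)
        self.off_chain[digest] = log
        self.on_chain.append(digest)
        return digest

    def verify(self, digest):
        """A log passes if its digest is on-chain and the stored content still matches it."""
        log = self.off_chain.get(digest)
        return (
            log is not None
            and digest in self.on_chain
            and content_hash(log) == digest
        )
```

Because only the digest is recorded on the ledger, any later change to the stored log makes `verify` fail, which is the tamper-evidence property the role description relies on.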

4. Methodology

To facilitate the understanding of the proposed scheme, a set of key symbols used throughout the framework is defined and summarized. These definitions provide foundational clarity for the subsequent derivation of formulas and the description of related algorithms. Table 1 enumerates these key symbols and their corresponding interpretations.

Table 1. Definition and description of key symbols.

4.1. Prepaid-Based Vehicle Node Selection

The selection of appropriate vehicle nodes for participation in federated learning is of paramount importance. To overcome the limitations of existing node selection methods that rely solely on computing resources or static reputation values, this paper proposes a dynamic prepaid mechanism that comprehensively considers nodes’ historical performance, task complexity, and reputation. By introducing the prepaid mechanism, the system prioritizes the selection of nodes with higher prepaid amounts, thereby encouraging the participation of high-capability nodes. The involvement of high-quality nodes not only improves the efficiency and accuracy of model training but also optimizes resource utilization, enhances data diversity, and ensures data privacy and security. In addition, this mechanism helps reduce communication overhead, further improving overall system performance. By achieving a better balance among resource allocation, data security, and model performance, the proposed approach significantly enhances the practicality and reliability of the federated learning system. The following section will provide a detailed explanation of the proposed vehicle node selection algorithm and its computation method.

In a federated learning network, two critical parameters must be defined before training begins: the maximum number of participating nodes, denoted as $N_{\max}$, which limits the number of vehicle nodes selected for a single federated round; and the maximum training time $T_{\max}$, which bounds the duration of each round.

To ensure the selection of high-quality nodes, we introduce a prepayment-based node selection mechanism. Each vehicle node calculates a prepayment value $P_i$, which reflects its expected contribution to the training process. This value is derived from both hardware status and historical behavioral data, including the latest reward $\mathit{Reward}_i$, the historical reputation value, the driving record $DR_i$, the network bandwidth $BW_i$, and the task complexity $TC_i$.

The driving record is computed as follows:

where the equation uses two weight coefficients, $VC_i$ is the number of violation records, and $ML_i$ is the mileage driven.

The complexity of the current task is calculated as follows:

where the equation uses two weight coefficients for the current task complexity, $D_i$ is the amount of data (in MB) of the current task of node $i$, the per-round training time is that of model $j$ on vehicle node $i$, $T_{\max}$ is the total training time allowed for federated learning, and $EO_{i,j,\max}$ is the maximum number of converged training rounds of model $j$ on vehicle node $i$, obtained from historical records. Overfitting is prevented by taking the smaller of the number of trainable rounds and $EO_{i,j,\max}$, so that the number of training rounds does not exceed the node's maximum number of converged rounds.
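The round-capping rule described above can be sketched in Python. This is only an illustration under an explicit assumption: the number of trainable rounds is taken to be the number of whole rounds that fit into the time budget, that is, the floor of the maximum training time divided by the per-round training time. The source states only that the smaller of two values is used; the function name `trainable_rounds` and its parameter names are hypothetical.

```python
import math


def trainable_rounds(t_max, round_time, max_converged_rounds):
    """Cap the number of training rounds for one model on one vehicle node.

    t_max: total training time allowed for the federated round (T_max).
    round_time: time of a single training round for this model (must be positive).
    max_converged_rounds: historical maximum number of converged rounds (EO_{i,j,max}).
    """
    if round_time <= 0:
        raise ValueError("round_time must be positive")
    # Assumption: rounds that fit in the time budget = floor(T_max / round_time).
    rounds_in_budget = math.floor(t_max / round_time)
    # Take the smaller value so training never exceeds the converged-round limit.
    return min(rounds_in_budget, max_converged_rounds)
```

With a 600-second budget and 45-second rounds, only 13 rounds fit, so the time budget is the binding limit; with 10-second rounds, the historical limit of converged rounds becomes the binding one.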

The prepayment amount is calculated as follows:

where $\lambda$ is the adjustment factor, which sets the overall level of the prepayment.

The corresponding pseudocode for the method is detailed in Algorithm 1. The algorithm first calculates the driving record $DR_i$ for each vehicle node (Lines 2–4), which is used to assess historical driving performance. Next, it computes the task complexity $TC_i$ (Lines 6–9), including the trainable rounds, to evaluate the computational demand of the task. Then, the prepaid amount $P_i$ for each vehicle node is calculated (Lines 11–13) to determine the payment priority among vehicles. Finally, all nodes are sorted in descending order of $P_i$, and the top $N_{\max}$ nodes are selected as the final node set and returned (Lines 15–17).

| Algorithm 1 Prepaid-Based Vehicle Node Selection |

| Input: Nmax, Tmax, λ, γ1, γ2, θ1, θ2; vehicle set V = {v1, …, vN}, each vi has: Rewardi, historical reputation value, VCi, MLi, BWi, Di, single-round training time, EOi,j,max |

| Output: Selected vehicle set Vselected |

| 1: /* Compute driving record DRi using Equation (1) */ |

| 2: for each vehicle vi ∈ V do |

| 3: Compute DRi |

| 4: end for |

| 5: /* Compute task complexity TCi using Equation (2) */ |

| 6: for each vehicle vi ∈ V do |

| 7: Compute trainable rounds per model |

| 8: Compute TCi |

| 9: end for |

| 10: /* Compute prepaid value Pi using Equation (3) */ |

| 11: for each vehicle vi ∈ V do |

| 12: Compute Pi |

| 13: end for |

| 14: /* Sort and select top Nmax nodes */ |

| 15: Sort vehicles by Pi in descending order |

| 16: Select top Nmax vehicles into Vselected |

| 17: return Vselected |
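The final ranking and selection steps of Algorithm 1 (Lines 15–17) can be sketched in Python as follows. This is a minimal sketch that assumes the prepaid value of each vehicle has already been computed by the earlier steps; the `Vehicle` class and the `select_vehicles` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vehicle:
    vehicle_id: str
    prepaid: float  # P_i, assumed to be computed beforehand


def select_vehicles(vehicles, n_max):
    """Return at most n_max vehicles with the highest prepaid values."""
    if n_max < 0:
        raise ValueError("n_max must be non-negative")
    # Sort by prepaid value in descending order; sorted() is stable,
    # so vehicles with equal prepaid values keep their input order.
    ranked = sorted(vehicles, key=lambda v: v.prepaid, reverse=True)
    return ranked[:n_max]
```

If fewer than `n_max` vehicles are available, the slice simply returns all of them, so no separate bounds check is needed.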

4.2. Reputation-Based Federated Learning

To enhance model adaptability and detection accuracy, this paper proposes a multi-level federated learning model aggregation strategy, which extends beyond traditional single-layer aggregation methods such as FedAvg. In this study, the federated learning network is conceptualized as a monopoly market comprising several operator headquarters (RSU full nodes), M operator branches (RSU light nodes), and N vehicle nodes. Vehicle node i functions as a data owner with a local data sample size of $S_i$ and multiple models. Each vehicle node can autonomously select $X_i$ models for participation in the federated learning task. Each local model is trained on input–output sample pairs, where the input represents feature vectors of message data and the output denotes traffic classification labels. These local models are used to classify message flows in vehicular behavior detection. Once trained, vehicle nodes transmit model updates to their associated RSU light nodes, which perform intermediate aggregation. This multi-level structure increases robustness to noise and heterogeneity in local data distributions.

Unlike conventional methods such as FedAvg, which perform aggregation based on equal or data-size-proportional weights, our method incorporates a reputation-weighted scheme to account for the trustworthiness and historical performance of each vehicle node. When a vehicle node registers to participate in the federated learning network, it is required to submit information such as the number of CPU cores of the device, memory capacity, power capacity, and the driver’s violation record to construct a Reputation Attribute Matrix (RAM).

In this matrix, each entry is given by the correlation metric function for the trust measure of the corresponding reputation attribute. Each attribute is evaluated by its metric function, and the initial reputation value of vehicle node i is computed as follows:

Vehicle node i determines its local reputation value based on the path transmission loss and the accuracy of the messages it provides during transit, and then transmits the reputation generated within the region to the roadside unit. The dynamic local reputation value $LR_i$ of node i considers both message accuracy and communication quality:

where $\omega_1$ and $\omega_2$ denote the weights of path loss and message transmission accuracy, and $\gamma$ and $\eta$ are additional parameters that adjust the impact of path loss and message transmission accuracy.

Here, the message transmission accuracy is determined from the numbers of correct and incorrect messages that vehicle node i sends to RSU light nodes in the federated learning network.
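As a simple illustration of how correct and incorrect message counts can be turned into an accuracy term, the following Python sketch uses the plain ratio of correct messages to all messages. This ratio is an assumption made for illustration, not the paper's exact definition, and the function name `message_accuracy` and the `default` parameter are hypothetical.

```python
def message_accuracy(correct, incorrect, default=0.0):
    """Fraction of correct messages among all messages a vehicle node has sent.

    Assumption: accuracy = correct / (correct + incorrect).
    `default` is returned when the node has not sent any message yet.
    """
    if correct < 0 or incorrect < 0:
        raise ValueError("message counts must be non-negative")
    total = correct + incorrect
    if total == 0:
        return default
    return correct / total
```

The explicit default avoids a division by zero for nodes without any message history; which default is appropriate depends on how new nodes should be treated.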

Because the local reputation value of a vehicle is time-sensitive, and the RSU light node responsible for message delivery changes as the vehicle moves, the local reputation of vehicle node i is defined as a set indexed by time t. In the absence of message interaction at time t, the initialized reputation is used as the value. When vehicle node i switches to a different RSU light node, the reputation is updated as a weighted combination of historical reputation values.

where each term is the local trust value of a historical record within the time window, and a decay function controls the weight of each historical trust value.
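A decay-weighted update over a window of historical trust values can be sketched in Python as below. The sketch assumes an exponential decay function and a normalized weighted average; the source does not specify the form of the decay function, so both choices, as well as the names `updated_reputation`, `decay_rate`, and `initial`, are hypothetical.

```python
import math


def updated_reputation(history, decay_rate=0.5, initial=0.5):
    """Combine historical local trust values, giving recent records more weight.

    history: trust values inside the time window, ordered oldest first.
    decay_rate: assumed exponential decay rate (larger means faster forgetting).
    initial: initialized reputation, used when there is no interaction history.
    """
    if not history:
        return initial
    n = len(history)
    # The newest record has age 0 and weight 1; older records decay exponentially.
    weights = [math.exp(-decay_rate * (n - 1 - k)) for k in range(n)]
    weighted_sum = sum(w * h for w, h in zip(weights, history))
    return weighted_sum / sum(weights)
```

Normalizing by the sum of the weights keeps the result in the same range as the input trust values, and the fallback to `initial` mirrors the rule that the initialized reputation is used when no messages have been exchanged.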

Each model j of vehicle node i generates a learning outcome, which is transmitted to the RSU light node through a transaction. The learning outcome comprises the local accuracy $\varepsilon_{ij}$ and the model parameters. The RSU light node packs the learning outcomes into a block and uploads it to the blockchain (BC). The learning results then undergo initial aggregation, forming a preliminary global model for model j.

where $LR_i$ is the local reputation value between vehicle node i and the RSU light node, and t denotes the training round.
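The idea of weighting each vehicle's contribution by its reputation can be sketched in Python. The sketch assumes that the aggregation weight of a node is its local reputation divided by the sum of all reputations and that model parameters are flat lists of floats; the paper's exact aggregation formula may differ, and the function name `aggregate_by_reputation` is hypothetical.

```python
def aggregate_by_reputation(params_by_vehicle, reputations):
    """Reputation-weighted average of model parameter vectors.

    params_by_vehicle: one flat parameter list per vehicle node.
    reputations: local reputation value of each vehicle node, in the same order.
    """
    if not params_by_vehicle or len(params_by_vehicle) != len(reputations):
        raise ValueError("need one reputation value per parameter vector")
    total = sum(reputations)
    if total <= 0:
        raise ValueError("sum of reputations must be positive")
    dim = len(params_by_vehicle[0])
    aggregated = [0.0] * dim
    for params, reputation in zip(params_by_vehicle, reputations):
        if len(params) != dim:
            raise ValueError("all parameter vectors must have the same length")
        weight = reputation / total  # assumed normalization of reputation weights
        for k, value in enumerate(params):
            aggregated[k] += weight * value
    return aggregated
```

With equal reputations this reduces to a plain average; a node whose reputation is three times that of another contributes three times as much to every parameter.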

The RSU light node disseminates the updated global model parameters to all vehicles so that they can use these parameters in the subsequent round of training. Upon receipt of the global model, the vehicles conduct further training and updating of model j based on their local data. The updated global model is computed as follows:

The training process is iterated until the model j at the RSU light node converges or a predetermined number of training iterations is reached. The message traffic in a vehicle's local data sample is divided into n message security classifications. To obtain the optimal detection model for classification, the RSU full nodes select and optimize models based on the global model results and the results of q RSU light nodes in the federated learning network.

The global model structure will be partitioned into B seed models based on the model type. For q RSUs’ light nodes, the B seed models will be integrated utilizing a weighted average fusion methodology. This fusion process will amalgamate the global parameters of the RSU light nodes, yielding an integrated global model for the B seed models.

where . The RSU’s full node utilizes local data to fine-tune each model, thereby enhancing its accuracy. All optimized sub-models are selected for deployment on the original vehicle node, employing the most effective model for the actual message detection task. The remaining sub-models are utilized for subsequent global model training, wherein new local data are continuously acquired and the models are optimized and updated periodically.
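The full-node stage described above, which fuses the B seed models across q RSU light nodes and then picks the most effective one, might be sketched as follows. The per-node fusion weights, the dictionary layout, and both function names are assumptions made for illustration; the sketch also assumes every light node holds every seed model with the same parameter shape.

```python
def fuse_seed_models(light_node_models, node_weights):
    """Weighted-average fusion of each seed model across RSU light nodes.

    light_node_models: {node_id: {model_type: flat parameter list}}.
    node_weights: {node_id: fusion weight} (hypothetical, e.g. data share).
    """
    total = sum(node_weights.values())
    if total <= 0:
        raise ValueError("fusion weights must sum to a positive number")
    fused = {}
    for node_id, models in light_node_models.items():
        share = node_weights[node_id] / total
        for model_type, params in models.items():
            acc = fused.setdefault(model_type, [0.0] * len(params))
            for k, value in enumerate(params):
                acc[k] += share * value
    return fused


def pick_best_model(accuracy_by_model):
    """Return the model type with the highest accuracy after fine-tuning."""
    return max(accuracy_by_model, key=accuracy_by_model.get)


models = {
    "rsu_a": {"cnn": [0.0, 4.0], "mlp": [1.0, 1.0]},
    "rsu_b": {"cnn": [4.0, 0.0], "mlp": [3.0, 5.0]},
}
fused = fuse_seed_models(models, {"rsu_a": 1.0, "rsu_b": 3.0})
print(fused["cnn"], pick_best_model({"cnn": 0.79, "mlp": 0.74}))
```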

The pseudocode for the reputation-based federated learning model is presented in Algorithm 2. First, the local reputation value of each vehicle node is calculated (Lines 2–5), with message transmission accuracy serving as a key factor in the reputation evaluation. Next, the reputation value is updated over time (Lines 7–9) so that the reputation assessment remains adaptive. The federated learning training process is then executed (Lines 11–21): each vehicle node trains the model on its local dataset and computes the updated model parameters θi (Lines 12–14), the RSU light nodes aggregate all model parameters to obtain the global model parameters (Lines 15–16), and these are broadcast to all vehicle nodes for the next round (Lines 17–20). Finally, the RSU full nodes aggregate the parameters to form the final global model Qb (Lines 22–23), which is returned for distribution to all vehicle nodes (Line 24).

| Algorithm 2 Reputation-Based Federated Learning |

| Input: M RSU nodes, L vehicle nodes, initial reputation {LRi}, weights ω1, ω2, adjustment parameters γ, η, attributes {rai} |

| Output: Aggregated global model Qb |

| 1: /* Compute local reputation LRi using Equation (6) */ |

| 2: for each vehicle node i ∈ V do |

| 3: Compute message accuracy Turei |

| 4: Compute LRi |

| 5: end for |

| 6: /* Update time-based reputation using Equation (8) */ |

| 7: for each time t and node i ∈ V do |

| 8: Compute |

| 9: end for |

| 10: /* Federated learning process*/ |

| 11: for each training round t do |

| 12: for each vehicle node i ∈ V do |

| 13: Train model on Si and update θi |

| 14: end for |

| 15: /* RSU lightweight aggregation using Equation (9) */ |

| 16: Compute global model |

| 17: /* Broadcast global model using Equation (10) */ |

| 18: for each vehicle i ∈ V do |

| 19: Receive global model for next round |

| 20: end for |

| 21: end for |

| 22: /* RSU full node aggregation using Equation (11) */ |

| 23: Compute final global model Qb |

| 24: return Qb |

4.3. Reputation-Based Incentive Mechanism

To encourage active and high-quality participation in the federated learning process, we introduce a reputation-based incentive mechanism that allocates rewards according to each node’s trust level, computational contribution, and energy efficiency.

In the local training process, the iterative computation time for model j on vehicle node i is defined as follows:

where and are the CPU and GPU cycle frequency of vehicle node i during the training of local model j. is the number of cycles required by vehicle node i during the training of local model j using a single data sample.

According to reference [5], the energy consumption for one iteration of local training using model j on vehicle node i is defined as follows:

where is the effective capacitance parameter of the computing chipset of vehicle node i. In each round of training, the learning rate η is dynamically adjusted according to the convergence of the model to accelerate model convergence and improve accuracy. In the process of global model training, all vehicle nodes in the federated learning network go through multi-level global iterations to realize multi-model global learning. Vehicle nodes send their local model update results to RSU light nodes via wireless communication. The result contains , which is the local accuracy achieved by the local model j update and corresponds to the local data quality of the vehicle node i. Where higher leads to fewer local iterations and the number of local iterations is .
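For illustration, the per-iteration computation time and energy can be sketched with the cycle-based model that is common in the federated learning literature. The sketch assumes a single effective processor frequency instead of separate CPU and GPU terms, and all function and parameter names are hypothetical.

```python
def local_compute_time(cycles_per_sample, num_samples, frequency_hz):
    """Seconds for one local iteration: required cycles / cycle frequency."""
    return cycles_per_sample * num_samples / frequency_hz


def local_compute_energy(capacitance, cycles_per_sample, num_samples, frequency_hz):
    """Joules for one local iteration under the assumed model

    energy = capacitance * cycles * frequency**2,
    where capacitance is the effective capacitance of the computing chipset.
    """
    return capacitance * cycles_per_sample * num_samples * frequency_hz ** 2


# Hypothetical vehicle: 20 cycles per sample, 1000 samples, 1 GHz processor.
print(local_compute_time(20, 1000, 1e9))
print(local_compute_energy(1e-28, 20, 1000, 1e9))
```

Under this model a faster processor shortens each iteration linearly but raises its energy cost quadratically, which is the tension the incentive mechanism has to price.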

The data transfer rate of vehicle node i is denoted as follows:

where represents the bandwidth, is the transmission power of the vehicle node, is the point-to-point channel gain from vehicle node i to the RSU light node, and is the background noise.

It is assumed that the size of the data updated for each model j is consistent across all vehicle nodes and is represented by the constant . The transmission time for each vehicle node to perform a local model j update is denoted as .
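The transmission rate and transmission time can be illustrated with the Shannon capacity formula, which matches the quantities listed above (bandwidth, transmission power, channel gain, background noise). Using a base-2 logarithm so that the rate is in bits per second is an assumption, as are the function names.

```python
import math


def transfer_rate(bandwidth_hz, tx_power, channel_gain, noise_power):
    """Shannon-style rate in bits per second (assumed form)."""
    return bandwidth_hz * math.log2(1 + tx_power * channel_gain / noise_power)


def transmission_time(update_size_bits, rate_bps):
    """Seconds needed to upload one local model update of constant size."""
    return update_size_bits / rate_bps


# Hypothetical link: 1 MHz bandwidth and a signal-to-noise ratio of 3.
rate = transfer_rate(1e6, tx_power=3.0, channel_gain=1.0, noise_power=1.0)
print(rate, transmission_time(4e6, rate))
```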

Consequently, the total duration for vehicle node i to participate in one global multilevel model iteration is defined as follows:

The transmission energy consumption for transmitting local model j of vehicle node i during the global iteration is defined as follows:

During a global iteration, the total energy consumption of vehicle node i is described as follows:
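As a rough illustration of how the timing and energy terms of this subsection fit together, the sketch below assumes that a global iteration consists of a number of local iterations followed by one upload, and that transmission energy equals transmission power multiplied by transmission time. The number of local iterations is passed in directly rather than derived from the local accuracy, and all names are hypothetical.

```python
def total_time(local_iterations, compute_time, transmit_time):
    """Duration of one global iteration: local training plus one upload."""
    return local_iterations * compute_time + transmit_time


def transmission_energy(tx_power, transmit_time):
    """Energy spent uploading the local update (power x time)."""
    return tx_power * transmit_time


def total_energy(local_iterations, compute_energy, transmit_energy):
    """Energy of one global iteration: local training plus one upload."""
    return local_iterations * compute_energy + transmit_energy


# Hypothetical node: 3 local iterations of 2.0 s and 0.5 J, 1.5 s upload at 0.2 W.
e_com = transmission_energy(0.2, 1.5)
print(total_time(3, 2.0, 1.5), e_com, total_energy(3, 0.5, e_com))
```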

The vehicle node i receives rewards and penalties within the federated learning network from the RSU light node that issued the task. The reward value, denoted as Reward, is calculated using the following formula:

where and are weighting coefficients, which can be experimentally or empirically adjusted to the data to ensure the reasonableness and effectiveness of the reward and punishment mechanism; is the total energy consumption during one global iteration of vehicle i; is the global trust of the vehicle; FLPO is the federated learning model result; is the local accuracy of the model of vehicle i in predicting the message event of j; and n is the number of the i-th vehicle’s model.

The pseudocode for the reputation-based reward and punishment mechanism is presented in Algorithm 3. First, the local training time is calculated (Lines 2–4) to evaluate the training time cost for different vehicles. Next, the total energy consumption is computed (Lines 6–8), including the computation energy , data transmission rate , total training time , communication energy consumption , and the final total energy consumption . Finally, based on the energy consumption, the reward value for each vehicle node is determined (Lines 10–12), and the final reward set is returned (Line 13).

| Algorithm 3 Reputation-Based Incentive Mechanism |

| Input: Training sets {Si}, CPU frequency fij, bandwidth BWi, transmission power ρi |

| Output: Reward values {Rewardi} |

| 1: /* Compute local training time using Equation (12) */ |

| 2: for each vehicle i ∈ V and model j do |

| 3: Compute |

| 4: end for |

| 5: /* Compute total energy consumption*/ |

| 6: for each vehicle i ∈ V and model j do |

| 7: Compute , ri, , , total energy Ei |

| 8: end for |

| 9: /* Compute reward using Equation (19) */ |

| 10: for each vehicle i ∈ V do |

| 11: Compute Rewardi |

| 12: end for |

| 13: return {Rewardi} |

4.4. Computational Complexity Analysis

To evaluate the scalability and feasibility of the proposed framework, this paper analyzes the computational complexity of its three core components:

(1) Vehicle Selection Algorithm: This process involves calculating the prepaid value for each node and selecting the top nodes to participate in federated learning. Given that the total number of candidate vehicle nodes is K, the complexity is mainly determined by the sorting step, resulting in an overall time complexity of O(K log K).

(2) Federated Learning Model Aggregation Algorithm: Each vehicle node is required to train M local models, each with P parameters. Upon completion, model aggregation is performed at both the RSU light nodes and the RSU full node. Since the number of RSUs is much smaller than the number of vehicle nodes, the overall time complexity of the system can be approximated as .

(3) Reputation-based Reward Mechanism: In each training round, the mechanism evaluates the local training time, transmission energy consumption, and prediction accuracy of each node to calculate the reward value. Let T denote the number of local training iterations. The total complexity of this module is , which approaches linear complexity when is a constant.

The above complexity analysis demonstrates that the proposed methods are highly scalable and suitable for deployment in large-scale Internet of Vehicles (IoVs) environments.
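The sorting step that dominates the vehicle selection cost can be sketched as follows. The dictionary of prepaid values and the function name are hypothetical, and ranking purely by prepaid value is a simplification of the selection rule.

```python
def select_vehicles(prepaid_values, top_n):
    """Return the ids of the top_n vehicles ranked by prepaid value.

    Sorting K candidates costs O(K log K), which dominates the selection.
    """
    ranked = sorted(prepaid_values.items(), key=lambda item: item[1], reverse=True)
    return [vehicle_id for vehicle_id, _ in ranked[:top_n]]


candidates = {"v1": 5.0, "v2": 9.0, "v3": 7.0, "v4": 2.0}
print(select_vehicles(candidates, top_n=2))
```

When only a small number of nodes is needed, `heapq.nlargest` from the Python standard library avoids sorting the full candidate list and runs in O(K log n) for n selected nodes.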

5. Experimental Evaluation

5.1. Environment and Parameters of the Experiment

This section verifies, through experimental simulations, the efficacy of the proposed multilevel federated learning based on the vehicle association selection algorithm and of the reward mechanism based on reputation value. First, the federated learning process is examined in terms of loss function and accuracy. The performance of the framework is analyzed by comparing the learning cost and effectiveness of five models, with the convolutional neural network (CNN) selected as the primary model. Subsequently, the variation in prepayment and reward values in the node selection algorithm is considered, focusing on the deposit values of 10 specific nodes. It is assumed that the collection power of exceeds that of , enabling to collect more training data than , i.e., > . Similarly, possesses more balances than , thus allowing to obtain higher reward prices than , i.e., .

During the model evaluation, the IoT-23 [33] dataset, released by Stratosphere Lab, is used as a benchmark for anomaly detection in IoT environments. It contains 16 categories of normal and malicious traffic, including attacks such as Mirai, Okiru, DDoS, and port scanning. Each record represents a network flow with features such as duration, packet size, and inter-arrival time. In our experiments, each vehicle node randomly selects 300,000 samples, split into 80% for training and 20% for testing. All features are normalized (mean = 0, variance = 1) to ensure training stability. The key parameters set for the experiments include a learning rate of 0.001, a batch size of 64, and 100 training rounds. The Adam [34] optimizer is employed, and the loss function utilized is the cross-entropy loss. To ensure the reproducibility of the experimental results, a fixed random seed (e.g., 42) is used. Table 2 presents the configuration of additional key parameters.
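The data preparation described above (a seeded 80/20 split followed by scaling to zero mean and unit variance) might look like the following standard-library sketch. Computing the scaling statistics on the training portion only is an assumption, as is the function name; a real pipeline over IoT-23 would typically use a numerical library instead.

```python
import random
import statistics


def split_and_standardize(rows, train_fraction=0.8, seed=42):
    """Shuffle with a fixed seed, split, and standardize each feature column."""
    rng = random.Random(seed)          # fixed seed for reproducibility
    shuffled = list(rows)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    train, test = shuffled[:cut], shuffled[cut:]

    # Assumption: statistics come from the training split only.
    columns = list(zip(*train))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) or 1.0 for col in columns]  # guard constant columns

    def scale(row):
        return [(x - m) / s for x, m, s in zip(row, means, stds)]

    return [scale(r) for r in train], [scale(r) for r in test]


# Hypothetical flow records with two numeric features each.
flows = [[float(i), float(2 * i)] for i in range(10)]
train_set, test_set = split_and_standardize(flows)
print(len(train_set), len(test_set))
```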

Table 2.

The configuration of key parameters.

5.2. Experimental Evaluation of Multilevel Federated Learning Based on Vehicle Selection Algorithms

To evaluate the performance of the federated learning algorithms proposed in this study within an Internet of Things (IoTs) environment, a series of comprehensive experiments were conducted using the IoT-23 dataset. This dataset offers a rich collection of realistic network traffic data, encompassing both benign and malicious behaviors, making it well-suited for assessing the effectiveness of intrusion detection systems. The experimental design aimed to investigate the capability of federated learning to operate effectively in a distributed setting characterized by heterogeneous data that are not independent and identically distributed (Non-IID). Specifically, the focus was placed on the framework’s ability to collaboratively train models across multiple decentralized devices (i.e., participating nodes).

In the experimental setup, multiple nodes were instantiated, each assigned an independent subset of the IoT-23 dataset to simulate realistic Non-IID data distributions. Local training was conducted on each node using five different model architectures, with the convolutional neural network (CNN) selected as the local base model due to its superior accuracy. Federated learning was employed to implement multi-level model aggregation across participating nodes. Key hyperparameters, including learning rate, batch size, and the number of training rounds, were configured as shown in Table 2 to ensure stable convergence of the global model within a reasonable timeframe.

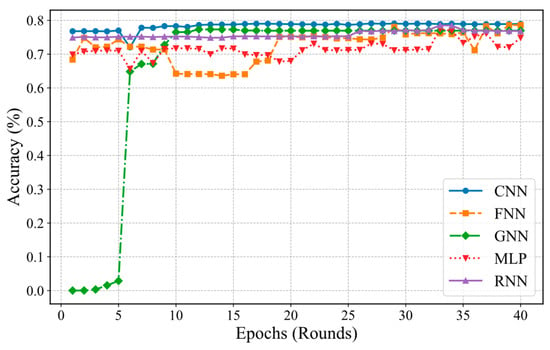

Figure 2 illustrates the trend of accuracy for each model during the training process. Except for the GNN model, which exhibits lower accuracy in the first five rounds, the other models demonstrate rapid accuracy improvement in the initial stages and stabilize thereafter. The CNN model maintains consistently high accuracy throughout the training process, indicating its strong feature extraction capability and convergence performance. Although the GNN model eventually achieves high accuracy, its performance fluctuates significantly in the early stages. The FNN and MLP models show slightly lower accuracy, with occasional fluctuations during certain training phases.

Figure 2.

Comparison of federated learning accuracy across five models.

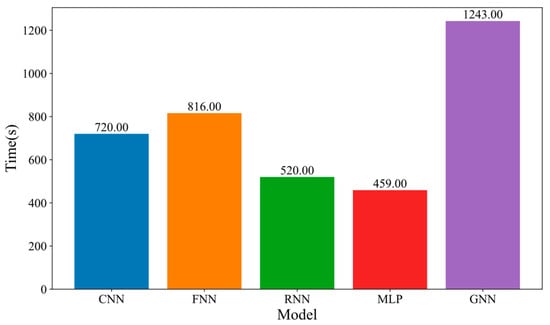

Figure 3 presents the time consumption of different models over 40 training rounds, revealing significant disparities in computational cost among the five models. The Graph Neural Network (GNN) consumes the most time at 1243 s, reflecting its high computational complexity. In contrast, the Multilayer Perceptron (MLP) and Recurrent Neural Network (RNN) models consume less time, at 459 s and 520 s, respectively, demonstrating higher computational efficiency. The convolutional neural network (CNN) and Fully Connected Network (FNN) models consume 720 s and 816 s, respectively, indicating that CNN achieves a balance between modeling capacity and reasonable training cost.

Figure 3.

Comparison of time consumption in federated learning across five models.

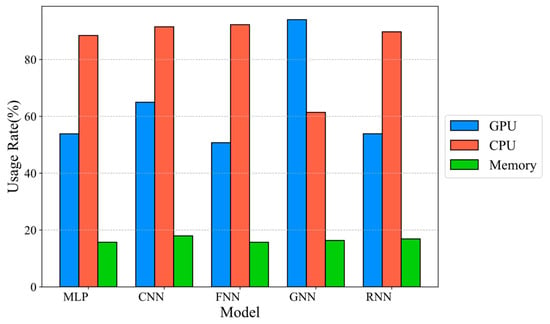

Figure 4 compares the utilization rates of GPU, CPU, and memory resources across models. The GNN model shows approximately 80% utilization of both GPU and CPU, indicating a high dependency on computational resources, although its memory usage remains relatively low. The CNN model demonstrates balanced resource utilization, maintaining good performance without excessive reliance on specific hardware. In contrast, while the MLP and RNN models show lower resource usage, their performance is somewhat limited.

Figure 4.

Comparison of CPU and GPU utilization in federated learning.

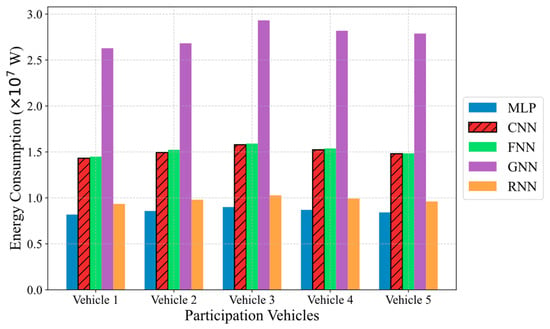

Figure 5 shows the energy consumption of participating vehicles under different models. The GNN model exhibits the highest energy consumption, approaching 3.0×10⁷ watts. The CNN and RNN models, by comparison, demonstrate relatively lower energy consumption, indicating good energy efficiency—particularly advantageous for energy-constrained vehicular computing environments.

Figure 5.

Comparison of energy consumption in federated learning.

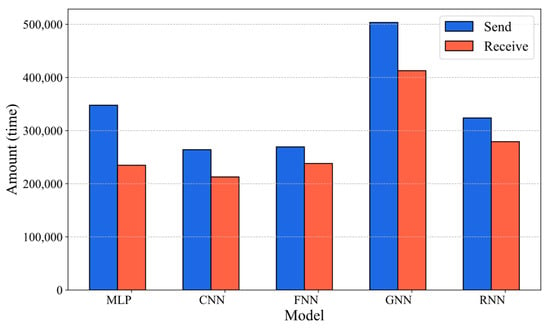

Figure 6 compares the communication overhead of different models during the federated learning process. The GNN model has the highest number of transmissions and receptions, approximately 40,000 and 35,000, respectively, indicating a significant communication burden. The CNN and MLP models incur relatively lower communication overhead, which helps reduce bandwidth consumption and latency during the federated learning process, thereby improving overall communication efficiency.

Figure 6.

Comparison of network throughput in federated learning across five models.

Taken together, these results highlight the performance differences among the models: GNNs demonstrate superior accuracy but incur the highest computational time, whereas RNNs require relatively little computational time but display inferior accuracy in the initial rounds. Selecting a model for a specific application scenario therefore involves a trade-off between time efficiency and accuracy.

In the experiments, this study employs CNNs as the primary model based on several factors:

1. Superior Convergence and Accuracy: Experimental results (Table 3) demonstrate that CNN exhibits excellent convergence and stable accuracy during training. Under various learning rates and batch sizes, the model generally achieves or approaches 80% accuracy. Notably, when the learning rate is set to 0.001 and the batch size to 64, the model performs best, with a loss of 0.483 and an accuracy of 0.790. The precision, recall, and F1-score reach 0.772, 0.793, and 0.783, respectively, showing minimal fluctuation and stable performance. These results indicate that CNN possesses strong adaptability and reliability in handling complex behavior detection tasks.

Table 3.

CNN parameter comparison.

2. Balanced Computational Efficiency and Model Complexity: Compared to more complex models such as GNNs, CNN offers a better trade-off between computational efficiency and model accuracy. In terms of training time, Figure 3 reports 720 s for CNN against 816 s for FNN, so CNN is roughly 12% faster while also significantly outperforming FNN in accuracy. Moreover, CNN requires 20–30% fewer computational resources than GNNs while still producing high-quality outputs. Therefore, CNN is well suited to resource-constrained scenarios, achieving a good balance between computational cost and prediction accuracy.

In conclusion, CNNs are the preferred model for the federated learning experiments in this study due to their superior feature extraction capability, stable accuracy performance, moderate computational resource requirements, and extensive application experience.

This investigation conducted an experiment on CNN-based federated learning. The study employed the weighted average federated learning method based on CNNs for the behavior detection problem in the IoV scenario. The CNN model was distributed across multiple vehicle nodes and RSUs and trained locally on each node; the model updates from all nodes were then aggregated on the RSUs to form a global model. The experiment comprised 40 training rounds (epochs), and the model’s convergence and performance were evaluated by monitoring the loss value and accuracy of each node in each round.

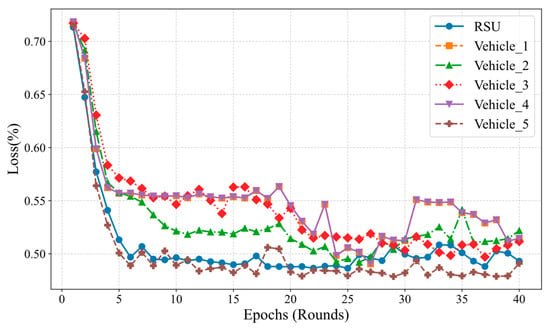

Figure 7 illustrates the variation in loss values (Loss) of the CNN model at the roadside unit (RSU) and multiple vehicle nodes across different training rounds (Epochs) during the federated learning process. It is evident that the loss values of all nodes decrease rapidly in the initial stages of training, indicating that at the beginning of federated learning, each node can quickly reduce errors through local training and global model aggregation. In particular, the loss value of the RSU node significantly decreases and stabilizes within the first five rounds, suggesting that the global model is effectively optimized. As the number of training rounds increases, the loss values of vehicle nodes gradually converge. Experimental results show that by rounds 25 to 30, the loss values of most vehicle nodes have reached their minimum and stabilized, indicating that the global model has largely converged at this point. In the later stages, some vehicle nodes exhibit slight fluctuations in loss values, possibly due to discrepancies between their local data distributions and the global model—a common phenomenon in federated learning. Nevertheless, the overall trend demonstrates that, through federated learning, the models at each node can effectively reduce errors and achieve stability.

Figure 7.

Iteration of loss value changes in federated learning with CNN model.

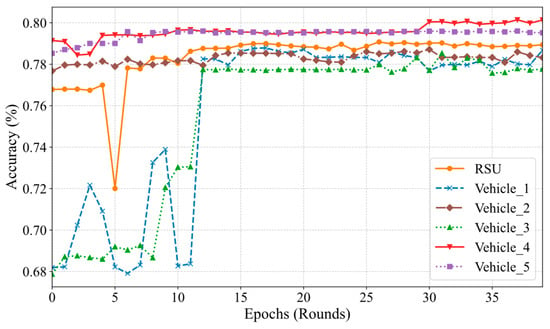

Figure 8 shows the accuracy of the CNN model on the RSU and on each vehicle node across training rounds during the federated learning process. The accuracy of most nodes increases rapidly over the first few rounds and then stabilizes. The accuracy of the RSU node rises quickly in the early stages of training and stabilizes at approximately 0.76 after the 5th round, indicating the effectiveness of the global model in federated learning. For the majority of vehicle nodes, the accuracy also improves gradually and, following initial fluctuations, settles at a high level (approximately 0.78 to 0.79). These results indicate that, despite potential heterogeneity in data distribution among vehicle nodes, the federated learning framework enables the nodes to share learning outcomes and progressively enhance the performance of their local models.

Figure 8.

Iteration of accuracy changes in federated learning with CNN model.

The accuracy of the vehicle nodes and exhibits significant variability during the initial stage; however, after several iterations of federated learning, the accuracy demonstrates substantial improvement and gradually converges with the performance of other nodes. This observation suggests that within the federated learning process, even if individual node models initially exhibit suboptimal performance, the continuous updating and aggregation of the global model enables these nodes to benefit from the federated learning approach, ultimately enhancing the predictive accuracy of their respective models.

The analysis of these two graphs demonstrates that federated learning can effectively share learning outcomes among multiple heterogeneous nodes. Despite different nodes having diverse data distributions and initial performances, through multiple rounds of training in federated learning, the model performances of each node (both in terms of the loss value and the accuracy) tend to stabilize and achieve a high level. This validates the efficacy of federated learning for distributed model training in IoV, particularly when addressing heterogeneous and unbalanced data.

5.3. Experimental Evaluation of Reputation-Based Reward Mechanism Algorithms

- Storage amount evaluation

To evaluate the effectiveness of the reward and punishment mechanism in the IoV scenario, the experiment simulates 10 vehicle nodes and 1 RSU, each holding local data. The model is trained using the IoT-23 dataset within a federated learning framework, employing weighted averaging for aggregation. A dynamic reward and punishment mechanism is introduced, adjusting each node’s model weight and participation rate based on training accuracy and update frequency. High-contributing nodes are rewarded, while low-performing nodes are penalized, thereby encouraging active participation.

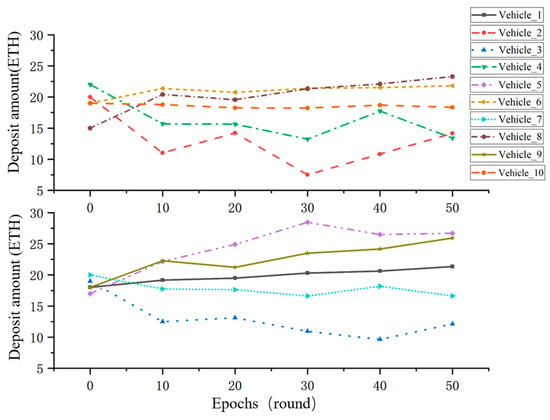

Figure 9 illustrates the changes in deposit values of vehicle nodes V1 to V10 over the first 50 rounds of federated learning. The figure shows that, as learning progresses, the deposit values of different vehicles follow distinct trends:

Figure 9.

Deposit value across learning rounds in federated learning.

and : These vehicles maintain stable deposit values throughout the learning process, indicating consistent data quality and contribution, which helps them sustain steady deposit levels.

and : These vehicles exhibit overall low and highly fluctuating deposit values, suggesting instability in their data quality and contribution, leading to significant variations in deposit levels across rounds.

and : These vehicles show a significant increase in deposit values during the learning process, indicating growing data quality and contribution, enabling them to maintain high deposit levels.

: This vehicle experiences a decline in deposit value in the early stages, followed by gradual stabilization. This suggests that it was initially penalized due to poor data quality or insufficient contribution but later improved its performance and regained deposit value through enhanced contribution.

Experimental results show that, due to differences in contribution and data quality, vehicle nodes exhibit varying trends in deposit value changes. High-performing nodes maintain or increase their deposit values, while low-performing nodes experience fluctuations or declines. These findings indicate that the reward and punishment mechanism effectively differentiates node contributions, dynamically adjusts deposit values, and incentivizes participation, thereby enhancing the fairness and efficiency of the federated learning process.

- Comparative evaluation of trusted nodes

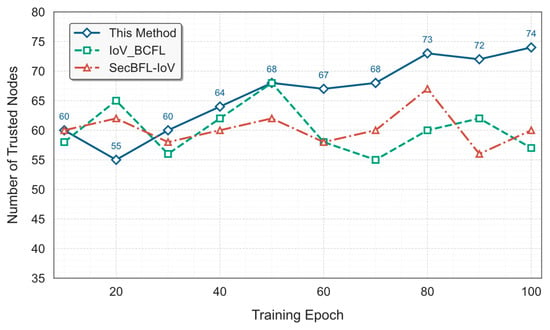

To evaluate the effectiveness of the prepayment-based incentive and penalty mechanism in enhancing system stability and model accuracy, we conducted comparative experiments between the proposed method and two existing approaches: IoV-BCFL and SecBFL-IoV. The experiment simulated a federated learning environment with 100 vehicular nodes, among which 75 nodes were designated as trusted nodes. In each training round, the system randomly selected 80 nodes to participate in the federated learning task.

To incorporate the incentive mechanism, each node was initialized with a storage balance of 20 ETH. A participation threshold was set such that nodes with a balance below 10 ETH were disqualified from participating in subsequent training rounds. To assess the stability and effectiveness of the different node selection strategies, we recorded the number of trusted nodes selected in each round and used it as the key metric of system reliability and model robustness.
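The balance threshold can be illustrated with a small simulation. The initial balance of 20 and the threshold of 10 come from the setup above; the per-round reward and penalty amounts, the boolean contribution flag, and the function names are hypothetical choices made only to show how a poorly behaving node drops below the threshold.

```python
INITIAL_BALANCE = 20.0   # starting storage balance per node (from the setup)
MIN_BALANCE = 10.0       # nodes below this balance are disqualified


def eligible_nodes(balances, threshold=MIN_BALANCE):
    """Nodes whose balance still meets the participation threshold."""
    return [node for node, balance in balances.items() if balance >= threshold]


def settle_round(balances, contributed_well, reward=1.0, penalty=4.0):
    """Reward good contributors and penalize poor ones among eligible nodes.

    reward and penalty are hypothetical amounts, not values from the paper.
    """
    updated = dict(balances)
    for node in eligible_nodes(balances):
        updated[node] += reward if contributed_well[node] else -penalty
    return updated


balances = {"trusted": INITIAL_BALANCE, "unreliable": INITIAL_BALANCE}
behaviour = {"trusted": True, "unreliable": False}
for _ in range(3):
    balances = settle_round(balances, behaviour)
print(balances, eligible_nodes(balances))
```

Because disqualified nodes are skipped in later rounds, their balances stop changing once they fall below the threshold.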

As shown in Figure 10, the IoV-BCFL and SecBFL-IoV methods, which lack a prepayment incentive mechanism, failed to dynamically optimize node selection, resulting in the number of trusted nodes fluctuating around 60. In contrast, our proposed method utilizes the prepayment mechanism to effectively encourage sustained participation from high-reputation nodes. As a result, the number of trusted nodes steadily increased over the training rounds. After round 60, the proposed method significantly outperformed the baselines, and by round 100, the number of trusted participating nodes reached 74—nearly all trusted nodes—demonstrating the strong effectiveness of the proposed mechanism in promoting reliable participation and enhancing system stability.

Figure 10.

Trusted node comparison.

To further validate the difference among the three methods in terms of trusted node selection, a one-way ANOVA test was conducted. The test yielded an F-statistic of 5.32 and a p-value of 0.0113, indicating a statistically significant difference (p < 0.05) among the proposed method, IoV-BCFL, and SecBFL-IoV. Because ANOVA establishes only that the group means differ, the direction of the effect is taken from Figure 10, where the proposed approach maintains the highest number of trusted nodes in the later rounds of the federated learning process.
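For readers who want to reproduce this kind of test, the F-statistic of a one-way ANOVA can be computed as in the sketch below. The three sample groups are made-up numbers used only to exercise the function; they are not the experimental data, and the function name is hypothetical.

```python
def one_way_anova_f(groups):
    """F-statistic of a one-way ANOVA over a list of sample groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group variability: how far each group mean is from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group variability: spread of samples around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Made-up trusted-node counts for three methods (illustration only).
samples = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
print(one_way_anova_f(samples))
```

The p-value follows from the F distribution with (k - 1, n - k) degrees of freedom; in practice a statistics package would be used to obtain both values at once.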

5.4. Summary of Experiments

Through the analysis of multiple experimental results, this study systematically evaluates the performance of different models and participating nodes within the federated learning framework. Particular attention is given to the effectiveness of the proposed approach and the management of communication overhead under the influence of the integrated reward and punishment mechanisms. The following sections detail the key experimental findings and provide comprehensive summaries of the evaluation results obtained.

- Trade-offs in model selection

In the comparative experiments involving different model architectures (Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6), significant variations were observed in terms of model accuracy, training time, and communication overhead. Among the evaluated models, the CNN was selected as the primary focus of this study due to its well-balanced trade-off between feature extraction capability and computational efficiency. Although GNNs exhibited superior accuracy performance, their substantial communication overhead renders them less suitable for deployment in resource-constrained environments. In contrast, while models such as RNNs impose relatively lower computational demands, they fall short in terms of accuracy and communication efficiency when compared to CNNs and other alternatives.

- Management of communication overhead in federated learning

In the experiments evaluating communication overhead, the results reveal that the number of transmissions and receptions differs significantly across model types, reflecting the inherent complexity of the model architectures and the frequency of parameter synchronization required during federated learning. Although GNNs achieve the highest accuracy, their intricate graph structures lead to the greatest communication burden. By contrast, models such as MLPs and CNNs offer a more favorable balance between communication efficiency and predictive performance. These findings underscore the importance of considering the trade-off between model complexity and communication cost when selecting models for federated learning, particularly in scenarios constrained by bandwidth or energy limitations.

In addition to the quantitative comparisons presented in Figure 4, Figure 5 and Figure 6—which illustrate GPU, CPU, memory utilization, energy consumption, and communication overhead—it is essential to further examine the intrinsic trade-off between model performance and system communication costs. The incentive mechanism proposed in this paper, which is grounded in a prepaid reward and punishment strategy, prioritizes the participation of high-reputation nodes in the federated learning process. This strategy not only improves the accuracy and stability of local model updates but also effectively reduces communication overhead by preventing redundant or low-quality parameter uploads. As demonstrated in Figure 10, low-quality nodes with unstable training performance are filtered out in the early stages, thereby significantly decreasing unnecessary communication during training rounds. This process conserves valuable communication resources and contributes to an overall reduction in system energy consumption.

- Effectiveness of the reward mechanism

The experimental results on the reward mechanism in federated learning (Figure 7, Figure 8 and Figure 9) demonstrate its effectiveness in enhancing the accuracy of the global model, particularly under conditions of non-uniform data distribution and significant disparities in node contributions. By dynamically adjusting the nodes’ contribution levels and participation frequencies, the reward mechanism fosters both fairness and efficiency within the federated learning process. Specifically, nodes exhibiting consistently high contributions were assigned greater participation weights over successive training rounds, thereby positively influencing the convergence and performance of the global model. Conversely, nodes with initially lower contributions gradually increased their deposit values and participation frequencies through adaptive strategies encouraged by the mechanism. These findings indicate that the reward mechanism plays a vital role in incentivizing active participation, balancing resource utilization, and promoting sustained engagement of nodes in federated learning environments.

- Dynamic Adjustment of Node Performance and Contribution

The experimental results (Figure 9) reveal that node deposit values fluctuate as the number of federated learning rounds increases. Nodes demonstrating superior performance tend to maintain or increase their deposit values, whereas those with suboptimal performance may experience reductions due to insufficient contributions. This dynamic adjustment mechanism enables the system to evaluate and appropriately reward or penalize individual nodes in real-time throughout the training process, thereby enhancing the convergence efficiency and overall performance of the global model. Moreover, this mechanism provides a practical solution to address challenges posed by data heterogeneity and unbalanced data distributions, allowing federated learning to better accommodate the complexity and variability characteristic of real-world application scenarios.

Collectively, the aforementioned experimental results validate the feasibility and effectiveness of the proposed blockchain-driven federated learning framework for anomaly detection in connected vehicle environments. The framework demonstrates notable advantages in handling heterogeneous data, managing communication overhead, and dynamically regulating node contributions. Furthermore, the integration of reward and punishment mechanisms significantly improves the fairness and efficiency of federated learning, thereby enhancing its adaptability and robustness in distributed computing contexts.

6. Summary and Scope

This study advances the application of federated learning in behavior detection for the Internet of Vehicles by introducing several innovative mechanisms. First, a multi-level federated learning approach based on a vehicle selection algorithm is proposed, enabling distributed CAVs to dynamically participate in local model training. The optimal global model is aggregated at RSUs using a weighted averaging algorithm and subsequently recorded on the blockchain, thereby enhancing system transparency and ensuring network security. Second, a reputation evaluation mechanism is developed to assess the trustworthiness and contributions of CAV nodes. This mechanism incentivizes high-performing nodes while penalizing or excluding underperforming ones, thereby promoting fairness and encouraging active participation across the system. Third, a blockchain-based prepaid reward mechanism is introduced, requiring CAVs to deposit a fee prior to training and receive rewards after the global model is successfully generated. By integrating the prepaid reward and punishment mechanism for node selection with a dynamic anomaly detection method for federated training, the system ensures that the most reliable and suitable nodes are selected for participation. As a result, the trust level among participating nodes reaches up to 99%, significantly enhancing the overall security and stability of the IoV environment.
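As a reminder of what weighted averaging at an RSU involves, the sketch below combines local parameter vectors into a single global vector. It is a generic illustration under stated assumptions: models are flat lists of floats, and the weights could be sample counts or reputation scores (which quantity the framework uses is not assumed here). The function name `weighted_average` and the example values are hypothetical.

```python
def weighted_average(local_models, weights):
    """Combine local parameter vectors into one global vector.

    local_models: list of equal-length lists of floats, one per CAV.
    weights: one non-negative weight per model, for example a sample
    count or a reputation score (an assumption for illustration).
    """
    if not local_models or len(local_models) != len(weights):
        raise ValueError("need one weight per local model")
    total = float(sum(weights))
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dim = len(local_models[0])
    if any(len(model) != dim for model in local_models):
        raise ValueError("all local models must have the same length")

    global_model = [0.0] * dim
    for model, weight in zip(local_models, weights):
        share = weight / total  # this model's fraction of the total weight
        for i, value in enumerate(model):
            global_model[i] += share * value
    return global_model


# Two made-up local models; the second carries three times the weight.
rsu_model = weighted_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

In this example the aggregated vector lies closer to the second model, since it contributes three quarters of the total weight.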

Future research can further build upon the contributions of this study in several directions. First, the reputation evaluation mechanism may be refined and extended to support larger and more complex vehicular networks. Second, the dynamic anomaly detection method can be integrated with additional machine learning algorithms to improve its adaptability to a wider range of real-world scenarios. Finally, broader implementation of the blockchain-based prepayment mechanism is encouraged, with the potential to enhance resource utilization efficiency and expand its applicability to other distributed computing environments.

Overall, the findings of this study offer novel insights into the design of federated learning frameworks for the IoV and lay a solid foundation for future research. Continued refinement and expansion of these mechanisms are expected to play a pivotal role in advancing intelligent transportation systems and enabling secure, scalable, and efficient IoV applications.

Author Contributions

Conceptualization, Q.S. and L.W.; methodology, C.C.; software, L.W.; validation, Q.S., L.W. and C.C.; formal analysis, Y.B.; investigation, Q.S.; resources, Q.S.; data curation, L.W.; writing—original draft preparation, L.W.; writing—review and editing, C.C.; visualization, C.C.; supervision, Q.S.; project administration, Q.S.; funding acquisition, Q.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62476145, in part by the Postgraduate Research & Practice Innovation Program of Jiangsu Province under Grant SJCX24_2013, and in part by the Social Welfare Science Project of Nantong under Grant MS2024027.

Data Availability Statement

The data that support the findings of this study are publicly available in the IoT-23 dataset, which can be accessed at https://www.stratosphereips.org/datasets-iot23 (accessed on 19 July 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).