Abstract

Traditional convolutional neural networks struggle with multi-scale targets in remote sensing object detection because of their fixed receptive fields and simple feature fusion strategies, which limits detection accuracy. This study proposes an adaptive feature extraction object detection network (AFEDet), whose design is more flexible than previous models and whose components complement one another. First, parallel dilated convolutions expand the receptive field to capture multi-scale features, and a channel attention gating mechanism then refines these features by weighting each channel according to its importance, improving feature quality and representation ability. Second, the multi-scale enhanced feature pyramid network (MeFPN) constructs a structurally symmetric bidirectional transmission path that aligns multi-scale features in the same semantic space through linear transformation, reducing scale bias and improving representation consistency. Finally, the scale-aware loss (SAL) function dynamically adjusts loss weights according to target scale, guiding the network to learn features of targets at different scales evenly during training. The architecture integrates symmetry principles through its bidirectional feature fusion paradigm and equilibrium-seeking mechanism: the symmetric structure of MeFPN balances information flow between shallow and deep features, while SAL applies a symmetry-inspired loss-weighting strategy to maintain optimization consistency across scales. Experimental results on the DOTA dataset show that the proposed method improves mAP by 7.12 percentage points over the baseline model.

1. Introduction

The main task of remote sensing object detection is to accurately identify the categories and locations of objects in remote sensing images [1]. It has a wide range of applications, including but not limited to military applications and mineral exploration [2]. With the development of deep learning, convolutional neural networks (CNNs) have come to play a vital role in remote sensing image analysis because of their powerful feature-learning capabilities. CNNs automatically extract hierarchical image features, enabling objects to be recognized and localized against complex backgrounds.

However, conventional object detection approaches encounter numerous challenges when dealing with remote sensing images [3]. As shown in Figure 1, the background of these images is extremely complex, with various terrain elements intertwined, which increases the difficulty of object recognition. Moreover, the scale of objects varies significantly, making it difficult for traditional methods to handle multi-scale objects uniformly and efficiently. Additionally, objects of the same category exhibit significant differences in appearance due to factors such as shooting angles, lighting, and the environment, which pose difficulties for accurate classification.

Research on multi-scale feature representation follows two main approaches [4]. The first uses convolution kernels with variable receptive fields, such as dilated convolutions [5] and adaptive pooling [6]. These methods have made progress in capturing multi-scale features, but when object scales are complex and highly variable they still cannot cover all the required scales, so feature extraction is incomplete and detection accuracy suffers. The second combines hierarchical backbone networks with feature pyramid networks (FPNs), which achieve multi-scale representation by integrating features from different levels [7,8]. In practice, because the backbone and the FPN differ structurally, information loss or misalignment is likely to occur during feature fusion [9]. Moreover, the limited multi-scale feature extraction ability of the backbone makes it difficult to handle objects with extreme scale variations [1]. Adding more FPN levels can mitigate some of these problems, but it increases model complexity and computational cost.

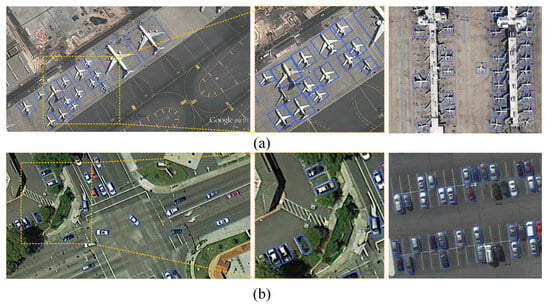

Figure 1.

(a) FPN combines high-level and low-level information through a top-down path and lateral connections. (b) PANet [10] adds a bottom-up path for bidirectional information flow. (c) BiFPN [11] uses weighted feature fusion to strengthen the network structure.

To address the limitations of existing object detection techniques in complex scenes with multi-scale targets, this paper proposes an adaptive feature-enhanced detection network (AFEDet), which incorporates dynamic scale selection and bidirectional feature fusion. As shown in Figure 2, the network consists of three main components: adaptive feature extraction (AFE), the multi-scale enhanced feature pyramid network (MeFPN), and the scale-aware loss (SAL). AFE extracts key features, MeFPN enhances multi-scale fusion, and SAL dynamically adjusts loss weights to optimize training. The main contributions of this work are as follows:

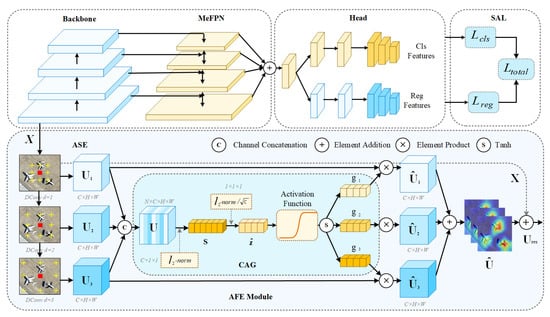

Figure 2.

This figure illustrates the overall architecture of AFEDet, which comprises AFE, MeFPN, and SAL. AFE consists of two sub-modules, ASE and CAG. ASE extracts features using parallel dilated convolutions. CAG compresses each of these features with the ℓ2-norm to obtain a channel descriptor, and a tanh activation function then generates the gating vector, which is multiplied element-wise with the corresponding feature maps to optimize feature selection.

(1) Flexible Feature Extraction Method: AFE adheres to the principle of operational symmetry. It first employs a sequence of parallel dilated convolutions, expanding the receptive field with minimal computational cost. Then, in the ℓ2-normalization gating module, it introduces the symmetric tanh function, which dynamically adjusts channel weights based on the input features. This mechanism suppresses interference while maintaining a balance between the different receptive field branches.

(2) Efficient Feature Fusion Strategy: MeFPN follows the principle of structural symmetry, utilizing linear scale transformation to precisely align upper- and lower-layer feature maps to the middle layer scale. This ensures spatial and semantic consistency across different scales. Unlike traditional FPN, which uses unidirectional information flow, MeFPN employs a symmetric bidirectional transmission path. This design improves fusion efficiency and reduces computational complexity.

(3) Dynamically Adjusted Loss Weights: SAL is designed based on the principle of optimization symmetry, incorporating scale-aware weighting to dynamically adjust loss weights according to target size. During training, it increases the classification and regression loss weight for small objects, enhancing feature learning and detection accuracy.

2. Related Work

2.1. Aerial Object Detection

Traditional deep learning detectors typically use horizontal bounding boxes for object localization. However, in remote sensing imagery, object rotation, dense distribution, and significant aspect ratio variations make it difficult for convolutional networks to extract rotation-invariant features and achieve precise localization [12]. Current research mainly focuses on optimizing rotated bounding boxes and regions and extracting rotation-sensitive features.

Rotated bounding box encoding forms the foundation of rotation-based detection. Early conventional object detection methods, which used horizontal and vertical rectangular boxes, failed to effectively localize rotated objects. The OpenCV representation [13] and long-side representation [14] improved localization accuracy by integrating rotation angles and aspect ratios, laying the groundwork for subsequent research. On this basis, rotated anchor box design has evolved significantly. RRPN [15] was the first to introduce rotated candidate boxes into the RPN [16] architecture, but it suffered from angle discretization limitations. To address this, CSL [17] and DCL [18] reformulated the angle prediction problem as a classification task, while VGL (variance Gaussian labeling) created discrete Gaussian angle labels, successfully overcoming the continuity and periodicity issues associated with regression-based angle prediction. Further improvements include [14], which introduced multi-directional anchors for specific targets, and [19], which optimized anchor box design through a feature refinement module and rotation-equivariant networks, significantly improving recall and accuracy. RoI Transformer [20] employed spatial transformation and the RPS-RoI-Align module, enhancing both detection speed and the performance of object classification and boundary regression. AO-RCNN and [21] introduced unique network structures and strategies, achieving progress in complex scene detection and small object detection.

2.2. Multi-Scale Object Detection

Multi-scale object detection is a significant challenge in remote sensing image analysis [22]. Traditional FPNs [23] improve detection performance by fusing features from different hierarchical levels [24], yet they still exhibit limitations in complex scenes.

To enhance small-object detection, [21] proposed a scale-invariant anchor strategy to accurately locate small objects while utilizing position-sensitive RoI pooling for precise feature extraction. Additionally, DFPN [25] and EFPN [26] introduced improvements in feature extraction enhancement and feature fusion path optimization, respectively. However, these methods fail to achieve high accuracy in large-object detection. The primary reason for this limitation is that the top-down upsampling operation in the FPN can lead to information loss, which in turn causes feature misalignment issues. Info-FPN [27] attempted to enhance feature fusion accuracy by incorporating template matching and feature offset learning, but it still requires further optimization in complex scenes.

To improve large-object detection, attention mechanisms have been introduced. [9] utilizes attention mechanisms to focus on key features of large targets, while MSRO-Net [28] adopts a perceptual pyramid feature aggregation module to balance local and global feature extraction, thereby enhancing the representation capability for objects of varying scales. However, these approaches increase computational complexity and require more training data. Despite these improvements, they still do not fully address the limitations of FPN in handling scale diversity.

In contrast, the proposed MeFPN adopts an improved feature fusion strategy. By integrating a bidirectional fusion module (BFM) with linear scale transformation, MeFPN accurately aligns features across different levels while keeping the model lightweight, significantly improving detection accuracy.

2.3. Attention Mechanisms

The attention mechanism mimics the selective attention of human vision, enabling the model to focus on key features and suppress irrelevant information, thereby improving detection accuracy and efficiency. Spatial attention mechanisms [29] such as the large selective kernel network (LSKNet) [30] adjust the receptive field through spatial selection strategies, enhancing the distinction between objects and the background. For channel attention, integrating SENet [31] into the Faster R-CNN framework [32] strengthens the extraction of key information by redistributing channel weights. However, most existing methods [33] build on traditional architectures, which makes it difficult to deeply integrate multi-scale feature representation with scale-adaptive adjustment. In complex multi-scale scenarios, these methods perform poorly: they cannot adapt to scale changes, tend to lose details when dealing with small targets, and have difficulty capturing global features of large targets.

Unlike traditional methods, our proposed AFE combines the channel attention mechanism with a parallel dilated convolution sequence for the first time. It broadens the receptive field without increasing the overall number of model parameters. AFE dynamically adjusts the dilation rate of each branch and concatenates the outputs along the channel dimension, enabling efficient multi-scale feature extraction and improving adaptability to varying target scales.

To optimize channel attention, we introduce the normalized channel attention gating (CAG) module. The traditional SENet [31] discriminates poorly between samples when its inputs are normalized and is sensitive to noise and outliers, which affects model stability. In contrast, CAG measures each channel with the ℓ2-norm, which is more robust than average pooling or max pooling: it better resists noise and outliers, preserves more information about the feature vector, and is rotationally invariant. A parameter-free normalization and the tanh activation function then adaptively adjust the channel scale while maintaining numerical stability during training. Because the gate can adjust feature contributions in both directions, the module adapts better to different feature distributions.

3. Methodology

3.1. Adaptive Feature Extraction Module

Traditional object detection methods use fixed-sized convolution kernels and a single network structure. When dealing with objects of different scales, it is difficult for them to adapt to scale changes and they cannot effectively extract comprehensive features.

Parallel Dilated Convolution Sequence: AFE adopts a parallel dilated convolution sequence. This design expands the receptive field without adding parameters, which benefits tasks such as image recognition that depend on the spatial relationships in the data. The pseudocode of this module is shown in Table 1. Let the input feature map be $X$; the output of the $i$-th branch can be formulated as

$$Y_i(p) = \sum_{q} K_i(q)\, X(p + d_i\, q), \qquad i = 1, \dots, n,$$

where $d_i$ is the dilation rate, which determines the interval between the pixels sampled by the convolution kernel, and $K_i$ is the kernel function that defines the convolution operation.

Table 1.

AFE algorithm.

The receptive fields of different branches can be expressed as

$$RF_i = RF_{i-1} + (k - 1)\, d_i,$$

where $k$ denotes the kernel size, $RF_{i-1}$ is the receptive field of the preceding layer, and $d_i$ is the dilation rate of the current layer. The dilation rates of the layers can be configured according to practical requirements, so the network can flexibly adjust the size of the receptive field to the feature extraction needs of objects at different scales.
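As a worked example of the receptive-field recurrence RF_i = RF_{i-1} + (k − 1)·d_i, the sketch below evaluates it for 3 × 3 kernels. The dilation rates 1, 2, and 3 are assumed for illustration only; the paper leaves them configurable.

```python
def receptive_field(kernel_size, dilations, rf_in=1):
    """Receptive field after stacking stride-1 dilated convolutions.

    Applies RF_i = RF_{i-1} + (k - 1) * d_i once per layer, starting from rf_in.
    """
    rf = rf_in
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


# Each parallel branch is a single 3x3 dilated conv on the same input
# (assumed rates 1, 2, 3); stacking the same rates compounds the growth.
branch_rfs = {d: receptive_field(3, [d]) for d in (1, 2, 3)}
stacked_rf = receptive_field(3, [1, 2, 3])
print(branch_rfs)  # {1: 3, 2: 5, 3: 7}
print(stacked_rf)  # 13
```

A single branch with rate d therefore sees a (2d + 1) × (2d + 1) window while still holding only 3 × 3 weights.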

All branch outputs are concatenated along the channel dimension:

$$F = \mathrm{Concat}(Y_1, Y_2, \dots, Y_n),$$

where $n$ is the number of branches and $\mathrm{Concat}(\cdot)$ denotes concatenation along the channel dimension. This fuses the features extracted by branches with different receptive fields into richer feature information.
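To make the parallel branch structure concrete, the following minimal sketch in plain Python (lists stand in for tensors, no deep learning framework) applies one 3 × 3 dilated convolution per branch to the same single-channel input and stacks the outputs as channels. The function names, the all-ones kernels, and the dilation rates 1, 2, and 3 are illustrative assumptions, not the authors' Table 1 implementation.

```python
def dilated_conv2d(x, kernel, d):
    """'Same'-size 2D dilated convolution (cross-correlation, as in CNNs).

    x: H x W input map; kernel: k x k weights (k odd); d: dilation rate.
    Kernel taps are sampled d pixels apart; zero padding keeps the size.
    """
    h, w, k = len(x), len(x[0]), len(kernel)
    pad = d * (k - 1) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for m in range(k):
                for n in range(k):
                    r, c = i - pad + m * d, j - pad + n * d
                    if 0 <= r < h and 0 <= c < w:
                        acc += kernel[m][n] * x[r][c]
            out[i][j] = acc
    return out


def parallel_dilated_branches(x, kernels, dilations):
    """Apply one dilated conv per branch to the same input, then concatenate
    the branch outputs along the channel axis (a list of 2D maps here)."""
    return [dilated_conv2d(x, kern, d) for kern, d in zip(kernels, dilations)]


# A single impulse shows where each branch samples the input.
x = [[0.0] * 7 for _ in range(7)]
x[3][3] = 1.0
ones = [[1.0] * 3 for _ in range(3)]
features = parallel_dilated_branches(x, [ones, ones, ones], [1, 2, 3])
print(len(features))  # 3 channels after concatenation
print([sum(v > 0 for row in f for v in row) for f in features])  # [9, 9, 9]
```

Each branch responds at nine positions spaced d pixels apart, so its spatial extent grows with d while its weight count stays at 3 × 3.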

Channel Attention Gating Module: The selective kernel network (SKNet) [33] uses global average pooling (GAP) and fully connected layers to model inter-channel relationships and assign channel importance. However, for normalized inputs, its output lacks discrimination among different samples. Moreover, global average pooling is not robust to noise and outliers: they are averaged into the final result, affecting the model's stability. To resolve these issues, this paper employs the normalized CAG. For the features of each branch, the ℓ2-norm [34] is calculated along the channel dimension. Unlike average pooling and max pooling, which output the average or maximum value within the pooling window, the ℓ2-norm, computed as the square root of the sum of squared activations, preserves richer feature information, enabling more detailed features to be extracted. For channel $c$ of feature map $x$, it is computed as

$$s_c = \alpha_c \left( \sum_{i=1}^{H} \sum_{j=1}^{W} \left( x_c^{i,j} \right)^2 + \epsilon \right)^{1/2},$$

where $\alpha_c$ is a learnable weight that adjusts the importance of each channel's feature, and $\epsilon$ is a small constant that prevents division-by-zero errors during computation.

The channel descriptors are then processed by a parameter-free normalization based on ℓ2-norm scaling:

$$\hat{s}_c = \frac{\sqrt{C}\, s_c}{\lVert s \rVert_2} = \frac{\sqrt{C}\, s_c}{\left( \sum_{c'=1}^{C} s_{c'}^2 + \epsilon \right)^{1/2}}.$$

The scalar $\sqrt{C}$ adjusts the scale of $\hat{s}_c$, preventing its values from becoming too small when the number of channels $C$ is large and keeping features of different channels comparable in subsequent calculations. The tanh activation function then dynamically adjusts the contribution of each channel:

$$g_c = \tanh\left( \gamma_c \hat{s}_c + \beta_c \right), \qquad \hat{x}_c = g_c \cdot x_c,$$

where $\hat{s}_c$ is the normalized scale vector, and $\gamma_c$ and $\beta_c$ denote the gating weight and bias, respectively, which adaptively regulate the scale of each channel in the feature map. The output of the tanh function lies within $(-1, 1)$. This preserves numerical stability throughout training and permits feature contributions to be adjusted in both positive and negative directions, so the model can better adapt to diverse feature distributions.
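The three CAG steps (a per-channel ℓ2 embedding scaled by a learnable weight, √C normalization, and a tanh gate) can be sketched in plain Python as follows. This is a hedged illustration: the function name and the constant eps = 1e-5 are assumptions, and the gate is applied as a plain element-wise product, following the description of Figure 2. A closely related formulation, Gated Channel Transformation (GCT), instead scales features by 1 + tanh(·) so that a zero-initialized gate starts as the identity; which variant the authors use cannot be confirmed from this text.

```python
import math


def channel_attention_gate(feats, alpha, gamma, beta, eps=1e-5):
    """Gate C feature channels with an l2-norm-based tanh gate (illustrative sketch).

    feats: list of C channels, each an H x W list of lists of floats.
    alpha, gamma, beta: per-channel parameters of length C (learnable in a real model).
    Returns (gated_feats, gate).
    """
    num_ch = len(feats)
    # 1) Per-channel embedding: s_c = alpha_c * sqrt(sum of squares + eps).
    s = [alpha[c] * math.sqrt(sum(v * v for row in feats[c] for v in row) + eps)
         for c in range(num_ch)]
    # 2) Parameter-free normalization: s_hat_c = sqrt(C) * s_c / ||s||_2.
    norm = math.sqrt(sum(v * v for v in s) + eps)
    s_hat = [math.sqrt(num_ch) * v / norm for v in s]
    # 3) Gate in (-1, 1), multiplied element-wise with each channel's map.
    gate = [math.tanh(gamma[c] * s_hat[c] + beta[c]) for c in range(num_ch)]
    gated = [[[gate[c] * v for v in row] for row in feats[c]] for c in range(num_ch)]
    return gated, gate


feats = [[[1.0, 2.0], [3.0, 4.0]] for _ in range(4)]  # four identical channels
_, gate = channel_attention_gate(feats, [1.0] * 4, [1.0] * 4, [0.0] * 4)
print([round(g, 4) for g in gate])  # [0.7616, 0.7616, 0.7616, 0.7616]
```

With identical channels every normalized descriptor is about 1, so each gate is about tanh(1); the √C factor is what keeps this value independent of the channel count.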

3.2. Multi-Scale Enhanced Feature Pyramid Network

Previously, many feature fusion methods integrated multi-level features with simple operations such as concatenation or direct addition, as depicted in Figure 3b. This approach ignores the differences in scale and semantics among features at different levels, which readily causes information loss or semantic ambiguity in the fused features and makes it hard to fully exploit the strengths of each level during object detection.

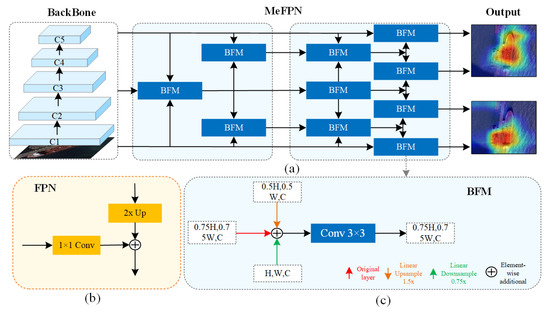

Figure 3.

(a) Overall structure of MeFPN. (b) Unlike traditional FPNs, which use convolutions for unidirectional fusion, MeFPN employs the bidirectional fusion module (BFM) for more effective feature integration. (c) Details of the BFM.

To effectively utilize multi-level features, MeFPN integrates a bidirectional fusion module (BFM) based on structural symmetry, as illustrated in Figure 3a. Within the BFM (Figure 3c), a linear scale transformation aligns features from different levels to a common intermediate scale, so that they can be fused at a unified scale and high-level semantic cues can be integrated efficiently with low-level fine-grained details. For an intermediate level $l$, the linear scale transformation is

$$F_{\uparrow} = \mathrm{Up}(F_{l+1};\, s_u), \qquad F_{\downarrow} = \mathrm{Down}(F_{l-1};\, s_d),$$

where $s_u$ and $s_d$ are the scaling factors for upsampling and downsampling, respectively. We employ bilinear interpolation to upscale the higher-level feature map $F_{l+1}$ to the scale of the intermediate level. Given the upsampling factor $s_u$, each new pixel value is computed as a weighted average of four neighboring pixels:

$$F_{\uparrow}(x, y) = \sum_{(i, j) \in \mathcal{N}(x, y)} w_{ij}\, F_{l+1}(i, j),$$

where $F_{\uparrow}$ is the upsampled feature map, $\mathcal{N}(x, y)$ is the set of four input pixels nearest to the new coordinate $(x, y)$, $w_{ij}$ is the weight coefficient determined by the relative position of the new coordinate and each neighboring pixel, and $F_{l+1}(i, j)$ is the pixel value in the higher-level feature map.
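The bilinear upsampling step can be illustrated with the plain-Python sketch below, which maps each output pixel back to a fractional input coordinate and blends the four nearest input pixels. It uses the half-pixel-centre convention of PyTorch's `torch.nn.functional.interpolate(..., mode='bilinear', align_corners=False)`; whether MeFPN uses that convention or `align_corners=True` is not stated, so treat this choice, the function name, and the factor of 2 in the example as assumptions.

```python
import math


def bilinear_resize(x, out_h, out_w):
    """Resize an H x W map (list of lists) with bilinear interpolation.

    Uses half-pixel centres (align_corners=False style) and clamps source
    coordinates at the border; each output is a weighted average of the four
    nearest input pixels, with weights set by the fractional offsets.
    """
    in_h, in_w = len(x), len(x[0])

    def source_index(dst, in_size, out_size):
        # Map an output index to a fractional input coordinate.
        src = max((dst + 0.5) * in_size / out_size - 0.5, 0.0)
        i0 = min(int(math.floor(src)), in_size - 1)
        i1 = min(i0 + 1, in_size - 1)
        return i0, i1, src - i0

    out = []
    for i in range(out_h):
        y0, y1, wy = source_index(i, in_h, out_h)
        row = []
        for j in range(out_w):
            x0, x1, wx = source_index(j, in_w, out_w)
            top = (1 - wx) * x[y0][x0] + wx * x[y0][x1]
            bottom = (1 - wx) * x[y1][x0] + wx * x[y1][x1]
            row.append((1 - wy) * top + wy * bottom)
        out.append(row)
    return out


# Upsampling by 2 along the width (the factor 2 is an assumed example).
print(bilinear_resize([[0.0, 1.0]], 1, 4))  # [[0.0, 0.25, 0.75, 1.0]]
```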

We employ convolution-based downsampling with a fixed stride to adjust the lower-level feature map: a $k \times k$ convolution kernel slides over the lower-level feature map $F_{l-1}$ with downsampling factor $s_d$, following

$$F_{\downarrow}(x, y) = \sum_{m=0}^{k-1} \sum_{n=0}^{k-1} K(m, n)\, F_{l-1}(s x + m,\; s y + n),$$

where $F_{\downarrow}$ is the downsampled feature map, $K(m, n)$ is the convolution kernel value at position $(m, n)$, $F_{l-1}(\cdot)$ denotes the corresponding pixel value in the original lower-level feature map, and $k$ is the kernel size. The stride $s$ is determined by the downsampling factor $s_d$.
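Strided convolution downsampling can be sketched in the same plain-Python style. The kernel size 3, stride 2, and zero padding of 1 are assumed values (a common choice for halving resolution), not settings reported in the paper, and in a real model the kernel weights would be learned.

```python
def strided_conv2d(x, kernel, stride=2, padding=1):
    """Downsample an H x W map with a k x k convolution applied every `stride` pixels.

    Zero padding of `padding` pixels is assumed on each side, giving an output
    size of floor((H + 2p - k) / s) + 1 per axis.
    """
    h, w, k = len(x), len(x[0]), len(kernel)
    out_h = (h + 2 * padding - k) // stride + 1
    out_w = (w + 2 * padding - k) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for m in range(k):
                for n in range(k):
                    # Map the kernel tap back to unpadded input coordinates.
                    r = i * stride + m - padding
                    c = j * stride + n - padding
                    if 0 <= r < h and 0 <= c < w:
                        acc += kernel[m][n] * x[r][c]
            row.append(acc)
        out.append(row)
    return out


ones4 = [[1.0] * 4 for _ in range(4)]
ones_kernel = [[1.0] * 3 for _ in range(3)]
print(strided_conv2d(ones4, ones_kernel))  # [[4.0, 6.0], [6.0, 9.0]]
```

The border outputs are smaller because part of each window falls on zero padding; only the interior window sees all nine input pixels.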

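To enhance the feature representation, the scaled features are combined element-wise with the features of the original layer:

$$F_{\text{fused}} = F_l \oplus F_{\uparrow} \oplus F_{\downarrow},$$

where $F_l$ represents the features of the original layer, $F_{\uparrow}$ and $F_{\downarrow}$ are the upsampled higher-level and downsampled lower-level features, and $\oplus$ denotes the element-wise combination. This preserves the advantages of each layer while enriching the overall feature set.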

Finally, a convolution performs further feature extraction and fusion:

$$F_{\text{out}} = K * F_{\text{fused}},$$

where $F_{\text{fused}}$ is the combined feature map, $K$ is a convolution kernel, and $*$ denotes the convolution operation. The convolution screens and integrates the fused features to extract more representative ones, boosting the model's multi-scale object detection ability.

Therefore, the BFM design of MeFPN not only maintains efficient feature fusion but also enhances feature representation and discriminative ability through precise scale alignment and a two-way fusion mechanism, capturing multi-scale objects more flexibly and accurately at lower computational complexity. Its pseudocode is shown in Table 2. Stacking multiple BFMs combines features at different levels more fully, further enhancing the feature representation ability.

Table 2.

MeFPN algorithm.

3.3. Scale-Aware Loss Function

In remote sensing images, detection difficulty varies greatly with object scale. Small objects are harder to detect because they occupy few pixels and have indistinct features [35]. Traditional loss functions treat objects of all scales alike, so the network struggles to improve small-object detection accuracy during training, which affects overall performance.

To balance detection difficulty differences, we introduce a scale-sensitive loss function that adjusts loss weights by object scale. Specifically, a scale weight is added to the classification loss function:

Here, the relative scale of an object is given by , where , , , and are object and image width-height values, respectively. Trainable parameters and are vital for regulating adjustment speed and maintaining scale-weight balance. Their values generally range for from to 10 (increased for datasets with many small objects), and is selected around the average relative scale based on target scale statistics. In actual training, random initialization and gradient descent are combined to fine tune them. In the DOTA [36] dataset experiment, with dense small objects, multiple comparative tests show the model performs well when and . The classification loss function is expressed as

Here, is a balance factor for sample balancing, adjusting sample weights in loss calculation, and is the model’s predicted probability. The focusing parameter b is usually 2, reducing the loss weight of easy-to-classify samples so the model focuses on hard-to-classify ones. For small objects, is small, increasing classification loss and making the model focus on them. For large objects, is near 1, with no obvious increase in classification loss, ensuring stable detection.
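Because the scale-weight equation itself is not reproduced in this text, the sketch below only illustrates the mechanics under explicit assumptions: the relative scale is taken as sqrt(wh / WH), and `scale_weight` is a hypothetical decreasing function that is large for small objects and approaches 1 for large ones, consistent with the behaviour described above. The focal term is the standard focal loss with balance factor `alpha_t` and focusing parameter `b`. All defaults (`alpha_s = 4.0`, `beta_s = 0.05`, `alpha_t = 0.25`) are illustrative, not the paper's values.

```python
import math


def relative_scale(w, h, img_w, img_h):
    # ASSUMPTION: relative scale = sqrt(object area / image area).
    return math.sqrt((w * h) / (img_w * img_h))


def scale_weight(s, alpha_s=4.0, beta_s=0.05):
    # HYPOTHETICAL weight, not the paper's equation: about 1 + alpha_s for tiny
    # objects, decaying toward 1 once s is well above beta_s.
    return 1.0 + alpha_s * math.exp(-s / beta_s)


def scale_weighted_focal_loss(p, is_positive, s=None, alpha_t=0.25, b=2.0):
    """Binary focal loss for one sample, scaled by the (hypothetical) scale weight.

    p: predicted probability of the positive class.
    s: relative scale of the matched GT object (None for background samples).
    b: focusing parameter; larger b down-weights easy samples more strongly.
    """
    p_t = p if is_positive else 1.0 - p
    a_t = alpha_t if is_positive else 1.0 - alpha_t
    focal = -a_t * (1.0 - p_t) ** b * math.log(max(p_t, 1e-12))
    weight = 1.0 if s is None else scale_weight(s)
    return weight * focal


small = relative_scale(16, 16, 1024, 1024)   # 0.015625
large = relative_scale(512, 512, 1024, 1024)  # 0.5
print(scale_weighted_focal_loss(0.3, True, small) > scale_weighted_focal_loss(0.3, True, large))  # True
```

With `b = 0`, `alpha_t = 1`, and no scale weight, the loss reduces to ordinary cross-entropy, which is a useful sanity check for any concrete weighting scheme.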

The intersection over union (IoU) [37] is commonly used as the basis of the regression loss. However, IoU has certain limitations. When the ground-truth (GT) box and the anchor box do not overlap, IoU is 0 regardless of how far apart the boxes are, so the loss provides no useful gradient (the vanishing gradient problem). Moreover, when the anchor box is entirely enclosed within the GT box, IoU does not change with the anchor's position inside the GT box and therefore cannot precisely assess the localization.

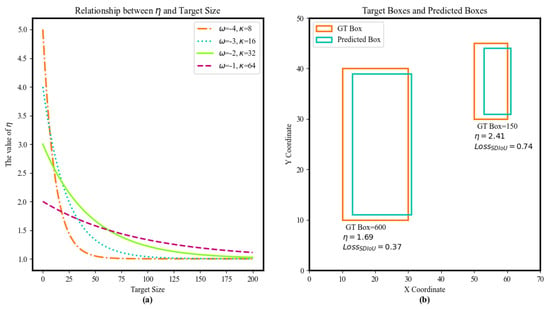

To address these issues, this paper proposes the scale-aware intersection over union (SDIoU), which balances the loss contribution of objects of different sizes through the scale weight . As shown in Figure 4, the GT box is and the anchor box is . The SDIoU is defined as follows:

Figure 4.

The loss function, which balances the difference in loss for objects of different sizes by introducing a scale weight : (a) shows the relationship between and the target size for different parameters and ; (b) shows the loss for different sizes of objects.

Here, d is the Euclidean distance between the GT box and anchor box centers, c is the diagonal of their smallest enclosing box, is the scale weight, adjusts small-object scaling, and determines large-object’s return rate to standard IoU. Multiple tests show the model gets best accuracy for different-scale objects when and . In practice, these two parameters are fine tuned based on dataset features and task requirements.
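The role of the centre-distance term d²/c² can be illustrated with the standard DIoU loss, 1 − IoU + d²/c², for axis-aligned boxes. This is a simplified sketch: AFEDet works with rotated boxes, and the scale weight that distinguishes SDIoU from DIoU is deliberately omitted because its exact form is not given here. The example covers the two IoU failure cases discussed above: disjoint boxes and an anchor fully inside the GT box.

```python
def iou_and_diou_loss(box_a, box_b):
    """Axis-aligned boxes as (x1, y1, x2, y2). Returns (IoU, DIoU loss),
    where DIoU loss = 1 - IoU + d^2 / c^2."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union
    # d: distance between box centres; c: diagonal of the smallest enclosing box.
    d2 = ((ax1 + ax2) / 2 - (bx1 + bx2) / 2) ** 2 + ((ay1 + ay2) / 2 - (by1 + by2) / 2) ** 2
    c2 = (max(ax2, bx2) - min(ax1, bx1)) ** 2 + (max(ay2, by2) - min(ay1, by1)) ** 2
    return iou, 1.0 - iou + d2 / c2


gt = (0, 0, 10, 10)
# Disjoint anchors: IoU is 0 for both, but the DIoU loss grows with distance.
iou_near, loss_near = iou_and_diou_loss(gt, (12, 0, 22, 10))
iou_far, loss_far = iou_and_diou_loss(gt, (30, 0, 40, 10))
# Anchors inside the GT box: equal IoU, but the off-centre one is penalized more.
iou_c, loss_centred = iou_and_diou_loss(gt, (3, 3, 7, 7))
iou_o, loss_offset = iou_and_diou_loss(gt, (1, 1, 5, 5))
print(iou_near, iou_far, loss_near < loss_far)  # 0.0 0.0 True
```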

The SAL integrates the and the to formulate an overall loss function:

where and are the weights of the classification and regression error terms, respectively. In the experiments, their values are set to and . The determination of these two weights is obtained through comparative experiments on multiple datasets, comprehensively considering indicators such as the precision and recall rate of the model in the detection of objects at different scales. By using scale-reciprocal loss weighting in the SAL function, these symmetric components collectively enhance the system’s robustness to scale variations while maintaining computational efficiency.

4. Experimental Methods and Discussion

4.1. Experimental Details

(1) Experimental Environment: The experiments were conducted on a system equipped with eight NVIDIA GeForce RTX 4090D GPUs. The code was developed with the MMRotate [38] framework, which is built on PyTorch 1.13.1 and provides convenient tools and efficient implementations.

(2) Selection of Backbone Networks: ResNet50 and ResNet101 [39] were selected as backbone networks. The baseline model used was [19], a single-stage rotated object detector, which performs exceptionally well in detecting rotated objects with high aspect ratios. This model serves as a reliable reference for evaluating AFEDet’s performance.

(3) Data Processing: Because DOTA images have high resolution, they were cropped into 1024 × 1024 pixel patches. To prevent information loss and enhance data diversity, a sliding window with a 200-pixel overlap was applied. For the HRSC2016 [40] and UCAS-AOD [41] datasets, images were resized to 512 × 800 pixels [42]. Data augmentation included random horizontal, vertical, or diagonal flipping (25% probability) and random rotation (50% probability).
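The DOTA cropping step can be sketched as computing window offsets along each axis with a stride of 1024 − 200 = 824 pixels. Aligning the last window with the image border, and producing a single (to-be-padded) crop for images smaller than 1024 pixels, are assumptions about edge handling; the paper does not specify them, and the function names are hypothetical.

```python
def window_starts(size, patch=1024, overlap=200):
    """Start offsets of sliding windows along one image axis.

    Windows advance by patch - overlap; if the last regular window stops short
    of the border, one extra window aligned to the border is added (assumption).
    """
    stride = patch - overlap
    starts = list(range(0, max(size - patch, 0) + 1, stride))
    if starts[-1] + patch < size:
        starts.append(size - patch)
    return starts


def crop_boxes(width, height, patch=1024, overlap=200):
    """(x0, y0, x1, y1) of every crop; images smaller than `patch` yield a
    single crop that would be zero-padded to patch x patch."""
    return [(x, y, min(x + patch, width), min(y + patch, height))
            for y in window_starts(height, patch, overlap)
            for x in window_starts(width, patch, overlap)]


print(window_starts(4000))           # [0, 824, 1648, 2472, 2976]
print(len(crop_boxes(4000, 4000)))   # 25
```

Note that the border-aligned final window overlaps its neighbour by more than 200 pixels; this trades a little redundancy for never cutting off the image edge.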

(4) Training Parameter Settings: For the DOTA dataset, the training and validation sets were merged. The AdamW optimizer was used with a batch size of 32, the initial learning rate was , momentum was set among , and the weight decay was . Training lasted 24 epochs, with the learning rate decaying linearly from epoch 16 to epoch 22. For the HRSC2016 and UCAS-AOD datasets, the training and validation sets were also merged. The SGD optimizer was used with a batch size of 32, an initial learning rate , momentum of , and a weight decay of . Training lasted 36 epochs, with the learning rate decaying from epoch 24 to epoch 33.

4.2. Datasets

(1) DOTA [36]: a large-scale remote sensing object detection dataset with 15 categories of common objects, such as planes, ships, and vehicles. It contains 2806 images with resolutions ranging from 800 × 800 to 4000 × 4000 pixels, covering various imaging conditions, and over 188,000 annotated object instances, providing a rich resource for training models to recognize and localize objects precisely.

(2) HRSC2016 [40]: extracted from Google Earth images, this dataset contains 27 categories and is specifically designed for ship detection; oriented bounding box (OBB) annotations are used for precise ship localization. This dataset contains 1061 labeled images, with 436 for training, 181 for validation, and 444 for testing.

(3) UCAS-AOD [41]: composed of 2127 annotated images collected from Google Earth, this dataset is used for detecting airplanes in various flight states as well as vehicles. Image sizes range from 400 × 400 to 1200 × 1200 pixels, and OBB annotations are provided for accurate target localization.

4.3. Evaluation Metrics

To comprehensively evaluate the detection performance of the model, this study adopts precision, recall, mAP, and FPS as evaluation metrics. The mAP is the mean, over target categories, of the area under each category's precision–recall curve. Precision is the proportion of true positives among all samples predicted as positive:

$$\text{Precision} = \frac{TP}{TP + FP},$$

where $TP$ (true positives) denotes correctly predicted positive samples and $FP$ (false positives) denotes incorrectly predicted positive samples.

Recall measures the proportion of actual positive samples that are correctly predicted:

$$\text{Recall} = \frac{TP}{TP + FN},$$

where $FN$ (false negatives) denotes actual positive samples that were incorrectly predicted as negative.

The mAP reflects the model's overall detection performance across all categories:

$$\text{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i,$$

where $N$ is the total number of object categories and $AP_i$ is the average precision (AP) of category $i$.
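The precision, recall, and mAP definitions can be sketched in plain Python as follows. For AP, the sketch uses all-point interpolation of the precision–recall curve (the PASCAL VOC convention used since VOC2010); some DOTA evaluation toolkits use the 11-point VOC2007 variant instead, and the matching rule that produces the TP flags (for example, rotated IoU ≥ 0.5) is assumed here rather than taken from the paper.

```python
def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(tp_flags, num_gt):
    """All-point interpolated AP for one class.

    tp_flags: 1/0 per detection, sorted by descending confidence; 1 means the
    detection matched a not-yet-matched ground-truth object.
    """
    recalls, precisions = [0.0], [0.0]
    tp = 0
    for rank, flag in enumerate(tp_flags, start=1):
        tp += flag
        recalls.append(tp / num_gt)
        precisions.append(tp / rank)
    recalls.append(1.0)
    precisions.append(0.0)
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the stepwise curve: sum of recall increments times precision.
    return sum((recalls[i] - recalls[i - 1]) * precisions[i]
               for i in range(1, len(recalls)))


def mean_average_precision(ap_per_class):
    return sum(ap_per_class) / len(ap_per_class)


print(precision_recall(8, 2, 2))                   # (0.8, 0.8)
print(average_precision([1, 1, 0, 1], num_gt=4))   # 0.6875
```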

4.4. Comparative Experiments

To verify AFEDet’s performance and generalization ability, experiments were conducted on the three public datasets above, comparing AFEDet with state-of-the-art models.

4.4.1. Evaluation on the DOTA Dataset

Table 3 presents the evaluation results on the DOTA dataset. AFEDet achieved an mAP of 80.91%, outperforming all comparison methods. Among similar methods, its mAP is 7.12 percentage points higher than that of the baseline model and 8.48 points higher than that of FMSSD. Even compared with high-performing models, AFEDet still achieved an improvement of 1.49 points.

Table 3.

Comparison of AFEDet with state-of-the-art object detection models on the DOTA dataset.

Compared with two-stage detection models, AFEDet surpassed G-Rep, SCRDet, and SCRDet++, demonstrating superior detection capability in complex remote sensing scenes. At the category level, AFEDet achieved 81.66% accuracy on small objects (the PL and HC categories), surpassing all competing methods. This highlights AFEDet's ability to capture fine details, which is crucial for accurately detecting small targets in real-world applications.

4.4.2. Evaluation on the HRSC2016 Dataset

The evaluation results on the HRSC2016 dataset are presented in Table 4. With ResNet101 as the backbone network, AFEDet achieved an mAP of 91.88%, outperforming many comparison methods. Specifically, it outperformed by 18.81 percentage points, DCL by 2.42 points, and TIOE-Det by 1.72 points. When compared to and , its mAP improved by 1.71 and 2.62 points, respectively. Even compared with Oriented RCNN, which achieved an mAP of 90.50%, AFEDet maintained an advantage of 1.38 points, further confirming its effectiveness in remote sensing object detection.

Table 4.

Comparison of AFEDet with state-of-the-art object detection models on the HRSC2016 dataset.

Regarding FPS performance, AFEDet demonstrated outstanding efficiency, achieving 22.9 FPS, which is significantly higher than (2.0 FPS) and (12.0 FPS). Although and Oriented RCNN achieved 12.7 FPS and 15.1 FPS, respectively, AFEDet maintained a higher mAP while offering superior detection speed. This balance between high detection accuracy and fast processing speed highlights AFEDet’s practical potential.

4.4.3. Evaluation on the UCAS-AOD Dataset

For the UCAS-AOD dataset, we conducted experiments with ResNet50 as the backbone network. The results, presented in Table 5, show that AFEDet achieved an mAP of 90.30%, demonstrating strong capability in remote sensing object detection. AFEDet obtained an AP of 90.46% for vehicle detection and 90.28% for airplane detection, outperforming the other methods in both categories. It improved mAP by 2.73 percentage points over R-RetinaNet, 1.94 points over Faster R-CNN, and 1.1 points over RoI Transformer. Even against competitive models such as G-Rep, DAL, and , AFEDet consistently achieved the highest mAP. These results confirm that AFEDet detects targets on the UCAS-AOD dataset with high precision and localization accuracy.

Table 5.

Comparison of AFEDet with state-of-the-art object detection methods on the UCAS-AOD dataset.

4.4.4. Detection Visualization Results

(1) The Visualization Result of DOTA

In Figure 5a,b, both large and medium-sized objects can be easily and accurately identified. Even for elongated targets, as shown in Figure 5c–e, precise recognition can still be achieved. In Figure 5k–o, various densely parked targets, such as airplanes and ships, are also accurately detected. Figure 5f–j present the prediction results of objects of different sizes and shapes in a complex background. Notably, in Figure 5n, objects of varying sizes have been successfully detected.

Figure 5.

Detection results of AFEDet across various scenarios in the DOTA dataset.

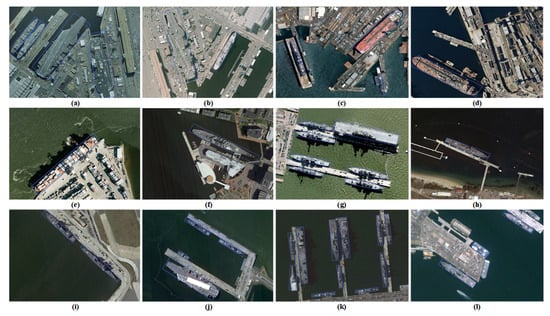

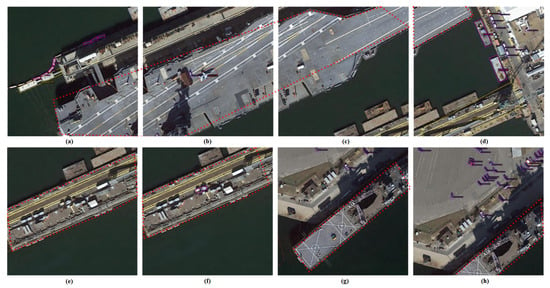

(2) The Visualization Result of HRSC2016

Figure 6 presents detection results on the HRSC2016 dataset, where AFEDet demonstrates precise detection. As shown in Figure 6a,c, the model detects various types of ships densely docked in ports, including large cargo ships and small warships, with bounding boxes that fit the ship contours tightly, emphasizing its precision in target localization. Even against complex backgrounds, as illustrated in Figure 6d–f, AFEDet separates individual ships and reduces missed detections. This ability to distinguish ships in cluttered, densely populated harbor environments highlights the robustness and accuracy of AFEDet’s detection mechanism.

Figure 6.

AFEDet’s high-efficiency detection results on the HRSC2016 dataset. Even with a distracting background of similar patterns, AFEDet can clearly and accurately distinguish the contours and fine structures of ships.

(3) The Visualization Result of UCAS-AOD

Figure 7 displays detection results on the UCAS-AOD dataset, further demonstrating AFEDet’s adaptability in various scenarios. As shown in Figure 7a, the model achieves high-precision airplane detection, ensuring tight bounding box alignment, even for small and distant aircraft. In densely packed parking areas, AFEDet effectively differentiates each airplane, minimizing overlapping bounding boxes and avoiding false detections. Additionally, Figure 7b illustrates detection results in urban road environments, where AFEDet maintains strong performance despite background interference from buildings, vegetation, and other structures. The model’s ability to accurately detect vehicles of various sizes and orientations further underscores its robustness in complex backgrounds.

Figure 7.

The detection results on the UCAS-AOD dataset. AFEDet effectively handles complex and densely packed scenes, preserving target details. Even in the presence of partial occlusion or similar interferences, it can still accurately locate the targets.

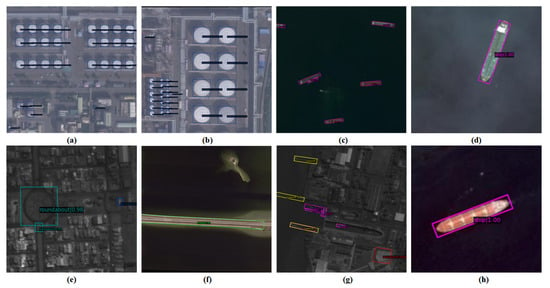

(4) Detection Results under Extreme Lighting Variations

As shown in Figure 8, the model demonstrates stable detection performance under extreme lighting variations. Even in low-light environments where object features are barely visible, such as in Figure 8e,g, the model is still capable of recognizing small objects that are difficult to detect with the naked eye, showcasing strong robustness in challenging conditions.

Figure 8.

AFEDet’s performance under extreme lighting conditions.

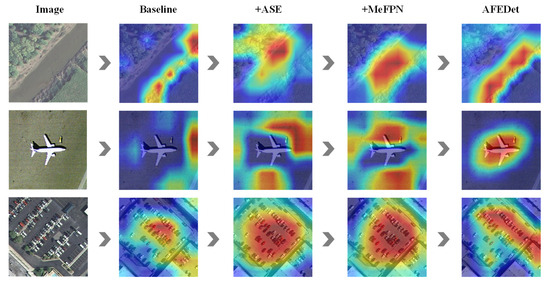

4.5. Ablation Study

4.5.1. Key Component Analysis

To further analyze the contribution of each module to AFEDet’s performance, we conducted an ablation study on the DOTA dataset. The results, presented in Table 6, show that each module contributes significantly to the overall performance of the model. The baseline model, which lacks the AFE, MeFPN, and SAL modules, achieved an mAP of 84.68%. Adding AFE raised the mAP to 87.59%, a relative improvement of 3.44% (2.91 percentage points), and improved recall and precision by 5.3% and 4.34%, respectively. This indicates that AFE’s parallel dilated convolutions and channel attention mechanism effectively enhance multi-scale feature extraction and improve detection accuracy. Integrating MeFPN raised the mAP to 88.90%, a relative improvement of 4.98% (4.22 percentage points) over the baseline. We attribute this gain to MeFPN’s bidirectional fusion strategy, which improves feature alignment across scales, reduces feature degradation, and preserves more information. Incorporating the SAL function raised the mAP to 86.27%, a relative improvement of 1.88% (1.59 percentage points) over the baseline. SAL optimizes loss balancing for small objects, improving the model’s ability to capture and accurately recognize small-scale targets.
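The receptive-field argument behind parallel dilated convolution can be made concrete with a little arithmetic. The sketch below is not AFEDet’s implementation: the kernel size (3), dilation rates (1, 2, 3), channel width (256), and feature-map size (64) are hypothetical choices, since the actual values are not stated in this section. It shows that a k × k kernel with dilation d spans k + (k − 1)(d − 1) pixels per side while its weight count stays fixed, and that a padding of d(k − 1)/2 keeps every branch at the input resolution so the parallel outputs can be fused.

```python
"""Receptive-field arithmetic for parallel dilated 3x3 branches (illustrative only)."""


def effective_kernel(k: int, d: int) -> int:
    """Side length covered by a k x k kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def same_padding(k: int, d: int) -> int:
    """Padding that keeps H x W unchanged at stride 1 (odd k assumed)."""
    return d * (k - 1) // 2


def conv_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Weight count of a standard k x k convolution; it does not depend on dilation."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def output_size(h: int, k: int, d: int, p: int, s: int = 1) -> int:
    """Standard convolution output length along one spatial axis."""
    return (h + 2 * p - d * (k - 1) - 1) // s + 1


if __name__ == "__main__":
    K, C, H = 3, 256, 64  # hypothetical kernel size, channel width, feature-map size
    for d in (1, 2, 3):   # hypothetical dilation rates, one per parallel branch
        p = same_padding(K, d)
        e = effective_kernel(K, d)
        print(f"d={d}: covers {e}x{e}, padding={p}, "
              f"output={output_size(H, K, d, p)}, params={conv_params(K, C, C)}")
```

Under these assumptions the three branches see 3 × 3, 5 × 5, and 7 × 7 neighborhoods at identical parameter cost and identical output size, which is what makes summing or concatenating them straightforward.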

Table 6.

Ablation study results of AFEDet.

When all three modules (AFE, MeFPN, and SAL) were combined, AFEDet achieved its highest mAP of 91.29%, a relative improvement of 7.8% (6.61 percentage points) over the baseline, with recall and precision improving by 9.4% and 9.7%, respectively. These results confirm that integrating the three modules substantially enhances AFEDet’s ability to detect multi-scale objects, making it more effective in complex remote sensing scenarios.
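The percentage gains quoted in this ablation study are relative to the baseline mAP, not absolute differences in percentage points. A small sketch that recomputes both from the mAP values reported above makes the distinction explicit; the row labels simply follow the text, which does not state whether the MeFPN row also includes AFE.

```python
"""Recompute the Table 6 mAP gains as absolute points and relative percentages."""
from typing import Dict, Tuple

BASELINE_MAP = 84.68  # baseline mAP (%) reported in the text

# mAP (%) per configuration, as reported in the text
CONFIGS: Dict[str, float] = {
    "with AFE": 87.59,
    "with MeFPN": 88.90,
    "with SAL": 86.27,
    "all three": 91.29,
}


def gains(baseline: float, value: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in %)."""
    absolute = value - baseline
    relative = 100.0 * absolute / baseline
    return absolute, relative


if __name__ == "__main__":
    for name, m in CONFIGS.items():
        abs_pts, rel_pct = gains(BASELINE_MAP, m)
        print(f"{name:10s} mAP={m:.2f}  +{abs_pts:.2f} pts  +{rel_pct:.2f}% relative")
```

The relative figures reproduce the 3.44%, 4.98%, 1.88%, and 7.8% quoted in the text, while the absolute gains are 2.91, 4.22, 1.59, and 6.61 percentage points.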

The visualization results in Figure 9 indicate that AFE enhances feature extraction by making feature representations more focused and highlighting key information, while MeFPN improves the accuracy of the model’s responses to multi-scale targets. With all three modules integrated, AFEDet produces the most precise responses in the target regions, demonstrating strong object localization capabilities.

Figure 9.

Visualization of comparative results from the ablation experiments.

4.5.2. Parameter Analysis of SDIoU

To investigate the impact of SDIoU parameters on the model’s performance in detecting objects of different scales, we conducted an ablation study using the DOTA dataset. The detailed experimental results are shown in Table 7.

Table 7.

Comparison of different loss functions’ performance on object detection.

In this experiment, we adjusted the parameters and to observe changes in the model’s sensitivity and accuracy for targets of different scales. To determine the optimal values for and , we started from the weight formula , together with the model’s theoretical principles and prior research experience, to define preliminary parameter ranges of and = [1, 64]. We then selected multiple parameter combinations within this range and used five-fold cross-validation to record key performance metrics, including detection precision and recall for small, medium, and large targets. At the same time, we monitored the effect of variations in and on and overall model performance.
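The tuning procedure described above (a grid of parameter pairs within [1, 64], each scored by five-fold cross-validation) can be sketched generically as follows. This is not the authors’ code: the two SDIoU parameters, whose symbols are missing from this text, are called `p` and `q` here for illustration, and the power-of-two grid and the toy `evaluate` function are hypothetical stand-ins for training and validating the detector.

```python
"""Generic five-fold grid search over two loss parameters (illustrative sketch)."""
from itertools import product
from typing import Callable, List, Optional, Sequence, Tuple

Split = Tuple[List[int], List[int]]


def k_fold_indices(n: int, k: int = 5) -> List[Split]:
    """Split range(n) into k contiguous folds and return (train, val) index lists.
    Real experiments would usually shuffle the samples first."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    splits: List[Split] = []
    start = 0
    for size in sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        splits.append((train, val))
        start += size
    return splits


def grid_search(
    n_samples: int,
    candidates: Sequence[int],
    evaluate: Callable[[int, int, List[int], List[int]], float],
    k: int = 5,
) -> Tuple[float, int, int]:
    """Return (mean validation score, p, q) for the best pair in candidates x candidates."""
    folds = k_fold_indices(n_samples, k)
    best: Optional[Tuple[float, int, int]] = None
    for p, q in product(candidates, repeat=2):
        scores = [evaluate(p, q, train, val) for train, val in folds]
        mean = sum(scores) / len(scores)
        if best is None or mean > best[0]:
            best = (mean, p, q)
    if best is None:
        raise ValueError("candidates must be non-empty")
    return best


if __name__ == "__main__":
    grid = [1, 2, 4, 8, 16, 32, 64]  # hypothetical candidate values in [1, 64]

    def toy_evaluate(p: int, q: int, train: List[int], val: List[int]) -> float:
        # Hypothetical stand-in for "train on `train`, report mAP on `val`".
        return -float((p - 4) ** 2 + (q - 16) ** 2)

    print(grid_search(n_samples=1000, candidates=grid, evaluate=toy_evaluate))
```

In a real run, `evaluate` would train the detector with the given parameter pair on the training folds and return a validation metric such as mAP or small-object AP.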

Our experiments showed that reducing the values of and markedly improved the model’s localization sensitivity for small targets, substantially increasing small-target detection accuracy. As shown in Table 7, when and were set to relatively small values (e.g. ), the model achieved an mAP of 80.8% (an improvement of 3.5%), while the detection accuracy for small targets (column S) increased to 78.8% (a 4.7% improvement). This gain is particularly notable compared with the traditional IoU and DIoU losses, which do not incorporate scale adaptivity, and supports the effectiveness of SDIoU for small-object detection. Based on a comprehensive evaluation of performance across target scales, we selected the final values of and to maximize detection accuracy for objects of varying scales.
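Why a scale-adaptive term helps can be seen by comparing IoU and DIoU on small and large boxes. The sketch below uses axis-aligned boxes (x1, y1, x2, y2) for simplicity, whereas AFEDet predicts oriented boxes, and it implements the standard DIoU definition (IoU minus the squared center distance over the squared diagonal of the smallest enclosing box), not SDIoU, whose formula is not reproduced in this section. The same 4-pixel offset lowers the IoU of a 64 × 64 box only to about 0.88 but cuts that of an 8 × 8 box to 1/3, while scaling both boxes by the same factor leaves IoU and DIoU unchanged, so neither measure adapts to object size on its own.

```python
"""IoU and DIoU for axis-aligned boxes, showing their behavior across object scales."""
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def area(b: Box) -> float:
    """Box area; zero for empty (non-overlapping) intersections."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter = area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    return inter / (area(a) + area(b) - inter)


def diou(a: Box, b: Box) -> float:
    """Distance-IoU: IoU minus squared center distance over squared enclosing diagonal."""
    rho2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    return iou(a, b) - rho2 / c2


def shift(b: Box, dx: float) -> Box:
    """Translate a box horizontally by dx pixels."""
    return (b[0] + dx, b[1], b[2] + dx, b[3])


def scale(b: Box, s: float) -> Box:
    """Scale all box coordinates by s."""
    return (b[0] * s, b[1] * s, b[2] * s, b[3] * s)


if __name__ == "__main__":
    small, large = (0.0, 0.0, 8.0, 8.0), (0.0, 0.0, 64.0, 64.0)
    for name, gt in (("8x8", small), ("64x64", large)):
        pred = shift(gt, 4.0)  # identical 4-pixel localization error for both sizes
        print(f"{name}: IoU={iou(gt, pred):.3f}  DIoU={diou(gt, pred):.3f}")
    # Scaling both boxes by the same factor leaves the measures unchanged.
    pred = shift(small, 4.0)
    print(math.isclose(diou(small, pred), diou(scale(small, 8.0), scale(pred, 8.0))))
```

A loss built on 1 − IoU or 1 − DIoU therefore treats relative errors identically at every scale; a scale-adaptive loss such as SAL reweights these terms according to target size.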

4.5.3. Computational Complexity Analysis

To evaluate the practicality of the proposed method in real-world applications, we compare different object detection models in terms of parameter count, floating-point operations (FLOPs), and FPS. The results are shown in Table 8.

Table 8.

Comparison of different remote sensing object detection models in terms of parameters, FLOPs, and FPS.

AFEDet is notably parameter-efficient, with only 20.91M parameters, considerably fewer than models such as LSKNet (31.0M). This compact design reduces storage and memory requirements, making AFEDet easier to deploy on resource-constrained devices. In terms of computational complexity, AFEDet requires 50.26G FLOPs, lower than most of the comparison models, indicating that it can run efficiently under limited computational resources while maintaining detection accuracy. AFEDet runs at 19.7 FPS, faster than (8.3 FPS) and MSRO-Net (12.5 FPS) and close to LSKNet (20.5 FPS). AFEDet therefore balances detection speed and computational cost well, bringing it close to real-time processing. We attribute its inference speed to the parameter-free normalization method and the BFM, which enable precise alignment of intermediate-layer features and accelerate information flow within the model.

In comparison, MSRO-Net has a high parameter count and FLOPs, which increase its computational complexity and limit its scalability. Although SKNet-101 has lower FLOPs, its higher parameter count increases storage and memory requirements. Overall, AFEDet achieves a well-balanced trade-off among parameters, computational cost, and speed, enabling efficient detection with low complexity. This makes AFEDet a cost-effective solution for remote sensing object detection with strong practical applicability.
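To put these figures in perspective, FPS can be converted to average per-image latency (1000 / FPS milliseconds). The sketch below uses only numbers stated in this section; the 30 FPS real-time threshold is an assumption, since "real-time" has no single standard definition. It gives roughly 51 ms per image for AFEDet, 49 ms for LSKNet, and 80 ms for MSRO-Net, and a parameter saving of about 32.5% relative to LSKNet.

```python
"""Convert the reported FPS figures to per-image latency and compare model sizes."""
from typing import Dict

# FPS as reported in the text (unnamed models omitted)
REPORTED_FPS: Dict[str, float] = {"AFEDet": 19.7, "LSKNet": 20.5, "MSRO-Net": 12.5}

REAL_TIME_FPS = 30.0  # hypothetical threshold; "real-time" has no single standard


def latency_ms(fps: float) -> float:
    """Average time per image in milliseconds."""
    return 1000.0 / fps


def param_reduction(ours: float, other: float) -> float:
    """Fraction of parameters saved relative to another model."""
    return 1.0 - ours / other


if __name__ == "__main__":
    for name, fps in REPORTED_FPS.items():
        status = "meets" if fps >= REAL_TIME_FPS else "below"
        print(f"{name:9s} {fps:5.1f} FPS -> {latency_ms(fps):5.1f} ms/image "
              f"({status} the assumed {REAL_TIME_FPS:.0f} FPS threshold)")
    # Parameter counts in millions, from the text: AFEDet 20.91M, LSKNet 31.0M.
    print(f"AFEDet uses {100 * param_reduction(20.91, 31.0):.1f}% fewer parameters than LSKNet")
```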

5. Discussion of Experimental Results

The ablation study demonstrates that the three key modules AFE, MeFPN, and SAL effectively enhance the performance of AFEDet. AFE leverages parallel dilated convolutions and channel attention mechanisms to improve the model’s adaptability to multi-scale targets. MeFPN reduces information loss and enhances feature perception through bidirectional fusion strategies. The SAL function optimizes small-object detection, improving both model accuracy and robustness. The synergy among these modules enables AFEDet to excel in multi-scale object detection and achieve outstanding results across multiple remote sensing datasets.
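The channel attention gating mentioned above follows the general squeeze-and-excitation pattern of Hu et al. (listed in the references). The plain-Python sketch below illustrates only that generic pattern; it is an assumption for illustration, not AFEDet’s gating module, whose exact form (the conclusions relate it to a norm-based gate) is not given in this section, and the weights `w1`, `w2` and the toy feature map are hypothetical. Each channel is summarized by global average pooling, passed through a bottleneck layer with ReLU and an expansion layer with a sigmoid, and the resulting weight in (0, 1) rescales that channel.

```python
"""Squeeze-and-excitation style channel gating on a toy feature map (illustrative)."""
import math
from typing import List, Tuple

Channel = List[List[float]]  # one H x W feature map


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_gate(
    feature: List[Channel], w1: List[List[float]], w2: List[List[float]]
) -> Tuple[List[Channel], List[float]]:
    """Reweight channels; w1 is (C/r x C) squeeze weights, w2 is (C x C/r) excite weights."""
    # 1) Squeeze: global average pooling gives one descriptor per channel.
    desc = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]
    # 2) Bottleneck fully connected layer followed by ReLU.
    hidden = [max(0.0, sum(w * d for w, d in zip(row, desc))) for row in w1]
    # 3) Expansion fully connected layer followed by sigmoid: one gate per channel.
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # 4) Rescale every value in each channel by that channel's gate.
    out = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, feature)]
    return out, gates


if __name__ == "__main__":
    feat = [[[1.0, 1.0], [1.0, 1.0]], [[0.5, 0.5], [0.5, 0.5]]]  # 2 channels, 2 x 2
    w1 = [[1.0, 0.0]]     # hypothetical squeeze weights: C=2 -> C/r=1
    w2 = [[2.0], [-2.0]]  # hypothetical excite weights: C/r=1 -> C=2
    out, gates = channel_gate(feat, w1, w2)
    print([round(g, 3) for g in gates])
```

With these toy weights the first channel receives a gate of about 0.88 and the second about 0.12, so the second channel is suppressed; in a trained network the weights are learned so that informative channels receive larger gates.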

However, AFEDet still faces certain challenges. As illustrated in Figure 10, the model struggles to detect densely packed or overlapping objects. Because most existing algorithms focus primarily on the features of individual objects, it is difficult for the model to recognize the boundaries of occluded objects, which leads to missed detections. Future research will explore semantic segmentation techniques to separate occluded from visible parts, strengthening the model’s ability to extract features from occluded areas and to infer the features of hidden regions.

For extremely large objects, as depicted in Figure 11, image cropping poses a further challenge. When a large object is split across multiple image patches, the model sees only fragments rather than the entire object structure. The mismatch between these fragmented features and the feature distribution of the complete object makes detection difficult. To address this issue, we plan to incorporate Vision Transformer (ViT) [54] architectures, which capture long-range dependencies, to help the model understand the complete structure of large objects.
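The cropping problem for very large objects can be illustrated with a sliding-window tiler. The sketch assumes 1024 × 1024 tiles with a 200-pixel overlap, a setting commonly used when splitting DOTA images; the crop size and overlap actually used for AFEDet are not stated in this section, and the image size and object boxes are hypothetical. An object is seen whole only if at least one tile fully contains it, which becomes impossible once the object is wider or taller than a tile.

```python
"""Sliding-window tiling and whether an object survives cropping intact (illustrative)."""
from itertools import product
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def tile_origins(length: int, tile: int, stride: int) -> List[int]:
    """Start offsets along one axis; the last tile is aligned with the image border."""
    if length <= tile:
        return [0]
    origins = list(range(0, length - tile, stride))
    origins.append(length - tile)
    return origins


def make_tiles(width: int, height: int, tile: int = 1024, overlap: int = 200) -> List[Box]:
    """All crop windows for an image, clipped to the image bounds."""
    stride = tile - overlap
    xs = tile_origins(width, tile, stride)
    ys = tile_origins(height, tile, stride)
    return [(x, y, min(x + tile, width), min(y + tile, height)) for x, y in product(xs, ys)]


def fully_contained(obj: Box, tiles: List[Box]) -> bool:
    """True if at least one tile contains the whole object box."""
    return any(t[0] <= obj[0] and t[1] <= obj[1] and obj[2] <= t[2] and obj[3] <= t[3]
               for t in tiles)


if __name__ == "__main__":
    tiles = make_tiles(3000, 3000)   # hypothetical 3000 x 3000 scene
    ship = (900, 900, 1100, 1000)    # hypothetical small object crossing a tile border
    pier = (200, 1500, 1800, 1700)   # hypothetical 1600-pixel-long object
    print(len(tiles), fully_contained(ship, tiles), fully_contained(pier, tiles))
```

The overlap lets the small object crossing the first tile’s border appear whole in a neighboring tile, but no amount of overlap helps an object longer than the tile itself, which is why long-range modeling or larger input windows are needed for such targets.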

Figure 10.

Detection performance degrades when objects are closely packed and occlude one another.

Figure 11.

When an extremely large object is split by cropping, its structural integrity is lost, and the model cannot accurately identify the fragmented object.

Overall, while AFEDet focuses on addressing object scale diversity, it still has limitations in handling occluded targets, complex object appearances, and extreme variations in real-world scenarios. In addition, sample balancing is insufficient, leaving certain complex scenarios under-represented, and the data augmentation techniques used remain relatively simple. Future work should address these aspects to further improve model performance.

6. Conclusions

The proposed AFEDet aims to balance multi-scale feature extraction against computational complexity. By incorporating a channel attention mechanism, an -norm-based gating module, a bidirectional fusion module, and a dynamic weight adjustment loss function, AFEDet performs strongly across multiple remote sensing datasets, and ablation experiments validate the effectiveness of its key components. However, challenges remain in handling occluded and extremely large objects, and computational speed needs further improvement for real-time applications. Future research will explore ViT [54] or multi-head attention mechanisms, together with model distillation and pruning techniques, to improve both accuracy and efficiency. We also plan to extend AFEDet to low-altitude flight [1] and field exploration [2], with targeted optimizations to improve its robustness and adaptability across diverse scenarios.

Author Contributions

Conceptualization, X.Y.; methodology, X.Y. and D.J.; software, X.Y. and S.G.; validation, S.G. and D.J.; formal analysis, X.W.; investigation, X.W.; resources, X.W.; data curation, X.Y.; writing—original draft preparation, X.Y.; writing—review and editing, X.Y.; visualization, X.Y.; supervision, D.J.; project administration, D.J.; funding acquisition, X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Key Project of Nanjing Communications Institute of Technology (JZ2306) and the Open Research Fund of Jiangsu Province Engineering Research Center of Traffic Energy Conservation and Emission Reduction (JGKF2024010).

Data Availability Statement

The DOTA V1.0 is available at https://captain-whu.github.io/DOTA/dataset.html (accessed on 15 March 2025). The HRSC2016 is available at https://aistudio.baidu.com/aistudio/datasetdetail/31232 (accessed on 15 March 2025). The UCAS-AOD is available at https://aistudio.baidu.com/datasetdetail/70265 (accessed on 15 March 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Zhang, Z.; Xie, X.; Guo, Q.; Xu, J. Improved YOLOv7-Tiny for Object Detection Based on UAV Aerial Images. Electronics 2024, 13, 2969.

2. Shirazi, A.; Hezarkhani, A.; Beiranvand Pour, A.; Shirazy, A.; Hashim, M. Neuro-Fuzzy-AHP (NFAHP) Technique for Copper Exploration Using Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) and Geological Datasets in the Sahlabad Mining Area, East Iran. Remote Sens. 2022, 14, 5562.

3. Li, J.; Pei, Y.; Zhao, S.; Xiao, R.; Sang, X.; Zhang, C. A review of remote sensing for environmental monitoring in China. Remote Sens. 2020, 12, 1130.

4. Liu, Y.; Yang, F.; Hu, P. Small-Object Detection in UAV-Captured Images via Multi-Branch Parallel Feature Pyramid Networks. IEEE Access 2020, 8, 145740–145750.

5. Li, W.; Zhang, X.; Peng, Y.; Dong, M. DMNet: A network architecture using dilated convolution and multiscale mechanisms for spatiotemporal fusion of remote sensing images. IEEE Sens. J. 2020, 20, 12190–12202.

6. Zhou, G.; Chen, W.; Gui, Q.; Li, X.; Wang, L. Split depth-wise separable graph-convolution network for road extraction in complex environments from high-resolution remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.

7. Lv, Z.; Liu, J.; Sun, W.; Lei, T.; Benediktsson, J.A.; Jia, X. Hierarchical attention feature fusion-based network for land cover change detection with homogeneous and heterogeneous remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

8. Pei, G.; Zhang, L. Feature hierarchical differentiation for remote sensing image change detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

9. Li, R.; Wang, L.; Zhang, C.; Duan, C.; Zheng, S. A2-FPN for semantic segmentation of fine-resolution remotely sensed images. Int. J. Remote Sens. 2022, 43, 1131–1155.

10. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

11. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

12. Huang, Z.; Li, W.; Xia, X.G.; Wu, X.; Cai, Z.; Tao, R. A Novel Nonlocal-Aware Pyramid and Multiscale Multitask Refinement Detector for Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–20.

13. Pan, X.; Ren, Y.; Sheng, K.; Dong, W.; Yuan, H.; Guo, X.; Ma, C.; Xu, C. Dynamic refinement network for oriented and densely packed object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11207–11216.

14. Zhang, Z.; Guo, W.; Zhu, S.; Yu, W. Toward Arbitrary-Oriented Ship Detection With Rotated Region Proposal and Discrimination Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1745–1749.

15. Ma, J.; Shao, W.; Ye, H.; Wang, L.; Wang, H.; Zheng, Y.; Xue, X. Arbitrary-oriented scene text detection via rotation proposals. IEEE Trans. Multimed. 2018, 20, 3111–3122.

16. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

17. Yang, X.; Yan, J. On the Arbitrary-Oriented Object Detection: Classification based Approaches Revisited. Int. J. Comput. Vis. 2022, 130, 1340–1365.

18. Yang, X.; Hou, L.; Zhou, Y.; Wang, W.; Yan, J. Dense Label Encoding for Boundary Discontinuity Free Rotation Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15814–15824.

19. Yang, X.; Yan, J.; Feng, Z.; He, T. R3Det: Refined Single-Stage Detector with Feature Refinement for Rotating Object. arXiv 2019, arXiv:1908.05612.

20. Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

21. Jiang, Y.; Zhu, X.; Wang, X.; Yang, S.; Li, W.; Wang, H.; Fu, P.; Luo, Z. R2CNN: Rotational Region CNN for Orientation Robust Scene Text Detection. arXiv 2017, arXiv:1706.09579.

22. Gao, T.; Xia, S.; Liu, M.; Zhang, J.; Chen, T.; Li, Z. MSNet: Multi-Scale Network for Object Detection in Remote Sensing Images. Pattern Recognit. 2025, 158, 110983.

23. Guo, C.; Fan, B.; Zhang, Q.; Xiang, S.; Pan, C. AugFPN: Improving multi-scale feature learning for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12595–12604.

24. Wang, J.; Tong, Q.; He, C. A Longitudinal Dense Feature Pyramid Network for Object Detection. In Proceedings of the 2021 4th International Conference on Artificial Intelligence and Pattern Recognition, Yibin, China, 20–22 August 2021; pp. 518–523.

25. Yang, X.; Sun, H.; Fu, K.; Yang, J.; Sun, X.; Yan, M.; Guo, Z. Automatic ship detection in remote sensing images from Google Earth of complex scenes based on multiscale rotation dense feature pyramid networks. Remote Sens. 2018, 10, 132.

26. Guo, W.; Li, W.; Gong, W.; Cui, J. Extended feature pyramid network with adaptive scale training strategy and anchors for object detection in aerial images. Remote Sens. 2020, 12, 784.

27. Chen, S.; Zhao, J.; Zhou, Y.; Wang, H.; Yao, R.; Zhang, L.; Xue, Y. Info-FPN: An Informative Feature Pyramid Network for object detection in remote sensing images. Expert Syst. Appl. 2023, 214, 119132.

28. Li, S.; Yan, F.; Liu, Y.; Shen, Y.; Liu, L.; Wang, K. A multi-scale rotated ship targets detection network for remote sensing images in complex scenarios. Sci. Rep. 2025, 15, 2510.

29. Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

30. Li, Y.; Li, X.; Dai, Y.; Hou, Q.; Liu, L.; Liu, Y.; Cheng, M.M.; Yang, J. LSKNet: A foundation lightweight backbone for remote sensing. Int. J. Comput. Vis. 2024, 133, 1–22.

31. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

32. Liu, W.; Lin, Y.; Liu, W.; Yu, Y.; Li, J. An attention-based multiscale transformer network for remote sensing image change detection. ISPRS J. Photogramm. Remote Sens. 2023, 202, 599–609.

33. Cui, Z.; Leng, J.; Liu, Y.; Zhang, T.; Quan, P.; Zhao, W. SKNet: Detecting Rotated Ships as Keypoints in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 8826–8840.

34. Deng, Y.J.; Yang, M.L.; Li, H.C.; Long, C.F.; Fang, K.; Du, Q. Feature Dimensionality Reduction With L2,p-Norm-Based Robust Embedding Regression for Classification of Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

35. Jeune, P.L.; Mokraoui, A. Rethinking intersection over union for small object detection in few-shot regime. arXiv 2023, arXiv:2307.09562.

36. Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

37. Du, S.; Zhang, B.; Zhang, P. Scale-sensitive IOU loss: An improved regression loss function in remote sensing object detection. IEEE Access 2021, 9, 141258–141272.

38. Zhou, Y.; Yang, X.; Zhang, G.; Wang, J.; Liu, Y.; Hou, L.; Jiang, X.; Liu, X.; Yan, J.; Lyu, C.; et al. MMRotate: A Rotated Object Detection Benchmark using PyTorch. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022.

39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

40. Liu, Z.; Wang, H.; Weng, L.; Yang, Y. Ship rotated bounding box space for ship extraction from high-resolution optical satellite images with complex backgrounds. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1074–1078.

41. Zhu, H.; Chen, X.; Dai, W.; Fu, K.; Ye, Q.; Jiao, J. Orientation robust object detection in aerial images using deep convolutional neural network. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3735–3739.

42. Liu, C.; Zhang, S.; Hu, M.; Song, Q. Object Detection in Remote Sensing Images Based on Adaptive Multi-Scale Feature Fusion Method. Remote Sens. 2024, 16, 907.

43. Wang, P.; Sun, X.; Diao, W.; Fu, K. FMSSD: Feature-Merged Single-Shot Detection for Multiscale Objects in Large-Scale Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3377–3390.

44. Han, J.; Ding, J.; Li, J.; Xia, G.S. Align Deep Features for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

45. Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Sun, X.; Fu, K. SCRDet: Towards more robust detection for small, cluttered and rotated objects. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8232–8241.

46. Yang, X.; Yan, J.; Liao, W.; Yang, X.; Tang, J.; He, T. SCRDet++: Detecting Small, Cluttered and Rotated Objects via Instance-Level Feature Denoising and Rotation Loss Smoothing. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2384–2399.

47. Ming, Q.; Zhou, Z.; Miao, L.; Zhang, H.; Li, L. Dynamic Anchor Learning for Arbitrary-Oriented Object Detection. arXiv 2020, arXiv:2012.04150.

48. Hou, L.; Lu, K.; Yang, X.; Li, Y.; Xue, J. G-Rep: Gaussian Representation for Arbitrary-Oriented Object Detection. Remote Sens. 2023, 15, 757.

49. Han, J.; Ding, J.; Xue, N.; Xia, G.S. ReDet: A Rotation-Equivariant Detector for Aerial Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2786–2795.

50. Ming, Q.; Miao, L.; Zhou, Z.; Song, J.; Dong, Y.; Yang, X. Task interleaving and orientation estimation for high-precision oriented object detection in aerial images. ISPRS J. Photogramm. Remote Sens. 2023, 196, 241–255.

51. Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

52. Yang, R.; Wang, G.; Pan, Z.; Lu, H.; Zhang, H.; Jia, X. A novel false alarm suppression method for CNN-based SAR ship detector. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1401–1405.

53. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

54. Pan, Y.; Li, Y.; Yao, T.; Ngo, C.W.; Mei, T. Stream-ViT: Learning Streamlined Convolutions in Vision Transformer. IEEE Trans. Multimed. 2025, 1–11.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).